Abstract

OBJECTIVE:

Evolving primary care models require methods to help practices achieve quality standards. This study assessed the effectiveness of a Practice-Tailored Facilitation Intervention for improving delivery of 3 pediatric preventive services.

METHODS:

In this cluster-randomized trial, a practice facilitator implemented practice-tailored rapid-cycle feedback/change strategies for improving obesity screening/counseling, lead screening, and dental fluoride varnish application. Thirty practices were randomized to Early or Late Intervention, and outcomes were assessed for 16 419 well-child visits. A multidisciplinary team characterized facilitation processes by using comparative case study methods.

RESULTS:

Baseline performance was as follows: Obesity, 3.5% successful performance in Early and 6.3% in Late practices (P = .74); Lead, 62.2% and 77.8%, respectively (P = .11); and Fluoride, <0.1% for all practices. Four months after randomization, performance in Early practices had risen to 82.8% for Obesity, 86.3% for Lead, and 89.1% for Fluoride (all P < .001 for improvement compared with Late practices' control time). During the full 6-month intervention, care improved versus baseline in all practices: for Obesity, to 86.5% in Early and 88.9% in Late practices; for Lead, to 87.5% and 94.5%, respectively; and for Fluoride, to 78.9% and 81.9%, respectively (all P < .001 compared with baseline). Improvements were sustained 2 months after the intervention ended. Successful facilitation involved multidisciplinary support, rapid-cycle problem-solving feedback, and ongoing relationship-building, which allowed the facilitation approach and intensity to be individualized according to 3 levels of practice need.

CONCLUSIONS:

A Practice-Tailored Facilitation Intervention can lead to substantial, simultaneous, and sustained improvements in 3 preventive service domains, and holds promise as a broad-based method to advance pediatric preventive care.

Keywords: child, quality improvement, obesity, lead poisoning, dental caries

What’s Known on This Subject:

Children receive only half of recommended health care; disadvantaged children have higher risk of unmet needs. Practice coaching combined with quality improvement using rapid-cycle feedback has potential to help practices meet quality standards and improve pediatric health care delivery.

What This Study Adds:

The Practice-tailored Facilitation Intervention led to large and sustained improvements in preventive service delivery, including substantial numbers of disadvantaged children, and in multiple simultaneous health care domains. Practice-tailored facilitation holds promise as a method to advance pediatric preventive care delivery.

Preventive services for children are crucially important to foster optimal health and developmental potential1–3; however, children receive only about half of recommended health care.4 Children from economically disadvantaged backgrounds have even higher risk of unmet needs.5–9 Improving the capacity of primary care practices to meet national standards based on evidence-based quality metrics is central to emerging health care models, but significant hurdles exist.10–17 System change is challenging; small group practices, the most common care setting,13,18–20 traditionally lack the systems, resources, and quality improvement experience needed to achieve broad-based, sustainable practice improvements. Practices differ in organization, leadership, and preferences, so 1 approach does not fit all,21,22 and addressing 1 component at a time can be inefficient and counterproductive.23–25 Practice change facilitation implements interventions tailored to the needs of individual practices and, especially when combined with academic detailing and rapid-cycle feedback, has been shown to improve delivery of primary care–based preventive services,26,27 although experience with pediatric services is more limited,24,26,28–34 and insights into how effective facilitation actually works are also limited.34–36

We implemented and evaluated a 6-month Practice-Tailored Facilitation Intervention (PTFI), called CHEC-UPPP (Child Health Excellence Center: a University-Practice-Public Partnership), that combines practice coaching with rapid-cycle feedback/change to improve delivery of recommended pediatric preventive services simultaneously in 3 domains of public health importance: obesity detection and counseling, lead screening, and fluoride varnish application to prevent dental decay, targeting diverse practice settings.11,37–41 We also sought to characterize the process by which the facilitation approach can be successfully adjusted to meet diverse practices’ learning styles and levels of need.

Methods

A cluster-randomized trial was conducted in which practices were randomized to immediate or delayed intervention with the PTFI, and outcomes were measured at the patient level. Observational field note and interview data supplemented quantitative data for comparative case studies of the facilitation process across practices.

Practice Eligibility and Recruitment

Practices were recruited from 2 practice-based research networks: the Rainbow Research Network and the Research Association of Practices,42–44 pediatric and family medicine practice-based research networks, respectively, supported by the Cleveland Clinical and Translational Science Collaborative and The Case Comprehensive Cancer Center. Locally accessible practices were eligible if at least 15% of their patients were ≤10 years of age and at least 20% of their pediatric patients were covered by Medicaid, and if they agreed to provide at least 2 of the 3 targeted services and to participate in educational meetings and chart reviews. Eligible practices were approached sequentially from November 1, 2010, to July 12, 2011, by letters followed by calls to practice lead physicians, until target practice enrollment was reached.

The protocol was approved by the institutional review board. Practices received fluoride varnish, and pediatricians were eligible for American Board of Pediatrics Maintenance of Certification credit.

Educational Sessions and Randomization

After baseline data collection, practice sites received 2 standardized 30-minute educational sessions, delivered by a coinvestigator (LC, SBM, RS).29,32 Sessions included information on the prevalence and health implications of obesity, lead exposure, and dental decay, and recommendations for preventive services, based on standards established by government and/or professional bodies.11,37–41,45

After the educational sessions, practices were randomized 1:1 to either Early-Phase or Late-Phase (control) PTFI from February 24, 2011, to August 31, 2011, by using covariate adaptive randomization,46 stratifying on practice size (≤2 or ≥3 clinicians) and percentage of children with Medicaid (20% to 40% or >40%) to balance practice-level covariates. To avoid contamination, practice sites that were administratively linked with shared leadership were randomized to the same intervention group. Because treating each site as an independent cluster may underestimate variance, for sensitivity analysis we repeated analyses by using the 19 administrative groups as the cluster variable replacing the 30 individual practice sites.47,48 Early-Phase practices began the 6-month PTFI immediately after randomization. Late-Phase practices began the PTFI after a 4-month lag (control time) during which program staff had no direct practice contact.
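The text cites covariate adaptive randomization without naming the exact algorithm. One common form is Pocock-Simon minimization, sketched below under that assumption: each new unit goes preferentially to the arm that would minimize total imbalance on the 2 stratification factors (practice size and Medicaid share), with a random element retained. Units here stand for administrative groups, so linked sites inherit one assignment; the unit ids, level labels, `p_best`, and seed are hypothetical illustration values, not the study's.

```python
import random
from collections import defaultdict

ARMS = ("Early", "Late")


def minimization_assign(units, p_best=0.8, seed=0):
    """Assign units to arms by Pocock-Simon-style minimization (a sketch).

    units: list of dicts with keys 'id', 'size' ('<=2' or '>=3'), and
    'medicaid' ('20-40%' or '>40%'), processed in the order given.
    Returns a dict mapping unit id -> 'Early' or 'Late'.
    """
    rng = random.Random(seed)
    # counts[arm][(factor, level)] = number of units already in that arm
    counts = {arm: defaultdict(int) for arm in ARMS}
    assignment = {}
    for unit in units:
        levels = [("size", unit["size"]), ("medicaid", unit["medicaid"])]
        # Total imbalance across both factors if the unit joined each arm
        scores = {}
        for arm in ARMS:
            other = ARMS[1] if arm == ARMS[0] else ARMS[0]
            scores[arm] = sum(
                abs(counts[arm][lv] + 1 - counts[other][lv]) for lv in levels
            )
        if scores[ARMS[0]] == scores[ARMS[1]]:
            chosen = rng.choice(ARMS)  # tie: plain coin flip
        else:
            best = min(ARMS, key=lambda a: scores[a])
            worst = ARMS[1] if best == ARMS[0] else ARMS[0]
            # Favor the balancing arm with probability p_best
            chosen = best if rng.random() < p_best else worst
        assignment[unit["id"]] = chosen
        for lv in levels:
            counts[chosen][lv] += 1
    return assignment


if __name__ == "__main__":
    # Hypothetical administrative groups (the study had 19)
    groups = [
        {"id": f"G{i:02d}",
         "size": "<=2" if i % 2 else ">=3",
         "medicaid": ">40%" if i % 3 else "20-40%"}
        for i in range(19)
    ]
    print(minimization_assign(groups, seed=2011))
```

Setting `p_best` below 1 keeps assignments unpredictable to recruiters while still steering toward balance; with `p_best=1.0` the procedure becomes deterministic apart from ties.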

Intervention

The intervention was based on principles of practice-tailored facilitation13,26,30,34 and rapid-cycle change.13,18,24,31,49–52 Practice facilitation recognizes that imposed mandates for change are not likely to be sustained, and that incorporation of evidence-based practice requires tailoring an intervention based on individual practice needs.21,24,26 Facilitators develop ongoing relationships with practices to perform audits, feedback, training, and system redesign.

A practice facilitator was recruited, based on public health, primary care, and coaching experience. She had no previous experience with study practices and was introduced at their first educational session. The facilitator received training based on published literature, provided by a coinvestigator with facilitator training experience (MCR).24,49 Study coordination was performed by a separate individual.

At the beginning of the 6-month intervention, the practice facilitator conducted 1 to 2 days of observation to understand practice dynamics and establish relationships with staff. The facilitator then initiated the PTFI with each practice with a group meeting, reviewing baseline performance data with clinicians and staff. Guided by the facilitator, each practice set expectations and short-term goals designed to incrementally improve delivery of all 3 preventive services simultaneously, and planned steps to achieve them. Toolkits were provided, including materials useful for meeting goals (eg, BMI wheels, parent handouts, fluoride varnish kits).

The practice facilitator visited each practice approximately weekly during the 6-month PTFI; mean visit length was 1 hour (interquartile range 40–70 minutes). At each visit, the facilitator delivered rapid-cycle feedback, tailored to specific practice needs. She (1) reviewed a small convenience sample of ∼5 to 10 charts for well-child visits conducted the previous week and documented whether targeted services were performed; (2) plotted each week’s results on “run charts”; and (3) “huddled” briefly with available practice members to review run charts, assess what had worked during the previous week, brainstorm solutions for further improvement, and select new tools/procedures to implement during the coming week. For example, some practices needed training in BMI calculation/interpretation, some rearranged processes/documentation, and most learned fluoride varnish application. Updated run charts were posted in prominent locations, and the facilitator held structured feedback meetings with all practice members to review results of large-scale data collection after 6 full months of PTFI, and at 2 months after the PTFI program ended. The facilitator did not provide other support during the 2-month follow-up period of sustainability assessment.
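The weekly run charts described above can be sketched as follows, assuming each week yields a count of charts where a service was performed out of charts reviewed. The "shift" check (6 or more consecutive points on one side of a baseline median) is a widely used run-chart convention, included as an illustration; the text does not say which run-chart rules, if any, the facilitator applied, and the weekly counts below are hypothetical.

```python
from statistics import median


def weekly_percentages(weekly_counts):
    """Convert (performed, reviewed) chart counts per week into percentages.

    Weeks with no charts reviewed become None so they appear as gaps.
    """
    return [
        None if reviewed == 0 else 100.0 * performed / reviewed
        for performed, reviewed in weekly_counts
    ]


def longest_run(points, center):
    """Longest run of consecutive points strictly on one side of the center line.

    Points on the center line and missing weeks are skipped: they neither
    extend nor break a run (a common run-chart convention).
    """
    longest = current = 0
    side = 0
    for p in points:
        if p is None or p == center:
            continue
        s = 1 if p > center else -1
        current = current + 1 if s == side else 1
        side = s
        longest = max(longest, current)
    return longest


if __name__ == "__main__":
    # Hypothetical weekly (performed, reviewed) counts for one service
    weekly = [(0, 8), (1, 10), (3, 9), (5, 8), (6, 7), (8, 10), (9, 10), (7, 8)]
    points = weekly_percentages(weekly)
    center = median(points[:3])  # hypothetical baseline weeks
    print(points, center, longest_run(points, center) >= 6)
```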

Outcomes

Quality measure outcomes were measured at the individual well-child visit level (Table 1). For obesity, the outcome was appropriate screening and counseling at well-child visits for children 2 to <18 years of age, defined based on Healthcare Effectiveness Data and Information Set (HEDIS) and professional organizations’11,37 recommendations to include BMI calculated, documented, and plotted on a growth chart and, for overweight or obese children (BMI ≥85th percentile), documented counseling for nutrition and physical activity. For lead, the outcome was screening at 12 and 24 months of age per Ohio Department of Health guidelines.38,39 For practices using universal lead testing, this was defined as lead blood tests ordered within 3 months of the 12-month well-child visit, and within 3 months of the 24-month visit. For practices that performed selective testing, appropriate screening was defined as documented blood lead tests ordered within 3 months for children within high-risk zip codes, with Medicaid insurance, or with risk factors based on an Ohio Department of Health questionnaire. For fluoride, the primary outcome was varnish application during well visits for children 12 to 35 months of age, based on Ohio Department of Health guidelines and Ohio Medicaid reimbursement policy, unless the chart documented that the child had no teeth, had received varnish within the previous 6 months, or had a parent decline.41,45
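The visit-level definitions above translate naturally into per-visit decision rules. The sketch below assumes a hypothetical chart-abstraction record (`WellChildVisit` and its field names are illustrative, not the study's data dictionary). Two readings are assumptions and are flagged in comments: a documented varnish exception (parent declined, or varnish in the previous 6 months) is counted as meeting the measure rather than being dropped from the denominator, and low-risk children in selective-testing practices are treated as outside the lead denominator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WellChildVisit:
    """Hypothetical chart-abstraction record for one well-child visit."""
    age_months: int
    bmi_plotted: bool = False          # BMI calculated, documented, and plotted
    bmi_percentile: Optional[float] = None
    nutrition_counseling: bool = False
    activity_counseling: bool = False
    has_teeth: bool = True
    varnish_applied: bool = False
    varnish_within_6mo: bool = False   # primary care varnish in previous 6 mo
    parent_declined_varnish: bool = False


def obesity_met(v: WellChildVisit) -> Optional[bool]:
    """None = not eligible (outside 2 to <18 years)."""
    if not 24 <= v.age_months < 216:
        return None
    if not v.bmi_plotted or v.bmi_percentile is None:
        return False
    if v.bmi_percentile >= 85:  # overweight or obese
        return v.nutrition_counseling and v.activity_counseling
    return True


def fluoride_met(v: WellChildVisit) -> Optional[bool]:
    """None = not eligible (outside 12 to <36 months, or no teeth)."""
    if not 12 <= v.age_months < 36 or not v.has_teeth:
        return None
    # Assumption: a documented exception counts as meeting the measure.
    if v.parent_declined_varnish or v.varnish_within_6mo:
        return True
    return v.varnish_applied


def lead_met(universal_practice: bool, ordered_within_3mo: bool,
             high_risk_zip: bool = False, medicaid: bool = False,
             odh_risk_factor: bool = False) -> Optional[bool]:
    """Lead screening at a 12- or 24-month visit. None = not in denominator."""
    if universal_practice:
        return ordered_within_3mo
    if high_risk_zip or medicaid or odh_risk_factor:
        return ordered_within_3mo
    return None  # Assumption: low-risk children excluded under selective testing
```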

TABLE 1.

Quality Measures

| Domain | Quality Outcome | Age | Definition | References |
|---|---|---|---|---|
| Obesity | Screening and counseling at well-child visits | 2–<18 y | For all children: (1) BMI calculated, documented, and plotted on a growth chart. For overweight and obese childrenᵃ: (2) documented counseling for nutrition; (3) documented counseling for physical activity | 11, 37 |
| Lead | Screening for toxicity | 9–12 and 21–24 mo | For practices with universal screening: (1) lead blood test ordered. For practices with selective screening: (2) lead blood tests ordered for children with (a) high-risk zip code, OR (b) Medicaid insurance, OR (c) risk factors based on Ohio Department of Health questionnaire | 38, 39 |
| Dental | Fluoride varnish application | 12–<36 mo | For all children with teeth: fluoride varnish unless (a) parent declined, OR (b) primary care fluoride varnish application within previous 6 mo | 41, 45 |

ᵃ Overweight or obese: BMI ≥85th percentile.

Data Collection

Outcome data were collected by a separate evaluation team from January 19, 2011, to October 25, 2012, by chart reviews, at baseline before randomization and at 2-month intervals after randomization, over a total of 8 and 12 months for Early/Late practices, respectively.

Practice records were used to identify well-child visits for the appropriate age groups in the 2 months preceding each collection date; charts were reviewed in reverse chronological order until all were analyzed or a maximum of 100 charts were reviewed, whichever occurred first.
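The collection schedule and chart-selection rule above can be sketched as follows. Assumptions, labeled in the code: the 2-month look-back window is approximated as 61 days and excludes the collection date itself, and visits arrive as hypothetical `(visit_date, chart_id)` pairs already filtered to the right age group. The month lists mirror the time points in Table 3 (baseline through 8 months for Early-Phase, through 12 months for Late-Phase).

```python
from datetime import date, timedelta


def collection_months(phase):
    """Months after randomization with a chart pull: baseline (0), then every
    2 months through month 8 (Early-Phase) or month 12 (Late-Phase)."""
    last = 8 if phase == "Early" else 12
    return list(range(0, last + 1, 2))


def sample_charts(visits, collection_date, window_days=61, max_charts=100):
    """Pick charts for one data collection (a sketch).

    visits: iterable of (visit_date, chart_id) for age-eligible well-child
    visits. Keeps visits in the window before collection_date (assumed ~2
    months = 61 days, collection date excluded), newest first, and stops at
    max_charts or when the window is exhausted, whichever comes first.
    """
    start = collection_date - timedelta(days=window_days)
    in_window = [v for v in visits if start <= v[0] < collection_date]
    in_window.sort(key=lambda v: v[0], reverse=True)
    return in_window[:max_charts]


if __name__ == "__main__":
    # Hypothetical: one eligible visit per day
    visits = [(date(2011, 1, 1) + timedelta(days=i), f"chart-{i}") for i in range(200)]
    picked = sample_charts(visits, date(2011, 7, 20))
    print(collection_months("Late"), len(picked), picked[0])
```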

The facilitator kept detailed field notes of practice observations and interactions to guide her intervention and inform the qualitative analysis.

Sample Size

We estimated that 30 practices would participate, with 11 to 40 eligible well-child visits per practice per day. Using a 2-sided α, assuming a change in the Late-Phase group of up to 10%, and an intraclass correlation coefficient of 0.05,31,48 we would have 80% power to detect a 10% difference in performance rates between intervention groups with 52 charts screened per practice per outcome per data collection period.
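The role of the intraclass correlation can be made concrete with the usual design effect, 1 + (m − 1) × ICC: with m = 52 charts per practice and ICC = 0.05, each practice's 52 charts carry the information of roughly 52 / 3.55 ≈ 15 independent charts. The sketch below is a simplified normal-approximation power calculation for comparing 2 proportions at a single time point; it does not model the change-versus-change comparison the authors powered, and the proportions used are hypothetical, so it will not reproduce their figure.

```python
from statistics import NormalDist


def design_effect(cluster_size, icc):
    """Variance inflation for clustered sampling: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


def cluster_power(p1, p2, clusters_per_arm, cluster_size, icc, alpha=0.05):
    """Approximate power of a 2-sided two-proportion z test in a cluster trial.

    The per-arm sample (clusters x charts) is deflated by the design effect;
    the negligible far-tail rejection probability is ignored.
    """
    n_eff = clusters_per_arm * cluster_size / design_effect(cluster_size, icc)
    se = ((p1 * (1 - p1) + p2 * (1 - p2)) / n_eff) ** 0.5
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    return NormalDist().cdf(abs(p2 - p1) / se - z_crit)


if __name__ == "__main__":
    # Hypothetical proportions; 15 practices per arm, 52 charts, ICC 0.05
    print(design_effect(52, 0.05))
    print(cluster_power(0.10, 0.20, clusters_per_arm=15, cluster_size=52, icc=0.05))
```

Because the design effect grows with cluster size, adding charts per practice yields diminishing returns compared with adding practices.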

Statistical Analyses

Analyses used Stata SE 12.1 (Stata Corp, College Station, TX), setting the type I error at 0.05, without correction for multiple comparisons. For univariate analysis, Student’s t test was used for continuous variables and χ2 or Fisher’s exact test for categorical variables.53
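Fisher's exact test is the usual choice over χ2 when cells are sparse, as with baseline fluoride varnish, where only 1 Late-Phase practice applied varnish. The sketch below is a minimal stdlib implementation of the standard two-sided version (summing hypergeometric probabilities of all tables no more likely than the observed one); it is not the Stata routine the authors ran, and SciPy's `scipy.stats.fisher_exact` offers an equivalent, well-tested alternative.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Conditions on the margins and sums the hypergeometric probabilities of
    every table that is no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # Probability that the top-left cell equals x given fixed margins
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are counted
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, p)


if __name__ == "__main__":
    # Hypothetical counts: rows = Early/Late, columns = service yes/no
    print(fisher_exact_two_sided(0, 90, 1, 89))
```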

The unit of analysis was the well-child visit for each of the 3 study domains. The following a priori comparisons were performed for each preventive service outcome. (1) The relative effect of the PTFI versus control on targeted service delivery was assessed by comparing the change in the proportion of eligible children receiving each service at well-child visits during the first 4 months after randomization, comparing Early-Phase practices (PTFI time) with Late-Phase practices (control time). (2) The full effect of the 6-month PTFI was assessed for all practices by comparing service delivery after 6 months of the PTFI versus at baseline. (3) To assess whether the PTFI had a consistent effect throughout the study, improvement in service delivery during the 6-month PTFI was compared for Early versus Late practices. (4) Short-term sustainability of the PTFI effect was assessed by comparing service delivery after 6 months of the PTFI to delivery 2 months after the end of the PTFI (follow-up time) for all practices.
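The 4 a priori comparisons can be illustrated as point-estimate contrasts on the adjusted obesity percentages reported in Table 3. This is descriptive arithmetic only: the reported P values come from the mixed-effects models, not from these differences. The mapping of comparisons to time points is our reading of the design (Late-Phase PTFI starts at month 4, so its 6-month PTFI endpoint is month 10 and its follow-up is month 12); in particular, comparison (3) is taken here as change from each arm's PTFI start.

```python
# Adjusted obesity screening/counseling performance (%) from Table 3,
# keyed by months after randomization.
EARLY = {0: 3.5, 2: 72.7, 4: 82.8, 6: 86.5, 8: 87.1}
LATE = {0: 6.3, 2: 1.9, 4: 12.2, 6: 61.6, 8: 87.1, 10: 88.9, 12: 87.3}

PTFI_START = {"Early": 0, "Late": 4}  # Late-Phase began after a 4-month lag
PTFI_MONTHS, FOLLOW_UP_MONTHS = 6, 2


def change(series, start, end):
    """Percentage-point change between two time points."""
    return series[end] - series[start]


def a_priori_contrasts(early, late):
    """Point-estimate versions of comparisons (1) to (4)."""
    e0, l0 = PTFI_START["Early"], PTFI_START["Late"]
    e_end, l_end = e0 + PTFI_MONTHS, l0 + PTFI_MONTHS
    return {
        # (1) PTFI time (Early) vs control time (Late), first 4 months
        "1_ptfi_vs_control_4mo": change(early, 0, 4) - change(late, 0, 4),
        # (2) after the full 6-month PTFI vs baseline, each arm
        "2_early_full_vs_baseline": change(early, 0, e_end),
        "2_late_full_vs_baseline": change(late, 0, l_end),
        # (3) improvement during each arm's PTFI, Early minus Late
        "3_early_minus_late_during_ptfi":
            change(early, e0, e_end) - change(late, l0, l_end),
        # (4) sustainability: follow-up vs end of PTFI, each arm
        "4_early_sustain": change(early, e_end, e_end + FOLLOW_UP_MONTHS),
        "4_late_sustain": change(late, l_end, l_end + FOLLOW_UP_MONTHS),
    }


if __name__ == "__main__":
    for name, value in a_priori_contrasts(EARLY, LATE).items():
        print(f"{name}: {value:+.1f} points")
```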

For multivariable analysis, using Stata’s xtlogit function with mixed effects polynomial logistic regression, service delivery for each outcome was modeled on the Intervention group over time, adjusting for clustering by practice and assessing model fit by using Akaike’s Information Criterion.47,48,54–56 Covariates were included if they were significantly different between Early- and Late-Phase groups and if they changed the odds ratio point estimate by ≥10%. For sensitivity analysis, all analyses were repeated by using the 19 practice networks replacing their 30 practices as the cluster unit.
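Two model-building rules named above are simple enough to state in code: Akaike's Information Criterion (AIC = 2k − 2 ln L, lower is better) for choosing among candidate model fits, and the covariate-inclusion rule (imbalanced between groups and shifting the odds ratio point estimate by ≥10%). The mixed-effects fit itself is left to statistical software. We read "polynomial" as polynomial terms in time, which is an assumption, and the model labels, log-likelihoods, and odds ratios below are hypothetical.

```python
def aic(log_likelihood, n_params):
    """Akaike's Information Criterion: 2k - 2 ln(L). Lower is better."""
    return 2 * n_params - 2 * log_likelihood


def best_by_aic(fits):
    """fits: dict mapping a model label to (log_likelihood, n_params)."""
    return min(fits, key=lambda label: aic(*fits[label]))


def keep_covariate(differs_between_arms, or_unadjusted, or_adjusted,
                   threshold=0.10):
    """Retain a covariate only if it is imbalanced between Early- and
    Late-Phase groups AND moves the odds ratio point estimate by >= 10%."""
    relative_shift = abs(or_adjusted - or_unadjusted) / or_unadjusted
    return differs_between_arms and relative_shift >= threshold


if __name__ == "__main__":
    # Hypothetical fits with linear, quadratic, and cubic time terms
    fits = {"linear": (-500.0, 4), "quadratic": (-480.0, 5), "cubic": (-479.5, 6)}
    print(best_by_aic(fits))
    print(keep_covariate(True, 2.0, 2.5))
```

In this example the cubic term improves the log-likelihood by only 0.5, less than the 1-unit AIC penalty for the extra parameter, so the quadratic model is preferred.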

Comparative Case Study Analyses

Using data sources shown in Supplemental Appendix Table A1, qualitative analyses were conducted by a multidisciplinary study team (facilitator, study coordinator, data managers) with expertise in nursing, pediatrics, public health, epidemiology, sociology, and psychology. An outside analyst with no previous experience with the study provided external perspective and challenged the team to make their findings explicit. Team members with minimal involvement in the initial analysis served as auditors who challenged and asked for corroborating data for themes.24 This involvement of multiple data sources, disciplinary perspectives, and analytic frames represents methodological triangulation, a source of rigor and trustworthiness in qualitative analysis.57

The facilitator used multiple data sources to draft a 1-page descriptive case study of each practice’s intervention experience, including behavioral dynamics, communication and leadership styles, work ethics, barriers to change, and her perception of what motivated the practice to reach outcome goals. Each team member reviewed the draft and offered impressions of each practice. Through this iterative process, consensus was reached on emergent key descriptive traits for each practice.

The outside analyst created a separate list of descriptive traits and behaviors, noting how the facilitator tailored each practice’s intervention, then met with the team to compare lists of practice traits, habits, and behaviors. The analyst's independent description ultimately matched the study team’s portrayal, helping to explain the intensity of the facilitator’s tailoring process. An evolving definition of facilitation intensity was created to include other, not easily measured components (eg, time spent by the facilitator/study staff outside the practice responding to requests, amount of education/reminders needed, degree of tailoring needed).

Cross-case analyses involved study team members individually identifying themes in practice descriptive data related to the facilitation process and outcome, by using a combination of editing35 and immersion/crystallization approaches.52 Emergent themes were discussed, challenged, and refined during multiple meetings, and cross-cutting themes were identified.

We assessed whether there was a relationship between the level of facilitation intensity needed and practice’s improvement over time, by using the mixed effects logistic regression model for each service outcome.

Results

Participant Flow

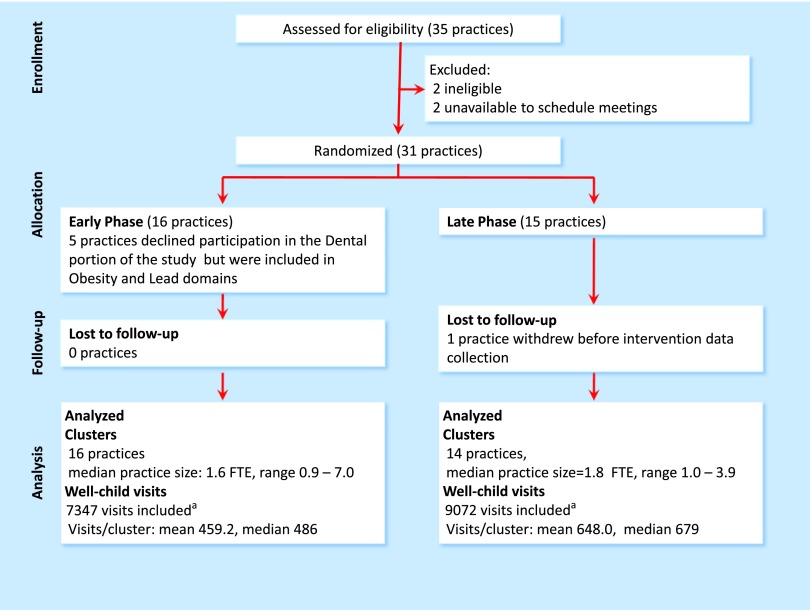

Practice recruitment and flow through the study are shown in Fig 1. Forty practices were sent invitations; 5 practices could not be reached. The remaining 35 practices were screened further for eligibility; 2 were not eligible and 2 declined to schedule education meetings. The remaining 31 practices were randomized, 16 to Early-Phase PTFI and 15 to Late-Phase PTFI. One Late-Phase practice withdrew before PTFI data collection. Data were collected for 16 419 well-child visits.

FIGURE 1.

Flow diagram. ᵃ More visits were included for Late-Phase practices because they had 4 months of control time before starting the intervention. FTE, full-time equivalent.

Baseline Data

Table 2 shows baseline data comparing Early- with Late-Phase practices; randomization effectively achieved balance between the intervention groups. There were no differences in number of clinicians or staff, clinician experience or gender, percentage of Medicaid visits, practice type or location, or use of electronic medical records (EMRs). Half of the practices included at least 40% of children covered by Medicaid. There were also no baseline differences between Early- and Late-Phase practices in the proportion of children receiving appropriate obesity screening/counseling: 3.5% (95% confidence interval [CI] 2.2%–5.8%) for Early practices versus 6.3% (3.9%–9.9%) for Late practices; lead screening: 62.2% (47.8%–74.7%) for Early practices versus 77.8% (64.7%–87.1%) for Late practices; or fluoride varnish application (only 1 Late-Phase practice applied fluoride varnish at baseline).

TABLE 2.

Practice Characteristics: Comparing Early- and Late-Phase Practices

| Characteristic | Early-Phase, 16 Practices | Late-Phase, 14 Practices | Intracluster Correlation Coefficient |
|---|---|---|---|
| Practice type, no. of practices | | | |
| Community clinic | 2 | 7 | — |
| Hospital-owned | 8 | 4 | — |
| Physician- or physician group-owned | 6 | 5 | — |
| Specialty, no. of practices | | | |
| Family medicine | 2 | 1 | — |
| Pediatrics | 14 | 13 | — |
| County, no. of practices | | | |
| Cuyahoga (Cleveland) | 11 | 11 | — |
| Surrounding counties | 5 | 3 | — |
| Percentage of pediatric patients enrolled in Medicaid, no. of practices | | | |
| 20%–40% | 8 | 7 | — |
| >40% | 8 | 7 | — |
| Health records, no. of practices | | | |
| Electronic | 9 | 6 | — |
| Paper | 7 | 8 | — |
| Clinicians, physicians, and nurse practitioners (n = 102) | | | |
| No. of clinicians per practice, mean (range, SD) | 3.50 (1–10, 2.34) | 3.64 (1–9, 2.27) | — |
| No. of clinician FTEs per practice, mean (range, SD) | 2.27 (0.9–7.0, 1.67) | 1.98 (1.0–3.9, 0.83) | — |
| Female gender, % | 69.8 | 67.3 | — |
| Years since professional degree, mean (SD) | 20.71 (8.57) | 24.45 (7.55) | — |
| Nonclinician staff FTEs per practice, mean (SD) | 4.74 (3.97) | 3.14 (1.67) | — |
| Well-child visit–level characteristics from chart reviews | | | |
| Age at well-child visit, y, mean per cluster (SD) | 6.73 (0.31) | 7.30 (1.09) | — |
| No. of eligible visits per cluster per data collection, mean (SD) | 91.84 (15.46) | 92.57 (10.39) | — |
| Eligible for obesity screening and counseling | 68.62 (12.34) | 72.24 (11.51) | 0.15 |
| Eligible for lead screening | 26.71 (9.79) | 22.95 (10.11) | 0.27 |
| Eligible for fluoride varnish | 19.11 (12.64) | 21.67 (7.75) | 0.16 |
| Baseline preventive service delivery, unadjusted, % | | | |
| Obesity screening and counseling | 5.2 | 5.6 | — |
| Lead screening | 61.3 | 67.8 | — |
| Fluoride varnish | 0 | 0.9 | — |

FTE, full-time equivalent; —, not applicable.

Outcomes

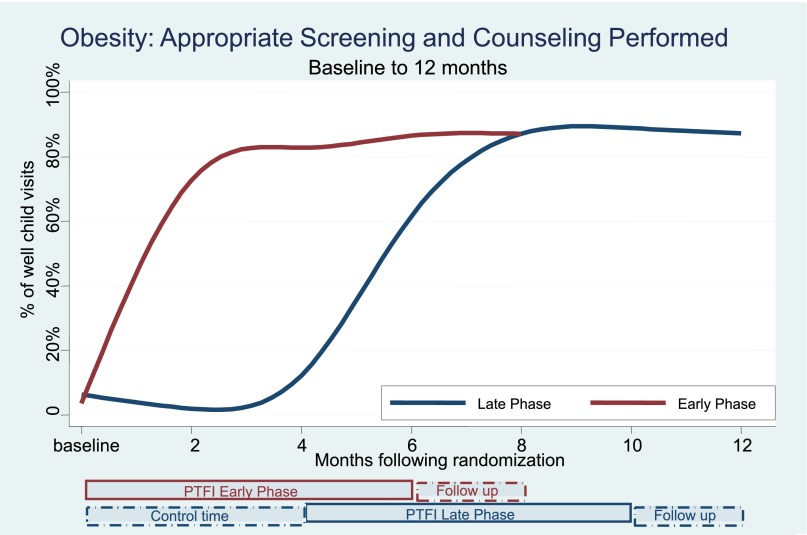

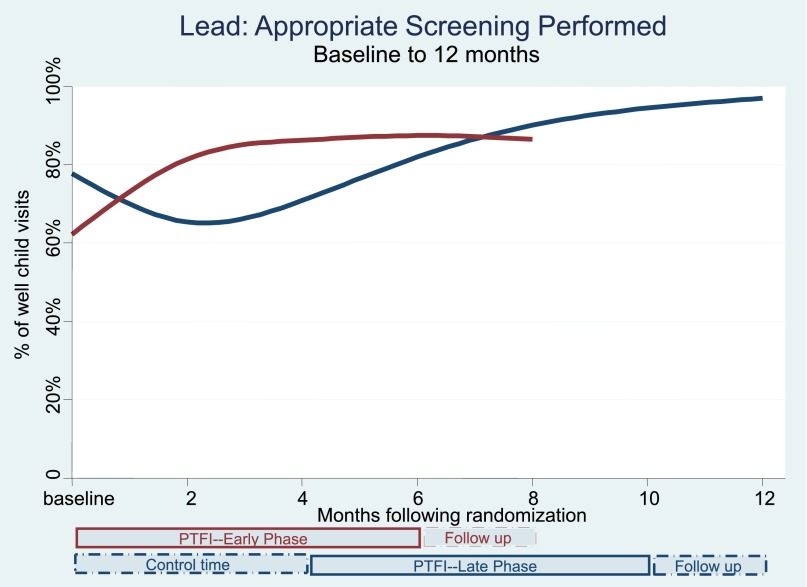

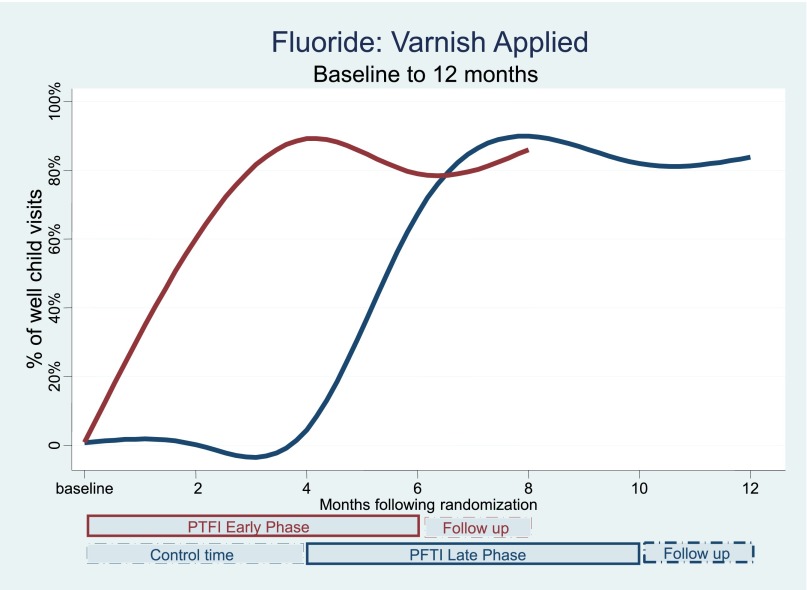

Table 3 and Figs 2, 3, and 4 show the changes in the delivery of the 3 preventive services, adjusted for clustering by practice, at each data collection point during the study. There were significant and simultaneous improvements in all 3 services (obesity, lead, fluoride) comparing Early-Phase PTFI time with Late-Phase control time at 4 months, and similarly large improvements in all 3 outcomes at the end of the 6-month PTFI versus baseline for all practices. Improvement was more dramatic for the obesity and fluoride outcomes, which had very low baseline rates, than for lead screening, which had a higher baseline rate.

TABLE 3.

Delivery of Targeted Services: Adjusted Results From the Mixed Effects Polynomial Logistic Regression Analyses

| % Performance | Baseline | 2 mo | 4 mo | 6 mo | 8 mo | 10 mo | 12 mo |
|---|---|---|---|---|---|---|---|
| Obesity screening and counseling | | | | | | | |
| Early-Phase practices | 3.5 | 72.7 | 82.8 | 86.5 | 87.1 | — | — |
| Late-Phase practices | 6.3 | 1.9 | 12.2 | 61.6 | 87.1 | 88.9 | 87.3 |
| Lead screening | | | | | | | |
| Early-Phase practices | 62.2 | 81.4 | 86.3 | 87.5 | 86.5 | — | — |
| Late-Phase practices | 77.8 | 65.4 | 70.9 | 82.0 | 90.1 | 94.5 | 97.0 |
| Fluoride varnish application | | | | | | | |
| Early-Phase practices | 0.01 | 60.0 | 89.1 | 78.9 | 86.0 | — | — |
| Late-Phase practices | 0.01 | 0 | 4.4 | 67.3 | 89.9 | 81.9 | 83.7 |

Column headings give time after randomization. —, not applicable.

FIGURE 2.

Obesity: detection and counseling performed.

FIGURE 3.

Lead: appropriate screening performed.

FIGURE 4.

Fluoride: varnish applied.

Obesity Screening and Counseling

During the first 4 months after randomization, obesity screening and counseling fell initially, then rose slightly in Late-Phase practices (control time) to 12.2% successful performance (95% CI 8.2%–17.8%), but rose substantially in Early-Phase practices (PTFI time) to 82.8% successful performance (76.1%–87.9%) (P < .001, comparing the change for Early- versus Late-Phase practices; Table 3, Fig 2). The full 6-month PTFI was associated with large improvements in obesity screening/counseling in all practices; obesity screening/counseling rose to 86.5% (80.9%–90.7%) in Early-Phase and 88.9% (83.7%–92.5%) in Late-Phase practices after 6 months of intervention, both P < .001 compared with baseline. The rate of improvement (percent change over the 6-month PTFI) did not differ significantly between Early- and Late-Phase practices (P = .14). For all practices, the improvement was sustained during the 2 months after the end of the PTFI (follow-up time; P = .663 and P = .192 for Early- and Late-Phase practices, respectively).

Lead Screening at 12 and 24 Months of Age

During the first 4 months after randomization, lead screening decreased in Late-Phase practices (control time) to 70.9% successful performance (56.8%–81.9%), but rose substantially in Early-Phase practices (PTFI time) to 86.3% successful performance (77.4%–92.0%) (P < .001, comparing the change for Early- versus Late-Phase practices; Table 3, Fig 3). The full 6-month PTFI was associated with improvement in lead screening in all practices; lead screening rose to 87.5% (79.2%–92.7%) in Early-Phase practices and to 94.5% (89.7%–97.1%) in Late-Phase practices, both P < .001 compared with baseline. The rate of improvement (percent change over the 6-month PTFI) did not differ significantly between Early- and Late-Phase practices (P = .62). For all practices, the improvement was sustained during the 2 months after the end of the PTFI, with Late-Phase practices showing further improvement during this time.

Fluoride Varnish Application

During the first 4 months after randomization, fluoride varnish application decreased very slightly in Late-Phase practices (control time) (P = .93) but rose substantially in Early-Phase practices (PTFI time) to 89.1% successful performance (82.8%–93.3%), P < .001 (Table 3, Fig 4). The full 6-month PTFI was associated with a large improvement in fluoride varnish application in all practices; application rose to 78.9% (68.8%–86.4%) in Early-Phase and to 81.9% (72.9%–88.3%) in Late-Phase practices by 6 months, both P < .001 compared with baseline. The rate of percent improvement over the 6-month PTFI did not differ significantly between Early- and Late-Phase practices (P = .65). Late-Phase practices sustained this improvement during the 2 months after the end of the PTFI (P = .57), whereas Early-Phase practices showed further improvement (P = .01).

Specific practice characteristics were not significantly associated with differences in service delivery. Results were unchanged with analyses using the 19 practice networks replacing the 30 practices as the cluster unit.

Tailoring the Facilitation Intervention

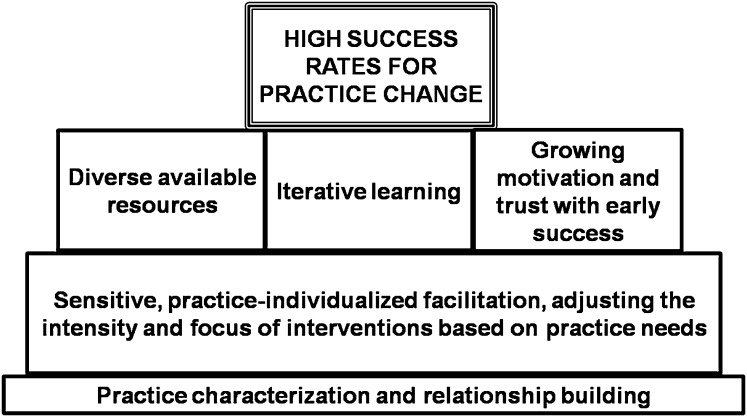

A key foundation for practice change was ongoing relationship-building with practices, coupled with the facilitator’s sensitive tailoring of the intervention to each practice’s level of need (Fig 5). Use of available resources, an iterative learning process, and practices’ growing motivation and trust, fueled by early successes, were critical stepping stones to successful practice change.

FIGURE 5.

Building blocks for reaching high success rates with practice change.

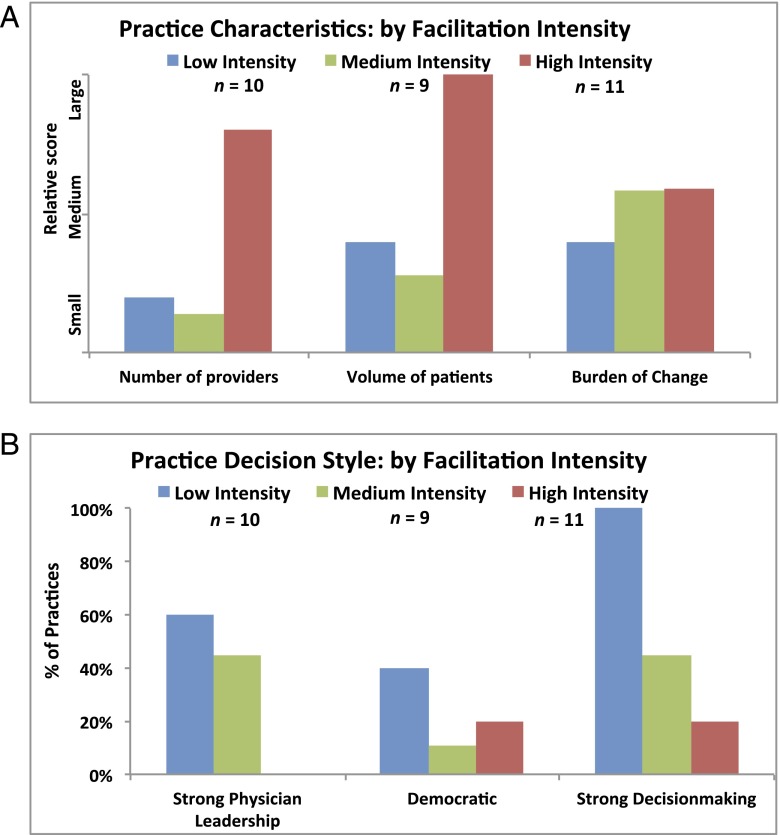

Qualitative analyses suggested 3 main practice profiles corresponding to level of need (low, medium, or high), which in turn reflected the intensity of facilitation required to foster change. Examples across this spectrum are included in the Supplemental Appendix. It was not possible to determine the facilitation intensity required by considering 1 or 2 factors alone (Fig 6 A and B). The combined effects of different practice characteristics and barriers needed to be considered together, along with the facilitator’s growing familiarity with the practice over time, to tailor the intervention intensity.

FIGURE 6.

A, Practice characteristics by facilitation intensity. B, Practice decision style by facilitation intensity.

For example, one might expect a large busy practice to require intensive intervention, but other characteristics may offset this (eg, a strong leader or practice-improvement process well-established at baseline). Likewise, a small, less-busy practice may require more intensive intervention under certain circumstances (eg, undergoing transition to an EMR).

There were performance differences between practices needing “low” versus “medium” versus “high” facilitation intensity for all 3 outcomes, with a “dose effect” throughout the study period; medium-intensity practices performed better than “low-intensity” practices, and “high-intensity” practices had the best performance, at baseline, after the full 6-month intervention, and after the sustainability period, 2 months after intervention end (Supplemental Appendix Table A2).

The intervention process involved repeated trial and error, with the facilitator helping practices brainstorm and pilot process changes. Although the educational and formal feedback sessions were designed as practice-wide meetings, many practices found large meetings disruptive; between these meetings, they preferred brief group “huddles” and one-on-one facilitator interactions to review weekly run charts and fine-tune changes. Meeting with individual providers was sometimes necessary; this could involve finding personal motivational hooks or instrumental solutions, and/or troubleshooting roles and processes. Often this involved showing providers where their performance lagged behind that of their peers, and/or reframing goals.

Preventive service documentation needed to be thorough and explicit, yet nonburdensome and tailored to practices’ needs, preferences, and sometimes evolving medical record systems. Certain services, such as behavioral counseling, may be underrepresented in the medical record, so some of the measured improvement may reflect better documentation rather than increased service delivery. We believe that even such an increase in documentation represents a positive effect of the intervention, because documentation is an important aspect of service delivery.

Discussion

The CHEC-UPPP randomized controlled trial and multimethod comparative case study process assessment evaluated the effectiveness of practice facilitation targeted to practices’ specific needs, including sensitive, supportive feedback, problem solving, and rapid-cycle change. Several previous studies evaluated practice coaching to improve pediatric preventive service delivery in community practices, including rapid-cycle feedback,31,58–65 academic detailing, and learning collaboratives; some targeted single services and used a higher facilitator-to-practice ratio.31,66,67 Previous studies have not described practice-specific characteristics associated with improvement.34,61,65

Our PTFI program led to large improvements in all 3 services: obesity detection/counseling, lead screening, and fluoride application. Most improvements were broad-based with no difference in improvement across practices with different characteristics. Addressing multiple improvements simultaneously using rapid-cycle feedback can efficiently provide an overall higher treatment “dose.”

For Late-Phase control practices, all 3 services showed a slight decrease from baseline to the 2-month time point; although statistically significant, these changes were much smaller in clinical significance than their subsequent improvements, and Early practices’ improvements, during intervention time.

The finding that facilitation intensity needs were associated with practice performance was not surprising, as practices were characterized retrospectively after completing the intervention. Future research can explore whether practices’ needs can be predicted; services could potentially be further targeted to practices with higher needs.

The key component of our facilitation process was the longitudinal evolving relationship between the facilitator and the practices and its interaction with (1) a diverse team with complementary skills; (2) repeated cycles of outcome assessment, feedback, and problem solving; and (3) a sensitive facilitator who could gain rapport with diverse members of each practice’s culture, judge their needs, and tailor the nature and intensity of the intervention accordingly.

This program’s findings are consistent with Solberg’s68 framework for practice improvement, incorporating both “hard” systems changes and “soft” changes in organizational culture, and with Bodenheimer et al’s69 findings that the most important predictors of care improvements are strong leadership and organizational structures that value quality. Some practices had competing priorities that influenced their “leverage points” and relative “readiness-to-change,” but all eventually achieved improvement.

By first engaging medical and administrative leaders and then reaching out to all practice members, we recognized that practices function like complex adaptive systems,70–74 while also establishing that high-quality care delivery depends on each individual’s contributions.68,75–77 The rapid improvements by most practices indicate that the intervention had great salience to providers and staff, that they place high value on delivering quality care, and that they were ready to take advantage of the opportunity to change, using the facilitator as a catalyst. Although classic practice-based quality improvement depends on formal group meetings to perform plan-do-study-act cycles, we found that the facilitator’s flexibility in accommodating many practices’ preference for brief informal “huddles” and one-on-one interactions was key to maintaining buy-in and motivation; clinicians and staff learned to take responsibility, both individually and as a group, for plan-do-study-act–led improvement.51,68 Neither our quantitative nor our qualitative analyses could discern individual practice characteristics that predicted success, probably because the individualized facilitation approach helped to overcome individual practice barriers.

This article builds on previous work to show how effective facilitation is supported by the facilitator’s evolving relationship-based understanding of each practice’s culture, linked to scientific evidence, goal setting, problem-solving, flexibility, feedback, a diverse supportive team, and space for learning and reflection, creating a sometimes messy and iterative process of practice improvement.24,49,52,78,79

This study’s strengths include our randomized design using a control group with a lagged intervention, multimethod comparative case study process assessment, research team with previous facilitation experience, number and diversity of practices, simultaneous inclusion of 3 unrelated outcomes, strong longitudinal practice relationships, and large improvements in all outcomes across all practices.43 Facilitation responsibilities were handled separately from research data collection, supporting the potential for dissemination beyond the research setting.

Our study has several limitations. First, PTFI may not be equally effective with different service targets, in different settings, or with facilitators who have different training or skills; the history of strong collaborative relationships between our academic medical center and regional practices may also have contributed to the program’s success. Second, we did not assess sustainability beyond 2 months after PTFI ended. It may be unrealistic to expect that practices can sustain successes indefinitely and initiate new improvements on their own. Instead, practice facilitation may work best as part of a redesigned primary care model, with biweekly or monthly facilitator visits to reinforce earlier successes and introduce new goals; such a model could provide long-term sustainability, perhaps supported by future pay-for-performance incentives. Because improvement was apparent in all practices within 2 to 4 months, shorter intervention cycles could also enhance efficiency. Third, cost-effectiveness analyses are difficult to perform for preventive services30; although we can estimate the cost of PTFI services, it is harder to precisely define future cost savings due to improved preventive care.13,14

Future research can explore wider dissemination, broader use in other contexts, and longer-term sustainability. Using this study as a template, we are currently scaling up the PTFI program to include additional practices and outcomes.

In conclusion, these results suggest that the PTFI holds promise as a method to advance meaningful, broad-based, generalizable, and sustainable improvements in pediatric preventive health care delivery.

Supplementary Material

Acknowledgments

The authors dedicate this manuscript to the memory of Dr Leona Cuttler; we are grateful for her inspiration and leadership.

We are indebted to the participating practices and to Lauren Birmingham, MA, for her work with data management.

Glossary

- CHEC-UPPP

Child Health Excellence Center: University-Practice-Public Partnership

- CI

confidence interval

- EMR

electronic medical record

- PTFI

Practice-tailored Facilitation Intervention

Footnotes

Dr Meropol helped to design and supervise the intervention, evaluation, and data collection, participated in the intervention, planned and carried out the analysis, drafted the initial manuscript, reviewed and revised the manuscript, and approved the final manuscript as submitted; Dr Schiltz assisted with the evaluation, analysis plan, and analysis, and reviewed and revised the manuscript; Dr Sattar assisted with and supervised the analysis, reviewed and revised the manuscript, and approved the final manuscript as submitted; Dr Stange advised and assisted in study design and in designing and implementing the intervention and evaluation, reviewed the analysis, reviewed and revised the manuscript, and approved the final manuscript as submitted; Ms Nevar assisted with study design and supervision, assisted with design of the evaluation and intervention, reviewed the analysis, and reviewed and approved the final manuscript as submitted; Ms Davey assisted with design of the data collection instrument, the intervention, and the qualitative analysis, participated in data collection and evaluation, carried out the intervention, assisted with the qualitative analysis, and reviewed and approved the final manuscript as submitted; Dr Ferretti helped to conceptualize and design the study, reviewed the results, and reviewed and approved the final manuscript as submitted; Ms Howell assisted with data collection instrument design, assisted with carrying out the intervention, participated in the intervention and evaluation, and reviewed and approved the final manuscript as submitted; Dr Strosaker assisted with study, intervention, and evaluation design, participated in the intervention, assisted with study supervision, and reviewed and approved the final manuscript as submitted; Ms Vavrek assisted with design of the data collection instruments and qualitative analysis, data collection, intervention, and evaluation, coordinated and supervised the intervention and data collection, assisted with data review and qualitative analysis, and reviewed and approved the final manuscript as submitted; Ms Bader assisted with critical review of the qualitative data from the intervention, and reviewed and approved the final manuscript as submitted; Ms Ruhe advised and assisted in study design, advised and assisted with intervention implementation and evaluation, advised on and reviewed the qualitative analysis, reviewed and revised the manuscript, and reviewed and approved the final manuscript as submitted; and Dr Cuttler conceptualized, designed, and supervised the study, designed the intervention and data collection, helped to design, supervised, and reviewed the analysis, and reviewed and revised the manuscript.

This trial has been registered at www.clinicaltrials.gov (identifier NCT01739166).

Dr Cuttler is deceased.

FINANCIAL DISCLOSURE: The authors have indicated they have no financial relationships relevant to this article to disclose.

FUNDING: This work was supported by a grant from the Medicaid Technical Assistance and Policy Program from the Ohio Department of Jobs and Family Services (Dr Cuttler) and by The Center for Child Health and Policy at Rainbow Babies and Children’s Hospital. A portion of Dr Stange’s time is additionally supported by a Clinical Research Professorship from the American Cancer Society. This publication was made possible by the Clinical and Translational Science Collaborative of Cleveland, UL1TR000439 from the National Center for Advancing Translational Sciences component of the National Institutes of Health (NIH), and NIH Roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official views of the NIH. Funded by the National Institutes of Health (NIH).

POTENTIAL CONFLICT OF INTEREST: The authors have indicated they have no potential conflicts of interest to disclose.

References

- 1. American Academy of Pediatrics. Recommendations for Preventive Pediatric Health Care. 2008. Available at: http://brightfutures.aap.org/pdfs/aap bright futures periodicity sched 101107.pdf. Accessed January 7, 2013

- 2. McGlynn EA, Damberg CL, Kerr EA, Schuster MA. Quality of care for children and adolescents: a review of selected clinical conditions and quality indicators. 2000. RAND Corporation. Available at: www.rand.org/pubs/monograph_reports/MR1283.html. Accessed January 7, 2013

- 3. Advisory Committee on Immunization Practices, Temte JL, Chair. Centers for Disease Control and Prevention. Recommended immunization schedule for persons aged 0 through 6 years–United States, 2012. 2012. Available at: http://aapredbook.aappublications.org/site/resources/IZSchedule0-6yrs.pdf. Accessed January 7, 2013

- 4. Mangione-Smith R, DeCristofaro AH, Setodji CM, et al. The quality of ambulatory care delivered to children in the United States. N Engl J Med. 2007;357(15):1515–1523

- 5. Zickafoose JS, Gebremariam A, Davis MM. Medical home disparities for children by insurance type and state of residence. Matern Child Health J. 2012;16(suppl 1):S178–S187

- 6. Wisk LE, Witt WP. Predictors of delayed or forgone needed health care for families with children. Pediatrics. 2012;130(6):1027–1037

- 7. Friedman B, Basu J. Health insurance, primary care, and preventable hospitalization of children in a large state. Am J Manag Care. 2001;7(5):473–481

- 8. Liu SY, Pearlman DN. Hospital readmissions for childhood asthma: the role of individual and neighborhood factors. Public Health Rep. 2009;124(1):65–78

- 9. Williams DR. The health of U.S. racial and ethnic populations. J Gerontol B Psychol Sci Soc Sci. 2005;60(spec no. 2):53–62

- 10. Evans M, Flores G, Gallia CA, et al. Children's Health Insurance Program Reauthorization Act (CHIPRA) 2011. Available at: www.ahrq.gov/policymakers/chipra/pubs/background-2012/index.html. Accessed December 31, 2012

- 11. HEDIS. 2012: Summary table of measure, product lines and changes: Health Information Data and Information Set (HEDIS). 2012. Available at: www.ncqa.org/Portals/0/HEDISQM/HEDIS 2011/SUMMARY_TABLE_OF_MEASURES_for_HEDIS_2012.pdf. Accessed December 31, 2012

- 12. Abrams M, Nuzum R, Mika S, Lawlor G. How the Affordable Care Act will strengthen primary care and benefit patients, providers, and payers. Issue Brief (Commonw Fund). 2011;1:1–28

- 13. Grumbach K, Bainbridge E, Bodenheimer T. Facilitating improvement in primary care: the promise of practice coaching. Issue Brief (Commonw Fund). 2012;15:1–14

- 14. Berwick DM, Nolan TW, Whittington J. The triple aim: care, health, and cost. Health Aff (Millwood). 2008;27(3):759–769

- 15. Koh HK, Sebelius KG. Promoting prevention through the Affordable Care Act. N Engl J Med. 2010;363(14):1296–1299

- 16. Rittenhouse DR, Shortell SM, Fisher ES. Primary care and accountable care—two essential elements of delivery-system reform. N Engl J Med. 2009;361(24):2301–2303

- 17. Report to Congress: National Strategy for Quality Improvement in Health Care. Washington, DC: US Department of Health and Human Services; 2011

- 18. Grumbach K, Mold JW. A health care cooperative extension service: transforming primary care and community health. JAMA. 2009;301(24):2589–2591

- 19. Leu MG, O’Connor KG, Marshall R, Price DT, Klein JD. Pediatricians’ use of health information technology: a national survey. Pediatrics. 2012;130(6). Available at: www.pediatrics.org/cgi/content/full/130/6/e1441

- 20. Klein JD, Sesselberg TS, Johnson MS, et al. Adoption of body mass index guidelines for screening and counseling in pediatric practice. Pediatrics. 2010;125(2):265–272

- 21. Stange KC. One size doesn’t fit all. Multimethod research yields new insights into interventions to increase prevention in family practice. J Fam Pract. 1996;43(4):358–360

- 22. Poses RM. One size does not fit all: questions to answer before intervening to change physician behavior. Jt Comm J Qual Improv. 1999;25(9):486–495

- 23. McMahon LF, Jr, Hayward R, Saint S, Chernew ME, Fendrick AM. Univariate solutions in a multivariate world: can we afford to practice as in the “good old days”? Am J Manag Care. 2005;11(8):473–476

- 24. Ruhe MC, Weyer SM, Zronek S, Wilkinson A, Wilkinson PS, Stange KC. Facilitating practice change: lessons from the STEP-UP clinical trial. Prev Med. 2005;40(6):729–734

- 25. Barkin SL, Scheindlin B, Brown C, Ip E, Finch S, Wasserman RC. Anticipatory guidance topics: are more better? Ambul Pediatr. 2005;5(6):372–376

- 26. Stange KC, Goodwin MA, Zyzanski SJ, Dietrich AJ. Sustainability of a practice-individualized preventive service delivery intervention. Am J Prev Med. 2003;25(4):296–300

- 27. Goodwin MA, Zyzanski SJ, Zronek S, et al. The Study to Enhance Prevention by Understanding Practice (STEP-UP): a clinical trial of tailored office systems for preventive service delivery. Am J Prev Med. 2001;21(1):20–28

- 28. Bordley WC, Margolis PA, Stuart J, Lannon C, Keyes L. Improving preventive service delivery through office systems. Pediatrics. 2001;108(3). Available at: www.pediatrics.org/cgi/content/full/108/3/E41

- 29. Honigfeld L, Chandhok L, Spiegelman K. Engaging pediatricians in developmental screening: the effectiveness of academic detailing. J Autism Dev Disord. 2012;42(6):1175–1182

- 30. Nagykaldi Z, Mold JW, Aspy CB. Practice facilitators: a review of the literature. Fam Med. 2005;37(8):581–588

- 31. Margolis PA, Lannon CM, Stuart JM, Fried BJ, Keyes-Elstein L, Moore DE, Jr. Practice based education to improve delivery systems for prevention in primary care: randomised trial. BMJ. 2004;328(7436):388

- 32. Soumerai SB, Avorn J. Principles of educational outreach (‘academic detailing’) to improve clinical decision making. JAMA. 1990;263(4):549–556

- 33. Ragazzi H, Keller A, Ehrensberger R, Irani AM. Evaluation of a practice-based intervention to improve the management of pediatric asthma. J Urban Health. 2011;88(suppl 1):38–48

- 34. Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med. 2012;10(1):63–74

- 35. Crabtree BF. Primary care practices are full of surprises! Health Care Manage Rev. 2003;28(3):279–283, discussion 289–290

- 36. Ruhe MC, Carter C, Litaker D, Stange KC. A systematic approach to practice assessment and quality improvement intervention tailoring. Qual Manag Health Care. 2009;18(4):268–277

- 37. Barlow SE; Expert Committee. Expert committee recommendations regarding the prevention, assessment, and treatment of child and adolescent overweight and obesity: summary report. Pediatrics. 2007;120(suppl 4):S164–S192

- 38. Advisory Committee on Childhood Lead Poisoning Prevention, Centers for Disease Control and Prevention. Low-level lead exposure harms children: a renewed call for primary prevention. January 4, 2012. Available at: www.cdc.gov/nceh/lead/ACCLPP/Final_Document_030712.pdf. Accessed April 2, 2014

- 39. Ohio Department of Health, Ohio Healthy Homes and Lead Poisoning Program. Lead testing requirements and medical management recommendations for children under the age of six years. 2010. Available at: www.odh.ohio.gov/~/media/ODH/ASSETS/Files/cfhs/lead poisoning - children/ohiochildhoodleadpoisoningpreventionscreeningrecommendations.ashx. Accessed November 25, 2012

- 40. American Academy of Pediatric Dentistry (AAPD). Clinical guideline on periodicity of examination, preventive dental services, anticipatory guidance/counseling and oral treatment for infants, children, and adolescents. Chicago (IL): American Academy of Pediatric Dentistry (AAPD); 2009. Available at: www.guideline.gov/content.aspx?id=15257. Accessed April 2, 2014

- 41. Section on Pediatric Dentistry and Oral Health. Preventive oral health intervention for pediatricians. Pediatrics. 2008;122(6):1387–1394

- 42. Dingle J, Badger GF, Jordan WS. Illness in the Home. A study of 25,000 Illnesses in a Group of Cleveland Families. Cleveland, OH: The Press of Western Reserve University; 1964

- 43. Carey TS, Kinsinger L, Keyserling T, Harris R. Research in the community: recruiting and retaining practices. J Community Health. 1996;21(5):315–327

- 44. Bloom HR, Zyzanski SJ, Kelley L, Tapolyai A, Stange KC. Clinical judgment predicts culture results in upper respiratory tract infections. J Am Board Fam Pract. 2002;15(2):93–100

- 45. Ohio Department of Health. Facts: about fluoride varnish information for parents and caregivers. 2012. Available at: www.odh.ohio.gov/~/media/ODH/ASSETS/Files/ohs/oral health/flvarnishfactsheetforparents.ashx. Accessed December 5, 2012

- 46. Aickin M. A simulation study of the validity and efficiency of design-adaptive allocation to two groups in the regression situation. Int J Biostat. 2009;5(1):19

- 47. Localio AR, Berlin JA, Ten Have TR, Kimmel SE. Adjustments for center in multicenter studies: an overview. Ann Intern Med. 2001;135(2):112–123

- 48. Campbell MJ, Donner A, Klar N. Developments in cluster randomized trials and statistics in medicine. Stat Med. 2007;26(1):2–19

- 49. Knox L, Taylor EF, Geonnotti K, et al. Developing and Running a Primary Care Practice Facilitation Program: A How-to Guide. Rockville, MD: Agency for Healthcare Research and Quality; 2011

- 50. Langley G, Nolan KM, Nolan TW, Norman CL, Provost LP. The Improvement Guide: A Practical Approach to Enhancing Organizational Performance. 2nd ed. San Francisco, CA: Jossey-Bass; 2009

- 51. Berwick DM. The science of improvement. JAMA. 2008;299(10):1182–1184

- 52. Cohen D, McDaniel RR, Jr, Crabtree BF, et al. A practice change model for quality improvement in primary care practice. J Healthc Manag. 2004;49(3):155–168, discussion 169–170

- 53. Vittinghoff E, Glidden D, Shiboski S, McCulloch C. Regression Methods in Biostatistics: Linear, Logistic, Survival, and Repeated Measures Models. New York, NY: Springer; 2012

- 54. Rabe-Hesketh S, Skrondal A. Multilevel and Longitudinal Modeling Using Stata. College Station, TX: Stata Press; 2008

- 55. Hedeker D, Gibbons RD. Longitudinal Data Analysis. Hoboken, NJ: John Wiley & Sons; 2006

- 56. Localio AR, Berlin JA, Ten Have TR. Longitudinal and repeated cross-sectional cluster-randomization designs using mixed effects regression for binary outcomes: bias and coverage of frequentist and Bayesian methods. Stat Med. 2006;25(16):2720–2736

- 57. Winickoff RN, Coltin KL, Morgan MM, Buxbaum RC, Barnett GO. Improving physician performance through peer comparison feedback. Med Care. 1984;22(6):527–534

- 58. Bunik M, Federico MJ, Beaty B, Rannie M, Olin JT, Kempe A. Quality improvement for asthma care within a hospital-based teaching clinic. Acad Pediatr. 2011;11(1):58–65

- 59. Bryce FP, Neville RG, Crombie IK, Clark RA, McKenzie P. Controlled trial of an audit facilitator in diagnosis and treatment of childhood asthma in general practice. BMJ. 1995;310(6983):838–842

- 60. McCowan C, Neville RG, Crombie IK, Clark RA, Warner FC. The facilitator effect: results from a four-year follow-up of children with asthma. Br J Gen Pract. 1997;47(416):156–160

- 61. Mold JW, Aspy CA, Nagykaldi Z; Oklahoma Physicians Resource/Research Network. Implementation of evidence-based preventive services delivery processes in primary care: an Oklahoma Physicians Resource/Research Network (OKPRN) study. J Am Board Fam Med. 2008;21(4):334–344

- 62. Baskerville NB, Hogg W, Lemelin J. Process evaluation of a tailored multifaceted approach to changing family physician practice patterns improving preventive care. J Fam Pract. 2001;50(3):W242–249

- 63. Smith KD, Merchen E, Turner CD, Vaught C, Fritz T, Mold J. Improving the rate and quality of Medicaid well child care exams in primary care practices. J Okla State Med Assoc. 2010;103(7):248–253

- 64. Duncan P, Frankowski B, Carey P, et al. Improvement in adolescent screening and counseling rates for risk behaviors and developmental tasks. Pediatrics. 2012;130(5). Available at: www.pediatrics.org/cgi/content/full/130/5/e1345

- 65. Van Cleave J, Kuhlthau KA, Bloom S, et al. Interventions to improve screening and follow-up in primary care: a systematic review of the evidence. Acad Pediatr. 2012;12(4):269–282

- 66. Lannon CM, Flower K, Duncan P, Moore KS, Stuart J, Bassewitz J. The Bright Futures Training Intervention Project: implementing systems to support preventive and developmental services in practice. Pediatrics. 2008;122(1). Available at: www.pediatrics.org/cgi/content/full/122/1/e163

- 67. Lazorick S, Crowe VL, Dolins JC, Lannon CM. Structured intervention utilizing state professional societies to foster quality improvement in practice. J Contin Educ Health Prof. 2008;28(3):131–139

- 68. Solberg LI. Improving medical practice: a conceptual framework. Ann Fam Med. 2007;5(3):251–256

- 69. Bodenheimer T, Wang MC, Rundall TG, et al. What are the facilitators and barriers in physician organizations’ use of care management processes? Jt Comm J Qual Saf. 2004;30(9):505–514

- 70. Griffiths F, Byrne D. General practice and the new science emerging from the theories of ‘chaos’ and complexity. Br J Gen Pract. 1998;48(435):1697–1699

- 71. Miller WL, McDaniel RR, Jr, Crabtree BF, Stange KC. Practice jazz: understanding variation in family practices using complexity science. J Fam Pract. 2001;50(10):872–878

- 72. Stroebel CK, McDaniel RR, Jr, Crabtree BF, Miller WL, Nutting PA, Stange KC. How complexity science can inform a reflective process for improvement in primary care practices. Jt Comm J Qual Patient Saf. 2005;31(8):438–446

- 73. Sweeney K. Complexity in Primary Care. Oxon, UK: Radcliffe Publishing Ltd; 2006

- 74. Litaker D, Tomolo A, Liberatore V, Stange KC, Aron D. Using complexity theory to build interventions that improve health care delivery in primary care. J Gen Intern Med. 2006;21(suppl 2):S30–S34

- 75. Ellis B, Herbert SI. Complex adaptive systems (CAS): an overview of key elements, characteristics and application to management theory. Inform Prim Care. 2011;19(1):33–37

- 76. Doebbeling BN, Flanagan ME. Emerging perspectives on transforming the healthcare system: redesign strategies and a call for needed research. Med Care. 2011;49(suppl):S59–S64

- 77. Krzyzanowska MK, Kaplan R, Sullivan R. How may clinical research improve healthcare outcomes? Ann Oncol. 2011;22(suppl 7):vii10–vii15

- 78. Miller WL, Crabtree BF, Nutting PA, Stange KC, Jaen CR. Primary care practice development: a relationship-centered approach. Ann Fam Med. 2010;8(suppl 1):S68–S79; S92

- 79. Litaker D, Ruhe M, Flocke S. Making sense of primary care practices’ capacity for change. Transl Res. 2008;152(5):245–253
