Abstract

Behavioral data support the commonsense view that babies elicit different responses than adults do. Behavioral research also has supported the babyface overgeneralization hypothesis: the adaptive value of responding appropriately to babies produces a tendency for these responses to be overgeneralized to adults whose facial structure resembles that of babies. Here we show a neural substrate for responses to babies and for babyface overgeneralization in the amygdala and the fusiform face area (FFA). Both regions showed a greater percentage BOLD signal change relative to fixation when participants viewed faces of babies or babyfaced men than when they viewed faces of maturefaced men. Viewing the first two categories also yielded greater effective connectivity between the two regions. Facial qualities previously shown to elicit strong neural activation could not account for the effects; babyfaced men were distinguished only by their resemblance to babies. The preparedness to respond to infantile facial qualities generalizes to babyfaced men in perceivers’ neural responses just as it does in their behavioral reactions.

It is a truism that babies elicit different responses than adults do. Differential responses to infants include preferential attention (McCall & Kennedy, 1980), more smiling (Schleidt, Schiefenhovel, Stanjek, & Krell, 1980), more protective reactions (Alley, 1983), closer approach, distinctive facial expressions that exaggerate a universal greeting response (see Eibl-Eibesfeldt, 1989, for a review), and impressions of lesser threat and intelligence as well as greater attractiveness, submissiveness, physical weakness, naïveté, warmth, and lovability (Berry & McArthur, 1986; Zebrowitz, Fellous, Mignault, & Andreoletti, 2003). Such effects are shown by infant perceivers as well as by perceivers from diverse cultures, suggesting a biological preparedness to respond to the facial qualities that identify infants (Lorenz, 1942).

According to the babyface overgeneralization hypothesis, the adaptive value of responding appropriately to babies, such as giving protection and inhibiting aggression, produces a tendency for responses to infants to be generalized to adults whose facial structure resembles them (Zebrowitz, 1996, 1997). Consistent with this hypothesis, more babyfaced individuals are perceived to have more childlike traits than their maturefaced peers: more naïve, submissive, physically weak, warm, and honest (Montepare & Zebrowitz, 1998). Moreover, cross-cultural agreement in reactions to babyfaced adults (Zebrowitz, Montepare, & Lee, 1993a) and reactions to babyfaced adults by infants and young children (Keating & Bai, 1986; Kramer, Zebrowitz, San Giovanni, & Sherak, 1995; Montepare & Zebrowitz-McArthur, 1989) are consistent with the argument that impressions of faces that vary in babyfaceness reflect a biologically prepared response.

The purpose of the present study was to investigate the neural mechanisms for babyface overgeneralization using fMRI. We focused on the amygdala because it is activated by emotionally salient stimuli (Phelps et al., 2000). Such stimuli include faces, particularly those that are attractive (Aharon et al., 2001; Winston, O’Doherty, Kilner, Perrett, & Dolan, 2007) or that display affect (Fitzgerald, Angstadt, Jelsone, Nathan, & Phan, 2006). Babies are arguably in the category of emotionally salient stimuli (Lorenz, 1942), a claim that is supported by various responses to their faces, including greater preferences (Feldman & Nash, 1978); greater emotional appeal (Berman, 1976); increased facial muscle activity that is typically associated with pleasant or happy facial expressions (Hildebrandt & Fitzgerald, 1978); and increased pupil dilation, a response to emotionally toned stimuli (Green, Kraus, & Green, 1979; Hess & Polt, 1960). We also investigated activation in the fusiform face area (FFA), both because it and analogous brain regions in monkeys are activated more by faces than by objects (Kanwisher, McDermott, & Chun, 1997; Kanwisher & Yovel, 2006; Perrett, Rolls, & Caan, 1982; Perrett et al., 1984) and because it is activated more by emotionally salient faces (Gobbini, Leibenluft, Santiago, & Haxby, 2004; Golby, Gabrieli, Chiao, & Eberhardt, 2001; Lewis et al., 2003). We predicted that faces of babies would elicit higher amygdala and FFA activation, due to their emotional salience, and that faces of babyfaced adults would elicit higher activation than those of maturefaced adults, due to the babyface overgeneralization effect.

We considered four potential confounding variables that might account for our predicted results: attractiveness, smiling, a distinctive facial structure, and structural similarity of faces within each category. These variables are pertinent because attractive faces have been shown to elicit greater amygdala activation (Winston et al., 2007), as has laughter (Sander & Scheich, 2005), and emotionally expressive faces elicit more activation of the FFA (Ganel, Valyear, Goshen-Gottstein, & Goodale, 2005). Distinctive faces, defined as those with a facial structure farther from the mean face, also have been shown to elicit greater FFA activation (Loffler, Yourganov, Wilkinson, & Wilson, 2005). So do faces with less within-category structural similarity when presented in a block design, an effect attributed to greater neuronal habituation when sequentially presented stimuli excite the same neurons because of their structural similarity (Jiang et al., 2006; Winston, Henson, Fine-Goulden, & Dolan, 2004). We expected that the predicted effects of babyfaceness would not be accounted for by these other facial qualities.

METHOD

Participants

Functional MRI data were provided by 17 healthy, Caucasian participants (9 females), 21–36 years old (M = 26.5). All were right handed (self-reported), and were paid $80. Babyface and attractiveness ratings on 7-point scales were provided by a different group of 17 Caucasian raters (9 females) 18–21 years old (M = 18.8), who received credit toward an introductory psychology course requirement.

Stimuli

One hundred twenty grayscale faces with direct gaze formed twelve equal a priori categories: babies, babyfaced, maturefaced, low attractive, high attractive, disfigured, elderly, female, angry, disgust, fear and happy. Except for the female and baby categories, all were adult males. Except for the emotion faces, all had neutral expressions with closed mouths. Faces were normalized: mean luminance and contrast were equated; the eyes were at the same height; and the middle of the eyes was at the same location. A small fixation cross was superimposed on the mid-point of the eyes. All of the neutral expression faces had been used in a previous study (Zebrowitz et al., 2003).

The current study focused on the first three face categories: babies, babyfaced men, and maturefaced men. These included faces of 10 Caucasian infants, ranging in age from 5 to 9 months, drawn from previous research and faces of 20 young adult Caucasian men, also drawn from previous research (Hildebrandt & Fitzgerald, 1979; Zebrowitz et al., 2003). The men were drawn from a longitudinal study of representative samples of individuals born in Berkeley, California, or attending school in Oakland, California (Eichorn, 1981).

Babyfaceness, attractiveness, and smiling

The men’s faces previously had been rated in comparison with 185 other men of the same age on a 7-point scale with endpoints labeled babyfaced/maturefaced (Zebrowitz, Olson, & Hoffman, 1993b). The 10 maturefaced men (Mage = 17.4, SD = 0.52) were in the bottom 30% in babyface ratings, and the 10 babyfaced men (Mage = 17.6, SD = 0.48) were in the top 20%. When the faces were re-rated on the same scale in the context of the 12 face categories used in the present study, the designation of the babyfaced and maturefaced categories was sustained: babyfaced males M = 4.35, SD = 0.34; maturefaced males M = 3.42, SD = 0.43; t(18) = 5.38, p < .001. These two face categories were differentiated not only by subjective babyface ratings but also by their facial metrics. A connectionist model trained to differentiate faces of babies and adults from facial metric inputs (cf. Zebrowitz et al., 2003) was subsequently activated significantly more by facial metric inputs from the babyfaced men (M = 19.1, SD = 8.63) than from the maturefaced men (M = 10.3, SD = 3.43), t(18) = 3.01, p = .01. Attractiveness ratings on a 7-point scale with endpoints labeled unattractive/attractive were made by the same judges who provided babyface ratings in the present study. Smile ratings on a 7-point scale with endpoints labeled no smile/big smile were taken from a previous study (Zebrowitz et al., 2003).
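The reported t values can be approximately re-derived from the published category means and SDs. The sketch below is a minimal check under stated assumptions: it uses a pooled-variance (Student's) independent-samples t-test with 10 faces per category, and the function name is hypothetical; the statistics software used in the study is not specified.

```python
import math

def pooled_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t (equal variances assumed) from summary statistics.

    Takes group means, sample SDs, and group sizes; returns (t, df).
    """
    df = n1 + n2 - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (m1 - m2) / se, df

# Re-rated babyfaceness: babyfaced vs. maturefaced men (10 faces each).
t_rating, df_rating = pooled_t_from_summary(4.35, 0.34, 10, 3.42, 0.43, 10)
# Connectionist-model activation: babyfaced vs. maturefaced men.
t_model, df_model = pooled_t_from_summary(19.1, 8.63, 10, 10.3, 3.43, 10)
print(f"babyface ratings: t({df_rating}) = {t_rating:.2f}")
print(f"model activation: t({df_model}) = {t_model:.2f}")
```

The recomputed values (about 5.36 and 3.00) fall within rounding of the reported t(18) = 5.38 and 3.01, since the published means and SDs are rounded to two decimals. The same function applies to the attractiveness and distinctiveness comparisons reported under Alternative explanations.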

Distinctiveness and within category similarity

To assess the faces’ distinctiveness (distance from the centroid) and their within-category structural similarity, we computed their facial metrics (cf. Zebrowitz et al., 2003) and performed a principal components analysis (PCA) to decompose the metrics into a set of eigenvectors that described the variation among the faces. We then used the PCs to describe the distinctiveness and within-category similarity of the faces (described in Appendix 1).
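A minimal NumPy sketch of these distance measures follows. It assumes that the facial metrics form a faces × metrics array, that the PCA is fitted to whichever faces are passed in, and that each PC is scaled to unit variance so that all PCs are weighted equally and Euclidean distances on the scores are Mahalanobis distances. The function names and the random "metrics" are hypothetical; the actual metrics and PCA software are not specified. The cross-category option corresponds to the baby-to-adult pairwise distances reported under Alternative explanations.

```python
import numpy as np

def pca_standardized_scores(metrics):
    """Project faces (rows) onto principal components, scaling each PC to unit variance.

    Equal weighting of PCs means Euclidean distances between these scores are
    Mahalanobis distances in the space spanned by the retained PCs.
    """
    X = np.asarray(metrics, dtype=float)
    Xc = X - X.mean(axis=0)                       # center on the mean face (centroid)
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    keep = S > S[0] * 1e-10                       # drop numerically null components
    scores = Xc @ Vt[keep].T                      # each face's score on each PC
    return scores / scores.std(axis=0, ddof=1)    # unit variance per PC

def distinctiveness(z):
    """Distance of each face from the centroid, which is the origin after centering."""
    return np.linalg.norm(z, axis=1)

def mean_pairwise_distance(za, zb=None):
    """Mean distance among faces in za, or between every face in za and every face in zb."""
    if zb is None:
        d = np.linalg.norm(za[:, None, :] - za[None, :, :], axis=-1)
        iu = np.triu_indices(len(za), k=1)        # count each unordered pair once
        return d[iu].mean()
    d = np.linalg.norm(za[:, None, :] - zb[None, :, :], axis=-1)
    return d.mean()

# Hypothetical data: 30 faces (10 per category) x 8 facial metrics.
rng = np.random.default_rng(0)
metrics = rng.normal(size=(30, 8))
labels = np.repeat(["babies", "babyfaced", "maturefaced"], 10)

z = pca_standardized_scores(metrics)
dist = distinctiveness(z)
for cat in ["babies", "babyfaced", "maturefaced"]:
    in_cat = labels == cat
    print(cat, round(dist[in_cat].mean(), 2), round(mean_pairwise_distance(z[in_cat]), 2))
# Resemblance to babies: every baby paired with every babyfaced man.
print(round(mean_pairwise_distance(z[labels == "babies"], z[labels == "babyfaced"]), 2))
```

Scaling the PC scores rather than the raw metrics keeps the equal-weighting step explicit; with full-rank data it gives the same distinctiveness values as a Mahalanobis distance computed from the inverse covariance of the raw metrics.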

Procedure

MRI scanning was conducted at the MGH/MIT/HST/Martinos Center. Stimuli were presented with E-Prime using a back-projection system and a set of mirrors mounted on the head coil. Participants passively viewed the faces with instructions to maintain central fixation throughout the task and to press a button whenever they saw a large fixation cross; this task was included to maintain their alertness. The experimental protocol conformed to all regulatory standards and was approved by the IRBs at the MGH/MIT/HST/Martinos Center and at Brandeis University. All participants signed an informed consent form.

Experimental paradigm

The faces were presented in a six-run block design. Within each run, blocks of faces alternated with blocks of fixation crosses, each block lasting 20 s. Each face block included 10 faces from the same category. All 120 faces were shown within each run, with the order of face categories counterbalanced across runs (Dale, 1999). Each face and each fixation cross was presented for 200 ms, followed by a blank grey screen for 1800 ms.
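The block timing can be turned into onset files for a block-design GLM. The sketch below is one possible version under stated assumptions: every face block is followed by one 20-s fixation block, and the output uses FSL FEAT's custom three-column EV format (onset, duration, weight, in seconds). Whether a run began with fixation and the actual counterbalanced category orders are not reported, so both are inputs here, and the helper names are hypothetical.

```python
BLOCK_S = 20.0          # every face block and fixation block lasts 20 s
TRIAL_S = 2.0           # 200-ms stimulus + 1800-ms blank grey screen
FACES_PER_BLOCK = 10

CATEGORIES = ["babies", "babyfaced", "maturefaced", "low attractive",
              "high attractive", "disfigured", "elderly", "female",
              "angry", "disgust", "fear", "happy"]

def block_onsets(category_order, start_with_fixation=False):
    """Map each face category to (onset_s, duration_s, weight) rows for one run.

    Assumes each face block is followed by one 20-s fixation block.
    """
    assert FACES_PER_BLOCK * TRIAL_S == BLOCK_S   # 10 trials x 2 s fill one block
    t = BLOCK_S if start_with_fixation else 0.0
    evs = {}
    for cat in category_order:
        evs.setdefault(cat, []).append((t, BLOCK_S, 1.0))
        t += 2 * BLOCK_S                            # this face block + next fixation block
    return evs

def to_three_column(rows):
    """Format rows as text in FSL FEAT's custom three-column EV format."""
    return "".join(f"{onset:g}\t{dur:g}\t{weight:g}\n" for onset, dur, weight in rows)

# Hypothetical order for one run; the counterbalanced orders are not reported.
evs = block_onsets(CATEGORIES)
for cat in ["babies", "babyfaced", "maturefaced"]:
    print(cat, to_three_column(evs[cat]), end="")
```

Under these assumptions a run of 12 face blocks and 12 fixation blocks lasts 480 s; each category then needs only one EV row per run.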

Imaging protocol

We used a Siemens 3T scanner with a head coil. Scanning included T2*-weighted functional images followed by (1) T1-weighted structural images with the same parameters for co-registration purposes and (2) two T1-weighted volumes of 128 sagittal slices (1 × 1 × 1.33 mm) for anatomy. Functional images included 25 contiguous axial oblique (AC–PC line) 6 mm slices (TR = 2000 ms; TE = 30 ms; FOV = 40 × 20 cm; 30 slices; voxel resolution = 3.1 × 3.1 × 4.8 mm).

RESULTS

Pre-statistics data processing

Using FEAT Version 5.4 (FSL 3.1, 2004), we performed the following steps: motion correction; non-brain removal; spatial smoothing using a Gaussian kernel of FWHM 5 mm; high-pass temporal filtering with a 50 s cutoff; and registration to the standard Montreal Neurological Institute (MNI) average template. For motion correction, we used MCFLIRT, a linear (rigid-body) registration tool that corrects for small head movements (http://www.fmrib.ox.ac.uk/fsl/mcflirt/index.html). The threshold for excluding data because of excessive motion was 1 mm of absolute movement. Based on this criterion, one run was excluded for one participant.
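The smoothing and filtering settings correspond to simple parameter conversions, and the motion criterion is a threshold check. This is a sketch under assumptions: the FWHM-to-sigma conversion is standard for a Gaussian kernel; the high-pass sigma uses a cutoff / (2 × TR) convention assumed here to match FEAT's; and "absolute movement" is taken to mean a per-volume absolute displacement in mm (such as MCFLIRT can report), with a run excluded if any volume exceeds 1 mm. These helpers are hypothetical and are not FSL functions.

```python
import math

def fwhm_to_sigma(fwhm):
    """Standard deviation of a Gaussian kernel with the given full width at half maximum."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def highpass_sigma_volumes(cutoff_s, tr_s):
    """High-pass sigma in volumes, assuming a cutoff / (2 * TR) convention."""
    return cutoff_s / (2.0 * tr_s)

def exclude_run(abs_displacement_mm, threshold_mm=1.0):
    """True if any volume's absolute displacement exceeds the threshold (assumed criterion)."""
    return max(abs_displacement_mm) > threshold_mm

TR_S = 2.0
CYCLE_S = 20.0 + 20.0        # one face block plus one fixation block
CUTOFF_S = 50.0
print(f"smoothing sigma: {fwhm_to_sigma(5.0):.2f} mm")
print(f"high-pass sigma: {highpass_sigma_volumes(CUTOFF_S, TR_S):.1f} volumes")
# The cutoff should exceed the task cycle so the block-design signal survives filtering.
print("cutoff exceeds task cycle:", CUTOFF_S > CYCLE_S)
print("exclude run:", exclude_run([0.12, 0.40, 1.15, 0.80]))   # hypothetical trace
```

The check on the 40-s task cycle matters for block designs: a cutoff shorter than one face-plus-fixation cycle would filter out much of the signal of interest.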

Region of interest analyses

Region of interest (ROI) analyses focused on the amygdala and the FFA. We selected all the voxels in the amygdala and the FFA that were activated significantly more by the face categories than by the baseline fixation cross (Gilaie-Dotan & Malach, 2007). This comparison was performed on the data averaged across subjects. The resulting clusters of activated voxels were then used as ROIs to sample percent signal change from individual data for analyses of variance (ANOVAs). Subject was the unit of analysis in these ANOVAs, with participant sex as a between-groups variable and face category and brain hemisphere as within-groups variables.¹
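How percent signal change was sampled from the ROIs is not detailed beyond the description above. One common approach, sketched here under stated assumptions, averages the voxels in an ROI into a single time course and expresses the mean signal during face blocks relative to the mean during fixation blocks; model-based estimates (for example, from GLM parameter estimates) are another common option. The function names, the optional hemodynamic-lag shift, and the toy voxel data are hypothetical.

```python
import math
from statistics import mean

def roi_timecourse(voxel_series):
    """Average equal-length voxel time series (one list per voxel) into one ROI series."""
    return [mean(values) for values in zip(*voxel_series)]

def block_volumes(onset_s, duration_s, tr_s, lag_s=0.0):
    """Indices of volumes acquired within a block, optionally shifted by a hemodynamic lag."""
    first = math.ceil((onset_s + lag_s) / tr_s)
    stop = math.floor((onset_s + duration_s + lag_s) / tr_s)   # exclusive upper bound
    return list(range(first, stop))

def percent_signal_change(ts, condition_vols, baseline_vols):
    """100 * (condition mean - baseline mean) / baseline mean."""
    baseline = mean(ts[i] for i in baseline_vols)
    return 100.0 * (mean(ts[i] for i in condition_vols) - baseline) / baseline

# Hypothetical ROI with two voxels: fixation block (0-20 s), then a face block (20-40 s).
voxels = [[100.0] * 10 + [100.2] * 10,
          [200.0] * 10 + [200.3] * 10]
ts = roi_timecourse(voxels)
fix = block_volumes(0.0, 20.0, tr_s=2.0)
face = block_volumes(20.0, 20.0, tr_s=2.0)
print(f"{percent_signal_change(ts, face, fix):.3f} %")
```

Values computed this way per participant, category, and hemisphere would form the cells of the mixed-design ANOVAs described above.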

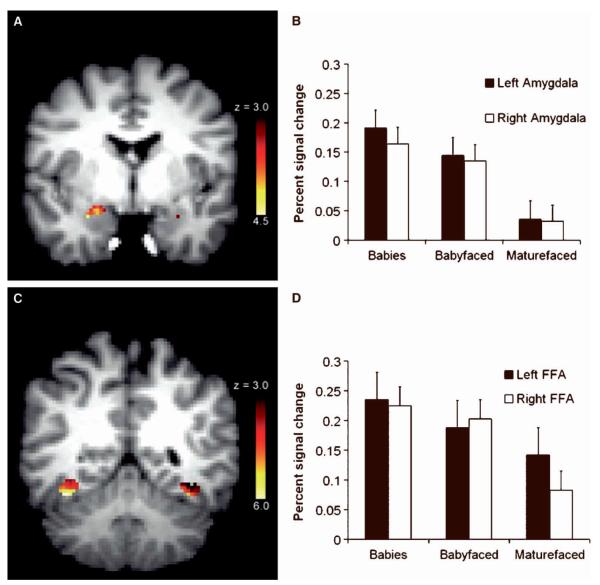

Amygdala signal change

There was a significant effect of face category on signal change in the amygdala, F(2, 15) = 3.89, MSE = 0.249, p = .03, but no effects of Brain Hemisphere or Face Category × Brain Hemisphere, respective Fs = 0.36 and 0.17, MSEs = 0.009 and 0.003, ps > .56. Planned comparisons revealed that overall amygdala signal change was higher for babies (M = 0.18, SD = 0.11) than for maturefaced adults (M = 0.01, SD = 0.09), p = .03. Also, consistent with the babyface overgeneralization hypothesis, babyfaced adults (M = 0.14, SD = 0.06) elicited higher amygdala signal change than maturefaced adults, p = .03. Furthermore, signal change for babies and babyfaced adults did not differ, p = .55 (Figure 1).

Figure 1.

Neural activity in the amygdala and FFA regions of interest. (A) Activation in the amygdala ROI to all faces versus baseline fixation (cluster-based threshold: z > 3.0) determined by a mixed-effects group analysis. The most significant activation on the right side was at Talairach coordinate (28, −4, −20) with z = 4.29. The most significant activation on the left side was at coordinate (−28, −4, −20) with z = 3.34. (B) Means and standard errors of percent change in the left and right amygdala BOLD signal for each face category compared with baseline. (C) Activation in the FFA ROI to all faces versus baseline fixation (cluster-based threshold: z > 3.0) determined by a mixed-effects group analysis. The most significant activation on the right side was at Talairach coordinate (38, 53, 14) with z = 5.97. The most significant activation on the left side was at Talairach coordinate (−36, −78, −11) with z = 6.17. (D) Means and standard errors of percent change in the left and right FFA BOLD signal for each face category compared with baseline.

FFA signal change

There was a marginally significant effect of face category on signal change in the FFA, F(2, 15) = 3.08, MSE = 0.13, p = .06, but no effects of Brain Hemisphere or Face Category × Brain Hemisphere, respective Fs = 0.40 and 0.67, MSEs = 0.008 and 0.010, ps > .52. Planned comparisons revealed that overall FFA signal change was higher for babies (M = 0.23, SD = 0.10) than for maturefaced adults (M = 0.11, SD = 0.12), p = .02, as predicted. There also was a tendency for babyfaced adults (M = 0.19, SD = 0.11) to elicit higher FFA signal change than maturefaced adults, although the effect was not significant, p = .14. Signal change for babies and babyfaced adults did not differ, p = .46 (Figure 1).

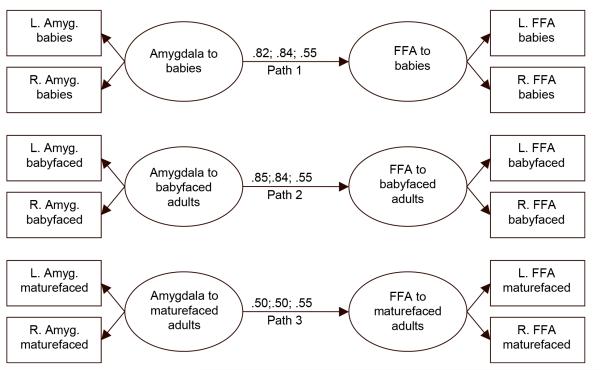

Connectivity

The parallel signal changes in the amygdala and the FFA raise the possibility that the registration of emotional salience in the amygdala modulates variations in FFA signal change across face categories. If so, one would expect greater effective connectivity between the amygdala and the FFA when viewing emotionally salient faces than when viewing less emotionally salient faces. We used structural equation modeling (SEM) to test this hypothesis (McIntosh & Gonzalez-Lima, 1994). Because the ANOVA results showed no effects of brain hemisphere, we created latent variables of amygdala signal change and FFA signal change, using percent BOLD signal change in the left and right hemispheres as indicators. Path 1 represented amygdala-FFA connectivity when viewing babies, path 2 when viewing babyfaced adults, and path 3 when viewing maturefaced adults. In Model 1, all three paths were free to vary. In Model 2, paths 1 and 2 were constrained to be equal. In Model 3, all three paths were constrained to be equal. The goodness-of-fit indices for Model 1, χ²(48) = 54.86, p = .23, and Model 2, χ²(49) = 54.77, p = .26, did not differ significantly, Δχ²(1) = 0.09, p = .76, indicating that allowing paths 1 and 2 to vary freely did not improve the fit compared with constraining them to be equal. Next we compared the goodness-of-fit indices for Model 2 with those for Model 3, χ²(50) = 61.51, p = .13. These two models did differ significantly, Δχ²(1) = 6.74, p = .009, indicating that allowing path 3 to vary freely provided a better fit to the data than constraining all three paths to be equal. The path coefficients from the best-fitting Model 2 indicate that effective connectivity between the amygdala and the FFA was lower when viewing maturefaced adults than when viewing either babies or babyfaced adults, which did not differ (see Figure 2).
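The nested-model comparisons can be checked from the reported statistics. For a 1-df difference, the chi-square upper tail has a closed form, P(χ² > x) = erfc(√(x/2)), so no statistics library is needed. This is a minimal sketch; the function names are hypothetical, and the SEM software used to fit the models is not specified.

```python
import math

def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square statistic with 1 df: erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(x / 2.0))

def chi2_difference(chi2_constrained, df_constrained, chi2_free, df_free):
    """Chi-square difference test between a constrained model and a nested freer model."""
    delta = chi2_constrained - chi2_free
    ddf = df_constrained - df_free
    if ddf != 1:
        raise ValueError("this sketch only handles a 1-df difference")
    return delta, ddf, chi2_sf_df1(delta)

# Model 3 (all three paths equal) vs. Model 2 (paths 1 and 2 equal, path 3 free).
delta, ddf, p = chi2_difference(61.51, 50, 54.77, 49)
print(f"Model 3 vs. Model 2: delta chi2({ddf}) = {delta:.2f}, p = {p:.3f}")
# Reported Model 1 vs. Model 2 difference of 0.09 on 1 df.
print(f"Model 1 vs. Model 2: p = {chi2_sf_df1(0.09):.2f}")
```

Both recomputed p values (about .009 and .76) agree with those reported for the model comparisons.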

Figure 2.

Path diagram of structural equation model (SEM) used to test effective connectivity between the amygdala and fusiform face area (FFA) when viewing babies, babyfaced adults, and maturefaced adults. Path coefficients from Model 1, Model 2, and Model 3 are listed from left to right.

Alternative explanations

One-way ANOVAs followed by planned comparisons were performed to assess face category differences in smiling, attractiveness, distinctiveness, and within-category similarity. There were no category differences in smiling, F(2, 27) = 0.49, MSE = 0.079, p = .62. Because we had selected faces with neutral expressions, all were rated close to the bottom of the smile scale (Mbabies = 1.23, SD = 0.50; Mbabyfaced = 1.37, SD = 0.33; Mmaturefaced = 1.40, SD = 0.34). The within-category distances among the facial metrics also did not differ significantly across categories (Mbabies = 3.75, SD = 0.43; Mbabyfaced = 4.00, SD = 0.34; Mmaturefaced = 4.15, SD = 0.52), F(2, 27) = 2.24, MSE = 0.42, p = .13. However, the attractiveness of the three categories did differ, F(2, 27) = 51.51, MSE = 8.93, p = .001, with babies (M = 5.85, SD = 0.39) rated more attractive than either babyfaced men (M = 4.49, SD = 0.42), t(18) = 7.48, p < .001, or maturefaced men (M = 4.20, SD = 0.61), t(18) = 7.18, p < .001, who did not differ from each other, t(18) = 1.23, p = .23. These variations in attractiveness cannot account for the pattern of results, in which signal change in response to babies was equivalent to that for babyfaced adults but higher than that for maturefaced adults. Similar results were found for distinctiveness: the three categories differed in distance from the centroid, F(2, 27) = 5.00, p = .01. The distance of babies’ facial metrics from the mean face (M = 3.63, SD = 0.55) was greater than that of either babyfaced adults (M = 2.86, SD = 0.54), t(18) = 3.16, p = .005, or maturefaced adults (M = 3.05, SD = 0.61), t(18) = 2.22, p = .04, who did not differ from each other, t(18) = 0.76, p = .46. Like attractiveness, these variations in distinctiveness cannot account for the pattern of signal change across the three categories.

It should be emphasized that although babyfaced and maturefaced men did not differ in distinctiveness, as defined by distance from the mean face in the PCA, there was a marginally significant tendency for the facial metrics of maturefaced men to be farther from babies (M = 6.87, SD = 1.11) than those of babyfaced men (M = 5.88, SD = 0.90), F(1, 198) = 2.88, p = .09, when comparing all pairwise distances (each baby’s face from each babyfaced man and each baby’s face from each maturefaced man). This effect is consistent with the connectionist modeling results reported in the Method section, which showed that the facial metrics of babyfaced men resembled babies significantly more than did the metrics of maturefaced men. The difference in strength between the PCA and connectionist modeling effects can be attributed to the fact that nonlinear connectionist modeling of resemblance to babies provides a more powerful test of similarity than does linear PCA computation of distance from babies. In contrast to the equal attractiveness, distinctiveness, and within-category similarity of babyfaced and maturefaced adults, significant differences in their objective and perceived resemblance to babies paralleled the observed differences in amygdala and FFA signal change, supporting the babyface overgeneralization hypothesis.

DISCUSSION

Our results reveal a neural substrate for the babyface overgeneralization effect in the amygdala and the FFA. Both regions showed a greater percentage BOLD signal change compared with fixation when viewing faces of babies or babyfaced men than when viewing faces of maturefaced men. The greater amygdala and FFA activation elicited by babies is a novel finding that can be explained by their high emotional salience, since other emotionally salient stimuli also elicit higher activation in these brain regions (Aharon et al., 2001; Fitzgerald et al., 2006; Gobbini et al., 2004; Golby et al., 2001; Hamann & Mao, 2002; Lewis et al., 2003; Sander & Scheich, 2005; Winston et al., 2007). More remarkable was the equally high activation to babyfaced men and babies. Our pattern of results cannot be attributed to face category variations in smiling, attractiveness, distance from the “mean” face, or within-category structural similarity. Rather, babyfaced men were distinguished only by their resemblance to babies. These results demonstrate a neural babyface overgeneralization effect, whereby perceivers’ reactions to infantile facial qualities are evident in their neural activation to babyfaced adults just as in their first impressions.

The distributed pattern of activation associated with face category is consistent with a distributed face-processing system, as previously shown in the coding of individual faces across different brain areas (Ishai, Schmidt, & Boesiger, 2005; O’Toole, Jiang, Abdi, & Haxby, 2005). The observed difference in effective connectivity between the amygdala and the FFA across the three face categories is also consistent with previous research on distributed patterns of brain activation. In particular, the higher connectivity between the amygdala and the FFA when viewing faces of babies or babyfaced men than maturefaced men agrees with evidence for greater effective connectivity between the amygdala and visual cortex when viewing emotionally salient stimuli (Corden, Critchley, Skuse, & Dolan, 2006; Fairhall & Ishai, 2007), as well as with evidence that the amygdala modulates the processing of the emotionally salient attribute of facial expression in extrastriate cortex (Morris et al., 1998; Phelps, 2006; Vuilleumier, Armony, Driver, & Dolan, 2003).

The present findings make an important contribution to the burgeoning research literature on the neural mechanisms for face perception. Much has been learned about face recognition and the perception of changeable facial qualities such as eye gaze, smiling, and other emotional expressions (Bruce & Young, 1986; Calder & Young, 2005; Haxby, Hoffman, & Gobbini, 2002). However, this literature has largely ignored the consensual first impressions of psychological qualities from faces, a phenomenon that has received considerable attention from social psychologists (see Zebrowitz & Montepare, 2006, for a recent review). The present findings demonstrate a neural substrate for the babyface overgeneralization effect, one mechanism that has been shown to contribute to consensual first impressions. In so doing, they highlight the necessity for a neuroscience of face perception to account for all the attributes that are perceived in faces, which include not only identity and emotion, but also social category and psychological attributes.

Acknowledgments

This work was supported by NIH Grant MH066836 and NSF Grant 0315307 to the first and last authors and K02MH72603 to the first author. The authors declare that they have no competing financial interests.

APPENDIX 1

Calculation of distinctiveness and within category similarity

After performing a principal components analysis (PCA) on the faces’ facial metrics (Zebrowitz et al., 2003), we computed the distance of each face to the centroid (distinctiveness) from V, a vector representing face i’s loadings on the PCs. We weighted each PC equally using the Mahalanobis metric.

The distance between faces i and j was derived from their positions in the factor loading space.

These distances between faces were averaged within face category to determine the within category structural similarity.
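Written out under explicit assumptions, these measures take the form below. The assumptions are that V_ik is face i's mean-centered score on PC k (so the centroid lies at the origin), σ_k is the standard deviation of those scores across faces (dividing by it weights each PC equally, as in a Mahalanobis metric), K PCs are retained, and category c contains n_c faces. This is a reconstruction consistent with the description above, not necessarily the authors' exact notation; larger S_c values indicate less within-category similarity.

$$
\begin{aligned}
D_i &= \sqrt{\sum_{k=1}^{K} \left( \frac{V_{ik}}{\sigma_k} \right)^{2}} \\
d_{ij} &= \sqrt{\sum_{k=1}^{K} \left( \frac{V_{ik} - V_{jk}}{\sigma_k} \right)^{2}} \\
S_c &= \frac{2}{n_c (n_c - 1)} \sum_{\substack{i < j \\ i, j \in c}} d_{ij}
\end{aligned}
$$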

APPENDIX 2

Perceiver sex effects

Amygdala activation

There was no significant effect of participant sex on amygdala activation (Mmen = 0.14, SD = 0.13, Mwomen = 0.08, SD = 0.14), F(1, 15) = 0.59, p = .46. There also was no significant Participant Sex × Face Category interaction, F(2, 15) = 2.18, p = .13, Participant Sex × Brain Hemisphere interaction, F(1, 15) = 0.24, p = .63, or Participant Sex × Face Category × Brain Hemisphere interaction, F(1, 15) = 1.05, p = .63.

Fusiform activation

There was no significant effect of participant sex on FFA activation (Mmen = 0.14, SD = 0.13, Mwomen = 0.22, SD = 0.16), F(1, 15) = 1.11, p = .31. There also was no significant Participant Sex × Face Category interaction, F(2, 15) = 0.21, p = .81, or Participant Sex × Brain Hemisphere interaction, F(1, 15) = 0.08, p = .78. Although there was a marginally significant Participant Sex × Face Category × Brain Hemisphere interaction, F(2, 15) = 2.62, p = .09, LSD tests revealed no significant differences between men and women in right or left fusiform activation to any of the face categories. We also performed LSD tests to see whether the overall effect of greater FFA activation when viewing babies than maturefaced adults was replicated for both male and female participants. It was, albeit in different hemispheres. Female participants showed greater left FFA signal change when viewing faces of babies than maturefaced adults (Mbabies = 0.34, SD = 0.20, Mmaturefaced = 0.15, SD = 0.10, p = .002), whereas male participants showed marginally greater right FFA signal change (Mbabies = 0.21, SD = 0.28, Mmaturefaced = 0.01, SD = 0.25, p = .06). In contrast, right FFA signal change was not significant for female participants (Mbabies = 0.25, SD = 0.16, Mmaturefaced = 0.15, SD = 0.16, p = .32), and left FFA signal change was not significant for male participants (Mbabies = 0.13, SD = 0.28, Mmaturefaced = 0.12, SD = 0.27, p = .84). The fact that the difference between babies and maturefaced adults was significant for women and only marginal for men should be interpreted with caution, since the adult faces were all men. The sex difference in lateralization of the effect is consistent with some other evidence for a greater left hemisphere response to emotional stimuli in women and a greater right hemisphere response in men (Gasbarri et al., 2006).

Footnotes

Exploratory analyses examining the effects of perceiver sex are reported in Appendix 2 rather than in the results section because we had no basis for making predictions. Previous research has found equivalent reactions to babyfaced vs. maturefaced adults by male and female perceivers (Berry & McArthur, 1985; Zebrowitz et al., 1993a, 2003), and there is mixed evidence concerning sex differences in response to babies. The most reliable effects are shown in public self-report data, with little consistency when physiological or behavioral responses toward infants are assessed (Berman, 1980). Also, the one study that examined brain activation found sex differences only when viewing faces of babies that were morphed to resemble the self, but not for other faces of babies (Platek et al., 2004).

Contributor Information

Leslie A. Zebrowitz, Brandeis University, Waltham, Massachusetts, USA

Victor X. Luevano, Brandeis University, Waltham, Massachusetts, USA

Philip M. Bronstad, Brandeis University, Waltham, Massachusetts, USA

Itzhak Aharon, Martinos Center at Massachusetts General Hospital, Cambridge, Massachusetts, USA.

REFERENCES

- Aharon I, Etcoff N, Ariely D, Chabris CF, O’Connor E, Breiter HC. Beautiful faces have variable reward value: fMRI and behavioral evidence. Neuron. 2001;32:537–551. doi: 10.1016/s0896-6273(01)00491-3.

- Alley TR. Infant head shape as an elicitor of adult protection. Merrill-Palmer Quarterly. 1983;29:411–427.

- Berman PW. Social context as a determinant of sex differences in adults’ attraction to infants. Developmental Psychology. 1976;12:365–366.

- Berman PW. Are women more responsive than men to the young? A review of developmental and situational variables. Psychological Bulletin. 1980;88:668–695.

- Berry DS, McArthur LA. Some components and consequences of a babyface. Journal of Personality and Social Psychology. 1985;48:312–323.

- Berry DS, McArthur LA. Perceiving character in faces: The impact of age-related craniofacial changes on social perception. Psychological Bulletin. 1986;100:3–18.

- Bruce V, Young A. Understanding face recognition. British Journal of Psychology. 1986;77:305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x.

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nature Reviews Neuroscience. 2005;6:641–651. doi: 10.1038/nrn1724.

- Corden B, Critchley HD, Skuse D, Dolan RJ. Fear recognition ability predicts differences in social cognitive and neural functioning in men. Journal of Cognitive Neuroscience. 2006;18:889–897. doi: 10.1162/jocn.2006.18.6.889.

- Dale AM. Optimal experimental design for event-related fMRI. Human Brain Mapping. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- Eibl-Eibesfeldt I. Human ethology. Aldine de Gruyter; New York: 1989.

- Eichorn DH. Samples and procedures. In: Eichorn DH, Clausen JA, Haan N, Honzik MP, Muzzin PH, editors. Present and past in middle life. Academic Press; New York: 1981. pp. 33–51.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cerebral Cortex. 2007;17:2400–2406. doi: 10.1093/cercor/bhl148.

- Feldman SS, Nash SC. Interest in babies during young adulthood. Child Development. 1978;49:617–622.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. Beyond threat: Amygdala reactivity across multiple expressions of facial affect. NeuroImage. 2006;30:1441–1448. doi: 10.1016/j.neuroimage.2005.11.003.

- Ganel T, Valyear KF, Goshen-Gottstein Y, Goodale MA. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia. 2005;43:1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012.

- Gasbarri A, Arnone B, Pompili A, Marchetti A, Pacitti F, Calil SS, et al. Sex related lateralized effect of emotional content on declarative memory: An event related potential study. Behavioural Brain Research. 2006;168:177–184. doi: 10.1016/j.bbr.2005.07.034. Erratum: Behavioural Brain Research, 170, 173.

- Gilaie-Dotan S, Malach R. Sub-exemplar shape tuning in human face-related areas. Cerebral Cortex. 2007;17:325–338. doi: 10.1093/cercor/bhj150.

- Gobbini MI, Leibenluft E, Santiago N, Haxby JV. Social and emotional attachment in the neural representation of faces. NeuroImage. 2004;22:1628–1635. doi: 10.1016/j.neuroimage.2004.03.049.

- Golby AJ, Gabrieli JDE, Chiao JY, Eberhardt JL. Differential responses in the fusiform region to same-race and other-race faces. Nature Neuroscience. 2001;4:845–850. doi: 10.1038/90565.

- Green ML, Kraus SK, Green RG. Pupillary responses to pictures and descriptions of sex-stereotyped stimuli. Perceptual and Motor Skills. 1979;49:759–764. doi: 10.2466/pms.1979.49.3.759.

- Hamann S, Mao H. Positive and negative emotional verbal stimuli elicit activity in the left amygdala. Neuroreport: For Rapid Communication of Neuroscience Research. 2002;13:15–19. doi: 10.1097/00001756-200201210-00008.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biological Psychiatry. 2002;51:59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Hess EH, Polt JM. Pupil size as related to interest value of visual stimuli. Science. 1960;132:349–350. doi: 10.1126/science.132.3423.349.

- Hildebrandt KA, Fitzgerald HE. Adults’ responses to infants varying in perceived cuteness. Behavioural Processes. 1978;3:159–172. doi: 10.1016/0376-6357(78)90042-6.

- Hildebrandt KA, Fitzgerald HE. Facial feature determinants of perceived infant attractiveness. Infant Behavior and Development. 1979;2:329–339.

- Ishai A, Schmidt CF, Boesiger P. Face perception is mediated by a distributed cortical network. Brain Research Bulletin. 2005;67:87–93. doi: 10.1016/j.brainresbull.2005.05.027.

- Jiang X, Rosen E, Zeffiro T, VanMeter J, Blanz V, Riesenhuber M. Evaluation of a shape-based model of human face discrimination using fMRI and behavioral techniques. Neuron. 2006;50:159–172. doi: 10.1016/j.neuron.2006.03.012.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: A module in human extrastriate cortex specialized for face perception. Journal of Neuroscience. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kanwisher N, Yovel G. The fusiform face area: A cortical region specialized for the perception of faces. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- Keating CF, Bai DL. Children’s attributions of social dominance from facial cues. Child Development. 1986;57:1269–1276.

- Kramer S, Zebrowitz LA, San Giovanni JP, Sherak B. Infants’ preferences for attractiveness and babyfaceness. In: Bardy BG, Bootsma RJ, Guiard Y, editors. Studies in perception and action III. Lawrence Erlbaum Associates, Inc.; Mahwah, NJ: 1995. pp. 389–392.

- Lewis S, Thoma RJ, Lanoue MD, Miller GA, Heller W, Edgar C, et al. Visual processing of facial affect. Neuroreport. 2003;14:1841–1845. doi: 10.1097/00001756-200310060-00017.

- Loffler G, Yourganov G, Wilkinson F, Wilson HR. fMRI evidence for the neural representation of faces. Nature Neuroscience. 2005;8:1386–1390. doi: 10.1038/nn1538.

- Lorenz K. Die angeborenen Formen möglicher Erfahrung [The innate forms of potential experience]. Zeitschrift für Tierpsychologie. 1942;5:235–409.

- McCall RB, Kennedy CB. Attention of 4-month infants to discrepancy and babyishness. Journal of Experimental Child Psychology. 1980;29:189–201. doi: 10.1016/0022-0965(80)90015-6.

- McIntosh AR, Gonzalez-Lima F. Structural equation modeling and its application to network analysis in functional brain imaging. Human Brain Mapping. 1994;2:2–22.

- Montepare JM, Zebrowitz LA. “Person perception comes of age”: The salience and significance of age in social judgments. In: Zanna M, editor. Advances in experimental social psychology. Vol. 30. Academic Press; San Diego, CA: 1998. pp. 93–163.

- Montepare JM, Zebrowitz-McArthur L. Children’s perceptions of babyfaced adults. Perceptual and Motor Skills. 1989;69:467–472. doi: 10.2466/pms.1989.69.2.467.

- Morris JS, Friston KJ, Büchel C, Frith CD, Young AW, Calder AJ, et al. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain: A Journal of Neurology. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- O’Toole AJ, Jiang F, Abdi H, Haxby JV. Partially distributed representations of objects and faces in ventral temporal cortex. Journal of Cognitive Neuroscience. 2005;17:580–590. doi: 10.1162/0898929053467550.

- Perrett DI, Rolls ET, Caan W. Visual neurones responsive to faces in the monkey temporal cortex. Experimental Brain Research. 1982;47:329–342. doi: 10.1007/BF00239352.

- Perrett DI, Smith PAJ, Potter DD, Mistlin AJ, Head AS, Milner AD, et al. Neurons responsive to faces in the temporal cortex: Studies of functional organization, sensitivity to identity and relation to perception. Human Neurobiology. 1984;3:197–208.

- Phelps EA. Emotion and cognition: Insights from studies of the human amygdala. Annual Review of Psychology. 2006;57:27–53. doi: 10.1146/annurev.psych.56.091103.070234.

- Phelps EA, O’Connor KJ, Cunningham WA, Funayama ES, Gatenby JC, Gore JC, et al. Performance on indirect measures of race evaluation predicts amygdala activation. Journal of Cognitive Neuroscience. 2000;12:729–738. doi: 10.1162/089892900562552.

- Platek SM, Raines DM, Gallup GG, Jr., Mohamed FB, Thomson JW, Myers TE, et al. Reactions to children’s faces: Males are more affected by resemblance than females are, and so are their brains. Evolution and Human Behavior. 2004;25:394–405.

- Sander K, Scheich H. Left auditory cortex and amygdala, but right insula dominance for human laughing and crying. Journal of Cognitive Neuroscience. 2005;17:1519–1531. doi: 10.1162/089892905774597227.

- Schleidt M, Schiefenhövel W, Stanjek K, Krell R. Reactions of passersby to a mother and baby. Man-Environment Systems. 1980;10:73–82.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nature Neuroscience. 2003;6:624–631. doi: 10.1038/nn1057.

- Winston JS, Henson RNA, Fine-Goulden MR, Dolan RJ. fMRI-adaptation reveals dissociable neural representations of identity and expression in face perception. Journal of Neurophysiology. 2004;92:1830–1839. doi: 10.1152/jn.00155.2004.

- Winston JS, O’Doherty J, Kilner JM, Perrett DI, Dolan RJ. Brain systems for assessing facial attractiveness. Neuropsychologia. 2007;45:195–206. doi: 10.1016/j.neuropsychologia.2006.05.009.

- Zebrowitz LA. Physical appearance as a basis for stereotyping. In: Macrae CN, Stangor C, Hewstone M, editors. Foundations of stereotypes and stereotyping. Guilford Press; New York: 1996. pp. 79–120.

- Zebrowitz LA. Reading faces: Window to the soul? Westview Press; Boulder, CO: 1997.

- Zebrowitz LA, Fellous JM, Mignault A, Andreoletti C. Trait impressions as over-generalized responses to adaptively significant facial qualities: Evidence from connectionist modeling. Personality and Social Psychology Review. 2003;7:194–215. doi: 10.1207/S15327957PSPR0703_01.

- Zebrowitz LA, Montepare JM. The ecological approach to person perception: Evolutionary roots and contemporary offshoots. In: Schaller M, Simpson JA, Kenrick DT, editors. Evolution and social psychology. Psychology Press; New York: 2006. pp. 81–113.

- Zebrowitz LA, Montepare JM, Lee HK. They don’t all look alike: Individual impressions of other racial groups. Journal of Personality and Social Psychology. 1993a;65:85–101. doi: 10.1037//0022-3514.65.1.85.

- Zebrowitz LA, Olson K, Hoffman K. Stability of babyfaceness and attractiveness across the life span. Journal of Personality and Social Psychology. 1993b;64:453–466. doi: 10.1037//0022-3514.64.3.453.