ABSTRACT

Perception is the window through which we understand all information about our environment, and therefore deficits in perception due to disease, injury, stroke or aging can have significant negative impacts on individuals’ lives. Research in the field of perceptual learning has demonstrated that vision can be improved in both normally seeing and visually impaired individuals; however, a limitation of most perceptual learning approaches is their emphasis on isolating particular mechanisms. In the current study, we adopted an integrative approach where the goal is not to achieve highly specific learning but instead to achieve general improvements to vision. We combined multiple perceptual learning approaches that have individually contributed to increasing the speed, magnitude and generality of learning into a perceptual-learning-based video game. Our results demonstrate broad-based benefits to vision in a healthy adult population. Transfer from the game includes improvements in acuity (measured with self-paced standard eye-charts), improvement along the full contrast sensitivity function, and improvements in peripheral acuity and contrast thresholds. This type of custom video game framework, built up from psychophysical approaches, takes advantage of the benefits found from video game training while maintaining a tight link to psychophysical designs that enable understanding of mechanisms of perceptual learning, and it has great potential both as a scientific tool and as a therapy to help improve vision.

INTRODUCTION

Over 100 million people worldwide suffer from low-vision, that is, visual impairments that cannot be corrected by spectacles. Our knowledge of the world is derived from our perceptions, and an individual’s ability to navigate his/her surroundings or engage in activities of daily living such as walking, reading, watching TV, and driving naturally relies on his/her ability to process sensory information. Thus, deficits in visual abilities due to disease, injury, stroke or aging can have significant negative impacts on all aspects of an individual’s life. Likewise, an enhancement of visual abilities can have substantial positive benefits to one’s lifestyle. Visual deficits can generally be categorized as those related to the properties of the eye, for which there are numerous innovative corrective approaches, and those related to brain processing of visual information, for which understanding, and thus appropriate therapies, are very limited. The lack of appropriate approaches to treat brain-based aspects of low-vision is a serious problem since in many cases a component of the individual’s low-vision is related to sub-optimal brain processing (Polat, 2009).

Research in the field of perceptual learning provides promise to address components of low-vision. Improvements have been identified for a wide set of perceptual abilities, from perception of elementary features (e.g. luminance contrast (Adini et al., 2002; Furmanski et al., 2004), motion (Ball & Sekuler, 1982; Vaina et al., 1998) and line-orientation (Karni & Sagi, 1991; Ahissar & Hochstein, 1997)) to global scene processing (Chun, 2000) and image recognition (Gold et al., 1999; Lin et al., 2010). Recent advances in the field of perceptual learning show great promise for rehabilitation from a diverse set of vision disorders. For example, recent work on perceptual learning has been translated to develop treatments of amblyopia (Levi & Li, 2009; Hussain et al., 2012), presbyopia (Polat, 2009), macular degeneration (Baker et al., 2005), stroke (Vaina & Gross, 2004; Huxlin et al., 2009), and late-life recovery of visual function (Ostrovsky et al., 2006). However, one shortcoming of previous studies is that perceptual learning is often very specific to the trained stimulus features (Fahle, 2005). Consequently, progress in the field has been distinctly focused on this specificity, which has limited the development of training strategies that are optimized to target broad-based benefits to visual processing.

A limitation of most perceptual learning approaches, with respect to translational efficacy, is their emphasis on isolating individual mechanisms. Nearly all approaches train a small set of stimulus features and use stimuli of a single sensory modality. It is also common to employ tasks that are not motivating to participants and require difficult stimulus discriminations for which subjects make many errors and have low confidence in their accuracy. To achieve effective therapies for low-vision populations, research on perceptual learning needs to shift focus from isolating mechanisms of learning to integrating multiple learning approaches. A few studies have used this approach, showing for instance that training on multiple stimulus dimensions (Adini et al., 2004; Yu et al., 2004; Xiao et al., 2008), or with off-the-shelf video-games (Green & Bavelier, 2003), dramatically improves the extent to which learning generalizes to untrained conditions. Furthermore, research shows that learning is improved when using multisensory stimuli (Shams & Seitz, 2008a), motivating tasks (Shibata et al., 2009), and settings where participants understand the accuracy of their responses (Ahissar & Hochstein, 1997) and receive consistent reinforcement to the stimuli that are to be learned (Seitz & Watanabe, 2009). Together, these studies suggest that the coordinated engagement of attention, delivery of reinforcement, use of multisensory stimuli, and the training of multiple stimulus dimensions individually enhance learning.

Another related line of research has examined the efficacy of commercial video games as a tool to induce perceptual learning (Green & Bavelier, 2003). For example, Green and Bavelier (2003) showed that training with action video games positively impacted a wide range of visual skills including useful field of view, multiple object tracking, attentional blink, and performance in flanker compatibility tests. Furthermore, recent research has found that even basic visual abilities such as contrast sensitivity (Li et al., 2009) and acuity (Green & Bavelier, 2007; Li et al., 2011) can show improvement after video game use. However, there is some controversy regarding the mechanisms leading to these effects (Boot et al., 2011), and it is difficult to determine which aspects of the games lead to the observed learning effects. Thus, while there is great promise in the video game approach, there is a need for games to be developed that allow a clearer link between game attributes and mechanisms of perceptual learning.

In the current study, we adopted an integrative approach where the goal was not to achieve highly specific learning, but instead to achieve broad-based improvements to vision. We combined multiple perceptual learning approaches (including engagement of attention, reinforcement, multisensory stimuli, and multiple stimulus dimensions) that have individually contributed to increasing the speed, magnitude and generality of learning into an integrated perceptual-learning video game. Training with this video game induced significant improvements in acuity (measured with self-paced standard eye-charts), improvement along the full contrast sensitivity function, as well as improvements in peripheral acuity and contrast thresholds in participants with normal vision. The use of this type of custom video game framework built up from psychophysical approaches takes advantage of the benefits found from video game training while maintaining a tight link to psychophysical designs that enable understanding of mechanisms of perceptual learning.

MATERIALS AND METHODS

Participants

Thirty participants (18 males and 12 females; age range 18–55 years) were recruited and gave written consent to participate in experiments conforming to the guidelines of the University of California, Riverside Human Research Review Board. They were all healthy and had normal or corrected-to-normal visual acuity. None of them reported any neurological or psychiatric disorders or medical problems.

Materials

For the test stimuli, an Apple Mac Mini or a Dell PC, both running Matlab (Mathworks, Natick, MA) with Psychtoolbox Version 3 (Brainard, 1997; Pelli, 1997), was used to generate the stimuli and control the experiment. Participants sat on a height-adjustable chair 5 feet from a 24″ Sony Trinitron CRT monitor (resolution: 1600 × 1200 at 100 Hz) or a 23″ LED Samsung monitor (resolution: 1920 × 1080 at 100 Hz) and viewed the stimuli under binocular viewing conditions. Gaze position on the screen was tracked with an eye-tracker (EyeLink 1000, SR Research ®).

For the training session, custom written software (Carrot NT, Los Angeles, CA) that runs on both Apple and Windows personal computers was used. Participants had their head-position fixed with a headrest, but eye-movements were not tracked during training.

Testing Procedures

Central Vision Tests

Sixteen participants completed the central vision assessments, with 8 in the Training Group and 8 in the Control Group. Tests were conducted twice for each participant on separate days: before and after training for participants in the Training Group, and at least 24 hours apart for the Control Group. An Optec Functional Visual Analyzer (Stereo Optical Company, Chicago, IL USA) was used to measure central visual acuity (ETDRS chart) and the contrast sensitivity function (CSF), the latter with the Functional Acuity Contrast Test (FACT™) chart. Both tests were self-paced and stimuli were presented until the participant responded. The FACT chart tests orientation discrimination of sine-wave gratings with 5 spatial frequencies (1.5, 3, 6, 12, 18 cpd) and 9 levels of contrast. Participants responded to the orientation of the grating (left, right, or vertical) using the method of limits for each spatial frequency. We present these data as a function of contrast-sensitivity (1/percent contrast) so that the data are consistent with the typical presentation of contrast sensitivity functions (Campbell & Robson, 1968).

Peripheral Vision Tests

Twenty-seven participants were tested on peripheral vision, 14 in the Training Group and 13 in the Control Group (data from 3 subjects were missing due to technical problems). Tests were conducted twice for each participant on separate days: before and after training for participants in the Training Group, and at least 24 hours apart for the Control Group. For 16 of the participants (8 Trained, 8 Control) a gaze-contingent display was utilized to ensure that stimuli were properly positioned on the retina. Participants had to fixate a centrally presented red dot for 500 ms for each trial to begin. This was controlled with an eye-tracker (EyeLink 1000, SR Research ®). In the other participants similar results were obtained without the eye-tracker.

In the peripheral Acuity Test, Landolt C stimuli (using the Sloan Font size 32) were presented for 200 ms at 3 different eccentricities (2°, 5°, 10°) for each of 8 different angles (22.5°, 67.5°, 112.5°, 157.5°, 202.5°, 247.5°, 292.5°, 337.5°) around the circle. The task was to report the direction of the opening of the letter C, right or left. Participants did not receive feedback on the accuracy of their responses. The stimulus size was adjusted using a three-down/one-up staircase with separate staircases (30 trials each) for each stimulus eccentricity/angle combination, for a total of 720 trials. Thresholds were then averaged across all the positions at each eccentricity.

In the peripheral Contrast Test, participants responded to the location (left or right of fixation) on the screen of a dimly presented letter “O” via a key-press. Participants did not receive feedback on the accuracy of their responses. The stimuli were presented for 200 ms at 3 different eccentricities (2°, 5°, 10°), while the size of each stimulus was computed as log (eccentricity/2)/4, for each of 8 different angles (22.5°, 67.5°, 112.5°, 157.5°, 202.5°, 247.5°, 292.5°, 337.5°) around the circle. The stimulus contrast started at 17%, and was adjusted using a three-down/one-up staircase with separate staircases (30 trials each) for each stimulus eccentricity/angle combination, for a total of 720 trials. We present these data as a function of %-contrast to be consistent with typical assessments of contrast thresholds used in letter charts. Thresholds were then averaged across all the positions at each eccentricity.
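The staircase logic shared by the two peripheral tests can be sketched in Python as follows. This is only an illustrative sketch, not the Matlab/Psychtoolbox code used in the study: the class name, the multiplicative step factor of 0.9, and the absence of bounds on the tracked level are assumptions, since the text specifies only the three-down/one-up rule, 30 trials per staircase, and one staircase per eccentricity/angle combination.

```python
class ThreeDownOneUpStaircase:
    """Hypothetical three-down/one-up staircase.

    The tracked level (stimulus size or contrast) is made harder after three
    consecutive correct responses and easier after any incorrect response.
    """

    def __init__(self, start_level, step_factor=0.9):
        self.level = start_level
        self.step_factor = step_factor  # assumed step size, not from the paper
        self.correct_streak = 0
        self.history = []  # level presented on each trial

    def update(self, correct):
        """Record one response and return the level for the next trial."""
        self.history.append(self.level)
        if correct:
            self.correct_streak += 1
            if self.correct_streak == 3:
                self.level *= self.step_factor  # three correct: harder
                self.correct_streak = 0
        else:
            self.level /= self.step_factor  # any error: easier
            self.correct_streak = 0
        return self.level


ECCENTRICITIES_DEG = (2, 5, 10)
ANGLES_DEG = tuple(22.5 + 45.0 * k for k in range(8))  # 22.5 ... 337.5
TRIALS_PER_STAIRCASE = 30


def make_staircases(start_level):
    """One independent staircase per eccentricity/angle combination."""
    return {
        (ecc, ang): ThreeDownOneUpStaircase(start_level)
        for ecc in ECCENTRICITIES_DEG
        for ang in ANGLES_DEG
    }


def total_trials():
    """3 eccentricities x 8 angles x 30 trials per staircase."""
    return len(ECCENTRICITIES_DEG) * len(ANGLES_DEG) * TRIALS_PER_STAIRCASE
```

With the contrast test's 17% starting value, `make_staircases(0.17)` yields 24 staircases, and `total_trials()` gives the 720 trials reported for each test.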

Training Procedures

Fourteen participants each completed 24 training sessions with the integrated perceptual learning video game. Sessions were conducted on separate days with an average of 4 sessions per week. Each training session lasted approximately 30 minutes. Stimuli consisted of Gabor patches (targets) at 6 spatial frequencies (1.56, 3.13, 6.25, 12.5, 25, 50 cpd) and 8 orientations (0°, 22.5°, 45°, 67.5°, 90°, 112.5°, 135°, 157.5°). The Gaussian windows of the Gabors varied in sigma between 0.25 and 1 degrees, and the Gabors were shown at 4 phases (0°, 45°, 90°, 135°). To avoid aliasing, the 50 cpd Gabors were shown only at phases of 0° and 90° and at orientations of 0°, 45°, 90° and 135°.
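To make the size of this stimulus space concrete, the following Python sketch enumerates the permitted spatial frequency/orientation/phase combinations and evaluates a Gabor at a single point. It is a sketch under stated assumptions rather than the training software itself: the function names, the orientation convention (angle of the luminance modulation axis), and the cosine carrier are illustrative choices.

```python
import math
from itertools import product

SPATIAL_FREQS_CPD = (1.56, 3.13, 6.25, 12.5, 25, 50)
ORIENTATIONS_DEG = (0, 22.5, 45, 67.5, 90, 112.5, 135, 157.5)
PHASES_DEG = (0, 45, 90, 135)

# Restrictions stated in the text for the highest spatial frequency.
HIGH_SF_CPD = 50
HIGH_SF_ORIENTATIONS_DEG = (0, 45, 90, 135)
HIGH_SF_PHASES_DEG = (0, 90)


def is_allowed(sf_cpd, ori_deg, phase_deg):
    """Apply the anti-aliasing restriction on the 50 cpd Gabors."""
    if sf_cpd != HIGH_SF_CPD:
        return True
    return ori_deg in HIGH_SF_ORIENTATIONS_DEG and phase_deg in HIGH_SF_PHASES_DEG


def allowed_gabor_parameters():
    """All (spatial frequency, orientation, phase) triples that may be shown."""
    combos = product(SPATIAL_FREQS_CPD, ORIENTATIONS_DEG, PHASES_DEG)
    return [c for c in combos if is_allowed(*c)]


def gabor_value(x_deg, y_deg, sf_cpd, ori_deg, phase_deg, sigma_deg, contrast=1.0):
    """Signed contrast of a Gabor at position (x, y), in degrees of visual angle.

    Assumed convention: ori_deg is the direction along which luminance varies.
    """
    theta = math.radians(ori_deg)
    u = x_deg * math.cos(theta) + y_deg * math.sin(theta)
    carrier = math.cos(2.0 * math.pi * sf_cpd * u + math.radians(phase_deg))
    envelope = math.exp(-(x_deg ** 2 + y_deg ** 2) / (2.0 * sigma_deg ** 2))
    return contrast * carrier * envelope
```

Under these restrictions the five lower spatial frequencies contribute 5 × 8 × 4 = 160 combinations and the 50 cpd Gabors contribute 4 × 2 = 8, for 168 in total, before the sigma of the Gaussian window is varied.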

We describe this program as a “video-game” because numerous elements were introduced with the goal of entertainment. The program was set up like a game, with points given each time a target was selected (and taken away when distractors were selected), bonuses given for rapid responses, and levels that progressed in difficulty throughout training. Levels were defined by the types of distractors that were included, the size of stimuli that were presented, and the total number of elements. In later levels, targets and distractors would appear and disappear when not selected quickly enough. Many parameters were adaptive to participant performance, including level progression, contrast and number of stimuli, and the rate of stimulus presentation. These adaptive procedures were all based upon passing criteria that depended upon the level. Of note, given the large number of stimulus and game parameters that could change as subjects progressed through exercises, it is difficult to plot a simple metric of learning from the training sessions. Thus even though we saved details of each stimulus that was shown during the entire training regime, the gamification led to a rich but complex training data set for which more power would be required to fully analyze and comprehend.

Calibration

At the beginning of each session a calibration was run in which participants were shown a display containing Gabor stimuli of 7 contrast values spanning the range between suprathreshold and subthreshold (these were adaptively determined across sessions based on previous performance levels), with one screen for each of the 6 spatial frequencies. This calibration determined the initial contrast values for each spatial frequency to be displayed during the training exercises.

Participants ran 8–12 training exercises that lasted approximately 2 minutes each (number of training exercises varied depending on participants’ rate of performance). Exercises alternated between Static (simultaneous) and Dynamic (sequential) types. The goal of the exercises was to click on all of the targets as quickly as possible. Separate staircases were run on each spatial frequency. Targets that were not selected during the time limit would start flickering at a 20 Hz frequency. Previous research (Beste et al., 2011) shows that a visual stimulus flickering at 20 Hz is sufficient to induce learning even when there is no training task on those stimuli. The delay before flickering onset allowed the use of adaptive procedures to track the pre-flickering thresholds. If targets were still not selected while flickering, contrast increased gradually until selected. This allowed participants to successfully select all targets.

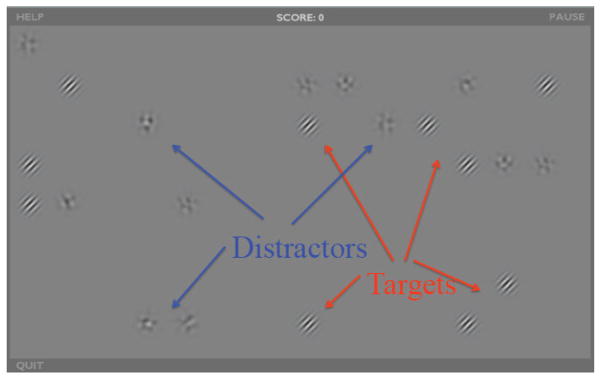

The first few exercises consisted of only targets (Gabors), but distractors were added as the training progressed (Figure 1). The number of distractors varied for each participant based on their performance and their level in the game. However, once distractors were added, all remaining sessions contained distractors. Before each exercise that included distractors, participants were given instructions including an example of a correct target and an incorrect distractor. Throughout training distractors became more similar to the targets (starting off as blobs, then oriented patterns, then noise patches of the same spatial frequency as the targets, etc.). Participants were instructed not to select distractors, on penalty of losing points. Participants received points proportional to the inverse of the contrast of the stimuli that they clicked on, and thus their scores reflected both the number of targets selected and their contrast sensitivity.

Figure 1.

Game screenshot - Static search with distractors. Participants should select the targets, and ignore the distractors. As levels progress distractors will look more and more like targets. Of note, both targets and distractors are typically much lower contrast than they appear here.

During the exercises, when a target was selected a sound was played through speakers, with interaural level differences used to co-locate the sound with the visual target. Low-frequency tones corresponded with stimuli at the bottom of the screen and high-frequency tones corresponded with stimuli at the top of the screen. Thus the horizontal and vertical locations on the screen each corresponded to a unique tone. The sounds provided an important cue to the location of the visual stimuli and were included to boost learning, as has been found in studies of multisensory facilitation (Shams & Seitz, 2008a).
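One way such a location-to-sound mapping could be realized is sketched below in Python. The text specifies only that tone frequency increased from the bottom to the top of the screen and that interaural level differences coded horizontal position; the frequency range (200–2000 Hz), the logarithmic frequency spacing, the maximum level difference (10 dB), and the function name are all assumptions made for illustration.

```python
def target_sound(x_norm, y_norm, low_hz=200.0, high_hz=2000.0, max_ild_db=10.0):
    """Map a normalized screen position to a tone and stereo gains.

    x_norm, y_norm lie in [0, 1], with (0, 0) at the bottom-left of the screen.
    Returns (frequency in Hz, left channel gain, right channel gain).
    All numeric defaults are illustrative assumptions, not values from the study.
    """
    if not (0.0 <= x_norm <= 1.0 and 0.0 <= y_norm <= 1.0):
        raise ValueError("screen coordinates must be normalized to [0, 1]")
    # Vertical position: low tones at the bottom, high tones at the top.
    freq_hz = low_hz * (high_hz / low_hz) ** y_norm
    # Horizontal position: level difference in dB, positive favours the right speaker.
    ild_db = (2.0 * x_norm - 1.0) * max_ild_db
    # Split the difference symmetrically and convert dB to amplitude gains.
    right_gain = 10.0 ** (ild_db / 40.0)
    left_gain = 10.0 ** (-ild_db / 40.0)
    return freq_hz, left_gain, right_gain
```

In this sketch a target at the horizontal center is played equally loudly in both channels, and the ratio of right to left amplitude corresponds to the requested level difference in dB.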

Static (simultaneous) Exercise

An array of targets of a single randomly determined orientation and spatial frequency combination would appear all at once, randomly scattered across the screen. The contrast and number of targets were adaptively determined. The contrast was decreased by 5% of the current contrast level whenever 80% of the displayed targets were selected within a time limit of 2.5 seconds per target, and increased whenever fewer than 40% of targets were selected within this time limit. Target number was adaptively determined to approximate the number that participants could select within 20 seconds. Each time the participant cleared a screen in less than 20 seconds, the selection rate (per second) was calculated and multiplied by 20, and the result was the number shown on subsequent rounds.
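The two adaptive rules of the static exercise can be written compactly, as in the Python sketch below. Several details are assumptions because the text does not state them: the size of the contrast increase (taken here to mirror the 5% decrease), the reading of the 80% criterion as "at least 80%", and rounding the target count to the nearest whole number. The function names are hypothetical.

```python
def update_static_contrast(contrast, fraction_selected, down_step=0.05, up_step=0.05):
    """Adjust target contrast after one static round.

    fraction_selected is the proportion of targets selected within the
    2.5-seconds-per-target time limit. up_step is an assumed value.
    """
    if fraction_selected >= 0.80:
        return contrast * (1.0 - down_step)  # performing well: lower contrast
    if fraction_selected < 0.40:
        return contrast * (1.0 + up_step)  # struggling: raise contrast
    return contrast  # in between: leave unchanged


def next_target_count(n_selected, clear_time_s, current_count, window_s=20.0):
    """Estimate how many targets the participant can select in window_s seconds.

    Updated only when the screen was cleared in less than window_s seconds;
    otherwise the current count is kept.
    """
    if 0.0 < clear_time_s < window_s:
        rate_per_s = n_selected / clear_time_s
        return max(1, round(rate_per_s * window_s))  # rounding is an assumption
    return current_count
```

For example, a participant who clears 10 targets in 10 seconds has a selection rate of 1 target per second, so 20 targets would be shown on the next round.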

Dynamic (sequential) Exercises

Targets would fade in one at a time at a random location on the screen. For each 20-second miniround all the targets would appear in a randomly chosen orientation/spatial frequency combination. Targets would appear sequentially at a rate determined adaptively (starting at 1 target every 2.5 seconds and changing this rate to the average of the current value and the average reaction time from the last round), or as soon as all previous targets were cleared. Target contrast was adaptively determined using a three-down/one-up staircase. In addition to a tone being played when targets were selected, a separate unique tone corresponding to the target location was played with the onset of each target. This tone cued the location where the target appeared on the screen. In exercises that contained distractors, the distractors appeared all at once on the screen while targets faded in sequentially.
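The presentation-rate rule for the dynamic exercise amounts to a simple running average, sketched below in Python. The function name and the handling of a round with no recorded reaction times are assumptions; the 2.5-second starting interval and the averaging rule come from the description above.

```python
INITIAL_INTERVAL_S = 2.5  # one target every 2.5 seconds at the start


def next_onset_interval(current_interval_s, reaction_times_s):
    """Interval between target onsets for the next round.

    The new interval is the mean of the current interval and the average
    reaction time from the last round. If no reaction times were recorded
    (an assumed edge case), the interval is left unchanged.
    """
    if not reaction_times_s:
        return current_interval_s
    mean_rt_s = sum(reaction_times_s) / len(reaction_times_s)
    return (current_interval_s + mean_rt_s) / 2.0
```

Starting from 2.5 seconds, a round with a mean reaction time of 1.5 seconds would shorten the interval to 2.0 seconds, so the rate moves halfway toward the participant's current speed on each round.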

RESULTS

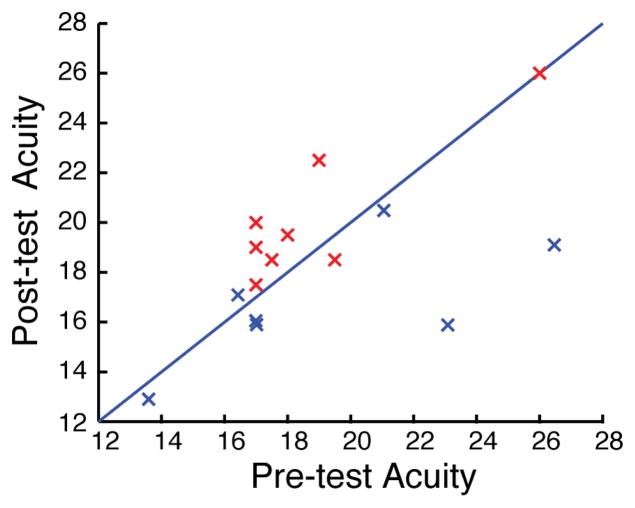

We first examined changes in central vision in 16 participants (8 Training, 8 Control) using an Optec Functional Visual Analyzer (see Figure 2; trained in blue, control in red). Central acuity significantly improved (from 20/19.3 ± 1.5 (SE) to 20/16.7 ± 0.8) in the training group (p = 0.03, t-test) but worsened slightly in the control group (20/18.9 ± 1.1 to 20/20.2 ± 1.0), with a significant interaction in learning between groups (F(1,14)=8.4, p=0.01; two-way ANOVA with session as a within subject measure and group as a between subject measure). Thus we found that training with the integrated perceptual learning video game had a positive impact on central acuity in a population with initially good vision.

Figure 2.

Central acuity. Each point represents one subject in the trained group (blue) or control group (red). Values are based upon the 20/20 acuity scale.

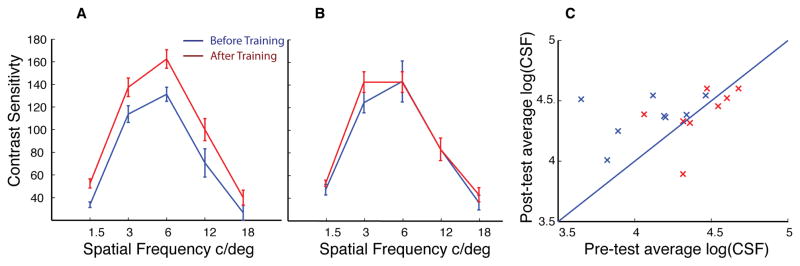

We also used the Optec analyzer to measure participants’ centrally viewed Contrast Sensitivity Function (CSF) using 5 spatial frequencies (1.5, 3, 6, 12, and 18 cpd). Of note, while the plots show contrast sensitivity, as is conventional for the FACT test, the statistical analyses were conducted on log (CSF) so as to better equate the variance across spatial frequencies. The trained group (Figure 3a) showed a significant change in CSF (F(1,7) = 12.4, p = 0.01), whereas the control group (Figure 3b) showed no change (F(1,7) = 1.7, p = 0.2), with a significant interaction in learning between groups (F(1,14)=5.6, p=0.04; three-way ANOVA with session and spatial frequency as within subject measures and group as a between subject measure). In Figure 3c we plot the average log contrast sensitivity and can see that CSF improvements were found in all subjects in the trained group, but the data were inconsistent for the control group.

Figure 3.

Contrast Sensitivity Function. Average CSF on pretest (blue) and posttest (red) for experimental (A) and control group (B). Error bars represent within subject standard error. C, scatter plot of the average of log (CSF), where each point represents one subject in the trained group (blue) or control group (red).

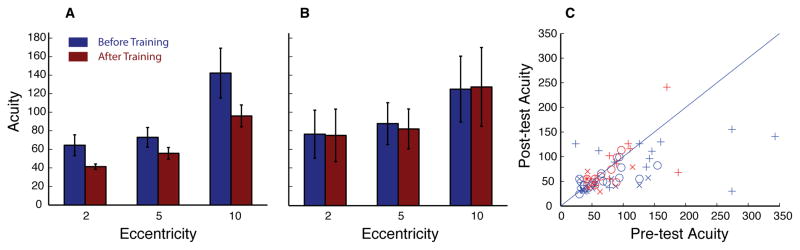

Next, we examined peripheral acuity in 27 participants (14 Training, 13 Control), 16 of whom (8 Training, 8 Control) were run with a gaze-contingent display to ensure accurate fixation on each trial, using Landolt C’s to assess peripheral acuity (Figure 4). A three-way, one-tailed ANOVA with session and eccentricity as within subject measures and group as a between subject measure found a significant interaction in learning between groups (F(1,26) = 2.8, p = 0.05), demonstrating a significant difference in learning between the trained and control groups. Furthermore, significant benefits of training were confirmed with a two-way repeated measures ANOVA (Eccentricity X Session) showing a main effect of Session for acuity (F(1,13) = 4.7, p = 0.03). Benefits to peripheral acuity in the trained group were significant at each of the eccentricities tested (2° p = 0.02, 5° p = 0.02, 10° p = 0.04; t-tests). A second ANOVA showed no such benefits for the control group (F(1,12) = 0.4, p = 0.5). A significant main effect of eccentricity was found for both groups (trained, F(2,26)=23.3, p< 0.0001; control, F(2,22)=13.0, p= 0.0002), with neither group showing an interaction between session and eccentricity (trained, F(2,26)=1.8, p= 0.19; control, F(2,22)=0.0, p= 0.99).

Figure 4.

Peripheral Acuity. Average acuity thresholds (based on 20/20 values) on pretest (blue) and posttest (red) for experimental (A) and control group (B). Error bars represent within subject standard error. C, scatter plot of individual performance, where each point represents one subject in the trained group (blue) or control group (red) at each eccentricity (x=2, O=5, and +=10).

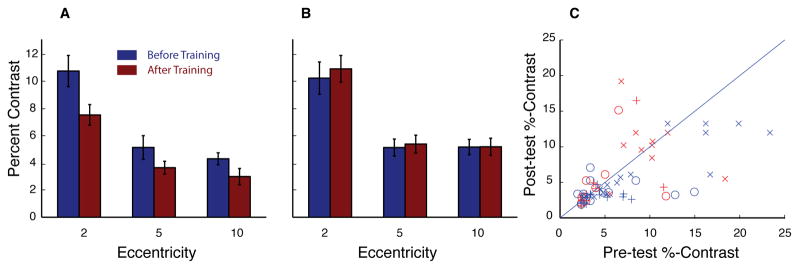

Peripheral contrast thresholds (Figure 5) were measured with a letter “O” presented at each eccentricity (scaled logarithmically to account for cortical magnification factor), also using gaze-contingent displays. Of note, while the percent contrast might be expected to be lowest at the smallest eccentricity (closest to the fovea), our results exhibit the opposite pattern. This is likely due to our scaling inadvertently decreasing the difficulty of the task as eccentricity increased. However, since our test of interest was improvement from pre-test to post-test, these baseline differences across eccentricity are not of primary concern. A three-way, one-tailed ANOVA with session and eccentricity as within subject measures and group as a between subject measure found a significant interaction in learning between groups (F(1,26) = 3.3, p = 0.04), demonstrating a significant difference in learning between the trained and control groups. For the trained group, a two-way repeated measures ANOVA revealed a main effect of Session for contrast sensitivity (F(1,13) = 6.8, p = 0.01), and improvements in peripheral contrast thresholds were significant at 2° and 10°, with only a trend at 5° (2° p = 0.003, 5° p = 0.1, 10° p = 0.01; t-tests). A second ANOVA showed no significant changes in the control group (F(1,12) = 0.6, p = 0.8). A main effect of eccentricity was found for both groups (trained, F(2,26)=30.8, p< 0.0001; control, F(2,22)=54.9, p< 0.0001), with neither group showing an interaction between session and eccentricity (trained, F(2,26)=0.6, p= 0.6; control, F(2,22)=0.1, p= 0.9).

Figure 5.

Peripheral Contrast Sensitivity. Average contrast thresholds on pretest (blue) and posttest (red) for experimental (A) and control group (B). Error bars represent within subject standard error. C, scatter plot of individual performance, where each point represents one subject in the trained group (blue) or control group (red) at each eccentricity (x=2, O=5, and +=10).

DISCUSSION

This study shows broad-based benefits to vision in a healthy adult population through an integrative perceptual-learning-based video game. To our knowledge, we are the first to show that a single training approach can produce such broad-based improvements in central and peripheral acuity and contrast sensitivity. Furthermore, all of our test measures involved different tasks and optotypes than those used in the game, including acuity and CSF measures that were not computer based and allowed prolonged viewing, and peripheral vision tests involving letters.

The magnitude of the effects that we observe with acuity rivals that found using off-the-shelf video games (Green & Bavelier, 2007); however, unlike Green and Bavelier (2003), our acuity measures were obtained with self-paced standard eye-charts rather than rapidly presented stimuli on a computer screen. As opposed to off-the-shelf video games, our program uses a custom video game framework built up from carefully controlled psychophysical approaches. In the future, we anticipate adding and subtracting various perceptual learning principles in order to better understand the contribution of each feature to learning. Additionally, our results match those of Li et al. (2009) in showing improvement along the full contrast sensitivity curve. However, unlike Li et al. (2009), our study involved no time restriction on the viewing of the stimuli while measuring contrast sensitivity. Also, those studies involved 30–50 hours of video game training, whereas we trained subjects for only 12 hours. While it is hard to infer the relationship between the amount of training and the resultant improvements across studies, it appears that our integrated perceptual-learning-based video game is at least competitive with, and may be producing greater benefits to vision than, off-the-shelf video games. However, there are numerous other benefits that video games yield to other aspects of cognition that are not addressed in our study (Green & Bavelier, 2003; Green et al., 2010).

Other perceptual learning studies demonstrate relatively broad-based improvements in visually impaired individuals by showing that the adult visual system is sufficiently plastic to ameliorate the effects of amblyopia (Levi & Li, 2009), presbyopia (Polat, 2009), macular degeneration (Baker et al., 2005), and stroke (Huxlin et al., 2009), to support late-life recovery of visual function (Ostrovsky et al., 2006), and to benefit other individuals with impaired vision (Huang et al., 2008; Zhou et al., 2012). These studies suggest that our integrated training program may yield significant benefits in these populations as well.

We combined multiple perceptual learning approaches, such as training with a diverse set of stimuli (Xiao et al., 2008), optimized stimulus presentation (Beste et al., 2011), multisensory facilitation (Shams & Seitz, 2008b), and consistent reinforcement of training stimuli (Seitz & Watanabe, 2009), which have individually contributed to increasing the speed (Seitz et al., 2006), magnitude (Seitz et al., 2006; Vlahou et al., 2012) and generality of learning (Green & Bavelier, 2007; Xiao et al., 2008), with the goal of creating an integrated perceptual-learning-based training program that would powerfully generalize to real world tasks. The integration of each of these principles into the task is described below:

Diverse set of stimuli – perceptual learning can often be highly specific to the trained stimulus features (Fahle, 2005), such as orientation (Fiorentini & Berardi, 1980), retinal location (Karni & Sagi, 1991) or even the eye of training (Poggio et al., 1992; Seitz et al., 2009). For example training with a single visual stimulus at a single screen location can result in learning that is specific to that situation. However, training with multiple stimulus types at multiple locations can lead to broad transfer across stimulus types and locations (Xiao et al., 2008; Yu et al., 2010). In the vision training game we used a diverse set of stimuli (multiple orientations, spatial frequencies, locations, distractor types, etc). Importantly, we varied orientations, spatial frequencies and distractor types across blocks in order to minimize effects of roving (Adini et al., 2004; Yu et al., 2004; Seitz et al., 2005; Otto et al., 2006; Zhang et al., 2008; Hussain et al., 2012).

Optimized stimulus presentation - research on exposure-based learning (Godde et al., 2000; Dinse et al., 2006; Seitz & Dinse, 2007; Beste et al., 2011) shows that a visual stimulus flickering at 20 Hz is sufficient to induce learning even when there is no training task on those stimuli. LTP-like stimulation was added to the game with a simple manipulation in which targets started flickering when not clicked within 2 seconds. The delay before flickering onset allowed time for the adaptive procedures to track the pre-flickering thresholds.

Multisensory facilitation - multisensory interactions facilitate learning (Seitz et al., 2006; Kim et al., 2008). However, to date, most cognitive training procedures either do not include sounds as part of the task (other than as feedback) or include sounds that are not coordinated with visual stimuli. As described above, each location on the screen corresponded to a unique sound, with low-frequency tones corresponding to targets at the bottom of the screen and high-frequency tones to targets at the top. As each target appeared, a sound was played through two speakers such that interaural level differences could be used to co-locate the horizontal position of the visual target, and tone frequency could be used to locate the vertical position. Having complementary information about the target objects come from different sensory modalities allows the senses to work together to facilitate learning.

Consistently reinforcing training stimuli – research of task-irrelevant learning (Seitz & Watanabe, 2003; Seitz et al., 2009) demonstrates that coordination of reinforcement and training stimuli is key to producing learning. To date, most perceptual learning studies train participants at threshold levels; participants respond correctly to only ~75% of trials and therefore receive no reward on ~25% of trials. In our game we utilized a novel procedure that allows high performance while ensuring that the task remains challenging. With a simple manipulation to the game, a target that is not clicked within two seconds increases in contrast until the target is clicked. In this way, participants successfully click on all of the target stimuli although not always within the designated time. For the adaptive procedures, accurate responses are those within the 2-second response window and incorrect responses are those that occur post-brightening. Thus stimuli are kept at threshold while participants are nevertheless able to select nearly 100% of the target stimuli.
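The reinforcement scheme described in the last item can be illustrated with the short Python sketch below: a target keeps its threshold-level contrast during the response window and is then brightened until it is selected, while only clicks inside the window count as correct for the adaptive procedures. The linear ramp, its slope, and the function names are assumptions; the text states only that contrast increased gradually after the 2-second window.

```python
RESPONSE_WINDOW_S = 2.0  # window after which an unselected target is brightened


def displayed_contrast(elapsed_s, base_contrast, ramp_per_s=0.1, max_contrast=1.0):
    """Contrast of a not-yet-selected target elapsed_s seconds after onset.

    The linear ramp and its slope are illustrative assumptions.
    """
    if elapsed_s <= RESPONSE_WINDOW_S:
        return base_contrast
    ramped = base_contrast + ramp_per_s * (elapsed_s - RESPONSE_WINDOW_S)
    return min(max_contrast, ramped)


def counts_as_correct(click_latency_s):
    """Only clicks inside the response window count as correct for the staircase."""
    return click_latency_s <= RESPONSE_WINDOW_S
```

Because every target is eventually bright enough to find, the participant is rewarded on nearly every target, yet the adaptive procedures still receive an error signal whenever the click arrives after brightening begins.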

While our study provides a proof of principle that the integrated vision training program is effective, there are a number of limitations that will need to be addressed in further research. A first concern regards the lack of a placebo-based control (Boot et al., 2011). While it is classically believed that acuity and contrast sensitivity are relatively robust to placebo effects, and benefits to contrast sensitivity typically require extensive and specialized training (Adini et al., 2002; Furmanski et al., 2004), without such a control in our design we cannot fully rule out such concerns. A second concern regards the complexity of our training procedure, in which we adapted many task features and included multiple exercise types (such as the static and dynamic rounds) with the goal of creating a compelling user experience. This added complexity made it difficult to relate performance during training to test results and leaves ambiguity regarding which task elements aided, or potentially harmed, the learning process. Third, we discuss the importance of different perceptual learning mechanisms that are integrated into our procedure, but the current design doesn’t inform us of their precise interactions. Further study will be required to address these concerns and to better understand the learning effects observed in the current study.

CONCLUSION

Here we show that a scientifically principled perceptual learning video game provides broad-based improvements in vision in normally seeing individuals. This approach combines the careful control obtained in psychophysical studies with a more complex video-game-type interface. Also key to our approach is the integration of principles from numerous prior works on perceptual learning. While the details of how these principles interact will be the topic of future research, we suggest that this type of integrative framework has significant translational benefits. As such, our research can contribute to training approaches for typically developed individuals, and potentially to rehabilitative approaches for individuals with low vision.

Highlights.

We designed a video game integrating multiple perceptual learning approaches

Participants with normal vision trained with this game

Training dramatically improved central and peripheral acuity and contrast sensitivity

Control subjects showed no change in vision across tests

Integrative perceptual video game significantly augments vision

REFERENCES

- Adini Y, Sagi D, Tsodyks M. Context-enabled learning in the human visual system. Nature. 2002;415:790–793. doi: 10.1038/415790a.

- Adini Y, Wilkonsky A, Haspel R, Tsodyks M, Sagi D. Perceptual learning in contrast discrimination: the effect of contrast uncertainty. J Vis. 2004;4:993–1005. doi: 10.1167/4.12.2.

- Ahissar M, Hochstein S. Task difficulty and the specificity of perceptual learning. Nature. 1997;387:401–406. doi: 10.1038/387401a0.

- Baker CI, Peli E, Knouf N, Kanwisher NG. Reorganization of visual processing in macular degeneration. J Neurosci. 2005;25:614–618. doi: 10.1523/JNEUROSCI.3476-04.2005.

- Ball K, Sekuler R. A specific and enduring improvement in visual motion discrimination. Science. 1982;218:697–698. doi: 10.1126/science.7134968.

- Beste C, Wascher E, Gunturkun O, Dinse HR. Improvement and impairment of visually guided behavior through LTP- and LTD-like exposure-based visual learning. Curr Biol. 2011;21:876–882. doi: 10.1016/j.cub.2011.03.065.

- Boot WR, Blakely DP, Simons DJ. Do action video games improve perception and cognition? Front Psychol. 2011;2:226. doi: 10.3389/fpsyg.2011.00226.

- Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436.

- Campbell FW, Robson JG. Application of Fourier analysis to the visibility of gratings. J Physiol. 1968;197:551–566. doi: 10.1113/jphysiol.1968.sp008574.

- Chun MM. Contextual cueing of visual attention. Trends Cogn Sci. 2000;4:170–178. doi: 10.1016/s1364-6613(00)01476-5.

- Dinse HR, Kleibel N, Kalisch T, Ragert P, Wilimzig C, Tegenthoff M. Tactile coactivation resets age-related decline of human tactile discrimination. Ann Neurol. 2006;60:88–94. doi: 10.1002/ana.20862.

- Fahle M. Perceptual learning: specificity versus generalization. Curr Opin Neurobiol. 2005;15:154–160. doi: 10.1016/j.conb.2005.03.010.

- Fiorentini A, Berardi N. Perceptual learning specific for orientation and spatial frequency. Nature. 1980;287:43–44. doi: 10.1038/287043a0.

- Furmanski CS, Schluppeck D, Engel SA. Learning strengthens the response of primary visual cortex to simple patterns. Curr Biol. 2004;14:573–578. doi: 10.1016/j.cub.2004.03.032.

- Godde B, Stauffenberg B, Spengler F, Dinse HR. Tactile coactivation-induced changes in spatial discrimination performance. J Neurosci. 2000;20:1597–1604. doi: 10.1523/JNEUROSCI.20-04-01597.2000.

- Gold J, Bennett PJ, Sekuler AB. Signal but not noise changes with perceptual learning. Nature. 1999;402:176–178. doi: 10.1038/46027.

- Green CS, Bavelier D. Action video game modifies visual selective attention. Nature. 2003;423:534–537. doi: 10.1038/nature01647.

- Green CS, Bavelier D. Action-video-game experience alters the spatial resolution of vision. Psychol Sci. 2007;18:88–94. doi: 10.1111/j.1467-9280.2007.01853.x.

- Green CS, Pouget A, Bavelier D. Improved Probabilistic Inference as a General Learning Mechanism with Action Video Games. Curr Biol. 2010;20:1573–1579. doi: 10.1016/j.cub.2010.07.040.

- Huang CB, Zhou Y, Lu ZL. Broad bandwidth of perceptual learning in the visual system of adults with anisometropic amblyopia. Proc Natl Acad Sci U S A. 2008;105:4068–4073. doi: 10.1073/pnas.0800824105.

- Hussain Z, Webb BS, Astle AT, McGraw PV. Perceptual learning reduces crowding in amblyopia and in the normal periphery. J Neurosci. 2012;32:474–480. doi: 10.1523/JNEUROSCI.3845-11.2012.

- Huxlin KR, Martin T, Kelly K, Riley M, Friedman DI, Burgin WS, Hayhoe M. Perceptual relearning of complex visual motion after V1 damage in humans. J Neurosci. 2009;29:3981–3991. doi: 10.1523/JNEUROSCI.4882-08.2009.

- Karni A, Sagi D. Where practice makes perfect in texture discrimination: evidence for primary visual cortex plasticity. Proc Natl Acad Sci U S A. 1991;88:4966–4970. doi: 10.1073/pnas.88.11.4966.

- Kim RS, Seitz AR, Shams L. Benefits of stimulus congruency for multisensory facilitation of visual learning. PLoS ONE. 2008;3:e1532. doi: 10.1371/journal.pone.0001532.

- Levi DM, Li RW. Improving the performance of the amblyopic visual system. Philos Trans R Soc Lond B Biol Sci. 2009;364:399–407. doi: 10.1098/rstb.2008.0203.

- Li R, Polat U, Makous W, Bavelier D. Enhancing the contrast sensitivity function through action video game training. Nat Neurosci. 2009;12:549–551. doi: 10.1038/nn.2296.

- Li RW, Ngo C, Nguyen J, Levi DM. Video-game play induces plasticity in the visual system of adults with amblyopia. PLoS Biol. 2011;9:e1001135. doi: 10.1371/journal.pbio.1001135.

- Lin JY, Pype AD, Murray SO, Boynton GM. Enhanced memory for scenes presented at behaviorally relevant points in time. PLoS Biol. 2010;8:e1000337. doi: 10.1371/journal.pbio.1000337.

- Ostrovsky Y, Andalman A, Sinha P. Vision following extended congenital blindness. Psychol Sci. 2006;17:1009–1014. doi: 10.1111/j.1467-9280.2006.01827.x.

- Otto TU, Herzog MH, Fahle M, Zhaoping L. Perceptual learning with spatial uncertainties. Vision Res. 2006;46:3223–3233. doi: 10.1016/j.visres.2006.03.021.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis. 1997;10:437–442.

- Poggio T, Fahle M, Edelman S. Fast perceptual learning in visual hyperacuity. Science. 1992;256:1018–1021. doi: 10.1126/science.1589770.

- Polat U. Making perceptual learning practical to improve visual functions. Vision Res. 2009;49:2566–2573. doi: 10.1016/j.visres.2009.06.005.

- Seitz AR, Dinse HR. A common framework for perceptual learning. Curr Opin Neurobiol. 2007;17:148–153. doi: 10.1016/j.conb.2007.02.004.

- Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61:700–707. doi: 10.1016/j.neuron.2009.01.016.

- Seitz AR, Kim R, Shams L. Sound facilitates visual learning. Curr Biol. 2006;16:1422–1427. doi: 10.1016/j.cub.2006.05.048.

- Seitz AR, Watanabe T. Psychophysics: Is subliminal learning really passive? Nature. 2003;422:36. doi: 10.1038/422036a.

- Seitz AR, Watanabe T. The phenomenon of task-irrelevant perceptual learning. Vision Res. 2009;49:2604–2610. doi: 10.1016/j.visres.2009.08.003.

- Seitz AR, Yamagishi N, Werner B, Goda N, Kawato M, Watanabe T. Task-specific disruption of perceptual learning. Proc Natl Acad Sci U S A. 2005;102:14895–14900. doi: 10.1073/pnas.0505765102.

- Shams L, Seitz AR. Benefits of multisensory learning. Trends Cogn Sci. 2008a;12:411–417. doi: 10.1016/j.tics.2008.07.006.

- Shams L, Seitz AR. Benefits of multisensory learning. Trends Cogn Sci. 2008b. doi: 10.1016/j.tics.2008.07.006.

- Shibata K, Yamagishi N, Ishii S, Kawato M. Boosting perceptual learning by fake feedback. Vision Res. 2009;49:2574–2585. doi: 10.1016/j.visres.2009.06.009.

- Vaina LM, Belliveau JW, des Roziers EB, Zeffiro TA. Neural systems underlying learning and representation of global motion. Proc Natl Acad Sci U S A. 1998;95:12657–12662. doi: 10.1073/pnas.95.21.12657.

- Vaina LM, Gross CG. Perceptual deficits in patients with impaired recognition of biological motion after temporal lobe lesions. Proc Natl Acad Sci U S A. 2004;101:16947–16951. doi: 10.1073/pnas.0407668101.

- Vlahou EL, Protopapas A, Seitz AR. Implicit training of nonnative speech stimuli. J Exp Psychol Gen. 2012;141:363–381. doi: 10.1037/a0025014.

- Xiao LQ, Zhang JY, Wang R, Klein SA, Levi DM, Yu C. Complete Transfer of Perceptual Learning across Retinal Locations Enabled by Double Training. Curr Biol. 2008;18:1922–1926. doi: 10.1016/j.cub.2008.10.030.

- Yu C, Klein SA, Levi DM. Perceptual learning in contrast discrimination and the (minimal) role of context. J Vis. 2004;4:169–182. doi: 10.1167/4.3.4.

- Yu C, Zhang JY, Zhang GL, Xiao LQ, Klein SA, Levi DM. Rule-Based Learning Explains Visual Perceptual Learning and Its Specificity and Transfer. J Neurosci. 2010;30:12323–12328. doi: 10.1523/JNEUROSCI.0704-10.2010.

- Zhang JY, Kuai SG, Xiao LQ, Klein SA, Levi DM, Yu C. Stimulus coding rules for perceptual learning. PLoS Biol. 2008;6:e197. doi: 10.1371/journal.pbio.0060197.

- Zhou J, Zhang Y, Dai Y, Zhao H, Liu R, Hou F, Liang B, Hess RF, Zhou Y. The eye limits the brain’s learning potential. Sci Rep. 2012;2:364. doi: 10.1038/srep00364.