ABSTRACT

Understanding the factors that facilitate implementation of behavioral medicine programs into practice can advance translational science. Often, translation or implementation studies use case study methods with small sample sizes. Methodological approaches that systematize findings from these types of studies are needed to improve rigor and advance the field. Qualitative comparative analysis (QCA) is a method and analytical approach that can advance implementation science. QCA offers an approach for rigorously conducting translational and implementation research limited by a small number of cases. We describe the methodological and analytic approach for using QCA and provide examples of its use in the health and health services literature. QCA brings together qualitative or quantitative data derived from cases to identify necessary and sufficient conditions for an outcome. QCA offers advantages for researchers interested in analyzing complex programs and for practitioners interested in developing programs that achieve successful health outcomes.

KEYWORDS: Qualitative comparative analysis (QCA), Methods for translational and implementation research, Case study

INTRODUCTION

In this paper, we describe the methodological features and advantages of using qualitative comparative analysis (QCA). QCA is sometimes called a “mixed method.” It refers to both a specific research approach and an analytic technique that is distinct from and offers several advantages over traditional qualitative and quantitative methods [1–4]. It can be used to (1) analyze small to medium numbers of cases (e.g., 10 to 50) when traditional statistical methods are not possible, (2) examine complex combinations of explanatory factors associated with translation or implementation “success,” and (3) combine qualitative and quantitative data using a unified and systematic analytic approach.

This method may be especially pertinent for behavioral medicine given the growing interest in implementation science [5]. Translating behavioral medicine research and interventions into useful practice and policy requires an understanding of the implementation context. Understanding the context under which interventions work, and how different ways of implementing an intervention lead to successful outcomes, is required for “T3” (i.e., dissemination and implementation of evidence-based interventions) and “T4” translations (i.e., policy development to encourage evidence-based intervention use among various stakeholders) [6, 7].

Case studies are a common way to assess different program implementation approaches and to examine complex systems (e.g., health care delivery systems, interventions in community settings) [8]. However, multiple case studies often have small, naturally limited samples or populations, which lack adequate power to support conventional statistical analyses. Case studies also may use mixed-method approaches, but when researchers collect quantitative and qualitative data in tandem, they rarely integrate both types of data systematically in the analysis. QCA offers solutions for the challenges posed by case studies and provides a useful analytic tool for translating research into policy recommendations. Using QCA methods could help behavioral medicine researchers understand implementation as they translate research from randomized controlled trials into practice settings. In this paper, we describe the conceptual basis of QCA, its application in the health and health services literature, and its features and limitations.

CONCEPTUAL BASIS OF QCA

QCA has its foundations in historical, comparative social science. Researchers in this field developed QCA because probabilistic methods failed to capture the complexity of social phenomena and required large sample sizes [1]. Recently, this method has made inroads into health research and evaluation [9–13] because of several useful features as follows: (1) it models equifinality, which is the ability to identify more than one causal pathway to an outcome (or absence of the outcome); (2) it identifies conjunctural causation, which means that single conditions may not display their effects on their own, but only in conjunction with other conditions; and (3) it implies asymmetrical relationships between causal conditions and outcomes, which means that causal pathways for achieving the outcome differ from causal pathways for failing to achieve the outcome.

QCA is a case-oriented approach that examines relationships between conditions (similar to explanatory variables in regression models) and an outcome using set theory, a branch of mathematics or of symbolic logic that deals with the nature and relations of sets. A set-theoretic approach to modeling causality differs from probabilistic methods, which examine the independent, additive influence of variables on an outcome. Regression models, based on underlying assumptions about sampling and distribution of the data, ask “what factor, holding all other factors constant at each factor’s average, will increase (or decrease) the likelihood of an outcome?” QCA, an approach based on the examination of set, subset, and superset relationships, asks “what conditions, alone or in combination with other conditions, are necessary or sufficient to produce an outcome?” For additional QCA definitions, see Ragin [4].

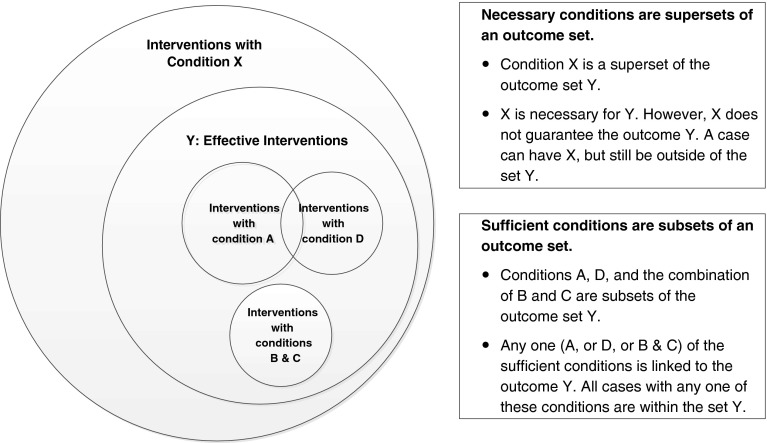

Necessary conditions are those that exhibit a superset relationship with the outcome set and are conditions or combinations of conditions that must be present for an outcome to occur. In assessing necessity, a researcher “identifies conditions shared by cases with the same outcome” [4] (p. 20). Figure 1 shows a hypothetical example. In this figure, condition X is a necessary condition for an effective intervention because all cases with the outcome present are also members of the set of cases with condition X; however, condition X is not sufficient for an effective intervention because it is possible to be a member of the set of cases with condition X, but not be a member of the outcome set [14].

Fig. 1.

Necessary and sufficient conditions and set-theoretic relationships

Sufficient conditions exhibit subset relationships with an outcome set and demonstrate that “the cause in question produces the outcome in question” [3] (p. 92). Figure 1 shows the multiple and different combinations of conditions that produce the hypothetical outcome, “effective intervention,” (1) by having condition A present, (2) by having condition D present, or (3) by having the combination of conditions B and C present. None of these conditions is necessary and any one of these conditions or combinations of conditions is sufficient for the outcome of an effective intervention.
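The superset and subset relationships described above can be checked directly with set operations. The following is a minimal sketch, assuming invented case identifiers (`c1` through `c8`) and invented set memberships that mirror the hypothetical conditions X, A, D, and the combination of B and C; none of the values come from the cited studies.

```python
# Hypothetical case identifiers; the memberships are invented for illustration.
outcome = {"c1", "c2", "c3", "c4", "c5", "c6"}   # cases with an effective intervention
condition_x = outcome | {"c7", "c8"}              # X: present in every outcome case and in two others
condition_a = {"c1", "c2"}                        # A present
condition_d = {"c3"}                              # D present
b_and_c = {"c4", "c5", "c6"}                      # B and C both present


def is_necessary(condition, outcome):
    """A condition is necessary when its set is a superset of the outcome set."""
    return condition >= outcome


def is_sufficient(condition, outcome):
    """A condition is sufficient when its set is a subset of the outcome set."""
    return condition <= outcome


for name, members in [("X", condition_x), ("A", condition_a),
                      ("D", condition_d), ("B and C", b_and_c)]:
    print(name,
          "necessary:", is_necessary(members, outcome),
          "sufficient:", is_sufficient(members, outcome))
```

In this sketch, X is necessary but not sufficient, while A, D, and the combination of B and C are each sufficient but not necessary.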

QCA AS AN APPROACH AND AS AN ANALYTIC TECHNIQUE

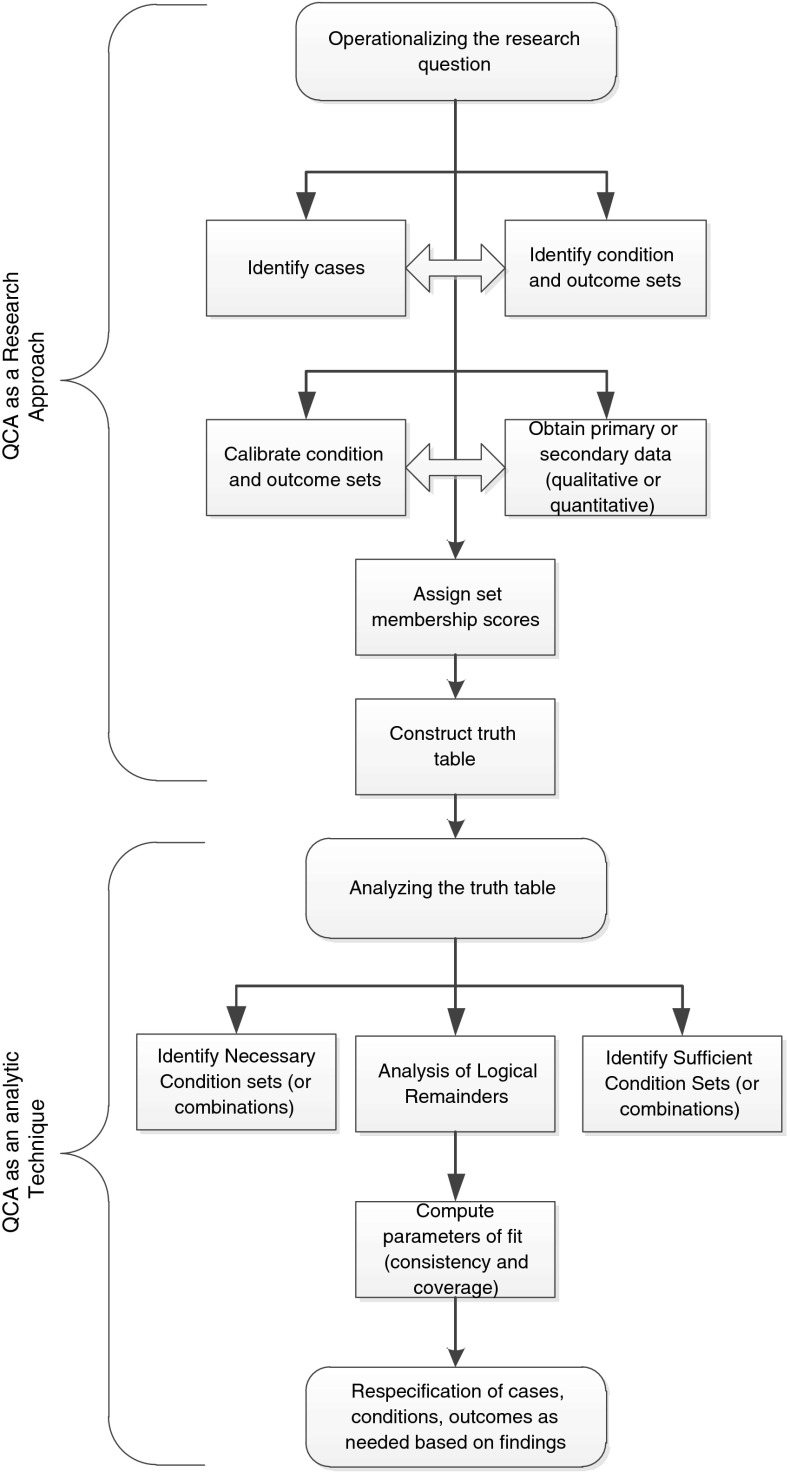

The term “QCA” is sometimes used to refer to the comparative research approach but also refers to the “analytic moment” during which Boolean algebra and set theory logic are applied to truth tables constructed from data derived from included cases. Figure 2 characterizes this distinction. Although this figure depicts steps as sequential, like many research endeavors, these steps are somewhat iterative, with respecification and reanalysis occurring along the way to final findings. We describe each of the essential steps of QCA as an approach and analytic technique and provide examples of how it has been used in health-related research.

Fig. 2.

QCA as an approach and as an analytic technique

Operationalizing the research question

Like other types of studies, the first step involves identifying the research question(s) and developing a conceptual model. This step guides the study as a whole and also informs case, condition (cf. variable), and outcome selection. As mentioned above, QCA frames research questions differently than traditional quantitative or qualitative methods. Research questions appropriate for a QCA approach would seek to identify the necessary and sufficient conditions required to achieve the outcome. Thus, formulating a QCA research question emphasizes what program components or features, individually or in combination, need to be in place for a program or intervention to have a chance at being effective (i.e., necessary conditions) and what program components or features, individually or in combination, would produce the outcome (i.e., sufficient conditions). For example, a set-theoretic hypothesis would be as follows: If a program is supported by strong organizational capacity and a comprehensive planning process, then the program will be successful. A hypothesis better addressed by probabilistic methods would be as follows: Organizational capacity, holding all other factors constant, increases the likelihood that a program will be successful.

For example, Longest and Thoits [15] drew on an extant stress process model to assess whether the pathways leading to psychological distress differed for women and men. Using QCA was appropriate for their study because the stress process model “suggests that particular patterns of predictors experienced in tandem may have unique relationships with health outcomes” (p. 4, italics added). They theorized that predictors would exhibit effects in combination because some aspects of the stress process model would buffer the risk of distress (e.g., social support) while others simultaneously would increase the risk (e.g., negative life events).

Identify cases

The number of cases in a QCA analysis may be determined by the population (e.g., 10 intervention sites, 30 grantees). When particular cases can be chosen from a larger population, Berg-Schlosser and De Meur [16] offer other strategies and best practices for choosing cases. Unless the number of cases relies on an existing population (i.e., 30 programs or grantees), the outcome of interest and existing theory drive case selection, unlike variable-oriented research [3, 4] in which numbers are driven by statistical power considerations and depend on variation in the dependent variable. For use in causal inference, both cases that exhibit and do not exhibit the outcome should be included [16]. If a researcher is interested in developing typologies or concept formation, he or she may wish to examine similar cases that exhibit differences on the outcome or to explore cases that exhibit the same outcome [14, 16].

For example, Kahwati et al. [9] examined the structure, policies, and processes that might lead to an effective clinical weight management program in a large national integrated health care system, as measured by mean weight loss among patients treated at the facility. To examine pathways that lead to both better and poorer facility-level weight loss, 11 facilities from among those with the largest weight loss outcomes and 11 facilities from among those with the smallest were included. By choosing cases based on specific outcomes, Kahwati et al. could identify multiple patterns of success (or failure) that explain the outcome rather than the variability associated with the outcome.

Identify conditions and outcome sets

Selecting conditions relies on the research question, conceptual model, and number of cases, as in other research methods. Conditions (or “sets” or “condition sets”) refer to the explanatory factors in a model; they are similar to variables. Because QCA research questions assess necessary and sufficient conditions, a researcher should consider which conditions in the conceptual model would theoretically produce the outcome individually or in combination. This helps to focus the analysis and number of conditions. Ideally, for a case study design with a small (e.g., 10–15) or intermediate (e.g., 16–100) number of cases, one should aim for fewer than five conditions because in QCA a researcher assesses all possible configurations of conditions. Adding conditions to the model increases the possible number of combinations exponentially (i.e., $2^k$, where $k$ is the number of conditions). For three conditions, eight possible combinations of the selected conditions exist as follows: the presence of A, B, C together, the lack of A with B and C present, the lack of A and lack of B with C present, and so forth. Having too many conditions will likely mean that no cases fall into a particular configuration, and that configuration cannot be assessed by empirical examples. When one or more configurations are not represented by the cases, this is known as limited diversity, and QCA experts suggest multiple strategies for managing such situations [4, 14].
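To make the growth in configurations concrete, the sketch below enumerates every presence/absence combination for a list of conditions. The function name `configurations` and the condition labels are illustrative assumptions, not part of any QCA software.

```python
from itertools import product


def configurations(conditions):
    """Return every presence (1) / absence (0) combination of the conditions."""
    return [dict(zip(conditions, values))
            for values in product((1, 0), repeat=len(conditions))]


three = configurations(["A", "B", "C"])
for config in three:
    print(config)
print("Configurations for 3 conditions:", len(three))

# The count doubles with each added condition.
for k in range(3, 8):
    print(k, "conditions ->", 2 ** k, "configurations")
```

With 10 to 15 cases, even five conditions (32 configurations) guarantee that most configurations will have no empirical cases, which is one way to see why a small number of conditions is advised.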

For example, Ford et al. [10] studied health departments’ implementation of core public health functions and organizational factors (e.g., resource availability, adaptability) and how those conditions lead to superior and inferior population health changes. They examined three core public health functions (i.e., assessment of environmental and population public health needs, capacity for policy development, and authority over assurance of healthcare operations) and operationalized them by using composite measures of varied health indicators compiled in a UnitedHealth Group report. In this examination of 41 state health departments, the authors found that all three core public health functions were necessary for population health improvement. The absence of any of the core public health functions was sufficient for poorer population health outcomes; thus, only the health departments with the ability to perform all three core functions had improved outcomes. Additionally, these three core functions in combination with either resource availability or adaptability were sufficient combinations (i.e., causal pathways) for improved population health outcomes.

Calibrate condition and outcome sets

Calibration refers to “adjusting (measures) so that they match or conform to dependably known standards” and is a common way of standardizing data in the physical sciences [4] (p. 72). Calibration requires the researcher to make sense of variation in the data and apply expert knowledge about what aspects of the variation are meaningful. Because calibration depends on defining conditions based on those “dependably known standards,” QCA relies on expert substantive knowledge, theory, or criteria external to the data themselves [14]. This may require researchers to collaborate closely with program implementers.

In QCA, one can use “crisp” set or “fuzzy” set calibration. Crisp sets, which are similar to dichotomous categorical variables in regression, establish decision rules defining a case as fully in the set (i.e., condition) or fully out of the set; fuzzy sets establish degrees of membership in a set. Fuzzy sets “differentiate between different levels of belonging anchored by two extreme membership scores at 1 and 0” [14] (p. 28). They can be continuous (0, 0.1, 0.2, ...) or have qualitatively defined anchor points (e.g., 0 is fully out of the set; 0.33 is more out than in the set; 0.66 is more in than out of the set; 1 is fully in the set). A researcher selects fuzzy sets and the corresponding resolution (i.e., continuous, four cutoff points, six cutoff points) based on theory and meaningful differences between cases and must be able to provide a verbal description for each cutoff point [14]. If, for example, a researcher cannot distinguish between 0.7 and 0.8 membership in a set, then a more continuous scoring of cases would not be useful; a four-point scale may better characterize the data. Although crisp and fuzzy sets are more commonly used, new multivariate forms of QCA are emerging, as are variants that incorporate elements of time [14, 17, 18].

Fuzzy sets have the advantage of maintaining more detail for data with continuous values. However, this strength also makes interpretation more difficult. When an observation is coded with fuzzy sets, a particular observation has some degree of membership in the set “condition A” and in the set “condition NOT A.” Thus, when doing analyses to identify sufficient conditions, a researcher must make a judgment call on what benchmark constitutes a recommendation threshold for policy or programmatic action.
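The simultaneous membership in “condition A” and “condition NOT A” follows from the usual fuzzy-set operations: negation is one minus the membership score, a combination of conditions (logical AND) takes the minimum score, and alternative conditions (logical OR) take the maximum. The sketch below illustrates these conventions; the function names and the example scores are assumptions chosen for illustration.

```python
def negate(score):
    """Membership in NOT A is one minus membership in A."""
    return 1 - score


def fuzzy_and(*scores):
    """Membership in a combination of conditions is the weakest membership."""
    return min(scores)


def fuzzy_or(*scores):
    """Membership in a set of alternatives is the strongest membership."""
    return max(scores)


# A hypothetical case that is "more in than out" of condition A
# and "more out than in" of condition B.
a, b = 0.66, 0.33
print("NOT A:", round(negate(a), 2))
print("A AND B:", fuzzy_and(a, b))
print("A OR B:", fuzzy_or(a, b))
```

With crisp scores of 0 and 1, the same functions reduce to ordinary Boolean negation, conjunction, and disjunction.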

In creating decision rules for calibration, a researcher can use a variety of techniques to identify cutoff points or anchors. For qualitative conditions, a researcher can define decision rules by drawing from the literature and knowledge of the intervention context. For conditions with numeric values, a researcher can also employ statistical approaches. Ideally, when using statistical approaches, a researcher should establish thresholds using substantive knowledge about set membership (thus, translating variation into meaningful categories). Although measures of central tendency (e.g., cases with a value above the median are considered fully in the set) can be used to set cutoff points, some experts consider the sole use of this method to be flawed because case classification is determined by a case’s relative value in regard to other cases as opposed to its absolute value in reference to an external referent [14].

For example, in their study of the National Cancer Institute’s Community Clinical Oncology Program (NCI CCOP), Weiner et al. [19] had numeric data on their five study measures. They transformed their study measures by using their knowledge of the CCOP and by asking NCI officials to identify three values: full membership in a set, a point of maximum ambiguity, and nonmembership in the set. For their outcome set, high accrual in clinical trials, they established an accrual of 100 enrolled patients as fully in the set of high accrual, 70 as a point of ambiguity (neither in nor out of the set), and 50 and below as fully out of the set because “CCOPs must maintain a minimum of 50 patients to maintain CCOP funding” (p. 288). By using QCA and operationalizing condition sets in this way, they were able to answer what condition sets produce high accrual, not what factors predict more accrual. The advantage is that by using this approach and analytic technique, they were able to identify sets of factors that are linked with a very specific outcome of interest.
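One way to turn three anchors like these into membership scores is a logistic transformation in which the point of maximum ambiguity maps to 0.5 and the full-membership and nonmembership anchors map to roughly 0.95 and 0.05. The sketch below is an assumption about how such a calibration could be computed; the text above does not state how Weiner et al. derived scores between the anchors, and the function name `calibrate` is hypothetical. Only the anchor values (100, 70, 50) come from the example.

```python
import math


def calibrate(value, full, crossover, non):
    """Map a raw value to a fuzzy membership score between 0 and 1.

    Assumed convention: the crossover maps to log-odds 0 (score 0.5), the
    full-membership anchor to log-odds +3 (about 0.95), and the nonmembership
    anchor to log-odds -3 (about 0.05).
    """
    if value >= crossover:
        log_odds = 3.0 * (value - crossover) / (full - crossover)
    else:
        log_odds = -3.0 * (crossover - value) / (crossover - non)
    return 1.0 / (1.0 + math.exp(-log_odds))


# Anchors from the accrual example: 100 (fully in), 70 (ambiguous), 50 (fully out).
for accrual in (40, 50, 60, 70, 85, 100, 120):
    print(accrual, round(calibrate(accrual, full=100, crossover=70, non=50), 2))
```

Under this convention the anchors themselves score about 0.95 and 0.05 rather than exactly 1 and 0, so a researcher who wants anchor values to be exactly in or out of the set would need a different rule, such as a qualitatively defined four-value scheme.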

Obtain primary or secondary data

Data sources vary based on the study, availability of the data, and feasibility of data collection. Data can be qualitative or quantitative, a feature useful for mixed-methods studies, and systematically integrating these different types of data is a major strength of this approach. Qualitative data include program documents and descriptions, key informant interviews, and archival data (e.g., records, policies); quantitative data include surveys, surveillance or registry data, and electronic health records.

For instance, Schensul et al. [20] relied on in-depth interviews for their analysis; Chuang et al. [21] and Longest and Thoits [15] drew on survey data for theirs. Kahwati et al. [9] used a mixed-method approach combining data from key informant interviews, program documents, and electronic health records. Any type of data can be used to inform the calibration of conditions.

Assign set membership scores

Assigning set membership scores involves applying the decision rules that were established during the calibration phase. To accomplish this, the research team should then use the extracted data for each case, apply the decision rule for the condition, and discuss discrepancies in the data sources. In their study of factors that influence health care policy development in Florida, Harkreader and Imershein [22] coded contextual factors that supported state involvement in the health care market. Drawing on a review of archival data and using crisp set coding, they assigned a value of 1 for the presence of a contextual factor (e.g., presence of federal financial incentives promoting policy, unified health care provider policy position in opposition to state policy, state agency supporting policy position) and 0 for the absence of a contextual factor.

Construct truth table

After completing the coding, researchers create a “truth table” for analysis. A truth table lists all of the possible configurations of conditions, the number of cases that fall into each configuration, and the “consistency” of the cases. Consistency quantifies the extent to which cases that share similar conditions exhibit the same outcome; in crisp sets, the consistency value is the proportion of cases that exhibit the outcome. Fuzzy sets require a different calculation to establish consistency, which is described at length in other sources [1–4, 14]. Table 1 displays a hypothetical truth table for three conditions using crisp sets.

Table 1.

Sample of a hypothetical truth table for crisp sets

| Condition A | Condition B | Condition C | Cases | Proportion of cases that exhibit the outcome, Pr(Y) |
|---|---|---|---|---|
| 1 | 1 | 1 | 5 | 1.00 |
| 1 | 1 | 0 | 2 | 0.50 |
| 1 | 0 | 1 | 3 | 0.33 |
| 1 | 0 | 0 | 2 | 1.00 |
| 0 | 1 | 1 | 1 | 0.00 |
| 0 | 1 | 0 | 3 | 0.00 |
| 0 | 0 | 1 | 4 | 0.75 |
| 0 | 0 | 0 | 3 | 0.00 |

1 = fully in the set; 0 = fully out of the set
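A crisp-set truth table like Table 1 can be assembled by grouping cases on their configuration and computing the proportion that exhibit the outcome. The sketch below is a minimal illustration; the case-level records are reconstructed from the counts and proportions in the hypothetical Table 1, and the function name `truth_table` is an assumption.

```python
from collections import defaultdict

# (A, B, C, number of cases, number of cases with the outcome),
# reconstructed from the hypothetical Table 1.
table_1 = [
    (1, 1, 1, 5, 5), (1, 1, 0, 2, 1), (1, 0, 1, 3, 1), (1, 0, 0, 2, 2),
    (0, 1, 1, 1, 0), (0, 1, 0, 3, 0), (0, 0, 1, 4, 3), (0, 0, 0, 3, 0),
]

# Expand the summary rows into one record per case: (A, B, C, outcome).
cases = []
for a, b, c, n, n_outcome in table_1:
    cases += [(a, b, c, 1)] * n_outcome + [(a, b, c, 0)] * (n - n_outcome)


def truth_table(cases):
    """Group cases by configuration; return {config: (n_cases, consistency)}."""
    counts = defaultdict(lambda: [0, 0])  # config -> [cases, cases with outcome]
    for *config, outcome in cases:
        entry = counts[tuple(config)]
        entry[0] += 1
        entry[1] += outcome
    return {config: (n, round(n_outcome / n, 2))
            for config, (n, n_outcome) in counts.items()}


for config, (n, consistency) in truth_table(cases).items():
    print(config, n, f"{consistency:.2f}")
```

Configurations with no cases never appear in the grouped result, so they must be identified separately by comparing against the full list of logically possible configurations.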

QCA AS AN ANALYTIC TECHNIQUE

The research steps to this point fall into QCA as an approach to understanding social and health phenomena. Analysis of the truth table is the sine qua non of QCA as an analytic technique. In this section, we provide an overview of the analysis process, but analytic techniques and emerging forms of analysis are described in multiple texts [3, 4, 14, 17]. The use of computer software to conduct truth table analysis is recommended and several software options are available including Stata, fsQCA, Tosmana, and R.

A truth table analysis first involves the researcher assessing which (if any) conditions are individually necessary or sufficient for achieving the outcome, and then second, examining whether any configurations of conditions are necessary or sufficient. In instances where contradictions in outcomes from the same configuration pattern occur (i.e., one case from a configuration has the outcome; one does not), the researcher should also consider whether the model is properly specified and conditions are calibrated accurately. Thus, this stage of the analysis may reveal the need to review how conditions are defined and whether the definition should be recalibrated. Similar to qualitative and quantitative research approaches, analysis is iterative.

Additionally, the researcher examines the truth table to assess whether all logically possible configurations have empirical cases. As described above, when configurations lack cases, the problem of limited diversity occurs. Configurations without representative cases are known as logical remainders, and the researcher must consider how to deal with those. The analysis of logical remainders depends on the particular theory guiding the research and the research priorities. How a researcher manages the logical remainders has implications for the final solution, but none of the solutions based on the truth table will contradict the empirical evidence [14]. To generate the most conservative solution term, a researcher makes no assumptions about truth table rows with no cases (or very few cases in larger N studies) and excludes them from the logical minimization process. Alternatively, a researcher can choose to include (or exclude) rows with no cases from analysis, which would generate a solution that is a superset of the conservative solution. Choosing inclusion criteria for logical remainders also depends on theory and what may be empirically possible. For example, in studying governments, it would be unlikely to have a case that is a democracy (“condition A”), but has a dictator (“condition B”). In that circumstance, the researcher may choose to exclude that theoretically implausible row from the logical minimization process.
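Logical remainders can be listed by comparing the configurations observed among the cases with all logically possible configurations. The sketch below assumes crisp sets and uses invented observations on three conditions; the function name `logical_remainders` is hypothetical.

```python
from itertools import product


def logical_remainders(observed_configs, n_conditions):
    """Return configurations that are logically possible but have no cases."""
    observed = set(observed_configs)
    return [config for config in product((1, 0), repeat=n_conditions)
            if config not in observed]


# Hypothetical configurations (A, B, C) observed across five cases.
observed = [(1, 1, 1), (1, 1, 0), (0, 0, 1), (0, 0, 0), (1, 1, 1)]
remainders = logical_remainders(observed, n_conditions=3)
print(remainders)

# Theory may rule some remainders out. Here, as an invented example, any row
# where A is present and B is absent is treated as implausible and set aside.
plausible = [config for config in remainders
             if not (config[0] == 1 and config[1] == 0)]
print(plausible)
```

How the remaining rows are treated (excluded entirely or included under explicit assumptions) is the theoretical decision described above; the code only makes the list of affected rows visible.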

Third, once all the solutions have been identified, the researcher mathematically reduces the solution [1, 14]. For example, if the list of solutions contains two configurations that are identical except that A is absent in one and present in the other, then A can be dropped from those two solutions. Finally, the researcher computes two parameters of fit: coverage and consistency. Coverage determines the empirical relevance of a solution and quantifies the variation in causal pathways to an outcome [14]. When coverage of a causal pathway is high, the solution is more common and more of the outcome is accounted for by the pathway. However, maximum coverage may be less critical in implementation research because understanding all of the pathways to success may be as helpful as understanding the most common pathway. Consistency assesses whether the causal pathway produces the outcome regularly (“the degree to which the empirical data are in line with a postulated subset relation,” p. 324 [14]); a high consistency value (e.g., 1.00 or 100%) would indicate that all cases in a causal pathway produced the outcome. A low consistency value would suggest that a particular pathway was not successful in producing the outcome on a regular basis, and thus, for translational purposes, should not be recommended for policy or practice changes. A causal pathway with high consistency and coverage values indicates a result useful for providing guidance; a high consistency with a lower coverage score also has value in showing a causal pathway that successfully produced the outcome, but did so less frequently.
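The reduction step and the two parameters of fit can be sketched in a few lines. The code below assumes configurations are stored as dictionaries of crisp scores and uses the common set-theoretic formulas in which consistency is the sum of the minimum of pathway and outcome memberships divided by the sum of pathway memberships, and coverage divides the same numerator by the sum of outcome memberships. Function names and membership scores are invented for illustration, and dedicated QCA software performs a fuller minimization than this single pairwise step.

```python
def reduce_pair(config_1, config_2):
    """Merge two configurations that differ in exactly one condition.

    The differing condition is dropped. Returns None when the pair cannot
    be merged (different conditions, or not exactly one difference).
    """
    if config_1.keys() != config_2.keys():
        return None
    differing = [name for name in config_1 if config_1[name] != config_2[name]]
    if len(differing) != 1:
        return None
    return {name: value for name, value in config_1.items()
            if name != differing[0]}


def consistency(pathway, outcome):
    """Share of pathway membership that is also outcome membership.

    Assumes at least one case has nonzero membership in the pathway.
    """
    overlap = sum(min(x, y) for x, y in zip(pathway, outcome))
    return overlap / sum(pathway)


def coverage(pathway, outcome):
    """Share of outcome membership accounted for by the pathway.

    Assumes at least one case has nonzero membership in the outcome.
    """
    overlap = sum(min(x, y) for x, y in zip(pathway, outcome))
    return overlap / sum(outcome)


# A present or absent makes no difference when B and C are both present.
print(reduce_pair({"A": 1, "B": 1, "C": 1}, {"A": 0, "B": 1, "C": 1}))

# Hypothetical crisp memberships for six cases.
pathway = [1, 1, 1, 0, 0, 0]
outcome = [1, 1, 0, 1, 1, 0]
print(round(consistency(pathway, outcome), 2), coverage(pathway, outcome))
```

In this invented example, two of the three cases on the pathway show the outcome (consistency of about 0.67), and the pathway accounts for two of the four cases with the outcome (coverage of 0.5). The same functions accept fuzzy membership scores.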

For example, Kahwati et al. [9] examined their truth table and analyzed the data for single conditions and combinations of conditions that were necessary for higher or lower facility-level patient weight loss outcomes. The truth table analysis revealed two necessary conditions and four sufficient combinations of conditions. Because of significant challenges with logical remainders, they used a bottom-up approach to assess whether combinations of conditions yielded the outcome. This entailed pairing conditions to ensure parsimony and maximize coverage. With a smaller number of conditions, a researcher could hypothetically find that more cases share similar characteristics and could assess whether those cases exhibit the same outcome of interest.

At the completion of the truth table analysis, Kahwati et al. [9] used the qualitative data from site interviews to provide rich examples to illustrate the QCA solutions that were identified, which explained what the solutions meant in clinical practice for weight management. For example, having an involved champion (usually a physician), in combination with low facility accountability, was sufficient for program success (i.e., better facility-level weight loss outcomes). In reviewing the qualitative data, Kahwati et al. [9] discovered that involved champions integrate program activities into their clinical routines and discuss issues as they arise with other program staff. Because involved champions and other program staff communicated informally on a regular basis, formal accountability structures were less of a priority.

ADVANTAGES AND LIMITATIONS OF QCA

Because translational (and other health-related) researchers may be interested in which intervention features—alone or in combination—achieve distinct outcomes (e.g., achievement of program outcomes, reduction in health disparities), QCA is well suited for translational research. To assess combinations of variables in regression, a researcher relies on interaction effects, which, although useful, become difficult to interpret when three, four, or more variables are combined. Furthermore, in regression and other variable-oriented approaches, independent variables are held constant at the average across the study population to isolate the independent effect of that variable, but this masks how factors may interact with each other in ways that impact the ultimate outcomes. In translational research, context matters and QCA treats each case holistically, allowing each case to keep its own values for each condition.

Multiple case studies or studies with the organization as the unit of analysis often involve a small or intermediate number of cases. This hinders the use of standard statistical analyses; researchers are less likely to find statistical significance with small sample sizes. However, QCA draws on analyses of set relations to support small-N studies and to identify the conditions or combinations of conditions that are necessary or sufficient for an outcome of interest and may yield results when probabilistic methods cannot.

Finally, QCA is based on an asymmetric concept of causation, which means that the absence of a sufficient condition associated with an outcome does not necessarily describe the causal pathway to the absence of the outcome [14]. These characteristics can be helpful for translational researchers who are trying to study or implement complex interventions, where more than one way to implement a program might be effective and where studying both effective and ineffective implementation practices can yield useful information.

QCA has several limitations that researchers should consider before choosing it as a potential methodological approach. With small- and intermediate-N studies, QCA must be theory-driven and circumscribed by priority questions. That is, a researcher ideally should not use a “kitchen sink” approach to test every conceivable condition or combination of conditions because the number of combinations increases exponentially with the addition of another condition. With a small number of cases and too many conditions, the sample would not have enough cases to provide examples of all the possible configurations of conditions (i.e., limited diversity), or the analysis would be constrained to describing the characteristics of the cases, which would have less value than determining whether some conditions or some combination of conditions led to actual program success. However, if the number of conditions cannot be reduced, alternate QCA techniques, such as a bottom-up approach to QCA or two-step QCA, can be used [14].

Another limitation is that programs or clinical interventions involved in a cross-site analysis may have unique programs that do not seem comparable. Cases must share some degree of comparability to use QCA [16]. Researchers can manage this challenge by taking a broader view of the program(s) and comparing them on broader characteristics or concepts, such as high/low organizational capacity, established partnerships, and program planning, if these would provide meaningful conclusions. Taking this approach will require careful definition of each of these concepts within the context of a particular initiative. Definitions may also need to be revised as the data are gathered and calibration begins.

Finally, as mentioned above, crisp set calibration dichotomizes conditions of interest; this form of calibration means that in some cases, the finer grained differences and precision in a condition may be lost [3]. Crisp set calibration provides more easily interpretable and actionable results and is appropriate if researchers are primarily interested in the presence or absence of a particular program feature or organizational characteristic to understand translation or implementation.

CONCLUSION

QCA offers an additional methodological approach for researchers to conduct rigorous comparative analyses while drawing on the rich, detailed data collected as part of a case study. However, as Rihoux, Benoit, and Ragin [17] note, QCA is not a miracle method, nor a panacea for all studies that use case study methods. Furthermore, it may not always be the most suitable approach for certain types of translational and implementation research. We outlined the multiple steps needed to conduct a comprehensive QCA. QCA is a good approach for examining causal complexity and equifinality, and it could be helpful to behavioral medicine researchers who seek to translate evidence-based interventions into real-world settings. In reality, multiple program models can lead to success, and this method accommodates a more complex and varied understanding of these patterns and factors.

Footnotes

Implications

Practice: Identifying multiple successful intervention models (equifinality) can aid in selecting a practice model relevant to a context, and can facilitate implementation.

Policy: QCA can be used to develop actionable policy information for decision makers that accommodates contextual factors.

Research: Researchers can use QCA to understand causal complexity in translational or implementation research and to assess the relationships between policies, interventions, or procedures and successful outcomes.

References

- 1. Ragin CC. The comparative method: moving beyond qualitative and quantitative strategies. Berkeley, CA: The University of California Press; 1987.

- 2. Ragin CC. Using qualitative comparative analysis to study causal complexity. Health Serv Res. 1999;34(5 Pt 2):1225–1239.

- 3. Ragin CC. Fuzzy-set social science. Chicago: The University of Chicago Press; 2000.

- 4. Ragin CC. Redesigning social inquiry: fuzzy sets and beyond. Chicago, IL: University of Chicago Press; 2008.

- 5. Glasgow RE, Vinson C, Chambers D, et al. National Institutes of Health approaches to dissemination and implementation science: current and future directions. Am J Public Health. 2012;102:1274–1281. doi: 10.2105/AJPH.2012.300755.

- 6. Kon A. The clinical and translational science award (CTSA) consortium and the translational research model. Am J Bioeth. 2008;8(3):58–63. doi: 10.1080/15265160802109389.

- 7. Tufts Clinical and Translational Science Institute. What is translational science? Available at http://www.tuftsctsi.org/About-Us/What-is-Translational-Science.aspx?c=129864037426803263. Accessibility verified July 10, 2012.

- 8. Yin RK. Case study research: design and methods. 4th ed. Thousand Oaks: Sage Publications; 2009.

- 9. Kahwati LC, Lewis MA, Kane H, et al. Best practices in the Veterans Health Administration’s MOVE! weight management program. Am J Prev Med. 2011;41(5):457–464. doi: 10.1016/j.amepre.2011.06.047.

- 10. Ford EW, Duncan WJ, Ginter PM. Health departments’ implementation of public health’s core functions: an assessment of health impacts. Public Health. 2005;119:11–21. doi: 10.1016/j.puhe.2004.03.002.

- 11. Blackman T, Wistow JN, Byrne D. A qualitative comparative analysis of factors associated with trends in narrowing health inequalities in England. Soc Sci Med. 2011;72(12):1965–1974. doi: 10.1016/j.socscimed.2011.04.003.

- 12. Dy SM, Garg P, Nyberg D, et al. Critical pathway effectiveness: assessing the impact of patient, hospital care, and pathway characteristics using qualitative comparative analysis. Health Serv Res. 2005;40(2):499–516. doi: 10.1111/j.1475-6773.2005.0r370.x.

- 13. Thygeson NM, Solberg LI, Asche SE, et al. Using fuzzy set qualitative comparative analysis (fs/QCA) to explore the relationship between medical “homeness” and quality. Health Serv Res. 2011. doi: 10.1111/j.1475-6773.2011.01303.x.

- 14. Schneider CQ, Wagemann C. Set-theoretic methods for the social sciences: a guide to qualitative comparative analysis. Cambridge: Cambridge University Press; 2012.

- 15. Longest KC, Thoits PA. Gender, the stress process, and health: a configurational approach. Soc Mental Health. 2012; published online 6 July 2012.

- 16. Berg-Schlosser D, De Meur G. Comparative research design: case and variable selection. In: Rihoux B, Ragin CC, editors. Configurational comparative methods: qualitative comparative analysis (QCA) and related techniques. Thousand Oaks: SAGE Publications; 2009.

- 17. Rihoux B, Ragin CC, editors. Configurational comparative methods: qualitative comparative analysis (QCA) and related techniques. Thousand Oaks: SAGE Publications; 2009.

- 18. Caren N, Panofsky A. TQCA: a technique for adding temporality to qualitative comparative analysis. Sociol Methods Res. 2005;34(2):147–172. doi: 10.1177/0049124105277197.

- 19. Weiner BJ, Jacobs SR, Minasian LM, et al. Organizational designs for achieving high treatment trial enrollment: a fuzzy-set analysis of the community clinical oncology program. J Oncol Pract. 2012;8(5):288–290. doi: 10.1200/JOP.2011.000507.

- 20. Schensul JJ, Chandran D, Singh SK, et al. The use of qualitative comparative analysis for critical event research in alcohol and HIV in Mumbai, India. AIDS Behav. 2010;14:S113–S125. doi: 10.1007/s10461-010-9736-6.

- 21. Chuang E, Dill J, Morgan JC, et al. A configurational approach to the relationship between high-performance work practices and frontline health care worker outcomes. Health Serv Res. 2012;47(4):1460–1481. doi: 10.1111/j.1475-6773.2011.01366.x.

- 22. Harkreader S, Imershein AW. The conditions for state action in Florida’s health care market. J Health Soc Behav. 1999;40(2):159–174. doi: 10.2307/2676371.