Abstract

Remembering a past event involves reactivation of content-specific patterns of neural activity in high-level perceptual regions (e.g., ventral temporal cortex, VTC). In contrast, the subjective experience of vivid remembering is typically associated with increased activity in lateral parietal cortex (LPC)—“retrieval success effects” that are thought to generalize across content types. However, the functional significance of LPC activation during memory retrieval remains a subject of active debate. In particular, theories are divided with respect to whether LPC actively represents retrieved content or if LPC activity only scales with content reactivation elsewhere (e.g., VTC). Here, we report a human fMRI study of visual memory recall (faces vs scenes) in which complementary forms of multivoxel pattern analysis were used to test for and compare content reactivation within LPC and VTC. During recall of visual images, we observed robust reactivation of broad category information (face vs scene) in both VTC and LPC. Moreover, recall-related activity patterns in LPC, but not VTC, differentiated between individual events. Importantly, these content effects were particularly evident in areas of LPC (namely, angular gyrus) in which activity scaled with subjective reports of recall vividness. These findings provide striking evidence that LPC not only signals that memories have been successfully recalled, but actively represents what is being remembered.

Keywords: angular gyrus, decoding, memory reactivation, MVPA, parietal cortex, recall

Introduction

Successfully recalling a past event is associated with reactivation of content-specific patterns of neural activity. For example, recalling an image of a face or a scene reactivates category-selective regions of ventral temporal cortex (VTC; Polyn et al., 2005; Kuhl et al., 2011). Reactivation of visual category information within VTC has been shown to scale with behavioral measures of recall success (Kuhl et al., 2011; Gordon et al., 2013) and reaction time (Kuhl et al., 2012; Kuhl et al., 2013), suggesting its relevance to behavioral expressions of remembering. In contrast, other neural mechanisms are thought to track whether successful remembering has occurred independently of retrieved content. Content-general retrieval success effects have been observed consistently in lateral parietal cortex (LPC; for reviews, see Wagner et al., 2005; Vilberg and Rugg, 2008; Cabeza et al., 2008). In particular, successfully recalling specific details about a past experience is associated with increased activation within ventral LPC—most typically in angular gyrus (ANG; Dobbins and Wagner, 2005; Wagner et al., 2005; Hutchinson et al., 2009; Spaniol et al., 2009; Vilberg and Rugg, 2009). Despite the ubiquity of LPC retrieval success effects, there remains considerable debate concerning their functional significance.

Potential accounts of LPC retrieval success effects include: (1) accumulation of mnemonic evidence (Wagner et al., 2005); (2) directing attention to internal, mnemonic representations (Cabeza et al., 2008); (3) representing retrieved content in an “output buffer” (Vilberg and Rugg, 2012); or (4) binding information from other cortical inputs (Shimamura, 2011). Notably, the mnemonic accumulator and attentional accounts argue that LPC interacts with content representations in other cortical regions (e.g., in VTC) without actively representing retrieved content. In contrast, the output buffer account emphasizes active content representations within LPC. Similarly, the binding account is also compatible with content effects in LPC, but argues that LPC integrates content into bound, event-specific representations. Despite some recent support for content-sensitive mnemonic representations in LPC (Christophel et al., 2012; Kuhl et al., 2013; Xue et al., 2013; cf. Johnson et al., 2013), there remains a lack of clear evidence for content reactivation specifically within LPC regions that signal retrieval success. In addition, to date, there is no evidence that recall-related activity patterns in LPC differentiate between individual events.

Here, we applied complementary forms of multivoxel pattern analysis to human fMRI data to test for and compare content reactivation within VTC and LPC. Our task involved three phases: study of words paired with pictures (faces/scenes), cued recall of the pictures (word-?) and, finally, a recognition test for pictures alone (Fig. 1). We predicted that cued recall of pictures would elicit reactivation of category information (face vs scene), not only in VTC, but also in LPC. We specifically predicted reactivation within ANG based on evidence that ANG in particular is associated with recall success (Hutchinson et al., 2009). In addition, we predicted that ANG activity patterns would differentiate between individual events, a prediction motivated by theoretical arguments that ANG represents integrated event details and empirical evidence for stimulus-specific activity patterns in LPC during working memory maintenance (Christophel et al., 2012). To test this, we compared activity patterns elicited by word cues during recall with activity patterns elicited by pictures during recognition. If activity patterns elicited by words are more similar to the specific pictures they were studied with compared with “unassociated” pictures from the same category, then this would constitute strong evidence for event-specific representations.

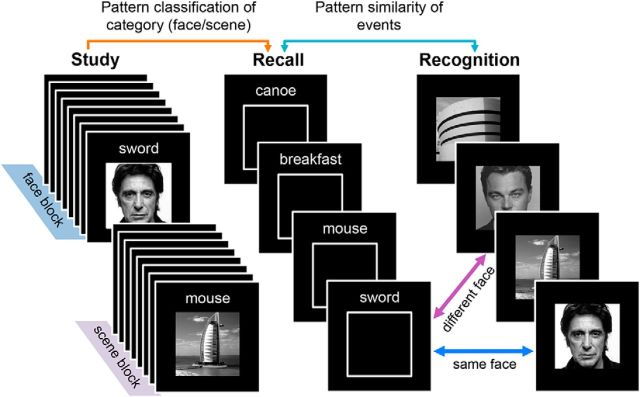

Figure 1.

Experimental paradigm. During each study phase block, participants studied word–picture pairs grouped by category (i.e., eight words paired with faces and eight words paired with scenes). After study, memory for some of the word–picture pairs was tested (twice each) in a recall phase. On recall trials, a word was presented and participants attempted to recall the corresponding picture in as much detail as possible (indicating vividness of recall by button press). Next, a recognition test followed in which participants were shown pictures that had either been studied and recalled (RCL), studied but not recalled (NoRCL), or not studied at all (NEW). Participants indicated whether each picture was “old” or “new.” Participants completed a total of eight study-recall-recognition cycles, all during fMRI scanning. Pattern classifiers were trained to learn the picture categories (face vs scene) based on study-phase activity patterns and were tested on data from the recall phase. Successful classification of recall trials constituted evidence of category reactivation. In addition, patterns of activity elicited during the recall phase (word present, picture absent) were compared with patterns of activity elicited during the recognition phase (word absent, picture present) to test for event-level pattern similarity.

Materials and Methods

Participants.

Twenty right-handed, native English speakers (six female; mean age = 21.1 years) from the Yale University community participated. An additional participant was excluded due to a high rate of “no response” trials. All participants had normal or corrected-to-normal vision. Informed consent was obtained in accordance with the Yale Institutional Review Board.

Materials.

Ninety-six scenes, 96 faces, and 128 cue words were used. Scenes and faces were grayscale photographs (225 × 225 pixels). Scenes corresponded to well known locations (e.g., “Pentagon” or “Rocky Mountains”) and included manmade structures and natural landscapes. Faces corresponded to famous people (e.g., “Hillary Clinton” or “Matt Damon”) and included male and female actors, musicians, politicians, and athletes. Faces were cropped to include the area from approximately the chin to top of the head. Although pictures corresponded to specific labels (e.g., “Rocky Mountains”), these labels were never studied by or relevant to participants; that is, participants were never asked to name or label the stimuli.

Cue words were nouns (e.g., “whiskey” or “cow”) selected using the Medical Research Council Psycholinguistic Database (Wilson, 1988). Words were of moderate to high concreteness (M = 592, range = 457–644), imageability (M = 597, range = 505–639), and frequency (M = 26.2, range = 5–127). Words were between 3 and 9 letters in length (M = 5.2). Cue words were randomly assigned to pictures and word–picture pairs were randomly assigned to experimental conditions for each subject.

Procedure and design.

The experiment consisted of eight fMRI scans (blocks), with each block comprising three phases that occurred in sequence: study, recall, and recognition (Fig. 1). Each study round began with a 10 s fixation cross. Participants then studied word–picture pairs, one at a time, with the explicit instruction to remember them. On each study trial, a word was displayed above a picture. Sixteen word–picture pairs were presented during each study round, divided into two “miniblocks” of eight word–face pairs and eight word–scene pairs. Half of the study rounds began with a face miniblock; half began with a scene miniblock. The motivation for the miniblock structure was to allow for more efficient estimation of category-level patterns of activity (i.e., for pattern classification analyses). Within each miniblock, each word–picture pair appeared for 3500 ms, followed by a 500 ms fixation cross (for a total of 32 s per miniblock). After each miniblock, subjects indicated (via button press) the direction of randomly oriented (left/right) arrows. The arrow task began with a fixation cross for 700 ms, followed by a sequence of four arrows. Each arrow appeared for 800 ms and was followed by a fixation cross for 400 ms. After this sequence, a fixation cross appeared for 500 ms. In total, the study phase lasted 86 s.

Each recall phase began with a screen (6 s) instructing subjects to “get ready” for the upcoming recall phase; this was followed by a reminder (6 s) of the response options: index finger = “vivid”; middle finger = “weak”; ring finger = “don't know.” On each trial (4 s), subjects were presented with a cue word displayed above an empty box representing the to-be-recalled picture. Participants were instructed to recall the corresponding picture as vividly as possible and to indicate the vividness of their memory by button press. The decision to require a vividness rating as opposed to a measure for which accuracy could be objectively verified was based on two factors. First, we wanted to encourage subjects to recall the pictures in as much detail as possible and did not want to bias subjects toward recall of a more general, category-level memory (e.g., “face”). Second, by not requiring subjects to indicate the picture's category at any point, a pattern classifier could not decode category information based on behavioral responses. For the first 3 s of each recall trial, the outline of the box beneath the cue word was white; during the final 1 s, the outline changed to red to indicate that an immediate response was required. In each recall round, subjects completed 16 trials, with eight pairs from the immediately preceding study round (i.e., half of the studied pairs) tested twice each. Therefore, studied pairs corresponded to two different conditions according to whether pairs were subsequently tested during recall: nonrecalled pairs (NoRCL) and recalled pairs (RCL); half of the pairs in each condition within each block corresponded to faces, half to scenes. Of the eight pairs tested in each recall round, each pair was tested once (trials 1–8) before any of the pairs were tested a second time (trials 9–16). The ordering of the items in each recall round was pseudorandom, with the constraint that the same pair was not tested on consecutive trials. Each recall trial was followed by the same arrow task as in the study phase. Each recall phase lasted 172 s.

After each recall phase, participants completed a recognition phase. The recognition phase began with a “get ready” screen (6 s), followed by a reminder of the response options (6 s) for the recognition phase: index finger = “new”; middle finger = “old.” Each recognition trial (1800 ms) presented subjects with a picture and subjects were instructed to indicate whether they had seen the picture at some point during the experiment (“old”) or whether the picture had not been seen at any point during the experiment (“new”). Each recognition phase contained 24 trials: eight NoRCL pictures, eight RCL pictures, and eight novel (foil) pictures (NEW; half faces, half scenes). To reduce potential uncertainty, participants were informed that “old” items (NoRCL + RCL) would only be drawn from the current block. Each recognition trial was followed by a fixation cross (200 ms; responses made during this time or earlier were recorded) and then the same arrow task (6 s) that was included in the study and recall phases. In total, the recognition phase lasted 204 s. The total time per block (study + recall + recognition) was 462 s.
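The phase durations reported above can be checked with a few lines of arithmetic. The sketch below is only a consistency check, not part of the experiment code. It assumes that each study pair was shown for 3500 ms (the value implied by the stated 32 s miniblock length) and that the repetition time is 2000 ms; the function names are illustrative.

```python
# All durations in milliseconds, copied from the procedure description.
# ASSUMPTION: each study pair is shown for 3500 ms, which is the value
# implied by the stated 32 s miniblock length.
TR_MS = 2000  # repetition time reported in the fMRI methods

def arrow_task_ms():
    # 700 ms fixation, four arrows (800 ms + 400 ms fixation each), 500 ms fixation
    return 700 + 4 * (800 + 400) + 500

def study_phase_ms():
    miniblock = 8 * (3500 + 500)                       # eight pairs per miniblock
    return 10_000 + 2 * (miniblock + arrow_task_ms())  # fixation + two miniblocks

def recall_phase_ms():
    # two 6 s instruction screens, then 16 trials (4 s recall + arrow task)
    return 6_000 + 6_000 + 16 * (4_000 + arrow_task_ms())

def recognition_phase_ms():
    # two 6 s instruction screens, then 24 trials (1.8 s + 0.2 s + arrow task)
    return 6_000 + 6_000 + 24 * (1_800 + 200 + arrow_task_ms())

block_ms = study_phase_ms() + recall_phase_ms() + recognition_phase_ms()
volumes_per_scan = block_ms // TR_MS

print(study_phase_ms(), recall_phase_ms(), recognition_phase_ms())  # 86000 172000 204000
print(block_ms, volumes_per_scan)                                   # 462000 231
```

Under these assumptions the three phases sum to 462 s, which at a 2 s TR corresponds to the 231 volumes collected per scan.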

fMRI methods.

Imaging data were collected on a 3T Siemens Trio scanner at the Anlyan Center at Yale University using a 12-channel head coil. Before the functional imaging, two T1-weighted anatomical scans were collected (in-plane and high-resolution 3D). Functional data were collected using a T2*-weighted gradient EPI sequence; TR = 2000 ms, TE = 25 ms, flip angle = 90°, 34 axial-oblique slices, 224 mm FOV (3.5 × 3.5 × 4 mm). A total of eight functional scans were collected. Each scan consisted of 231 volumes; the first five volumes were discarded to allow for T1 equilibration. fMRI data preprocessing was conducted using SPM8 (Wellcome Department of Cognitive Neurology, London). Images were first corrected for slice timing and head motion. High-resolution anatomical images were coregistered to the functional images and segmented into gray matter, white matter, and CSF. Segmented gray matter images were “skull-stripped” and normalized to a gray matter Montreal Neurological Institute template. Resulting parameters were used for normalization of functional images. Functional images were resampled to 3 mm cubic voxels and smoothed with a Gaussian kernel (8 mm FWHM).

Univariate fMRI analyses.

For univariate analyses, fMRI data were analyzed under the assumptions of the general linear model (GLM). Trials were modeled using a canonical hemodynamic response function and its first-order temporal derivative. Data were modeled in a single GLM that contained separate regressors representing the study, recall, and recognition phases. Across all phases, face and scene trials were modeled separately. For study trials, RCL and NoRCL trials were separately modeled (despite the fact that these trials did not differ until the recall phase). For the recall phase, first and second repetitions were modeled under a common regressor. For recognition trials, each of the three conditions (RCL, NoRCL, NEW) was modeled separately. Additional regressors representing motion and scan number were also included. Linear contrasts were used to obtain subject-specific estimates for each effect of interest, which were then entered into a second-level, random-effects analysis using a one-sample t test against a contrast value of 0 at each voxel.

Pattern classification.

Pattern classification analyses were applied to preprocessed but unmodeled fMRI data. The preprocessing included all of the steps applied to the data used for univariate analyses, including normalization and smoothing. Normalization of the data allowed for use of standard-space anatomical masks, as well as the use of group-defined functional ROIs. The decision to use smoothed (as opposed to unsmoothed) fMRI data was based on our observation, from prior datasets, that smoothing tends to be beneficial to pattern classification performance and on recent studies demonstrating that smoothing—even with a large kernel—does not result in information loss (Kamitani and Sawahata, 2010; Op de Beeck, 2010) and may in fact reduce noise and improve sensitivity when fMRI data have been subjected to motion correction algorithms, which results in nonindependence between neighboring voxels (Kamitani and Sawahata, 2010).

In addition, fMRI data used for classification analyses were high-pass filtered (0.01 Hz), detrended, and z-scored within scan. After relevant trials and corresponding volumes had been selected, data were z-scored again, first across voxels within each volume (i.e., at each point in time, mean activation across voxels = 0) and then across all volumes within each phase (i.e., mean response for each voxel within each phase = 0). Data corresponding to each trial were reduced to a single image/volume by averaging volumes across TRs. For study phase trials, TRs 3–4 (representing 4–8 s posttrial onset) were equally weighted. For recall phase trials, a weighted average was applied to TRs 3–5 (4–10 s posttrial onset), with the third and fourth volumes weighted more heavily (weighting = 0.4 for each) than the fifth volume (weighting = 0.2). A wider temporal window was applied to recall trials to reflect the fact that recall processes should be slower than perception (study trials); the weighted average was based on results from prior studies (Kuhl et al., 2011; Kuhl, Bainbridge, and Chun, 2012). For the recognition phase, TRs 3–4 were equally weighted (as for the study phase).
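The two z-scoring steps and the per-trial averaging can be sketched as follows. This is a minimal illustration on synthetic data, not the original Matlab code. It assumes that TR 1 is the volume acquired at trial onset (so TR 3 covers 4–6 s), and the names `zscore`, `trial_pattern`, and `onset_tr` are hypothetical.

```python
import numpy as np

def zscore(x, axis):
    """Z-score along one axis (mean 0, SD 1 along that axis)."""
    return (x - x.mean(axis=axis, keepdims=True)) / x.std(axis=axis, keepdims=True)

def trial_pattern(volumes, onset_tr, weights):
    """Collapse one trial into a single pattern by weighted averaging.

    volumes  : (n_volumes, n_voxels) array for one phase
    onset_tr : 0-based index of the volume acquired at trial onset (ASSUMED = TR 1)
    weights  : weights for TR 3, TR 4, ... counted from trial onset
    """
    start = onset_tr + 2                       # TR 3 is two volumes after TR 1
    window = volumes[start:start + len(weights)]
    return np.average(window, axis=0, weights=weights)

STUDY_WEIGHTS = [0.5, 0.5]        # TRs 3-4, equally weighted (also used for recognition)
RECALL_WEIGHTS = [0.4, 0.4, 0.2]  # TRs 3-5, fifth volume down-weighted

# Synthetic stand-in for the selected volumes of one phase (40 volumes, 100 voxels).
rng = np.random.default_rng(0)
phase_data = rng.normal(size=(40, 100))
phase_data = zscore(phase_data, axis=1)   # first: across voxels within each volume
phase_data = zscore(phase_data, axis=0)   # then: across volumes within the phase

recall_pattern = trial_pattern(phase_data, onset_tr=10, weights=RECALL_WEIGHTS)
```

Because the second z-scoring step is applied last, each voxel has a mean of 0 across volumes in the final data, while the across-voxel mean within a volume is only approximately 0.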

Pattern classification analyses were performed using a penalized (L2) logistic regression classifier and implemented via the Princeton MVPA toolbox and custom Matlab code. Separate classifiers were applied to each of 10 anatomical ROIs (see Fig. 3A). ROIs were created using an anatomical automatic labeling atlas (Tzourio-Mazoyer et al., 2002).

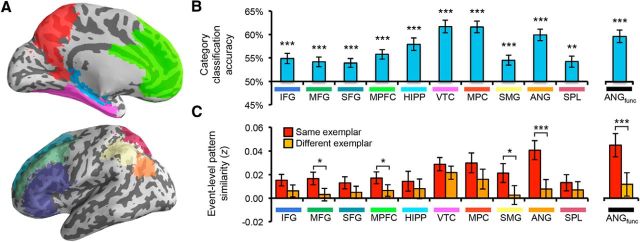

Figure 3.

Reactivation within anatomical ROIs. A, Ten anatomical ROIs: IFG, MFG, SFG, MPFC, hippocampus (HIPP), VTC, MPC, SMG, ANG, and SPL. B, Category classification (face vs scene) for recall trials. Accuracy in all regions was significantly above chance (p < 0.005). Error bars reflect SEM. C, Event-level pattern similarity between recall and recognition trials. Recall-recognition similarity (z-transformed correlation coefficient) is shown as a function of whether trials corresponded to the same picture or a different picture (from the same category). The main effect of match (same > different) was significant (p = 0.007); individually, the effect was significant in MFG, MPFC, SMG (p < 0.05), and ANG (p = 0.0007); only ANG was significant after correction for multiple comparisons. Error bars reflect the SEM difference for each region. *p < 0.05; **p < 0.01; ***p < 0.005.

Pattern similarity.

Pattern similarity analyses (Kriegeskorte et al., 2008) were used to compare (correlate) neural activity during recall and recognition trials. These analyses were performed using fMRI data preprocessed as described for the pattern classification analyses. Measured correlations were z-transformed (Fisher's z) before any averaging or statistical analyses were performed.
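As an illustration of this step, the sketch below computes Pearson correlations between every recall pattern and every recognition pattern and applies Fisher's z transform. It is a minimal NumPy sketch, not the original analysis code; the function name and the clipping guard (added only to keep the transform finite) are assumptions.

```python
import numpy as np

def recall_recognition_similarity(recall, recognition):
    """Fisher z-transformed Pearson correlations between trial patterns.

    recall      : (n_recall, n_voxels) array, one row per recall trial
    recognition : (n_recog, n_voxels) array, one row per recognition trial
    returns     : (n_recall, n_recog) array of z-transformed correlations
    """
    def center_and_scale(x):
        x = x - x.mean(axis=1, keepdims=True)             # remove each pattern's mean
        return x / np.linalg.norm(x, axis=1, keepdims=True)

    r = center_and_scale(recall) @ center_and_scale(recognition).T
    r = np.clip(r, -0.999999, 0.999999)   # ASSUMED guard: arctanh diverges at +/-1
    return np.arctanh(r)                  # Fisher's z

# Synthetic example: 3 recall trials and 5 recognition trials over 40 voxels.
rng = np.random.default_rng(1)
recall = rng.normal(size=(3, 40))
recognition = rng.normal(size=(5, 40))
z = recall_recognition_similarity(recall, recognition)
```

Averaging and statistical tests would then operate on `z` rather than on the raw correlation coefficients.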

Correction for multiple comparisons.

All pattern classification and similarity analyses are reported across 10 anatomical ROIs. Although VTC and ANG represented regions of a priori interest, correction for multiple comparisons was performed using a conservative, corrected p value of 0.05/10 = 0.005. All of the reported p values are uncorrected, but for all of the core analyses, we indicate which effects do or do not survive correction for multiple comparisons. For functionally defined ROIs (which are not independent of the anatomical ROIs), we do not indicate whether effects survive correction for multiple comparisons because the appropriate correction is less clear.
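The correction described here amounts to comparing each uncorrected p value against 0.05 divided by the number of anatomical ROIs. A minimal sketch follows; the function name is illustrative, the strict inequality is an assumption, and the second p value in the usage lines is hypothetical.

```python
N_ROIS = 10    # number of anatomical ROIs
ALPHA = 0.05   # uncorrected significance level

def survives_correction(p_uncorrected, n_tests=N_ROIS, alpha=ALPHA):
    """Return True if p is below the corrected threshold alpha / n_tests."""
    return p_uncorrected < alpha / n_tests   # ASSUMED strict inequality

print(survives_correction(0.0007))  # True  (ANG match effect reported in Fig. 3C)
print(survives_correction(0.03))    # False (hypothetical p value, for illustration)
```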

Results

Behavioral results

During recall, participants indicated the vividness with which they recalled each picture: 1 = “vivid” (M = 63.2%), 2 = “weak” (17.3%), 3 = “don't know” (14.6%). No response was made on 4.9% of trials. Mean ratings were comparable for face (M = 1.44) and scene (M = 1.43) trials (p = 0.98) and did not significantly vary across repetitions (M = 1.45 and M = 1.42, respectively; p = 0.35).

During the recognition phase, subjects failed to respond within the allotted 2 s on an average of 9.1% of trials (no difference across conditions: p > 0.15); these trials were excluded from behavioral analyses. Pictures tested during the recall phase (RCL) were correctly identified as “old” (hits) with high accuracy (M = 91.0%). The hit rate for pictures initially studied but not tested during the recall phase (NoRCL) was numerically, but not significantly, lower (M = 88.8%, p = 0.23). False alarms to novel pictures (NEW) were infrequent (M = 7.3%). Mean discriminability, as measured by A′, did not differ for the RCL and NoRCL conditions (M = 0.955 and 0.948, respectively; p = 0.29). Mean reaction times (for hits only) also did not differ across the RCL and NoRCL conditions (M = 1188 and 1182 ms, respectively; p = 0.64). Mean reaction times for face trials (hits only) were significantly faster than for scene trials (1155 vs 1215 ms, respectively; p = 0.003). The hit rate was also significantly higher for faces than for scenes (93.1% vs 86.8%, p = 0.007).
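For readers unfamiliar with A′, the sketch below implements the commonly used nonparametric formulation (Grier's formula), which is assumed here because the text does not state which variant was computed. Applying it to the group-mean hit and false alarm rates gives values close to, but not identical with, the reported means, because the reported values are averages of per-subject A′ scores.

```python
def a_prime(hit_rate, fa_rate):
    """Nonparametric discriminability A' (ASSUMED: Grier's formulation)."""
    h, f = hit_rate, fa_rate
    if h == f:
        return 0.5                                    # chance discrimination
    if h > f:
        return 0.5 + ((h - f) * (1 + h - f)) / (4 * h * (1 - f))
    return 0.5 - ((f - h) * (1 + f - h)) / (4 * f * (1 - h))

# Group-mean rates from the text: hits 91.0% (RCL) and 88.8% (NoRCL), false alarms 7.3%.
a_rcl = a_prime(0.910, 0.073)
a_norcl = a_prime(0.888, 0.073)
```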

Univariate effects of recall success

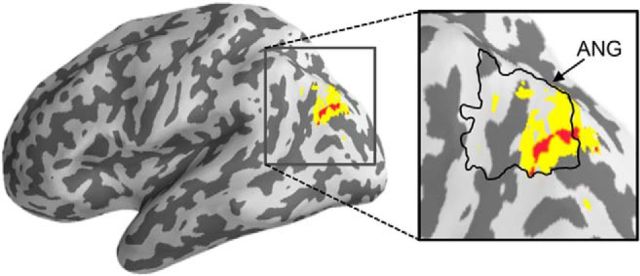

To identify regions associated with the subjective experience of vivid recall, we contrasted all recall trials associated with vivid remembering (vividness rating = 1) versus nonvivid recall trials (all others). This contrast could reflect effects of vividness (vivid > weak) and/or retrieval success (vivid > don't know). We constructed a GLM with factors of category (face/scene) and vividness (vivid/nonvivid). At a threshold of p < 0.001 (uncorrected, 5 voxel extent threshold), we found several regions that showed greater activity for vivid than nonvivid trials, including the ANG (a region of a priori interest; Fig. 2), hippocampus, and orbitofrontal cortex extending to caudate. Therefore, across category types, the ANG was associated with the subjective experience of vivid recall, consistent with prior findings (Dobbins and Wagner, 2005; Wagner et al., 2005; Hutchinson et al., 2009; Spaniol et al., 2009; Vilberg and Rugg, 2009).

Figure 2.

ANG associated with vivid remembering. Univariate contrast of recall trials corresponding to vivid remembering (rating = 1) relative to nonvivid remembering (all other trials) revealed activation in ANG. Red, p < 0.001, uncorrected; yellow, p < 0.005, uncorrected.

Category reactivation

Reactivation of category information during the recall phase was assessed by training pattern classifiers to discriminate face versus scene study trials and then testing how accurately recall phase trials were classified according to their category (Polyn et al., 2005; Kuhl et al., 2011; Kuhl, Bainbridge, and Chun, 2012). Because recall phase trials only presented subjects with words (and not pictures), successful classification of recall phase trials reflected the degree to which category-related patterns of encoding activity were reactivated during recall. Each recall trial was categorized by the classifier and these “guesses” were scored as correct or incorrect according to the true category of the to-be-recalled image. Mean accuracy across trials was computed for each participant and accuracy across participants was compared with chance (50%) via one-sample t tests.
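The train-on-study, test-on-recall logic can be sketched with a small L2-penalized logistic regression. The example below is a hedged stand-in written in NumPy, not the Princeton MVPA toolbox implementation used in the study. The gradient-descent solver, the penalty value, and the synthetic data (including the weaker signal assumed for recall trials) are all assumptions made for illustration.

```python
import numpy as np

def train_l2_logistic(X, y, penalty=1.0, lr=0.05, n_iter=500):
    """Fit L2-penalized logistic regression by batch gradient descent.

    X : (n_trials, n_voxels) patterns; y : 0/1 labels (0 = face, 1 = scene).
    """
    n_trials, n_voxels = X.shape
    w, b = np.zeros(n_voxels), 0.0
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))            # predicted P(scene)
        w -= lr * (X.T @ (p - y) / n_trials + penalty * w / n_trials)
        b -= lr * np.mean(p - y)
    return w, b

def predict(X, w, b):
    """Return the classifier's category guess (0 or 1) for each trial."""
    return (X @ w + b > 0).astype(int)

# Synthetic data: a hypothetical category axis plus independent voxel noise.
rng = np.random.default_rng(0)
n_voxels = 30
category_axis = rng.normal(size=n_voxels)

def simulate(n_trials, signal):
    labels = np.tile([0, 1], n_trials // 2)
    patterns = rng.normal(size=(n_trials, n_voxels))
    patterns += signal * np.outer(2 * labels - 1, category_axis)
    return patterns, labels

X_study, y_study = simulate(128, signal=1.0)    # 8 blocks x 16 study trials
X_recall, y_recall = simulate(128, signal=0.3)  # ASSUMED weaker signal at recall

w, b = train_l2_logistic(X_study, y_study)
accuracy = np.mean(predict(X_recall, w, b) == y_recall)  # compared with chance = 0.5
```

In the analysis described above, one such accuracy value would be obtained per participant and the set of values compared with 0.5 using a one-sample t test.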

We first assessed classification accuracy across 10 anatomical ROIs corresponding to subdivisions of prefrontal, parietal, and temporal lobe regions (Fig. 3A). Classification was performed across all recall trials (regardless of behavioral response or repetition number). Mean classification accuracy was above chance for each ROI (all p < 0.005; Fig. 3B). Classification accuracy was numerically highest in VTC, followed by medial parietal cortex (MPC), and ANG (in decreasing order). Of particular interest, the robust reactivation in ANG suggests that the same area of lateral parietal cortex that exhibited heightened activation during vivid recall also carried information about what was being remembered.

To test more directly the overlap between vividness-related univariate activity and category reactivation, we generated a functional ROI for ANG (ANGfunctional) by selecting all voxels within the anatomical mask that displayed greater activity for vivid than nonvivid recall trials using a liberal threshold (p < 0.05) to include a sufficient number of voxels for pattern analysis (281 voxels). Category reactivation within this functionally defined ANG ROI was highly robust (p < 0.00005; Fig. 3B). Therefore, voxels within ANG that were associated with the subjective experience of vivid remembering also carried information about the visual category of a recalled stimulus.

Event-specific patterns of recall activity

We next assessed whether information about specific events/trials (which face or which scene was paired with a given cue word) was reactivated during recall. This was assessed by measuring pattern similarity (the correlation of activity vectors) between recall and recognition trials—trials that contained no perceptual information in common. To make this analysis independent of category reactivation, pattern similarity was restricted to pairs of recall and recognition trials that corresponded to pictures from the same category. For example, a recall trial in which a face was the target would only be correlated with recognition trials consisting of face images. In addition, because pairs of recall-recognition trials that corresponded to the same event (“same trials”) were always from the same block (scan), it was important to avoid confounding same/different with temporal lag between recall and recognition trials. Therefore, each recall trial was only correlated with a recognition trial from the same block, thereby matching same/different comparisons in terms of recall-recognition lag. Finally, correlations were further restricted to recognition trials corresponding to the same condition (i.e., RCL).
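The restrictions described above can be expressed as boolean masks over a recall-by-recognition similarity matrix. The sketch below is illustrative only: the metadata layout, the function name, and the toy similarity values are assumptions, and picture identifiers are taken to be unique across blocks.

```python
import numpy as np

def match_effect(z, recall_meta, recog_meta):
    """Mean similarity for same-picture vs different-picture trial pairs.

    z           : (n_recall, n_recog) Fisher z-transformed correlations
    recall_meta : dict of arrays with keys 'picture', 'category', 'block'
    recog_meta  : dict of arrays with the same keys plus 'condition'
    """
    same_block = recall_meta["block"][:, None] == recog_meta["block"][None, :]
    same_category = recall_meta["category"][:, None] == recog_meta["category"][None, :]
    is_rcl = (recog_meta["condition"] == "RCL")[None, :]
    eligible = same_block & same_category & is_rcl       # pairs allowed in the analysis
    same_picture = recall_meta["picture"][:, None] == recog_meta["picture"][None, :]
    return z[eligible & same_picture].mean(), z[eligible & ~same_picture].mean()

# Toy block: four recall trials, four RCL recognition trials, one NoRCL trial.
recall_meta = {
    "picture": np.array([0, 1, 2, 3]),
    "category": np.array(["face", "face", "scene", "scene"]),
    "block": np.array([1, 1, 1, 1]),
}
recog_meta = {
    "picture": np.array([0, 1, 2, 3, 4]),
    "category": np.array(["face", "face", "scene", "scene", "face"]),
    "block": np.array([1, 1, 1, 1, 1]),
    "condition": np.array(["RCL", "RCL", "RCL", "RCL", "NoRCL"]),
}
z = np.array([
    [0.30, 0.10, 0.00, 0.00, 0.90],
    [0.10, 0.20, 0.00, 0.00, 0.90],
    [0.00, 0.00, 0.40, 0.20, 0.90],
    [0.00, 0.00, 0.20, 0.10, 0.90],
])
same, different = match_effect(z, recall_meta, recog_meta)
```

In this toy example the NoRCL column and all cross-category pairs are ignored, so the same-picture mean is 0.25 and the different-picture mean is 0.15.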

An ANOVA with factors of region (10 anatomical ROIs), repetition (first vs second recall trial), and recall-recognition “match” (same, different) revealed a significant main effect of match (F(1,19) = 9.14, p = 0.007), reflecting stronger similarity when recall-recognition trials corresponded to the same picture relative to a different picture (from the same category, block, and condition; Fig. 3C). This event-level similarity effect did not interact with repetition (F < 1), but varied as a function of region (F(9,171) = 2.12, p = 0.03). Considering individual ROIs, the main effect of match was significant (p < 0.05) in middle frontal gyrus (MFG), medial prefrontal cortex (MPFC), supramarginal gyrus (SMG), and ANG; however, the effect was particularly robust in ANG (p = 0.0007). When correcting for multiple comparisons across the 10 anatomical ROIs (which is conservative given the a priori prediction for ANG), only the ANG effect remained significant. The match effect in ANG was also significantly stronger than the effect in VTC (p = 0.005) and all other ROIs (p < 0.05) except for MPC (p = 0.08) and SMG (p = 0.14). To confirm that variance across ROIs was unrelated to the number of voxels in each ROI, we replicated the analysis using a random sample of 500 voxels from each ROI; this was repeated 10 times per ROI/subject and the resulting correlation matrices were averaged. This had virtually no effect on the results: match effects remained significant without correction for multiple comparisons in MFG, MPFC, and SMG and with correction for multiple comparisons in ANG. Critically, the match effect was also highly robust in ANGfunctional (p = 0.0003).
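The voxel-matching control can be sketched as repeated random subsampling followed by averaging of the resulting similarity matrices. The code below is an assumption-laden illustration: it presumes each ROI contains at least 500 voxels, applies Fisher's z before averaging (following the pattern similarity methods), and uses hypothetical function names and synthetic data.

```python
import numpy as np

def cross_correlation(a, b):
    """Pearson correlations between rows of a and rows of b."""
    n = a.shape[0]
    return np.corrcoef(a, b)[:n, n:]

def subsampled_similarity(recall, recognition, n_voxels=500, n_repeats=10, seed=0):
    """Average z-transformed similarity over random voxel subsamples.

    recall, recognition : (n_trials, n_roi_voxels) arrays for one ROI and subject.
    ASSUMES the ROI has at least n_voxels voxels.
    """
    rng = np.random.default_rng(seed)
    total = recall.shape[1]
    matrices = []
    for _ in range(n_repeats):
        idx = rng.choice(total, size=n_voxels, replace=False)  # random voxel subset
        r = cross_correlation(recall[:, idx], recognition[:, idx])
        matrices.append(np.arctanh(r))                          # Fisher's z
    return np.mean(matrices, axis=0)

# Synthetic ROI with 600 voxels, 4 recall trials, and 6 recognition trials.
rng = np.random.default_rng(2)
recall = rng.normal(size=(4, 600))
recognition = rng.normal(size=(6, 600))
z_sub = subsampled_similarity(recall, recognition)
```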

As an alternative way of quantifying event-level match effects, we also assessed whether measures of recall-recognition similarity could be used to “classify” which recognition trial corresponded to a given recall trial. Specifically, for each recall trial, we selected the recognition trial (from the same block, condition, and category) with the most similar pattern of neural activity. The selected image was then scored as either correct or incorrect. Because each recognition block contained four items from each condition/category, chance performance was 25% accuracy. This analysis was performed separately for each repetition during the recall phase and accuracy was then averaged across repetitions. This event classification approach has the advantage of potentially reducing the influence of extreme correlation values by translating all of the data into a binary measure of similarity and also translates the event-level similarity into a more intuitive number. Using this method, the only anatomical ROI exhibiting above-chance classification accuracy was ANG (M = 27.7%, p = 0.001; all others, p > 0.15). Event classification was also significant in ANGfunctional (p = 0.006).
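The event classification procedure reduces to choosing, for each recall trial, the candidate recognition trial with the highest similarity. A minimal sketch follows; the function name, picture labels, and similarity values are hypothetical, and the candidates are assumed to have already been restricted to the same block, condition, and category.

```python
import numpy as np

CHANCE = 1 / 4   # four candidate pictures per block/condition/category

def event_classification_accuracy(z, recall_pictures, candidate_pictures):
    """Proportion of recall trials whose most similar candidate is the correct picture.

    z                  : (n_recall, n_candidates) similarity values
    recall_pictures    : picture recalled on each recall trial
    candidate_pictures : picture shown on each candidate recognition trial
    """
    chosen = np.asarray(candidate_pictures)[np.argmax(np.asarray(z), axis=1)]
    return float(np.mean(chosen == np.asarray(recall_pictures)))

# Toy example: one repetition of the four face recall trials in a block.
pictures = ["face_a", "face_b", "face_c", "face_d"]
z = [
    [0.31, 0.12, 0.05, 0.20],   # most similar to face_a: correct
    [0.10, 0.08, 0.22, 0.15],   # most similar to face_c: incorrect
    [0.02, 0.11, 0.19, 0.07],   # most similar to face_c: correct
    [0.25, 0.14, 0.09, 0.21],   # most similar to face_a: incorrect
]
accuracy = event_classification_accuracy(z, pictures, pictures)
print(accuracy, CHANCE)   # 0.5 0.25
```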

Given that our functionally defined ANG ROI was selected on the basis of its sensitivity to recall success, one important question is whether event-level effects in ANG might simply reflect a match in “memory strength.” That is, items that were strongly recalled may have also been strongly recognized, thus producing match effects. This account would predict significant event-level effects in other regions that exhibited robust recall success effects. To test this, we defined two additional functional ROIs from the contrast of vivid versus nonvivid recall trials. Using a relatively high threshold of p < 0.0005, uncorrected, we selected clusters of voxels within bilateral orbitofrontal cortex, extending to caudate (169 voxels) and within bilateral posterior hippocampus, extending slightly into parahippocampal and retrosplenial cortices (270 voxels). Critically, event classification was not above chance either in the orbitofrontal/caudate (M = 25.0%, p = 0.95) or hippocampal (M = 24.7%, p = 0.74) functional ROIs. However, although the hippocampal ROI was nearly identical in size to the ANGfunctional ROI (270 vs 281 voxels, respectively), the orbitofrontal/caudate ROI was smaller (169 voxels). Therefore, to fully eliminate number of voxels as a factor, we reran the event classification analysis for ANGfunctional such that we subsampled 169 voxels from ANGfunctional (10 repetitions per subject were performed with a random 169 voxels selected for each repetition). Accuracy remained above chance in ANGfunctional (M = 27.2%, p = 0.01).

As an additional control, we also regressed out behavioral responses from the recall and recognition data, which removed potential content-general signals that were related to subjective memory strength (i.e., any effects of vivid remembering that generalized across items). Specifically, separate logistic regression models were used to predict behavioral responses from activity patterns during the recall and recognition phases and the residuals from these models were retained for the event classification analysis. Again, the event classification effects remained significant in ANGfunctional (M = 27.3%, p = 0.009). Therefore, the event-level match effects observed in ANG are not easily explained in terms of retrieval strength.

Subcategory reactivation

Another potential account of the event-specific effect in ANG is that it reflects category information subordinate to the broad categories we used (i.e., face and scene subcategories). Although we did not explicitly control for or balance subcategory information in designing the experiment, face stimuli could be divided into male and female subcategories and scenes into manmade versus natural subcategories. Notably, we have previously found that VTC distinguishes between these subcategories during event encoding (Kuhl, Rissman, and Wagner, 2012). To test for subcategory effects during recall, we compared patterns of activity elicited during recall trials to patterns of activity elicited during recognition trials, sorting the data as a function of subcategory match. That is, we compared recall-recognition similarity for trial pairs corresponding to the same subcategory versus different subcategories. Note that all recognition trials were included in this analysis, regardless of condition (RCL, NoRCL, NEW) with the important exception that pairs corresponding to the same picture (event) were excluded (to differentiate subcategory effects from event-level effects).

An ANOVA with factors of subcategory (same vs different subcategory), repetition (first vs second recall trial), and region (10 anatomical ROIs) revealed only a trend for an effect of subcategory (F(1,19) = 2.23, p = 0.15); however, the interaction between repetition and subcategory was significant (F(1,19) = 5.80, p = 0.03). When considered separately, the effect of subcategory (same > different) was significant for first recall trials (F(1,19) = 12.97, p = 0.002), but not for second recall trials (F < 1). Consistent with our prior finding, VTC discriminated between subcategories (first recall trials; p = 0.008). This was also true of inferior frontal gyrus (IFG; p = 0.00003), MFG (p = 0.03), superior frontal gyrus (SFG; p = 0.03), and MPC (p = 0.008). However, subcategory effects (first repetition only) were not present in ANG (p = 0.20) or ANGfunctional (p = 0.38). To rule out more definitively a contribution of subcategory information to the event-level effects in ANG, we repeated the event-level pattern similarity analysis with the additional constraint that each recall trial was only correlated with recognition trials that corresponded to the same subcategory. When controlling for subcategory, the event-level pattern similarity match effect remained significant in SMG (p = 0.02) and ANG (p = 0.0007), but survived correction for multiple comparisons (n = 10) only in ANG. Similarly, the effect in ANGfunctional remained significant when controlling for subcategory (p = 0.0007). Note that event classification accuracy is not reported because subsampling trials to match for subcategory resulted in chance accuracy varying from block to block depending on the number of items from each subcategory within each block.
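The multiple-comparisons statement for SMG and ANG can be checked with simple arithmetic. The sketch below assumes a Bonferroni correction at α = 0.05 across the 10 anatomical ROIs; the specific correction procedure is an assumption here, as it is not named in this passage.

```python
# Assumed Bonferroni correction across the 10 anatomical ROIs.
alpha, n_rois = 0.05, 10
threshold = alpha / n_rois  # per-ROI threshold of 0.005

# Uncorrected p values reported in the text for the subcategory control.
p_values = {"SMG": 0.02, "ANG": 0.0007}
survives = {roi: p < threshold for roi, p in p_values.items()}
```

Under this assumption, only the ANG effect falls below the corrected threshold, in line with the text.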

Reactivation and retrieval success

In all of the preceding analyses of reactivation, we included all recall trials regardless of behavioral vividness ratings. We next compared category and event classification as a function of recall success. However, analysis of performance separated by behavioral response was complicated by the suboptimal distribution of frequencies across response bins (“vivid,” “weak,” and “don't know”) and the fact that some subjects failed to make any response on some trials (range = 0–21.1% across trials). For example, “vivid” responses ranged in frequency from 30.5% to 94.5% across subjects and “don't know” responses ranged in frequency from 0% to 39.8%. Therefore, we excluded subjects who had <5 trials in any of the three response bins (n = 6) as well as subjects who failed to respond on >10% of the recall trials (n = 3).

In ANGfunctional, category reactivation was significant for trials associated with “vivid” (M = 61.6%) and “weak” (M = 63.4%) ratings of vividness (both p < 0.005), but not for “don't know” responses (M = 52.4%; p = 0.25). Similarly, in VTC, category reactivation was significant for “vivid” and “weak” trials (M = 65.6% and M = 64.6%, respectively; both p < 0.001), but not “don't know” trials (M = 55.0%; p = 0.18). Notably, the difference between “vivid” and “weak” trials was not significant for either ANGfunctional or VTC (both p > 0.6). The lack of difference between “vivid” and “weak” trials is somewhat surprising, but it is notable that “vivid” trials were associated with significantly faster reaction times than “weak” trials (p < 0.00001). Therefore, it is possible that classifier performance benefited from the greater time on task for “weak” trials. In addition, because we did not collect objective measures of recall detail or success (our motivation for using a subjective vividness scale was to motivate subjects to recall images in detail without biasing them toward selectively recalling a required piece of information), it is unclear how weak the “weak” memories were. Considering “vivid” and “weak” trials collectively as “successful” recall trials, both ANGfunctional and VTC were associated with highly robust category reactivation for successful recall trials (both p < 0.001), but not “don't know” trials (both p > 0.17), and the difference in category reactivation for successful versus “don't know” trials was also significant for both regions (p < 0.01; Fig. 4A).

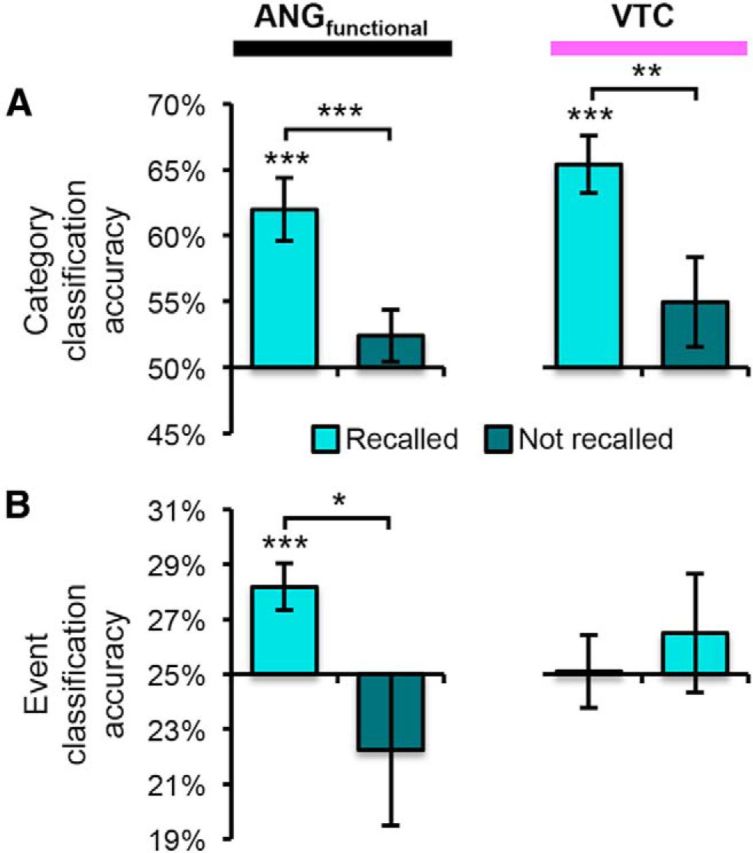

Figure 4.

Category and event classification as a function of recall success. A, Category classification accuracy within ANGfunctional and VTC as a function of whether pictures were successfully recalled. For both regions, accuracy was only above chance (p < 0.005) when pictures were successfully recalled. B, Event classification within ANGfunctional and VTC as a function of whether pictures were successfully recalled. Accuracy was only above chance within ANGfunctional when pictures were successfully recalled. Error bars indicate SEM. *p < 0.05; **p < 0.01; ***p < 0.005.

In ANGfunctional, event classification accuracy was significantly above chance for successful recall trials (M = 28.2%, p = 0.004), but not “don't know” trials (M = 22.2%, p = 0.34; Fig. 4B) and accuracy was significantly greater for successful than “don't know” trials (p = 0.03). In VTC, event classification did not differ from chance for either successful (M = 25.1%, p = 0.94) or “don't know” trials (M = 26.5%, p = 0.51). The interaction between region (ANGfunctional vs VTC) and recall success (successful vs don't know) was significant (F(1,10) = 5.16, p < 0.05), confirming that the greater event classification accuracy in ANG than VTC was specific to recall trials associated with successful remembering.

Relationship between VTC category reactivation and ANG event similarity

It is notable that VTC, which displayed very robust category reactivation (Fig. 3B), did not show an event-level pattern similarity effect (p = 0.22). However, it is possible that variance in the strength of VTC category reactivation was related to ANG event evidence. Therefore, we tested whether trial-level variance in ANG pattern similarity (for same trials) was related to the strength of category reactivation in VTC. A separate correlation coefficient was computed for each participant and these values were then z-transformed and compared with 0 via one-sample t test. Notably, ANG pattern similarity (for same trials) was unrelated to VTC category reactivation (mean z = 0.01, p = 0.74). To confirm that this null result was not simply due to poor correspondence between these two forms of analysis, we also tested whether VTC pattern similarity for same trials was related to VTC category reactivation and, indeed, the relationship was highly significant (mean z = 0.11, p = 1.9e-7). Therefore, ANG event information was distinct from VTC category reactivation.
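The group-level test described above can be sketched as follows, assuming that "z-transformed" refers to the Fisher transformation (arctanh) of each participant's correlation coefficient. The function name and the toy correlation values are hypothetical; the sketch returns the mean z, the one-sample t statistic against 0, and its degrees of freedom.

```python
from math import atanh, sqrt
from statistics import mean, stdev

def group_t_from_correlations(rs):
    """Fisher z-transform per-subject r values; one-sample t test vs 0."""
    zs = [atanh(r) for r in rs]          # Fisher transformation (assumed)
    n = len(zs)
    t_stat = mean(zs) / (stdev(zs) / sqrt(n))
    return mean(zs), t_stat, n - 1       # mean z, t statistic, df

# Toy per-subject correlations (hypothetical values, not study data).
mean_z, t_stat, df = group_t_from_correlations([0.10, 0.05, 0.20, 0.15])
```

The p value then follows from the t distribution with `df` degrees of freedom.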

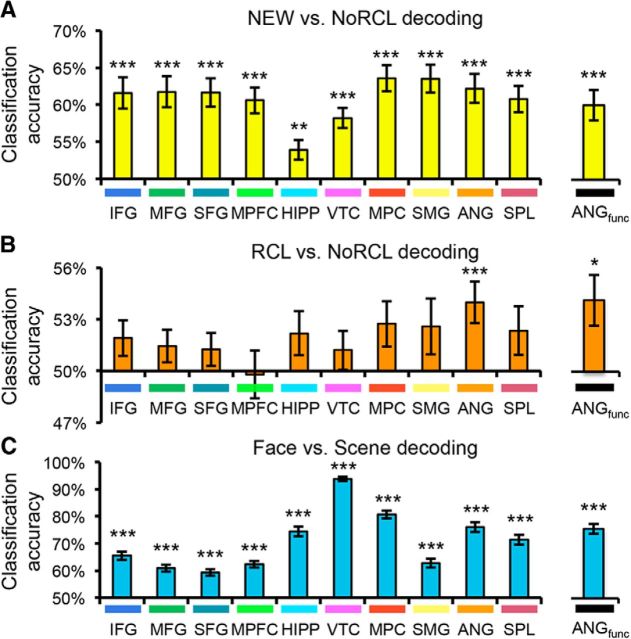

Decoding content-general mnemonic history

In a final set of analyses, we focused on recognition trials alone to distinguish between content-general representations of an image's mnemonic history versus “memory-general” content effects. It has been shown previously that patterns of activity in parietal cortex support highly accurate decoding of whether a stimulus has previously been studied (Rissman et al., 2010). We thus adopted a similar approach here. Using cross-validation analyses (leaving one scan out), we tested whether trials could be successfully classified as old versus new. Old trials corresponded to pictures that were studied but not tested during the recall phase (NoRCL); new trials corresponded to the foils presented during recognition (NEW). To remove reaction time as a potential confound (Todd et al., 2013), a linear regression model was first applied in which the fMRI data were used to predict reaction time; residuals from this model (i.e., fMRI data that were unrelated to reaction time) were then used to perform old versus new classification. Old/new classification was significantly above chance across each of the 10 anatomical ROIs (all p < 0.005, all significant after correction for multiple comparisons; Fig. 5A), with accuracy tending to be highest in parietal regions. Accuracy was also above chance in ANGfunctional (p = 0.0001). Therefore, information about whether a stimulus was old or new was widely distributed (Rissman et al., 2010), including in the ANG region associated with recall success and reactivation.
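The two steps described above, removing reaction time and then classifying with one scan held out, can be illustrated with a simplified sketch. Two assumptions are made here that go beyond the text: reaction time is regressed out of each voxel separately (one plausible reading of the residualization step), and a nearest-centroid classifier stands in for the classifier actually used. All names and toy values are hypothetical.

```python
from statistics import mean

def residualize(values, covariate):
    """Remove the least-squares linear effect of covariate from values."""
    mx, my = mean(covariate), mean(values)
    sxx = sum((x - mx) ** 2 for x in covariate)
    slope = sum((x - mx) * (y - my) for x, y in zip(covariate, values)) / sxx
    return [y - my - slope * (x - mx) for x, y in zip(covariate, values)]

def centroid(patterns):
    """Voxel-wise mean of a list of patterns."""
    return [mean(col) for col in zip(*patterns)]

def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def leave_one_run_out(patterns, labels, runs):
    """Nearest-centroid accuracy with each run (scan) held out in turn."""
    correct = 0
    for held_out in sorted(set(runs)):
        train = [i for i, r in enumerate(runs) if r != held_out]
        test = [i for i, r in enumerate(runs) if r == held_out]
        cents = {
            lab: centroid([patterns[i] for i in train if labels[i] == lab])
            for lab in set(labels[i] for i in train)
        }
        for i in test:
            guess = min(cents, key=lambda lab: sq_dist(patterns[i], cents[lab]))
            correct += guess == labels[i]
    return correct / len(labels)

# Toy data (hypothetical): 6 trials, 2 voxels, 3 scans.
labels = ["old", "new", "old", "new", "old", "new"]
runs = [1, 1, 2, 2, 3, 3]
rts = [1.0, 1.1, 0.9, 1.0, 1.1, 0.9]
voxel1 = [2.0, 0.0, 2.2, 0.1, 1.9, -0.1]   # carries old/new information
voxel2 = [0.5, 0.4, 0.6, 0.5, 0.4, 0.6]    # varies only with reaction time

# Regress reaction time out of each voxel, then rebuild trial patterns.
columns = [residualize(v, rts) for v in (voxel1, voxel2)]
patterns = [list(row) for row in zip(*columns)]
accuracy = leave_one_run_out(patterns, labels, runs)
```

In this toy example the second voxel is fully explained by reaction time and contributes nothing after residualization, so classification rests on the first voxel alone.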

Figure 5.

Decoding of recognition trials. A, Classification of novel foil images (NEW) versus old images that were not tested during the recall phase (NoRCL). Classification accuracy was assessed using a cross-validation procedure. Accuracy was above chance in all anatomical and functional ROIs (p < 0.05). B, Classification of old images that were tested during the recall phase (RCL) versus old images that were not tested during the recall phase (NoRCL). Accuracy was above chance in the anatomical ANG ROI (p = 0.001) and ANGfunctional (p < 0.05). C, Classification of image category (face vs scene). Accuracy was above chance in all anatomical and functional ROIs (p < 0.001), but was maximal in VTC. Colored bars under ROI labels match color labels in Figure 3A. *p < 0.05; **p < 0.01; ***p < 0.005.

Next, we asked a more subtle question: whether patterns of activity differentiated between old items that had previously been tested in the recall phase (RCL) versus old items that had not been tested (NoRCL). It should be noted that we did not observe differences in reaction time or accuracy at the group level between these conditions. However, to fully control for potential confounding effects of accuracy or reaction time (Todd et al., 2013), linear regression was used to remove effects of these variables. Across the 10 anatomical ROIs, ANG was the only region in which classification of RCL versus NoRCL was above chance (p = 0.004, significant after correction for multiple comparisons; all others, p > 0.05, uncorrected; Fig. 5B). Similarly, classification was above chance in ANGfunctional (p = 0.01). Therefore, although pictures in the RCL and NoRCL conditions had identical perceptual histories and only differed with respect to whether they had been tested previously (and reactivated), these categories could be discriminated according to the patterns of activity they elicited in ANG.

As a final step, we tested for representations of content (face/scene) that generalized across mnemonic history (RCL, NoRCL, NEW). Again, we first removed effects of accuracy and reaction time. Face versus scene decoding was well above chance in each of the 10 anatomical regions (all p < 9e-7, all significant after correction for multiple comparisons; Fig. 5C), with accuracy ranging from 59.3% (SFG) to 93.9% (VTC). Accuracy was also high in ANGfunctional (M = 75.2%, p < 2e-11). Therefore, content effects that were independent of memory were clearly robust in ANG, including in voxels that were explicitly selected on the basis of their sensitivity to recall vividness.

Discussion

We found that recall of visual images is associated with highly robust category reactivation, not only in VTC, but also ANG. Importantly, content effects were clearly present in ANG voxels that were explicitly selected based on their sensitivity to subjective reports of vivid remembering. Moreover, activity patterns in ANG were event specific, allowing for cue words (presented during recall) to be reliably “matched” with mnemonically associated pictures (presented during recognition), even though these recall and recognition trials shared no perceptual overlap. These findings provide important evidence for content reactivation extending to event-specific representations within LPC.

LPC contributions to memory retrieval

Traditionally, memory reactivation has been thought of as a property of sensory cortical regions, which contrasts with putative content-general frontoparietal mechanisms that guide retrieval or signal retrieval success (Buckner and Wheeler, 2001; Wheeler and Buckner, 2003). More recent theories have been divided as to whether LPC actively represents retrieved content (see Introduction). The mnemonic accumulator proposal that was described but not advocated by Wagner et al. (2005) holds that LPC tracks the strength of evidence in favor of a behavioral response without representing retrieved information per se. Although this account is appealing given the finding that monkey LPC tracks the strength of behaviorally relevant sensory evidence (Shadlen and Newsome, 2001), it does not accommodate the content effects we observed in LPC. Similarly, the “attention to memory” model (Cabeza et al., 2008) is not readily consistent with our findings because it argues for a role of LPC in orienting attention to content that is represented in other cortical regions (e.g., VTC).

Instead, the present findings appear more consistent with an output buffer account (Baddeley, 2000; Wagner et al., 2005; Vilberg and Rugg, 2008) or binding account (Shimamura, 2011). According to the output buffer account, retrieved content is temporarily stored in LPC, perhaps until a behavioral decision is made. Consistent with this perspective, activity in LPC is sustained over the time course of retrieval events (Vilberg and Rugg, 2012; Kuhl et al., 2013). According to the binding account, ventral LPC forms event-specific representations by integrating information across multiple domains and modalities. The present finding of greater event specificity within ANG than VTC may therefore be explained in terms of the greater diversity of information projecting to ANG, allowing for individual events to be better differentiated.

One challenge for all of the above theories is to explain why damage to LPC does not cause pronounced memory impairments. Although modest memory impairments have been observed among LPC patients (Olson and Berryhill, 2009) or with transcranial magnetic stimulation to (ventral) LPC (Sestieri et al., 2013), the small magnitude of these impairments indicates that LPC contributions to memory are subtle. However, if LPC supports a memory buffer, one possibility is that LPC disruption will be disproportionately associated with memory impairments when retrieved information must be retained over a delay, manipulated, and/or evaluated with respect to other information held in working memory. Similarly, if LPC supports the binding of event information, memory impairments may only be observed when retrieval tests require access to integrated feature information.

Ventral versus dorsal LPC

Successful recall of event details has consistently been associated with activity in ventral LPC—namely, in ANG (Hutchinson et al., 2009, 2014; Spaniol et al., 2009). In contrast, dorsal LPC has been associated with successful item recognition but not with recall of event details (Wagner et al., 2005; Hutchinson et al., 2009; Hutchinson et al., 2014). For example, superior parietal lobule (SPL) retrieval activity is positively correlated with uncertainty and/or reaction time (Cabeza et al., 2008; Hutchinson et al., 2014). This ventral/dorsal dissociation fits well with the present finding that category reactivation was more evident in ANG than SPL and that event-specific information was robust in ANG but absent in SPL. The only analysis in which SPL was comparable to ANG was in old versus new decoding of recognition trials, consistent with prior evidence that SPL activity reflects item recognition.

However, category reactivation in SPL was still above chance. Therefore, although the dissociation between ANG and SPL was clear, the difference in content representation across these regions may not be absolute. In fact, we have shown recently that reactivation of visual category information in SPL can be as robust as category reactivation in ANG if visual category information per se is behaviorally relevant (Kuhl et al., 2013). Here, category information was never explicitly relevant. Therefore, the extent to which category representations emerge in dorsal LPC may be closely related to behavioral goals (Toth and Assad, 2002; Freedman and Assad, 2006) and/or the mapping of categories to responses (Tosoni et al., 2008). Indeed, whereas activity in ANG peaks relatively early during retrieval and is not sustained over time, dorsal LPC activity peaks later and is sustained until response execution (Sestieri et al., 2011).

In the present study, voxels that lay at the boundary between ventral and dorsal LPC—effectively, the intraparietal sulcus (IPS)—were excluded from all ROIs to avoid blurring of dorsal/ventral activity. However, episodic retrieval frequently elicits activation within IPS (Wagner et al., 2005) and retrieval-related IPS activations can be functionally dissociated from those in ANG and SPL (Hutchinson et al., 2014). An analysis of the excluded voxels (effectively an IPS ROI; 389 voxels) revealed significant category reactivation (M = 57.0%, p < 0.001) and event classification (M = 27.4%, p = 0.01). In terms of magnitude, both of these effects fell between ANG and SPL. Although this provides evidence for content reactivation within IPS, given the methods used here, which involved group-level ROIs in normalized brain space, we believe it is difficult to make strong claims about the representations in IPS relative to SPL or ANG. Rather, to potentially dissociate IPS effects from those in ANG and/or SPL, it would be preferable to define subject-specific ROIs based on anatomical images and perhaps constrained by functional localizers (Hutchinson et al., 2014).

Nature of LPC representations

Although our event-level pattern similarity results provide clear evidence that word cues (recall phase) and corresponding pictures (recognition) elicited a common representation, the specific nature of this representation could take several forms. First, word–picture similarity may have been driven by the pictures alone. By this account, recalling and perceiving the same picture resulted in an event-level match. The nature of picture representations could, in turn, be visual, semantic, or both so long as this information was incorporated into an episodic memory. Alternatively, event-level similarity may have been driven by event-specific associations between words and pictures (Staresina et al., 2012) or even by the word alone (i.e., if cue words were reactivated during recognition trials). Although we cannot adjudicate between these accounts, they all emphasize event-specific content representations in LPC.

An alternative perspective is that event-level similarity in LPC was related to either retrieval strength or category strength. According to a retrieval strength account, items that were strongly recalled may have also been strongly recognized, resulting in a pattern similarity match. Notably, this account cannot explain the observed category reactivation effects. Moreover, we found that event-level effects in ANG remained even when regressing out behavioral responses (retrieval success). With respect to a category strength account, it may be expected that some pictures elicited stronger category responses than others (e.g., category prototypes); if such category strength effects were reflected both at recall and recognition, this could produce event-level match effects. However, this would predict event-specific effects to also occur in VTC, which we did not find. We also found no relationship between the strength of category reactivation in VTC and event-level similarity effects in ANG. Therefore, neither of these accounts appears sufficient on its own. However, an appealing intermediate idea is that event-specific representations may reflect a set of feature elements (content) convolved with associated mnemonic strengths, an account that potentially accommodates ANG demonstrating category and event-level reactivation as well as content-general mnemonic signals.

Event-specific versus category reactivation

In humans, there is relatively limited evidence for reactivation of event-specific information (Buchsbaum et al., 2012; Staresina et al., 2012; Ritchey et al., 2013). Two recent studies used pattern similarity analyses similar to those reported here and found that, in VTC, pattern similarity between corresponding encoding and retrieval trials was greater when the event was successfully remembered (Staresina et al., 2012; Ritchey et al., 2013). However, in each of these studies, corresponding encoding/retrieval trials were perceptually overlapping. Given that pattern similarity across repeated encoding (perception) of a stimulus is associated with successful remembering (Xue et al., 2010; Ward et al., 2013; Xue et al., 2013), it is unclear whether these studies observed perceptual similarity that gave rise to successful remembering or true similarity of mnemonic representations. A critical aspect of our study was that event-level reactivation reflected similarity between a cue (word) and associate (picture) that had no perceptual overlap.

It is notable that we did not find event-specific effects within the hippocampus, given prior evidence that the hippocampus is involved in separating event representations during encoding (LaRocque et al., 2013) and retrieval (Chadwick et al., 2011). Restricting our hippocampal ROI to only those voxels that exhibited a univariate effect of vivid remembering (p < 0.05; the same criterion used to define the functional ANG ROI), the event-level pattern similarity effect was marginally significant (p = 0.05), but only after excluding an outlier (z = 3.0). However, this effect was eliminated when controlling for subcategory (p = 0.29). Therefore, we did not observe compelling event-specific effects within the hippocampus, but it is important to emphasize that we did not use a high-resolution imaging protocol focused on the medial temporal lobes (Chadwick et al., 2011; LaRocque et al., 2013) and our anatomical ROIs were not subject specific, reducing our ability to clearly separate hippocampus from surrounding cortex. Therefore, a targeted comparison of reactivation within hippocampus versus ANG would be informative in a future study.

Although it is notable that category reactivation was quite comparable across VTC and ANG (VTC vs ANGfunctional: p = 0.23), this similarity likely masks differences in the representations across these regions. Previously, we found that category reactivation in VTC versus LPC is differentially modulated by behavioral goals, pointing to a functional difference (Kuhl et al., 2013). Here, we observed a double dissociation between VTC and ANG when considering information at a finer grain than the category level: namely, recall activity in VTC, but not ANG, reflected subcategory information, whereas recall activity in ANG, but not VTC, was event specific. This dissociation is notable because prior studies of memory reactivation have often used category reactivation within VTC as a proxy for individual memories. Instead, an interesting possibility is that category (and perhaps subcategory) reactivation in VTC may be correlated with successful remembering, but also with gist-based errors (Schacter and Slotnick, 2004), whereas event-level reactivation in ANG may track information that differentiates between highly similar events. Indeed, univariate activity levels in ventral LPC are associated with a higher probability of successfully retrieving perceptual details of an event relative to gist-based false remembering (Guerin et al., 2012). Therefore, it will be of interest to further characterize dissociations between category and event-level reactivation and to test whether the relative strength of these measures is related to subtle differences in memory quality (Johnson et al., 1988) or if it is predictive of memory errors or distortions that occur during retrieval (Johnson and Raye, 1998; Kuhl and Wagner, 2009; Schacter et al., 2011).

Footnotes

This work was supported by the National Institutes of Health (Grant R01-EY014193 to M.M.C. and Grant EY019624-02 to B.A.K.).

The authors declare no competing financial interests.

References

- Baddeley A. The episodic buffer: A new component of working memory? Trends Cogn Sci. 2000;4:417–423. doi: 10.1016/S1364-6613(00)01538-2.

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. The neural basis of vivid memory is patterned on perception. J Cogn Neurosci. 2012;24:1867–1883. doi: 10.1162/jocn_a_00253.

- Buckner RL, Wheeler ME. The cognitive neuroscience of remembering. Nat Rev Neurosci. 2001;2:624–634. doi: 10.1038/35090048.

- Cabeza R, Ciaramelli E, Olson IR, Moscovitch M. The parietal cortex and episodic memory: an attentional account. Nat Rev Neurosci. 2008;9:613–625. doi: 10.1038/nrn2459.

- Chadwick MJ, Hassabis D, Maguire EA. Decoding overlapping memories in the medial temporal lobes using high-resolution fMRI. Learn Mem. 2011;18:742–746. doi: 10.1101/lm.023671.111.

- Christophel TB, Hebart MN, Haynes JD. Decoding the contents of visual short-term memory from human visual and parietal cortex. J Neurosci. 2012;32:12983–12989. doi: 10.1523/JNEUROSCI.0184-12.2012.

- Dobbins IG, Wagner AD. Domain-general and domain-sensitive prefrontal mechanisms for recollecting events and detecting novelty. Cereb Cortex. 2005;15:1768–1778. doi: 10.1093/cercor/bhi054.

- Freedman DJ, Assad JA. Experience-dependent representation of visual categories in parietal cortex. Nature. 2006;443:85–88. doi: 10.1038/nature05078.

- Gordon AM, Rissman J, Kiani R, Wagner AD. Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cereb Cortex. 2013 doi: 10.1093/cercor/bht194. in press.

- Guerin SA, Robbins CA, Gilmore AW, Schacter DL. Interactions between visual attention and episodic retrieval: Dissociable contributions of parietal regions during gist-based false recognition. Neuron. 2012;75:1122–1134. doi: 10.1016/j.neuron.2012.08.020.

- Hutchinson JB, Uncapher MR, Wagner AD. Posterior parietal cortex and episodic retrieval: Convergent and divergent effects of attention and memory. Learn Mem. 2009;16:343–356. doi: 10.1101/lm.919109.

- Hutchinson JB, Uncapher MR, Weiner KS, Bressler DW, Silver MA, Preston AR, Wagner AD. Functional heterogeneity in posterior parietal cortex across attention and episodic memory retrieval. Cereb Cortex. 2014;24:49–66. doi: 10.1093/cercor/bhs278.

- Johnson JD, Suzuki M, Rugg MD. Recollection, familiarity, and content-sensitivity in lateral parietal cortex: A high-resolution fMRI study. Front Hum Neurosci. 2013;7:219. doi: 10.3389/fnhum.2013.00219.

- Johnson MK, Raye CL. False memories and confabulation. Trends Cogn Sci. 1998;2:137–145. doi: 10.1016/S1364-6613(98)01152-8.

- Johnson MK, Foley MA, Suengas AG, Raye CL. Phenomenal characteristics of memories for perceived and imagined autobiographical events. J Exp Psychol Gen. 1988;117:371–376. doi: 10.1037/0096-3445.117.4.371.

- Kamitani Y, Sawahata Y. Spatial smoothing hurts localization but not information: Pitfalls for brain mappers. Neuroimage. 2010;49:1949–1952. doi: 10.1016/j.neuroimage.2009.06.040.

- Kriegeskorte N, Mur M, Bandettini P. Representational similarity analysis-connecting the branches of systems neuroscience. Front Syst Neurosci. 2008;2:4. doi: 10.3389/neuro.01.016.2008.

- Kuhl BA, Wagner AD. Forgetting and retrieval. In: Berntson GG, Cacioppo JT, editors. Handbook of neuroscience for the behavioral sciences. Hoboken, NJ: Wiley; 2009. pp. 586–605.

- Kuhl BA, Rissman J, Chun MM, Wagner AD. Fidelity of neural reactivation reveals competition between memories. Proc Natl Acad Sci U S A. 2011;108:5903–5908. doi: 10.1073/pnas.1016939108.

- Kuhl BA, Bainbridge WA, Chun MM. Neural reactivation reveals mechanisms for updating memory. J Neurosci. 2012;32:3453–3461. doi: 10.1523/JNEUROSCI.5846-11.2012.

- Kuhl BA, Rissman J, Wagner AD. Multi-voxel patterns of visual category representation during episodic encoding are predictive of subsequent memory. Neuropsychologia. 2012;50:458–469. doi: 10.1016/j.neuropsychologia.2011.09.002.

- Kuhl BA, Johnson MK, Chun MM. Dissociable neural mechanisms for goal-directed versus incidental memory reactivation. J Neurosci. 2013;33:16099–16109. doi: 10.1523/JNEUROSCI.0207-13.2013.

- LaRocque KF, Smith ME, Carr VA, Witthoft N, Grill-Spector K, Wagner AD. Global similarity and pattern separation in the human medial temporal lobe predict subsequent memory. J Neurosci. 2013;33:5466–5474. doi: 10.1523/JNEUROSCI.4293-12.2013.

- Olson IR, Berryhill M. Some surprising findings on the involvement of the parietal lobe in human memory. Neurobiol Learn Mem. 2009;91:155–165. doi: 10.1016/j.nlm.2008.09.006.

- Op de Beeck HP. Against hyperacuity in brain reading: Spatial smoothing does not hurt multivariate fMRI analyses? Neuroimage. 2010;49:1943–1948. doi: 10.1016/j.neuroimage.2009.02.047.

- Polyn SM, Natu VS, Cohen JD, Norman KA. Category-specific cortical activity precedes retrieval during memory search. Science. 2005;310:1963–1966. doi: 10.1126/science.1117645.

- Rissman J, Greely HT, Wagner AD. Detecting individual memories through the neural decoding of memory states and past experience. Proc Natl Acad Sci U S A. 2010;107:9849–9854. doi: 10.1073/pnas.1001028107.

- Ritchey M, Wing EA, LaBar KS, Cabeza R. Neural similarity between encoding and retrieval is related to memory via hippocampal interactions. Cereb Cortex. 2013;23:2818–2828. doi: 10.1093/cercor/bhs258.

- Schacter DL, Slotnick SD. The cognitive neuroscience of memory distortion. Neuron. 2004;44:149–160. doi: 10.1016/j.neuron.2004.08.017.

- Schacter DL, Guerin SA, St Jacques PL. Memory distortion: an adaptive perspective. Trends Cogn Sci. 2011;15:467–474. doi: 10.1016/j.tics.2011.08.004.

- Sestieri C, Corbetta M, Romani GL, Shulman GL. Episodic memory retrieval, parietal cortex, and the default mode network: functional and topographic analyses. J Neurosci. 2011;31:4407–4420. doi: 10.1523/JNEUROSCI.3335-10.2011.

- Sestieri C, Capotosto P, Tosoni A, Luca Romani G, Corbetta M. Interference with episodic memory retrieval following transcranial stimulation of the inferior but not the superior parietal lobule. Neuropsychologia. 2013;51:900–906. doi: 10.1016/j.neuropsychologia.2013.01.023.

- Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the rhesus monkey. J Neurophysiol. 2001;86:1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- Shimamura AP. Episodic retrieval and the cortical binding of relational activity. Cogn Affect Behav Neurosci. 2011;11:277–291. doi: 10.3758/s13415-011-0031-4.

- Spaniol J, Davidson PS, Kim AS, Han H, Moscovitch M, Grady CL. Event-related fMRI studies of episodic encoding and retrieval: Meta-analyses using activation likelihood estimation. Neuropsychologia. 2009;47:1765–1779. doi: 10.1016/j.neuropsychologia.2009.02.028.

- Staresina BP, Henson RN, Kriegeskorte N, Alink A. Episodic reinstatement in the medial temporal lobe. J Neurosci. 2012;32:18150–18156. doi: 10.1523/JNEUROSCI.4156-12.2012.

- Todd MT, Nystrom LE, Cohen JD. Confounds in multivariate pattern analysis: theory and rule representation case study. Neuroimage. 2013;77:157–165. doi: 10.1016/j.neuroimage.2013.03.039.

- Tosoni A, Galati G, Romani GL, Corbetta M. Sensory-motor mechanisms in human parietal cortex underlie arbitrary visual decisions. Nat Neurosci. 2008;11:1446–1453. doi: 10.1038/nn.2221.

- Toth LJ, Assad JA. Dynamic coding of behaviourally relevant stimuli in parietal cortex. Nature. 2002;415:165–168. doi: 10.1038/415165a.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activation in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Vilberg KL, Rugg MD. Memory retrieval and the parietal cortex: A review of evidence from a dual-process perspective. Neuropsychologia. 2008;46:1787–1799. doi: 10.1016/j.neuropsychologia.2008.01.004.

- Vilberg KL, Rugg MD. Functional significance of retrieval-related activity in lateral parietal cortex: evidence from fMRI and ERPs. Hum Brain Mapp. 2009;30:1490–1501. doi: 10.1002/hbm.20618.

- Vilberg KL, Rugg MD. The neural correlates of recollection: Transient versus sustained fMRI effects. J Neurosci. 2012;32:15679–15687. doi: 10.1523/JNEUROSCI.3065-12.2012.

- Wagner AD, Shannon BJ, Kahn I, Buckner RL. Parietal lobe contributions to episodic memory retrieval. Trends Cogn Sci. 2005;9:445–453. doi: 10.1016/j.tics.2005.07.001.

- Ward EJ, Chun MM, Kuhl BA. Repetition suppression and multi-voxel pattern similarity differentially track implicit and explicit visual memory. J Neurosci. 2013;33:14749–14757. doi: 10.1523/JNEUROSCI.4889-12.2013.

- Wheeler ME, Buckner RL. Functional dissociation among components of remembering: Control, perceived oldness, and content. J Neurosci. 2003;23:3869–3880. doi: 10.1523/JNEUROSCI.23-09-03869.2003.

- Wilson M. MRC psycholinguistic database: Machine-usable dictionary, version 2.00. Behav Res Methods Instrum Comput. 1988;20:6–10. doi: 10.3758/BF03202594.

- Xue G, Dong Q, Chen C, Lu ZL, Mumford JA, Poldrack RA. Greater neural pattern similarity across repetitions is associated with better memory. Science. 2010;330:97–101. doi: 10.1126/science.1193125.

- Xue G, Dong Q, Chen C, Lu ZL, Mumford JA, Poldrack RA. Complementary role of frontoparietal activity and cortical pattern similarity in successful episodic memory encoding. Cereb Cortex. 2013;23:1562–1571. doi: 10.1093/cercor/bhs143.