Abstract

In the 1990s, seminal work from Newsome, Movshon, and colleagues made it possible to study the neuronal mechanisms of simple perceptual decisions. The key strength of this work was the clear and direct link it established between neuronal activity and choice processes. Since then, a great deal of research has extended these initial discoveries to more complex forms of decision-making, with the goal of achieving the same strength of linkage between neural and psychological processes. Here we discuss the progress of two such research programs, our own, aimed at understanding memory-guided decisions and reward-guided decisions. These problems differ in the relevant brain areas, in the progress achieved, and in the extent of broader understanding reached so far. However, they are unified by the use of theoretical insights about how to link neuronal activity to decisions.

Introduction

During the past 30 years, a number of physiological, statistical and behavioral tools have emerged that allow neuroscientists to establish compelling links between the behavior of single neurons and perceptual experience. The development of these tools has given us great insight into the neural mechanisms of decision-making. Pioneering work by Newsome, Movshon and colleagues (Newsome et al., 1989) revealed remarkable similarities between the responses of a population of MT neurons to moving stimuli and the performance of monkeys reporting the direction of these stimuli, leading to the conclusion that the activity of these neurons may contribute to perceptual decisions. This conclusion subsequently received strong support from a series of microstimulation studies showing that direct electrical activation of small groups of neurons selective for a given stimulus dimension can alter the monkeys’ reports about those stimuli, suggesting that these neurons influence the perceptual decisions monkeys make (Salzman et al., 1990; Bisley et al., 2001; Nichols & Newsome, 2002; Cohen & Newsome, 2004; DeAngelis & Newsome, 2004; Afraz et al., 2006; Shiozaki et al., 2012).

A measure termed choice probability (CP) was subsequently introduced (Britten et al., 1996). It provides a specific mathematical approach for examining the relationship between neuronal activity and perceptual decisions. Based on signal detection theory (Green & Swets, 1966), CP allows scientists to relate the activity of individual neurons to perceptual decisions on a trial-by-trial basis, and it has since been used by many sensory neurophysiologists searching for a direct link between neural representation and perception (e.g., Dodd et al., 2001; Parker et al., 2002; Uka & DeAngelis, 2004; Liu & Newsome, 2006; Palmer et al., 2007). Many of these studies recorded from visual neurons specialized for processing fundamental dimensions of visual motion stimuli in monkeys trained to report the identity of such stimuli. The results revealed weak but statistically significant links between the activity of neurons processing the relevant sensory dimension and the behavioral report, suggesting that the perceptual decisions made by the monkeys were likely to rely on the activity of neurons processing the stimulus dimension relevant to the behavioral task.
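The core of the CP computation can be sketched in a few lines. The sketch below assumes that CP is the area under the ROC curve comparing a neuron's firing rates on trials grouped by the animal's choice, which equals the probability that a rate drawn at random from the "preferred-choice" trials exceeds one drawn from the remaining trials (ties counted as one half). The function names, choice labels and firing rates are hypothetical. Published analyses often add steps, such as normalizing rates across stimulus conditions and testing significance, that are omitted here.

```python
def roc_area(a, b):
    """Area under the empirical ROC curve separating samples `a` from `b`.

    Equivalent to the probability that a value drawn at random from `a`
    exceeds one drawn from `b`, with ties counted as one half (the
    Mann-Whitney U statistic divided by len(a) * len(b)).
    """
    if not a or not b:
        raise ValueError("both groups need at least one trial")
    wins = 0.0
    for x in a:
        for y in b:
            if x > y:
                wins += 1.0
            elif x == y:
                wins += 0.5
    return wins / (len(a) * len(b))


def choice_probability(rates, choices, pref_choice):
    """CP for one neuron on trials with identical stimuli.

    rates: firing rate on each trial; choices: choice label on each trial;
    pref_choice: the (hypothetical) label of the neuron's preferred choice.
    Returns a value near 0.5 if firing does not predict the choice.
    """
    if len(rates) != len(choices):
        raise ValueError("rates and choices must have the same length")
    pref = [r for r, c in zip(rates, choices) if c == pref_choice]
    other = [r for r, c in zip(rates, choices) if c != pref_choice]
    return roc_area(pref, other)


# Example with made-up firing rates (spikes/s) from six trials.
cp = choice_probability([12, 15, 9, 20, 18, 11],
                        ["same", "same", "diff", "same", "diff", "diff"],
                        pref_choice="same")
print(round(cp, 3))  # 7 of 9 pairs favor "same" trials -> 0.778
```

A CP of 0.5 means the firing rate carries no information about the upcoming choice, and values above 0.5 indicate higher firing before the preferred choice. The pairwise loop scales with the product of the two trial counts, which is adequate for typical experiments.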

These studies and the techniques they introduced have been enormously influential in several respects. First, they have led to a consensus view, controversial at first, that decision-making is a problem tractable by neuroscientific methods. Second, they have paved the way for a host of studies examining the mechanisms behind other types of basic perceptual decisions, including somatosensory, auditory, and olfactory decisions (Romo & Salinas, 2003; Uchida et al., 2006; Cohen & Maunsell, 2009; Cohen & Newsome, 2009). Third, they have helped us develop a more sophisticated understanding of the functions of many brain regions that lie between the sensory inputs and motor outputs. Finally, these studies have served as the foundation for studies of more complex types of decision-making.

This paper focuses on two types of complex decision-making problems that go beyond basic perceptual classification: memory-guided sensory-based decisions and reward-based decisions, which we discuss in turn. Both types involve classification processes, but they also involve additional psychological factors, namely memory and reward processing, respectively. We make extensive use of our own research to illustrate the problems encountered and the approaches that can be taken to overcome them.

Sensory-based decisions

Here, we describe a series of studies aimed at identifying the neural basis of a more complex visual discrimination task, which requires subjects to compare two sequentially presented moving stimuli, S1 and S2, and report whether their motion directions are the same or different. Unlike the more commonly used tasks requiring identification of a single stimulus, this task, which resembles situations often faced by active observers, permits a decision only after the presentation of the second stimulus, and the decision is based on comparing that stimulus with one that is no longer present (Fig 1A). Thus, the neural code underlying the perceptual report must include information about both the current and the previous stimulus. In other words, in addition to perceptual discrimination, this task requires the active use of working memory: subjects must compare an active representation to a stored one.

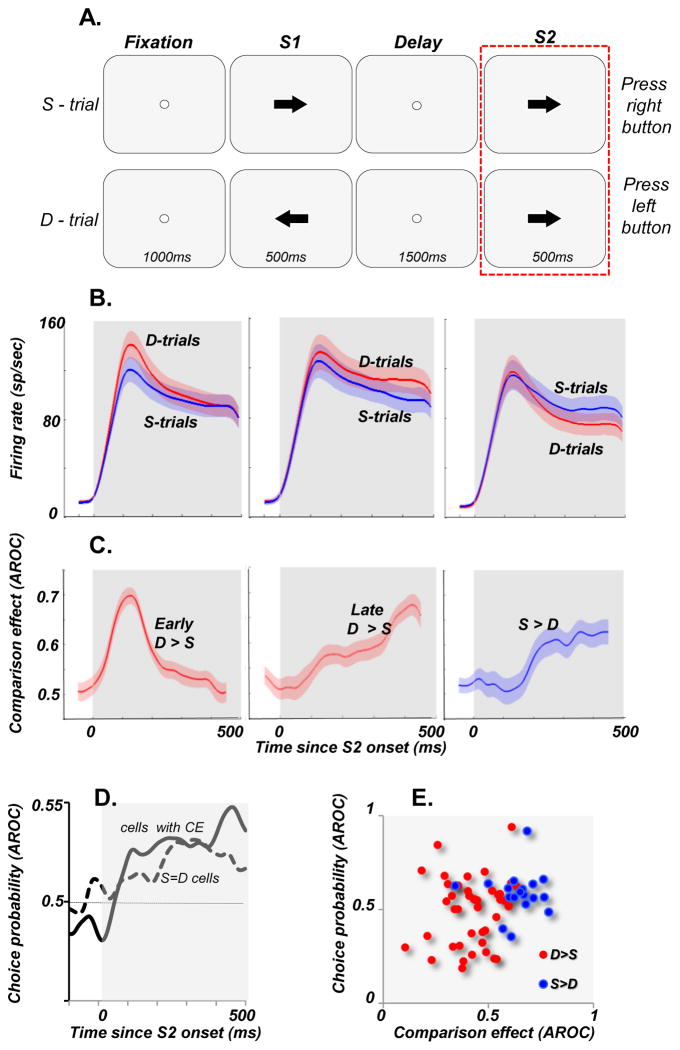

Figure 1. MT activity at the time of decision during comparisons of motion directions.

(A) The diagrams outline the temporal sequence of events during the task. The monkeys fixated a spot for 1000ms before being presented with two stimuli, S1 and S2, each lasting 500ms and separated by a 1500ms delay. S1 and S2 moved either in the same (S-trials) or in opposite (D-trials) directions. The monkeys pressed one of two pushbuttons to report whether S1 and S2 moved in the same or in different directions. The rectangle around the S2 component highlights the portion of the trial analyzed here. During the task, stimuli consisted of random dots displaced in directions chosen from a predetermined distribution. The width of this distribution determined the range of directions within which individual dots moved and was varied between 0° (all dots moving in the same direction) and 360° (dots moving in random directions). The data in B and C are based on trials with coherent motion (0° range), while the data in D are based on trials with S1 consisting of random motion (360° range). Both stimuli were placed in the neuron’s receptive field (RF), and during S2 they moved in the neuron’s preferred direction. (B) Average responses during S-trials (blue curves) and D-trials (red curves). Three distinct groups of cells responded differently to identical stimuli moving in the neuron’s preferred direction: cells with stronger activity on D-trials early in the response (left plot; early D>S cells, n=34), cells with stronger activity on D-trials late in the response (middle plot; late D>S cells, n=27), and cells with stronger activity on S-trials (right plot; S>D cells, n=32). (C) Differences between the two response curves shown in B (i.e. comparison effects), computed with ROC analysis and shown separately for each group of cells. (D) Choice probability (CP) computed separately for neurons with comparison effects (CP = 0.53±0.02; p = 0.145, n = 62) and neurons with no comparison effects (CP = 0.53±0.01, p = 0.012; n = 67). Note that the overall CP for cells with comparison effects failed to reach significance, whereas neurons with no comparison effects (S=D) showed significant choice-related activity, indicative of higher activity prior to a “same” report. However, when CP was computed separately for each cell group, one group (S>D cells) showed consistently significant CP (CP = 0.58, p = 0.03; see Lui & Pasternak, 2011). (E) The correlation between CE and CP computed for individual cells was weak and failed to reach statistical significance (r=0.24, p=0.06).

To identify the neural mechanisms that allow the animal to make decisions based on stored representations of stimuli, we focused on activity associated with the comparison phase of the task, the period during and following the presentation of S2. We examined this activity in two cortical regions likely to provide information relevant to the motion comparison task: area MT, which has a well-documented role in processing visual motion, and dorsolateral prefrontal cortex (DLPFC; areas 8, 9, 46), which has a documented role in representing behaviorally relevant motion (Zaksas & Pasternak, 2006; Hussar & Pasternak, 2009) and has been strongly implicated in sensory maintenance and executive control (Miller & Cohen, 2001a). Our results, briefly described below, provide a convincing link between neuronal activity recorded in both areas and the choices made by the animals.

Choice-related activity in MT during direction comparison task

In a recently published report, we provided a detailed account of choice-related activity in MT during the direction comparison task shown in Figure 1 (Lui & Pasternak, 2011). In this task, the animals were trained to report whether two random-dot stimuli, S1 and S2, separated by a delay, moved in the same direction (S-trials) or in different directions (D-trials) by pressing one of two response buttons. Both types of trials were equally likely and were interleaved on a trial-by-trial basis. Task difficulty was manipulated by varying the coherence of the random-dot stimulus presented during S1 (for details see Zaksas & Pasternak, 2006; Lui & Pasternak, 2011). To determine whether MT neurons contribute to the comparison of directions required by the task, we examined responses to the preferred direction presented during the second stimulus, S2, on S-trials, when it was preceded by the same direction, and on D-trials, when it was preceded by a different direction. We reasoned that a dependence of firing rate during S2 on the properties of the first stimulus would indicate that MT has access to remembered information. We found that many MT neurons showed this pattern. In fact, this modulation was of two types, each represented by a separate set of neurons: those with stronger responses on “same” trials (S>D neurons) and those responding more on “different” trials (D>S neurons). Furthermore, we observed that D>S responses were themselves of two types, each represented by a distinct group of neurons with a different temporal profile, one occurring shortly after S2 onset and the other a few hundred milliseconds later (Fig 1B, C). The presence of these effects, which we termed comparison effects, shows that after the onset of S2, the firing rates of many MT neurons depend on both the current and the remembered stimulus.
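Figure 1C shows comparison effects computed with ROC analysis. A minimal sketch of one way to obtain such a time course follows, assuming each trial is represented as a list of spike times (in ms, aligned to S2 onset) and that the ROC area of D-trial versus S-trial spike counts is computed in sliding windows. The window width and step, the function names and the synthetic spike trains are hypothetical choices for illustration, not the parameters used by Lui & Pasternak (2011).

```python
def roc_area(a, b):
    """P(value from `a` > value from `b`), ties counted as one half."""
    if not a or not b:
        raise ValueError("both groups need at least one trial")
    wins = sum(1.0 if x > y else 0.5 if x == y else 0.0 for x in a for y in b)
    return wins / (len(a) * len(b))


def spike_count(spike_times, start, stop):
    """Number of spikes with start <= t < stop (times in ms from S2 onset)."""
    return sum(1 for t in spike_times if start <= t < stop)


def comparison_effect_timecourse(d_trials, s_trials, t_start, t_stop, width, step):
    """Sliding-window ROC area of D-trial versus S-trial spike counts.

    Returns a list of (window_start, roc_value) pairs. Values above 0.5
    indicate D>S modulation; values below 0.5 indicate S>D modulation.
    """
    out = []
    t = t_start
    while t + width <= t_stop:
        d_counts = [spike_count(tr, t, t + width) for tr in d_trials]
        s_counts = [spike_count(tr, t, t + width) for tr in s_trials]
        out.append((t, roc_area(d_counts, s_counts)))
        t += step
    return out


# Synthetic example: D-trials carry extra spikes only in the 300-400 ms window.
s_trials = [[50, 150, 250, 350] for _ in range(3)]
d_trials = [[50, 150, 250, 310, 320, 350] for _ in range(3)]
for start, value in comparison_effect_timecourse(d_trials, s_trials, 0, 400, 100, 100):
    print(start, value)
```

With this sign convention, early and late D>S cells would differ in when their time courses first rise above 0.5, while S>D cells would dip below it.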

We examined whether this response modulation could provide the basis for the perceptual report made at the end of each trial, first by computing choice probability, which determines whether activity preceding the report predicts the specific choice made by the monkey. We limited this analysis to trials in which S1 contained random motion and S2 moved coherently in the neuron’s preferred direction (see Lui & Pasternak, 2011). Under these conditions performance was at chance, and the animals were equally likely to report trials as “same” or “different” (i.e. to press the right or left button). Because stimulus conditions were identical during the two types of trials, any difference in activity associated with each report could not have been driven by stimulus differences. With this approach we compared activity associated with each report and found significant but weak choice-related activity during S2, linking MT to the decisions made by the monkeys at the end of each trial (Fig 1D).

We then examined whether the robust comparison effects recorded during S2 were used in these perceptual reports. We found that in some cells these effects coexisted with choice-related activity, whereas in other cells they were absent despite the presence of significant CP, suggesting that the link between comparison effects and choice-related activity is weak. Indeed, the cell-by-cell correlation between comparison effects and choice probability was weak and did not reach statistical significance (Fig 1E).
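The cell-by-cell analysis in Fig 1E amounts to correlating two numbers per neuron. The sketch below assumes each neuron contributes one comparison-effect value and one CP value (both expressed as ROC areas), computes Pearson's r, and estimates significance with a permutation test. The permutation test is our illustrative choice and not necessarily the test used in the original study; the function names, seed and per-neuron values are hypothetical.

```python
import random


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two sequences of equal length >= 3")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation undefined for a constant sequence")
    return sxy / (sxx * syy) ** 0.5


def permutation_p(x, y, n_perm=10000, seed=0):
    """Two-sided permutation p-value for Pearson's r.

    Shuffles y relative to x to build a null distribution of |r|.
    """
    rng = random.Random(seed)
    observed = abs(pearson_r(x, y))
    y_perm = list(y)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(y_perm)
        if abs(pearson_r(x, y_perm)) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # +1 so the estimate is never exactly 0


# Hypothetical per-neuron values: comparison effect and CP, both as ROC areas.
ce = [0.62, 0.55, 0.71, 0.48, 0.66, 0.52, 0.59, 0.45]
cp = [0.54, 0.50, 0.57, 0.51, 0.53, 0.49, 0.55, 0.47]
r = pearson_r(ce, cp)
p = permutation_p(ce, cp, n_perm=5000)
print(f"r = {r:.2f}, permutation p = {p:.4f}")
```

A permutation test avoids assuming normally distributed ROC values, which is convenient when the number of cells is modest.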

Despite the weak correlation of comparison effects with decision-related signals, the contribution of MT to the comparison stage of the direction discrimination task is supported by the results of our earlier lesion study (Bisley & Pasternak, 2000). In that study, we examined the effects of a unilateral MT lesion on motion discrimination by presenting S1 and S2 at separate locations represented by the intact (ipsilateral) and lesioned (contralateral) regions of MT. This allowed us to assess separately the contribution of MT to the encoding/retention (S1 and delay) and comparison (S2) phases of the task. The deficit in the accuracy of direction discrimination was profound, but only when the comparison stimulus was presented in the affected field. When the conditions were reversed, with the comparison stimulus in the intact field and the initial stimulus in the lesioned field, the precision of direction discrimination was largely unaffected. This observation highlights the importance of MT for the comparison process, complementing the finding of comparison signals recorded in MT during S2 (Lui & Pasternak, 2011). These results further strengthen the notion that this sensory region participates not only in processing current motion stimuli but also in the circuitry underlying comparisons between current and remembered stimuli (Pasternak & Greenlee, 2005).

On the other hand, these data also suggest that MT, with its weak choice-related activity and the absence of a significant correlation between this activity and signals reflecting sensory comparisons, is unlikely to be sufficient to account for the behavioral choices made by our monkeys. Thus, it is necessary to consider the contribution of neurons in other cortical regions, starting with DLPFC, an area strongly associated with sensory maintenance and decision making (Miller & Cohen, 2001b).

Decision-related activity in the dorsolateral prefrontal cortex

To address the relationship between neural activity and perceptual choice during motion comparisons, we focused on DLPFC, a region directly and indirectly interconnected with MT (Barbas, 1988; Petrides & Pandya, 2006; Ninomiya et al., 2012) and with documented selectivity for visual motion used during motion comparison tasks (Zaksas & Pasternak, 2006; Hussar & Pasternak, 2009). Recordings were carried out during a task similar to that used during the MT recordings (Hussar & Pasternak, 2012). In this task, the monkeys again compared two sequentially presented random-dot stimuli that moved in the same or different directions. Whereas in the MT experiments we controlled task difficulty by adjusting coherence, here we controlled it by adjusting the difference in direction between the two stimuli.

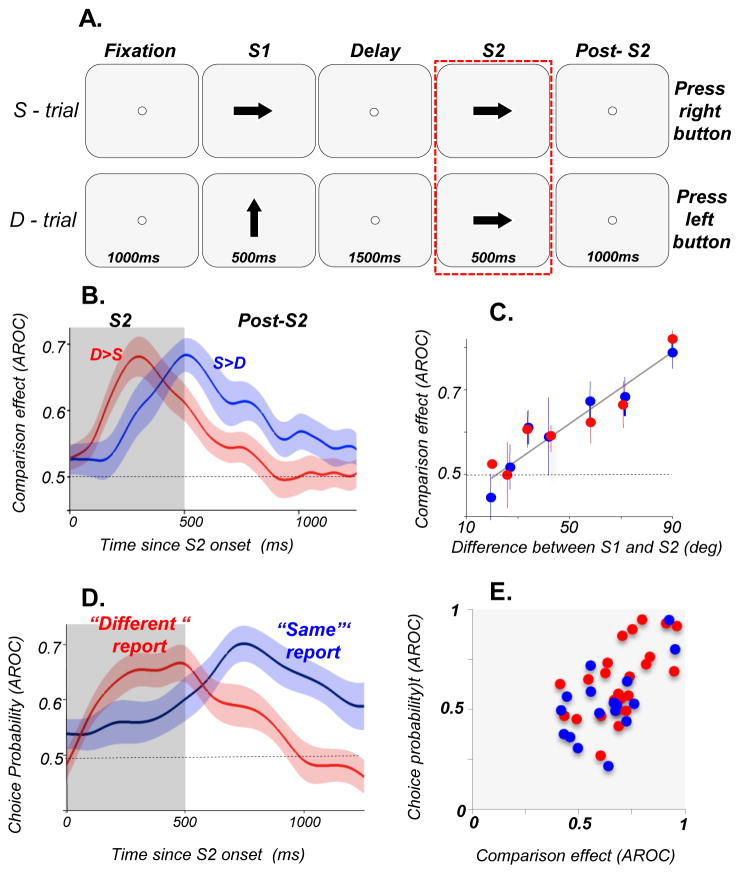

As in MT, we found that on trials with the largest differences between S1 and S2, responses were modulated by the preceding direction, and this modulation took the form of stronger responses on “same” trials (S>D cells) or on “different” trials (D>S cells) in two separate groups of neurons. However, in contrast to MT, which showed two temporal profiles of D>S modulation, early and late, in DLPFC this type of modulation occurred later in the response, towards the end of S2, and was represented by a single group of cells (Fig 2B). Furthermore, same-different modulations persisted after the offset of S2, with the D>S comparison effect emerging earlier. It is not clear whether activity after S2 offset is also characteristic of MT, since such data were not collected during the MT recordings. Because this task used both small and large differences between S1 and S2 directions, we were able to show that the magnitude of comparison effects decreased as the two directions became more similar (Fig 2C). This relationship paralleled the behaviorally measured accuracy of direction discrimination, and the comparison effect was no longer present for pairs of directions that the monkeys were unable to discriminate reliably (Hussar & Pasternak, 2012). The relationship between comparison effects and discrimination performance was further confirmed by a lower incidence of comparison effects in the monkey with less accurate direction discrimination (see Figs 7D and E in Hussar & Pasternak, 2012). Notably, we observed similar effects during an analogous task involving comparisons of stimulus speeds (Hussar & Pasternak, 2013b). Taken together, these observations support the notion that response modulation during S2 represents the difference between the directions of the two stimuli being compared during the task.
While these signals appear to reflect the computation required by the task, it is not clear whether they are utilized in the decision process.

Figure 2. DLPFC activity at the time of decision during comparisons of motion directions.

(A) Diagram of the direction comparison task showing the two types of trials, S-trials and D-trials (Hussar & Pasternak, 2012). On S-trials, S1 and S2, separated by a 1500ms delay, moved in the same direction, while on D-trials, S1 and S2 moved in different directions. The animals reported whether the two directions were the same or different by pressing one of two response buttons. They were allowed to respond 1000ms after S2 offset. During each session, direction difference thresholds were measured by varying the difference between the directions of S1 and S2. The rectangle around the S2 trial components highlights the portion of the trial relevant to the analysis described here. (B) Comparison effects (CEs) recorded during and after S2. Average CE for S>D cells (blue, n=20) and D>S cells (red, n=26) during S2 and post-S2. (C) Dependence of CE on the difference in direction between S1 and S2: D>S cells (red); S>D cells (blue). The correlation between CE and direction difference was highly significant (p<7.5×10⁻⁶). (D) Choice probability of DLPFC neurons more active before “different” (n=24) and before “same” (n=17) reports. Shadings represent ±SEM. (E) Correlation between CE and CP computed for individual S>D and D>S cells that showed both a CE and a CP. The two measures were strongly correlated during 200–400ms after S2 onset (p=1.3×10⁻⁷), shown here, as well as later in the trial, 600–800ms after S2 onset (not shown; p=1.2×10⁻⁴).

To determine whether the activity recorded during and after the comparison stimulus (S2) is predictive of the choices made by the animals, we computed choice probability. This analysis, carried out on the subset of trials in which S1 and S2 moved in the same direction, revealed significant choice-related activity that appeared shortly after the onset of S2 and persisted until the behavioral report. This activity was of two types, one associated with the “same” report and the other with the “different” report, paralleling the two types of comparison effects associated with same-direction and different-direction trials. We found that a third of DLPFC neurons carried both comparison and choice-related signals and that the comparison effects reliably preceded the choice-related activity, suggesting that at the time of decision, DLPFC neurons represent information about the difference between S1 and S2. Such a pattern would be consistent with the possibility that the observed comparison effects are used in the decision process. Indeed, we found that comparison and choice-related activity were strongly correlated. Specifically, neurons “preferring” S-trials also showed more activity prior to the “same” report, and neurons preferring D-trials also showed greater activity prior to the “different” report. While this correlation does not prove that the comparison effect provides the basis for the perceptual report, its strength and the consistency in sign between the two types of signals support this possibility.
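The statement that comparison effects preceded choice-related activity rests on comparing the onset times of two ROC time courses. A minimal sketch of one common way to define onset follows: the first time at which the ROC value departs from 0.5 by at least a criterion, in the same direction, for several consecutive windows. The criterion (`delta`), the run length (`n_consecutive`), the function name and the example time courses are hypothetical; the original study may have used a different onset definition.

```python
def onset_latency(timecourse, delta=0.1, n_consecutive=3):
    """Start time of the first run of `n_consecutive` windows in which the ROC
    value differs from 0.5 by at least `delta`, all in the same direction.

    timecourse: list of (window_start_ms, roc_value) pairs in temporal order.
    Returns None if no such run exists.
    """
    run_start, run_sign, run_len = None, 0, 0
    for t, value in timecourse:
        dev = value - 0.5
        sign = (dev > 0) - (dev < 0)  # +1, -1 or 0
        if sign != 0 and abs(dev) >= delta:
            if run_len > 0 and sign == run_sign:
                run_len += 1  # extend the current same-sign run
            else:
                run_start, run_sign, run_len = t, sign, 1  # start a new run
            if run_len >= n_consecutive:
                return run_start
        else:
            run_len = 0  # deviation too small: reset the run
    return None


# Made-up time courses (ms from S2 onset, ROC value) for one neuron.
ce = [(0, 0.5), (50, 0.65), (100, 0.5), (150, 0.7), (200, 0.72), (250, 0.75), (300, 0.8)]
cp = [(0, 0.5), (50, 0.5), (100, 0.5), (150, 0.5), (200, 0.62), (250, 0.63), (300, 0.66)]
print(onset_latency(ce), onset_latency(cp))  # 150 200
```

Requiring several consecutive windows guards against a single noisy window (such as the isolated value at 50 ms above) being mistaken for an onset.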

Relative contributions of MT and DLPFC to decisions during motion comparisons

This brief summary of work examining the participation of neurons in two interconnected cortical areas, one specialized for processing visual motion (area MT) and the other strongly linked to executive control and working memory (DLPFC), highlights an approach based on relating the activity of single neurons to the choices made by monkeys during a multistage motion discrimination task. In contrast to simpler tasks requiring subjects to identify aspects of the current stimulus (e.g. direction), in our task the decision must be based on a comparison between two sequentially presented stimuli. This task cannot be solved without information about the previous stimulus stored in working memory. Our results showed that the contribution of MT to the direction task is not limited to processing the current stimulus, since its neurons also represent information about the remembered direction. Recordings in DLPFC revealed that its contribution to motion comparisons is also not limited to a single component of the task. Rather, its neurons participate in all phases of the task: faithfully representing behaviorally relevant motion, showing stimulus-related and anticipatory activity during the delay, and representing the difference between the two comparison stimuli (Hussar & Pasternak, 2009; Hussar & Pasternak, 2010; Hussar & Pasternak, 2012; Hussar & Pasternak, 2013a). Thus, our data point to similarities in the way MT and DLPFC neurons represent behaviorally relevant visual motion and in the nature of their activity during the comparison phase of the task. While the presence of direction selectivity in DLPFC can be attributed to influences arriving from MT, the source of comparison effects in the two areas is more difficult to identify. The early D>S signal appears in MT soon after the onset of S2, before neurons in DLPFC display D>S modulation.
On the other hand, the other two types of comparison effects in MT occur relatively late after S2 onset and are substantially weaker than those recorded in DLPFC. These similarities and differences in comparison effects between the two areas point to a scenario in which both areas participate in the network underlying the comparison process, with MT providing the initial signals that start the process and DLPFC supplying MT, and presumably other components of that network, with the information underlying the upcoming choice. The strong correlation between comparison effects and choice-related activity in DLPFC, but not in MT, points to prefrontal neurons as a likely source of the signals that ultimately lead to perceptual reports.

This paper has focused exclusively on cortical activity recorded during comparisons of visual motion. However, such signals are not unique to visual motion, and analogous comparison effects have also been observed in prefrontal and visual processing neurons during tasks involving other stimulus dimensions (e.g., Miller et al., 1993; Miller et al., 1996; Freedman et al., 2003; Cromer et al., 2011; Hayden & Gallant, 2013). For example, Freedman and colleagues (Freedman et al., 2003) used a match-to-category task to train monkeys to distinguish between categories of shapes and observed that PFC responses during the comparison stimulus (test) were modulated by the category of the shape that appeared during the sample. As in our study, some neurons responded more strongly on match (“same”) trials and some on non-match (“different”) trials. Parallel recordings in inferotemporal cortex revealed similar response modulation. Analogous comparison effects were also observed in premotor cortex during the same task (Cromer et al., 2011). Furthermore, signals reflecting choice-related activity have also been reported in a number of cortical areas during tasks involving tactile stimuli (Hernández et al., 2010).

Reward-based decisions

In perceptual decisions, subjects must judge the properties of potentially ambiguous sensory stimuli, but their value function is assumed to be trivial: their goal is simply correct performance (Britten et al., 1996). In contrast, many real-world decisions require additional calculations: subjects need to consider their own value functions, which may not be normatively determined (Glimcher, 2002; Rangel et al., 2008; Padoa-Schioppa, 2011). For example, different people have different attitudes about risk: some people are risk-seeking and others are risk-averse. Neither of these attitudes is wrong; instead, they are assumed to reflect different mappings between objective, external values and subjective values. Value functions are necessarily covert, although they can be inferred from a large number of decisions (Glimcher, 2002; Montague & Berns, 2002). Understanding the neural basis of reward-based decisions therefore requires dealing with the additional uncertainty imposed by invisible value functions.
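To make the idea of different mappings between objective and subjective values concrete, the sketch below uses a power utility function, u(x) = x^alpha, a common textbook illustration rather than a model proposed in this paper. With alpha < 1 the function is concave and the agent values a 50/50 gamble at less than its expected value (risk-averse); with alpha > 1 it is convex (risk-seeking). The function names, the juice amounts and the alpha values are hypothetical.

```python
def power_utility(x, alpha):
    """Hypothetical subjective value u(x) = x**alpha for a non-negative amount x."""
    if x < 0 or alpha <= 0:
        raise ValueError("requires x >= 0 and alpha > 0")
    return x ** alpha


def certainty_equivalent(outcomes, probs, alpha):
    """Sure amount whose utility equals the gamble's expected utility."""
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    expected_u = sum(p * power_utility(x, alpha) for x, p in zip(outcomes, probs))
    return expected_u ** (1.0 / alpha)  # invert u to return to objective units


# A 50/50 gamble between 0 and 10 units of juice (expected value 5).
for alpha, label in [(0.5, "risk-averse"), (1.0, "risk-neutral"), (2.0, "risk-seeking")]:
    ce = certainty_equivalent([0, 10], [0.5, 0.5], alpha)
    print(f"alpha={alpha}: certainty equivalent {ce:.2f} ({label})")
# prints certainty equivalents 2.50, 5.00 and 7.07
```

The objective gamble is identical in all three cases; only the hidden mapping to subjective value differs, which is why such functions must be inferred from many choices rather than read off the stimulus.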

Relative to perceptual decision-making, including memory-guided decision-making, the neuroscience of reward-based decision-making remains relatively unexplored. Current studies have focused on the simplest questions: which brain structures signal information about rewards, and how they do it (Schultz, 2006; Balleine et al., 2007; Rushworth et al., 2011). For the most part, it is quite easy to find neurons whose activity correlates with reward value and with other aspects of valuation (Kennerley et al., 2011). The challenge is to determine carefully which variable is encoded, while controlling for other, highly correlated variables, and what role that signal plays in cognition (Cook & Maunsell, 2002; Leathers & Olson, 2012). For example, motivation is distinct from reward: it is closely correlated with value in the positive domain but strongly anti-correlated with it in the negative (punisher) domain (Roesch & Olson, 2004). In other words, a strongly negative reinforcer may motivate avoidance just as strongly as a strongly positive reinforcer motivates approach. Thus, studies that use only positive reinforcers may confuse reinforcer size with motivation. Early studies using only positive rewards suggested that the lateral intraparietal area (LIP) tracks the reward value of shifting gaze to a specific location (Platt & Glimcher, 1999). However, a recent study using both positive and negative reinforcers demonstrates that the activity of these neurons tracks motivation, not reward (Leathers & Olson, 2012). Another variable that is closely related to both reward and punishment is attention, which is frequently confused with reward size (Maunsell, 2004). Dissociating the effects of reward from ancillary effects that correlate with it is seldom trivial (Bendiksby & Platt, 2006). Neuroscientists have now more or less agreed on a central subset of reward structures (e.g., Platt & Glimcher, 1999; Averbeck et al., 2006; Paton et al., 2006; Wallis, 2007; Kennerley & Wallis, 2009; Krajbich et al., 2010). These include the orbitofrontal cortex (Wallis, 2007; Padoa-Schioppa, 2011), dorsolateral prefrontal cortex (Barraclough et al., 2004), anterior cingulate cortex (Hayden et al., 2009b; Hayden et al., 2011a), posterior parietal cortex (Platt & Glimcher, 1999), the dopamine system (Schultz, 2006), the ventral striatum (Cai et al., 2011), and the amygdala (Gottfried et al., 2003; Paton et al., 2006). However, this is more or less the state of the field: the precise function and role in cognition of each of these structures remain contentiously debated. Here we discuss one area that has received particular attention and in which we have often recorded: the dorsal portion of the anterior cingulate sulcus (dACC).
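The reward/motivation confound described above can be illustrated with two hypothetical neurons: one whose firing rate tracks signed value (reward) and one whose rate tracks the magnitude of value (a simple stand-in for motivation). The sketch assumes linear tuning with made-up baseline and gain; it is not a model of LIP or of any recorded data. With only positive values the two neurons are indistinguishable by their correlation with value, but once punishers are included the motivation-like neuron's correlation with signed value drops to zero in this symmetric example.

```python
def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def reward_neuron(value, baseline=10.0, gain=2.0):
    """Hypothetical cell whose rate rises with signed value (reward)."""
    return baseline + gain * value


def motivation_neuron(value, baseline=10.0, gain=2.0):
    """Hypothetical cell whose rate rises with |value| (approach or avoidance)."""
    return baseline + gain * abs(value)


def correlation_with_value(neuron, values):
    return pearson_r(values, [neuron(v) for v in values])


positive_only = [1, 2, 3, 4]  # rewards only
mixed = [-2, -1, 1, 2]        # punishers and rewards
for name, values in [("positive only", positive_only), ("mixed sign", mixed)]:
    r_reward = correlation_with_value(reward_neuron, values)
    r_motiv = correlation_with_value(motivation_neuron, values)
    print(f"{name}: reward cell r={r_reward:.2f}, motivation cell r={r_motiv:.2f}")
# positive only: both r = 1.00; mixed sign: reward cell 1.00, motivation cell 0.00
```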

Neural correlates of reward-based choice

The dACC has long been closely associated with reward representations. Neurons in this region respond following rewarding outcomes (Niki & Watanabe, 1979; Ito et al., 2003; Williams et al., 2004; Matsumoto et al., 2007), and track the proximity of rewards (Shidara & Richmond, 2002). These signals maintain a representation of reward outcomes across trials (Seo & Lee, 2007; Bernacchia et al., 2011). Formal classifications show that these neurons signal reward prediction errors (Amiez et al., 2006; Matsumoto et al., 2007; Sallet et al., 2007) or reflect subjective values of options, independent of stimulus attributes (Kennerley et al., 2008; Cai & Padoa-Schioppa, 2012). These results implicate dACC directly in decisions (Walton et al., 2003; Williams et al., 2004).

However, as noted above, reward tends to correlate with a variety of other factors that are not reward per se. For example, rewards motivate both learning and small changes in response strategy (i.e. adjustments), and more generally affect the patterns by which we behave in the future. One basic taxonomy is that signals can be either monitoring signals or control signals (Botvinick et al., 2001; Kerns et al., 2004). Monitoring signals track the size of a rewarding outcome and carry a representation of it for use by any downstream structures. Control signals carry information that directly affects ongoing cognitive processes. Control signals may track the size of a rewarding outcome as well, but do so only incidentally. While control signals and monitoring signals are often identical, control signals depend on contextual factors that influence behavior, whereas monitoring signals do not. Thus, in the case of reward areas and reward signals, correlation is not enough to infer representation.

Thus, the mechanisms of reward-based decisions comprise at least two distinct processes: an acute reward value comparison and selection process, and a monitoring and adjustment process. Both are absolutely critical to reward-based decisions. Here we focus on the monitoring and adjustment process, although we will briefly discuss the comparison processes as well.

We have recently performed two studies to investigate the role of dACC in reward-based decisions (Hayden et al., 2009b; Hayden et al., 2011a). In one study, monkeys performed a difficult decision-making task in which their goal was to find the baited option among seven decoys (Hayden et al., 2009a). The location of the baited option was determined by a stochastic pattern, and its value varied randomly from trial to trial, independently of its location. In practice, the monkeys guessed its location correctly about half the time. The key element was that the monkeys found out the reward associated with the baited option regardless of their decision. On trials when they failed to guess its location, the reward was fictive: it would have been delivered had they chosen the baited option.

Because real and fictive outcomes were interleaved across trials, we were able to compare activity within single neurons for both types of outcomes. Interestingly, we saw a common coding scheme for real and fictive rewards: larger responses for larger rewards, regardless of whether they were real or fictive. This finding is difficult to reconcile with the idea that dACC neurons solely track the outcomes of decisions, since fictive outcomes are, by definition, outcomes that did not occur. These results suggest that one of two alternative hypotheses is true: either dACC tracks multiple types of outcomes, and downstream decoders presumably have access to information about whether the outcome was real through other channels, or dACC does not track outcomes per se but instead tracks some variable that is the same whether the outcome was real or not. What could this variable be?
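One way to quantify a common coding scheme is to regress a neuron's firing rate on reward size separately for real and fictive outcomes and compare the fitted slopes: similar positive slopes indicate that the neuron scales with reward size in the same way for both. The sketch below assumes trials are given as (outcome_type, reward_size, firing_rate) tuples and uses ordinary least squares; the data format, labels and numbers are hypothetical and are not taken from the recordings described here.

```python
def ols_fit(x, y):
    """Ordinary least-squares slope and intercept for y = intercept + slope * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    if sxx == 0:
        raise ValueError("reward size must vary within each outcome type")
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx


def fit_by_outcome_type(trials):
    """Fit firing rate against reward size separately for each outcome type.

    trials: iterable of (outcome_type, reward_size, firing_rate) tuples.
    Returns {outcome_type: (slope, intercept)}.
    """
    groups = {}
    for kind, size, rate in trials:
        sizes, rates = groups.setdefault(kind, ([], []))
        sizes.append(size)
        rates.append(rate)
    return {kind: ols_fit(sizes, rates) for kind, (sizes, rates) in groups.items()}


# Made-up neuron: same gain for real and fictive rewards, slightly lower offset.
trials = [("real", s, 5 + 2 * s) for s in (1, 2, 3, 4)]
trials += [("fictive", s, 4 + 2 * s) for s in (1, 2, 3, 4)]
for kind, (slope, intercept) in fit_by_outcome_type(trials).items():
    print(f"{kind}: slope={slope:.2f} spikes/s per unit reward, intercept={intercept:.2f}")
```

In practice one would also test whether the two slopes differ, for example with an interaction term in a joint regression; that step is omitted to keep the sketch short.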

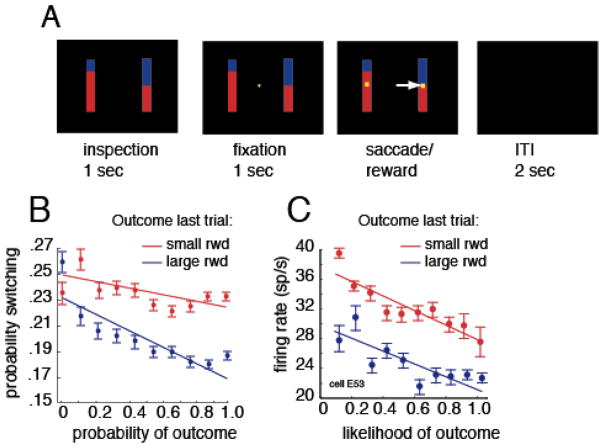

Rewards have direct effects on behavior. They often lead to quick changes in strategy, depending on the type of information they provide about upcoming payoffs. Thus, neuronal activation patterns that appear to be reward signals may instead be signals that direct a change in strategy. In our fictive learning task, larger outcomes tended to promote an acute adjustment in strategy whether they were real or fictive (Figure 3).

Figure 3. Schematic of task and results from fictive learning experiment.

A. Illustration of the structure of the fictive learning task (Hayden et al., 2009a). Stimuli were presented on a dark computer monitor, illustrated by the black rectangle. On each trial, a central fixation spot (yellow) appeared, with eight white squares arrayed in a large circle around it. Following a brief hold period, subjects were free to shift gaze to one of the squares to select it. Selecting a square immediately ended the trial. All squares then changed color: seven turned red, and one turned one of six other colors (see right-hand side of panel). Colors validly predicted the reward associated with the chosen option (real reward) or, for the seven unchosen options, the reward that would have been given for that option (fictive reward). B. Schematic of behavior on the next trial (likelihood of choosing the optimal target) as a function of the chosen reward (gray line) or the value of the unchosen oddball reward (black line). Larger rewards led to a greater probability of choosing optimally, regardless of whether they were real or fictive. (Note that there is a strong ceiling effect for real rewards.) C and D. Peri-stimulus time histograms (PSTHs) of firing rate for one neuron, aligned to the time the reward is revealed (time 0), for real (C) and fictive (D) rewards. This neuron (and the majority of other neurons; data not shown) exhibited higher firing rates following larger rewards, regardless of whether they were real or fictive.

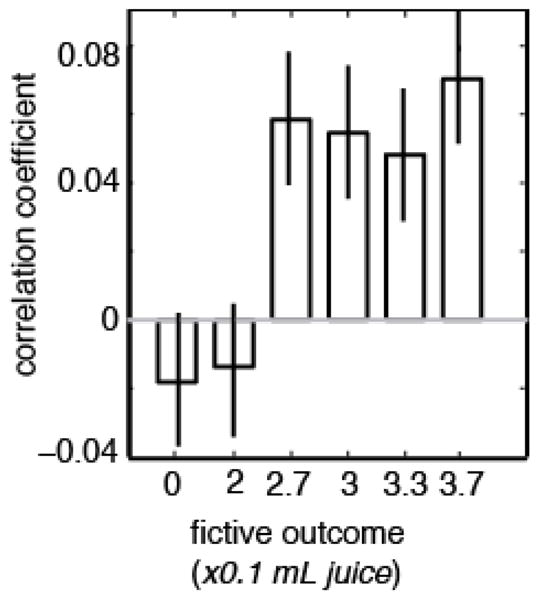

To study this effect more directly, we examined the correlation between behavioral switching and firing rate while holding reward size constant (Figure 4). We did this by performing the analysis separately for each reward size. This analysis closely resembles a choice probability analysis. Whereas a choice probability analysis regresses out variation in stimulus properties and correlates the residual variation in firing rate with variation in perceptual report, our analysis correlates residual variation in firing rate with variation in preference. We found that, for four of the six reward sizes used, residual variation in firing rate after controlling for reward size correlated with the likelihood of shifting strategy on the next trial (Figure 4). This finding is consistent with the idea that dACC contributes to the computation and representation of control signals.
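The stratified analysis described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the original study: it assumes one neuron's data arrive as three parallel arrays (hypothetical names `firing`, `reward` and `next_optimal`, the last coded 1 if the monkey chose optimally on the following trial) and computes a Pearson (point-biserial) correlation within each reward size. Averaging across neurons, as in Figure 4, would mean calling the function once per neuron and averaging the per-size values.

```python
import numpy as np


def per_reward_correlations(firing, reward, next_optimal):
    """Correlate firing rate with next-trial behavior separately for each reward size.

    Because reward size is fixed within each stratum, any remaining correlation
    reflects residual (reward-independent) variation in firing.
    Returns a dict mapping reward size -> Pearson r (NaN if undefined).
    """
    firing = np.asarray(firing, dtype=float)
    reward = np.asarray(reward)
    next_optimal = np.asarray(next_optimal, dtype=float)

    result = {}
    for size in np.unique(reward):
        in_stratum = reward == size
        x, y = firing[in_stratum], next_optimal[in_stratum]
        # Correlation is undefined if either variable is constant in this stratum.
        if x.size < 2 or x.std() == 0 or y.std() == 0:
            result[size.item()] = float("nan")
        else:
            result[size.item()] = float(np.corrcoef(x, y)[0, 1])
    return result


if __name__ == "__main__":
    # Synthetic data for illustration only; these are not recorded data.
    rng = np.random.default_rng(0)
    n = 600
    reward = rng.choice([1, 2, 3], size=n)
    firing = 10 + 2 * reward + rng.normal(0, 3, size=n)
    # Hypothetical assumption: next-trial behavior depends on the
    # reward-independent part of firing.
    residual = firing - (10 + 2 * reward)
    next_optimal = (residual + rng.normal(0, 3, size=n) > 0).astype(int)
    print(per_reward_correlations(firing, reward, next_optimal))
```

Stratifying by reward size, rather than regressing reward out with a linear model, avoids assuming that firing rate scales linearly with reward.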

Figure 4. Variation in preference is predicted by variation in firing rate.

Average correlation, across all neurons in the dataset, between firing rate in response to fictive rewards and the likelihood that the monkey would choose optimally on the next trial, plotted separately for each reward size. For the four largest fictive rewards (2.7, 3, 3.3, and 3.7), there was a significantly positive correlation between firing rate and strategy on the next trial. This analysis has many similarities to a choice probability analysis.

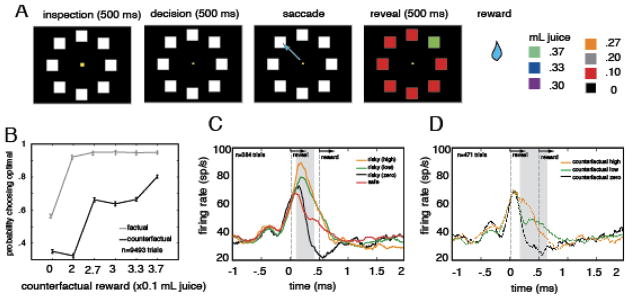

This interpretation is further supported by the results of another study that used a different decision-making task (Hayden et al., 2011a). This task is a variant of the explicit gambling task that we developed to study risk attitudes in rhesus monkeys (Hayden et al., 2008a; Hayden et al., 2010). In this task, monkeys chose between pairs of gambles (Figure 5). Gambles were signaled by vertically oriented bars divided into a red and a blue portion. The size of the red portion indicated the probability that the gamble would yield a small reward, and the size of the blue portion indicated the probability that it would yield a large reward.
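Because the two bar segments partition probability between a small and a large reward, each gamble's expected value follows directly from the size of its blue portion. The sketch below assumes, as the description implies but does not state numerically, that the large and small reward magnitudes are the same for both options; the `BarGamble` class and the magnitudes used are hypothetical placeholders, not values from the study. Under that assumption, the option with the larger blue portion always has the higher expected value, which is why choosing it is the dominant strategy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BarGamble:
    """A two-outcome gamble drawn as a red/blue bar (hypothetical representation)."""
    p_large: float  # fraction of the bar that is blue = P(large reward)
    large: float    # large reward magnitude (hypothetical units)
    small: float    # small reward magnitude (same units)

    def __post_init__(self):
        if not 0.0 <= self.p_large <= 1.0:
            raise ValueError("p_large must be a probability in [0, 1]")

    @property
    def p_small(self) -> float:
        # The red portion is the complement of the blue portion.
        return 1.0 - self.p_large

    def expected_value(self) -> float:
        return self.p_large * self.large + self.p_small * self.small


def better_option(a: BarGamble, b: BarGamble) -> BarGamble:
    """Return the gamble with the higher expected value (a on ties)."""
    return a if a.expected_value() >= b.expected_value() else b


if __name__ == "__main__":
    left = BarGamble(p_large=0.25, large=200.0, small=100.0)
    right = BarGamble(p_large=0.75, large=200.0, small=100.0)
    print(left.expected_value(), right.expected_value())  # 125.0 175.0
    print(better_option(left, right) is right)  # True
```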

Figure 5. Schematic of task and results from risky choice experiment.

A. Illustration of the risky choice task. On each trial, two options appeared and could be freely inspected for 1 second. The monkey then fixated and, after a brief delay, selected one option by shifting gaze to it. Each option offered a gamble defined by a specific probability (1–100%) of a large reward, with the remaining probability assigned to a small reward. Following the choice, the gamble was resolved. B. Monkeys exhibited a bias toward the dominant normative strategy (choosing the option with the greater probability of a large reward) over an inferior strategy (choosing the option with the lower probability of a large reward). In all sessions, monkeys exhibited a greater tendency to switch from the dominant strategy to the inferior one (y-axis) following small rewards than following large rewards. They also exhibited a greater tendency to switch following unexpected rewards, regardless of their sign (x-axis). C. Neuronal responses following outcomes were greater following small rewards than large rewards, and following unexpected rewards than expected ones. The pattern of neuronal responses closely tracked the pattern of behavioral results, suggesting that dACC neurons signal the likelihood of strategy adjustment, not reward per se.

As in the fictive learning study described above, we found that dACC neurons respond strongly following the rewards that result from choices. We found two clear patterns in the size of these responses. First, neuronal activity is stronger in response to smaller rewards than to larger rewards. This is the opposite of the pattern we observed in the fictive learning task, and it suggests that dACC neurons do not have stable tuning for reward size. Second, responses are greater for unexpected outcomes, regardless of their size. Although these two effects were statistically independent, together they pose a problem for decoders: responses to expected small rewards were the same as responses to unexpected large ones. Thus, downstream neurons reading only this firing rate would not be able to distinguish a surprising large reward from an expected small one.
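The decoding problem can be made concrete with a toy model. One common way to quantify how unexpected an outcome is, and the quantity named in the title of Hayden et al. (2011a), is the unsigned reward prediction error, |r - E[r]|. The sketch below assumes a purely additive firing-rate model with one term for receiving the small reward and one for unsigned prediction error; the baseline and both weights are hypothetical values chosen to make the collision explicit, not fitted to data. With a 20% chance of the large reward, an expected small reward and an unexpected large reward then produce the same rate, so a decoder that sees only this rate cannot tell them apart.

```python
def expected_reward(p_large: float, large: float, small: float) -> float:
    return p_large * large + (1.0 - p_large) * small


def unsigned_rpe(outcome: float, p_large: float, large: float, small: float) -> float:
    """Absolute difference between the obtained and the expected reward."""
    return abs(outcome - expected_reward(p_large, large, small))


def toy_rate(outcome, p_large, large=1.0, small=0.0,
             baseline=5.0, w_small=6.0, w_surprise=10.0):
    """Hypothetical additive firing-rate model (spikes/s); weights are illustrative."""
    # Exact float comparison is acceptable here because outcomes are the
    # literal reward magnitudes passed in, not computed values.
    got_small = 1.0 if outcome == small else 0.0
    return (baseline
            + w_small * got_small
            + w_surprise * unsigned_rpe(outcome, p_large, large, small))


if __name__ == "__main__":
    p = 0.2  # large reward is unlikely, so the small reward is the expected one
    expected_small = toy_rate(outcome=0.0, p_large=p)
    unexpected_large = toy_rate(outcome=1.0, p_large=p)
    print(expected_small, unexpected_large)
```

A second, independent readout (for example, a signal carrying reward size alone) would be needed to disambiguate these two cases, which is the alternative considered in the next paragraph.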

Thus, either downstream decoder regions have some other way to distinguish rewards, or they are sensitive not to rewards themselves but to the effects that rewards have on behavior. Indeed, we found that, in this task, surprising rewards and small rewards both motivate switching to a different behavioral strategy. Monkeys generally prefer the option with the greater expected value but, in some cases, switch to the lower-valued option. When we examined the effect of outcomes on the propensity to switch, firing rates closely tracked switching across conditions (Figure 5). Thus, one parsimonious interpretation of these data is that dACC neurons do not in fact track outcomes, but instead signal trial-to-trial adjustments or switches in strategy.

We have used the choice probability strategy with some success to understand the function of dACC neurons. However, it is not clear that this strategy will solve all the relevant problems. Beyond choice probability, our findings point to what has been the most successful strategy for linking brain activity and behavior: using multiple different tasks to study the same problem. This approach is especially useful when the tasks impose different mappings between the variables that correlate with neuronal activity and the tasks' inputs and outputs.

One major limitation of the work discussed here is that it focuses on one very specific element of reward-based decision making: the way in which the outcomes of one trial influence choices on the next one. These trial-to-trial factors are a critical element of reward-based choices, but are different from the complementary problem of understanding the role of neurons at the time of the decision. We focus here on this trial-to-trial element because, historically, it has been more studied and its mechanisms more fully worked out.

However, several groups have begun to investigate the mechanisms of decision at the time of choice as well (Wallis, 2007; Kennerley & Wallis, 2009; Kennerley et al., 2011). The basic approach has been to identify the types of reward variables encoded in key reward regions during simple and complex choice tasks (Padoa-Schioppa & Assad, 2006; Cai et al., 2011; Hayden et al., 2011b; Padoa-Schioppa, 2011). Although some of these ideas are still in their infancy, there is already strong reason to believe that the key mechanisms by which two values are compared are similar to those by which two traces are compared in memory. First, reward neurons in OFC appear to show persistent activation in working memory for rewards (Lara et al., 2009). Second, neuronal models of memory trace comparison work just as well for rewards as they do for stored memories (Wang, 2012). Third, there is strong evidence from both fMRI and MEG that reward values are compared through a mutual inhibition process that closely mirrors the one used to compare percepts in working memory (Hunt et al., 2012; Jocham et al., 2012). Although the bigger picture remains to be worked out, it appears that memory- and reward-based decisions may ultimately rely on common processes (Rushworth et al., 2011).
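To make comparison by mutual inhibition concrete, here is a generic two-unit accumulator in which each unit is driven by one option's value, leaks, and suppresses the other; the first unit to reach threshold determines the choice. This is a textbook-style sketch, not a reimplementation of the models in Wang (2012), Hunt et al. (2012), or Jocham et al. (2012). The function name and every parameter (leak, inhibition strength, noise, threshold, time step) are hypothetical values chosen only for illustration. The same dynamics apply whether the two inputs are option values or the strengths of two remembered percepts, which is the parallel drawn above.

```python
import numpy as np


def mutual_inhibition_choice(v1, v2, inhibition=0.5, leak=0.1, noise=0.2,
                             threshold=1.0, dt=0.01, max_steps=10_000, rng=None):
    """Race between two mutually inhibiting units (hypothetical parameters).

    Returns (choice, decision_time); choice is 0 or 1, or None if neither
    unit reaches threshold within max_steps.
    """
    rng = np.random.default_rng() if rng is None else rng
    inputs = np.array([v1, v2], dtype=float)
    x = np.zeros(2)  # activity of the two units
    for step in range(1, max_steps + 1):
        # Each unit is driven by its own input, decays, and is
        # suppressed in proportion to the other unit's activity.
        drift = inputs - leak * x - inhibition * x[::-1]
        x = x + drift * dt + noise * np.sqrt(dt) * rng.standard_normal(2)
        x = np.maximum(x, 0.0)  # keep activity non-negative, like a firing rate
        if x.max() >= threshold:
            return int(np.argmax(x)), step * dt
    return None, max_steps * dt


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    choices = [mutual_inhibition_choice(1.5, 1.0, rng=rng)[0] for _ in range(500)]
    print("P(choose higher-valued option):", np.mean([c == 0 for c in choices]))
```

Because the winning unit suppresses its competitor, small differences in input are amplified over time, so the higher-valued option wins most but not all noisy trials.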

Overall, these results highlight our limited understanding of the substrates of reward-based decisions. In contrast to perceptual decisions, which are based on relatively well-understood neural representations of sensory stimuli, the study of the neural representation of reward-based decisions is challenged by the difficulty of defining the reward or other factors leading to the behavioral choice.

Summary: putting it all together

The neuroscience of decision-making was largely worked out using what are sometimes called perceptual judgments: identifying features of sensory stimuli based on their perceptual qualities. This basic theoretical and empirical work made it possible to study the neuroscience of decision-making and to understand how it works. Here, we have discussed two more complicated forms of decision-making.

In the memory-guided perceptual decision task, we were able to link the firing rates of neurons in two interconnected cortical areas that faithfully represent task-relevant motion stimuli, MT and DLPFC, to comparison processes likely to provide the basis for perceptual reports. Interestingly, while neurons in both areas carried similar comparison signals, only activity recorded in the DLPFC could be convincingly linked to the decisions made by the monkeys. This work, though far from complete, shows that neurons processing sensory information have access to signals stored in working memory, and that neurons linked to executive control and working memory can faithfully represent both current and remembered sensory signals. Thus, the circuit underlying decisions based on sensory comparisons is likely to include neurons in both cortical areas, each making a distinct contribution to the task at hand.

We were also able to link changes in the firing rates of dACC neurons to switches in behavioral strategy. Although neuronal activity correlated with rewards themselves, across multiple paradigms the single best explanatory factor for neuronal activity was the monkeys’ propensity to switch or adjust on the subsequent trial. Indeed, by examining the residual variation in firing rate after accounting for reward, a technique analogous to choice probability analysis, we were able to establish a correlative link between firing and switching. These results suggest a basic mechanism by which dACC responses can contribute to reward-based decisions.
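Choice probability is conventionally computed as the area under an ROC curve comparing a neuron's firing-rate distributions on trials sorted by the animal's subsequent report (Green & Swets, 1966; Britten et al., 1996). A minimal Python sketch of the analogous analysis for switching follows, under stated assumptions: firing rates are z-scored within each reward size (the reward-size analog of z-scoring within a stimulus condition), pooled, and the ROC area is computed between trials followed by a switch and trials followed by a stay. The function and argument names (`grand_choice_probability`, `firing`, `reward`, `switched`) are hypothetical, and this is not the exact procedure used in the studies described here. A value of 0.5 means firing carries no information about the upcoming switch; values above 0.5 mean that higher firing predicts switching.

```python
import numpy as np


def roc_area(a, b):
    """Area under the ROC curve separating samples a from samples b.

    Equals P(a > b) + 0.5 * P(a == b) over all pairs (the Mann-Whitney form).
    """
    a = np.asarray(a, dtype=float)[:, None]
    b = np.asarray(b, dtype=float)[None, :]
    return float(np.mean(a > b) + 0.5 * np.mean(a == b))


def grand_choice_probability(firing, reward, switched):
    """ROC area between z-scored firing on switch vs. stay trials.

    Z-scoring within each reward size removes reward-driven differences in
    mean rate and scale, so only residual variation is compared.
    """
    firing = np.asarray(firing, dtype=float)
    reward = np.asarray(reward)
    switched = np.asarray(switched, dtype=bool)
    if switched.all() or not switched.any():
        raise ValueError("need both switch and stay trials")
    z = np.zeros_like(firing)
    for size in np.unique(reward):
        m = reward == size
        sd = firing[m].std()
        # Strata with constant firing stay at z = 0 and carry no information.
        if sd > 0:
            z[m] = (firing[m] - firing[m].mean()) / sd
    return roc_area(z[switched], z[~switched])


if __name__ == "__main__":
    # Synthetic data for illustration only; these are not recorded data.
    rng = np.random.default_rng(2)
    n = 400
    reward = rng.choice([1, 2, 3], size=n)
    switched = rng.random(n) < 0.3
    firing = 8 + 3 * reward + 1.5 * switched + rng.normal(0, 2, size=n)
    print(round(grand_choice_probability(firing, reward, switched), 3))
```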

Reward and working memory are not the only two factors that can make simple perceptual judgments more complicated and more realistic. Other factors that influence decisions include emotions, long-term learning (including category learning), internal states like hunger, context effects, strategic effects, social contexts, metacognitive factors, and many others. Future studies will need to incorporate each of these factors into our understanding of reward-based choices. Fortunately, as our understanding of the mechanisms of choice matures, we will develop more tools and techniques for asking these questions.

One of the most important problems, also not addressed here, will be to integrate our understanding of decision processes across multiple domains, such as reward, memory, multiple stimulus dimensions, social parameters, and emotion. Current research tends to focus on one problem to the exclusion of others. Thus, work on reward-based choice tends to ignore situations in which perceptual discrimination is difficult, and work on perceptual choice tends to ignore situations in which reward functions are non-trivial. This strategy has undoubtedly been very productive. By focusing on a single dimension, scientists have been able to achieve great understanding of basic decision processes. However, a fuller understanding of decision-making will require understanding how multiple influences combine to affect decisions.

Several things make this problem particularly difficult. For example, factors that change motivation, including rewards, alter the strategies that subjects use to perform tasks, and may even alter perceptual processes themselves. More generally, introducing additional complexity taxes decision systems and can strongly affect how decisions get made. Another problem comes from the fact that these various factors must be integrated into a common representation. Both the locus and the process of this integration remain unknown.

Thus, one of the major goals for future studies will be to develop a comprehensive account of decision-making that incorporates all possible influences on the selection of actions. To do this, it will be essential to study decision-makers in naturalistic environments subject to natural constraints. Such constraints include competing demands on time and energy, predation risk, competition for resources, and opportunities for mutually beneficial social interactions. In addition, we cannot limit ourselves to a single technique.

While single-unit recordings have been very informative, we must rely on complementary techniques as well. Such techniques include local field potential recordings, spike-field coherence measures, neuroimaging in animals and humans, and lesion studies. One critical tool will be direct causal manipulation of neuronal activity. We have begun to make use of temporary local lesions induced by muscimol and of direct activation of neuronal activity by microstimulation. Such techniques provide the strongest evidence for a causal role of neuronal activity in decision-making. For example, we recorded neuronal activity in one poorly understood brain area, the posterior cingulate cortex (PCC), during a gambling task. We found that neuronal responses signaled the outcomes of gambles, that these outcome signals lasted until the next trial, and that they correlated with variance in choice on the next trial (Hayden et al., 2008b). With microstimulation, we were able to show that direct activation of this tissue was sufficient to change preferences on subsequent choices. These results therefore provided much stronger evidence than even choice probability can provide in linking neuronal activity to the choice process. We hope that similar techniques, applied on a larger scale, can provide a new source of confirmation for theories linking neuronal activity to decisions.

Similarly, area MT has traditionally been thought of as a region specialized for processing current motion stimuli and not involved in retaining these stimuli during the memory delay. However, microstimulation and lesion approaches provided evidence that MT participates in the maintenance and comparison of visual motion stimuli during discrimination tasks. The microstimulation study revealed that injecting current into MT during the memory delay disrupts the ability to perform direction comparisons (Bisley et al., 2001), while the lesion study showed that MT is involved in the retention of stimuli requiring motion integration (Bisley & Pasternak, 2000). These studies were the first to implicate area MT in the more cognitive aspects of the perceptual decision process, highlighting the importance of applying multiple methodologies to establish links between neural activity and perceptual decisions.

Interpreting the data that arise from such situations will require care, and a solid understanding of the fundamentals will be critical. Thus, we see the study of memory and reward as two building blocks that can contribute to a larger understanding of decision-making. Despite the difficulties, natural decision-making, with all its complexities, factors, and biases, is one of the chief functions our brains have evolved to accomplish. Achieving a comprehensive, integrated account is an important goal for future studies, and doing so will benefit from decades of research on the neural mechanisms of simpler types of decisions.

References

- Afraz SR, Kiani R, Esteky H. Microstimulation of inferotemporal cortex influences face categorization. Nature. 2006;442:692–695. doi: 10.1038/nature04982.

- Amiez C, Joseph JP, Procyk E. Reward encoding in the monkey anterior cingulate cortex. Cereb Cortex. 2006;16:1040–1055. doi: 10.1093/cercor/bhj046.

- Averbeck BB, Sohn JW, Lee D. Activity in prefrontal cortex during dynamic selection of action sequences. Nat Neurosci. 2006;9:276–282. doi: 10.1038/nn1634.

- Balleine BW, Delgado MR, Hikosaka O. The Role of the Dorsal Striatum in Reward and Decision-Making. The Journal of Neuroscience. 2007;27:8161–8165. doi: 10.1523/JNEUROSCI.1554-07.2007.

- Barbas H. Anatomic organization of basoventral and mediodorsal visual recipient prefrontal regions in the rhesus monkey. Journal of Comparative Neurology. 1988;276:313–342. doi: 10.1002/cne.902760302.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bendiksby MS, Platt ML. Neural correlates of reward and attention in macaque area LIP. Neuropsychologia. 2006;44:2411–2420. doi: 10.1016/j.neuropsychologia.2006.04.011.

- Bernacchia A, Seo H, Lee D, Wang XJ. A reservoir of time constants for memory traces in cortical neurons. Nat Neurosci. 2011;14:366–372. doi: 10.1038/nn.2752.

- Bisley JW, Pasternak T. The multiple roles of visual cortical areas MT/MST in remembering the direction of visual motion. Cerebral Cortex. 2000;10:1053–1065. doi: 10.1093/cercor/10.11.1053.

- Bisley JW, Zaksas D, Pasternak T. Microstimulation of Cortical Area MT Affects Performance on a Visual Working Memory Task. J Neurophysiol. 2001;85:187–196. doi: 10.1152/jn.2001.85.1.187.

- Botvinick MM, Braver TS, Barch DM, Carter CS, Cohen JD. Conflict monitoring and cognitive control. Psychol Rev. 2001;108:624–652. doi: 10.1037/0033-295x.108.3.624.

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13:87–100. doi: 10.1017/s095252380000715x.

- Cai X, Kim S, Lee D. Heterogeneous coding of temporally discounted values in the dorsal and ventral striatum during intertemporal choice. Neuron. 2011;69:170–182. doi: 10.1016/j.neuron.2010.11.041.

- Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. J Neurosci. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012.

- Cohen MR, Maunsell JHR. Attention improves performance primarily by reducing interneuronal correlations. Nat Neurosci. 2009;12:1594–1600. doi: 10.1038/nn.2439.

- Cohen MR, Newsome WT. What electrical microstimulation has revealed about the neural basis of cognition. Current Opinion in Neurobiology. 2004;14:169–177. doi: 10.1016/j.conb.2004.03.016.

- Cohen MR, Newsome WT. Estimates of the Contribution of Single Neurons to Perception Depend on Timescale and Noise Correlation. J Neurosci. 2009;29:6635–6648. doi: 10.1523/JNEUROSCI.5179-08.2009.

- Cook EP, Maunsell JH. Dynamics of neuronal responses in macaque MT and VIP during motion detection. Nat Neurosci. 2002;5:985–994. doi: 10.1038/nn924.

- Cromer JA, Roy JE, Buschman TJ, Miller EK. Comparison of Primate Prefrontal and Premotor Cortex Neuronal Activity during Visual Categorization. Journal of Cognitive Neuroscience. 2011:3355–3365. doi: 10.1162/jocn_a_00032.

- DeAngelis GC, Newsome WT. Perceptual “Read-Out” of Conjoined Direction and Disparity Maps in Extrastriate Area MT. PLoS Biol. 2004;2:e77. doi: 10.1371/journal.pbio.0020077.

- Dodd JV, Krug K, Cumming BG, Parker AJ. Perceptually bistable three-dimensional figures evoke high choice probabilities in cortical area mt. J Neurosci. 2001;21:4809–4821. doi: 10.1523/JNEUROSCI.21-13-04809.2001.

- Freedman DJ, Riesenhuber M, Poggio T, Miller EK. A Comparison of Primate Prefrontal and Inferior Temporal Cortices during Visual Categorization. J Neurosci. 2003;23:5235–5246. doi: 10.1523/JNEUROSCI.23-12-05235.2003.

- Glimcher P. Decisions, decisions, decisions: choosing a biological science of choice. Neuron. 2002;36:323–332. doi: 10.1016/s0896-6273(02)00962-5.

- Gottfried JA, O’Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Green DM, Swets JA. Signal Detection Theory and Psychophysics. New York: Wiley; 1966.

- Hayden B, Gallant J. Working memory and decision processes in visual area V4. Frontiers in Neuroscience. 2013:7. doi: 10.3389/fnins.2013.00018.

- Hayden BY, Heilbronner SR, Nair AC, Platt ML. Cognitive influences on risk-seeking by rhesus macaques. Judgment and Decision Making. 2008a;3:389–395.

- Hayden BY, Heilbronner SR, Pearson JM, Platt ML. Surprise signals in anterior cingulate cortex: neuronal encoding of unsigned reward prediction errors driving adjustment in behavior. J Neurosci. 2011a;31:4178–4187. doi: 10.1523/JNEUROSCI.4652-10.2011.

- Hayden BY, Heilbronner SR, Platt ML. Ambiguity aversion in rhesus macaques. Front Neurosci. 2010;4 doi: 10.3389/fnins.2010.00166.

- Hayden BY, Nair AC, McCoy AN, Platt ML. Posterior cingulate cortex mediates outcome-contingent allocation of behavior. Neuron. 2008b;60:19–25. doi: 10.1016/j.neuron.2008.09.012.

- Hayden BY, Pearson JM, Platt ML. Fictive reward signals in the anterior cingulate cortex. Science. 2009a;324:948–950. doi: 10.1126/science.1168488.

- Hayden BY, Pearson JM, Platt ML. Neuronal basis of sequential foraging decisions in a patchy environment. Nat Neurosci. 2011b;14:933–939. doi: 10.1038/nn.2856.

- Hayden BY, Smith DV, Platt ML. Electrophysiological correlates of default-mode processing in macaque posterior cingulate cortex. Proc Natl Acad Sci U S A. 2009b doi: 10.1073/pnas.0812035106.

- Hernández A, Nácher V, Luna R, Zainos A, Lemus L, Alvarez M, Vázquez Y, Camarillo L, Romo R. Decoding a Perceptual Decision Process across Cortex. Neuron. 2010;66:300–314. doi: 10.1016/j.neuron.2010.03.031.

- Hunt LT, Kolling N, Soltani A, Woolrich MW, Rushworth MF, Behrens TE. Mechanisms underlying cortical activity during value-guided choice. Nature Neuroscience. 2012 doi: 10.1038/nn.3017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar C, Pasternak T. Trial-to-trial variability of the prefrontal neurons reveals the nature of their engagement in a motion discrimination task. Proceedings of the National Academy of Sciences. 2010;107:21842–21847. doi: 10.1073/pnas.1009956107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar CR, Pasternak T. Flexibility of Sensory Representations in Prefrontal Cortex Depends on Cell Type. Neuron. 2009;64:730–743. doi: 10.1016/j.neuron.2009.11.018. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar CR, Pasternak T. Memory-Guided Sensory Comparisons in the Prefrontal Cortex: Contribution of Putative Pyramidal Cells and Interneurons. The Journal of Neuroscience. 2012;32:2747–2761. doi: 10.1523/JNEUROSCI.5135-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar CR, Pasternak T. Common rules guide comparisons of speed and direction of motion in the dorsolateral prefrontal cortex. The Journal of Neuroscience. 2013a doi: 10.1523/JNEUROSCI.4075-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hussar CR, Pasternak T. Common rules guide comparisons of speed and direction of motion in the dorsolateral prefrontal cortex. Journal of Neuroscience. 2013b;33:972–986. doi: 10.1523/JNEUROSCI.4075-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ito S, Stuphorn V, Brown JW, Schall JD. Performance Monitoring by the Anterior Cingulate Cortex During Saccade Countermanding. Science. 2003;302:120–122. doi: 10.1126/science.1087847. [DOI] [PubMed] [Google Scholar]

- Jocham G, Hunt LT, Near J, Behrens TEJ. A mechanism for value-guided choice based on the excitation-inhibition balance in prefrontal cortex. Nat Neurosci. 2012;15:960–961. doi: 10.1038/nn.3140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kennerley SW, Behrens TEJ, Wallis JD. Double dissociation of value computations in orbitofrontal and anterior cingulate neurons. Nat Neurosci. 2011;14:1581–1589. doi: 10.1038/nn.2961. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kennerley SW, Dahmubed AF, Lara AH, Wallis JD. Neurons in the Frontal Lobe Encode the Value of Multiple Decision Variables. J Cogn Neurosci. 2008 doi: 10.1162/jocn.2009.21100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kennerley SW, Wallis JD. Evaluating choices by single neurons in the frontal lobe: outcome value encoded across multiple decision variables. Eur J Neurosci. 2009;29:2061–2073. doi: 10.1111/j.1460-9568.2009.06743.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kerns JG, Cohen JD, MacDonald AW, 3rd, Cho RY, Stenger VA, Carter CS. Anterior cingulate conflict monitoring and adjustments in control. Science. 2004;303:1023–1026. doi: 10.1126/science.1089910. [DOI] [PubMed] [Google Scholar]

- Krajbich I, Armel C, Rangel A. Visual fixations and the computation and comparison of value in simple choice. Nat Neurosci. 2010;13:1292–1298. doi: 10.1038/nn.2635. [DOI] [PubMed] [Google Scholar]

- Lara AH, Kennerley SW, Wallis JD. Encoding of gustatory working memory by orbitofrontal neurons. J Neurosci. 2009;29:765–774. doi: 10.1523/JNEUROSCI.4637-08.2009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leathers ML, Olson CR. In Monkeys Making Value-Based Decisions, LIP Neurons Encode Cue Salience and Not Action Value. Science. 2012;338:132–135. doi: 10.1126/science.1226405. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu J, Newsome WT. Local Field Potential in Cortical Area MT: Stimulus Tuning and Behavioral Correlations. J Neurosci. 2006;26:7779–7790. doi: 10.1523/JNEUROSCI.5052-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lui LL, Pasternak T. Representation of Comparison Signals in Cortical Area MT During a Delayed Direction Discrimination Task. J Neurophysiol. 2011;106:1260–1273. doi: 10.1152/jn.00016.2011. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Matsumoto M, Matsumoto K, Abe H, Tanaka K. Medial prefrontal cell activity signaling prediction errors of action values. Nat Neurosci. 2007;10:647–656. doi: 10.1038/nn1890. [DOI] [PubMed] [Google Scholar]

- Maunsell JHR. Neuronal representations of cognitive state: reward or attention? Trends in Cognitive Sciences. 2004;8:261–265. doi: 10.1016/j.tics.2004.04.003. [DOI] [PubMed] [Google Scholar]

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001a;24:167–202. doi: 10.1146/annurev.neuro.24.1.167. [DOI] [PubMed] [Google Scholar]

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001b;24:167–202. doi: 10.1146/annurev.neuro.24.1.167. [DOI] [PubMed] [Google Scholar]

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. Journal of Neuroscience. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miller EK, Li L, Desimone R. Activity of neurons in anterior inferior temporal cortex during a short-term memory task. Journal of Neuroscience. 1993;13:1460–1478. doi: 10.1523/JNEUROSCI.13-04-01460.1993. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1. [DOI] [PubMed] [Google Scholar]

- Newsome WT, Britten KH, Movshon JA. Neuronal correlates of a perceptual decision. Nature. 1989;341:52–54. doi: 10.1038/341052a0. [DOI] [PubMed] [Google Scholar]

- Nichols MJ, Newsome WT. Middle temporal visual area microstimulation influences veridical judgments of motion direction. J Neurosci. 2002;22:9530–9540. doi: 10.1523/JNEUROSCI.22-21-09530.2002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Niki H, Watanabe M. Prefrontal and cingulate unit activity during timing behavior in the monkey. Brain Research. 1979;171:213–224. doi: 10.1016/0006-8993(79)90328-7. [DOI] [PubMed] [Google Scholar]

- Ninomiya T, Sawamura H, Inoue K-i, Takada M. Segregated Pathways Carrying Frontally Derived Top-Down Signals to Visual Areas MT and V4 in Macaques. The Journal of Neuroscience. 2012;32:6851–6858. doi: 10.1523/JNEUROSCI.6295-11.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Padoa-Schioppa C. Neurobiology of economic choice: a good-based model. Annu Rev Neurosci. 2011;34:333–359. doi: 10.1146/annurev-neuro-061010-113648. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Palmer C, Cheng S-Y, Seidemann E. Linking Neuronal and Behavioral Performance in a Reaction-Time Visual Detection Task. J Neurosci. 2007;27:8122–8137. doi: 10.1523/JNEUROSCI.1940-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Parker AJ, Krug K, Cumming BG. Neuronal activity and its links with the perception of multi-stable figures. Philosophical Transactions of the Royal Society B: Biological Sciences. 2002;357:1053–1062. doi: 10.1098/rstb.2002.1112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pasternak T, Greenlee M. Working Memory in Primate Sensory Systems. Nature Reviews Neuroscience. 2005;6:97–107. doi: 10.1038/nrn1603. [DOI] [PubMed] [Google Scholar]

- Paton JJ, Belova MA, Morrison SE, Salzman CD. The primate amygdala represents the positive and negative value of visual stimuli during learning. Nature. 2006;439:865–870. doi: 10.1038/nature04490. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petrides M, Pandya DN. Efferent association pathways originating in the caudal prefrontal cortex in the macaque monkey. J Comp Neurol. 2006;498:227–251. doi: 10.1002/cne.21048.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Rangel A, Camerer C, Montague PR. A framework for studying the neurobiology of value-based decision making. Nat Rev Neurosci. 2008;9:545–556. doi: 10.1038/nrn2357.

- Roesch MR, Olson CR. Neuronal activity related to reward value and motivation in primate frontal cortex. Science. 2004;304:307–310. doi: 10.1126/science.1093223.

- Romo R, Salinas E. Flutter discrimination: neural codes, perception, memory and decision making. Nat Rev Neurosci. 2003;4:203–218. doi: 10.1038/nrn1058.

- Rushworth MFS, Noonan MP, Boorman ED, Walton ME, Behrens TE. Frontal Cortex and Reward-Guided Learning and Decision-Making. Neuron. 2011;70:1054–1069. doi: 10.1016/j.neuron.2011.05.014.

- Sallet J, Quilodran R, Rothe M, Vezoli J, Joseph JP, Procyk E. Expectations, gains, and losses in the anterior cingulate cortex. Cogn Affect Behav Neurosci. 2007;7:327–336. doi: 10.3758/cabn.7.4.327.

- Salzman CD, Britten KH, Newsome WT. Cortical microstimulation influences perceptual judgements of motion direction. Nature. 1990;346:174–177. doi: 10.1038/346174a0. Erratum in: Nature. 1990;346:589.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Seo H, Lee D. Temporal Filtering of Reward Signals in the Dorsal Anterior Cingulate Cortex during a Mixed-Strategy Game. J Neurosci. 2007;27:8366–8377. doi: 10.1523/JNEUROSCI.2369-07.2007.

- Shidara M, Richmond BJ. Anterior cingulate: single neuronal signals related to degree of reward expectancy. Science. 2002;296:1709–1711. doi: 10.1126/science.1069504.

- Shiozaki HM, Tanabe S, Doi T, Fujita I. Neural Activity in Cortical Area V4 Underlies Fine Disparity Discrimination. J Neurosci. 2012;32:3830–3841. doi: 10.1523/JNEUROSCI.5083-11.2012.

- Uchida N, Kepecs A, Mainen ZF. Seeing at a glance, smelling in a whiff: rapid forms of perceptual decision making. Nat Rev Neurosci. 2006;7:485–491. doi: 10.1038/nrn1933.

- Uka T, DeAngelis GC. Contribution of area MT to stereoscopic depth perception: choice-related response modulations reflect task strategy. Neuron. 2004;42:297–310. doi: 10.1016/s0896-6273(04)00186-2.

- Wallis JD. Orbitofrontal cortex and its contribution to decision-making. Annu Rev Neurosci. 2007;30:31–56. doi: 10.1146/annurev.neuro.30.051606.094334.

- Walton ME, Bannerman DM, Alterescu K, Rushworth MF. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23:6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- Wang XJ. Neural dynamics and circuit mechanisms of decision-making. Curr Opin Neurobiol. 2012;22:1039–1046. doi: 10.1016/j.conb.2012.08.006.

- Williams ZM, Bush G, Rauch SL, Cosgrove GR, Eskandar EN. Human anterior cingulate neurons and the integration of monetary reward with motor responses. Nat Neurosci. 2004;7:1370–1375. doi: 10.1038/nn1354.

- Zaksas D, Pasternak T. Directional Signals in the Prefrontal Cortex and in Area MT during a Working Memory for Visual Motion Task. J Neurosci. 2006;26:11726–11742. doi: 10.1523/JNEUROSCI.3420-06.2006.