Abstract

Historically, the study of human identity perception has focused on faces, but the voice is also central to our expressions and experiences of identity (P. Belin, Fecteau, & Bedard, 2004). Our voices are highly flexible and dynamic; talkers speak differently depending on their health, emotional state, and the social setting, as well as extrinsic factors such as background noise. However, to date, there have been no studies of the neural correlates of identity modulation in speech production. In the current fMRI experiment, we measured the neural activity supporting controlled voice change in adult participants performing spoken impressions. We reveal that deliberate modulation of vocal identity recruits the left anterior insula and inferior frontal gyrus, supporting the planning of novel articulations. Bilateral sites in posterior superior temporal/inferior parietal cortex and a region in right mid/anterior superior temporal sulcus showed greater responses during the emulation of specific vocal identities than for impressions of generic accents. Using functional connectivity analyses, we describe roles for these three sites in their interactions with the brain regions supporting speech planning and production. Our findings mark a significant step toward understanding the neural control of vocal identity, with wider implications for the cognitive control of voluntary motor acts.

Introduction

Voices, like faces, express many aspects of our identity (P. Belin et al., 2004). From hearing only a few words of an utterance, we can estimate the speaker’s gender and age, their country or even specific town of birth, as well as make more subtle evaluations of their current mood or state of health (Karpf, 2007). Some of the indexical cues to speaker identity are clearly expressed in the voice. The pitch (or fundamental frequency, F0) of the voice of an adult male speaker tends to be lower than that of adult females or children, due to the thickening and lengthening of the vocal folds during puberty in human males. The secondary descent of the larynx in adult males also increases the spectral range in the voice, reflecting an increase in vocal tract length.

However, the human voice is also highly flexible, and we continually modulate the way we speak. The Lombard Effect (Lombard, 1911) describes the way that talkers automatically raise the volume of their voice when the auditory environment is perceived as noisy. In the social context of conversations, interlocutors start to align their behaviours, from body movements and breathing patterns to pronunciations and selection of syntactic structures (Chartrand & Bargh, 1999; Condon & Ogston, 1967; Garrod & Pickering, 2004; McFarland, 2001; J. S. Pardo, 2006). Laboratory tests of speech shadowing, where participants repeat speech immediately as they hear it, have shown evidence for unconscious imitation of linguistic and paralinguistic properties of speech (Bailly, 2003; Kappes, Baumgaertner, Peschke, & Ziegler, 2009; Shockley, Sabadini, & Fowler, 2004). Giles and colleagues (Giles, 1973; Giles, Coupland, & Coupland, 1991) put forward the Communication Accommodation Theory to account for processes of convergence and divergence in spoken language pronunciation – namely, they suggest that talkers change their speaking style to modulate the social distance between them and their interlocutors, with convergence promoting greater closeness. It has been argued by others that covert speech imitation is central to facilitating comprehension in conversation (Pickering & Garrod, 2007). Aside from these short-term modulations in speech, changes in vocal behaviour can also be observed over much longer periods – the speech of Queen Elizabeth II has shown a gradual progression toward standard southern British pronunciation (Harrington, Palethorpe, & Watson, 2000).

Although modulations of the voice often occur outside conscious awareness, they can also be deliberate. A recent study showed that student participants could change their speech to sound more masculine or feminine by making controlled alterations that simulated target-appropriate changes in vocal tract length and voice pitch (Cartei, Cowles, & Reby, 2012). Indeed, speakers can readily disguise their vocal identity (Sullivan, 1998), which makes forensic voice identification notoriously difficult (Eriksson et al., 2010; Ladefoged, 2003). Notably, when control of vocal identity is compromised, for example in Foreign Accent Syndrome (e.g. Scott, Clegg, Rudge, & Burgess, 2006), the resulting change in the patient’s vocal expression of identity can be frustrating and debilitating. Investigating the neural systems that support vocal modulation is an important step in understanding human vocal expression, yet this dynamic aspect of the voice is missing from existing models of speech production (Hickok, 2012; J. A. Tourville & Guenther, 2011).

Speaking aloud is an example of a very well-practised voluntary motor act (Jurgens, 2002). Voluntary actions need to be controlled flexibly, to adjust to changes in the environment and in the goals of the actor. The main purpose of speech is to transfer a linguistic/conceptual message. However, we control our voices to achieve intended goals on a variety of levels, from acoustic-phonetic accommodation to the auditory environment (Cooke & Lu, 2010; Lu & Cooke, 2009) to socially motivated vocal behaviours reflecting how we wish to be perceived by others (J. S. Pardo, Gibbons, Suppes, & Krauss, 2012; J. S. Pardo & Jay, 2010). Investigations of the cortical control of vocalization have identified two neurological systems supporting the voluntary initiation of innate and learned vocal behaviours: expressions such as emotional vocalizations are controlled by a medial frontal system involving the anterior cingulate cortex and supplementary motor area (SMA), while speech and song are under the control of lateral motor cortices (Jurgens, 2002). Thus, patients with speech production deficits following strokes to lateral inferior motor structures still exhibit spontaneous vocal behaviours such as laughter, crying and swearing, despite their severe deficits in voluntary speech production (Groswasser, Korn, Groswasser-Reider, & Solzi, 1988). Electrical stimulation studies have shown that vocalizations can be elicited by direct stimulation of the anterior cingulate (e.g. laughter; described by Sem-Jacobsen & Torkildsen, 1968), and lesion evidence shows that bilateral damage to the anterior cingulate prevents the expression of emotional inflection in speech (Jurgens & von Cramon, 1982). In healthy participants, a detailed investigation of the lateral motor areas involved in voluntary speech production directly compared voluntary inhalation/exhalation with syllable repetition. The study found that the functional networks associated with laryngeal motor cortex were strongly left-lateralized for syllable repetition, but bilaterally organized for controlled breathing (Simonyan, Ostuni, Ludlow, & Horwitz, 2009). However, that design did not permit further exploration of the modulation of voluntary control within either speech or breathing. This aspect was addressed in a study of speech prosody, which reported activations in left inferior frontal gyrus and dorsal premotor cortex for the voluntary modulation of both linguistic and emotional prosody; these activations overlapped with regions sensitive to the perception of the same modulations (Aziz-Zadeh, Sheng, & Gheytanchi, 2010).

Some studies have addressed the neural correlates of overt, and unintended, imitation of heard speech (Peschke, Ziegler, Kappes, & Baumgaertner, 2009; Reiterer et al., 2011). Peschke and colleagues found evidence for unconscious imitation of speech duration and F0 in an fMRI shadowing task, in which activation in right inferior parietal cortex correlated with stronger imitation of duration across participants. Reiterer and colleagues (2011) found that, during a speech imitation task, participants with poor ability to imitate non-native speech showed greater activation (and lower grey matter density) in left premotor, inferior frontal and inferior parietal cortical regions than participants who were rated as highly skilled mimics. The authors interpret this as a possible index of greater effort in the phonological loop for less skilled imitators. In general, however, functional imaging investigations of voluntary speech control have typically compared speech output with varying linguistic content, for example connected speech of differing linguistic complexity (e.g. S. C. Blank, S. K. Scott, K. Murphy, E. Warburton, & R. J. S. Wise, 2002; Dhanjal, Handunnetthi, Patel, & Wise, 2008), or pseudowords of varying length and phonetic complexity (Bohland & Guenther, 2006; Papoutsi et al., 2009).

To study the ubiquitous behaviour of voluntary vocal modulation in speech while holding the linguistic content of the utterance constant, we carried out a functional magnetic resonance imaging (fMRI) experiment on the neural correlates of controlled voice change in adult speakers of English performing spoken impressions. The participants, who were not professional voice artists or impressionists, repeatedly recited the opening lines of a familiar nursery rhyme under three speaking conditions: normal voice (N), impersonating individuals (I), and impersonating regional and foreign accents of English (A). The task resembles the kinds of vocal imitation used in everyday conversation, for example when reporting the speech of others during storytelling. We aimed to uncover the neural systems supporting changes in the way speech is articulated while the linguistic content remains unchanged. We predicted that left-dominant orofacial motor control centres, including the left inferior frontal gyrus, insula and motor cortex, as well as auditory processing sites in superior temporal cortex, would be important in effecting changes to speaking style and in monitoring their auditory consequences. Beyond this, we aimed to determine whether the goal of the vocal modulation (imitating a generic speaking style/accent versus a specific vocal identity) would affect the activation of the speech production network and/or its connectivity with brain regions processing information relevant to individual identities.

METHODS

Participants

We recruited 23 adult speakers of English (7 female; mean age 33 years 11 months) who were willing to attempt spoken impersonations. All had healthy hearing, no history of neurological incidents and no problems with speech or language (self-reported). Although some had formal training in acting and music, none had worked professionally as an impressionist or voice artist. The study was approved by the UCL Department of Psychology Ethics Committee.

Design and Procedure

Participants were asked to compile in advance lists of 40 individuals and 40 accents they could feasibly attempt to impersonate. These could include any voice/accent with which they were personally familiar, from celebrities to family members (e.g. ‘Sean Connery’, ‘Carly’s Mum’). Likewise, the selected accents could be general or specific (e.g. ‘French’ vs. ‘Blackburn’).

Functional imaging data were acquired on a Siemens Avanto 1.5-Tesla scanner (Siemens AG, Erlangen, Germany) in a single run of 163 echo-planar whole-brain volumes (TR = 8 s, TA = 3 s, TE = 50 ms, flip angle = 90°, 35 axial slices, voxel size 3 mm × 3 mm × 3 mm). A sparse-sampling routine (Edmister, Talavage, Ledden, & Weisskoff, 1999; Hall et al., 1999) was employed, with the task performed during the 5-s silent period between volumes.
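The sparse-sampling timing reduces to simple arithmetic: each 8-s TR contains a 3-s acquisition followed by 8 − 3 = 5 s of scanner silence, and 163 volumes at one per TR give a run of 163 × 8 = 1304 s. The minimal Python sketch below lays out that schedule, assuming (this is not stated in the text) that the first acquisition begins at t = 0 and that volumes follow one another at exactly one TR; the function name is illustrative only.

```python
TR = 8.0         # repetition time (s)
TA = 3.0         # acquisition time per volume (s)
N_VOLUMES = 163  # volumes in the single functional run

def sparse_schedule(tr=TR, ta=TA, n_volumes=N_VOLUMES):
    """Return (acquisition_start, silence_start, silence_end) per volume.

    Assumes the first acquisition starts at t = 0 and that each volume is
    followed by a silent gap of tr - ta seconds before the next one.
    """
    schedule = []
    for i in range(n_volumes):
        start = i * tr
        schedule.append((start, start + ta, start + tr))
    return schedule

schedule = sparse_schedule()
silent_gap = TR - TA                   # silent period available for speaking
run_length_min = N_VOLUMES * TR / 60   # duration of the functional run
print(f"silent gap: {silent_gap:.1f} s, run length: {run_length_min:.1f} min")
```

Under these assumptions the script prints a 5.0-s silent gap and a functional run of about 21.7 minutes.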

There were 40 trials of each of four conditions: normal voice (N), impersonating individuals (I), impressions of regional and foreign accents of English (A), and a rest baseline (B). The mean list lengths across participants were 36.1 (s.d. 5.6) for condition I and 35.0 (s.d. 6.9) for condition A (a non-significant difference; t(22) = .795, p = .435). When a submitted list had fewer than 40 entries, some names/accents were repeated to fill the 40 trials. Condition order was pseudorandomized, with each condition occurring once in every four trials. Participants wore electrodynamic headphones fitted with an optical microphone (MR Confon GmbH, Magdeburg, Germany). Using MATLAB (Mathworks Inc., Natick, MA) with the Psychophysics Toolbox extension (Brainard, 1997) and a video projector (Eiki International, Inc., Rancho Santa Margarita, CA), visual prompts (‘Normal Voice’, ‘Break’ or the name of a voice/accent, as well as a ‘Start speaking’ instruction) were projected onto a front screen, viewed via a mirror on the head coil. Each trial began with a condition prompt triggered by the onset of a whole-brain acquisition. At 0.2 s after the start of the silent period, the participant was prompted to start speaking, and to stop when the prompt disappeared 3.8 s later. In each speech production trial, participants recited the opening line of a familiar nursery rhyme, such as ‘Jack and Jill went up the hill’, and were reminded not to include person-specific catchphrases or catchwords. This held the linguistic content of the speech constant across conditions. Spoken responses were recorded using Audacity (http://audacity.sourceforge.net). After the functional run, a high-resolution T1-weighted anatomical image was acquired (HIRes MP-RAGE, 160 sagittal slices, voxel size = 1 mm³). The total time in the scanner was around 35 minutes.
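The trial structure can be sketched in Python as a hypothetical reconstruction; the original MATLAB/Psychophysics Toolbox script is not reproduced here. The sketch assumes that short lists were padded by cycling through the submitted items (the text says only that some items were repeated) and that "once in every four trials" means an independent shuffle of N, I, A and B within each consecutive block of four. The function names and the two-item example list are illustrative.

```python
import random

CONDITIONS = ("N", "I", "A", "B")  # normal, impersonation, accent, rest
N_PER_CONDITION = 40
PROMPT_DELAY = 0.2    # s after the silent period starts
SPEAK_DURATION = 3.8  # s that the 'Start speaking' prompt stays on screen

def pad_list(items, n=N_PER_CONDITION):
    """Repeat a short list cyclically to n entries (assumed padding rule)."""
    if not items:
        raise ValueError("need at least one item")
    return [items[i % len(items)] for i in range(n)]

def block_randomized_order(n_blocks=N_PER_CONDITION, seed=None):
    """Shuffle N, I, A, B independently within each consecutive block of four."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_blocks):
        block = list(CONDITIONS)
        rng.shuffle(block)
        order.extend(block)
    return order

def speaking_window(silence_start):
    """Times (s) at which the speaking prompt appears and disappears."""
    on = silence_start + PROMPT_DELAY
    return on, on + SPEAK_DURATION

order = block_randomized_order(seed=1)
voices = pad_list(["Sean Connery", "Carly's Mum"])  # hypothetical short list
print(order[:8], speaking_window(3.0))
```

With a 3-s acquisition, the speaking window runs from 3.2 s to 7.0 s after trial onset, so speech ends about 1 s before the next volume is acquired.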

Acoustic Analysis of Spoken Impressions

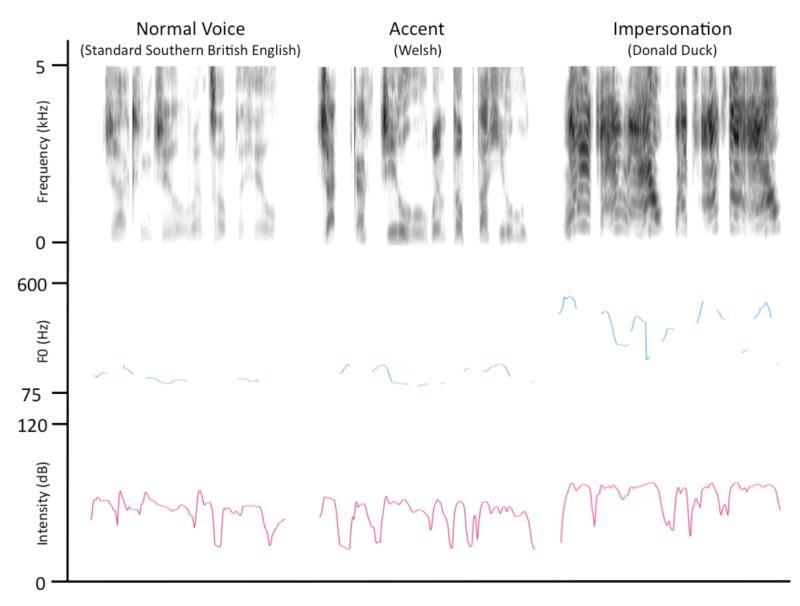

Due to technical problems, audio recordings were available for only 13 participants. For each of the following acoustic parameters, measurements from the 40 tokens in each of the three speech conditions (Normal Voice, Impersonations and Accents) were entered into a repeated-measures ANOVA with Condition as a within-subjects factor: (i) duration (s), (ii) intensity (dB), (iii) mean F0 (Hz), (iv) minimum F0 (Hz), (v) maximum F0 (Hz), (vi) standard deviation of F0 (Hz), (vii) spectral centre of gravity (Hz) and (viii) spectral standard deviation (Hz). Three Bonferroni-corrected post-hoc paired t-tests compared each pair of conditions. Table 1 shows the results of these analyses, and Figure 1 illustrates the acoustic properties of example trials from each speech condition (taken from the same participant).
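For a single acoustic parameter, the one-way repeated-measures ANOVA and the Bonferroni adjustment described above can be sketched in plain Python. The sketch assumes one value per participant per condition (for example each participant's mean over their tokens, which the text does not specify), computes the sphericity-assumed F statistic and paired t statistics, and applies Bonferroni correction by multiplying each p value by the number of tests and capping at 1, which is consistent with the 1.00 entries in Table 1. Converting F and t to p values needs their distribution functions (for example from a statistics package) and is omitted.

```python
import math
from statistics import mean

def rm_anova_f(data):
    """One-way repeated-measures ANOVA on data[subject][condition].

    Returns (F, df_condition, df_error), assuming sphericity (no
    Greenhouse-Geisser or similar correction is applied).
    """
    n = len(data)     # participants
    k = len(data[0])  # conditions
    grand = mean(v for row in data for v in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_error = ss_total - ss_cond - ss_subj  # condition-by-subject residual
    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    return (ss_cond / df_cond) / (ss_error / df_error), df_cond, df_error

def paired_t(x, y):
    """Paired-samples t statistic (x minus y) and its degrees of freedom."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    md = mean(d)
    sd = math.sqrt(sum((v - md) ** 2 for v in d) / (n - 1))
    return md / (sd / math.sqrt(n)), n - 1

def bonferroni(p, n_tests=3):
    """Bonferroni-adjusted p value, capped at 1."""
    return min(1.0, p * n_tests)
```

For the 13 recorded participants and three conditions, `rm_anova_f` would report F with (2, 24) degrees of freedom before any sphericity correction.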

Table 1. Acoustic Correlates of Voice Change during spoken impressions.

| Acoustic Parameter | Normal (N) mean | Voices (V) mean | Accents (A) mean | ANOVA F | ANOVA sig. | N vs V t | N vs V sig. | N vs A t | N vs A sig. | V vs A t | V vs A sig. |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Duration (s) | 2.75 | 3.10 | 2.98 | 9.96 | **.006** | 3.25 | **.021** | 3.18 | **.024** | 2.51 | .081 |
| Intensity (dB) | 47.4 | 51.3 | 51.3 | 49.25 | **< .001** | 10.15 | **< .001** | 7.62 | **< .001** | 0.88 | 1.00 |
| Mean F0 (Hz) | 155.9 | 207.2 | 186.3 | 24.11 | **< .001** | 5.19 | **.001** | 4.87 | **.001** | 3.89 | **.006** |
| Min F0 (Hz) | 94.4 | 104.9 | 102.1 | 3.71 | **.039** | 2.20 | .144 | 2.18 | .149 | 0.77 | 1.00 |
| Max F0 (Hz) | 625.0 | 667.6 | 628.5 | 1.28 | .295 | 1.31 | .646 | 0.10 | 1.00 | 2.15 | .158 |
| Std Dev F0 (Hz) | 117.3 | 129.9 | 114.7 | 1.62 | .227 | 1.26 | .694 | 0.24 | 1.00 | 3.30 | **.019** |
| Spec CoG (Hz) | 2100 | 2140 | 2061 | 0.38 | .617 | 0.37 | 1.00 | 0.39 | 1.00 | 1.49 | .485 |
| Spec Std Dev (Hz) | 1647 | 1579 | 1553 | 2.24 | .128 | 1.17 | .789 | 2.05 | .188 | 0.89 | 1.00 |

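F0 = fundamental frequency, Std Dev = standard deviation, Spec = spectral, CoG = centre of gravity; N = Normal voice, V = Voices (impersonations), A = Accents. s = seconds, dB = decibels, Hz = hertz. Post-hoc t-test p values are Bonferroni corrected for three comparisons. Significant effects are shown in bold.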

Figure 1.

Functional magnetic resonance imaging analysis

Data were preprocessed and analyzed using SPM5 (Wellcome Trust Centre for Neuroimaging, London, UK). Functional images were realigned and unwarped, co-registered with the anatomical image, normalized using parameters obtained from unified segmentation of the anatomical image, and smoothed using a Gaussian kernel of 8 mm FWHM. At the first level, condition onsets were modelled as instantaneous events coincident with the prompt to speak and convolved with a canonical hemodynamic response function. Contrast images were calculated for each of the four conditions (N, I, A and B), for each speech condition compared with rest (N > B, I > B, A > B), for each impression condition compared with normal speech (I > N, A > N), and for the comparison of the two impression conditions (I > A). These images were entered into second-level one-sample t tests for the group analyses.
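Modelling onsets as instantaneous events convolved with a canonical HRF can be sketched in plain Python. The double-gamma shape below uses the familiar SPM-style defaults (gamma shapes 6 and 16 on a 1-s scale, undershoot ratio 1/6), but it omits SPM's normalization and finer "microtime" sampling, so it matches SPM regressors only up to scale and sampling details. Timing values come from the Methods (TR 8 s, TA 3 s, speaking cue 0.2 s into the silent gap); sampling each volume at the middle of its acquisition and the eight-trial order are illustrative assumptions.

```python
import math

TR, TA = 8.0, 3.0
PROMPT_DELAY = TA + 0.2  # speaking cue: 0.2 s into the silence after each acquisition

def canonical_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Unnormalized double-gamma HRF at t seconds after an instantaneous event.

    Difference of two unit-scale gamma densities: a response peaking near
    5 s and an undershoot peaking near 15 s, scaled by `ratio`.
    """
    if t <= 0:
        return 0.0
    def gamma_pdf(x, shape):
        return x ** (shape - 1) * math.exp(-x) / math.gamma(shape)
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, under_shape)

def event_regressor(onsets, sample_times):
    """Sum of HRFs for instantaneous events, evaluated at each sample time."""
    return [sum(canonical_hrf(s - o) for o in onsets) for s in sample_times]

# Toy design: eight trials in a hypothetical order; one volume per trial plus one.
order = ["N", "I", "A", "B", "I", "B", "N", "A"]
samples = [v * TR + TA / 2 for v in range(len(order) + 1)]  # acquisition midpoints
regressors = {
    cond: event_regressor(
        [i * TR + PROMPT_DELAY for i, c in enumerate(order) if c == cond], samples)
    for cond in ("N", "I", "A", "B")
}
# Contrast weights over the columns [N, I, A, B].
contrasts = {"I > N": [-1, 1, 0, 0], "A > N": [-1, 0, 1, 0], "I > A": [0, 1, -1, 0]}
```

Under these timing assumptions, each volume samples the preceding trial's response about 6.3 s after the speaking cue. That delay is close to the peak of the canonical response.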

Results of the conjunction analyses are reported at a voxel height threshold of p < .05, corrected for familywise error. All other results are reported at an uncorrected voxel height threshold of p < .001 with a cluster extent threshold of 20 voxels. A Monte Carlo simulation (10 000 iterations, implemented in MATLAB; Slotnick, Moo, Segal, & Hart, 2003) indicated that this extent threshold gives a corrected whole-brain alpha of p < .001.
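A Monte Carlo cluster-extent correction of the kind cited above works as follows:

1. Simulate many volumes of smooth Gaussian noise.
2. Threshold each volume at the voxelwise level.
3. Record the largest suprathreshold cluster in each volume.
4. Choose the smallest extent that chance clusters reach in no more than a fraction alpha of simulations.

The Python sketch below is a generic illustration of this procedure, not Slotnick et al.'s MATLAB code, and it makes several assumptions:

- The grid is a small box rather than a brain mask.
- Smoothness is given directly as a FWHM in voxels. 8 mm / 3 mm serves only as an example, and the 3 mm voxel size is itself assumed.
- The threshold is a z value (3.09, one-tailed p ≈ .001) rather than a t value.
- Clusters use 6-connectivity, whereas other tools may use 18- or 26-connectivity.

```python
import math
import random
from collections import deque

def gaussian_kernel(fwhm_vox):
    """1-D Gaussian weights for a FWHM given in voxels, truncated at 3 sigma."""
    sigma = fwhm_vox / math.sqrt(8.0 * math.log(2.0))
    radius = max(1, math.ceil(3.0 * sigma))
    return [math.exp(-0.5 * (a / sigma) ** 2) for a in range(-radius, radius + 1)]

def smooth_axis(vol, shape, kernel, axis):
    """Convolve a flattened 3-D volume along one axis, keeping unit variance.

    Each output is divided by the root sum of squared weights actually used,
    so independent N(0, 1) input stays N(0, 1) even where the kernel is
    truncated at the edges.
    """
    nx, ny, nz = shape
    radius = len(kernel) // 2
    out = [0.0] * len(vol)
    for x in range(nx):
        for y in range(ny):
            for z in range(nz):
                acc = wsq = 0.0
                for off, w in zip(range(-radius, radius + 1), kernel):
                    c = [x, y, z]
                    c[axis] += off
                    if 0 <= c[axis] < shape[axis]:
                        acc += w * vol[(c[0] * ny + c[1]) * nz + c[2]]
                        wsq += w * w
                out[(x * ny + y) * nz + z] = acc / math.sqrt(wsq)
    return out

def max_cluster_size(mask, shape):
    """Largest 6-connected cluster of True voxels in a flattened mask."""
    nx, ny, nz = shape
    seen = [False] * len(mask)
    best = 0
    for start in range(len(mask)):
        if not mask[start] or seen[start]:
            continue
        seen[start] = True
        queue, size = deque([start]), 0
        while queue:
            idx = queue.popleft()
            size += 1
            x, rem = divmod(idx, ny * nz)
            y, z = divmod(rem, nz)
            for dx, dy, dz in ((1, 0, 0), (-1, 0, 0), (0, 1, 0),
                               (0, -1, 0), (0, 0, 1), (0, 0, -1)):
                a, b, c = x + dx, y + dy, z + dz
                if 0 <= a < nx and 0 <= b < ny and 0 <= c < nz:
                    j = (a * ny + b) * nz + c
                    if mask[j] and not seen[j]:
                        seen[j] = True
                        queue.append(j)
        best = max(best, size)
    return best

def cluster_extent_threshold(shape, fwhm_vox, z_thresh=3.09, alpha=0.001,
                             n_iter=1000, seed=0):
    """Smallest extent k whose chance rate across simulations is <= alpha."""
    rng = random.Random(seed)
    kernel = gaussian_kernel(fwhm_vox)
    n_vox = shape[0] * shape[1] * shape[2]
    maxima = []
    for _ in range(n_iter):
        vol = [rng.gauss(0.0, 1.0) for _ in range(n_vox)]
        for axis in range(3):
            vol = smooth_axis(vol, shape, kernel, axis)
        maxima.append(max_cluster_size([v > z_thresh for v in vol], shape))
    k = 1
    while sum(m >= k for m in maxima) > alpha * n_iter:
        k += 1
    return k
```

Running 10 000 iterations on a brain-sized grid in pure Python would be slow. The sketch is meant to show the logic rather than to reproduce the reported 20-voxel threshold.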

Conjunction analyses of second-level contrast images were performed using the null conjunction approach (Nichols, Brett, Andersson, Wager, & Poline, 2005). Using the MarsBaR toolbox (Brett, Anton, Valabregue, & Poline, 2002), spherical regions of interest (ROIs; 4mm radius) were built around the peak voxels – parameter estimates were extracted from these ROIs to construct plots of activation.
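Two steps in this paragraph can be made concrete. Under the conjunction null of Nichols et al. (2005), a voxel is reported only if every contributing contrast exceeds the threshold on its own. That is equivalent to thresholding the minimum statistic across contrasts. A 4 mm spherical ROI is the set of voxels whose centres lie within 4 mm of the peak. The sketch assumes 3 mm isotropic voxels in the normalized images, since the voxel size after normalization is not stated. It uses plain Python lists and dictionaries and does not reproduce MarsBaR; the function names are illustrative.

```python
def conjunction_null(stat_maps, threshold):
    """Minimum-statistic conjunction over equal-length lists of t values.

    Returns the per-voxel minimum statistic and a mask that is True only
    where every contrast exceeds `threshold`.
    """
    min_stat = [min(values) for values in zip(*stat_maps)]
    return min_stat, [m > threshold for m in min_stat]

def sphere_offsets(radius_mm=4.0, voxel_mm=3.0):
    """Integer voxel offsets whose centres lie within radius_mm of the peak."""
    r = int(radius_mm // voxel_mm)
    return [(i, j, k)
            for i in range(-r, r + 1)
            for j in range(-r, r + 1)
            for k in range(-r, r + 1)
            if (i * i + j * j + k * k) * voxel_mm ** 2 <= radius_mm ** 2]

def roi_mean(volume, peak, offsets):
    """Mean of a {(x, y, z): value} volume over the ROI voxels that exist."""
    vals = [volume[(peak[0] + i, peak[1] + j, peak[2] + k)]
            for i, j, k in offsets
            if (peak[0] + i, peak[1] + j, peak[2] + k) in volume]
    return sum(vals) / len(vals)
```

With 3 mm voxels, a 4 mm sphere contains only the peak and its six face neighbours (7 voxels). With 2 mm voxels it would contain 33.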

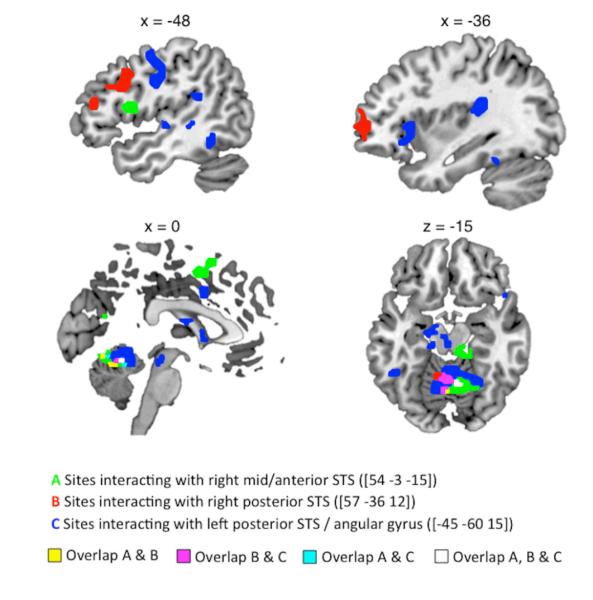

A psychophysiological interaction (PPI) analysis was used to investigate how functional connectivity differed between conditions I and A. In each participant, the time-course of activation was extracted from spherical volumes of interest (VOIs; 4mm radius) built around the superior temporal peaks in the group contrast I > A (Right mid-anterior STS: [54 −3 −15], Right posterior STS: [57 −36 12], Left posterior STS: [−45 −60 15]). A PPI regressor described the interaction between each VOI and a psychological regressor for the contrast of interest (I > A); this modelled a change in the correlation between activity in these STS seed regions and the rest of the brain across the two conditions. The PPIs from each seed region were evaluated in a first-level model that included the individual physiological and psychological time-courses as covariates of no interest. A random-effects, one-sample t-test assessed the significance of each PPI in the group (voxelwise threshold: p < .001, corrected cluster threshold: p < .001).
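The interaction term at the heart of the PPI model can be illustrated with a simplified sketch. It assumes one seed value per volume and codes the psychological variable as +1 for Impersonation trials, −1 for Accent trials and 0 otherwise. The PPI regressor is the product of this code with the mean-centred seed time course. This is a deliberate simplification: SPM's PPI routine deconvolves the seed signal to an estimate of underlying neural activity before forming the product and reconvolving it with the HRF, and the sketch omits that step. The small least-squares solver stands in for the first-level model, with the psychological and physiological terms as covariates of no interest. All names are illustrative.

```python
from statistics import mean

def ppi_regressors(seed, conditions, plus="I", minus="A"):
    """Build simplified (BOLD-level) PPI columns.

    seed: seed-region value per volume; conditions: condition label per volume.
    Returns (psy, phys, ppi): the +1/-1/0 psychological code, the mean-centred
    seed time course, and their element-wise product.
    """
    psy = [1.0 if c == plus else -1.0 if c == minus else 0.0 for c in conditions]
    centre = mean(seed)
    phys = [s - centre for s in seed]
    ppi = [p * y for p, y in zip(psy, phys)]
    return psy, phys, ppi

def ols(columns, target):
    """Least-squares betas for target ~ columns, via the normal equations."""
    k = len(columns)
    aug = [[sum(a * b for a, b in zip(columns[i], columns[j])) for j in range(k)]
           + [sum(a * b for a, b in zip(columns[i], target))]
           for i in range(k)]
    for col in range(k):  # Gauss-Jordan elimination with partial pivoting
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]
```

The PPI effect for a target voxel is the beta on the `ppi` column, for example `ols([psy, phys, ppi, [1.0] * len(seed)], target)[2]`. Per-participant betas of this kind would then enter the group one-sample t-test.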

Post-hoc pairwise t-tests using SPSS (version 18.0; IBM, Armonk, NY) compared condition-specific parameter estimates (N vs. B, and I vs. A) within the peak voxels in the voice change conjunction ((I > N) ⋂ (A > N)). To maintain independence and avoid statistical ‘double-dipping’, an iterative, hold-one-out approach was used in which the peak voxels for each subject were defined from a group statistical map of the conjunction ((I > N) ⋂ (A > N)) using the other 22 participants. These subject-specific peak locations were used to extract condition-specific parameter estimates from 4mm spherical regions of interest built around the peak voxel (ROIs; using MarsBaR). Paired t-tests were run using a corrected alpha level of .025 (to correct for 2 tests in each ROI).
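The hold-one-out peak definition can be sketched as follows. For each held-out participant, the group conjunction is recomputed from the other participants' contrast values as the minimum of two one-sample t statistics. The peak voxel of that map is located, and the held-out participant's data are read out at it, so the ROI is never defined from the data being tested. The sketch makes three assumptions: contrast values are stored as plain per-voxel lists, the 4 mm sphere is reduced to the single peak voxel, and the function names are hypothetical.

```python
import math
from statistics import mean, stdev

ALPHA = 0.05 / 2  # corrected alpha: two paired tests per ROI

def one_sample_t(values):
    """One-sample t statistic against zero."""
    return mean(values) / (stdev(values) / math.sqrt(len(values)))

def paired_t(x, y):
    """Paired-samples t statistic (x minus y)."""
    return one_sample_t([a - b for a, b in zip(x, y)])

def loo_peaks(con_a, con_b):
    """Leave-one-out conjunction peaks.

    con_a, con_b: con[subject][voxel] values for the two conjoined contrasts
    (e.g. I > N and A > N). For each subject, the peak is the voxel that
    maximizes min(t_a, t_b) computed from all *other* subjects.
    """
    n_sub, n_vox = len(con_a), len(con_a[0])
    peaks = []
    for s in range(n_sub):
        others = [o for o in range(n_sub) if o != s]
        stats = [min(one_sample_t([con_a[o][v] for o in others]),
                     one_sample_t([con_b[o][v] for o in others]))
                 for v in range(n_vox)]
        peaks.append(max(range(n_vox), key=stats.__getitem__))
    return peaks

def extract_at_peaks(betas, peaks):
    """Read each subject's estimate at that subject's own held-out peak."""
    return [betas[s][peaks[s]] for s in range(len(peaks))]
```

With real data, `extract_at_peaks` would be applied separately to the N, B, I and A parameter estimates. The N vs. B and I vs. A comparisons would then be judged against `ALPHA`.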

The anatomical locations of peak and sub-peak voxels (at least 8 mm apart) were labelled using the SPM Anatomy Toolbox (version 1.8) (Eickhoff et al., 2005).
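Keeping a peak and sub-peaks at least 8 mm apart can be sketched as a greedy rule. Candidate maxima are sorted by statistic, and each is kept only if it lies at least 8 mm (Euclidean distance in MNI millimetres) from every peak already kept. This is an illustrative assumption about the selection rule, not the SPM or Anatomy Toolbox code, although SPM's results tables apply a similar 8 mm separation by default. The near-duplicate coordinate in the usage example is made up.

```python
import math

def select_peaks(candidates, min_dist_mm=8.0, max_peaks=None):
    """Greedy selection of maxima that are at least min_dist_mm apart.

    candidates: list of ((x, y, z), statistic) pairs in mm coordinates.
    Returns the kept candidates in descending order of statistic.
    """
    kept = []
    for coord, stat in sorted(candidates, key=lambda c: c[1], reverse=True):
        if all(math.dist(coord, k[0]) >= min_dist_mm for k in kept):
            kept.append((coord, stat))
            if max_peaks is not None and len(kept) == max_peaks:
                break
    return kept

# Two Table 3 peaks plus a hypothetical near-duplicate 4.2 mm from the first.
cands = [((-33, 30, -3), 8.39), ((-30, 33, -3), 8.0), ((-54, 15, -9), 7.48)]
print(select_peaks(cands))
```

The hypothetical point is dropped because it lies within 8 mm of the stronger LIFG peak, while the left temporal pole peak (about 26.5 mm away) is kept.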

RESULTS AND DISCUSSION

Brain regions supporting voice change

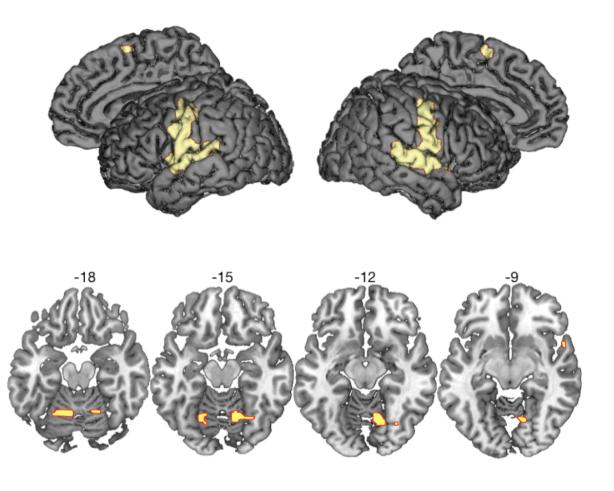

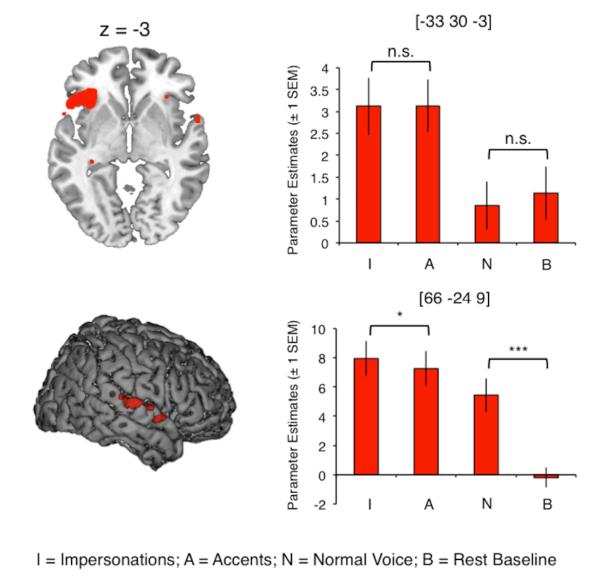

Areas of activation common to the three speech output conditions compared with a rest baseline (B) ((N > B) ⋂ (I > B) ⋂ (A > B)) comprised a speech production network of bilateral motor and somatosensory cortex, supplementary motor area, superior temporal gyrus (STG) and cerebellum (Figure 2 and Table 2) (S. C. Blank, S. K. Scott, K. Murphy, E. Warburton, & R. J. Wise, 2002; Bohland & Guenther, 2006; A. Riecker et al., 2005; Simmonds, Wise, Dhanjal, & Leech, 2011; J. A. Tourville & Guenther, 2011; J. A. Tourville, Reilly, & Guenther, 2008; Wise, Greene, Buchel, & Scott, 1999). Activation common to the two voice change conditions (I and A) compared with normal speech ((I > N) ⋂ (A > N)) was found in left anterior insula, extending laterally onto the inferior frontal gyrus (orbital and opercular parts), and in right STG (Figure 3 and Table 3). Post-hoc comparisons showed that responses in the left frontal sites did not differ between impersonations and accents (two-tailed paired t-test; t(22) = −.068, corrected p = 1.00), nor between normal speech and rest (t(22) = .278, corrected p = 1.00). The right STG, in contrast, was significantly more active during impersonations than during accents (two-tailed paired t-test; t(22) = 2.69, Bonferroni-corrected p = .027) and during normal speech than during rest (t(22) = 6.64, corrected p < .0001). Thus, we demonstrate a partial dissociation between inferior frontal/insular and sensory cortices. Both respond more during impressions than during normal speech, but the STG shows an additional sensitivity to the nature of the voice change task, namely whether the voice target is associated with a unique identity.

Figure 2.

Table 2. Activation common to the three speech output conditions.

| Contrast | No. of Voxels | Region | x | y | z | T | Z |
|---|---|---|---|---|---|---|---|
| All Speech > Rest ((N > B) ⋂ (I > B) ⋂ (A > B)) | 963 | Left postcentral gyrus / STG / precentral gyrus | −48 | −15 | 39 | 14.15 | 7.07 |
| | 852 | Right superior temporal gyrus / precentral gyrus / postcentral gyrus | 63 | −15 | 3 | 13.60 | 6.96 |
| | 21 | Left cerebellum (lobule VI) | −24 | −60 | −18 | 7.88 | 5.38 |
| | 20 | Left SMA | −3 | −3 | 63 | 7.77 | 5.34 |
| | 34 | Right cerebellum (lobule VI), right fusiform gyrus | 12 | −60 | −15 | 7.44 | 5.21 |
| | 35 | Right/left calcarine gyrus | 3 | −93 | 6 | 7.41 | 5.19 |
| | 5 | Left calcarine gyrus | −15 | −93 | −3 | 6.98 | 5.02 |
| | 7 | Right lingual gyrus | 15 | −84 | −3 | 6.73 | 4.91 |
| | 1 | Right Area V4 | 30 | −69 | −12 | 6.58 | 4.84 |
| | 3 | Left calcarine gyrus | −9 | −81 | 0 | 6.17 | 4.65 |
| | 2 | Left thalamus | −12 | −24 | −3 | 6.15 | 4.64 |
| | 2 | Right calcarine gyrus | 15 | −69 | 12 | 6.13 | 4.63 |

Conjunction null analysis of all speech conditions (Normal, Impersonations and Accents) compared with rest. Voxel height threshold p < .05 (familywise error corrected). Coordinates indicate the position of the peak voxel from each significant cluster, in Montreal Neurological Institute (MNI) stereotactic space. STG = superior temporal gyrus, SMA = supplementary motor area.

Figure 3.

Table 3. Neural regions recruited during voice change (null conjunction of Impersonations > Normal and Accents > Normal).

| Contrast | No. of Voxels | Region | x | y | z | T | Z |
|---|---|---|---|---|---|---|---|
| Impressions > Normal Speech ((I > N) ⋂ (A > N)) | 180 | LIFG (pars orb., pars operc.) / insula | −33 | 30 | −3 | 8.39 | 5.56 |
| | 1 | Left temporal pole | −54 | 15 | −9 | 7.48 | 5.22 |
| | 19 | Right thalamus | 3 | −6 | 9 | 7.44 | 5.21 |
| | 17 | Right superior temporal gyrus | 66 | −24 | 9 | 7.30 | 5.15 |
| | 16 | Right hippocampus | 33 | −45 | 3 | 7.17 | 5.10 |
| | 4 | Left thalamus | −12 | −6 | 12 | 7.11 | 5.07 |
| | 9 | Left thalamus | −27 | −21 | −9 | 6.80 | 4.94 |
| | 3 | Left hippocampus | −15 | −21 | −15 | 6.65 | 4.87 |
| | 6 | Right insula | 33 | 27 | 0 | 6.59 | 4.85 |
| | 1 | Right superior temporal gyrus | 63 | −3 | 3 | 6.45 | 4.78 |
| | 1 | Left hippocampus | −24 | −39 | 9 | 6.44 | 4.78 |
| | 2 | Right superior temporal gyrus | 66 | −9 | 6 | 6.44 | 4.78 |
| | 4 | Right temporal pole | 60 | 6 | −6 | 6.42 | 4.77 |
| | 1 | Left hippocampus | −15 | −42 | 12 | 6.30 | 4.71 |
| | 4 | Right caudate nucleus | 21 | 12 | 18 | 6.20 | 4.66 |
| | 2 | Left cerebellum (lobule VI) | −24 | −60 | −18 | 6.10 | 4.62 |

Voxel height threshold p < .05 (familywise error corrected). Coordinates indicate the position of the peak voxel from each significant cluster, in Montreal Neurological Institute (MNI) stereotactic space. LIFG = left inferior frontal gyrus, pars orb. = pars orbitalis, pars operc. = pars opercularis.

Acoustic analyses of the impressions from a subset of participants (n = 13) indicated that the conditions involving voice change produced speech that was significantly longer, more intense and higher in fundamental frequency (the main acoustic correlate of pitch) than normal speech. This may relate to the right-lateralized temporal response during voice change, as previous work has shown that the right STG is engaged during judgments of sound intensity (P. Belin et al., 1998). The right temporal lobe has also been associated with processing non-linguistic information in the voice, such as speaker identity (P. Belin, R. J. Zatorre, & P. Ahad, 2002; P. Belin, Zatorre, Lafaille, Ahad, & Pike, 2000; von Kriegstein & Giraud, 2004; von Kriegstein, Eger, Kleinschmidt, & Giraud, 2003; von Kriegstein, Kleinschmidt, Sterzer, & Giraud, 2005) and emotion (Meyer, Zysset, von Cramon, & Alter, 2005; Schirmer & Kotz, 2006; Wildgruber et al., 2005), although these results tend to implicate higher-order regions such as the superior temporal sulcus (STS).

The neuropsychology literature has described the importance of the left IFG and anterior insula in voluntary speech production (Broca, 1861; Dronkers, 1996; Kurth, Zilles, Fox, Laird, & Eickhoff, 2010). Studies of speech production have shown that the left posterior IFG and insula are sensitive to the articulatory complexity of spoken syllables (Bohland & Guenther, 2006; A. Riecker, Brendel, Ziegler, Erb, & Ackermann, 2008), but not to the frequency with which those syllables occur in everyday language (A. Riecker et al., 2008). This suggests involvement in the phonetic aspects of speech output rather than in higher-order linguistic representations. Ackermann and Riecker (2010) suggest that the insular cortex may be associated with more generalized control of breathing, which could be voluntarily modulated to maintain the sustained and finely controlled hyperventilation required to produce connected speech. By showing that the left IFG and insula are engaged when we change the way we speak, and not only what we say, our results indicate that these regions do not solely code abstract linguistic elements of the speech act. In agreement with Ackermann and Riecker (2010), we suggest that they may also play a role in more general aspects of voluntary vocal control during speech, such as breathing and the modulation of pitch. In line with this, our acoustic analysis shows that both accents and impressions were produced with longer durations, higher pitch and greater intensity, all of which depend strongly on the way that breathing is controlled (A. MacLarnon & Hewitt, 2004; A. M. MacLarnon & Hewitt, 1999).

Effects of target specificity: Impersonations versus Accents

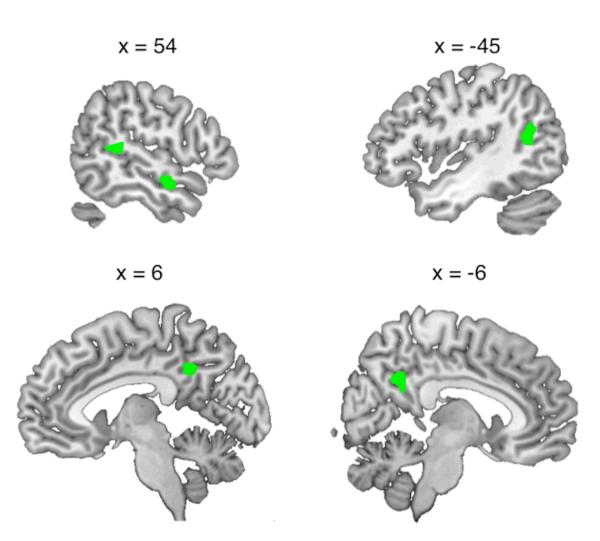

A direct comparison of the two voice change conditions (I > A) showed increased activation for specific impersonations in right mid/anterior STS, bilateral posterior STS extending to the angular gyrus (AG) on the left, and posterior midline sites in cingulate cortex and precuneus (Figure 4 and Table 4; the reverse contrast, A > I, yielded no significant activations). Whole-brain analyses of functional connectivity revealed areas that correlated more positively with the three STS sites during impersonations than during accents (Figure 5 and Table 5). Strikingly, all three temporal seed regions showed significant interactions with areas typically active during speech perception and production, with notable overlap in sensorimotor lobules V and VI of the cerebellum and in left STG. However, the three connectivity profiles also showed signs of differentiation. The left posterior STS seed region interacted with a speech production network including bilateral pre/postcentral gyrus, bilateral STG and cerebellum (S. C. Blank et al., 2002; Bohland & Guenther, 2006; C. J. Price, 2010), as well as left-lateralized areas of anterior insula and posterior medial planum temporale. In contrast, the right anterior STS seed interacted with the opercular part of the left IFG and with the pre-SMA, and the right posterior STS showed a positive interaction with the left inferior frontal gyrus/sulcus, extending to the left frontal pole. Figure 5 illustrates the more anterior distribution of the targets of the right-lateralized seed regions, and the cerebellar region where the targets of all seed regions overlap.

Figure 4.

Table 4. Brain regions showing greater activation during specific impersonations.

| Contrast | No. of Voxels | Region | x | y | z | T | Z |
|---|---|---|---|---|---|---|---|
| Impersonation > Accents | 29 | Right superior temporal sulcus | 54 | −3 | −15 | 5.79 | 4.46 |
| | 24 | Left superior temporal sulcus | −45 | −60 | 15 | 4.62 | 3.82 |
| | 66 | Left middle cingulate cortex | −6 | −48 | 36 | 4.48 | 3.73 |
| | 32 | Right superior temporal gyrus | 57 | −36 | 12 | 4.35 | 3.66 |

Voxel height threshold p < .001 (uncorrected), cluster threshold p < .001 (corrected). Coordinates indicate the position of the peak voxel from each significant cluster, in Montreal Neurological Institute (MNI) stereotactic space.

Figure 5.

Table 5. Brain regions showing an enhanced positive correlation with temporoparietal cortex during impersonations, compared with accents.

| Seed Region | No. of Voxels | Target Region | x | y | z | T | Z |
|---|---|---|---|---|---|---|---|
| Right anterior STS | 66 | Left STG | −60 | −12 | 6 | 6.16 | 4.65 |
| | 98 | Right / left cerebellum | 9 | −63 | −12 | 5.86 | 4.50 |
| | 77 | Right cerebellum | 15 | −36 | −18 | 5.84 | 4.49 |
| | 21 | Left IFG (pars operc.) | −48 | 9 | 12 | 5.23 | 4.17 |
| | 65 | Right calcarine gyrus | 15 | −72 | 18 | 5.03 | 4.06 |
| | 48 | Left/right pre-SMA | −3 | 3 | 51 | 4.84 | 3.95 |
| | 37 | Right STG | 63 | −33 | 9 | 4.73 | 3.88 |
| Left posterior STS | 346 | Left Rolandic operculum / left STG/STS | −33 | −30 | 18 | 6.23 | 4.68 |
| | 287 | Left / right cerebellum | 0 | −48 | −15 | 6.15 | 4.64 |
| | 306 | Right STG/IFG | 66 | −6 | −3 | 5.88 | 4.51 |
| | 163 | Right/left caudate nucleus and right thalamus | 15 | 21 | 3 | 5.72 | 4.43 |
| | 35 | Left thalamus / hippocampus | −12 | −27 | −6 | 5.22 | 4.17 |
| | 33 | Left hippocampus | −15 | −15 | −21 | 4.97 | 4.03 |
| | 138 | Left pre/postcentral gyrus | −51 | −6 | 30 | 4.79 | 3.92 |
| | 26 | Left/right mid cingulate cortex | −9 | 9 | 39 | 4.37 | 3.67 |
| | 21 | Left IFG/STG | −57 | 12 | 3 | 4.27 | 3.61 |
| | 23 | Right postcentral gyrus | 54 | −12 | 36 | 4.23 | 3.58 |
| | 37 | Left insula / IFG | −36 | 21 | 3 | 4.14 | 3.52 |
| Right posterior STS | 225 | Left middle/IFG | −39 | 54 | 0 | 5.90 | 4.52 |
| | 40 | Left STS | −66 | −36 | 6 | 5.63 | 4.38 |
| | 41 | Right postcentral gyrus / precuneus | 27 | −45 | 57 | 5.05 | 4.07 |
| | 20 | Right IFG | 42 | 18 | 27 | 4.79 | 3.92 |
| | 57 | Left/right cerebellum | −24 | −48 | −24 | 4.73 | 3.89 |
| | 29 | Left lingual gyrus | −18 | −69 | 3 | 4.64 | 3.83 |
| | 31 | Left STG | −63 | −6 | 0 | 4.35 | 3.66 |

Voxel height threshold p < .001 (uncorrected), cluster threshold p < .001 (corrected). Coordinates indicate the position of the peak voxel from each significant cluster, in Montreal Neurological Institute (MNI) stereotactic space. STG = superior temporal gyrus, IFG = inferior frontal gyrus, pars operc. = pars opercularis, SMA = supplementary motor area, STS = superior temporal sulcus

Our results suggest that the superior temporal and inferior parietal areas play distinguishable roles in spoken impressions. In a meta-analysis of the semantic system, Binder, Desai, Graves and Conant (2009) identified the AG as a high-order processing site performing the retrieval and integration of concepts. The left posterior STS/AG has been implicated in the production of complex narrative speech and writing (Awad, Warren, Scott, Turkheimer, & Wise, 2007; Brownsett & Wise, 2010; Spitsyna, Warren, Scott, Turkheimer, & Wise, 2006). Along with the precuneus, it has also been implicated in the perceptual processing of familiar names, faces and voices (Gorno-Tempini et al., 1998; von Kriegstein et al., 2005) and of person-related semantic information (Tsukiura, Mochizuki-Kawai, & Fujii, 2006). We propose a role for the left STS/AG in accessing and integrating conceptual information related to target voices, in close communication with the regions planning and executing articulations. On this account, emulating specific voice identities requires access to semantic knowledge about individuals. The resulting increase in performance demands would engage this left posterior temporo-parietal region more strongly and enhance its interaction with the speech production network.

The interaction of the right-lateralized STS sites with the left middle and inferior frontal gyri and the pre-SMA suggests that these sites play higher-order roles in planning specific impersonations. Blank et al. (2002) found that the left pars opercularis of the inferior frontal gyrus and the left pre-SMA showed increased activation during the production of speech of greater phonetic and linguistic complexity and variability. They linked the pre-SMA to the selection and planning of articulations. In studies of voice perception, the typically right-dominant Temporal Voice Areas (TVAs) in the STS respond more strongly to the vocal sounds of men, women and children than to non-vocal sounds (P. Belin & Grosbras, 2010; P. Belin, Zatorre, & Ahad, 2002; P. Belin et al., 2000; Giraud et al., 2004). In addition, right-hemisphere lesions are clinically associated with specific impairments in familiar voice recognition (Hailstone, Crutch, Vestergaard, Patterson, & Warren, 2010; Lang, Kneidl, Hielscher-Fastabend, & Heckmann, 2009; Neuner & Schweinberger, 2000). Investigations of familiarity and identity in voice perception have implicated both posterior and anterior portions of the right superior temporal lobe, including the temporal pole, in humans and macaques (P. Belin & Zatorre, 2003; von Kriegstein & Giraud, 2004; Nakamura et al., 2001; von Kriegstein et al., 2005). We propose that the right STS supports acoustic imagery of target voice identities in the Impersonations condition. These representations are then used online, via left-lateralized sites on the inferior and middle frontal gyri, to guide the modified articulatory plans needed to change the voice.
The speech produced in the two conditions did differ acoustically: the Impersonations had a higher mean and standard deviation of pitch than the Accents (see Table 1). However, we would expect sensitivity to these physical properties to emerge at earlier stages of the auditory processing stream, that is, in the STG rather than the STS. The current results therefore offer the first demonstration that right temporal regions previously implicated in the perceptual processing and recognition of voices may play a direct role in modulating vocal identity in speech.
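The acoustic comparison above rests on two summary statistics of the pitch contour. The sketch below shows one way per-condition mean and standard deviation of F0 might be computed. It assumes that each utterance has already been converted to a frame-by-frame F0 track in Hz (for example by a pitch tracker), with unvoiced frames coded as 0 or NaN. Utterance-level values are then averaged within each condition. The function names and the example values are hypothetical and are not the study's data.

```python
import numpy as np


def f0_stats(f0_track):
    """Mean and SD (Hz) of F0 over the voiced frames of one utterance.

    Unvoiced frames are assumed to be coded as 0 or NaN and are excluded.
    """
    f0 = np.asarray(f0_track, dtype=float)
    voiced = f0[np.isfinite(f0) & (f0 > 0)]
    return voiced.mean(), voiced.std(ddof=1)


def condition_summary(tracks_by_condition):
    """Average the utterance-level F0 mean and SD within each condition."""
    summary = {}
    for condition, tracks in tracks_by_condition.items():
        stats = np.array([f0_stats(t) for t in tracks])  # shape (n_utterances, 2)
        summary[condition] = (float(stats[:, 0].mean()), float(stats[:, 1].mean()))
    return summary


# Hypothetical toy tracks, not measurements from the study.
tracks = {
    "Accents": [[0, 100, 120, 140, 0], [110, 110, 130, np.nan]],
    "Impersonations": [[0, 150, 200, 250], [180, 220, 0, 260]],
}
print(condition_summary(tracks))
```

Averaging utterance-level statistics gives each utterance equal weight regardless of its length. Pooling all voiced frames would be an equally reasonable alternative, and the two can differ when utterance durations vary.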

The flexible control of the voice is a crucial element of the expression of identity. Here, we show that changing the characteristics of vocal expression, without changing the linguistic content of speech, primarily recruits left anterior insula and inferior frontal cortex. We propose that therapeutic approaches targeting metalinguistic aspects of speech production, such as Melodic Intonation Therapy (P. Belin et al., 1996) and respiratory training, could be beneficial in cases of speech production deficits after injury to left frontal sites.

During the emulation of specific vocal identities, superior temporal regions previously associated with voice perception showed increased activation and greater positive connectivity with frontal speech planning sites. This finding offers a novel demonstration of a selective role for these voice-processing sites in modulating the expression of vocal identity. Existing models of speech production focus on the execution of linguistic output and on monitoring that output for errors (Hickok, 2012; C. J. Price, Crinion, & Macsweeney, 2011; J. A. Tourville & Guenther, 2011). We suggest that non-canonical speech output need not constitute an error. For example, the convergence on pronunciations observed in conversation facilitates comprehension, interaction and social cohesion (Chartrand & Bargh, 1999; Garrod & Pickering, 2004). Nonetheless, some form of task-related error monitoring and correction likely operates when speakers attempt to modulate how they sound. This could occur via a predictive coding mechanism that attempts to reduce the disparity between predicted and actual behaviour (K. Friston, 2010; K. J. Friston & Price, 2001; C. J. Price et al., 2011). Such monitoring could take place in the right superior temporal cortex. We note, however, that previous studies directly investigating the detection of, and compensation for, altered auditory feedback have localized these processes to bilateral posterior STG (Takaso, Eisner, Wise, & Scott, 2010; J. A. Tourville et al., 2008). We propose to repeat the current experiment with professional voice artists. These performers are expert at producing convincing impressions and are presumably also able to report reliably on, for example, the difficulty and accuracy of their performance. Trial-by-trial ratings of this kind could be used to identify the brain regions engaged when the task is more challenging. They could potentially also uncover a more detailed mechanistic account of the networks identified for the first time in the current experiment.
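The error-monitoring idea above can be made concrete with a deliberately simplified toy model. The sketch below is not Friston's free-energy formulation, the DIVA model, or a model fitted in this study. It is a plain proportional feedback loop, assumed here for illustration. A speaker aiming for a target F0 (e.g. that of an impersonated voice) repeatedly compares the heard output with the target and adjusts the motor command by a fraction (`gain`) of the mismatch. An optional constant feedback shift mimics the altered-feedback manipulations mentioned above. All names and parameter values are hypothetical.

```python
def adapt_pitch(target_hz, start_hz, feedback_shift_hz=0.0, gain=0.5, n_steps=20):
    """Toy proportional error-correction loop for a vocal pitch target.

    Returns the final motor command (Hz) and the sequence of feedback errors.
    """
    command = start_hz
    errors = []
    for _ in range(n_steps):
        heard = command + feedback_shift_hz  # auditory feedback, possibly shifted
        error = target_hz - heard            # mismatch between intended and heard output
        errors.append(error)
        command += gain * error              # correct a fixed fraction of the mismatch
    return command, errors


# Emulating a higher-pitched voice: start at 120 Hz, aim for 200 Hz.
command, errors = adapt_pitch(200.0, 120.0)
print(round(command, 2), [round(e, 1) for e in errors[:4]])  # 200.0 [80.0, 40.0, 20.0, 10.0]

# With feedback shifted up by 10 Hz, the command settles about 10 Hz below the target.
shifted_command, _ = adapt_pitch(200.0, 120.0, feedback_shift_hz=10.0)
print(round(shifted_command, 2))  # 190.0
```

With a gain between 0 and 1, the error shrinks geometrically. When feedback is shifted, the loop compensates in the opposite direction to the shift, which is the qualitative pattern that altered-feedback studies probe.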

We offer the first delineation of how speech production and voice perception systems interact to effect controlled changes of identity expression during voluntary speech. This provides an essential step in understanding the neural bases for the ubiquitous behavioural phenomenon of vocal modulation in spoken communication.

References

- Ackermann H, Riecker A. The contribution of the insula to motor aspects of speech production: a review and a hypothesis. Brain Lang. 2004;89(2):320–328. doi: 10.1016/S0093-934X(03)00347-X.

- Awad M, Warren JE, Scott SK, Turkheimer FE, Wise RJS. A common system for the comprehension and production of narrative speech. Journal of Neuroscience. 2007;27(43):11455–11464. doi: 10.1523/JNEUROSCI.5257-06.2007.

- Aziz-Zadeh L, Sheng T, Gheytanchi A. Common Premotor Regions for the Perception and Production of Prosody and Correlations with Empathy and Prosodic Ability. Plos One. 2010;5(1). doi: 10.1371/journal.pone.0008759.

- Bailly G. Close shadowing natural versus synthetic speech. International Journal of Speech Technology. 2003;6(1):11–19.

- Belin P, Fecteau S, Bedard C. Thinking the voice: neural correlates of voice perception. Trends in Cognitive Sciences. 2004;8(3):129–135. doi: 10.1016/j.tics.2004.01.008.

- Belin P, Grosbras M-H. Before Speech: Cerebral Voice Processing in Infants. Neuron. 2010;65(6):733–735. doi: 10.1016/j.neuron.2010.03.018.

- Belin P, McAdams S, Smith B, Savel S, Thivard L, Samson S, Samson Y. The functional anatomy of sound intensity discrimination. Journal of Neuroscience. 1998;18(16):6388–6394. doi: 10.1523/JNEUROSCI.18-16-06388.1998.

- Belin P, Van Eeckhout P, Zilbovicius M, Remy P, Francois C, Guillaume S, Samson Y, et al. Recovery from nonfluent aphasia after melodic intonation therapy: A PET study. Neurology. 1996;47(6):1504–1511. doi: 10.1212/wnl.47.6.1504.

- Belin P, Zatorre RJ. Adaptation to speaker’s voice in right anterior temporal lobe. Neuroreport. 2003;14(16):2105–2109. doi: 10.1097/00001756-200311140-00019.

- Belin P, Zatorre RJ, Ahad P. Human temporal-lobe response to vocal sounds. Cognitive Brain Research. 2002;13(1):17–26. doi: 10.1016/s0926-6410(01)00084-2.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403(6767):309–312. doi: 10.1038/35002078.

- Binder JR, Desai RH, Graves WW, Conant LL. Where Is the Semantic System? A Critical Review and Meta-Analysis of 120 Functional Neuroimaging Studies. Cerebral Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055.

- Blank SC, Scott SK, Murphy K, Warburton E, Wise RJS. Speech production: Wernicke, Broca and beyond. Brain. 2002;125(Pt 8):1829–1838. doi: 10.1093/brain/awf191.

- Bohland JW, Guenther FH. An fMRI investigation of syllable sequence production. Neuroimage. 2006;32(2):821–841. doi: 10.1016/j.neuroimage.2006.04.173.

- Brainard DH. The Psychophysics Toolbox. Spatial Vision. 1997;10(4):433–436.

- Brett M, Anton JL, Valabregue R, Poline JB. Region of interest analysis using an SPM toolbox. Paper presented at the International Conference on Functional Mapping of the Human Brain; Sendai, Japan; 2002.

- Broca P. Perte de la parole, ramollissement chronique, et destruction partielle du lobe antérieur gauche du cerveau. Bulletin de la Société Anthropologique. 1861;2:235–238.

- Brownsett SLE, Wise RJS. The Contribution of the Parietal Lobes to Speaking and Writing. Cerebral Cortex. 2010;20(3):517–523. doi: 10.1093/cercor/bhp120.

- Cartei V, Cowles HW, Reby D. Spontaneous Voice Gender Imitation Abilities in Adult Speakers. Plos One. 2012;7(2). doi: 10.1371/journal.pone.0031353.

- Chartrand TL, Bargh JA. The Chameleon effect: The perception-behavior link and social interaction. Journal of Personality and Social Psychology. 1999;76(6):893–910. doi: 10.1037//0022-3514.76.6.893.

- Condon WS, Ogston WD. A segmentation of behavior. Journal of Psychiatric Research. 1967;5(3):221. doi: 10.1016/0022-3956(67)90004-0.

- Cooke M, Lu Y. Spectral and temporal changes to speech produced in the presence of energetic and informational maskers. Journal of the Acoustical Society of America. 2010;128(4):2059–2069. doi: 10.1121/1.3478775.

- Dhanjal NS, Handunnetthi L, Patel MC, Wise RJ. Perceptual systems controlling speech production. J Neurosci. 2008;28(40):9969–9975. doi: 10.1523/JNEUROSCI.2607-08.2008.

- Dronkers NF. A new brain region for coordinating speech articulation. Nature. 1996;384(6605):159–161. doi: 10.1038/384159a0.

- Edmister WB, Talavage TM, Ledden PJ, Weisskoff RM. Improved auditory cortex imaging using clustered volume acquisitions. Human Brain Mapping. 1999;7(2):89–97. doi: 10.1002/(SICI)1097-0193(1999)7:2<89::AID-HBM2>3.0.CO;2-N.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25(4):1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Eriksson E, Sullivan K, Zetterholm E, Czigler P, Green J, Skagerstrand A, van Doorn J. Detection of imitated voices: who are reliable earwitnesses? International Journal of Speech Language and the Law. 2010;17(1):25–44. doi: 10.1558/ijsll.v17i1.25.

- Friston K. The free-energy principle: a unified brain theory? Nature Reviews Neuroscience. 2010;11(2):127–138. doi: 10.1038/nrn2787.

- Friston KJ, Price CJ. Dynamic representations and generative models of brain function. Brain Res Bull. 2001;54(3):275–285. doi: 10.1016/s0361-9230(00)00436-6.

- Garrod S, Pickering MJ. Why is conversation so easy? Trends in Cognitive Sciences. 2004;8(1):8–11. doi: 10.1016/j.tics.2003.10.016.

- Giles H. Accent mobility: A model and some data. Anthropological Linguistics. 1973;15:87–105.

- Giles H, Coupland N, Coupland J. Accommodation theory: Communication, context, and consequence. In: Giles H, Coupland J, Coupland N, editors. The contexts of accommodation. New York: Cambridge University Press; 1991. pp. 1–68.

- Giraud AL, Kell C, Thierfelder C, Sterzer P, Russ MO, Preibisch C, Kleinschmidt A. Contributions of sensory input, auditory search and verbal comprehension to cortical activity during speech processing. Cerebral Cortex. 2004;14(3):247–255. doi: 10.1093/cercor/bhg124.

- Gorno-Tempini ML, Price CJ, Josephs O, Vandenberghe R, Cappa SF, Kapur N, Frackowiak RS. The neural systems sustaining face and proper-name processing. Brain. 1998;121(Pt 11):2103–2118. doi: 10.1093/brain/121.11.2103.

- Groswasser Z, Korn C, Groswasser Reider, Solzi P. Mutism associated with buccofacial apraxia and bihemispheric lesions. Brain Lang. 1988;34(1):157–168. doi: 10.1016/0093-934x(88)90129-0.

- Hailstone JC, Crutch SJ, Vestergaard MD, Patterson RD, Warren JD. Progressive associative phonagnosia: A neuropsychological analysis. Neuropsychologia. 2010;48(4):1104–1114. doi: 10.1016/j.neuropsychologia.2009.12.011.

- Hall DA, Haggard MP, Akeroyd MA, Palmer AR, Summerfield AQ, Elliott MR, Bowtell RW. “Sparse” temporal sampling in auditory fMRI. Human Brain Mapping. 1999;7(3):213–223. doi: 10.1002/(SICI)1097-0193(1999)7:3<213::AID-HBM5>3.0.CO;2-N.

- Harrington J, Palethorpe S, Watson CI. Does the Queen speak the Queen’s English? Elizabeth II’s traditional pronunciation has been influenced by modern trends. Nature. 2000;408(6815):927–928. doi: 10.1038/35050160.

- Hickok G. Computational neuroanatomy of speech production. Nat Rev Neurosci. 2012;13(2):135–145. doi: 10.1038/nrn3158.

- Jurgens U. Neural pathways underlying vocal control. Neuroscience and Biobehavioral Reviews. 2002;26(2):235–258. doi: 10.1016/s0149-7634(01)00068-9.

- Jurgens U, von Cramon D. On the role of the anterior cingulate cortex in phonation: a case report. Brain Lang. 1982;15(2):234–248. doi: 10.1016/0093-934x(82)90058-x.

- Kappes J, Baumgaertner A, Peschke C, Ziegler W. Unintended imitation in nonword repetition. Brain and Language. 2009;111(3):140–151. doi: 10.1016/j.bandl.2009.08.008.

- Karpf A. The Human Voice: The Story of a Remarkable Talent. London: Bloomsbury Publishing PLC; 2007.

- von Kriegstein K, Giraud AL. Distinct functional substrates along the right superior temporal sulcus for the processing of voices. Neuroimage. 2004;22(2):948–955. doi: 10.1016/j.neuroimage.2004.02.020.

- Kurth F, Zilles K, Fox PT, Laird AR, Eickhoff SB. A link between the systems: functional differentiation and integration within the human insula revealed by meta-analysis. Brain Struct Funct. 2010;214(5-6):519–534. doi: 10.1007/s00429-010-0255-z.

- Ladefoged P. Validity of voice identification. Journal of the Acoustical Society of America. 2003;114:2403.

- Lang CJG, Kneidl O, Hielscher-Fastabend M, Heckmann JG. Voice recognition in aphasic and non-aphasic stroke patients. Journal of Neurology. 2009;256(8):1303–1306. doi: 10.1007/s00415-009-5118-2.

- Lombard É. Le signe de l’élévation de la voix. Annales des Maladies de L’Oreille et du Larynx. 1911;XXXVII:101–109.

- Lu Y, Cooke M. Speech production modifications produced in the presence of low-pass and high-pass filtered noise. Journal of the Acoustical Society of America. 2009;126(3):1495–1499. doi: 10.1121/1.3179668.

- Maclarnon A, Hewitt G. Increased breathing control: Another factor in the evolution of human language. Evolutionary Anthropology. 2004;13(5):181–197. doi: 10.1002/evan.20032.

- MacLarnon AM, Hewitt GP. The evolution of human speech: The role of enhanced breathing control. American Journal of Physical Anthropology. 1999;109(3):341–363. doi: 10.1002/(SICI)1096-8644(199907)109:3<341::AID-AJPA5>3.0.CO;2-2.

- McFarland DH. Respiratory markers of conversational interaction. Journal of Speech, Language, and Hearing Research. 2001;44(1):128–143. doi: 10.1044/1092-4388(2001/012).

- Meyer M, Zysset S, von Cramon DY, Alter K. Distinct fMRI responses to laughter, speech, and sounds along the human peri-sylvian cortex. Cognitive Brain Research. 2005;24(2):291–306. doi: 10.1016/j.cogbrainres.2005.02.008.

- Nakamura K, Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Kojima S. Neural substrates for recognition of familiar voices: a PET study. Neuropsychologia. 2001;39(10):1047–1054. doi: 10.1016/s0028-3932(01)00037-9.

- Neuner F, Schweinberger SR. Neuropsychological impairments in the recognition of faces, voices, and personal names. Brain and Cognition. 2000;44(3):342–366. doi: 10.1006/brcg.1999.1196.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25(3):653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Papoutsi M, de Zwart JA, Jansma JM, Pickering MJ, Bednar JA, Horwitz B. From Phonemes to Articulatory Codes: An fMRI Study of the Role of Broca’s Area in Speech Production. Cerebral Cortex. 2009;19(9):2156–2165. doi: 10.1093/cercor/bhn239.

- Pardo JS. On phonetic convergence during conversational interaction. Journal of the Acoustical Society of America. 2006;119(4):2382–2393. doi: 10.1121/1.2178720.

- Pardo JS, Gibbons R, Suppes A, Krauss RM. Phonetic convergence in college roommates. Journal of Phonetics. 2012;40(1):190–197. doi: 10.1016/j.wocn.2011.10.001.

- Pardo JS, Jay IC, et al. Conversational role influences speech imitation. Attention, Perception and Psychophysics. 2010;72(8):2254–2264. doi: 10.3758/app.72.8.2254.

- Peschke C, Ziegler W, Kappes J, Baumgaertner A. Auditory-motor integration during fast repetition: The neuronal correlates of shadowing. Neuroimage. 2009;47(1):392–402. doi: 10.1016/j.neuroimage.2009.03.061.

- Pickering MJ, Garrod S. Do people use language production to make predictions during comprehension? Trends in Cognitive Sciences. 2007;11(3):105–110. doi: 10.1016/j.tics.2006.12.002.

- Price CJ. The anatomy of language: a review of 100 fMRI studies published in 2009. In: Kingstone A, Miller MB, editors. The Year in Cognitive Neuroscience 2010. Vol. 1191. 2010. pp. 62–88.

- Price CJ, Crinion JT, Macsweeney M. A Generative Model of Speech Production in Broca’s and Wernicke’s Areas. Front Psychol. 2011;2:237. doi: 10.3389/fpsyg.2011.00237.

- Reiterer SM, Hu X, Erb M, Rota G, Nardo D, Grodd W, Ackermann H. Individual differences in audio-vocal speech imitation aptitude in late bilinguals: functional neuro-imaging and brain morphology. Frontiers in Psychology. 2011;2:271. doi: 10.3389/fpsyg.2011.00271.

- Riecker A, Brendel B, Ziegler W, Erb M, Ackermann H. The influence of syllable onset complexity and syllable frequency on speech motor control. Brain and Language. 2008;107(2):102–113. doi: 10.1016/j.bandl.2008.01.008.

- Riecker A, Mathiak K, Wildgruber D, Erb M, Hertrich I, Grodd W, Ackermann H. fMRI reveals two distinct cerebral networks subserving speech motor control. Neurology. 2005;64(4):700–706. doi: 10.1212/01.WNL.0000152156.90779.89.

- Schirmer A, Kotz SA. Beyond the right hemisphere: brain mechanisms mediating vocal emotional processing. Trends in Cognitive Sciences. 2006;10(1):24–30. doi: 10.1016/j.tics.2005.11.009.

- Scott SK, Clegg F, Rudge P, Burgess P. Foreign accent syndrome, speech rhythm and the functional neuroanatomy of speech production. Journal of Neurolinguistics. 2006;19(5):370–384. doi: 10.1016/j.jneuroling.2006.03.008.

- Sem-Jacobsen C, Torkildsen A. Depth recording and electrical stimulation in the human brain. In: Ramey ER, O’Doherty DS, editors. Electrical studies on the unanesthetized brain. New York: 1968.

- Shockley K, Sabadini L, Fowler CA. Imitation in shadowing words. Perception and Psychophysics. 2004;66(3):422–429. doi: 10.3758/bf03194890.

- Simmonds AJ, Wise RJS, Dhanjal NS, Leech R. A comparison of sensory-motor activity during speech in first and second languages. Journal of Neurophysiology. 2011;106(1):470–478. doi: 10.1152/jn.00343.2011.

- Simonyan K, Ostuni J, Ludlow CL, Horwitz B. Functional but not structural networks of the human laryngeal motor cortex show left hemispheric lateralization during syllable but not breathing production. J Neurosci. 2009;29(47):14912–14923. doi: 10.1523/JNEUROSCI.4897-09.2009.

- Slotnick SD, Moo LR, Segal JB, Hart J. Distinct prefrontal cortex activity associated with item memory and source memory for visual shapes. Cognitive Brain Research. 2003;17(1):75–82. doi: 10.1016/s0926-6410(03)00082-x.

- Spitsyna G, Warren JE, Scott SK, Turkheimer FE, Wise RJS. Converging language streams in the human temporal lobe. Journal of Neuroscience. 2006;26(28):7328–7336. doi: 10.1523/JNEUROSCI.0559-06.2006.

- Sullivan KPH, Schlichting F. A perceptual and acoustic study of the imitated voice. Journal of the Acoustical Society of America. 1998;103:2894.

- Takaso H, Eisner F, Wise RJ, Scott SK. The effect of delayed auditory feedback on activity in the temporal lobe while speaking: a positron emission tomography study. J Speech Lang Hear Res. 2010;53(2):226–236. doi: 10.1044/1092-4388(2009/09-0009).

- Tourville JA, Guenther FH. The DIVA model: A neural theory of speech acquisition and production. Language and Cognitive Processes. 2011;26(7):952–981. doi: 10.1080/01690960903498424.

- Tourville JA, Reilly KJ, Guenther FH. Neural mechanisms underlying auditory feedback control of speech. Neuroimage. 2008;39(3):1429–1443. doi: 10.1016/j.neuroimage.2007.09.054.

- Tsukiura T, Mochizuki-Kawai H, Fujii T. Dissociable roles of the bilateral anterior temporal lobe in face-name associations: An event-related fMRI study. Neuroimage. 2006;30(2):617–626. doi: 10.1016/j.neuroimage.2005.09.043.

- von Kriegstein K, Eger E, Kleinschmidt A, Giraud AL. Modulation of neural responses to speech by directing attention to voices or verbal content. Cognitive Brain Research. 2003;17(1):48–55. doi: 10.1016/s0926-6410(03)00079-x.

- von Kriegstein K, Kleinschmidt A, Sterzer P, Giraud AL. Interaction of face and voice areas during speaker recognition. Journal of Cognitive Neuroscience. 2005;17(3):367–376. doi: 10.1162/0898929053279577.

- Wildgruber D, Riecker A, Hertrich I, Erb M, Grodd W, Ethofer T, Ackermann H. Identification of emotional intonation evaluated by fMRI. Neuroimage. 2005;24(4):1233–1241. doi: 10.1016/j.neuroimage.2004.10.034.

- Wise RJ, Greene J, Buchel C, Scott SK. Brain regions involved in articulation. Lancet. 1999;353(9158):1057–1061. doi: 10.1016/s0140-6736(98)07491-1.