Abstract

Purpose

To use a mixed-methods approach to develop a letter that notifies patients of their bone mineral density (BMD) results by mail and that may activate patients in their bone-related health care.

Patients and methods

A multidisciplinary team developed three versions of a letter for reporting BMD results to patients. Trained interviewers presented these letters in a random order to a convenience sample of adults, aged 50 years and older, at two different health care systems. We conducted structured interviews to examine the respondents’ preferences and comprehension among the various letters.

Results

A total of 142 participants completed the interview. A majority of the participants were female (64.1%) and white (76.1%). A plurality of the participants identified a specific version of the three letters as both their preferred version (45.2%; P<0.001) and as the easiest to understand (44.6%; P<0.01). A majority of participants preferred that the letters include specific next steps for improving their bone health.

Conclusion

Using a mixed-methods approach, we were able to develop and optimize a printed letter for communicating a complex test result (BMD) to patients. Our results may help clinicians, administrators, and researchers who are looking for guidance on how to communicate complex health information to patients in writing.

Keywords: osteoporosis, DXA, test results, patient education, fracture risk, patient activation

Introduction

Osteoporosis, a condition characterized by decreased bone density, predisposes affected individuals to fractures after minor falls or minor trauma (eg, bumping into a table).1–4 Osteoporosis-related fractures are associated with increased mortality and reduced quality of life and will affect approximately 50% of postmenopausal women and 25% of men >50 years old in the US.5,6 Fortunately, two inexpensive and widely accessible tools exist for identifying patients with osteoporosis: dual-energy X-ray absorptiometry (DXA) and the Fracture Risk Assessment Tool (FRAX®; World Health Organization Collaborating Centre for Metabolic Bone Diseases, University of Sheffield, UK). DXA is a reliable and painless diagnostic test for measuring bone mineral density (BMD). FRAX® is an online calculator that uses DXA results and other patient factors (eg, age, body mass index, fracture history, and tobacco and alcohol use) to compute a patient’s individualized 10-year risk of major osteoporotic fracture.7 Together, DXA and FRAX® provide the information that both patients and providers need to engage in shared medical decision making with respect to osteoporosis.5,8

However, communicating complex test results, such as DXA, to patients is fraught with difficulty. Both health literacy and numeracy are barriers to sharing test results with patients. Health literacy is a factor in patient comprehension of any type of health-related communication and is a problem for most people in the US.9–11 Poor health literacy has been linked to decreases in patients’ ability to manage their health.10 Numeracy, defined as a person’s ability to understand probability,12,13 is also poor, even among those who are highly educated.14 Given the poor health literacy and numeracy levels in the US, most patients may have trouble comprehending their DXA results and FRAX® scores.

Beyond health literacy and numeracy, other barriers to communicating DXA results exist. For example, testing centers may fail to communicate DXA results to providers. Providers may also have difficulty understanding DXA results and at times may fail to communicate DXA results to patients. Even when results are effectively communicated, appropriate treatment may not be initiated.15–20 In an effort to overcome these barriers and to improve osteoporosis care, many investigators have undertaken interventions aimed at educating providers about osteoporosis;20–22 however, many of these efforts have been unsuccessful at improving care.

More recently, researchers seeking to improve care in both osteoporosis and other conditions have turned to patient activation models,23–25 which focus quality improvement efforts on the patient. These models have been based upon the assumption that the patient has the greatest motivation and interest in ensuring that he/she receives high-quality care. Over the past decade, our team has been progressively studying patient activation interventions focused upon having DXA testing centers directly communicate DXA results to patients.26,27 Our model differs from traditional clinical practice in which bone density testing centers typically send DXA results to the ordering physicians only.15,28 Our model is designed to overcome several of the barriers previously mentioned by ensuring that the DXA results are communicated to patients, something that other studies have found to be a problem.15,17,29 In earlier studies, we have demonstrated that both physicians and patients would be amenable to a system of DXA centers directly reporting results to patients,15,26,28 but at the present time we are unaware of a theoretically driven and rigorously derived letter for communicating DXA results to patients.

With funding from the US National Institutes of Health, we are conducting a randomized controlled trial – the Patient Activation After DXA Result Notification (PAADRN) study (NCT01507662).30 The aim of the PAADRN study is to evaluate the impact that a mailed DXA result notification letter has on patients’ knowledge of osteoporosis, follow-up with their health care provider, and change in bone-related preventative health behaviors (eg, calcium and vitamin D intake, osteoporosis medication adherence, and exercise).31

A key component of the PAADRN study has been the development of a DXA result letter that could be mailed to patients by DXA testing centers. With this background, the aim of the current study is to describe our efforts using a mixed-methods approach to develop and pilot test a letter for communicating DXA results to a spectrum of patients who might be typical of those patients seen in many osteoporosis clinics.

Materials and methods

Letter development

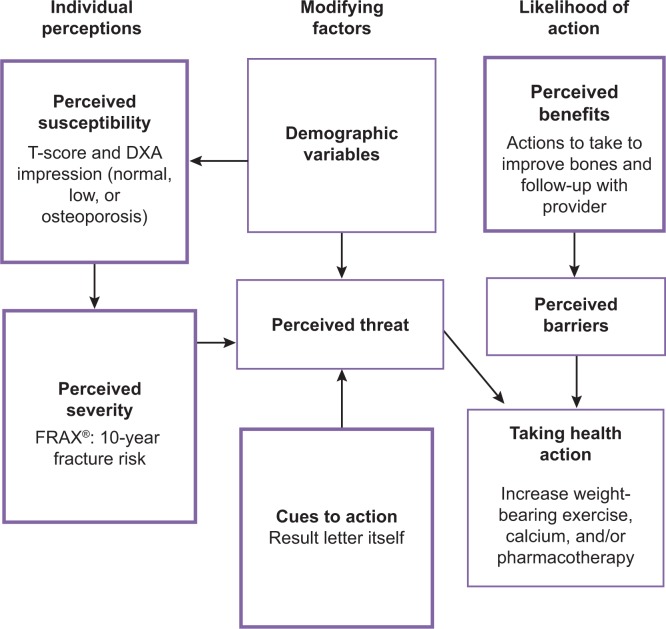

Our research team consisted of health care practitioners and health communication experts (faculty and staff with graduate degrees in health education and communication). We began by creating a list of key information that we deemed important to communicate to patients who had just had a DXA scan; our list was informed by the Health Belief Model (Figure 1).32 This information included:

Figure 1.

Critical topics in the DXA result letter linked to the Health Belief Model.

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool (World Health Organization Collaborating Centre for Metabolic Bone Diseases, University of Sheffield, UK).

- DXA: an explanation that they had a DXA and the date performed.

- T-score: a score that gives an individual’s bone density compared to the bone density of a normal 30-year-old.

- Diagnosis or impression: typically derived from the T-score and categorized as normal, low bone density (osteopenia), or osteoporosis.

- FRAX®: the individual’s 10-year probability of major osteoporotic fracture (fracture of the hip, vertebrae, distal forearm, or proximal humerus).7

- Recommendations: actions patients can take to improve their bone health and to follow up with their health care provider.
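As an illustration of how the T-score and diagnosis items above relate, the following minimal sketch computes a T-score as the number of standard deviations between a measured BMD and a young-adult reference mean, then maps it to one of the three diagnostic categories. The cut-points (−1.0 and −2.5) are the widely used World Health Organization thresholds, which are an assumption here because the text does not state them; the function names and the reference values in the usage note are hypothetical and are not taken from the study.

```python
def t_score(bmd, young_adult_mean, young_adult_sd):
    """Standard deviations between a BMD value and the young-adult reference mean."""
    return (bmd - young_adult_mean) / young_adult_sd


def classify_t_score(t):
    """Map a T-score to a category using WHO cut-points (assumed; not stated in the text)."""
    if t <= -2.5:
        return "osteoporosis"
    if t < -1.0:
        return "low bone density (osteopenia)"
    return "normal"
```

For example, with a hypothetical reference mean of 1.0 g/cm² and standard deviation of 0.1 g/cm², a measured BMD of 0.8 g/cm² gives a T-score of about −2.0, which this sketch labels as low bone density (osteopenia).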

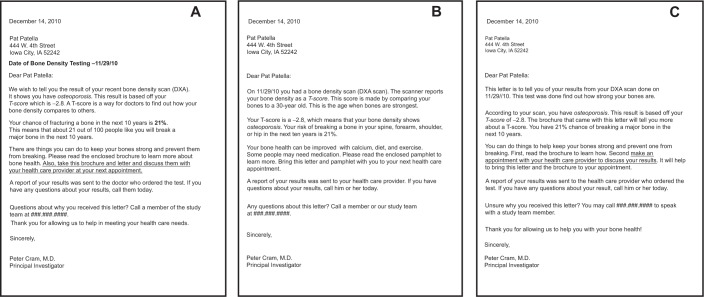

Based upon the list of required topics we wanted to include in the letter, we created several draft versions of a DXA result letter for internal review that represented differing styles, phrasing, and ordering of various pieces of information. All letters were written for a sixth-grade reading level as per the Fry Readability Formula,33 contained the same content, and used a 12-point serif font as per best-practice guidelines.34 The draft letters were ultimately reduced to three test versions for pilot testing (letter A, letter B, and letter C). Figure 2 displays the three versions; Figure 3 highlights the key differences between the letters.

Figure 2.

Three versions of the DXA result notification letter (A–C).

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool.

Figure 3.

Key characteristics and differences among the three versions of the DXA result letter.

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool.

Design

We used a mixed-methods approach to identify the potential participants’ letter preferences and their rationale for these preferences. Quantitative measures were used to identify significant differences in preference and comprehension across the differently worded test result letters. Open-ended qualitative items were included to provide a contextualized understanding of these trends and to more readily identify effective ways to refine the letters for greatest effect. By collecting the participants’ own words to justify their ranking, we were able to identify larger health literacy and risk perception issues to be addressed in our randomized controlled trial.

Participants and recruitment

After developing the three candidate versions of the letter, we used a mixed-methods approach to examine which version of the letter was preferred and why, and to obtain suggestions for improving our letters. We approached a convenience sample of patients and visitors from the campus of a large teaching hospital in the Midwestern US (site A) and a private outpatient clinic in the Southeastern US (site B). Participants were deemed eligible for our study if they met the enrollment criteria for the PAADRN study for which we were developing the mailed letters.

Specifically, we were seeking males and females, 50 years of age and older. We excluded people who did not speak and read English, prisoners, and those with mental disabilities. Similar to the PAADRN study eligibility criteria, we included both adults who had received prior DXA testing and adults who had not. Research assistants who were trained in quantitative and qualitative interview techniques approached potential participants in the clinic waiting areas and lobbies of our two study sites. Participants were given parking vouchers and/or gift cards in appreciation for their participation. This study was approved by the institutional review board at each site.

Interview

We conducted audio-recorded structured interviews with each participant to gain an understanding of his or her preferences and suggestions regarding the sample letters. After approaching a subject and obtaining agreement to participate, the interviewer guided each subject through a series of steps:

- Step 1. Study introduction and reading of the letters. A research assistant introduced the study to each subject using the following script: “Some people need to have a test called a DXA done to figure out how strong their bones are. After the test is done, the results are sent to their doctor. In addition to this, we want to send the test results directly to patients in a letter that will be easy for them to understand. We would like your help in trying to figure out the best way to write the results to those patients receiving them so they understand what they mean. We have written three versions of a sample letter that could be sent to patients. Please read these letters and help us design the best letter. Please read letter A and let me know when you are finished.” Participants were then presented with each letter (in a random order) sequentially and asked to read it. The letters were presented in a random order to ensure that our results were not influenced by the priming of participants that might have occurred if a fixed sequence had been used.

- Step 2. Participants were asked to state the main point of the letter and the action they would take, if any, after receiving this letter.

- Step 3. Participants were instructed to annotate the letters by using assorted stickers to identify words, phrases, or sentences they liked and/or found confusing.

- Step 4. Participants were asked to verbally explain the reasoning behind all stickers.

- Step 5. Participants were asked to compare the letters and identify which was: 1) their favorite; 2) their least favorite; 3) the easiest to understand; 4) the hardest to understand; and 5) the letter that made them the most worried.

- Step 6. Participants were asked to explain their reasons for identifying the letters in these categories and to provide suggestions for improving the letters.
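The random presentation order described in Step 1 could be generated along the following lines. This is only a sketch of one reasonable approach: the text does not say how the order was actually randomized, and the function name, the per-participant seeding scheme, and the default seed value are all hypothetical.

```python
import random

LETTERS = ("A", "B", "C")


def presentation_order(participant_id, seed=0):
    """Return a reproducible random reading order of the three letters for one participant."""
    # Seeding with a string built from the (hypothetical) study seed and the
    # participant ID makes each participant's order repeatable for auditing.
    rng = random.Random(f"{seed}-{participant_id}")
    return rng.sample(LETTERS, k=len(LETTERS))
```

Calling `presentation_order(17)` returns some permutation of the three letters, and repeated calls with the same participant ID return the same order.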

To aid our analysis, we collected additional information, including demographics (age, race, sex), educational attainment, health numeracy (using a subset of questions from the Subjective Numeracy Scale),35 and three health literacy screening items.36 We also collected information on each participant’s current employment; history of fracture; diagnosis with osteoporosis or low bone density; exposure to DXA; and how they would rank their overall health. See the Supplementary materials for the interview script pertinent to this data collection phase.

Analysis

We used a sequential mixed-methods approach for data analysis, prioritizing the analysis of the quantitative data to focus our analysis of the qualitative data.37 The quantitative data were used to compare participant rankings of letter preference, comprehension, and worry for the three letters. We used qualitative methods to analyze results from the interview transcripts.

Quantitative analyses

We first compared demographic (eg, age, race, sex) and clinical characteristics (eg, history of prior DXA scans, history of osteoporosis, or prior fracture) of subjects from the two sites. We used the two-sample Student’s t-test for comparisons of continuous variables and the chi-squared test for categorical variables. We examined whether the participants’ choice of their favorite letter and most easily understood letter differed statistically by sex, race, age, education, and site, using Cochran–Mantel–Haenszel statistics, and by average literacy and numeracy, using analysis of variance. The pairwise comparison significance level was adjusted by Tukey’s test. All analyses were performed using SAS Version 9.2 (SAS Institute Inc., Cary, NC, USA). An alpha level of 0.05 was considered statistically significant.
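To make the chi-squared comparison of a categorical variable between sites concrete, the sketch below computes Pearson's chi-squared statistic for a 2×2 table using only the Python standard library. It is not the SAS procedure used in the study; it assumes no continuity correction, and the function name is hypothetical. The counts are the sex-by-site figures from Table 1, with male counts derived as each site's n minus its female count.

```python
import math


def pearson_chi2_2x2(table):
    """Pearson chi-squared statistic and P-value (1 df, no continuity correction)."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed - expected) ** 2 / expected
    # With 1 degree of freedom, the chi-squared survival function is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Rows: site A, site B. Columns: female, male (male = site n minus female count).
sex_by_site = ((45, 35), (46, 16))
chi2, p_value = pearson_chi2_2x2(sex_by_site)
print(f"chi2 = {chi2:.2f}, P = {p_value:.2f}")  # prints "chi2 = 4.89, P = 0.03"
```

Under these assumptions the result is in line with the P=0.03 reported for sex in Table 1.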

To examine participant preference and comprehension of the distinct components of the letters, each letter was broken down into the five critical elements discussed previously (DXA, T-score, diagnosis, FRAX®, and recommendations). Based on each participant’s critique, we coded each topic in each letter as “liked” or “disliked”. We then tallied the “likes” and “dislikes” for each topic in each letter to assess which components of each letter were preferred.

Qualitative analyses

Two trained qualitative analysts reviewed the interview transcripts to develop a codebook for categorizing participants’ rationales for preferring one letter versus another and for particular sections/phrasings of text within the individual letters. A random sample of 10% of the transcripts was reviewed in duplicate by the first author; disagreements in coding were resolved through discussion with the coders. The participants’ rationale for sticker placement was then layered onto this coding to foster rapid comparison across the three letters. After the completion of the coding of all the transcripts and passages, the codes were independently reviewed by two of the authors (SWE and SLS), who identified general themes to characterize participants’ beliefs and preferences with respect to the letters and to the optimal communication of the DXA results. These themes were compared, refined, and synthesized to develop a final letter reflecting participant preference and comprehension. Interviews were coded using qualitative data analysis software (MAXQDA Version 10, VERBI Software GmbH, Marburg, Germany).

Results

Participants

We interviewed 142 participants. A majority of the participants were female (64.1%) and Caucasian (76.1%); the mean age was 64.0 (±8.3) years. Site B had a higher percentage of women (P=0.03), a higher percentage of African Americans (P<0.001), and a higher level of education (P<0.01) when compared to site A (Table 1). Across sites, more than one-half of subjects reported their health as “very good” or “excellent” (54.7%). The findings below are organized by survey item; for each, we present the quantitative results followed by the participants’ qualitative rationale.

Table 1.

Characteristics of participants in the study sample, % (n)

| Characteristic | All sites (n=142) | Site A (n=80; 56.3%) | Site B (n=62; 43.7%) | P-value |
|---|---|---|---|---|
| Sex, % | | | | |
| Female | 64.1 (91) | 56.3 (45) | 74.2 (46) | *0.03 |
| Age, % | | | | |
| 50–59 | 35.2 (50) | 28.8 (23) | 43.5 (27) | |
| 60–69 | 38.7 (55) | 41.3 (33) | 35.5 (22) | |
| 70+ | 26.1 (37) | 30.0 (24) | 21.0 (13) | |
| Education, % | | | | |
| High school or less | 22.3 (29) | 30.4 (21) | 13.1 (8) | *0.002 |
| Some college | 33.9 (44) | 37.7 (26) | 29.5 (18) | |
| College graduate or more | 43.9 (57) | 31.9 (22) | 57.4 (35) | |
| Race, % | | | | |
| White | 76.1 (108) | 92.5 (74) | 54.8 (34) | *<0.001 |
| African American | 19.0 (27) | 3.8 (3) | 38.7 (24) | |
| Other | 4.9 (7) | 3.8 (3) | 6.5 (4) | |
| Literacy, mean (SD) | 3.3 (0.6) | 3.4 (0.6) | 3.2 (0.5) | 0.28 |
| Numeracy, mean (SD) | 3.5 (1.4) | 4.3 (1.2) | 2.5 (0.9) | *<0.001 |
| General health, % | | | | |
| Excellent | 13.9 (18) | 13.2 (9) | 14.5 (9) | 0.44 |
| Very good | 40.8 (53) | 45.6 (31) | 35.5 (22) | |
| Good | 36.9 (48) | 33.8 (23) | 40.3 (25) | |
| Fair | 7.7 (10) | 7.4 (5) | 8.1 (5) | |
| Poor | 0.8 (1) | 0 (0) | 1.6 (1) | |
| Bone health, % | | | | |
| History of previous DXA | 46.2 (60) | 38.2 (26) | 54.8 (34) | 0.06 |
| History of osteoporosis or osteopenia | 24.2 (32) | 18.6 (13) | 30.7 (19) | 0.11 |
| Fracture history | 16.2 (21) | 13.2 (9) | 19.4 (12) | 0.35 |

Note: *Indicates variables for which the sites are significantly different (P<0.05).

Abbreviations: DXA, dual-energy X-ray absorptiometry; SD, standard deviation.

Letter comprehension

Letter B was ranked as the easiest to understand by a significantly higher percentage of respondents (44.6%) than letter A (26.9%) or letter C (19.2%) (P<0.001); 9.2% of participants had no preference (Table 2). Conversely, letter C was viewed as the most difficult letter to understand by 27.0% of participants as compared to 22.2% for letter A and 19.1% for letter B, although these differences were not statistically significant (P=0.4). Also, 31.8% of respondents had no opinion on which letter was the most difficult to understand. Results were generally similar when participants were stratified by sex, education, age, site, and race (Table 2).

Table 2.

Letter reported as easiest to understand among key study subgroups (percent selecting each letter as most understandable)

| Subgroup | Letter A, % | Letter B, % | Letter C, % | No preference, % | # missing | P-value |
|---|---|---|---|---|---|---|
| Total (n=130) | 26.9 | 44.6 | 19.2 | 9.2 | 12 | <0.01* |
| Sex, % | | | | | 12 | 0.85 |
| Female (n=85) | 27.1 | 43.5 | 21.2 | 8.2 | | |
| Male (n=45) | 26.7 | 46.7 | 15.6 | 11.1 | | |
| Education, % | | | | | 21 | 0.76 |
| High school or less (n=26) | 34.6 | 34.6 | 23.1 | 7.7 | | |
| Some college (n=42) | 21.4 | 50.0 | 21.4 | 7.1 | | |
| College graduate or more (n=53) | 28.3 | 47.2 | 17.0 | 7.6 | | |
| Age, % | | | | | 12 | 0.47 |
| 50–59 (n=46) | 30.4 | 45.7 | 15.2 | 8.7 | | |
| 60–69 (n=52) | 30.8 | 42.3 | 21.2 | 5.8 | | |
| 70+ (n=32) | 15.6 | 46.9 | 21.9 | 15.6 | | |
| Site, % | | | | | 12 | 0.66 |
| Site A (n=70) | 31.4 | 41.4 | 18.6 | 8.6 | | |
| Site B (n=60) | 21.7 | 48.3 | 20.0 | 10.0 | | |
| Race, % | | | | | 13 | 0.92 |
| White (n=97) | 28.9 | 42.3 | 19.6 | 9.3 | | |
| African American | 19.2 | 53.9 | 19.2 | 7.7 | | |
| Other | 33.3 | 33.3 | 16.7 | 16.7 | | |

Note: *P-value accounting only for difference in those that had a preference in letter format.

As we evaluated our qualitative data, we found that participants preferred letter B because its conciseness made it the easiest letter to understand. This reason was reflected in these participant responses:

The way [letter B] is written. It is direct and to the point. That is the way I like things, don’t give me 10 years to think about it. [Participant 49, white, female, age 51]

Stayed to the point, [letter B] didn’t have a lot of language to say one thing. It is clear and flows better. The others have more words to say the same thing. [Participant 54, white, female, age 57]

Less dense and wordy. [Participant 12, white, male, age 65]

Participants listed several reasons why letter C was the most difficult to understand. Most of the reasons concerned the way the letter was worded and its failure to provide enough information:

There was not enough information and left me thinking that I have to call my doctor to find out anything. [Participant 33, white, female, age 74]

You had to read the brochure to tell you about the T-score instead of a description in the letter itself. [Participant 46, African American, male, age 53]

When asked what actions they would take after receiving each letter, participants commonly stated that they would contact their health care provider. Letter B prompted more participants to state they would take actions to improve their bones. Letter C was the only letter that seemed to provoke a negative action from participants as three of them stated they would cry if they received letter C in the mail.

I would cry. Because it sounds like I have cancer and am going to die. I would not read the brochure … I would call the doctor. [Participant 4, white, male, age 65]

I would cry. And then maybe read the letter over a few times to see if there is anything else I could do. [Participant 54, white, female, age 57]

Cry a lot. [Letter C] doesn’t explain what is going on. I would have to get on the telephone and call the doctor and then talk to his nurse. [Participant 39, African American, female, age 65]

Letter preference

More participants preferred letter B (45.2%) than letter A (30.4%) and letter C (24.4%) (P<0.01); 0.7% of participants had no preference (Table 3). When asked which letter they least preferred, 31.3% of participants listed letter C, 29.0% listed letter A, and 26.0% listed letter B, but these differences were not statistically significant (P=0.7). In addition, 13.7% had no preference and responses were missing for 8% of participants. These results did not differ significantly when respondents were stratified by sex, education level, age, site, or race (Table 3). Those with lower-than-average literacy scores were significantly more likely to favor letter A or letter B than letter C (P<0.01), but preference did not vary by average numeracy score (P=0.7).

Table 3.

Preferred letter among key study subgroups (percent selecting each letter as favorite)

| Subgroup | Letter A, % | Letter B, % | Letter C, % | # missing | P-value |
|---|---|---|---|---|---|
| Total (n=135) | 30.4 | 45.2 | 24.4 | 7 | <0.001* |
| Sex, % | | | | 7 | 0.98 |
| Female (n=88) | 30.7 | 45.5 | 23.9 | | |
| Male (n=47) | 29.8 | 44.7 | 25.5 | | |
| Education, % | | | | 17 | 0.88 |
| High school or less (n=26) | 30.8 | 42.3 | 26.9 | | |
| Some college (n=43) | 30.2 | 44.2 | 25.6 | | |
| College graduate or more (n=56) | 28.6 | 48.2 | 23.2 | | |
| Age, % | | | | 7 | 0.62 |
| 50–59 (n=48) | 33.3 | 47.9 | 18.8 | | |
| 60–69 (n=54) | 25.9 | 46.3 | 27.8 | | |
| 70+ (n=33) | 33.3 | 39.4 | 27.3 | | |
| Site, % | | | | 7 | 0.37 |
| Site A (n=74) | 35.1 | 40.5 | 24.3 | | |
| Site B (n=61) | 24.6 | 50.8 | 24.6 | | |
| Race, % | | | | 8 | 0.30 |
| White (n=102) | 32.4 | 41.2 | 26.5 | | |
| African American (n=26) | 19.2 | 57.7 | 23.1 | | |
| Other (n=6) | 50 | 50 | 0 | | |

Note: *P-value accounting only for difference in those that had a preference in letter format.

In the supporting qualitative analysis, participants who preferred letter B mentioned that they liked how it provided suggestions for actions that they could take to improve their bones and the way the information on the T-score was presented:

[Letter B] explained bones in easier to understand way and told how to improve bone health instead of having to read the brochure. It was short and concise. [Participant 40, white, male, age 59]

[Letter B] seemed less stressful reading through it. It made the bad news appear that there is still something you could do with the calcium and exercise. [Participant 20, white, female, age 56]

If you can explain a T-score in a sentence it is good to include that and the reference point in letter B to a 30 year old is good. [Participant 46, African American, male, age 53]

Participants who preferred letter C the least said that it needed more information:

Too vague. It left me hanging. [Participant 123, African American, female, age 57]

[Letter C] didn’t really explain as much as the other two letters. [Participant 11, white, male, age 64]

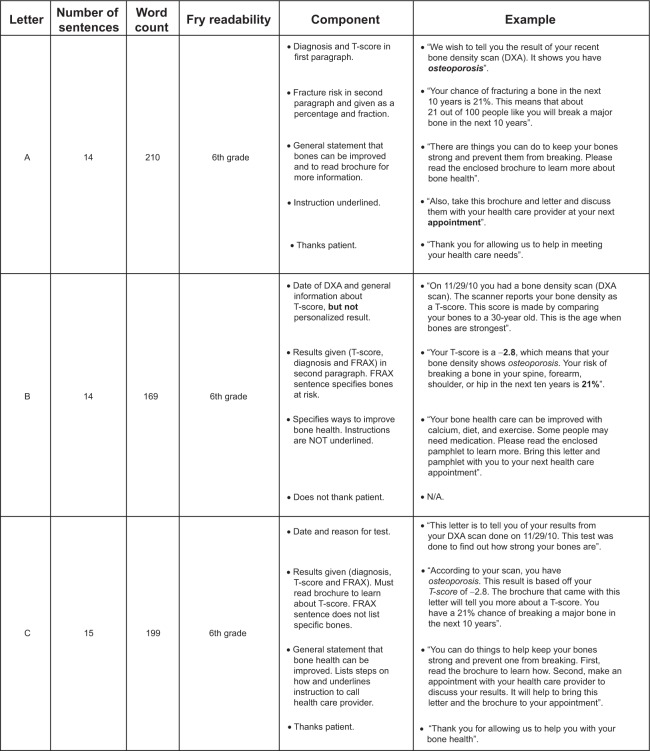

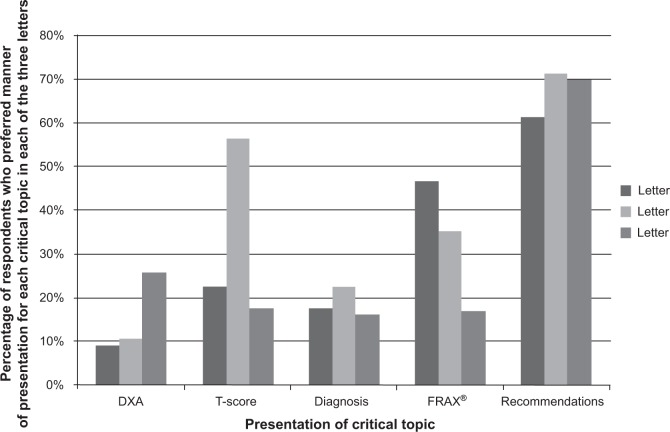

Preference for five critical topics

When asked which letter best explained and described the DXA test, 26% preferred the phrasing in letter C (Figure 4). In contrast, subjects most liked the descriptions of the T-score, the diagnosis, and the post-DXA recommendations provided in letter B. The explanation of the FRAX® was most liked in letter A. Conversely, the DXA topic was disliked most frequently in letter C, the T-score topic in letter B, and the FRAX® topic in letter A. The data that we collected regarding specific sections of the letters that participants liked and disliked provided us an opportunity to examine why specific phrasing was liked or disliked. Exemplars for each topic are presented in Table 4. Overall, it appeared that participants liked knowing their T-score and what that score meant. They also liked knowing that a 21% chance of fracture meant that 21 out of 100 people like them would fracture a bone in the next 10 years, and they liked getting a concise list of recommendations of things they could do to improve their bones.

Figure 4.

Respondent preference for the presentation of the five critical topics, by letter.

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool.

Table 4.

Frequency of participant responses on five critical topics in the letter and a typical qualitative response

| Critical topic | Response | Letter A | Letter B | Letter C |
|---|---|---|---|---|
| DXA | Like | 23 | 24 | 32 “It is very, very direct. This is why you had the test, because sometimes we don’t know why we had the test”. |
| | Dislike | 13 | 6 | 27 “Don’t like the wording. Letter A explained it better”. |
| T-score | Like | 35 | 68 “It eases the person into what the test results are and what that means for their health condition”. | 33 |
| | Dislike | 31 | 34 “Didn’t like the comparison to a 30-year old”. | 28 |
| Diagnosis | Like | 34 | 42 “It explained T-score, how to read it, and what the results meant”. | 29 |
| | Dislike | 18 | 14 | 20 “It doesn’t really say what osteoporosis is. Could be more definitive”. |
| FRAX® | Like | 73 “This part explains percentage well. Says exactly 21 out of 100 people, which is easily understood even for people who don’t understand percentages”. | 57 | 29 |
| | Dislike | 24 “My mind stays on bone fracturing, wording is scary to some who might frighten easily”. | 14 | 21 |
| Recommendations | Like | 76 | 89 “It gives you information on what you can do to improve your bone health”. | 79 |
| | Dislike | 29 “You need to tell people about the calcium, diet and exercise, so they know what to talk about with the doctor”. | 30 | 20 |

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool.

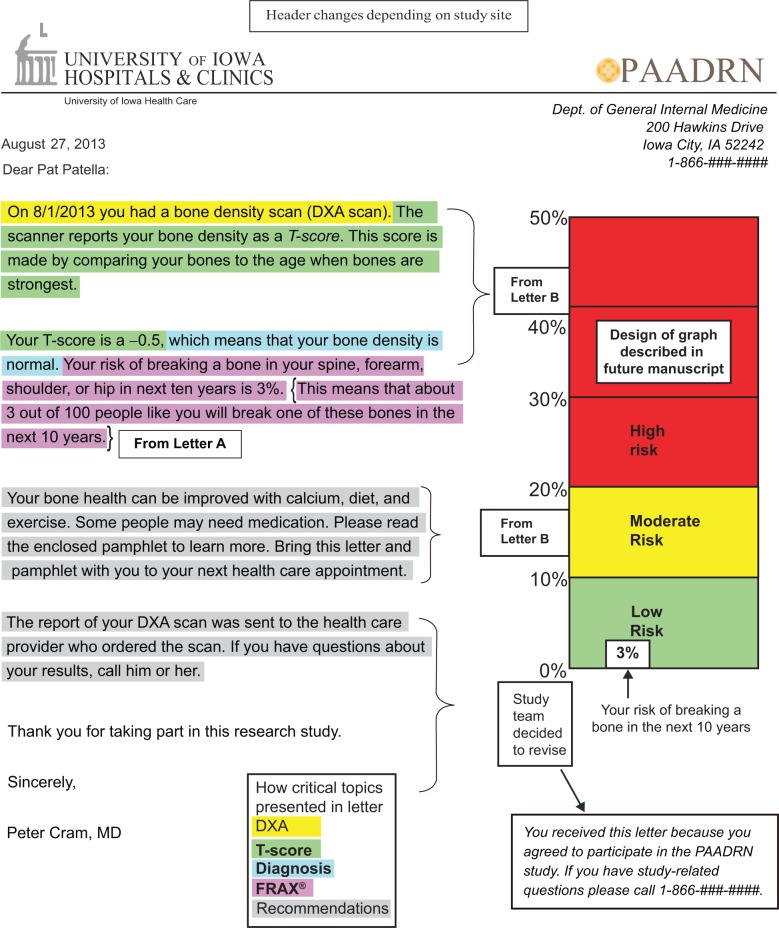

Creating final result letter

We created a final DXA result letter by synthesizing the feedback from all participants regarding the strengths and weaknesses of the individual letters and the suggestions that participants provided with respect to the five key elements included in the letters (Figure 5). Our final letter was largely based upon letter B, which was generally viewed the most favorably, but we made modifications to reflect participant feedback. For example, we chose to remove two sentences explaining the T-score, because respondents frequently cited these phrases as areas of confusion. Likewise, since many participants liked the portion of letter A that provided FRAX® as both a percentage and a fraction, we added this sentence to letter B. The final DXA result letter used for the PAADRN trial is reported elsewhere.

Figure 5.

Final DXA result letter, with annotations on how it was created.

Abbreviations: DXA, dual-energy X-ray absorptiometry; FRAX®, Fracture Risk Assessment Tool.
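Participants favored seeing fracture risk as both a percentage and a count out of 100 people. A minimal sketch of that dual presentation follows; the function name and the exact wording of the output are hypothetical and are not the text of the study letters.

```python
def describe_risk(probability):
    """Express a 10-year fracture probability as a percentage and as a count out of 100."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    # Round to a whole number so the same figure serves as the percentage and the count.
    per_hundred = round(probability * 100)
    return f"{per_hundred}% (about {per_hundred} out of 100 people)"
```

For a probability of 0.21, the example cited by participants, `describe_risk(0.21)` returns `"21% (about 21 out of 100 people)"`.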

Discussion

We report on the process of developing a DXA result notification letter using a mixed-methods approach to solicit guidance from our target audience of adults aged 50 and older who would likely be considered for bone density testing. The feedback that we received helped us to improve our letter in several ways. Moreover, our experience should serve as a guide to both researchers and clinicians who are looking to pilot test and implement new mechanisms for communicating complex test results to patients.

A number of our findings warrant elaboration. First, the rationale for developing letters to communicate test results deserves explanation. It is now recognized that anywhere from 3% to 30% of abnormal test results may simply be missed. The causes of missed test results are complex, but the net effect is that patients do not receive the treatments they should after abnormal diagnostic tests.15 Directly communicating results to patients is one mechanism for preventing missed test results. Communicating test results to patients not only can improve patient safety by preventing missed test results, but it also can help to activate patients and engage them in the care and management of their health condition. Whether the medium is a mailed letter, secure email, or an electronic patient portal embedded in an electronic health record, few empirical studies have evaluated how best to communicate complex medical results to patients.38–40 Communicating test results is made more complex by the varying levels of health literacy and numeracy among patients, which must be accounted for in any communication materials.10,41,42 The current study not only chronicles our efforts to develop a letter for communicating DXA results but also offers researchers, clinicians, and health communication experts guidance on the general technique and methods we used to develop our materials.

Second, it is important to discuss briefly the aspects of our letters that patients liked and disliked. One of the three letters (letter B) was clearly preferred over the others. Participants cited the fact that letter B was concise and provided the specific steps that subjects could take to improve their bone health. Several participants also mentioned that letter B made them more hopeful – an important consideration when balancing the need to warn patients adequately about their test result without unduly frightening them.

It is important to mention several limitations in our study. First, our sample was highly educated, with only 22% of respondents reporting a high school education or less. A population with greater or lesser educational attainment may have yielded different results. Second, our study population was predominantly composed of non-Hispanic whites and African Americans drawn from two medical centers. Letter preferences might differ in other settings and populations.

Third, our written letter was developed for a single test (DXA). Adapting our mailed letter to other modes of communication (eg, email, text message) should be done with care. Likewise, while our study provides a template for the generation of communication materials for other test results, we are uncertain of the direct translation of our study results to other diagnostic tests.

One strength of this study is the care taken to communicate a test result to patients in a feasible manner. While this test result letter is not meant to serve as a consultation with a health care provider, it does increase the likelihood that patients will learn of their DXA results and receive some educational information on osteoporosis. The letter or mode of notification could be tailored to an individual patient based on DXA result, age, sex, culture, or comorbidities. However, tailoring decreases the feasibility and increases the cost of implementing a direct-to-patient result notification process. An additional strength is that we created a letter following the constructs of the Health Belief Model to motivate behavior change, and we elicited feedback from a diverse adult population. These processes allowed us to create a final letter for the PAADRN trial that was theory driven and based on guidance from clinicians, health communication experts, and our target audience.

Conclusion

In conclusion, this study summarizes the methods, findings, and challenges involved in developing a letter to notify patients of their DXA results. We describe the conceptual underpinnings, study design, data collection, data analysis, and results of our efforts to develop a letter to communicate a complex medical test result to a diverse patient population. As noted in this manuscript, people prefer health communication materials that are easy to understand. Developing such materials requires careful attention to health literacy, numeracy, and the emotional response patients may have when receiving their test results. Our experience should serve as a guide to others who are attempting to develop such communication materials.

Supplementary materials

Interview script for eliciting feedback on letter text

Acknowledgments

The authors would like to thank Shelly Campo and Natoshia Askelson for their expert advice on the design of the letters. We would also like to thank Brandi Robinson, Mollie Giller, Rebecca Burmeister, Sylvie Hall, Roslin Nelson, and Akeba Mitchell for their assistance in data collection and coding. This study is a pilot trial as part of the PAADRN study that is registered at clinicaltrials.gov (Trial NCT01507662). Dr Solimeo received partial support from the Department of Veterans Affairs, Center for Comprehensive Access & Delivery Research and Evaluation, Iowa City VA Health Care System, Iowa City, IA. Dr Cram is supported by a K24 award from the National Institute of Arthritis and Musculoskeletal and Skin Diseases (AR062133). This work is also funded by R01 AG033035 from the National Institute on Aging at the US National Institutes of Health. The US Department of Health and Human Services, National Institutes of Health’s National Institute on Aging had no role in the analysis or interpretation of data or the decision to report these data in a peer-reviewed journal.

Footnotes

Disclosure

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the United States government. The authors report no conflicts of interest in this work.

References

- 1. Gass M, Dawson-Hughes B. Preventing osteoporosis-related fractures: an overview. Am J Med. 2006;119(4 Suppl 1):S3–S11. doi: 10.1016/j.amjmed.2005.12.017.

- 2. Lane NE. Epidemiology, etiology, and diagnosis of osteoporosis. Am J Obstet Gynecol. 2006;194(2 Suppl):S3–S11. doi: 10.1016/j.ajog.2005.08.047.

- 3. Osteoporosis prevention, diagnosis, and therapy. NIH Consens Statement. 2000;17(1):1–45.

- 4. Warriner AH, Saag KG. Osteoporosis diagnosis and medical treatment. Orthop Clin North Am. 2013;44(2):125–135. doi: 10.1016/j.ocl.2013.01.005.

- 5. Screening for Osteoporosis: Recommendation Statement [webpage on the Internet] Rockville, MD, USA: US Preventive Services Task Force; 2011. [Accessed February 3, 2012]. [cited January 2011]. Available from: http://www.uspreventiveservicestaskforce.org/uspstf10/osteoporosis/osteors.htm.

- 6. Center JR, Nguyen TV, Schneider D, et al. Mortality after all major types of osteoporotic fracture in men and women: an observational study. Lancet. 1999;353:878. doi: 10.1016/S0140-6736(98)09075-8.

- 7. Kanis JA, Oden A, Johnell O, et al. The use of clinical risk factors enhances the performance of BMD in the prediction of hip and osteoporotic fractures in men and women. Osteoporos Int. 2007;18(8):1033–1046. doi: 10.1007/s00198-007-0343-y.

- 8. Cummings SR, Melton LJ. Epidemiology and outcomes of osteoporotic fractures. Lancet. 2002;359(9319):1761–1767. doi: 10.1016/S0140-6736(02)08657-9.

- 9. White S. Assessing the Nation’s Health Literacy: Key concepts and findings of the National Assessment of Adult Literacy (NAAL) Chicago, IL, USA: American Medical Association Foundation; 2008. [Accessed April 25, 2012]. Available from: http://www.ama-assn.org/resources/doc/ama-foundation/hl_report_2008.pdf.

- 10. National Research Council. In: Health Literacy: A Prescription to End Confusion. Nielsen-Bohlman L, Panzer AM, Kindig DA, editors. Washington, DC: The National Academies Press; 2004. [Accessed August 29, 2013]. Available from: http://www.nap.edu/catalog.php?record_id=10883.

- 11. Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Crotty K. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med. 2011;155(2):97–107. doi: 10.7326/0003-4819-155-2-201107190-00005.

- 12. Schapira MM, Fletcher KE, Gilligan MA, et al. A framework for health numeracy: how patients use quantitative skills in health care. J Health Commun. 2008;13(5):501–517. doi: 10.1080/10810730802202169.

- 13. Lipkus IM, Samsa G, Rimer BK. General performance on a numeracy scale among highly educated samples. Med Decis Making. 2001;21(1):37–44. doi: 10.1177/0272989X0102100105.

- 14. Kirsch IS, Jungeblut A, Jenkins L, Kolstad A. Adult Literacy in America: A First Look at the Results of the National Adult Survey. Washington, DC: US Department of Education; 1993. [Accessed December 30, 2013]. Available from: http://nces.ed.gov/pubs93/93275.pdf.

- 15. Cram P, Rosenthal GE, Ohsfeldt R, Wallace RB, Schlechte J, Schiff GD. Failure to recognize and act on abnormal test results: the case of screening bone densitometry. Jt Comm J Qual Patient Saf. 2005;31(2):90–97. doi: 10.1016/s1553-7250(05)31013-0.

- 16. Pickney CS, Arnason JA. Correlation between patient recall of bone densitometry results and subsequent treatment adherence. Osteoporos Int. 2005;16(9):1156–1160. doi: 10.1007/s00198-004-1818-8.

- 17. Cadarette SM, Beaton DE, Gignac MA, Jaglal SB, Dickson L, Hawker GA. Minimal error in self-report of having had DXA, but self-report of its results was poor. J Clin Epidemiol. 2007;60(12):1306–1311. doi: 10.1016/j.jclinepi.2007.02.010.

- 18. Gardner MJ, Brophy RH, Demetrakopoulos D, et al. Interventions to improve osteoporosis treatment following hip fracture. A prospective, randomized trial. J Bone Joint Surg Am. 2005;87(1):3–7. doi: 10.2106/JBJS.D.02289.

- 19. Solomon DH, Polinski JM, Stedman M, et al. Improving care of patients at-risk for osteoporosis: a randomized controlled trial. J Gen Intern Med. 2007;22(3):362–367. doi: 10.1007/s11606-006-0099-7.

- 20. Solomon DH, Finkelstein JS, Polinski JM, et al. A randomized controlled trial of mailed osteoporosis education to older adults. Osteoporos Int. 2006;17(5):760–767. doi: 10.1007/s00198-005-0049-y.

- 21. Solomon DH, Katz JN, La Tourette AM, Coblyn JS. Multifaceted intervention to improve rheumatologists’ management of glucocorticoid-induced osteoporosis: a randomized controlled trial. Arthritis Rheum. 2004;51(3):383–387. doi: 10.1002/art.20403.

- 22. Colón-Emeric CS, Lyles KW, House P, et al. Randomized trial to improve fracture prevention in nursing home residents. Am J Med. 2007;120(10):886–892. doi: 10.1016/j.amjmed.2007.04.020.

- 23. Morisky DE, Bowler MH, Finlay JS. An educational and behavioral approach toward increasing patient activation in hypertension management. J Community Health. 1982;7(3):171–182. doi: 10.1007/BF01325513.

- 24. Pilling SA, Williams MB, Brackett RH, et al. Part I, patient perspective: activating patients to engage their providers in the use of evidence-based medicine: a qualitative evaluation of the VA Project to Implement Diuretics (VAPID) Implement Sci. 2010;5:23. doi: 10.1186/1748-5908-5-23.

- 25. Buzza CD, Williams MB, Vander Weg MW, Christensen AJ, Kaboli PJ, Reisinger HS. Part II, provider perspectives: should patients be activated to request evidence-based medicine? A qualitative study of the VA project to implement diuretics (VAPID) Implement Sci. 2010;5:24. doi: 10.1186/1748-5908-5-24.

- 26. Cram P, Schlechte J, Rosenthal GE, Christensen AJ. Patient preference for being informed of their DXA scan results. J Clin Densitom. 2004;7(3):275–280. doi: 10.1385/jcd:7:3:275.

- 27. Cram P, Schlechte J, Christensen A. A randomized trial to assess the impact of direct reporting of DXA scan results to patients on quality of osteoporosis care. J Clin Densitom. 2006;9(4):393–398. doi: 10.1016/j.jocd.2006.09.002.

- 28. Sung S, Forman-Hoffman V, Wilson MC, Cram P. Direct reporting of laboratory test results to patients by mail to enhance patient safety. J Gen Intern Med. 2006;21(10):1075–1078. doi: 10.1111/j.1525-1497.2006.00553.x.

- 29. Doheny MO, Sedlak CA, Estok PJ, Zeller R. Osteoporosis knowledge, health beliefs, and DXA T-scores in men and women 50 years of age and older. Orthop Nurs. 2007;26(4):243–250. doi: 10.1097/01.NOR.0000284654.68215.de.

- 30. University of Iowa. Patient Activation After DXA Result Notification (PAADRN) [Accessed March 27, 2014]. Available from: http://clinicaltrials.gov/ct2/show/NCT01507662. NLM identifier: NCT01507662.

- 31. Edmonds SW, Wolinsky FD, Christensen AJ, et al. PAADRN Investigators The PAADRN Study: a design for a randomized controlled practical clinical trial to improve bone health. Contemp Clin Trials. 2013;34(1):90–100. doi: 10.1016/j.cct.2012.10.002.

- 32. Rosenstock IM, Strecher VJ, Becker MH. Social learning theory and the Health Belief Model. Health Educ Q. 1988;15(2):175–183. doi: 10.1177/109019818801500203.

- 33. Fry E. The readability graph validated at primary levels. The Reading Teacher. 1969;22(6):534–538.

- 34. Centers for Disease Control and Prevention. Simply Put: A guide for creating easy-to-understand materials. Atlanta, GA, USA: Centers for Disease Control and Prevention; 2009. [Accessed December 19, 2013]. Available from: http://www.cdc.gov/healthliteracy/pdf/simply_put.pdf.

- 35. Fagerlin A, Zikmund-Fisher BJ, Ubel PA, Jankovic A, Derry HA, Smith DM. Measuring numeracy without a math test: development of the Subjective Numeracy Scale. Med Decis Making. 2007;27(5):672–680. doi: 10.1177/0272989X07304449.

- 36. Chew LD, Bradley KA, Boyko EJ. Brief questions to identify patients with inadequate health literacy. Fam Med. 2004;36(8):588–594.

- 37. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. 3rd ed. Thousand Oaks, CA, USA: SAGE Publications; 2008.

- 38. Walker J, Leveille SG, Ngo L, et al. Inviting patients to read their doctors’ notes: patients and doctors look ahead: patient and physician surveys. Ann Intern Med. 2011;155(12):811–819. doi: 10.7326/0003-4819-155-12-201112200-00003.

- 39. Feeley TW, Shine KI. Access to the medical record for patients and involved providers: transparency through electronic tools. Ann Intern Med. 2011;155(12):853–854. doi: 10.7326/0003-4819-155-12-201112200-00010.

- 40. Delbanco T, Walker J, Bell SK, et al. Inviting patients to read their doctors’ notes: a quasi-experimental study and a look ahead. Ann Intern Med. 2012;157(7):461–470. doi: 10.7326/0003-4819-157-7-201210020-00002.

- 41. US Department of Health and Human Services. National Action Plan to Improve Health Literacy. Washington, DC: Office of Disease Prevention and Health Promotion; 2010. [Accessed August 29, 2013]. Available from: http://www.health.gov/communication/hlactionplan/pdf/Health_Literacy_Action_Plan.pdf.

- 42. 111th Congress. The Patient Protection and Affordable Care Act. Washington, DC: Senate and House of Representatives of the United States of America; 2010. [Accessed August 29, 2013]. Available from: http://www.gpo.gov/fdsys/pkg/BILLS-111hr3590enr/pdf/BILLS-111hr3590enr.pdf.
