Abstract

Glaucoma, the second leading cause of blindness worldwide, is an optic neuropathy characterized by distinctive changes in the optic nerve head (ONH) and visual field. The detection of glaucomatous progression is one of the most important and most challenging aspects of primary open-angle glaucoma (OAG) management. In this context, ocular imaging equipment is increasingly sophisticated, providing quantitative tools to measure structural changes in ONH topography, an essential element in determining whether the disease is getting worse. In particular, the Heidelberg Retina Tomograph (HRT), a confocal scanning laser technology, has been commonly used to detect glaucoma and monitor its progression.

In this paper, we present a new framework for detection of glaucomatous progression using HRT images. In contrast to previous works that do not integrate a priori knowledge available in the images, particularly the spatial pixel dependency in the change detection map, the Markov Random Field is proposed to handle such dependency. To our knowledge, this is the first application of the Variational Expectation Maximization (VEM) algorithm for inferring topographic ONH changes in the glaucoma progression detection framework. Diagnostic performance of the proposed framework is compared to recently proposed methods of progression detection.

Keywords: Glaucoma, Markov field, change detection, variational approximation

1. Introduction

Glaucoma is characterized by structural changes in the optic nerve head (neuroretinal rim thinning and optic nerve cupping are usually the first visible signs) and visual field defects. Elevated intraocular pressure (IOP) is a major risk factor for the disease [1].

However, some subjects with moderately high IOP may never develop glaucoma, while others may have glaucoma without ever having an elevated IOP. Glaucoma is initially asymptomatic for several years; the first clinical symptom is a loss of peripheral vision, followed in advanced disease by irreversible blindness [2]. Because most people with glaucoma have no early symptoms or pain, it is crucial to develop clinical routines to diagnose the disease before permanent damage to the optic nerve head occurs.

Since the introduction of the ophthalmoscope by Helmholtz in 1851, ophthalmologists have been able to view changes in optic nerve head topography associated with glaucoma. Nevertheless, the clinical examination of the ONH remains subjective (inter-observer diagnosis variability) and non-reproducible [3].

Because of this, ocular imaging instruments have been developed as potential tools to quantitatively measure ONH topography, which is essential for clinical diagnosis. For instance, the Heidelberg Retina Tomograph (HRT; Heidelberg Engineering, Heidelberg, Germany), a confocal scanning laser technology, has been commonly used for glaucoma diagnosis since its commercialization 20 years ago [4].

In order to assist the expert in qualitative and quantitative analysis, automatic image processing methods have been proposed. These methods aim to facilitate the interpretation of the obtained images by objectively measuring the ONH structure and detecting changes between a reference image (baseline exam) and other images (follow-up exams).

A limited number of studies have addressed the change detection and classification problems for HRT images. In [5], the Topographic Change Analysis (TCA) was proposed for assessing glaucomatous changes. This technique has been shown to classify progressing and stable eyes with reasonably good sensitivity and specificity. However, TCA does not exploit additional available knowledge, such as the spatial dependency among neighboring pixels (i.e., the status of a pixel depends on the status of its neighborhood). The proper orthogonal decomposition (POD) method [7] utilizes the spatial relationship among pixels only indirectly, by controlling the family-wise Type I error rate. In [6], a retinal surface reflectance model and a homomorphic filter were used to detect progression from changes in retinal reflectance over time. As with POD, dependency among spatial locations was considered only indirectly, by controlling the family-wise Type I error rate. To accommodate false positive change detections, a threshold-based classifier is generally used [7]. Nevertheless, the choice of the threshold (usually 5% of the number of pixels within the optic disc) may affect the robustness of the classification method. Indeed, lowering the threshold flags more eyes as progressing and so increases the risk of false positive classifications, whereas raising it increases the risk of false negative classifications.

In this paper, we propose a new strategy for glaucoma progression detection. We are particularly interested in determining whether directly modeling and integrating the spatial dependency of pixels within the change detection model can improve glaucoma progression detection. Specifically, a Markov Random Field (MRF) is proposed to exploit the statistical correlation of intensities among neighboring pixels [8]. Moreover, in order to account for noise in the estimation scheme, we treat change detection as a missing data problem in which we jointly estimate the noise hyperparameters and the change detection map. The most widely used procedure for estimating the parameters of such problems is the Expectation-Maximization (EM) algorithm [9]. However, the optimization step is intractable in our case because of the MRF prior we use for the change detection map. To overcome this problem, the Variational Expectation Maximization (VEM) algorithm is proposed. VEM has been successfully employed for blind source separation [10], image deconvolution [11] and image super-resolution [12].

Once the change detection map is estimated, a classification step is performed. This step aims at classifying an HRT image into the “non-progressing” or “progressing” glaucoma class, based on the estimated change detection map. In contrast to previous works, which used a threshold-based classifier [7], a two-layer fuzzy classifier is proposed. The importance of fuzzy set theory stems from the fact that much of the information on which human decisions are based is possibilistic rather than deterministic [13]. We show that the proposed classifier increases the accuracy of non-progressing versus progressing classification.

The remainder of the paper is organized as follows. In the next section, the proposed glaucoma progression detection scheme is presented. The classification step is described in section 3. Then, in section 4, results obtained by applying the proposed scheme to real data are presented, and we compare the diagnostic accuracy, robustness and efficiency of the proposed approach with three existing progression detection approaches: topographic change analysis (TCA) [5], proper orthogonal decomposition (POD) [7] and the reflectance-based method [6].

2. Glaucoma progression detection scheme

2.1. Intensity normalization

The curvature of the retina and differences in the angle of imaging the eye between exams may lead to an inhomogeneous background (illuminance) and introduce changes among follow-up exams [6]. Although this problem is not due to glaucomatous progression, it would have an influence on subsequent statistical analysis and quantitative estimates of progression. To this end, a reflectance-based normalization step is performed. Assuming that the optic nerve head is Lambertian, each HRT image I can be simplified and formulated as a product I = L × F where F is the reflectance and L is the illuminance. Because illumination varies slowly over the field of view, the illuminance L is assumed to be contained in the low frequency component of the image. Therefore, the reflectance F can be approximated by the high frequency component of the image. The reflectance component F describes the surface reflectivity of the retina, whereas the illumination component L models the light sources. The reflectance image can then be used as an input to the change detection algorithm [14, 15, 6].

Several methods have been proposed to solve the problem of reflectance and illuminance estimation including homomorphic filtering [14], the scale retinex algorithm [16, 17, 18] and the isotropic diffusion approach [19]. The reflectance based method of detecting progression uses a homomorphic filter to estimate retinal reflectance [6]. In our new glaucoma progression detection scheme presented in this study, we used the scale retinex approach. The retinex approach has been successfully utilized in many applications, including medical radiography [20], underwater photography [21] and weather images enhancement [22].
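As an illustration of the decomposition described above, one common single-scale formulation of retinex works in the log domain, where the product I = L × F becomes a sum and the illuminance is estimated by low-pass filtering. The Gaussian surround G_s and its scale s are assumptions made for this sketch; the retinex variant and settings actually used in this study are those of [16, 17, 18].

```latex
\log I(x, y) = \log L(x, y) + \log F(x, y), \qquad
\hat{L}(x, y) = (G_s * I)(x, y), \qquad
\log \hat{F}(x, y) = \log I(x, y) - \log\big[(G_s * I)(x, y)\big]
```

Under this sketch, a larger scale s attributes only very slow intensity variations to the illuminance, so more of the image structure is kept in the estimated reflectance.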

2.2. Change detection

Let us consider the detection of changes in a pair of amplitude images. Change detection can be formulated as a binary hypothesis testing problem in which the null hypothesis “H0: No change” is tested against the alternative hypothesis “H1: Change”. We denote by I0 and I1 two coregistered images acquired over the same scene at times t0 and t1, respectively (t1 > t0). A difference image R with M pixels is computed as the pixelwise absolute difference between I0 (a baseline image) and I1 (a follow-up image): R = abs(I0 – I1). The difference image R = {Ri ∣ i = 1, 2, …, M} can be modeled as R = X + N, where X is the true (or ideal) difference image and N is the noise (assumed to be white and normally distributed). Finally, change detection is handled through the introduction of change class assignments Q = {Qi ∣ Qi ∈ {H0, H1}, i = 1, 2, …, M}, where Qi represents the change class assignment at pixel i.

The Bayesian model aims at estimating the model variables (R, X, Q) and parameters Θ. This requires the definition of the likelihood and prior distributions. The model is given by the joint distribution:

$$p(R, X, Q; \Theta) = p(R \mid X; \Theta)\, p(X \mid Q; \Theta)\, p(Q; \Theta) \tag{1}$$

We now present each term of the Bayesian model.

Likelihood

The definition of the likelihood depends on the noise model. In this study, we assume that the noise is white and normally distributed:

$$p(R \mid X; \Theta) = \prod_{i=1}^{M} \mathcal{N}(R_i;\, X_i, \sigma_N^2) \tag{2}$$

where $\mathcal{N}$ denotes the normal distribution and σN is the noise standard deviation.

Model prior

To describe the marginal distribution of X conditioned on Hl, with l ∈ {0, 1}, we opted for the normal distribution:

$$p(X_i \mid Q_i = H_l; \Theta) = \mathcal{N}(X_i;\, \mu_l, \sigma_l^2) \tag{3}$$

where μl and σl stand for the mean and the standard deviation respectively.

Concerning the change variable, we assume that p(Q; Θ) is a spatial Markov prior. In this study we adopt the Potts model with interaction parameter β:

$$p(Q; \Theta) = \frac{1}{Z} \exp\Big( \beta \sum_{i \sim j} \delta(Q_i, Q_j) \Big) \tag{4}$$

where Z is the normalization constant, δ is the Kronecker delta function and i ∼ j denotes pairs of neighboring pixels. Hence, the energy function favors the generation of homogeneous areas, reducing the impact of speckle noise, which could affect the classification results of the HRT images [23]. The parameter β tunes the importance of the spatial dependency constraint: high values of β correspond to a strong spatial constraint. Note that we have opted for the 8-connected neighborhood system. The estimation procedure for the model parameters (X, Q) and hyperparameters Θ = {σN, μl, σl, β} is presented in the next section.
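To make the effect of the Potts prior concrete, the following minimal sketch computes the unnormalized log-prior of a binary change map, assuming the usual form β Σ δ(Qi, Qj) summed over 8-connected neighbor pairs. The function name `potts_log_prior` and the toy maps are hypothetical and only serve as an illustration.

```python
def potts_log_prior(labels, beta):
    """Unnormalized Potts log-prior: beta * number of agreeing neighbor pairs.

    `labels` is a 2-D list of class labels (0 for H0, 1 for H1). Each pair of
    8-connected neighbors is counted once; the constant log Z is omitted.
    """
    rows, cols = len(labels), len(labels[0])
    # Four "forward" offsets visit every 8-connected pair exactly once.
    offsets = [(0, 1), (1, -1), (1, 0), (1, 1)]
    agreements = 0
    for i in range(rows):
        for j in range(cols):
            for di, dj in offsets:
                ni, nj = i + di, j + dj
                if 0 <= ni < rows and 0 <= nj < cols:
                    agreements += labels[i][j] == labels[ni][nj]
    return beta * agreements


# A homogeneous map versus the same map with one isolated "changed" pixel.
homogeneous = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
speckled = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
print(potts_log_prior(homogeneous, beta=1.0))  # 20.0
print(potts_log_prior(speckled, beta=1.0))     # 12.0
```

The isolated pixel disagrees with all eight of its neighbors, so its configuration receives a lower prior score; increasing β widens this gap, which is how the prior discourages speckle-like detections.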

Variational Expectation-Maximization

Once our model is established, we address the inference problem. This step consists in estimating the model parameters and hyperparameters. However, exact inference is intractable due to the Markov prior we adopted for the change detection map, so we turn to approximate algorithms that search for distributions close to the correct posterior distribution. One of the most commonly used procedures is the Markov chain Monte Carlo (MCMC) technique [24]. Unfortunately, this technique requires large computational resources and fine tuning of model hyperparameters. As an alternative, the Iterated Conditional Modes (ICM) procedure [25] is widely used because it is easy to implement. However, ICM does not take into account uncertainties in the hidden variables (i.e., Q) during inference, so it may converge to poor local minima [26]. In this study, a variational approximation of the Expectation Maximization (EM) algorithm is proposed to alleviate the computational burden associated with stochastic MCMC approaches. In contrast to the MCMC procedure, the proposed VEM approach does not require priors on the model parameters for inference to be carried out. Indeed, the EM procedure can be viewed as an alternating maximization of a function F: F(p, Θ) = Ep[log p(R, X, Q; Θ)] + T(p), where Ep denotes the expectation with respect to p and T(p) = −Ep[log p(X, Q)] is the entropy of p. At iteration [r], and given the current hyperparameter values Θ[r–1], the EM procedure consists of:

$$\text{E-step:} \quad p^{[r]} = \arg\max_{p \in \mathcal{P}} F(p, \Theta^{[r-1]})$$

$$\text{M-step:} \quad \Theta^{[r]} = \arg\max_{\Theta} F(p^{[r]}, \Theta)$$

where P is the set of all probability distributions on X × Q. The optimum of the E-step is the posterior p(X, Q∣R; Θ[r–1]). Unfortunately, this posterior is intractable for our model. Hence, the VEM procedure is derived from EM by approximating p(X, Q∣R; Θ[r–1]) with a product of two distributions, qX,Q = qX qQ, where qX and qQ are probability distributions on X and Q, respectively. At iteration [r], the updating rules become:

$$\text{E-X step:} \quad q_X^{[r]} = \arg\max_{q_X} F(q_X\, q_Q^{[r-1]}, \Theta^{[r-1]})$$

$$\text{E-Q step:} \quad q_Q^{[r]} = \arg\max_{q_Q} F(q_X^{[r]}\, q_Q, \Theta^{[r-1]})$$

$$\text{M-step:} \quad \Theta^{[r]} = \arg\max_{\Theta} F(q_X^{[r]}\, q_Q^{[r]}, \Theta)$$

E-step

The approximate posterior distribution qX can be computed as follows. Keeping only the terms of log p(X∣R, Q; Θ[r–1]) that depend on X, we have:

| (5) |

Notice that this is the exponent of a Gaussian distribution with mean μX and covariance matrix ΣX (I denotes the identity matrix). The distribution that maximizes F with respect to qX is therefore Gaussian: qX ~ N(μX, ΣX).
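For reference, with a Gaussian likelihood of noise standard deviation σN, Gaussian class-conditional priors with parameters (μl, σl) and a factorized approximation qX qQ, completing the square pixel by pixel gives Gaussian moments of the following form. This is a sketch derived from the model assumptions stated above, written with per-pixel scalars (the diagonal entries of ΣX, in notation introduced here); it is not a transcription of the original expressions.

```latex
\frac{1}{\Sigma_{X,ii}} = \frac{1}{\sigma_N^2} + \sum_{l \in \{0,1\}} \frac{q_Q(Q_i = H_l)}{\sigma_l^2},
\qquad
\mu_{X,i} = \Sigma_{X,ii} \left( \frac{R_i}{\sigma_N^2} + \sum_{l \in \{0,1\}} \frac{q_Q(Q_i = H_l)\,\mu_l}{\sigma_l^2} \right)
```

In words, the posterior mean at each pixel is a precision-weighted compromise between the observed difference Ri and the class means, with each class weighted by its current probability under qQ.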

Due to the Markov prior, the exact computation of qQ is intractable. To overcome this problem, many studies have proposed the use of the mean field algorithm [27, 8]. According to this theory, given a single element in a random field, the effect of the other elements can be approximated by the effect of their mean. The mean field approximation is a particular case of the mean field-like approximations, in which the neighborhood of a site at position i is set to constants [27]. The distribution qQ, approximated by the mean field-like algorithm, is given by [28]:

| (6) |

where the neighbors are fixed to a given configuration of Q, created from X[r] and updated at each iteration.
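The mean field-like idea can be sketched as follows: each pixel's class probability combines a Gaussian data term with a Potts term computed against a fixed neighbor configuration. This is a simplified illustration, not the update of Eq. 6: it assumes a point estimate of X (ignoring its posterior variance), two classes, and an 8-connected neighborhood, and the function names are hypothetical.

```python
import math


def gaussian_log_pdf(x, mean, std):
    """Log-density of a univariate normal distribution."""
    return -0.5 * math.log(2.0 * math.pi * std * std) - (x - mean) ** 2 / (2.0 * std * std)


def mean_field_like_update(x, fixed_labels, means, stds, beta):
    """Return q[i][j], the approximate probability that pixel (i, j) is in class H1.

    x            : 2-D list, current point estimate of the true difference image X
    fixed_labels : 2-D list of 0/1, neighbor configuration held constant
    means, stds  : per-class Gaussian parameters (index 0 for H0, 1 for H1)
    beta         : Potts interaction parameter
    """
    rows, cols = len(x), len(x[0])
    neighbors = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]
    q = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            scores = []
            for label in (0, 1):
                # Number of in-bounds neighbors whose fixed label agrees with `label`.
                agree = sum(
                    fixed_labels[i + di][j + dj] == label
                    for di, dj in neighbors
                    if 0 <= i + di < rows and 0 <= j + dj < cols
                )
                scores.append(gaussian_log_pdf(x[i][j], means[label], stds[label]) + beta * agree)
            # Normalize over the two classes in a numerically stable way.
            top = max(scores)
            weights = [math.exp(s - top) for s in scores]
            q[i][j] = weights[1] / (weights[0] + weights[1])
    return q


# Toy example: one bright pixel surrounded by unchanged neighbors.
x = [[0.1, 0.2, 0.1], [0.0, 2.0, 0.1], [0.2, 0.1, 0.0]]
labels = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
q_no_prior = mean_field_like_update(x, labels, means=(0.0, 2.0), stds=(1.0, 1.0), beta=0.0)
q_with_prior = mean_field_like_update(x, labels, means=(0.0, 2.0), stds=(1.0, 1.0), beta=1.0)
print(q_no_prior[1][1], q_with_prior[1][1])
```

With β = 0 the center pixel is judged on its intensity alone; with β = 1 its eight unchanged neighbors pull its change probability down sharply, which is the smoothing behavior the Markov prior is meant to provide.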

M-step

This step aims at estimating the model hyperparameters Θ = {σN, μl, σl, β}:

We reparameterize the noise model by the inverse of σN. The derivative of EqX[log p(R∣X[r]; Θ)] with respect to this inverse is given by:

| (7) |

Setting this derivative to zero, we obtain the following update:

| (8) |

Setting to zero the derivatives of EqX qQ[log p(X[r]∣Q[r]; μl, σl)] with respect to (μl, σl), we get:

| (9) |

where αl = ∑i qQ(Qi = l).

The estimation of β[r] is carried out using a gradient algorithm.
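For orientation, when qX is Gaussian with per-pixel mean μX,i and variance ΣX,ii, and αl = ∑i qQ(Qi = l) as above, zeroing the derivatives of the expected Gaussian log-densities typically yields weighted moment updates of the following form. This is a sketch under those assumptions, with the per-pixel notation introduced here; it is not a transcription of Eqs. 8 and 9.

```latex
\sigma_N^2 = \frac{1}{M} \sum_{i=1}^{M} \Big[ (R_i - \mu_{X,i})^2 + \Sigma_{X,ii} \Big], \qquad
\mu_l = \frac{1}{\alpha_l} \sum_{i=1}^{M} q_Q(Q_i = l)\, \mu_{X,i}, \qquad
\sigma_l^2 = \frac{1}{\alpha_l} \sum_{i=1}^{M} q_Q(Q_i = l) \Big[ (\mu_{X,i} - \mu_l)^2 + \Sigma_{X,ii} \Big]
```

Each class parameter is thus a mean or variance of the estimated difference image, weighted by how strongly each pixel is currently assigned to that class.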

3. Classification

Once the change detection maps are estimated, we tackle the classification of glaucoma progression. This step aims at assigning an image to the non-progressor or the progressor class. To this end, a two-layer fuzzy classifier is proposed. On the one hand, in contrast to [7], no threshold is required: fuzzy set theory is used to quantify the membership degrees of a given image to each class (i.e., progressor and non-progressor). On the other hand, the use of a two-layer classifier instead of a one-layer classifier allows better modeling of the classification function [29]. Moreover, the fuzzy classifier we propose can be trained using only the control data (non-progressor eyes), since in some cases no prior knowledge of the changes due to glaucoma progression is available.

As in [7], we considered two features as input for the classifier: 1) feature1: the number of changed sites and 2) feature2: the residual image intensity R of changed sites. Note that only the loss of retinal height in neighboring areas is considered change due to glaucomatous progression because an increase in retinal height is considered improvement (possibly due to treatment or tissue rearrangement).

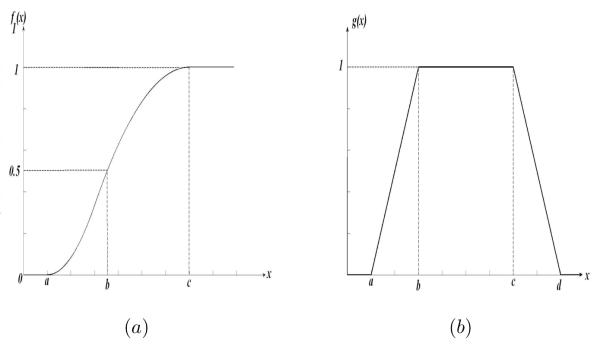

We now calculate the elementary membership degree to the non-progressor class for each feature, γnor,o with o ∈ {feature1, feature2}, using an S-membership function f whose expression is given in Eq. 10. Note that the range [a, c] defines the fuzzy region.

| (10) |

where a < b < c. The shape of f for a given triplet (a, b, c) is presented in Fig. 1.(a).
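As an illustration of an S-shaped membership function with a fuzzy region [a, c], the sketch below implements a Zadeh-style S-function. It assumes that b is the crossover point where the membership equals 0.5; with b = (a + c) / 2 it reduces to the classical Zadeh S-function. The exact parameterization of Eq. 10 may differ, and the name `s_membership` is hypothetical.

```python
def s_membership(x, a, b, c):
    """Zadeh-style S-function rising smoothly from 0 (x <= a) to 1 (x >= c).

    Assumption: b is the crossover point, where the membership is 0.5.
    """
    if not a < b < c:
        raise ValueError("expected a < b < c")
    if x <= a:
        return 0.0
    if x <= b:
        return 0.5 * ((x - a) / (b - a)) ** 2
    if x < c:
        return 1.0 - 0.5 * ((c - x) / (c - b)) ** 2
    return 1.0


# Values across the fuzzy region [0, 2] with crossover at 1.
for value in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(value, s_membership(value, a=0.0, b=1.0, c=2.0))
```

Outside [a, c] the membership is crisp (0 or 1), while inside it varies continuously, so small changes in a feature near the boundary produce small changes in the membership degree rather than a hard switch.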

Figure 1.

Different fuzzy membership functions used in the classification scheme: (a) S membership function and (b) trapezoidal membership function. The hyperparameters of these fuzzy membership functions are estimated with the Genetic algorithm using a training HRT dataset which contains 7 non-progressor eyes and 7 progressor eyes.

| (11) |

where γnor,feature1 = f(Nc; a1, b1, c1), γnor,feature2 = f(∑i∈C Ri; a2, b2, c2), Nc and C stand for the number of changed sites and the class of changed sites, respectively, and (a1, b1, c1, a2, b2, c2) are the hyperparameters of the S-membership functions. These hyperparameters are estimated with a genetic algorithm (see Appendix A) using longitudinal HRT data from a training dataset that contains 7 non-progressor eyes and 7 progressor eyes. Note that the training dataset is independent of the test dataset described in section 4.1.

The membership degree to the glaucoma class γglau is given by:

| (12) |

To decide if a given HRT image belongs to the glaucoma progressor class, another membership function is used. We opted for the trapezoidal function denoted by g as a membership function. The expression of g is given by :

| (13) |

where a3 < b3 < c3 < d3. The shape of g for a given quadruple (a3, b3, c3, d3) is presented in Fig. 1(b). As for the S-membership functions, a genetic algorithm was used to estimate this quadruple. The decision to classify an image into the glaucoma class depends on the output g(γglau). Note that this output depends on the quadruple (a3, b3, c3, d3) as well as on (a1, b1, c1) and (a2, b2, c2). If g(γglau) = 1, the image is assigned to the glaucoma progressor class.
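The second layer can be sketched with the standard trapezoidal membership function and the decision rule stated above. The piecewise-linear form is the textbook trapezoid and is assumed here rather than taken from Eq. 13; the function names and the example quadruple are hypothetical.

```python
def trapezoid_membership(x, a, b, c, d):
    """Standard trapezoid: 0 outside (a, d), 1 on [b, c], linear in between."""
    if not a < b < c < d:
        raise ValueError("expected a < b < c < d")
    if x <= a or x >= d:
        return 0.0
    if x < b:
        return (x - a) / (b - a)
    if x <= c:
        return 1.0
    return (d - x) / (d - c)


def is_progressor(gamma_glau, a3, b3, c3, d3):
    """Decision rule from the text: progressor when g(gamma_glau) equals 1."""
    return trapezoid_membership(gamma_glau, a3, b3, c3, d3) == 1.0


# Hypothetical quadruple; in the paper it is estimated by a genetic algorithm.
quadruple = (0.2, 0.5, 0.9, 1.1)
print(is_progressor(0.7, *quadruple))  # True: 0.7 lies on the plateau [0.5, 0.9]
print(is_progressor(0.3, *quadruple))  # False: 0.3 lies on the rising edge
```

Because the decision requires g to equal 1, only membership degrees falling on the plateau [b3, c3] lead to a progressor label; values on the sloped edges are treated as non-progressors.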

4. Experiments

This section aims at validating the proposed framework. Different datasets used for validation are presented in the next sub-section. In sub-section 4.2, the reliability of the intensity normalization algorithm is discussed. Change detection results on simulated and semi-simulated datasets are presented in sub-section 4.3. In order to corroborate the effectiveness of the variational estimation algorithm, comparisons with other estimation algorithms will be conducted throughout the present section. Finally, the classification results on real datasets are presented in sub-section 4.4.

4.1. Datasets for experiments

The proposed framework was experimentally validated on clinical, semi-simulated and simulated datasets. Our clinical dataset consists of 36 eyes from 33 participants with progressed glaucoma and 210 eyes from 148 participants considered non-progressing. It’s important to note that the glaucomatous progression in the patient eyes was not defined based on the HRT images but based on two other techniques: 1) likely progression by visual field (based on longitudinal standard automated perimetry testing) or 2) progression by stereo-photographic assessment of the optic disk. Progressive changes in the stereo-photographic appearance of the optic disk between baseline and the most recent available stereo-photograph (patient name, diagnosis, and temporal order of stereo-photographs were masked) were assessed by two observers based on a decrease in the neuroretinal rim width, appearance of a new retinal nerve fiber layer (RNFL) defect, or an increase in the size of a pre-existing RNFL defect. A third observer adjudicated any differences in assessment between these two observers. Therefore, no ground truth for glaucomatous change in the HRT images is available. An additional 21 eyes from 20 participants were normal eyes with no history of IOP > 22 mmHg, normal appearing optic disk by stereo-photography and visual field exams within normal limits. Note that all eligible participants were recruited from the University of California, San Diego Diagnostic Innovations in Glaucoma Study (DIGS) with at least 4 good quality HRT-II exams and at least 5 good quality visual field exams to ensure an accurate diagnosis.

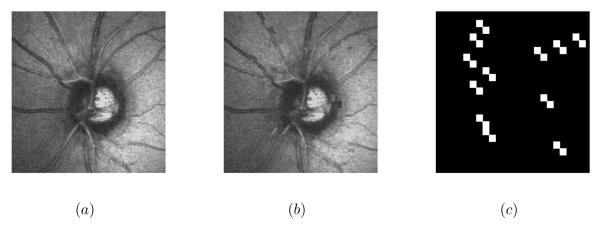

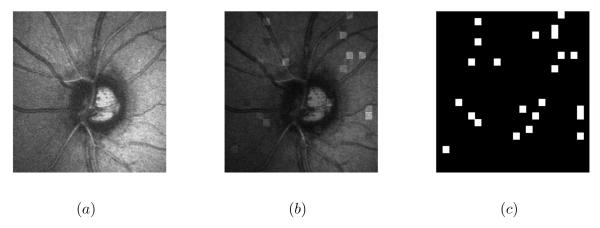

Because no change detection map is available for the clinical dataset, we generated two semi-simulated datasets to assess the proposed change detection method. The first one (dataset1) was constructed from four normal HRT images. Changes were simulated by the permutation of 5%, 7%, 10% and 12% of image regions in the four images, respectively. To assess the robustness of the proposed approach to high levels of noise, additive Gaussian noise with different values of peak signal-to-noise ratio (PSNR = 10 log10(max(Image)^2 / E[Noise^2]); 30, 28, 25 and 23 dB) was introduced to both the original image and the image with permuted regions. Fig. 2 presents an example of a semi-simulated image.

Figure 2.

Semi-simulated image by the permutation of image regions: (a) the original image (b) the simulated image (PSNR=28 dB) and (c) the ground truth.
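The PSNR definition used for the semi-simulated datasets, PSNR = 10 log10(max(Image)^2 / E[Noise^2]), can be inverted to obtain the noise standard deviation for a target PSNR. The sketch below assumes zero-mean Gaussian noise and a synthetic test image; the helper names are hypothetical and this is not the authors' simulation code.

```python
import math
import random


def noise_std_for_psnr(peak, psnr_db):
    """Standard deviation of zero-mean noise that yields the requested PSNR."""
    return peak / (10.0 ** (psnr_db / 20.0))


def add_gaussian_noise(image, psnr_db, rng):
    """Add white Gaussian noise to a 2-D list so that the PSNR is about psnr_db."""
    peak = max(max(row) for row in image)
    sigma = noise_std_for_psnr(peak, psnr_db)
    return [[value + rng.gauss(0.0, sigma) for value in row] for row in image]


def empirical_psnr(clean, noisy):
    """PSNR measured from the actual noise realization."""
    peak = max(max(row) for row in clean)
    errors = [(n - c) ** 2 for rc, rn in zip(clean, noisy) for c, n in zip(rc, rn)]
    return 10.0 * math.log10(peak ** 2 / (sum(errors) / len(errors)))


rng = random.Random(0)
clean = [[float((i * j) % 256) for j in range(100)] for i in range(100)]
noisy = add_gaussian_noise(clean, psnr_db=28.0, rng=rng)
print(noise_std_for_psnr(255.0, 28.0), empirical_psnr(clean, noisy))
```

Going from 30 dB to 23 dB multiplies the noise standard deviation by 10^(7/20), a bit more than a factor of two, which gives a sense of how much harder the lowest-PSNR setting is.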

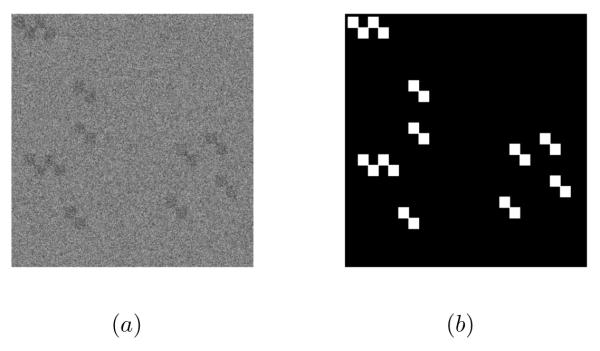

The second semi-simulated dataset (dataset2) was constructed from four other HRT images. Changes were simulated by modifying the intensities of 15 × 15 pixel regions, selected at random in the four images with probabilities of 0.5%, 0.75%, 1% and 1.25%, respectively. The intensities in a selected region were multiplied by a factor of 0.5, 0.75, 1.5 or 2, each chosen with probability 0.25. As for the first semi-simulated dataset, Gaussian noise was added to each image. Fig. 3 presents an example of a semi-simulated image.

Figure 3.

Semi-simulated image by the modification of image intensities: (a) the original image (b) the simulated image (PSNR=28 dB) and (c) the ground truth.

Concerning the simulated dataset, we have generated four images according to the proposed model (Eq. 1) with different values of PSNR (30, 28, 25 and 23 dB). Fig 4 presents an example of a simulated image.

Figure 4.

Simulated image: (a) the simulated image (PSNR=28 dB) and (b) the ground truth.

Note that for each exam, the baseline image and the follow-up image are co-registered using built-in instrument software and saved together.

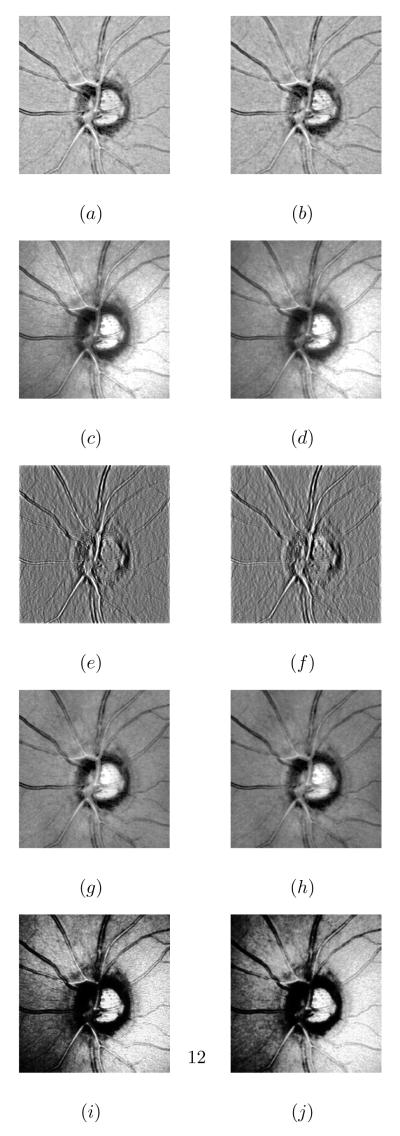

4.2. Intensity normalization algorithm assessment

To assess the proposed intensity normalization algorithm, and particularly the use of the retinex method for reflectance estimation, five different methods were used to normalize the image intensities: the proposed retinex method [17] (filter size = 20 pixels), the homomorphic filtering method [30] (standard deviation = 2 and filter size = 20 pixels), the isotropic diffusion method [19] (smoothness constraint = 7 pixels), the Discrete Cosine Transform (DCT) method [31] (40 components) and the wavelet-based method (with Daubechies wavelet and three-level decomposition) [20]. Fig. 5 presents the intensity normalization of a baseline and a follow-up image using the five methods.

Figure 5.

Intensity normalization results for a baseline image (left column) and a follow-up image (right column) using: (a) (b) the proposed method, (c) (d) the homomorphic filtering, (e) (f) the isotropic diffusion method, (g) (h) the DCT method and (i) (j) the wavelet method.

The proposed change detection method is then applied to dataset1 and dataset2. To evaluate change detection, we use the percentage of false alarms (PFA), the percentage of missed detections (PMD) and the percentage of total error (PTE), defined by:

$$P_{FA} = \frac{FA}{N_F} \times 100\,\%, \qquad P_{MD} = \frac{MD}{N_M} \times 100\,\%, \qquad P_{TE} = \frac{FA + MD}{N_F + N_M} \times 100\,\%$$

where FA stands for the number of unchanged pixels that were incorrectly determined as changed ones, NF the total number of unchanged pixels, MD the number of changed pixels that were mistakenly detected as unchanged ones, and NM the total number of changed pixels.
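These measures are straightforward to compute from a ground-truth map and a detected map. The sketch below assumes the usual definitions PFA = FA/NF, PMD = MD/NM and PTE = (FA + MD)/(NF + NM), expressed as percentages; the function name and the toy maps are hypothetical.

```python
def change_detection_errors(truth, detected):
    """Return (PFA, PMD, PTE) in percent for two binary maps (1 = changed)."""
    pairs = [(t, d) for rt, rd in zip(truth, detected) for t, d in zip(rt, rd)]
    n_unchanged = sum(1 for t, _ in pairs if t == 0)               # NF
    n_changed = sum(1 for t, _ in pairs if t == 1)                 # NM
    false_alarms = sum(1 for t, d in pairs if t == 0 and d == 1)   # FA
    missed = sum(1 for t, d in pairs if t == 1 and d == 0)         # MD
    pfa = 100.0 * false_alarms / n_unchanged
    pmd = 100.0 * missed / n_changed
    pte = 100.0 * (false_alarms + missed) / (n_unchanged + n_changed)
    return pfa, pmd, pte


truth = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
detected = [[0, 0, 0, 0], [0, 1, 0, 0], [0, 1, 1, 1], [0, 0, 0, 0]]
pfa, pmd, pte = change_detection_errors(truth, detected)
print(pfa, pmd, pte)
```

In this toy case one of 12 unchanged pixels is a false alarm and one of 4 changed pixels is missed. Because changed pixels are usually a small minority, PTE is dominated by the false alarm rate, which is why PMD can be several times larger than PTE in the tables.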

Table 1 presents the false detections, the missed detections and the total errors for both datasets. The retinex normalization and the DCT normalization perform similarly, with the retinex method performing slightly better. Moreover, the retinex-based method for reflectance estimation yields lower errors than the other reflectance estimation methods. Specifically, on dataset2 we obtained a total error of 0.89 % with the retinex method, whereas the homomorphic filtering method led to a total error of 1.43 %.

Table 1.

False detection, missed detection and total errors resulting from the proposed normalization method (retinex), the homomorphic filtering, the isotropic diffusion method, the DCT method and the wavelet method.

| dataset1 | False detection | Missed detection | Total errors |
|---|---|---|---|
| Retinex | 0.71 % | 4.61 % | 0.99 % |
| DCT | 0.74 % | 4.96 % | 1.07 % |
| Isotropic diffusion | 0.79 % | 5.39 % | 1.28 % |
| Homomorphic filtering | 0.87 % | 6.32 % | 1.38 % |
| Wavelet | 1.98 % | 7.24 % | 2.69 % |

| dataset2 | False detection | Missed detection | Total errors |
|---|---|---|---|
| Retinex | 0.62 % | 3.85 % | 0.89 % |
| DCT | 0.69 % | 4.72 % | 1.02 % |
| Isotropic diffusion | 0.72 % | 5.19 % | 1.26 % |
| Homomorphic filtering | 0.86 % | 6.98 % | 1.43 % |
| Wavelet | 1.59 % | 6.25 % | 2.38 % |

4.3. Change detection results

In order to emphasize the benefit of the proposed change detection algorithm and particularly the use of the Markov model to handle pixel spatial dependency, we compared the proposed method with two kernel-based methods and a threshold-based method:

- The Support Vector Data Description (SVDD) [32] with the Radial Basis Function (RBF) kernel, using positive and negative examples;
- The Support Vector Machine (SVM) [33] with the RBF Gaussian kernel;
- A Bayesian threshold-based method [34].

Note that we used the retinex-based intensity normalization for all methods. We applied these methods to the four semi-simulated images from dataset1 and the four semi-simulated images from dataset2, all with PSNR = 30 dB. Table 2 reports the results for dataset1. The proposed method tends to perform better than the SVDD, SVM and Bayesian threshold-based methods. This suggests that the Markov prior improves the change detection results by incorporating the spatial dependency of pixels into the detection scheme. The results on dataset2 are similar to those obtained on dataset1 and are omitted for the sake of brevity.

Table 2.

False detection, missed detection and total errors resulting from: the proposed change detection method, the SVM method, the SVDD method and the threshold method.

| Image 1 from dataset1 (PSNR=30dB) | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.62 % | 4.28 % | 0.95 % |
| SVM | 0.97 % | 6.47 % | 1.68 % |
| SVDD | 1.26 % | 7.39 % | 2.21 % |
| Threshold | 2.14 % | 9.34 % | 3.05 % |

| Image 2 from dataset1 (PSNR=30dB) | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.68 % | 4.01 % | 0.81 % |
| SVM | 0.91 % | 5.98 % | 1.42 % |
| SVDD | 1.68 % | 7.35 % | 2.38 % |
| Threshold | 2.13 % | 9.31 % | 3.04 % |

| Image 3 from dataset1 (PSNR=30dB) | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.65 % | 4.68 % | 0.83 % |
| SVM | 0.94 % | 6.24 % | 1.71 % |
| SVDD | 1.51 % | 7.03 % | 2.09 % |
| Threshold | 2.10 % | 9.43 % | 3.17 % |

| Image 4 from dataset1 (PSNR=30dB) | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.75 % | 4.62 % | 1.08 % |
| SVM | 0.96 % | 6.98 % | 1.73 % |
| SVDD | 1.39 % | 7.90 % | 2.36 % |
| Threshold | 2.21 % | 10.32 % | 3.57 % |

In order to assess the noise robustness of the proposed approach, three different methods were applied to the semi-simulated datasets with different values of PSNR: the proposed method, the SVM method and the threshold method (Table 3). We observe that the proposed method performed better than both the kernel-based method and the threshold-based method. Specifically, even with PSNR = 25 dB, we obtained a total error below 1 % (0.97 %), which is less than half of that obtained by the SVM method (2.68 %). This can be explained by the fact that our change detection scheme takes into account both the uncertainty (the noise) and the spatial dependency of pixels.

Table 3.

False detection, missed detection and total errors resulting from: the proposed change detection method, the SVM method and the threshold method using the semi-simulated datasets.

| PSNR=30dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.58 % | 3.81 % | 0.86 % |
| SVM | 0.84 % | 4.58 % | 1.12 % |
| Threshold | 1.51 % | 6.58 % | 2.03 % |

| PSNR=28dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.62 % | 3.85 % | 0.88 % |
| SVM | 1.18 % | 6.91 % | 1.61 % |
| Threshold | 1.98 % | 6.92 % | 2.71 % |

| PSNR=25dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.75 % | 4.61 % | 0.97 % |
| SVM | 1.91 % | 6.8 % | 2.68 % |
| Threshold | 2.32 % | 8.17 % | 3.18 % |

| PSNR=23dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed method | 0.91 % | 4.77 % | 1.20 % |
| SVM | 2.68 % | 8.39 % | 3.88 % |
| Threshold | 2.73 % | 8.63 % | 3.97 % |

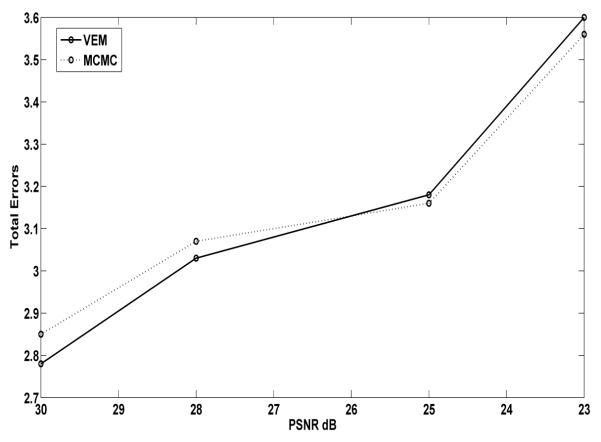

In order to emphasize the benefit of the variational approximation used in the change detection scheme, we compared the proposed VEM estimation procedure with two other procedures:

- The MCMC procedure;
- The Iterated Conditional Modes (ICM) procedure [25].

The change detection results obtained on dataset1 and dataset2 with different PSNR values are presented in Table 4. In terms of total error, the proposed variational procedure performs better than the MCMC and ICM procedures for PSNR = 30, 28 and 25 dB, while the MCMC approach performs slightly better for PSNR = 23 dB. These results are similar to those obtained on the simulated dataset (cf. Fig. 6). This suggests that our variational estimation is a good alternative to the MCMC procedure, which carries a large computational burden and requires fine tuning of model hyperparameters.

Table 4.

False detection, missed detection and total errors resulting from: the proposed VEM procedure, the MCMC procedure and the ICM procedure using the semi-simulated datasets.

| PSNR=30dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed VEM | 0.58 % | 3.81 % | 0.86 % |
| MCMC | 0.73 % | 4.12 % | 0.93 % |
| ICM | 0.97 % | 5.02 % | 1.29 % |

| PSNR=28dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed VEM | 0.62 % | 3.85 % | 0.88 % |
| MCMC | 0.83 % | 4.32 % | 1.02 % |
| ICM | 1.98 % | 6.92 % | 2.71 % |

| PSNR=25dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed VEM | 0.75 % | 4.61 % | 0.97 % |
| MCMC | 0.85 % | 4.46 % | 1.04 % |
| ICM | 2.3 % | 7.58 % | 3.03 % |

| PSNR=23dB | False detection | Missed detection | Total errors |
|---|---|---|---|
| Proposed VEM | 0.91 % | 4.77 % | 1.20 % |
| MCMC | 0.88 % | 4.71 % | 1.16 % |
| ICM | 2.69 % | 8.61 % | 3.82 % |

Figure 6.

The total errors resulting from: the proposed VEM procedure and the MCMC procedure on the simulated dataset.

4.4. Classification results

We now turn to the validation of the framework on the clinical dataset. Classification is evaluated in terms of sensitivity and specificity. To assess the benefit of the proposed glaucoma progression detection scheme, we compared the proposed framework with three other published methods: the Topographic Change Analysis (TCA) method [35], the Proper Orthogonal Decomposition (POD) method [7] and the reflectance-based method [6] (Table 5). Moreover, to assess the benefit of the proposed classification algorithm, and particularly the use of fuzzy set theory, we compared the proposed two-layer fuzzy classifier with two kernel-based classifiers, the SVM [36] and the SVDD [37], both with the RBF Gaussian kernel. We obtained higher sensitivity and specificity with the proposed classifier (86 % and 88 %) than with the SVM (79 % and 85 %) and the SVDD (80 % and 83 %). This is likely because the fuzzy set classifier leads to better results when the training dataset is small. Moreover, we obtained the same progressor sensitivity as the TCA method (86 %) while achieving a much higher non-progressor specificity (88 % versus 25 %). This can be explained by the fact that the TCA technique does not include an intensity normalization step in the progression detection scheme, which makes the method less robust to illuminance variation than the proposed scheme. Indeed, TCA compares the topographic height variability at superpixels (4 × 4 pixels) in a baseline examination with the height change between baseline and follow-up examinations. A change map of P-values, indicating the probability of change at each superpixel, is created, and contiguous superpixels showing significant (P < 0.05) decreases in retinal height are clustered.

Table 5.

Diagnostic Accuracy of the proposed framework, the POD method, the reflectance based method and the TCA method.

| Method | Progressor sensitivity | Non-progressor specificity |
|---|---|---|
| Proposed framework | 86 % | 88 % |
| Proposed framework with SVM | 79 % | 85 % |
| Proposed framework with SVDD | 80 % | 83 % |
| POD | 78 % | 45 % |
| TCA | 86 % | 25 % |
| Reflectance-based method | 64 % | 76 % |
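For reference, the two measures reported in Table 5 can be computed from per-eye labels as in the following minimal sketch. The function name and the label vectors are hypothetical and purely illustrative; they are not the study data.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels.

    Convention assumed here: 1 = progressor, 0 = non-progressor.
    """
    tp = fn = tn = fp = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == 1:
            if pred == 1:
                tp += 1  # progressor correctly detected
            else:
                fn += 1  # progressor missed
        else:
            if pred == 0:
                tn += 1  # non-progressor correctly left unflagged
            else:
                fp += 1  # non-progressor wrongly flagged
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both classes must be present in y_true")
    return tp / (tp + fn), tn / (tn + fp)


# Illustrative labels only: 4 progressor eyes and 5 non-progressor eyes.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
```

Progressor sensitivity is the fraction of progressing eyes that are flagged, and non-progressor specificity is the fraction of stable eyes that are left unflagged.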

The POD and the reflectance-based methods achieved a higher non-progressor specificity than the TCA. This is due in part to the fact that both methods indirectly use the dependency among spatial locations by controlling the family-wise Type I error rate. However, the proposed method achieved higher specificity and sensitivity than both the POD and the reflectance-based methods. This is likely because we explicitly model the spatial dependency of the classification among pixels, whereas the two other methods account for this dependency only implicitly.

Our results suggest that detecting structural glaucomatous changes within the VEM framework, which accounts for the noise variations as well as for the dependency among pixels through the MRF prior, shows promise for reducing the number of follow-up exams required (i.e., only one follow-up exam for the proposed framework versus at least three for the TCA technique) while maintaining high diagnostic specificity.

5. Conclusion

In this paper, a unified framework for glaucoma progression detection has been proposed. We focused in particular on the formulation of the change detection problem as a missing data problem. The task of inferring the topographic ONH changes is tackled with a Variational Expectation Maximization algorithm, which is used for the first time, to our knowledge, in the glaucomatous progression detection framework. Handling both the spatial dependency of pixels and the uncertainty increased the robustness of the proposed change detection scheme compared with the kernel-based method and the threshold method. On the clinical dataset, the proposed approach detected glaucomatous progression more accurately than the existing methods it was compared with.

Acknowledgments

The authors acknowledge the funding and support of the National Eye Institute (grant numbers: U10EY14267, EY019869, EY021818, EY022039 and EY08208, EY11008, P30EY022589 and EY13959) and Eyesight Foundation of Alabama.

Appendix A. Genetic Algorithm

Genetic Algorithms (GA) are adaptive heuristic search algorithms premised on the evolutionary ideas of natural selection and genetics [38]. They are designed to simulate the processes in natural systems that are necessary for evolution, specifically those that follow the principle of survival of the fittest first laid down by Charles Darwin. As such, they represent an intelligent exploitation of a random search within a defined search space to solve a problem. The aim of a genetic algorithm is to use simple representations to encode complex structures and simple operations to improve these structures. Let us denote by:

the population representing the parameters of the fuzzy membership functions used. We apply real coding to encode the chromosomes of the population. Therefore, each individual is represented by a vector of {0, 1}. The steps of the GA are:

- Generate the initial population empirically:

- Define an individual fitness function to indicate the fitness of every chromosome. The proposed function (A.1) is expressed as:

  where g is given by Eq. 13, γglau is given by Eq. 13 and 1 or 0 is the expected result. Note that we used a training HRT dataset, distinct from the test dataset, which contains 7 non-progressor eyes and 7 progressor eyes. Each eye has one baseline exam and 4 to 8 follow-up exams (mean = 5.5 exams). The change detection maps were estimated using the VEM algorithm presented in Section 2.2.

- Generate offspring by selection and crossover: the 20% of the population with the best fitness is copied directly to the next generation to keep the best genes. The other 80% of the population is obtained by a crossover operation with probability Pc = 0.8: we randomly select one cut-point and then exchange the right parts of the two chromosomes.

- Mutation: only the chromosomes that have undergone crossover can be affected by mutation, which consists in modifying a gene with probability Pm = 0.008.

- Ending condition: the GA ends when the maximal evolutionary epoch (a maximum of 500 iterations) is reached.
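The steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: genes are assumed to be real values in [0, 1], the initial population is drawn uniformly at random, parents are chosen by binary tournament, mutation redraws a gene uniformly, and the fitness is assumed to be maximized. The fitness function `toy_fitness` is a hypothetical stand-in, since the fitness function of Eq. (A.1) is not reproduced here. Only the elite fraction (20%), Pc = 0.8, Pm = 0.008, the single cut-point crossover, the restriction of mutation to crossed-over chromosomes and the limit of 500 epochs come from the text.

```python
import random


def run_ga(fitness, n_genes, pop_size=50, elite_frac=0.2, p_c=0.8,
           p_m=0.008, max_epochs=500, seed=0):
    """Elitist GA with single cut-point crossover (fitness is maximized)."""
    rng = random.Random(seed)
    # Assumption: uniform random initialization with genes in [0, 1].
    pop = [[rng.random() for _ in range(n_genes)] for _ in range(pop_size)]
    n_elite = max(1, int(elite_frac * pop_size))
    for _ in range(max_epochs):
        pop.sort(key=fitness, reverse=True)
        # The best 20% is copied unchanged to the next generation.
        next_pop = [ind[:] for ind in pop[:n_elite]]
        while len(next_pop) < pop_size:
            # Assumption: parents are picked by binary tournament.
            a = max(rng.sample(pop, 2), key=fitness)
            b = max(rng.sample(pop, 2), key=fitness)
            child_a, child_b = a[:], b[:]
            if n_genes > 1 and rng.random() < p_c:
                # Single cut-point: exchange the right parts of the parents.
                cut = rng.randrange(1, n_genes)
                child_a[cut:], child_b[cut:] = b[cut:], a[cut:]
                # Mutation only affects chromosomes that underwent crossover.
                for child in (child_a, child_b):
                    for i in range(n_genes):
                        if rng.random() < p_m:
                            child[i] = rng.random()
            next_pop.extend([child_a, child_b])
        pop = next_pop[:pop_size]
    return max(pop, key=fitness)


# Hypothetical fitness: closeness to an arbitrary target parameter vector.
TARGET = [0.2, 0.8, 0.5, 0.1]


def toy_fitness(individual):
    return -sum((g - t) ** 2 for g, t in zip(individual, TARGET))


best = run_ga(toy_fitness, n_genes=4, pop_size=20, max_epochs=50, seed=1)
```

Because the elite is carried over unchanged, the best fitness in the population never decreases from one epoch to the next.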

References

- [1] Dielemans I, De Jong P, Stolk R, Vingerling J, Grobbee D, Hofman A, et al. Primary open-angle glaucoma, intraocular pressure, and diabetes mellitus in the general elderly population. The Rotterdam Study. Ophthalmology. 1996;103:1271. doi: 10.1016/s0161-6420(96)30511-3.

- [2] Traverso C, Walt J, Kelly S, Hommer A, Bron A, Denis P, Nordmann J, Renard J, Bayer A, Grehn F, et al. Direct costs of glaucoma and severity of the disease: a multinational long term study of resource utilisation in Europe. British Journal of Ophthalmology. 2005;89:1245–1249. doi: 10.1136/bjo.2005.067355.

- [3] Jaffe G, Caprioli J. Optical coherence tomography to detect and manage retinal disease and glaucoma. American Journal of Ophthalmology. 2004;137:156–169. doi: 10.1016/s0002-9394(03)00792-x.

- [4] Mistlberger A, Liebmann J, Greenfield D, Pons M, Hoh S, Ishikawa H, Ritch R. Heidelberg retina tomography and optical coherence tomography in normal, ocular-hypertensive, and glaucomatous eyes. Ophthalmology. 1999;106:2027–2032. doi: 10.1016/S0161-6420(99)90419-0.

- [5] Chauhan B, Blanchard J, Hamilton D, LeBlanc R. Technique for detecting serial topographic changes in the optic disc and peripapillary retina using scanning laser tomography. Investigative Ophthalmology & Visual Science. 2000;41:775–782.

- [6] Balasubramanian M, Bowd C, Kriegman D, Weinreb R, Sample P, LM Z. Detecting glaucomatous changes using only one optic nerve head scan per exam. Investigative Ophthalmology & Visual Science. 51 E-Abstract 2723.

- [7] Balasubramanian M, Kriegman D, Bowd C, Holst M, Weinreb R, Sample P, Zangwill L. Localized glaucomatous change detection within the proper orthogonal decomposition framework. Investigative Ophthalmology & Visual Science. 2012;53:3615–3628. doi: 10.1167/iovs.11-8847.

- [8] Li S. Markov random field modeling in computer vision. Springer-Verlag New York, Inc.; 1995.

- [9] McLachlan G, Krishnan T. The EM algorithm and extensions. Volume 274. New York: Wiley; 1997.

- [10] Nasios N, Bors A. Blind source separation using variational expectation-maximization algorithm. In: Computer Analysis of Images and Patterns. Springer; pp. 442–450.

- [11] Likas A, Galatsanos N. A variational approach for Bayesian blind image deconvolution. IEEE Transactions on Signal Processing. 2004;52:2222–2233. doi: 10.1109/TIP.2008.2011757.

- [12] Kanemura A, Maeda S, Ishii S. Superresolution with compound Markov random fields via the variational EM algorithm. Neural Networks. 2009;22:1025–1034. doi: 10.1016/j.neunet.2008.12.005.

- [13] Waltz E, Llinas J, et al. Multisensor data fusion. Volume 685. Norwood, MA: Artech House; 1990.

- [14] Toth D, Aach T, Metzler V. Illumination-invariant change detection. In: Proceedings of the 4th IEEE Southwest Symposium on Image Analysis and Interpretation. IEEE; 2000. pp. 3–7.

- [15] Aach T, Dumbgen L, Mester R, Toth D. Bayesian illumination-invariant motion detection. In: Proceedings of the 2001 International Conference on Image Processing. IEEE; 2001. pp. 640–643.

- [16] Hines G, Rahman Z, Jobson D, Woodell G. Single-scale retinex using digital signal processors. In: Global Signal Processing Conference. Citeseer.

- [17] Wang W, Li B, Zheng J, Xian S, Wang J. A fast multi-scale retinex algorithm for color image enhancement. In: International Conference on Wavelet Analysis and Pattern Recognition (ICWAPR'08). IEEE; 2008. pp. 80–85.

- [18] Watanabe T, Kuwahara Y, Kojima A, Kurosawa T. An adaptive multi-scale retinex algorithm realizing high color quality and high-speed processing. Journal of Imaging Science and Technology. 2005;49:486–497.

- [19] Gross R, Brajovic V. An image preprocessing algorithm for illumination invariant face recognition. In: Audio- and Video-Based Biometric Person Authentication. Springer; pp. 1055–1055.

- [20] Shuyue C, Ling Z. Chest radiographic image enhancement based on multi-scale retinex technique. In: 3rd International Conference on Bioinformatics and Biomedical Engineering (ICBBE 2009). IEEE; 2009. pp. 1–3.

- [21] Brahim N, Daniel S, Gueriot D. Potential of underwater sonar systems for port infrastructure inspection. In: OCEANS. IEEE; 2008. pp. 1–7.

- [22] Joshi K, Kamathe R. Quantification of retinex in enhancement of weather degraded images. In: International Conference on Audio, Language and Image Processing (ICALIP 2008). IEEE; 2008. pp. 1229–1233.

- [23] Schuman JS, Pedut-Kloizman T, Hertzmark E, Hee MR, Wilkins JR, Coker JG, Puliafito CA, Fujimoto JG, Swanson EA. Reproducibility of nerve fiber layer thickness measurements using optical coherence tomography. Ophthalmology. 1996;103:1889. doi: 10.1016/s0161-6420(96)30410-7.

- [24] Gilks W, Richardson S, Spiegelhalter D. Markov chain Monte Carlo in practice. Chapman & Hall/CRC; 1996.

- [25] Besag J. On the statistical analysis of dirty pictures. Journal of the Royal Statistical Society. Series B (Methodological). 1986:259–302.

- [26] Frey BJ, Jojic N. A comparison of algorithms for inference and learning in probabilistic graphical models. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27:1392–1416. doi: 10.1109/TPAMI.2005.169.

- [27] Zhang J. The mean field theory in EM procedures for Markov random fields. IEEE Transactions on Signal Processing. 1992;40:2570–2583. doi: 10.1109/83.210863.

- [28] Celeux G, Forbes F, Peyrard N. EM procedures using mean field-like approximations for Markov model-based image segmentation. Pattern Recognition. 2003;36:131–144.

- [29] Bengio Y, LeCun Y. Scaling learning algorithms towards AI. Large-Scale Kernel Machines. 2007;34.

- [30] Toth D, Aach T, Metzler V. Illumination-invariant change detection. In: Proceedings of the 4th IEEE Southwest Symposium on Image Analysis and Interpretation. IEEE; 2000. pp. 3–7.

- [31] Chen W, Er M, Wu S. Illumination compensation and normalization for robust face recognition using discrete cosine transform in logarithm domain. IEEE Transactions on Systems, Man, and Cybernetics, Part B: Cybernetics. 2006;36:458–466. doi: 10.1109/tsmcb.2005.857353.

- [32] Bovolo F, Camps-Valls G, Bruzzone L. A support vector domain method for change detection in multitemporal images. Pattern Recognition Letters. 2010;31:1148–1154.

- [33] Bruzzone L, Chi M, Marconcini M. A novel transductive SVM for semisupervised classification of remote-sensing images. IEEE Transactions on Geoscience and Remote Sensing. 2006;44:3363–3373.

- [34] Bazi Y, Bruzzone L, Melgani F. An unsupervised approach based on the generalized Gaussian model to automatic change detection in multitemporal SAR images. IEEE Transactions on Geoscience and Remote Sensing. 2005;43:874–887.

- [35] Chauhan B, Hutchison D, Artes P, Caprioli J, Jonas J, LeBlanc R, Nicolela M. Optic disc progression in glaucoma: comparison of confocal scanning laser tomography to optic disc photographs in a prospective study. Investigative Ophthalmology & Visual Science. 2009;50:1682–1691. doi: 10.1167/iovs.08-2457.

- [36] Cortes C, Vapnik V. Support-vector networks. Machine Learning. 1995;20:273–297.

- [37] Tax D, Duin R. Support vector data description. Machine Learning. 2004;54:45–66.

- [38] Goldberg D. Genetic Algorithms in Search and Optimization. Addison-Wesley; 1989.
