Abstract

Background

The Council of Emergency Medicine Residency Directors (CORD) Standardized Letter of Recommendation (SLOR) has become the primary tool used by emergency medicine (EM) faculty to evaluate residency candidates. A survey was created to describe the training, beliefs, and usage patterns of SLOR writers.

Methods

The SLOR Task Force created the survey, which was circulated to the CORD listserv in 2012.

Results

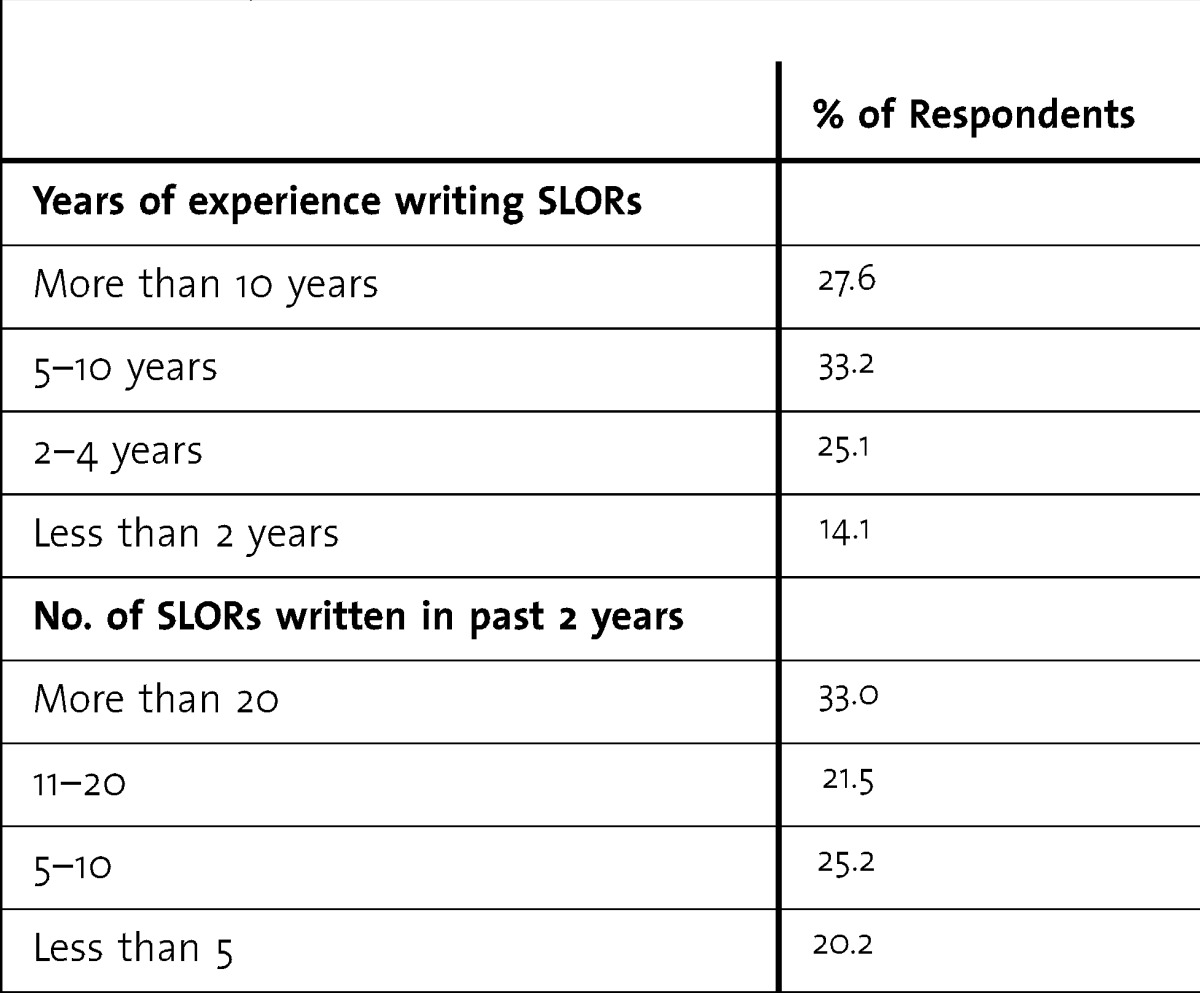

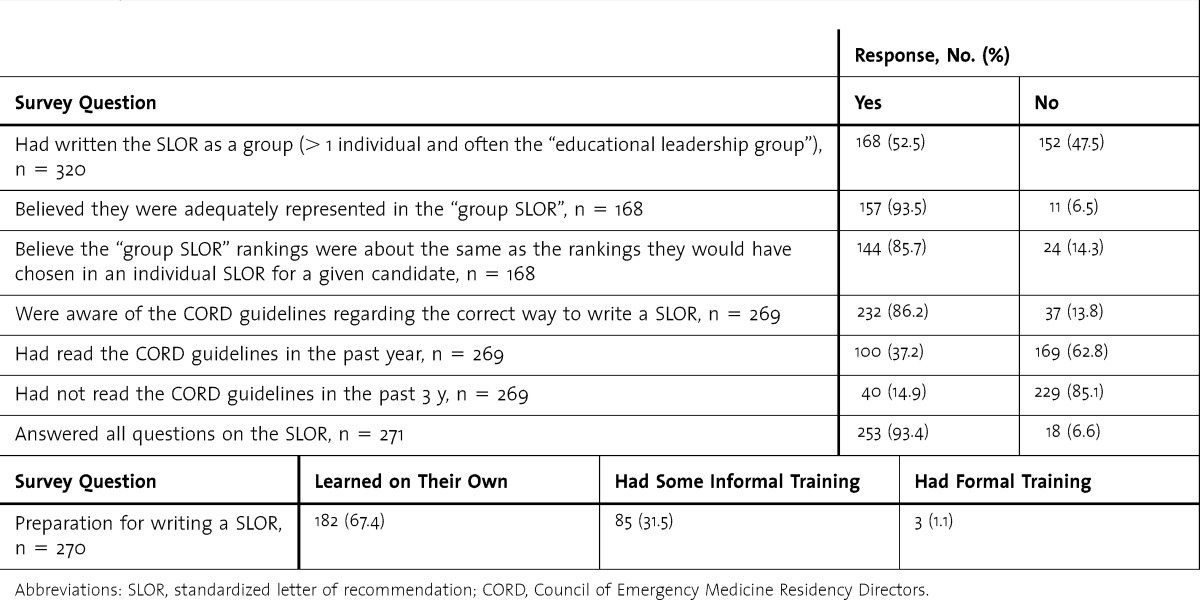

Forty-six percent of CORD members (320 of 695) completed the survey. Of the respondents, 39% (125 of 320) had fewer than 5 years of experience writing SLORs. Most were aware of the published guidelines, and most reported that they had learned how to write a SLOR on their own (67.4%, 182 of 270). Sixty-eight percent (176 of 258) admitted to not following the recommended instructions for estimating how competitive a candidate would be in the Match. Asked about grade inflation, 36% (97 of 269) reported inflating scores “rarely,” and 40% (107 of 269) reported never inflating them.

Conclusions

The CORD SLOR has become the primary tool used by EM faculty to evaluate candidates applying for residency in EM. The SLOR has been in use in the EM community for 16 years. However, our study has identified some problems with its use. Those issues may be overcome with a revised format for the SLOR and with faculty training in the writing and use of this document.

What was known

A standardized letter of recommendation (SLOR) for individuals applying to residency seeks to address the idiosyncrasies of narrative letters and the resulting difficulty in comparing applicants.

What is new

A study of Council of Emergency Medicine Residency Directors members who write SLORs identified some problems with the current SLOR and its use.

Limitations

A nonvalidated survey tool was used. The low response rate may introduce respondent bias, and there was the potential for social desirability responding.

Bottom line

Problems with the emergency medicine SLOR may be addressed by clarifying some questions and response options and by enhancing training for the faculty who write these letters.

Editor's Note: The online version of this article contains the survey instrument used in the study and the standardized letter of recommendation form described in this article.

Introduction

Selecting the individuals who will become outstanding residents from a sea of applicants is a challenge faced by educators in every field of medicine.1–9 Letters of recommendation (LORs) are widely considered a core component of the residency application, yet studies of their predictive value are limited.10–16 Although many fields rely on traditional narrative LORs (NLORs), emergency medicine (EM) adopted a standardized LOR (SLOR). The Council of Emergency Medicine Residency Directors (CORD) SLOR has become the primary tool used by EM faculty to evaluate candidates applying for residency.17 The SLOR was developed in 1995 by a CORD task force and first used in 1997 to provide a global perspective on an applicant's candidacy for residency training through a standardized, concise, and discriminating letter of recommendation.17 The task force recommended that the SLOR include 4 sections: (1) background information on the applicant, (2) personal characteristics, (3) global or summary assessment, and (4) an open, narrative section for written comments.17

An early survey17 of the CORD community showed that, compared with NLORs, (1) the SLOR was easier to read and incorporate into a ranking scheme, (2) the SLOR was easier to complete, and (3) readers were better able to discriminate differences between candidates. In addition, program directors believed that use of the SLOR had not affected their student grading scheme, and CORD members wanted to continue using it in the future.17 A 1998 study noted that “compared with NLORs, the CORD SLOR offers better interrater reliability with less interpretation time.”18(p1101) More recent studies support these findings: the strengths of SLORs compared with NLORs include decreased writing and reviewing time, increased ease of review, and higher interrater reliability.19,20

Although the SLOR is an important attempt to standardize a vital component of medical student evaluation, there are potential problems with how its questions are interpreted.21,22 Because the SLOR had existed for nearly 15 years without significant revision, CORD reestablished the SLOR Task Force in 2011 to review the current state of the SLOR and to recommend improvements. The task force consisted of volunteer CORD members in educational leadership positions, representing a cross section of educators in EM. One mandate of the task force was to survey the EM faculty members who write the letters to obtain feedback from users. The primary aim of this article is to describe how that user survey was created, present its results, and discuss recommendations for future improvements to the SLOR.

Methods

Study Design

In 2011–2012, members of the SLOR Task Force created the survey questions through a consensus process that included face-to-face meetings, teleconference discussions, and questions submitted by members that were then reviewed and agreed upon by the committee.

The survey was initiated after approval by the Institutional Review Board of the primary site through expedited review.

Study Setting and Population

The CORD listserv is composed of educators in EM, including program directors, assistant program directors, clerkship directors, and departmental chairs. This listserv was chosen because its members make up most of the faculty who write SLORs each year.

Study Protocol

A link to the 17-item survey, administered through SurveyMonkey (Palo Alto, CA), was distributed via the CORD e-mail listserv to approximately 695 members. The survey was open from March 23, 2012, to April 20, 2012, and 3 reminders were sent at weekly intervals during that period. Respondents were not required to complete all items.

Data Analysis

Results were compiled using SurveyMonkey software. Descriptive statistics were calculated using Prism 6, version 6.0b (GraphPad Software, San Diego, CA) for Mac OS X. Comments from the survey were listed and reviewed.
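Because respondents could skip items, each percentage in the Results has its own denominator (for example, 271 answers to the score-inflation question out of 320 respondents). The authors computed their descriptive statistics in Prism; the Python sketch below only illustrates the same arithmetic under stated assumptions. It assumes skipped answers are recorded as `None`, the helper name `describe_item` is hypothetical, and the 49 skips in the example are inferred as 320 minus 271 rather than taken from the survey data.

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def describe_item(responses: Sequence[Optional[Hashable]]) -> tuple[int, dict]:
    """Tabulate one survey item (hypothetical helper, not the authors' code).

    Skipped answers are None and are excluded, so each item gets its own
    denominator: the number of respondents who actually answered it.
    Returns (n_answered, {option: (count, percent rounded to 0.1)}).
    """
    answered = [r for r in responses if r is not None]
    n = len(answered)
    if n == 0:
        return 0, {}
    counts = Counter(answered)
    return n, {option: (c, round(100 * c / n, 1)) for option, c in counts.items()}


# Score-inflation item rebuilt from the counts reported in the Results.
# Assumption: 49 skips, i.e., 320 respondents minus the 271 who answered.
inflation = (
    ["never"] * 108 + ["rarely"] * 98 + ["sometimes"] * 58
    + ["frequently"] * 7 + [None] * 49
)

n, table = describe_item(inflation)
print(n, table)
```

With these inputs the helper reports n = 271 and 39.9%, 36.2%, 21.4%, and 2.6% for the 4 options, matching the figures given in the Results.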

Results

Our survey response rate was 46% (320 of 695). Key findings included the time that had elapsed since most respondents had last reviewed the CORD guidelines for writing SLORs, and the high percentage of faculty writing or contributing to a SLOR who were self-taught (tables 1 and 2).

TABLE 1.

Writers' Standardized Letter of Recommendation (SLOR) Experience

TABLE 2.

General Survey Results

The next sections of the survey focused on how faculty answered specific questions on the SLOR (provided as online supplemental material).

SLOR Survey Results by Section

Section A: Background Information

In supplying an answer to the optional question “One Key Comment from ED [emergency department] Faculty Eval,” 44.3% (109 of 246) of the respondents stated that they provide a random positive comment from faculty evaluations; 17.5% (43 of 246) provide a number of verbatim comments, both positive and negative, from faculty evaluations; 15.9% (39 of 246) provide a concise paragraph that describes the applicant; and 14.2% (35 of 246) provide 1 sentence that sums up the applicant's candidacy.

Section B: Qualifications for EM

For the SLOR questions of Section B, questions 1 to 3, and Section C, question 1, which require authors to stratify candidates into 4 categories, 76.5% (199 of 260) indicated that they use the recommended top 10%, top third, middle third, and lower third, whereas 23.5% (61 of 260) indicated that they use a gestalt of very good, good, fair, and not so good.
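The recommended 4-category stratification can be read as nested cutoffs on a student's relative rank among peers. The sketch below is one possible reading, not CORD's published definition: it assumes the student is ranked within a cohort (1 = best) and that “top third” means the top third excluding the top 10%. The helper name `slor_category` is hypothetical.

```python
def slor_category(rank: int, cohort_size: int) -> str:
    """Map a student's rank among peers (1 = best) to a SLOR comparison bin.

    Hypothetical helper. Assumes nested cutoffs on rank / cohort_size, with
    "top third" meaning the top third excluding the top 10%. Integer
    comparisons avoid floating-point error exactly at the cutoffs.
    """
    if not 1 <= rank <= cohort_size:
        raise ValueError("rank must be between 1 and cohort_size")
    if rank * 10 <= cohort_size:        # within the top 10%
        return "top 10%"
    if rank * 3 <= cohort_size:         # within the top third
        return "top third"
    if rank * 3 <= 2 * cohort_size:     # within the top two-thirds
        return "middle third"
    return "lower third"


# Hypothetical cohort of 30 students rotating through the clerkship
for r in (3, 4, 10, 11, 20, 21):
    print(r, slor_category(r, 30))
```

Under this reading, in a cohort of 30 students, ranks 1 to 3 fall in the top 10%, 4 to 10 in the top third, 11 to 20 in the middle third, and 21 to 30 in the lower third.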

For Section B questions, those surveyed were asked whether the answers to any of the questions should be changed. Those questions with the highest percentage of “yes” answers (indicating the question should be changed) included the following:

Question B5b: “Given the necessary guidance, what is your prediction of success for the applicant?” (40.0%, 102 of 255);

Question B1: “Commitment to Emergency Medicine” (39.7%, 106 of 267);

Question B4a: “Personality, ability to interact with others” (37.8%, 99 of 262);

Question B5a: “How much guidance do you predict this applicant will need during residency?” (37.2%, 97 of 261); and

Question B4b: “Personality, ability to communicate a caring nature to patients” (31.4%, 81 of 258).

For Section B, those surveyed were also asked whether any of the questions should be dropped because they do not provide useful information. The questions that received the highest number of “yes” answers (indicating they should be dropped) included the following:

Question B1: “Commitment to Emergency Medicine” (37.7%, 86 of 228); and

Question B5b: “Given the necessary guidance, what is your prediction of success for the applicant?” (30.2%, 68 of 225).

Section C: Global Assessment

The survey also asked about the overall final recommendation for the applicant as well as his or her predicted position on the Match list. Nearly half of respondents (49.1%, 132 of 269) responded that they were responsible for the final Match list and used their prior experience to estimate where a candidate would fall on it. A quarter (24.2%, 65 of 269) responded that they follow the 2×, 4×, 6× rule: for 8 incoming interns, those marked 2× (very competitive) would be in the top 16 places; those marked 4× (competitive) would be in the next 32 spots (numbers 17–48 on the rank list); those marked 6× (possible match) would be in the next 48 places (numbers 49–96 on the rank list); and Unlikely Match was marked for anyone below 96 on the rank list. An additional 14.1% (38 of 269) discussed that item (Question C2) with their program director, who helped guide their answers; 10.4% (28 of 269) indicated they were not responsible for the final rank list but “place them where I believe they belong if I were solely responsible for the rank list”; and 2.2% (6 of 269) indicated they do not answer that question.
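The 2×, 4×, 6× rule is a banding of rank-list positions by multiples of the number of incoming interns. A minimal Python sketch, assuming the bands are consecutive as the paragraph above describes; `match_category` is a hypothetical helper name, not part of the SLOR form or the CORD guidelines.

```python
def match_category(rank_position: int, interns: int) -> str:
    """Classify a projected rank-list position with the 2x, 4x, 6x rule.

    Bands are consecutive: the first 2 * interns places are 2x (very
    competitive), the next 4 * interns are 4x (competitive), the next
    6 * interns are 6x (possible match), and any lower position is an
    unlikely match.
    """
    if rank_position < 1 or interns < 1:
        raise ValueError("rank_position and interns must be positive")
    end_2x = 2 * interns              # 16 for 8 interns
    end_4x = end_2x + 4 * interns     # 48 for 8 interns
    end_6x = end_4x + 6 * interns     # 96 for 8 interns
    if rank_position <= end_2x:
        return "2x (very competitive)"
    if rank_position <= end_4x:
        return "4x (competitive)"
    if rank_position <= end_6x:
        return "6x (possible match)"
    return "unlikely match"


# A program with 8 incoming interns, as in the example in the text
for position in (16, 17, 48, 49, 96, 97):
    print(position, match_category(position, 8))
```

The band boundaries land at 16, 48, and 96, reproducing the positions listed in the text for a program with 8 interns.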

In addition to EM clinical experience, other factors that some respondents stated they included in their global assessment were “grades on core clinical rotations” (25.7%, 65 of 253), “USMLEs” (26.1%, 66 of 253), and “participation beyond the clinical arena” (43.9%, 111 of 253). Free-text responses to this question reflected the varied data used, ranging from letter writers who based their opinion only on the student's performance on their EM clerkship to others who factored in all elements of overall medical school performance (USMLE scores, other rotation scores, etc).

On the Section C question asking how respondents determined how competitive the candidate would be, 68.2% (176 of 258) stated that they did not follow the 2×, 4×, 6× rule and instead answered based on a gestalt of very competitive, competitive, and possible match. The 31.8% (82 of 258) of respondents who used the rule interpreted it in 2 distinct ways: 19.0% (49 of 258) followed the 2×, 4×, 6× rule (for 8 incoming interns, those marked 2× would be in the top 16 places, 4× would be numbers 17–48, etc), and 12.8% (33 of 258) followed a different version of it (for 8 incoming interns, those marked 2× would be in the top 16 places, 4× would be in the top 32 places, etc). Many comments in the free-text section indicated confusion about that question.
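To make the 2 readings concrete, the sketch below computes the band cutoffs both ways for the same class size. The function names and the `consecutive` flag are hypothetical; the cutoffs themselves (16, 48, and 96 versus 16, 32, and 48 for 8 interns) are the ones described in the paragraph above.

```python
def band_cutoffs(interns: int, consecutive: bool = True) -> dict[str, int]:
    """Last rank-list position covered by each band of the 2x, 4x, 6x rule.

    consecutive=True: each band starts where the previous one ends
        (8 interns -> 16, 48, 96).
    consecutive=False: each multiple is counted from the top of the list
        (8 interns -> 16, 32, 48).
    """
    if interns < 1:
        raise ValueError("interns must be positive")
    if consecutive:
        end_2x = 2 * interns
        end_4x = end_2x + 4 * interns
        end_6x = end_4x + 6 * interns
    else:
        end_2x, end_4x, end_6x = 2 * interns, 4 * interns, 6 * interns
    return {"2x": end_2x, "4x": end_4x, "6x": end_6x}


def classify(position: int, cutoffs: dict[str, int]) -> str:
    """Return the first band whose cutoff covers the position."""
    for band, last_position in cutoffs.items():  # dicts keep insertion order
        if position <= last_position:
            return band
    return "unlikely"


# The same projected position lands in different bands under the 2 readings.
for reading in (True, False):
    print(reading, classify(60, band_cutoffs(8, consecutive=reading)))
```

For 8 interns, a candidate projected at position 60 is a 6× (possible match) under the first reading but an unlikely match under the second, so 2 writers with the same view of a candidate could check different boxes.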

Global SLOR Questions

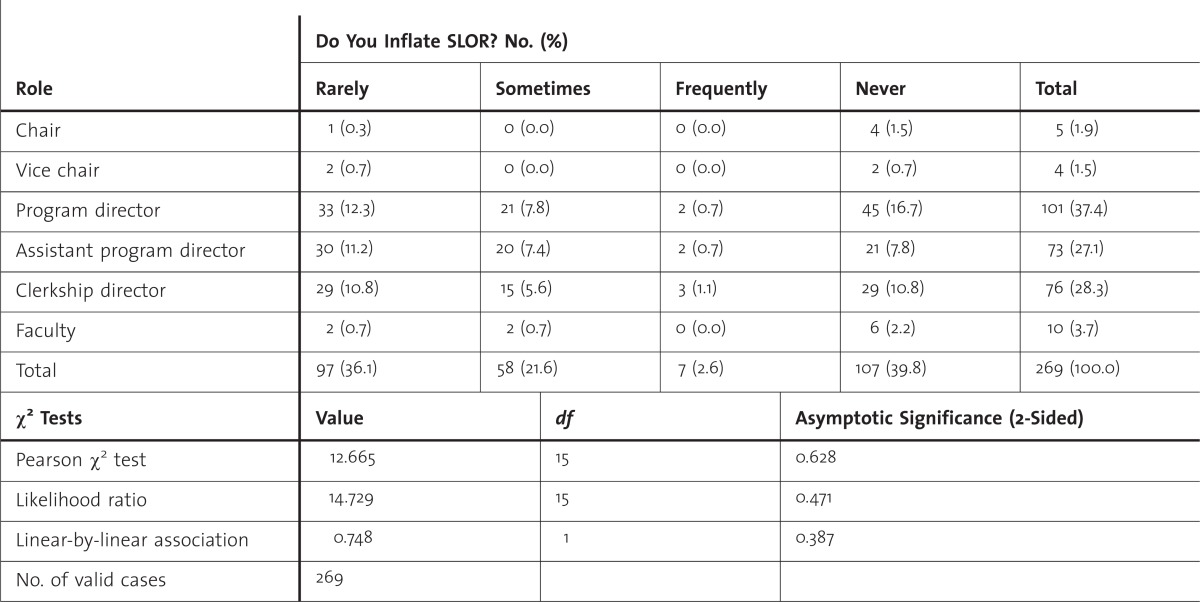

For all the data points marked on a SLOR, respondents were asked whether they had ever inflated a score to avoid hurting an applicant's chance of matching; 39.9% (108 of 271) stated they never inflated scores, 36.2% (98 of 271) rarely inflated scores, 21.4% (58 of 271) sometimes inflated scores, and 2.6% (7 of 271) frequently inflated scores. Table 3 shows these data classified by job title.

TABLE 3.

Survey Responses by Job Title for Score Inflation on Standardized Letter of Recommendation (SLOR)

One of the final questions asked was whether there was an applicant attribute that could not be assessed using the current SLOR, and 28.7% (68 of 237) of those surveyed indicated that there was. For the free text answers to that question, the most common attributes not currently assessed were “leadership” (n = 7) and “teamwork” (n = 7).

Across the free-text comments to all questions, the 2 most commonly stated phrases by far were “grade inflation” (n = 41) and “lack of consistency” (n = 30).

Discussion

The results of this survey of SLOR writers provided insight into the strengths and weaknesses of the SLOR as an assessment tool for residency candidates.

CORD provides guidelines on its website that outline how to properly fill out the SLOR,23 but our study indicates that only a fraction of writers regularly access those instructions. Although most respondents indicated they were aware of the guidelines, several important questions were not being completed according to them. Perhaps the most important point of confusion was the 2×, 4×, 6× rule for placing candidates on the rank list.23 Most respondents indicated that they did not follow that rule, even though the rule is recommended in the website guidelines and the SLOR template lists the triggers directly next to the check boxes. In addition, respondents who used the rule interpreted it in 2 distinct ways, with 19% (49 of 258) interpreting it to include spots 1 to 96 on the rank list, and 13% (33 of 258) interpreting it to include spots 1 to 48. There is room to clarify how the question should be answered and to educate those who fill out the SLOR in the suggested method. Another point of concern is that writers reported using different information to arrive at their final global assessment, with different SLOR writers drawing on different combinations of EM clinical experience, USMLE scores, core clinical grades, and participation beyond the clinical arena.

Most respondents to our survey indicated that they had learned how to write a SLOR on their own (67.4%, 182 of 270), showing a potential need for a more formal educational process to teach writers how to properly fill out a SLOR. Possible methods for educating SLOR writers could include lectures or workshops at national conferences or a video on the CORD website.

Regarding the optional “One key comment from ED faculty evaluation” question, a wide range of material was included in that answer. To standardize that section (Section A), either the guidelines need to be clearer about what should be placed in the answer or the section should be removed.

For Section B of the SLOR, no single question received a majority of “yes” answers favoring a change. Respondents did indicate that a few questions could be edited or removed, and 2 of them appeared on both the “to be edited” and the “removal” lists: B1 “Commitment to Emergency Medicine” and B5b “Given the necessary guidance, what is your prediction of success for the applicant?” Based on that information, we recommend that those questions be reassessed. There was also support for editing and improving the questions on 4a/4b “personality” and 5a “how much guidance.” Relatively few respondents wanted to drop those questions, suggesting interest in the topics but discomfort with the way the questions were worded. Some respondents (28.7%) felt that the current SLOR did not address an important area, and the most commonly recommended additions were questions on “leadership qualities or ability” and “teamwork.”

Because most respondents (75.8%, 204 of 269) reported that they rarely or never inflate scores, most SLOR authors appeared to believe they were giving a fairly true representation of the applicant and his or her Match potential. In contrast, the free-text comments supported the idea that grade inflation was a major problem. Further evidence of inflation comes from a recently published study24 that found grade inflation to be the most significant issue with the SLOR; in that study, 40% (234 of 583) of the SLORs reviewed placed the applicant in the top 10%.24 Whatever the explanation for this discrepancy between self-report and practice, grade inflation is an important controversy with the SLOR and an area that may warrant further study.24

Our study has several limitations. The response rate was 46%, and because the demographics of CORD listserv members are not known, we could not fully characterize the individuals who write SLORs or determine how representative the respondents were of the total population of SLOR writers. In addition, the survey questions were not tested for validity,25,26 and the retrospective self-report design and the potential for response or social desirability bias could also have affected our results.

Conclusion

The CORD SLOR has become the primary tool used by faculty to evaluate candidates applying for residency in EM. The SLOR has been in use within the community for 16 years; our study has identified some problems with its question design and use. Those difficulties may be overcome with a revised SLOR and faculty training in its use.

Footnotes

Cullen B. Hegarty, MD, is Associate Professor of Emergency Medicine, Regions Hospital, University of Minnesota; David R. Lane, MD, is Assistant Professor of Emergency Medicine, Medstar Georgetown University Hospital, Medstar Washington Hospital Center; Jeffrey N. Love, MD, is Vice Chair of Academic Affairs, Medstar Georgetown University Hospital, Medstar Washington Hospital Center; Christopher I. Doty, MD, is Associate Professor of Emergency Medicine, University of Kentucky; Nicole M. DeIorio, MD, is Associate Professor, Clerkship Director, and Co-Chief for Education, Department of Emergency Medicine, Oregon Health and Science University; Sarah Ronan-Bentle, MD, MS, is Assistant Professor, Department of Emergency Medicine, University of Cincinnati College of Medicine; and John Howell, MD, is Staff Physician, Inova Fairfax Hospital.

Funding: The authors report no external funding source for this study.

Conflict of interest: The authors declare they have no competing interests.

The authors would like to thank and acknowledge the Critical Care Research Consortium staff at Regions Hospital, Josh Salzman, and Alexandra Muhar for their assistance with the manuscript.

References

1. Grewal SG, Yeung LS, Brandes SB. Predictors of success in a urology residency program. J Surg Educ. 2013;70(1):138–143. doi: 10.1016/j.jsurg.2012.06.015.

2. Chole RA, Ogden MA. Predictors of future success in otolaryngology residency applicants. Arch Otolaryngol Head Neck Surg. 2012;138(8):707–712. doi: 10.1001/archoto.2012.1374.

3. Bell RM, Fann SA, Morrison JE, Lisk JR. Determining personal talents and behavioral styles of applicants to surgical training: a new look at an old problem, part II. J Surg Educ. 2012;69(1):23–29. doi: 10.1016/j.jsurg.2011.05.017.

4. Harfmann KL, Zirwas MJ. Can performance in medical school predict performance in residency? a compilation and review of correlative studies. J Am Acad Dermatol. 2011;65(5):1010–1022. doi: 10.1016/j.jaad.2010.07.034.

5. Cullen MW, Reed DA, Halvorsen AJ, Wittich CM, Kreuziger LM, Keddis MT, et al. Selection criteria for internal medicine residency applicants and professionalism ratings during internship. Mayo Clin Proc. 2011;86(3):197–202. doi: 10.4065/mcp.2010.0655.

6. Egol KA, Collins J, Zuckerman JD. Success in orthopaedic training: resident selection and predictors of quality performance. J Am Acad Orthop Surg. 2011;19(2):72–80. doi: 10.5435/00124635-201102000-00002.

7. Brenner AM, Mathai S, Jain S, Mohl PC. Can we predict “problem residents”. Acad Med. 2010;85(7):1147–1151. doi: 10.1097/ACM.0b013e3181e1a85d.

8. Nallasamy S, Uhler T, Nallasamy N, Tapino PJ, Volpe NJ. Ophthalmology resident selection: current trends in selection criteria and improving the process. Ophthalmology. 2010;117(5):1041–1047. doi: 10.1016/j.ophtha.2009.07.034.

9. Lee AG, Golnik KC, Oetting TA, Beaver HA, Boldt HC, Olso R, et al. Re-engineering the resident applicant selection process in ophthalmology: a literature review and recommendations for improvement. Surv Ophthalmol. 2008;53(2):164–176. doi: 10.1016/j.survophthal.2007.12.007.

10. MacLean LM, Alexander G, Oja-Tebbe N. Letters of recommendation in residency training: what do they really mean. Acad Psychiatry. 2011;35(3):342–343. doi: 10.1176/appi.ap.35.5.342.

11. Stedman JM, Hatch JP, Schoenfeld LS. Letters of recommendation for the predoctoral internship in medical schools and other settings: do they enhance decision making in the selection process. J Clin Psychol Med Settings. 2009;16(4):339–345. doi: 10.1007/s10880-009-9170-y.

12. DeZee KJ, Thomas MR, Mintz M, Durning SJ. Letters of recommendation: rating, writing and reading by clerkship directors of internal medicine. Teach Learn Med. 2009;21(2):153–158. doi: 10.1080/10401330902791347.

13. Blechman A, Gussman D. Letters of recommendation: an analysis for evidence of Accreditation Council for Graduate Medical Education core competencies. J Reprod Med. 2008;53(10):793–797.

14. Messner AH, Shimahara E. Letters of recommendation to an otolaryngology/head and neck surgery residency program: their function and the role of gender. Laryngoscope. 2008;118(8):1335–1344. doi: 10.1097/MLG.0b013e318175337e.

15. Shultz K, Mahabir RC, Song J, Verheyden CN. Evaluation of the current perspectives on letters of recommendation for residency applicants among plastic surgery program directors. Plast Surg Int. 2012;2012:728981. doi: 10.1155/2012/728981.

16. Stohl HE, Hueppchen NA, Bienstock JL. The utility of letters of recommendation in predicting resident success: can the ACGME competencies help. J Grad Med Educ. 2011;3(3):387–390. doi: 10.4300/JGME-D-11-00010.1.

17. Keim SM, Rein JA, Chisholm C, Dyne PL, Hendey GW, Jouriles NJ, et al. A standardized letter of recommendation for residency application. Acad Emerg Med. 1999;6(11):1141–1146. doi: 10.1111/j.1553-2712.1999.tb00117.x.

18. Girzadas DV Jr, Harwood RC, Dearie J, Garrett S. A comparison of standardized and narrative letters of recommendation. Acad Emerg Med. 1998;5(11):1101–1104. doi: 10.1111/j.1553-2712.1998.tb02670.x.

19. Perkins JN, Liang C, McFann K, Abaza MM, Streubel SO, Prager JD. Standardized letter of recommendation for otolaryngology residency selection. Laryngoscope. 2013;123(1):123–133. doi: 10.1002/lary.23866.

20. Prager JD, Perkins JN, McFann K, Myer CM III, Pensak ML, Chan KH. Standardized letter of recommendation for pediatric fellowship selection. Laryngoscope. 2012;122(2):415–424. doi: 10.1002/lary.22394.

21. Garmel GM. Letters of recommendation: what does good really mean. Acad Emerg Med. 1997;4(8):833–834. doi: 10.1111/j.1553-2712.1997.tb03796.x.

22. Oyama LC, Kwon M, Fernandez JA, Fernández-Frackelton M, Campagne DD, Castillo EM, et al. Inaccuracy of the global assessment score in the emergency medicine standard letter of recommendation. Acad Emerg Med. 2010;17(suppl 2):S38–S41. doi: 10.1111/j.1553-2712.2010.00882.x.

23. Council of Emergency Medicine Residency Directors. CORD website. http://www.cordem.org. Accessed January 23, 2013.

24. Love J, Deiorio NM, Ronan-Bentle S, Howell JM, Doty CI, Lane DR, et al. Characterization of the Council of Emergency Medicine Residency Director's standard letter of recommendation in 2011–2012. Acad Emerg Med. 2013;20(9):926–932. doi: 10.1111/acem.12214.

25. Rickards G, Magee C, Artino AR Jr. You can't fix by analysis what you've spoiled by design: developing survey instruments and collecting validity evidence. J Grad Med Educ. 2012;4(4):407–410. doi: 10.4300/JGME-D-12-00239.1.

26. Gehlbach H, Artino AR Jr, Durning S. AM last page: survey development guidance for medical education researchers. Acad Med. 2010;85(5):925. doi: 10.1097/ACM.0b013e3181dd3e88.