Abstract

In this paper, we present a novel approach to imaging sparse and focal neural current sources from MEG (magnetoencephalography) data. Using the framework of Tikhonov regularization theory, we introduce a new stabilizer that uses the concept of controlled support to incorporate a priori assumptions about the area occupied by focal sources. The paper discusses the underlying Tikhonov theory and its relationship to a Bayesian formulation which in turn allows us to interpret and better understand other related algorithms.

Introduction

The brain’s neuronal activity generates weak magnetic fields (10 fT-pT). Magnetoencephalography (MEG) is a non-invasive technique for characterizing these magnetic fields using an array of superconducting quantum interference devices (SQUIDs). SQUID magnetometers can measure changes in the brain’s magnetic field on a millisecond timescale, thus providing unique insights into the dynamic aspects of the brain’s activity. The goal of biomagnetic imaging is to use MEG data to characterize macroscopic dynamic neural information by solving an electromagnetic source localization problem.

In the past decade, the development of source localization algorithms has significantly progressed (Baillet et al., 2001). Currently, there are two general approaches to estimating MEG sources: parametric methods and tomographic imaging methods (Hamalainen et al., 1993). With parametric methods, a few current dipoles of unknown location and moment represent the sources. In this case, the inverse problem is a non-linear optimization in which one estimates the position and magnitude of the dipoles.

In this paper, we use the tomographic imaging method, where a grid of small voxels represents the entire brain volume. The inverse problem then seeks to recover a whole brain activation image, represented by the moments and magnitudes of elementary dipolar sources located at each voxel. The advantage of such a formulation is that the forward problem becomes linear. However, the ill-posed nature of the imaging problem constitutes a considerable difficulty, most notably due to the non-uniqueness of the solution.

A common way to constrain the non-uniqueness is to use weighted minimum norm methods. Such methods find solutions that match the data while minimizing a weighted l2 norm of the solution (Hamalainen et al., 1993; Sarvas, 1987; Wang et al., 1992; Pascual-Marqui and Biscay-Lirio, 1993). Unfortunately, these techniques tend to “smear” focal sources over the entire reconstruction region.

There are three basic approaches for creating less smeared solutions to the MEG focal imaging problem: (1) use of lp norms, (2) Bayesian estimation procedures with sparse priors, and (3) iterative reweighting methods. The first approach produces sparse solutions by using an l1 norm or, more generally, an lp norm. Although the l1 norm solution can be formulated as a linear programming problem which converges to the global solution, other lp norm methods are calculated using multidimensional iterative methods which often do not converge to the correct solution. Furthermore, all lp methods are sensitive to noise (Matsuura and Okabe, 1995; Uutela et al., 1999). The second approach is a Bayesian framework with sparse priors derived from Gibbs distributions (Schmidt et al., 1999). However, these methods are very computationally intensive since full a posteriori estimation is solved using Markov-chain Monte-Carlo or mean-field approximation methods (Bertrand et al., 2001a,b; Phillips et al., 1997). The third approach is the iteratively reweighted minimum norm method. The method uses a weighting matrix which, as the iterations proceed, reinforces strong sources and reduces weak ones (Gorodnitsky and Rao, 1997; Gorodnitsky and George, 1995). The problems associated with this method are sensitivity to noise, high dependency on the initial estimate, and a tendency to accentuate the peaks of the previous iteration. In addition, the method often produces an image of a focal source as a scattered cloud of multiple sources that lie near each other.

In this paper, we combine features of all three approaches outlined above and derive a novel Controlled Support MEG imaging algorithm, using Tikhonov regularization theory. The advantages of our algorithm are the quality of focal source images as well as robustness and speed. We first formulate the MEG inverse problem under the framework of Tikhonov regularization theory, and introduce a way to constrain the problem using specially selected stabilizing functionals. We then describe the relationship of this formulation to the minimum norm methods and Bayesian methods. Subsequently, we revisit the minimum support stabilizing functional, which obtains the sparsest possible solutions but may produce an image of a focal source as a cloud of points. To remedy this problem, we derive a new controlled support functional by adding an extra constraining term to the minimum support functional, and then explain the details of a computationally efficient method of reweighted optimization. The minimization algorithm section explains how the numerical minimization is carried out. In the Results and discussion section, we demonstrate the performance of the algorithm using results from Monte-Carlo simulation studies with realistic sensor geometries and a variety of noise levels.

Formulation of the MEG inverse problem using Tikhonov regularization

Let the three Cartesian components of the current dipole strength for each of the Ns/3 voxels be denoted by the length-Ns vector s. The data consist of a vector b that contains the magnetic field measurements at all receivers. The length of b is determined by the number of sensor sites, denoted by Nb. The forward modeling operator L connects the model to the data:

| $\mathbf{b} = \mathbf{L}\mathbf{s}$ | (1) |

where L is also known as the “lead field.” The lead field is a matrix of size Nb×Ns that connects the spatial distribution of the dipoles s to measurements at the sensors b. According to Hadamard (1902), the three difficulties in an inverse problem are: (1) the solution of the inverse problem may not exist, (2) the solution may be non-unique, (3) the solution may be unstable. The Tikhonov regularization theory resolves these difficulties using the notions of misfit, the stabilizer and the Tikhonov parametric functional.

The notion of misfit minimization resolves the first difficulty, the non-existence of the solution. In the event that an exact solution does not exist, we search for the solution that fits the data approximately, using the misfit functional as a goodness-of-fit measure. Following tradition (Eckhart, 1980), we use a quadratic form of the misfit functional, denoted as φ:

| $\varphi(\mathbf{s}) = \lVert \mathbf{L}\mathbf{s} - \mathbf{b} \rVert_2^2$ | (2) |

When the model produces a misfit that is smaller than the noise level (Tikhonov discrepancy principle), this model could be a solution of the problem.

The second difficulty, the non-uniqueness, is a situation where many different models have misfits smaller than the noise level. All of these models could be solutions of the problem. In practice, we need only one solution that is good. The stabilizing functional, denoted S(s), measures the goodness of the solution. Designing the stabilizer S is a difficult task which we will discuss in detail in the next two sections. In simplest terms, S is small for “good” models and large for “bad” models. Therefore, the weighted sum of misfit and stabilizer (denoted as P) measures both the goodness of the data fit and the goodness of the model:

| $P(\mathbf{s}) = \varphi(\mathbf{s}) + \lambda S(\mathbf{s})$ | (3) |

where λ is the regularization parameter and P is the Tikhonov parametric functional. Both difficulties considered so far (non-uniqueness and non-existence) are resolved by posing the minimization of the parametric functional:

| $\hat{\mathbf{s}} = \arg\min_{\mathbf{s}} P(\mathbf{s})$ | (4) |

The third difficulty, ill conditioning (instability), is a situation where a small variation in the data results in a large variation in the solution. A careful choice of the regularization parameter λ resolves this difficulty. In short, the Tikhonov discrepancy principle defines the choice of λ, which is discussed in The minimization algorithm section.

In summary, the formulation of the MEG inverse problem using Tikhonov regularization reduces to the minimization of the Tikhonov parametric functional (4).

Finally, we note that a probabilistic framework provides a similar view on the inverse problem (Baillet and Garnero, 1997). A Bayesian approach poses the maximum a posteriori (MAP) problem:

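| $\hat{\mathbf{s}} = \arg\max_{\mathbf{s}} \left[ \exp(-\varphi(\mathbf{s})) \, \exp(-\lambda S(\mathbf{s})) \right]$ | (5) |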

Note that maximizing (5) is equivalent to minimizing the negative of its logarithm, which is the problem (4). Although it starts from different underlying axioms, the Tikhonov problem results in a formulation similar to the Bayesian approach. In the Bayesian framework, the functional exp (−λS(s)) incorporates prior assumptions on the distribution of s. A stabilizer function in the Tikhonov framework can be viewed as the negative log of a prior probability drawn from an exponential distribution on the sources, without the normalization terms for probability distributions. For example, a quadratic functional corresponds to a Gaussian prior, a linear (l1) functional corresponds to a Laplacian prior, and a p-norm functional corresponds to a sparse distribution drawn from the exponential family.
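The correspondence between the two formulations can be written out in one line. The sketch below assumes, as the text implies, that the posterior in (5) is proportional to the product of a likelihood term exp(−φ(s)) and the prior term exp(−λS(s)); normalization constants are dropped because they do not depend on s.

```latex
-\ln\left[ e^{-\varphi(\mathbf{s})} \, e^{-\lambda S(\mathbf{s})} \right]
  = \varphi(\mathbf{s}) + \lambda S(\mathbf{s})
  = P(\mathbf{s}),
\qquad\text{so}\qquad
\arg\max_{\mathbf{s}} \; e^{-\varphi(\mathbf{s})} e^{-\lambda S(\mathbf{s})}
  = \arg\min_{\mathbf{s}} \; P(\mathbf{s}).
```

Under this reading, a quadratic stabilizer S(s) gives a Gaussian prior and the sum of absolute values gives a Laplacian prior, as stated above.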

The minimum support stabilizer

As discussed in the previous section, the role of a stabilizer is especially important in a situation in which many different models produce similar data. Clearly, the misfit functional alone cannot discriminate between these models. Therefore, this situation requires an additional discriminatory measure, such as the stabilizing functional.

The choice of the stabilizing functional S is difficult. S should be small for good models and large for bad models, so that the minimum of S determines the solution. Unfortunately, the definition of a good model relies upon empirical knowledge and depends upon each particular problem.

A good model for the MEG inverse problem should adequately represent focal current sources, i.e., sources that occupy a small volume (sources with small support). Therefore, the minimum support functional (Last and Kubik, 1983; Portniaguine and Zhdanov, 1999), denoted as Smin, is one possible choice for the stabilizer:

| $S_{min}(\mathbf{s}) = \frac{1}{N_s} \sum_{k=1}^{N_s} \frac{s_k^2}{s_k^2 + 10^{-16}}$ | (6) |

where sk is a component of vector s.

To better understand the physical meaning of the minimum support stabilizer, consider the following form of Smin:

| $S_{min}(\mathbf{s}) = \frac{1}{N_s} \sum_{k=1}^{N_s} \operatorname{sign}^2(s_k)$ | (7) |

where sign denotes the signum function

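| $\operatorname{sign}(s_k) = \begin{cases} 1, & s_k > 0 \\ 0, & s_k = 0 \\ -1, & s_k < 0 \end{cases}$ | (8) |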

Continuous approximation of the sign2 function is better for numerical implementation:

| $\operatorname{sign}^2(s_k) \approx \frac{s_k^2}{s_k^2 + 10^{-16}}$ | (9) |

where the constant $10^{-16}$ is machine precision. Note that substituting (9) into (7) leads to (6). The form (7) makes the physical meaning of Smin clear: the functional Smin measures the fraction of non-zero parameters. In other words, Smin measures support. If we use Smin as a stabilizer, we define a good model as one with small support.

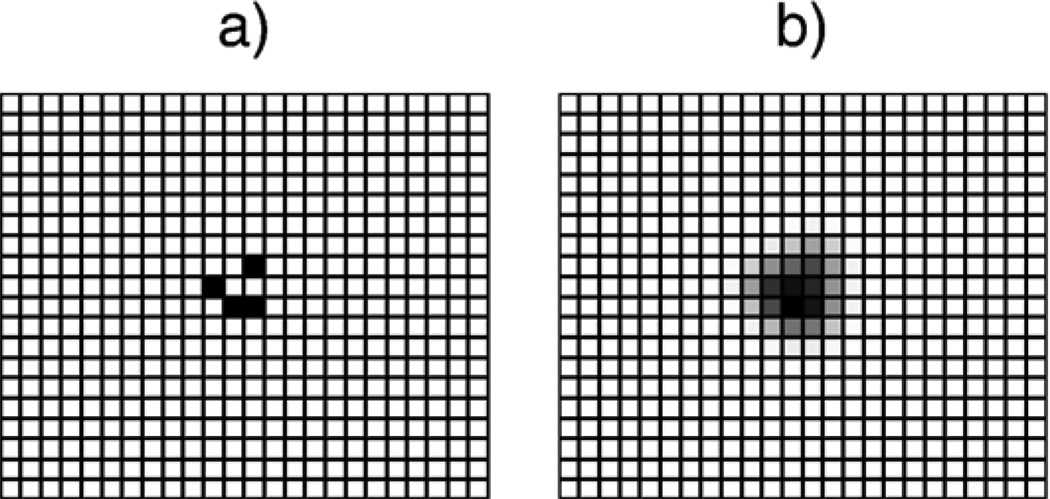

However, not all images with small support are suitable for imaging focal MEG sources. As indicated in Fig. 1, the minimum support method sometimes represents a single focal source as a cloud of scattered points, which can be misinterpreted as multiple local sources located near each other (panel a). What we ideally want in this situation is an image of a single patch, as depicted in panel (b) of Fig. 1. We note that this problem has also been reported by researchers working with other types of sparse priors (Phillips et al., 1997). In the next section, we deal with this problem by introducing an additional restrictive term to the minimum support stabilizer.

Fig. 1.

This Figure illustrates outcomes of two attempts to image a single focal source with two different stabilizers. (a) Image obtained with minimum support stabilizer is a cloud of disconnected multiple focal sources located near each other. (b) Image obtained with controlled support stabilizer is a single patch.

Controlled support stabilizer

The controlled support stabilizer (denoted as Scon) is a functional that reaches its minimum for images with a predetermined support value α. That value should be small, but not so small that it creates the undesirable effect of producing scattered sources. In other words, the image in Fig. 1 case b, which we consider to be good, produces a minimum of the stabilizer Scon. The undesirable (scattered) image (case a in the same figure) corresponds to a larger value of the stabilizer Scon. This discriminative effect of Scon arises because Scon is a weighted sum of the previously introduced minimum support stabilizer Smin and an additional restricting term Sreg:

| $S_{con}(\mathbf{s}) = (1-\alpha)\, S_{min}(\mathbf{s}) + \alpha\, S_{reg}(\mathbf{s})$ | (10) |

where the restricting term Sreg is:

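| $S_{reg}(\mathbf{s}) = \frac{\lVert \mathbf{s} \rVert_2^2}{\lVert \mathbf{s} \rVert_\infty \, \lVert \mathbf{s} \rVert_1} = \frac{\sum_k s_k^2}{\max_k \lvert s_k \rvert \, \sum_k \lvert s_k \rvert}$ | (11) |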

Upon examining expression (11), we notice that the functional Sreg has properties opposite to those of Smin: Sreg has a maximum where Smin has a minimum. The choice of the weighting factor α determines the balance between the terms (1−α)Smin and αSreg. In summary, Smin favors minimum support solutions, Sreg favors solutions with large support, and Scon favors solutions with support controlled by the value of α.

The remainder of this section addresses two important details. First, we explain why the effect of Sreg is opposite to that of Smin. Second, we discuss the normalizations of Smin and Sreg, which lead to their invariance to discretization. Note that Sreg is the square of the l2 norm divided by the product of the l∞ and l1 norms (11). We think of Sreg as a normalized l2 norm. Therefore, the minimum of Sreg is reached at the minimum l2 norm solution (a solution with large support, where Smin has its maximum). The maximum of Sreg is 1, which is attained for a case with one nonzero parameter, where Smin is at its minimum. Strictly speaking, the maximum of Sreg is also attained in other cases (for example, whenever all nonzero parameters have equal magnitude). However, the opposing properties of the minimums are more important for our purposes.

Now, we consider the normalizations of Smin and Sreg. Factor 1/Ns normalizes Smin (6), while divisions by l1 and l∞ norms normalize stabilizer Sreg (11). Normalizations are important for the meaningful summation of Smin and Sreg in expression (10), because they make the terms bounded:

| $0 \le S_{min} \le 1, \qquad 0 \le S_{reg} \le 1$ | (12) |

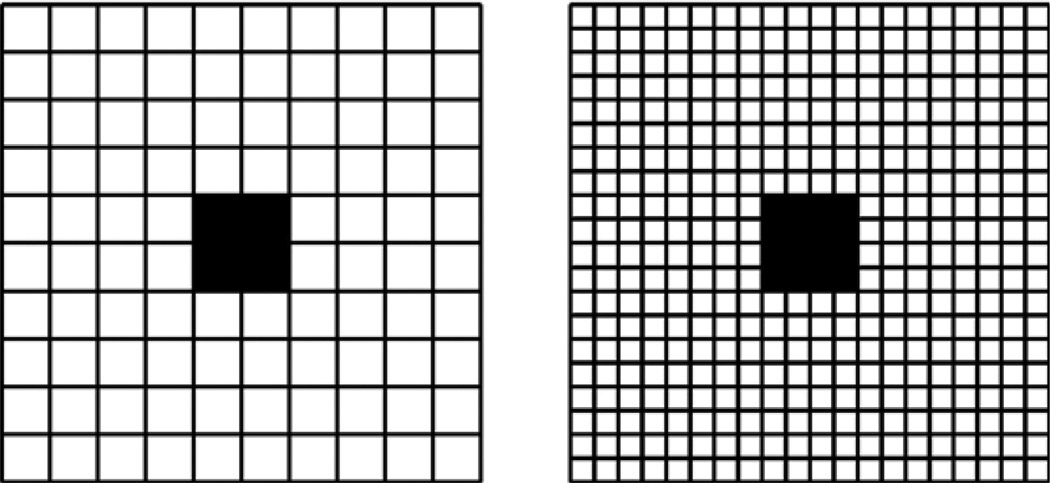

In addition, normalizations make functionals Smin and Sreg invariant to discretization and grayscale of the image. To illustrate this property, consider an example 2D image with a total of 100 pixels, where 96 pixels are zero, and a compact domain in the middle contains 4 pixels all with the value of a. The left panel in Fig. 2 illustrates this case. The following calculations find values of Smin and Sreg for this case:

| $S_{min} = \frac{4}{100} = 0.04, \qquad S_{reg} = \frac{4a^2}{a \cdot 4a} = 1$ | (13) |

Fig. 2.

Functionals Smin and Sreg are invariant to image level and discretization. For illustration, consider a 2D model depicted in this figure. Model has a non-zero domain in the middle. Left panel, shows case with 100 pixels and 4 non-zeros. Functional values for this model are Smin=0.04 and Sreg=1. Right panel, same case with finer discretization, 400 pixels and 16 non-zero values.

According to (13), Smin=0.04 (which is the fraction of non-zero pixels in the image from Fig. 2) and Sreg=1. Now, we refine the discretization by a factor of two in each dimension. The resulting image has a total of 400 pixels with 16 pixels containing the value a, as shown in the right panel of Fig. 2. Calculations similar to (13) show that Smin and Sreg do not change (Smin=0.04 and Sreg=1). This example also shows that the functional values are invariant to a (the image level, or grayscale). Note that the same properties hold true for the 3D grids and volume models that we consider in this paper.
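The invariance example can be checked numerically. The following is a minimal sketch, assuming that Smin takes the form (1/Ns) Σ s_k²/(s_k² + 10⁻¹⁶) and that Sreg is the squared l2 norm divided by the product of the l∞ and l1 norms, as described in the text; the function names and the helper `patch_image` are illustrative only.

```python
import numpy as np

BETA_SQ = 1e-16  # small constant of Eq. (9), as quoted in the text


def s_min(s):
    """Minimum support functional: approximate fraction of non-zero entries."""
    s = np.asarray(s, dtype=float).ravel()
    return np.mean(s**2 / (s**2 + BETA_SQ))


def s_reg(s):
    """Restricting term: squared l2 norm over (l-infinity norm times l1 norm).

    Undefined for an all-zero image (division by zero).
    """
    a = np.abs(np.asarray(s, dtype=float).ravel())
    return np.sum(a**2) / (a.max() * a.sum())


def s_con(s, alpha):
    """Controlled support functional: weighted sum of the two terms."""
    return (1.0 - alpha) * s_min(s) + alpha * s_reg(s)


def patch_image(n_side, patch_side, a):
    """Square image of zeros with a centered square patch of value a (hypothetical helper)."""
    img = np.zeros((n_side, n_side))
    lo = (n_side - patch_side) // 2
    img[lo:lo + patch_side, lo:lo + patch_side] = a
    return img


coarse = patch_image(10, 2, a=3.0)  # 100 pixels, 4 non-zero
fine = patch_image(20, 4, a=3.0)    # 400 pixels, 16 non-zero
print(s_min(coarse), s_reg(coarse))
print(s_min(fine), s_reg(fine))
```

Both images give the same pair of values, and changing `a` leaves them unchanged up to rounding, which is the invariance to discretization and grayscale discussed above.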

The method of reweighted optimization

In this section, we discuss how to solve the minimization problem using the method of reweighted optimization. To obtain the final form of the objective functional (denoted as Pcon), we substitute definitions (2), (6), (10), (11) into (3):

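| $P_{con}(\mathbf{s}) = \lVert \mathbf{L}\mathbf{s} - \mathbf{b} \rVert_2^2 + \lambda \left[ \frac{1-\alpha}{N_s} \sum_{k=1}^{N_s} \frac{s_k^2}{s_k^2 + 10^{-16}} + \alpha \, \frac{\sum_k s_k^2}{\lVert \mathbf{s} \rVert_\infty \lVert \mathbf{s} \rVert_1} \right]$ | (14) |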

The minimization problem to be solved is then:

| $\hat{\mathbf{s}} = \arg\min_{\mathbf{s}} P_{con}(\mathbf{s})$ | (15) |

The minimization of Pcon is difficult because it is a non-linear (non-quadratic) functional of s. We have two feasible options for the numerical solution of our non-quadratic problem. The first uses gradient-type inversion methods, and the second uses the method of reweighted optimization. While the gradient-type minimization method is well known (Fletcher, 1981), it requires computing the gradient of the functional (14). Computing such a gradient is problematic since we used the sign function while constructing Pcon (see formulas (8) and (9)).

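In this paper, we use the method of reweighted optimization, a historical choice for the related minimum support problem (Last and Kubik, 1983; Portniaguine and Zhdanov, 1999; Portniaguine, 1999). In addition, a number of researchers have found reweighted optimization convenient (Wolke and Schwetlick, 1988; O’Leary, 1990; Farquharson and Oldenburg, 1998), especially for cases where the non-linearity is represented by weights applied to a quadratic term. This is exactly our case. Notice that the term $s_k^2$ in (14) can be taken out of the brackets:

| $(1-\alpha)\, S_{min}(\mathbf{s}) + \alpha\, S_{reg}(\mathbf{s}) = \sum_{k=1}^{N_s} s_k^2 \left[ \frac{1-\alpha}{N_s \left( s_k^2 + 10^{-16} \right)} + \frac{\alpha}{\lVert \mathbf{s} \rVert_\infty \lVert \mathbf{s} \rVert_1} \right]$ | (16) |

Thus, model-dependent weighting of the quadratic functional represents the non-linearity in (14):

| $P_{con}(\mathbf{s}) = \lVert \mathbf{L}\mathbf{s} - \mathbf{b} \rVert_2^2 + \lambda \sum_{k=1}^{N_s} \frac{s_k^2}{w_k^2}$ | (17) |

where wk is the model-dependent weight

| $w_k = \left[ \frac{1-\alpha}{N_s \left( s_k^2 + 10^{-16} \right)} + \frac{\alpha}{\lVert \mathbf{s} \rVert_\infty \lVert \mathbf{s} \rVert_1} \right]^{-1/2}$ | (18) |

It is convenient to assemble the weights into a sparse diagonal matrix W(s) with the terms wk on the main diagonal, and write (17) in matrix notation:

| $P_{con}(\mathbf{s}) = \lVert \mathbf{L}\mathbf{s} - \mathbf{b} \rVert_2^2 + \lambda \lVert \mathbf{W}(\mathbf{s})^{-1} \mathbf{s} \rVert_2^2$ | (19) |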

To understand our optimization algorithm in detail, it is necessary to convert the parametric functional to a purely quadratic form, which has a known analytic solution. This form is obtained by transforming the problem into a space of weighted model parameters. To do that, we insert W(s)W(s)−1 term into (19):

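| $P_{con}(\mathbf{s}) = \lVert \mathbf{L}\, \mathbf{W}(\mathbf{s}) \mathbf{W}(\mathbf{s})^{-1} \mathbf{s} - \mathbf{b} \rVert_2^2 + \lambda \lVert \mathbf{W}(\mathbf{s})^{-1} \mathbf{s} \rVert_2^2$ | (20) |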

Then, we transform (20) by replacing the variables:

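| $\mathbf{s}_w = \mathbf{W}(\mathbf{s})^{-1} \mathbf{s}, \qquad \mathbf{L}_w = \mathbf{L}\, \mathbf{W}(\mathbf{s})$ | (21) |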

After substituting (21), expression (20) results in a purely quadratic form of the functional with respect to sw:

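| $P_{con}(\mathbf{s}_w) = \lVert \mathbf{L}_w \mathbf{s}_w - \mathbf{b} \rVert_2^2 + \lambda \lVert \mathbf{s}_w \rVert_2^2$ | (22) |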

Since Pcon (sw) is purely quadratic with respect to sw, the minimization problem for Pcon (sw) has an analytical solution, known as the Riesz representation theorem (Aliprantis and Burkinshaw, 1978):

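| $\mathbf{s}_w = \mathbf{L}_w^T \left( \mathbf{L}_w \mathbf{L}_w^T + \lambda \mathbf{I}_b \right)^{-1} \mathbf{b}$ | (23) |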

where Ib is the unit matrix in the space of data (of size Nb×Nb).

Thus, the idea of reweighted optimization is to solve (15) iteratively, assuming the weights are constant on each iteration. Starting from the initial guess for the weights, we use the Riesz representation theorem to find the weighted solution. We then convert back to the original space, update the weights depending on the solution, and repeat the iterative process. The next section discusses the details of this process. Note that Eq. (23) is identical to the MAP estimator with Gaussian priors for the sources and the noise, where the source variance is assumed to be an unknown diagonal matrix and the noise variance is known and parameterized by λ.
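A single reweighted step can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: it assumes the weights have the form w_k = [(1−α)/(Ns(s_k² + 10⁻¹⁶)) + α/(‖s‖∞‖s‖₁)]^(−1/2), obtained by factoring s_k² out of the stabilizer, and that the weighted solution is the usual data-space minimum norm expression L_wᵀ(L_w L_wᵀ + λI_b)⁻¹b. The names `weights` and `reweighted_step`, the toy matrix sizes, and the values of λ and α are hypothetical.

```python
import numpy as np


def weights(s, alpha, beta_sq=1e-16):
    """Diagonal of W(s) (assumed form); not defined for an all-zero s."""
    s = np.asarray(s, dtype=float)
    a = np.abs(s)
    inv_w_sq = (1.0 - alpha) / (s.size * (s**2 + beta_sq)) + alpha / (a.max() * a.sum())
    return 1.0 / np.sqrt(inv_w_sq)


def reweighted_step(L, b, w, lam):
    """One step with frozen weights: returns the model s and the weighted model s_w."""
    Lw = L * w                      # same as L @ diag(w): scales column k by w[k]
    gram = Lw @ Lw.T                # small Nb x Nb matrix in data space
    sw = Lw.T @ np.linalg.solve(gram + lam * np.eye(L.shape[0]), b)
    return w * sw, sw               # convert back to the original space: s = W s_w


rng = np.random.default_rng(0)
L = rng.standard_normal((5, 12))    # toy "lead field": 5 sensors, 12 unknowns
b = rng.standard_normal(5)

s1, _ = reweighted_step(L, b, np.ones(12), lam=0.1)               # unit weights: minimum norm
s2, sw2 = reweighted_step(L, b, weights(s1, alpha=0.2), lam=0.1)  # one reweighting
```

Solving in data space keeps the linear system at size Nb×Nb, which is why the approach stays cheap even when the number of unknowns Ns is large.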

The minimization algorithm

The algorithm for minimizing the parametric functional is iterative. On each iteration (enumerated with index n), we compute the updates of the weights Wn, the weighted lead fields Lwn, the weighted model swn, and the model sn. These quantities depend upon values from the previous iteration (denoted with index n−1). Inputs to the algorithm include the data b, the noise level estimate φ0, and the support parameter α. Before the first iteration, we precompute the lead fields L, set the weights to one, W0=Is, and set the current model update to zero, s0=0. Three important additional steps are incorporated in the final algorithm. The first is the choice of the regularization parameter, the second is the line search correction, and the third is the termination criterion.

According to the Tikhonov condition, the choice of the regularization parameter λ should be such that the misfit (2) at the solution equals an a priori known noise level φ0 (Tikhonov and Arsenin, 1977):

| $\varphi(\mathbf{s}_w) = \lVert \mathbf{L}_w \mathbf{s}_w - \mathbf{b} \rVert_2^2 = \varphi_0$ | (24) |

Substituting (23) into (24) yields the equation

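| $\left\lVert \mathbf{L}_w \mathbf{L}_w^T \left( \mathbf{L}_w \mathbf{L}_w^T + \lambda \mathbf{I}_b \right)^{-1} \mathbf{b} - \mathbf{b} \right\rVert_2^2 = \varphi_0$ | (25) |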

which we solve with a fixed point iteration method. In Eq. (25) the only unknown variable is the scalar parameter λ. Since the Gram matrix is small (Nb×Nb, where Nb is small), the fixed point iteration method easily solves Eq. (25) for λ. For cases where the data dimension Nb is large, which makes the direct inversion of the Gram matrix impractical, solving Eq. (22) using the Riesz theorem (23) can be replaced by solving (22) via a conjugate gradient method (Portniaguine, 1999). In this paper, we consider processing of MEG data from an array of 102 sensors, so the dimension of the data is small (Nb=102) and therefore the Gram matrix is easily invertible with direct methods. Such a choice of the regularization term is analogous to setting the noise variance in the Bayesian MAP estimation procedure.
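The selection of λ by the discrepancy condition can be illustrated as follows. The text does not give the form of the fixed point iteration, so this sketch swaps in a bisection on log λ instead; it relies only on the fact that the misfit of the regularized solution grows monotonically with λ. It also assumes the unnormalized quadratic misfit ‖L_w s_w − b‖². The function names, the bracketing interval, and the toy problem are hypothetical.

```python
import numpy as np


def misfit_curve(Lw, b):
    """Return phi(lam): squared residual of the regularized solution, via one eigendecomposition."""
    g, U = np.linalg.eigh(Lw @ Lw.T)       # Gram matrix is symmetric, Nb x Nb
    g = np.clip(g, 0.0, None)              # guard against tiny negative eigenvalues
    c = U.T @ b
    # Residual b - G (G + lam I)^-1 b has components lam * c_i / (g_i + lam).
    return lambda lam: float(np.sum((lam * c / (g + lam)) ** 2))


def choose_lambda(Lw, b, phi0, lo=1e-12, hi=1e12, iters=200):
    """Bisection in log(lam) for phi(lam) = phi0 (a substitute for the fixed point iteration)."""
    phi = misfit_curve(Lw, b)
    if not phi(lo) < phi0 < phi(hi):
        raise ValueError("phi0 is outside the attainable misfit range")
    for _ in range(iters):
        mid = np.sqrt(lo * hi)              # geometric midpoint
        if phi(mid) < phi0:
            lo = mid
        else:
            hi = mid
    return np.sqrt(lo * hi)


rng = np.random.default_rng(1)
Lw = rng.standard_normal((6, 20))           # toy weighted lead field
b = rng.standard_normal(6)
phi0 = 0.01 * (b @ b)                       # target misfit: 1% of the data energy
lam = choose_lambda(Lw, b, phi0)
```

Because the eigendecomposition of the small Gram matrix is computed once, each evaluation of the misfit during the search costs only O(Nb) operations.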

Second, to ensure convergence of the algorithm, we incorporate a line search procedure. Convergence of the reweighted optimization depends upon how accurately Eq. (22) approximates the original non-quadratic Eq. (19). That, in turn, depends on the assumption of constant weights, which, in our case, are dependent on s. The usual assumption for any iterative method is that the changes in a model are small from one iteration sn−1 to the next sn. That assumption may not always hold, and therefore, steps which converge on Eq. (22) may be divergent on the original Eq. (16) due to significant changes in W(s). The well-known method of line search (Fletcher, 1981) serves to correct this problem. Once the next approximate update s′n is found from the previous update sn−1 using the approximate formula (22), we check the value of the original non-linear objective functional Pcon (s′n). If the objective functional decreases, the line search is not deployed. If the objective functional increases (which signifies a divergent step), we perform a line search.

If Pcon (sn−1)<Pcon (s′n), we perform a line search by searching for the minimum of Pcon with respect to the scalar variable t (the step length), where the corrected update is $\mathbf{s}_n = \mathbf{s}_{n-1} + t\,(\mathbf{s}'_n - \mathbf{s}_{n-1})$.

If Pcon (sn−1)≥Pcon (s′n), then we set t=1 and do not perform the line search. Note that the smaller the step size t is, the closer the corrected update sn is to the previous update sn−1 from which the current weights were derived. Thus, the smaller t is, the less the weights change from iteration n−1 to iteration n, and therefore, the more accurate our quadratic approximation becomes. With small enough t, a smaller value of Pcon will always be found somewhere between sn−1 and s′n. Minimization of the scalar functional Pcon with respect to the scalar argument t is a simple 1D problem. That problem is solved by sampling the function at a few points (usually three or four), fitting a parabola to the samples, and finding the argument of its minimum. Such sampling is fast: for one estimate of the functional we only need to solve one forward problem. In many previously conducted studies with the minimum support functional Smin, the reweighted optimization has never diverged (Last and Kubik, 1983; Portniaguine and Zhdanov, 1999). Our new controlled support functional, Scon, is dominated by the term Smin. Therefore, we expected the same good convergence for Scon as was reported for Smin. Indeed, in the Monte-Carlo simulations carried out in this paper and with some limited datasets, the algorithm has never called the line search routine because divergence was never detected.
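The parabola-fitting line search described above can be sketched as follows. The sketch assumes that the corrected update is a linear interpolation between the previous update and the trial update, s = s_prev + t (s_trial − s_prev); the sample points, the function name `parabolic_step`, and the fallback rule for a non-convex fit are illustrative choices rather than details taken from the text.

```python
import numpy as np


def parabolic_step(objective, s_prev, s_trial, ts=(0.25, 0.5, 0.75, 1.0)):
    """Sample the objective along the step, fit a parabola, return (t, corrected model)."""
    ts = np.asarray(ts, dtype=float)
    direction = s_trial - s_prev
    values = np.array([objective(s_prev + t * direction) for t in ts])
    a, b, _ = np.polyfit(ts, values, 2)          # values ~ a t^2 + b t + c
    if a > 0:
        t = float(np.clip(-b / (2.0 * a), 0.0, 1.0))   # vertex of the parabola, kept in [0, 1]
    else:
        t = float(ts[np.argmin(values)])         # fit is not convex: fall back to best sample
    return t, s_prev + t * direction


# Toy objective with a known minimum, used only to exercise the routine.
target = np.array([1.0, -2.0, 0.5])
objective = lambda s: float(np.sum((s - target) ** 2))
t_best, s_best = parabolic_step(objective, np.zeros(3), 4.0 * target)
```

In this toy case the trial step overshoots the minimum by a factor of four, and the fitted parabola recovers a step length of one quarter.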

Third, the termination criterion was formulated based on the following two observations.

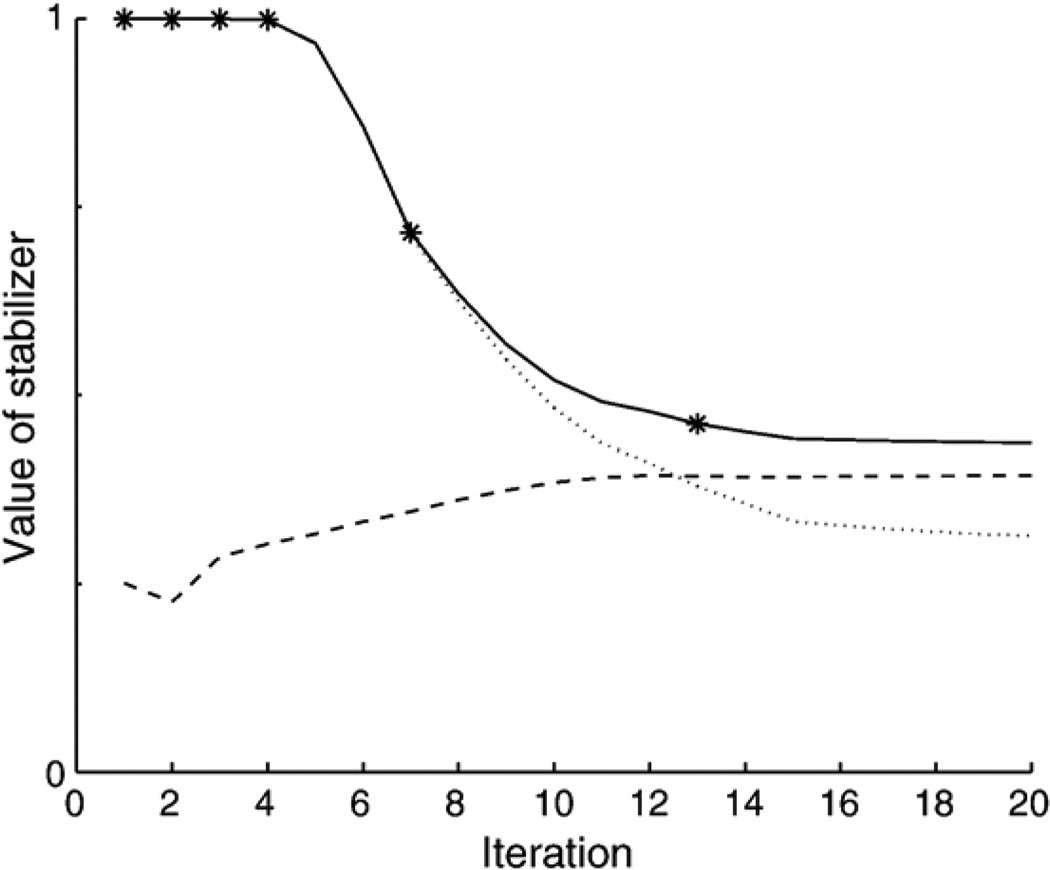

First, we observe that all updates sn produce the same misfit φ0 (due to enforcement of the Tikhonov condition). Therefore, only the second term Scon determines the minimum of Pcon (sn) on a given set of arguments sn. This second term consists of two parts: (1−α) Smin (sn) and αSreg (sn). The second observation is that the Smin (sn) term decreases with n, and the Sreg (sn) term increases with n. This happens because on the first iteration n=1 we produce the minimum norm solution, and then progress towards more focused solutions (see the discussion of the opposing properties of Smin and Sreg in the Controlled support stabilizer section). So, the dominance of the αSreg term over the (1−α)Smin term signifies close proximity to the minimum of Pcon. We summarize the complete algorithm as follows:

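- Step 1. Compute Lwn using (21): $\mathbf{L}_{wn} = \mathbf{L}\,\mathbf{W}_{n-1}$.

- Step 2. Determine the regularization parameter λn by fixed point iteration of Eq. (25).

- Step 3. Find the weighted model swn using (23): $\mathbf{s}_{wn} = \mathbf{L}_{wn}^T \left( \mathbf{L}_{wn} \mathbf{L}_{wn}^T + \lambda_n \mathbf{I}_b \right)^{-1} \mathbf{b}$.

- Step 4. Find the preliminary update of the model s′n using (21): $\mathbf{s}'_n = \mathbf{W}_{n-1}\, \mathbf{s}_{wn}$.

- Step 5. Check for divergence and incorporate the line search. The corrected update sn is found from the previous update sn−1 using the step length t: $\mathbf{s}_n = \mathbf{s}_{n-1} + t\,(\mathbf{s}'_n - \mathbf{s}_{n-1})$.

- Step 6. Check the termination criterion. We stop iterations if $(1-\alpha)\, S_{min}(\mathbf{s}_n) < \alpha\, S_{reg}(\mathbf{s}_n)$.

- Step 7. Find the updated weight Wn using (18) and go to Step 1, repeating all steps in the loop.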
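The loop summarized above can be put together in a compact sketch. Several parts are assumptions rather than the authors' implementation: the functional and weight formulas follow the forms described in the text, the regularization parameter is found by bisection rather than the fixed point iteration, the line search is omitted (the text reports it was never triggered), and all names, sizes, and parameter values are hypothetical.

```python
import numpy as np

BETA_SQ = 1e-16  # small constant of Eq. (9)


def s_min(s):
    return np.mean(s**2 / (s**2 + BETA_SQ))


def s_reg(s):
    a = np.abs(s)
    return np.sum(s**2) / (a.max() * a.sum())


def weights(s, alpha):
    """Assumed weight form: factor multiplying s_k^2 in the stabilizer, to the power -1/2."""
    a = np.abs(s)
    inv_w_sq = (1 - alpha) / (s.size * (s**2 + BETA_SQ)) + alpha / (a.max() * a.sum())
    return 1.0 / np.sqrt(inv_w_sq)


def solve_weighted(Lw, b, phi0, n_bisect=100):
    """Pick lam so that the misfit is close to phi0, then return (s_w, lam)."""
    g, U = np.linalg.eigh(Lw @ Lw.T)
    g = np.clip(g, 0.0, None)
    c = U.T @ b
    phi = lambda lam: np.sum((lam * c / (g + lam)) ** 2)
    scale = max(g.max(), 1e-300)
    lo, hi = 1e-12 * scale, 1e12 * scale
    if phi(lo) >= phi0:        # cannot fit to the noise level: use the smallest lam
        lam = lo
    elif phi(hi) <= phi0:      # noise level above data energy: use the largest lam
        lam = hi
    else:
        for _ in range(n_bisect):
            mid = np.sqrt(lo * hi)
            lo, hi = (mid, hi) if phi(mid) < phi0 else (lo, mid)
        lam = np.sqrt(lo * hi)
    # (G + lam I)^-1 b computed from the eigendecomposition, then mapped by Lw^T.
    return Lw.T @ (U @ (c / (g + lam))), lam


def controlled_support(L, b, phi0, alpha, max_iter=30):
    w = np.ones(L.shape[1])                              # initial weights: identity
    for n in range(1, max_iter + 1):
        Lw = L * w                                       # weighted lead field
        sw, lam = solve_weighted(Lw, b, phi0)            # regularization and weighted model
        s = w * sw                                       # back to the original space
        if (1 - alpha) * s_min(s) < alpha * s_reg(s):    # termination criterion
            break
        w = weights(s, alpha)                            # reweight and repeat
    return s, n


rng = np.random.default_rng(0)
L = rng.standard_normal((8, 40))                         # toy problem: 8 sensors, 40 unknowns
s_true = np.zeros(40)
s_true[17] = 1.0
noise = 0.01 * rng.standard_normal(8)
b = L @ s_true + noise
s_est, n_iter = controlled_support(L, b, phi0=noise @ noise, alpha=0.5)
```

The random matrix stands in for a lead field only to make the sketch runnable; it says nothing about the localization performance reported in the paper.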

Results and discussion

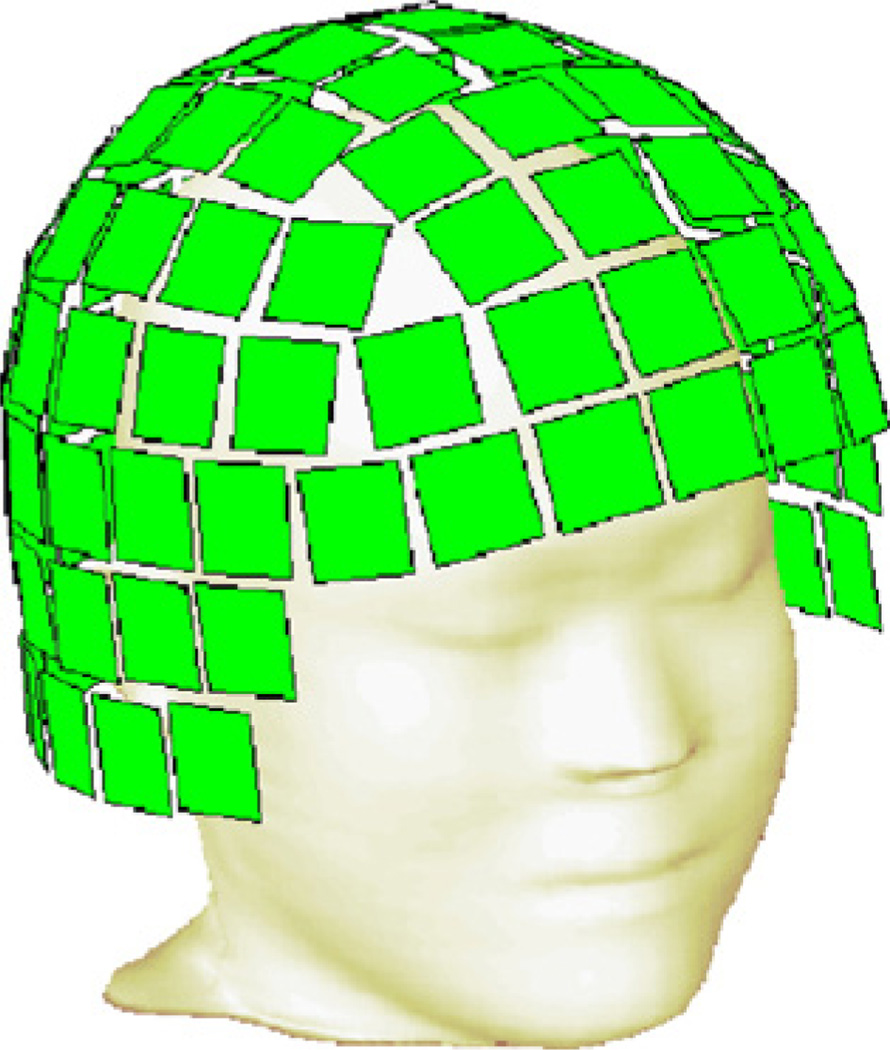

In simulations, we demonstrate the algorithm performance and estimate the localization accuracy and speed of the method. The geometry for all simulations was taken from an MR (magnetic resonance) image of a subject. Fig. 3 shows a head surface extracted from the MR volume image. We transformed the MEG sensor array to MRI coordinates by matching the reference points (measured on the subject's head) and the extracted head surface (Kozinska et al., 2001). Fig. 3 shows the MEG array as a “helmet” consisting of 102 square sensor “plates.” Each plate has magnetic coils that measure the component of the magnetic field normal to the plate. The middle of the plate serves as a reference point. In order to parameterize the inverse problem, we divided the volume of the brain into 30,000 cubic voxels of size 4×4×4 mm. Vector s consists of the strengths of the three components of the current dipole within each voxel. This produces Ns=90,000 free inversion parameters (unknowns). This parameterization takes into account both gray matter and white matter. We did not use the alternative parameterization with the cortical surface constraint because the final reconstruction result would strongly depend on the accuracy of the cortical surface extraction procedure. Since the controlled support algorithm is the focus of our investigation, we did not use cortical surface extraction, which may mask the evaluation of the algorithm's performance.

Fig. 3.

Geometry that was used in the model study. An outer head surface was extracted from the subject's MRI. MEG sensor array (a “hat” consisting of square receiver “plates”, as shown here) was positioned in MRI coordinates by matching the reference points to the head surface. Each “plate” measures normal component of a magnetic field.

We built the underlying forward model (lead field operator) using the formula for a dipole in a homogeneous sphere (Sarvas, 1987). We computed the sensitivity kernel for each sensor (a row of matrix L), using an individual sphere locally fit to the surface of the head near that particular sensor site (Huang et al., 1999).

As a first numerical experiment, we placed two dipoles within the brain, approximately at the level of the primary auditory cortex.

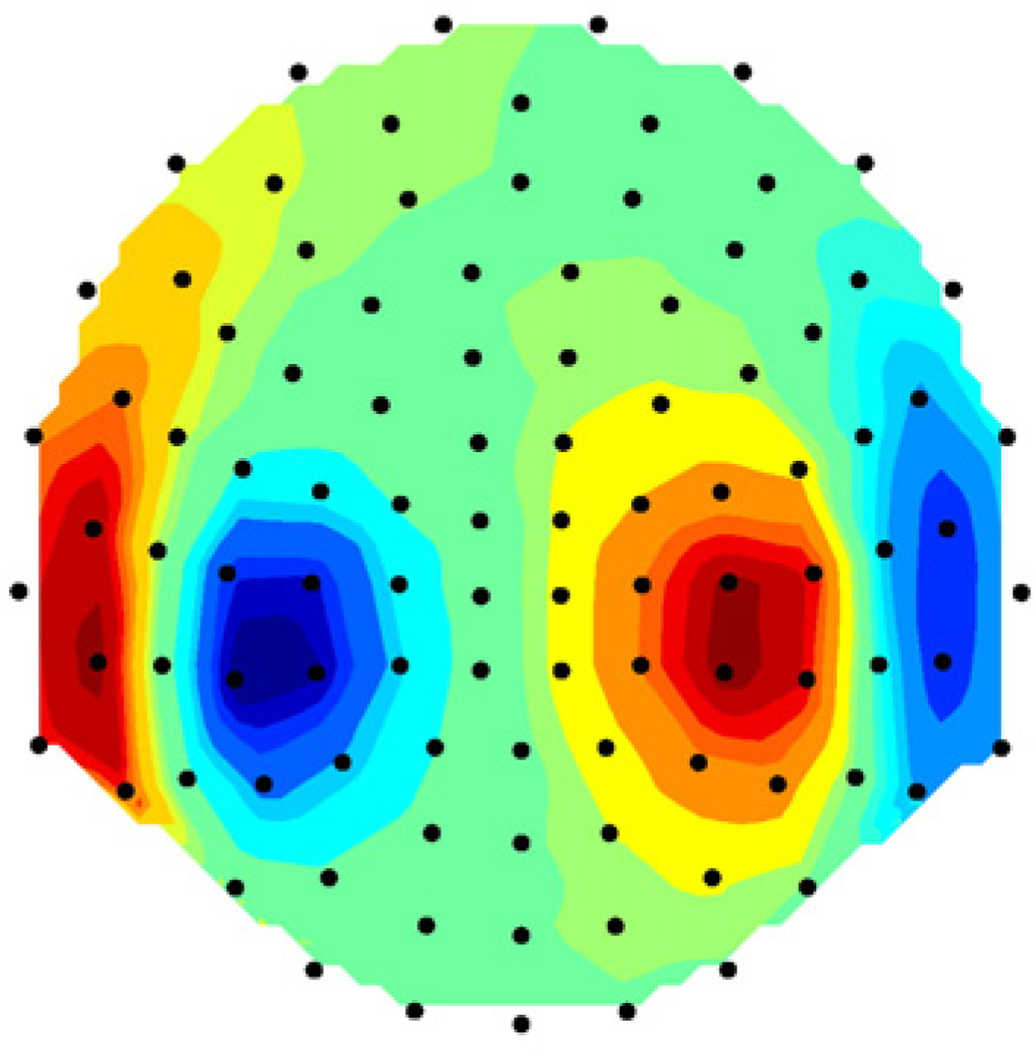

Fig. 4 shows the location of dipoles within the brain. That setup defines the vector s as zeros everywhere except the specified dipole locations. We generate the data b using the forward Eq. (1), adding Gaussian random noise such that the SNR is 400. The level of noise is set relative to the data and measured in the same units as a normalized misfit, as defined by the formula

| (26) |

where SNR is the signal-to-noise ratio defined by the ratio of the average signal power across channels divided by the average noise power, and n is the noise vector. Fig. 5 shows the resulting data as a flat projection.
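Generating noisy data at a prescribed SNR, using the power-ratio definition given above, can be sketched as follows. The sketch rescales the noise realization so that the realized ratio matches the target exactly; whether the original simulations rescaled each realization in this way is not stated, so this is an assumption, as are the function name and the stand-in data.

```python
import numpy as np


def add_noise(b_clean, snr, rng):
    """Add Gaussian noise so that mean signal power / mean noise power equals snr."""
    noise = rng.standard_normal(b_clean.shape)
    signal_power = np.mean(b_clean**2)
    # Rescale this realization so its average power is signal_power / snr.
    noise *= np.sqrt(signal_power / (snr * np.mean(noise**2)))
    return b_clean + noise, noise


rng = np.random.default_rng(0)
b_clean = rng.standard_normal(102)        # stand-in for noise-free data at 102 sensors
b_noisy, n_vec = add_noise(b_clean, snr=400.0, rng=rng)
```

With this definition, an SNR of 400 corresponds to a noise power of 0.25% of the signal power.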

Fig. 4.

Location of two test dipoles (stars) within the head.

Fig. 5.

Magnetic field data for two-dipole model (the model from Fig. 4). Data are shown by color map superimposed on flat projection of measuring array (the helmet from Fig. 3). Dots show the locations of the sensors, each sensor corresponds to one plate in Fig. 3. Data contain Gaussian random noise such that the SNR=400.

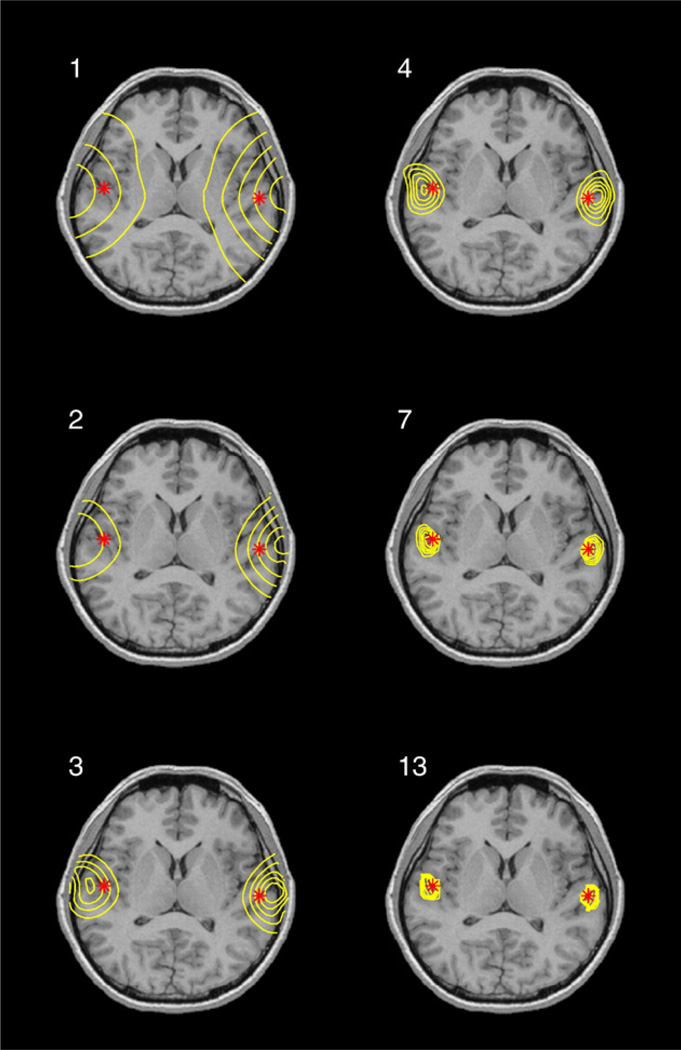

To illustrate convergence, Fig. 6 shows the evolution of stabilizers during iterations, and Fig. 7 shows the evolution of the solution. The isolines in Fig. 7 display the magnitudes of the dipoles in the solutions, superimposed on a corresponding MRI slice.

Fig. 6.

Evolution of stabilizers during reweighted iterations. Solid line shows evolution of S, dashes show evolution of αSreg and dots show the evolution of (1−α)Smin. Stars show the stopping point, where term (1−α)Smin becomes less than term αSreg. After that point term αSreg (dashes) dominates, and Scon (solid line) flattens, as illustrated by this figure.

Fig. 7.

Evolution of solution during reweighted iterations. The case corresponds to example discussed in Fig. 6. Stars show “true” location of dipoles (location of dipoles within the head is shown in Fig. 4). Solution is superimposed on corresponding MRI slice as isolines. Panels numbered 1, 2, 3, 4, 7, 13 show the solutions at the corresponding iteration.

Fig. 7 displays six solutions produced at different iterations. The first solution (panel 1) is a minimum norm solution that is smooth, and its maximums are far away from the true dipole locations. In contrast to a common misconception about reweighted optimization methods, observe how the location of maximum activation shifts during the reweighted iterations (across the six panels in Fig. 7). Note that the method discussed here does not simply accentuate the peaks of the previous iteration. The size and shape of the estimated source area are mainly due to the model and the noise level and do not directly relate to the size and shape of the original source.

Each of these solutions describes the data equally well, but they do not all satisfy the prior expectation that the activity is focused. The solutions are indistinguishable in terms of the data fit; however, they have different values of the stabilizer. Fig. 6 shows the evolution of the stabilizer S (solid line) and its individual components (1−α)Smin (dots) and αSreg (dashes). We see that the first solution has a large value of the stabilizer, and on the next iterations, the stabilizer decreases to a minimum.

We have empirically determined, from our Monte-Carlo experiments, that the best estimate of the dipole location is not the maximum of the image, but rather the location of the maximum of a local weighted average of the image around its maximum. Such a technique is better since it provides estimates located away from the grid nodes, and is, therefore, less sensitive to a given inversion grid. We estimate the dipole locations by thresholding the whole image at 10% of the maximum, separating the individual maximums by clustering, and determining the center of each cluster as the average of the positions of the cluster points, with weights corresponding to the intensity of the image. This procedure is very fast for our compact images (fractions of a second) and does not increase the overall computation time.
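The location estimate described above (threshold, cluster, intensity-weighted center) can be sketched as follows. The clustering method is not specified in the text, so this sketch assumes clusters are face-connected components of the thresholded image; the function name is hypothetical, the input is assumed to be a non-zero array of non-negative magnitudes, and positions are returned in voxel index units.

```python
import numpy as np
from collections import deque


def cluster_centroids(image, frac=0.10):
    """Threshold at frac * max, group face-connected voxels, return weighted centers."""
    image = np.asarray(image, dtype=float)
    mask = image >= frac * image.max()
    labels = np.zeros(image.shape, dtype=int)
    centroids = []
    for seed in zip(*np.nonzero(mask)):
        if labels[seed]:
            continue                                  # voxel already belongs to a cluster
        label = len(centroids) + 1
        labels[seed] = label
        queue, members = deque([seed]), []
        while queue:                                  # breadth-first flood fill
            idx = queue.popleft()
            members.append(idx)
            for axis in range(image.ndim):
                for step in (-1, 1):
                    nb = list(idx)
                    nb[axis] += step
                    nb = tuple(nb)
                    if 0 <= nb[axis] < image.shape[axis] and mask[nb] and not labels[nb]:
                        labels[nb] = label
                        queue.append(nb)
        pts = np.array(members, dtype=float)
        wts = np.array([image[m] for m in members])
        centroids.append((pts * wts[:, None]).sum(axis=0) / wts.sum())
    return centroids


img = np.zeros((7, 7))
img[2, 2], img[2, 3] = 1.0, 3.0     # one two-voxel cluster
img[5, 5] = 2.0                     # a second, isolated cluster
centers = cluster_centroids(img)
```

For the two-voxel cluster the weighted center falls between grid nodes, closer to the brighter voxel, which is the off-grid behavior the paragraph above describes.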

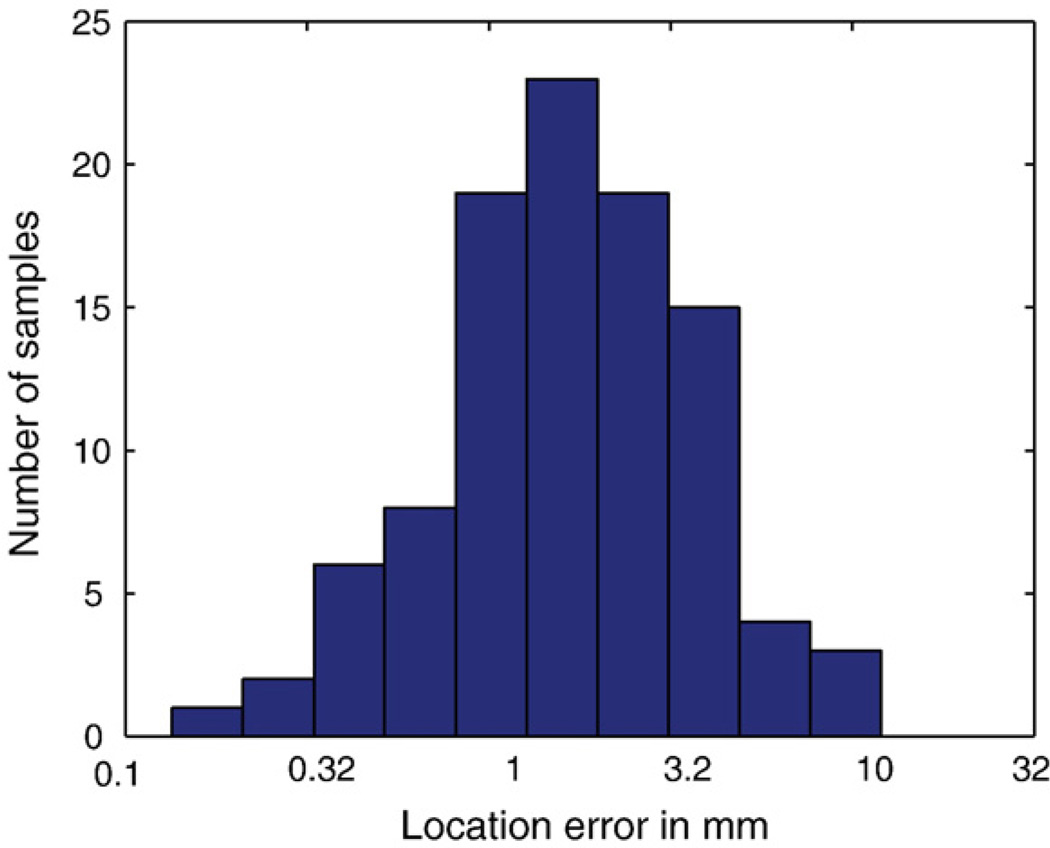

Second, we estimate the localization accuracy and speed of our algorithm using a Monte-Carlo study with 100 simulations. Each experiment is a separate inversion run on data generated by a dipole with a random orientation and a random location within the brain. Fig. 8 shows a histogram of the root–mean–square localization error. The mean error was 2.1 mm; the three largest errors were 8, 10 and 12 mm, all for dipoles located very deep within the brain or at the corners of the mesh. Four errors were between 4 and 8 mm, and the remaining 93 errors were below 4 mm. These results are consistent with the performance reported in the literature for single-dipole parametric inversion (Leahy et al., 1998). With the geometrical setup described above, and using a 700 MHz PC, the localization runs for 30 s.

Fig. 8.

Histogram of localization errors for 100 experiments with a randomly located dipole. Average error is 2.1 mm, seven errors are above 4 mm.

Third, we run the source localization with different levels of noise, ranging from low (SNR=400) to high (SNR=4). Table 1 summarizes the results. Each column in the table is averaged over 100 Monte-Carlo trials. As expected, both the localization accuracy and the orientation accuracy deteriorate under high levels of noise. However, the method performed robustly and converged well even under 25% noise, and even in this case exhibited a localization accuracy of about 11 mm (Table 1).

Table 1.

Results from Monte-Carlo simulation study

| Error / SNR | 400 | 100 | 67 | 33 | 16 | 8 | 4 |
|---|---|---|---|---|---|---|---|
| Location [mm] | 2.55 | 2.76 | 3.80 | 4.36 | 6.43 | 8.58 | 10.90 |
| Orientation [degree] | 1.35 | 1.50 | 1.94 | 2.62 | 4.13 | 4.78 | 6.18 |
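
For a single trial, the two error measures in Table 1 could be computed as in the sketch below. It is a plausible reading of the table rather than a documented procedure: the location error is taken as the Euclidean distance between the true and estimated dipole positions, and the orientation error as the angle between the true and estimated moment vectors. Both function names are hypothetical.

```python
import math


def location_error_mm(true_pos, est_pos):
    """Euclidean distance between true and estimated positions (both in mm)."""
    return math.dist(true_pos, est_pos)


def orientation_error_deg(true_moment, est_moment):
    """Angle in degrees between the true and estimated dipole moment vectors."""
    dot = sum(a * b for a, b in zip(true_moment, est_moment))
    norms = math.hypot(*true_moment) * math.hypot(*est_moment)
    # Clamp to [-1, 1] to guard against round-off before taking the arccosine.
    cosine = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cosine))
```

Averaging these two quantities over the 100 trials at each noise level would yield one column of the table.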

Acknowledgments

This work was partially supported by NIH grant P41 RR12553-03 and by grants from the Whitaker Foundation and NIH (R01DC004855) to S.N. The authors would like to thank Dr. M. Funke of the University of Utah Department of Radiology for his help in providing the realistic MEG array geometry, and Blythe Nobleman of the Scientific Computing and Imaging Institute at the University of Utah for her many useful suggestions pertaining to the manuscript.

Footnotes

Available online on ScienceDirect (www.sciencedirect.com).

References

- Aliprantis CD, Burkinshaw O. Locally Solid Riesz Spaces. New York and London: Academic Press; 1978.

- Baillet S, Garnero L. A Bayesian approach to introducing anatomo-functional priors in the EEG/MEG inverse problem. IEEE Trans. Biomed. Eng. 1997;44(5):374–385. doi: 10.1109/10.568913.

- Baillet S, Mosher JC, Leahy RM. Electromagnetic brain mapping. IEEE Signal Processing Magazine. 2001;18(6):14–30.

- Bertrand C, Hamada Y, Kado H. MRI prior computation and parallel tempering algorithm: a probabilistic resolution of the MEG/EEG inverse problem. Brain Topogr. 2001a;14(1):57–68. doi: 10.1023/a:1012567806745.

- Bertrand C, Ohmi M, Suzuki R, Kado H. A probabilistic solution to the MEG inverse problem via MCMC methods: the reversible jump and parallel tempering algorithms. IEEE Trans. Biomed. Eng. 2001b;48(5):533–542. doi: 10.1109/10.918592.

- Eckhart U. Weber’s problem and Weiszfeld’s algorithm in general spaces. Math. Program. 1980;18:186–196.

- Farquharson CG, Oldenburg DW. Non-linear inversion using general measures of data misfit and model structure. Geophys. J. Int. 1998;134:213–227.

- Fletcher R. Practical Methods of Optimization. Wiley and Sons; 1981.

- Gorodnitsky IF, George JS. Neuromagnetic source imaging with FOCUSS: a recursive weighted minimum norm algorithm. Electroencephalogr. Clin. Neurophysiol. 1995;95(4):231–251. doi: 10.1016/0013-4694(95)00107-a.

- Gorodnitsky IF, Rao BD. Sparse signal reconstruction from limited data using FOCUSS: a recursive weighted norm minimization algorithm. IEEE Trans. Signal Process. 1997;45:600–616.

- Hadamard J. Sur les problèmes aux dérivées partielles et leur signification physique. Bull. Univ. Princeton. 1902:49–52. (in French)

- Hamalainen M, Hari R, Ilmoniemi RJ, Knuutila J, Lounasmaa OV. Magnetoencephalography: theory, instrumentation, and applications to noninvasive studies of the working brain. Rev. Modern Phys. 1993;65:413–497.

- Huang MX, Mosher JC, Leahy RM. A sensor-weighted overlapping-sphere head model and exhaustive head model comparison for MEG. Phys. Med. Biol. 1999;44(2):423–440. doi: 10.1088/0031-9155/44/2/010.

- Kozinska D, Carducci F, Nowinski K. Automatic alignment of EEG/MEG and MRI data sets. Clin. Neurophysiol. 2001;112:1553–1561. doi: 10.1016/s1388-2457(01)00556-9.

- Last BJ, Kubik K. Compact gravity inversion. Geophysics. 1983;48:713–721.

- Leahy RM, Mosher JC, Spencer ME, Huang MX, Lewine JD. A study of dipole localization accuracy for MEG and EEG using a human skull phantom. Electroencephalogr. Clin. Neurophysiol. 1998;107(2):159–173. doi: 10.1016/s0013-4694(98)00057-1.

- Matsuura K, Okabe Y. Selective minimum-norm solution of the biomagnetic inverse problem. IEEE Trans. Biomed. Eng. 1995;42(6):608–615. doi: 10.1109/10.387200.

- O'Leary DP. Robust regression computation using iteratively reweighted least squares. SIAM J. Matrix Anal. Appl. 1990;11:466–480.

- Pascual-Marqui RD, Biscay-Lirio R. Spatial resolution of neuronal generators based on EEG and MEG measurements. Int. J. Neurosci. 1993;68(1–2):93–105. doi: 10.3109/00207459308994264.

- Phillips JW, Leahy RM, Mosher JC. MEG-based imaging of focal neuronal current sources. IEEE Trans. Med. Imag. 1997;16(3):338–348. doi: 10.1109/42.585768.

- Portniaguine O. Image focusing and data compression in the solution of geophysical inverse problems. PhD thesis. University of Utah; 1999.

- Portniaguine O, Zhdanov MS. Focusing geophysical inversion images. Geophysics. 1999;64:874–887.

- Sarvas J. Basic mathematical and electromagnetic concepts of the biomagnetic inverse problem. Phys. Med. Biol. 1987;32:11–22. doi: 10.1088/0031-9155/32/1/004.

- Schmidt DM, George JS, Wood CC. Bayesian inference applied to the electromagnetic inverse problem. Hum. Brain Mapp. 1999;7(3):195–212. doi: 10.1002/(SICI)1097-0193(1999)7:3<195::AID-HBM4>3.0.CO;2-F.

- Tikhonov AN, Arsenin YV. Solution of Ill-posed Problems. Winston and Sons; 1977.

- Uutela K, Hamalainen M, Somersalo E. Visualization of magnetoencephalographic data using minimum current estimates. NeuroImage. 1999;10(2):173–180. doi: 10.1006/nimg.1999.0454.

- Wang JZ, Williamson SJ, Kaufman L. Magnetic source images determined by a lead-field analysis: the unique minimum-norm least-squares estimation. IEEE Trans. Biomed. Eng. 1992;39(7):665–675. doi: 10.1109/10.142641.

- Wolke R, Schwetlick H. Iteratively reweighted least squares: algorithms, convergence analysis, and numerical comparisons. SIAM J. Sci. Statist. Comput. 1988;9:907–921.