Abstract

Skilled visual word recognition is thought to rely upon a particular region within the left fusiform gyrus, the visual word form area (VWFA). We investigated whether an individual (AA1) with pure alexia resulting from acquired damage to the VWFA territory could learn an alphabetic “FaceFont” orthography, in which faces rather than typical letter-like units are used to represent phonemes. FaceFont was designed to distinguish between perceptual and phonological influences on the VWFA. AA1 was unable to learn more than five face-phoneme mappings, performing well below controls. AA1 succeeded, however, in learning and using a proto-syllabary comprising 15 face-syllable mappings. These results suggest that the VWFA provides a “linguistic bridge” into left hemisphere speech and language regions, irrespective of the perceptual characteristics of a written language. They also suggest that some individuals may be able to acquire a non-alphabetic writing system more readily than an alphabetic writing system.

Keywords: Acquired alexia, Dyslexia, Reading, Word identification, Orthography, Phonology, VWFA

1. Introduction

Over a century ago, the physician Adolph Kussmaul coined the term “word blindness” to describe the inability of some patients with acquired brain damage to read words and text effortlessly, despite a preservation of other visual-perceptual and language skills (Kussmaul, 1877). The advent of modern neuroimaging has helped to link this disorder to a particular territory within the left fusiform gyrus, specifically to a region that has been named the “visual word form area” (VWFA; Cohen et al., 2004; Cohen et al., 2003). In this study, we investigate the impact of VWFA damage on an individual's ability to learn an experimental writing system in which faces, rather than typical letter-like units, serve as the component phonograms for representing English words.

We developed the FaceFont orthography, which is an alphabetic system comprising 35 face-phoneme pairs, to test two competing accounts for the left-lateralization of the VWFA (Moore, Durisko, Perfetti, & Fiez, 2014). A visuo-perceptual account suggests that orthographic processing preferentially engages the VWFA because printed words impose a high demand on particular types of perceptual analysis that are best mediated by this region (such as high-spatial frequency and feature-based visual processing; McCandliss, Cohen, & Dehaene, 2003). However, because the anatomical projections of the VWFA provide a unique interconnection between the visual system and left-lateralized language areas involved in the representation of speech (Reinke, Fernandes, Schwindt, O'Craven, & Grady, 2008; Vigneau, Jobard, Mazoyer, & Tzourio-Mazoyer, 2005), an alternative perspective is that the VWFA is uniquely positioned to serve as a bridge to left-lateralized language areas. FaceFont, which makes use of phonograms that are perceptually distinct from typical letters, offers the opportunity to disentangle perceptual and linguistic influences on the VWFA (see Fig. 1a for an example of the FaceFont orthography).

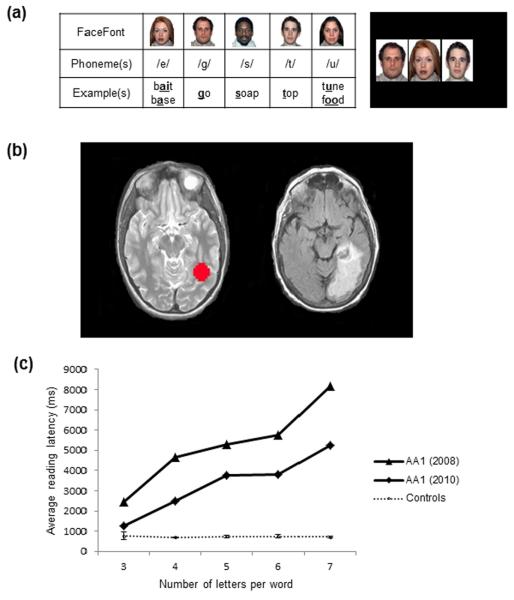

Fig. 1.

Examples of FaceFont phonograms and presentation of pure alexia in AA1. (a) Left: A sample of five FaceFont phonograms, their corresponding English phonemes (represented using the International Phonetic Alphabet), and examples of the sounds in English words. Right: An example of the word “gate” shown in FaceFont. (b) Typical location of the VWFA (red sphere) on a reference brain (left image). The structural image from AA1 (acquired during her initial hospital admission) reveals damage to the left occipitotemporal cortex that includes the typical VWFA territory (right image). (c) Letter-by-letter reading observed in AA1, as demonstrated by a steep, linear increase in reading latencies as words increase in length, as compared to the average reading latencies of four controls. Error bars (representing standard deviations) reflect relatively little variance amongst the control participants when contrasted with AA1's reading latencies. Note: 4.81% of all trials were excluded because the microphone did not register the response latency. Latencies were reported for correct pronunciations only. See Table S3 for accuracy results.

The visuo-perceptual account predicts that an individual with damage to the VWFA will have little to no difficulty learning the FaceFont orthography since preferential responses to faces tend to localize to the right fusiform gyrus (i.e., to the fusiform face area, FFA) with less activation in the left fusiform gyrus (Cohen et al., 2002). In contrast, the linguistic bridge account predicts that an individual with damage to the VWFA will have an impaired ability to learn the FaceFont orthography because of the unique connection between faces and phonological information when faces are used alphabetically. The current study tests these predictions through a single-case study of an individual (AA1) with focal damage to the left occipitotemporal cortex and a concomitant neuropsychological profile of pure alexia.

2. Results

2.1. Neuropsychological characterization of AA1

2.1.1. Symptoms of pure alexia

AA1 exhibited deficits on two measures of reading fluency. She achieved 6 out of 30 possible points on three simple stories drawn from a standardized oral reading test (Wiederholt & Bryant, 2001), whereas four matched control participants all achieved perfect scores. Word identification tasks (Kay, Coltheart, & Lesser, 1992) confirmed that AA1 had corresponding deficits at the lexical level: she was markedly slower to name words than the controls and she exhibited the exaggerated word-length effect that is diagnostic of the letter-by-letter reading strategy associated with pure alexia (Fig. 1; ranging from 9.63 SDs (on 3-letter words) to 164.78 SDs (on 7-letter words) below the controls' average reading latency at each word length; Behrmann, Plaut, & Nelson, 1998; Coslett, 2000). Little change was observed in her performance two years later (Fig. 1; ranging from 2.88 SDs (3-letter words) to 100.25 SDs (7-letter words) below the controls).

Reading comprehension was primarily assessed using the standardized oral reading test mentioned above. Multiple choice questions that followed each of the three simple stories were read aloud to AA1 and she was given unlimited time to respond. Although AA1's fluency was slow and effortful, she eventually was able to identify most words in the passages and acquire enough information from the stories to accurately respond to the comprehension questions. AA1's comprehension appeared to be mildly affected by her poor fluency when the reading material was more challenging or when she was asked to read the comprehension questions herself (Table S1 and section A.2.2 of the supplementary materials).

In contrast to her impaired reading fluency, AA1 scored within age-appropriate ranges and/or within the range of the controls on measures of letter naming, letter-sound association, phonological processing, lexical decision, oral/written language comprehension and production, and verbal working memory (Table S1 and Appendix). In sum, neuropsychological assessment indicated that AA1 has a stable and isolated deficit in word reading characteristic of pure alexia.

2.1.2. General Abilities

We also assessed AA1's general cognitive abilities using a battery of tests that included measures of intelligence, attention, and memory. AA1 exhibited a normal profile of performance with one exception. At the time of her initial testing, AA1 scored outside of the normal range on a measure of delayed face recognition; her score on a test of immediate face recognition was within the normal range. When these two tests were repeated two years later, her scores for both fell within the normal range. Her improvement in delayed face recognition performance could indicate that she had a deficit that resolved, or that some other conditional factor affected her initial test score (e.g. fatigue). The same cognitive assessments were administered to four control participants. The results confirmed that the language and general cognitive abilities of our control participants were well matched to those of AA1. With the exception of her poor initial performance on delayed face recognition, AA1's scores fell, on average, within −0.18 SDs of the control means (Table S1).

2.2. Face-phoneme (FaceFont) phonogram learning

The ability of AA1 to learn phonograms from the FaceFont orthography was probed three times over a 3.5-year period. The supplementary materials provide a historical timeline of her testing, details of the procedures for all three attempts, and the full set of results for AA1's first two attempts. Here, we summarize the results from her first two attempts and then focus on those from her third attempt. In her initial effort, AA1 attempted to learn all 35 of the phonograms in the FaceFont alphabet. AA1 correctly recalled only 5.71% of the face-phoneme mappings when tested after 11 prior exposures to the phonogram set, a level of performance well below that observed in healthy young adults (Moore et al., 2014). In AA1's second FaceFont learning opportunity, we investigated whether she could learn FaceFont phonograms more easily if they were presented in small subsets. AA1 readily learned a first set of five FaceFont phonograms, but she was unable to recall more than 20.00% of the mappings for the items in a second set of five FaceFont phonograms.

In AA1's third FaceFont learning attempt, we once again investigated whether AA1 could learn FaceFont phonograms when they were presented in small subsets. More specifically, we selected ten phonograms from the FaceFont alphabet (Moore et al., 2014) for further training. These ten phonograms were divided into two five-item subsets containing broadly equivalent face and phoneme types, referred to hereafter as FaceFontA and FaceFontB. As compared to her prior attempt at learning FaceFont subsets, AA1 was given an increased number of trials to learn the phonograms in each set and she was asked to perform a decoding test after periods of training. In addition, comparison data were acquired from a matched group of control participants.

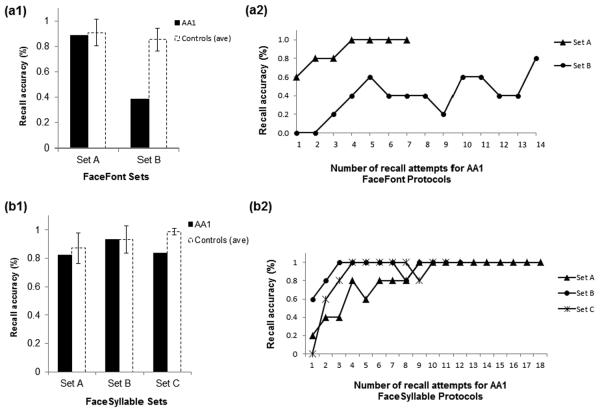

AA1 was able to learn the face-phoneme mappings for the initial set of five phonograms but not the second set of five phonograms. Her average recall accuracy for FaceFontA phonograms was 88.57% (control M = 90.89%, SD = 10.55%). In contrast, her average recall accuracy for FaceFontB phonograms was 38.57% (control M = 85.36%, SD = 8.79%; see Fig. 2).

Fig. 2.

FaceFont and FaceSyllable phonogram learning. AA1 was able to learn the initial set of five FaceFont phonograms (Set A) but not the second set (Set B). (a1) AA1's average accuracy across all FaceFontA recall attempts was only 0.22 SDs below the control mean, but her average accuracy across all FaceFontB recall attempts was 5.3 SDs below the control mean. (a2) In the FaceFont protocol, AA1 reached the early advancement criterion for FaceFontA (100% accuracy on two consecutive recall attempts). In contrast, for FaceFontB she reached the maximum number of recall attempts allowed without scoring above 80.00%. AA1 was able to learn all three sets of five FaceSyllable phonograms. (b1) AA1's average accuracy across all recall attempts for each FaceSyllable set was 0.46 SDs below (FaceSyllableA), 0.02 SDs above (FaceSyllableB), and 6.51 SDs below (FaceSyllableC) the control mean. (b2) In contrast with the FaceFont protocol, AA1 reached 100.00% accuracy on all three FaceSyllable sets after nine or fewer recall attempts. Error bars in (a1, b1) represent standard deviations.
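The SD distances quoted for panel (a1) appear to follow from the recall accuracies reported in the text as (AA1 score minus control mean) divided by the control SD. The short check below is a sketch under that assumption; the function name `sd_distance` is hypothetical and is not taken from the study's analysis code.

```python
def sd_distance(score, control_mean, control_sd):
    """Return how many control SDs a score lies from the control mean.

    Negative values mean the score is below the control mean.
    """
    return (score - control_mean) / control_sd


# Recall accuracies (%) as reported in the text for AA1 and the controls.
facefont_a = sd_distance(88.57, control_mean=90.89, control_sd=10.55)
facefont_b = sd_distance(38.57, control_mean=85.36, control_sd=8.79)

print(f"FaceFontA: {facefont_a:.2f} SDs")  # FaceFontA: -0.22 SDs
print(f"FaceFontB: {facefont_b:.2f} SDs")  # FaceFontB: -5.32 SDs
```

Under this assumption the computed values agree with the 0.22 and 5.3 SDs given in the caption.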

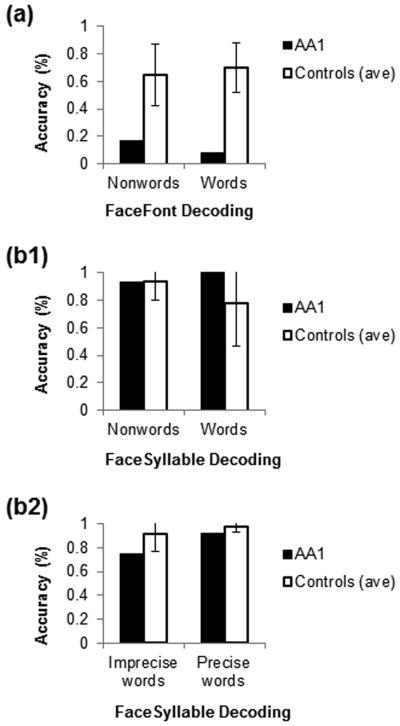

AA1 was unable to decode FaceFont strings accurately. Using only the graphs in FaceFontA, which AA1 learned successfully, AA1 correctly pronounced only one of twelve word and nonword items in her first decoding test, and only two items after a repeated training period with FaceFontA phonograms. This level of performance is considerably below that achieved by the controls (control M = 67.44%, SD = 20.23% accuracy across all FaceFont decoding tests; Fig. 3). AA1's performance on auditory phonological awareness tasks was excellent, making it unlikely that her problems with decoding arose from an underlying general phonological deficit (Table S1).

Fig. 3.

FaceFont and FaceSyllable decoding. (a) Performance on FaceFont decoding of nonwords and real words; AA1 performed 2.13 and 3.44 SDs below the control mean, respectively. (b1) AA1 had improved performance on FaceSyllable decoding; she scored identically to the control mean on FaceSyllable nonwords and 0.71 SDs above the control mean on real word items. (b2) FaceSyllable decoding in the final training stage with all 15 phonograms and only real words presented. AA1 continued to have improved performance compared to FaceFont decoding. She scored 1.15 SDs below the control mean on both imprecise and precise words. Additional scoring details are provided in Table S4.

2.3. Face-syllable phonogram learning

As AA1's difficulties learning the FaceFont orthography became apparent, we sought to determine the bounding conditions of this impairment. Results from a standardized memory test (Wechsler, 1997) indicated that AA1 had a normal ability to learn new auditory-verbal associations and her general face processing skills were intact (Table S1). These observations led us to suspect that she might be successful at some forms of face-sound learning. To test this possibility, we paired faces with larger phonological units (syllables).

AA1 and the controls completed a training session in which they were asked to learn 15 face-syllable phonograms. The 15 face-syllable phonograms were divided into three subsets of five items (FaceSyllableA, FaceSyllableB, FaceSyllableC). The syllables were all common English words (e.g., may, bee) or speech utterances (e.g., er, um). They were deliberately chosen with the goal of creating a “proto-syllabary”: that is, a set of syllabic phonograms that could be combined to represent other English words and pronounceable nonwords.

AA1 was able to master FaceSyllableA with comparable accuracy to the controls (AA1 = 82.22% accuracy; control M = 87.17%, SD = 10.83%). In contrast to the FaceFont training, AA1 had nearly identical accuracy to the mean control accuracy on the second set of face-syllable mappings, FaceSyllableB (AA1 = 93.33% accuracy; control M = 93.18%, SD = 9.43%). She learned the FaceSyllableC set of phonograms as well, though less quickly than the controls. As a result, AA1 attained an average accuracy of 83.64%, as compared to 98.67% (SD = 2.31%) for the controls who completed the training protocol. (One control reached only a 90.00% accuracy level at combined training with the FaceSyllableA and FaceSyllableB sets, and for this reason did not advance to FaceSyllableC training.)

AA1 was able to decode word and nonword strings of face-syllable phonograms (Fig. 3). In her last decoding effort, when only real words were presented, she scored above 90.00% accuracy when the represented syllables provided a precise mapping onto an English word (e.g., may and bee can be combined to produce the word maybe; AA1 = 92.31% accuracy; control M = 97.44%, SD = 4.44%). AA1's accuracy declined when the syllabic combinations provided only an approximation of an English word (e.g., ex, ten, and duh were used to represent extend; AA1 = 75.00% accuracy; control M = 91.67%, SD = 14.43%). Despite this decline, both her precise and imprecise decoding accuracies fell just 1.15 SDs below the accuracy levels attained by the control participants.
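The difference between precise and imprecise items can be illustrated with a small sketch of proto-syllabary decoding. The five syllables are the examples given in the text; the face identifiers (`face_01` and so on) and the function name are hypothetical placeholders, since the actual face-syllable pairings are listed only in the supplementary materials.

```python
# Hypothetical face identifiers paired with syllables mentioned in the text.
FACE_TO_SYLLABLE = {
    "face_01": "may",
    "face_02": "bee",
    "face_03": "ex",
    "face_04": "ten",
    "face_05": "duh",
}


def decode(face_string):
    """Map each face in a string to its syllable, preserving order."""
    return [FACE_TO_SYLLABLE[face] for face in face_string]


# Precise mapping: the syllables blend directly into the target "maybe".
print("-".join(decode(["face_01", "face_02"])))

# Imprecise mapping: the syllables only approximate the target "extend".
print("-".join(decode(["face_03", "face_04", "face_05"])))
```

In the imprecise case the reader must still bridge the gap between the blended syllables and the nearest English word, which may be why accuracy was lower on those items.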

2.4. Reliability of observed patterns

To address concerns that general fatigue or lack of effort accounted for AA1's decreased performance with the FaceFont subsets, we administered a face-word learning paradigm immediately following AA1's second attempt with FaceFont learning. The paradigm used the same procedures to test whether AA1 could learn to associate each of five faces with five different words. AA1 had an overall recall accuracy of 80.00%. Her learning was comparable to that exhibited by the controls (control M = 76.43%, SD = 22.66%; AA1 performed 0.16 SDs above the control mean). These results help to rule out general factors that might account for AA1's poor FaceFont learning. Additionally, they provide further support for her ability to learn associations pairing faces with larger phonological units, as seen in the face-syllable phonogram training.

3. Discussion

AA1, an individual with an acquired lesion to the typical territory of the VWFA (Fig. 1), exhibited the classic neuropsychological profile of pure alexia (Behrmann et al., 1998; Cohen et al., 2003). AA1 was unable to achieve normal levels of FaceFont (face-phoneme) learning in three separate attempts; further, she was unable to decode English words and pronounceable nonwords represented by strings of FaceFont phonograms. We conclude that the VWFA territory is essential for the skilled acquisition of the FaceFont orthography, as predicted by the linguistic bridge account of the VWFA.

These results converge with the neuroimaging findings of Moore et al. (2014), who trained young adults to read the FaceFont orthography. After a two-week training period, participants were able to read simple texts written in FaceFont with high levels of comprehension at rates similar to beginning readers of written English. The neural basis of their newly acquired skill was probed using functional neuroimaging. It was found that the hemodynamic response to visually displayed FaceFont strings was larger in the VWFA territory for participants who were trained to read FaceFont as compared to untrained participants. No group differences were observed in the right fusiform, which has been associated with right-lateralized responses to the visual presentation of faces. The learned responses to FaceFont indicated that the VWFA's contributions to reading are not limited by the perceptual qualities of orthographic stimuli. Taken together, the results from Moore et al. and the current findings provide strong support for the linguistic bridge account of VWFA lateralization.

3.1. A relationship between pure alexia and developmental dyslexia

The inability of AA1 to learn the FaceFont orthography provides new information about the relationship between pure alexia and developmental dyslexia. Historically, developmental dyslexia was viewed as a congenital form of word blindness (Hinshelwood, 1917). While there continues to be interest in visual perceptual factors that may underlie developmental dyslexia, most of the recent work in this area has centered on the idea that individuals with dyslexia have a core deficit in phonological processing (Vellutino, Fletcher, Snowling, & Scanlon, 2004). The same theoretical shift has not occurred within the pure alexia literature, where typical discussions of the disorder still treat it as an acquired disorder of visual perception (Behrmann et al., 1998; Coslett, 2000). However, our results suggest that there is a phonological aspect to pure alexia, one that is associated with orthographic-to-phonological mapping. We reason that congenital dysfunction or neural resource limitations within the VWFA could similarly affect orthographic-to-phonological mapping, thereby making it particularly challenging for individuals with such dysfunction to become skilled readers (cf. Wimmer & Schurz, 2010). It is important to note that the parallel that we have drawn between acquired alexia and developmental dyslexia does have limitations. For instance, it is widely thought that most children with developmental dyslexia have a core deficit in phonological processing that precedes their exposure to reading instruction, whereas AA1 demonstrated intact phonological processing abilities with spoken stimuli.

3.2. Reliance on VWFA in alphabetic versus non-alphabetic orthographies

AA1 was able to learn a set of 15 face-syllable phonograms, and she was able to use this proto-syllabary to decode English words and pronounceable nonwords. One limitation of the current design is that the phonological similarity of the items in the face-phoneme learning sets was greater than the similarity of the items in the face-syllable learning sets. The greater phonological distinctiveness of the face-syllable items may have thus made them easier to learn. Another possibility, which we favor, is that the learning differences reflect a fundamental difference in the acquisition of alphabetic versus non-alphabetic writing systems. This interpretation is consistent with prior evidence that the neural substrates associated with skilled reading differ across alphabetic versus non-alphabetic writing systems. For instance, neuroimaging studies have revealed activation differences within the fusiform and prefrontal gyri associated with Chinese as compared to English word reading (Bolger, Perfetti, & Schneider, 2005), and differences in the neural correlates of dyslexia for Chinese versus English readers (Siok, Perfetti, Jin, & Tan, 2004). Thus, the shift to non-alphabetic phonograms may have ameliorated AA1's orthographic learning difficulties because syllabic writing systems tend to engage bilateral fusiform activation, which could lessen the reliance upon the VWFA. This raises the possibility that lexical- and syllabic-level intervention approaches could be effective for individuals with acquired and developmental dysfunction of the VWFA.

The psycholinguistic literature can be used to explain why syllabic writing systems may be less reliant upon the VWFA than alphabetic systems. We explore three possible factors, all of which focus on the different demands that alphabetic versus non-alphabetic writing systems place on orthographic-to-phonological transformation. One factor is that phonemes are smaller phonological units than syllables or words. This means that the average number of phonograms needed to represent a word alphabetically will necessarily be greater than the average number needed to represent a word non-alphabetically. In the early stages of reading acquisition, when effortful decoding strategies are often utilized, this may increase the demands on phonological processing (Hoien-Tengesdal & Tonnessen, 2011). Potentially, the VWFA may provide a crucial bridge into brain regions associated with phonological storage or combinatorial processing, or it may help to retain visual perceptual information long enough for word identification to occur (Hickok & Poeppel, 2004; Mano et al., 2012).

A second factor is that phonemes are abstract and non-intuitive units of representation without a clear auditory perceptual basis; instead, phonemes can be more readily understood in terms of implicit articulatory features associated with speech production (Savin & Bever, 1970). Syllables and words, on the other hand, can be readily extracted from speech input and children can segment and manipulate them with comparative ease (Gleitman & Rozin, 1973). Because of these representational differences, readers of an alphabetic system may be more reliant upon brain regions associated with speech production than readers of non-alphabetic systems; by extension, the VWFA may be particularly important because it may provide a crucial bridge between the visual system and a dorsal parietofrontal processing stream associated with speech production (Hickok & Poeppel, 2004).

A third factor is that syllables and words can serve as object, picture, or symbol names, whereas consonant phonemes cannot. By using names as phonological units, words within a given language can be phonologically represented through strings of pictures and/or symbols (e.g., a picture of a bee and the Roman numeral IV can be combined to represent the word before, which has no semantic relationship to the component names). The ability to “re-purpose” pictures and their associated names as syllabic phonograms (i.e., rebuses) appears to be relatively intuitive. For instance, young children can easily use such phonograms to support English word decoding (Gleitman & Rozin, 1973), and multiple cultures in human history have evolved fully formed syllabic writing systems from a rudimentary use of rebuses (Huey, 1918). Putting these ideas in neural terms, syllabic phonograms may be able to utilize an occipitotemporal visual processing stream associated with object recognition (Ungerleider & Haxby, 1994). By leveraging a basic route for object naming, it might be possible for syllabic writing systems to gain access to phonological representations with less involvement of the VWFA (Mano et al., 2012). An appealing aspect of this explanation is that it can also explain why individuals with acquired alexia often retain the ability to retrieve explicit knowledge about letter names and their sound correspondences (as we observed in AA1). Potentially, this preserved ability could be supported by the proposed object recognition/naming route, despite the loss of implicit orthographic-phonological access mediated by the VWFA. This could allow lexical access to the printed word forms to occur through the serial retrieval of learned letter names and an internal spelling process (e.g., silently naming “c”, “a,” and “t” permits lexical access to the word cat) or the serial retrieval of learned letter-sound correspondences and an effortful decoding process similar to that employed by beginning readers (who also show pronounced word length effects; Di Filippo, De Luca, Judica, Spinelli, & Zoccolotti, 2006).

4. Methods

4.1. Participants

All participants were right-handed, monolingual English speakers. All participants provided informed consent for multiple testing sessions in accordance with Institutional Review Board procedures at the University of Pittsburgh and were paid $15 for each hour of their time.

Participant AA1 was recruited for this study through the Western Pennsylvania Patient Registry. Testing began when she was 68 years old. Clinical images acquired at the time of her hospital admission indicated damage to her left occipitotemporal cortex, including the portion of the fusiform territory associated with the VWFA (Fig. 1).

Four age-matched female controls were recruited from a pool of individuals interested in research participation (M age = 70, age range = 68–73). The four controls were not strictly matched to AA1 on educational background. However, AA1's educational experience is within the range of the control participants' experience (Table S1).

4.2. Organization of testing

Table S2 provides a general overview of the organization of testing sessions for AA1 and the controls.

4.3. Phonogram learning protocols

Several factors influenced the general structure of our phonogram learning protocols: 1) our inability to predict AA1's performance in advance, which led us to design performance-based branch points in the training stages; 2) time constraints within a given session, which led us to design performance-based criteria for early advancement to another training stage; 3) the risk of subject fatigue and frustration that might occur due to difficulties in learning the phonograms, which led us to incorporate criteria for terminating training; 4) potential interference of items across phonogram sets, which led us to repeat training with already-learned phonograms after training with a novel set; and 5) potential confusion of training stimuli across sessions, which led us to use different faces for the FaceFont, FaceWord, and FaceSyllable sets. The procedural details for AA1's third FaceFont learning attempt are described here, along with information about the FaceSyllable learning protocol. The Supplementary Materials (see Appendix) provide information about the specific procedures for AA1's first two attempts at learning FaceFont, the FaceWord learning protocol, the phonogram sets used in all of the phonogram learning sessions, and the protocols and stimuli used in the administration of all other tasks.

4.3.1. Face-phoneme learning protocol

The face-phoneme protocol was administered to AA1 and the control participants. Participants attempted to learn the FaceFontA and FaceFontB sets. Training began with three rounds of randomly-ordered exposures to the five FaceFontA phonograms. A “listen-repeat-listen” procedure was used for these encoding rounds. For each trial, a face appeared and it was accompanied by an audio presentation of the associated phoneme. The face remained on the screen while a small image of a mouth appeared as a cue for AA1 to repeat aloud the presented phoneme. Then, AA1 pressed a space bar which triggered one more audio presentation of the associated phoneme before the face image was erased from the screen. This initial stage was followed by up to seven additional recall rounds with the five FaceFontA phonograms. For these recall rounds, a “recall-listen-repeat” procedure was used. For each trial, a face appeared along with a small image of a mouth, which served as a cue for AA1 to recall aloud the associated phoneme. Then, AA1 pressed a space bar triggering an audio presentation of the associated phoneme, which AA1 was then cued to repeat aloud. A second pressing of the space bar erased the face image from the screen and elicited the next phonogram.

Participants were given up to 20–30 rounds to learn the items in the FaceFontA and FaceFontB sets in the following stages: up to 10 rounds with FaceFontA, up to 10 rounds with FaceFontB, up to 10 rounds with FaceFontA, and either up to 10 rounds with a combined set of all 10 FaceFontA and FaceFontB items (for participants who achieved an accuracy of 100% on at least one prior FaceFontB recall round) or up to 10 rounds of continued training with just the FaceFontB items (for participants who failed to achieve an accuracy of 100% on at least one prior FaceFontB recall round). Training at each stage terminated when a participant achieved an accuracy of 100% on two successive recall rounds, or reached the maximum allowed rounds at a given stage.
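The stopping rule and the branch into the final stage can be sketched as follows. This is a minimal illustration of the criteria described above, not the software used in the study; the function names, the `max_recall_rounds` parameter, and the accuracy values in the usage lines are hypothetical.

```python
def run_stage(recall_accuracies, max_recall_rounds):
    """Apply the stage termination rule to a sequence of recall rounds.

    recall_accuracies: per-round recall accuracy in percent, in order.
    Returns (rounds_used, met_criterion), where the criterion is 100%
    accuracy on two successive recall rounds.
    """
    consecutive_perfect = 0
    rounds_used = 0
    for accuracy in recall_accuracies[:max_recall_rounds]:
        rounds_used += 1
        consecutive_perfect = consecutive_perfect + 1 if accuracy == 100 else 0
        if consecutive_perfect == 2:
            return rounds_used, True  # early advancement
    return rounds_used, False  # stage ended without meeting the criterion


def choose_final_stage(facefont_b_accuracies):
    """Pick the last stage from prior FaceFontB recall performance."""
    if any(accuracy == 100 for accuracy in facefont_b_accuracies):
        return "combined FaceFontA + FaceFontB"
    return "FaceFontB only"


# Hypothetical accuracy sequences, for illustration only.
print(run_stage([60, 80, 100, 100, 100], max_recall_rounds=7))  # (4, True)
print(run_stage([20, 40, 40, 60], max_recall_rounds=3))         # (3, False)
print(choose_final_stage([40, 80, 60]))                         # FaceFontB only
```

A participant who never reaches 100% on any FaceFontB recall round, as was the case for AA1, would therefore continue with the FaceFontB items alone rather than the combined set.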

When a participant achieved 100% accuracy on at least one recall round within a given training stage, a reading/lexical decision test was administered after the stage was completed. Participants were shown strings of three FaceFont phonograms drawn from the completed stage, and for each string they were asked to recall and blend the represented phonemes to decode the spoken form of the displayed string. After each naming attempt, participants verbally indicated whether the string corresponded to a real or “made up” word. Two practice items were provided at the start of the reading/lexical decision tests.

4.3.2. Face-syllable learning protocol

The face-syllable protocol was completed by AA1 and the control participants. Fifteen faces were drawn from the overall set of 35 FaceFont phonograms used in the Moore et al. (2014) stimulus set. Each face was re-paired with one of 15 syllables to create three subsets of five face-syllable phonograms (FaceSyllableA, FaceSyllableB, and FaceSyllableC). Using the general procedures and learning criterion outlined for the face-phoneme protocol, participants progressed through the following stages of training: up to 20 rounds of exposure to FaceSyllableA, up to 20 rounds of exposure to FaceSyllableB, up to 30 rounds of exposure to the combined set of 10 FaceSyllableA and FaceSyllableB phonograms, up to 20 rounds of exposure to FaceSyllableC, and up to 20 rounds of exposure to the overall set of 15 phonograms. Each successful training stage was followed by a naming/lexical decision test, with two exceptions. First, due to time constraints, the FaceSyllableC training stage was immediately followed by the final training stage with all 15 phonograms. Second, the decoding test that followed this final stage involved only word targets, with some of the strings providing only an imprecise mapping to the target word; this was done to emulate similar imprecision that can occur in syllabic writing systems. To minimize confusion, participants were informed of this change and the lexical decision component of the decoding test was omitted.

Supplementary Material

Highlights

AA1, with VWFA damage, exhibited a reading deficit characteristic of pure alexia.

AA1 was unable to learn an artificial orthography comprising face-phoneme pairs.

AA1 was able to learn and decode an orthography comprising face-syllable mappings.

Impaired FaceFont acquisition supports a linguistic bridge account of the VWFA.

Pure alexia has a phonological aspect and isn't strictly a visuo-perceptual deficit.

Acknowledgments

This project was funded by NIH Grant 1R01HD060388. AA1 was recruited from the Western Pennsylvania Patient Registry (WPPR; http://www.wppr/pitt.edu). A sincere thank you to Corrie Durisko and other members of the Fiez Lab for general assistance and helpful discussions related to this project.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Behrmann M, Plaut DC, Nelson J. A literature review and new data supporting an interactive account of postlexical effects in letter-by-letter reading. Cognitive Neuropsychology. 1998;15:7–52. doi: 10.1080/026432998381212.

- Bolger DJ, Perfetti CA, Schneider W. A cross-cultural effect on the brain revisited: Universal structures plus writing system variation. Human Brain Mapping. 2005;25(1):83–91. doi: 10.1002/hbm.20124.

- Cohen L, Henry C, Dehaene S, Martinaud O, Lehericy S, Lemer C, Ferrieux S. The pathophysiology of letter-by-letter reading. Neuropsychologia. 2004;42(13):1768–1780. doi: 10.1016/j.neuropsychologia.2004.04.018.

- Cohen L, Lehericy S, Chochon F, Lemer C, Rivaud S, Dehaene S. Language-specific tuning of visual cortex? Functional properties of the Visual Word Form Area. Brain. 2002;125(Pt 5):1054–1069. doi: 10.1093/brain/awf094.

- Cohen L, Martinaud O, Lemer C, Lehericy S, Samson Y, Obadia M, Slachevsky A, Dehaene S. Visual word recognition in the left and right hemispheres: anatomical and functional correlates of peripheral alexias. Cerebral Cortex. 2003;13:1313–1333. doi: 10.1093/cercor/bhg079.

- Coslett HB. Acquired dyslexia. Seminars in Neurology. 2000;20:419–426. doi: 10.1055/s-2000-13174.

- Di Filippo G, De Luca M, Judica A, Spinelli D, Zoccolotti P. Lexicality and stimulus length effects in Italian dyslexics: Role of the overadditivity effect. Child Neuropsychology. 2006;12:141–149. doi: 10.1080/09297040500346571.

- Gleitman LR, Rozin P. Teaching reading by use of a syllabary. Reading Research Quarterly. 1973;8:447–483.

- Hickok G, Poeppel D. Dorsal and ventral streams: a framework for understanding aspects of the functional anatomy of language. Cognition. 2004;92:67–99. doi: 10.1016/j.cognition.2003.10.011.

- Hinshelwood J. Congenital Word Blindness. H. K. Lewis & Co; London: 1917.

- Hoien-Tengesdal I, Tonnessen F-E. The relationship between phonological skills and word decoding. Scandinavian Journal of Psychology. 2011;52:93–103. doi: 10.1111/j.1467-9450.2010.00856.x.

- Huey EB. History and Pedagogy of Reading. The MacMillan Company; New York: 1918.

- Kay J, Coltheart M, Lesser R. PALPA: Psycholinguistic Assessments of Language Processing in Aphasia. Psychology Press; New York: 1992.

- Kussmaul A. Disturbances of speech. In: von Ziemssen H, editor. Cyclopedia of the Practice of Medicine. Vol. 14. William Wood; New York: 1877. pp. 581–875.

- Mano QR, Humphries C, Desai RH, Seidenberg MS, Osmon DC, Stengel BC, Binder JR. The role of the left occipitotemporal cortex in reading: Reconciling stimulus, task, and lexicality effects. Cerebral Cortex. 2012 doi: 10.1093/cercor/bhs093. in press.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: expertise for reading in the fusiform gyrus. Trends in Cognitive Sciences. 2003;7(7):293–299. doi: 10.1016/s1364-6613(03)00134-7.

- Moore MW, Durisko C, Perfetti CA, Fiez JA. Learning to read an alphabet of human faces produces left-lateralized training effects in the fusiform gyrus. Journal of Cognitive Neuroscience. 2014;26(4):896–913. doi: 10.1162/jocn_a_00506.

- Reinke K, Fernandes M, Schwindt G, O'Craven K, Grady CL. Functional specificity of the visual word form area: general activation for words and symbols but specific network activation for words. Brain and Language. 2008;104:180–189. doi: 10.1016/j.bandl.2007.04.006.

- Savin HB, Bever TG. The nonperceptual reality of the phoneme. Journal of Verbal Learning and Verbal Behavior. 1970;9:295–302.

- Siok WT, Perfetti CA, Jin Z, Tan LH. Biological abnormality of impaired reading is constrained by culture. Nature. 2004;431(7004):71–76. doi: 10.1038/nature02865.

- Ungerleider LG, Haxby JV. 'What' and 'where' in the human brain. Current Opinion in Neurobiology. 1994;4(2):157–165. doi: 10.1016/0959-4388(94)90066-3.

- Vellutino FR, Fletcher JM, Snowling MJ, Scanlon DM. Specific reading disability (dyslexia): what have we learned in the past four decades? Journal of Child Psychology and Psychiatry. 2004;45:2–40. doi: 10.1046/j.0021-9630.2003.00305.x.

- Vigneau M, Jobard G, Mazoyer B, Tzourio-Mazoyer N. Word and non-word reading: What role for the visual word form area? NeuroImage. 2005;27:694–705. doi: 10.1016/j.neuroimage.2005.04.038.

- Wechsler D. Wechsler Memory Scale - Third Edition (WMS-III). Psychological Corporation; New York: 1997.

- Wiederholt JL, Bryant BR. Gray Oral Reading Test - Fourth Edition (GORT-4). Pro-Ed; San Antonio, TX: 2001.

- Wimmer H, Schurz M. Dyslexia in regular orthographies: Manifestation and causation. Dyslexia. 2010;16:283–299. doi: 10.1002/dys.411.
