Abstract

Objective

To comparatively evaluate the effectiveness of three different methods involving end-users for detecting usability problems in an EHR: user testing, semi-structured interviews and surveys.

Materials and methods

Data were collected at two major urban dental schools from faculty, residents and dental students to assess the usability of a dental EHR for developing a treatment plan. The three methods were user testing (N=32), semi-structured interviews (N=36) and surveys (N=35).

Results

The three methods together identified a total of 187 usability problems: 54% via user testing, 28% via semi-structured interviews and 18% via surveys, with modest overlap. These usability problems were classified into 24 problem themes in three broad categories. User testing covered the broadest range of themes (83%), followed by interviews (63%) and surveys (29%).

Discussion

Multiple evaluation methods provide a comprehensive approach to identifying EHR usability challenges and specific problems. The three methods were complementary, and each provided unique insights for software enhancement. Interviews and surveys were not sufficient on their own, but when used in conjunction with user testing, they provided a comprehensive evaluation of the EHR.

Conclusion

We recommend using a multi-method approach when testing the usability of health information technology because it provides a more comprehensive picture of usability challenges.

Keywords: EHR, Usability, Human Factors, Methodology

INTRODUCTION

Electronic health records (EHRs) are increasingly being adopted by healthcare providers, who are attracted by financial incentives and the promise of improved quality, efficiency and safety [1–5]. However, usability issues are cited as one of the major obstacles [6–8] to widespread adoption. Good usability, the goal of user-centered design [9], is meant to ensure that a technology will empower the user to effectively and efficiently complete work tasks with a high degree of satisfaction and success. Conversely, poor EHR usability has been shown to reduce efficiency, decrease clinician satisfaction, and even compromise patient safety [10–19].

Usability evaluations are conducted at different stages of EHR development and deployment, and they vary in approach and focus [20]. Formative usability assessments occur throughout the software development lifecycle. Their purpose is to identify and fix usability issues as they emerge during system development, assuring the quality of the tool in a way that is cost-effective in time and effort over the long run [21, 22]. According to the National Institute of Standards and Technology (NIST) EHR Usability Protocol, “the role of formative testing is to support design innovations that would increase the usefulness of a system and provide feedback on the utility of an application to an intended user” [23]. Summative usability assessments occur after development of a system is complete and before the release of a product or application; their goal “is to validate the usability of an application in the hands of the intended users, in the context of use for which it was designed, performing the typical or most frequent tasks that it was designed to perform” [23]. Recognizing the importance of usability to safety, the 2014 EHR certification criteria for Meaningful Use require summative usability testing [24].

A key challenge is selecting an appropriate method, or combination of methods, to assess usability efficiently and effectively. Evaluation methods vary: some are conducted solely by experts, while others involve end users (the individuals who will use the product) [25–27]. In expert reviews, such as heuristic evaluation, usability specialists systematically inspect EHR platforms and identify violations of design best practices [28, 29]. These reviews are typically conducted to classify and prioritize usability problems [30, 31]. Specialists may also build cognitive models to predict the time and steps needed to complete specific tasks [32]. End users, such as clinicians and allied health professionals who will actually use the system in the real environment, may participate in usability testing, in which they are given tasks to complete and their performance is evaluated [33]. Trained interviewers can also obtain useful qualitative data from end users through semi-structured and guided interviews and by administering questionnaires.

Surveys are another method for assessing the usability of a product in development. Surveys measure user satisfaction, perceptions and evaluations [34]; they are inexpensive to administer, yield results quickly, and provide insight into the user’s experience with the product. Open-ended survey questions can also provide deeper insight into specific usability concerns.

Each type of usability assessment method has benefits and drawbacks related to ease of conducting the study, predictive power and generalizability [27]. No single approach will answer all questions, because each can identify only a subset of usability problems; it is therefore reasonable to expect that a combination of techniques will be complementary [12, 26, 35–37]. To our knowledge, no study has compared usability evaluation methods that involve user participation (e.g., user testing, semi-structured interviews and open-ended surveys) in terms of their effectiveness in detecting usability problems in EHRs.

METHODS

Our investigation is a secondary analysis of data reported in a previous study [38], in which we used two methods (user testing and semi-structured interviews) to identify usability challenges faced by clinicians in finding and entering diagnoses selected from a standardized diagnostic terminology in a dental EHR. User testing results showed that only 22 to 41% of the participants were able to complete a simple task, and no participants completed a more complex task. We previously identified a total of 24 usability problem areas, or themes, related to the interface, the diagnostic terminology or the clinical workflow.

In this report we re-analyze the qualitative and quantitative data collected in our previous study, and we include the results of a usability survey that we subsequently administered to users concerning the same task within the EHR. Our goal is to identify and compare the frequency and type of usability problems detected by three usability methods: user testing, interviews and open-ended surveys. A limitation of our study design was that subjects were not randomly assigned to one of the three usability methods. Therefore, we were not able to determine whether subject characteristics influenced the identification of usability problems.

In the original study, data were collected at two dental schools: Harvard School of Dental Medicine (HSDM) and the University of California, San Francisco School of Dentistry (UCSF). Both sites used the same version of the axiUm EHR system and the 2011 version of the EZCodes Dental Diagnostic Terminology [39]. Study participants were representative of clinic providers who practice in an academic setting, namely third- and fourth-year dental students, advanced graduate education students (residents) and attending dentists (faculty) (Table I).

Table II.

Summary of usability problems and themes detected by usability method

| Method | Usability problems (N = 187) | Themes (N = 24) | Interface themes (n = 10) | Diagnostic terminology themes (n = 5) | Work domain themes (n = 9) |
|---|---|---|---|---|---|
| User testing | 54% | 83% | 100% | 80% | 67% |
| Interview | 28% | 63% | 80% | 60% | 44% |
| Survey | 18% | 29% | 30% | 40% | 22% |

The methods of our prior work have been reported in detail previously [38]; the following is a brief synopsis of the methods used:

User Testing Method

Investigators from the University of Texas Health Science Center at Houston (UTHealth) conducted a usability evaluation over a three-day period at both HSDM and UCSF with 32 end users. Participants performed a series of realistic tasks, which had been developed in collaboration with dentists on the research team. During testing, subjects were asked to think aloud, verbalizing their thoughts as they worked through two assigned scenarios. Participants’ performance and their interaction with the system were recorded using Morae Recorder version 3.2 (TechSmith).

Hierarchical Task Analysis (HTA) was used to model and define the appropriate path that users needed to follow to complete each task. The optimal time to complete the tasks was calculated using the keystroke-level model (KLM) [40] and a software tool called CogTool [41].
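For readers unfamiliar with the KLM, the sketch below shows how such an estimate is assembled: the predicted expert time is the sum of standard operator durations along the modeled path. The operator values are commonly cited KLM estimates (K = 0.28 s for an average non-secretary typist, P = 1.10 s, H = 0.40 s, M = 1.35 s). The operator sequence, the observed time and the names `OPERATOR_SECONDS` and `klm_time` are hypothetical, and CogTool's own operator values and placement rules may differ.

```python
"""Keystroke-Level Model (KLM) estimate for a hypothetical task path."""

# Commonly cited KLM operator durations in seconds (Card, Moran & Newell).
# ASSUMPTION: K uses the average non-secretary typist value (0.28 s); in the
# original model a mouse-button press is also counted as a K operator.
OPERATOR_SECONDS = {
    "K": 0.28,  # keystroke or mouse-button press
    "P": 1.10,  # point at a target with the mouse
    "H": 0.40,  # home the hand between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def klm_time(sequence: str) -> float:
    """Return the predicted expert execution time (s) for a string of KLM operators."""
    unknown = set(sequence) - set(OPERATOR_SECONDS)
    if unknown:
        raise ValueError(f"unknown KLM operators: {sorted(unknown)}")
    return sum(OPERATOR_SECONDS[op] for op in sequence)


# HYPOTHETICAL path (not the study's HTA): think, point at the search box,
# click, move to the keyboard, type four characters, then think, return to
# the mouse, point at the matching term and click it.
task = "MPKH" + "KKKK" + "MHPK"

predicted = klm_time(task)
observed = 42.0  # HYPOTHETICAL observed completion time in seconds
print(f"KLM prediction: {predicted:.2f} s")
print(f"Observed / predicted: {observed / predicted:.1f}")
```

Dividing an observed completion time by the KLM prediction gives a simple efficiency ratio; with the hypothetical values above, the participant took about 5.7 times as long as the modeled expert path.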

Semi-structured interview method

During the same site visits at HSDM and UCSF, investigators also interviewed a convenience sample of 36 participants (members of clinical teams) in thirty-minute sessions, with the goal of capturing the subjects’ experiences with the axiUm EHR, specifically the EZCodes dental terminology, workflow and interface. Interviewers used a semi-structured format, asking a series of open-ended questions to prompt unbiased responses. Some subjects participated in both user testing and interviews; this overlap was not tracked, but it was estimated that fewer than 50% of subjects participated in both. The interviews were recorded, transcribed and analyzed for themes. Identified problems were categorized and tabulated using Microsoft Excel 2010.

Survey Method

After the site visits, a survey was administered to a sample of 35 subjects composed of third- and fourth-year dental students, advanced graduate education students and faculty at HSDM and UCSF. The questionnaire asked subjects to respond to 29 statements and four open-ended questions about their views of the dental diagnostic terminology and its use in the EHR. Only the four open-ended questions were analyzed for this study. They focused on: 1) the need to add diagnoses missing from the terminology, 2) the need to remove diagnoses from the terminology, 3) the need to reorganize diagnoses, and 4) barriers to entering diagnoses in the EHR.

Data Organization and Analysis

A usability problem was identified when users struggled during the task, encountered an overt impediment to their performance, or reported a specific problem or concern. We reanalyzed the collected qualitative data to identify usability problems and then mapped these problems to the 24 overarching themes identified in our previous work [38]. All problems were mapped to these previously identified themes. In this study, the 24 themes were grouped into three categories: 1) EHR user interface (10 themes), 2) diagnostic terminology (5 themes), and 3) clinical work domain and workflow (9 themes). We organized the data into a table (Table III) in which each column corresponds to a usability method and the rows list the themes grouped by category. The mapping was conducted by one researcher and independently verified by another.
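The tabulation step can be sketched as follows, assuming each coded problem is stored as a (method, theme) pair. The records are hypothetical, only an excerpt of the 24 themes is listed, and the names `CATEGORIES`, `records` and `crosstab` are illustrative; in the study the mapping was performed by researchers, so this code only shows the shape of the resulting table. The check for unmapped problems mirrors the requirement that every problem be assigned to an existing theme.

```python
"""Tally coded usability problems into a theme-by-method table."""
from collections import Counter

METHODS = ("User testing", "Interview", "Survey")

# Excerpt of the theme scheme, grouped by category (see Table III for all 24).
CATEGORIES = {
    "User interface": [
        "Time-consuming to enter a diagnosis",
        "Illogical ordering of terms",
    ],
    "Diagnostic terminology": [
        "Some concepts appear missing / not included",
    ],
    "Work domain and workflow": [
        "Only one diagnosis can be entered for each treatment",
    ],
}

# HYPOTHETICAL coded problems: one (method, theme) pair per problem.
records = [
    ("User testing", "Time-consuming to enter a diagnosis"),
    ("Interview", "Time-consuming to enter a diagnosis"),
    ("Interview", "Time-consuming to enter a diagnosis"),
    ("Survey", "Illogical ordering of terms"),
    ("User testing", "Some concepts appear missing / not included"),
    ("Interview", "Only one diagnosis can be entered for each treatment"),
]


def crosstab(records, categories, methods=METHODS):
    """Return (category, theme, per-method counts, total) rows in category order."""
    known = {theme for themes in categories.values() for theme in themes}
    unmapped = {theme for _, theme in records} - known
    if unmapped:
        raise ValueError(f"problems not mapped to a known theme: {sorted(unmapped)}")
    counts = Counter(records)
    rows = []
    for category, themes in categories.items():
        for theme in themes:
            per_method = [counts[(method, theme)] for method in methods]
            rows.append((category, theme, per_method, sum(per_method)))
    return rows


for category, theme, per_method, total in crosstab(records, CATEGORIES):
    print(f"{category:<25} {theme:<55} {per_method} total={total}")
```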

Table III.

Number of usability problems by method and theme. In the method columns, percentages are the share of each theme's findings contributed by that method; in the Total column and the Total row, percentages are shares of all 187 problems.

| Theme | User testing, n (%) | Interview, n (%) | Survey, n (%) | Total, n (%) |
|---|---|---|---|---|
| User interface-related themes | | | | |
| Time-consuming to enter a diagnosis | 5 (14) | 17 (50) | 13 (36) | 35 (19) |
| Illogical ordering of terms | 5 (33) | 5 (33) | 5 (34) | 15 (8) |
| Inconsistent naming and placement of user interface widgets | 12 (86) | 2 (14) | 0 | 14 (8) |
| Search results do not match users’ expectations | 9 (70) | 2 (15) | 2 (15) | 13 (7) |
| Ineffective feedback to confirm diagnosis entry | 7 (88) | 1 (12) | 0 | 8 (4) |
| Users unaware of important functions to help find a diagnosis | 7 (100) | 0 | 0 | 7 (4) |
| Limited flexibility in user interface | 3 (50) | 3 (50) | 0 | 6 (3) |
| Inappropriate granularity / specificity of concepts | 2 (33) | 4 (67) | 0 | 6 (3) |
| Term names not fully visible | 2 (67) | 1 (33) | 0 | 3 (2) |
| Distinction between the category name and preferred term in terminology unclear | 2 (100) | 0 | 0 | 2 (1) |
| Diagnostic terminology-related themes | | | | |
| Some concepts appear missing / not included | 11 (55) | 1 (5) | 8 (40) | 20 (11) |
| Visibility of the numeric code for a diagnostic term | 3 (60) | 2 (40) | 0 | 5 (3) |
| Abbreviations not recognized by users | 2 (67) | 0 | 1 (33) | 3 (2) |
| Users not clear about the meaning of some concepts | 0 | 4 (100) | 0 | 4 (2) |
| Some concepts not classified in appropriate categories / subcategories | 2 (100) | 0 | 0 | 2 (1) |
| Work domain and workflow-related themes | | | | |
| Limited knowledge of diagnostic term concepts and how to enter them in the EHR | 14 (70) | 2 (10) | 4 (20) | 20 (11) |
| Free text option can be used to circumvent structured data entry | 7 (58) | 5 (42) | 0 | 12 (7) |
| Only one diagnosis can be entered for each treatment | 0 | 2 (100) | 0 | 2 (1) |
| No decision support to help suggest appropriate diagnoses, or alert if inappropriate ones are selected | 2 (100) | 0 | 0 | 2 (1) |
| Synonyms not displayed | 2 (100) | 0 | 0 | 2 (1) |
| Diagnosis cannot be charted using the Odontogram or Periogram | 0 | 0 | 1 (100) | 1 (<1) |
| No historical view of when a diagnosis has been added or modified | 1 (100) | 0 | 0 | 1 (<1) |
| No way to indicate state of diagnosis (i.e., differential, working or definitive) | 0 | 3 (100) | 0 | 3 (2) |
| Users forced to enter a diagnosis for treatments that may not require them | 1 (100) | 0 | 0 | 1 (<1) |
| Total | 99 (54) | 54 (28) | 34 (18) | 187 (100) |

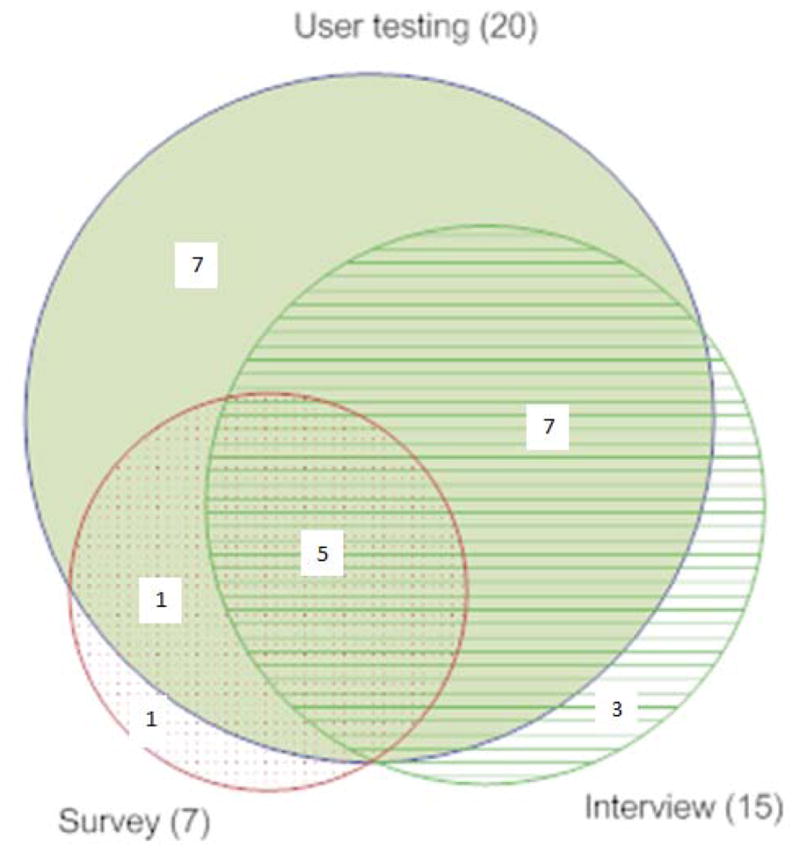

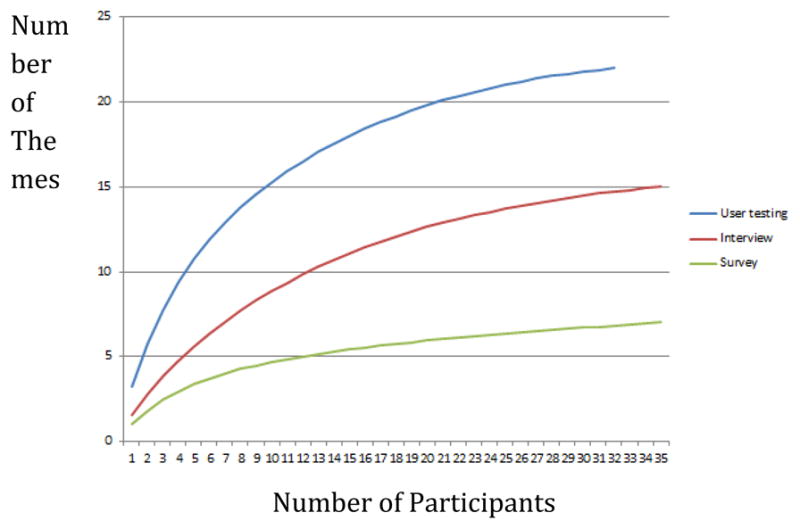

We computed the percentage of usability problems identified by each method. The overlap of themes detected by each method is shown graphically in Figure 1, and the number of themes identified as a function of the number of participants is presented in Figure 2. Data were analyzed using the statistical software package R (version 2.11.1).
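The percentage for each method is a simple share of the combined problem count. A minimal sketch using the column totals from Table III (99, 54 and 34 problems); carried to one decimal place, these counts give 52.9%, 28.9% and 18.2%. The helper name `shares` is illustrative, and the study's own analysis was done in R rather than with this code.

```python
"""Share of all usability problems contributed by each method (Table III totals)."""

# Column totals transcribed from the Total row of Table III.
PROBLEMS_BY_METHOD = {"User testing": 99, "Interview": 54, "Survey": 34}


def shares(counts):
    """Return each method's percentage of the combined problem count."""
    total = sum(counts.values())
    return {method: 100 * n / total for method, n in counts.items()}


total_problems = sum(PROBLEMS_BY_METHOD.values())
for method, pct in shares(PROBLEMS_BY_METHOD).items():
    print(f"{method}: {pct:.1f}% of {total_problems} problems")
```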

Figure 1.

Overlap of usability themes for each method

Figure 2.

Themes identified by participants

RESULTS

Participant demographics

The typical study participant was male, had about six clinic sessions (half days) per week, and was experienced in using technology. The proportion of male participants was slightly higher in the survey group than in the user testing and interview groups. In general, all subjects had prior experience with computers. About 75% of subjects in the user testing and interview groups had ample experience using EHRs, but this percentage was lower in the survey group (65%) (Table I).

Table I.

Participant demographics

| Characteristic | User testing (N=32) | Interview (N=36) | Survey (N=35) |
|---|---|---|---|
| Male participants (%) | 55 | 58 | 62 |
| Average clinic sessions per week | 6 | 5 | 7 |
| Experienced using computers (%) | 85 | 86 | 80 |
| Experienced with the use of the EHR (%) | 76 | 75 | 65 |

Findings

A total of 187 problems were detected by the three methods: 54% through user testing, 28% through interviews and 18% through the survey. Tables II and III present the frequency of usability problems for each method, categorized by theme and grouped by category. The user testing method identified the most themes (83%), followed by the interview (63%), while the survey identified the fewest themes (29%). Taken together, the user testing and interview methods identified 23 of the 24 themes. The survey uniquely identified one theme.

The user testing method identified all (100%) of the user interface-related themes, 80% of the diagnostic terminology-related themes and 67% of the work domain and workflow-related themes. The interview method captured 80%, 60% and 44%, respectively, while the survey method captured 30%, 40% and 22%. The user testing method was the most effective in identifying problems, followed by the interview and survey methods. For all three methods, user interface-related themes were the most frequently cited concerns; across methods, they accounted for 109 of the 187 problems, compared with 44 for work domain and workflow and 34 for diagnostic terminology. As shown in Figure 1, all three methods identified five of the 24 themes.
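Theme coverage here means the percentage of a category's themes for which a method produced at least one finding. The sketch below recomputes it from the per-theme counts transcribed from Table III; the names `TABLE_III` and `coverage` are illustrative. For the interview, for example, it yields 15 of 24 themes overall (62.5%) and 4 of 9 work domain and workflow themes (44.4%).

```python
"""Theme coverage by method and category, recomputed from Table III counts."""

METHODS = ("User testing", "Interview", "Survey")

# Per-theme finding counts (user testing, interview, survey), in Table III
# row order within each category; theme names omitted for brevity.
TABLE_III = {
    "User interface": [
        (5, 17, 13), (5, 5, 5), (12, 2, 0), (9, 2, 2), (7, 1, 0),
        (7, 0, 0), (3, 3, 0), (2, 4, 0), (2, 1, 0), (2, 0, 0),
    ],
    "Diagnostic terminology": [
        (11, 1, 8), (3, 2, 0), (2, 0, 1), (0, 4, 0), (2, 0, 0),
    ],
    "Work domain and workflow": [
        (14, 2, 4), (7, 5, 0), (0, 2, 0), (2, 0, 0), (2, 0, 0),
        (0, 0, 1), (1, 0, 0), (0, 3, 0), (1, 0, 0),
    ],
}


def coverage(rows, method_index):
    """Percentage of themes in `rows` with at least one finding from the method."""
    detected = sum(1 for counts in rows if counts[method_index] > 0)
    return 100 * detected / len(rows)


ALL_ROWS = [row for rows in TABLE_III.values() for row in rows]

for i, method in enumerate(METHODS):
    per_category = ", ".join(
        f"{category} {coverage(rows, i):.1f}%" for category, rows in TABLE_III.items()
    )
    print(f"{method}: all themes {coverage(ALL_ROWS, i):.1f}% ({per_category})")
```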

User testing and interviews shared 12 themes, user testing and the survey shared 6, and the interview and survey shared 5. The five themes identified by all three methods were: 1) time-consuming diagnosis entry, 2) illogical ordering of terms, 3) search results not matching user expectations, 4) missing concepts or concepts not being included, and 5) limited knowledge of diagnostic term concepts and of how to enter them in the EHR.
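The overlap counts can be checked with ordinary set operations. In this sketch, each method's set holds the themes with at least one finding in Table III; the short labels are shorthand introduced here (for example, `time` stands for time-consuming diagnosis entry and `charting` for charting a diagnosis on the odontogram or periogram), not names used in the study.

```python
"""Overlap of usability themes detected by each method (from Table III)."""

# Themes with at least one finding per method, using short labels.
user_testing = {
    "time", "ordering", "widgets", "search", "feedback", "unaware",
    "flexibility", "granularity", "names_hidden", "category_vs_term",
    "missing", "code_visibility", "abbreviations", "misclassified",
    "knowledge", "free_text", "decision_support", "synonyms",
    "history", "forced_entry",
}
interview = {
    "time", "ordering", "widgets", "search", "feedback", "flexibility",
    "granularity", "names_hidden", "missing", "code_visibility",
    "meaning_unclear", "knowledge", "free_text", "one_diagnosis",
    "diagnosis_state",
}
survey = {
    "time", "ordering", "search", "missing", "abbreviations",
    "knowledge", "charting",
}

pairs = {
    "User testing & interview": user_testing & interview,
    "User testing & survey": user_testing & survey,
    "Interview & survey": interview & survey,
}
for label, shared in pairs.items():
    print(f"{label}: {len(shared)} shared themes")

print("All three methods:", sorted(user_testing & interview & survey))
print("User testing or interview:", len(user_testing | interview), "of 24")
print("Survey only:", sorted(survey - user_testing - interview))
```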

As shown in Figure 2, the number of themes identified increases with the number of study participants but reaches a point of diminishing returns.
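A minimal sketch of how a discovery curve of this kind can be produced: count the distinct themes found after each additional participant. The participant theme sets are hypothetical, and averaging the curve over random participant orderings (`mean_curve`) is an illustrative choice, not necessarily how Figure 2 was constructed.

```python
"""Cumulative theme discovery as participants are added (hypothetical data)."""
import random


def discovery_curve(participant_themes):
    """Number of distinct themes found after each additional participant."""
    seen = set()
    curve = []
    for themes in participant_themes:
        seen |= set(themes)
        curve.append(len(seen))
    return curve


def mean_curve(participant_themes, n_orders=1000, seed=0):
    """Average the discovery curve over random participant orderings."""
    rng = random.Random(seed)
    order = list(participant_themes)
    totals = [0] * len(order)
    for _ in range(n_orders):
        rng.shuffle(order)
        for i, n_themes in enumerate(discovery_curve(order)):
            totals[i] += n_themes
    return [total / n_orders for total in totals]


# HYPOTHETICAL theme sets reported by six participants (not study data).
participants = [
    {"time", "search", "missing"},
    {"time", "ordering", "widgets"},
    {"search", "knowledge", "missing"},
    {"time", "free_text"},
    {"ordering", "search"},
    {"time", "missing"},
]

curve = discovery_curve(participants)
new_themes = [curve[0]] + [b - a for a, b in zip(curve, curve[1:])]
print("cumulative themes:", curve)
print("new themes per participant:", new_themes)
print("mean over orderings:", [round(x, 2) for x in mean_curve(participants)])
```

In this toy example, the later participants add no new themes, which is the flattening that the diminishing-returns pattern describes. Because a single curve depends on the order in which participants are added, averaging over many orderings gives a smoother estimate.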

DISCUSSION

Overall, a combination of multiple usability methods detected more problems in a dental EHR than any single approach. Given the generic nature of these methods, it is plausible that this result extends to other EHRs and to other health care technologies. User testing provided both quantitative and qualitative findings, which reflected the system’s effectiveness and efficiency, whereas the semi-structured interviews and open-ended surveys provided detailed qualitative data whose analysis reflected users’ perceptions and satisfaction, including ease of use. This investigation also uncovered usability themes that commonly characterize problems in EHR systems, such as time-consuming data entry [42–48], term names not being fully visible [42], and a mismatch between the user’s mental model and the system design [12, 42].

User testing method

Among the broad range of usability inspection and testing methods available, user testing with the think-aloud technique is a direct way to gain deep insight into the problems users encounter when interacting with a computer system. This method does not require users to retrieve concepts from long-term memory or to report retrospectively on their thought processes. It provides insight not only into usability problems but also into their underlying causes [35].

The user testing method with the think-aloud technique was of high value in evaluating EHR usability flaws. Because users’ performance was recorded, we could accurately compute how quickly users completed the assignment compared with the time an expert would need, as predicted by the keystroke-level model. These data provided a more objective assessment and are therefore more persuasive than information obtained from the interviews and surveys.

The user testing method was best at detecting specific, technical performance problems, because users could report the problems they encountered as they performed tasks in real time. This is clearly illustrated by the theme “inconsistent naming and placement of user interface widgets”: 86% of the findings for this theme came from user testing, 14% came from the interviews, and none came from the survey.

Interview and survey methods

The interview method revealed important concepts missing from the work domain that the other methods were unable to identify. For example, the system allowed only one diagnosis to be entered to support a particular treatment, whereas in dentistry multiple diagnoses are often used to support one specific treatment. In addition, participants commented that there was no way to indicate the state of a diagnosis, such as differential, working or definitive. Only the interview method provided this finding, probably because the clinical scenarios in the user testing method were constrained to those with one definitive diagnosis. The survey captured one theme (an additional functionality) that was not found by either user testing or interviews: users expected to be able to chart a diagnosis by interacting directly with the visual representations (odontogram and periogram). Our findings suggest that relying on open-ended surveys alone is of limited value for assessing EHR usability. However, in combination with user testing and interviews, surveys may help to verify recurring usability problems. A development team, for example, may choose to give high priority to problems that are detected through multiple methods.
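One way a development team might operationalize this idea is to rank themes first by how many methods detected them and then by total findings. The ranking rule and the names `ROWS` and `prioritize` are illustrative assumptions, not a procedure used in this study; the counts are a subset of Table III rows.

```python
"""Rank themes by breadth of detection across methods, then by frequency."""

# (theme, user testing, interview, survey) counts for a subset of Table III.
ROWS = [
    ("Time-consuming to enter a diagnosis", 5, 17, 13),
    ("Illogical ordering of terms", 5, 5, 5),
    ("Inconsistent naming and placement of user interface widgets", 12, 2, 0),
    ("Search results do not match users' expectations", 9, 2, 2),
    ("Some concepts appear missing / not included", 11, 1, 8),
    ("Limited knowledge of diagnostic term concepts", 14, 2, 4),
    ("Users unaware of important functions to help find a diagnosis", 7, 0, 0),
    ("No way to indicate state of diagnosis", 0, 3, 0),
]


def n_methods(counts):
    """Number of methods with at least one finding for a theme."""
    return sum(1 for c in counts if c > 0)


def prioritize(rows):
    """Sort themes: most methods first, then most total findings (stable for ties)."""
    return sorted(rows, key=lambda row: (-n_methods(row[1:]), -sum(row[1:])))


for theme, *counts in prioritize(ROWS):
    print(f"{n_methods(counts)} methods, {sum(counts):>2} findings: {theme}")
```

Ties (here, two themes with 20 findings each that all three methods detected) keep their input order because Python's sort is stable. Breadth and frequency are only proxies for importance; a team might also weigh the severity of each problem, which this sketch does not capture.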

CONCLUSION

Although the user testing method detected more and different usability problems than the interview and survey methods, no single method was successful at capturing all usability problems. Therefore, a combination of different techniques that complement one another is necessary to adequately evaluate EHRs, as well as other health information technologies.

Highlights.

Numerous usability methods exist that can help to detect problems in EHRs

User testing is more effective in detecting usability problems in an EHR than interviews and open-ended surveys

Supplementing user testing with interviews and open-ended surveys allows the detection of additional usability problems

Summary Table.

| What was already known on the topic: | |
|---|---|
| What this study added to our knowledge: | |

Acknowledgments

We are indebted to clinical participants at Harvard School of Dental Medicine and the University of California, San Francisco School of Dentistry.

Funding: This research was supported by NIDCR grant 1R01DE021051, “A Cognitive Approach to Refine and Enhance Use of a Dental Diagnostic Terminology.”

Footnotes

Contributors:

MW and EH conceptualized the study, collected data, conducted analysis and wrote and finalized the paper. MP, DT and KK conducted analysis and wrote portions of the paper. RR, PS, NK, RV, ML, OT, and JW helped to conceptualize study methods and reviewed the final manuscript. VP provided scientific direction for conceptualizing the study, guided data analysis and reviewed the final manuscript.

Conflict of interest / Competing interests: None.

Provenance and peer review: Not commissioned; externally peer reviewed.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

1. Blumenthal D, Glaser JP. Information technology comes to medicine. N Engl J Med. 2007;356(24):2527–34. doi: 10.1056/NEJMhpr066212.
2. Chaudhry B, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–52. doi: 10.7326/0003-4819-144-10-200605160-00125.
3. Hillestad R, et al. Can electronic medical record systems transform health care? Potential health benefits, savings, and costs. Health Aff (Millwood). 2005;24(5):1103–17. doi: 10.1377/hlthaff.24.5.1103.
4. Haux R. Health information systems - past, present, future. Int J Med Inform. 2006;75(3–4):268–81. doi: 10.1016/j.ijmedinf.2005.08.002.
5. Institute of Medicine. Health IT and Patient Safety: Building Safer Systems for Better Care. 2011 [cited 2012 July 16]; Available from: http://www.iom.edu/~/media/Files/ReportFiles/2011/Health-IT/HealthITandPatientSafetyreportbrieffinal_new.pdf.
6. Patel VL, et al. Translational cognition for decision support in critical care environments: a review. J Biomed Inform. 2008;41(3):413–31. doi: 10.1016/j.jbi.2008.01.013.
7. Zhang J. Human-centered computing in health information systems. Part 1: analysis and design. J Biomed Inform. 2005;38(1):1–3. doi: 10.1016/j.jbi.2004.12.002.
8. Zhang J. Human-centered computing in health information systems part 2: evaluation. J Biomed Inform. 2005;38(3):173–5. doi: 10.1016/j.jbi.2004.12.005.
9. Zhang J, Walji MF. TURF: Toward a unified framework of EHR usability. J Biomed Inform. 2011. doi: 10.1016/j.jbi.2011.08.005.
10. Bates DW, et al. Reducing the frequency of errors in medicine using information technology. J Am Med Inform Assoc. 2001;8(4):299–308. doi: 10.1136/jamia.2001.0080299.
11. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc. 2004;11(2):104–12. doi: 10.1197/jamia.M1471.
12. Thyvalikakath TP, et al. A usability evaluation of four commercial dental computer-based patient record systems. J Am Dent Assoc. 2008;139(12):1632–42. doi: 10.14219/jada.archive.2008.0105.
13. Peute LW, Jaspers MW. The significance of a usability evaluation of an emerging laboratory order entry system. Int J Med Inform. 2007;76(2–3):157–68. doi: 10.1016/j.ijmedinf.2006.06.003.
14. Campbell EM, et al. Types of unintended consequences related to computerized provider order entry. J Am Med Inform Assoc. 2006;13(5):547–56. doi: 10.1197/jamia.M2042.
15. Kushniruk AW, et al. Technology induced error and usability: the relationship between usability problems and prescription errors when using a handheld application. Int J Med Inform. 2005;74(7–8):519–26. doi: 10.1016/j.ijmedinf.2005.01.003.
16. Koppel R, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA. 2005;293(10):1197–203. doi: 10.1001/jama.293.10.1197.
17. Horsky J, Zhang J, Patel VL. To err is not entirely human: complex technology and user cognition. J Biomed Inform. 2005;38(4):264–6. doi: 10.1016/j.jbi.2005.05.002.
18. Horsky J, Kuperman GJ, Patel VL. Comprehensive analysis of a medication dosing error related to CPOE. J Am Med Inform Assoc. 2005;12(4):377–82. doi: 10.1197/jamia.M1740.
19. Han YY, et al. Unexpected increased mortality after implementation of a commercially sold computerized physician order entry system. Pediatrics. 2005;116(6):1506–12. doi: 10.1542/peds.2005-1287.
20. Middleton B, et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013. doi: 10.1136/amiajnl-2012-001458.
21. Nykanen P, et al. Guideline for good evaluation practice in health informatics (GEP-HI). Int J Med Inform. 2011;80(12):815–27. doi: 10.1016/j.ijmedinf.2011.08.004.
22. Yusof MM, et al. Investigating evaluation frameworks for health information systems. Int J Med Inform. 2008;77(6):377–85. doi: 10.1016/j.ijmedinf.2007.08.004.
23. Lowry SZ, et al. Technical Evaluation, Testing, and Validation of the Usability of Electronic Health Record. National Institute of Standards and Technology. NISTIR. 2012;(780).
24. Test Procedure for §170.314(g)(3) Safety-enhanced design. 2013 [cited 12/6/2013]; Available from: http://www.healthit.gov/sites/default/files/170.314g3safetyenhanceddesign_2014_tp_approved_v1.3_0.pdf.
25. Rose AF, et al. Using qualitative studies to improve the usability of an EMR. J Biomed Inform. 2005;38(1):51–60. doi: 10.1016/j.jbi.2004.11.006.
26. Khajouei R, Hasman A, Jaspers MW. Determination of the effectiveness of two methods for usability evaluation using a CPOE medication ordering system. Int J Med Inform. 2011;80(5):341–50. doi: 10.1016/j.ijmedinf.2011.02.005.
27. Kushniruk AW, Patel VL. Cognitive and usability engineering methods for the evaluation of clinical information systems. J Biomed Inform. 2004;37(1):56–76. doi: 10.1016/j.jbi.2004.01.003.
28. Chan J, et al. Usability evaluation of order sets in a computerised provider order entry system. BMJ Qual Saf. 2011;20(11):932–40. doi: 10.1136/bmjqs.2010.050021.
29. Johnson CM, et al. Can prospective usability evaluation predict data errors? AMIA Annu Symp Proc. 2010;2010:346–50.
30. Khajouei R, et al. Classification and prioritization of usability problems using an augmented classification scheme. J Biomed Inform. 2011;44(6):948–57. doi: 10.1016/j.jbi.2011.07.002.
31. Khajouei R, et al. Classification and prioritization of usability problems using an augmented classification scheme. J Biomed Inform. 2011. doi: 10.1016/j.jbi.2011.07.002.
32. Saitwal H, et al. Assessing performance of an Electronic Health Record (EHR) using Cognitive Task Analysis. Int J Med Inform. 2010;79(7):501–6. doi: 10.1016/j.ijmedinf.2010.04.001.
33. Khajouei R, et al. Effect of predefined order sets and usability problems on efficiency of computerized medication ordering. Int J Med Inform. 2010;79(10):690–8. doi: 10.1016/j.ijmedinf.2010.08.001.
34. Viitanen J, et al. National questionnaire study on clinical ICT systems proofs: physicians suffer from poor usability. Int J Med Inform. 2011;80(10):708–25. doi: 10.1016/j.ijmedinf.2011.06.010.
35. Jaspers MW. A comparison of usability methods for testing interactive health technologies: methodological aspects and empirical evidence. Int J Med Inform. 2009;78(5):340–53. doi: 10.1016/j.ijmedinf.2008.10.002.
36. Peleg M, et al. Using multi-perspective methodologies to study users’ interactions with the prototype front end of a guideline-based decision support system for diabetic foot care. Int J Med Inform. 2009;78(7):482–93. doi: 10.1016/j.ijmedinf.2009.02.008.
37. Horsky J, et al. Complementary methods of system usability evaluation: surveys and observations during software design and development cycles. J Biomed Inform. 2010;43(5):782–90. doi: 10.1016/j.jbi.2010.05.010.
38. Walji MF, et al. Detection and characterization of usability problems in structured data entry interfaces in dentistry. Int J Med Inform. 2012. doi: 10.1016/j.ijmedinf.2012.05.018.
39. Kalenderian E, et al. The development of a dental diagnostic terminology. J Dent Educ. 2011;75(1):68–76.
40. Card SK, Moran TP, Newell A. The Psychology of Human-Computer Interaction. London: Lawrence Erlbaum Associates; 1983.
41. John B, Prevas K, Salvucci D, Koedinger K. Predictive Human Performance Modeling Made Easy. Proceedings of CHI; 2004; Vienna, Austria.
42. Hill HK, Stewart DC, Ash JS. Health Information Technology Systems profoundly impact users: a case study in a dental school. J Dent Educ. 2010;74(4):434–45.
43. Menachemi N, Langley A, Brooks RG. The use of information technologies among rural and urban physicians in Florida. J Med Syst. 2007;31(6):483–8. doi: 10.1007/s10916-007-9088-6.
44. Loomis GA, et al. If electronic medical records are so great, why aren’t family physicians using them? J Fam Pract. 2002;51(7):636–41.
45. Valdes I, et al. Barriers to proliferation of electronic medical records. Inform Prim Care. 2004;12(1):3–9. doi: 10.14236/jhi.v12i1.102.
46. Kemper AR, Uren RL, Clark SJ. Adoption of electronic health records in primary care pediatric practices. Pediatrics. 2006;118(1):e20–4. doi: 10.1542/peds.2005-3000.
47. Laerum H, Ellingsen G, Faxvaag A. Doctors’ use of electronic medical records systems in hospitals: cross sectional survey. BMJ. 2001;323(7325):1344–8. doi: 10.1136/bmj.323.7325.1344.
48. Ludwick DA, Doucette J. Primary Care Physicians’ Experience with Electronic Medical Records: Barriers to Implementation in a Fee-for-Service Environment. Int J Telemed Appl. 2009;2009:853524. doi: 10.1155/2009/853524.