Abstract

Background:

The case triage practice workflow model was used to manage incoming cases on a telepathology-enabled surgical pathology quality assurance (QA) service. Maximizing efficiency of workflow and the use of pathologist time requires detailed information on factors that influence telepathologists’ decision-making on a surgical pathology QA service, which was gathered and analyzed in this study.

Materials and Methods:

Surgical pathology report reviews and telepathology service logs were audited for 1862 consecutive telepathology QA cases accrued from a single Arizona rural hospital over a 51-month period. Ten university faculty telepathologists served as the case readers. Each telepathologist had an area of subspecialty surgical pathology expertise (e.g., gastrointestinal pathology, dermatopathology) but functioned largely as a general surgical pathologist while on this telepathology-enabled QA service. They handled all incoming cases during their individual 1-h telepathology sessions, regardless of the organ systems represented in the real-time incoming stream of outside surgical pathology cases.

Results:

The 10 participating telepathologists’ post-American Board of Pathology examination experience ranged from 3 to 36 years; this served as a surrogate for age. About 91% of incoming cases were immediately signed out regardless of the telepathologists’ area of subspecialty surgical pathology expertise. One hundred and seventy cases (9.13%) were deferred. Case concurrence rates with the provisional surgical pathology diagnosis of the referring pathologist, for incoming cases, averaged 94.3%, but ranged from 88.46% to 100% for individual telepathologists. Telepathology case deferral rates, for second opinions or immunohistochemistry, ranged from 4.79% to 21.26%. Differences in concordance rates and deferral rates among telepathologists, for incoming cases, were statistically significant but did not correlate with years of experience as a practicing pathologist. Coincidental overlaps of the area of subspecialty surgical pathology expertise with organ-related incoming cases did not influence decisions by the telepathologists to either defer those cases or to agree or disagree with the referring pathologist's provisional diagnoses.

Conclusions:

Subspecialty surgical pathologists effectively served as general surgical pathologists on a telepathology-based surgical pathology QA service. Concurrence rates with incoming surgical pathology report diagnoses, and case deferral rates, varied significantly among the 10 on-service telepathologists. We found no evidence that the higher deferral rates correlated with improving the accuracy or quality of the surgical pathology reports.

Keywords: Diagnostic accuracy, digital pathology, quality assurance, surgical pathology, telepathology, robotic telepathology

INTRODUCTION

Pathology diagnostic service workflow has been directly, or indirectly, studied from a number of perspectives.[1,2,3,4,5,6] These may be grouped as follows. The first group of telepathology workflow studies has focused on infrastructure issues including the evaluation and choices of digital imaging technologies and telecommunications modalities for specific clinical practice settings.[7,8,9,10,11,12,13,14,15,16] A second group of studies has examined metrics that characterize pathologists’ performances on a surgical pathology or cytopathology service, such as diagnostic accuracy, case through-put efficiency, and case deferral rates in various surgical pathology and cytopathology practice settings.[17,18,19,20,21] A third group of studies, which fall under the umbrella of basic research in medical imaging, has examined physiologic surrogates of human performance such as eye movements during the navigation of a digital slide using precise eye-tracking equipment set-ups.[22,23,24,25,26] Such futuristic research is aimed at finding physiologic “biomarkers” of expertise that could be potentially useful for the proficiency testing of pathologists for their suitability for inclusion in high-performance teams of diagnostic telepathologists to staff the envisioned “virtual” telepathologist team “call center-of-the-future”.[2,27,28]

Variability in human performance was identified as a potential concern in the first scientific paper on telepathology, published in 1987.[2] In a study performed under highly controlled conditions in a vision physiology laboratory, it was shown that individual pathologists had a range of personal thresholds for diagnosing breast disease using either video imaging or traditional light microscopy. The use of the “equivocal for malignancy” diagnostic category varied significantly among the six pathologists enrolled in the study. However, each individual pathologist's use of the “equivocal for malignancy” diagnostic category for breast surgical pathology specimens was essentially the same for both conventional light microscopy and video microscopy.[2,3] Subsequently, in a large longitudinal study of an actual pathology diagnostic service incorporating telepathology, Dunn et al. at the US Department of Veterans Affairs Medical Center in Milwaukee, Wisconsin, using a static-image enhanced dynamic robotic telepathology system, studied patterns of case deferrals over a 12-year period.[17,19,20] Ten telepathologists rendered preliminary primary surgical pathology diagnoses on over 11,000 surgical pathology cases. Case deferral rates varied from 4% to 21% among different pathologists, affecting workflow within their practice. Some of the highest case deferral rates were generated by relatively young pathologists.

In this study, we analyzed the influence of surgical pathology subspecialty expertise on case deferral rates when academic subspecialty surgical pathologists function as triage pathologists staffing a telepathology-enabled QA program on a daily rotation basis.

MATERIALS AND METHODS

Telepathology-Based Quality Assurance Service

The Department of Pathology of the University Medical Center (UMC) at the University of Arizona (Tucson, Arizona) began a surgical pathology quality assurance (QA) program for the Havasu Regional Medical Center (HRMC; Lake Havasu City, Arizona), 314 miles away, in July 2005. The Arizona Telemedicine Rural Network, a state-wide broadband telecommunications network operated by the Arizona Telemedicine Program (ATP), served as the telecommunications carrier. This QA program remained in operation for 51 months, terminating in October 2009, when a decision was made by HRMC to outsource its pathology services to a commercial laboratory. During the 51 months of operation, a total of 354 QA transmission sessions occurred.

While this program was in operation, the HRMC surgical pathology laboratory handled 3,000-4,000 surgical pathology cases each year. The laboratory was staffed by a single surgical pathologist in full-time solo practice at HRMC. Representative slides of all new cancer cases and other challenging surgical pathology cases were selected by the local HRMC pathologist and examined remotely by telepathology as part of the QA program. The cases were representative of a typical community pathology practice both in distribution (organ system and procedure type) and complexity. Of note, the QA model utilized in this study relied upon the clinical judgment of the HRMC pathologist to select relevant slides for QA review. The HRMC pathologist did not necessarily provide all of the microscope slides in any given case for telepathology review. This QA model is standard operating procedure at the University of Arizona College of Medicine Department of Pathology and, in the authors’ past experience, is widely used in other academic medical centers. A different QA model, in which the whole case is reviewed by one or more peers operating independently, may be more familiar to some readers, particularly those in a community practice setting.

During the period between July 2005 and October 2009, 1862 cases were transmitted from HRMC to UMC. Forty-seven cases, which were viewed by more than one telepathologist as incoming cases, were excluded from analysis of the performance characteristics of individual telepathologists (but these cases were included for consideration in other aspects of this study). Two telepathologists viewed an incoming case because, as new telepathologists joined the QA program, they were trained by an experienced peer operator for one or more telepathology transmission sessions. After this introductory period of shadowing, the new telepathologist operated independently. Therefore, in some analyses in this paper, data from all 1862 cases are included. For others, the analysis is based on the 1815 cases that were handled by a single QA telepathologist (e.g., the distributions of cases by organ system shown in Tables 1 and 2).

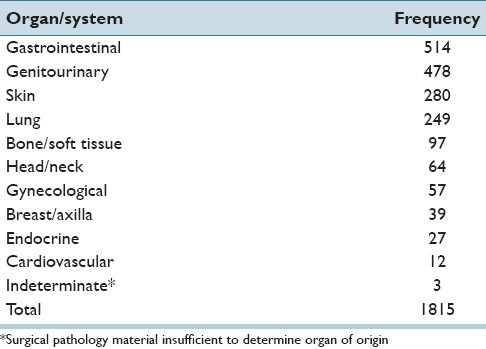

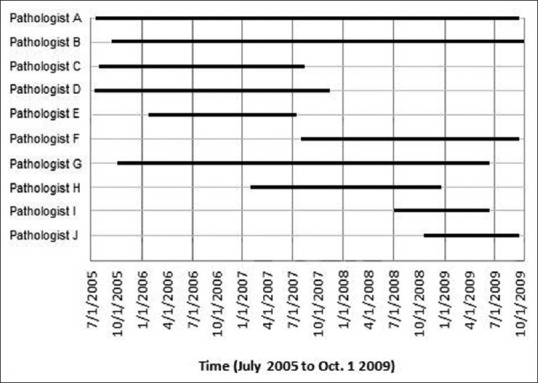

Table 1.

Distribution of cases by organ system

Table 2.

Telepathology case nondeferral rates and deferral rates

Telepathology Equipment

Telepathology equipment, as described by Dunn et al. and others, was used in this study.[16,17,18,19,20] A remotely controlled static-image enhanced robotic-dynamic telepathology system (Apollo PACS®, Falls Church, VA) was used to transmit a stream of images over the ATP's broadband telecommunications network from Lake Havasu City to Tucson. Real-time video conferencing, a feature built into the Apollo system, allowed face-to-face collaboration between the HRMC and UMC pathologists.

Staffing of the Surgical Pathology Quality Assurance Program

A total of 10 UMC surgical pathologists participated in the study. They had dual roles, functioning either as a “general” telepathologist, when an incoming QA case fell outside their area of subspecialty surgical pathology expertise, or as a subspecialty surgical pathologist, when the case fell within it. The participating telepathologists had considerable general surgical pathology experience, which came from rotating coverage of the general surgical pathology service, signing out frozen sections, and covering surgical pathology night and weekend call. In each of these areas, the 10 pathologists wore the hat of a generalist. It is reasonable to hypothesize that a more subspecialized practice might have higher deferral rates than those observed in this study. Nine surgical pathology subspecialties were represented, including dermatopathology, gastrointestinal/hepatic pathology, renal/genitourinary pathology, breast, thoracic, gynecologic, and head/neck pathology. Two renal/genitourinary pathologists were on the telepathology QA service. Thus, these 10 pathologists were well-suited for their dual role as generalists/subspecialists.

In addition to the staffing telepathologists, a case manager was present at all telepathology transmission sessions. The role of the case manager was to document telepathology session details and to troubleshoot technical problems, should they occur.

Telepathology Quality Assurance Case Reviews

Cases reviewed through telepathology initially underwent light microscopy review by the HRMC pathologist. A preliminary diagnosis was rendered and a provisional pathology report was generated. This report and relevant patient medical history/clinical characteristics were provided to the UMC telepathologist in Tucson at the time of case review. The HRMC pathologist used their clinical judgment in the selection of relevant slides for transmission and QA review. The Apollo system (see Telepathology Equipment) was used to transmit the case images in real time from Lake Havasu City to Tucson, Arizona. These images were remotely navigated by the on-service triage telepathologists at UMC in Tucson. Telepathologists and the HRMC pathologist were able to collaborate face-to-face via the real-time video conferencing feature of the Apollo system. Representative static images of diagnostic histopathology fields were archived. The case manager was responsible for documenting details of the session on a standard service log form. Variables that were documented at the time of telepathology review included patient name, HRMC case number, telepathology case number, the name of the telepathologist(s) reviewing the case, the time that the case was started and finished, the number of static image captures per case, and whether the case was signed out or deferred. Unfortunately, the reason(s) for individual case deferral were not recorded, and we were therefore unable to evaluate this variable in this retrospective study. The session log form had a free-text comment section where the case manager was able to record notes on any technical problems that arose during the session. Finally, a telepathology report was issued, and this report, together with the HRMC report and QA session log form, was archived for future analysis.

The 10 telepathologists who participated in the study were faculty pathologists in the University of Arizona College of Medicine Department of Pathology, which staffs the UMC laboratories. For the vast majority of cases, a single telepathologist was present. However, as new telepathologists joined the QA program over time, they began by shadowing an experienced peer operator for one or more sessions. Thus, for a small percentage of cases (47 cases or 2.52%), two telepathologists were present.

We assessed interpathologist variability with respect to telepathology case turn-around times for QA cases and deferral rates for incoming telepathology-enabled QA cases. Both of these parameters can affect workflow and case throughput times in a surgical pathology QA program.

The Case Triage Practice Workflow Model

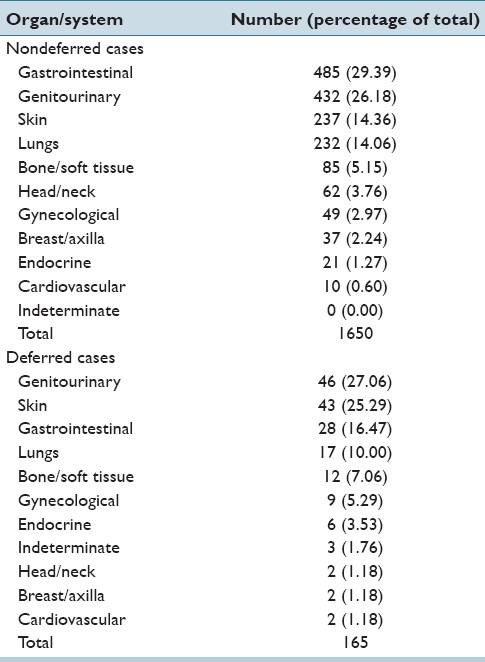

In the case triage practice (CTP) workflow model [Figure 1], the referring pathologist sends telepathology cases to the triage pathologist at the telepathology hub. When triage pathologists consult with subspecialty pathologists, they retain responsibility for generating the final QA telepathology report. This minimizes the number of consulting pathologists at the telepathology hub with whom the referring pathologist interacts in a given week, and helps ensure smooth handling of incoming cases.

Figure 1.

Workflow chart for case triage practice model

Surgical Pathology Case Material and Data Analysis

The preliminary surgical pathology reports (HRMC), UMC telepathology case reports, and telepathology session log sheets were compiled sequentially. All cases transmitted between HRMC and UMC were included in this evaluation. Data collected from each case included date of telepathology review, name of telepathologist(s) present at review, specimen organ system, preliminary (HRMC) diagnosis, telepathology diagnosis, time spent per case, and the number of static image captures archived per case. These data were entered into a Microsoft Excel (Bellevue, Washington) spreadsheet and examined for case load per telepathologist, number of cases per organ system, and deferral rates. The HRMC diagnoses and telepathology diagnoses were compared and classified by an independent senior pathologist as being either concordant or discordant. Discordant diagnoses were further sub-classified by an independent pathologist as a major discrepancy (one that would alter clinical management) or a minor discrepancy (one that would not alter clinical management).

Telepathology cases were also classified according to the telepathologists’ area of subspecialty surgical pathology expertise. A t-test for paired observations was used to determine if there was a statistically significant difference in deferral rates among pathologists as a function of incoming cases being within or outside their area of expertise. A Chi-square test was done to determine if the distributions of deferral rates differed across readers. We also compared pathologists’ performances regarding their deferral rates for QA cases that fell either within or outside of their individual area of subspecialty surgical pathology expertise.

As data analysis progressed, it became apparent that there was a higher rate of discrepant diagnoses in genitourinary cases. To determine the etiology of the increased rate of discrepancies in this organ system, additional analysis was performed. Cases were subcategorized by organ/site and the HRMC and UMC diagnoses were compared.

Designations as Subspecialty Surgical Pathology Experts

For this study, area of expertise for each participating telepathologist was defined on the basis of consensus among colleagues within the Department of Pathology at the University of Arizona. The majority of the telepathologists were board certified or fellowship-trained in their particular area of subspecialty surgical pathology expertise. Others were simply recognized within the department as the “go-to” pathologists for a specific organ system, having accrued experience in a specific area of pathology through years of experience.

RESULTS

Telepathology-Enabled Quality Assurance Service Staffing

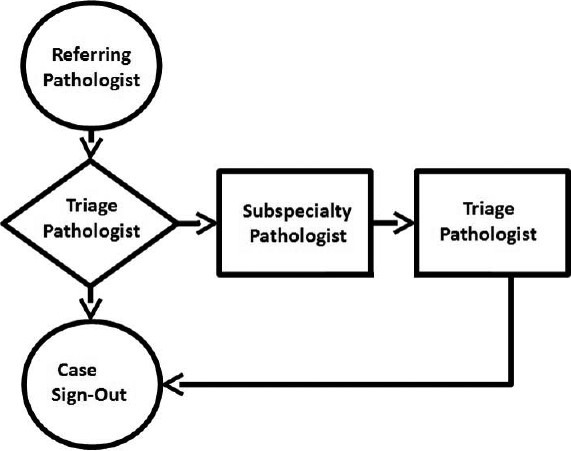

Figure 2 shows the time of participation for the 10 individual pathologists (A-J) in the telepathology QA program. Pathologist A and pathologist B were telepathology service providers throughout nearly all of the 51 months for which the service was active. On the other hand, pathologist “I” and pathologist “J” were recent hires and relative latecomers to the telepathology-enabled QA program. Their late entry into the program may have contributed to the spike in deferral rates near the end of the program, although the spike was not statistically significant.

Figure 2.

Staffing of the University of Arizona's telepathology-enabled quality assurance program

Distribution of HRMC Analytical Surgical Pathology Cases

The distribution of the 1815 HRMC cases reviewed initially by one telepathologist is provided in Table 1 according to organ system. Of these cases, 1650 (90.91%) were signed out immediately by a triage telepathologist serving as the “diagnostic telepathologist of-the-day.” Most of the remaining 165 cases (9.09%) were deferred for glass slide re-review by a second pathologist without subspecialty expertise related to the incoming case, for re-review by a subspecialist surgical pathologist, or for additional studies, most often immunohistochemical staining. An additional 47 cases handled by two telepathologists, working together, brought the total number of cases to 1862. The 47 cases handled by two telepathologists are not included in Table 1.
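The case counts reported in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below uses only figures stated in the text; the variable and function names are illustrative and are not part of the study's data files.

```python
# Case counts as reported in the text (names are illustrative).
single_reader_cases = 1815   # cases handled by one telepathologist
signed_out = 1650            # cases signed out immediately
dual_reader_cases = 47       # training sessions with two telepathologists present

deferred = single_reader_cases - signed_out
total_cases = single_reader_cases + dual_reader_cases


def pct(part, whole):
    """Return part/whole as a percentage rounded to two decimals."""
    return round(100.0 * part / whole, 2)


signed_out_rate = pct(signed_out, single_reader_cases)
deferral_rate = pct(deferred, single_reader_cases)

print(f"deferred: {deferred} ({deferral_rate}%)")
print(f"signed out immediately: {signed_out} ({signed_out_rate}%)")
print(f"total transmitted cases: {total_cases}")
```

The same helper applied to the 170 deferrals among all 1862 cases gives the 9.13% deferral rate quoted in the Abstract.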

Deferral Rates

Table 2 shows the telepathology non-deferral rates and deferral rates according to organ.

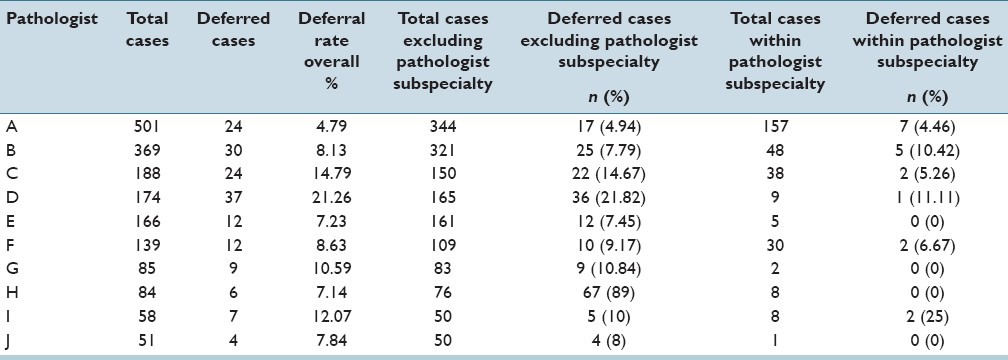

Numbers of cases per telepathologist ranged from 51 to 501 (average 182) [Table 3]. Overall deferral rates for individual telepathologists ranged from 4.79% to 21.26% (average 10.05%). Deferral rates, excluding their own surgical subspecialty cases, ranged from 4.94% to 21.81% (average 10.26%). Deferral rates were not affected by exclusion of cases within each telepathologist's subspecialty area. There was no statistically significant difference between the triage telepathologists’ deferral rates for cases falling outside their area of subspecialty surgical pathology expertise and their deferral rates for cases falling within it (t = 0.032, P = 0.9754). A chi-square test for distribution of deferral rates across telepathologists was statistically significant for general rates (χ2 = 20.52, P < 0.05) and subspecialty rates (χ2 = 20.23, P < 0.05). However, it may be noteworthy that 8 out of 10 telepathologists deferred a lower percentage of telepathology cases that fell within their area of subspecialty surgical pathology expertise.
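The chi-square statistics above are reported without degrees of freedom. As a rough check, the sketch below converts them to upper-tail probabilities under the assumption of a 10 × 2 table (ten telepathologists by deferred/not deferred), which gives 9 degrees of freedom. That assumption, and the helper name `chi2_sf_odd_df`, are ours and are not stated in the study; the helper uses the standard closed-form series for odd degrees of freedom so that no statistics library is needed.

```python
import math


def chi2_sf_odd_df(x, df):
    """Upper-tail probability P(X > x) for a chi-square variate with odd df.

    Closed-form series for odd degrees of freedom:
    2*Q(chi) + 2*phi(chi) * sum_{r=1..(df-1)/2} chi^(2r-1) / (1*3*...*(2r-1)),
    where chi = sqrt(x), Q is the normal upper tail and phi the normal density.
    """
    if df < 1 or df % 2 == 0:
        raise ValueError("this helper only handles odd, positive df")
    chi = math.sqrt(x)
    normal_pdf = math.exp(-x / 2.0) / math.sqrt(2.0 * math.pi)
    series = 0.0
    term = chi                      # chi^(2r-1) / (1*3*...*(2r-1)) for r = 1
    for r in range(1, (df - 1) // 2 + 1):
        series += term
        term *= x / (2 * r + 1)     # advance to the next odd power
    # erfc(chi / sqrt(2)) equals 2*Q(chi)
    return math.erfc(chi / math.sqrt(2.0)) + 2.0 * normal_pdf * series


DF_ASSUMED = 9  # assumption: 10 telepathologists x 2 outcomes
p_general = chi2_sf_odd_df(20.52, DF_ASSUMED)
p_subspecialty = chi2_sf_odd_df(20.23, DF_ASSUMED)
print(f"general rates:      P = {p_general:.4f}")
print(f"subspecialty rates: P = {p_subspecialty:.4f}")
```

Under this assumption both probabilities fall below 0.05, in line with the reported significance.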

Table 3.

Case deferral rates for pathologists serving as either general or subspecialty surgical pathologists while in their triage telepathologist role

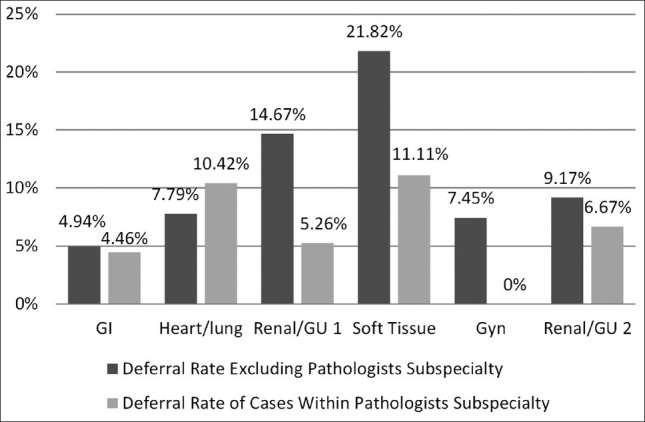

The case deferral rates for the six telepathologists, including two renal pathologists (Renal/GU 1 and Renal/GU 2), who handled over 100 telepathology cases are shown in Figure 3. The differences in deferral rates for incoming cases within an individual's area of subspecialty surgical pathology expertise and general pathology cases that fall outside of areas of an individual's surgical pathology expertise are not statistically significant.

Figure 3.

Comparisons for telepathology quality assurance case deferral rates for incoming nonsubspecialty surgical pathology cases and subspecialty surgical pathology cases

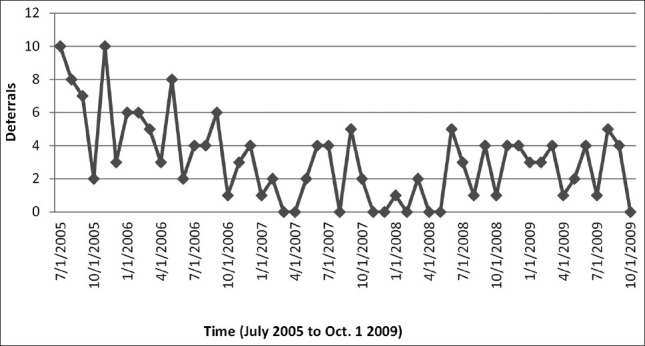

Case Deferral Rates over Time

The aggregate number of case deferrals over time was also assessed. Cases were summed according to calendar months from July 2005 to October 2009. The average number of case deferrals per month was 3.314, with a range of 0-10. The monthly number of case deferrals fluctuated as shown in Figure 4. Over the first 12 months of the program, deferrals averaged 6.25 cases/month. This fell to 2.58 cases/month for the second 12-month interval and 2.08 for the third, and then rose to 3.33 cases/month in the fourth interval. There was a significant negative correlation between deferrals and time (r = -0.4449, z = -3.387, P = 0.001), with deferrals decreasing over time within this telepathology group practice.

Figure 4.

Overall deferral rates over time (1 month intervals)

Nondeferred and Deferred Case Viewing Times for Quality Assurance Cases

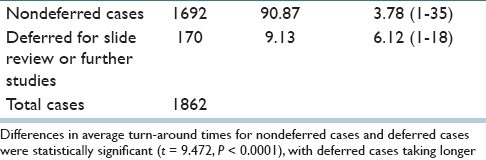

The time spent generating a surgical pathology report for each telepathology case was recorded at the end of each consultation by the case manager in the telepathology room. Overall, the time spent on individual cases, including nondeferred cases and deferred cases, was 3.99 min (range = 1-35 min). The average time for nondeferred cases (i.e. those signed out directly by the triage telepathologist) was 3.78 min (range = 1-35 min), and for deferred cases (i.e., those for which the triage telepathologist deferred rendering a diagnosis) was 6.12 min (range = 1-18 min). Table 4 includes incoming cases handled by a single telepathologist (1815 cases) as well as 47 incoming cases handled with two telepathologists present.

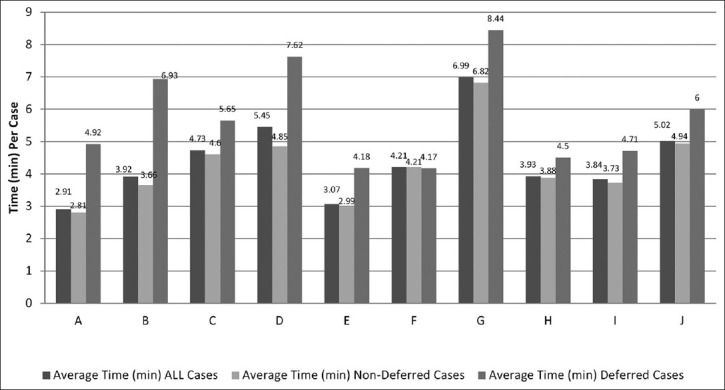

Table 4.

Turn-around times for nondeferred and deferred cases

Differences in average turn-around times for nondeferred cases and deferred cases were statistically significant (t = 9.472, P < 0.0001), with deferred cases taking longer.

Case Viewing Times by Organ System

Data were also analyzed to determine the time spent reviewing cases from different organ systems. Lowest average time for nondeferred cases was 3.08 min for breast/axilla cases (range = 5-9) and highest was 4.69 min for gynecological cases (range = 1-15). For deferred cases lowest average time per case was 3.00 min for cardiovascular cases (range = 2-4), and highest was 7.00 min for breast/axilla cases (range = 5-9).

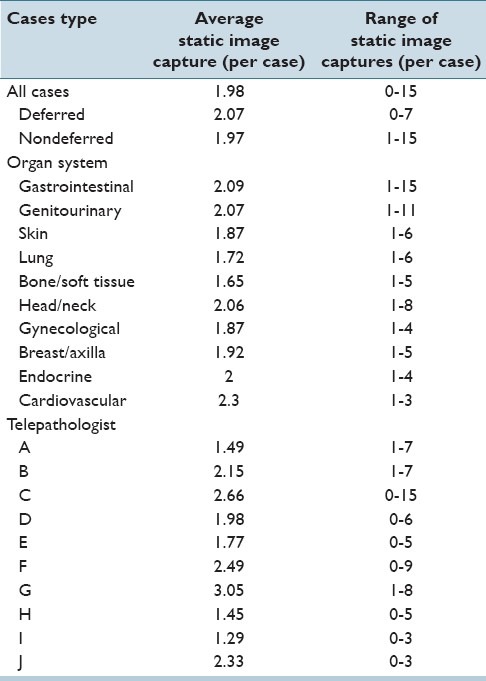

Case Review Viewing Times for the Panel of 10 University Telepathologists

Case reviewing time was also examined for patterns based upon the individual reviewing telepathologist [Figure 5]. Lowest average case time for individual telepathologists was 2.91 min (range = 1-15), and highest was 6.99 min (range = 1-12). Lowest average time for nondeferred cases was 2.81 min (range = 1-15), and highest was 6.82 min (range = 1-21). Lowest average time for deferred cases was 4.17 min (range = 2-7), and highest was 8.44 min (range = 4-14).

Figure 5.

Time (min) per case by telepathology for nondeferred and deferred cases

An analysis of variance revealed significant differences (F = 21.369, P < 0.0001), with the average deferred case turn-around time (5.712 min) being significantly higher than the nondeferred case turn-around time (4.249 min) and the overall average case turn-around time (4.407 min). In Figure 5, pathologists “A” and “E” are nearly twice as fast in signing out cases as pathologist “G”.

For 9 of 10 telepathologists, viewing times for nondeferred cases were shorter than the viewing times for deferred cases. Although these differences in time may seem relatively small, a cascade of incremental costs can be initiated by deferring a case for glass slide review by conventional light microscopy or by deferring a case for special studies such as immunohistochemistry performed at another institution.

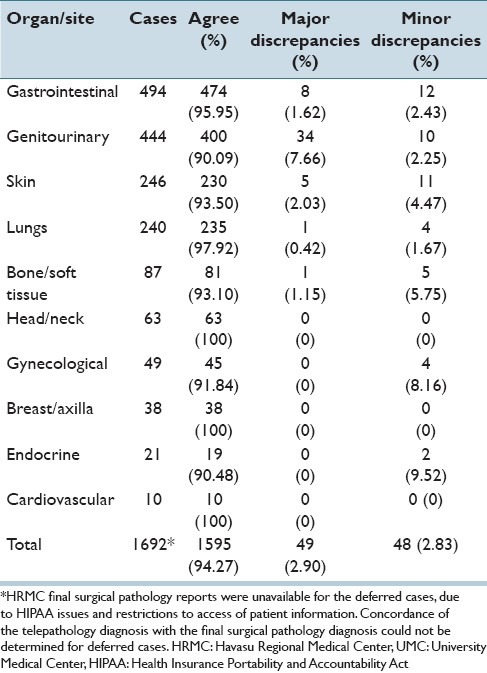

Static Image Captures for Archiving

The average number of static image captures per case for all cases was 1.98 (range 0-15). The average number of static image captures for deferred cases was 2.07/case (range 0-7). For nondeferred cases, the average was 1.97/case (range 1-15). By organ system, the average ranged from 1.65/case (bone and soft tissue, range 1-5) to 2.3/case (cardiovascular, range 1-3). The average number of static image captures by individual telepathologists ranged from 1.29/case (pathologist I) to 3.05/case (pathologist G). Static image capture data are presented in Table 5.

Table 5.

Static image capture data

Overall concordance between HRMC and UMC pathologists for incoming cases was 94.27%. Concordance by tissue site/organ system ranged from 90.09% to 100% [Table 6]. The greatest discordance rate was seen with genitourinary cases with an overall discordance rate of 9.91% (7.66% major discrepancies and 2.25% minor discrepancies). Following the genitourinary system, endocrine cases had an overall discordance rate of 9.52% (no major discrepancies and 9.52% minor discrepancies) and gynecological cases an overall discordance rate of 8.16% (no major discrepancies and 8.16% minor discrepancies).

Table 6.

Concordance of HRMC and UMC diagnoses

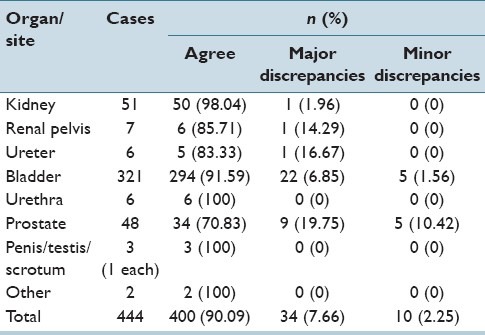

Evaluating Genitourinary System Discrepancies

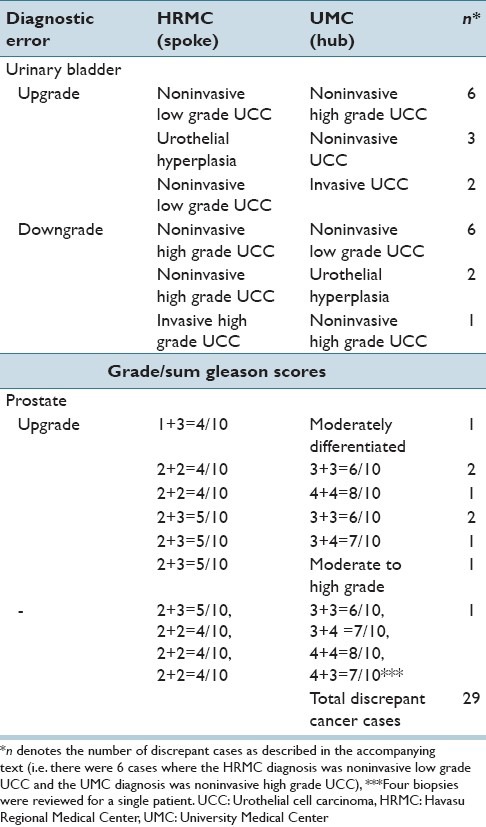

Discrepant diagnosis levels were relatively high for genitourinary cases. To further elucidate the nature of these discrepancies, additional analysis of the genitourinary cases was performed. The majority of the discrepancies were seen in the urinary bladder (8.41% discrepant, of which 6.85% were major and 1.56% were minor discrepancies) and prostate (29.17% discrepant, of which 18.75% were major and 10.42% were minor discrepancies). The discrepancy rates for each organ/site are shown in Table 7. The discrepancies were traced to disagreements in the grading of urothelial carcinomas and in the Gleason scores assigned in prostatic adenocarcinoma cases. No discernable pattern was seen in the discrepant grading of urothelial carcinomas of the urinary bladder; there was no consistent over- or under-grading on the part of either the HRMC pathologist or the UMC telepathologists. In contrast, a consistent pattern of under-grading was seen on the part of the HRMC pathologist in prostatic adenocarcinoma cases (there was not a single discrepant case of prostatic adenocarcinoma in which the UMC pathologist assigned a grade lower than that of the HRMC pathologist). Examples of discrepant urinary bladder and prostate diagnoses are shown in Table 8. Of note, several prostate diagnoses show nonadherence to standard Gleason grading criteria on the part of UMC pathologists.

Table 7.

Genitourinary system discrepancies by organ/site

Table 8.

Examples of discrepant genitourinary diagnoses

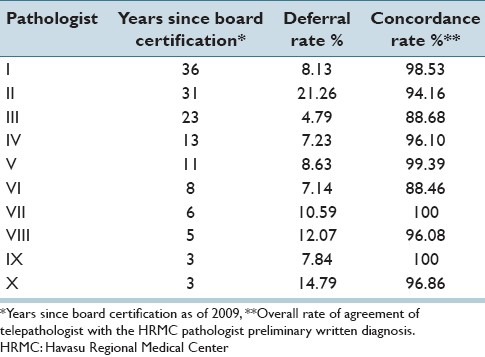

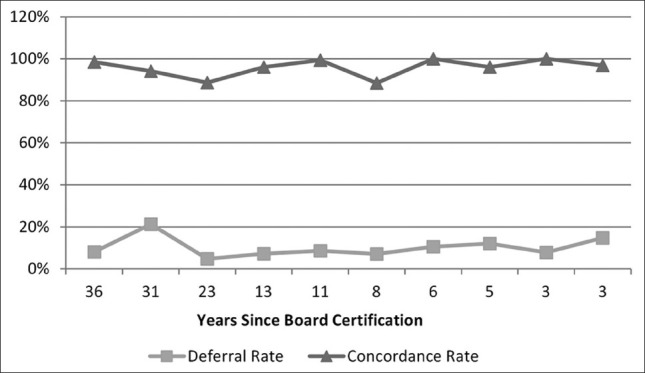

Pathologist Deferral Patterns and Concordance Rates by Years of Experience

For the 10 UMC staff pathologists, years of experience as a practicing surgical pathologist did not correlate with either case deferral rates (r = 0.146, z = 0.389, P = 0.6969) or concordance rates (r = −0.202, z = −0.542, P = 0.5877). Years of experience, deferral rates, and concordance rates for the individual UMC telepathologists are shown in Table 9 and Figure 6.
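The text does not name the test used for these correlations. The reported z and P values are, however, consistent with a Fisher z-transformation test on n = 10 pathologists, and the sketch below works through that calculation. Treat the choice of test as our assumption; the function name `fisher_z_test` is illustrative. Under this transformation, z carries the same sign as r.

```python
import math


def fisher_z_test(r, n):
    """Two-sided test of zero correlation via Fisher's z-transformation.

    z = atanh(r) * sqrt(n - 3) is compared with a standard normal distribution.
    """
    z = math.atanh(r) * math.sqrt(n - 3)
    p = math.erfc(abs(z) / math.sqrt(2.0))   # two-sided normal tail area
    return z, p


n_pathologists = 10
z_def, p_def = fisher_z_test(0.146, n_pathologists)    # deferral rate vs. years
z_con, p_con = fisher_z_test(-0.202, n_pathologists)   # concordance rate vs. years

print(f"deferral:    z = {z_def:.3f}, P = {p_def:.3f}")
print(f"concordance: z = {z_con:.3f}, P = {p_con:.3f}")
```

With only 10 pathologists the test has little power, so the absence of a significant correlation should be read cautiously.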

Table 9.

Years in surgical pathology practice experience versus deferral and concordance rates

Figure 6.

Telepathologist practice experience: Deferral and concordance patterns versus years since initial American Board of Pathology primary certification

Technical Problems in Quality Assurance Transmission Sessions

The majority of QA sessions proceeded without difficulty. Occasionally, technical difficulties were encountered during the QA transmission sessions. Of the 354 transmission sessions that occurred during the 51-month period in which this QA program was in operation, technical difficulties were documented by the case manager during 24 sessions (6.78%). These included software glitches such as freezing or “hanging” (two sessions, 0.56%); equipment malfunction, such as failure of the microphone and/or camera (three sessions, 0.85%); image and/or specimen quality issues (five sessions, 1.41%); connectivity issues, including slow transmission speed and sudden disconnection (seven sessions, 1.98%); and problems with control of or navigation with the robotic microscope, or problems with mapping (eight sessions, 2.26%). Other quality issues included pixelation of the transmitted image, crush artifact on the glass slide itself, and difficulty interpreting an iron stain through telepathology. Rarely, more than one type of technical difficulty occurred during a single transmission session. Of the 24 sessions during which technical difficulties occurred, the problem was reported to have been resolved during 11. Some of the problems were resolved by exiting the software, rebooting the computer(s) at either end of the transmission, restarting the software, and re-establishing the connection between the two transmission sites.
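The session tallies above can be checked with simple arithmetic. The sketch below uses only the counts given in the text; the dictionary keys are shortened labels of our own choosing.

```python
total_sessions = 354
sessions_with_problems = 24

# Sessions affected by each type of technical difficulty, as reported.
problem_sessions = {
    "software glitches": 2,
    "equipment malfunction": 3,
    "image/specimen quality": 5,
    "connectivity": 7,
    "robotic microscope control or mapping": 8,
}

# Percentage of all transmission sessions affected by each problem type.
rates = {
    name: round(100.0 * count / total_sessions, 2)
    for name, count in problem_sessions.items()
}
overall_rate = round(100.0 * sessions_with_problems / total_sessions, 2)
category_total = sum(problem_sessions.values())

for name, rate in rates.items():
    print(f"{name}: {rate}%")
print(f"any technical difficulty: {overall_rate}%")
print(f"category counts sum to {category_total}")
```

The category counts sum to 25 rather than 24, which fits the statement that a single session occasionally had more than one type of difficulty.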

DISCUSSION

Surgical pathologists in the UMC Department of Pathology at the University of Arizona provided telepathology services for a network of four rural hospitals and one urban hospital in Arizona for over a decade. These services have included telepathology frozen section diagnoses, pathology QA case evaluations, and immediate second opinions for an innovative integrated rapid breast care service.[11,29,30,31] The UMC surgical pathology staff is thus experienced in using telepathology for routine clinical operations and was comfortable using both robotic-dynamic telepathology and whole-slide imaging telepathology in everyday practice.

This study evaluated pathologists’ performance in a real-time telepathology QA program using the well-established case triage practice workflow model.[10,11] The overall concordance rate between primary (HRMC) and telepathology (UMC) diagnoses was 94.27%. Major discrepancies accounted for 2.90% of cases and minor discrepancies for 2.83%. The highest discordance rate was seen in cases from the genitourinary system. Further analysis of these cases revealed that the vast majority of discrepant diagnoses stemmed from disagreement in bladder urothelial carcinoma and prostatic carcinoma grading. The high interobserver variability in the grading of these tumors is well known.[32,33,34,35,36,37,38,39,40,41,42,43,44,45] We believe the high discrepancy rate seen in the genitourinary cases in this study reflects the inherent difficulty of this area of pathology.

This study reproduced findings originally reported in the pioneering work on telepathology surgical pathology workflow by Dunn et al. at the US Department of Veterans Affairs Medical Center in Milwaukee, Wisconsin.[20] In our Arizona experience, surgical pathology case deferral rates by a panel of 10 telepathologists ranged from 4% to 21%, essentially duplicating the range of deferral rates for incoming cases experienced by a similar number of surgical pathologists working in the Department of Veterans Affairs Medical Center in Milwaukee.[20] Interestingly, although the broad ranges of deferral rates for individual pathologists overlapped, the patient cohorts and case materials for the two studies were quite different. Dunn's group was rendering preliminary primary diagnoses on nearly all new surgical pathology cases at a small, 35-bed rural hospital.[20] The case material utilized in the Arizona telepathology study was restricted to newly diagnosed cancer cases intermixed with difficult or problematic surgical pathology cases selected by the on-site rural pathologist. It is reasonable to suggest that the Arizona case material would be more challenging. This suggests that the relatively high deferral rates experienced by individual pathologists in each group practice are multifactorial and not directly related to case difficulty, to a special requirement for access to subspecialty surgical pathology expertise, or to years of experience as a practicing pathologist. It is also noteworthy that both telepathology group practices, in Wisconsin and Arizona, included service pathologists who deferred <5% of incoming cases, setting a shared benchmark for low deferral rates.

Another difference was that Dunn et al. examined all of the glass slides for each case by telepathology since there was no on-site pathologist. In our Arizona study, there was an on-site pathologist at HRMC who actively participated in the QA telepathology review sessions and personally selected a subset of glass slides for telepathology review. Telepathologists at UMC were made aware of the full range of slides that had been pre-examined by the HRMC on-site pathologist and that formed the basis for the provisional surgical pathology report generated in writing by the on-site rural pathologist prior to the telepathology QA session. UMC telepathologists rarely requested additional glass slides for telepathology case review. However, we acknowledge as a limitation of this study that the HRMC pathologist was expected to use clinical judgment to select the representative slides transmitted for QA. It is possible that discrepancy and deferral rates would differ under a whole-case QA model.

In this study, we also examined another potential factor that could have an impact on workflow in a surgical pathology QA program, namely subspecialty surgical pathology expertise. This is important since the triage pathologists had dual roles within the UMC practice, functioning as both general pathologists and subspecialty surgical pathologists. This is a common arrangement in the United States, especially at small to mid-sized UMC hospitals that support a dozen or fewer subspecialty surgical pathologists on staff. Covering the full range of subspecialty surgical pathology cases with bona fide experts takes two dozen, or more, subspecialty surgical pathologists.

At least with respect to the question of possible effects of participation in subspecialty surgical pathology activities on performance as triage telepathologists, analysis of our data showed that discordance rates and case deferral rates were minimally changed by the exclusion of cases within each telepathologist's subspecialty area. The subspecialty surgical pathologists performed well in their roles as triage pathologists and could handle the large majority of the incoming cases without help from another pathologist. This supports the deployment of subspecialty surgical pathologists on general pathology rotations as triage pathologists in a telepathology-enabled surgical pathology QA program.
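The exclusion analysis described above can be sketched as follows: for a given telepathologist, the deferral rate is computed over all of that reader's cases and again after removing cases that fall within the reader's subspecialty. This is a minimal illustration, not the analysis code used in this study. The record layout, the function names, and the toy case records are all hypothetical and do not represent study data.

```python
from collections import namedtuple

# Hypothetical record layout: one row per telepathology QA case.
Case = namedtuple("Case", ["reader", "organ_system", "deferred"])

def deferral_rate(cases):
    """Fraction of cases deferred, or None when there are no cases."""
    if not cases:
        return None
    return sum(1 for c in cases if c.deferred) / len(cases)

def rates_with_and_without_subspecialty(cases, reader, subspecialty):
    """Return (rate over all of the reader's cases,
    rate after excluding cases in the reader's subspecialty)."""
    own = [c for c in cases if c.reader == reader]
    outside = [c for c in own if c.organ_system != subspecialty]
    return deferral_rate(own), deferral_rate(outside)

# Toy, made-up records for illustration only (NOT data from this study).
toy_cases = [
    Case("GI reader", "gastrointestinal", False),
    Case("GI reader", "gastrointestinal", True),
    Case("GI reader", "lung", False),
    Case("GI reader", "lung", True),
    Case("GI reader", "skin", False),
    Case("GI reader", "breast", False),
]

overall, excluding = rates_with_and_without_subspecialty(
    toy_cases, "GI reader", "gastrointestinal"
)
print(f"all cases: {overall:.3f}, excluding subspecialty: {excluding:.3f}")
```

The same pattern applies to discordance rates by swapping the `deferred` flag for a discordance flag. A small difference between the two rates for each reader is what "minimally changed" refers to in the paragraph above.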

Our analysis indicates that triage pathologist performance is, in large measure, unrelated to the telepathologist's other role in the department as a subspecialty surgical pathologist. For example, the gastrointestinal pathologist was as likely to defer a colon case as a lung case. This observation may be partially explained by the fact that University of Arizona surgical pathologists are encouraged to retain their general surgical pathology sign-out skills by taking regular rotations on the general surgical pathology diagnostic service. The staffing of the UMC QA service proved to be robust, with surgical pathologists remaining on the telepathology service as long as needed (Weinstein, unpublished observation, 2014).

Several limitations of this study are related to the fact that it is a retrospective study of a surgical pathology QA program in which the referring hospital and the hub hospital are in different, unrelated health care systems. There are privacy and security restrictions imposed on the exchange of patient information among healthcare organizations. Furthermore, as is often the case for this type of surgical pathology QA program, there was no routine method for reconciliation of discrepancies. To the best of our knowledge, the HRMC pathologist received the discrepant telepathology diagnosis, but whether the surgical pathology report was modified is unknown to us. Once a telepathology case was deferred, it became a consult case like any other and was essentially lost to follow-up. Future studies would benefit from a built-in mechanism for tracking deferred cases.

CONCLUSION

Using the case triage practice telepathology staffing model, which blends the services of university-based surgical pathologists and a community-based general pathologist at a rural hospital, provided a means for improving the quality of community-based laboratory services. This presented opportunities for university faculty pathologists to actively participate in their UMC-based community outreach program and increased the efficiency of their second opinion QA program. Our findings showed that the likelihood of a reviewing telepathologist agreeing or disagreeing with a diagnosis rendered at an unaffiliated hospital is not a function of the reviewer's subspecialty surgical pathology expertise but, rather, is more likely to be related to human factors. As demonstrated previously under highly controlled laboratory conditions, pathologists with a tendency to “equivocate” on rendering diagnoses will do so at comparable levels for both digital pathology (e.g., telepathology) and for traditional light microscopy.[2] Deferral rates are not related to years of experience as a surgical pathologist.

Footnotes

Available FREE in open access from: http://www.jpathinformatics.org/text.asp?2014/5/1/18/133142

REFERENCES

- 1. Kaplan K, Weinstein RS, Pantanowitz L. In: Pathology Informatics: Theory and Practice. Ch. 16. Pantanowitz L, Balis UJ, Tuthill JM, editors. Chicago, IL: ASCP Press; 2012. pp. 257–72.

- 2. Weinstein RS, Bloom KJ, Rozek LS. Telepathology and the networking of pathology diagnostic services. Arch Pathol Lab Med. 1987;111:646–52.

- 3. Krupinski E, Weinstein RS, Bloom KJ, Rozek LS. Progress in telepathology: System implementation and testing. Adv Pathol Lab Med. 1993;6:63–87.

- 4. Weinstein RS, Descour MR, Liang C, Bhattacharyya AK, Graham AR, Davis JR, et al. Telepathology overview: From concept to implementation. Hum Pathol. 2001;32:1283–99. doi: 10.1053/hupa.2001.29643.

- 5. Kayser K, Szymas J, Weinstein RS. Berlin: Electronic Education and Publication in e-Health, VSV Interdisciplinary Medical Publishing; 2005. Telepathology and Telemedicine: Communication; pp. 1–257.

- 6. Pantanowitz L, Valenstein PN, Evans AJ, Kaplan KJ, Pfeifer JD, Wilbur DC, et al. Review of the current state of whole slide imaging in pathology. J Pathol Inform. 2011;2:36. doi: 10.4103/2153-3539.83746.

- 7. Pantanowitz L, Wiley CA, Demetris A, Lesniak A, Ahmed I, Cable W, et al. Experience with multimodality telepathology at the University of Pittsburgh Medical Center. J Pathol Inform. 2012;3:45. doi: 10.4103/2153-3539.104907.

- 8. Mullick FG, Fontelo P, Pemble C. Telemedicine and telepathology at the Armed Forces Institute of Pathology: History and current mission. Telemed J. 1996;2:187–93. doi: 10.1089/tmj.1.1996.2.187.

- 9. Williams BH, Mullick FG, Butler DR, Herring RF, O’Leary TJ. Clinical evaluation of an international static image-based telepathology service. Hum Pathol. 2001;32:1309–17. doi: 10.1053/hupa.2001.29649.

- 10. Weinstein RS, Bhattacharyya A, Yu YP, Davis JR, Byers JM, Graham AR, et al. Pathology consultation services via the Arizona-International Telemedicine Network. Arch Anat Cytol Pathol. 1995;43:219–26.

- 11. Bhattacharyya AK, Davis JR, Halliday BE, Graham AR, Leavitt SA, Martinez R, et al. Case triage model for the practice of telepathology. Telemed J. 1995;1:9–17. doi: 10.1089/tmj.1.1995.1.9.

- 12. Ho J, Aridor O, Glinski DW, Saylor CD, Pelletier JP, Selby DM, et al. Needs and workflow assessment prior to implementation of a digital pathology infrastructure for the US Air Force Medical Service. J Pathol Inform. 2013;4:32. doi: 10.4103/2153-3539.122388.

- 13. Stathonikos N, Veta M, Huisman A, van Diest PJ. Going fully digital: Perspective of a Dutch academic pathology lab. J Pathol Inform. 2013;4:15. doi: 10.4103/2153-3539.114206.

- 14. Weinstein RS, Graham AR, Lian F, Braunhut BL, Barker GR, Krupinski EA, et al. Reconciliation of diverse telepathology system designs. Historic issues and implications for emerging markets and new applications. APMIS. 2012;120:256–75. doi: 10.1111/j.1600-0463.2011.02866.x.

- 15. Nordrum I, Eide TJ. Remote frozen section service in Norway. Arch Anat Cytol Pathol. 1995;43:253–6.

- 16. Almagro US, Dunn BE, Choi H, Recla DL, Weinstein RS. The gross pathology workstation: An essential component of a dynamic-robotic telepathology system. Cell Vis. 1996;3:470–3.

- 17. Dunn BE, Almagro UA, Choi H, Sheth NK, Arnold JS, Recla DL, et al. Dynamic-robotic telepathology: Department of Veterans Affairs feasibility study. Hum Pathol. 1997;28:8–12. doi: 10.1016/s0046-8177(97)90271-9.

- 18. Weisz-Carrington P, Blount M, Kipreos B, Mohanty L, Lippman R, Todd WM, et al. Telepathology between Richmond and Beckley Veterans Affairs Hospitals: Report on the first 1000 cases. Telemed J. 1999;5:367–73. doi: 10.1089/107830299311934.

- 19. Dunn BE, Choi H, Almagro UA, Recla DL, Krupinski EA, Weinstein RS. Routine surgical telepathology in the Department of Veterans Affairs: Experience-related improvements in pathologist performance in 2200 cases. Telemed J. 1999;5:323–37. doi: 10.1089/107830299311899.

- 20. Dunn BE, Choi H, Recla DL, Kerr SE, Wagenman BL. Robotic surgical telepathology between the Iron Mountain and Milwaukee Department of Veterans Affairs Medical Centers: A 12-year experience. Hum Pathol. 2009;40:1092–9. doi: 10.1016/j.humpath.2009.04.007.

- 21. Halliday BE, Bhattacharyya AK, Graham AR, Davis JR, Leavitt SA, Nagle RB, et al. Diagnostic accuracy of an international static-imaging telepathology consultation service. Hum Pathol. 1997;28:17–21. doi: 10.1016/s0046-8177(97)90273-2.

- 22. Krupinski EA, Weinstein RS, Rozek LS. Experience-related differences in diagnosis from medical images displayed on monitors. Telemed J. 1996;2:101–8. doi: 10.1089/tmj.1.1996.2.101.

- 23. Krupinski EA, Radvany M, Levy A, Ballenger D, Tucker J, Chacko A, et al. Enhanced visualization processing: Effect on workflow. Acad Radiol. 2001;8:1127–33. doi: 10.1016/S1076-6332(03)80725-0.

- 24. Krupinski EA, Tillack AA, Richter L, Henderson JT, Bhattacharyya AK, Scott KM, et al. Eye-movement study and human performance using telepathology virtual slides: Implications for medical education and differences with experience. Hum Pathol. 2006;37:1543–56. doi: 10.1016/j.humpath.2006.08.024.

- 25. Gegenfurtner A, Lehtinen E, Saljo R. Expertise differences in the comprehension of visualizations: A meta-analysis of eye-tracking research in professional domains. Educ Psychol Rev. 2011;23:523–52.

- 26. Krupinski EA, Graham AR, Weinstein RS. Characterizing the development of visual search expertise in pathology residents viewing whole slide images. Hum Pathol. 2013;44:357–64. doi: 10.1016/j.humpath.2012.05.024.

- 27. Weinstein RS. The S-curve framework: Predicting the future of anatomic pathology. Arch Pathol Lab Med. 2008;132:739–42. doi: 10.5858/2008-132-739-TSFPTF.

- 28. Weinstein RS. Risks and rewards of pathology innovation: The academic pathology department as a business incubator. Arch Pathol Lab Med. 2009;133:580–6. doi: 10.5858/133.4.580.

- 29. Weinstein RS, Graham AR, Richter LC, Barker GP, Krupinski EA, Lopez AM, et al. Overview of telepathology, virtual microscopy, and whole slide imaging: Prospects for the future. Hum Pathol. 2009;40:1057–69. doi: 10.1016/j.humpath.2009.04.006.

- 30. Graham AR, Bhattacharyya AK, Scott KM, Lian F, Grasso LL, Richter LC, et al. Virtual slide telepathology for an academic teaching hospital surgical pathology quality assurance program. Hum Pathol. 2009;40:1129–36. doi: 10.1016/j.humpath.2009.04.008.

- 31. López AM, Graham AR, Barker GP, Richter LC, Krupinski EA, Lian F, et al. Virtual slide telepathology enables an innovative telehealth rapid breast care clinic. Hum Pathol. 2009;40:1082–91. doi: 10.1016/j.humpath.2009.04.005.

- 32. Ooms EC, Anderson WA, Alons CL, Boon ME, Veldhuizen RW. Analysis of the performance of pathologists in the grading of bladder tumors. Hum Pathol. 1983;14:140–3. doi: 10.1016/s0046-8177(83)80242-1.

- 33. Abel PD, Henderson D, Bennett MK, Hall RR, Williams G. Differing interpretations by pathologists of the pT category and grade of transitional cell cancer of the bladder. Br J Urol. 1988;62:339–42. doi: 10.1111/j.1464-410x.1988.tb04361.x.

- 34. Robertson AJ, Beck JS, Burnett RA, Howatson SR, Lee FD, Lessells AM, et al. Observer variability in histopathological reporting of transitional cell carcinoma and epithelial dysplasia in bladders. J Clin Pathol. 1990;43:17–21. doi: 10.1136/jcp.43.1.17.

- 35. Epstein JI, Amin MB, Reuter VR, Mostofi FK. The World Health Organization/International Society of Urological Pathology consensus classification of urothelial (transitional cell) neoplasms of the urinary bladder. Bladder Consensus Conference Committee. Am J Surg Pathol. 1998;22:1435–48. doi: 10.1097/00000478-199812000-00001.

- 36. Gleason DF. Histologic grading of prostate cancer: A perspective. Hum Pathol. 1992;23:273–9. doi: 10.1016/0046-8177(92)90108-f.

- 37. de las Morenas A, Siroky MB, Merriam J, Stilmant MM. Prostatic adenocarcinoma: Reproducibility and correlation with clinical stages of four grading systems. Hum Pathol. 1988;19:595–7. doi: 10.1016/s0046-8177(88)80211-9.

- 38. Ten Kate FJ, Gallee MP, Schmitz PI, Joebsis AC, van der Heul RO, Prins EF, Blom JHM. Problems in grading of prostatic carcinoma. Interobserver reproducibility of five different grading systems. World J Urol. 1986;4:147–52.

- 39. Bain G, Koch M, Hanson J. Feasibility of grading prostatic carcinomas. Arch Pathol Lab Med. 1982;106:265–7.

- 40. di Loreto C, Fitzpatrick B, Underhill S, Kim DH, Dytch HE, Galera-Davidson H, et al. Correlation between visual clues, objective architectural features, and interobserver agreement in prostate cancer. Am J Clin Pathol. 1991;96:70–5. doi: 10.1093/ajcp/96.1.70.

- 41. Gallee MP, Ten Kate FJ, Mulder PG, Blom JH, van der Heul RO. Histological grading of prostatic carcinoma in prostatectomy specimens. Comparison of prognostic accuracy of five grading systems. Br J Urol. 1990;65:368–75. doi: 10.1111/j.1464-410x.1990.tb14758.x.

- 42. Cintra ML, Billis A. Histologic grading of prostatic adenocarcinoma: Intraobserver reproducibility of the Mostofi, Gleason and Böcking grading systems. Int Urol Nephrol. 1991;23:449–54. doi: 10.1007/BF02583988.

- 43. Allsbrook WC, Jr, Mangold KA, Johnson MH, Lane RB, Lane CG, Epstein JI. Interobserver reproducibility of Gleason grading of prostatic carcinoma: General pathologist. Hum Pathol. 2001;32:81–8. doi: 10.1053/hupa.2001.21135.

- 44. Netto GJ, Eisenberger M, Epstein JI; TAX 3501 Trial Investigators. Interobserver variability in histologic evaluation of radical prostatectomy between central and local pathologists: Findings of TAX 3501 multinational clinical trial. Urology. 2011;77:1155–60. doi: 10.1016/j.urology.2010.08.031.

- 45. May M, Brookman-Amissah S, Roigas J, Hartmann A, Störkel S, Kristiansen G, et al. Prognostic accuracy of individual uropathologists in noninvasive urinary bladder carcinoma: A multicentre study comparing the 1973 and 2004 World Health Organisation classifications. Eur Urol. 2010;57:850–8. doi: 10.1016/j.eururo.2009.03.052.