Abstract

The Where’s Waldo problem concerns how individuals can rapidly learn to search a scene to detect, attend, recognize, and look at a valued target object in it. This article develops the ARTSCAN Search neural model to clarify how brain mechanisms across the What and Where cortical streams are coordinated to solve the Where’s Waldo problem. The What stream learns positionally-invariant object representations, whereas the Where stream controls positionally-selective spatial and action representations. The model overcomes deficiencies of these computationally complementary properties through What and Where stream interactions. Where stream processes of spatial attention and predictive eye movement control modulate What stream processes whereby multiple view- and positionally-specific object categories are learned and associatively linked to view- and positionally-invariant object categories through bottom-up and attentive top-down interactions. Gain fields control the coordinate transformations that enable spatial attention and predictive eye movements to carry out this role. What stream cognitive-emotional learning processes enable the focusing of motivated attention upon the invariant object categories of desired objects. What stream cognitive names or motivational drives can prime a view- and positionally-invariant object category of a desired target object. A volitional signal can convert these primes into top-down activations that can, in turn, prime What stream view- and positionally-specific categories. When it also receives bottom-up activation from a target, such a positionally-specific category can cause an attentional shift in the Where stream to the positional representation of the target, and an eye movement can then be elicited to foveate it. These processes describe interactions among brain regions that include visual cortex, parietal cortex, inferotemporal cortex, prefrontal cortex (PFC), amygdala, basal ganglia (BG), and superior colliculus (SC).

Keywords: visual search, Where’s Waldo problem, spatial attention, object attention, category learning, gain field, reinforcement learning, eye movement

1. Introduction

This paper develops a neural model, called the ARTSCAN Search model (Figure 1), to explain how the brain solves the Where’s Waldo problem; in particular, how individuals can rapidly search a scene to detect, attend, recognize and look at a target object in it. The model predicts how the brain overcomes the deficiencies of computationally complementary properties of the brain’s What and Where cortical processing streams. The ventral What stream is associated with object learning, recognition, and prediction, whereas the dorsal Where stream carries out processes such as object localization, spatial attention, and eye movement control (Ungerleider and Mishkin, 1982; Mishkin et al., 1983; Goodale and Milner, 1992). To achieve efficient object recognition, the What stream learns object category representations that are increasingly invariant under view, position, and size changes at higher processing stages. Such invariance enables objects to be learned and recognized without causing a combinatorial explosion. However, by stripping away the positional coordinates of each object exemplar, the What stream loses the ability to command actions to the positions of valued objects. The Where stream computes positional representations of the world and controls actions to acquire objects in it, but does not represent detailed properties of the objects themselves. The ARTSCAN Search model shows how What stream properties of positionally-invariant recognition and Where stream properties of positionally-selective search and action can interact to achieve Where’s Waldo searches.

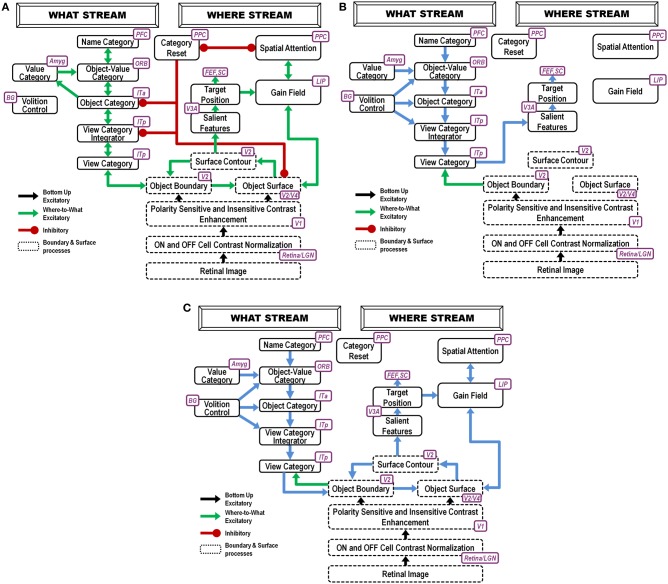

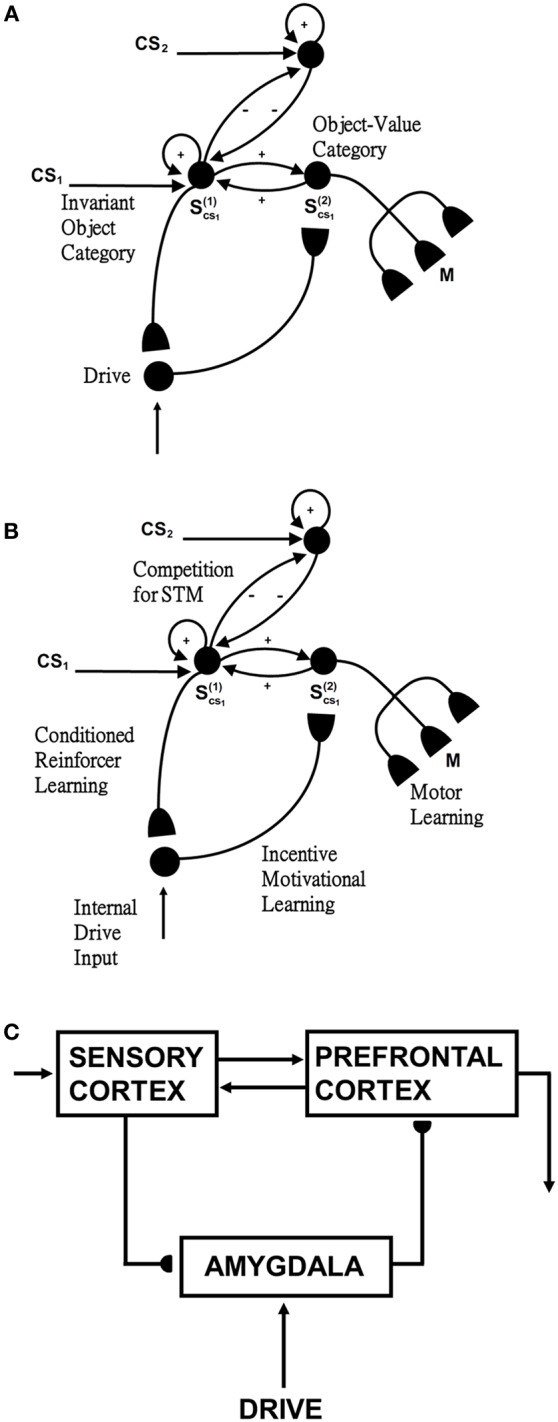

Figure 1.

ARTSCAN Search diagram. The dashed boxes indicate boundary and surface processes. (A) Category learning. The arrows represent the excitatory cortical processes from Where cortical stream to What cortical stream whereby invariant category learning and recognition, and reinforcement learning, occur. The connections ending in circular disks indicate inhibitory connections. (B) Direct pathway of top-down primed search from the What to the Where cortical stream. (C) Indirect pathway of top-down primed search from the What to the Where cortical stream. In both (B) and (C), the green arrows represent bottom-up image-driven processes and the blue arrows represent top-down processes from What cortical stream to Where cortical stream. See Figures 5, 6 and surrounding text for more details about the temporal progression of top-down searches. ITa, anterior part of inferotemporal cortex; ITp, posterior part of inferotemporal cortex; PPC, posterior parietal cortex; LIP, lateral intra-parietal cortex; LGN, lateral geniculate nucleus; ORB, orbitofrontal cortex; Amyg, amygdala; BG, basal ganglia; PFC, prefrontal cortex; SC, superior colliculus; V1 and V2, primary and secondary visual areas; V3 and V4, visual areas 3 and 4.

The model’s Where cortical stream processes of spatial attention and predictive eye movement control modulate What cortical stream processes whereby multiple view- and positionally-specific object categories are learned and associatively linked to view- and positionally-invariant object categories through both bottom-up and attentive top-down interactions. Gain fields control retinotopic and head-center coordinate transformations that enable spatial attention and predictive eye movements to carry out this role. In addition, What stream cognitive-emotional learning processes enable the focusing of motivated attention upon the invariant object categories of desired objects.

To carry out a goal-directed search, the model can use either a cognitive name or motivational drive to prime a view- and positionally-invariant object category representation in its What cortical stream. A major design challenge for the model is to show how priming of such a positionally-invariant category can drive a search that finds Waldo at a particular position. In particular, how does a positionally-invariant representation in the What stream shift spatial attention in the Where stream to a representation of Waldo’s position and activate an eye movement to foveate that position?

This is proposed to happen as follows: A volitional signal can convert the prime of the invariant object category into suprathreshold activation of the category. Once activated, the invariant category can, in turn, prime What stream view- and positionally-selective categories. When combined with bottom-up activation by the desired target of the positionally-selective category that represents the target’s identity and position, this positionally-selective category can achieve suprathreshold activation. It can then cause spatial attention to shift in the Where stream to a representation of the target’s position, after which an eye movement can be elicited to acquire it.

As illustrated in Figure 1, these processes are assumed to occur in the model analogs of the following brain regions: Spatial attention is carried out in the posterior parietal cortex (PPC). The view- and positionally-selective categories are learned in the posterior inferotemporal cortex (ITp). View- and positionally-invariant categories are learned in the anterior inferotemporal cortex (ITa). The cognitive priming by names arises in the prefrontal cortex (PFC), whereas motivational priming arises in the amygdala (AMYG) and activates object-value categories in the orbitofrontal cortex (ORB). The volitional signals arise in the basal ganglia (BG). The selection and control of eye movements includes cortical area V3A, the frontal eye fields (FEF), and the superior colliculus (SC). The gain fields within the lateral interparietal cortex (LIP) are activated by V3A and mediate between PPC and visual cortical areas that include V4. Preprocessing of visual boundary and surface representations occurs in the retina and lateral geniculate nucleus (LGN) and cortical areas V1, V2, and V4. More detailed explanations are provided below. The model has been briefly reported in Chang et al. (2009a,b, 2013).

This theoretical synthesis unifies and extends several previous neural models, notably the ARTSCAN model of view-invariant object category learning (Grossberg, 2007, 2009; Fazl et al., 2009; Foley et al., 2012), its extension to the positionally-invariant ARTSCAN, or pARTSCAN, model of view-, position-, and size-invariant object category learning (Cao et al., 2011), and the CogEM (Cognitive-Emotional-Motor) model of cognitive-emotional learning and motivated attention (Grossberg, 1971, 1972a,b, 1975, 1982, 1984; Grossberg and Levine, 1987; Grossberg and Schmajuk, 1987; Grossberg and Seidman, 2006; Dranias et al., 2008; Grossberg et al., 2008). pARTSCAN’s ability to recognize objects in multiple positions is needed as part of the Where’s Waldo search process. In particular, name or motivational primes can then, supplemented by a volitional signal, activate an object-value category and, from there, an object category that has view- and positionally-invariant properties. Such cognitive-emotional and motivated attention processes are modeled in the CogEM model, which is joined with pARTSCAN to enable motivationally-primed searches in the ARTSCAN Search model.

All of these component models have quantitatively explained and predicted large psychological and neurobiological databases. Some of these explanations are reviewed below. ARTSCAN Search preserves these previously demonstrated explanatory and predictive capabilities, while also making novel predictions.

During a Where’s Waldo search, when the positionally-invariant category is activated in the What stream, it needs to be able to activate, through top-down learned connections, its corresponding view- and positionally-selective categories in the What stream. The pARTSCAN model included only bottom-up learned links from view- and positionally-selective category representations in ITp to view- and positionally-invariant category representations in ITa, and then to naming categories in PFC. The ARTSCAN Search model incorporates, in addition, reciprocal top-down learned links from PFC to ITa, and from the invariant ITa categories to the variant ITp categories (Figures 1B,C).

Such reciprocal links are a part of Adaptive Resonance Theory, or ART, learning dynamics whereby invariant recognition categories and their naming categories are learned. As explained by ART (Grossberg, 1980b, 2012; Carpenter and Grossberg, 1991), these top-down links dynamically stabilize category learning against catastrophic forgetting. With all these top-down learned links in place, activating a name for the desired goal object can activate the corresponding positionally-invariant category representation, which in turn can attentively prime all the positionally-selective categories where the sought-after target object may be. When one of the primed positionally-selective categories is also activated bottom-up by the sought-after object, that category can fire, and can thereby activate the corresponding positional representation in PPC (Figures 1B,C). This What-to-Where stream interaction can draw spatial attention to the position of the desired target, which in turn can activate an eye movement to foveate the target before further engaging it. In addition to these top-down connections, volition control signals from the BG (Figures 1B,C), which were also not part of the pARTSCAN model, ensure that the appropriate top-down connections can fully activate, rather than just subliminally prime, their target cells (Figures 1B,C).

The ARTSCAN Search model hereby incorporates both cognitive-emotional and cognitive-perceptual bi-directional interactions between cortical streams to achieve both Where-to-What invariant object category learning and What-to-Where primed search for a desired object.

Sections 2 and 3 summarize how the ARTSCAN model embodies solutions to three important design problems in order to learn view-invariant object categories: the view-to-binding problem, the coordination of spatial attention and visual search, and the complementary interactions that occur between spatial attention and object attention. Section 3 summarizes how the ARTSCAN model regulates spatial attention using predictive remapping, surface contour signals, and eye movement search. Section 4 summarizes how the pARTSCAN model enables learning of object categories that are view-invariant and positionally-invariant. They are also size-invariant, but that is not a focus of the present study. Section 5 describes how CogEM cognitive-emotional interactions regulate reinforcement learning and motivated attention. Section 6 describes how top-down primed cognitive and motivational searches are incorporated into the ARTSCAN Search model via What-to-Where stream interactions, including the top-down learned cognitive and motivational priming connections, and the volitional signals that are needed to convert subthreshold primes into suprathreshold top-down signals. Section 7 provides a detailed, but non-mathematical, exposition of all the ARTSCAN Search neural mechanisms. This section also lists the equation numbers for the corresponding model equations that are defined in the Appendix, and provides pointers to the relevant model circuit diagrams. This three-way coordination of expository information is aimed at making the model more accessible. Section 8 describes computer simulations of Where’s Waldo capabilities of the final ARTSCAN Search model. Section 9 provides a discussion and comparison with alternative models. Finally, the Appendix summarizes the model’s mathematical equations and parameters.

2. Some key issues

Many neuroanatomical, electrophysiological, and lesion studies have supported the hypothesis that two parallel, but interacting, visual cortical systems exist (Ungerleider and Mishkin, 1982; Mishkin et al., 1983; Goodale and Milner, 1992). Starting from primary visual cortex, the dorsal Where stream passes through the parietal cortex and controls processes of spatial localization and action. The ventral What stream passes through the inferotemporal cortex and carries out processes of object learning, recognition, and prediction. The inferotemporal cortex and its cortical projections learn to recognize what visual objects are in the world, whereas the parietal cortex and its cortical projections learn to determine where objects are and how to locate them, track them through time, and direct action toward them.

2.1. The view-to-object binding problem

Accumulating evidence supports the hypothesis that the brain learns about individual views of an object, coded by “view-tuned units.” As this happens through time, neurons that respond to different views of the same object learn to activate the same neuronal population, creating a “view-invariant unit.” In other words, the brain learns to link multiple view-specific categories of an object to a view-invariant categorical representation of the object (Baloch and Waxman, 1991; Bülthoff and Edelman, 1992; Seibert and Waxman, 1992; Tanaka, 1993; Logothetis et al., 1994; Bradski and Grossberg, 1995; Bülthoff et al., 1995; Carpenter and Ross, 1995; Riesenhuber and Poggio, 2000; Hung et al., 2005).

Many view-based models have focused on changes in retinal patterns that occur when a three-dimensional (3D) object rotates about its object-centered axis with respect to a fixed observer. However, complex objects are often actively explored with saccadic eye movements. When we consider how eye movements help us to learn about an object, a fundamental view-to-object binding problem must be confronted.

How does the brain know when the views that are foveated on successive saccades belong to the same object, and thereby avoid the problem of erroneously learning to classify parts of different objects together? How does the brain do this without an external teacher under the unsupervised learning conditions that are the norm during many object learning experiences in vivo?

2.2. Coordinating spatial and object attention during view-invariant category learning

The ARTSCAN model proposes how the view-to-object binding problem may be solved through the coordinated use of spatial and object attention. Several authors have reported that the distribution of spatial attention can configure itself to fit an object’s form. Form-fitting spatial attention is sometimes called an attentional shroud (Tyler and Kontsevich, 1995). ARTSCAN explains how an object’s preattentively formed surface representation can induce a form-fitting attentional shroud that is predicted by the model to accomplish two things:

First, a shroud enables eye movements to lock spatial attention onto an object of interest while they explore salient features on the object’s surface, thereby enabling different view-specific categories of the same object to be learned and then linked via associative learning to an emerging view-invariant object category. Consistent psychophysical data of Theeuwes et al. (2010) show that, indeed, the eyes prefer to move within an object rather than to an equally distant different object, other things being equal. Other data show that successive eye movements are not random, but rather tend to be attracted to salient features, such as bounding contours, corners, intersections, and boundary high curvature points (Yarbus, 1961; Jonides et al., 1982; Gottlieb et al., 1998; Krieger et al., 2000; Fecteau and Munoz, 2006). Consistent with these data, the ARTSCAN model predicts, as explained in section 3, how the surface contour signals that initiate figure-ground separation (Grossberg, 1994, 2007) may be used to compute target positions at salient features of an object that provide the most information for the view-specific category learning that then gets linked to a view-invariant object category.

Second, a shroud keeps the emerging view-invariant object category active while different views of the object are learned and associated with it. This is proposed to happen through a temporally coordinated cooperation between the brain’s What and Where cortical processing streams: The Where stream maintains an attentional shroud through a surface-shroud resonance that is supported by positive feedback signals between cortical areas V4 and PPC, among other brain regions. When an object’s surface is part of a surface-shroud resonance, spatial attention is focused on it. When the eyes fixate a particular view of the attended object, a view-specific category is learned by the What stream, say in ITp. This category focuses object attention via a learned top-down expectation on the critical features in the visual cortex that will be used to recognize that view and its variations in the future. When the first such view-specific category is learned, it also activates a cell population at a higher cortical level, say ITa, that will become the view-invariant object category.

Suppose that the eyes or the object move sufficiently to expose a new view whose critical features are significantly different from the critical features that are used to recognize the first view. Then the first view category is reset, or inhibited. This happens due to the mismatch of its learned top-down expectation, or prototype of attended critical features, with the newly incoming view information to the visual cortex (Grossberg, 1980a, 2012; Carpenter and Grossberg, 1987, 1991). This top-down prototype focuses object attention on the incoming visual information. Object attention hereby helps to control which view-specific categories are learned by determining when the currently active view-specific category should be reset, and a new view-specific category should be activated. However, the view-invariant object category should not be reset every time a view-specific category is reset, or else it can never become view-invariant by being associated with multiple view-specific categories. This is what the attentional shroud accomplishes: It inhibits a tonically-active reset signal that would otherwise shut off the view-invariant category when each view-based category is reset (Figure 1). As the eyes foveate a sequence of object views through time, they trigger learning of a sequence of view-specific categories, and each of them is associatively linked through learning with the still-active view-invariant category.

When the eyes move off an object, its attentional shroud collapses in the parietal cortex of the Where stream, thereby transiently disinhibiting a parietal reset mechanism that shuts off the view-invariant category in the What stream (Figure 1). When the eyes look at a different object, its shroud can form in the Where stream and a new view-specific category can be learned that can, in turn, activate the cells that will become a new view-invariant category in the What stream.

2.3. Supportive psychophysical and neurobiological data

The ARTSCAN model prediction that a spatial attention shift (shroud collapse) causes a transient reset burst in parietal cortex that, in turn, causes a shift in categorization rules (new object category activation) has been supported by experiments using rapid event-related functional magnetic resonance imaging in humans (Chiu and Yantis, 2009). These coordinated effects also provide a neurophysiological explanation of how attention can be disengaged, moved, and engaged by different object surfaces (Posner, 1980).

When a surface-shroud resonance forms, positive feedback from a shroud to its surface is also predicted to increase the contrast gain of the attended surface, as has been reported in both psychophysical experiments (Carrasco et al., 2000) and neurophysiological recordings from cortical areas V4 (Reynolds et al., 1999, 2000; Reynolds and Desimone, 2003). In addition, the surface-shroud resonance strengthens feedback signals between the attended surface and its generative boundaries, thereby facilitating figure-ground separation of distinct objects in a scene (Hubel and Wiesel, 1959; Grossberg, 1994, 1997; Grossberg and Swaminathan, 2004; Grossberg and Yazdanbakhsh, 2005). These experiments, and others summarized below, provide important psychophysical and neurobiological markers for testing predictions of the model.

3. ARTSCAN model main concepts

This section outlines the main concepts from the FACADE, ARTSCAN, pARTSCAN, and CogEM models that are unified and extended in the ARTSCAN Search model.

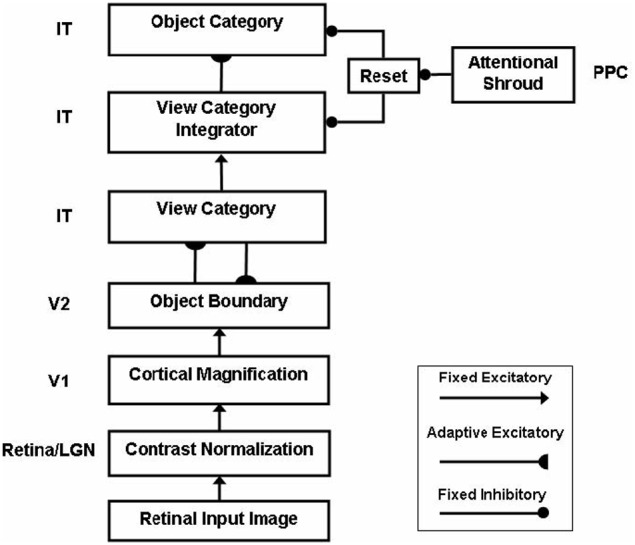

3.1. Image processing and spatial attention

Scenic inputs are processed in a simplified model retina/LGN by a shunting on-center off-surround network that contrast-normalizes the image. In the full FACADE model, and its extension and refinement by the 3D LAMINART model, object surface representations are formed in stages within the V1 blobs, V2 thin stripes, and V4. The current model does not consider 3D figure-ground separation of partially occluded objects, so can restrict its attention to a 2D filling-in process within the model analog of V2 thin stripes (Figure 1A, V2/V4) that is confined by object boundaries that form in the model analog of V2 pale stripes (Figure 1A, V2). The surfaces topographically activate spatial attention to induce a surface-fitting attentional shroud in the model PPC (Figure 1A, PPC) through a gain field (Figure 1A, LIP) that transforms the retinotopic coordinates of the surface into the head-centric coordinates of the shroud. This transformation maintains shroud stability during eye movements that explore different views of the object surface. In particular, the gain field is updated by predictive eye movement signals that are derived from surface contour signals (Figure 1, V2) from filled-in surfaces to their generative boundaries. Surface contour signals are generated by contrast-sensitive on-center off-surround networks that receive topographic inputs from their filled-in surface representations. Due to their contrast-sensitivity, they occur at the bounding contours of surface regions at which brightness or color values change suddenly across space.

3.2. Figure-ground separation and surface contour signals

Surface contour signals from a surface back to its generative boundaries strengthen the perceptual boundaries that will influence object percepts and recognition events, inhibit irrelevant boundaries, and trigger figure-ground separation (Grossberg, 1994, 1997; Kelly and Grossberg, 2000; Grossberg and Yazdanbakhsh, 2005). When the surface contrast is enhanced by top-down spatial attention (Figure 1A, PPC-LIP-V2/V4) as part of a surface-shroud resonance, its surface contour signals, because they are contrast-sensitive, become stronger, and thus its generative boundaries become stronger as well, thereby facilitating figure-ground separation. This feedback interaction from surfaces to boundaries via surface contour signals is predicted to occur from V2 thin stripes to V2 pale stripes, respectively.

3.3. Linking figure-ground separation to eye movement control

Corollary discharges are derived from these surface contour signal (Figure 1A, V3A, Nakamura and Colby, 2000; Caplovitz and Tse, 2007). They are predicted to generate saccadic commands that are restricted to the attended surface (Theeuwes et al., 2010) until the shroud collapses and spatial attention shifts to enshroud another object.

It is not possible to generate eye movements that are restricted to a single object until that object is separated from other objects in a scene by figure-ground separation. Various neurophysiological data support the idea that key steps in figure-ground separation occur in cortical area V2 (e.g., Qiu and von der Heydt, 2005). Thus, these eye movement commands are generated no earlier than cortical area V2. Surface contour signals are predicted to be computed in V2 (Grossberg, 1994). They are plausible candidates from which to derive eye movement target commands at a later processing stage because they are stronger at contour discontinuities and other distinctive contour features that are typical end points of saccadic movements. ARTSCAN proposes how surface contour signals are contrast-enhanced at a subsequent processing stage to choose the position of their highest activity as the target position of the next saccadic eye movement. The ARTSCAN model suggests that this choice takes place in cortical area V3A, which is known to be a region where vision and motor properties are both represented, indeed that “neurons within V3A… process continuously moving contour curvature as a trackable feature… not to solve the ‘ventral problem’ of determining object shape but in order to solve the ‘dorsal problem’ of what is going where” (Caplovitz and Tse, 2007, p. 1179).

3.4. Predictive remapping, gain fields, and shroud stability

These eye movement target positions are chosen before the eyes actually move. In addition to being relayed to regions that command the next eye movements, such as the FEF and SC (see Figure 1), they also maintain the stability of the active shroud in head-centered coordinates within the PPC, so that the shroud does not collapse every time the eyes move. They do this by controlling eye-sensitive gain fields that update the active shroud’s head-centered representation even before the eyes move to the newly commanded position. These gain fields thus carry out predictive remapping of receptive fields during eye movements. These ARTSCAN mechanisms new light on electrophysiological data showing perisaccadic (around the time of the saccade) remapping of receptive fields in parietal areas, including the lateral intraparietal cortex (LIP; Andersen et al., 1990; Duhamel et al., 1992) and the FEF (Goldberg and Bruce, 1990), as well as more modest remapping in V4 (Tolias et al., 2001). In particular, attended targets do not cause new transient activity in these regions after saccades (see Mathôt and Theeuwes, 2010 for a review). ARTSCAN predicts that the anatomical targets of these gain fields include an active shroud (viz., a form-sensitive distribution of spatial attention) in PPC that inhibits the reset of view-invariant object categories in ITa via a reset mechanism that transiently bursts when a shift of spatial attention occurs to a new object. This prediction suggests that manipulations of reset, such as those proposed by Chiu and Yantis (2009), be combined with manipulations of predictive remapping of receptive fields, such as those proposed by Andersen et al. (1990) and Duhamel et al. (1992).

4. pARTSCAN: positionally-invariant object learning and supportive neurophysiological data

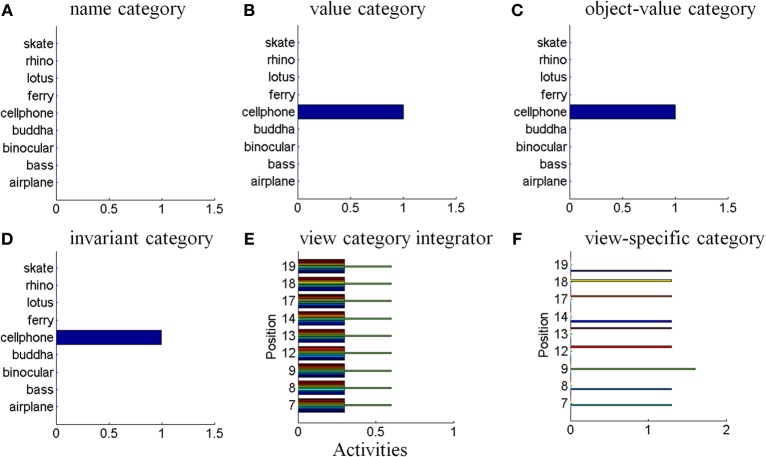

ARTSCAN does not explain how an object that is viewed at more peripheral retinal positions can be associated through learning with the same object category. However, peripheral vision makes important contributions to the execution of search tasks (Erkelens and Hooge, 1996). Electrophysiological data show that cells in the inferotemporal (IT) cortex respond to the same object at different retinal positions (Gross et al., 1972; Desimone and Gross, 1979; Ito et al., 1995; Booth and Rolls, 1998), and the selectivity to objects of an IT neuron can be altered by experiences with objects at such positions (Li and DiCarlo, 2008). The pARTSCAN extension of ARTSCAN (Cao et al., 2011), shown in Figure 2, explains how positionally-invariant object learning can be achieved.

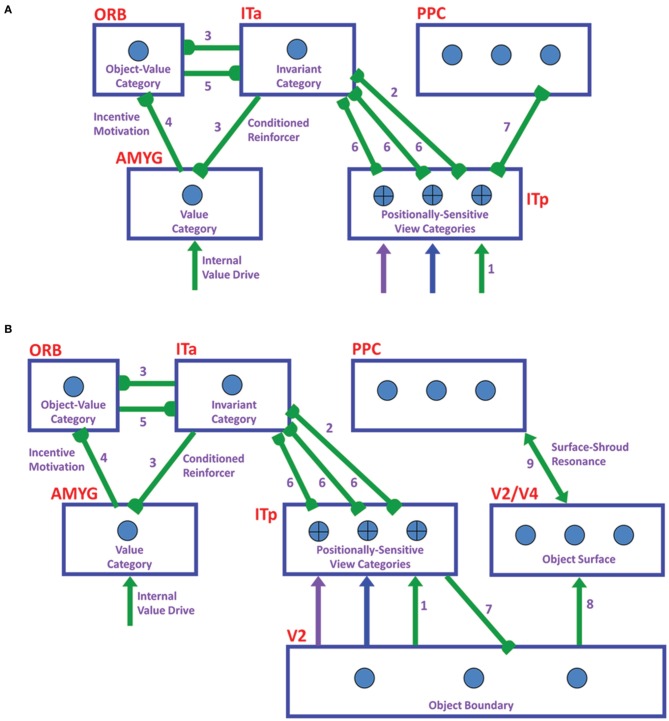

Figure 2.

Microcircuit of the pARTSCAN model (Cao et al., 2011; Figure 2). See text for details.

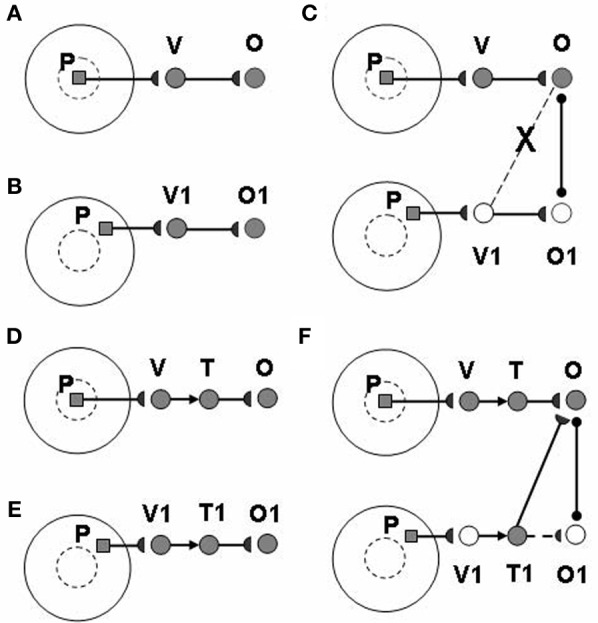

pARTSCAN builds on ARTSCAN by proposing how the following additional processes in the What cortical processing stream enable both view-invariant and positionally-invariant object categories to be learned: IT cells with persistent activity, defined by view category integrator cells; and a combination of normalized object category competition and a view-to-object learning law which together ensure that unambiguous views have a larger effect on object recognition than ambiguous views. Persistently firing neurons in the inferotemporal cortex have been observed in neurophysiological experiments (Fuster and Jervey, 1981; Miyashita and Chang, 1988; Tomita et al., 1999; Brunel, 2003), but not given a functional interpretation in terms of positionally-invariant object category learning. pARTSCAN also simulates neurophysiological data of Li and DiCarlo (2008) from monkeys showing how unsupervised natural experience in a target swapping experiment can gradually alter object representations in IT. The swapping procedure is predicted to prevent the reset of the attentional shroud, which would otherwise keep the representations of multiple objects from being combined by learning.

The view category integrator stage in pARTSCAN model occurs between the view category and object category stages (Figure 2). A view category integrator cell, unlike a view-category cell, is not reset when the eyes explore new views of the same object. It gets reset when the invariant object category stage gets reset due to a shift of spatial attention to a different object.

The view category integrator plays a key role in enabling learning of positionally-invariant object categories. Without the view category integrator, the following problem can occur: Suppose that a view of object P is generated by eye fixation in the fovea and sequentially triggers activations of view-specific category V and view-invariant object category O (Figure 3A). If the same object P appears in the periphery of the retina, as in Figure 3B, the model learns a new view-specific category V1 and in turns activates object category O1. Once a saccadic eye movement brings the object P into the foveal region (Figure 3C), it activates the previously learned view-specific category V and the object category O. Without the view category integrator, view category V1 is shut off with the saccade and it cannot learn to be associated with the object category O. As a result, object P learns to activate two object categories O and O1 corresponding to foveal and peripheral positions, respectively, and the same object at different positions can create different object categories. The view category integrator keeps the object from creating multiple object categorical proliferations. In Figures 3D,E, the view category integrators T and T1 preserve the activities of view categories V and V1 and learn connections to object categories O and O1. In Figure 3F, after the object P is foveated again, T1 is still active due to persistent activity, even though V1 is shut off by a saccade. Therefore, view category integrator T1 can be associated with object category O.

Figure 3.

How the view category integrator helps to learn a positionally-invariant object category. See text for details. [Reprinted from Cao et al. (2011), Figure 4, with permission].

In summary, the pARTSCAN model predicts persistent activity in inferotemporal cortex (IT) that enables the model to explain how both view- and positionally-invariant object categories may be learned in cortical area ITa. The same process enables size-invariant categories to be learned. The target swapping experimental data of Li and DiCarlo (2008), which show that IT neuron selectivity to different objects gets reversed at the swap position with increasing exposure, can also be explained using these mechanisms. Finally, pARTSCAN can identify Waldo targets at non-foveated positions, but does not in itself show how these targets can lead to a shift of attention and foveation.

5. Joining invariant category learning with reinforcement learning and motivated attention

The activation of an invariant recognition category by pARTSCAN mechanisms does not reflect the current emotional value of the object. Augmenting pARTSCAN with a CogEM circuit for reinforcement learning and motivated attention enables activation of an invariant category that is currently valued to be amplified by motivational feedback from the reinforcement learning circuit (Figure 4). Then the additional mechanisms of the ARTSCAN Search What-to-Where stream interactions can locate this motivationally salient object.

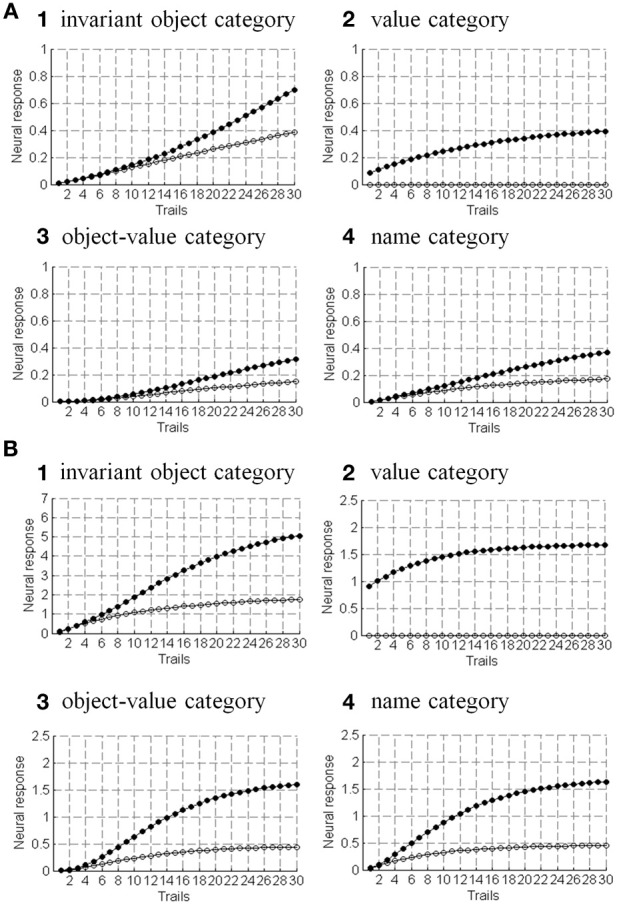

Figure 4.

Reinforcement learning circuit of the CogEM model (Grossberg, 1971, 1975; Grossberg and Seidman, 2006). (A) Processing stages of invariant object category, object-value category, and drive representation (value category) representations. CS, conditioned stimuli; S, sensory representations; and M, motor representations. (B) Conditioned reinforcer learning enables sensory events to activate emotional reactions at drive representations. Incentive motivational learning enables emotions to generate a motivational set that biases the system to process information consistent with that emotion. Motor learning allows sensory and cognitive representations to generate actions. (C) Anatomical interpretations of the processing stages. [Adapted from Grossberg and Seidman (2006), Figures 4, 5, with permission].

Such a CogEM circuit includes interactions between the inferotemporal cortex, orbitofrontal cortex, and amygdala (Figure 4C; Barbas, 1995). Activation of the feedback circuit through inferotemporal-amygdala-orbitofrontal interactions can create a resonance that focuses and maintains motivated attention upon a motivationally salient object category, while also supporting what Damasio has called “core consciousness” of goals and feelings (Grossberg, 1975, 2000; Damasio, 1999).

Such interactions were predicted by the CogEM, model, starting in Grossberg (1971), which simulates how sensory, or object, category representations (e.g., inferotemporal cortex, IT), drive, or value, representations (e.g., amygdala, AMYG), and object-value category representations (e.g., orbitofrontal cortex, ORB) interact via conditioned reinforcement, incentive motivational, and motor learning pathways (Figure 4). Various data support the prediction that drive-sensitive value category cells are found in the amygdala (Aggleton, 1993; LeDoux, 1993). Multimodal amygdala cells that are hunger and satiety selective (Muramoto et al., 1993; Yan and Scott, 1996) and respond in proportion to the value of a food reward have been extensively studied in the primate and rodent (Nishijo et al., 1988; Toyomitsu et al., 2002).

In the CogEM model, in response to visual cues, object-selective sensory representations in the inferotemporal cortex (Figures 4A,C) learn to activate drive representations in the amygdala via learned conditioned reinforcer pathways (Figures 4B,C). Activated drive representations can, in turn, activate the orbitofrontal cortex via learned incentive motivational pathways (Figure 4B). Motivationally salient sensory representations can hereby provide inputs directly to object-value representations (Figure 4A), and indirectly via the two-step learned conditioned reinforcer and incentive motivational pathway through the drive representations (Figures 4A,B). The incentive input determines how vigorously the object-value representation is activated (Rolls, 1999, 2000; Schoenbaum et al., 2003). The most active object-value representations can then select, and focus attention upon, motivationally consistent sensory representations. This selection process is driven by positive feedback from the object-value representations to their sensory representations, combined with competition among the sensory representations (Figure 4A). The motivationally most salient sensory representations can, in turn, attentionally block irrelevant sensory cues.

In summary, the CogEM model simulates how an invariant object category that is learned by pARTSCAN can learn to trigger an inferotemporal-amygdala-orbitofrontal resonance, thereby enabling motivationally enhanced activation of the invariant object category via top-down attentive feedback from the orbitofrontal cortex. Within the additional circuitry of the ARTSCAN Search model, a name category can prime the corresponding orbitofrontal object-value cells to initiate the process whereby a motivationally-enhanced top-down attentional priming signal triggers search for the valued object in the scene.

6. ARTSCAN search: bottom-up and top-down search from the what-to-where streams

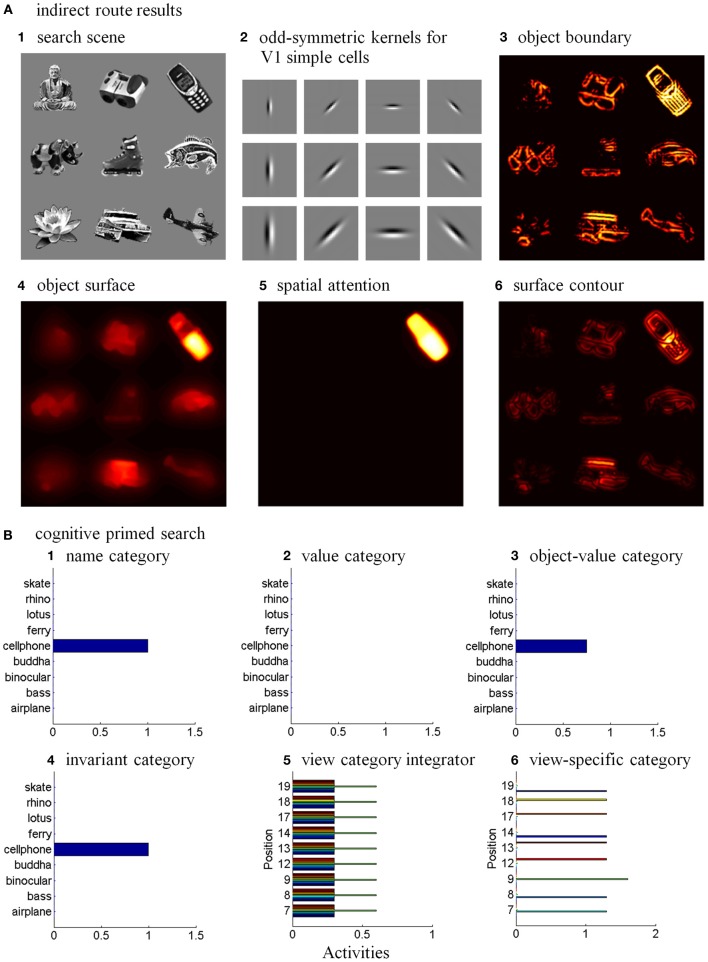

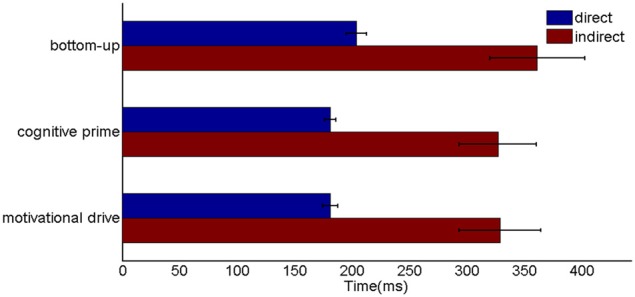

Six different routes can, in principle, drive a Where’s Waldo search (Figure 5): bottom-up direct and indirect routes; top-down cognitive direct and indirect routes; and top-down motivational direct and indirect routes. For completeness, the model was simulated for all six routes, and it was shown that the direct routes can operate more quickly than the indirect routes.

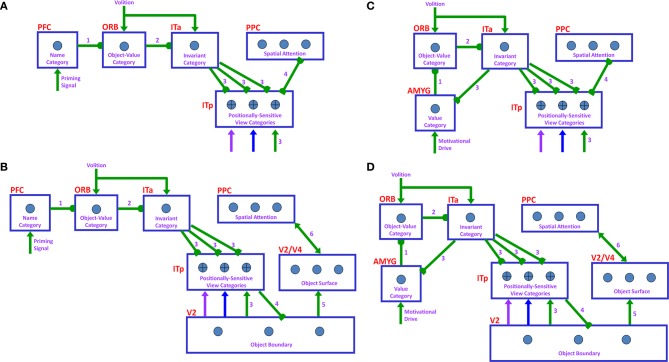

Figure 5.

Bottom-up stimulus-driven What stream recognition to Where stream search and action through (A) a direct What-to-Where pathway and (B) an indirect What-to-Where pathway. Interactions between multiple brain regions, such as ITa, ITp, amygdale, and orbitofrontal cortex (ORB) in the What stream guide Waldo discovery in the posterior cortex (PPC) in the Where stream. The numbers indicate the order of pathway activations. See text for details. [Figure A is adapted with permission from Grossberg (2009), Figure 6].

6.1. Bottom-up direct route

First, bottom-up scenic inputs activate ITp cells that learn view- and positionally-specific categories. These cells also topographically project to PPC, where the target locations of an object are represented (Figure 5A). This is one of the What-to-Where stream interactions in the model.

Second, ITp cells activate view- and positionally-invariant object categories in ITa. These invariant object categories are learned using the Where-to-What stream interactions of the pARTSCAN model whereby an attentional shroud in PPC modulates the activity of an emerging invariant object category in ITa as sequences of view-specific categories of the object are activated, learned, and reset in ITp using reciprocal Adaptive Resonance Theory, or ART, connections between ITp and ITa (Figure 5A). Even if all the objects in the scene are equally salient, they can activate their invariant object categories because of the nature of the normalized quenching competition that occurs among all the categorical processing stages (see section 7.3.7). However, they cannot yet activate an eye movement to foveate one of them.

Third, ITa cells activate AMYG and send inputs to ORB.

Fourth, convergent ITa and AMYG inputs together can activate the corresponding ORB object-value category cells (Grossberg, 1975, 1982; Barbas, 1995, 2000; Schoenbaum et al., 2003) using learned incentive motivational signals from the AMYG. In other words, incentive motivation can amplify activation of a valued object-value category.

Fifth, an activated ORB object-value category can draw motivated attention to a valued object by sending top-down attentional signals back to its ITa source cells. Typically, such top-down attentional signals are modulatory. However, when combined with volitional signals from the BG, they can generate suprathreshold activation of the target ITa cells, thereby enabling the feedback loop between ITa, AMYG, and ORB to close. As a result, a valued ITa invariant object category may be motivationally amplified by an inferotemporal-amygdala-orbitofrontal resonance, which enables it to better compete for object attention with other ITa representations.

Sixth, the amplified ITa cells can then send larger top-down priming signals to all of its ITp representations. The ITp representation whose position corresponds to the valued object is selectively amplified due to the amplification of its bottom-up input from the object by the top-down attentional prime.

Seventh, these selectively amplified ITp cells can send amplified signals to the object position that is represented in the PPC. PPC activation draws spatial attention to that position, which can elicit an eye movement to foveate the desired object.

6.2. Bottom-up indirect route

The sequence from step one to step six in the bottom-up indirect route is the same as for the bottom-up direct route except the ITp cells do not project directly to the PPC (Figure 5B).

Seventh, the selectively amplified ITp cell corresponding to the target position provides top-down excitatory feedback to selectively prime the boundary representation of the Where’s Waldo target object. This boundary representation is hereby enhanced in strength relative to other object boundaries in the scene.

Eighth, the enhanced boundary representation gates the object’s surface filling-in process and thereby increases the contrast of the selected target surface.

Ninth, the enhanced surface representation projects to the PPC to facilitate its competition for spatial attention. As a surface-shroud resonance forms, the target surface can competitively win to form an active shroud which draws spatial attention and an eye movement to the target position.

6.3. Top-down cognitive direct route

Many experiments have shown that top-down mechanisms play an important role in visual processing (e.g., Tomita et al., 1999; Barceló et al., 2000; Miyashita and Hayashi, 2000; Ranganath et al., 2004). The ARTSCENE Search model clarifies how such mechanisms may play an important role during a Where’s Waldo search (Figure 6).

Figure 6.

Top-down name-driven What stream recognition to Where stream search and action through (A) a direct What-to-Where pathway and (B) an indirect What-to-Where pathway. Top-down value-driven What stream recognition to Where stream search and action through (C) a direct What-to-Where pathway, and (D) an indirect What-to-where pathway. See text for details.

In particular, when the name of a desired object is presented to the model, the corresponding name category neuron in PFC can top-down prime the object-value category in ORB (Figure 6A). When BG volitional signals are also activated, this prime can supraliminally activate ORB cells which can, in turn, prime the corresponding view-invariant object category neuron in ITa. Here too a volitional signal can enable the prime to supraliminally activate the primed ITa cells, which can then activate all compatible positionally-selective view categories in ITp. This prime can amplify the ITp category that receives a match from the bottom-up Waldo input. Then the selected category can activate the corresponding position in PPC, which can direct an eye movement and other actions toward Waldo (Figure 6A).

6.4. Top-down cognitive indirect route

This route executes the same top-down pathway as the cognitive direct route from the desired name category neuron to selectively amplify the view-specific category neurons in ITp via the object-value category cells in ORB and view-invariant object category neurons in ITa. The amplified ITp cell activates the same pathways as the bottom-up indirect route from the seventh to ninth steps to create a surface-shroud resonance corresponding to the target object and leading to foveation of this object (Figure 6B).

6.5. Top-down motivational direct route

An object-value category in ORB can be primed by a value category in AMYG via incentive motivational signals (Figure 6C). Then the same process is activated as for the cognitive prime above.

6.6. Top-down motivational indirect route

This route performs similar interactions as the top-down cognitive indirect route except the initial stage begins with priming from the value category in AMYG (Figure 6D).

7. Model description

The ARTSCAN Search model incorporates and unifies the following innovations that go beyond the structure of the ARTSCAN model:

The gain field stage, which mediates the coordinate transformation between a retino-centric object surface representation and a head-centric spatial attention map, is processed by separate and parallel bottom-up and top-down channels, instead of combining them linearly in a single stage, as in ARTSCAN. See section 7.2.1.

As in pARTSCAN (Figure 2), a view category integrator stage occurs after the view category stage in the What stream to enable positionally-invariant as well as view-invariant categories to be learned. View category integrator neurons preserve view-specific category neural activities while the eyes scan the same object, and thereby enable view-specific categories of the same object at different positions to be associated with the same view-invariant object category. See section 7.3.2.

-

Reset is triggered when the total shroud activity reduces below a threshold value due to activity-dependent habituation in the surface-shroud feedback loop. The reset wave is extended to nonspecifically inhibit the spatial attentional map in PPC and the object surface representation in V4, not just ITa, as in ARTSCAN. Such a reset mechanism can more efficiently shut of the entire current surface-shroud resonance to allow a smooth attention shift to another object surface. In addition, as in pARTSCAN, the reset signal inhibits the currently active view category integrator neurons. See section 7.2.4.

Because the reset mechanism in the Where stream can inhibit the spatial attentional map, it is rendered transient by being multiplied, or gated, by a habituative transmitter. Otherwise, it could tonically inhibit the spatial attentional map and prevent the next object from being spatially attended. In contrast, the reset mechanism in the What stream is not gated by a habituative transmitter. This ensures that the view-specific categories of a newly attended object cannot be spuriously associated with the invariant object category of a previously attended object.

Value category and object-value category processing stages from the CogEM model (Figure 4) are added to enable valued categories to be motivationally amplified and attended, thereby facilitating their selection by an inferotemporal-amygdala-orbitofrontal resonance. See sections 7.3.3–7.3.5.

As in CogEM, there are adaptive conditioned reinforcer learning pathways from invariant object categories in ITa to value categories in AMYG, and incentive motivational learning pathways from AMYG to object-value categories in ORB. In addition, and beyond CogEM, ITa can also send adaptive excitatory projections to ORB to enable one-to-many associations to be learned from a given object representation to multiple reinforcers.

Top-down pathways and BG volitional control signals (Figure 6) together enable a top-down search for Waldo to occur from the What stream to the Where stream. The volitionally-enhanced excitability enables modulatory priming stimuli to fire their target cells and send thereby send top-down signals to lower processing stages.

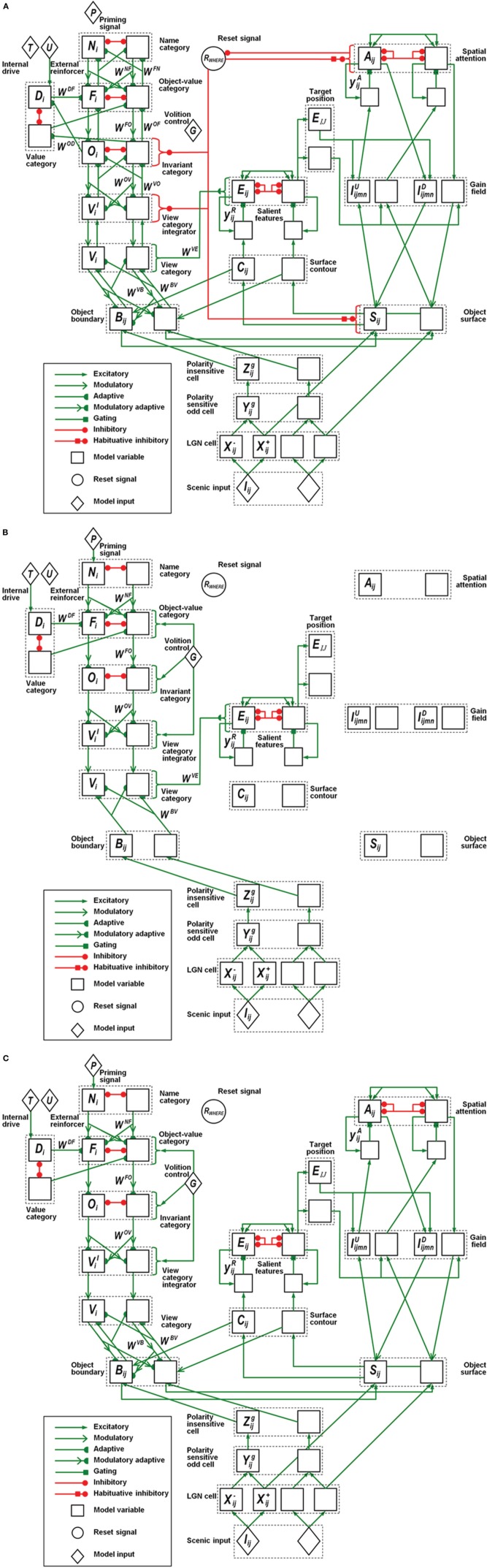

In all, the ARTSCAN Search model includes three component networks: (1) Boundary and Surface Processing, (2) WHAT Stream, and (3) WHERE Stream. Each component consists of several processing stages. Figure 1 shows a block diagram of the main model processing stages. Figure 7 illustrates model circuit interactions more completely.

Figure 7.

Model variables and their computational relations. (A) Category learning. (B) Direct pathway of top-down primed search. (C) Indirect pathway of top-down primed search. The dashed boxes correspond to the layers of the box diagram in Figure 1. Each layer has two neurons indicating the connections to the neighboring layers. Different types of connections correspond to excitatory, adaptive, or inhibitory effects between two layers. The letter inside each neuron refers to the variables or the constant values specified in the Appendix.

7.1. Retina and primary visual cortex processes

7.1.1. Retina and LGN polarity-sensitive cells

Input preprocessing is simplified to include only properties needed to carry out the category-level simulations that are the focus of the article. The model retina and LGN are accordingly lumped together. Together they normalize contrast of the input pattern using polarity-sensitive ON and OFF cells. ON (OFF) cells obey cell membrane, or shunting, equations that receive retinal outputs and generate contrast-normalized activities that discount the illuminant using multiple-scales of on-center off-surround (off-center on-surround) networks, respectively [Equations (A4–A8)]. These cells input to the simple cells in the model’s cortical area V1.

7.1.2. V1 polarity-sensitive oriented simple cells

The polarity-sensitive simple cells [Figure 7; Equations (A9–A14)] in primary visual cortical area V1 (Hubel and Wiesel, 1959, 1962) have elongated excitatory and inhibitory zones that form an oriented receptive field and produce a multiple-scale boundary representation of the image by processing the multiple-scale unoriented output signals from the LGN. Each receptive field consists of polarity-sensitive ON- and OFF-subregions. The ON-subregions receive excitatory ON LGN signals and inhibitory OFF LGN signals, while the OFF-subregions have the converse relation to the LGN channels (Hubel and Wiesel, 1962; Grossberg and Todorović, 1988; Reid and Alonso, 1995; Hirsch et al., 1998; Raizada and Grossberg, 2001).

7.1.3. V1 polarity-insensitive complex cells

Rectified output signals from opposite-polarity like-oriented simple cells at each position input to complex cells, which are therefore polarity-insensitive oriented detectors that are processed at multiple spatial scales [Figure 7; Equations (A15–A17)].

7.1.4. V2 boundaries and surface-to-boundary attentional priming

Because the 2D image database we simulated does not have illusory or missing contours or occlusions, the model simplifies the computation of object boundaries by omitting depth-selective disparity tuning processing in cortical area V1 and boundary completion processing in V2.

Object boundaries [Figure 7; Equations (A18–A20)] are modeled as V2 pale stripe neurons that receive multiple-scale bottom-up inputs from V1 complex cells. These boundaries multiplicatively gate a surface filling-in process, again at multiple scales, within model V2 thin stripe neurons. These boundary-to-surface signals contain the filling-in of surface brightnesses and colors within their borders. The boundaries are also gain-amplified by surface-to-boundary surface contour feedback signals [Figure 7; Equations (A27–A31)]. Top-down attention from a surface-shroud resonance can increase the perceived contrast of an attended surface, which increases the strength of the corresponding surface contour signals, thereby strengthening attend object boundaries as well, while weakening the boundaries of non-attended surfaces. Object boundaries also project to the What stream, where their adaptive pathway embody the learning of view-specific categories in cortical area ITp.

7.1.5. V2 surface filling-in

The filling-in of object surface activities in V2 thin stripe cells takes place within Filling-In Domains (FIDOs) [Figure 7; Equations (22–25)]. Filling-in is activated bottom-up by multiple-scale ON and OFF LGN inputs that activate different FIDOs (Cohen and Grossberg, 1984; Grossberg and Todorović, 1988; Grossberg, 1994).

A weighted sum across the multiple scales of the surface representations [Equation (A26)] generates topographic outputs to the spatial attention region in PPC, where these PPC inputs competitively bid to form a winning attentional shroud. The winning shroud delivers positive feedback to the corresponding surface representation, thereby inducing a surface-shroud resonance that locks spatial attention upon that surface while increasing its contrast.

Successfully filled-in surfaces generate contour-sensitive output signals via surface contours. Surface contours are computed by inputting the filled-in surface activities to a contrast-sensitive on-center off-surround shunting network [Equations (A27–A31)]. The surface contour outputs project back to their generative object boundaries across all scales. As noted in section 7.1.4, when a surface is attended as part of a surface-shroud resonance, its enhanced contrast increases its surface contour outputs which, via surface-to-boundary feedback, strengthens the corresponding boundaries and inhibits the boundaries of unattended surfaces.

The surface-shroud resonance can be inhibited at the FIDOs by a reset signal from the Where processing stream.

7.2. Where stream

A surface-shroud resonance in the Where stream ensures that successive eye fixations are restricted to salient features within the attended surface. These fixations enable the learning of multiple view-specific categories of the object, which can all be associated with the emerging view- and positionally-invariant object category until shroud collapse, and a shift of spatial attention away from the object, cause the invariant object category to be inhibited due to transient disinhibition of the category reset mechanism.

7.2.1. Gain field

Keeping the view-invariant object category active during these sequential saccades within the object requires that the reset mechanism continuously receives a sufficient amount of inhibition from the currently active shroud. In pARTSCAN, the surface representation is computed in retinotopic coordinates that change during a saccade. If all the coordinates of the shroud changed as well, reset could occur whether or not a saccade landed within the same object. Maintaining inhibition of reset is facilitated by computing shrouds in head-centric coordinates. The coordinate transformation from retinotopic to head-centered coordinates uses gain fields (Figure 7), which are known to act on the parietal cortex, notably the lateral intraparietal area (LIP), among other brain regions (Andersen et al., 1985; Colby et al., 1993).

A number of neural models have been proposed for how the outflow commands that control eye movements also activate a parallel corollary-discharge pathway which computes gain fields that transform retinotopic coordinates into head-centered coordinates (Grossberg and Kuperstein, 1986, 1989; Zipser and Andersen, 1988; Gancarz and Grossberg, 1999; Pouget and Snyder, 2000; Xing and Andersen, 2000; Mitchell and Zipser, 2003; Pouget et al., 2003; Cassanello and Ferrera, 2007). Equations (A32–A36) mathematically describe the gain field transformation that is used in this article.

7.2.2. Spatial attention: attentional shroud

The head-centric spatial attention neurons [Figures 1, 7; Equations (A37–A41)] receive bottom-up input from gain field neurons. The spatial attention neurons select a winning shroud through recurrent on-center off-surround interactions whose short-range excitations and surface-shroud positive feedback keep the winning shroud active, while longer-range off-surround feedback inhibits other spatial attentional neurons. The top-down feedback from the selected shroud neurons reaches object surface neurons through gain field neurons. This surface-shroud gain-field-modulated resonant feedback loop links retinotopic surface representations with head-centric spatial attentional shrouds. It is the neural event that corresponds to focusing spatial attention on the object surface.

Decay of an active shroud’s activity below a threshold value triggers a reset signal which, in turn, sends a nonspecific inhibitory signal back to the spatial attention network to ensure that the shroud is totally inhibited. However, the reset mechanism, in the absence of other factors, is tonically active [Equation (A55)]. In order to prevent reset-mediated inhibition from persisting indefinitely due to its tonic inhibition of the spatial attention network, all Where stream reset signals are multiplied, or gated, by an activity-dependent habituative transmitter that causes the gated reset signal to be transiently active [Equations (42, 47)]. Such a transmitter multiplies the reset signal, so when it collapses due to sufficient recent activity of the reset signal, the net reset signal collapses too. After the transient reset signal collapses, spatial attention can shift to another object and the cycle of attention shifting and invariant category learning can continue.

7.2.3. Eye movements to salient surface features and inhbition-of-return

The salient feature neurons [Figure 7; Equations (A43–A46)] receive their largest inputs from the surface contour neurons whose activities are amplified by the active shroud. The surface contour neurons hereby play two roles: (1) they strengthen the boundaries of an attended surface while also inhibiting unrelated boundaries via surface-to-boundary feedback, and (2) they activate a parallel pathway, hypothesized to involve cortical area V3A, that converts the salient features into target positions of saccadic eye movements aimed at the attended surface. This conversion is carried out by a contrast-enhancing recurrent on-center off-surround shunting network that chooses the most active position on the surface contour. This position marks the most salient feature at that time, as well as an “attention pointer” (cf. Cavanagh et al., 2010) to the target position of the next saccade. In this way, the eyes move to foveate the most salient features on the attended object, like corners and intersections.

The eye movement map is gated by habituative transmitters [Equation (A47)]. Once the eyes foveate a saccadic target position, these transmitters deplete in an activity-dependent way, thereby enabling another eye movement neuron to win the competition for the next target on the attended surface. This habituative mechanism instantiates the concept of “inhibition-of-return (IOR)” by preventing perseveration of eye movements to the same object position.

7.2.4. Object category reset by transient parietal burst

The reset-activated pathways to both the object surfaces and the spatial attention network are also gated by activity-dependent habituative transmitters [Equation (A52)]. These habituative gates facilitate the collapse of an active surface-shroud resonance after a period of sustained spatial attention directed toward the corresponding object surface. While an attentional shroud is active, the currently active neurons within that shroud inhibit the category reset neurons. The category reset stage [Equations (A50, A51)] in the Where stream is modeled by a tonically active neuronal population that nonspecifically inhibits the region where invariant object categories are learned within cortical area ITa of the What stream. The attended invariant object category can remain active because the category reset stage is inhibited by the currently active shroud. When the currently active shroud collapses, the category reset neurons are disinhibited, thereby enabling reset signals to inhibit the currently active invariant object category, as well as the currently active shroud. As a result of this transient reset burst, a shift of spatial attention can enable a correlated shift in categorization rules (Yantis et al., 2002; Serences and Yantis, 2006; Chiu and Yantis, 2009).

In the ARTSCAN Search model, unlike the ARTSCAN model, the reset signals are delivered to the view category integrators, invariant object categories, object surfaces, and spatial attention neurons. Reset may be initiated after only part of a shroud collapses, using a ratio reset rule that is more sensitive to the global structure of the shroud than was used in the ARTSCAN model. Due to the inhibition by the reset signal of the surface-shroud resonance itself, the more the attentional shroud collapses, the more the reset activity is disinhibited. This disinhibitory feedback loop enables fast and complete collapse of the currently active surface-shroud resonance, and a shift of attention to another object surface.

7.3. What stream

The What cortical stream in the ARTSCAN Search model includes several different kinds and sites of learning (Figure 7). First, there is view- and positionally-specific category learning in cortical area ITp. Second, there is view- and positionally-invariant category learning in cortical area ITa. Third, there is object-value learning from ITa to the orbitofrontal cortex (ORB). Fourth, there is conditioned reinforcer learning from ITa to the amygdala (AMYG). Fifth, there is incentive motivational learning from AMYG to ORB.

There are two types of reset events during category learning: First, there are the Where-to-What stream resets of the view- and positionally-invariant categories in ITa, discussed above, that are triggered by a surface-shroud collapse between V4 and PPC. Second, What stream resets of the view- and positionally-selective categories in ITp are mediated by sufficiently big mismatches of bottom-up visual input patterns with the top-down expectations that are read out to visual cortex from the currently active view- and positionally-selective categories (Carpenter and Grossberg, 1991; Grossberg, 2012).

The What stream also includes other top-down expectations that are used to perform a Where’s Waldo search (Figures 1, 6). These expectations carry priming signals from name categories in PFC to object-value categories in ORB, then to view- and positionally-invariant object categories in ITa, and finally to view- and positionally-specific categories in ITp. All of these top-down signals are modulatory: Without additional inputs to enhance them, they cannot fire their target cells. BG volitional signals enable the object-value and invariant object categories to fire when such top-down priming signals are also active. The subset of primed view-specific categories that receive bottom-up sensory inputs can also fire, and thereby activate the corresponding positions in PPC via a What-to-Where stream interaction, which leads to competitive selection of the most active position, and then a saccadic eye movement to that position.

7.3.1. View-specific categories

The view-specific category neurons, which are proposed to be computed in cortical area ITp, receive inputs from an object’s boundaries, which are proposed to be computed in the pale stripes of cortical area V2 [Figure 7; Equations (A55–A60)]. Each view-specific category learns to encode a range of boundary shapes, sizes, and orientations may be experienced when foveating different gaze positions of the same object view. View-specific categories are learned using an Adaptive Resonance Theory, or ART, classifier, notably Fuzzy ART (Carpenter et al., 1991, 1992), which is capable of rapidly learning and stably remembering recognition categories of variable generality in response to arbitrary sequences of analog or binary input patterns. Fuzzy ART includes learning within both a bottom-up adaptive filter that is tuned to cause category activation with increasing selectivity and vigor, and a top-down expectation that is matched against bottom-up input patterns to focus attention upon the set of critical features in the bottom-up input pattern that were previously learned by the top-down expectation. A big enough mismatch leads to reset of the currently active category via an orienting system. This reset triggers search for a new, or previously learned and better-matching, category with which to represent the current input. See Grossberg (2012) for a heuristic review of ART as a cognitive and neural theory.

As noted in section 6, view-specific categories can be activated during a Where’s Waldo search by either a bottom-up or a top-down route. The bottom-up route involves focusing motivated attention on the corresponding invariant object category via an ITa-AMYG-ORB resonance [Figure 5; Equations (A55–A71)]. The top-down routes involve top-down priming by a name category [Figures 6A,B; Equations (A72–A74)] via a PFC-ORB-ITa-ITp route or by a value category via an AMYG-ORB-ITa-ITp route. These top-down signals can selectively amplify the selected ITa representation which, in turn, sends larger top-down priming signals to its ITp representations. These ITp neurons correspond to different positions and views of the object. The view that is seen at a given position generates a bottom-up input that matches the corresponding top-down prime and can then better compete with other active ITp representations. The chosen ITp neuron can either activate a direct What-to-Where pathway from ITp to PPC to rapidly induce an eye movement [Figures 6A,C; Equation (A48)], or a longer path along an ITp-V2-V4-LIP-PPC route (Figures 6B,D) to direct the eye movement to desired target.

7.3.2. View category integrators

Each view-specific category activates its own population of view category integrator neurons [Figures 1, 2, 3; Equation (A61)]. These integrators stay active as the eyes move to explore different views of the same attended object, even after their view-specific category is reset. View category integrator neurons are reset when the shroud corresponding to a given object collapses, attention shifts to another object, and the eyes begin to explore the new object.

As explained in section 4, these neurons were introduced in the pARTSCAN model to show how object category neurons could learn to be positionally-invariant as well as view-invariant (Cao et al., 2011).

7.3.3. Invariant object categories

Object category neurons [Figures 1, 5, 6; Equations (A63–A65)] learn to become both view- and positionally-invariant due to the learning that occurs within the adaptive input signals that they receive from multiple view category integrator neurons; see section 4. This learning goes on as long as the view category integrator neurons are active. When attention shifts to another object, both the view category integrator neurons and the invariant object category neurons get reset, both to prevent them from being associated with another object, and to allow selective learning of many objects to occur.

Unlike the resets of Where stream spatial attention, these What stream resets are not gated by a habituative transmitter [Equations (A61, A63)]; rather, they are shut off by inhibition from the next shroud that forms. If What stream resets were transient, then the previously active invariant category could be reactivated during the time between the collapse of the previous shroud and the formation of the next shroud. As a reset, the previous invariant category could be erroneously associated with view-specific categories of the next object.

7.3.4. Value categories

Invariant object category representations can be amplified by an ITa-AMYG-ORB resonance (Figures 4C, 5), which can focus motivated attention on objects that are valued at a particular time. Such a resonance can develop as a result of two types of reinforcement learning (Grossberg, 1971, 1972a,b, 1982), as summarized in section 5: First, pairing the object with a reinforcer can convert the object representation into a conditioned reinforcer by strengthening the connection from the active invariant object category in ITa to an active value category, or drive representation, in AMYG [Figures 1, 4, 5, 6; Equation (A78)]. Many neurobiological data support the hypothesis that AMYG is a value category (e.g., Aggleton, 1993; LeDoux, 1993; Muramoto et al., 1993; Yan and Scott, 1996). Conditioned reinforcer learning is many-to-one learning because multiple categories can be associated with the same drive representation, much as multiple types of foods can be associated with the motivation to eat.

7.3.5. Object-value categories

The invariant object category in ITa can also send adaptive excitatory projections to object-value representations [Figures 1, 4, 5, 6; Equations (A70, A71)] in ORB (e.g., Barbas, 1995; Cavada et al., 2000; Rolls, 2000; Schoenbaum et al., 2003; Kringelbach, 2005). The adaptive nature of these connections is a new feature of the model, which enables associations to be learned from a given object representation to multiple reinforcers. A second many-to-one kind of learning in the model is incentive motivational learning. This type of learning can increase the incentive motivational signals from a value category in the AMYG to an object-value category in the ORB by strengthening the corresponding AMYG-to-ORB pathway. Motivationally salient invariant object category representations in ITa can hereby provide inputs directly to object-value representations in ORB, and indirectly via two-step learned conditioned reinforcer and incentive motivational pathways. Such favored object-value representations can generate positive feedback to the corresponding invariant object category representation via an ORB-to-ITa pathway [Equation (A77)]. This feedback amplifies the favored invariant object category in ITa and allows it to better compete for object attention, as occurs during attentional blocking experiments (Grossberg and Levine, 1987).

7.3.6. Name categories

Name category neurons in PFC [Figures 1, 6; Equations (A72–A74)] learn to be associated with the corresponding object-value category neurons in ORB and can thus send excitatory priming feedback to the corresponding object-value category neurons to enhance their representations during a top-down Where’s Waldo search [Equation (A81)].

7.3.7. Normalized quenching competitive dynamics during searches

The many-to-one nature of the learned connections between invariant object categories, value categories, and object-value categories could potentially cause problems during searches. Suppose, for example, that there were a winner-take-all competition at each of these processing stages. Choosing a winning view-specific category is needed, for example, to activate a single object’s boundary representation and thereby direct eye movements toward salient features of the boundary’s surface contours during indirect searches.

In apparent conflict with this useful property is how a winner-take-all choice can undermine motivational searches. During the initial bottom-up processing of a scene containing multiple objects of equal perceptual salience, there may be no clear winner of a winner-take-all competition. To break this tie, suppose that a winning view-specific category was arbitrarily chosen, say based on a random attentional spotlight. Suppose, moreover, that this view-specific category does not correspond to an invariant object category that was associated through reinforcement learning with the active value category during a motivational search. Then incentive motivational signals from the value category could prime all the object-value categories with which it was earlier associated. However, by itself, such a prime could not activate any of these categories because bottom-up input from an invariant object category corresponding to one of these object-value categories would also have to occur. However, if the winning view-specific category does not activate any of these object-value categories through its invariant object category, then the search could not continue.

This problem is overcome by incorporating mathematically proven properties of recurrent competitive dynamics among cells that obey the membrane equation, or shunting, dynamics of biological neurons (e.g., Grossberg, 1973, 1980b, 2013b). In particular, there exists a quenching threshold in such networks so that choices are not made in response to input activities that are too close to one another, but can be made in response to an input that is sufficiently bigger than its competitors. Moreover, such networks tend to normalize their total activities, whether or not a choice is made, using the automatic gain control property that follows from shunting dynamics. Normalization allows “weighing the evidence” among several equally salient alternatives. These properties is incorporated algorithmically in the competitive networks that determine the outputs of the view-specific categories [Equation (A60)], invariant object categories [Equation (A64)], value categories [Equation (A69)], object-value categories [Equation (A71)], and name categories [Equation (A74)]. This competition is henceforth called normalized quenching competition.

Given this refined competition property, in response to a bottom-up input from several equally salient inputs, the normalized network activity is divided equally among them. They can all activate their view-specific, invariant object, and object-value categories. Suppose that a value category now primes several object-value categories, but only one of them has a bottom-up input. Because it now receives a bottom-up input as well, this object-value category is selectively amplified and can win the competition among the object-value categories. The chosen object-value category can, in turn, enable the corresponding invariant object category to win its competition. The winning invariant object category can, in turn, prime all of its view-specific categories. Only one of these view-specific categories receives a bottom-up input, and this one can win its competition and drive either a direct or indirect eye movement to the position of the corresponding object.

The top-down cognitive and motivational searches also work because they enable a single object-value category to win its competition and thereby trigger the same top-down cascade of events that was just summarized.

8. Simulation results

The simulations of the ARTSCAN Search model demonstrate multiple sites of coordinated category, reinforcement, and cognitive learning, and use of the learned connections to carry out both bottom-up and top-down Where’s Waldo searches. ARTSCAN Search simulations process 24 objects taken from natural images of the Caltech 101 data base, with each object selected from different categories as Where’s Waldo exemplars. Each object is customized into 100 × 100 pixels (Figure 8A) against a homogeneous gray background with a luminance value of 0.5. The objects are in a gray scale with luminance values between 0 and 1. Input scenes are presented and simulated in Cartesian coordinates, for simplicity. A simulated scene is represented by 500 × 500 pixels and is divided into 25 regions of 100 × 100 pixels, with each region denoted as one position capable of representing one object.

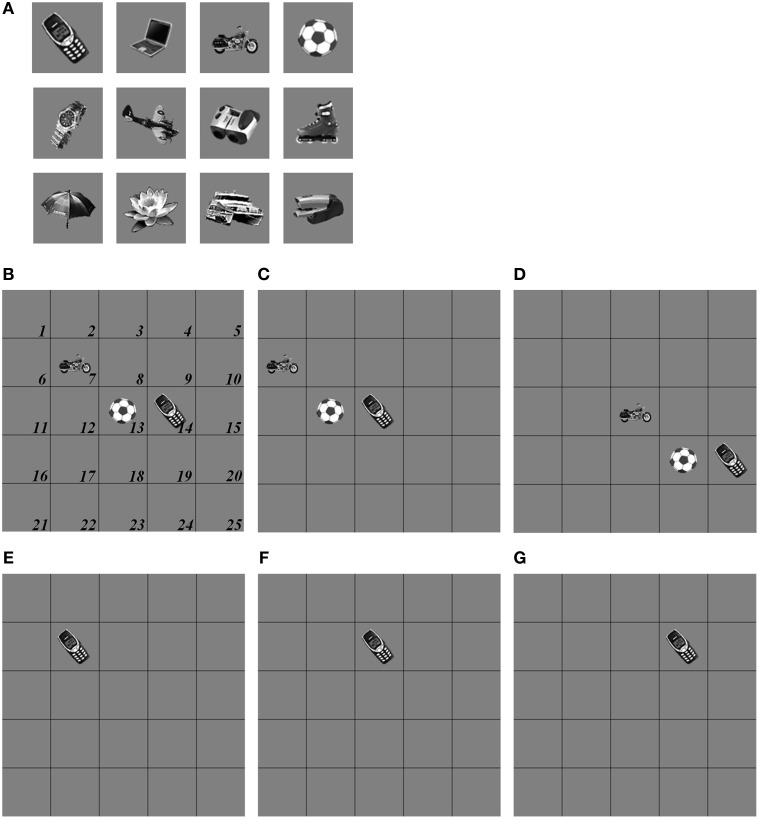

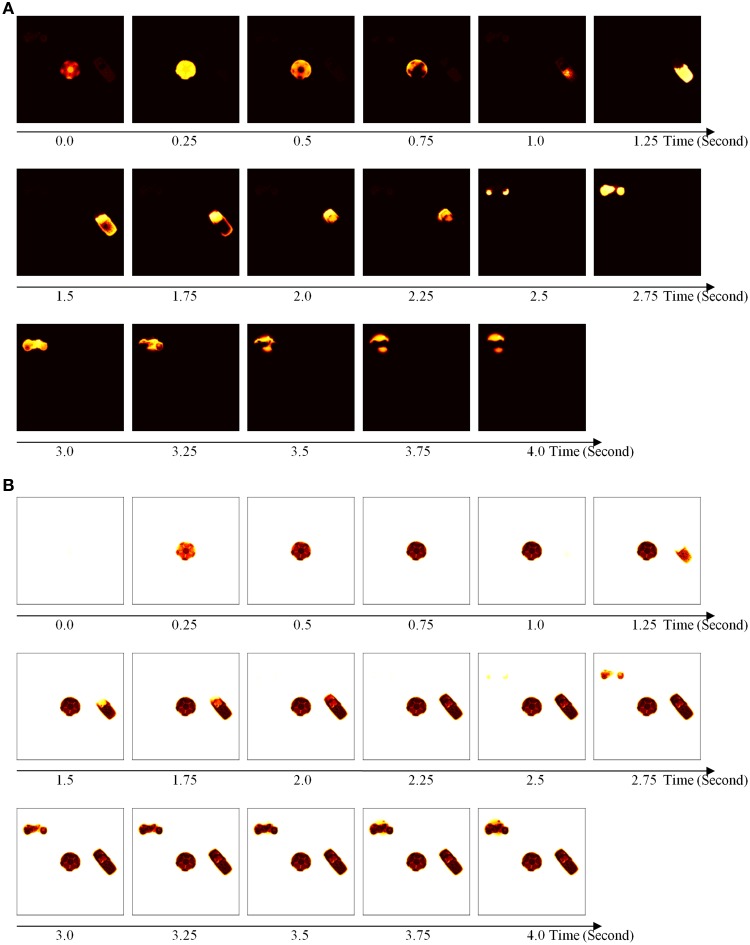

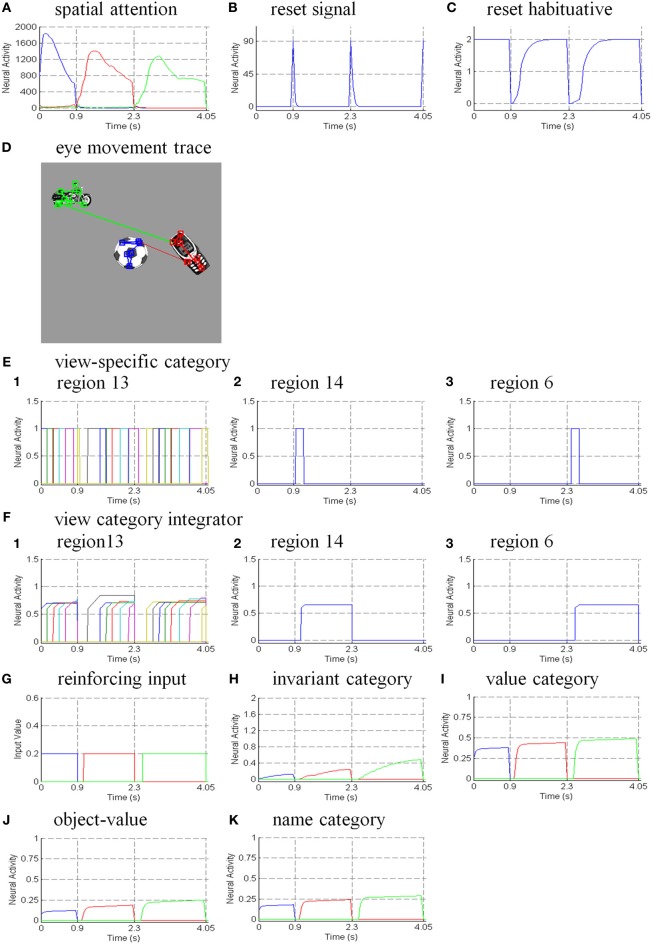

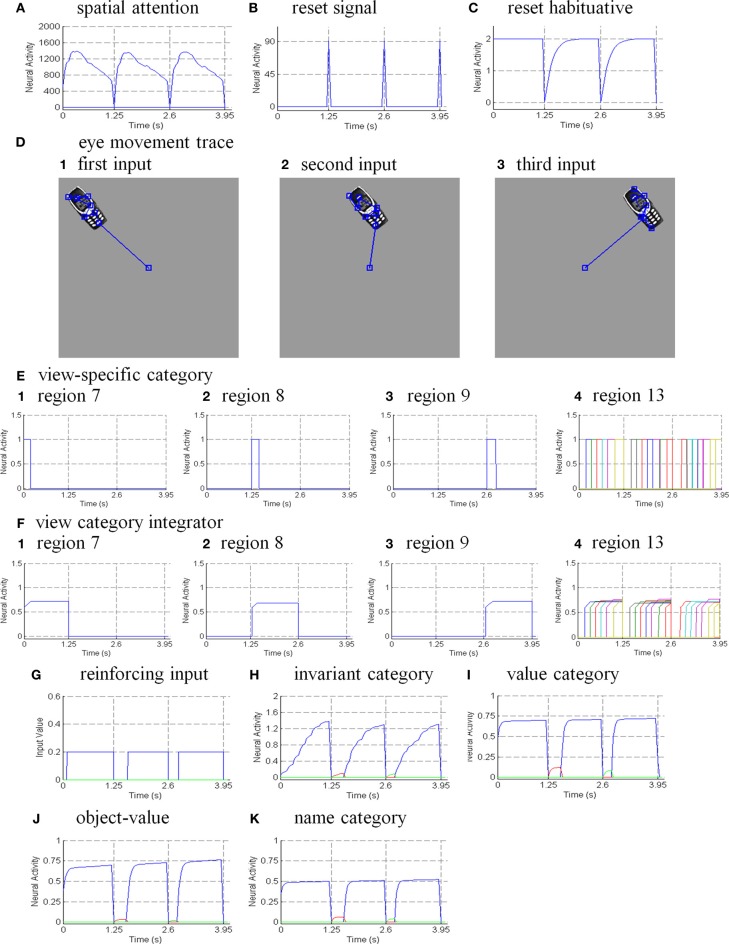

Figure 8.

Set of object stimuli for view- and positionally-invariant category learning. (A) Each object reflects the relative size within 100 × 100 pixels from Caltech 101 dataset. (B) A simulated scene for simulations of view-invariant object category learning in section 8.1. A scenic input image is partitioned into 25 regions (solid lines) and objects are located in the central regions of the input scene (regions 7, 8, 9, 12, 13, 14, 17, 18, and 19). Region 5 is the foveal region and others are the peripheral regions. (C) The bottom-up input representations after cellphone becomes the attended object and is foveated. (D) The bottom-up input representation when motorcycle becomes foveated after the soccer ball and cellphone are learned. (E,F) A sequence of simulated scenes for simulations of positionally- and view-invariant object category learning in section 8.2. Each scenic input only contains one object located in one of the center regions.

The simulations are separated into three processes. The first process replicates view-invariant category learning of the ARTSCAN model. The purpose of the simulation is to show that the ARTSCAN Search model maintains the properties of the ARTSCAN model while adding the view category integrator stage and reinforcement and cognitive learning. This simulation allows us to observe the dynamics of how spatial attentional shrouds form and then collapse to trigger category reset, of how spatial attention shifts from one object to another, and of how the model learns view-invariant object categories as the eyes autonomously explore a scene. While each shroud is active, the eyes move to approximately 7–8 hotspots on the attended surface. The duration of each fixation is approximately 0.3 s until the eye movement map computes the next saccadic eye movement command.

Initially, three out of 24 objects are randomly chosen and scattered into the central nine positions of the input scene, for reasons that are stated below. Putting the objects in the central region of the scene leaves enough space for the objects to remain in the scene after each eye movement. For example, in Figure 8B, the soccer ball object is the attended object in the center of the scene, whereas the motorcycle and cellphone objects are located at the 7 and 14th positions, respectively. Once spatial attention shifts from the soccer ball object to the cellphone object, the position of the soccer ball is shifted from the 13th to the 12th position, and the motorcycle shifts from the 7 to 6th position (Figure 8C). Figure 8D illustrates the shift when the motorcycle is foveated, and the soccer ball and cell phone shift to other positions in the scene.