Abstract

Positron Emission Tomography (PET), a non-invasive functional imaging method at the molecular level, images the distribution of biologically targeted radiotracers with high sensitivity. PET imaging provides detailed quantitative information about many diseases and is often used to evaluate inflammation, infection, and cancer by detecting emitted photons from a radiotracer localized to abnormal cells. In order to differentiate abnormal tissue from surrounding areas in PET images, image segmentation methods play a vital role; therefore, accurate image segmentation is often necessary for proper disease detection, diagnosis, treatment planning, and follow-ups. In this review paper, we present state-of-the-art PET image segmentation methods, as well as the recent advances in image segmentation techniques. In order to make this manuscript self-contained, we also briefly explain the fundamentals of PET imaging, the challenges of diagnostic PET image analysis, and the effects of these challenges on the segmentation results.

Keywords: Image Segmentation, PET, SUV, Thresholding, PET-CT, MRI-PET, Review

1. Introduction

Structural imaging techniques such as computed tomography (CT) and magnetic resonance imaging (MRI) are widely used in clinical practice to examine anatomical abnormalities caused by disease. The three-dimensional (3D) images produced by these techniques usually give detailed structural information about a patient's anatomy that can be used for diagnostic and therapeutic purposes [1]. However, structural imaging is not well suited for pathology detection applications where cellular activity is more significant than anatomical features [2]. The need for functional characterization led researchers to develop PET scanners, which provide molecular information on the biology of many diseases. Combining PET with CT or MRI, so that both functional and structural information are available, leads to a higher sensitivity and specificity than is achievable using either modality alone. Although the sensitivity of PET scans is usually much higher than that of conventional structural images, anatomical information from another modality (CT or MRI) is still needed to properly interpret and localize the radiotracer uptake, and PET images are somewhat limited by their low resolution. Hence, functional images frequently need to be assessed together with structural images in order to localize functional abnormalities and distinguish them from the normal uptake of PET radiotracers, which tend to accumulate in the brain, heart, liver, kidneys, etc. [3, 4, 5]. PET-CT imaging and, more recently, MRI-PET combine complementary diagnostic information from different imaging modalities in a single imaging device, removing the need for registration [6]. Using these scanning techniques, disease can be labeled and identified such that an earlier diagnosis with more accurate staging may potentially be delivered to patients [7].

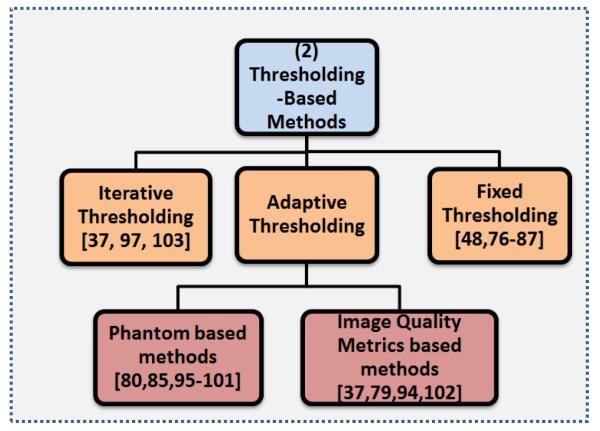

Some statistics on the use of PET imaging in the U.S. are summarized in Figure 1 (a). Over 1,700,000 clinical PET and PET-CT studies were reported nationwide for 2011 alone. PET-CT is used more often than stand-alone PET, and its use continues to increase. PET imaging is mostly used for (i) diagnosis, (ii) staging, (iii) treatment planning, and (iv) therapy follow-up, in different fields of medicine such as (1) oncology, (2) cardiology, and (3) neurology (Figure 1 (b)). PET is widely used in staging and follow-up therapy in oncology applications (Figure 1 (c)). For instance, radiation therapy, a common cancer treatment in oncology, aims to target the boundary and volume of abnormal tissue and irradiates the targeted area with a high dosage of radiation, intending to eliminate all cancerous cells. In practice, the delineated boundary should be kept as tight as possible to minimize damage to healthy tissue, but it must still include the entire extent of the diseased tissue [2]. PET is also used in cardiac applications such as quantifying blood flow to the heart muscle and quantifying the effects of a myocardial infarction [8]. More recently, PET has been used for imaging inflammation and infection in the lungs [9] with 18F-FDG because this glucose analog localizes to activated and proliferating inflammatory cells. The new norm in clinical practice is acquiring PET-CT images instead of a single PET scan to take advantage of the functional and structural information jointly.

Figure 1.

A summary of PET technology used in the U.S. is shown in (a) [10]. (b) gives the breakdown of clinical PET and PET-CT studies in 2011 by branch of medicine. (c) shows the 2010 use of PET technology in the U.S. for oncology applications, in which PET was used mostly for staging and follow-up therapy.

In pre-clinical and clinical applications, physicians and researchers use PET imaging to characterize the function of tissues. Owing to this, clinical trials now rely more heavily on imaging to provide objective measures before, during, and after treatment. The functional morphology (the area, volume, geometry, texture, etc.) and activity measures of the tissues, such as the standardized uptake value (SUV), are of particular interest in these processes. Accurately determining quantitative measures enables physicians to assess changes in lesion biology during and after treatment; hence, it allows physicians to better evaluate tumor perfusion, permeability, blood volume, and response to therapy. Among these measures, functional volume (i.e., the volume of high uptake regions) has proven useful for the definition of target volumes [11]. Therefore, an accurate image segmentation method, other than the conventional region of interest (ROI) analysis, is often needed for diagnostic or prognostic assessment. This functional characterization has a higher potential for proper assessment due to recent advances in PET imaging. Indeed, this higher potential has renewed interest in developing much more accurate (even globally optimal) segmentation methods to turn hybrid imaging systems into diagnostic tools [11]. Specifically, since the adoption of multi-modal imaging systems (i.e., PET-CT, MRI-PET), optimal approaches for precise segmentation and quantification of metabolic activities have become crucial.

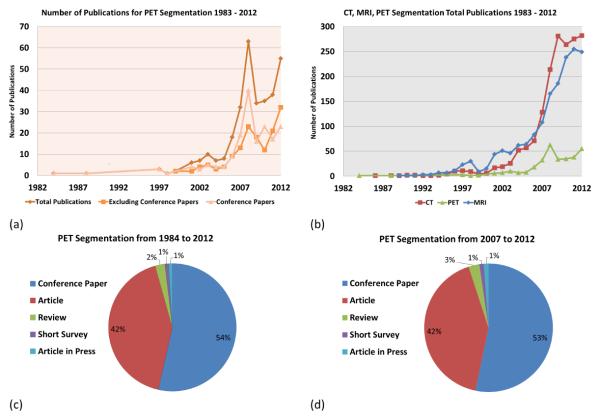

For the literature search, we used Pubmed™, IEEEXplore™, Google Scholar™, and ScienceDirect™ and listed all the relevant articles from 1983 to March 2013. Our search also included the methods specifically developed for MRI and CT for comparison (Figure 2). The number of publications for PET image segmentation is further separated by publication type (conference, journal, and total) in Figure 2 (a). Reflecting the improvements in multi-modality imaging technology (PET-CT and MRI-PET), there was a dramatic increase in the number of publications in 2008 and 2011. For comparison, Figure 2 (b) shows how the number of publications on PET image segmentation methods compares to the number of CT and MRI based segmentation methods in the literature. Notably, the number of PET image segmentation publications has always been lower than that for both CT and MRI and was significantly lower before 2007. Figure 2 (c) gives the breakdown of the number of publications on segmentation techniques for PET images from 1984 to 2013. We also noted that only 2% of the articles were review papers and almost half of the total articles were journal papers (42% journal publications and 54% conference publications). Figure 2 (d) shows a snapshot of publication types for the last 6 years (2007 to 2013), during which the dramatic increase in PET image segmentation publications was observed. It appears that the growing interest in PET and hybrid imaging will further accelerate the development of methods for segmentation and quantification of lesions.

Figure 2.

Analysis of publications pertaining to PET image segmentation methods and their applications (from 1983 to 2012). Journal and conference publications are shown in (a). A comparison of modality dependent image segmentation methods published for MRI, CT, and PET is shown in (b). Further categorization of the published papers is given in (c) and (d), from 1984 to 2012 and from 2007 to 2012, respectively.

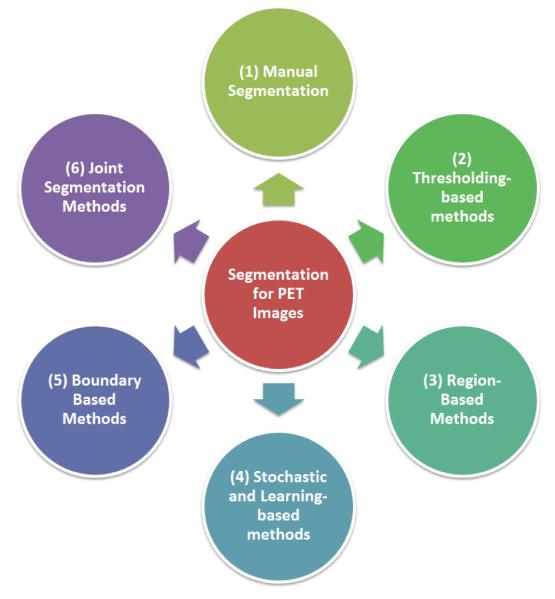

In this work, we systematically review state-of-the-art image segmentation methods for PET scans of body images, as well as the recent advances in PET image segmentation techniques. To make the review complete, Section 2 gives the necessary background on the physical principles of PET imaging, along with the sources of the segmentation challenges inherent to PET images. The state-of-the-art segmentation methods for PET images, their comparison, and recently developed advanced PET image segmentation methods are extensively analyzed in later sections. Because of the large number of segmentation methods, we have divided them into intuitive groups for easier comprehension and better contrasting of the methods: manual segmentation and ground truth reconstruction (Section 3), thresholding-based (Section 4), stochastic and learning-based (Section 5), region-based (Section 6), boundary-based (Section 7), and multi-modality methods (Section 8). These categories are shown in Figure 3. Finally, a discussion is given in Section 9, followed by conclusions in Section 10.

Figure 3.

An overview of the categories of PET segmentation methods: Manual segmentation, Thresholding-based, Region-based, Stochastic and Learning-based, Boundary Based, and Joint segmentation methods.

2. Background on PET Imaging and Segmentation

Radiotracers

The basic concept of PET is to label a radio-pharmaceutical compound with a biologically active ligand to form a radiotracer and inject it intravenously into a patient. The PET scanner then measures the distribution and concentration of the radiotracer accumulation throughout the patient’s body as a function of time [12]. To do this, PET utilizes positron emitting radioisotopes as molecular probes so that the biochemical process can be measured through imaging in vivo [13]. Many radiotracers have been developed, and among them FDG (18F combined with deoxyglucose) is considered the radiotracer of choice in most studies [14]. Metabolically active lesions show upregulation of glucose metabolism. For example, the rapid cell division in cancer cases and the immune response in infectious diseases require high levels of glucose. Therefore, labeling glucose with 18F renders these lesions detectable using PET imaging because the FDG accumulates in these areas [14]. Meanwhile, a large number of new compounds are also becoming prospects for PET imaging; some have advantages over FDG, such as tracers that do not accumulate in the heart or kidneys. However, FDG still remains the most commonly used radiotracer in the clinical routine for body imaging [15]. At the time of this writing, no study reported in the literature has characterized the differences in segmentation accuracy caused by using different radiotracers in PET imaging. Therefore, in this manuscript, the evaluation of image segmentation methods is assumed to be independent of the choice of radiotracer.

Quantitative Evaluation of Radiotracer Uptake in PET Images

A quantitative assessment of changes in FDG uptake in PET images is required for accurate diagnosis and assessment of treatment response, whereas a qualitative assessment of PET images is usually sufficient for the detection of lesions [16]. Qualitative assessments using PET images are often conducted visually by expert radiologists and nuclear medicine physicians [17], while various semi-quantitative and quantitative methods such as SUV, tumor-to-background ratio (TBR), nonlinear regression techniques, total lesion evaluation (TLE), and the Patlak-derived methods are currently undergoing extensive exploration [3]. Among these metrics, SUV is the most widely used quantification index for PET imaging because it gives a physiologically relevant measurement of cellular metabolism [13, 16]. SUV standardizes the intensities of the PET images, and it is simply defined as the tissue concentration of a tracer measured by the PET image intensity at any point of time, followed by a normalization with the injected dose, the patient’s size, and a decay factor which depends on the particular radiotracer type used during the imaging [18, 13]. The explicit formulation of the SUV computation is

$$\mathrm{SUV}_{\chi} = \frac{C(t)}{D / \chi} \qquad (1)$$

where χ is either body weight (χ = BW (in g or kg)) or lean body mass (χ = LBM), depending on the type of SUV being computed [19]. D is the injected dose (Bq), and C(t) is the total radioactivity concentration in a given tissue at time t, which can be computed directly from an ROI. Exploration of SUV and its alternative measures is outside the scope of this review; however, readers are encouraged to refer to a comprehensive review on this subject [20].
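As an illustration of the SUV definition above, the following minimal sketch computes a body-weight SUV from the three quantities named in the text. The function name `suv`, the example values, and the unit choices (Bq/mL for C(t), grams for χ, with tissue density taken as 1 g/mL so that the result is dimensionless) are assumptions made for illustration; the injected dose is assumed to be already decay-corrected to the scan time.

```python
def suv(tissue_conc_bq_per_ml, injected_dose_bq, chi_g):
    """Return C(t) / (D / chi).

    tissue_conc_bq_per_ml: C(t), activity concentration measured in the ROI.
    injected_dose_bq:      D, injected dose (assumed decay-corrected to scan time).
    chi_g:                 body weight or lean body mass in grams (assumed unit).
    """
    if injected_dose_bq <= 0 or chi_g <= 0:
        raise ValueError("injected dose and chi must be positive")
    return tissue_conc_bq_per_ml / (injected_dose_bq / chi_g)


# Hypothetical patient: 70 kg body weight, 350 MBq injected dose.
background = suv(5_000.0, 350e6, 70_000.0)   # uniform tracer distribution
lesion = suv(40_000.0, 350e6, 70_000.0)      # focal uptake
print(background, lesion)
```

With these numbers, a tissue whose concentration equals the injected dose spread evenly over the body gives an SUV of 1, which is why values well above 1 are read as elevated uptake.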

What affects SUV measurements?

There are physiological, physical, and procedural factors affecting the SUV computation. Table 1 briefly explains factors affecting SUV calculation, their effects, and methods proposed to balance these effects. Physiological factors include body composition (fat, weight), blood glucose concentration, and kidney function. Among these, SUV is quite sensitive to body weight, especially for obese patients. High blood glucose concentration can also be problematic with diabetic patients when measuring SUV. Therefore, many techniques were developed to reduce uncertainties in the SUV measurements.

Table 1.

Methods for Correcting Physical and Physiological Factors Influencing SUV Computation

| Physiological Factors | Effects | Corrective Measure | References |
|---|---|---|---|
| Body Composition | SUV in obese patients overestimates FDG | Use of lean body mass (SUVLBM) or body surface area (SUVBSA) | [19, 21, 22] |
| Blood Glucose Concentration | Reduced FDG uptake in tissues with increasing glucose levels | Control blood glucose levels before administering FDG | [23, 24, 25] |
| Uptake Period | Increase of SUV over time in malignant tissues | Standardize the time of image acquisition | [26, 27, 28] |
| **Physical Factors** | **Effects** | **Corrective Measure** | **References** |
| Respiratory Motion | Reduction of SUVmax up to 7–159% | Respiratory gating or 4D reconstruction | [29, 30, 31] |
| Attenuation correction and reconstruction methods | Underestimation of SUV with highly smoothed reconstruction by roughly 20% | Standardize reconstruction algorithm | [32, 33] |
| PVE | Underestimates SUV in lesions with diameters less than 2-3 times spatial resolution of scanner | Adopt an optimal PVE factor | [34, 35] |

Physical factors consist of the partial volume effect (PVE), reconstruction and smoothing of the images, and respiratory motion (or organ/lesion motion) artifacts. PVE and respiratory motion artifacts lead to an underestimation of SUV for smaller lesions. Various methods have been developed for correcting PVEs; some of these are reported in Table 1. The literature has shown that repeated SUV measurements of the same patient may differ by up to 30% simply from measuring and analyzing variables that influence SUV computation [18, 36].

It is worth noting that the computation of SUVmax can be made more robust to statistical outliers by defining SUVmax differently [37, 38]; however, redefining SUV through post-processing steps such as a parabolic fit around the maximum intensity may only correct the SUV marginally. Instead of fitting the data to a predefined curve, averaging the most intense (“hottest”) voxels, which have the highest concentration of photons, together with SUVmax reduces the influence of a noisy outlier [36, 39, 40, 41, 42]. In [39], the repeatability of SUVmax, SUVmean, and several ways of averaging the hottest voxels was investigated; variability was reduced by a factor of 2.7 when the 10 hottest voxels were averaged to form an alternative to SUVmax.
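The idea of averaging the hottest voxels can be sketched in a few lines. This is only an illustration of the general principle, not the exact procedure of [39]; the function name `mean_of_hottest_voxels` and the ROI values are hypothetical.

```python
def mean_of_hottest_voxels(suv_values, n=10):
    """Average the n largest SUV values in an ROI (n=10 follows the text)."""
    if n <= 0 or not suv_values:
        raise ValueError("need a non-empty ROI and a positive n")
    hottest = sorted(suv_values, reverse=True)[:n]
    return sum(hottest) / len(hottest)


# Hypothetical ROI: background near 1, a lesion near 4, and one noisy spike.
roi = [1.0] * 20 + [4.0] * 9 + [14.0]
print("SUVmax:", max(roi))                              # driven by the spike
print("mean of 10 hottest:", mean_of_hottest_voxels(roi))
```

In this toy ROI the single noisy voxel sets SUVmax to 14, whereas the average of the ten hottest voxels stays much closer to the lesion level of 4.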

In addition to these factors, an ROI is needed for SUV computation, and the methodologies used for defining the ROI around a lesion can significantly affect the SUV based metrics. Therefore, in order to extract morphological and functional information from PET images, the ROI needs to be identified “precisely”. In other words, a precise segmentation is needed because even small errors in segmentation can distort the calculation of the SUV measurements by altering the region’s margins. Furthermore, inter- and intra-operator variability can be considerably high in defining these ROIs, affecting quantitation and possibly diagnostic decisions.

Challenges in Segmentation of PET Images

Without loss of generality, image segmentation can be thought of as two related tasks: recognition and delineation [43]. Recognition is the process of determining “where” the object is and distinguishing it from other object-like entities in the image, and delineation is the act of defining the spatial extent of the object region in the image [44]. In the recognition process, high uptake regions are observed and identified by clinicians. The rough areas in which the objects are located are treated as ROIs; this process can also be automated [45]. In delineation, the second step of segmentation, the aim is precise separation of uptake regions from the background and non-significant uptakes [43, 46, 47]. Some of the intrinsic and extrinsic factors that significantly affect PET image segmentation are as follows:

(i) resolution related issues,

(ii) large variability in the shape, texture, and location of pathologies,

(iii) noise.

These factors increase the difficulty of segmentation in multiple ways. First, low resolution and heavy smoothing decrease the contrast between objects in the image, and boundaries between nearby objects often become unclear. Several additional factors can be counted under resolution related issues. For instance, patients are sometimes unable to hold their breath during an entire scan, and motion artifacts may occur. These breathing artifacts blur the images severely [48]. Second, the large variability in the shape or texture of the pathologies makes the segmentation problem even more challenging because the available PET segmentation methods are difficult to generalize to those cases. Last, noise in PET images is inherently high, which creates further difficulties for segmentation methods that tune parameters based on the value of SUVmax as well as for methods that use the intensity of an initial “seed” location. As demonstrated in [49], noise affects the segmentation of PET images and is regarded as the most significant contributing factor to the lack of reproducible SUV measurements. However, some standards and guidelines have been enacted to ensure more reproducible analyses between scans and centers [50, 51].

Despite the difficulties described above and the unique challenges pertaining to PET images, there have been considerable improvements in PET image segmentation methods. These improvements are primarily driven by the need for accurate and robust quantification tools that are capable of analyzing multi-modal images in real time. The explosive growth in the use of PET-CT and, more recently, MRI-PET in clinics reinforces this need.

3. Manual Segmentation: Ground Truth Construction and Segmentation Evaluation

Ground Truth Construction

An overview of the categories that PET image segmentation methods are classified into is given in Figure 3. Before introducing the various PET image segmentation methods as summarized in Figure 3, it is useful and necessary to know the standard ways of evaluating the accuracy of segmentation for proper comparison. In order to evaluate an image segmentation algorithm, the true boundary of the object of interest should be identified. Unfortunately, there is no ground truth available if histopathologic samples are not available. This is the main challenge for all medical image delineation algorithms. Instead, surrogate truths (or reference standards) are used for measuring the quality of a segmentation algorithm. Using phantom images is one way to create a surrogate truth for measuring the performance of an algorithm. Phantoms have the benefit of knowing the exact dimensions of the object in the image. Additionally, a digital phantom, i.e. a synthetic image, can be constructed where the true boundary is known and imaging characteristics of a specific PET scanner can then be added [52]. However, the human anatomy is far too complex to be accurately represented via phantoms; hence, the use of phantoms is limited in terms of identifying the extent and true performance of the segmentation algorithms.

Another way, and the most common one, is to use manually segmented structures and compare them with algorithm-generated segmentations in terms of overlap or boundary differences [53]. This strategy is currently the state-of-the-art approach for the evaluation and development of medical image segmentation methods. It is important to incorporate as many manual segmentations as possible into the evaluation framework in order to reduce the sampling error caused by the inherently high variation and inter-observer differences; it is then often necessary to statistically combine all these segmentations into a single ground truth for evaluation. The widely used Simultaneous Truth and Performance Level Estimation (STAPLE) method deals with this problem [54]. STAPLE estimates the ground truth segmentation by weighting each expert observer's segmentation according to an estimated performance level while also incorporating a prior model for the spatial distribution of the objects being segmented [54].
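To make the idea of combining several manual segmentations concrete, the sketch below uses simple majority voting. This is deliberately not STAPLE: it weights all observers equally and uses no spatial prior, whereas STAPLE estimates per-observer performance levels. Masks are represented as sets of voxel indices, and the function name `majority_vote` and the example data are hypothetical.

```python
from collections import Counter


def majority_vote(masks):
    """Keep a voxel if more than half of the observers labeled it as object."""
    if not masks:
        raise ValueError("at least one segmentation is required")
    # Count, for every voxel, how many observers included it.
    votes = Counter(voxel for mask in masks for voxel in set(mask))
    needed = len(masks) / 2
    return {voxel for voxel, count in votes.items() if count > needed}


# Three hypothetical observers delineating the same lesion on a 1D voxel index.
observer_a = {1, 2, 3, 4}
observer_b = {2, 3, 4, 5}
observer_c = {3, 4, 5, 6}
consensus = majority_vote([observer_a, observer_b, observer_c])
print(sorted(consensus))
```

Voxels marked by only one observer are dropped from the consensus, which is the behavior that a performance-weighted method such as STAPLE refines.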

Segmentation Evaluation

Once a surrogate truth has been created, there are four main categories of approaches for evaluating a segmentation against it: quantifying volumetric differences, using estimators derived from the confusion matrix, shape based similarity measures, and regression based statistical methods. The volumetric difference is usually determined by simply computing the absolute percent difference in total volume between two segmentations. Because it is such an intuitive and simple metric, the percent volume difference is commonly used in the clinical literature, but this metric alone does not convey enough information to determine the similarity between two segmentations. For instance, a segmentation method can produce exactly the same volume as the ground truth and still be unsatisfactory (e.g., the segmentation leaks into non-object territory while missing an equal volume of the object). Therefore, additional quantitative metrics must be used alongside it for proper evaluation.
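The limitation of the volume-only metric is easy to reproduce. In the sketch below, segmentations are sets of voxel coordinates, and the percent difference is taken relative to the reference volume; that normalization, the function name, and the toy data are assumptions made for illustration.

```python
def percent_volume_difference(segmentation, reference, voxel_volume=1.0):
    """Absolute percent difference in total volume, relative to the reference."""
    v_seg = len(segmentation) * voxel_volume
    v_ref = len(reference) * voxel_volume
    if v_ref == 0:
        raise ValueError("reference volume must be non-zero")
    return 100.0 * abs(v_seg - v_ref) / v_ref


# Hypothetical reference lesion: a column of 10 voxels.
reference = {(0, 0, z) for z in range(10)}
# A segmentation with the same volume placed entirely in the wrong location.
misplaced = {(5, 5, z) for z in range(10)}

print(percent_volume_difference(misplaced, reference))  # volumes are identical
print(len(misplaced & reference))                       # number of shared voxels
```

The volume difference is zero even though the two regions share no voxels, which is why overlap and boundary measures are needed in addition.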

Several estimators derived from the confusion matrix are commonly used for segmentation evaluation. First, the Dice similarity coefficient (DSC) is one of the most widely used quantitative metrics for evaluating segmentation accuracy; this index combines false positives and false negatives into a single value for easy comparison [55, 56]. DSC measures the spatial overlap (in percentage) between a segmented lesion and the surrogate truth, where a higher DSC value indicates a better segmentation. Given that the segmented volume is denoted by V1 and the surrogate truth by V2, the DSC is computed as follows:

$$\mathrm{DSC}(V_1, V_2) = \frac{2\,|V_1 \cap V_2|}{|V_1| + |V_2|} \qquad (2)$$

where the overlap of two volumes (V1 ⋂ V2) indicates the True Positive Volume Fraction (TPVF) (also called sensitivity). The amount of false positive volume segmentation is measured in the False Positive Volume Fraction (FPVF) such that 100-FPVF is the specificity [47, 57].
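A minimal sketch of these overlap measures is given below, with volumes represented as sets of voxel indices. The DSC follows the standard definition; for TPVF and FPVF, the normalizations used here (true positives over the surrogate truth, false positives over all voxels outside the surrogate truth) are one common convention and are stated as an assumption, as are the function names and the `n_voxels_total` parameter.

```python
def dice(v1, v2):
    """Dice similarity coefficient between two voxel sets (0 to 1)."""
    if not v1 and not v2:
        raise ValueError("both volumes are empty")
    return 2.0 * len(v1 & v2) / (len(v1) + len(v2))


def tpvf(segmented, truth):
    """True positive volume fraction (sensitivity), in percent."""
    return 100.0 * len(segmented & truth) / len(truth)


def fpvf(segmented, truth, n_voxels_total):
    """False positive volume fraction in percent; 100 - FPVF is specificity."""
    return 100.0 * len(segmented - truth) / (n_voxels_total - len(truth))


# Hypothetical example in an image of 100 voxels.
segmented = set(range(0, 8))   # voxels 0..7
truth = set(range(2, 10))      # voxels 2..9
print(dice(segmented, truth))
print(tpvf(segmented, truth))
print(100.0 - fpvf(segmented, truth, n_voxels_total=100))
```

Multiplying the DSC by 100 gives the percentage form mentioned in the text.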

For evaluating the segmentation of complex shaped lesions, boundary-based measures should be used in addition to region based metrics to quantify the shape dissimilarity between the delineated lesion and the ground truth [45, 57]. Geometric metrics such as the Hausdorff distance (HD) measure how far two boundaries are from each other [58]. Thus, an accurate segmentation result would achieve a high DSC value (high regional overlap) and a low HD value (high shape similarity). The DSC and HD are commonly reported together in the literature for a more thorough evaluation.
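The Hausdorff distance can be sketched with a brute-force computation over boundary points. The version below is the symmetric (maximum of the two directed distances) form with Euclidean distance, which is an assumption about the variant used; it requires Python 3.8 or later for `math.dist`, and the boundary coordinates are hypothetical.

```python
import math


def directed_hausdorff(points_a, points_b):
    """Largest distance from a point in A to its nearest point in B."""
    return max(min(math.dist(p, q) for q in points_b) for p in points_a)


def hausdorff(points_a, points_b):
    """Symmetric Hausdorff distance between two boundary point sets."""
    if not points_a or not points_b:
        raise ValueError("both boundaries must contain at least one point")
    return max(directed_hausdorff(points_a, points_b),
               directed_hausdorff(points_b, points_a))


# Hypothetical boundaries: a unit square and the same square shifted by 3 in x.
boundary_truth = [(0, 0), (1, 0), (1, 1), (0, 1)]
boundary_seg = [(3, 0), (4, 0), (4, 1), (3, 1)]
print(hausdorff(boundary_truth, boundary_seg))
```

The brute-force search costs one distance evaluation per pair of points, which is adequate for small lesion boundaries but would need a spatial index or distance transform for large ones.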

Regression based statistical methods (i.e., Spearman and/or Pearson correlation coefficients) and the simple mean volume difference or relative volume ratio for the evaluation of segmentation methods are much more common than DSC based evaluations in clinical literature. However, readers should be aware that without having the TPVF (Sensitivity) and 100-FPVF (Specificity) pair or the DSC value, comparing statistics on the absolute volume difference does not provide complete information on segmentation accuracy. In addition to sensitivity and specificity, receiver operating characteristic (ROC) curves may be used to evaluate the performance of a delineation method by combining sensitivity and specificity of the PET segmentation algorithms for a given uncertainty level [59, 60].

In summary, a precise evaluation for a segmentation algorithm should be based on sensitivity and specificity measures (or DSC) and not solely on the absolute volume based statistical evaluations. Unless otherwise specified, we assume that all research papers listed in this review completed sensitivity and specificity comparisons for the proposed segmentation methods, even though some of those studies reported only volume based evaluations. It is also worth noting that DSC or sensitivity/specificity measures are region-based evaluation criteria. For a brief review on segmentation evaluation metrics, readers are encouraged to refer to [47, 55].

Difficulties in Manual Segmentation

Manually drawing a boundary around an object on the image is perhaps the most intuitive and easily implemented way of obtaining ROIs for a given image which makes it the most common method of obtaining surrogate truths, as described previously. However, it suffers from many drawbacks. Manual segmentation is highly subjective and intra- and inter-operator agreement rates are often presented in the literature to indicate the reliability of the obtained surrogate truths and the level of difficulty of the segmentation problem [61, 62, 63, 64, 65, 66].

The major drawbacks of manual segmentation are that it is time consuming, labor intensive, and operator-dependent. The high intra- and inter-operator variability of the resulting delineations makes the delineation process less precise and unlikely to be reproducible. In a recent study [72], which involved 18 physicians from 4 different departments, agreement, defined as a volume overlap of ≥ 70%, was found among only 21.8% of radiation oncologists and 30.4% of haematologic oncologists. A partial explanation for this high intra-observer variability in manual segmentation may be the size of the lesion, because smaller lesions (i.e., < 4 cm³) suffer much more from the partial volume effect [73]. This causes the boundaries of objects to be blurred and unclear, making manual segmentation problematic. Table 2 exemplifies this high variation over a few studies reported in the literature. For seven studies, either the inter- and intra-observer variance or the reliability coefficient is reported. The reliability coefficient is very similar to observer agreement rates, and it quantifies the consistency among multiple measurements on a scale from 0 to 1 [74]. Higher reliability means lower inter- and intra-observer variability. However, it should be noted that reliability does not imply validity. The reliability coefficient can be defined as the proportion of the total variance of interest that represents the true information being sought. More information about the reliability coefficient can be found in [75]. Even among these recent, highly cited studies, there is no consensus on how variable manual segmentation is or on the experience level of the experts conducting the manual delineation.

Table 2.

Manual segmentation variability by expert radiologists (the gold standard): intra- and inter-observer percent variability is the average percent variation in the segmentation volume. The reliability coefficient quantifies the consistency among multiple measurements on a scale from 0 to 1, where 1 indicates the most reliable and 0 indicates unreliable.

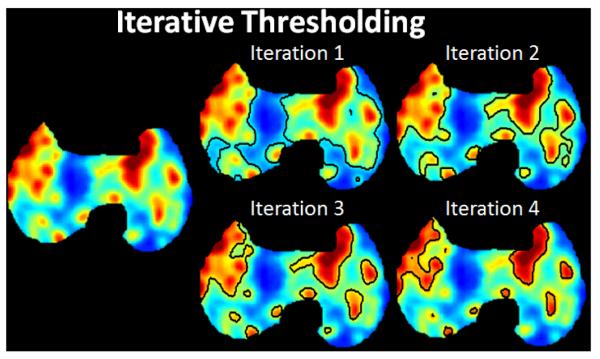

4. Thresholding-based Methods

Thresholding is a simple, intuitive, and popular image segmentation technique that converts a gray-level image into a binary image by defining all voxels greater than some value as foreground and all other voxels as background [76]. Thresholding-based PET image segmentation methods utilize the probability of intensities, usually by using the histogram of the image. An intuitive view of this process is that the objects of interest in the PET image, usually the highest uptake regions, are much smaller than the background areas. A smaller area equates to a smaller probability of appearing in the image. Additionally, since the intensity of PET images has a physical meaning, the intensities are somewhat unique to the different tissue types, and grouping specific ranges of intensities for different objects is usually enough for a good segmentation. How to group these intensities together is the challenge, and thresholding is one approach to solving this grouping problem.

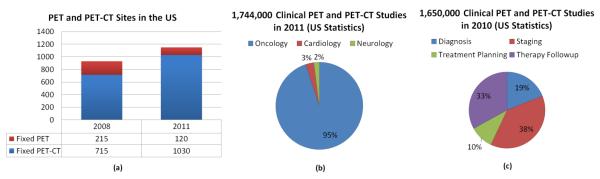

Due to the nature of PET images (i.e., low resolution with high contrast), thresholding-based methods are suitable because the local or global intensity histogram usually provides a sufficient level of information for separating the foreground (object of interest) from the background. However, there is some uncertainty that cannot be avoided when using thresholding-based methods. Because of the large variability of pathologies, low resolution, inherent noise, and high uncertainties in fuzzy object boundaries, there is no general consensus on the selection of a thresholding level (especially for automatic threshold selection). Therefore, optimal threshold determination remains a challenging task. Despite all these difficulties, thresholding-based methods are still under development to move the segmentation closer to optimal boundary extraction. Hence, it is common to see these methods in both pre-clinical and clinical studies. Here, we review state-of-the-art thresholding-based segmentation methods for PET images and their comparisons using clinical data. Thresholding techniques can be further divided into several groups: fixed thresholding, adaptive thresholding, and iterative thresholding. We also describe the challenges and drawbacks specific to thresholding segmentation, as well as the influence of the partial volume effect and of different reconstruction algorithms.

4.1. Fixed thresholding

In fixed thresholding, as its name implies, all pixels above an intensity level are assigned to a group, and everything else is considered to be background. This level may be given as an input by an expert, learned in a training set of images of the same type, or derived by analytic expression using realistic phantoms. The object boundary in any PET image is going to contain some amount of fuzziness due to the PVE, resolution related issues, and motion artifacts [76]. Thus, many thresholding methods incorporate some amount of class uncertainty, entropy criteria, between-class variance, and many other types of criterion in order to account for this fuzzy object nature [76]. In many clinical studies, a value such as a SUV of 2.5 is set as a pre-defined threshold level to differentiate malignant lesions from benign [48]. Similarly, SUVmax can also be used to separate object information from the background by using a specific percentage of SUVmax, which has the advantage of being normalized between patients. The most common thresholding value chosen in the clinical setting is 40 – 43% of the SUVmax, but this may not always work well and may need to be adjusted considerably for different PET images, depending on the image properties, scanner type, reconstruction, image noise, etc. For example, several studies evaluated the commonly accepted thresholding value of 40 – 43% for segmenting lesions and found that this value was suitable in a broad sense. The authors suggested other thresholding values to obtain the correct boundaries such as 45% [77] and 78% [78, 79]. Another drawback of this fixed thresholding approach is the tendency to overestimate the lesion boundaries, particularly for small lesions. Therefore, an adaptation of thresholding with further information or user guidance is often necessary in order to provide a clinically sound delineation. 
Table 3 shows some of the notable studies in the literature that used a fixed thresholding method for segmenting lesions from various body regions. It lists the thresholding value chosen, anatomical area (or disease type), sample size, and the accuracy reported in the study. As can be seen from Table 3, since there is no consensus on a fixed thresholding method for segmenting PET images, variable results were reported even for similar lesion types.
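The two fixed-threshold rules discussed above (an absolute SUV cut-off and a percentage of SUVmax) can be illustrated with a minimal sketch. The 5 x 5 slice of SUV values and the function names below are hypothetical and serve only to show how the two rules can delineate different regions on the same image.

```python
def fixed_threshold_mask(suv, level):
    """Label every pixel with SUV >= level as foreground (True)."""
    return [[value >= level for value in row] for row in suv]


def percent_of_max_mask(suv, fraction=0.42):
    """Threshold at a fraction of SUVmax (0.42 corresponds to a '42% of SUVmax' rule)."""
    suv_max = max(max(row) for row in suv)
    return fixed_threshold_mask(suv, fraction * suv_max)


# Hypothetical slice of SUV values with one hot lesion in the centre.
suv_slice = [
    [0.8, 0.9, 1.0, 0.9, 0.8],
    [0.9, 2.0, 3.1, 2.2, 0.9],
    [1.0, 3.0, 8.0, 3.4, 1.0],
    [0.9, 2.1, 3.2, 2.6, 0.9],
    [0.8, 0.9, 1.0, 0.9, 0.8],
]

absolute = fixed_threshold_mask(suv_slice, 2.5)   # SUV 2.5 rule
relative = percent_of_max_mask(suv_slice, 0.42)   # 42% of SUVmax (about 3.36 here)

# Number of foreground pixels selected by each rule.
area_absolute = sum(map(sum, absolute))
area_relative = sum(map(sum, relative))
print(area_absolute, area_relative)
```

On this toy slice the absolute rule selects more pixels than the relative rule, which mirrors the variability between fixed-threshold choices reported in the literature.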

Table 3.

Example studies using fixed-threshold based PET segmentation

| Segmentation Method | Anatomical Area | Sample Size | Accuracy | References |
|---|---|---|---|---|
| T 42% | Static phantom | 3 elliptical spheres, 4 SBR | Mean volume deviation (%): 8.4% | [80] |
| T 34% | Moving phantom | 3 Spheres, 3 Motions | Difference from ideal ranged from 3 to 94 cm3 for motion volumes of 1.2 to 243 cm3 | [81] |
| T 34% | Moving lollipop phantom | 1 Sphere with 3 longitudinal movements | Volume deviation from ground truth (%): 1.4 ± 8.1% | [82] |
| T 50% | Intact squamous cell carcinoma | 40 Patients | Volume deviation from CT (%): 54.5% | [83] |
| T 50% | NSCLC | 101 Patients | Volume deviation from CT (%): 27 ± 3% | [84] |
| T42%: < 3 cm; T24%: 3 – 5 cm; T15%: > 5 cm | NSCLC | 20 Patients | Determined threshold values such that the volumes were exactly the ground truth | [85] |
| T40%, SUV2.5 | NSCLC | 19 Patients | Median volume deviation from CT (%): −140%, −20% | [86] |
| Manual, T40%, T50%, TSBR | Oral cavity, oropharynx, hypopharynx, larynx | 78 Lesions | Mean overlap fraction (CT): 0.61, 0.55, 0.39, 0.43 | [77] |
| Manual, SUV2.5, T40%, TSBR | NSCLC | 25 Lesions | Mean GTV (cm3): 157.7, 164.6, 53.6, 94.7; Mean Radius (cm): 3.03, 3.05, 2.18, 2.52 | [79] |
| T43%, SUV2.5 | Rectal and anal cancer | 18 Patients | Volume difference compared to manual delineation: 55.4 ± 18.3, 36.7 ± 38.4 | [87] |
| TSUVmax, TIterative, TBgd, FIT | Nonspherical simulated tumors inserted into real patient PET scans | 41 Lesions | Mean error volume (%): −50% ± 10%, −40% ± 40%, 4% ± 10%, 24% ± 20% | [88] |
| TSBR | NSCLC | 23 Tumors | Compared to histopathology: Sensitivity: 66.7%, Specificity: 95.0%; Compared to manual segmentation: Sensitivity: 55.6%, Specificity: 88.3% | [89] |
| T42%, T50%, FCM | Sphere phantoms with diameters 13 - 37 (mm) | 6 Spheres using 4 scanners | Classification error (%): 42.6 ± 51.6, 20.3 ± 18.5, 27.8 ± 25.6 | [67] |
| Manual, TSBR, T40%, T50%, SUV2.5 | High-grade gliomas | 18 Patients | Mean Overlap Fraction (PET): 0.61, 0.62, 0.57, 0.67, 0.67; Mean Overlap Fraction (MRI): 0.45, 0.44, 0.54, 0.36, 0.14 | [78] |
| Manual, TBgd20%, TBgd40%, SUV2.5, T40% | Esophageal carcinoma | 96 Tumors | Mean length of tumors (cm): 6.30 ± 2.69, 5.55 ± 2.48, 6.80 ± 2.92, 6.65 ± 2.66, 4.88 ± 1.99, 5.90 ± 2.38 | [90] |
| Gradient Based, TSBR, T40%, T40% | Stage I-II NSCLC | 10 Patients | DSC: 66%, 64%, 62%, 65% | [91] |

Patients had various number of tumors

TBgd: = ∊*T70% + mean background intensity

TBgdα = SUVBgd + α(%) * (SUVmax - SUVBgd)

4.2. Adaptive thresholding

Many thresholding-based segmentation methods use digital or physical phantoms to construct and quantify the relationship between the true lesion volume and the estimated lesion volume with respect to various image quality metrics, and then use this relationship to “adapt” the thresholding value for a particular image of interest. The source-to-background ratio (SBR or S/B), mean background intensity, estimated mean lesion intensity, and full width half maximum (FWHM) of the scanner can be used as potential image quality metrics for this purpose.
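As a minimal illustration of adapting a threshold to an image quality metric, the sketch below evaluates the background-corrected relative threshold given in the footnote of Table 3, TBgdα = SUVBgd + α * (SUVmax − SUVBgd). The function names and the numerical values are hypothetical, and the SBR is assumed here to be SUVmax divided by the mean background SUV; individual studies may define it differently.

```python
def source_to_background_ratio(suv_max, suv_bgd_mean):
    """SBR, assumed here to be SUVmax over the mean background SUV."""
    return suv_max / suv_bgd_mean


def background_adapted_threshold(suv_max, suv_bgd_mean, alpha):
    """Absolute SUV cut-off placed a fraction alpha of the way from background to SUVmax."""
    return suv_bgd_mean + alpha * (suv_max - suv_bgd_mean)


# Same lesion peak, two hypothetical background levels.
high_contrast = background_adapted_threshold(suv_max=8.0, suv_bgd_mean=1.0, alpha=0.4)
low_contrast = background_adapted_threshold(suv_max=8.0, suv_bgd_mean=2.0, alpha=0.4)
plain_40_percent = 0.4 * 8.0  # fixed 40% of SUVmax, ignores the background

print(source_to_background_ratio(8.0, 1.0), high_contrast, low_contrast, plain_40_percent)
```

Unlike a plain percentage of SUVmax, the cut-off rises as the background rises, so the rule does not absorb background voxels when the contrast is low.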

In addition to adapting the thresholding value based on image quality metrics, it is also possible to adapt the thresholding value based on the motion artifacts of the PET image. As described previously, motion lowers the contrast and the mean intensity difference between the object and the background. For instance, some phantom-based studies characterized the thresholding level of oscillating spheres instead of static spheres in order to mimic breathing and cardiac motions [93]. Although adapting the threshold level to accommodate breathing and cardiac motion may have important applications for segmenting lung cancers or inflammation in the lungs when respiratory gating is unavailable, the method presented in [93] suffers from serious shortcomings such as the requirement of a prior estimation of lesion volume from structural imaging [38]. When a prior estimation of the lesion volume does not exist (possibly due to the lack of a corresponding CT or MRI image), success rates of the delineations are restricted to lesions larger than 4 cm3. For small lesions, even with prior anatomical knowledge, the success rate is below the desired clinical accuracy.

Table 4 lists some of the state-of-the-art adaptive thresholding equations in the literature, which were validated using various phantoms. The studies in Table 4 are organized by year of publication, with the earliest year at the top and the most recent year at the bottom. Studies from the same year are in no particular order. Notably, the analytic expressions grow increasingly complex as the years advance: earlier studies consider only the estimated lesion volume, while later studies also take into consideration the resolution of the scanner and various image quality metrics. The volume difference between the segmentation found using the analytic expression and the ground truth is reported. Where reported in the study, this volume difference is given separately for phantom images and patient images for a better comparison.

Table 4.

Examples of adaptive thresholding equations used in segmenting lesions from PET images

| Analytic Expressions | Notes | Volume Difference¹ | References |
|---|---|---|---|
| T(%) = A * e^(−C * V_mL) | Parameters A, C are coefficients computed for each SBR. First to use the SBR to estimate the thresholding level. | 8.4%, NA | [80] |
| SUVCutOff = 0.307 * SUVmean + 0.588 | Iteratively selected the mean target SUV | 21%, 67% | [94] |
| T(%) = 0.15 * Imean + SUVBgdmean | Imean is approximation of mean intensity of tumor. Bgdmean is mean intensity in relevant background structure. | NA, 60.1% | [79] |
| T(%) = 59.1 − 18.5 * log(V) | Fitted a logarithmic regression curve to threshold values in PET that resulted in the same volume from CT | NA | [85]² |
| T(%) = SBR * (SUVmax − SUVBgdmean) + SUVBgdmean | Considered influence of difference between target and background intensities | 47.3%, NA | [95] |
| T(%) = SUVBgdmean + (Threl) * (SUVmax − SUVBgdmean) | Threl = Relative threshold corresponding to the physical diameter of the source. Used Threl to approximate the image transfer function at the interface between lesion and background. | Spheres > 15 mm accurate to ≤ ±1 mm | [96] |
| | Initialization for an iterative thresholding method | 10%, 16.6% | [37] |
| T(%) = 90.787 * e^(−0.0025 * Area); T(%) = 0.00154 * Area + 28.77 | First expression: Area < 448 mm2; second expression: Area ≥ 448 mm2 | 13.8%, NA | [97] |
| | Diameter > 30 mm: a = 0.50, b = 0.50; Diameter ≤ 30 mm: a = 0.67, b = 0.60 | 4.7%, 7.5% | [98] |
| | Sphere inner diameter ≤ 10 mm; Sphere inner diameter > 10 mm. Considered scan duration and initial injected activity in model. | NA | [99]² |
| | An iterative technique based on Monte Carlo simulation studies | 5.1%, 11.1% | [100] |
| | T(%) is normalized to background. x is volume in cm3, y is the motion in mm, z is the SBR. Considered how motion in moving lung tumors affects the thresholding | Within 2 mm of CT volume | [101] |
| | Cross sectional area ≤ 133 mm2; Cross sectional area > 133 mm2. Considered the resolution of the scanner for small objects where the PVE has a significant effect by including the full width half maximum (FWHM) of the point spread function of the scanner | NA, 6.62% | [102] |

¹ Volume difference is the difference between the volume found from the adaptive thresholding segmentation and the ground truth. Left is the volume difference when the method is applied to phantom images while Right is the volume difference when the method is applied to patient scans.

² These studies used spherical phantoms to determine the thresholding level that gives the true volume and then fitted these values to a curve without testing against test images.
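To make the use of such analytic expressions concrete, the sketch below evaluates the piecewise area-based expression listed for [97] in Table 4. It assumes that `e(...)` in the table denotes the exponential function and that Area is the cross-sectional lesion area in mm2; the function name is hypothetical.

```python
import math


def threshold_percent_from_area(area_mm2):
    """Piecewise threshold (%) as listed for [97] in Table 4 (area in mm^2)."""
    if area_mm2 < 448.0:
        # Small lesions: threshold falls exponentially with area.
        return 90.787 * math.exp(-0.0025 * area_mm2)
    # Large lesions: threshold is nearly constant, rising slowly with area.
    return 0.00154 * area_mm2 + 28.77


for area in (50.0, 200.0, 448.0, 1000.0):
    print(f"Area = {area:6.1f} mm2 -> T = {threshold_percent_from_area(area):5.2f}%")

# The two branches nearly meet at the 448 mm2 switch point.
left_limit = 90.787 * math.exp(-0.0025 * 448.0)
right_value = threshold_percent_from_area(448.0)
```

The steep dependence on area for small lesions is the reason such expressions need a prior size estimate, which is the limitation discussed in the text.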

Overall, the major limitation of analytic expression-based thresholding methods is that it is difficult to reproduce the same or similar segmentation results on different scanners or for different patients [38]. This is because analytic expressions require precise tuning for a specific scanner, the reconstruction type, and even the patient size. Another drawback is that these expressions normally fail for lesions with a complex shape, because the analytic model is invalid for those cases (e.g., the partial volume effect would affect complex-shaped lesions differently than spherical lesions). Also, it is very important to note that because of the limitations of representing anatomical structures and metabolic activities realistically, the construction and calibration of analytic expressions introduce uncertainty to the task of finding an optimal thresholding level; therefore, none of the studies listed in Table 4 are general enough to be used in clinical applications, particularly in radiotherapy planning and surgery.

4.3. Iterative thresholding method (ITM)

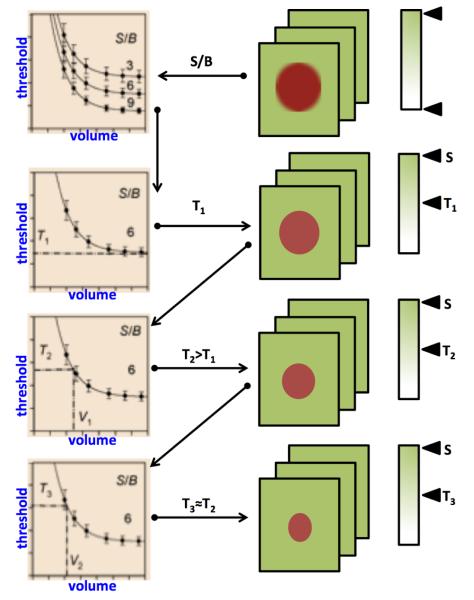

Commonly used adaptive thresholding methods in PET segmentation require an a priori estimation of the lesion volume from anatomic images such as CT or from an analytic expression based on phantom geometry; however, the iterative thresholding method (ITM), proposed by Jentzen et al. [37], estimates the PET volumes without anatomic prior knowledge. The ITM iteratively converges to the optimum threshold to be applied to the PET image. The method is based on calibrated threshold-volume curves at varying S/B ratios acquired by phantom measurements using spheres of known volumes. The S/B ratios of the lesions are then estimated from PET images, and their volumes are iteratively calculated using the calibrated S/B-threshold-volume curves [97, 103]. The resulting PET volumes are then compared with the known sphere volumes and the CT volumes of tumors that served as gold standards.

This process is illustrated in Figure 5. The ITM begins by obtaining several calibrated curves at typical S/B ratios observed in PET images. The calibrated S/B-threshold-volume curve that best fits the measured S/B ratio is then used. For the selected curve, there is a fixed threshold value T1 for large volumes (the fixed-threshold region); T1 is applied to the PET image, and an initial estimate of the volume (V1) is made using the ellipsoid model. The volume V1 is then used to determine the second threshold value T2 from the curve. If T2 is significantly larger than T1, T2 is applied to the gray scale of the PET image and a second volume V2 is calculated. If the threshold values T2 and T3 are not significantly different, the iteration stops at step 3 with an estimated volume V3. In general, the iteration ends at step n with an estimated volume Vn if the threshold value Tn does not deviate significantly from Tn+1. An example delineation process is given in Figure 6, where the optimal threshold was found in 4 iterations.

Figure 5.

Iterative thresholding method for finding optimal thresholding value.

Figure 6.

The segmentation result at each iteration using the ITM is shown.
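The iteration described above can be sketched as follows. This is a simplified illustration under several assumptions, not the implementation of [37]: the calibrated curves are hypothetical exponential functions, the threshold is expressed as a percentage of the peak value, the volume is obtained by voxel counting rather than with the ellipsoid model, and the lesion is a synthetic Gaussian blob on a uniform background.

```python
import math


def make_curve(plateau, amplitude, scale_ml):
    """Return a HYPOTHETICAL threshold-volume curve T(%) = f(volume in mL)."""
    def curve(volume_ml):
        return plateau + amplitude * math.exp(-volume_ml / scale_ml)
    return curve


# Hypothetical calibration: one curve per S/B ratio (illustrative values only).
CALIBRATED_CURVES = {
    4.0: make_curve(45.0, 30.0, 5.0),
    8.0: make_curve(40.0, 30.0, 5.0),
    12.0: make_curve(38.0, 30.0, 5.0),
}


def synthetic_lesion(size=15, sigma_vox=1.5, background=1.0, peak=8.0):
    """Flat list of voxel values: Gaussian blob on a uniform background."""
    centre = size // 2
    voxels = []
    for x in range(size):
        for y in range(size):
            for z in range(size):
                r2 = (x - centre) ** 2 + (y - centre) ** 2 + (z - centre) ** 2
                blob = math.exp(-r2 / (2.0 * sigma_vox ** 2))
                voxels.append(background + (peak - background) * blob)
    return voxels


def iterative_threshold(voxels, voxel_ml, background, tol=0.5, max_iter=20):
    """Iterate threshold -> volume -> threshold until the threshold stabilises."""
    peak = max(voxels)
    measured_sb = peak / background
    # Select the calibrated curve whose S/B ratio is closest to the measured one.
    nearest = min(CALIBRATED_CURVES, key=lambda sb: abs(sb - measured_sb))
    curve = CALIBRATED_CURVES[nearest]
    threshold = curve(float("inf"))  # T1: plateau of the curve for large volumes
    history = []
    for _ in range(max_iter):
        count = sum(1 for v in voxels if v >= threshold / 100.0 * peak)
        volume = count * voxel_ml
        history.append((threshold, volume))
        next_threshold = curve(volume)
        if abs(next_threshold - threshold) <= tol:
            return threshold, volume, history
        threshold = next_threshold
    raise RuntimeError("threshold did not stabilise")


lesion = synthetic_lesion()
final_t, final_v, steps = iterative_threshold(lesion, voxel_ml=0.064, background=1.0)
for t, v in steps:
    print(f"T = {t:5.1f}%  V = {v:5.2f} mL")
```

Because the hypothetical curve assigns higher thresholds to smaller volumes, each pass raises the threshold and shrinks the volume until the two are mutually consistent.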

Unlike most adaptive thresholding methods, the ITM does not require any prior information about the volume of interest. It only requires (a) the S/B ratio of the lesion, which is easily taken from the PET image, and (b) the S/B-threshold-volume curve, which is determined once for the specific camera, reconstruction algorithm, and radiotracer. However, it is important to note that the S/B-threshold-volume curve depends on the type of reconstruction algorithm used, especially for small structures, and that it depends strongly on the spatial resolution of the imaging device. The ITM is further limited by the spatial resolution, implicit activity distribution, edge detection, and volume model of the lesion. For lesions with an effective diameter close to the spatial resolution of the scanner, the ITM cannot be applied due to the PVE. Also, the ITM estimates the volume reliably only if the imaged activity distribution is homogeneous; an asymmetric activity distribution results in an underestimated volume. Additionally, the S/B-threshold-volume curves were measured assuming spherical lesions, and the clinical PET and CT volume calculations were performed using an ellipsoid model. These suppositions are therefore only approximations of irregularly shaped tumors, and, as a consequence, the clinical volume estimation may be less than accurate.

4.4. Optimal choice of thresholding, partial volume and reconstruction effects on thresholding

From the conclusions reported in [103, 104, 105], the conditions to obtain an accurate (i.e., exact or very close) delineation of objects in PET images using thresholding are very strict. Considering an object whose largest diameter is less than ten times the image resolution, given as the FWHM of the point spread function (PSF), there exists a single threshold value that allows the true contours of the object to be accurately recovered if and only if

the object is spherical,

the uptake is uniform inside and outside the object, and

the PSF is isotropic and constant over the whole field of view.

For all other conditions, where the object is non-spherical or the uptake is non-uniform, the selection of an optimal threshold is an ill-posed problem; therefore, theoretical justification is not always possible [106].

Thresholding methods do not perform well with tumors whose size is less than 2 – 3 times the spatial resolution of the scanner [34, 35, 79, 80, 97, 99, 102, 107, 108]. This is because the scanner’s PSF introduces the PVE. The PVE comes from two sources: the finite spatial resolution of the imaging system and the discrete nature of PET images. Since PET has low spatial resolution compared to CT or MRI, the first factor is significant for PET imaging, and most methods aim at this problem. New techniques such as digital photon counting (Philips Vereos PET/CT) are being explored to improve the resolution [109]. Furthermore, the continuous distribution of radiotracer is sampled into the discrete voxels of PET images. Therefore, most voxels contain more than one tissue, and the uptake value is the average of the response from all tissues within the voxel. This rule applies to all digital imaging modalities regardless of their spatial resolution. The PVE is a major source of bias for PET quantification, and several techniques have been proposed for PVE correction. During the reconstruction phase, spatial resolution can be enhanced by incorporating PSF information; anatomical information from CT or MRI can further be utilized as priors for better reconstruction. Deconvolution can also be applied after reconstruction for the purpose of resolution enhancement. To model the mutual influence between regions within the image domain, the Recovery Coefficient (single region) and the Geometric Transfer Matrix (multiple regions) can be pre-calculated according to the approximate size and shape of the target regions. Such corrections are simple, but they are limited to mean value correction and rely on assumptions of homogeneity and shape. More sophisticated methods targeting voxel-level correction often make use of co-registered high-resolution anatomical information from CT or MRI to define the structures and boundaries between regions. The PVE factors can subsequently be obtained by modeling the interaction between regions.

In practice, for smaller tumors, a 5% change in the thresholding value can cause the measured volume to change by a factor of two [79, 80, 102, 108]. It is explained in [99] that the near-optimal thresholding value for small volumes depends largely on the size or diameter, because the PVE is more influential, while the optimal threshold value of larger objects depends more on the SBR. This is why many analytic thresholding expressions are piecewise linear and based on the estimated area or diameter of the lesion. Since SUVmax is the least affected by the PVE [34, 89], there are many equations that use a percentage of the SUVmax to determine a reasonable threshold level (see Table 3, relative thresholding). For an in-depth review of the PVE in PET imaging, the reader is referred to the survey in [34].
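The size dependence of the optimal threshold can be illustrated with an idealized one-dimensional model. The sketch below assumes a noise-free, unit-height box profile on zero background blurred by a Gaussian PSF, for which the blurred profile has a closed form in terms of the error function. It reports the peak recovery and the fraction of the blurred maximum at which a threshold would fall exactly on the true edge. Function names and the 5 mm FWHM are assumptions, and real three-dimensional lesions are affected more strongly than this 1D case.

```python
import math


def blurred_box(x, width, fwhm):
    """Unit-height 1D box of the given width, blurred by a Gaussian PSF, evaluated at x."""
    sigma = fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))
    scale = math.sqrt(2.0) * sigma
    return 0.5 * (math.erf((x + width / 2.0) / scale) - math.erf((x - width / 2.0) / scale))


def edge_preserving_threshold(width, fwhm):
    """Return (peak recovery, threshold as a fraction of the blurred maximum at the true edge)."""
    peak = blurred_box(0.0, width, fwhm)
    edge = blurred_box(width / 2.0, width, fwhm)
    return peak, edge / peak


FWHM_MM = 5.0  # hypothetical scanner resolution
for width_mm in (2.5, 5.0, 10.0, 50.0):
    recovery, fraction = edge_preserving_threshold(width_mm, FWHM_MM)
    print(f"width = {width_mm:4.1f} mm  peak recovery = {recovery:.3f}  "
          f"threshold = {100.0 * fraction:.1f}% of max")
```

In this model, objects much larger than the FWHM are recovered at about 50% of the maximum, while objects comparable to the FWHM require a noticeably higher percentage, which is consistent with the size-dependent piecewise expressions discussed above.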

Reconstruction methods also affect thresholding-based segmentation algorithms. As a whole, reconstruction methods used for PET images vary in the amount of smoothing applied, especially when attempting to compensate for the high noise of PET images. Greater smoothing increases the difficulty of thresholding because an image with significant smoothing has a smaller absolute range of intensity values, such that a higher thresholding value is required to compensate for the decrease in contrast [102, 108, 110]. This in turn narrows the range of thresholding values that achieve an acceptable segmentation, which makes it more probable that a non-optimal thresholding value is chosen. In [110], five different segmentation algorithms were compared with respect to various reconstruction algorithms, and it was found that SUVmax and SUVmean-based fixed thresholding segmentation methods resulted in much larger volumes when high smoothing methods were used in the reconstruction process. Ideally, the method for threshold selection would compensate for the effect of reconstruction smoothing, though currently this is not considered. For detailed results on the effects of smoothing with reconstruction algorithms and threshold levels, the reader may find useful information in [38, 108].

Thresholding methods have proven to be the simplest and computationally most efficient segmentation methods. However, their sensitivity to noise and their inability to handle intensity variations make them less than ideal candidates for complex segmentation tasks in medical image analysis. Furthermore, with the exponential increase in the computational capabilities of hardware onboard medical devices over the past decade, the algorithmic simplicity of thresholding-based methods is becoming less attractive.

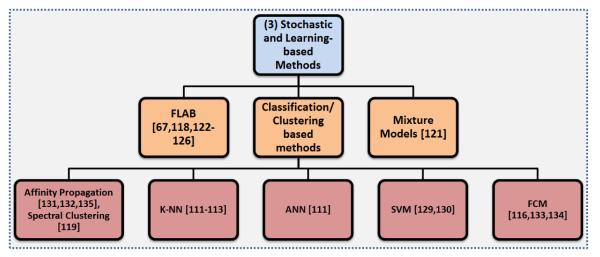

5. Stochastic and Learning-based Methods

Stochastic methods statistically exploit differences between uptake regions and surrounding tissues. Learning-based methods, similarly, use pattern recognition techniques to statistically estimate dependencies in the data. Since there are strong similarities between learning-based methods and stochastic methods, in this section we introduce the core concepts of both groups together (Figure 7).

Figure 7.

An overview of the Stochastic and learning-based segmentation methods.

5.1. Mixture models

The intensity distributions of objects within PET images are commonly considered to be approximately Gaussian in shape, and this prior knowledge can be useful for segmentation. Gaussian Mixture Models (GMM) assume that any distribution of intensities in the PET image can be approximated by a summation of Gaussian densities, with the goal of identifying and separating these densities using an optimization technique such as the Expectation Maximization (EM) algorithm. There are currently several state-of-the-art GMMs in the literature that we will highlight here [118, 121]. A GMM-based segmentation technique was created in [121], in which three tissue classes were considered: background, uncertain regions, and the target. A user-defined ROI was required to initiate the algorithm, and the EM method was used to estimate the underlying Gaussians. Then, the voxels were assigned to one of the three classes, and the segmentation was constructed from these regions. The algorithm was evaluated on PET images of tumors from non-small cell lung cancer patients and performed favorably compared to 40% SUVmax and to the adaptive thresholding method.
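The following minimal sketch shows how EM can separate a mixture of Gaussians fitted to voxel intensities. It is a generic one-dimensional EM procedure with two components, not the three-class method of [121]; the synthetic intensities, the initialization, and all function names are assumptions made for illustration.

```python
import math
import random


def normal_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def responsibilities(x, weights, means, variances):
    """Posterior probability of each component for one intensity value."""
    joint = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
    total = sum(joint)
    if total == 0.0:  # numerical underflow far from every component
        return [1.0 / len(joint)] * len(joint)
    return [j / total for j in joint]


def fit_gmm_1d(values, n_components=2, n_iter=100):
    """Fit a 1D Gaussian mixture with plain EM (fixed number of iterations)."""
    lo, hi = min(values), max(values)
    overall_mean = sum(values) / len(values)
    overall_var = sum((x - overall_mean) ** 2 for x in values) / len(values)
    # Initial means spread over the intensity range; shared initial variance.
    means = [lo + (hi - lo) * (k + 0.5) / n_components for k in range(n_components)]
    variances = [overall_var] * n_components
    weights = [1.0 / n_components] * n_components
    for _ in range(n_iter):
        resp = [responsibilities(x, weights, means, variances) for x in values]  # E-step
        for k in range(n_components):  # M-step
            nk = max(sum(r[k] for r in resp), 1e-12)
            weights[k] = nk / len(values)
            means[k] = sum(r[k] * x for r, x in zip(resp, values)) / nk
            spread = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, values)) / nk
            variances[k] = max(spread, 1e-6)
    return weights, means, variances


# Synthetic intensities: 300 background voxels and 100 lesion voxels (hypothetical SUVs).
rng = random.Random(0)
intensities = [rng.gauss(1.0, 0.3) for _ in range(300)]
intensities += [rng.gauss(6.0, 1.0) for _ in range(100)]

weights, means, variances = fit_gmm_1d(intensities)

# Hard segmentation: assign each voxel to its most probable component.
labels = []
for x in intensities:
    post = responsibilities(x, weights, means, variances)
    labels.append(post.index(max(post)))
lesion_fraction = sum(labels) / len(labels)
print(means, lesion_fraction)
```

An additional "uncertain" class, as used in [121], could be obtained by leaving voxels unassigned when no posterior probability is clearly dominant; that extension is not shown here.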

5.2. Fuzzy locally adaptive Bayesian (FLAB) method

Another approach that uses Gaussian mixtures of the objects is a locally adaptive PET segmentation method based on Bayesian statistics [118]. This method is known as fuzzy locally adaptive Bayesian (FLAB) segmentation. FLAB is an unsupervised statistical method that considers the image as having two hard tissue classes and a finite number of “fuzzy levels” that include mixtures of the hard tissue classes. Because of the fuzzy properties of the model, a voxel may belong either to one of the two hard classes or to a “fuzzy level”, in which case it is described by its membership to the two hard classes. A ROI identification step is necessary to perform FLAB (as with most other PET segmentation methods); therefore, it is not fully automated. Although FLAB has been shown to be quite robust and reproducible [67, 118] for tumor volume assessments, ROI identification may be difficult when heterogeneous or high uptake regions occur [122], since such regions could be close to the object of interest. Moreover, a significant number of background voxels are necessary for accurate statistical modeling of the tissue classes, yet the ROI should be small enough to keep nearby uptake regions, other than the lesion of interest, out of the model computation. In addition, the use of FLAB for the segmentation of heterogeneous lesions is limited to only two classes (background and foreground); therefore, FLAB may fall short when dealing with more than two classes.

Fortunately, an improved version of FLAB was recently published to deal with heterogeneous uptake by allowing up to three tumor classes instead of just the two hard classes of the initial version of FLAB [123]. The improved version, named 3-FLAB, had a higher accuracy and robustness compared with adaptive thresholding and FCM, with a mean classification error of less than 9% ± 8%. Additionally, its accuracy [124], robustness [67], and reproducibility [125], as well as its clinical impact, have been demonstrated in numerous papers [126, 127, 128, 129].

5.3. Clustering/Classification of PET image intensities

Classification methods seek to partition a feature space derived from the image by using data with known labels [130]. Because training data are required for labeling, classifiers are known as supervised methods. The most common feature is image intensity itself. Classifiers can transfer the labels of the training data to new data as long as the feature space sufficiently distinguishes each label [130]. However, a disadvantage of supervised methods is that they generally do not incorporate spatial information into the labeling decision, and the manual interaction required to obtain training data is very labor intensive and time consuming. This eventually increases the computational complexity.

Similar to the classification methods, clustering methods can utilize the spatial information contained within the PET images but without the use of training data [131]. Since these methods do not need training data, they are termed unsupervised methods. Compared to supervised methods, clustering methods have less computational complexity; however, they are sensitive to noise and cannot integrate spatial information well due to inherent uncertainty of the data.

Examples of supervised and unsupervised methods used in PET segmentation include k-nearest neighbor (k-NN) [111, 112, 113], support vector machine (SVM) [132, 133], Fuzzy C-Means (FCM) [116], artificial neural network (ANN) [111], and more recently Affinity Propagation (AP) [134, 135] and spectral clustering [119]. Clustering methods aim at gathering items with similar properties (i.e., intensity values, spatial location, etc.) into local groups. Similar to some advanced thresholding methods, clustering can also entail hard and soft boundaries or “fuzzy” objects [11]. These methods usually take similarities between data points as inputs and output a set of data points that best represent the data, with corresponding labels (i.e., foreground, background). Clustering is particularly useful when the shapes of the uptake regions are non-convex with a heterogeneous background. Non-convex regions are quite common for different diseases, in particular pulmonary infections; hence, there is a growing interest in the use of clustering-based methods to segment complex-shaped uptake regions [134, 135].

The spectral clustering method has been shown in [119] to have the potential to accurately delineate tumors containing inhomogeneous activities in the presence of a heterogeneous background. However, the number of tumors segmented in the study was limited, and there was no clear consensus on the choice of similarity parameters, which might not be optimal when only intensity values are used as similarity parameters. Another common clustering method, FCM, was first used in the PET segmentation context in [136], and it has since been used mainly for PET brain lesion segmentation [136, 137]. The FCM algorithm classifies voxels into one of two groups based on “fuzzy” levels where, due to the low resolution and the PVE, a particular voxel is allowed to include a mixture of multiple tissue types. FCM then decides which tissue type the voxel is most likely to belong to (i.e., the tissue type that contributes more to the voxel than any of the other possible tissue types). Finally, the algorithm cuts the clusters into a foreground and a background using a graph-based approach and converges to the global optimum iteratively.
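The fuzzy membership idea can be illustrated with the standard fuzzy c-means update equations applied to intensities alone. The sketch below is a generic textbook formulation with fuzzifier m = 2, not the specific pipeline of [136] (in particular, it contains no graph-based step); the sample values and function names are hypothetical.

```python
def memberships_for(x, centers, m):
    """Fuzzy membership of value x in each cluster (memberships sum to 1)."""
    dist = [max(abs(x - c), 1e-12) for c in centers]  # guard against zero distance
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((dk / dj) ** power for dj in dist) for dk in dist]


def fuzzy_c_means_1d(values, n_clusters=2, m=2.0, n_iter=100, tol=1e-6):
    """Standard FCM on scalar intensities; requires n_clusters >= 2."""
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * k / (n_clusters - 1) for k in range(n_clusters)]
    for _ in range(n_iter):
        u = [memberships_for(x, centers, m) for x in values]
        new_centers = []
        for k in range(n_clusters):
            num = sum((row[k] ** m) * x for row, x in zip(u, values))
            den = sum(row[k] ** m for row in u)
            new_centers.append(num / den)
        shift = max(abs(a - b) for a, b in zip(new_centers, centers))
        centers = new_centers
        if shift < tol:
            break
    return centers, [memberships_for(x, centers, m) for x in values]


# Hypothetical voxel intensities: background near 1, lesion near 5,
# and one partial-volume voxel (3.0) containing a mixture of both.
voxels = [1.0, 1.1, 0.9, 1.2, 0.8, 3.0, 5.0, 5.2, 4.8, 5.1]
centers, u = fuzzy_c_means_1d(voxels)

# Hard labels: the cluster with the largest membership.
labels = [row.index(max(row)) for row in u]
print(centers, u[5], labels)
```

The partial-volume voxel receives intermediate memberships in both clusters, whereas the remaining voxels are assigned almost entirely to one cluster.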

Most PET segmentation techniques are well suited for the determination of focal uptake, but generally give poor segmentation results for diffuse and multi-focal radiotracer uptake patterns such as those seen in infectious lung disease (Figure 8). However, a recent study proposes a PET segmentation framework uniquely suited for the segmentation of these difficult uptake patterns [134, 135]. This method utilizes a novel similarity function that estimates the “similarity” (or affinity) of the data points on the histogram of the image(s) within the AP platform [138]. AP then uses these similarities to select the optimal thresholds to separate the image into multiple regions. In particular, [134] demonstrates the usability of the method for quantification of small animal infectious disease models such as rabbits with tuberculosis or ferrets with H1N1 (swine flu).

Figure 8.

Left: A representative slice (segmented to remove non-lung regions) showing focal radiotracer uptake in a small animal model while Right: demonstrates multi-focal/diffuse uptake patterns in a rabbit model infected with tuberculosis (5 weeks). Most PET segmentation techniques focus on segmenting the focal uptakes while ignoring the diffuse uptakes that occur in infectious pulmonary disease.

Table 5 lists some landmark studies that utilize clustering methods for PET image segmentation. The classification method used, along with the other PET image segmentation methods it was compared against, is listed in the first column of Table 5. The sample size and the types of images used in the studies (such as the type of phantom used or the type of disease if the studies used patient images) are listed in the second column. The quantitative results, as reported in the studies, are listed in the third column.

Table 5.

Examples of the performance of classification algorithms in PET segmentation

| Classification Method | Sample Size | Results | References |
|---|---|---|---|
| k-NN, ANN, Adaptive Thresholding | 6 Phantom spheres | Absolute relative error (%)*: 6.83, 0.28, 7.61 | [111] |
| k-NN | Monte Carlo simulation using Zubal whole-body phantom as prior | Dice similarity: ~ 80% - 85% | [112] |
| k-NN, SUV2.5, T50%, TSBR | 10 Head and neck cancer patients | Sensitivity, Specificity: 0.90, 0.95 - 0.93, 0.84 - 0.48, 0.98 - 0.68, 0.96 | [113] |
| k-Means, MRF, Multiscale MRF | 4 Lesions | Volume difference (%): 9.09, 6.97, 5.09 | [114] |
| k-Means, MRF, Multiscale MRF | 6 spheres using the NIRMPA phantom | Volume difference (%): 42.86, 32.59, 15.36 | [115] |
| FCM, FCM-SW* | Simulated lesions from the NCAT Phantom; 21 NSCLC and 7 LSCC patients | Classification error (%): −10.8 ± 23.1, 0.9 ± 14.4; Classification error (%): 21.7 ± 22.0, 8.6 ± 28.3 | [116] |
| Standard GMM, SVFMM, CA-SVFMM, ICM, MEANF, Dirichlet Gaussian mixture model | PET image of dog lung and spherical phantoms | Misclassification ratio (%): 32.15, 12.43, 11.85, 3.52, 1.19, 0.67 | [117] |
| FLAB, FHMC, FCM, T42% | 10 Spherical Phantoms | Classification errors (%): 25.2, 31.2, 51.6, 55.8 | [118] |
| Spectral Clustering, Adaptive Thresholding | 30 Simulated lesions | Dice Similarity: ~ 95%, 92% | [119] |

Integrated a trous wavelet transform and spatial information by first smoothing with a nonlinear anisotropic diffusion filter [120]

6. Region-based segmentation methods

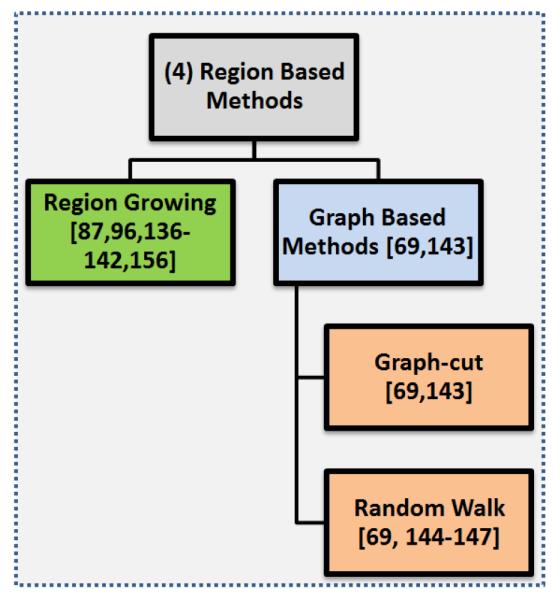

Another distinct type of PET segmentation technique is region-based segmentation methods where the homogeneity of the image is the main consideration for determining object boundaries. While it is true that the region-based segmentation methods also utilize the intensities of the image, they are much more concerned about the local distribution (homogeneity) of the intensities on the image. The region-based methods are mainly divided into two subgroups when considering PET images: Region Growing in Subsection 6.1 and Graph Cut methods in Subsection 6.2. Figure 9 shows further subgroups of these region-based segmentation methods.

Figure 9.

An overview of the region-based segmentation methods.

6.1. Region Growing

The fundamental drawback of histogram-based segmentation methods, such as thresholding, is that histograms provide no spatial information, so extremely valuable information is not considered. One method that incorporates spatial information in the image along with the intensity information is Region Growing, as first presented in [139]. The algorithm starts at a user-defined seed and, based on the mean and standard deviation of the intensities within the local seed region, connected pixels are either included in or excluded from the segmentation results. A second input, a homogeneity metric, is used to decide how different a new pixel can be from the statistics of the region already selected and still be included in the segmentation [87]. This process is repeated until the entire region of interest has been examined or the segmented region does not change.
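The procedure can be sketched with a minimal two-dimensional example. The acceptance rule used here (a candidate pixel joins the region if it differs from the running region mean by no more than a fraction `homogeneity` of that mean) is an assumed simplification of the mean and standard deviation criterion described above; the image, the 4-connectivity, and the function name are likewise hypothetical.

```python
from collections import deque


def region_grow(image, seed, homogeneity=0.1):
    """Grow a 4-connected region from seed using a relative tolerance on the region mean."""
    rows, cols = len(image), len(image[0])
    region = {seed}
    total = image[seed[0]][seed[1]]  # running sum of intensities in the region
    frontier = deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or (nr, nc) in region:
                continue
            mean = total / len(region)
            # Accept the neighbour if it is close enough to the current region mean.
            if abs(image[nr][nc] - mean) <= homogeneity * mean:
                region.add((nr, nc))
                total += image[nr][nc]
                frontier.append((nr, nc))
    return region


# Hypothetical slice: a mildly heterogeneous lesion on a uniform background.
image = [
    [1, 1,   1,   1, 1],
    [1, 8,   9.5, 1, 1],
    [1, 9.5, 10,  7, 1],
    [1, 1,   8,   1, 1],
    [1, 1,   1,   1, 1],
]

loose = region_grow(image, seed=(2, 2), homogeneity=0.3)
strict = region_grow(image, seed=(2, 2), homogeneity=0.1)
print(len(loose), len(strict))
```

With the looser setting the whole lesion is captured, while the stricter setting stops at the hottest pixels, which illustrates the sensitivity of the result to the homogeneity parameter.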

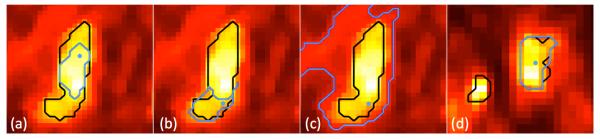

Region growing is a widely applied technique for different segmentation purposes [87, 139, 140, 141, 142, 143]. The main assumption in region growing is that regions of the object of interest have nearly constant or slowly varying intensity values that satisfy the homogeneity requirement. It is expected that different homogeneity criteria and initial seed locations could easily affect the final segmentation results. Despite these difficulties, PET images tend to be sufficiently homogeneous in general, so region growing usually gives satisfactory results. For example, when compared to fixed thresholding techniques such as an SUV of 2.5 and 43% of SUVmax, the region growing algorithm was shown to be much more accurate, with a smaller standard deviation of the segmentation accuracy [87]. Furthermore, segmentation results obtained from the region growing method are highly reproducible but, again, depend strongly on the initialization of the segmentation. See Figure 10 for an example of the delineation process with regard to different initializations of the seeds and homogeneity parameters. Although region growing methods have been shown to work well in homogeneous regions with appropriately set intensity homogeneity parameters, segmentation of heterogeneous structures has not been satisfactory (Figure 10 (a,b)). Region growing may fail even for sufficiently homogeneous uptake regions when the homogeneity parameter of the region growing algorithm is not appropriately set (Figure 10 (c)). Moreover, region growing methods reported in the literature for PET segmentation are not able to handle multiple object segmentation (Figure 10 (d)). Thus, homogeneity parameters for multiple lesion cases have yet to be assessed.

Figure 10.

The homogeneity metric is 0.1 for (a) and (b) and 0.3 for (c) and (d). The black outline in the images is the gold standard, while the blue line is the result of the region growing algorithm. The blue dot marks the location of the user-defined seed.

The main challenge for the region growing algorithm is “leakage”, which often occurs in PET images due to high PVE, low resolution, and motion artifacts. Leakage can usually be limited using shape information [144] or removed in an additional step after segmentation [145]. Several methods have also been proposed in the literature to prevent or limit the leakage of region growing segmentation on PET images. Here, we highlight a few state-of-the-art methods that aim to “constrain” region growing from leaking into the background or nearby objects. In [146], a region growing algorithm that avoids false-positive segmentation through user input was introduced. This was done simply by defining an ROI that limits the areas into which region growing could leak. Initially, the voxel with the highest intensity in the ROI was chosen as the starting seed. Then, an adaptive version of the conventional region growing algorithm determined the boundaries of a lesion by assessing whether there had been a sharp volume increase between iterations. After the final step, a dual-front active contour based method was applied to the segmented region using the ROI to refine the segmentation and to further reduce any background leakage that may have occurred. Similar to the final step in the previous algorithm, another region growing based method attempted to constrain the algorithm by integrating threshold-based segmentation with region growing to fine-tune an absolute thresholding level after the background signal was subtracted from the PET signals [96]. This improved the robustness of thresholding segmentation against noise by considering the homogeneity and connectedness of the segmented area of the image; however, the selection of stopping criteria and the inclusion of non-target structures within the segmented areas are among its drawbacks [96].
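The idea of stopping region growing when the volume increases sharply can be illustrated with a short sketch. It is not the algorithm of [146]: the threshold schedule, the parameters `jump_factor` and `min_size`, and both function names are hypothetical choices made only to show the principle of an ROI-restricted seed at the hottest voxel combined with a leakage check between iterations.

```python
from collections import deque


def connected_above(image, seed, threshold, roi):
    """4-connected set of ROI pixels with intensity >= threshold that contains the seed."""
    region, frontier = {seed}, deque([seed])
    while frontier:
        r, c = frontier.popleft()
        for p in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if p in roi and p not in region and image[p[0]][p[1]] >= threshold:
                region.add(p)
                frontier.append(p)
    return region


def adaptive_region_grow(image, roi, steps=10, jump_factor=3.0, min_size=5):
    """Lower the threshold step by step and stop at a sharp volume increase."""
    seed = max(roi, key=lambda p: image[p[0]][p[1]])  # hottest pixel inside the ROI
    peak = image[seed[0]][seed[1]]
    accepted = {seed}
    for k in range(1, steps + 1):
        threshold = peak * (1.0 - k / steps)
        candidate = connected_above(image, seed, threshold, roi)
        # Once the region is established, a jump in volume is treated as leakage.
        if len(accepted) >= min_size and len(candidate) > jump_factor * len(accepted):
            break
        accepted = candidate
    return accepted


# Toy image: a 5x5 lesion with decaying uptake (10, 8, 6) on a flat background of 1.
size, centre = 11, 5


def uptake(r, c):
    d = max(abs(r - centre), abs(c - centre))
    return 10.0 - 2.0 * d if d <= 2 else 1.0


toy = [[uptake(r, c) for c in range(size)] for r in range(size)]
roi = {(r, c) for r in range(size) for c in range(size)}
lesion = adaptive_region_grow(toy, roi)
```

In this toy case the region grows from 1 to 9 to 25 pixels as the threshold drops, and the attempt to grow to all 121 pixels is rejected as leakage, so the 5x5 lesion is returned.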

The last approach that we describe is the condensed connected region growing (CCRG) method, an iterative algorithm that uses the statistics of a user-defined tumor region for segmentation. After the ROI is defined, the voxel with the highest intensity is found, and region growing begins at this location. Iteratively, the mean and standard deviation of the region are calculated, and a value derived from a formula containing both of these metrics is used to determine whether or not to include nearby voxels [87]. CCRG gave significantly better segmentation results than thresholding-based methods; however, its high false positive rate remains a challenging problem.

6.2. Graph-based methods

Graph-based approaches have a major advantage over other PET segmentation methods: they incorporate efficient recognition into the segmentation process by using foreground and background seeds, specified by the user (or automatically), to locate the objects in the image [69]. These seed points act as hard constraints and combine global information with local pairwise pixel similarities [147] for optimal segmentation results. The two most common graph-based methods used for PET segmentation are graph-cut and random walk. We describe them in detail in the following subsections.

6.2.1. Graph-cut

Graph-cut first constructs a graph in which the nodes are the voxels of the image and the edge weights represent the strength of similarity between the nodes. Once the graph is constructed, an energy function assigns a cost to each possible cut, and graph-cut finds the partition that minimizes this cost. Notably, graph-cut has been shown to optimally segment images using local pairwise pixel similarities [147]. However, graph-cut is not very robust and fails to give optimal results for noisy images [69].
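As a concrete illustration of the construction above, the following sketch labels a 1D intensity profile by a minimum cut between a foreground and a background seed. It is a toy under stated assumptions, not the formulation of [147]: the Gaussian similarity weight with parameter `sigma`, the function name `graph_cut_1d`, and the use of a plain Edmonds-Karp max-flow are choices made for the example.

```python
import math
from collections import deque


def graph_cut_1d(intensities, fg_seed, bg_seed, sigma=2.0):
    """Label a 1D intensity profile by a minimum s-t cut (Edmonds-Karp max-flow)."""
    n = len(intensities)
    source, sink = n, n + 1
    cap = [[0.0] * (n + 2) for _ in range(n + 2)]
    # n-links: similar neighbors get a high weight and are expensive to cut.
    for i in range(n - 1):
        diff = intensities[i] - intensities[i + 1]
        cap[i][i + 1] = cap[i + 1][i] = math.exp(-diff * diff / (2.0 * sigma ** 2))
    # t-links: seeds are hard constraints, so their capacity exceeds any possible cut.
    cap[source][fg_seed] = cap[bg_seed][sink] = float(n)

    def reachable_from_source():
        parent = {source: None}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for v in range(n + 2):
                if v not in parent and cap[u][v] > 1e-12:
                    parent[v] = u
                    queue.append(v)
        return parent

    while True:
        parent = reachable_from_source()
        if sink not in parent:
            break  # no augmenting path left: the flow is maximal
        # Walk back from the sink to find the bottleneck, then push that flow.
        path, v = [], sink
        while parent[v] is not None:
            path.append((parent[v], v))
            v = parent[v]
        flow = min(cap[u][w] for u, w in path)
        for u, w in path:
            cap[u][w] -= flow
            cap[w][u] += flow
    # Pixels still reachable from the source in the residual graph are foreground.
    return [1 if i in parent else 0 for i in range(n)]


profile = [9, 8, 9, 7, 2, 1, 2, 1]
labels = graph_cut_1d(profile, fg_seed=0, bg_seed=7)
```

The cheapest edge to cut is the one across the largest intensity jump (between samples 3 and 4), so the first four samples are labeled foreground.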

6.2.2. Random walk

The random walk (RW) algorithm first appeared for computer vision applications in [148] and was later used for image segmentation in [69, 149, 150, 151]. RW is robust against noise and weak boundaries, a necessary trait given the low resolution and high noise of PET images. It was first proposed for PET image segmentation in [69]. That study compared RW with two well-known threshold-based segmentation methods described previously in this review, FLAB and FCM, and RW outperformed both. The authors found that RW was superior to these commonly used methods in terms of accuracy, robustness, repeatability, and computational efficiency. RW has also been used in multi-modality segmentation, as described later in Section 8. One drawback of RW is that it may not properly handle multi-focal uptake regions that are distributed over large areas of the body. Although an automated seeding process was recently proposed in [58] for this purpose, human intervention may still be necessary in some extreme cases.
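The principle behind RW segmentation can be shown on a 1D profile: each unlabeled pixel receives the probability that a random walker starting there reaches the foreground seed before the background seed, and this probability equals the weighted average of its neighbors' probabilities. The sketch below is a minimal illustration, not the implementation of [69]; the Gaussian edge weight, the parameter `sigma`, the function name `random_walk_1d`, and the simple Gauss-Seidel iteration are assumptions (practical implementations typically solve the equivalent sparse linear system directly).

```python
import math


def random_walk_1d(intensities, fg_seed, bg_seed, sigma=2.0, sweeps=2000):
    """Probability that a walker starting at each pixel reaches the foreground seed first."""
    n = len(intensities)
    # Edge weights: walkers cross similar neighbors easily and sharp edges rarely.
    w = [
        math.exp(-((intensities[i] - intensities[i + 1]) ** 2) / (2.0 * sigma ** 2))
        for i in range(n - 1)
    ]
    prob = [0.5] * n
    prob[fg_seed], prob[bg_seed] = 1.0, 0.0  # seeds are fixed boundary conditions
    for _ in range(sweeps):
        for i in range(n):
            if i in (fg_seed, bg_seed):
                continue
            left_w = w[i - 1] if i > 0 else 0.0
            right_w = w[i] if i < n - 1 else 0.0
            left_p = prob[i - 1] if i > 0 else 0.0
            right_p = prob[i + 1] if i < n - 1 else 0.0
            # Each unlabeled pixel takes the weighted mean of its neighbors.
            prob[i] = (left_w * left_p + right_w * right_p) / (left_w + right_w)
    return prob


profile = [9, 8, 9, 7, 2, 1, 2, 1]
prob = random_walk_1d(profile, fg_seed=0, bg_seed=7)
labels = [1 if p >= 0.5 else 0 for p in prob]
```

Unlike the hard labels of a cut, the result is a probability per pixel, which drops steeply across the strong edge between samples 3 and 4 and is thresholded at 0.5 to obtain the segmentation.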

7. Boundary-based methods

Instead of using the statistics of the entire image or the homogeneity of the image for segmentation, boundary-based segmentation methods were designed to locate and identify the boundaries of the objects in PET images. However, locating the boundaries of the objects in PET images is challenging due to the low resolution and noise of the PET images. The boundary-based methods can be categorized into two subgroups, Level Set/Active Contours and Gradient Based methods, as shown in Figure 11.

Figure 11.

An overview of the boundary-based segmentation methods.

7.1. Level Set and Active Contours

The concept of active contours, also called snakes, was first proposed in [152], where an initial contour around the object of interest deforms and moves towards the desired object’s edges. The deformation of the contour is governed by what is termed the energy function. The energy function consists of two sets of terms: internal and external energies. The internal energy guarantees the smoothness of the contour, whereas the external term forces the contour to move towards the desired features (gradient, texture, edge information, etc.) of the image. Classical active contour methods rely on gradient information, and their performance is highly dependent on the location of the initial contour; that is, the initial contour must be as close to the object of interest as possible so that the external energy is strong enough to move the contour towards the target object boundaries. Moreover, the classical model cannot handle topological changes of the curve. Geometric active contours capable of handling these changes were later introduced by Caselles et al. [153]. Their model uses gradient information to define an edge, whereas the energy functional minimization is carried out using the level set formulation.
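For reference, the energy described above is commonly written in the following form in the active contour literature. The notation is an assumption introduced here for illustration: the parametrized contour $v(s)$, the weights $\alpha$ and $\beta$, and the name $E_{\text{ext}}$ do not come from the text above.

```latex
E_{\text{snake}} = \int_0^1 \Big[ \tfrac{1}{2}\big( \alpha\,|v'(s)|^2 + \beta\,|v''(s)|^2 \big)
                 + E_{\text{ext}}\big(v(s)\big) \Big]\, ds
```

The first two terms form the internal energy ($\alpha$ penalizes stretching and $\beta$ penalizes bending), while $E_{\text{ext}}$ is low at the image features the contour should lock onto, for example $E_{\text{ext}} = -|\nabla I|^2$ for edges in an image $I$.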

A number of active contour-based segmentation techniques have been adapted to PET images in the literature. For instance, Hsu et al. [154] applied the classical active contour model to segment liver PET images. In their approach, the external energy was estimated by solving a Poisson partial differential equation (PDE), and the algorithm was initialized using Canny edge detection. Geometric active contours combined with an iterative deblurring algorithm were applied to PET images in [66] for the delineation of non-small cell lung cancer. Li et al. [146] used region growing as a pre-processing step to improve the robustness of active contours for PET tumor delineation. Recently, Abdoli et al. [155] combined geometric active contours with anisotropic diffusion filtering as a pre-processing step for smoothing, followed by a multi-resolution contourlet transform to segment tumors. The purpose of the contourlet transform is to make the energy functional more effective in directing the evolving contour towards the target object.

The level set (LS) method was proposed in [156] as a way of modeling active contours by tracing interfaces between different phases of fluid flows. It has proven to be a very powerful tool for tracking moving interfaces over time and intensity. LS has been adopted in many applications involving the movement of interfaces, including widespread use in imaging problems such as image segmentation and image registration [157, 158]. Basically, LS attempts to exploit the intensity gradient information based on the concept of evolving level sets by iteratively solving the Euler-Lagrange partial differential equation

$$\frac{\partial \phi}{\partial t} = V(\kappa)\,|\nabla \phi| \qquad (3)$$

where φ is an implicit function (e.g., distance) that monitors the evolving level set and V is the velocity function that controls the expansion and shrinkage of the level set; V is directly proportional to the curvature κ and inversely proportional to the image intensity gradient. The spatial regularization imposed by LS-based methods encourages the segmentation to have a smooth boundary; therefore, the resulting segmentations have a more regular shape than those obtained by other methods.
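A minimal numerical sketch of such an evolution is given below, assuming the common form ∂φ/∂t = V|∇φ| with a signed distance function φ that is negative inside the contour. The specific velocity V = κ / (1 + |∇I|), the explicit time stepping with `dt`, and the function name `evolve_level_set` are assumptions for illustration, not the scheme of any cited method.

```python
import math


def evolve_level_set(phi, image, dt=0.2, steps=20):
    """Explicit updates of phi_t = V * |grad phi| with V = kappa / (1 + |grad I|)."""
    rows, cols = len(phi), len(phi[0])
    for _ in range(steps):
        new = [row[:] for row in phi]  # border values are kept fixed
        for i in range(1, rows - 1):
            for j in range(1, cols - 1):
                px = (phi[i][j + 1] - phi[i][j - 1]) / 2.0
                py = (phi[i + 1][j] - phi[i - 1][j]) / 2.0
                pxx = phi[i][j + 1] - 2.0 * phi[i][j] + phi[i][j - 1]
                pyy = phi[i + 1][j] - 2.0 * phi[i][j] + phi[i - 1][j]
                pxy = (phi[i + 1][j + 1] - phi[i + 1][j - 1]
                       - phi[i - 1][j + 1] + phi[i - 1][j - 1]) / 4.0
                # kappa * |grad phi| reduces to this ratio of finite differences.
                numerator = pxx * py * py - 2.0 * px * py * pxy + pyy * px * px
                kappa_grad = numerator / (px * px + py * py + 1e-8)
                ix = (image[i][j + 1] - image[i][j - 1]) / 2.0
                iy = (image[i + 1][j] - image[i - 1][j]) / 2.0
                edge_stop = 1.0 / (1.0 + math.hypot(ix, iy))  # small near strong edges
                new[i][j] = phi[i][j] + dt * edge_stop * kappa_grad
        phi = new
    return phi


# Circular initial contour (radius 6) on a flat image: curvature alone drives the motion.
size, centre, radius = 21, 10, 6.0
phi0 = [[math.hypot(r - centre, c - centre) - radius for c in range(size)]
        for r in range(size)]
flat = [[1.0] * size for _ in range(size)]
phi1 = evolve_level_set(phi0, flat)
area_before = sum(v < 0 for row in phi0 for v in row)
area_after = sum(v < 0 for row in phi1 for v in row)
```

On a flat image the contour shrinks smoothly under its own curvature, which is the regularizing behavior described above; on a real image the edge-stopping factor would slow the motion where the intensity gradient is large.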

Several techniques have been proposed in the literature that employ LS with pre- or post-processing techniques to segment images in different imaging modalities, including PET. As a representative method, the authors of [159] recently developed a technique that uses both spatial and temporal information in dynamic PET data, based on a multi-phase level set. They explained that, in a PET scan, activity contrast between organs varies from frame to frame because of changes in tracer distribution over time. The method assigns a different weighting of the absolute difference in the data term to each image frame and takes the noise level and activity difference into account. The authors validated their segmentation method using both phantom and real mouse data [159]. The method was compared with the k-means algorithm and shown to have higher accuracy.

As described in the discussion of region growing methods, an adaptive region growing method [143] was used as a pre-processing step, and the LS method was then used to further refine the segmentation result. The method was shown to outperform iterative threshold methods on phantom and real images. Further, a PSF-based deconvolution method was used in [160] as a pre-processing step with LS for co-registered PET/CT in order to segment lung tumors in a semi-automated way. Both methods were tested on phantom as well as clinical data and were shown to produce accurate results with high reproducibility.

LS methods have proven to be an elegant tool for tracking moving interfaces. By implicitly including curve parametrization and geometric property estimation, these methods are able to handle topological changes much better than other boundary-based and region-based methods. LS also works well for segmenting multiple objects, provided that it is initialized properly. However, depending on the energy function, the method can be computationally complex, and it is highly dependent on the initial condition.

7.2. Gradient-based methods

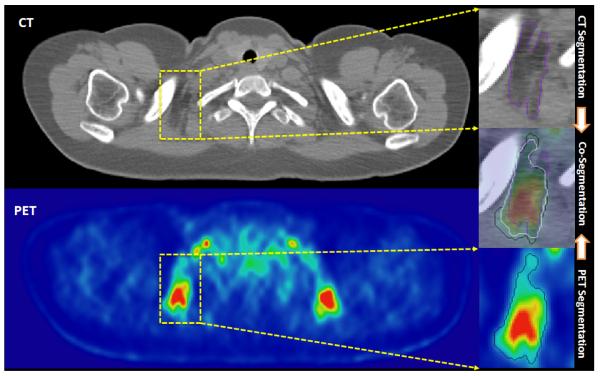

In general, the edges in an image exhibit a sharp change in intensity values that signifies the boundary of an object. To locate where these local changes of intensity occur, the gradient of the image is usually calculated between a voxel and its neighboring voxels. However, simply analyzing abrupt changes in the PET intensity values often does not give optimal segmentation results, owing to several challenges that make the segmentation process less robust or less accurate. The most significant of these challenges are the low resolution of PET images and high PVE, which cause the boundaries to be smoothed and sometimes disconnected. PET images also contain considerable noise, which is amplified by gradient-based methods and may likewise result in a sub-optimal solution [161].
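Both effects can be reproduced on a synthetic 1D profile. The sketch below is illustrative only: the box blur is a crude stand-in for the scanner resolution and PVE, the Gaussian noise level is arbitrary, and the helper names `central_gradient` and `box_blur` are hypothetical.

```python
import random


def central_gradient(profile):
    """Central-difference gradient of a 1D intensity profile (one-sided at the ends)."""
    n = len(profile)
    grad = [(profile[min(i + 1, n - 1)] - profile[max(i - 1, 0)]) / 2.0 for i in range(n)]
    grad[0], grad[-1] = profile[1] - profile[0], profile[-1] - profile[-2]
    return grad


def box_blur(profile, radius):
    """Moving average with clamped borders, standing in for the scanner blur."""
    n = len(profile)
    width = 2 * radius + 1
    return [
        sum(profile[min(max(k, 0), n - 1)] for k in range(i - radius, i + radius + 1)) / width
        for i in range(n)
    ]


sharp = [1.0] * 10 + [9.0] * 10      # ideal lesion boundary between samples 9 and 10
blurred = box_blur(sharp, radius=2)  # low resolution smooths the edge
rng = random.Random(0)               # fixed seed so the example is repeatable
noisy = [v + rng.gauss(0.0, 0.5) for v in blurred]

peak_sharp = max(abs(g) for g in central_gradient(sharp))
peak_blurred = max(abs(g) for g in central_gradient(blurred))
clean_background = max(abs(g) for g in central_gradient(blurred)[1:7])
noisy_background = max(abs(g) for g in central_gradient(noisy)[1:7])
```

Here the blur lowers the peak edge response from 4.0 to about 1.6, while the noise produces spurious nonzero gradients in a background region whose noise-free gradient is exactly zero, so a fixed gradient threshold becomes harder to choose.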