Abstract

Purpose

The purposes of this study were to develop a workstation computer that allows a surgeon touchless intraoperative control of diagnostic and surgical images, and to report preliminary experience with the system in a series of dental surgery cases.

Materials and Methods

A custom workstation incorporating a new motion-sensing input device (Leap Motion) was set up to provide a natural user interface (NUI) for manipulating the imaging software with hand gestures. The system allowed touchless intraoperative control of the surgical images.

Results

To our knowledge, this is the first report of an NUI system used in dental surgery: a pilot study of 11 procedures, including tooth extractions, dental implant placements, and guided bone regeneration. No surgical complications were reported. The system performed very well and was found to be very useful.

Conclusion

The proposed system fulfilled the objective of providing touchless access to and control of the imaging system and a three-dimensional surgical plan, thus helping to maintain sterile conditions. Interaction between sterile surgical staff and computer equipment has long been a key issue, and an NUI with touchless control of the images appears to be closer to an ideal solution. The sensor system is inexpensive, which could facilitate its incorporation into routine dental surgery practice. This technology has great potential in dental surgery and other healthcare specialties.

Keywords: Medical Informatics Computing; User-Computer Interface; Diagnostic Imaging; Surgery, Oral

Introduction

Medical and dental imaging technology develops continuously, and clinical and surgical procedures increasingly depend on digital images and computers, both in the operating room and in other clinical settings. Input devices used in clinical situations have so far included trackballs and, later, touchpads, among others. However, these devices require physical contact. For ergonomic integration into clinical and surgical workflows, an input device requiring minimal or, ideally, no contact with surfaces (which would otherwise need regular sterilization) is needed. Cross-contamination and sterility are among the greatest challenges in modern healthcare technology, and a touchless interface could be an ideal solution because it requires no physical contact.

Graphical user interfaces (GUIs) have evolved over more than three decades to become the standard in human-computer interaction. Multitouch screens on mobile devices such as phones and tablets represent an evolution of the GUI and are very popular today; however, they also require physical contact. Natural user interfaces (NUIs) are computer interfaces designed to use natural human behaviors to interact directly with the computer.1 These behaviors can include hand gestures.2 An NUI system uses, among other hardware, a motion-sensing input device3 connected to a computer running appropriate software, which tracks the user's extremities (arms, hands, fingers, and joints) and thereby controls the operating system or specific applications. Although this technology is in its early stages, it is already transforming many areas of applied computer science and may mark the beginning of a new era in human-machine interaction and in the application of informatics. The purposes of this study were to develop a workstation computer that allows a surgeon touchless intraoperative control of diagnostic and surgical images, and to test the usability of this system in a case series of dental surgery procedures.

Materials and Methods

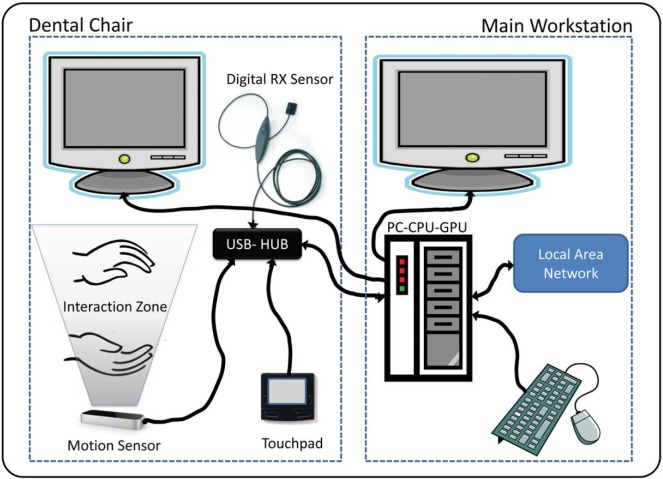

A custom two-dimensional (2D)/three-dimensional (3D) image-viewing workstation based on a personal computer was set up (Fig. 1). It was integrated into a specially modified dental chair unit with several computing tools housed inside the console, which allowed the NUI to be integrated ergonomically with the chair during clinical and surgical procedures. A video card with two outputs was used to drive two liquid crystal display (LCD) monitors. A Leap Motion motion-sensing input device (Leap Motion Inc., San Francisco, USA), an infrared optical tracking sensor based on stereo vision,3 was incorporated into the system. With the appropriate software, the sensor tracked the user's hands (fingers and joints) inside the interaction zone above the device, so that the software could be controlled by hand gestures without the user touching anything. Kodak dental imaging software and Carestream CS 3D imaging software v3.1.9 (Carestream Health Inc., Rochester, USA) were used to handle the 2D and 3D dental images, to manipulate the preliminary 3D model of the planned implant placement, and to acquire new digital radiographs during surgery. The system also integrated a digital radiography system sensor (DRSS) (Kodak RVG5100, Carestream Health Inc., Rochester, USA) for acquiring intraoperative intraoral radiographs.

Fig. 1.

Schematic diagram shows the custom image workstation set up to use a natural user interface in dental surgery.

Two surgeons performed preliminary usability testing, accessing all supported types of digital images in simulated typical dental surgery situations. During this phase, the positions of all components were calibrated and adjusted. After testing different positions, we chose the final location of the controller so that its interaction space allowed the operator to move his or her hands ergonomically and avoid fatigue while gesturing. Different lighting conditions were tested to check whether they affected controller performance, and the data transfer rate of the universal serial bus (USB) connection and hub was checked. Several Leap Motion control settings were tested to obtain smooth, stable interaction. The final configuration ran at 42 frames per second (fps) with a processing time of 23.2 ms, and the interaction height was set to automatic. The Touchless application (Leap Motion Inc., San Francisco, USA) was used in advanced mode.
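Simple arithmetic relates the two reported settings. This assumes that the processing time is the per-frame latency shown by the Leap Motion control software; the text does not define it. Under that assumption, the interval between frames at 42 fps is slightly longer than the processing time. Each frame would therefore be processed, with roughly 0.6 ms to spare, before the next one arrives:

```latex
\Delta t = \frac{1}{f} = \frac{1}{42\ \mathrm{s^{-1}}} \approx 23.8\ \mathrm{ms},
\qquad
t_{\mathrm{proc}} = 23.2\ \mathrm{ms} < \Delta t
```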

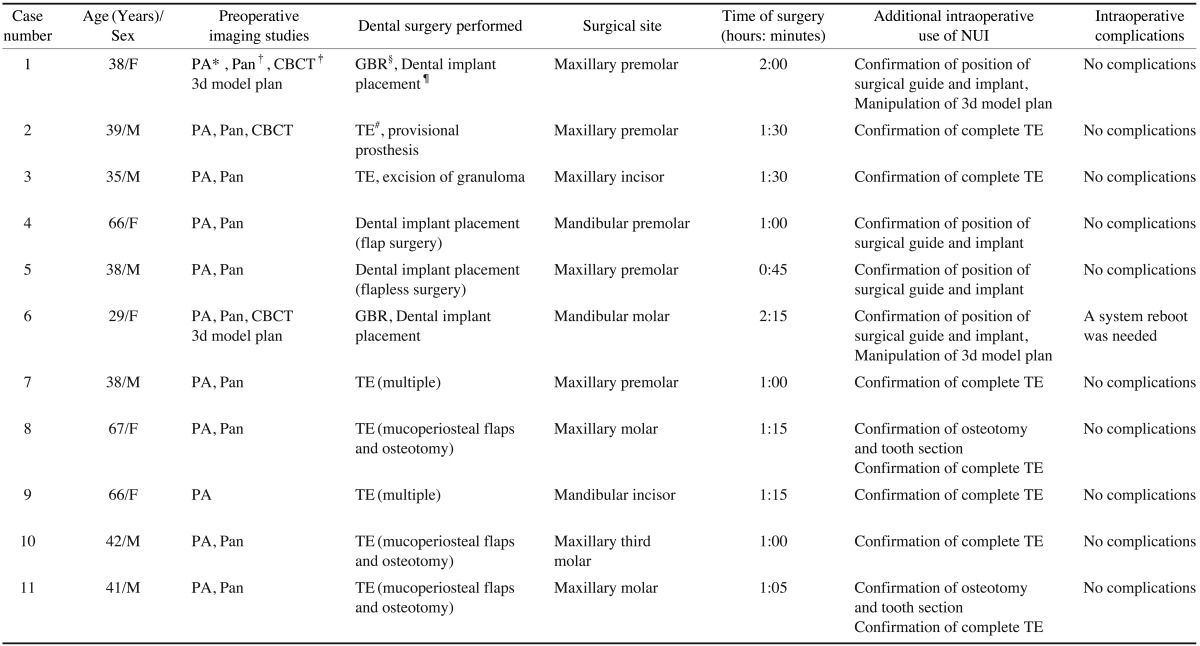

In this pilot study, 11 dental surgery procedures were performed using the custom system described above and are reported here as a case series. An overview of the procedures is presented in Table 1. This study complied with the Declaration of Helsinki, and the protocol was approved by the Institutional Review Board at CORE Dental Clinic in Chaco, Argentina. Each patient signed a detailed informed consent form.

Table 1.

Case series report on the use of the new NUI in dental surgery

PA: Periapical radiography, Pan: Panoramic radiography, CBCT: Cone beam computed tomography, GBR: Guided bone regeneration, TE: Tooth extraction

Results

Before and during the operations, the customized system was used to navigate through periapical radiographs, panoramic radiographs, and 2D cone-beam computed tomography (CBCT) images. It was also used to manipulate the preliminary 3D simulation model of the planned implant surgery and the intraoperative intraoral digital radiographs. The system allowed touchless control during surgery (Fig. 2). It recognized gestures that emulated touching a vertical virtual touch surface in the air, within the interaction zone above the sensor. When the operator pointed one or two fingers toward the screen, the system drew a cursor on the screen so that the operator could point to items or buttons in the software. When the operator then pushed the finger farther toward the screen, the item under the cursor was selected (similar to a mouse click). Functions requiring two control points (scaling, zooming, or rotation) could be operated with two fingers of one hand or with one finger of each hand. Using these hand gestures, the surgeon could navigate between windows, zoom in and out, and manipulate the different images and slices. The surgeon could also use imaging tools such as contrast and brightness adjustment, image enhancement, and measurement. During dental implant procedures, the 3D implant planning model could be moved and rotated. The system was also used to manipulate the new intraoperative digital radiographs (zooming and contrast enhancement). These images served to confirm the position of the surgical guides and the final position of the implants, or to evaluate the outcome of the procedures radiographically.
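The hover-then-press behaviour described above can be sketched as a small state machine. This is a minimal illustration, not the Touchless application's implementation. It assumes the tracker already reports, for each frame, the fingertip's signed distance to the virtual surface (`distance_mm`, positive in front of the surface). The class names, the 100 mm hover range, and the edge-triggered click rule are all hypothetical choices.

```python
from dataclasses import dataclass
from enum import Enum


class TouchState(Enum):
    NONE = "none"          # finger too far from the virtual surface: no cursor
    HOVERING = "hovering"  # finger in front of the surface: cursor is drawn
    TOUCHING = "touching"  # finger has crossed the surface: counts as a press


@dataclass
class FingerSample:
    x_mm: float         # horizontal fingertip position (hypothetical units)
    y_mm: float         # vertical fingertip position
    distance_mm: float  # signed distance to the virtual surface; <= 0 means crossed


class VirtualTouchPlane:
    """Hypothetical hover/touch classifier for a vertical virtual touch surface."""

    def __init__(self, hover_range_mm: float = 100.0) -> None:
        self.hover_range_mm = hover_range_mm
        self._was_touching = False

    def classify(self, distance_mm: float) -> TouchState:
        if distance_mm <= 0.0:
            return TouchState.TOUCHING
        if distance_mm <= self.hover_range_mm:
            return TouchState.HOVERING
        return TouchState.NONE

    def update(self, sample: FingerSample) -> tuple[TouchState, bool]:
        """Return the current state and whether a click should fire this frame.

        The click is edge-triggered: it fires only on the frame in which the
        finger first crosses the surface, so holding the finger there does not
        generate repeated clicks.
        """
        state = self.classify(sample.distance_mm)
        touching = state is TouchState.TOUCHING
        clicked = touching and not self._was_touching
        self._was_touching = touching
        return state, clicked


if __name__ == "__main__":
    plane = VirtualTouchPlane(hover_range_mm=100.0)
    # Finger approaches, pushes through the surface, holds, then withdraws.
    for d in (150.0, 60.0, -5.0, -10.0, 40.0):
        state, clicked = plane.update(FingerSample(0.0, 200.0, d))
        print(f"{d:>6.1f} mm -> {state.value:<8} click={clicked}")
```

In the demo, a single click fires on the frame where the distance first drops to zero or below; holding the finger behind the surface does not repeat it. A practical version would probably also add hysteresis, meaning separate press and release thresholds. That would stop small hand tremors near the surface from producing spurious clicks.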

Fig. 2.

A. Photograph shows a general view of the natural user interface in use, enabling touchless control of the imaging system and helping to maintain the sterile surgical field. Two-point control of the images (scaling, zooming, or rotation) can be performed with two fingers of one hand (B) or with one finger of each hand (C).
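Two-point control of this kind is usually computed in the same way as a pinch or rotate gesture on a multitouch screen. The sketch below illustrates that approach under that assumption; it is not the actual algorithm of the Touchless application. It takes the two tracked points (two fingertips of one hand, or one fingertip of each hand), already projected onto the screen plane, and derives three values:

- a zoom factor, from the ratio of the points' current to initial separation;
- a rotation, from the change in angle of the line joining them;
- a pan, from the movement of their midpoint.

The function name `two_point_transform` is hypothetical.

```python
import math

Point = tuple[float, float]


def two_point_transform(
    p1_start: Point, p2_start: Point, p1_now: Point, p2_now: Point
) -> tuple[float, float, Point]:
    """Scale, rotation and pan implied by two tracked control points.

    Returns (scale, rotation_deg, (dx, dy)):
      scale: current distance between the points / initial distance
      rotation_deg: change in angle of the line joining them, in [-180, 180)
      (dx, dy): displacement of the midpoint between the points
    """
    d_start = math.dist(p1_start, p2_start)
    if d_start == 0.0:
        raise ValueError("the two initial control points must not coincide")
    scale = math.dist(p1_now, p2_now) / d_start

    angle_start = math.atan2(p2_start[1] - p1_start[1], p2_start[0] - p1_start[0])
    angle_now = math.atan2(p2_now[1] - p1_now[1], p2_now[0] - p1_now[0])
    # Wrap the difference so that crossing the +/-180 degree line gives a small
    # rotation rather than a jump of almost a full turn.
    rotation_deg = (math.degrees(angle_now - angle_start) + 180.0) % 360.0 - 180.0

    mid_start = ((p1_start[0] + p2_start[0]) / 2, (p1_start[1] + p2_start[1]) / 2)
    mid_now = ((p1_now[0] + p2_now[0]) / 2, (p1_now[1] + p2_now[1]) / 2)
    pan = (mid_now[0] - mid_start[0], mid_now[1] - mid_start[1])
    return scale, rotation_deg, pan


if __name__ == "__main__":
    # Two fingers spread apart along the x axis: zoom in by a factor of 2.
    print(two_point_transform((0, 0), (10, 0), (0, 0), (20, 0)))
```

The same computation serves both hand configurations shown in panels B and C, because it only needs two points. Whether they come from one hand or two is irrelevant to it.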

In case 1, a dental implant was placed simultaneously with guided bone regeneration (GBR). The NUI system was used extensively and proved very useful, because the anatomy of the adjacent teeth and the maxillary sinus made image guidance essential for correct implant placement without damage to anatomical structures. For this purpose, the Kodak dental imaging system and Carestream CS 3D imaging software v3.1.9 (Carestream Health Inc., Rochester, USA) were used to access and manipulate the 2D CBCT images and the 3D model of the planned implant position. The system was also useful for touchless manipulation of the intraoperative intraoral image, both for zooming and for applying the sharpness filter. It also served for the highlight tool, with which the operator pointed with a finger and moved it to mark the area of the image whose contrast he wanted to optimize. The system was then used to access an intraoral image confirming the implant position. To illustrate the use of the new system, a video was recorded during this procedure and is available as supplementary material to this article.4

In case 6, two dental implants were placed in the molar region of the mandible simultaneously with GBR. The NUI system was used to access the axial and coronal CBCT images showing the planned implant positions. It was also used to confirm the position of the surgical guide relative to the inferior alveolar nerve (IAN) on an intraoperative intraoral radiograph. The contrast of this image was first enhanced through the NUI. The distance from the surgical guide to the IAN was then measured by pointing with a finger and using the software's measurement tool. During this intervention, a system reboot was needed because the USB drivers failed to recognize the DRSS sensor. Apart from this incident, no technical failures occurred in any procedure.

Neither intraoperative nor postoperative surgical complications were reported. The surgeon had easy, direct access to all preoperative and intraoperative images, which led to a perceived increase in intraoperative consultation of the images. However, this appeared to have little effect on operative time, which remained similar to our surgical team's routine values as recorded in the protocol of each surgery. The comparison was with similar previous procedures performed without the NUI, in which the images were accessed before surgery or by an assistant outside the surgical field.
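The guide-to-IAN distance in case 6 was obtained with the imaging software's own measurement tool, whose internal calibration is not described here. As an illustration only, a basic distance tool of this kind converts the pixel distance between two selected points into millimetres using the detector's pixel spacing. The sketch assumes square pixels of known size and ignores projection magnification and distortion. The function name, point coordinates, and 0.02 mm spacing are hypothetical and are not taken from the Kodak/Carestream software or the RVG5100 sensor.

```python
import math


def distance_mm(p_a: tuple[float, float], p_b: tuple[float, float],
                pixel_spacing_mm: float) -> float:
    """Distance between two selected image points, converted from pixels to mm.

    Assumes square pixels of known size and ignores projection magnification
    and image distortion, which a clinical measurement tool may correct for.
    """
    if pixel_spacing_mm <= 0:
        raise ValueError("pixel spacing must be positive")
    return math.dist(p_a, p_b) * pixel_spacing_mm


if __name__ == "__main__":
    guide_tip = (412, 230)  # hypothetical pixel coordinates picked by pointing
    ian_edge = (412, 380)   # hypothetical point on the canal border
    spacing = 0.02          # hypothetical detector pixel spacing in mm
    print(f"{distance_mm(guide_tip, ian_edge, spacing):.1f} mm")
```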

The combined system performed very well and was found to be very useful for controlling the system without touching anything and for maintaining the sterile surgical field. The habituation period for the NUI is short and may depend on how accustomed the user is to other multipoint input devices such as touchscreens. Nevertheless, before using it during surgery, we recommend several training sessions (4-6 sessions of 30 min each), assuming that the user has already mastered the software with standard input devices (mouse, touchpad, and touchscreen). After a little training, operating the system this way is easier and faster than changing sterile gloves or relying on an assistant outside the sterile environment.

Discussion

To our knowledge, this is the first use of a touchless NUI system with a Leap Motion controller during dental surgery. Previous reports have used a different motion sensor (MS Kinect, Microsoft Corp., Redmond, USA) for touchless control of images in the operating room5,6 and for controlling virtual geographic maps,7 among other uses. The Kinect works on a different principle from the Leap Motion. The MS Kinect and Xtion PRO (ASUS Computer Inc., Taipei, Taiwan) are essentially infrared depth-sensing cameras based on the principle of structured light.3 Our team has used the MS Kinect with an NUI system for the past two years as an experimental educational tool in digital dental photography workshops, where it has been very useful. We also tested it in clinical situations at dental offices but found it inadequate for dental settings. The main reasons are that the Kinect tracks horizontally and requires a minimum working distance of approximately 1.2 m, whereas the Leap Motion tracks the user's hands from below. The interaction zone of the MS Kinect (approximately 18 m³) is also much larger than that of the Leap Motion (approximately 0.23 m³). Consequently, with the Kinect the operating room must be considerably larger, and the user must walk out of the interaction zone to stop interacting with the system. With the Leap Motion, the surgeon simply moves his or her hands out of the much smaller interaction zone. In addition, the MS Kinect tracks the whole body or the upper body,6 which requires unnecessarily wide arm movements that could cause fatigue during the procedure. The Leap Motion, by contrast, tracks only the user's hands and fingers and has higher spatial resolution and a faster frame rate, allowing more precise control for image manipulation.
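Taking the approximate volumes quoted above at face value, the Kinect's interaction zone is roughly 78 times larger than that of the Leap Motion. Assume, for simplicity, that the two zones have a similar shape. Each linear dimension would then differ by the cube root of that ratio, about 4.3 times, which is consistent with the need for a considerably larger room:

```latex
\frac{V_{\mathrm{Kinect}}}{V_{\mathrm{Leap}}} \approx \frac{18\ \mathrm{m^3}}{0.23\ \mathrm{m^3}} \approx 78,
\qquad
\sqrt[3]{78} \approx 4.3
```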

The proposed system performed well and fulfilled the objective of providing touchless access to and control of the images and the surgical plan, thus helping to maintain sterile conditions. This led to a perceived increase in the frequency of intraoperative consultation of the images. In addition, the modified dental equipment made intraoperative manipulation of dental images through the NUI particularly ergonomic.

The potential of recent advances in dental and medical imaging can be fully exploited only if these advances help healthcare professionals during medical procedures. Interaction between sterile surgical staff and computer equipment has been a key issue. One possible solution has been to have an assistant outside the surgical field manipulate the computer-generated images, but this is impractical and requires an additional person. The proposed solution seems to be closer to an ideal one. Importantly, the sensor is inexpensive and all other system components are available at relatively low cost. This could make the technology easy to fit into the budgets of clinical facilities in low-income countries, reducing the number of personnel required in the operating room and freeing staff for more productive work. Building on the success of this proof of concept, we have undertaken further research to optimize the gestures recognized by the NUI.

The NUI is starting a revolution in human-machine interaction that will probably continue to evolve over the next 20 years. User interfaces are becoming less visible as computers communicate more like people, and this has the potential to bring humans and technology closer. This new technology is likely to make an important contribution to healthcare and has great potential in dental and general surgery, as well as in daily clinical practice. Most importantly, it could benefit the diagnosis and treatment of many diseases and improve patient care, which is our ultimate and greatest goal.

Acknowledgments

The authors would like to thank Dr. Daniel Rosa at CORE Dental Clinic for his assistance during the surgical procedures.

References

- 1. Josh B. What is the natural user interface? Deconstructing the NUI [Internet]. Woodbridge: InfoStrat Advanced Technology Group; 2010 Mar 01 [cited 2013 Oct 4]. Available from: http://nui.joshland.org/2010/03/what-is-natural-user-interface-book.html.

- 2. Strazdins G, Komandur S, Styve A. Kinect-based systems for maritime operation simulators? In: Rekdalsbakken W, Bye RT, Zhang H, editors. Proceedings of 27th European Conference on Modelling and Simulation, ECMS 2013; 2013 May 27-30; Ålesund, Norway. Digitaldruck Pirrot GmbH; 2013. pp. 205–211.

- 3. Weichert F, Bachmann D, Rudak B, Fisseler D. Analysis of the accuracy and robustness of the leap motion controller. Sensors (Basel). 2013;13:6380–6393. doi: 10.3390/s130506380.

- 4. Rosa G. First use of Leap Motion during dental implant surgery [Internet]. YouTube.com; [cited 2013 Oct 4]. Available from: https://www.youtube.com/watch?v=1dc8G5lTVtI.

- 5. Strickland M, Tremaine J, Brigley G, Law C. Using a depth-sensing infrared camera system to access and manipulate medical imaging from within the sterile operating field. Can J Surg. 2013;56:E1–E6. doi: 10.1503/cjs.035311.

- 6. Ruppert GC, Reis LO, Amorim PH, de Moraes TF, da Silva JV. Touchless gesture user interface for interactive image visualization in urological surgery. World J Urol. 2012;30:687–691. doi: 10.1007/s00345-012-0879-0.

- 7. Boulos MN, Blanchard BJ, Walker C, Montero J, Tripathy A, Gutierrez-Osuna R. Web GIS in practice X: a Microsoft Kinect natural user interface for Google Earth navigation. Int J Health Geogr. 2011;10:45. doi: 10.1186/1476-072X-10-45.