Abstract

Neuroeconomics applies models from economics and psychology to inform neurobiological studies of choice. This approach has revealed neural signatures of concepts like value, risk, and ambiguity, which are known to influence decision-making. Such observations have led theorists to hypothesize a single, unified decision process that mediates choice behavior via a common neural currency for outcomes like food, money, or social praise. In parallel, recent neuroethological studies of decision-making have focused on natural behaviors like foraging, mate choice, and social interactions. These decisions strongly impact evolutionary fitness and thus are likely to have played a key role in shaping the neural circuits that mediate decision-making. This approach has revealed a suite of computational motifs that appear to be shared across a wide variety of organisms. We argue that the existence of deep homologies in the neural circuits mediating choice may have profound implications for understanding human decision-making in health and disease.

Introduction

Some decisions in life are complex and momentous, like whom to marry or whether to change careers. These choices are informed by a lifetime of accumulated experience and social knowledge, and are made based on predictions about what life will be like in the future. Smaller decisions -- how fast to drive, whether to binge-watch the entire season of “Arrested Development,” whom to talk to -- are less deliberative and require lower cognitive effort. Yet decisions, both large and small, can be viewed as outcomes of the processes by which the brain translates sensation into action. More broadly, the language of decisions connotes behavior, including the aberrant behaviors that attend psychiatric disorders. For instance, drugs of abuse, which act at the molecular level, nonetheless alter brain function at a circuit level as well, bending motivation toward drugs themselves and away from more adaptive activities. But how do such changes in the brain manifest themselves as changes in behavior? To answer this question, we must understand how the brain decides.

Of course, the concept of decision is not unambiguous. One might argue that any motor response to sensory input is a decision, although this would include reflex processes that do not intuitively merit the term (Glimcher, 2004). Alternatively, we might require that decisions be conscious and deliberative, but this, too, excludes many interesting classes of phenomena such as implicit bias and rapid physical responses as in sports. The question also arises as to whether decisions must always occur over multiple options, or whether withholding an action also qualifies as a decision (Gold and Shadlen, 2007). If, as has been suggested (Shadlen et al., 2008), a decision is a commitment to a particular proposition, including actions as well as ideas, there remains the question of what this "commitment" means neurobiologically. Should rapid sequences of motor actions, despite being unconscious, be considered decisions if they can be interrupted or varied? Can all decisions be viewed in a cost-benefit framework, and if so, does this paradigm hold biologically, or simply in an "as if" sense? Clearly, the semantics of what, exactly, constitutes a decision have proven philosophically and practically challenging (Glimcher, 2004). In what follows, we will hew to the idea that decisions commit the organism to one out of several possible behaviors (including thoughts), and that these commitments are flexible and modifiable rather than rigid and ineluctable.

Although most non-human animals do not appear to agonize over life’s decisions (lucky them), some of their behaviors can be described as decision making and, moreover, these decisions can be measured. For instance, in many primate social groups, males do not mate with all females, because to do so would risk reprisals from dominant males. Yet the presence of a sexually receptive female is among the most potent natural stimuli in the animal's sensory world. That the mapping between sensation and behavior is flexible enough to take into account such complex and fluid information as the present state of a group's dominance hierarchy argues against a simple view of stimulus-response mappings and for a richer, more nuanced view phrased in terms of decisions. Even for behaviors of lesser complexity, animals must feed, and in doing so, often navigate their environment in ways that necessitate choosing paths, selecting food items, and switching between exploration and exploitation of food sources. Again, animals pursue these behaviors flexibly, in a way not easily explained as simple reflexes. Later, we will argue that behaviors of this type -- behaviors animals have evolved to perform most efficiently and effectively -- offer distinct advantages to the study of decision making. For now, however, we simply note that these behaviors, and their laboratory analogs, arguably merit the term "decision," if for no other reason than they depend on context and adapt flexibly in ways difficult to reconcile with simple stimulus-response mappings.

So when we refer to “decision neuroscience” in this article, we refer to the processes by which sensory stimuli, some temporally remote, interact with cognitive, motivational, mnemonic, and autonomic systems to select and produce motor output. Physiologically, the study of decision processes focuses on the neural processing stream between its inputs and outputs, including limbic, mnemonic, and associational circuits that interpose between sensory and motor systems. It is the essential processing in the middle of the input/output stream that makes organisms more complicated than reflex machines. Here we review some recent directions in the literature on decision making, paying special attention to the frameworks in which the neurobiology of choice has been studied, and point out what we believe are fruitful recent directions in the literature, in particular from the vantage of ethology and evolutionary ecology.

A neuroscience of choice: The advent of neuroeconomics

In recent years, neuroeconomics has made substantial contributions to our understanding of decision making through the application of quantitative tools and frameworks derived from economics and psychology to neuroscience (Camerer et al., 2005; Glimcher, 2002, 2003, 2004; Glimcher and Rustichini, 2004; Platt and Huettel, 2008; Sugrue et al., 2005). Concepts such as utility and subjective value, delay discounting, risk, and ambiguity have been shown to have correlates in the nervous systems of both humans and non-human animals, and frameworks including reinforcement learning have provided new conceptual models for analyzing relationships between neural codes and behavior. The rapid expansion of neuroeconomics in the last decade has drawn together disparate strands of research on reward, motivation, and learning under a single banner. Several of the key early discoveries emphasized value-based decisions and culminated in the economic utility synthesis that organizes much contemporary work in this field.

The first major discovery emerged from studies on the representation of reward in the brain. In what would become a foundational work for both computational and decision neuroscience, Schultz, Montague, and Dayan (Schultz et al., 1997) published a synthesis of several studies demonstrating that the firing of dopamine neurons in the primate midbrain signals reward prediction errors as predicted by theories of reinforcement learning, hinting that the brain’s internal motivational processes might yield to quantitative analysis. Around the same time, Knutson and collaborators showed the first signs of robust hemodynamic activation of ventral striatum in human subjects incentivized with money (Knutson et al., 2001), a non-primary reward with no intrinsic survival value, suggesting that the brain might use similar codes to evaluate both primary and secondary rewards.
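
As an illustration of what "reward prediction error" means in reinforcement learning, the following is a minimal sketch of the standard temporal-difference error, delta = r + gamma * V(s') - V(s). The function names, the discount factor, and the learning rate are illustrative assumptions, not values or code taken from the studies cited above.

```python
def td_prediction_error(reward, value_current, value_next, gamma=0.9):
    """Temporal-difference error: delta = r + gamma * V(s') - V(s).

    gamma (discount factor) is a hypothetical value chosen for illustration.
    """
    return reward + gamma * value_next - value_current


def update_value(value_current, delta, alpha=0.1):
    """Move the value estimate toward the outcome by a fraction alpha of the error."""
    return value_current + alpha * delta


# Three textbook cases (terminal step, so the next-state value is 0):
# an unexpected reward, a fully predicted reward, and an omitted reward.
delta_unexpected = td_prediction_error(reward=1.0, value_current=0.0, value_next=0.0)
delta_predicted = td_prediction_error(reward=1.0, value_current=1.0, value_next=0.0)
delta_omitted = td_prediction_error(reward=0.0, value_current=1.0, value_next=0.0)

print(delta_unexpected, delta_predicted, delta_omitted)
```

Under these assumptions the error is positive when a reward is better than predicted, zero when it is fully predicted, and negative when a predicted reward is withheld, which is the qualitative pattern the prediction error account attributes to dopamine neuron firing.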

In another early study, Platt and Glimcher recorded neuronal activity from the lateral intraparietal area (LIP), a cortical area involved in connecting visual stimulation to attention and orienting movements of the eyes, in monkeys choosing between two targets associated with fluid rewards (Platt and Glimcher, 1999). This was a novel departure from previous studies, in which animals had been trained to produce specific, experimenter-determined behaviors in response to physical cues. In this experiment, the two options differed in size or probability of reward across blocks of trials. Platt and Glimcher found that the firing rates of LIP neurons encoded the product of reward size and reward probability, equal to the mean, or “expected value,” of each option, a variable first theorized to underlie decision-making over 300 years ago (ref Arnauld and Nicole). Consistent with this notion, firing rates predicted monkeys’ choices. While not the first evidence that the brain combined reward magnitude and probability, this work drew attention to a growing body of literature endorsing utility theory as a useful tool for unveiling the processes by which the brain organizes action (Edmonds and Gallistel, 1974; Shizgal, 1997).
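
The expected value described above is simply reward size multiplied by reward probability. A minimal sketch follows; the magnitudes and probabilities are hypothetical numbers chosen for illustration and are not taken from the experiment.

```python
def expected_value(magnitude, probability):
    """Expected value of an option: reward magnitude times reward probability."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie between 0 and 1")
    return magnitude * probability


# Two hypothetical targets: a large but unlikely reward and a smaller, likelier one.
ev_large_unlikely = expected_value(magnitude=4.0, probability=0.25)
ev_small_likely = expected_value(magnitude=2.0, probability=0.75)

# An agent maximizing expected value would pick the option with the larger product.
best_option = "small_likely" if ev_small_likely > ev_large_unlikely else "large_unlikely"
print(ev_large_unlikely, ev_small_likely, best_option)
```

Note that the option with the larger reward is not necessarily the one with the larger expected value, which is why manipulating size and probability separately across blocks can dissociate the two.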

These findings resonated with separate programs of research that had investigated decision-making in prefrontal cortex, particularly the ventromedial/orbitofrontal region (OFC; see below and Box 1). A rich series of studies in prefrontal patients demonstrated that orbitofrontal lesions produce deficits in decision-making, particularly when making choices between probabilistic gambles (Bechara et al., 1999, 2000a). These findings accorded with even earlier work in non-human primates demonstrating the responsivity of single neurons in OFC to associative learning of olfactory cues, appetitive tastants such as fats, and taste-specific satiety (Critchley and Rolls, 1996; O’Doherty et al., 2001; Rolls et al., 1996, 1999). These results were expanded by studies demonstrating the responsiveness of OFC neurons to relative changes in amounts of fluid reward, as well as to relative preference among rewards (Schultz et al., 2000; Tremblay and Schultz, 1999, 2000). Thus evidence from both single neurons and human imaging coalesced around the idea that the orbitofrontal cortex encoded subjective value of rewards and suggested it might serve as a potential hub of decision-making in the brain (Bechara and Damasio, 2005; Bechara et al., 2000b; O’Doherty et al., 2001; Rolls, 2000).

Box 1: OFC vs vmPFC: Distinction with a difference?

The vast imaging literature on decision neuroscience leaves little doubt that ventromedial prefrontal cortex and orbitofrontal cortex both play some role in processing rewards. Yet confusion persists about the respective definitions and roles of these regions.

The term “ventromedial prefrontal cortex” is a general description of a region of the brain, and does not correspond to any cytoarchitectural boundaries. It is not a term used historically in the anatomical literature, and gained popularity with the advent of functional imaging. Because cytoarchitectonic boundaries can only be defined in post-mortem brains, the use of the term “vmPFC” in the imaging literature is descriptive: a shorter way of saying, “somewhere near the bottom of the brain, in the front, in the middle.” Use of the term vmPFC typically corresponds to regions within anterior cingulate cortex or just anterior to it (though sometimes ACC and vmPFC are reported as separate regions), including Brodmann’s areas 32, 24, and 14.

In contrast, the term “orbitofrontal cortex” is commonly used in the anatomical literature, and has been formally described as being equivalent to Brodmann’s areas 10, 11, 12/47, 13, and 14 (Carmichael and Price, 1994; Petrides and Pandya, 1994). BA 11, 13, and 14 are the portions of OFC most often implicated in studies of value and utility, with 11 lying anterior to 13, and 14 lying medial to 13 (see Figure 3A). Area 14 is the most ventral section of the medial wall, and therefore can ostensibly fall under the heading vmPFC as well as OFC. In fact, connectivity studies imply that area 14 serves as a bridge between BA 13 in OFC, which has extensive sensory input but little motor output, and BA 24 and 32 in cingulate, which have motor output but not sensory input. BA 10 is commonly (though not always) considered synonymous with “the frontal pole” and is thought to have a less prominent role in reward processing, while area 12/47 refers to lateral OFC and is often associated with punishment rather than reward. The anatomical maps of OFC are highly similar between humans and non-human primates (Figure 3A (Murray et al., 2007; Petrides and Pandya, 1994; Wallis, 2007)).

Since fMRI-based studies do not provide access to the anatomical markers that differentiate these various subregions of cortex, inconsistencies in brain region nomenclature were inevitable. Consequently, the relationship between frontal cortex and reward processing can be confusing. For example, activity ascribed to vmPFC in one paper will be ascribed to OFC in another, and vice versa, despite similar coordinates [e.g. (0, 44, −12) in (Levy and Glimcher, 2012); (−4, 37, −7) in (Cox et al., 2005); see Figure 3B]. Likewise, the location of the vmPFC held to encode common currency in many fMRI studies finds its home on the orbital floor in the work of one prominent group (Hare et al., 2008; Plassmann et al., 2007) and more dorsally, in ACC, in another highly influential paper (Kable and Glimcher, 2007) (see Figure 3C). Recent advances like the brain mapping meta-analysis tool Neurosynth (http://neurosynth.org/) (Yarkoni et al., 2011a, 2011b) have begun the process of disambiguation, but much confusion persists as to the specific roles of these various subregions of cortex and how they contribute to decision making and utility calculation. Moreover, identification of functional differences in neural networks within any single brain region is not yet possible using fMRI: voxels in the typical fMRI study are about 2 mm², a smoothing kernel of approximately 5–6 mm is applied during the post-processing phase, and, to avoid false positives, only clusters containing 10 or more contiguous voxels are considered during the analysis. This level of spatial resolution precludes the differentiation of neuronal populations that are functionally distinct but intercalated into a single, functionally heterogeneous brain region.
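
To give a rough sense of the spatial scale implied by these numbers, the following back-of-the-envelope sketch computes the volume of a minimal 10-voxel cluster and the Gaussian width of a 6 mm smoothing kernel. It assumes, purely for illustration, isotropic voxels 2 mm on a side and a kernel size specified as full width at half maximum (FWHM); neither assumption is stated in the text, and the variable names are hypothetical.

```python
import math

VOXEL_SIDE_MM = 2.0       # assumed isotropic voxel edge length
MIN_CLUSTER_VOXELS = 10   # cluster-extent threshold quoted in the text
KERNEL_FWHM_MM = 6.0      # upper end of the 5-6 mm smoothing range, taken as FWHM

# Volume of the smallest cluster that survives the extent threshold.
voxel_volume_mm3 = VOXEL_SIDE_MM ** 3
min_cluster_volume_mm3 = MIN_CLUSTER_VOXELS * voxel_volume_mm3

# Standard conversion from Gaussian FWHM to standard deviation:
# sigma = FWHM / (2 * sqrt(2 * ln 2)).
kernel_sigma_mm = KERNEL_FWHM_MM / (2.0 * math.sqrt(2.0 * math.log(2.0)))

print(f"minimum cluster volume: {min_cluster_volume_mm3:.0f} mm^3")
print(f"smoothing kernel sigma: {kernel_sigma_mm:.2f} mm")
```

Under these assumptions, the smallest reportable cluster spans tens of cubic millimeters, and the smoothing kernel blurs signal across more than one voxel width in every direction, which illustrates why intermingled neuronal populations cannot be separated at this resolution.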

Consideration of single unit electrophysiology complements the functional imaging body of knowledge, but adds another level of complexity as well. Most notably, whereas “common currency” signals in vmPFC are common in the imaging literature, single neurons in the same regions in monkey do not encode this feature (Wallis, 2012). Instead, the canonical finding of single neuron common currency representation was described in Area 13m, a part of lateral OFC (Padoa-Schioppa and Assad, 2006). This finding is inconsistent with the bulk of the functional imaging reports of reward-related OFC activation. In contrast, lesion studies in nonhuman primates implicate medial OFC in value comparison, and lateral OFC in flexibly updating stimulus-reward associations linking environmental stimuli to outcomes (Noonan et al., 2010, 2012; Rudebeck and Murray, 2011; Walton et al., 2011). Yet other studies find only limited evidence of common currency encoding in neurons in either medial or lateral OFC, at least in the context of a social reward task (Watson and Platt, 2012). These differences across studies imply OFC is sensitive to the context in which the reward is received.

Single unit studies of reward in prefrontal cortex make clear that neuronal populations are functionally heterogeneous, that single neurons are multiplexed into different functional roles, and that reward processing occurs in a distributed fashion across many regions of cortex (Abe and Lee, 2011; Sul et al., 2010; Takahashi et al., 2013; Wallis and Kennerley, 2010). In this light, it is likely that vmPFC and OFC will continue to evade a single, modular, functional role assignment. The challenge will be to identify neural circuits and computational structures that span frontal cortex, or indeed the entire brain, that enable animals to perform sophisticated reward-based behaviors.

In parallel with these developments, studies of social decisions in the field of behavioral economics began to be viewed increasingly in biological terms. For example, Fehr and colleagues (Fehr and Fischbacher, 2003; Fehr and Gächter, 2002) found that in a monetary investment game in which participants were required to trust others to return the proceeds from their investment, third party individuals punished cheaters at their own expense, promoting future cooperation. This behavior, they suggested, may have enabled the evolution of cooperative human behavior. Such a perspective accorded well with functional imaging (e.g. (McCabe et al., 2001), reviewed in (Fehr and Camerer, 2007)) and non-invasive pharmacological manipulations (Kosfeld et al., 2005), which showed that prefrontal regions were more active for play against a human than a computer opponent and that administration of the neuropeptide oxytocin increased trust behavior in participants. This line of research thus began a symbiosis that became a hallmark of decision neuroscience: economics provided the means for a more rigorous investigation of social behavior, while the inclusion of biological factors broadened and deepened our understanding of the context in which decisions took place.

These early studies quickly gave rise to, and coalesced around, a set of ideas linking the neurobiological study of decisions with the quantitative tools and framework of economics. In tandem with the growth of functional MRI as a tool in cognitive neuroscience, investigators moved to identify the neural processes underlying economic variables like probability, risk, ambiguity, and utility (Hayden et al., 2010; Hsu et al., 2005; Huettel et al., 2006; Kable and Glimcher, 2007; Knutson and Peterson, 2005; McCoy and Platt, 2005). Glimcher (Glimcher, 2004) provided an early synthesis of these ideas, in which he argued that, in order to make decisions between disparate types of goods -- food and money, for example -- the brain must rely on a final common pathway for comparing their value. Glimcher specifically argued that this neural amalgam might well correspond to the economic concept of subjective utility, whose axiomatic foundations were laid decades earlier by von Neumann, Morgenstern, and Savage (von Neumann and Morgenstern, 1947; Savage, 2012). That is, choice options should be represented in the brain as directly comparable utilities, a “common currency” in which costs and benefits could be weighed together for the purpose of selecting appropriate actions (reviewed in (Levy and Glimcher, 2012)). This idea sketched an explicit roadmap for neuroeconomics: namely, to identify the brain structures contributing to utility computation and comparison, and determine their roles in the process.

The brain structures currently thought to underlie decision making emerged as candidates in the earliest studies, and have garnered sustained interest since. They include the orbitofrontal and cingulate cortices, anterior insular cortex, posterior parietal cortex, the amygdala, and ventral striatum. Not only are many of these areas linked by the same “limbic” aspects of the cortico-basal ganglia loop, they share connections (often reciprocal) with dopaminergic and serotonergic nuclei in the midbrain (Haber and Knutson, 2010). Most importantly, these areas are routinely and reliably activated in human subjects anticipating or choosing between options resulting in monetary rewards, as well as nutritive outcomes, altruistic donations to others, and pictures of attractive faces (Smith et al., 2010; Tankersley et al., 2007). These observations, in combination with studies showing neural correlates of reward (Tremblay and Schultz, 1999), risk, evidence accumulation (Gold and Shadlen, 2007), and effort costs in monkeys and rodents (Rudebeck et al., 2006; Walton et al., 2003, 2006), provided strong evidence for a distributed system of utility computation and comparison.

Likewise, subsequent developments have strengthened the influence of computational learning models, particularly reinforcement learning, as frameworks for analyzing choice behavior and understanding the biology of decision-making. Following on the work of Schultz, Montague, and Dayan, investigators looked for and identified correlates of subjective utility (Kable and Glimcher, 2007; Peters and Büchel, 2009), temporal discounting of future rewards (Kable and Glimcher, 2007; Kim et al., 2008; Louie and Glimcher, 2010; McClure et al., 2004, 2007), action values (Barraclough et al., 2004; Behrens et al., 2007; Kennerley et al., 2006; Quilodran et al., 2008), online updating of value in the face of environmental change (Barraclough et al., 2004; Behrens et al., 2007; Daw et al., 2006; Dorris and Glimcher, 2004; Pearson et al., 2009; Sugrue et al., 2004), and decision confidence (Kepecs et al., 2008; Kiani and Shadlen, 2009; Resulaj et al., 2009). These studies further strengthened the hypothesis, long argued by behavioral scientists, that animals, including humans, acquire complex behavioral patterns using algorithms similar to those used in robotics to guide artificial agents. The neuroeconomic findings, however, began to proffer a specific neural code that might actually be used by the brain to implement the proposed learning algorithms.
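
Temporal discounting, one of the variables listed above, can be made concrete with a small sketch. The example below uses the hyperbolic form V = A / (1 + kD), one commonly used model of how subjective value falls with delay; the choice of this form, the discount rate k, and the amounts and delays are all illustrative assumptions rather than parameters reported in the cited studies.

```python
def hyperbolic_value(amount, delay, k=0.25):
    """Subjective value of a delayed reward under hyperbolic discounting.

    V = amount / (1 + k * delay); k is a hypothetical per-unit-time discount rate.
    """
    if delay < 0:
        raise ValueError("delay must be non-negative")
    return amount / (1.0 + k * delay)


# A hypothetical choice: 10 units now versus 20 units after a delay of 12 time units.
value_now = hyperbolic_value(amount=10.0, delay=0.0)
value_later = hyperbolic_value(amount=20.0, delay=12.0)

chosen = "now" if value_now >= value_later else "later"
print(value_now, value_later, chosen)
```

With this discount rate the larger, later reward is worth less than the smaller, immediate one; a smaller k would reverse the preference, which is how individual differences in patience are typically expressed in such models.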

It gets complicated: OFC and the common currency of utility

The first generation of neuroeconomic studies found evidence for utility-related activity in brain regions such as orbitofrontal and cingulate cortices, striatum, and posterior parietal cortex, as well as the dopaminergic and serotonergic systems. These findings gave rise to the hypothesis that a distributed network of brain regions was responsible for computing the utility of choice options, with particular functions assigned to particular choice options. However, recent findings have brought new complications to bear on this early consensus, which rested largely, but not entirely, on evidence from human neuroimaging. In particular, single-unit studies from a variety of research groups have noted a heterogeneity in the patterns of neuronal responses to variables like reward (Abe and Lee, 2011; Hayden and Platt, 2010), effort (Rudebeck et al., 2006), and cost (Kennerley et al., 2009) that was not apparent in seminal early studies. That is, individual neurons appear to be responsive to nearly all combinations of task-related variables, with only quantitative differences between regions (Wallis and Kennerley, 2010).

But why should heterogeneity be a problem? At issue is the meaning of statements like “ACC encodes action values” or “OFC encodes utility,” and whether these statements are comparable to more accepted ones like “area MT encodes visual motion” or “the fusiform gyrus encodes faces.” In the latter cases, what we implicitly mean is that a large fraction of the cells in a given area respond reliably to a given class of inputs, typically with a well-characterized response pattern (tuning curve, burst firing, etc.) (Parker and Newsome, 1998). In other words, we expect that the “average” unit in an area is strongly driven by a given variable and that most units are similar to the prototype.

How closely do neurophysiological results align with this picture? In the simplest version of the common currency hypothesis, cells in “utility” regions should compute the building blocks of utility, and firing rates should reflect either utility or its constituent components. In more sophisticated theories (Beck et al., 2008; Ma et al., 2006), a population of preferentially tuned cells encodes utility at a population level, obviating the need for a monotonic representation of utility by individual neurons. Yet, the measured responses of individual cells in many of these regions are often far more complex than the simple “region X encodes Y” pattern.

Consider, for instance, the case of the orbitofrontal cortex (OFC). Neuronal activity in the OFC has been shown to be sensitive to properties of a reward, including its size, probability, and delay (Kennerley and Wallis, 2009; O’Neill and Schultz, 2010; Roesch et al., 2006; Wallis and Miller, 2003). In simple tasks involving nutritive rewards, OFC neurons respond similarly to all cues that lead to the same outcome, even if the stimulus properties of those cues are different (Schoenbaum et al., 2011). Moreover, neurons in primate OFC encode utility for different flavors of fluid reward on a common scale (but see below) (Padoa-Schioppa and Assad, 2006). Combined with corroborative fMRI evidence (Peters and Büchel, 2010), these findings endorse the idea that OFC is a primary site of common currency computation. That is, OFC is the node at which abstract notions of utility are assigned to disparate types of reinforcement. These findings constituted one of the great early breakthroughs of neuroeconomics, in that they demonstrated the power of concepts drawn from economics to assign a specific functional role to a poorly understood brain region (Montague and Berns, 2002). Yet in the studies where utility signals were found in OFC, these signals, corresponding to the offered and chosen values of options, were found in only a small (but highly significant) percentage of neurons (Padoa-Schioppa and Assad, 2006, 2008), while in tasks examining a different set of decision-related variables (Kennerley and Wallis, 2009; Wallis and Kennerley, 2010), half the neurons in this area were responsive to some variable (reward, probability of reward, effort cost). To complicate things further, those subregions of OFC in which neurons reliably encode utility in monkeys do not correspond to those regions that show hemodynamic activation during similar tasks in humans (see Box 1). Clearly, signals in OFC carry information about decision variables, but this information appears to be encoded much more heterogeneously and diffusely than in better-characterized sensory or motor areas.

Other evidence also seems to dispute the notion that the primary function of OFC is to compute utility in a common currency. Studies in rats and monkeys demonstrate that features of the predictors, outcomes, or responses required to receive a reward are also encoded by neurons in OFC (Schoenbaum et al., 2011). At the very least, many OFC neurons signal variables other than reward or punishment, and are additionally sensitive to specific features present in the environment. For instance, a recent paper comparing the responses of OFC neurons to two different modalities of reward found little evidence in support of a common currency neural code for utility (Watson and Platt, 2012). In that study, monkeys performed a decision making task in which they chose between two targets offering disparate amounts of fluid reward, one of which was paired with images of other monkeys. In this task, choices are strongly determined by the size of fluid rewards associated with each target, yet monkeys sometimes choose to see some images, particularly the faces of dominant males or the perinea of females (Deaner et al., 2005), even when doing so yields slightly less fluid than choosing not to see the images. This substitutability of fluid rewards and visual images is a key component of utility in economics. Although choices were largely determined by fluid value, neuronal activity in OFC was much more strongly determined by which images were on offer. More OFC neurons discriminated between social image categories (e.g., images of dominant monkey faces vs. subordinate monkey faces) than between fluid amounts (largest possible fluid amount vs. smallest possible fluid amount). This discrepancy between choice behavior and neuronal sensitivity belies any simple, direct correspondence between utility as inferred from revealed preferences and a common neural currency of utility in OFC.

In fact, in this task, decision context was a major determinant of neuronal activity in OFC. Specifically, the type of image available for viewing (dominant male, female, etc.) in each block of 20 to 30 trials was correlated with firing rates of OFC neurons, regardless of which decision the monkey made. When monkeys chose to view a blank screen, the type of image monkeys chose not to see influenced neuronal activity. Consistent with these findings, Padoa-Schioppa reported that the activity of OFC neurons is not always a good predictor of behavioral preferences (Padoa-Schioppa, 2013). When monkeys were roughly indifferent to two different juice flavors offered in different amounts, and hence the utility of the two offers was by definition equivalent, firing rates of OFC neurons did not predict decisions. Instead, trial-to-trial fluctuations in the activity of neurons encoding the flavor of the chosen juice predicted choices.

Finally, lesion studies in both humans and animals suggest that the OFC’s role in decision making extends beyond the computation and representation of utility on a common neural scale. Although humans with OFC lesions do exhibit profound abnormalities in decision making (e.g. Fellows and Farah (2003, 2007)), these deficits tend to be subtle, impervious even to diagnosis through neuropsychological testing in a standard clinical setting (Eslinger and Damasio, 1985). By contrast, OFC lesions strongly disrupt performance in tasks in which the association between cue and outcome is unstable across trials (Schoenbaum et al., 2011). OFC lesions reliably interfere with reversal learning, in which stimulus-reward associations are abruptly reversed (Schoenbaum et al., 2011). These findings have been replicated in monkeys (Murray and Wise, 2010), revealing that OFC lesions interfere not with the computation of utility, but with the flexible linkage of cues and outcomes. This suggests that OFC guides decision making by allowing the animal to learn and predict relationships between environmental cues and important outcomes (including rewards), particularly in situations in which the relationship between cues and outcomes is subject to change (Murray and Wise, 2010). The need to rapidly update stimulus-outcome associations is particularly profound in social contexts, in which changes in mood or goals in a single individual, or changes in behavioral interactions between several, can happen in an instant. The transition between relaxed tolerance and intense aggression within a social group often occurs nearly instantaneously, and far more rapidly than the depletion of food patches. This demand for rapid updating of appropriate responses in social contexts may have been a primary evolutionary driver of the enlargement and specialization of OFC and its related circuitry in highly social species.

But this complexity is far from limited to OFC. In many of the midline and lateral cortical areas thought to be important to the process of decision-making, neuronal responses have proven highly heterogeneous, with variability in tuning curve shape and direction (increasing or decreasing), which combinations of variables are encoded, and conclusions about which particular computations should be assigned to a given area (Abe and Lee, 2011; Hayden and Platt, 2010; Heilbronner et al., 2011; Kennerley and Wallis, 2009; Pearson et al., 2009; Seo and Lee, 2007; Wallis and Kennerley, 2010). Moreover, studies that have sought to test the prediction of the common currency hypothesis that utility will be encoded consistently across disparate rewards, contexts, and types of decisions within the same experimental session have met with mixed results (Dorris and Glimcher, 2004; Heilbronner et al., 2011; Kennerley and Wallis, 2009; Luk and Wallis, 2009; Padoa-Schioppa and Assad, 2006). Even within a task, prefrontal regions appear to encode different task-related variables at different times during a single trial. For example, neurons in lateral prefrontal cortex (LPFC), which lies anatomically downstream of OFC, are sensitive to value when the monkey has knowledge of the amounts and types of juices available but no knowledge of the action required to report his choice (Cai and Padoa-Schioppa, 2012). Once the information about the necessary action is revealed, the LPFC neurons switch from encoding value to encoding the action plan.

While not ruling out the common currency hypothesis, these findings suggest that simple intuitions about how the nervous system computes decisions -- those based on monotonic encoding of utility -- do not fully align with evidence at the single neuron level. When neurons respond inconsistently and heterogeneously to multiple experimental variables, it is an indication that we have yet to hit on the correct “neural axes” -- the preferred variables encoded by the brain -- and that the actual computations involved are either significantly more complex than simple models assume or distributed among neural populations in a fashion more sophisticated than that instantiated in current models.

Not just prediction error: the complications of dopamine

Yet the common currency hypothesis is not the only neuroeconomic theory to have been substantially complicated by recent findings. Even the early computational breakthroughs linking dopamine to reinforcement learning have required reevaluation as evidence has mounted for a broader role for dopamine in motivated behavior. Equally influential theories of dopamine function posit that dopamine is responsible for encoding motivational value more broadly as opposed to hedonic value -- “wanting” instead of “liking” -- and that dopamine’s primary role is in signaling “incentive salience” (Berridge, 2007; Tindell et al., 2009). In many cases, this definition overlaps with that of the reward prediction error hypothesis, but there remain conceptually interesting boundary cases in which events are salient without prediction error and vice-versa (Bromberg-Martin et al., 2010; Horvitz, 2000; Lammel et al., 2014). Indeed, more recent physiological studies have both elucidated connections between the dopaminergic midbrain and other brainstem nuclei and demonstrated that responses of dopamine cells are more diverse than the classic prediction error response (Bromberg-Martin and Hikosaka, 2009; Bromberg-Martin et al., 2010; Fiorillo, 2013; Matsumoto and Hikosaka, 2009; Matsumoto and Takada, 2013). These studies have shown that signaling by the dopaminergic midbrain is both anatomically precise and more diverse than at first suspected. For instance, cells within VTA that stain positive for tyrosine hydroxylase and project to the lateral habenula (LHB), a nucleus that responds to aversive events and indirectly inhibits VTA, nonetheless inhibit the LHB via release of GABA (Jhou et al., 2009; Stamatakis and Stuber, 2012; Stamatakis et al., 2013). While not upending the reward prediction error hypothesis, this suggests that the dopaminergic midbrain transmits a repertoire of diverse, anatomically specific signals rather than broadcasting a single prediction error.

With these new results pushing at the edges of the early consensus, the limits of the first generation of neuroeconomic models have become increasingly apparent. While many of the foundational insights have continued to prove robust, there is also a sense in which the earlier frameworks require a firmer grounding in fundamental biology. Here we argue that, in addition to psychological and economic decision frameworks, our understanding is likely to benefit from renewed focus on the basic ecological problems that animals have actually evolved to solve. To understand decisions within the brain, we must understand brains in their evolutionary and ecological context, starting with the types of problems they have long confronted and only later building toward the sophisticated quantitative reasoning of enculturated humans. In what follows, we elaborate upon this argument and review recent advances along these lines.

Back to nature: decision ethology

The brain is an organ that evolved to generate adaptive behavior responsive to its environment. Just as ecological niches and locomotor apparatus are diverse, so are nervous systems diverse. And yet the vertebrate brain, the mammalian brain, and the primate brain each represent both a refinement and an elaboration of the same basic plan for an organ that maps sensory inputs to motor outputs. Viewed this way, the brain forms the core processor of the behavior machine, and the relevant theoretical constructs for understanding its function are the essential problems it confronts, the algorithms used to solve them, and the underlying architecture that implements them. This is bottom-up language, engineering language, and focuses on constraints, hacks, and specializations for solving common problems.

Decision science, by contrast, has been formulated as a universal approach to mathematizing behavior. It is axiomatic, top-down, and general. It focuses on theorems, optimality, and rationality. Among its strengths is a domain-agnostic set of tools for parsing, discussing, and modeling choice behavior, and it is the power of these tools that makes them attractive as roadmaps to a neurobiology of decision-making. Yet the flexibility and generality of the neuroeconomic approach also impose key limitations for the applicability of these tools to biological systems. This is partly because the neuroeconomic paradigm is designed to offer a parsimonious accounting of behavior, and this parsimony hides much crucial biological complexity. It is also because, as computer scientists have long appreciated, an algorithm can be specified separately from the details of its implementation, so a good description of behavior need not constrain the machinery that produces it. It is not, however, because of a focus on rationality and optimality, which serve as useful benchmarks. Above all, it is because there remains little evidence to argue that economic variables are the “right” neural variables, even if they can be used to both prescribe and describe behavior in an “as if” sense.

This last point merits special emphasis. As has often been noted, human behavior displays striking departures from rationality (Ariely, 2009; Gigerenzer and Selten, 2002). Likewise, animal behavior can exhibit some of the same biases (Hayden et al., 2010; Heilbronner and Hayden, 2013; Lakshminaryanan et al., 2008; Pearson et al., 2010; Shafir, 1994; Stephens and Anderson, 2001). Yet these departures from rationality or optimality just as often reflect our ignorance of constraints under which agents operate, and modeling these constraints has been a signal achievement of the mathematical approach to behavioral ecology (e.g., foraging theory, mate selection theory), which we endorse below. In these theories, optimality serves as a benchmark against which real behavior and models may be measured, not a normative claim (or even a realistic hypothesis). Rather, the promise of behavioral ecology when compared with other models of decision making lies in the problems on which it focuses. The problems of behavioral ecology and its offshoots are problems of basic biology -- the four “F”s: fighting, foraging, fleeing, reproduction -- and the most likely to have undergone significant selection pressure. As a result, they are the problems most likely to have shaped the brain and dictated its function. By contrast, the problems most basic to neuroeconomics -- choices between risky lotteries, intuitions about probabilities, purchasing decisions -- require borrowing from overlapping systems adapted to different biological ends -- termed “exaptation” in the evolutionary biology literature (Gould and Vrba, 1982) -- and so may be more likely to constitute difficult “edge cases” for the brain. Thus the ethological approach is to be preferred not because it is less mathematical or less concerned with optimality, but because its central questions are likelier to map directly onto the problems the brain evolved to solve.

In response to these considerations, several recent lines of investigation have attempted to ground the study of decisions more fully in its biological and evolutionary context. These studies borrow from the language of mathematical behavioral ecology -- the mathematical study of animal behavior that encompasses not only foraging, but diet selection, mate choice, and collective dynamics (Giraldeau and Caraco, 2000; Stephens and Krebs, 1986; Stephens et al., 2008) -- and begin not with the most complex decisions human beings can perform, such as quantitative decisions about abstract concepts like money, but with simple decisions common to a wide variety of animals: Should I chase this prey or not? Should I keep foraging where I am, or look for something better? Should I leave the pack and strike out on my own? Viewed mathematically, behavioral ecology is a close cousin of both economics and evolution, borrowing tools, including notions of optimality, from both. But just as importantly, by furnishing us with a set of tractable models that often apply across species, it presents us with an opportunity to study problems that recur across taxa, problems much more likely to result in near-optimal behavior and utilize common algorithms (Adams et al., 2012).

Two lines of thinking motivate this turn from a perspective borrowed from neoclassical and behavioral economics to one rooted in behavioral ecology and ethology. First is the argument from function. Despite the fact that humans now routinely engage in complex decisions like financial investments and interaction via social media, these activities have taken place only very, very recently in evolutionary time. As a result, they recruit circuits adapted for a variety of ancestral behaviors in new and complex ways. Similarly, because most studies generalize from complex tasks to underlying biology, tasks should be closely aligned to basic biological strategies for their required behaviors to map properly onto nervous system function. Second is the argument from practicality. In animal behavior, true cost functions are rarely known, and agents are assumed to possess only limited computational capability. As a result, while ethologists often borrow computational tools from economics and decision theory, the emphasis is on discovering conditions and constraints under which observed behaviors are normative. Underlying this is the assumption that evolution works to punish suboptimal behavior, but this assumption is more valid for common tasks than for rare ones, and for tasks more proximate to reproductive success than those distal to it. Thus, common, essential tasks offer greater potential for behaviors that are reliable, better characterized in an optimality framework, and have much stronger ties to underlying neurophysiology.

Of course, most animal studies necessarily require a certain level of ecological validity to facilitate ease of training, and all laboratory paradigms are to some extent artificial. The key question in the case of animals is whether the demands of the task “make sense” in the context of their natural environment. Some foragers experience primarily sequential, not simultaneous, encounters with their foods; for these animals, two-alternative forced choice paradigms may not correspond as well to the natural behavioral repertoire (Stephens and Krebs, 1986). Similarly, an ability to make fine discriminations of probability or to take into account enforced post-reward delays may stretch the cognitive capabilities of some animals (Blanchard et al., 2013; Pearson et al., 2010; Stephens and Anderson, 2001; Stephens and McLinn, 2003). None of this is to say that such tasks teach us nothing about the neurobiology of a given species. Rather, the contrast is analogous to that in visual neuroscience between presenting animals with artificial but mathematically tractable stimuli like Gabor patches and ecologically rich but mathematically difficult natural movies: the latter reveal patterns of activity not always predicted by models developed from the former (Lewen et al., 2001; Vinje and Gallant, 2000).

In keeping with this approach, a number of recent papers have begun to examine foraging-like decisions neurobiologically in a host of model species (Bendesky et al., 2011; Flavell et al., 2013; Hayden et al., 2011; Kolling et al., 2012; Kvitsiani et al., 2013; Schwartz et al., 2012; Yang et al., 2008). This work has strong ties to previous studies of decision making in volatile environments (Barraclough et al., 2004; Behrens et al., 2007; Daw et al., 2006; Pearson et al., 2009; Sugrue et al., 2004), but focuses on laboratory analogues of a single prototypical conundrum in foraging -- the patch leaving problem -- in which an animal must decide when to leave a depleting food patch and search for another, richer one. In the problem's original formulation (Charnov, 1976), patches were spatially separate, necessitating tradeoffs between diminishing returns and the time and energy costs of travel to a new patch. In its modern laboratory incarnations, travel between patches is simulated by delays or costs, though the problem retains the same structure as its natural counterpart.
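The patch leaving problem has a compact formal statement, which can be sketched as follows. The notation here is our own shorthand rather than the source's: $g(t)$ is the cumulative intake after residence time $t$ in a patch (assumed increasing with diminishing returns), and $\tau$ is the travel time (or imposed delay) between patches. A forager that maximizes its long-run rate of intake should then leave at the residence time $t^{*}$ at which the instantaneous rate of gain in the patch falls to the average rate for the environment as a whole:

```latex
R(t) = \frac{g(t)}{t + \tau},
\qquad
\frac{dR}{dt}\bigg|_{t = t^{*}} = 0
\;\Longrightarrow\;
g'(t^{*}) = \frac{g(t^{*})}{t^{*} + \tau} = R(t^{*}).
```

One consequence, under these assumptions, is that longer travel times lower the achievable average rate and therefore favor longer patch residence.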

Two features of these studies are remarkable. First is the ubiquity of near-optimal behavior across species. As has long been appreciated (Stephens and Anderson, 2001; Stephens and McLinn, 2003), decision tasks that are superficially very similar can elicit vastly different behavior when framed as foraging or economic temporal discounting problems, with the former provoking near-optimal behavior (in accord with the so-called “marginal value theorem”) and the latter yielding systematically suboptimal behavior described by models of temporal discounting. Second, neural activity in these studies seems remarkably consistent, with activity in dorsal anterior cingulate cortex apparently signaling a shift away from the current default option in humans, monkeys, and rodents (Hayden et al., 2011; Kolling et al., 2012; Kvitsiani et al., 2013; Wikenheiser et al., 2013). Furthermore, the algorithms describing this behavior appear to be shared across species, including worm and fruit fly (Bendesky et al., 2011; Flavell et al., 2013; Schwartz et al., 2012; Yang et al., 2008), albeit instantiated in different biological “hardware” (Adams et al., 2012).

For instance, in a laboratory task in which monkeys made foraging-like choices between an immediate reward option with diminishing returns and a delayed option that reset the reward option to its initial value (analogous to the choice to “stay” in a current food patch or “leave” in search of new options), monkeys showed choice behavior very close to that predicted by the marginal value theorem: they continued to forage in each “patch,” choosing the reward option, until the point at which that reward fell below its mean value for the environment as a whole, at which point monkeys chose to “leave” the patch (Hayden et al., 2011). More importantly, firing of individual neurons in anterior cingulate cortex (ACC) showed increasing activity as the reward diminished and the animal neared its “leave” decision, reminiscent of the integration to bound mechanism observed in some brain areas during perceptual decision making (Gold and Shadlen, 2007; Krajbich et al., 2010; Stanford et al., 2010). And indeed, while the delay that followed the “leave” option modulated the rate of rise of the neural response, firing rates at the time of the leave decision were consistent across conditions, in keeping with the hypothesis of a neural threshold for patch-leaving. Yet neural responses in the foraging decision showed key differences, as well: unlike the rapid rise in firing rate observed in various premotor areas during visual motion discrimination, increases in ACC firing rates during foraging took place over an entire series of decisions, on a time scale an order of magnitude greater than those observed during a single perceptual decision. In addition, ACC neurons did not fire continuously for the duration of the task, but in phasic bursts around the time of each decision, with increasing numbers of spikes as the foraging bout progressed. All this suggests that, if integration to bound is indeed the relevant algorithm, it must be instantiated in distinct neural circuits and be involved in separate computations, an example of a computational algorithm replicated across behaviors (Adams et al., 2012).
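The stay-or-leave logic of such a task can be illustrated with a short simulation. This is a sketch under stated assumptions, not the analysis used in the cited study: rewards are assumed to shrink by a fixed `decrement` each time the “stay” option is chosen, each harvest takes a fixed `handling_time`, and leaving costs a fixed `travel_time`. All function names and parameter values are hypothetical. The code finds the rate-maximizing number of harvests per patch by brute force and then checks the discrete form of the marginal value condition: the last harvest taken should pay at least the environment's average rate, and the next one should pay no more than that rate.

```python
def reward_rate(n_stays, r0, decrement, handling_time, travel_time):
    """Long-run reward per unit time if the forager harvests n_stays times, then leaves."""
    harvest = sum(max(r0 - k * decrement, 0.0) for k in range(n_stays))
    return harvest / (n_stays * handling_time + travel_time)


def optimal_stays(r0, decrement, handling_time, travel_time, max_stays=50):
    """Brute-force search for the number of harvests per patch that maximizes reward rate."""
    return max(
        range(1, max_stays + 1),
        key=lambda n: reward_rate(n, r0, decrement, handling_time, travel_time),
    )


def satisfies_mvt(n_stays, r0, decrement, handling_time, travel_time):
    """Check the discrete marginal-value condition at n_stays.

    The last harvest taken should yield at least the overall average rate,
    and the first harvest skipped should yield no more than that rate.
    """
    rate = reward_rate(n_stays, r0, decrement, handling_time, travel_time)
    last_taken = max(r0 - (n_stays - 1) * decrement, 0.0) / handling_time
    next_skipped = max(r0 - n_stays * decrement, 0.0) / handling_time
    return last_taken >= rate >= next_skipped


if __name__ == "__main__":
    # Hypothetical parameters: first harvest worth 10, shrinking by 1 per harvest.
    for travel in (1.0, 10.0):
        n = optimal_stays(r0=10.0, decrement=1.0, handling_time=1.0, travel_time=travel)
        print(travel, n, satisfies_mvt(n, 10.0, 1.0, 1.0, travel))
```

With these made-up parameters the search selects 4 harvests per patch when the travel time is 1 and 8 harvests when it is 10, so a costlier “leave” option shifts the optimum toward staying longer.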

Recent results have both deepened and broadened these findings. In a similar study in rodents (Kvitsiani et al., 2013), distinct subtypes of prefrontal neurons preferentially influenced stay and leave decisions. Specifically, perisomatically targeting parvalbumin-positive neurons fired in response to leave decisions and encoded the duration of the preceding patch residence. In humans performing a similar foraging task, hemodynamic activity in dACC signaled the decision to abandon a current default option while the ventromedial prefrontal cortex (vmPFC) was more involved with comparisons between options (Kolling et al., 2012). Finally, a recent study of the related diet selection task, in which monkeys were required to balance the costs and benefits of different types of “prey” items (sequentially offered delayed rewards), found that dACC signaling depended on choice: neurons encoded delay when animals chose the reward, but encoded reward amount when they rejected the offer (Blanchard and Hayden, 2014). Together, these studies paint a picture of ACC as a key node for making flexible decisions, with activity primarily referenced to disengaging from the current option. What is truly striking is how consistent and robust the findings are across species when probed with such “natural” foraging-like tasks.

Work on the neurobiology of foraging in invertebrates, while confirming the behavioral predictions of the marginal value theorem, has also presented a different and compelling picture of the biological mechanisms that regulate search behavior in these very different species. For instance, two recent papers (Bendesky et al., 2011; Flavell et al., 2013) demonstrated that different strains of C. elegans differ in the time at which they choose to leave a nutrient patch, and that these differences are regulated by polymorphisms in a noradrenaline-like catecholamine receptor. Moreover, a pair of neuromodulators, serotonin and pigment dispersing factor (PDF), govern the transition between foraging within a patch and searching for a new one. Along similar lines, studies in fruit flies have shown that the process of egg deposition involves a sensitive calibration of costs and benefits, particularly nutrient density at the site of egg laying and foraging costs of progeny (Schwartz et al., 2012; Yang et al., 2008). In these cases, natural decisions faced by each species appear to require the interplay of neural circuits that control the action pattern with neuromodulators that shift these circuits from one stable pattern to the other. This raises the possibility that foraging decisions, as well as other large-scale behavioral transitions, might likewise rely heavily on neuromodulator signaling. If so, our understanding of decision-making disorders linked to dysfunctional neuromodulatory systems might benefit from consideration of these fundamental biological problems, an important avenue for future research.

Despite these advances, it is important to emphasize that the class of ethologically relevant prototype decisions is not limited to foraging decisions, or even decisions over nutritive rewards. In the next section, we turn to a much larger, richer class of decisions -- those involving the social milieu -- to argue that these behaviors constitute not only a much stronger challenge for the value-based decision paradigm, but a greater potential for addressing larger questions in cognition.

No ape is an island: the promise of social cognition

For humans and most non-human primates, other individuals are the most salient, dynamic, and important part of the natural environment. For social animals, navigating group interactions presents one of the greatest sources of evolutionary pressure, requiring capabilities as diverse as recognizing individuals, knowing social rank, assessing mates, remembering past interactions, competitive foraging, forming and maintaining alliances, predicting the behavior of others, and understanding third-party relationships (Bergman et al., 2003; Cheney and Seyfarth, 2008; Cheney et al., 1986). In fact, the increasing cognitive complexity of group living in anthropoid primates may be responsible for the enlargement of brain size across species (Dunbar, 1998; Dunbar and Shultz, 2007).

From a methodological and operational standpoint, the diversity of social stimuli and large repertoire of potential interactions presents both a challenge and an opportunity. The challenge, of course, is that tasks involving social behavior are incredibly difficult to control, with the separation between “low-level” stimulus features and “higher” cognitive processes a particularly thorny one. However, this dichotomy has also come to seem increasingly subtle, with representations specific to social interactions now thoroughly documented in both visual sensory (Tsao and Livingstone, 2008; Tsao et al., 2003, 2006) and motor (Iacoboni et al., 2005; Rizzolatti and Craighero, 2004) systems, as well as in olfaction (Chamero et al., 2012). Thus, while social processes may seem straightforward to define behaviorally -- grooming, aggression, courtship, mating, etc. -- disentangling generalized neural circuits from those specialized for social functions has proven much more difficult, intimating that these behaviors differ in degree more than kind. But the intrinsic complexity of social interactions also presents an opportunity for decision neuroscience. In a world of kinships, shifting dominance hierarchies, and constant competition for life-or-death stakes, decision systems must rely not only on complex, evolved priors for interactions, but on flexible behavioral updating in response to a rapidly changing social landscape. That is, while nervous systems may not always possess specialized hardware for social interaction, this interaction is important because it constitutes most of what some species do with the hardware they have.

By contrast, most decision-making studies have relied on nutritive rewards, which exist predominantly within a low-dimensional space (one scale for each major nutrient). Though some lines of research have studied multiple types of nutritive reward (Padoa-Schioppa and Assad, 2006, 2008), examined the effects of satiety (Balleine and Dickinson, 1998), or varied costs across modalities like time and muscular exertion (Wallis and Kennerley, 2010; Walton et al., 2006), the space of possible rewards utilized in most neurobiological studies to date has been quite limited. And while such simplifications are often a useful feature in designing well-controlled experiments, they also overlook much of the complexity that social animals have evolved to surmount, including the most critical decisions they face.

Of course, the common currency framework predicts that social stimuli should be comparable to other types of reward at the level of utilities, and early behavioral and neural studies found evidence for social value signals in the brain similar to those identified for fluid and monetary rewards. Deaner and colleagues first demonstrated that the value of social stimuli (images of dominant and subordinate males and female hindquarters) for monkeys could be measured behaviorally by the relative substitutability of images and fluid rewards in a simple choice task (Deaner et al., 2005), and Hayden and colleagues extended the same framework to measure the value of social stimuli for humans by substitutability for monetary rewards (Hayden et al., 2007). Building on these behavioral studies, Smith and colleagues demonstrated that humans viewing pictures of attractive faces activated regions of the vmPFC and OFC that were also involved in processing monetary gains and losses (Smith et al., 2010). Indeed, studies have identified the vmPFC as crucial for encoding the utility of a wide array of reward types (Grabenhorst and Rolls, 2011) (see Box 1). Thus these early studies bolstered the view that social factors in the environment, like other variables, are compressed by the brain into a single currency of utility that is used when comparing options to render a decision.

Despite the attractive simplicity of this framework, subsequent studies have demonstrated much additional complexity that is not easily accounted for by the idea of a common neural currency, a monotonic utility scale within the brain. For example, Heilbronner and colleagues demonstrated that neurons in posterior cingulate cortex signal value within a task but not across decision contexts as diverse as risky choice, temporal discounting, and choice of a social stimulus (Heilbronner et al., 2011). Even regions canonically associated in the fMRI literature with the representation of utility, including OFC and striatum, have been shown in single unit neurophysiology studies to comprise segregated networks or intercalated neurons that differentially respond to different types of rewarding stimuli. For instance, when monkeys choose between fluid rewards and viewing social stimuli (Klein and Platt, 2013; Watson and Platt, 2012), single neurons in both striatum and OFC responded much more strongly to the choice of social information, even though fluid reward drove choice behavior more strongly. Just as importantly, for those neurons signaling social information, responses were not ordered by behavioral preference, but instead reflected attentional priority and social context, suggesting a more complex representation of social information than a simple common currency of utility. In the striatum, populations of neurons signaling social information and fluid rewards exist along an anatomical gradient, with neurons in the medial striatum more strongly signaling visual social information and neurons in the lateral striatum, including the putamen, more strongly signaling the value of fluid rewards (Klein and Platt, 2013). We speculate that the parallel processing streams in the primate striatum devoted to gustatory and social information, respectively, arose from the partial repurposing of a primitive neural network (Adams et al., 2012) (Figure 2). This primitive network was probably devoted to nutrient foraging, as variants of such neural mechanisms are seen universally throughout the animal kingdom. Duplication and specialization of such a network for the purpose of social information foraging seems likely to have emerged more recently in the primate lineage, in concert with the evolution of large, complex, dynamic social groups.

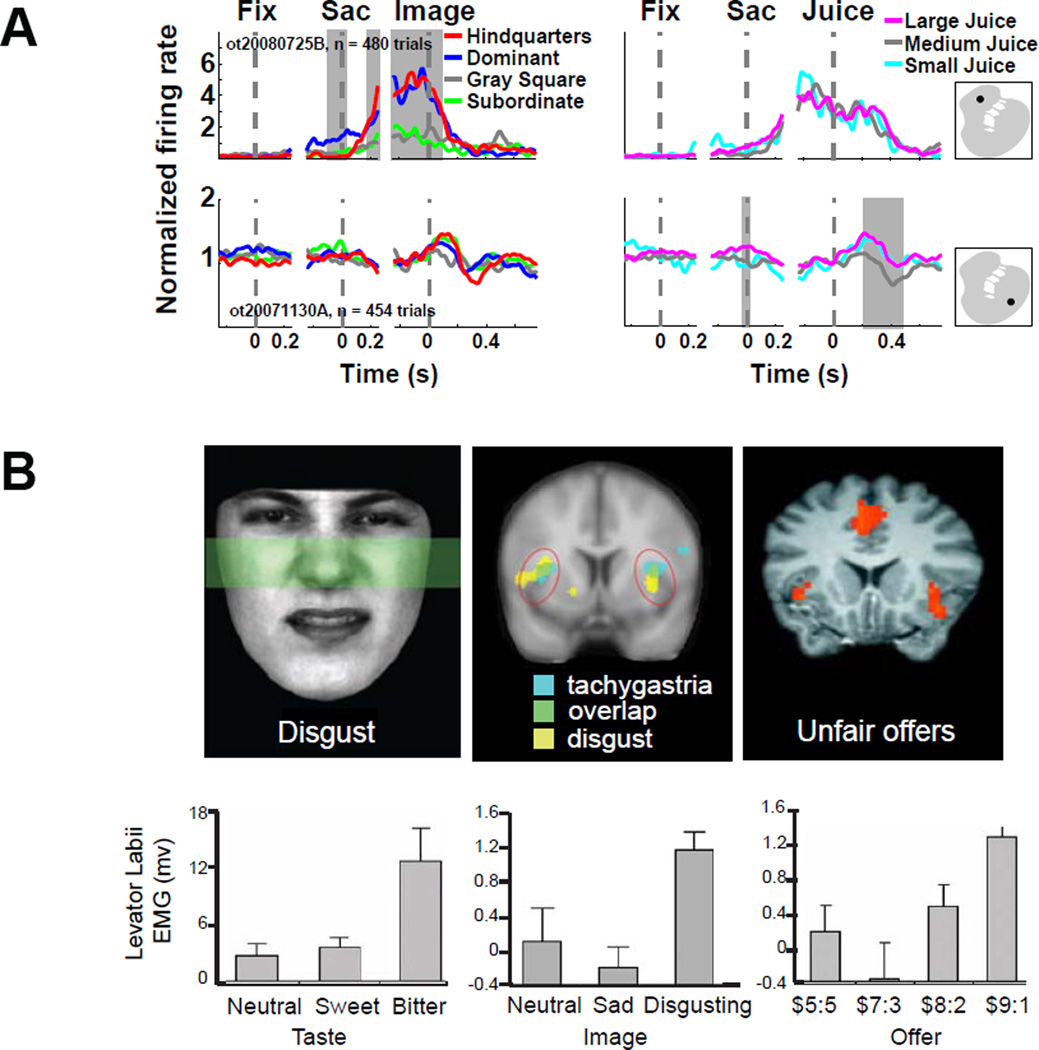

Figure 2.

Putative exaptation of foraging-related behaviors to serve social function in primates. A. Parallel channels for processing social and non-social reinforcers in the primate striatum. Neurons responsive to social rewards are located more medially, whereas neurons responsive to fluid rewards are more lateral. Normalized firing profiles of two example neurons in the striatum, each selective for a single type of reward. Left panels, trials separated by image category; right panels, trials separated by fluid amount. Decision task consisted of a central fixation, a saccade towards a decision target, delivery of juice reward, and an optional image display. Time histograms are aligned to proximate events. Insets illustrate location of each recorded neuron. Reproduced from (Klein and Platt, 2013). B. Facial muscle activity and BOLD activity evoked by aversive gustatory or social outcomes. Top left, activity of the levator labii muscle in the face produces the wrinkled nose characteristic of a disgusted facial expression. Top middle, BOLD activity elicited after viewing a disgusting ingestive stimulus (video of an individual gagging and eating raw condensed canned soup) overlaps with BOLD activity correlated with tachygastria, fluctuations of gut activity. This demonstrates the relationship between the experienced emotion of disgust and the state of the digestive system (Harrison et al., 2010). Top right, BOLD activity associated with receiving unfair offers in the Ultimatum game, a proxy for social/moral disgust (Sanfey et al., 2003). Bottom, similar patterns of facial activity are produced when tasting a bitter substance (bottom left), viewing a disgusting picture (bottom middle), or receiving an unfair offer in the Ultimatum game (bottom right). Modified with permission from (Chapman et al., 2009). These findings suggest a relationship between food avoidance and social avoidance behaviors.

Similar to the striatum, OFC contains neurons that encode social information as well as neurons that encode fluid reward information (i.e., volume), but very few neurons respond to both of these features in parallel. Social and non-social neurons are not segregated anatomically, as they are in the striatum, but are instead intercalated throughout the entire region. This finding demonstrates how different experimental methods can yield quite different pictures of brain function: fMRI studies have repeatedly implicated ventromedial prefrontal cortex and orbitofrontal cortex in reward processing, and have identified these regions as being indiscriminate with respect to reward modality. Non-human primate and rodent electrophysiology studies, however, demonstrate that individual neurons in these areas are specialized for specific types of reward information, and that these signals exist on a finer spatial scale than can be resolved from BOLD signals (see Box 1).

A recent study investigating the role of OFC in the social transmission of food preference (STFP) suggests that OFC may be more important for “tagging” environmental features with their current value in order to make appropriate decisions in the future. In STFP, rats actively sniff the breath of other rats in order to detect odors of previously eaten foods, and they use this information to guide food choices in the future (Galef, 1977; Galef and Giraldeau, 2001). This is a naturally occurring behavior that requires no training, is learned in just a single social encounter, and influences choices made weeks afterwards. Such a powerful behavioral effect lends itself to neuroscientific study, and demonstrates the utility of designing experiments that incorporate natural behaviors. Ross and colleagues (Ross et al., 2005) found that this socially transmitted behavior is disrupted when acetylcholine is depleted in OFC. More recently, Lesburguères and colleagues (Lesburguères et al., 2011) found that blocking excitatory activity in the OFC at the time of social learning did not alter the expression of food preferences seven days later. Surprisingly, however, it did cause the rats to forget their food preferences when tested 30 days later. Moreover, the OFC-dependent STFP required increases in H3 histone acetylation, implying that an epigenetic mechanism at the time of the social encounter pre-destined a population of OFC neurons for long-term storage of odor-value associations. This result is consistent with the view that, although OFC neurons encode value during simple, well-learned decisions (Padoa-Schioppa and Assad, 2006, 2008), in rich, real-world environments they support learning (Schoenbaum et al., 2009). According to this view, OFC enables animals to track and predict dynamic features of the environment, and the outcomes that follow, and thus supports the flexible updating of behavior. It is unsurprising, then, that these OFC circuits would play an important role in social behavior. Although foraging environments are somewhat labile over time -- resource patches become depleted, prey escape or arrive, and fruits ripen and decay -- social environments are even more dynamic, and require split-second modifications of behavior in order to keep up with the fluctuating moods, movements, and interactions of others.

That the signaling of social information may involve richer representation in motivational and limbic structures than initially thought has been borne out by subsequent studies, particularly those involving more complex social tasks. Recording from both orbitofrontal and anterior cingulate cortices in monkeys performing a social reward allocation task, Chang and collaborators (Chang et al., 2011) showed that even the encoding of rewards depended on social context. That is, neuronal responses to received rewards depended not only on the amount directly received by the choosing monkey, but on the amount received by a partner. This finding was in line with previous studies that had demonstrated that single neurons encode the amounts of rewards that monkeys might have obtained, had they chosen differently (Abe and Lee, 2011; Hayden et al., 2009). These findings echo the results of other studies in which monkeys made choices in the presence of others (Azzi et al., 2012; Yoshida et al., 2012), which showed that single cells in orbitofrontal and dorsolateral prefrontal cortices, while individually carrying information about both reward and social context, did not do so in a monotonic or uniform manner. Thus, while these cells multiplexed reward-relevant information, they did not appear to reflect a combined utility encoding.

Of course, this is not to say that the brain at no point reduces competing motor plans to a common currency appropriate for action. Some direct comparison is almost certainly necessary for action selection. However, these considerations raise the question of how late in processing such a reduction in dimensionality could take place and whether the early emphasis on fluid reinforcement as a proxy for reward in general is as generalizable as hoped. Similar trends have been apparent in recent studies of social behavior, which have often focused on determining “social” areas of the brain. Yet evidence from neurophysiology (Chang et al., 2013; Klein and Platt, 2013; Klein et al., 2008; Shepherd et al., 2009; Tsao and Livingstone, 2008) indicates that social signals are present in the same subregions -- sometimes even encoded by the same neurons -- as nonsocial information, suggesting that social signals, at least in part, use the same information infrastructure as non-social information.

In addition to the overlap between gustatory reward and social reward described above, social and gustatory avoidance behavior appears to share common neural circuitry. The emotion of disgust is related to the avoidance of pathogens and toxins, and accompanies food rejection (Rozin and Fallon, 1987; Rozin et al., 2009). In humans, disgust is commonly experienced outside of food-related contexts, as moral or social disgust. A disgusted facial expression accompanies bitter tastes and disgusting photographs, as well as feelings of social disgust evoked by unfair treatment in an economic game (Figure 2B). Insular activity evoked by viewing disgusting images overlaps with brain representations of digestive tract motility as measured by electrogastrogram (EGG) (Critchley and Rolls, 1996; Figure 2B). This suggests that signals from the viscera may contribute to the neural representation of disgust, and implies a role for visceral sensation in the experience of social disgust. Insula activity is heightened by unfair offers during the Ultimatum Game (Sanfey et al., 2003; Figure 2B), suggesting a role for this region in social as well as non-social avoidance. Indeed, activation in insular cortex is commonly reported in fMRI studies of reward-based decision-making, with a variety of interpretations (Craig, 2002; Liu et al., 2011). Yet we believe, for reasons stated above, that the latter effects in humans are likely to be better understood biologically (and evolutionarily) in terms of the former.

Though separating unique contributions of neural circuits to both social and nonsocial behavior has proven difficult (Carter et al., 2012; Rushworth et al., 2013), comparative neurobiology offers one way to gain traction on this question. For example, studies comparing prairie and montane voles, which possess similar morphological traits and behaviors but have different social and mating systems, have contributed tremendously to our understanding of the biology of social behavior (Insel and Fernald, 2004; McGraw and Young, 2010; Young and Wang, 2004). Prairie voles are known to maintain long-term pair bonds in the wild: having copulated, pairs of voles remain in close proximity, show affiliative behaviors such as huddling, and engage in biparental care once the offspring are born. This contrasts starkly with the behavior of montane voles, a closely related species that exhibits no such pair-bonding behavior. Anatomical studies have shown that oxytocin and dopamine, and the localization of their receptors in the nucleus accumbens and other reward structures, play a crucial role in orchestrating partner preferences in prairie voles (Young et al., 2001). A sizeable body of work in humans has largely confirmed a role for oxytocin in partner preference, as well as in prosocial decisions more generally (Guastella et al., 2012). The example of the prairie vole demonstrates how the selection of a species in which the behavior of interest has undergone behavioral specialization can help reveal the neurobiological substrate of that behavior.

Most importantly, the study of basic biological drives continues to illuminate the substrates from which more abstract processes are likely to have evolved. Areas of insular cortex responsible for detecting and reacting to physiological perturbations lie along a gradient with more recently evolved areas responsive to negative social emotions like moral outrage (Craig, 2002). Likewise, the amygdala’s ancestral role in predator detection and learning seems likely to have been extended to a broader array of threats. In this view, it is not surprising to find that post-traumatic stress disorder -- the pathological extension of vigilance to non-threatening stimuli -- is associated with amygdala dysfunction (Morey et al., 2011). Thus, studies of low-level functions in these areas point to basic processes like food evaluation and threat detection that underlie more recently adopted functions. These observations strongly endorse comparative studies in multiple divergent animal species to identify the common, fundamental mechanisms and unique specializations supporting decision-making.

Conclusion

In the last fifteen years, the neuroscientific study of decision-making has rapidly expanded from simple questions of reward and learning to embrace topics in finance, ethics, and social psychology. Yet as new tools and methods are brought to bear on these questions, the difficulties and complexities of basic biology seem increasingly at odds with simplified notions of a single, unified brain system that learns and makes decisions. While neuroeconomic analysis continues to provide a powerful normative and descriptive framework for understanding the biology of choice, it has largely ignored the insight that natural selection has worked hardest and longest on a few key decision processes and that complex behaviors are often cobbled together atop older, simpler ones.

As a rejoinder, we have here proposed a complementary framework that borrows insights from studies of ethology -- particularly foraging and social behavior -- to suggest new conceptual underpinnings for the study of the neural basis of decision-making. This framework, too, employs optimality as a benchmark, but it takes as its canonical processes biologically fundamental tasks that many different organisms must solve and thus are most likely to have shaped brain evolution. It is a framework that embraces comparative ethology, and privileges the search for simple, robust algorithms capable of solving multiple types of biological problems (Adams et al., 2012). It is the engineer's and the ethologist's approach to the brain, and we believe it is the one most likely to uncover the basic building blocks of more complex decisions, including those most relevant to neuropsychiatric disorders.

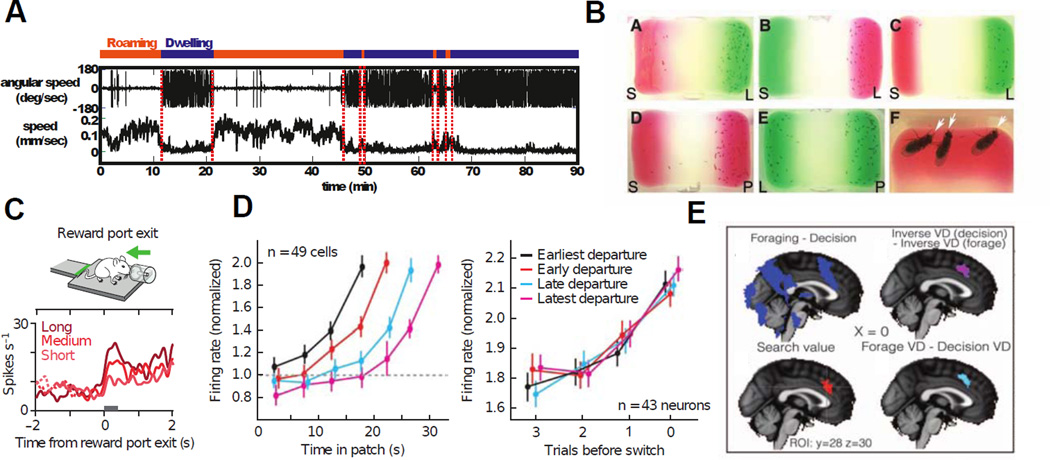

Figure 1.

Comparison of foraging across species. A. Alternation between dwelling and roaming behaviors by C. elegans, under the control of serotonin and the neuropeptide pigment dispersing factor (PDF) (adapted from Flavell et al., 2013). B. Egg-laying behavior by Drosophila varies between nutrient-rich and nutrient-poor areas in a manner displaying exquisite sensitivity to the costs and benefits implicit in moving from one patch of agar to the next (Yang et al., 2008). C, D, and E show neural activation in rodents, non-human primates, and humans, respectively, in versions of the patch-leaving paradigm (Hayden et al., 2011; Kolling et al., 2012; Kvitsiani et al., 2013). In each case, heightened activity in the dorsal anterior cingulate cortex signals an imminent switch away from the current default option. This suggests not only a remarkable degree of behavioral consistency across taxa, with similar decision algorithms across species, but also a neural consistency within mammals.

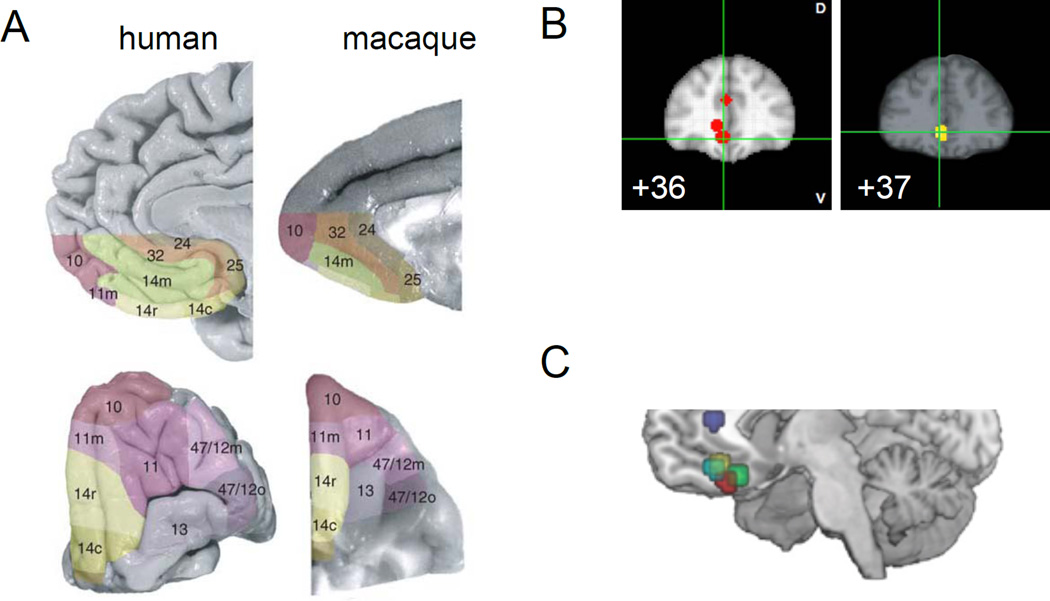

Figure B1.

The terms “vmPFC” and “OFC” are used inconsistently within and across subfields of neuroscience. A. Anatomically delineated regions of OFC have high correspondence between humans (left) and macaques (right). Reproduced with permission from Mackey and Petrides (2010). Based on non-human primate neuroanatomy, OFC proper corresponds to all colored brain areas, except 10, 24, 25, and 32. B. Nearly identical brain coordinates are described both as vmPFC (left; data from Levy and Glimcher, 2012; coordinates illustrated using Neurosynth) and as OFC (from Cox et al., 2005). C. vmPFC is a descriptive term, and is used in the neuroeconomics literature to refer to regions in the OFC, ACC, and the nearby medial wall (reproduced from Hare et al., 2009, illustrating data from previous studies: dark blue (Kable and Glimcher, 2007), light blue (Rolls et al., 2008), red (Plassmann et al., 2007), and green (Hare et al., 2008)).

References

- Abe H, Lee D. Distributed coding of actual and hypothetical outcomes in the orbital and dorsolateral prefrontal cortex. Neuron. 2011;70:731–741. doi: 10.1016/j.neuron.2011.03.026.

- Adams GK, Watson KK, Pearson J, Platt ML. Neuroethology of decision-making. Curr. Opin. Neurobiol. 2012;22:982–989. doi: 10.1016/j.conb.2012.07.009.

- Ariely D. Predictably irrational, revised and expanded edition: The hidden forces that shape our decisions. HarperCollins; 2009.

- Azzi JCB, Sirigu A, Duhamel J-R. Modulation of value representation by social context in the primate orbitofrontal cortex. Proc. Natl. Acad. Sci. U. S. A. 2012;109:2126–2131. doi: 10.1073/pnas.1111715109.

- Balleine BW, Dickinson A. The role of incentive learning in instrumental outcome revaluation by sensory-specific satiety. Anim. Learn. Behav. 1998;26:46–59.

- Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat. Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- Bechara A, Damasio AR. The somatic marker hypothesis: A neural theory of economic decision - Special Issue on Neuroeconomics. Games Econ. Behav. 2005;52:336–372.

- Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J. Neurosci. 1999;19:5473–5481. doi: 10.1523/JNEUROSCI.19-13-05473.1999.

- Bechara A, Tranel D, Damasio H. Characterization of the decision-making deficit of patients with ventromedial prefrontal cortex lesions. Brain. 2000a;123(Pt 11):2189–2202. doi: 10.1093/brain/123.11.2189.

- Bechara A, Damasio H, Damasio AR. Emotion, decision making and the orbitofrontal cortex. Cereb. Cortex. 2000b;10:295–307. doi: 10.1093/cercor/10.3.295.

- Beck JM, Ma WJ, Kiani R, Hanks T, Churchland AK, Roitman J, Shadlen MN, Latham PE, Pouget A. Probabilistic population codes for Bayesian decision making. Neuron. 2008;60:1142–1152. doi: 10.1016/j.neuron.2008.09.021.

- Behrens TEJ, Woolrich MW, Walton ME, Rushworth MFS. Learning the value of information in an uncertain world. Nat. Neurosci. 2007;10:1214–1221. doi: 10.1038/nn1954.

- Bendesky A, Tsunozaki M, Rockman MV, Kruglyak L, Bargmann CI. Catecholamine receptor polymorphisms affect decision-making in C. elegans. Nature. 2011;472:313–318. doi: 10.1038/nature09821.

- Bergman TJ, Beehner JC, Cheney DL, Seyfarth RM. Hierarchical classification by rank and kinship in baboons. Science. 2003;302:1234–1236. doi: 10.1126/science.1087513.

- Berridge KC. The debate over dopamine’s role in reward: the case for incentive salience. Psychopharmacology. 2007;191:391–431. doi: 10.1007/s00213-006-0578-x.

- Blanchard TC, Hayden BY. Neurons in Dorsal Anterior Cingulate Cortex Signal Postdecisional Variables in a Foraging Task. Journal of Neuroscience. 2014 doi: 10.1523/JNEUROSCI.3151-13.2014.

- Blanchard TC, Pearson JM, Hayden BY. Postreward delays and systematic biases in measures of animal temporal discounting. Proc. Natl. Acad. Sci. U. S. A. 2013;110:15491–15496. doi: 10.1073/pnas.1310446110.

- Bromberg-Martin ES, Hikosaka O. Midbrain dopamine neurons signal preference for advance information about upcoming rewards. Neuron. 2009;63:119–126. doi: 10.1016/j.neuron.2009.06.009.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron. 2010;68:815–834. doi: 10.1016/j.neuron.2010.11.022.

- Cai X, Padoa-Schioppa C. Neuronal encoding of subjective value in dorsal and ventral anterior cingulate cortex. J. Neurosci. 2012;32:3791–3808. doi: 10.1523/JNEUROSCI.3864-11.2012.