Abstract

Gesture facilitates language production, but there is debate surrounding its exact role. It has been argued that gestures lighten the load on verbal working memory (VWM; Goldin-Meadow et al., 2001), but gestures have also been argued to aid in lexical retrieval (Krauss, 1998). In the current study, 50 speakers completed an individual differences battery that included measures of VWM and lexical retrieval. To elicit gesture, each speaker described short cartoon clips immediately after viewing them. Measures of lexical retrieval did not predict spontaneous gesture rates, but lower VWM was associated with higher gesture rates, suggesting that gestures can facilitate language production by supporting VWM when resources are taxed. These data also suggest that individual variability in the propensity to gesture is partly linked to cognitive capacities.

Keywords: gesture, language production, working memory, lexical access, individual differences

People often gesture with their hands while speaking. There is considerable evidence that listeners can benefit from speakers' gestures, particularly if the gestures reinforce the information conveyed in speech (e.g., Valenzeno, Alibali, & Klatzky, 2003). However, speakers often gesture in the absence of an audience (Alibali, Heath, & Meyers, 2001), and speakers who are blind from birth gesture, even when speaking to blind listeners (Iverson & Goldin-Meadow, 2001). These findings suggest that, in addition to serving a communicative function, gesture may serve speaker-internal needs. At the same time, there is individual variability in the propensity to gesture, and the sources of this variation are currently largely unknown (Alibali, 2005; cf. Hostetter & Alibali, 2007). Understanding what drives some speakers to gesture more can thus help to elucidate the types of cognitive processes that gesture may benefit and the mechanisms by which those benefits accrue.

Here we test two prominent hypotheses about how gesture might aid language production. One is that gesturing aids speakers by “lightening the load” on verbal working memory (VWM) during language production (Goldin-Meadow et al., 2001; Ping & Goldin-Meadow, 2010; Wagner, Nusbaum, & Goldin-Meadow, 2004). Speakers are more likely to recall a word list if they can gesture during a description task that intervenes between encoding and recall of the list, suggesting that gesturing frees up VWM resources during speech (as well as spatial working memory resources; Wagner et al., 2004), which allows for better maintenance of the memory load during the description phase (Goldin-Meadow et al., 2001). This effect has been observed regardless of whether the items being described in the intervening task are present, suggesting that the memory benefits afforded by gesture are not solely due to speakers using gesture to index objects in the immediate environment (Ping & Goldin-Meadow, 2010). Wagner et al. (2004) suggest that gesture reduces working memory load by providing an organizing framework for language production that affords better use of VWM resources (e.g., through chunking; cf. Kita, 2000).

A second hypothesis is that gesture supports lexical retrieval by facilitating word activation (Krauss, 1998; Krauss, Chen, & Gottesman, 2000). The Lexical Retrieval Hypothesis (LRH) of Krauss and colleagues (Krauss et al., 2000; Krauss & Hadar, 1999) argues that some iconic gestures¹ (i.e., those that reflect the meaning of the accompanying speech) specifically aid lexical access through cross-modal priming: the motor representation of the activated concept primes the phonological form of the associated word via semantics. Some evidence for the LRH comes from the timing of gestures relative to speech. Gestures are initiated approximately one second before articulation of their lexical affiliates begins, and they terminate at approximately the same time as articulation of the associated word begins (Morrel-Samuels & Krauss, 1992). Relatedly, late talkers (i.e., children with delayed onset of productive, expressive vocabulary) use communicative gestures more than typically developing children (Thal & Tobias, 1992), suggesting that gesture rates may be related to vocabulary size as well as to lexical retrieval.

Both the “lightening the load” hypothesis and the LRH predict benefits of gesture for language production, and, indeed, the two hypotheses are not necessarily mutually exclusive. In this paper, we test the predictions of these two accounts by examining whether individual differences in speakers' tendencies to spontaneously gesture during a language production task are related to their working memory resources and/or their lexical access abilities. If individuals who are less verbally fluent or who have smaller vocabularies produce more iconic gestures, this would support the hypothesis that lexical retrieval difficulties are a primary driving force for gesture production. If individuals with lower working memory capacity gesture more overall, this would support the “lightening the load” hypothesis and, importantly, extend it by suggesting that a driving force for the spontaneous production of gestures during speech may be the points at which working memory is taxed.

The current study used two complex span tasks to measure individuals' VWM capacities. We assume that lexical retrieval depends on at least two factors: the number of words a speaker knows and how quickly those words can be retrieved from the mental lexicon (for a similar suggestion, see Bialystok, Craik, & Luk, 2008). Therefore, lexical retrieval abilities were measured using a standard vocabulary test and phonemic and semantic verbal fluency tasks (Tombaugh, Kozak, & Rees, 1999), which require speakers to retrieve words beginning with a particular letter or belonging to a particular semantic category under time pressure (see Hostetter & Alibali, 2007). Vocabulary has been found to be highly correlated with measures of confrontation naming (e.g., the Boston Naming Test, r = .83; Hawkins & Bender, 2002), which are commonly used to index word-finding abilities in older adults and clinical populations (e.g., Calero et al., 2002; Schmitter-Edgecombe et al., 2000). In educated young adults, however, confrontation naming scores cluster around the mean, which makes these tests insensitive to individual differences (Hamby, Bardi, & Wilkins, 1997). Fluency tasks, which are used in a wide range of neuropsychological assessments, are complex and are known to draw on multiple cognitive processes. Nevertheless, performance on verbal fluency tasks (especially semantic fluency) has regularly been used to measure lexical retrieval abilities in various populations (bilinguals: Bialystok et al., 2008; Gollan, Montoya, & Werner, 2002; Luo, Luk, & Bialystok, 2010; children: Riva, Nichelli, & Devoti, 2000; individuals with schizophrenia: Allen, Liddle, & Frith, 1993; Vinogradov, Kirkland, Poole, Drexler, Ober, & Shenaut, 2003) and has been shown to correlate with both vocabulary size (Bialystok et al., 2008; Luo et al., 2010) and picture naming abilities (Calero et al., 2002; Schmitter-Edgecombe et al., 2000). Thus, verbal fluency has been assumed to tap at least some of the processes involved in normal lexical retrieval.

Method

Participants

Fifty University of Illinois Urbana-Champaign undergraduates (18 male) participated in the experiment. All participants were native English speakers and received course credit for their participation.

Individual differences battery

Listening Span

This task was a computer-based version of the listening span task used by Stine and Hindman (1994). Each critical trial began with participants listening to a recorded sentence. As soon as the sentence ended, participants were prompted to judge whether it was true or false and responded with a keypress. After each sentence, a letter was presented auditorily. At the end of each set of sentences, participants were asked to recall the letters they had heard, in order, by typing them. Participants completed 10 critical sentence sets in a random order (2 each at set sizes of 2 through 6). Two practice trials at set size 2 preceded the critical trials. The task was scored according to the Partial-Credit Unit-Weighted method (Conway, Kane, Bunting, Hambrick, Wilhelm, & Engle, 2005).
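To make the Partial-Credit Unit-Weighted method concrete, the following is a minimal sketch under stated assumptions: each set earns the proportion of its items recalled in the correct serial position, and the set proportions are averaged with equal (unit) weight regardless of set size. The function name `pcu_score` and the data layout (pairs of presented and recalled letter sequences) are hypothetical illustrations, not the battery's actual implementation.

```python
from typing import List, Sequence, Tuple


def pcu_score(trials: List[Tuple[Sequence[str], Sequence[str]]]) -> float:
    """Partial-credit unit-weighted span score (sketch after Conway et al., 2005).

    Each trial pairs the presented items with the participant's recall.
    A trial earns the proportion of presented items recalled in the
    correct serial position; trial proportions are then averaged with
    equal (unit) weight, so a set of 2 counts as much as a set of 6.
    """
    proportions = []
    for presented, recalled in trials:
        # Credit an item only if it appears at the same position in the recall.
        hits = sum(
            1
            for pos, item in enumerate(presented)
            if pos < len(recalled) and recalled[pos] == item
        )
        proportions.append(hits / len(presented))
    return sum(proportions) / len(proportions)


# Hypothetical example: a perfect set of 2 and a set of 3 with two letters swapped.
trials = [(["F", "K"], ["F", "K"]), (["B", "R", "Q"], ["B", "Q", "R"])]
print(round(pcu_score(trials), 3))  # 0.667
```

Unit weighting means a partially recalled large set is not discarded (as it would be under all-or-nothing scoring), which is one reason Conway et al. (2005) recommend partial-credit scoring for individual-differences work.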

Subtract Two Span

The task was a computer-based version of the Subtract Two Span task in Salthouse (1988). Critical trials began with a set of digits (0–9) presented one at a time for one second each on a computer screen. Participants read the digits aloud as they appeared. Immediately after digit presentation, participants were required to mentally subtract two from each of the digits retained in memory and recall the resulting answers in the order in which the digits were presented. Set sizes ranged from 2 to 7 and were presented in a random order. Two practice trials at set size 2 preceded the critical trials. The task was scored according to the Partial-Credit Unit-Weighted method (Conway et al., 2005).

Extended Range Vocabulary Test (ERVT)

The task was a computer-based version of the ERVT from the Kit of Factor-Referenced Cognitive Tests (Ekstrom, French, Harman, & Dermen, 1976). On each item, participants were presented with a target word, a list of five candidate words, and a “don't know” option. Participants chose the candidate word that was most similar in meaning to the target word or indicated that they did not know the answer. There were two blocks of 24 items. Participants received one point for each correct response, no points for a “don't know” response, and a −0.25 penalty for each incorrect guess.
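The ERVT scoring rule amounts to a correction for guessing: with five response options, a −0.25 penalty equals 1/(k − 1) for k = 5, so the expected score from random guessing is (1/5)(1) + (4/5)(−0.25) = 0. A minimal sketch of block scoring follows; encoding “don't know” as `None` and the name `ervt_block_score` are hypothetical choices for illustration.

```python
from typing import Optional, Sequence

DONT_KNOW = None  # hypothetical encoding of a "don't know" response


def ervt_block_score(responses: Sequence[Optional[str]], key: Sequence[str]) -> float:
    """+1 per correct choice, 0 per "don't know", -0.25 per incorrect guess."""
    if len(responses) != len(key):
        raise ValueError("responses and key must have the same length")
    score = 0.0
    for response, correct in zip(responses, key):
        if response is DONT_KNOW:
            continue  # omissions are neither rewarded nor penalized
        score += 1.0 if response == correct else -0.25
    return score


# Hypothetical 4-item block: correct, don't know, wrong, correct.
print(ervt_block_score(["b", None, "c", "c"], ["b", "d", "a", "c"]))  # 1.75
```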

Verbal Fluency Tasks

These tasks were computer-based versions of the phonemic and semantic fluency tasks (Tombaugh et al., 1999). In the phonemic fluency task, participants were given 60 seconds to generate as many unique words as possible that began with a particular letter (F, A, S). In the semantic fluency task, participants had 60 seconds to generate as many unique exemplars of a particular semantic category as they could (e.g., animals, furniture). On each trial, the letter or category name appeared at the center of the screen with a prompt to begin generating responses. Participants were encouraged to keep generating responses until the 60 seconds had elapsed. All participants received the prompts in the same order. All responses were recorded and transcribed. Each unique response received one point; repetitions received no points.
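The fluency scoring rule (one point per unique response, none for repetitions) can be sketched as a set-size computation over transcribed responses. This sketch assumes that repetitions are detected case-insensitively after trimming whitespace, and it does not filter rule violations (e.g., a word with the wrong initial letter), which the text does not describe; `fluency_score` is a hypothetical name.

```python
from typing import Iterable


def fluency_score(responses: Iterable[str]) -> int:
    """One point per unique response; repetitions earn nothing.

    Assumption: responses are compared case-insensitively after trimming
    whitespace. Rule violations (e.g., words with the wrong initial letter)
    are not filtered here and would need a separate check.
    """
    unique = {r.strip().lower() for r in responses if r.strip()}
    return len(unique)


# Hypothetical transcript for the letter F, including two repetitions.
print(fluency_score(["fish", "fox", "Fish", "fan", "fox "]))  # 3
```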

Composite measures

Each task's scores were standardized by z-scoring. The correlation matrix of individual difference measures is presented in Table 1. A Verbal Working Memory (VWM) composite score was calculated by averaging the standardized Listening Span and Subtract Two Span scores. A Vocabulary composite score was calculated by averaging the standardized scores on the two ERVT blocks. Phonemic verbal fluency (PVF) and semantic verbal fluency (SVF) composite scores were calculated by averaging the standardized scores for each letter or category within the PVF and SVF tasks, respectively. All composite measures were normally distributed, and only the two verbal fluency composites were significantly correlated with each other (PVF/SVF r = .36, p < .05; other |r|s < .23, ps > .13).
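The composite construction can be sketched as follows: z-score each task across participants, then average the relevant z-scores within each participant. The text does not state whether the sample (n − 1) or population (n) standard deviation was used; this sketch assumes the sample SD. That choice rescales all z-scores for a task by the same constant and so does not affect correlations. Function names and scores are hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def zscore(values: Sequence[float]) -> List[float]:
    """Standardize one task's scores across participants (sample SD, n - 1)."""
    m, s = mean(values), stdev(values)
    return [(v - m) / s for v in values]


def composite(*measures: Sequence[float]) -> List[float]:
    """Average the z-scored measures participant by participant."""
    standardized = [zscore(m) for m in measures]
    return [mean(scores) for scores in zip(*standardized)]


# Hypothetical scores for three participants on two span tasks.
listening_span = [6, 7, 8]
subtract_two = [5, 9, 7]
print(composite(listening_span, subtract_two))  # [-1.0, 0.5, 0.5]
```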

Table 1.

Correlation matrix of individual differences tasks.

| | Subtract Two Span | ERVT (Part 1) | ERVT (Part 2) | PVF (F) | PVF (A) | PVF (S) | SVF (Animals) | SVF (Furniture) |
|---|---|---|---|---|---|---|---|---|
| Listening Span | .25† | .12 | .21 | -.02 | .01 | .05 | -.03 | .02 |
| Subtract Two Span | | .04 | .24 | .08 | -.03 | -.21 | .10 | -.30* |
| ERVT (Part 1) | | | .54* | -.02 | .03 | .10 | .21 | -.13 |
| ERVT (Part 2) | | | | .20 | .10 | -.01 | .35* | -.02 |
| PVF (F) | | | | | .57* | .46* | .20 | .24† |
| PVF (A) | | | | | | .51* | .15 | .25† |
| PVF (S) | | | | | | | .16 | .38* |
| SVF (Animals) | | | | | | | | .22 |

Note. N = 50. * p < .05; † p < .10.

Gesture elicitation task

Participants watched five silent 30-second clips from Tom and Jerry cartoons. Immediately after watching each clip, participants were asked to describe the actions that happened in the clip, in order and in as much detail as possible. Participants were informed that they might be video recorded during the experiment, but they were not explicitly told that this task was being recorded.

Gesture coding

Gesture counts were coded by the first author and a research assistant; all coding was completed with both video and audio playing. To code the total number of gestures produced, coders watched each video description and counted the total number of gestures produced in it. Gesture presence was coded by assigning a score of 1 to descriptions with a non-zero gesture count and a score of 0 to descriptions with a gesture count of zero. Inter-rater reliability was high for both measures (counts: ICC(3,2) = 0.98, p < .001; presence: ICC(3,2) = 0.94, p < .001; Shrout & Fleiss, 1979). For each video description, the number of gestures classified as iconic (i.e., gestures that depict or represent the content of the accompanying speech) according to the standards outlined in McNeill (1992) was also counted, and the presence of an iconic gesture was determined as described above. Inter-rater reliability was again high for both measures (counts: ICC(3,2) = 0.90, p < .001; presence: ICC(3,2) = 0.94, p < .001; Shrout & Fleiss, 1979). Data coded by the first author were used in all analyses, but the statistical patterns are identical when the second coder's data are used.
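ICC(3,2) in Shrout and Fleiss's (1979) notation is the two-way mixed-model, consistency-type reliability of the mean of k = 2 fixed raters, computed from a two-way ANOVA as (BMS − EMS)/BMS, where BMS is the between-targets mean square and EMS the residual mean square. The sketch below implements that formula; the function name and the coder data are hypothetical, and the significance test reported alongside each ICC is not reproduced.

```python
from typing import Sequence


def icc_3k(ratings: Sequence[Sequence[float]]) -> float:
    """ICC(3,k) of Shrout & Fleiss (1979): consistency of the mean of k fixed raters.

    ratings[i][j] is rater j's score for target i (here, one video description).
    """
    n, k = len(ratings), len(ratings[0])
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]
    ss_targets = k * sum((m - grand) ** 2 for m in row_means)
    ss_raters = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_targets - ss_raters
    bms = ss_targets / (n - 1)  # between-targets mean square
    ems = ss_error / ((n - 1) * (k - 1))  # residual mean square
    return (bms - ems) / bms


# Hypothetical gesture counts from two coders for four descriptions.
counts = [[0, 0], [3, 4], [5, 5], [2, 2]]
print(round(icc_3k(counts), 3))  # 0.986
```

Because rater main effects are removed (the ss_raters term), a coder who consistently counts one more gesture than the other does not lower ICC(3,k); only inconsistent disagreements do. The same function applies to the 0/1 presence codes.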

Procedure

Each participant was tested individually by an experimenter who was present throughout the entire session. All participants completed the main tasks described above, as well as several intervening filler tasks, in a fixed order. The main tasks appeared in the following order: Listening Span, Subtract Two Span, ERVT, gesture elicitation, PVF, and SVF.

Results

Gesture presence

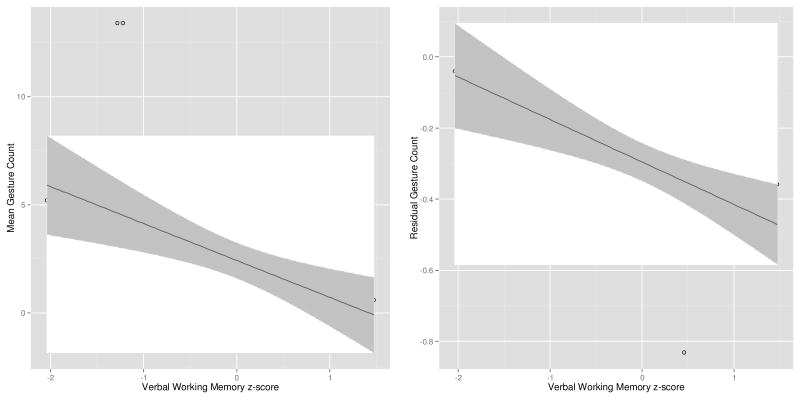

The likelihood of gesturing during a description was analyzed using a logistic mixed-effects regression model with VWM, Vocabulary, PVF, and SVF composite scores and gender as fixed effects, and random intercepts for speaker and video clip². The predicted probability of gesturing as a function of each composite score is shown in Figure 1. Increased VWM was associated with reduced gesture production (z = −3.68, p < .01). Vocabulary, PVF, SVF, and gender did not predict gesture production (|z|s < 1.28, ps > .20). An analysis of iconic gesture presence using a logistic mixed-effects regression model with identical specifications produced statistically identical results (VWM: z = −3.18, p < .01; other measures: |z|s < 1.01, ps > .31).

Figure 1.

Predicted probability of a gesture being produced in a description as a function of the z-scored composite predictors. Bands around the regression lines indicate 95% CIs computed from SEMs. Raw data (with jitter) are shown as points (1 = gesture, 0 = no gesture). VWM = Verbal Working Memory; PVF = Phonemic Verbal Fluency; SVF = Semantic Verbal Fluency.
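The predicted-probability curves in Figure 1 can be sketched as follows, under stated assumptions: each curve is the fixed-effects (population-level) prediction with the speaker and clip random intercepts set to zero and the other predictors held at zero (their means), and the 95% band is formed on the log-odds scale from the fixed-effect variances and covariance before being back-transformed with the inverse logit. The paper does not report coefficient values, so the numbers below, like the function names, are hypothetical.

```python
import math


def inv_logit(eta: float) -> float:
    """Map a log-odds value to a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def predicted_probability(b0, b1, var_b0, var_b1, cov_b01, x, z_crit=1.96):
    """Population-level P(gesture) at predictor value x, with a 95% band.

    Random intercepts are set to zero, so this is the curve for a typical
    speaker and clip. The band is built on the log-odds scale from
    Var(b0 + b1 * x) = Var(b0) + x^2 Var(b1) + 2x Cov(b0, b1)
    and then back-transformed.
    """
    eta = b0 + b1 * x
    se_eta = math.sqrt(var_b0 + x * x * var_b1 + 2 * x * cov_b01)
    return (
        inv_logit(eta),
        inv_logit(eta - z_crit * se_eta),
        inv_logit(eta + z_crit * se_eta),
    )


# Hypothetical fixed effects with a negative VWM slope (the reported direction).
for x in (-1.0, 0.0, 1.0):
    p, lo, hi = predicted_probability(0.8, -0.9, 0.09, 0.04, 0.0, x)
    print(f"VWM z = {x:+.0f}: p = {p:.2f} [{lo:.2f}, {hi:.2f}]")
```

Building the band on the log-odds scale keeps it inside [0, 1] and makes it asymmetric around the predicted probability, as the bands in Figure 1 should be.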

Gesture counts

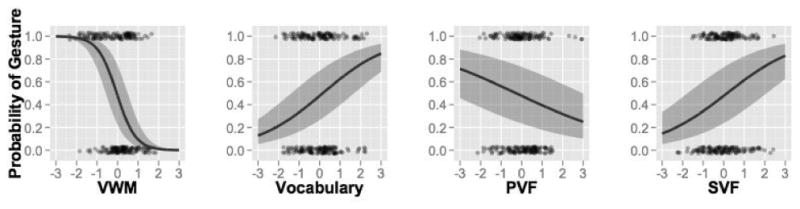

The overall number of gestures produced per description was analyzed using a zero-inflated Poisson mixed regression model (Fournier et al., 2012) with VWM, Vocabulary, PVF, and SVF composite scores and gender as fixed effects, and random intercepts for speaker and video clip. The effect estimates for each predictor are shown in Figure 2. Increased VWM was associated with lower gesture counts (z = −2.82, p < .01). Vocabulary, PVF, SVF, and gender did not predict gesture counts (|z|s < 1.28, ps > .20). An analysis of iconic gesture counts utilizing a zero-inflated Poisson mixed regression model with identical model specifications produced statistically identical results (VWM: z = −3.05, p < .01; other measures |z|s < 1, ps > .37).

Figure 2.

Bars indicate standardized effect estimates (βs) for each composite predictor in the zero-inflated Poisson regression model. Error bars represent 95% confidence intervals calculated from each estimate's SEM. Bars below the horizontal axis indicate that gestures decreased with increases in the composite predictor. VWM = Verbal Working Memory; PVF = Phonemic Verbal Fluency; SVF = Semantic Verbal Fluency.
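The zero-inflated Poisson model treats each description's gesture count as a mixture: with probability π the description is a structural zero, and otherwise the count follows a Poisson distribution with rate λ, so zeros can arise from either source. A minimal sketch of that mixture, and of the Wald-type 95% interval (estimate ± 1.96 SE) drawn as error bars in Figure 2, is below. Parameter values and function names are hypothetical, and the model's random intercepts are omitted. Assuming the usual log link for the count component, a coefficient β multiplies the expected count by exp(β) per 1-SD increase in the predictor.

```python
import math


def zip_pmf(y: int, lam: float, pi: float) -> float:
    """P(Y = y) for a zero-inflated Poisson.

    With probability pi a description is a structural zero (no gestures at
    all); otherwise the count is Poisson(lam). Zeros can arise either way.
    """
    poisson = math.exp(-lam) * lam ** y / math.factorial(y)
    return (pi if y == 0 else 0.0) + (1.0 - pi) * poisson


def wald_interval(beta: float, se: float, z_crit: float = 1.96):
    """95% interval of the kind drawn as error bars in Figure 2."""
    return beta - z_crit * se, beta + z_crit * se


# Hypothetical parameters: 30% structural zeros, mean of 3 gestures otherwise.
print(round(zip_pmf(0, 3.0, 0.3), 3))  # 0.335
print(wald_interval(-0.4, 0.1))
```

An interval that excludes zero corresponds to |z| > 1.96, which is why bars whose error bars do not cross the horizontal axis match the significant predictors in the text.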

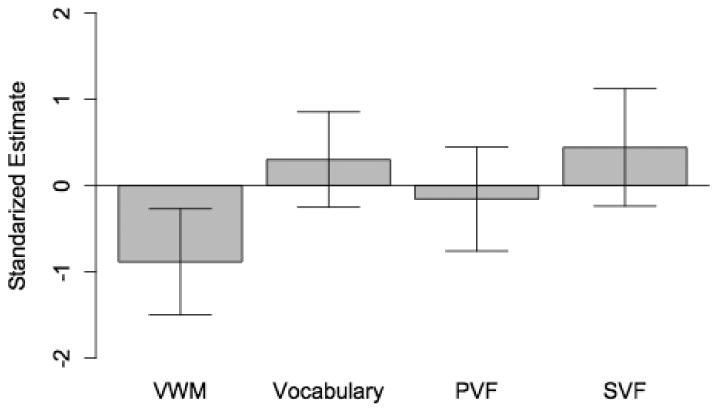

Figure 3a plots each participant's VWM z-score against his or her mean gesture count across the five videos. Residualized gesture counts were obtained from a regression model that included the other predictors but excluded VWM; Figure 3b plots these residuals against VWM z-scores.

Figure 3.

(a) Mean gesture counts were computed by averaging the gesture counts for each subject's five videos. Bands around the regression lines indicate 95% CIs. (b) Residual gesture counts were computed using the residuals from a regression model including Vocabulary, PVF, SVF, and gender, but excluding VWM. Bands around the regression lines indicate 95% CIs.
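Residualizing, as in Figure 3b, means regressing gesture counts on every predictor except VWM and keeping what the model leaves unexplained. The sketch below assumes ordinary least squares on per-participant mean counts, which the text does not specify (the residuals could instead come from a count model), and solves the normal equations by Gauss-Jordan elimination so that it needs only the standard library. It assumes a full-rank design; `ols_residuals` and the data are hypothetical, and the example uses two covariates rather than the paper's four for brevity.

```python
from typing import List, Sequence


def ols_residuals(y: Sequence[float], predictors: Sequence[Sequence[float]]) -> List[float]:
    """Residuals of y after ordinary least squares on the predictors plus an intercept."""
    n = len(y)
    X = [[1.0] + [float(col[i]) for col in predictors] for i in range(n)]
    p = len(X[0])
    # Augmented normal equations [X'X | X'y].
    A = [
        [sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
        + [sum(X[r][i] * y[r] for r in range(n))]
        for i in range(p)
    ]
    # Gauss-Jordan elimination with partial pivoting (assumes X has full rank).
    for col in range(p):
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(p):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    beta = [A[i][p] / A[i][i] for i in range(p)]
    fitted = [sum(b * x for b, x in zip(beta, row)) for row in X]
    return [yi - fi for yi, fi in zip(y, fitted)]


# Hypothetical data: mean gesture counts for five speakers and two covariates.
mean_counts = [4.2, 1.0, 3.1, 0.4, 2.6]
vocabulary_z = [-0.5, 1.2, 0.1, 0.9, -1.7]
gender = [0, 1, 1, 0, 0]
print([round(r, 2) for r in ols_residuals(mean_counts, [vocabulary_z, gender])])
```

The same helper illustrates the residualization described in the footnotes for the correlated fluency composites: regressing one composite on the other and keeping the residuals removes their shared variance before both enter a model.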

To control for the possibility that participants produced different amounts of speech, and consequently had different opportunities to gesture, we also analyzed gesture rate (gestures per 100 words) as a dependent variable. This yielded the same pattern of results for VWM (t = -2.71, p < .01).
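A gesture rate per 100 words is simply 100 × gestures / words, but the text does not say whether rates were computed per description or pooled over a speaker's descriptions. The sketch below shows both aggregations, which can differ when descriptions vary in length; the function names and counts are hypothetical.

```python
from typing import Sequence, Tuple


def gestures_per_100_words(gestures: int, words: int) -> float:
    """Gesture rate normalized by amount of speech."""
    if words <= 0:
        raise ValueError("a description must contain at least one word")
    return 100.0 * gestures / words


def pooled_rate(descriptions: Sequence[Tuple[int, int]]) -> float:
    """Total gestures per 100 total words across a speaker's descriptions."""
    total_gestures = sum(g for g, _ in descriptions)
    total_words = sum(w for _, w in descriptions)
    return gestures_per_100_words(total_gestures, total_words)


def mean_of_rates(descriptions: Sequence[Tuple[int, int]]) -> float:
    """Average of per-description rates (each description weighted equally)."""
    return sum(gestures_per_100_words(g, w) for g, w in descriptions) / len(descriptions)


speaker = [(2, 50), (1, 100)]  # hypothetical (gestures, words) for two descriptions
print(pooled_rate(speaker))  # 2.0
print(mean_of_rates(speaker))  # 2.5
```

Pooling weights longer descriptions more heavily, whereas averaging per-description rates lets a short description with a few gestures dominate; either is a defensible way to normalize for speech quantity.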

Discussion

The results show that lower VWM was associated both with an increased probability of gesturing and with the number of gestures produced (overall as well as per unit of speech). This provides the first evidence that how much individuals gesture when speaking is linked to their VWM capacity, in a relatively natural speech production task with no special memory demands, in which gesturing was neither encouraged nor constrained. These findings thus constitute a novel and powerful source of evidence supporting the hypothesis that gesture “lightens the load” on VWM during speech production (Goldin-Meadow et al., 2001; Ping & Goldin-Meadow, 2010; Wagner et al., 2004).

In contrast, vocabulary did not predict gesture rates, suggesting that spontaneous gesture rates do not depend on the number of words a speaker knows. The verbal fluency tasks employed in this study required speakers to quickly access their mental lexicon in a targeted search for words that either belonged to a specific semantic category or started with a particular letter; however, performance on these semantic and phonemic³ verbal fluency tasks also did not predict overall or iconic gesture rates. Moreover, a recent study that also examined individual differences in gesture production found that confrontation naming times were not related to gesture rates (Chu, Meyer, Foulkes, & Kita, in press). The LRH (Krauss et al., 2000) holds that iconic gestures aid lexical access through cross-modal priming of word forms by motor movements. The current findings are not necessarily evidence against the LRH, but they do suggest that individual differences in VWM, rather than lexical access difficulty, may be a more important determinant of the tendency to spontaneously gesture during relatively naturalistic description tasks of the type used here. The mechanisms described by the LRH seem most applicable when a specific lexical item must be retrieved; in free-form production, speakers may be able to circumvent lexical access difficulties using other strategies (for example, choosing different words or giving a vaguer description).

Whereas difficulties with lexical access may not always factor prominently in language production in the absence of strong constraints, at least for healthy young adults, current (albeit still limited) evidence points to a tight relationship between VWM and planning processes in production (see Acheson & MacDonald, 2009). The scope of advance grammatical planning in production seems to depend on VWM (Martin, Miller, & Vu, 2004), word order choices are affected by VWM load (Slevc, 2011), some types of grammatical speech errors are more likely when VWM is taxed (Hartsuiker & Barkhuysen, 2006), and impairments in verbal short-term memory are associated with production deficits (e.g., Martin & Freedman, 2001). Many existing accounts of gesture production claim that gesture and speech planning are carried out by shared processing mechanisms (e.g., de Ruiter, 2000; McNeill, 1992); thus, the current findings suggest that gesture production is linked to some of the same VWM-related processing resources that are recruited during message formulation and grammatical planning in language production.

The current data do not explain precisely how gesture interacts with working memory (WM), but several alternatives have been proposed in the literature. Essentially all models of gesture production claim that WM is the origin of co-speech gesture (see de Ruiter, 2000; Krauss et al., 2000); however, models differ in which subcomponents of WM they identify as the source of gesture. Chu et al. (in press) found that spatial working memory was related to gesture rate; however, there was no relationship between performance on a simple (digit) span task and gesture rate. The LRH (Krauss et al., 2000) claims that spatial WM is the source of gesture, and that the gesture formulator interacts with language production processes at the stage when phonological word forms are being planned and produced. In linking increased gesture rates to lower WM capacity, our data point to a role and/or source for co-speech gestures that cannot be explained solely (or even primarily) by lexical access difficulty. Wagner et al. (2004) argue that the representation of gesture is propositional (like language) rather than spatial (cf. Kita, 2000) and that the benefits that gesture affords speech arise because gesture provides a “framework that complements and organizes speech” (p. 406). Additionally, other accounts of gesture production (e.g., de Ruiter, 2000; Goldin-Meadow et al., 2001; Kita, 2000; Kita, Özyürek, Allen, Brown, Furman, & Ishizuka, 2007; McNeill & Duncan, 2000) claim that the processes underlying gesture and language production interact at early and/or nearly all stages of the production process.

Although previous work has convincingly demonstrated that gesture can provide benefits (or at least that suppressing gesture has costs) for learning and memory (Goldin-Meadow et al., 2001; Ping & Goldin-Meadow, 2010; Wagner et al., 2004), it leaves open the question of precisely when, and for whom, such benefits arise. If gesture were a general tool for facilitating speech production, one might expect all speakers to gesture as much as possible so that less pressure is placed on the processing system. Indeed, if gesture were ubiquitously helpful, one might even expect speakers with more resources to be better able to take advantage of this strategy and thus to gesture more frequently. That gesture rates are not uniform in our sample, and that participants with lower WM capacity gesture more frequently, suggests instead that gesture is brought online particularly at points of complexity, which low-span speakers are likely to reach before high-span speakers. This notion is consistent with recent work by Marstaller and Burianová (2013), who found that speakers with low WM capacity were less accurate on a WM task when they were not permitted to gesture, whereas participants with high WM capacity, as well as low-capacity participants who were allowed to gesture, were unaffected. Thus, gesture does not simply “lighten the load”; rather, it does so only at points at which the speaker's load is overwhelming. This view accords well with patterns in language development, in which children use gesture-speech combinations to create language structures that they are not yet capable of producing in speech alone (e.g., saying “bottle” while pointing at a baby to communicate possession; see review in Goldin-Meadow & Alibali, 2013), that is, to overcome their current capacity limitations.

In sum, the finding that spontaneous gesture production is associated with a speaker's VWM capacity provides novel support for the hypothesis that gestural and linguistic planning rely on similar processing resources. These results support and extend the “lightening the load” hypothesis of gesture production (Goldin-Meadow et al., 2001; Ping & Goldin-Meadow, 2010; Wagner et al., 2004), suggesting that gesture is a tool that speakers can use to help organize thought for speech when VWM resources are taxed during language production. Most importantly, the current work begins to elucidate why people gesture when they speak, and why some speakers gesture more than others.

We examine the relationship between individual difference measures and spontaneous gesture.

Lower verbal working memory was associated with higher gesture rates.

This finding suggests gesturing may free up working memory resources for speaking.

Acknowledgments

We thank Lauryn Charles and Colleen Clarke for help with running participants and coding data, and Scott Fraundorf for programming the individual differences battery. MG was supported by NIH grant 5T32HD055272. ANJ is supported by NSF grant DGE-1144245. KDF is supported by R01 AG026308, DGW is supported by R01 DC008774, and KDF and DGW are supported by the James S. McDonnell Foundation.

Footnotes

¹ Krauss (1998) refers to these gestures as lexical gestures.

² Because the PVF and SVF composite scores were significantly correlated, all reported models were also run using residualized versions of these predictors to reduce collinearity. All of these models produced results statistically identical to those of the main models reported.

³ Our results did not replicate the U-shaped main effect of phonemic fluency on gesture rates reported by Hostetter and Alibali (2007). We found no relation between phonemic fluency and gesture rate, either in a model with only phonemic fluency (t = -0.57, p = .57) or in a model with all other predictors (t = -0.95, p = .32). Importantly, however, their main effect was qualified by an interaction with spatial abilities (speakers with low phonemic fluency but high spatial abilities produced nearly double the gesture rate of any other group), and we did not measure spatial abilities here. Hostetter and Alibali (2007) did not measure working memory, so it is unknown whether working memory differences co-occurred with their phonemic fluency groups. Thus, it is not clear to what extent the results of these studies are actually discrepant.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Contributor Information

Maureen Gillespie, Email: gillespie.maureen@unh.edu.

Ariel N. James, Email: anjames2@illinois.edu.

Kara D. Federmeier, Email: kfederme@illinois.edu.

Duane G. Watson, Email: dgwatson@illinois.edu.

References

- Acheson DJ, MacDonald MC. Verbal working memory and language production: Common approaches to the serial ordering of verbal information. Psychological Bulletin. 2009;135:50–68. doi: 10.1037/a0014411.

- Alibali MW. Gesture in spatial cognition: Expressing, communicating, and thinking about spatial information. Spatial Cognition & Computation: An Interdisciplinary Journal. 2005;5:307–331.

- Alibali MW, Heath DC, Meyers HJ. Effects of visibility between speaker and listener on gesture production: Some gestures are meant to be seen. Journal of Memory and Language. 2001;44:169–188.

- Allen HA, Liddle PF, Frith CD. Negative features, retrieval processes and verbal fluency in schizophrenia. The British Journal of Psychiatry. 1993;163:769–775. doi: 10.1192/bjp.163.6.769.

- Bialystok E, Craik FIM, Luk G. Lexical access in bilinguals: Effects of vocabulary size and executive control. Journal of Neurolinguistics. 2008;21:522–538.

- Chu M, Meyer A, Foulkes L, Kita S. Individual differences in frequency and saliency of speech-accompanying gestures: The role of cognitive abilities and empathy. Journal of Experimental Psychology: General. In press. doi: 10.1037/a0033861.

- Conway ARA, Kane MJ, Bunting MF, Hambrick DZ, Wilhelm O, Engle RW. Working memory span tasks: A methodological review and user's guide. Psychonomic Bulletin & Review. 2005;12:769–786. doi: 10.3758/bf03196772.

- de Ruiter JP. The production of gesture and speech. In: McNeill D, editor. Language and gesture. Cambridge, UK: Cambridge University Press; 2000. pp. 284–311.

- Ekstrom RB, French JW, Harman HH, Dermen D. Manual for Kit of Factor-Referenced Cognitive Tests. Princeton: Educational Testing Service; 1976.

- Fournier DA, Skaug HJ, Ancheta J, Ianelli J, Magnusson A, Maunder MN, Nielsen A, Sibert J. AD Model Builder: Using automatic differentiation for statistical inference of highly parameterized complex nonlinear models. Optimization Methods and Software. 2012;27:233–249.

- Goldin-Meadow S, Alibali MW. Gesture's role in speaking, learning, and creating language. Annual Review of Psychology. 2013;64:257–283. doi: 10.1146/annurev-psych-113011-143802.

- Goldin-Meadow S, Nusbaum H, Kelly SD, Wagner S. Explaining math: Gesturing lightens the load. Psychological Science. 2001;12:516–522. doi: 10.1111/1467-9280.00395.

- Gollan TH, Montoya RI, Werner GA. Semantic and letter fluency in Spanish-English bilinguals. Neuropsychology. 2002;16:562–576.

- Hartsuiker RJ, Barkhuysen PN. Language production and working memory: The case of subject-verb agreement. Language and Cognitive Processes. 2006;21:181–204.

- Hostetter AB, Alibali MW. Raise your hand if you're spatial: Relations between verbal and spatial skills and gesture production. Gesture. 2007;7:73–95.

- Iverson JM, Goldin-Meadow S. The resilience of gesture in talk: Gesture in blind speakers and listeners. Developmental Science. 2001;4:416–422.

- Kita S. How representational gestures help speaking. In: McNeill D, editor. Language and gesture. Cambridge, UK: Cambridge University Press; 2000. pp. 162–185.

- Kita S, Özyürek A, Allen S, Brown A, Furman R, Ishizuka T. Relations between syntactic encoding and co-speech gestures: Implications for a model of speech and gesture production. Language and Cognitive Processes. 2007;22:1212–1236.

- Krauss RM. Why do we gesture when we speak? Current Directions in Psychological Science. 1998;7:54–60.

- Krauss RM, Chen Y, Gottesman RF. Lexical gestures and lexical access: A process model. In: McNeill D, editor. Language and gesture. Cambridge, UK: Cambridge University Press; 2000. pp. 261–283.

- Krauss RM, Hadar U. The role of speech-related arm/hand gestures in word retrieval. In: Campbell R, Messing L, editors. Gesture, speech, and sign. Oxford: Oxford University Press; 1999. pp. 93–116.

- Luo L, Luk G, Bialystok E. Effect of language proficiency and executive control on verbal fluency performance in bilinguals. Cognition. 2010;114:29–41. doi: 10.1016/j.cognition.2009.08.014.

- Marstaller L, Burianová H. Individual differences in the gesture effect on working memory. Psychonomic Bulletin & Review. 2013;20:496–500. doi: 10.3758/s13423-012-0365-0.

- Martin RC, Freedman ML. Short-term retention of lexical-semantic representations: Implications for speech production. Memory. 2001;9:261–280. doi: 10.1080/09658210143000173.

- Martin RC, Miller M, Vu H. Lexical-semantic retention and speech production: Further evidence from normal and brain-damaged participants for a phrasal scope of planning. Cognitive Neuropsychology. 2004;21:625–644. doi: 10.1080/02643290342000302.

- McNeill D. Hand and Mind: What Gestures Reveal about Thought. Chicago: University of Chicago Press; 1992.

- McNeill D, Duncan SD. Growth points in thinking-for-speaking. In: McNeill D, editor. Language and gesture. Cambridge, UK: Cambridge University Press; 2000. pp. 141–161.

- Morrel-Samuels P, Krauss RM. Word familiarity predicts temporal asynchrony of hand gestures and speech. Journal of Experimental Psychology: Learning, Memory, and Cognition. 1992;18:615–623.

- Ping R, Goldin-Meadow S. Gesturing saves cognitive resources when talking about nonpresent objects. Cognitive Science. 2010;34:602–619. doi: 10.1111/j.1551-6709.2010.01102.x.

- Rauscher FH, Krauss RM, Chen Y. Gesture, speech, and lexical access: The role of lexical movements in speech production. Psychological Science. 1996;7:226–231.

- Riva D, Nichelli F, Devoti M. Developmental aspects of verbal fluency and confrontation naming in children. Brain and Language. 2000;71:267–284. doi: 10.1006/brln.1999.2166.

- Salthouse TA. The role of processing resources in cognitive aging. In: Howe ML, Brainerd CJ, editors. Cognitive development in adulthood. New York: Springer-Verlag; 1988. pp. 185–239.

- Shrout PE, Fleiss JL. Intraclass correlations: Uses in assessing rater reliability. Psychological Bulletin. 1979;86:420–428. doi: 10.1037//0033-2909.86.2.420.

- Slevc LR. Saying what's on your mind: Working memory effects on sentence production. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2011;37:1503–1514. doi: 10.1037/a0024350.

- Stine EAL, Hindman J. Age differences in reading time allocation for propositionally dense sentences. Aging, Neuropsychology, and Cognition. 1994;1:2–16.

- Thal DJ, Tobias S. Communicative gestures in children with delayed onset of oral expressive vocabulary. Journal of Speech, Language, and Hearing Research. 1992;35:1281–1289. doi: 10.1044/jshr.3506.1289.

- Tombaugh TN, Kozak J, Rees L. Normative data stratified by age and education for two measures of verbal fluency: FAS and animal naming. Archives of Clinical Neuropsychology. 1999;14:167–177.

- Valenzeno L, Alibali MW, Klatzky R. Teachers' gestures facilitate students' learning: A lesson in symmetry. Contemporary Educational Psychology. 2003;28:187–204.

- Vinogradov S, Kirkland J, Poole JH, Drexler M, Ober BA, Shenaut GK. Both processing speed and semantic memory organization predict verbal fluency in schizophrenia. Schizophrenia Research. 2003;59:269–275. doi: 10.1016/s0920-9964(02)00200-1.

- Wagner SM, Nusbaum H, Goldin-Meadow S. Probing the mental representation of gesture: Is handwaving spatial? Journal of Memory and Language. 2004;50:395–407.