Significance

Human cognition is inherently limited: only a finite amount of visual information can be processed at a given instant. What determines those limits? Here, we show that more objects can be processed when they are from different stimulus categories than when they are from the same category. This behavioral benefit maps directly onto the functional organization of the ventral visual pathway. These results suggest that our ability to process multiple items at once is limited by the extent to which those items compete with one another for neural representation. Broadly, these results provide strong evidence that the capacities and limitations of human behavior can be inferred from our functional neural architecture.

Keywords: working memory, capacity limitations, representational similarity, competition, visual cognition

Abstract

High-level visual categories (e.g., faces, bodies, scenes, and objects) have separable neural representations across the visual cortex. Here, we show that this division of neural resources affects the ability to simultaneously process multiple items. In a behavioral task, we found that performance was superior when items were drawn from different categories (e.g., two faces/two scenes) compared to when items were drawn from one category (e.g., four faces). The magnitude of this mixed-category benefit depended on which stimulus categories were paired together (e.g., faces and scenes showed a greater behavioral benefit than objects and scenes). Using functional neuroimaging (i.e., functional MRI), we showed that the size of the mixed-category benefit was predicted by the amount of separation between neural response patterns, particularly within occipitotemporal cortex. These results suggest that the ability to process multiple items at once is limited by the extent to which those items are represented by separate neural populations.

An influential idea in neuroscience is that there is an intrinsic relationship between cognitive capacity and neural organization. For example, seminal cognitive models claim there are distinct resources devoted to perceiving and remembering auditory and visual information (1, 2). This cognitive distinction is reflected in the separate cortical regions devoted to processing sensory information from each modality (3). Similarly, within the domain of vision, when items are placed near each other, they interfere more than when they are spaced farther apart (4, 5). These behavioral effects have been linked to receptive fields and the retinotopic organization of early visual areas, in which items that are farther apart activate more separable neural populations (6–8). Thus, there are multiple cognitive domains in which it has been proposed that capacity limitations in behavior are intrinsically driven by competition for representation at the neural level (4, 7–10).

However, in the realm of high-level vision, evidence linking neural organization to behavioral capacities is sparse, although neural findings suggest there may be opportunities for such a link. For example, results from functional MRI (fMRI) and single-unit recording have found distinct clusters of neurons that selectively respond to categories such as faces, bodies, scenes, and objects (11, 12). These categories also elicit distinctive activation patterns across the ventral stream as measured with fMRI (13, 14). Together, these results raise the interesting possibility that there are partially separate cognitive resources available for processing different object categories.

In contrast, many prominent theories of visual cognition do not consider the possibility that different categories are processed by different representational mechanisms. For example, most models of attention and working memory assume or imply that these processes are limited by content-independent mechanisms such as the number of items that can be represented (15–18), the amount of information that can be processed (19–21), or the degree of spatial interference between items (4, 22–24). Similarly, classical accounts of object recognition are intended to apply equally to all object categories (25, 26). These approaches implicitly assume that visual cognition is limited by mechanisms that are not dependent on any major distinctions between objects.

Here, we examined (i) how high-level visual categories (faces, bodies, scenes, and objects) compete for representational resources in a change-detection task, and (ii) whether this competition is related to the separation of neural patterns across the cortex. To estimate the degree of competition between different categories, participants performed a task that required encoding multiple items at once from the same category (e.g., four faces) or different categories (e.g., two faces and two scenes). Any benefit in behavioral performance for mixed-category conditions relative to same-category conditions would suggest that different object categories draw on partially separable representational resources. To relate these behavioral measures to neural organization, we used fMRI to measure the neural responses of these categories individually and quantified the extent to which these categories activate different cortical regions.

Overall, we found evidence for separate representational resources for different object categories: performance with mixed-category displays was systematically better than performance with same-category displays. Critically, we also observed that the size of this mixed-category benefit was correlated with the degree to which items elicited distinct neural patterns, particularly within occipitotemporal cortex. These results support the view that a key limitation to simultaneously processing multiple high-level items is the extent to which those items are represented by nonoverlapping neural channels within occipitotemporal cortex.

Results

Behavioral Paradigm and Results.

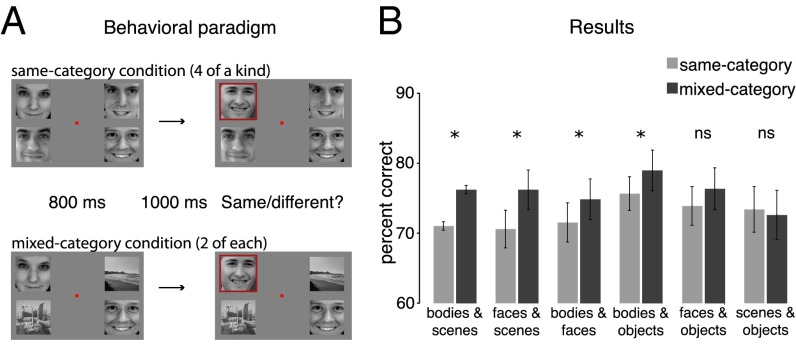

To measure how items from different categories compete for representation, participants performed a task that required encoding multiple items at once. The stimulus set included images of faces, bodies, scenes, and objects matched for luminance and contrast (Fig. S1 shows the full stimulus set). On each trial, four different items were presented for 800 ms with one item in each visual quadrant. Following a blank display (1,000 ms), the items reappeared with one item cued by a red frame, and participants reported if that item had changed (Fig. 1A). Changes occurred on half the trials and could occur only at the cued location.

Fig. 1.

(A) Behavioral paradigm. Participants were shown two successively presented displays with four items in each display (Materials and Methods). On the second display, one item was cued (red frame) and participants reported if that item had changed. In the same-category condition, items came from the same stimulus category (e.g., four faces or four scenes). In the mixed-category conditions, items came from two different categories (e.g., two faces and two scenes). (B) Behavioral experiment results. Same-category (light gray) and mixed-category (dark gray) performance is plotted in terms of percent correct for all possible category pairings. Error bars reflect within-subject SEM (48) (*P < 0.05).

The critical manipulation was that half the trials were same-category displays (e.g., four faces or four scenes), whereas the other half of trials were mixed-category displays (e.g., two faces and two scenes). Whenever an item changed, it changed to another item from the same category (e.g., a face could change into another face, but not a scene). Each participant was assigned two categories, such as faces and scenes, or bodies and objects, to obtain within-subject measures for the same-category and mixed-category conditions. Across six groups of participants, all six pairwise combinations of category pairings were tested (SI Materials and Methods).

If items from one category are easier to process, participants might pay more attention to the easier category in mixed-category displays. To address this concern, we averaged performance across the tested categories (e.g., for the face–scene pair, we averaged over whether a face or scene was tested). Thus, any differences in overall performance for the mixed-category and same-category conditions cannot be explained by attentional bias toward one particular category. We also took several steps to ensure that performance was approximately matched on the same-category conditions for all categories. First, we carefully selected our stimulus set based on a series of pilot studies (SI Materials and Methods). Second, before testing each participant, we used an adaptive calibration procedure to match performance on the same-category conditions, by adjusting the transparency of the items (Materials and Methods). Finally, we adopted a conservative exclusion criterion: any participants whose performance on the same-category displays (e.g., four faces compared with four scenes) differed by more than 10% were not included in the main analysis (SI Materials and Methods). This exclusion procedure ensured that there were no differences in difficulty between the same-category conditions for any pair of categories (P > 0.16 for all pairings; Fig. S2). Although these exclusion criteria were chosen to isolate competition between items, our overall behavioral pattern and its relationship to neural activation is similar with and without behavioral subjects excluded (Fig. S3).

Overall, we observed a mixed-category benefit: performance on mixed-category displays was superior to performance on same-category displays (F1,9 = 19.85; P < 0.01; Fig. 1B). This suggests that different categories draw on separate resources, improving processing of mixed-category displays. Moreover, although there was a general benefit for mixing any two categories, a closer examination suggested that the effect size depended on which categories were paired together (regression model comparison, F5,54 = 2.29; P = 0.059; SI Materials and Methods). The mixed-category benefit for each pairing, in order from largest to smallest, was: bodies–scenes, 5.6%, SEM = 1.5%; faces–scenes, 5.2%, SEM = 1.3%; bodies–faces, 3.3%, SEM = 1.1%; bodies–objects, 3.3%, SEM = 1.2%; faces–objects, 2.4%, SEM = 1.9%; scenes–objects, −0.8%, SEM = 1.9% (Fig. 1B). The variation in the size of the mixed-category benefit suggests that categories do not compete equally for representation and that there are graded benefits depending on the particular combination of categories.
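As a rough illustration of how the benefit and the matching criterion described above could be computed for one participant, consider the following minimal sketch. The function names, the accuracy values, and the reading of "10%" as 10 percentage points are assumptions made for illustration; they are not taken from the study's analysis code.

```python
from statistics import mean


def mixed_category_benefit(same_acc, mixed_acc):
    """Mixed minus same-category accuracy, each averaged over the tested category.

    Both arguments map the tested category (e.g., "faces") to proportion correct.
    """
    return mean(mixed_acc.values()) - mean(same_acc.values())


def passes_matching_criterion(same_acc, max_gap=0.10):
    """True if same-category accuracy differs by at most max_gap between categories.

    Assumption: the 10% criterion is read as 10 percentage points.
    """
    values = list(same_acc.values())
    return max(values) - min(values) <= max_gap


# Hypothetical accuracies for one participant assigned faces and scenes.
same = {"faces": 0.70, "scenes": 0.74}
mixed = {"faces": 0.77, "scenes": 0.79}

benefit = mixed_category_benefit(same, mixed)  # about 0.06, i.e., 6 percentage points
keep = passes_matching_criterion(same)         # True: the gap is 4 points
```

Averaging over the tested category before taking the difference is what prevents an attentional bias toward one category from inflating the benefit.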

What is the source of the variability in the size of the mixed-category benefit? We hypothesize that visual object information is represented by a set of broadly tuned neural channels in the visual system, and that each stimulus category activates a subset of those channels (7–10, 27, 28). Under this view, items compete for representation to the extent that they activate overlapping channels. The differences in the size of the mixed-category benefit may thus result from the extent to which the channels representing different categories are separated.

Importantly, although this representational-competition framework explains why varying degrees of mixed-category benefits occur, it cannot make a priori predictions about why particular categories (e.g., faces and scenes) interfere with one another less than other categories (e.g., objects and scenes). Thus, we sought to (i) directly measure the neural responses to each stimulus category and (ii) use these neural responses to predict the size of the mixed-category benefit between categories. Furthermore, by assuming a model of representational competition in the brain, we can leverage the graded pattern of behavioral mixed-category benefits to gain insight into the plausible sites of competition at the neural level.

Measuring Neural Separation Among Category Responses.

Six new participants who did not participate in the behavioral experiment were recruited for the fMRI experiment. Participants viewed stimuli presented in a blocked design, with each block composed of images from a single category presented in a single quadrant (Materials and Methods). The same image set was used in the behavioral and fMRI experiments. Neural response patterns were measured separately for each category in each quadrant of the visual field. There are two key properties of this fMRI design. First, any successful brain/behavior relationship requires that behavioral interference between two categories can be predicted from the neural responses to those categories measured in isolation and across separate locations. Second, by using two groups of participants, one for behavioral measurements and another for neural measurements, any brain/behavior relationship cannot rely on individual idiosyncrasies in object processing and instead reflects a more general property of object representation in behavior and neural coding.

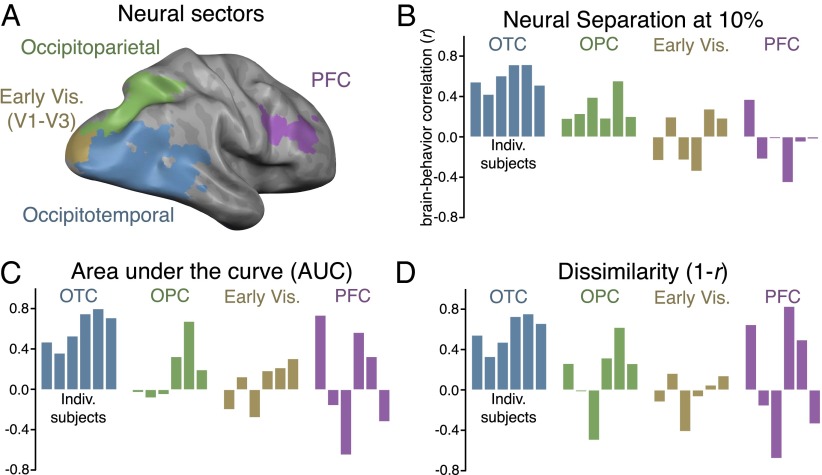

To probe how different neural regions predict behavioral interference, we divided the set of visually active voxels into four sectors in each participant: occipitotemporal, occipitoparietal, early visual (V1–V3), and prefrontal cortex (PFC). These sectors were defined from independent functional localizers (SI Materials and Methods and Fig. S4). Performing the analysis in these sectors allowed us to examine the neural response patterns across the major divisions of the visual system: early retinotopic cortex, the what pathway, the where/how pathway (29, 30), as well as in a frontal lobe region associated with working memory (31).

We defined the neural separation between any two categories as the extent to which the stimuli activate different voxels. To quantify this, we first identified the most active voxels in each sector for each of the categories, at a particular threshold [e.g., the 10% most active voxels for objects (objects > rest) and the top 10% most active voxels for scenes (scenes > rest); Fig. 2]. Next, we calculated the percentage of those voxels that were shared by each category pairing (i.e., percent overlap). This percent overlap measure was then converted to a neural separation measure as 1 minus percent overlap. The amount of neural separation for every category pairing was then calculated at every activation threshold (from 1% to 99%; Materials and Methods). Varying the percent of active voxels under consideration allows us to probe whether a sparse or more distributed pool of representational channels best predicts the behavioral effect. This was done in every sector of each fMRI participant. In addition, we also used an area under the curve (AUC) analysis, which integrates over all possible activation thresholds, to compute an aggregate neural separation measure for each category pairing. Finally, we performed a standard representational similarity analysis in which the neural patterns of each category pairing were compared by using a pattern dissimilarity measure [1 minus the Pearson correlation between two response patterns across the entire sector (14); SI Materials and Methods].
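The separation measure described above can be sketched as follows. This is a simplified illustration, not the study's pipeline: the function names and toy activation values are hypothetical, percent overlap is assumed to be the number of shared voxels divided by the number of selected voxels, and the sketch ignores the fact that overlap was computed separately for each pair of locations.

```python
def top_voxels(activation, percent):
    """Indices of the `percent`% most active voxels (at least one voxel)."""
    n_keep = max(1, round(len(activation) * percent / 100))
    ranked = sorted(range(len(activation)), key=lambda i: activation[i], reverse=True)
    return set(ranked[:n_keep])


def neural_separation(act_a, act_b, percent):
    """1 minus the fraction of top voxels shared by the two categories."""
    top_a = top_voxels(act_a, percent)
    top_b = top_voxels(act_b, percent)
    overlap = len(top_a & top_b) / len(top_a)
    return 1.0 - overlap


# Toy responses (category > rest) for a ten-voxel "sector"; values are made up.
objects = [0.9, 0.8, 0.1, 0.2, 0.7, 0.3, 0.0, 0.4, 0.5, 0.6]
scenes = [0.1, 0.9, 0.8, 0.2, 0.3, 0.7, 0.0, 0.4, 0.5, 0.6]

# At a 30% threshold each category keeps three voxels and they share one,
# so overlap is 1/3 and separation is 2/3.
sep = neural_separation(objects, scenes, 30)
```

Because both categories contribute the same number of voxels at a given threshold, the overlap fraction is symmetric in the two categories.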

Fig. 2.

Visualization of the neural separation procedure. Activation and overlap among the 10% most active voxels for objects and scenes in the occipitotemporal sector are shown in a representative subject. The 10% most active voxels for each category are colored as follows: objects purple, scenes blue. The overlap among these active voxels is shown in yellow. For visualization purposes, this figure shows the most active voxels and overlapping voxels combined across all locations; for the main analysis, overlap was computed separately for each pair of locations.

Neural Separation Predicts the Mixed-Category Benefit.

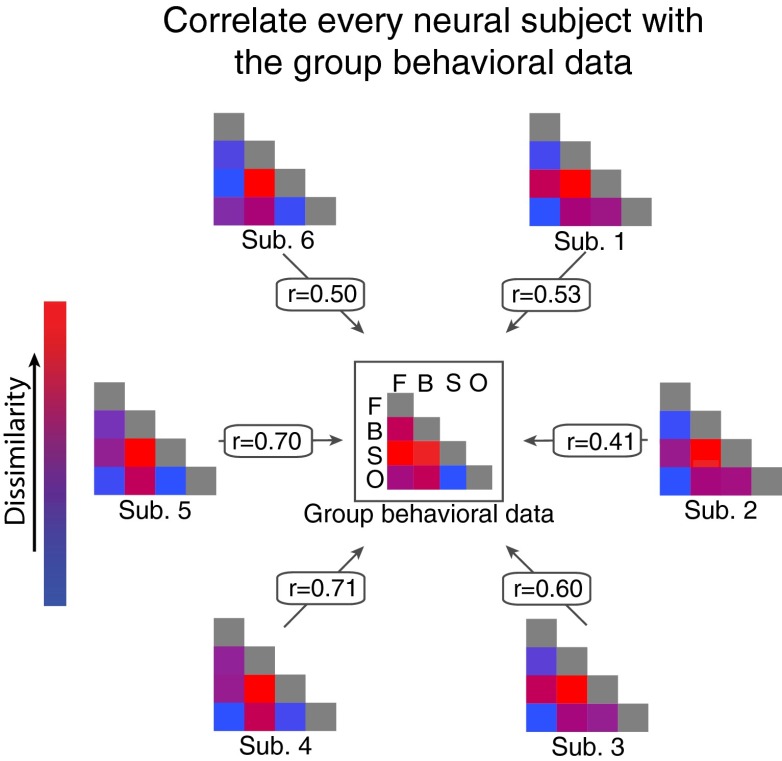

To assess the degree to which neural separation predicted the mixed-category benefit, we correlated the amount of neural separation for every category pairing in each individual fMRI participant with the size of the mixed-category benefits from the behavioral experiments (i.e., a random effects analysis of the distribution of brain/behavior correlations; SI Materials and Methods). An illustration of this analysis procedure using data obtained from occipitotemporal cortex is shown in Fig. 3. We chose this analysis because it allows for a stronger inference about the generality of our results relative to a fixed effects analysis on the neural and behavioral data (14). In addition, we were confident in our ability to analyze each fMRI participant individually given the highly reliable nature of our neural data (average within-subject split-half reliability in occipitotemporal, r = 0.82; occipitoparietal, r = 0.79; early visual, r = 0.86; and prefrontal, r = 0.65; SI Materials and Methods and Figs. S5 and S6).
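The logic of this analysis can be sketched as below: one brain/behavior correlation per fMRI participant, followed by a test on the distribution of those correlations. The behavioral benefits are the group values reported in the text; the neural separation values are hypothetical, and the use of a one-sample t statistic on the raw r values is an assumption (the exact procedure is given in SI Materials and Methods).

```python
from math import sqrt
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def one_sample_t(values):
    """t statistic for the mean of `values` against zero (df = n - 1)."""
    return mean(values) / (stdev(values) / sqrt(len(values)))


# Group mixed-category benefits (%) from the behavioral experiment, in the order:
# bodies-scenes, faces-scenes, bodies-faces, bodies-objects, faces-objects, scenes-objects.
benefit = [5.6, 5.2, 3.3, 3.3, 2.4, -0.8]

# Hypothetical neural separation values (same pair order) for three participants.
separation = [
    [0.80, 0.78, 0.70, 0.66, 0.62, 0.55],
    [0.75, 0.79, 0.64, 0.69, 0.66, 0.58],
    [0.82, 0.74, 0.71, 0.65, 0.68, 0.60],
]

r_per_participant = [pearson_r(s, benefit) for s in separation]
t_stat = one_sample_t(r_per_participant)
```

Treating each participant's correlation as one observation is what makes this a random effects analysis: the test asks whether the relationship holds across participants, not just in the pooled data.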

Fig. 3.

Visualization of our analysis procedure. The center matrix represents the group data from the behavioral experiment with the color of each square corresponding to the size of the mixed-category benefit for that category pairing (Fig. 1). The six remaining matrices correspond to each fMRI participant, with the color of each square corresponding to the amount of neural separation between two categories in occipitotemporal cortex at the 10% activation threshold (Fig. 2). The correlations (r) are shown for each fMRI participant. Note that the r values shown here are the same as those shown in Fig. 4B for occipitotemporal cortex.

To determine whether the most active voxels alone could predict the mixed-category benefit, we correlated the amount of neural separation in the 10% most active voxels with the size of the mixed-category benefit. In this case, we found a significant correlation in occipitotemporal cortex of each participant (average r = 0.59, P < 0.01) with a smaller, but still significant, correlation in occipitoparietal cortex (average r = 0.30, P < 0.01) and no correlation in early visual (average r = −0.03, P = 0.82) or prefrontal cortex (average r = −0.06, P = 0.63; Fig. 4B). A leave-one-category-out analysis confirmed that the correlations in each of these sectors were not driven by any particular category (SI Materials and Methods). It should be noted that, given the fine-grained retinotopy in early visual cortex, objects presented across visual quadrants activate nearly completely separate regions, and this is reflected in the neural separation measure (ranging from 93% to 96% separation). Thus, by design, we anticipated that the neural separation of these patterns in the early visual cortex would not correlate with the behavioral results.

Fig. 4.

(A) Visualization of the four sectors from a representative subject. (B) Brain/behavior correlations in every sector for each fMRI participant at the 10% activation threshold, with r values plotted on the y axis. Each bar corresponds to an individual participant. (C) Brain/behavior correlations in every sector for each participant when using the AUC analysis and (D) representational dissimilarity (1 − r).

To compare correlations between any two sectors, a paired t test was performed on the Fisher z-transformed correlations. In this case, the correlation in occipitotemporal cortex was significantly greater than the correlations in all other sectors (occipitoparietal, t5 = 5.14, P < 0.01; early visual, t5 = 4.68, P < 0.01; PFC, t5 = 4.67, P < 0.01). Together, these results show that the degree of neural overlap between stimulus categories, particularly within occipitotemporal cortex, strongly predicts the variation in the size of the behavioral mixed-category benefit for different categories. Moreover, because this analysis considers only 10% of voxels in a given sector, these results indicate that a relatively sparse set of representational channels predicts the behavioral effect.
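A minimal sketch of the sector comparison described above, assuming one correlation per participant in each sector. The r values are hypothetical and the function name is illustrative; only the Fisher z transform followed by a paired t test comes from the text.

```python
from math import atanh, sqrt
from statistics import mean, stdev


def paired_t_on_fisher_z(r_a, r_b):
    """Paired t statistic on Fisher z-transformed correlations.

    r_a and r_b hold one correlation per participant for two sectors.
    Returns (t, degrees of freedom).
    """
    # Fisher z transform: z = atanh(r)
    diffs = [atanh(a) - atanh(b) for a, b in zip(r_a, r_b)]
    t = mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical per-participant correlations for two sectors (six participants).
r_occipitotemporal = [0.55, 0.62, 0.70, 0.48, 0.66, 0.53]
r_occipitoparietal = [0.25, 0.35, 0.30, 0.28, 0.33, 0.29]

t_value, df = paired_t_on_fisher_z(r_occipitotemporal, r_occipitoparietal)
```

With six fMRI participants the test has five degrees of freedom, which matches the t5 values reported in the text.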

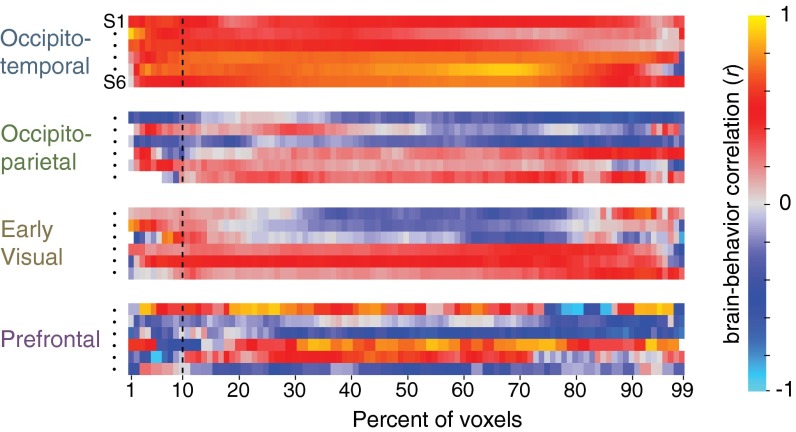

Next, we varied the activation threshold to test whether a more restricted or more expansive pool of neural channels could best predict the graded pattern of the behavioral mixed-category benefit. The percentage of most-active voxels used for the separation analysis was systematically increased from 1% to 99% (at 100%, there is complete overlap between all pairs of categories because every voxel is included for every category). The brain/behavior correlation of every subject as a function of percentile for each sector is shown in Fig. 5. This analysis revealed that the behavioral effect is well-predicted by the amount of neural separation across a broad range of occipitotemporal cortex, regardless of the percentile chosen for the neural separation analysis. Put another way, for any two categories, the degree of overlap among the most active voxels is similarly reflected across the majority of the entire activation profile.

Fig. 5.

Brain/behavior correlation in each subject for every sector for all possible activation thresholds. Each row shows the results for one of the six individual subjects (in the same order as shown in Fig. 4 for each sector). The x axis corresponds to the percentage of active voxels considered for the neural overlap analysis. The dashed vertical line marks the brain/behavior correlation when considering the 10% most active voxels, corresponding to the data plotted in Fig. 4B.

To assess the statistical reliability of this result in a way that does not depend on a particular activation threshold, we used an AUC analysis to compute the aggregate neural separation between categories for all subjects in all sectors. These values were then correlated with the behavioral results, and the results were similar to those observed when considering only the top 10% most active voxels (Fig. 4C). There was a significant correlation in occipitotemporal cortex (average r = 0.62, t5 = 6.28, P < 0.01), whereas little to no correlation was observed in the remaining sectors (occipitoparietal, r = 0.20; early visual, r = 0.06; prefrontal, r = 0.11; P > 0.21 in all cases; Fig. 4C). In addition, the correlation in occipitotemporal cortex was significantly greater than the correlations in all other sectors (occipitoparietal, t5 = 8.36, P < 0.001; early visual, t5 = 6.86, P < 0.01), except PFC, in which the effect was marginal (t5 = 2.30, P = 0.07), likely because the brain/behavior correlation measures in PFC were highly inconsistent across participants. It is worth noting that, in the dorsal stream sector of occipitoparietal cortex, although there was a significant correlation at the 10% cutoff, the AUC analysis did not show a significant correlation (t5 = 1.45, P > 0.21), suggesting that only the most active voxels in occipitoparietal cortex predict the behavioral data.
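One way to picture the AUC measure is as the area under the separation-versus-threshold curve. The sketch below is an assumption about how such an aggregate could be formed (trapezoidal integration over thresholds from 1% to 99%, normalised to the 0 to 1 range); the exact procedure is described in Materials and Methods, and the function names and toy values here are hypothetical.

```python
def separation_at(act_a, act_b, percent):
    """1 minus the overlap among the `percent`% most active voxels of each pattern."""
    n_keep = max(1, round(len(act_a) * percent / 100))

    def top(act):
        order = sorted(range(len(act)), key=lambda i: act[i], reverse=True)
        return set(order[:n_keep])

    return 1.0 - len(top(act_a) & top(act_b)) / n_keep


def separation_auc(act_a, act_b, thresholds=range(1, 100)):
    """Trapezoidal area under the separation curve, divided by the threshold span."""
    ts = list(thresholds)
    curve = [separation_at(act_a, act_b, p) for p in ts]
    area = sum(
        (ts[i + 1] - ts[i]) * (curve[i] + curve[i + 1]) / 2
        for i in range(len(ts) - 1)
    )
    return area / (ts[-1] - ts[0])


# Toy ten-voxel patterns: pattern_b is pattern_a reversed.
pattern_a = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1, 0.0]
pattern_b = list(reversed(pattern_a))

auc_same = separation_auc(pattern_a, pattern_a)  # identical patterns never separate
auc_diff = separation_auc(pattern_a, pattern_b)
```

Integrating over thresholds removes the need to commit to a single cutoff such as 10%, at the cost of weighting sparse and distributed codes equally.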

A convergent pattern of results was found by using a representational similarity analysis (14). That is, in occipitotemporal cortex, pattern dissimilarity (i.e., 1 − r) across all pairs of categories strongly predicted the magnitude of the mixed-category benefit (average r = 0.60, t5 = 6.77, P < 0.01; Fig. 4D). None of the other sectors showed a significant brain/behavior correlation using this neural measure (P > 0.37 in all cases), and direct comparisons between sectors show that the brain/behavior correlation in occipitotemporal cortex was significantly greater than those in the other sectors [P < 0.05 in all cases except in PFC, in which the difference was not significant (t5 = 1.87, P = 0.12), again likely because of the brain/behavior correlations being highly variable in PFC].
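For reference, the pattern dissimilarity measure (1 minus the Pearson correlation between two response patterns) can be written in a few lines. The function name and the toy patterns are illustrative only.

```python
from math import sqrt


def pattern_dissimilarity(pattern_a, pattern_b):
    """1 minus the Pearson correlation between two voxel response patterns."""
    n = len(pattern_a)
    mean_a = sum(pattern_a) / n
    mean_b = sum(pattern_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(pattern_a, pattern_b))
    var_a = sum((a - mean_a) ** 2 for a in pattern_a)
    var_b = sum((b - mean_b) ** 2 for b in pattern_b)
    return 1.0 - cov / sqrt(var_a * var_b)


# Toy three-voxel patterns: identical patterns give 0, opposite patterns give 2.
d_identical = pattern_dissimilarity([1.0, 2.0, 3.0], [1.0, 2.0, 3.0])
d_opposite = pattern_dissimilarity([1.0, 2.0, 3.0], [3.0, 2.0, 1.0])
```

Unlike the thresholded separation measure, this metric uses every voxel in the sector, so weakly responsive voxels contribute as much as strongly responsive ones.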

To what extent do the category-selective regions for faces, bodies, scenes, and objects found in the occipitotemporal sector (11) drive these results? To address this question, we calculated the brain/behavior correlation in occipitotemporal cortex when considering only category-selective regions [e.g., fusiform face area (FFA)/occipital face area (OFA) and parahippocampal place area (PPA)/retrosplenial cortex (RSC) when comparing faces and scenes] or only the cortex outside the category-selective regions by using pattern dissimilarity as our measure of representational similarity (SI Materials and Methods). This analysis revealed a strong brain/behavior correlation within the category-selective regions (average r = 0.62, P < 0.01) and outside the category-selective regions (r = 0.60, P < 0.01; Fig. S7), with no difference between these two correlations (t5 = 0.10, P = 0.92).

Different assumptions about neural coding are tested by our two analyses: neural separation tests the idea that information is conveyed by maximal neural responses; neural similarity assumes that information is conveyed over the full distribution of responses within some circumscribed cortical territory. These measures dissociate in the occipitoparietal sector. The neural overlap analysis revealed that only the most active voxels have systematic differences among categories, suggesting that there is reliable object category information along portions of this sector (32). This observation was missed by the representational similarity analysis, presumably because many of the dorsal stream voxels are not as informative, making the full neural patterns subject to more noise. This result also highlights that the selection of voxels over which pattern analysis is performed can be critical to the outcomes. In contrast, in the occipitotemporal cortex, the separation and similarity metrics strongly correlated with behavior, and thus cannot distinguish between the functional roles of strong overall responses and distributed patterns. Nevertheless, this convergence strongly demonstrates that neural responses across the entire occipitotemporal cortex have the requisite representational structure to predict object-processing capacity in behavior.

Discussion

Here we characterized participants’ ability to simultaneously process multiple high-level items and linked this behavioral capacity to the underlying neural representations of these items. Participants performed better in a change-detection task when items were from different categories than when items were from the same category. This suggests that, within the domain of high-level vision, items do not compete equally for representation. By using fMRI to independently measure the pattern of activity evoked by each category, we found that the magnitude of the mixed-category benefit for any category pairing was strongly predicted by the amount of neural separation between those categories in occipitotemporal cortex. These data suggest that processing multiple items at once is limited by the extent to which those items draw on the same underlying representational resources.

The present behavioral results challenge many influential models of attention and working memory. These models are typically derived from studies that use simple stimuli (e.g., colorful dots or geometric shapes), and tend to posit general limits that are assumed to apply to all items equally. For example, some models propose that processing capacity is set by a fixed number of pointers (15–18) or fixed resource limits (19–21), or from spatial interference between items (4, 22–24), none of which are assumed to depend on the particular items being processed. However, the present results demonstrate that the ability to process multiple items at once is greater when the items are from different categories. We interpret this finding in terms of partially separate representational resources available for processing different types of high-level stimuli. However, an alternative possibility is that these effects depend on processing overlap instead of representational overlap. For example, it has been argued that car experts show greater interference between cars and faces in a perceptual task than nonexperts because only experts use holistic processing to recognize both cars and faces (33, 34). The present behavioral data cannot distinguish between these possibilities. Future work will be required to determine which stages of perceptual processing show interference and whether this interference is best characterized in terms of representational or processing overlap.

Given that the size of the behavioral mixed-category benefit varied as a function of which categories were being processed, what is the source of this variability? We found that neural response patterns, particularly in occipitotemporal cortex, strongly predicted the pattern of behavioral interference. This relationship between object processing and the structure of occipitotemporal cortex is intuitive because occipitotemporal cortex is known to respond to high-level object and shape information (11–14) and has receptive fields large enough to encompass multiple items in our experimental design (35). However, some aspects of the correlation between behavior and occipitotemporal cortex were somewhat surprising. In particular, we found that the relative separation between stimulus categories was consistent across the entire response profile along occipitotemporal cortex. That is, the brain/behavior relationship held when considering the most active voxels, the most selective voxels (e.g., FFA/PPA), or those voxels outside of classical category-selective regions.

The fact that the brain/behavior correlation can be seen across a large majority of occipitotemporal cortex is not predicted by expertise-based (36) or modular (11) models of object representation. If this correlation were caused by differences in expertise between the categories, one might expect to see a significant correlation only in FFA (37). Similarly, if competition within the most category-selective voxels drove the behavioral result, we would expect to find a significant brain/behavior correlation only within these regions. Of course, it is important to emphasize that the present approach is correlational, so we do not know whether all or some subset of occipitotemporal cortex is the underlying site of interference. Future work with the use of causal methods (38), or that explores individual differences in capacity and neural organization (39), will be important to explore these hypotheses.

In light of the relationship between behavioral performance and neural separation, it is important to consider the level of representation at which the competition occurs. For example, items might interfere with each other within a semantic (40), categorical (11), or perceptual (41, 42) space. Variants of the current task could be used to isolate the levels of representation involved in the mixed-category benefit and its relationship to neural responses. For example, the use of exemplars with significant perceptual variation (e.g., caricatures, Mooney faces, and photographs) would better isolate a category level of representation. Conversely, examining the same type of brain/behavior relationship within a single category would minimize the variation in semantic space and would target a more perceptual space. Although the present data cannot isolate the level of representation at which competition occurs, it is possible that neural competition could occur at all levels of representation, and that behavioral performance will ultimately be limited by competition at the level of representation that is most relevant for the current task.

The idea of competition for representation is a prominent component of the biased competition model of visual attention, which was originally developed based on neurophysiological studies in monkeys (7), and has been expanded to explain certain human neuroimaging and behavioral results (43). These previous studies have shown that, if two items are presented close enough to land within a single receptive field, they compete for neural representation, such that the neural response to both items matches a weighted average of the response to each individual item alone (44). When attention is directed to one of the items, neural responses are biased toward the attended item, causing the neuron to fire as if only the attended item were present (7–10, 44). In the present study, we did not measure neural competition directly. Instead, we measured neural responses to items presented in isolation and used similarity in those responses to predict performance in a behavioral task. We suggest that the cost for neural similarity reflects a form of competition, but we cannot say how that competition manifests itself (e.g., as suppression of overall responses or a disruption in the pattern of responses across the population) or if these mechanisms are the same from early to high-level visual cortex (45–47). Thus, parameterizing neural similarity and measuring neural responses to items presented simultaneously will be essential for addressing the relationship between neural similarity and neural competition.

Overall, the present findings support a framework in which visual processing relies on a large set of broadly tuned coding channels, and perceptual interference between items depends on the degree to which they activate overlapping channels. This proposal predicts that a behavioral mixed-category benefit will be obtained for tasks that require processing multiple items at once, to the extent that the items rely on separate channels. It is widely known that there are severe high-level constraints on our ability to attend to, keep track of, and remember information. The present work adds a structural constraint on information processing and perceptual representation, based on how high-level object information is represented within the visual system.

Materials and Methods

Behavioral Task.

Participants (N = 55) viewed four items for 800 ms, followed by a fixation screen for 1,000 ms, followed by a probe display of four items, one of which was cued with a red frame. For any display, images were randomly chosen from the stimulus set with the constraint that all images on a given display were unique. There were no changes on half the trials. On the other trials, the cued item changed to a different item from the same category (e.g., from one face to another). Participants reported if the cued item had changed. The probe display remained on screen until participants gave a response by using the keyboard.

On same-category trials, all four items came from the same category (e.g., four faces or four scenes), with each category appearing equally often across trials. On mixed-category trials, there were two items from each category (e.g., two faces and two scenes). Items were arranged such that one item from each category appeared in each visual hemifield. Across the mixed-category trials, both categories were tested equally often. The location of the cued item was chosen in a pseudorandom fashion.
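The display constraints described above can be illustrated with a minimal Python sketch. It is not the code used in the study: the location labels, the function names, and the stimulus-set size (`n_per_category=10`) are assumptions made for illustration, and the cued location is drawn uniformly at random rather than balanced across categories as in the experiment.

```python
import random

# Hypothetical location labels, two per visual hemifield.
LEFT = ["top_left", "bottom_left"]
RIGHT = ["top_right", "bottom_right"]


def make_layout(categories, rng):
    """Assign a category to each of the four locations.

    One category  -> same-category display (four items of that category).
    Two categories -> mixed display, one item per category in each hemifield.
    """
    if len(categories) == 1:
        return {loc: categories[0] for loc in LEFT + RIGHT}
    if len(categories) != 2:
        raise ValueError("expected one or two categories")
    layout = {}
    for hemifield in (LEFT, RIGHT):
        order = list(categories)
        rng.shuffle(order)  # which category goes top vs. bottom
        for loc, cat in zip(hemifield, order):
            layout[loc] = cat
    return layout


def make_trial(categories, change, rng, n_per_category=10):
    """Build sample and probe displays for one change-detection trial.

    n_per_category is an assumed stimulus-set size, not a value from the paper.
    """
    layout = make_layout(categories, rng)
    sample = {}
    for cat in sorted(set(layout.values())):
        locs = [loc for loc in layout if layout[loc] == cat]
        # Unique exemplars within a display.
        ids = rng.sample(range(n_per_category), len(locs))
        for loc, idx in zip(locs, ids):
            sample[loc] = (cat, idx)
    cued = rng.choice(list(sample))
    probe = dict(sample)
    if change:
        cat, _ = sample[cued]
        used = {idx for c, idx in sample.values() if c == cat}
        unused = [idx for idx in range(n_per_category) if idx not in used]
        # The cued item changes to a different item from the same category.
        probe[cued] = (cat, rng.choice(unused))
    return {"sample": sample, "probe": probe, "cued": cued, "change": change}
```

For example, `make_trial(["face", "scene"], change=True, rng=random.Random(0))` returns a mixed-category change trial in which each hemifield contains one face and one scene.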

fMRI Task.

Six participants (none of whom performed the behavioral task) completed the fMRI experiment. There were eight runs of the main experiment, one run of meridian mapping to localize early visual areas (V1–V3), one run of a working memory task used to localize PFC, and two localizer runs used to define the occipitotemporal and occipitoparietal sectors as well as category-selective regions (FFA/OFA, PPA/RSC, extrastriate body area/fusiform body area, and lateral occipital). Participants were shown the same faces, bodies, scenes, and objects that were used in the behavioral experiments (Fig. S1), presented one at a time in each of the four quadrants [top left (TL), top right (TR), bottom left (BL), bottom right (BR)], following a standard blocked design. In each 16-s stimulus block, images from one category were presented in isolation (one at a time) at one of the four locations; 10-s fixation blocks intervened between each stimulus block. A total of 11 items were presented per block for 1 s with a 0.45-s intervening blank. Participants were instructed to maintain fixation on a central cross and to press a button indicating when the same item appeared twice in a row, which happened once per block. For any given run, all four stimulus categories were presented in two of the four possible locations, for two separate blocks per category × location pair, yielding 16 blocks per run. Across eight runs, the categories were presented at each pair of locations (TL–TR; TL–BR; BL–BR; BL–TR), yielding eight blocks for each of the 16 category × location conditions (SI Materials and Methods provides information on localizer runs).
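The block counts in this design can be checked with a short Python sketch. The assignment of location pairs to runs (each pair used in two of the eight runs, in a fixed order) is an assumption made here for illustration; the text specifies only the resulting totals.

```python
from itertools import product

CATEGORIES = ["faces", "bodies", "scenes", "objects"]
LOCATIONS = ["TL", "TR", "BL", "BR"]
LOCATION_PAIRS = [("TL", "TR"), ("TL", "BR"), ("BL", "BR"), ("BL", "TR")]
BLOCKS_PER_CELL_PER_RUN = 2  # two blocks per category x location pair per run
N_RUNS = 8

# Block duration implied by the item timing: 11 items x (1 s + 0.45 s blank).
ITEMS_PER_BLOCK = 11
ITEM_S, BLANK_S = 1.0, 0.45
block_duration = ITEMS_PER_BLOCK * (ITEM_S + BLANK_S)  # 15.95 s, about 16 s

# Assumed schedule: cycle through the four location pairs twice.
runs = [LOCATION_PAIRS[r % len(LOCATION_PAIRS)] for r in range(N_RUNS)]

counts = {cell: 0 for cell in product(CATEGORIES, LOCATIONS)}
blocks_per_run = []
for pair in runs:
    n_blocks = 0
    for cat, loc in product(CATEGORIES, pair):
        counts[(cat, loc)] += BLOCKS_PER_CELL_PER_RUN
        n_blocks += BLOCKS_PER_CELL_PER_RUN
    blocks_per_run.append(n_blocks)

print(blocks_per_run)         # blocks in each run
print(set(counts.values()))   # blocks per category x location condition
```

Because each location belongs to two of the four location pairs, every one of the 16 category × location conditions accumulates the same number of blocks across runs.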

Neural Separation Analysis.

The logic of this analysis is to compute the proportion of voxels that are activated by any two categories (e.g., faces and scenes): if no voxels are coactivated, there is 100% neural separation, whereas, if all voxels are coactivated, there is 0% separation. This analysis relies on one free parameter, which sets the percent of the most active voxels to consider as the available representational resources of each object category. In addition, we take into account location by considering the overlap between the two categories at all pairs of locations, and then averaging across location pairs.

To compute the neural separation between two categories within a sector (e.g., faces and scenes in occipitotemporal cortex), we used the following procedure. (i) The responses (β) for each category–location pair were sorted and the top n (as a percentage) was selected for analysis, where n was varied from 1% to 99%. (ii) Percent overlap at a particular threshold was computed as the number of voxels that were shared between any two conditions at that threshold, divided by the number of selected voxels for each condition (e.g., if 10 voxels overlap among the top 100 face voxels and the top 100 scene voxels, the face–scene overlap would be 10/100 = 10%). To take into account location, percent overlap was calculated separately for all 12 possible location pairs, e.g., faces-TL–scenes-TR, faces-TL–scenes-BL, faces-TL–scenes-BR. Fig. 2 shows an example in which n = 10% for the activation patterns of objects (purple) and scenes (blue), with shared voxels shown in yellow. (iii) Finally, we averaged across these 12 overlap estimates to compute the final overall estimate of overlap between a pair of categories. This measure can be interpreted as the degree to which two different categories in two different locations will recruit similar cortical territory. We computed percent overlap at each percentile (i.e., 1%–99% of the most active voxels), generating a neural overlap curve, and converted this to a percentage separation measure by taking 1 minus the percent overlap. This procedure was conducted for all pairs of categories, for all sectors, for all subjects.
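Steps (i) through (iii) can be sketched in Python with NumPy. This is an illustrative reading of the procedure, not the original analysis code: the function names and the `betas` dictionary layout are hypothetical, and the 12 location pairs are taken to be the ordered pairs of distinct locations, consistent with the examples given in the text.

```python
import numpy as np
from itertools import permutations

LOCATIONS = ["TL", "TR", "BL", "BR"]


def top_voxels(betas, percent):
    """Step (i): indices of the top `percent` % most active voxels."""
    n_voxels = betas.shape[0]
    k = max(1, int(round(n_voxels * percent / 100.0)))
    return set(np.argsort(betas)[-k:].tolist())


def percent_overlap(betas_a, betas_b, percent):
    """Step (ii): shared voxels divided by the number selected per condition."""
    top_a = top_voxels(betas_a, percent)
    top_b = top_voxels(betas_b, percent)
    return 100.0 * len(top_a & top_b) / len(top_a)


def separation_curve(betas, cat_a, cat_b, percents=range(1, 100)):
    """Step (iii): average overlap over location pairs, then convert to separation.

    `betas` is assumed to map (category, location) -> 1D array of voxel
    responses within one sector, with the same voxel order in every array.
    """
    curve = []
    for p in percents:
        overlaps = [
            percent_overlap(betas[(cat_a, loc_a)], betas[(cat_b, loc_b)], p)
            for loc_a, loc_b in permutations(LOCATIONS, 2)  # 12 ordered pairs
        ]
        curve.append(100.0 - np.mean(overlaps))  # separation = 100% - overlap
    return np.array(curve)
```

Calling `separation_curve(betas, "faces", "scenes")` returns one separation value per threshold from 1% to 99%. In the full analysis this would be repeated for every category pair, sector, and subject.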

Acknowledgments

We thank Marvin Chun, James DiCarlo, Nancy Kanwisher, Rebecca Saxe, Amy Skerry, Maryam Vaziri-Pashkam, and Jeremy Wolfe for comments on the project. This work was supported by a National Science Foundation (NSF) Graduate Research Fellowship (to M.A.C.), National Research Service Award Grant F32EY022863 (to T.K.), National Institutes of Health National Eye Institute R01 Grant EY01362 (to K.N.), and NSF CAREER Grant BCS-0953730 (to G.A.A.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1317860111/-/DCSupplemental.

References

- 1. Baddeley AD, Hitch G. Working memory. In: Bower GH, editor. The Psychology of Learning and Motivation: Advances in Research and Theory. New York: Academic; 1974. pp. 47–89.

- 2. Duncan J, Martens S, Ward R. Restricted attentional capacity within but not between sensory modalities. Nature. 1997;387(6635):808–810. doi: 10.1038/42947.

- 3. Gazzaniga M, Ivry RB, Mangun GR. Cognitive Neuroscience. New York: Norton; 2008.

- 4. Franconeri SL, Alvarez GA, Cavanagh P. Flexible cognitive resources: Competitive content maps for attention and memory. Trends Cogn Sci. 2013;17(3):134–141. doi: 10.1016/j.tics.2013.01.010.

- 5. Pelli DG, Tillman KA. The uncrowded window of object recognition. Nat Neurosci. 2008;11(10):1129–1135. doi: 10.1038/nn.2187.

- 6. Wandell BA, Dumoulin SO, Brewer AA. Visual field maps in human cortex. Neuron. 2007;56(2):366–383. doi: 10.1016/j.neuron.2007.10.012.

- 7. Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- 8. Kastner S, et al. Modulation of sensory suppression: Implications for receptive field sizes in the human visual cortex. J Neurophysiol. 2001;86(3):1398–1411. doi: 10.1152/jn.2001.86.3.1398.

- 9. Kastner S, De Weerd P, Desimone R, Ungerleider LG. Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science. 1998;282(5386):108–111. doi: 10.1126/science.282.5386.108.

- 10. Beck DM, Kastner S. Stimulus context modulates competition in human extrastriate cortex. Nat Neurosci. 2005;8(8):1110–1116. doi: 10.1038/nn1501.

- 11. Kanwisher N. Functional specificity in the human brain: A window into the functional architecture of the mind. Proc Natl Acad Sci USA. 2010;107(25):11163–11170. doi: 10.1073/pnas.1005062107.

- 12. Bell AH, et al. Relationship between functional magnetic resonance imaging-identified regions and neuronal category selectivity. J Neurosci. 2011;31(34):12229–12240. doi: 10.1523/JNEUROSCI.5865-10.2011.

- 13. Haxby JV, et al. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293(5539):2425–2430. doi: 10.1126/science.1063736.

- 14. Kriegeskorte N, et al. Matching categorical object representations in inferior temporal cortex of man and monkey. Neuron. 2008;60(6):1126–1141. doi: 10.1016/j.neuron.2008.10.043.

- 15. Pylyshyn ZW, Storm RW. Tracking multiple independent targets: Evidence for a parallel tracking mechanism. Spat Vis. 1988;3(3):179–197. doi: 10.1163/156856888x00122.

- 16. Drew T, Vogel EK. Neural measures of individual differences in selecting and tracking multiple moving objects. J Neurosci. 2008;28(16):4183–4191. doi: 10.1523/JNEUROSCI.0556-08.2008.

- 17. Awh E, Barton B, Vogel EK. Visual working memory represents a fixed number of items regardless of complexity. Psychol Sci. 2007;18(7):622–628. doi: 10.1111/j.1467-9280.2007.01949.x.

- 18. Zhang W, Luck SJ. Discrete fixed-resolution representations in visual working memory. Nature. 2008;453(7192):233–235. doi: 10.1038/nature06860.

- 19. Alvarez GA, Cavanagh P. The capacity of visual short-term memory is set both by visual information load and by number of objects. Psychol Sci. 2004;15(2):106–111. doi: 10.1111/j.0963-7214.2004.01502006.x.

- 20. Ma WJ, Husain M, Bays PM. Changing concepts of working memory. Nat Neurosci. 2014;17(3):347–356. doi: 10.1038/nn.3655.

- 21. Franconeri SL, Alvarez GA, Enns JT. How many locations can be selected at once? J Exp Psychol Hum Percept Perform. 2007;33(5):1003–1012. doi: 10.1037/0096-1523.33.5.1003.

- 22. Delvenne J-F. The capacity of visual short-term memory within and between hemifields. Cognition. 2005;96(3):B79–B88. doi: 10.1016/j.cognition.2004.12.007.

- 23. Delvenne J-F, Holt JL. Splitting attention across the two visual fields in visual short-term memory. Cognition. 2012;122:258–263. doi: 10.1016/j.cognition.2011.10.015.

- 24. Franconeri SL, Jonathan SV, Scimeca JM. Tracking multiple objects is limited only by object spacing, not by speed, time, or capacity. Psychol Sci. 2010;21(7):920–925. doi: 10.1177/0956797610373935.

- 25. Biederman I. Recognition-by-components: A theory of human image understanding. Psychol Rev. 1987;94(2):115–147. doi: 10.1037/0033-295X.94.2.115.

- 26. Tarr MJ, Bülthoff HH. Image-based object recognition in man, monkey and machine. Cognition. 1998;67(1-2):1–20. doi: 10.1016/s0010-0277(98)00026-2.

- 27. Wong JH, Peterson MS, Thompson JC. Visual working memory capacity for objects from different categories: A face-specific maintenance effect. Cognition. 2008;108(3):719–731. doi: 10.1016/j.cognition.2008.06.006.

- 28. Olsson H, Poom L. Visual memory needs categories. Proc Natl Acad Sci USA. 2005;102(24):8776–8780. doi: 10.1073/pnas.0500810102.

- 29. Ungerleider LG, Mishkin M. Two cortical visual systems. In: Ingle DJ, Goodale MA, Mansfield RJW, editors. Analysis of Visual Behavior. Cambridge, MA: MIT Press; 1982. pp. 549–583.

- 30. Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15(1):20–25. doi: 10.1016/0166-2236(92)90344-8.

- 31. Curtis CE, D’Esposito M. Persistent activity in the prefrontal cortex during working memory. Trends Cogn Sci. 2003;7(9):415–423. doi: 10.1016/s1364-6613(03)00197-9.

- 32. Konen CS, Kastner S. Two hierarchically organized neural systems for object information in human visual cortex. Nat Neurosci. 2008;11(2):224–231. doi: 10.1038/nn2036.

- 33. McKeeff TJ, McGugin RW, Tong F, Gauthier I. Expertise increases the functional overlap between face and object perception. Cognition. 2010;117(3):355–360. doi: 10.1016/j.cognition.2010.09.002.

- 34. McGugin RW, McKeeff TJ, Tong F, Gauthier I. Irrelevant objects of expertise compete with faces during visual search. Atten Percept Psychophys. 2011;73(2):309–317. doi: 10.3758/s13414-010-0006-5.

- 35. Kravitz DJ, Vinson LD, Baker CI. How position dependent is visual object recognition? Trends Cogn Sci. 2008;12(3):114–122. doi: 10.1016/j.tics.2007.12.006.

- 36. Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000;3(2):191–197. doi: 10.1038/72140.

- 37. Tarr MJ, Gauthier I. FFA: A flexible fusiform area for subordinate-level visual processing automatized by expertise. Nat Neurosci. 2000;3(8):764–769. doi: 10.1038/77666.

- 38. Afraz SR, Kiani R, Esteky H. Microstimulation of inferotemporal cortex influences face categorization. Nature. 2006;442(7103):692–695. doi: 10.1038/nature04982.

- 39. Park J, Carp J, Hebrank A, Park DC, Polk TA. Neural specificity predicts fluid processing ability in older adults. J Neurosci. 2010;30(27):9253–9259. doi: 10.1523/JNEUROSCI.0853-10.2010.

- 40. Moores E, Laiti L, Chelazzi L. Associative knowledge controls deployment of visual selective attention. Nat Neurosci. 2003;6(2):182–189. doi: 10.1038/nn996.

- 41. Tanaka K. Columns for complex visual object features in the inferotemporal cortex: Clustering of cells with similar but slightly different stimulus selectivities. Cereb Cortex. 2003;13(1):90–99. doi: 10.1093/cercor/13.1.90.

- 42. Ullman S. Object recognition and segmentation by a fragment-based hierarchy. Trends Cogn Sci. 2007;11(2):58–64. doi: 10.1016/j.tics.2006.11.009.

- 43. Scalf PE, Torralbo A, Tapia E, Beck DM. Competition explains limited attention and perceptual resources: Implications for perceptual load and dilution theories. Front Psychol. 2013;4:243. doi: 10.3389/fpsyg.2013.00243.

- 44. Reynolds JH, Desimone R. The role of neural mechanisms of attention in solving the binding problem. Neuron. 1999;24(1):19–29, 111–125. doi: 10.1016/s0896-6273(00)80819-3.

- 45. Treue S, Hol K, Rauber H-J. Seeing multiple directions of motion-physiology and psychophysics. Nat Neurosci. 2000;3(3):270–276. doi: 10.1038/72985.

- 46. Carandini M, Movshon JA, Ferster D. Pattern adaptation and cross-orientation interactions in the primary visual cortex. Neuropharmacology. 1998;37(4-5):501–511. doi: 10.1016/s0028-3908(98)00069-0.

- 47. MacEvoy SP, Tucker TR, Fitzpatrick D. A precise form of divisive suppression supports population coding in the primary visual cortex. Nat Neurosci. 2009;12(5):637–645. doi: 10.1038/nn.2310.

- 48. Loftus GR, Masson MEJ. Using confidence intervals in within-subject designs. Psychon Bull Rev. 1994;1(4):476–490. doi: 10.3758/BF03210951.
