Abstract

Objective

Spectral modulation detection (SMD) provides a psychoacoustic estimate of spectral resolution. The SMD threshold for an implanted ear is highly correlated with speech understanding and is thus a non-linguistic, psychoacoustic index of speech understanding. This measure, however, is time- and equipment-intensive and thus not practical for clinical use. The purpose of the current study was therefore to investigate the efficacy of a quick SMD task with the following three study aims: (1) to investigate the correlation between the long psychoacoustic and quick SMD tasks, (2) to determine the test/retest variability of the quick SMD task, and (3) to evaluate the relationship between the quick SMD task and speech understanding.

Design

This study included a within-subjects, repeated-measures design.

Study sample

Seventy-six adult cochlear implant recipients participated.

Results

The results were as follows: (1) there was a significant correlation between the long psychoacoustic and quick SMD tasks, (2) the test/retest reliability of the quick SMD task was high and statistically significant, and (3) there was a significant positive correlation between the quick SMD task and monosyllabic word recognition.

Conclusions

The results of this study represent the direct clinical translation of a research-proven task of SMD into a quick, clinically feasible format.

Keywords: Spectral modulation detection, spectral envelope perception, spectral resolution, cochlear implants, speech understanding, word recognition

The concept of evidence-based practice has focused considerable effort on developing and implementing best practices for a given field or subspecialty. Best practices for audiology have been in place and regularly revised with published guidelines for pure-tone audiometry (American Speech-Language-Hearing Association, 2005; British Society of Audiology, 2011), speech audiometry (American Speech-Language-Hearing Association, 1988), hearing aid fittings for adults and children (American Speech-Language-Hearing Association, 1998; Valente et al, 1998; American Academy of Audiology, 2004), and newborn hearing screening (Joint Committee on Infant Hearing, 2007), as well as many other facets of audiology practice. With respect to audiologic management of cochlear implant recipients, the adult minimum speech test battery (MSTB) (Auditory Potential, 2011) provides best-practices guidelines for pre- and post-implant assessment of speech recognition performance for adult cochlear implant recipients.

The most recent iteration of the MSTB outlines the use of consonant nucleus consonant [CNC, (Peterson & Lehiste, 1962)] monosyllabic word recognition, AzBio (Spahr et al, 2012) sentence recognition, and the Bamford-Kowal-Bench speech in noise test [BKB-SIN, (Killion et al, 2001; Etymotic, 2005)]. With the publication of the original MSTB manual in 1996 and the revised version in 2011, the three FDA-approved cochlear implant manufacturers provided implant centers with a copy of the manual and a free audio CD including a sample recording of the CNC words, AzBio sentences, and BKB-SIN. Thus cochlear implant audiologists in the United States and English-speaking Canada have guidelines for pre- and post-implant assessment of outcomes as well as the free tools at their disposal to implement these guidelines.

Though there are free tools available with speech stimuli spoken in a North American English dialect, there are large populations of individuals living in the U.S. and Canada for whom the language spoken in the home is not English. Using a speech metric in a language that is not native to the individual being assessed may not be appropriate or valid (Nabelek & Donohue, 1984; Takata & Nabelek, 1990; Mayo et al, 1997; Cutler et al, 2008).

From a global perspective, language diversity complicates the assessment of speech understanding. For example, in India alone, it is estimated that there are over 400 languages actively in use (Paul et al, 2013). In China, there are over 250 languages actively in use (Paul et al, 2013). Many of the languages in use in Eastern and Southeastern Asia have drastically different dialects precluding speech intelligibility even amongst individuals who are included in the same language family.

With such global diversity in language, the development of validated speech recognition metrics for every language and dialect is an entirely unrealistic prospect. Despite this, cochlear implant recipients in the most populous areas of the world—which also happen to have a greater incidence of hearing loss (Swanepoel, 2010)—are no less deserving of measures for the validation of surgical and programming outcomes. This, however, is not currently standard of care due not only to the lack of valid measures, but also to the shortage of clinical personnel needed to administer such measures. Thus there is need for a validated outcome metric that is quick, non-language based, highly correlated with speech understanding, and easy to administer.

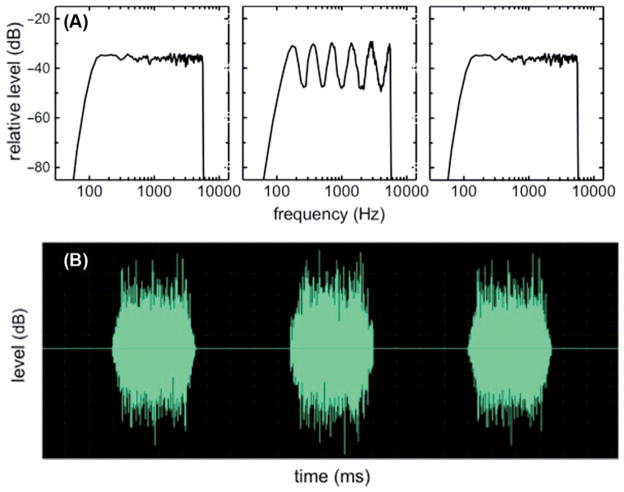

Previous studies have demonstrated a significant correlation between auditory-only speech understanding and spectral modulation detection (Litvak et al, 2007; Saoji et al, 2009; Spahr et al, 2011) as well as spectral modulation discrimination (Henry & Turner, 2003; Henry et al, 2005; Drennan et al, 2011; Won et al, 2011). The spectral modulation detection task requires the listener to discriminate a flat spectrum noise from one with spectral peaks and valleys. Figure 1 displays spectral and temporal representations of stimuli presented in a three-interval SMD task. As shown in Figure 1, A, the first and third intervals contain a flat spectrum noise and the second interval contains a spectrally modulated noise. Figure 1, B displays the temporal waveforms for the SMD stimuli shown in Figure 1, A. Because modulation is applied logarithmically to the spectrum (Figure 1, A), the amplitude envelope remains flat as a function of time (Figure 1, B). Spectral modulation discrimination, also commonly referred to as spectral ripple discrimination, is slightly different in that the spectral modulation is present in all three intervals. In one interval, however, the modulation or ripple is inverted relative to that in the other two intervals (see Won et al, 2007). Thus the task is to discriminate a noise with standard spectral modulation from a noise for which the modulation has been inverted. For the present study, we will focus on spectral modulation detection (SMD).

Figure 1.

Spectral (A) and temporal (B) displays for a 3-interval SMD task. The spectral representation (A) displays relative level, in dB, as a function of frequency, in Hz. The temporal representation (B) displays level, in dB, as a function of time, in ms.

SMD thresholds are generally described as the minimum modulation depth (peak-to-trough contrast), in dB, required to discriminate a spectrally modulated noise from a flat spectrum noise with the same bandwidth and overall level. There is a reliable, inverse relationship between spectral modulation rate and modulation detection thresholds both for listeners with normal hearing (NH) and for those with cochlear implants (CIs) (Saoji & Eddins, 2007; Saoji et al, 2009).
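The definition of modulation depth above can be made concrete with a small synthesis sketch. This is only an illustration under stated assumptions, not the authors' MATLAB implementation: it builds the noise as a sum of random-phase tones spaced evenly in log frequency, applies a sinusoidal level offset (in dB) along the log-frequency axis, and normalises both flat and modulated tokens to the same RMS. The 125–5600 Hz bandwidth, 350-ms duration, and 25-ms ramps are taken from the quick SMD task described later in the text; the 16-kHz sample rate, the number of components, the raised-cosine ramp shape, and all function names are assumptions made for this example.

```python
import math
import random

F_LOW, F_HIGH = 125.0, 5600.0  # carrier bandwidth reported in the text (Hz)

def log_spaced_freqs(n, f_low=F_LOW, f_high=F_HIGH):
    """Return n component frequencies spaced evenly on a log2 axis."""
    step = math.log2(f_high / f_low) / (n - 1)
    return [f_low * 2.0 ** (i * step) for i in range(n)]

def envelope_db(freq_hz, rate_cpo, depth_db, phase=0.0, f_low=F_LOW):
    """Level offset in dB at freq_hz; peak-to-trough contrast equals depth_db."""
    octaves = math.log2(freq_hz / f_low)
    return 0.5 * depth_db * math.sin(2.0 * math.pi * rate_cpo * octaves + phase)

def synthesize(rate_cpo, depth_db, dur_s=0.350, ramp_s=0.025, fs=16000,
               n_components=100, seed=None):
    """Random-phase tone complex shaped by the spectral envelope.

    depth_db=0 yields the flat-spectrum reference. fs, n_components and the
    ramp shape are assumptions of this sketch, not values from the study.
    """
    rng = random.Random(seed)
    freqs = log_spaced_freqs(n_components)
    amps = [10.0 ** (envelope_db(f, rate_cpo, depth_db) / 20.0) for f in freqs]
    phases = [rng.uniform(0.0, 2.0 * math.pi) for _ in freqs]
    n = int(round(dur_s * fs))
    n_ramp = int(round(ramp_s * fs))
    out = []
    for k in range(n):
        t = k / fs
        s = sum(a * math.sin(2.0 * math.pi * f * t + p)
                for a, f, p in zip(amps, freqs, phases))
        # Raised-cosine onset and offset ramps.
        if k < n_ramp:
            s *= 0.5 * (1.0 - math.cos(math.pi * k / n_ramp))
        elif k >= n - n_ramp:
            s *= 0.5 * (1.0 - math.cos(math.pi * (n - 1 - k) / n_ramp))
        out.append(s)
    # Normalise to unit RMS so flat and modulated tokens share one overall level.
    rms = math.sqrt(sum(x * x for x in out) / n)
    return [x / rms for x in out]

flat = synthesize(rate_cpo=0.5, depth_db=0.0, seed=1)
modulated = synthesize(rate_cpo=0.5, depth_db=10.0, seed=2)
```

Calibration to an absolute presentation level (60 dB SPL in the study) depends on the playback chain and is outside the scope of this sketch.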

Several research groups have shown a significant, positive relationship between spectral modulation detection or discrimination with a CI and speech recognition performance for experienced CI users (Henry et al, 2005; Won et al, 2007; Saoji et al, 2009; Drennan et al, 2010; Spahr et al, 2011; Won et al, 2011, 2012; Dorman et al, 2012; Zhang et al, 2013). In fact, these studies have shown that the correlation coefficient between SMD threshold and speech recognition varies from 0.86 to 0.92 for consonants and monosyllables, respectively. Thus it is possible that the SMD task could be useful as a non-language-based outcome measure. Such a measure could be adapted across languages and could also be useful for postoperative assessment of individuals for whom standard clinical measures of speech recognition may not be possible or appropriate.

The relationship between speech recognition and SMD has been reported for relatively low modulation rates (up to 2 cycles/octave; Saoji et al, 2009; Spahr et al, 2011; Dorman et al, 2012; Zhang et al, 2013). In studies of spectral ripple discrimination, the majority of subjects’ thresholds were less than 2 ripples/octave (Won et al, 2007, 2011, 2012). In contrast, Anderson and colleagues (2011) showed a rather tenuous relationship between spectral ripple discrimination and speech recognition for cochlear implant recipients; however, nearly half the subjects’ thresholds were above 2 ripples/octave. In a later study, Anderson et al (2012) showed a much stronger relationship between speech recognition and both SMD and spectral ripple discrimination for lower modulation rates (<2–3 ripples/octave). Thus it may be the case that the relationship between speech recognition and SMD or spectral ripple discrimination is rate dependent.

Despite the highly significant correlation between low-rate SMD and speech understanding, the laboratory-based, psychoacoustic version of the SMD task is costly in terms of both software and time, and can be complicated in setup and interpretation. Further, the 30+ minutes required per ear to obtain a psychoacoustic SMD threshold for a single modulation rate renders it clinically prohibitive, regardless of the global location. We have therefore devoted effort in recent years to adapting the long psychoacoustic SMD task into a clinically viable metric that takes just 5 to 6 minutes per ear. The purposes of this study were to (1) determine whether a quick SMD test could produce results equivalent to those of the longer psychoacoustic version, (2) evaluate the reliability and efficacy of the quick SMD task in a busy clinical environment, and (3) verify the correlation between this quick SMD task and speech understanding. The related working hypotheses were that (1) the long psychoacoustic and quick SMD tasks would yield equivalent outcomes, (2) the quick SMD task would have high test-retest reliability, and (3) the correlation between the quick SMD task and speech recognition would be significant.

Experiment 1: Evaluation of relationship between the long psychoacoustic and quick SMD tasks

Subjects

Twelve adult research participants completed experiment 1. The mean age of the participants was 48.7 years with a range of 22 to 82 years. The twelve participants were all experienced cochlear implant recipients having an average of 6.4 years of implant experience (range 0.6 to 14.2 years). Four of the twelve participants were bilateral implant recipients and thus data were obtained for 16 implanted ears. Across the twelve participants, three were implanted with Advanced Bionics (AB), three with MED-EL, and six with Cochlear devices. All implant recipients were using the most recent generation of implant sound processor available at the time of experimentation, which included the Harmony for AB, Opus2 for MED-EL, and the CP810 for Cochlear.

Equipment and stimuli

Stimuli were generated and presented using MATLAB® installed on a PC with Intel Core i5-3470 quad-core processor (3.2 GHz). Within each trial, all stimuli were scaled to an equivalent overall level of 60 dB SPL (A weighted) presented in the free field from a single loudspeaker placed at a 1-metre distance from the listener. Prior to experimentation, all stimuli were calibrated in the free field using the substitution method with a Larson Davis SoundTrack LxT sound level meter.

Procedures

Quick SMD task

The primary purpose of experiment 1 was to investigate whether the 5- to 6-minute, quick SMD task produced results equivalent to those of the long psychoacoustic version. The quick SMD task included a three-interval, forced choice procedure based on a modified method of constant stimuli (Fechner, 1860, 1966; Gescheider, 1997). Spectral modulation was achieved by applying logarithmically spaced, sinusoidal modulation to the broadband carrier stimulus. The carrier stimulus had a bandwidth of 125–5600 Hz. There were six trials presented for each of five modulation depths (10, 11, 13, 14, and 16 dB) and two modulation frequencies (0.5 and 1.0 cycles/octave) for a total of 60 trials. Each trial was scored as correct or incorrect and spectral resolution was described as the overall percent correct score for the task (chance = 33%). The five modulation depths were chosen on the basis of piloting work over several months with a number of implant recipients for whom sensitivity to spectral modulation depths ranging from 8 to 20 dB was assessed. Stimulus duration was 350 ms with a 25-ms rise/fall time. The interstimulus interval was 300 ms.
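The trial structure and scoring just described can be sketched in a few lines. The depths, rates, repetition count, and three-interval format come from the text. Two details are assumptions of this example rather than stated facts: that the trial order is shuffled, and that the modulated stimulus may fall in any of the three intervals (which is at least consistent with the stated 33% chance level). All names are hypothetical.

```python
import random
from itertools import product

DEPTHS_DB = (10, 11, 13, 14, 16)   # modulation depths from the text
RATES_CPO = (0.5, 1.0)             # modulation rates (cycles/octave)
REPS = 6                           # trials per depth-by-rate combination
N_INTERVALS = 3                    # three-interval forced choice

def build_trials(seed=None):
    """Build the 60-trial list; shuffling and target placement are assumed."""
    rng = random.Random(seed)
    trials = [
        {"rate_cpo": rate, "depth_db": depth, "target": rng.randrange(N_INTERVALS)}
        for rate, depth in product(RATES_CPO, DEPTHS_DB)
        for _ in range(REPS)
    ]
    rng.shuffle(trials)
    return trials

def percent_correct(trials, responses):
    """Overall score: percentage of trials where the response hit the target."""
    hits = sum(t["target"] == r for t, r in zip(trials, responses))
    return 100.0 * hits / len(trials)

def percent_correct_by_rate(trials, responses):
    """Rate-specific scores, for comparison with rate-specific thresholds."""
    scores = {}
    for rate in RATES_CPO:
        pairs = [(t, r) for t, r in zip(trials, responses) if t["rate_cpo"] == rate]
        scores[rate] = 100.0 * sum(t["target"] == r for t, r in pairs) / len(pairs)
    return scores

trials = build_trials(seed=0)
# A listener guessing at random should score near chance (about 33%).
guesses = [random.randrange(N_INTERVALS) for _ in trials]
overall = percent_correct(trials, guesses)
```

The single overall score is what the task is designed to report; the per-rate breakdown is only needed when comparing against the long task, which yields one threshold per modulation rate.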

Long psychoacoustic SMD task

The long psychoacoustic SMD measure was administered in a 3-interval, 2-alternative, cued-choice adaptive procedure, where the modulation frequency (peaks/octave) was held constant at either 0.5 or 1.0 cycles/octave and modulation depth (dB) was varied adaptively (Zhang et al, 2013). The carrier stimulus for the long SMD task also had a bandwidth of 125–5600 Hz. The first interval of each trial served as the cued interval and thus contained a flat-spectrum noise. A second flat-spectrum noise was randomly assigned to the second or third interval. The spectrally modulated stimulus was assigned to the remaining interval. Stimulus duration was 400 ms with an interstimulus interval also equal to 400 ms. Spectral-modulation depth was varied adaptively, using a 3-down, 1-up procedure to track 79.4% correct on the psychometric function (Levitt, 1970). A run included 80 trials and began with a modulation depth in the range of 20 to 30 dB. The initial step size was 2 dB, decreasing to 0.5 dB after the first two reversals. An even number of at least six reversal points, excluding the first two, was averaged to determine SMD threshold for a given run. The reported threshold was the average of two consecutive runs. If the threshold estimates obtained for each of the two runs differed by more than 3 dB, then a third run was completed and averaged.
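The adaptive rule described above can be illustrated with a minimal staircase sketch. A 3-down, 1-up rule converges on the depth at which three consecutive correct responses are as likely as not, that is, where p³ = 0.5 and p ≈ 0.794, which is the 79.4% point cited from Levitt (1970). The step sizes, run length, and reversal rules follow the text. The starting depth of 25 dB (within the stated 20 to 30 dB range), the choice to drop the earliest usable reversal when the count is odd, the 0-dB floor, and the `respond` callback that stands in for a listener are assumptions of this example.

```python
def run_staircase(respond, start_db=25.0, n_trials=80,
                  big_step=2.0, small_step=0.5, min_depth=0.0):
    """3-down, 1-up track of modulation depth; returns the reversal depths.

    respond(depth_db) -> bool is a hypothetical stand-in for one trial's
    outcome (True = correct).
    """
    depth = start_db
    correct_run = 0
    last_dir = 0          # -1 = depth last decreased, +1 = depth last increased
    reversals = []
    for _ in range(n_trials):
        if respond(depth):
            correct_run += 1
            if correct_run < 3:
                continue  # need three in a row before making the task harder
            correct_run = 0
            direction = -1
        else:
            correct_run = 0
            direction = +1
        if last_dir and direction != last_dir:
            reversals.append(depth)
        last_dir = direction
        # 2-dB steps until two reversals have occurred, 0.5 dB afterwards.
        step = big_step if len(reversals) < 2 else small_step
        depth = max(min_depth, depth + direction * step)
    return reversals

def threshold_from_reversals(reversals, n_skip=2, n_min=6):
    """Mean of an even number (at least n_min) of reversals after the first two."""
    usable = reversals[n_skip:]
    if len(usable) % 2:
        usable = usable[1:]   # assumption: drop the earliest to keep the count even
    if len(usable) < n_min:
        return None           # run did not produce enough reversals
    return sum(usable) / len(usable)

def needs_third_run(run1_db, run2_db, max_diff_db=3.0):
    """True when two run thresholds differ by more than 3 dB."""
    return abs(run1_db - run2_db) > max_diff_db

# Idealised listener who is always correct at or above 10 dB (illustration only).
estimate = threshold_from_reversals(run_staircase(lambda d: d >= 10.0))
```

With a real listener the `respond` callback would present the three intervals and record the choice; a probabilistic simulated listener could be substituted to explore how run length affects the spread of threshold estimates.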

Results

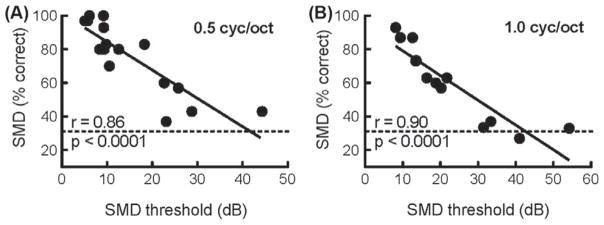

Figure 2 displays individual subject performance for the two SMD tasks. The quick SMD task includes modulation rates 0.5 and 1.0 cycles/octave; however, it was designed to yield a single percent correct score, averaged across the two rates and modulation depths, for ease of reporting and interpretation. For the purposes of this experiment, however, rate-specific performance was extracted from the quick SMD task so as to allow direct comparison to thresholds obtained via the long, psychoacoustic task.

Figure 2.

Individual subject performance on the quick SMD task, in percent correct, as a function of performance on the long SMD task, in dB, for 0.5 and 1.0 cycles/octave. The dotted horizontal line displays chance performance (33.3%) for the quick SMD task.

Performance on the quick and long SMD tasks was defined as percent correct and dB, respectively. As shown in Figure 2, subject performance on the quick SMD task (ordinate) was significantly correlated with performance on the long SMD task (abscissa). The Pearson correlation coefficients were 0.86 and 0.90 for the 0.5 and 1.0 cycle/octave modulation rates, respectively.

Experiment 2: Test/retest reliability of the quick SMD task

Subjects

Thirty-three adult research participants completed experiment 2. The mean age of the participants was 59.9 years with a range of 18.8 to 82.0 years. The thirty-three participants were all experienced cochlear implant recipients having an average of 3.6 years of implant experience (range 0.7 to 12.9 years). Four of the thirty-three participants were bilateral implant recipients and thus data were obtained for 37 implanted ears. Of the thirty-three participants, nine were implanted with Advanced Bionics (AB), eight with MED-EL, and sixteen with Cochlear devices. All implant recipients were using the most recent generation of implant sound processor available at the time of experimentation, which included the Harmony for AB, Opus2 for MED-EL, and the CP810 for Cochlear.

Equipment and stimuli

Stimuli were generated and presented via MATLAB®. During experimentation, stimuli were routed from a PC situated in the control room of a VUMC clinic sound booth to a GSI 61 audiometer using a 3.5 mm stereo to split RCA cable. The audiometer dial setting was then adjusted to yield a calibrated presentation level of 60 dB SPL (A weighted) in the free field. Stimuli were presented via a single loudspeaker placed at a 1-metre distance from the listener. As in Experiment 1, prior to experimentation, stimuli were calibrated in the free field using the substitution method with a Larson Davis SoundTrack LxT sound level meter.

Experimental design

Quick SMD task

The primary purpose of experiment 2 was to investigate the test/retest reliability of the quick SMD task. Test-retest reliability of the quick SMD test was evaluated by comparing subject performance across two different test sessions. The two test sessions occurred on different days ranging from two consecutive days to a six-week interval between sessions.

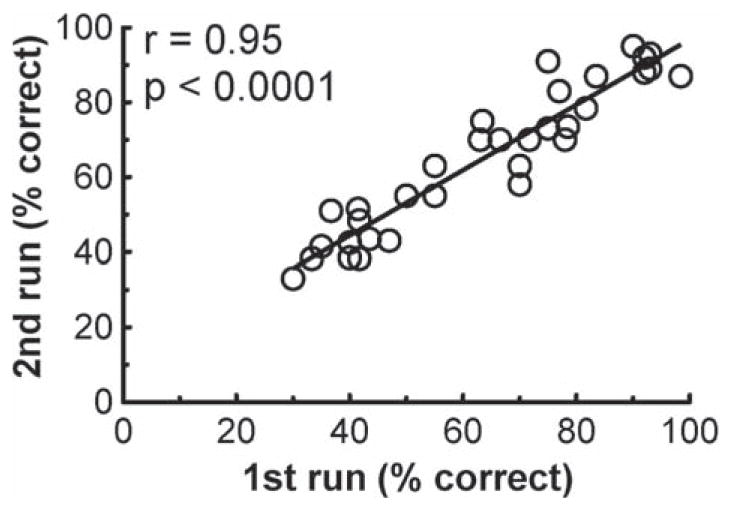

Results

Figure 3 displays individual subject performance on the quick SMD task for each of the two test sessions. Performance, in percent correct, as shown in Figure 3 was averaged across the two modulation rates (0.5 and 1.0 cycle/octave) and five modulation depths, as originally intended in the test design. The Pearson correlation coefficient was highly significant (r = 0.95, p < 0.0001).

Figure 3.

Individual subject performance on the quick SMD task, in percent correct, across two runs obtained on separate days.

To examine the test/retest reliability of the quick SMD task, reliability statistics were calculated using SPSS for scores obtained across the two test sessions. Considering the reliability of the overall measure averaged across modulation rate, Cronbach’s alpha was 0.974, consistent with excellent internal consistency (George & Mallery, 2003), or test/retest reliability.
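For readers without SPSS, the two reliability statistics reported here can be sketched directly. This is an illustration under stated assumptions: it uses the standard formulas for the Pearson coefficient and for Cronbach's alpha, treating each test session as one "item" (k = 2), which is how a two-session reliability analysis is usually set up. The function names and the example scores are hypothetical placeholders, not study data.

```python
import math

def _mean(xs):
    return sum(xs) / len(xs)

def _sample_var(xs):
    """Unbiased sample variance (n - 1 in the denominator)."""
    m = _mean(xs)
    return sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length lists."""
    mx, my = _mean(x), _mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def cronbach_alpha(sessions):
    """Cronbach's alpha for k score lists (one list per session, same ears)."""
    k = len(sessions)
    totals = [sum(scores) for scores in zip(*sessions)]
    item_var = sum(_sample_var(s) for s in sessions)
    return (k / (k - 1)) * (1.0 - item_var / _sample_var(totals))

# Placeholder percent-correct scores for five ears (NOT data from the study).
session_1 = [42.0, 55.0, 63.0, 78.0, 90.0]
session_2 = [45.0, 52.0, 67.0, 75.0, 92.0]
r = pearson_r(session_1, session_2)
alpha = cronbach_alpha([session_1, session_2])
```

Pearson r describes how well session-2 scores track session-1 scores, while alpha summarises how much of the variance in the summed score is shared across sessions; the two tend to move together when only two sessions are compared.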

Experiment 3: Evaluation of the relationship between the quick SMD task and monosyllabic word recognition

Subjects

Seventy-six adult research participants completed experiment 3. The mean age of the participants was 57.8 years with a range of 18.8 to 84.0 years. Twenty-seven of the thirty-three participants from experiment 2 also participated in this experiment. For each of these subjects, the most recent score (i.e. second run) for the quick SMD score was used for the purposes of this experiment. All participants were experienced cochlear implant recipients having an average of 4.4 years of implant experience (range 0.5 to 14.3 years). Fifteen of the seventy-six participants were bilateral implant recipients and thus data were obtained for 91 implanted ears. Of the seventy-six participants, nineteen were implanted with Advanced Bionics (AB), seventeen with MED-EL, and forty with Cochlear devices. All implant recipients were wearing the most recent generation of implant sound processor available at the time of experimentation, which included the Harmony for AB, Opus2 for MED-EL, and the CP810 for Cochlear.

Equipment and stimuli

SMD stimuli were generated and presented using MATLAB®. Stimuli were routed from an HP computer situated in the control room of a VUMC clinic sound booth to a GSI 61 audiometer using a 3.5 mm stereo to split RCA cable. The audiometer dial setting was then adjusted to yield a calibrated presentation level of 60 dB SPL (A weighted) in the free field.

Monosyllabic word recognition was assessed using the CNC (Peterson & Lehiste, 1962) stimuli. A single list of 50 words was presented at 60 dBA in the free field. All stimuli originated from a single loudspeaker placed at a 1-metre distance from the listener.

As in Experiments 1 and 2, prior to experimentation, stimuli were calibrated in the free field using the substitution method with a Larson Davis SoundTrack LxT sound level meter.

Procedures

The primary purpose of experiment 3 was to investigate the relationship between performance on the quick SMD task and monosyllabic word recognition to determine whether the quick SMD task returned a high correlation with word recognition as observed with the long psychoacoustic SMD task. Performance on the quick SMD task as well as CNC word recognition was obtained for all participants within a single test session in the Cochlear Implant Clinic of the Vanderbilt Bill Wilkerson Center at Vanderbilt University Medical Center.

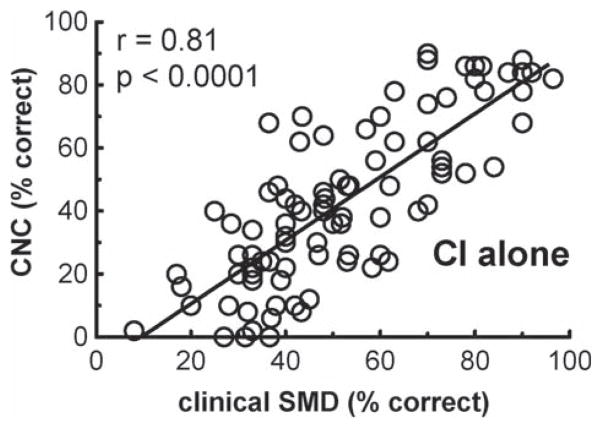

Results

Figure 4 displays CNC word recognition, in percent correct, as a function of performance on the quick SMD task for 91 ears. The Pearson correlation coefficient was highly significant (r = 0.81, p < 0.0001) and equivalent to or better than that which has been reported in the literature for the long SMD and speech understanding as well as spectral modulation discrimination and speech understanding (Litvak et al, 2007; Won et al, 2007; Saoji et al, 2009; Spahr et al, 2011).

Figure 4.

Individual subject performance for CNC word recognition (percent correct) as a function of performance on the quick SMD task (percent correct) for 91 ears.

For ease of reporting and interpretation, the quick SMD task was designed to yield a single score (in percent correct), averaged across the modulation rates and depths. Though we have displayed CNC word recognition as a function of performance on the quick SMD task as averaged across the two modulation rates (Figure 4), we also completed correlation analyses independently for each of the modulation rates (0.5 and 1.0 cycle/octave). The correlations were 0.76 and 0.73 for modulation rates 0.5 and 1.0 cycle/octave, respectively.

Discussion

These results demonstrate a successful adaptation of a long psychoacoustic task of SMD making this measure both clinically feasible and relevant. The results presented here are consistent with the three hypotheses stated in the introduction as follows:

(1) The long psychoacoustic and quick SMD tasks yield equivalent outcomes.

(2) The 5- to 6-minute quick SMD task has high and statistically significant test-retest reliability.

(3) The correlation between the quick SMD task and speech recognition was highly significant and equivalent to or higher than correlations previously reported in the literature.

These results demonstrate that it is possible to use the quick SMD task as a non-language-based outcome measure. Given that experiments 2 and 3 of the current study were carried out in a cochlear implant clinic located within a large academic medical center, this measure could easily be adopted for standard clinical use as an outcome measure with adult cochlear implant recipients. It is also possible that this measure could be adapted across languages and thus serve as a quick measure of postoperative outcomes. Additional research is necessary, however, to carefully define the relationship between SMD and various metrics of language-specific speech understanding (e.g. words, sentences, sentences in noise). This is needed so that clinicians across the world could use this one clinical measure of SMD to characterize patient performance.

Conclusion

The results of this work are consistent with previous results showing a significant correlation between SMD at low modulation rates (0.5 and 1.0 cycle/octave) and speech understanding for adult cochlear implant recipients. The current work describes the development of a clinically feasible, quick SMD task with high test/retest reliability and significant correlation with CNC word recognition. This quick SMD task is simple to use (run from an audio CD or computer program and presented through the audiometer), time efficient (5 to 6 minutes per ear), accurate (correlated with the research task), convenient to score (computer scored or pencil/paper), and easy to interpret (results as ‘percent correct’).

Acknowledgments

Portions of this data set were presented at the 2012 conference of the American Auditory Society in Scottsdale, Arizona, USA and the 2013 Conference on Implantable Auditory Prostheses in Tahoe City, California, USA. This research was supported by NIDCD R01 DC013117.

Abbreviations

- CNC: Consonant nucleus consonant

- SMD: Spectral modulation detection

Footnotes

Declaration of interest: The authors alone are responsible for the content and writing of this paper. The authors report no declarations of interest.

References

- American Speech-Language-Hearing Association. Determining threshold level for speech [Guidelines]. 1988.

- American Speech-Language-Hearing Association. Guidelines for hearing aid fitting for adults [Guidelines]. 1998.

- American Speech-Language-Hearing Association. Guidelines for manual pure-tone threshold audiometry [Guidelines]. 2005.

- Anderson ES, Nelson DA, Kreft H, Nelson PB, Oxenham AJ. Comparing spatial tuning curves, spectral ripple resolution, and speech perception in cochlear implant users. J Acoust Soc Am. 2011;130(1):364–75. doi: 10.1121/1.3589255.

- Anderson ES, Oxenham AJ, Nelson PB, Nelson DA. Assessing the role of spectral and intensity cues in spectral ripple detection and discrimination in cochlear implant users. J Acoust Soc Am. 2012;132(6):3925–34. doi: 10.1121/1.4763999.

- American Academy of Audiology. Pediatric amplification guidelines. Audiology Today. 2004;16:46–53.

- Auditory Potential. The new minimum speech test battery. 2011. http://auditorypotential.com/MSTB.html.

- British Society of Audiology. Recommended procedure: Pure-tone air-conduction and bone-conduction threshold audiometry with and without masking. 2011.

- Cutler A, Garcia Lecumberri ML, Cooke M. Consonant identification in noise by native and non-native listeners: Effects of local context. J Acoust Soc Am. 2008;124:1264–1268. doi: 10.1121/1.2946707.

- Drennan WR, Won JH, Jameyson E, Rubinstein JT. Stability of Clinically Meaningful, Non-Linguistic Measures of Hearing Performance with a Cochlear Implant. Conference on Implantable Auditory Prostheses; Pacific Grove, USA. 2011.

- Etymotic. Bamford-Kowal-Bench speech-in-noise test (version 1.03) [audio CD]. Elk Grove Village, USA: 2005.

- Fechner GT. Elemente der Psychophysik. Breitkopf & Härtel, Leipzig (reprinted in 1964 by Bonset, Amsterdam); English translation by H.E. Adler (1966) New York: Holt, Rinehart & Winston; 1860, 1966.

- George D, Mallery P. SPSS for Windows Step by Step: A Simple Guide and Reference. 11.0 update. 4th ed. Boston: Allyn & Bacon; 2003.

- Gescheider G. Psychophysics: The Fundamentals. 3rd ed. New York: Lawrence Erlbaum Associates; 1997. Chapter 3: The Classical Psychophysical Methods.

- Henry BA, Turner CW. The resolution of complex spectral patterns by cochlear implant and normal-hearing listeners. J Acoust Soc Am. 2003;113:2861–2873. doi: 10.1121/1.1561900.

- Henry BA, Turner CW, Behrens A. Spectral peak resolution and speech recognition in quiet: Normal hearing, hearing impaired, and cochlear implant listeners. J Acoust Soc Am. 2005;118:1111–1121. doi: 10.1121/1.1944567.

- Joint Committee on Infant Hearing. Year 2007 position statement: Principles and guidelines for early hearing detection and intervention. 2007.

- Killion MC, Niquette PA, Revit LJ, Skinner MW. Quick SIN and BKB SIN, two new speech in noise tests permitting SNR 50 estimates in 1 to 2 minutes. J Acoust Soc Am. 2001;109:2502–2502.

- Levitt H. Transformed up-down methods in psychoacoustics. J Acoust Soc Am. 1970;49:467–477.

- Litvak LM, Spahr AJ, Saoji A, Fridman GY. Relationship between perception of spectral ripple and speech recognition in cochlear implant and vocoder listeners. J Acoust Soc Am. 2007;122:982–991. doi: 10.1121/1.2749413.

- Mayo LH, Florentine M, Buus S. Age of second-language acquisition and perception of speech in noise. J Speech Lang Hear Res. 1997;40:686–693. doi: 10.1044/jslhr.4003.686.

- Nabelek AK, Donohue AM. Perception of consonants in reverberation by native and non-native listeners. J Acoust Soc Am. 1984;75:632–634. doi: 10.1121/1.390495.

- Paul LM, Simons GF, Fennig CD. Ethnologue: Languages of the World. 17th ed. Dallas, USA: SIL International; 2013.

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Saoji AA, Litvak LM, Spahr AJ. Spectral modulation detection and vowel and consonant identifications in cochlear implant listeners. J Acoust Soc Am. 2009;126:955–958. doi: 10.1121/1.3179670.

- Spahr AJ, Dorman MF, Litvak LM, Van Wie S, Gifford RH, et al. Development and validation of the AzBio sentence lists. Ear Hear. 2012;33:112–117. doi: 10.1097/AUD.0b013e31822c2549.

- Spahr AJ, Saoji A, Litvak LM, Dorman MF. Spectral cues for understanding speech in quiet and in noise. Cochlear Implants International. 2011;12:S66–S69. doi: 10.1179/146701011X13001035753056.

- Swanepoel D. The global epidemic of infant hearing loss: priorities for prevention. In: Seewald R, Bamford JM, editors. A Sound Foundation Through Early Amplification: Proceedings of the Fifth International Conference; 2010. pp. 19–27.

- Takata Y, Nabelek AK. English consonant recognition in noise and in reverberation by Japanese and American listeners. J Acoust Soc Am. 1990;88:663–666. doi: 10.1121/1.399769.

- Valente M, Bentler R, Seewald R, Trine T, Van Vliet D. Guidelines for hearing aid fitting for adults. Am J Audiol. 1998;7:5–13.

- Won JH, Clinard CG, Kwon S, Dasika VK, Nie K, et al. Relationship between behavioral and physiological spectral-ripple discrimination. Journal of the Association for Research in Otolaryngology. 2011;12:375–393. doi: 10.1007/s10162-011-0257-4.

- Won JH, Drennan WR, Rubinstein JT. Spectral-ripple resolution correlates with speech reception in noise in cochlear implant users. Journal of the Association for Research in Otolaryngology. 2007;8:384–392. doi: 10.1007/s10162-007-0085-8.

- Won JH, Nie K, Drennan WR, Rubinstein JT. Maximizing the spectral and temporal benefits of two clinically used sound processing strategies for cochlear implants. Trends Amplification. 2012;16:201–210. doi: 10.1177/1084713812467855.

- Zhang T, Spahr AJ, Dorman MF, Saoji A. Relationship between auditory function of nonimplanted ears and bimodal benefit. Ear Hear. 2013;34:133–141. doi: 10.1097/AUD.0b013e31826709af.