Abstract

Background and Objectives

A hardcopy (paper) cognitive aid has been shown to improve performance during the management of simulated local anesthetic systemic toxicity (LAST) when given to the team leader. However, there is still room for improvement if teams are to achieve perfect adherence to the published guidelines for LAST management. Recent research has shown that implementing a checklist via a designated reader may be beneficial. Accordingly, we investigated the effect of an electronic decision support tool (DST) and a designated ‘Reader’ role on team performance during an in-situ simulation of LAST.

Methods

Thirty-one anesthesiology residents were randomized to Reader+DST (N = 16, rDST) or Control (N = 15, memory alone). The rDST group was assisted by a dedicated Reader on the response team who was equipped with an electronic DST. The primary outcome measure was adherence to guidelines.

Results

For overall and critical percent correct scores, the rDST group scored higher than Control (99.3% vs 72.2%, P < 0.0001; 99.5% vs 70%, P < 0.0001, respectively). In the LAST scenario, 0 of 15 (0%) in the control group performed 100% of critical management steps, while 15 of 16 (93.8%) in the rDST group did so (P < 0.0001).

Conclusions

In a prospective, randomized, single-blinded study, a designated Reader equipped with an electronic DST improved adherence to guidelines in the management of an in-situ simulation of LAST. Such tools show promise for future clinical practice, but further research is needed to determine the best methods for implementing them in the clinical arena.

INTRODUCTION

It has recently been reported that local anesthetic systemic toxicity (LAST) is a rare complication of neuraxial and regional anesthesia blocks and may be decreasing in frequency.1 This conclusion follows from several studies of large databases concerning the risk of LAST.2–5 However, the risk has not been reduced to zero. Thus, when LAST does occur, prompt recognition and treatment according to published guidelines are essential to ensure the best possible patient outcome.6–11 Perioperative teams are expected to be able to manage such emergencies, but prior studies have shown that performance during simulated perioperative emergencies is often suboptimal when patients are managed from memory alone.12,13 Furthermore, because clinicians have limited exposure to these rare situations, appropriate care is less likely to be administered, and the patient is more likely to experience an adverse outcome, than during routine care.14

In light of this concern, a recent study demonstrated that use of a paper cognitive aid containing the American Society of Regional Anesthesia and Pain Medicine (ASRA) management algorithm for LAST improved adherence to guidelines.15 Although these results were promising, considerable room for improvement remains: if the goal is perfect adherence to guidelines during these high-risk iatrogenic perioperative events, the mere presence of a cognitive aid is not sufficient to ensure it.16,17 Building on this understanding, adding a designated ‘Reader’ of the paper cognitive aid as a team member during simulations of an obstetric crisis and malignant hyperthermia was found to improve adherence to guidelines.18 As noted in a recent study of simulated perioperative emergencies, the focus on rare, high-stakes events limits the feasibility of clinical trials.19 We therefore conducted a prospective, single-blinded, randomized controlled trial using in-situ simulation to test the hypothesis that a designated Reader equipped with an electronic decision support tool (DST) improves adherence to published guidelines in the management of LAST, as compared with management from memory alone.

METHODS

Study Participants and Design

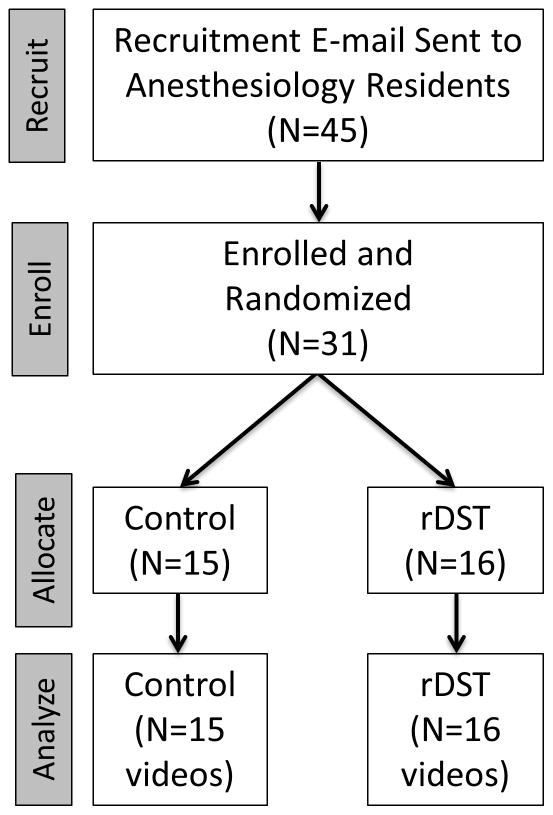

After obtaining Medical University of South Carolina Institutional Review Board (IRB II) approval and written informed consent, we enrolled 31 anesthesiology residents (CA-1 to CA-3) over a 4-day period in this prospective, randomized, single-blinded controlled trial (Figure 1). Participants were blinded to the purpose of the study and randomized into 2 groups using a random number generator: Reader+DST (rDST) and Control (memory alone). The rDST group was assisted by a dedicated Reader equipped with a handheld, iOS-based electronic DST (Apple Inc, Cupertino, California). Each resident, acting as team Leader, managed a high-fidelity in-situ simulation of a LAST event in the Post-Anesthesia Care Unit (PACU) adjacent to the main operating room of our university.

Figure 1.

This CONSORT diagram illustrates the flow of this study through recruitment, enrollment, allocation, study performance, and data analysis. [rDST = Reader+Decision Support Tool group]
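The text states only that a random number generator was used and that groups of 16 and 15 resulted; the allocation scheme itself is not described. The Python sketch below assumes simple (coin-flip) randomization, which is one scheme that can yield unequal group sizes. The function name `allocate`, the participant IDs, and the seed are hypothetical illustrations, not the study's procedure.

```python
import random

def allocate(participant_ids, seed=None):
    """Simple randomization: each participant is assigned by an independent
    coin flip, so the two groups need not be equal in size."""
    rng = random.Random(seed)  # seeded generator gives a reproducible list
    return {pid: ("rDST" if rng.random() < 0.5 else "Control")
            for pid in participant_ids}

# Hypothetical IDs for 31 residents; the seed value is arbitrary.
ids = [f"R{n:02d}" for n in range(1, 32)]
groups = allocate(ids, seed=2013)
n_rdst = sum(g == "rDST" for g in groups.values())
print(f"rDST: {n_rdst}, Control: {len(ids) - n_rdst}")
```

Fixing the seed makes the allocation list reproducible for audit, while the participants and graders remain unaware of it.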

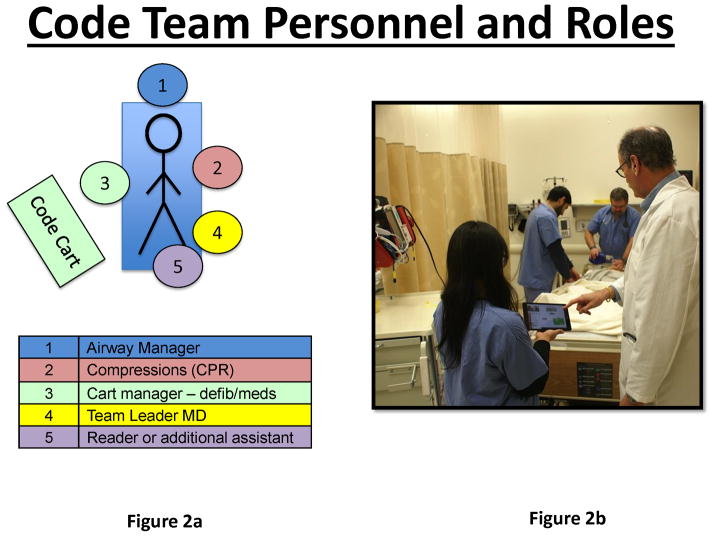

The crisis scenario was constructed and programmed by a team of anesthesiologists with a minimum of 3 years’ experience in simulation education and research. In the simulation sessions, an experienced PACU nurse was trained to serve as part of the standardized team, and she gave each participant a brief, scripted description of the clinical situation. Every participant received the same standardized information at the beginning of the scenario, and scripted responses were prepared for questions that participants might ask about the present condition and care of the simulated patient. After the participant called for help, a standardized actor (SA) arrived and, after 1 minute, prompted the participant for a diagnosis. For the Control group, the SA simply asked, “Doctor, what do you think is going on with this patient?” For the rDST group, the SA also acted as the Reader and asked the same question about the patient’s diagnosis. Then, based on the participant’s answer, the Reader found the corresponding protocol in the DST and offered to read the protocol steps aloud during the course of the scenario. If the Leader (the participant) did not want the treatment protocol read at that time, the Reader notified the Leader that the protocol was available on the DST screen for reference or could be read whenever the Leader was ready. During the scenario, if the Leader did not use the DST for more than 1 minute, the Reader again offered to read the prescribed protocol considerations from the DST (see Supplemental Digital Content 1 for screenshots of the DST). Additionally, if the Leader suggested an action that was not prescribed or was warned against by the DST (eg, giving vasopressin during LAST with cardiac arrest), the Reader notified the Leader of the discrepancy.
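The Reader’s scripted behavior reduces to two simple rules: re-offer the protocol after more than 1 minute without DST use, and flag any proposed action that the protocol warns against. A minimal Python sketch of those rules follows; it is not the study DST’s code, and the names `ReaderState`, `reader_prompt`, and `WARNED_AGAINST` are hypothetical. The warned-against list is assumed from the vasopressin example above and the error items in Table 2.

```python
from dataclasses import dataclass
from typing import Optional

# Assumed warned-against actions, taken from the vasopressin example in the
# text and the error items in Table 2 (hypothetical list, not the DST's).
WARNED_AGAINST = {"vasopressin", "calcium channel blocker", "beta-blocker",
                  "lidocaine", "propofol"}
REOFFER_AFTER_S = 60  # re-offer to read if the DST is unused for > 1 minute

@dataclass
class ReaderState:
    last_dst_use_s: float = 0.0  # scenario time (s) of last DST use or offer

def reader_prompt(state: ReaderState, now_s: float,
                  proposed_action: Optional[str] = None) -> Optional[str]:
    """Return what the Reader would say at time now_s, or None."""
    # Rule 1: flag a proposed action that the protocol warns against.
    if proposed_action and proposed_action.lower() in WARNED_AGAINST:
        return f"Discrepancy: the protocol warns against {proposed_action}."
    # Rule 2: re-offer to read the protocol after > 1 minute without DST use.
    if now_s - state.last_dst_use_s > REOFFER_AFTER_S:
        state.last_dst_use_s = now_s  # assumed: restart the timer after offering
        return "Would you like me to read the protocol steps?"
    return None

state = ReaderState(last_dst_use_s=0.0)
print(reader_prompt(state, 30))                 # None
print(reader_prompt(state, 61))                 # re-offer to read
print(reader_prompt(state, 70, "Vasopressin"))  # discrepancy warning
```

Restarting the idle timer after each offer keeps the Reader from repeating the prompt continuously; the study script does not specify how often repeat offers were made, so this is an assumed design choice.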
Figure 2 shows the physical location of the Reader in relation to the Leader and the rest of the team; the Reader kept control of the iPad while ensuring that the Leader could easily view the screen, if desired. For both groups, the same number of team members was present in the room and available to assist with any tasks requested by the participant. In addition to the nurse and the SA/Reader described above, 2 other SAs were available to assist the participant by drawing labs, performing requested tasks (eg, obtaining an electrocardiogram or performing CPR), and administering any ordered medications.

Figure 2.

These two images show the physical layout of the team from above (2a) and from the foot of the patient bed (2b). The image on the right (2b) shows how the Reader (holding the DST) could operate the DST and also present it to the Leader (white coat) if needed. The Leader is free to address the team or directly assess the patient while the Reader operates the user interface of the DST. [DST = decision support tool]

All sessions were performed in-situ in the PACU at our institution using the SimMan mannequin (SimMan 3G; Laerdal Inc., Stavanger, Norway). Standard institutional bedside and patient monitors, a code cart, and a lipid emulsion kit were used. All sessions were video recorded using a multi-camera system and software from B-line Medical (SimCapture; B-line Medical, Washington, DC). The scenario was set to end after approximately 15 minutes. Although prolonged resuscitations from LAST (ie, > 1 hour) with good patient outcomes have been reported, we chose 15 minutes as a point by which the diagnosis could be made and the major initial and subsequent treatment steps undertaken according to the ASRA guidelines.7 Approximately 30 seconds before the end of the scenario, the SA or the Reader (if present) asked the resident whether there were any additional directions for the team. This scripted prompt allowed the resident to state any additional orders (eg, treatments, labs, or tests). Residents received credit for any orders stated during this time, even if they could not be physically simulated before the end of the 15 minutes.

The participants did not know that they would manage a simulated LAST scenario; they knew only that they had enrolled in a study investigating simulated perioperative crisis management. On the day of the study, participants were relieved from clinical duties without prior notice and told to report to the PACU to evaluate a patient. The clinical stem presented to the team leader at the beginning of the session, the standardized information communicated by the actors, and the real-time vital signs display were intended to make the diagnosis straightforward, because the goal of this study was to test the effect of the Reader and DST on adherence to guidelines, not whether the DST aided real-time diagnosis in a crisis. The DST contained management protocols for more than 30 perioperative emergencies. This ensured that participants had to select the appropriate protocol on the basis of the clinical cues, rather than by default because only one protocol was available.

Checklist Creation and Grading

The session videos were graded with checklists that were based on best-practice guidelines and created through a modified Delphi technique, using a previously published methodology.20 Each checklist item was graded in a binary fashion (performed/not performed) to assess protocol adherence. Participant scores were reported as percent correct actions (number of correct steps performed ÷ total number of correct steps in the checklist × 100). Because this score counts correct actions, it also captures errors of omission. We also examined the percentage of steps performed that the research team deemed critical to patient care, as well as the percentage of residents in each group who completed all critical steps. Finally, we examined the number of errors committed by participants, such as giving the wrong dose of epinephrine (eg, > 1 mcg/kg) or administering medications that are to be avoided in the setting of LAST (eg, vasopressin, beta-blockers). The checklist items, with the percentage of participants in each group who performed them, are shown in Table 2.
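The percent-correct score and the all-critical-steps outcome described above reduce to counting over binary checklist grades. The sketch below assumes each participant’s performance is stored as a set of completed items; the item names are abbreviated from Table 2, and the `CRITICAL` subset is hypothetical because the text does not list which items were designated critical.

```python
# Item names abbreviated from Table 2 (a subset, for illustration).
CHECKLIST = [
    "called for help", "called for lipid kit", "stopped local anesthetic",
    "treated seizure", "lipid bolus", "lipid infusion", "re-dosed lipid",
    "doubled infusion rate",
]
# Hypothetical critical subset; the paper does not enumerate it.
CRITICAL = {"stopped local anesthetic", "lipid bolus", "lipid infusion"}

def percent_correct(performed, items):
    """Steps performed / total steps in the checklist, as a percentage."""
    items = list(items)
    done = sum(1 for step in items if step in performed)
    return 100.0 * done / len(items)

def all_critical_done(performed, critical=CRITICAL):
    """True only if every critical step was performed."""
    return critical <= set(performed)

# Hypothetical participant record (set of items graded as performed).
participant = {"called for help", "called for lipid kit",
               "stopped local anesthetic", "lipid bolus", "lipid infusion",
               "re-dosed lipid"}
print(percent_correct(participant, CHECKLIST))  # 6 of 8 -> 75.0
print(percent_correct(participant, CRITICAL))   # 3 of 3 -> 100.0
print(all_critical_done(participant))           # True
```

Because omitted steps simply fail to add to the numerator, errors of omission lower the score without any separate penalty term.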

Table 2.

Performance Data as Percent Correct (%) Between Groups

| Checklist item | Control (N = 15) | rDST (N = 16) | P* |
|---|---|---|---|
| **Initial Assessment** | | | |
| Announced unstable patient and called for help | 93.3% | 93.8% | ns |
| Called for LAST kit with 20% lipid emulsion | 100.0% | 100.0% | ns |
| Assessed airway and breathing | 100.0% | 100.0% | ns |
| Assessed circulation (minimum pulse check) | 100.0% | 100.0% | ns |
| Asked patient about symptoms/assessed instability | 100.0% | 100.0% | ns |
| Stated diagnosis as LAST or concern for LAST | 93.3% | 100.0% | ns |
| **Initial Management** | | | |
| Stopped local anesthetic or confirmed cessation of infusion | 46.7% | 100.0% | P < 0.01 |
| Provided supplemental oxygen while pulse present (face mask or BVM) | 100.0% | 100.0% | ns |
| Confirmed/established adequate IV access | 100.0% | 100.0% | ns |
| Evaluated/treated seizure appropriately | 26.7% | 100.0% | P < 0.01 |
| Bolused adequate 20% lipid emulsion dose (1.5 mL/kg) | 80.0% | 100.0% | ns |
| Started 20% lipid emulsion infusion (0.25 mL/kg/min) | 40.0% | 100.0% | P < 0.01 |
| Supported breathing (BVM or intubation, 100% O2) within 30 s of apnea | 100.0% | 100.0% | ns |
| **Subsequent Management** | | | |
| Re-dosed 20% lipid emulsion after continued hemodynamic instability | 46.7% | 100.0% | P < 0.01 |
| Doubled rate of 20% lipid emulsion infusion | 6.7% | 100.0% | P < 0.01 |
| Sent arterial blood gas | 73.3% | 100.0% | P = 0.04 |
| Mentioned alerting CPB team | 20.0% | 93.3% | P < 0.01 |
| **Errors** | | | |
| Gave wrong dose of epinephrine (≥1 mcg/kg) | 20.0% | 12.5% | ns |
| Gave calcium channel blocker, beta-blocker, or vasopressin | 0.0% | 0.0% | ns |
| Gave local anesthetic (eg, lidocaine) | 0.0% | 0.0% | ns |
| Gave propofol | 0.0% | 0.0% | ns |

*P by Fisher exact test for each item.

rDST = Reader+Decision Support Tool; LAST = local anesthetic systemic toxicity; BVM = bag-valve-mask; CPB = cardiopulmonary bypass; IV = intravenous; ns = not significant
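Several items in Table 2 are weight-based calculations, and the Reader’s corrections most often concerned lipid emulsion dosing. The arithmetic is illustrated below using only the values printed in Table 2; the function names and the 70-kg example are hypothetical, and the sketch omits other guideline details (such as cumulative dose limits), so it is illustrative arithmetic only.

```python
# Values as printed in Table 2 (20% lipid emulsion).
BOLUS_ML_PER_KG = 1.5
INFUSION_ML_PER_KG_MIN = 0.25
EPI_ERROR_MCG_PER_KG = 1.0  # Table 2 grades epinephrine >= 1 mcg/kg as an error

def lipid_volumes(weight_kg: float, doubled: bool = False) -> dict:
    """Bolus volume and infusion rate for a given weight; doubled=True
    applies the 'doubled rate' step from Table 2."""
    rate = INFUSION_ML_PER_KG_MIN * (2 if doubled else 1)
    return {
        "bolus_mL": BOLUS_ML_PER_KG * weight_kg,
        "infusion_mL_per_min": rate * weight_kg,
        "infusion_mL_per_h": rate * weight_kg * 60,
    }

def epinephrine_dose_flagged(dose_mcg: float, weight_kg: float) -> bool:
    """True if a single epinephrine dose meets the Table 2 error threshold."""
    return dose_mcg / weight_kg >= EPI_ERROR_MCG_PER_KG

# Hypothetical 70-kg patient.
print(lipid_volumes(70))
# {'bolus_mL': 105.0, 'infusion_mL_per_min': 17.5, 'infusion_mL_per_h': 1050.0}
print(lipid_volumes(70, doubled=True)["infusion_mL_per_min"])  # 35.0
print(epinephrine_dose_flagged(1000, 70))  # 1 mg is about 14 mcg/kg -> True
print(epinephrine_dose_flagged(50, 70))    # about 0.7 mcg/kg -> False
```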

Statistical Analysis

Inter-rater agreement on checklist items was assessed among 3 raters on 3 participant videos using Cohen’s kappa and overall percent agreement for each pair of raters. Descriptive statistics were used to characterize participants’ scores, stratified by group assignment (ie, rDST vs Control). Wilcoxon rank sum tests were used to compare the 2 groups with respect to overall scores, scores on critical items, scores on objective items, and scores on subjective items. Because the scores were proportions, arcsine square root transformations were applied to them, and the transformed values were used to construct confidence intervals.21 Because participants’ summary scores were composed of scores on individual items, we also used Fisher exact tests to assess whether participants in the rDST group outperformed the Control group on individual items. All statistical tests were conducted using SAS v9.3 (SAS Institute Inc, Cary, North Carolina), and P < 0.05 was considered significant.
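The arcsine square root transformation stabilizes the variance of proportions, so a confidence interval can be formed on the transformed scale and back-transformed with sin². The exact procedure run in SAS is not given; the Python sketch below assumes a normal-approximation interval (z = 1.96) for the mean of per-participant transformed scores, and the example scores are hypothetical.

```python
import math
from statistics import mean, stdev

def arcsine_ci(scores, z=1.96):
    """Approximate CI for a group's mean score, where each score is a
    proportion in [0, 1]. Assumes a normal-approximation interval on the
    arcsine-square-root scale, back-transformed with sin^2."""
    t = [math.asin(math.sqrt(p)) for p in scores]  # variance-stabilizing step
    m = mean(t)
    half = z * stdev(t) / math.sqrt(len(t))        # needs >= 2 scores
    lo = max(0.0, m - half)                        # keep within [0, pi/2]
    hi = min(math.pi / 2, m + half)
    return math.sin(lo) ** 2, math.sin(hi) ** 2

# Hypothetical per-participant scores (fraction of checklist items performed).
example = [0.65, 0.70, 0.72, 0.75, 0.80]
low, high = arcsine_ci(example)
print(f"95% CI: {low:.3f} to {high:.3f}")
```

Clamping on the transformed scale keeps the back-transformed limits inside [0, 1], which matters for groups such as rDST whose scores cluster near 100%.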

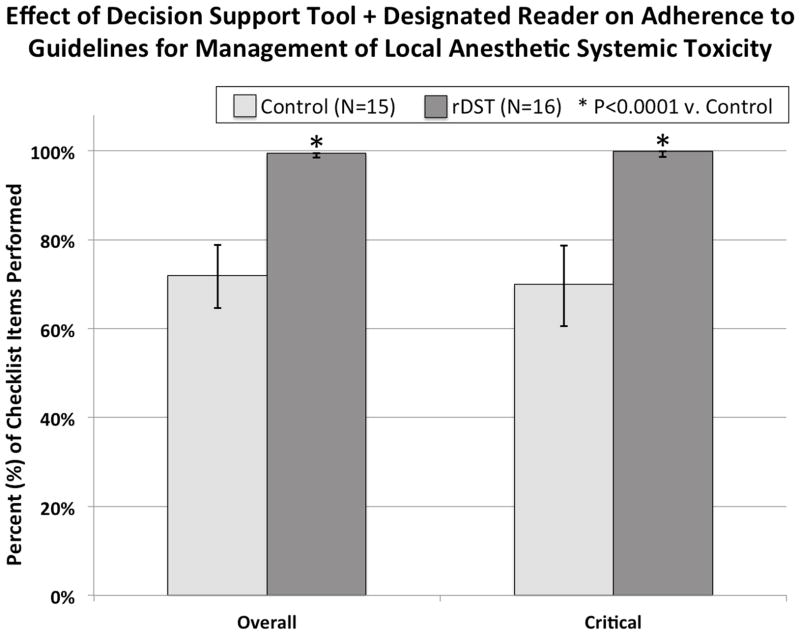

RESULTS

There were no baseline differences in demographics between the 2 groups (Table 1). For overall and critical percent correct scores, the rDST group scored higher than the Control group (99.3% vs 72.2%, P < 0.0001; 99.5% vs 70.0%, P < 0.0001, respectively), as shown in Figure 3. Although there was no difference between groups on any of the initial assessment steps, the rDST group performed significantly better on 3 of 7 initial management steps and on all 4 subsequent management steps (Table 2). In the LAST scenario, 0 of 15 (0%) participants in the Control group performed 100% of critical management steps, whereas 15 of 16 (93.8%) in the rDST group did so (P < 0.0001 by Fisher exact test).
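The all-critical-steps comparison above is a 2 × 2 table (Control: 0 of 15; rDST: 15 of 16). The Python sketch below computes a two-sided Fisher exact test from the hypergeometric distribution using only the standard library; it is an illustrative re-implementation, not the SAS procedure used in the study, and the function name is hypothetical.

```python
from math import comb

def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test for the 2x2 table [[a, b], [c, d]]:
    sums the hypergeometric probabilities of every table with the same
    margins that is no more probable than the observed table."""
    row1, col1, n = a + b, a + c, a + b + c + d
    denom = comb(n, row1)

    def prob(x):  # P(top-left cell = x) with all margins fixed
        return comb(col1, x) * comb(n - col1, row1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, row1 + col1 - n), min(row1, col1)
    # Small relative tolerance guards against floating-point ties.
    return sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))

# Rows: Control, rDST; columns: completed all critical steps (yes, no).
p = fisher_exact_two_sided(0, 15, 15, 1)
print(f"P = {p:.1e}")  # far below 0.0001
```

Only the observed table and the even more extreme table (all 15 successes in Control) are as improbable as the observation, so the two-sided P value is 17/C(31,15), consistent with the reported P < 0.0001.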

Table 1.

Participant Demographics

| Characteristic | Control (N = 15) | rDST (N = 16) | P |
|---|---|---|---|
| Gender (F/M) | 3/12 | 3/13 | ns |
| Training year (CA-1/2/3) | 6/4/5 | 7/6/3 | ns |

rDST = Reader+Decision Support Tool group; F = Female; M = Male; CA = Clinical Anesthesia Year; ns = not significant

Figure 3.

This figure depicts adherence to guidelines for LAST management as measured by the percentage of checklist items performed. The rDST group scored higher than the Control group for overall and critical percent correct scores.

Concerning the reliability of the grading, inter-rater agreement on checklist items was good to excellent, with all kappas above 0.74. On average, rater pairs agreed on 93% of the checklist items, and rater agreement did not differ according to the participant’s group assignment.
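For binary checklist grades, pairwise Cohen’s kappa and percent agreement can be computed as in the Python sketch below. It illustrates the statistics named in the Statistical Analysis section, not the study’s SAS code; the rater names and grades are hypothetical.

```python
from itertools import combinations

def cohen_kappa(r1, r2):
    """Cohen's kappa for two raters' binary (1 = performed, 0 = not) grades."""
    n = len(r1)
    po = sum(x == y for x, y in zip(r1, r2)) / n   # observed agreement
    p1, p2 = sum(r1) / n, sum(r2) / n               # each rater's 'performed' rate
    pe = p1 * p2 + (1 - p1) * (1 - p2)              # agreement expected by chance
    if pe == 1:  # both raters gave one identical constant grade; treat as 1
        return 1.0
    return (po - pe) / (1 - pe)

def pairwise_agreement(ratings):
    """Kappa and percent agreement for every pair of raters."""
    results = {}
    for (name_a, ra), (name_b, rb) in combinations(ratings.items(), 2):
        pct = 100 * sum(x == y for x, y in zip(ra, rb)) / len(ra)
        results[(name_a, name_b)] = (cohen_kappa(ra, rb), pct)
    return results

# Hypothetical grades from three raters on the same ten checklist items.
ratings = {
    "rater1": [1, 1, 1, 0, 1, 0, 1, 1, 0, 1],
    "rater2": [1, 1, 1, 0, 1, 0, 1, 0, 0, 1],
    "rater3": [1, 1, 1, 0, 1, 1, 1, 1, 0, 1],
}
for pair, (kappa, pct) in pairwise_agreement(ratings).items():
    print(pair, f"kappa = {kappa:.2f}", f"agreement = {pct:.0f}%")
```

Kappa corrects raw agreement for chance, which is why it can be noticeably lower than percent agreement when most items are graded “performed.”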

DISCUSSION

We tested the hypothesis that implementing a handheld DST via a designated Reader would improve adherence to published guidelines in the management of an in-situ simulation of LAST, as compared with management from memory alone. Our results demonstrate that use of the DST by a designated Reader resulted in near-perfect adherence to published guidelines during an in-situ simulation of LAST. Below, we place these results in the context of the current literature and then address the major limitations of this study.

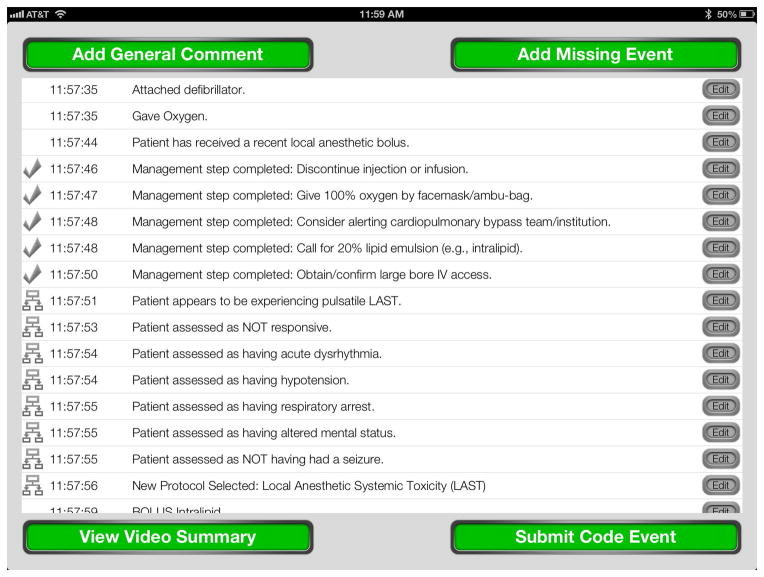

Burden and colleagues first reported that a Reader role improved adherence to guidelines during simulated perioperative emergencies.18 However, because that study was observational, further investigation of the Reader role was needed. Two recent studies showed improved performance when paper crisis checklists were used by operating room teams, but management of LAST was not investigated.19,22 Our findings also advance upon the recent report by Neal and colleagues on the effect of a cognitive aid on the management of LAST.15 In that study, for the 21 steps to which the checklist applied, the Control group performed 65% of checklist items and the Checklist group performed 84%. Their Control group’s performance was similar to that of our Control group (~70%), and even their Checklist group fell well below our rDST group, which achieved near-perfect performance of all correct steps (>99%). Thus, our results demonstrate that implementing a cognitive aid (here, a DST) through a designated Reader can lead to near-perfect adherence to guidelines. Furthermore, the type of cognitive aid we employed, an electronic DST, advances upon paper checklists and a recently described smartphone cognitive aid for advanced cardiac life support,23 because our DST not only prompts the user with the proper protocol actions to consider but also records all of the steps performed by the end-user (Figure 4). We believe that this recording function extends well beyond paper cognitive aids and other reported electronic DSTs. Additionally, the DST used in this study already has the functionality to upload a record of event management immediately to a database, such as the one recently called for with respect to LAST.10,24

Figure 4.

This figure shows the Summary View of the assessment and management that would be recorded by the decision support tool during a clinical event. Each row represents a unique action being recorded, with a time stamp to the second and a description of the event. In this view, the operator could add a general comment or missing event to the record, or they could edit or annotate any step that was performed. It would also be possible to view a video summary of the event that is time-linked to each action selected. This could be used for immediate or delayed debriefing of the training or clinical event, as audio and video are captured live throughout the session. Finally, the event record would be submitted to a database for further analysis of the data.
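The Summary View described in Figure 4 implies a simple data structure: one row per action with a time stamp to the second, optional annotations, and a serialized record for database submission. The Python sketch below is a hypothetical model of such a record; the class and field names are assumptions rather than the DST’s actual schema, and database submission is represented only by JSON serialization.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timedelta
from typing import List

@dataclass
class EventEntry:
    timestamp: str       # ISO 8601, to the second
    description: str     # the action recorded
    comment: str = ""    # optional annotation added at review

@dataclass
class EventRecord:
    protocol: str
    entries: List[EventEntry] = field(default_factory=list)

    def log(self, description: str, when: datetime) -> EventEntry:
        """Append one time-stamped action to the record."""
        entry = EventEntry(when.isoformat(timespec="seconds"), description)
        self.entries.append(entry)
        return entry

    def annotate(self, index: int, comment: str) -> None:
        """Attach a comment to an existing row (the 'edit or annotate' step)."""
        self.entries[index].comment = comment

    def to_json(self) -> str:
        """Serialize the record, e.g., for later submission to a database."""
        return json.dumps(asdict(self), indent=2)

# Hypothetical event; the start time is arbitrary.
start = datetime(2013, 6, 1, 9, 0, 0)
record = EventRecord(protocol="LAST")
record.log("Called for help and LAST kit", start)
record.log("20% lipid emulsion bolus given", start + timedelta(seconds=95))
record.annotate(1, "Dose read back by Reader")
print(record.to_json())
```

Storing time stamps as ISO 8601 strings keeps the record human-readable and lets rows be aligned with time-linked video during debriefing.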

It may be asked whether this study simply produced expected results. That is, if a Reader role has shown some benefit and paper aids have shown some benefit, were the results of this study predictable? We would note that the Reader role had not previously been shown to be beneficial in any randomized controlled trial. Furthermore, it is not clear that an electronic aid would be equivalent to a paper aid, as introducing electronics and technology into nursing workflow has been shown to create unanticipated hidden work (compared with paper forms).25 We therefore chose to randomize between the most common practice for emergency teams today (memory alone) and one type of cognitive aid (electronic DST) delivered through a specific implementation (Reader). The Reader role cannot be tested without a tool to read. In addition, we did not test the DST when used by the Leader alone (without a Reader) because, in a pilot study, we found that this was no better than memory alone: the team leader either attended to the tool and missed cues from the patient or attended to the patient and functionally managed from memory alone, and either option represents task saturation. Thus, we believe that the results of this study could not have been known a priori.

This study has several limitations. First, in contrast to real-life LAST events, this study presents data from a scripted, simulated setting. Although there are likely some real differences compared with an actual event, simulation is the only feasible means of rigorously assessing how to improve the delivery of care in such a rare event. Second, although our results accord with, and improve upon, those of recent studies, they emerge from a single residency training program. Large, multi-institutional studies are therefore needed to determine whether these results are generalizable and thus worth applying in the clinical environment, for both trainees and practitioners. Third, in observing the performance of all participants, we noted clear variation in how residents used the decision tool and how the Reader enforced its implementation. Participants with less training tended to use the Reader and DST to prospectively inform and direct their decisions, whereas senior residents referred to the DST through the Reader as a secondary means of validating and double-checking their clinical decisions. Moreover, although we attempted to standardize the Reader role so that the person in this role interacted with the team in a consistent fashion in each scenario, the Reader tended to correct the team in two primary ways: a) errors in dosing or re-dosing of the lipid emulsion and b) reminders to re-assess hemodynamic stability during the scenario, which guided subsequent steps such as doubling the infusion rate and alerting the cardiopulmonary bypass team. Of note, we have found that a member of the anesthesia/perioperative care team, such as an anesthesia technician or perioperative nurse, is the most appropriate person to serve as the Reader.

Finally, this study demonstrates one main point: implementing a cognitive aid via a designated Reader role greatly improves adherence to published guidelines during in-situ crisis simulation. Our conclusions are limited to this statement. Future research should investigate whether the form of the aid (ie, paper vs electronic) makes as much of a difference as the method by which it is implemented (the designated Reader role). There is a large push to introduce checklists in medicine today under the banner of patient safety.22,26–31 Great strides have been made in this domain, and we believe that our results indicate that implementing a cognitive aid through the Reader-Leader model can significantly improve performance as compared with management from memory alone. We also believe that caution is necessary before any cognitive aid, whether checklist or DST, is introduced into routine use. As Dr. Neal noted about the recent AURORA Study, “it is seldom a single intervention that improves our patients’ care, but rather using the entire toolbox.”1 Proper orientation to the tool and its proper implementation within the emergency team (eg, the Reader role) are likely as important as the tool itself. While we agree that a checklist manifesto may be useful in the coming years of medical practice, carefully assembling the toolbox that accomplishes the intended goal will require rigorous research as this field of implementation science progresses.

Our specialty found itself in a similar position in the 1980s and 1990s, when the ASA Monitoring Standards were being codified and implemented.32,33 Patients have greatly benefited from that sweeping change.34 However, it was not simply the tool (ie, the monitor) that made the difference, but a whole system in which safety monitoring behaviors took hold.35 Global descriptions of what was needed were provided, but local implementation was needed as well. Although these monitors are now present for every patient, they were not at the time, and many of the same questions emerged: “Who should have them present? How do we implement this massive change? Why do I need to implement this when I am a good doctor?” We propose that the process outlined above is needed to make the translational leap from simulated settings (T1 evidence) to the real world (T2 and T3 evidence).36 We must engage the local perioperative culture and help clinicians through the following practical steps: 1) know the literature from the simulation laboratory (eg, through Grand Rounds), 2) understand the components of the toolbox (tool, form, role, team, and context for implementation), 3) conduct a local assessment and discussion of these components with the clinical team, and 4) begin to link local sites through national databases that record when DSTs have been used, so that we can build shared knowledge of what is actually happening across the nation and the world.
We believe that this is how we can address what have been called complex service interactions in the clinical arena, where multi-person teams interact around real patients in dynamic, constantly changing care settings.37 Goldhaber-Fiebert and colleagues recently summarized this implementation schema for any cognitive aid as: Create, Familiarize, Use, Integrate.29 In addition, payers, professors, and private practitioners should now join forces to foster a culture in which these tools, once created, are ones that all members of our care teams become familiar with, use, and integrate into healthcare systems, so that we are better prepared when the rare event occurs.

In summary, our results demonstrate that employing a handheld electronic DST through a designated Reader greatly improves adherence to guidelines in an in-situ simulation of LAST, as compared with management from memory alone. Possible benefits of electronic DSTs that paper cognitive aids lack are that 1) they can actively direct clinicians to adhere to management guidelines and 2) they can record the assessment and management actions that are performed. Such tools show promise for the future of patient care in anesthesiology, perioperative medicine, and acute care medicine. Although the best form of cognitive aid has not yet been determined, we have shown the benefit of the one described in this study and therefore plan to share our DST with the healthcare community in the coming months. We are working at the institutional and national healthcare society levels to release it through the App Store by the summer of 2014.

Supplementary Material

Supplemental Digital Content 1. Decision support tool (DST) screen shots.

Acknowledgments

Funding:

Foundation for Anesthesia Education and Research (FAER), Research in Education Grant (PI: McEvoy). This project was also supported by the South Carolina Clinical & Translational Research Institute, Medical University of South Carolina’s CTSA, NIH/NCATS Grant Number UL1TR000062.

The authors would like to thank Jordan Shealy, BA, Bradley Saylors, BA, Ryan Preston, BA (medical students), Amanda Burkitt, RN, and Monica Davis, RN, CCRN, for their assistance in performance of this study.

Footnotes

Prior Presentation:

None

Conflict of Interest:

The authors declare no conflict of interest.

References

- 1.Neal JM. Local anesthetic systemic toxicity: improving patient safety one step at a time. Reg Anesth Pain Med. 2013;38:259–261. doi: 10.1097/AAP.0b013e3182988bd2. [DOI] [PubMed] [Google Scholar]

- 2.Barrington MJ, Kluger R. Ultrasound guidance reduces the risk of local anesthetic systemic toxicity following peripheral nerve blockade. Reg Anesth Pain Med. 2013;38:289–297. doi: 10.1097/AAP.0b013e318292669b. [DOI] [PubMed] [Google Scholar]

- 3.Sites BD, Taenzer AH, Herrick MD, et al. Incidence of local anesthetic systemic toxicity and postoperative neurologic symptoms associated with 12,668 ultrasound-guided nerve blocks: an analysis from a prospective clinical registry. Reg Anesth Pain Med. 2012;37:478–482. doi: 10.1097/AAP.0b013e31825cb3d6. [DOI] [PubMed] [Google Scholar]

- 4.Orebaugh SL, Kentor ML, Williams BA. Adverse outcomes associated with nerve stimulator-guided and ultrasound-guided peripheral nerve blocks by supervised trainees: update of a single-site database. Reg Anesth Pain Med. 2012;37:577–582. doi: 10.1097/AAP.0b013e318263d396. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Orebaugh SL, Williams BA, Vallejo M, Kentor ML. Adverse outcomes associated with stimulator-based peripheral nerve blocks with versus without ultrasound visualization. Reg Anesth Pain Med. 2009;34:251–255. doi: 10.1097/AAP.0b013e3181a3438e. [DOI] [PubMed] [Google Scholar]

- 6.Neal JM, Bernards CM, Butterworth JF, 4th, et al. ASRA practice advisory on local anesthetic systemic toxicity. Reg Anesth Pain Med. 2010;35:152–161. doi: 10.1097/AAP.0b013e3181d22fcd. [DOI] [PubMed] [Google Scholar]

- 7.Neal JM, Mulroy MF, Weinberg GL American Society of Regional Anesthesia and Pain Medicine. American Society of Regional Anesthesia and Pain Medicine checklist for managing local anesthetic systemic toxicity: 2012 version. Reg Anesth Pain Med. 2012;37:16–18. doi: 10.1097/AAP.0b013e31822e0d8a. [DOI] [PubMed] [Google Scholar]

- 8.Di Gregorio G, Neal JM, Rosenquist RW, Weinberg GL. Clinical presentation of local anesthetic systemic toxicity: a review of published cases, 1979 to 2009. Reg Anesth Pain Med. 2010;35:181–187. doi: 10.1097/aap.0b013e3181d2310b. [DOI] [PubMed] [Google Scholar]

- 9.Ozcan MS, Weinberg G. Update on the use of lipid emulsions in local anesthetic systemic toxicity: a focus on differential efficacy and lipid emulsion as part of advanced cardiac life support. Int Anesthesiol Clin. 2011;49:91–103. doi: 10.1097/AIA.0b013e318217fe6f. [DOI] [PubMed] [Google Scholar]

- 10.Weinberg GL. Lipid emulsion infusion: resuscitation for local anesthetic and other drug overdose. Anesthesiology. 2012;117:180–187. doi: 10.1097/ALN.0b013e31825ad8de. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Weinberg GL. Treatment of local anesthetic systemic toxicity (LAST) Reg Anesth Pain Med. 2010;35:188–193. doi: 10.1097/AAP.0b013e3181d246c3. [DOI] [PubMed] [Google Scholar]

- 12.Murray DJ, Boulet JR, Avidan M, et al. Performance of residents and anesthesiologists in a simulation-based skill assessment. Anesthesiology. 2007;107:705–713. doi: 10.1097/01.anes.0000286926.01083.9d. [DOI] [PubMed] [Google Scholar]

- 13.Henrichs BM, Avidan MS, Murray DJ, et al. Performance of certified registered nurse anesthetists and anesthesiologists in a simulation-based skills assessment. Anesth Analg. 2009;108:255–262. doi: 10.1213/ane.0b013e31818e3d58. [DOI] [PubMed] [Google Scholar]

- 14.Bould MD, Crabtree NA, Naik VN. Assessment of procedural skills in anaesthesia. Br J Anaesth. 2009;103:472–483. doi: 10.1093/bja/aep241. [DOI] [PubMed] [Google Scholar]

- 15.Neal JM, Hsiung RL, Mulroy MF, Halpern BB, Dragnich AD, Slee AE. ASRA checklist improves trainee performance during a simulated episode of local anesthetic systemic toxicity. Reg Anesth Pain Med. 2012;37:8–15. doi: 10.1097/AAP.0b013e31823d825a. [DOI] [PubMed] [Google Scholar]

- 16.Bould MD, Hayter MA, Campbell DM, Chandra DB, Joo HS, Naik VN. Cognitive aid for neonatal resuscitation: a prospective single-blinded randomized controlled trial. Br J Anaesth. 2009;103:570–575. doi: 10.1093/bja/aep221. [DOI] [PubMed] [Google Scholar]

- 17.Nelson KL, Shilkofski NA, Haggerty JA, Saliski M, Hunt EA. The use of cognitive AIDS during simulated pediatric cardiopulmonary arrests. Simul Healthc. 2008;3:138–145. doi: 10.1097/SIH.0b013e31816b1b60. [DOI] [PubMed] [Google Scholar]

- 18.Burden AR, Carr ZJ, Staman GW, Littman JJ, Torjman MC. Does every code need a “reader?” improvement of rare event management with a cognitive aid “reader” during a simulated emergency: a pilot study. Simul Healthc. 2012;7:1–9. doi: 10.1097/SIH.0b013e31822c0f20. [DOI] [PubMed] [Google Scholar]

- 19.Arriaga AF, Bader AM, Wong JM, et al. Simulation-based trial of surgical-crisis checklists. N Engl J Med. 2013;368:246–253. doi: 10.1056/NEJMsa1204720.

- 20.McEvoy MD, Smalley JC, Nietert PJ, et al. Validation of a detailed scoring checklist for use during advanced cardiac life support certification. Simul Healthc. 2012;7:222–235. doi: 10.1097/SIH.0b013e3182590b07.

- 21.Bland M. An Introduction to Medical Statistics. 3rd ed. Oxford and New York: Oxford University Press; 2000.

- 22.Ziewacz JE, Arriaga AF, Bader AM, et al. Crisis checklists for the operating room: development and pilot testing. J Am Coll Surg. 2011;213:212–217.e10. doi: 10.1016/j.jamcollsurg.2011.04.031.

- 23.Low D, Clark N, Soar J, et al. A randomised control trial to determine if use of the iResus© application on a smart phone improves the performance of an advanced life support provider in a simulated medical emergency. Anaesthesia. 2011;66:255–262. doi: 10.1111/j.1365-2044.2011.06649.x.

- 24.Weinberg G, Warren L. Lipid resuscitation: listening to our patients and learning from our models. Anesth Analg. 2012;114:710–712. doi: 10.1213/ANE.0b013e31824b7eed.

- 25.Novak LL. Finding hidden sources of new work from BCMA implementation: the value of an organizational routines perspective. AMIA Annu Symp Proc. 2012;2012:673–680.

- 26.Gawande A. The Checklist Manifesto: How to Get Things Right. 1st ed. New York, NY: Metropolitan Books; 2010.

- 27.Pronovost P. Interventions to decrease catheter-related bloodstream infections in the ICU: the Keystone Intensive Care Unit Project. Am J Infect Control. 2008;36:S171.e1–S171.e5. doi: 10.1016/j.ajic.2008.10.008.

- 28.Gaba DM. Perioperative cognitive aids in anesthesia: what, who, how, and why bother? Anesth Analg. 2013;117:1033–1036. doi: 10.1213/ANE.0b013e3182a571e3.

- 29.Goldhaber-Fiebert SN, Howard SK. Implementing emergency manuals: can cognitive aids help translate best practices for patient care during acute events? Anesth Analg. 2013;117:1149–1161. doi: 10.1213/ANE.0b013e318298867a.

- 30.Marshall S. The use of cognitive aids during emergencies in anesthesia: a review of the literature. Anesth Analg. 2013;117:1162–1171. doi: 10.1213/ANE.0b013e31829c397b.

- 31.Augoustides JG, Atkins J, Kofke WA. Much ado about checklists: who says I need them and who moved my cheese? Anesth Analg. 2013;117:1037–1038. doi: 10.1213/ANE.0b013e31829e443a.

- 32.Eichhorn JH. The standards formulation process. Eur J Anaesthesiol Suppl. 1993;7:9–11.

- 33.Eichhorn JH, Cooper JB, Cullen DJ, Maier WR, Philip JH, Seeman RG. Standards for patient monitoring during anesthesia at Harvard Medical School. JAMA. 1986;256:1017–1020.

- 34.Eichhorn JH. Effect of monitoring standards on anesthesia outcome. Int Anesthesiol Clin. 1993;31:181–196. doi: 10.1097/00004311-199331030-00012.

- 35.Eichhorn JH. Review article: practical current issues in perioperative patient safety. Can J Anaesth. 2013;60:111–118. doi: 10.1007/s12630-012-9852-z.

- 36.McGaghie WC, Draycott TJ, Dunn WF, Lopez CM, Stefanidis D. Evaluating the impact of simulation on translational patient outcomes. Simul Healthc. 2011;6(Suppl):S42–S47. doi: 10.1097/SIH.0b013e318222fde9.

- 37.McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Translational educational research: a necessity for effective health-care improvement. Chest. 2012;142:1097–1103. doi: 10.1378/chest.12-0148.

Supplementary Materials

Supplemental Digital Content 1. Decision support tool (DST) screen shots.