ABSTRACT

BACKGROUND

Quality improvement is a central goal of the patient-centered medical home (PCMH) model, and requires the use of relevant performance measures that can effectively guide comprehensive care improvements. Existing literature suggests performance measurement can lead to improvements in care quality, but may also promote practices that are detrimental to patient care. Staff perceptions of performance metric implementation have not been well-researched in medical home settings.

OBJECTIVE

To describe the experiences of primary care staff (clinicians and other staff) with performance metrics during implementation of the Veterans Health Administration’s (VHA) Patient Aligned Care Team (PACT) model of care.

DESIGN

Observational qualitative study; data collection using role-stratified focus groups and semi-structured interviews.

PARTICIPANTS

Two hundred and forty-one of 337 (72 %) identified primary care clinic staff in PACT team and clinic administrative/other roles, from 15 VHA clinics in Oregon and Washington.

APPROACH

Data coded and analyzed using conventional content analysis techniques.

KEY RESULTS

Primary care staff perceived that performance metrics: 1) led to delivery changes that were not always aligned with PACT principles, 2) did not accurately reflect patient priorities, 3) represented an opportunity cost, 4) were imposed with little communication or transparency, and 5) were not well adapted to team-based care.

CONCLUSIONS

Primary care staff perceived responding to performance metrics as time-consuming and not consistently aligned with PACT principles of care. The gaps between the theory and reality of performance metric implementation highlighted by PACT team members are important to consider as the medical home model is more widely implemented.

KEY WORDS: patient-centered medical home, primary care, performance metrics, team-based care, focus groups, qualitative research

INTRODUCTION

Quality improvement is a central goal of the patient-centered medical home (PCMH) model, and performance measurement has become a foundation for quality improvement.1 Performance measures can be used to stimulate care delivery improvements, establish standards by which success in care delivery can be gauged, and monitor performance.2 Ideally, a collection of performance metrics would guide comprehensive care improvements and minimize unintended harmful effects.2

Performance monitoring has been associated with important improvements in care quality and efficiency.3,4 However, as experience with performance measurement and the literature examining it have grown, concerns have been raised about the narrowness and burden of measures, their inability to capture all aspects of whole-person care, and the potential for negative unintended consequences.4–8

In 2010, the Veterans Health Administration (VHA) began nationwide implementation of its version of the patient-centered medical home model, called the Patient Aligned Care Team (PACT) model of care, and performance measurement has continued and expanded as part of PACT. Care delivery in the PACT model rests largely with multidisciplinary care teams, and care is guided by the principles that it be patient-driven, team-based, efficient, comprehensive, continuous, and coordinated.9 In practice, PACT teams do much of the daily work needed to meet many process-of-care measures, such as screening for alcohol misuse and low-density lipoprotein measurement in high-risk patients. Clinical reminders built into the electronic health record are a commonly used tool for addressing these measures. There is also a growing slate of PACT-related metrics meant to capture access-to-care outcomes, such as short-term appointment access, and care delivery processes, such as use of non-face-to-face visits.

One qualitative VHA study conducted prior to PACT implementation found VHA providers perceived that performance metrics detracted from provider focus on patient needs, led to inappropriate care, and compromised patient education.10 Many of these problems were thought to arise from the ways in which metrics are implemented locally.4,10

Provider perceptions of the impact of performance metrics on care delivery within the context of the medical home have not been well researched. Given that PACT teams are on the front lines of performing the work that is being measured, it is important to understand how team members are responding to performance metrics, whether they perceive these metrics as aligned with PACT principles, and whether they have recognized any unintended consequences from implementation of these metrics.

Our research team conducted a large focus group study with staff at 15 VHA primary care clinics that were implementing the PACT model. The objective of this analysis is to examine multidisciplinary staff perceptions of the utility of performance metrics in the context of a team-based medical home model of care.

METHODS

Setting

This study focuses on staff comments related to performance metrics collected during a larger qualitative evaluation of PACT implementation. The term staff includes clinicians as well as other staff. We use the term performance metrics in this manuscript to describe any quality metric against which the performance of PACT teams is measured, whether or not there are financial rewards associated with them.

The study took place in 15 VHA primary care clinics, including hospital-based and community-based clinics in urban and rural settings in Oregon and southern Washington. Clinic size varied, with a range of 3–48 primary care staff per clinic. The Institutional Review Board of the Portland VA Medical Center approved this study.

Data Collection

All clinical and administrative staff at the 15 primary care clinics were eligible to participate. We conducted focus groups and interviews between December 2010 and February 2013. Focus groups lasted approximately 1 h and were led by one or two of the experienced group facilitators on the research team (DH, CN, and AT). In larger clinics, focus groups were stratified by role into primary care providers (PCP; ten groups), nurse care managers (NCM; eight groups), or clinical and clerical associates (eight groups). Two focus groups were held with NCMs and clerical and clinical associates participating together. In the four clinics with two or fewer teams, a single focus group was held with all available staff. We also interviewed group practice managers, clinic operations managers, and staff who requested a private interview or who were unable to schedule participation in a focus group.

Interviews lasted from 15 to 50 min, and were conducted by two team members (DH and AT). The interviews and focus groups examined staff-perceived facilitators and barriers to PACT implementation. Our focus group and interview guides did not include questions about performance metrics, but participants often spontaneously discussed performance measures when responding to questions about their overall impressions of PACT. Sessions were recorded and transcribed.

Data Analysis

Two co-investigators conducted a rapid analysis and presented preliminary findings at feedback sessions held at nine of the clinics. These feedback sessions served as a participant validity check and helped identify preliminary themes, which were further refined during coding. Two research assistants trained in thematic content analysis11 independently coded transcripts and field notes using Atlas.ti software (Atlas.ti GmbH, Berlin). Coding and analysis were supervised by two experienced qualitative researchers, one of whom is also a primary care provider in a university hospital. The lead qualitative researchers and coders met regularly to review and compare coding and to resolve differences by consensus. This iterative process yielded two codes related to performance metrics. The code “focus on metrics” included all comments related to perceptions that a focus on performance metrics affected clinic activities. A second code, “metrics versus what matters,” captured participants’ observations that current performance metrics either omitted, or conflicted with, other important activities or values in the clinic. Text tagged with these two codes was then analyzed further to develop all five themes presented in this paper.

RESULTS

Of 337 eligible primary care staff, 241 (71.5 %) participated in this study. Two hundred and twenty-three employees participated in 32 focus groups, with an average size of seven participants (range 2–16). We interviewed 15 clinic managers and six other employees; three of these 21 interviewees also participated in the focus groups. Of all participants, 170 (71 %) were women; data on participants’ roles are given in Table 1.
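As a worked check added for clarity (not part of the original analysis), the reported counts reconcile as follows. The only assumption is that each of the three interviewees who also joined a focus group is counted once in the total; the role breakdown uses the counts in Table 1.

```latex
\begin{aligned}
N_{\text{total}} &= N_{\text{focus groups}} + N_{\text{interviews}} - N_{\text{both}}
  = 223 + (15 + 6) - 3 = 241 \\
N_{\text{total}} &= N_{\text{PCP/MD}} + N_{\text{NCM/RN}} + N_{\text{associates}}
  = 78 + 75 + 88 = 241 \\
\text{Participation rate} &= \frac{241}{337} \approx 0.715 \;(71.5\,\%) \\
\text{Mean focus group size} &= \frac{223}{32} \approx 6.97 \approx 7
\end{aligned}
```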

Table 1.

Frequency of Performance Metric Codes

| Employee group | Participants, n (% of total)* | Codes occurred in | Average coded passages (range, where codes occurred) |
|---|---|---|---|
| PCPs/MDs | 78 (32 %) | 9 of 10 focus groups (90 %); 9 of 13 interviews (69 %) | Focus groups: 4.8 (2–11); interviews: 3.7 (1–7) |
| NCMs/RNs | 75 (31 %) | 9 of 10 focus groups (90 %); 6 of 8 interviews (75 %) | Focus groups: 3.7 (1–7); interviews: 2.8 (1–5) |
| Clinical/clerical associates | 88 (37 %) | 7 of 8 focus groups (87.5 %) | Focus groups: 4 (2–7) |
| Mixed team roles | ** | 3 of 4 focus groups (75 %) | Focus groups: 1.7 (1–2) |

*Total participants included seven social workers, seven pharmacists, and two optometrists co-located within primary care clinics. Optometrists participated in the PCP/MD focus group; social workers and pharmacists were distributed among all three types of role-stratified focus groups.

**Participants in mixed team focus groups are already accounted for in the staff role totals above.

Of 1,393 coded passages in the original data set, 159 received one or both of the codes selected for this analysis. These codes occurred in 27 of the 32 focus groups and 17 of the 23 interviews, and were evenly distributed among the different staff groups (see Table 1).

Care Delivery Changes Made in Response to Performance Measures Are Not Always Perceived as Consistent with Key PACT Principles

Many staff expressed concern that the front-line implementation of quality metrics has not always taken into account alignment with PACT principles. A number of staff observed that some quality measures may have the unintended consequence of promoting changes at odds with PACT principles such as patient-centered care and efficient delivery of care. Some staff found that quality metrics could have opposing effects on different PACT principles.

For example, one provider described the conflict between a measure of access (the number of days from a patient’s request for an appointment until the patient is seen in clinic) and a PACT-related priority (delivering care, when appropriate, without the need for in-person office visits):

…One of the problems is there’s a really strong dichotomy of ideologies. So on one hand we say we want the PACT team, we want to have more phone clinic, we want to have more kind of non face-to-face stuff so we can manage these people better….On the other side of the coin, it’s like, “Boy, that veteran really wants an appointment, and you had better give it to him on the day he wants it.” You can’t win, you’re given two directives that…just bounce off each other, you can’t make that fit. [PCP]

Similarly, participants noted that, in response to performance targets such as increasing yearly cholesterol testing in patients with heart disease, staff would identify patients who had not been tested in the past year and either order the test or schedule an appointment. Participants were concerned that this approach might compromise patient convenience, and thus the PACT principle of patient-centered care, because patients had to make a separate trip for laboratory testing and/or an appointment that might not otherwise have been necessary.

Whether the patient even needs to have his cholesterol, or his A1c checked, or any of that nonsense—there’s still those little boxes that we’re still expected to check…. the patients are expected, either by themselves or by the clinic managers, to come in once a year, whether or not they need an appointment. That’s a system thing. So we have this system, these system requirements that don’t speak to the PACT model. [PCP]

The potential downsides of trying to meet performance metrics were magnified by other system challenges. Many PCPs were frustrated by large panel sizes and the perceived expectation that face-to-face visits be minimized. In that setting, performance measures were felt to negatively affect the provider’s ability to address and give appropriate attention to patient priorities as they arose.

So you could be more efficient if you weren’t spending 20 min of a 35 or a 45 min appointment doing reminders and with the expectation that you were only going to see this patient once in this year…. Primary care is about forming long-term relationships and continuity of care with, between a patient and a provider. And that’s not doing everything for a person at one visit…. Today the person’s in mental distress and ya know, they’re having problems, they’re suicidal, then I’m not gonna ask them about their cholesterol and their colonoscopy…I mean that’s just not appropriate, it’s not relevant. But, if you’re empanelled with 1,200 patients and you’re gonna see that one Vet, one point however many times in a year, then you really do need to do everything at that visit—or maybe you need to shrink the panel size. [PCP]

There is a Perception that Performance Metrics Are Not Truly Measuring Patient-Centered Care Quality

Many staff were concerned that the completion of electronic clinical reminders—a key mechanism for addressing and generating data for performance metrics—was viewed as an end in itself. Staff felt these reminders were neither a direct nor accurate reflection of patient priorities.

Unfortunately, at least the perception of some of us, is that’s the way you’re measured…if you put the numbers right, well you’re doing a great job, when that is not the case. [PCP]

To us in the beginning, it was: we’re going to get away from doing all these reminders and worrying about that, and if the patient’s happy, that’s what we’re focused on, we’re gonna be there for the patients. But here we are, like spending half our day, like chasing these reminders…looking at the recalls, trying to preemptively do this stuff, and… …. I feel like this was supposed to be focused towards like making the patient happy, but in the end we’re kind of chasing our own numbers. [Clerical Associate]

There is a Perceived Opportunity Cost in Responding to Performance Metrics

Many staff found that responding to performance metrics required a substantial investment of time, leaving less time for other important aspects of care that the metrics did not measure.

You just clear your list, versus taking the time to, you know, truly give good education, truly get a good assessment. That time crunch is, you know, a lot of pressure, in all areas I think that you guys [clinical associates], you have all these reminders that you have to do, and some of the reminders, some of these guys have a zillion million of them when you’re checking in, and uh, it’s like, “please don’t say you’re depressed.” [Nurse Care Manager]

Staff also felt that responding to metrics could lead to a piecemeal approach to patient care: because resources are finite, focusing attention on certain patient subgroups detracted from other subgroups and from other aspects of patient care.

I think we’re reaching to some of those core performance measures, that we’re able to help our providers reach some of those goals and attain them…but others are being left behind…it feels like maybe our diabetics and our congestive heart failures, COPDers, those kind of people, we’re attaining those goals with them. But it doesn’t seem like we’re giving the same amount of attention and care to all of them across the board. [Clinical Associate]

There is a Perception that Performance Metrics Are Imposed by Administration with Little Communication and a Lack of Transparency

Staff were often frustrated by what they perceived as a “top-down” approach to performance metric implementation. They felt metrics were imposed with little warning or training, an approach that diminished their own sense of agency as care providers.

For me that’s the biggest frustration with the VA…There is no training, it just shows up that day and you have at it. It’s like, you will get new reminders. No training, no explanation, out of the blue up pops a new reminder. And that complicates PACT model because people are now wasting more time trying to figure out what that reminder is—where it came from and what we’re supposed to do with it, than spending time with the patient saying that hemoglobin A1C is significant. Do you notice yours is 12.9? This is the range we’re hoping for; how can we accomplish lowering that? No, I’m busy trying to figure out how to answer the newest reminder that appeared. [Clinical Associate]

Staff also observed that metrics were mandated before the infrastructure required to respond to them adequately was in place.

What it seems like to me is from the top down they’re dictating that you have to do this [new measure or report]…without making sure the structure at the bottom is built and solid…you don’t even have the staff to put this in place. Then they keep pouring on this additional work and you don’t have the full time staff. [PCP]

There is a Perceived Need to Adapt Performance Metrics to the Team-Based Model of Care

Some staff identified a need for new metrics that capture PACT ideals such as teamwork and the emphasis on new models of care delivery.

The performance measures…[and] the workload measures are largely based on what the provider is doing. But the work the provider is doing is changing. And you know, a lot of what we do in Primary Care is coordinate care, particularly during transitions when patients are you know, are either decompensating, or getting out of the hospital, you know that kind of thing. And we don’t have any, there’s no acknowledgment, that I can see, that’s kind of built into our schedule. [PCP]

On one hand, we’re saying we’re a PACT team and we’re a team, but…we’re using…the individual’s performance as the metric for how well the team is doing…. To be able to go and say the PACT model doesn’t work because these people haven’t made their metrics, where’s the breakdown? Is it the individual provider that’s not really providing leadership and guidance, is it the NCM who’s not really good, is it that people don’t understand their roles very well? I mean, they’re not really stepping up to the plate to go through the diabetic registry and are the systems in place that support the provider to have maximum performance on those metrics? [PCP]

Despite the promoted emphasis on team-based care, many staff felt that the current incentive structure for performance metrics focused only on the primary care provider. Some felt that rewards for accomplishing performance targets should reflect the work of the whole team, not just the primary care provider.

I’m also frustrated, when his [PCP’s] numbers are clean and better, he gets the pat on the back for it. And they just say, ok, well now that you’ve got that done, here’s what else I need you to do. And so that’s very frustrating for me too. Because, uh, what am I chasing numbers for? To, to make somebody, I mean, it’s not, to me it’s not about patient care, it’s about making him, or the provider meet their marks. [Clinical Associate]

DISCUSSION

In this qualitative study, we found that the nurses, physicians, medical assistants, and administrative assistants comprising Patient Aligned Care Teams had concerns about the way performance metrics are currently being implemented in the VHA primary care medical home. Their concerns highlight the potential gaps between the theory and reality of performance measurement. Though each individual metric may be well-intentioned, the actual front-line implementation of a collection of metrics is perceived as having unintended negative consequences on patient care, team function, and the satisfaction of team members.

Ideally, performance metrics would inspire systems changes that improve care beyond the measure in question.2 Process-of-care measures such as yearly cholesterol testing are frequently used as proxy measures for anticipated health outcomes, but do not constitute an end in and of themselves. However, a recurring observation of PACT staff was that process-of-care metrics were often viewed as a terminal goal, rather than an opportunity to identify gaps in systems of care.

Team members’ comments echo broader ongoing debates about the accuracy with which some metrics measure care quality.6,12 Many staff comments implied a shared belief that the patient perspective was critical in determining care quality, but that this perspective was not always well-represented in the performance metrics to which they were held responsible. Indeed, there is some evidence that structural and process-of-care metrics may not align with patient-perceived care quality.13

The implementation of a medical home model within a very large, centrally controlled, integrated health system presents its own set of challenges. Often the choice, prioritization, and incentivization of performance metrics are determined by administrators through processes that are not well understood by front-line staff. Some of the frustration expressed by staff reflected this lack of transparency, as well as the incongruence between the emphasis on team-based care and staff empowerment and the perceived “top-down” approach to performance measurement. That performance pay rewarded physicians rather than the entire team was also seen to contradict the principle of team-based care.

Staff concerns about the current state of performance measurement highlight several opportunities for improvement in the team-level implementation of such metrics (see Table 2). First, our results suggest that the performance metrics chosen at any given time should be considered collectively in terms of their relationships to PACT goals. Furthermore, there is evidence that use of too many metrics can lead to an “attention shift” away from patient care needs that are not reflected in performance measures.14

Table 2.

Themes and Suggested Responses to Improve Performance Measurement Practices

| Theme | Suggestion for improvement |
|---|---|
| Care delivery changes made in response to some performance measures are not always perceived as consistent with key PACT principles | Review the collection of performance metrics being implemented against a framework of the medical home attributes guiding PACT development. Provide quality improvement training and infrastructure to PACT teams. |
| There is a perception that performance metrics are not truly measuring patient-centered care quality | Investigate patients’ priorities for care quality. |
| There is a perceived opportunity cost in responding to performance metrics | Review performance metrics collectively. Investigate the amount of time PACT teams spend responding to performance metrics. Titrate the portfolio of performance metrics to the amount of time available. |
| There is a perception that performance metrics are imposed by administration with little communication and a lack of transparency | Disseminate the clinical rationale for each performance metric to clinic staff. Allow adequate lead-time when implementing new metrics. Incorporate a feedback loop system so that observations from front-line staff about potential unintended harms of metrics can be communicated to the appropriate officials. |
| There is a perceived need to adapt performance metrics to the team-based model of care | Adjust performance incentives to reflect staff members conducting the work. |

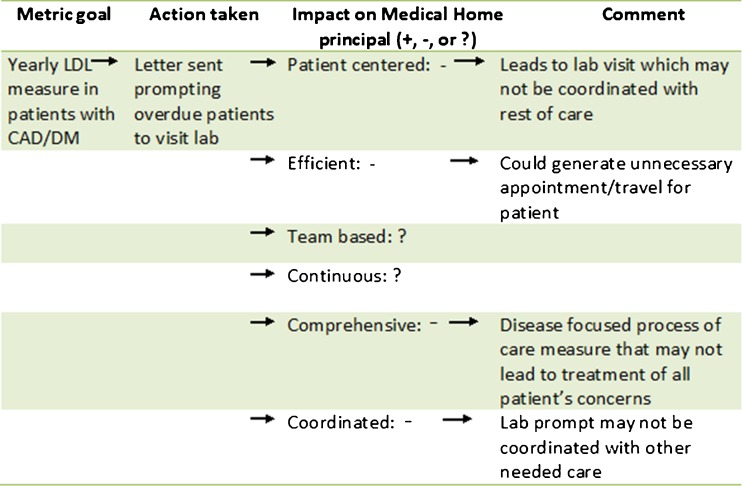

Clinical reminders are often implemented locally as a way to achieve higher performance and to gather data that feed system-level performance measures and other quality assessments. Since front-line clinical staff encounter performance metrics most directly through the clinical reminder system, our findings may suggest a need to re-examine the set of electronic reminders in place at any one time, to reduce provider fatigue and ensure a focus on critical performance items. Additional analysis, such as the example in Fig. 1, could help administrators and providers visualize the relationships between metrics and medical home attributes.

Figure 1.

Example diagram mapping the impact of a performance metric on medical home principles.

The relationships between metrics should also be considered. For instance, near-term access to care is a central goal of PACT that could be at odds with metrics encouraging alternatives to face-to-face visits, especially if access is measured as time to an outpatient appointment and if scheduling triage decisions are made by those outside the team (i.e., by a central scheduling unit).

Second, feedback from staff suggests there are opportunities to increase the transparency of the performance measurement process and better align it with the principles of team-based care. For example, posting metrics, their rationales, their intended effects on patient care, and their relationship to one or more PACT principles in an accessible and understandable format might help engage front-line staff. Providing adequate lead-time for staff education when new measures are implemented may increase the acceptability of new measures. Establishing open channels for communicating feedback to regional and national quality and performance administrators may provide an important avenue for improving the way performance measurement is used in VHA.

Third, the financial incentives tied to some metrics target physicians, but the work to meet performance goals is often done by other PACT team members. Study participants indicated that this was detrimental to team functioning and should be corrected.

Finally, there are calls for performance metrics to move towards “assessments that are broad-based, meaningful, and patient-centered.”12 One step toward this ideal would be to consider the implementation of various metrics in light of the type of care delivery changes they inspire. As staff noted, the response to some process-of-care measures, for example, has been simply to send patients reminders to complete laboratory testing that meets the requirements for specific measures. Once this metric is achieved, there may be a tendency for providers and administrators to conclude that excellent care for the condition has been achieved. The performance metric approach can thus turn attention away from the need for broader and more sustainable systems change, based on local quality improvement measures that can encourage teams to more deeply evaluate and improve their processes.7 For instance, Group Health, whose medical home pilot has led to reductions in cost and improvement in care quality, emphasized basic quality improvement techniques, such as root cause analysis and PDSA (Plan-Do-Study-Act) cycles, in its standard management practices.15

The work presented here suggests a need for more research to guide improvements in performance metric implementation in PACT. Future studies should examine how teams respond to performance metrics and reminders, and assess whether metrics are being used as a fulcrum for sustainable and systematic improvements in care. Future studies should also attempt to quantify the opportunity cost of responding to metrics, including information about time, workflow, and personnel engaged. Finally, there is a pressing need to examine patients’ perspectives on performance metrics and whether they are aligned with patient-identified priorities for care quality.16

Like any qualitative study, this study was designed to generate in-depth knowledge of issues and practices in a particular context, rather than generalizable findings. On the other hand, our findings represent input from a large number and broad variety of clinic staff from many different clinics in both urban and rural settings, suggesting broad relevance, at least within the VHA system in our region. Our study was designed to assess provider attitudes; whether patient experiences and outcomes mirror providers’ concerns is unclear. The larger qualitative study from which this study was derived was not designed specifically to assess staff perceptions of performance metrics; thus, some relevant observations on the topic may not have surfaced, or negative comments may have been more likely to surface with this approach. However, because these themes emerged spontaneously and commonly out of a broader discussion, we believe the findings represent observations of central importance to many staff.

CONCLUSIONS

Clinic staff representing multiple disciplines perceived performance metrics as time-consuming and not consistently aligned with PACT principles of care. Furthermore, staff perceived some metrics as having negative impacts on patient care and staff satisfaction. The gaps between the theory and reality of performance metric implementation highlighted by PACT team members are important to consider as the medical home model is more widely implemented. Further research is needed to identify performance metric implementation strategies that primary care staff perceive as more fully aligned with the overall goals of PACT.

Acknowledgements

We would like to acknowledge the contributions of all members of the VISN 20 PACT Demonstration Lab research team, as well as the primary care staff who shared their insights and experiences as research participants. Funding for the PACT Demonstration Laboratory initiative is provided by the VA Office of Patient Care Services.

Conflict of Interest

We have no conflict of interest, financial or otherwise, to disclose in relation to the content of this paper. Funding for the PACT Demonstration Laboratory initiative is provided by the VHA Office of Patient Care Services. The Department of Veterans Affairs did not have a role in the conduct of the study, in the collection, management, analysis, interpretation of data, or in the preparation of the manuscript. The views expressed in this article are those of the authors and do not necessarily represent the views of the Department of Veterans Affairs or the U.S. Government.

REFERENCES

1. Peikes D, Zutshi A, Genevro J, Smith K, Parchman M, Meyers D. Early Evidence on the Patient-Centered Medical Home. Final Report (Prepared by Mathematica Policy Research, under Contract Nos. HHSA290200900019I/HHSA29032002T and HHSA290200900019I/HHSA29032005T). AHRQ Publication No. 12-0020-EF. Rockville, MD: Agency for Healthcare Research and Quality. February 2012.

2. Chassin MR, Loeb JM, Schmaltz SP, Wachter RM. Accountability measures—using measurement to promote quality improvement. N Engl J Med. 2010;363:688–93. doi: 10.1056/NEJMsb1002320.

3. Lindenauer PK, Remus D, Roman S, et al. Public reporting and pay for performance in hospital quality improvement. N Engl J Med. 2007;356(5):486–496. doi: 10.1056/NEJMsa064964.

4. Kizer KW, Kirsh SR. The double edged sword of performance measurement. J Gen Intern Med. 2012;27(4):395–397. doi: 10.1007/s11606-011-1981-5.

5. Hayward RA. Performance measurement in search of a path. N Engl J Med. 2007;356(9):951–953. doi: 10.1056/NEJMe068285.

6. Casalino LP. The unintended consequences of measuring quality on the quality of medical care. N Engl J Med. 1999;341(15):1147–1150. doi: 10.1056/NEJM199910073411511.

7. Malina D. Performance anxiety—what can health care learn from K–12 education? N Engl J Med. 2013;369(13):1268–1272. doi: 10.1056/NEJMms1306048.

8. Petersen LA, Woodard LD, Urech T, Daw C, Sookanan S. Does pay-for-performance improve the quality of health care? Ann Intern Med. 2006;145(4):265–272. doi: 10.7326/0003-4819-145-4-200608150-00006.

9. Klein S. The Veterans Health administration: implementing patient-centered medical homes in the nation’s largest integrated delivery system. Commonwealth Fund. 2011;1537:1–24.

10. Powell AA, White KM, Partin MR, et al. Unintended consequences of implementing a national performance measurement system into local practice. J Gen Intern Med. 2012;27(4):405–412. doi: 10.1007/s11606-011-1906-3.

11. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15:1277–88. doi: 10.1177/1049732305276687.

12. Conway PH, Mostashari F, Clancy C. The future of quality measurement for improvement and accountability. JAMA. 2013;309(21):2215–2216. doi: 10.1001/jama.2013.4929.

13. Gray BM, Weng W, Holmboe ES. An assessment of patient-based and practice infrastructure-based measures of the patient-centered medical home: do we need to ask the patient? Health Serv Res. 2012;47:4–21. doi: 10.1111/j.1475-6773.2011.01302.x.

14. Glasziou PP, Bucan H, Del Mar C, et al. When financial incentives do more good than harm: a checklist. BMJ. 2012;345:1–5. doi: 10.1136/bmj.e5047.

15. Reid RJ, Coleman K, Johnson EA, et al. The Group Health medical home at year two: cost savings, higher patient satisfaction, and less burnout for providers. Health Affairs. 2010;29:835–43. doi: 10.1377/hlthaff.2010.0158.

16. Peikes D, Genevro J, Scholle SH, Torda P. The Patient-Centered Medical Home: Strategies to Put Patients at the Center of Primary Care. AHRQ Publication No. 11-0029. Rockville, MD: Agency for Healthcare Research and Quality. February 2011.