Abstract

BACKGROUND

Effective implementation of the patient-centered medical home (PCMH) in primary care practices requires training and other resources, such as online toolkits, to share strategies and materials. The Veterans Health Administration (VA) developed an online Toolkit of user-sourced tools to support teams implementing its Patient Aligned Care Team (PACT) medical home model.

OBJECTIVE

To present findings from an evaluation of the PACT Toolkit, including use, variation across facilities, effect of social marketing, and factors influencing use.

INNOVATION

The Toolkit is an online repository of ready-to-use tools created by VA clinic staff that physicians, nurses, and other team members may share, download, and adopt in order to more effectively implement PCMH principles and improve local performance on VA metrics.

DESIGN

Multimethod evaluation using: (1) website usage analytics, (2) an online survey of the PACT community of practice’s use of the Toolkit, and (3) key informant interviews.

PARTICIPANTS

Survey respondents were PACT team members and coaches (n = 544) at 136 VA facilities. Interview respondents were Toolkit users and non-users (n = 32).

MEASURES

For survey data, multivariable logistic models were used to predict Toolkit awareness and use. Interviews and open-text survey comments were coded using a “common themes” framework. The Consolidated Framework for Implementation Research (CFIR) guided data collection and analyses.

KEY RESULTS

The Toolkit was used by 6,745 staff in the first 19 months of availability. Among members of the target audience, 80 % had heard of the Toolkit, and of those, 70 % had visited the website. Tools had been implemented at 65 % of facilities. Qualitative findings revealed a range of user perspectives from enthusiastic support to lack of sufficient time to browse the Toolkit.

CONCLUSIONS

An online Toolkit to support PCMH implementation was used at VA facilities nationwide. Other complex health care organizations may benefit from adopting similar online peer-to-peer resource libraries.

Electronic supplementary material

The online version of this article (doi:10.1007/s11606-013-2738-0) contains supplementary material, which is available to authorized users.

Key words: primary care redesign, patient-centered care, evaluation, implementation research, quality improvement

Background and Objective

The patient-centered medical home (PCMH) is a widely accepted model for transforming the organization and delivery of primary care.1 PCMHs strive to provide comprehensive care, build partnerships with patients, and emphasize care coordination and communication. Primary care teams within integrated health systems require training and other resources to support their transformation into successful PCMHs and promote high-quality care.

One such resource is the online toolkit, where PCMH staff can easily access materials and strategies. Several free and fee-for-use toolkits are available, usually aiming to support initial PCMH implementation2–4 or PCMH certification.5,6 However, few toolkits focus on quality improvement (QI) once basic PCMH implementation has been completed, and published evaluations of toolkits are limited.

In this article, we present findings from a multimethod evaluation of a PCMH toolkit developed by the Veterans Health Administration (VA). The Consolidated Framework for Implementation Research (CFIR) was used to guide this evaluation, which synthesized data from web analytics, online surveys, and interviews with end users.

Innovation

In 2010, the VA began the Patient Aligned Care Team (PACT) Initiative to transform more than 800 VA primary care practices into PCMHs.7 As the nation’s largest integrated health care system, the VA established a range of PACT performance measures and defined three “pillars” of PACT success: access to care, care coordination, and practice redesign. The PACT Toolkit (“Toolkit”) was developed to share “tools” (that is, technical, clinical, or organizational innovations) linked to one or more performance measures or pillars.

The Toolkit is a VA intranet site that now contains more than 60 tools, which can be accessed and downloaded by any VA staff. It grew out of in-person regional learning collaboratives, where PACT teams gathered to learn process improvement strategies.8,9 Target Toolkit users are PACT coaches and team members, including providers, nurses, and clinic managers. Tool adoption is voluntary, and most tools can be customized to local needs.

Clinic staff created the component tools, which were vetted by physicians and nurse program leaders. Each tool’s webpage identifies the relevant performance pillar, briefly describes the tool, and provides download links (Figure available online). Examples of tools include EHR note templates, training materials, patient brochures, and care process flowmaps.

To promote use of the Toolkit, a two-pronged social marketing strategy employed direct contact and facilitation to stimulate website visits and the use of individual tools.10–12 Toolkit staff created a brand logo, sent e-brochures to distribution lists of collaborative participants, and e-mailed periodic updates to a Toolkit users’ listserv. They also gave presentations to VA opinion leaders and demonstrated the Toolkit at each regional collaborative. Collaborative attendees were given promotional brochures and encouraged to share information about the Toolkit with colleagues.

Design and Participants

Our evaluation integrated web analytic data, online survey responses, and interview responses. Because the Toolkit was developed as an enhanced Microsoft SharePoint® website, we used SharePoint’s web analytics function to measure the number of website visits by unique users and facilities. We present data from the Toolkit launch in September 2011 through March 2013.

The CFIR guided survey design and interview questions.13 This framework comprises five domains: intervention (i.e., Toolkit) characteristics, outer setting (e.g., health care system policies), inner setting (e.g., facility characteristics, local culture), characteristics of individuals involved, and implementation processes (e.g., dissemination). We focused on the inner setting and individual characteristics domains. The intervention characteristics domain was measured using respondent ratings of the Toolkit’s quality, and the implementation process domain was measured using a survey item asking how the respondent heard of the Toolkit. We assumed the outer setting remained constant across facilities because of the VA’s simultaneous, system-wide PACT rollout.

Staff at 142 VA medical centers and subsidiary outpatient clinics were asked to participate in an online survey and telephone interviews. To identify a survey sample frame, we compiled a list of collaborative attendees and other PACT team members. E-mail invitations to complete the anonymous survey using SurveyMonkey™ were sent to 2,899 people. From June to August 2012, we received 893 responses (a 30.8 % response rate). As shown in Table 1, 65 % of respondents were primary care providers, RNs, or care managers, which are roles central to the PACT model. Respondents represented 136 facilities, from all geographic regions and VA facility complexity levels, for a facility response rate of 95.8 %. We excluded 305 respondents who were not PACT team members or were unsure, and 44 who were clerical associates; they were asked only about personal characteristics.
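The sample figures in this paragraph can be checked with a few lines of arithmetic. The sketch below uses only the counts reported in the text; the variable names are illustrative and are not taken from the evaluation's own analysis files.

```python
# Counts as reported in the text; variable names are illustrative assumptions.
invited = 2899                      # e-mail invitations sent
responses = 893                     # survey responses received
facilities_invited = 142            # VA medical centers asked to participate
facilities_responding = 136         # facilities represented among respondents
excluded_non_pact_or_unsure = 305   # not PACT team members, or unsure
excluded_clerical = 44              # clerical associates

# Individual and facility response rates, as percentages to one decimal place.
response_rate = round(100 * responses / invited, 1)
facility_response_rate = round(100 * facilities_responding / facilities_invited, 1)

# Analytic sample remaining after both exclusions.
final_sample = responses - excluded_non_pact_or_unsure - excluded_clerical

print(response_rate, facility_response_rate, final_sample)
```

The result of the subtraction matches the 544 survey respondents reported in the abstract and in Table 1.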

Table 1.

Respondent and Facility Characteristics

| Characteristic | Category | Survey respondents, n = 544 (%) | Interviewees, n = 32 (%) |
|---|---|---|---|
| Role in PACT | Provider (MD, NP, or PA) | 123 (23) | 3 (9) |
| | RN/care manager | 230 (42) | 13 (41) |
| | Clinical associate (LPN, MA, or health technician) | 142 (26) | 3 (9) |
| | Other (social worker, dietician, clinic manager, or pharmacist) | 34 (6) | 13 (41) |
| | Other administrative | 15 (3) | 0 (0) |
| Time in primary care and/or quality improvement | Less than 1 year | 48 (9) | 1 (3) |
| | 1 to 4 years | 218 (40) | 19 (59) |
| | 5 or more years | 250 (46) | 12 (38) |
| | Don’t work in primary care or QI | 13 (2) | 0 (0) |
| | Not provided | 15 (3) | 0 (0) |
| PACT training received | Regional level only | 154 (28) | 20 (62) |
| | Facility level only | 196 (36) | 5 (16) |
| | Both regional and facility level | 140 (26) | 6 (19) |
| | None | 54 (10) | 1 (3) |
| Work location(s) | VA Medical Center (VAMC) | 196 (36) | 24 (75) |
| | Satellite outpatient clinic | 306 (56) | 8 (25) |
| | Both VAMC and outpatient clinic | 27 (5) | 0 (0) |
| | Unknown/did not report workplace | 15 (3) | 0 (0) |
| Facility complexity* | High | 310 (57) | 18 (56) |
| | Medium | 118 (22) | 8 (25) |
| | Low | 107 (20) | 6 (19) |
| | Not provided or not defined | 9 (1) | 0 (0) |
| PACT region | West | 123 (23) | 2 (6) |
| | Central | 124 (23) | 3 (9) |
| | Mid-South | 122 (22) | 9 (28) |
| | Northeast | 75 (14) | 11 (34) |
| | Southeast | 98 (18) | 7 (22) |
| | Not provided | 2 (<1) | 0 (0) |

*VA assigns a standardized complexity score to each facility based on volume, teaching, research, and intensive care unit capability. This complexity score is not defined for three facilities

The final survey sample of 544 respondents received up to 23 questions focused on: Toolkit awareness and use, PACT involvement, personal characteristics, and organizational readiness for change. Respondents who had not heard of the Toolkit, had not visited the website, or whose facility had not implemented tools were skipped out of some questions. We included a final open-text field for comments; 124 respondents offered remarks.

Interview subjects were recruited from among the 1,147 PACT collaborative participants and 615 Toolkit super-users (defined as those who had browsed more than ten Toolkit webpages in the first 30 days after their initial visit). Because we suspected there might be regional and facility-level variation in PACT implementation, we purposively recruited individuals from each of five regions and three levels of facility complexity. We completed 35 interviews from August to October 2012; interviews with three staff who were not PACT team members were excluded from the analysis. Super-users comprised approximately 25 % of interviewees. As shown in Table 1, approximately half of interviewees were primary care providers or RNs/care managers.
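The super-user definition above can be expressed as a simple rule over a user's page-view timestamps. The following is a minimal sketch only: the function name, the input format (one timestamp per page view), and the choice to count the initial visit's page views toward the threshold are all assumptions, since the text does not describe how the web analytics data were actually processed.

```python
from datetime import datetime, timedelta

def is_super_user(page_views, min_pages=10, window_days=30):
    """Return True if a user viewed more than `min_pages` pages within
    `window_days` of their first recorded visit.

    page_views: list of datetime objects, one per page view (hypothetical format).
    """
    if not page_views:
        return False
    first_visit = min(page_views)
    cutoff = first_visit + timedelta(days=window_days)
    # Count views inside the window, including those on the first visit itself.
    views_in_window = sum(1 for t in page_views if t < cutoff)
    return views_in_window > min_pages

# Usage: 11 views on consecutive days qualifies; exactly 10 does not.
start = datetime(2011, 9, 1)
eleven_views = [start + timedelta(days=i) for i in range(11)]
ten_views = eleven_views[:10]
print(is_super_user(eleven_views), is_super_user(ten_views))
```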

Semistructured telephone interviews elicited detailed descriptions of facility- and individual-level Toolkit use, enriching survey findings and grounding our understanding of the data. Each 30-minute interview was recorded with the interviewee’s consent. The interview guide touched on several CFIR domains, including: (1) demographic information (individual characteristics), (2) awareness and adoption of the Toolkit (implementation process), and (3) regional/facility characteristics and culture (inner setting) contributing to Toolkit use. A note-taker produced notes for review; quotations were confirmed from recordings. We followed a directed content analysis strategy14 using a stepped procedure in which three analysts coded the interviews to ensure reliability. Coded interviews were then used to identify common themes.

This evaluation was determined to be non-human subject research by the Stanford University Institutional Review Board.

Main Measures

We used specific survey items to define three Toolkit use outcomes: awareness of the Toolkit, use of the website, and tool implementation at the facility. For tool implementation, we excluded respondents who worked only at subsidiary outpatient sites and collapsed individual responses to the facility (medical center) level; we inferred that affirmative responses were correct when other responses from the same facility were negative. Facility-level values for primary care patient population and facility complexity were attributed to each respondent.
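The facility-level collapsing rule described above can be sketched as follows: respondents who work only at outpatient sites are dropped, and a facility counts as having implemented a tool if any remaining respondent answered affirmatively. The record layout, the location labels, and the function names are hypothetical and serve only to illustrate the rule.

```python
from collections import defaultdict

def facility_implementation(responses):
    """Collapse respondent-level answers to one flag per facility.

    responses: iterable of (facility_id, work_location, implemented) tuples,
    where work_location is one of "vamc", "outpatient_only", or "both"
    (labels are assumptions made for this sketch).
    """
    by_facility = defaultdict(list)
    for facility_id, work_location, implemented in responses:
        if work_location == "outpatient_only":
            continue  # excluded from the tool-implementation outcome
        by_facility[facility_id].append(implemented)
    # An affirmative response is treated as correct even when other
    # responses from the same facility are negative.
    return {fid: any(answers) for fid, answers in by_facility.items()}

def percent_implemented(facility_flags):
    """Percentage of facilities with at least one affirmative response."""
    if not facility_flags:
        return 0.0
    return 100 * sum(facility_flags.values()) / len(facility_flags)

# Usage with made-up responses for three facilities.
toy = [
    ("A", "vamc", False),
    ("A", "vamc", True),
    ("B", "vamc", False),
    ("B", "outpatient_only", True),  # dropped, so facility B stays negative
    ("C", "both", True),
]
flags = facility_implementation(toy)
print(flags, round(percent_implemented(flags), 1))
```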

We constructed multivariable logistic regression models for the Toolkit awareness and website use outcomes, with predictors drawn from CFIR domains as described above. Because respondents were nested within facilities, cluster robust standard errors15 were calculated. A block bootstrap (10,000 re-samplings) of each model was created to address small sample sizes relative to covariate sets16 and nesting of respondents within facilities.17 This procedure repeatedly took a subsample of our survey data, estimated a statistic, and then aggregated all these subsample statistics to reflect likely activity beyond our survey responses. Bias-corrected 95 % confidence intervals are reported for all models. Analyses were conducted using Stata® v11.1.
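To illustrate the resampling idea, the sketch below runs a cluster (block) bootstrap in which whole facilities, rather than individual respondents, are drawn with replacement. It is a simplified illustration and not the analysis reported here: it uses a plain proportion in place of the logistic regression models, a percentile interval in place of the bias-corrected interval, and Python in place of Stata. All names and the toy data are assumptions.

```python
import random

def cluster_bootstrap_ci(clusters, statistic, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile confidence interval from a cluster bootstrap.

    clusters: dict mapping a facility id to a list of respondent records.
    statistic: function taking a flat list of records and returning a number.
    """
    rng = random.Random(seed)
    ids = list(clusters)
    estimates = []
    for _ in range(n_resamples):
        # Draw facilities with replacement; every respondent in a drawn
        # facility is kept, which preserves within-facility correlation.
        drawn = rng.choices(ids, k=len(ids))
        sample = [rec for fid in drawn for rec in clusters[fid]]
        estimates.append(statistic(sample))
    estimates.sort()
    lo_idx = max(0, int((alpha / 2) * n_resamples))
    hi_idx = min(n_resamples - 1, int((1 - alpha / 2) * n_resamples) - 1)
    return estimates[lo_idx], estimates[hi_idx]

def proportion(records):
    """Share of records equal to 1 (e.g., respondent had heard of the Toolkit)."""
    return sum(records) / len(records)

# Toy data: 1 = heard of the Toolkit, 0 = had not, grouped by facility.
toy_clusters = {
    "A": [1, 1, 0, 1],
    "B": [1, 0, 0],
    "C": [1, 1, 1, 1, 0],
    "D": [0, 1],
}
lo, hi = cluster_bootstrap_ci(toy_clusters, proportion)
print(lo, hi)
```

Resampling at the facility level is what distinguishes this from an ordinary bootstrap; resampling individual respondents would understate uncertainty when answers within a facility are correlated.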

Key Results

Awareness and Use of the Toolkit

Web analytic data showed that 6,745 unique users visited the Toolkit website in the first 19 months, with an average of 1,108 new and return users per month. Usage was dispersed across all 21 VA regional networks, with a median of 271 users per network. Unique users represented 32.8 % of the 20,300 VA primary care providers and staff implementing PACT.

Survey responses indicated that 80.3 % of respondents had heard of the Toolkit from a variety of sources, including Toolkit promotional emails (46 %), emails from colleagues (14 %), word-of-mouth (8 %), and collaborative sessions (42 %; multiple responses total greater than 100 %). As shown in Table 2a, no significant differences in Toolkit awareness were observed across individual characteristics or inner setting features we measured, including team roles, medical center or outpatient location, facility complexity, or facility primary care enrollment.

Table 2.

Predictors of Toolkit Awareness and Website Access by Survey Respondents

| Covariate* | A. Heard of Toolkit: odds ratio | A. Heard of Toolkit: 95 % CI† | B. Visited website: odds ratio | B. Visited website: 95 % CI† |
|---|---|---|---|---|
| Role in PACT (ref. physician) | | | | |
| Nurse (RN) | 1.09 | 0.63-1.91 | 1.72 | 0.93-2.96 |
| Clinical associate | 0.83 | 0.44-1.60 | 0.60 | 0.32-1.15 |
| Received facility-level training | 1.54 | 0.93-2.50 | 1.41 | 0.80-2.42 |
| Received regional training | **2.13** | **1.31-3.43** | **2.07** | **1.23-3.56** |
| Works at VA Medical Center (ref. outpatient site only) | 0.87 | 0.56-1.47 | 1.24 | 0.74-2.07 |
| Facility complexity (decreasing) | 1.03 | 0.78-1.36 | 1.13 | 0.89-1.41 |
| Primary care patients at facility‡ | 1.09 | 0.92-1.28 | 1.06 | 0.90-1.27 |

*Bolded estimates are statistically significant, p < 0.05

†Confidence intervals. Bootstrapped confidence intervals are presented in order to consider variance observed from resampling across the distribution of responses in successive bootstrapped samples

‡Facility primary care patient counts are in units of 10,000 patients as of August, 2012

Among survey respondents who had heard of the Toolkit, 70.3 % had browsed the website. Of those who had not, 73 % cited not having time as the main reason; 16 % cited having no need to adopt tools and 5 % lack of interest. Different categories of users were equally likely to have visited the website, with no significant differences observed across team roles, practice location, facility complexity, or facility primary care enrollment (Table 2b).

Survey respondents who browsed the Toolkit gave it an average quality rating of 3.77/5. Of respondents who visited the website, 34 % spent an hour or more there; 62 % reported having downloaded a tool, and 7 % requested a tool that requires clinical application support for implementation. Ten percent reported having uploaded or suggested a tool, but only 4 % had contributed to an online discussion forum.

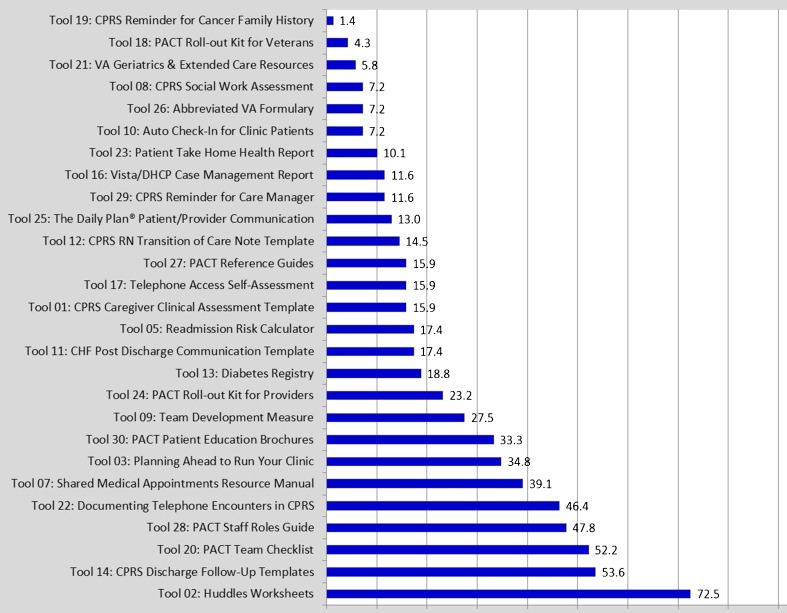

Aggregating multiple responses per facility showed that 65.1 % of facilities had implemented one or more PACT tools. Figure 1 shows how frequently individual tools were adopted.

Figure 1.

Frequency of tool implementation among facilities (n = 69).

The majority of interviewees expressed a favorable view of the Toolkit. Interviewees reported visiting the Toolkit for a number of reasons, including to address a specific quality deficit (approximately one quarter of interviewees) and to learn how other clinics solved implementation problems (approximately one third of interviewees). One interviewee expressed support for the Toolkit, saying, “I wish…we could have seen it early on in [PACT] development so that we didn’t have to reinvent the wheel…One of the things we had done before the Toolkit was…email around to see who had a best practice” [IntM]. Another explained how they use the Toolkit, saying, “When I have to implement something, I go to the tool pages, like the Huddle Worksheet, which is good for new providers and teams…working together as a team, and communication…” [IntR]. Interviewees were most interested in point-of-care support tools, patient materials, and PACT training tools.

Another frequently cited use of the Toolkit was to support PACT training efforts. For example, one interviewee reported, “When we do local PACT training…I go in and access some of the tools…I actually use it as a training tool” [IntC]. This finding from interview analysis was supported by survey comments. For example, one survey respondent commented, “I work in PACT Learning Center education and include the PACT Toolkit in every session” [SR730].

Impact of Training on Toolkit Use

Survey respondents who reported receiving PACT training at the regional level were significantly more likely to have heard of the Toolkit and to have visited the website. However, receipt of facility-level training was not associated with Toolkit awareness or use (Table 2a, b).

Interviews revealed variation in content and depth of local PACT training sessions. Some interviewees described a top-down training approach where coaches trained at a PACT Center of Excellence, then returned to the facility to deliver standardized content. Some interviewees indicated their clinics were closed so staff could attend training sessions, yet others only mentioned attending monthly in-service trainings. Finally, some interviewees indicated they received almost no formal training. One subject said, “[I]…just had PACT virtual training…PACT info not provided in orientation; what I learned, I found on my own…” [IntL].

Impact of Facility Environment on Toolkit Use

Almost half (46.2 %) of survey respondents who indicated that their facility had implemented one or more tools reported that it was very or somewhat easy to implement new tools to improve primary care there, while 25.8 % reported it was very or somewhat difficult. Similarly, more respondents (44.2 %) reported that no special approval would be needed to implement a tool than reported permission would be required (31.2 %) or were unsure (24.6 %).

To better understand the local culture within which the Toolkit was used, we asked interviewees about their familiarity with QI and motivations to engage in it. Interviewees were, for the most part, familiar with local performance measurement. One interviewee reported that their facility has “monthly performance meetings where we go over that data…then spread out the information to the separate teamlets…” [IntM]. Another indicated that facility leadership plays a role in disseminating information about performance: “We know all our performance measures; we are updated regularly by…leadership…” [IntD].

Dissemination of performance data, by quality teams or facility leadership, was another recurring theme. Almost half of interviewees reported regularly seeking or receiving local performance feedback. They were also aware of the consequences for meeting or failing to meet specific goals. One respondent described these positive and negative consequences, saying, “The penalty is having to give action plans every month…The reward is that you get lots of verbal praise when a facility goes into the green. We are recognized at a monthly ceremony…the [PACT] metrics are part of performance pay for [certain staff positions]” [IntAC].

Survey comments provided additional information for explaining Toolkit use within a facility. Comments reflected three themes (Table 3). Some praised the Toolkit’s usefulness. Another group thought the Toolkit would be helpful if they had time to use it. The last group indicated that using the Toolkit would represent yet another burden within the already stressful environment of PACT implementation. Comments also identified a number of specific barriers to Toolkit use, including: (1) insufficient time to implement required PACT elements, let alone optional elements like the Toolkit; (2) inadequate staff; (3) insufficient PACT training; (4) out-of-touch or uncaring leadership; and (5) insufficient space or equipment.

Table 3.

Select Comments to Open-Ended Survey Items, by Theme

| Theme | Comment |
|---|---|
| Toolkit useful | Toolkits were very valuable in helping to set up the PACT teamlets and educate staff. [SR73] |
| | As a manager, I find the PACT toolkit very helpful in assisting me to develop processes for the PACT nursing staff. [SR79] |
| | Really enjoy the PACT toolkit, find it useful and helpful for shared improvements for our facility and PACT program. [SR209] |
| Toolkit potentially useful but barriers hinder its use | I would probably use the PACT Toolkit if I had more time to access it. I function as the RN Care Manager for two teamlets and am so busy managing the day to day patient issues, that I don’t get the luxury of accessing the Toolkit. [SR170] |
| | From the short time I have scanned the [Toolkit web] site, it looks like there is a lot of good information available, but due to short staffing, I have been unable to indulge any further. [SR115] |
| | Having the time to really sit and look at the wonderful things accessible to PACT has been less than achievable. [SR240] |
| Barriers preclude Toolkit use | Come and observe the nurses here and then tell us when we have time to download, upload or reload all of this…three messages on my phone in the time it took for this…survey. [SR926] |
| | I would not use [the Toolkit] even if I [had known] about it. The providers can barely get through the heavy load we have now, much less take time out for this stuff…we are drowning. [SR691] |
| | I really have tried to implement PACT, but without cooperation from the administration, and the constant roadblocks that are thrown at us daily, there is very little…chance for this to succeed. [SR191] |
| | It is impossible to read and utilize the PACT Toolkits when we are not staffed with the appropriate 3:1 ratio and no time is carved out for administrative duties…PACT doesn’t work without having all the [resources] you need. It is more than a PACT Toolkit. [SR827] |
| | Too much info…not enough training. Not enough staff to work [the Toolkit] effectively…not enough office space; teams are spread out. Unable to collaborate with other team members effectively. Poor continuity on the teams as they are so divided…I don’t have time to tell more. Back to work. [SR94] |
| | I’ve been unable to take the time to review the toolkit…PACT activities require more resources at our level. We’re pretty strapped. [SR322] |

Discussion

We evaluated use of an online interactive Toolkit enabling PCMH teams to share QI tools across a national health care system. Website data show that a large number and diverse set of VA staff used the Toolkit. Survey data and interviews show that users rated the Toolkit’s quality highly, and many spent substantial time on the website. Interviews and survey comments provided insights about individual and organizational factors that facilitated use of the Toolkit, but also identified barriers to use.

Several toolkits have been developed by professional societies,2,18 state medical organizations,3,19 or health plans4 to provide primary care practices with the basic building blocks for PCMH implementation. Other toolkits, often fee-based, contain extensive detail to help practices achieve PCMH certification from the National Committee for Quality Assurance or other organizations.5,6 However, these toolkits provide limited support for QI activities, and we are unaware of published evaluations of them.

Outside primary care, Speroff found that an online toolkit was less likely to change care processes than an online collaborative, although neither intervention improved long-term outcomes.20 Supporters view toolkits as empowering staff to customize solutions,21 while others view toolkits as passive resources requiring staff motivation to adopt change.20 DeWalt found clinic staff were willing to adopt “concise and actionable” tools but required some knowledge of QI methods to succeed.22 Our study did not measure clinical outcomes, but is the first to examine the combination of an online toolkit containing concise, actionable tools with in-person collaboratives.

Dissemination of quality improvement techniques presents serious challenges. Statistical analyses of CFIR’s individual characteristic and inner setting domains showed a broad reach of Toolkit awareness and use across medical centers and outpatient settings and from facilities of all sizes, complexities, and regions. This suggests that social marketing is one key to successful dissemination.

Another key is social interaction. Participation in regional PACT training sessions promoted awareness and use of the Toolkit; facility-level training, however, did not. We hypothesize that in-person interaction outside the PCMH team helps explain this difference. Moreover, qualitative results suggest that participation in external and/or internal networks (e.g., learning collaboratives or listservs) promoted Toolkit use and that recommendations from trusted colleagues were especially influential.23 Combined with interview findings about variations in training across facilities, these results suggest that future toolkit efforts may benefit from standardizing local PCMH training content and providing opportunities for out-of-team social interaction.

QI culture also plays a role. Aspects of a facility’s inner setting, including awareness about performance, a learning culture, a supportive climate, and readiness for change were associated with Toolkit use.13 Interviewees highlighted a perceived need for improving local deficits as a driver of performance improvement, and several indicated that a local performance focus was conducive to Toolkit use. Survey respondents in facilities that had implemented tools were more likely than not to report that it was easy to implement new tools there. Survey comments also describe aspects of local environments that are less favorable for Toolkit use, including lack of leadership support and inadequate time to identify and adopt tools.

The barriers to Toolkit use highlighted by our qualitative results may also reflect the inner-setting complexity of launching the Toolkit during the VA’s larger PCMH transformation. Staff whose time is most occupied with patient care seem to have benefitted less from the Toolkit than those whose roles (e.g., care managers) enabled them to review it in depth. But interviewees also report using the Toolkit to learn how other facilities addressed PACT implementation challenges. Thus, in the midst of system-wide primary care transformation, an online toolkit is an accessible knowledge-sharing resource that may allow facilities struggling with PCMH implementation to learn from more successful sites.

Some limitations should be considered when interpreting these findings. Although our survey response rate was good, non-response bias cannot be ruled out. Interview responses may not reflect the range of persons who declined to be interviewed. Data collection occurred during PACT implementation; use and perceptions of the Toolkit may change once PACT becomes routine system-wide. Lastly, we could not evaluate the Toolkit’s effect on provider behavior, a subject for future research.

The VA successfully developed an online peer-to-peer Toolkit to share QI tools across PCMHs nationwide. Other health systems may benefit from developing such online resources. Our findings suggest that they can enhance toolkit use by using systematic social marketing, providing standardized training and opportunities for social interaction, and emphasizing performance measurement. System-level and local leadership, as well as adequate time and resources for clinicians to implement PCMH, can also help overcome barriers to toolkit use.

Electronic supplementary material

(DOC 583 kb)

(XLSX 16 kb)

Acknowledgments

Contributors

The authors wish to thank the following Toolkit development staff: Jenny Barnard, BA; Nina Smith, MPH; Tonya Reznor, BA; Carla Alvarado, MPH; Deborah Griffith, Ed.D.; Gail Edwards, RN. The authors also thank the editor and JGIM reviewers for their helpful critiques and suggestions for this article.

Funding

This study was funded by a grant from the VA Office of Systems Redesign with additional in-kind support from the VA Center for Applied Systems Engineering (VA-CASE).

Prior Presentation

Portions of this article were presented as a poster at the Annual Meeting of the Society of Behavioral Medicine, 21 March 2013.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Disclaimer

The views expressed in this article are those of the authors and do not necessarily reflect the position or policy of the Department of Veterans Affairs or the US government.

References

- 1. Stange KC, Nutting PA, Miller WL, et al. Defining and measuring the patient-centered medical home. J Gen Int Med. 2010;25(6):601–612. doi: 10.1007/s11606-010-1291-3.

- 2. American Academy of Pediatrics. Building your medical home. http://www.pediatricmedhome.org/progress_summary/tools_index.aspx. Accessed November 1, 2013.

- 3. Iowa Healthcare Collaborative. Medical Home Toolkit. http://www.ihconline.org/aspx/general/page.aspx?pid=12. Accessed October 30, 2013.

- 4. Anthem Blue Cross. Patient-centered primary care program – provider toolkit. http://www.anthem.com/wps/portal/ca/provider?content_path=provider/f0/s0/t0/pw_e191353.htm&label=Patient-Centered%20Primary%20Care%20Program%20%E2%80%93%20Provider%20Toolkit&rootLevel=3. Accessed November 2, 2013.

- 5. Burton RA, Devers KJ, Berenson RA. Patient-Centered Medical Home Recognition Tools: A Comparison of Ten Surveys’ Content and Operational Details. Washington, DC 2012.

- 6. i2i Systems. PCMH Toolkit. http://www.i2isys.com/resource-center/i2iToolkits/. Accessed October 29, 2013.

- 7. Rosland AM, Nelson K, Sun H, et al. The patient-centered medical home in the Veterans Health Administration. Am J Man Care. 2013;19(7):e263–e272.

- 8. Ayers LR, Beyea SC, Godfrey MM, Harper DC, Nelson EC, Batalden PB. Quality improvement learning collaboratives. Qual Manag Health Care. 2005;14(4):234–247. doi: 10.1097/00019514-200510000-00010.

- 9. Boushon B, Provost L, Gagnon J, Carver P. Using a virtual breakthrough series collaborative to improve access in primary care. Jt Comm J on Qual Patient Saf. 2006;32(10):573–584. doi: 10.1016/s1553-7250(06)32075-2.

- 10. Kitson A, Harvey G, McCormack B. Enabling the implementation of evidence based practice: a conceptual framework. Qual Health Care. 1998;7:149–158. doi: 10.1136/qshc.7.3.149.

- 11. Luck J, Hagigi F, Parker LE, Yano EM, Rubenstein LV, Kirchner JE. A social marketing approach to implementing evidence-based practice in VHA QUERI: the TIDES depression collaborative care model. Implement Sci. 2009;4:64. doi: 10.1186/1748-5908-4-64.

- 12. Ling JC, Franklin BA, Lindsteadt JF, Gearon SA. Social marketing: its place in public health. Annu Rev Public Health. 1992;13:341–362. doi: 10.1146/annurev.pu.13.050192.002013.

- 13. Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50. doi: 10.1186/1748-5908-4-50.

- 14. Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–1288. doi: 10.1177/1049732305276687.

- 15. Williams RL. A note on robust variance estimation for cluster-correlated data. Biometrics. 2000;56(2):645–646. doi: 10.1111/j.0006-341X.2000.00645.x.

- 16. Efron B, Tibshirani R. An introduction to the bootstrap. New York: Chapman & Hall; 1993.

- 17. Cameron AC, Gelbach JB, Miller DL. Bootstrap-based improvements for inference with clustered errors. Rev of Economics and Statistics. 2008;90:414–427. doi: 10.1162/rest.90.3.414.

- 18. American College of Physicians. ACP Practice Advisor. https://www.practiceadvisor.org/home. Accessed October 30, 2013.

- 19. Michigan Primary Care Consortium. Patient-centered medical home toolkit. http://www.mipcc.org/tools-and-more/patient-centered-medical-home-toolkit. Accessed November 1, 2013.

- 20. Speroff T, Ely EW, Greevy R, et al. Quality improvement projects targeting health care-associated infections: comparing Virtual Collaborative and Toolkit approaches. J Hosp Med. 2011;6(5):271–278. doi: 10.1002/jhm.873.

- 21. Eagle KA, Gallogly M, Mehta RH, et al. Taking the national guideline for care of acute myocardial infarction to the bedside: developing the guideline applied in practice (GAP) initiative in Southeast Michigan. Jt Comm J Qual Improv. 2002;28(1):5–19. doi: 10.1016/s1070-3241(02)28002-5.

- 22. DeWalt DA, Broucksou KA, Hawk V, et al. Developing and testing the health literacy universal precautions toolkit. Nurs Outlook. 2011;59(2):85–94. doi: 10.1016/j.outlook.2010.12.002.

- 23. Borbas C, Morris N, McLaughlin B, Asinger R, Gobel F. The role of clinical opinion leaders in guideline implementation and quality improvement. Chest. 2000;118(2 Suppl):24S–32S. doi: 10.1378/chest.118.2_suppl.24S.
