Abstract

Despite extensive evidence that adults and children rapidly integrate world knowledge to generate expectancies for upcoming language, little work has explored how this knowledge is initially acquired and used. We explore this question in 3- to 10-year-old children and adults by measuring the degree to which sentences depicting recently learned connections between agents, actions and objects lead to anticipatory eye-movements to the objects. Combinatory information in sentences about agent and action elicited anticipatory eye-movements to the Target object in adults and older children. Our findings suggest that adults and school-aged children can quickly activate information about recently exposed novel event relationships in real-time language processing. However, there were important developmental differences in the use of this knowledge. Adults and school-aged children used the sentential agent and action to predict the sentence final theme, while preschool children’s fixations reflected a simple association to the currently spoken item. We consider several reasons for this developmental difference and possible extensions of this paradigm.

Keywords: sentence processing, eye tracking, event learning, language development

Fluent listeners understand spoken sentences in their native language by integrating informative cues that span multiple words in real-time and actively generating expectations about upcoming language. For example, adults and preschool children can predictively interpret relationships that extend across an agent and an action (e.g. The pirate hides the…) to determine the likelihood of future words in a sentence (e.g. treasure vs. cat; Borovsky, Elman, & Fernald, 2012; Kamide, Altmann, & Haywood, 2003). This example highlights two (of the many) cognitive mechanisms recruited for simple spoken language interpretation: 1) the ability to rapidly activate knowledge of world events underlying multi-word contingencies and 2) the ability to generate predictions during language processing. The developmental timescales of these two processes may not be identical. While it is thought that the knowledge needed to interpret combinatorial relationships in language is gradually acquired across childhood via extensive world and linguistic experience, basic predictive mechanisms of language processing are evident from at least infancy, though this ability is gradually refined with age (Fernald, Pinto, Swingley, Weinberg, & McRoberts, 1998; Fernald, Thorpe, & Marchman, 2010; Fernald, Zangl, Portillo, & Marchman, 2008). Yet listeners of all ages often encounter (and comprehend) spoken language that describes infrequent or novel situations. Until recently, combinatorial processing has only been examined in cases where the event knowledge underlying these sentential and lexical relationships is highly familiar, and only a single prior study has examined how this knowledge becomes instantiated in sentential processing (and only in adults; Amato & MacDonald, 2010). Here we ask: How do adults and children interpret language depicting novel events?
We investigate developmental differences in children’s and adults’ online processing of novel event relationships and examine what these differences reflect. After familiarizing adults and children with novel (cartoon) relationships between agents, actions and objects (such as monkeys riding buses), we measured their subsequent online comprehension of these events conveyed in simple transitive sentences using a visual world eye-tracking task.

Linguistic processing in the visual world

Paradigms that measure eye-movements in response to spoken language have significantly advanced our understanding of how children and adults engage in real-time linguistic processing. In this method, variously termed the Visual World Paradigm (VWP) or Looking-while-Listening (LWL) method, visual attention towards objects is monitored as speech unfolds (Fernald, et al., 2008; Huettig, Rommers, & Meyer, 2011; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995). Because listeners often orient their gaze towards an object before its label is completely spoken (Allopenna, Magnuson, & Tanenhaus, 1998; Dahan, Magnuson, & Tanenhaus, 2001), or even before it is mentioned (Altmann & Kamide, 1999; Altmann & Mirkovic, 2009; Kamide, et al., 2003), gaze is used as an index of real-time comprehension of language (Tanenhaus, Magnuson, Dahan, & Chambers, 2000).

These eye-tracking paradigms have revealed that adults and children can make use of a variety of linguistic and nonlinguistic cues to generate predictions about likely sentence continuations. For example, adults and children as young as two can actively predict a thematically related item (e.g. cake or cookie) when provided a highly selective verb (e.g. eat; Altmann & Kamide, 1999; Fernald, et al., 2008; Mani & Huettig, 2012). More recently, predictive linguistic processing has been observed in cases that require sensitivity to higher order contingencies that extend across multiple linguistic items. For example, adults and children as young as three can make use of combinatorial information that exists across a sentential agent and an action to generate differential expectations of likely sentence themes (Borovsky, et al., 2012; Kamide, et al., 2003).

This process is demonstrated in a study where adults and children (aged 3–10) heard sentences like, The pirate chases the ship, while simultaneously viewing a scene consisting of items that were thematically related to the agent “pirate” (e.g., SHIP and TREASURE), related to the action “chase” (e.g., SHIP and CAT) or unrelated to either agent or action (e.g., BONE; Borovsky, et al., 2012). After hearing the verb, even the youngest participants directed most of their fixations towards the SHIP, indicating that they had successfully integrated across the agent and action to anticipate the likely sentential theme. These results further revealed that listeners as young as age three incrementally integrated these combinatorial contingencies as the sentence unfolded. As soon as listeners heard the sentential agent (pirate), the majority of fixations were directed towards the agent-related items (SHIP and TREASURE). After the verb denoting the action was spoken (chases), they generated a small percentage of fixations towards the locally coherent item, CAT, even though it did not cohere with the global sentential message. Interestingly, even preschool aged listeners displayed fixation patterns similar to those of older children and adults on this task, suggesting that even young listeners are able to activate world knowledge to generate predictions about upcoming items while simultaneously considering less likely outcomes to a lesser degree. However, this prior research considered only highly familiar sentential relationships that were well known to even the youngest participants.

Learning about event relationships

How do adults and children interpret multi-word contingencies that they have only recently encountered, and which are not yet highly familiar? To succeed at this task, listeners need to initially encode and later (re)activate this event information when it is encountered in speech. A number of findings in the developmental learning literature suggest that even young children learn some aspects of lexical and event information from limited experience. For instance, children can acquire novel lexical mappings after a single exposure (Carey & Bartlett, 1978; Dollaghan, 1985; Heibeck & Markman, 1987), although these representations may not be as stable or fully developed as words that have been more extensively trained (McMurray, Horst, & Samuelson, 2012). There is also evidence that this ability may extend to information about novel events. For example, 3 to 7 year olds (Hudson & Nelson, 1983) and kindergartners (Fivush, 1984; Smith, Ratner, & Hobart, 1987) are able to provide well-organized and detailed accounts of events like a first day at school, or the steps in a recipe after only a single experience, although the amount of detail that children provide about these events improves with age. Similarly, infants and toddlers ranging from 11–30 months of age (Bauer & Fivush, 1992; Bauer & Mandler, 1989; Bauer & Shore, 1987; Mandler & McDonough, 1995) are able to re-enact novel event sequences by carrying out a previously observed series of actions with a group of toys after only a single observation. Therefore, it seems likely that even preschool-aged children may be able to quickly learn novel multi-word contingencies from events expressed a single time in stories.

The learning of combinatorial relationships of unfamiliar events has been recently explored in adults in an artificial language learning paradigm accompanied by a cartoon world (Amato & MacDonald, 2010). Adults were extensively exposed to novel events via a text-based artificial language (with an unfamiliar lexicon and grammar) accompanied by illustrations that depict various agents (cartoon monsters) performing various actions on objects. Self-paced reading times to sentences in this artificial language were subsequently used to assess knowledge of these event relationships. Although learners did not seem to show explicit awareness of the relationships trained in this paradigm, reading times indicated otherwise. Adult learners were faster to interpret sentences that contained highly frequent conjunctions of agents, actions and objects, compared to less frequent combinations.

This prior study clearly illustrates that adult learners can acquire novel combinatorial relationships in a complex and novel language through extensive training. However, it does not provide specific insight into a related but relatively more common process in language acquisition: that of how language learners of varying ages use a single prior exposure to a novel event to interpret their native language in real-time. One possibility is that children and adults would use information embedded in a single event as a basis for generating predictive expectancies in language. In one sense, an ability to quickly integrate a single prior experience into subsequent linguistic processing would be advantageous and could usefully guide expectations about similar events. For example, after taking a single ride in a car, a learner might form expectations about things that happen in all car trips, such as turning the key in the ignition, fastening a seatbelt or driving on a particular side of the road. But there is a fine line between useful generalization and inappropriate overextension of event knowledge. We may not want odd, rare, or unusual events to skew our general understanding of the world and language. In this case, single-exposure events may not be sufficient to influence later online interpretation of language, irrespective of age. Prior findings that 3–10 year old children succeed at rapidly interpreting well-known agent-action contingencies in simple sentences, also support the idea that children of varying ages may not differ in their interpretation of recently experienced events (Borovsky, et al., 2012).

A primary interest of this paper is a third possible outcome: learners of varying age (or prior knowledge) may differ in their ability to apply information about a single experience to interpreting language about similar events. The simplest possible effect is a traditional developmental progression; older (and more experienced) children may more effectively interpret recently experienced events through language than younger children. Prior developmental studies of event acquisition in 3-to-8 year-old children lend some support to this possibility. Older (school-age) children’s verbal reports of novel events (like recalling the sequence of a story) tend to include more detail and actions than those of younger (preschool-age) children (Fivush & Slackman, 1986). The inverse effect is also plausible. Younger children, who have less world experience and knowledge than older children, may be more willing to use a single cartoon experience linking various agents, actions, and objects as a basis for generating expectancies during real-time language comprehension.

The current study

Whether and how developmental differences exist in children’s interpretation of novel event-relationships in language gets at core questions of how children learn about the world from limited experience and how this knowledge interacts with language ability. In this study, we introduce a paradigm that begins to address these questions. As a first step, we ask whether and to what degree a single exposure to a novel event containing an unfamiliar combination of known agents, actions and objects (e.g. experiencing a story about a monkey riding a bus) could support subsequent incremental and predictive processing of language describing these same events. We have designed a task where adults and children aged 3–10 years are first exposed to brief but entirely novel situations in colorful and engaging stories that use vocabulary familiar to preschool children. For example, in a story, two agents (e.g. dog, monkey) might each perform two actions (e.g. eating, riding), with different objects (e.g. candy, bus, apple, car). We then use a visual world eye-tracking task to measure whether the event knowledge conveyed via these stories can support anticipatory sentence interpretation processes similar to those for known events.

Experiment 1

Methods

College-aged Participants

Fifty-six native English-speaking college students (36 F) between the ages of 18;4 and 24;6 (M = 20;7 years) participated in return for course credit. Inclusion criteria for participation were: normal or corrected-to-normal vision and normal hearing, no history of diagnosis or treatment for cognitive, attentional, speech, or language issues, and exposure to English as a primary language from birth. An additional 21 students participated but failed to meet these criteria. Three were excluded for an experimental error that resulted in a failure to collect eye-tracking data.

Child Participants

Sixty-three monolingual English-speaking children between the ages of 3 and 10 years (35 F, range: 3;4–10;1; M = 6;11 years) were recruited for this study from the San Diego metropolitan area through local fliers and ads or from families who had previously participated in developmental research. Inclusion criteria were: normal hearing and vision, no diagnosis of any type of speech, language, cognitive or attentional issue, and normal birth histories. Three other children were excluded for failing to complete the eye-tracking task.

Materials

Story design

Participants listened to four stories depicting novel events in a cartoon context in each version of the experiment. These stories established novel relationships between familiar agents, actions and themes. They consisted of frames of colorful images accompanied by child-directed speech. The story illustrations were developed using the Toondoo comic strip creation website (http://www.toondoo.com). Each frame of the story consisted of a 400 × 400-pixel illustration on a white background.

The story narrations were pre-recorded by a native English speaking female (AB) in a child-directed voice sampled at 44,100 Hz on a single channel and normalized offline to a mean intensity of 70 dB.

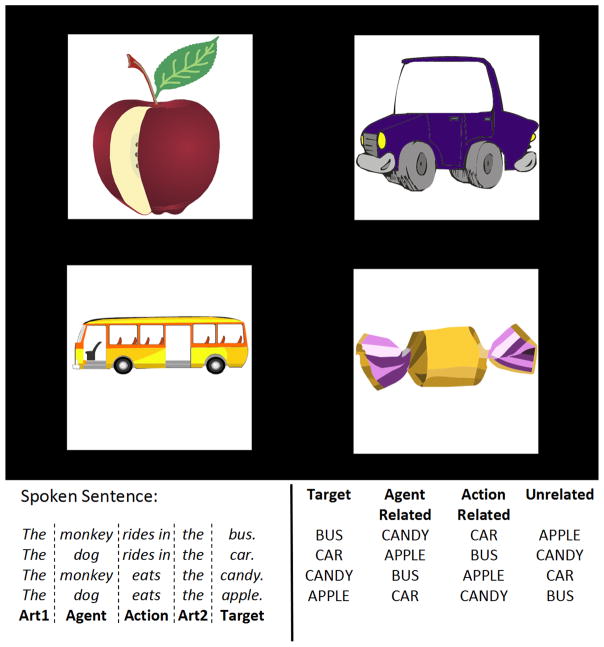

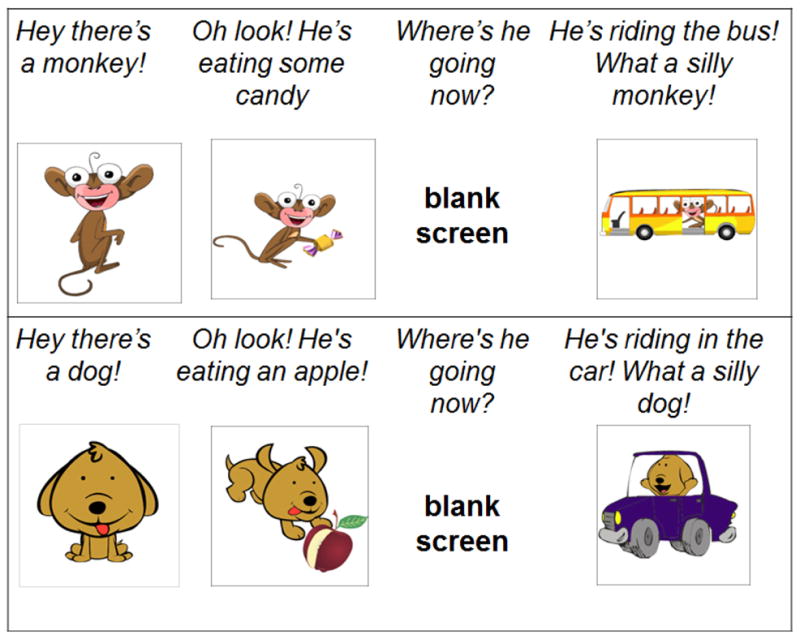

In any story, two agents were depicted as completing the same two actions with different objects (see Figure 1). Each story conformed to a standard sequence. First, an agent (a cartoon animal, e.g. MONKEY) would be visually presented before the narrator introduced the agent (e.g. Hey, there’s a monkey). This agent then would complete two different actions (e.g. eating an apple, riding in a bus), illustrated by the pictures and mentioned by the narrator (see Figure 1). Next, the second agent was introduced (e.g. DOG, Hey, there’s a dog!), and this agent completed the same two actions with different objects (e.g. eating candy and riding in a car). All agent-action-theme combinations from all stories are listed in the appendix.

Figure 1.

Illustration of a single version of a story in the study. Each image was presented a single time with accompanying speech (in italics above each image). The story was self-paced, and the experimenter advanced to the next image only when participants were attentive. Across versions, all possible combinations of agents and actions occurred.

This arrangement yielded four novel agent-action-theme pairings that appeared a single time in each story. In the story depicted in Figure 1, the four novel pairings were: monkey-eats-apple, monkey-rides-car, dog-eats-candy, dog-rides-bus. It is important to note that the narrator never mentioned all three elements of each relationship within the same sentence. For example, in the monkey-eats-apple relationship, the narrator introduced the monkey, and then stated that He’s eating an apple, but never explicitly stated that The monkey eats the apple. Although it is not likely that participants had any pre-existing experience with the uncommon events depicted in these stories, we shuffled the pairings of agents and themes across versions to control for the possibility that biases regarding the likelihood or plausibility of any event affected our experimental findings. All possible combinations of agents, actions and themes appeared across all versions of the study. For example, in one version the monkey might eat an apple and ride in a car, and in another would eat the candy and ride in a bus. Because the agent was never explicitly named by the speaker when mentioning the action, the same auditory stimulus was paired with the action-object relationship across counterbalanced versions. For example, the same recording of “He’s eating an apple,” was presented for any agent that performed this action (the dog or monkey, depending on the version). Therefore, the acoustic signal containing the spoken information about the event relationships was identical across versions.

Story norming

To determine whether children of all age groups would be able to understand and recall the story content, we asked a separate group of 39 children (10 three-to-four year-olds, 10 five-to-six year-olds, 12 seven-to-eight year-olds, and 7 nine-to-ten year-olds) to complete a story comprehension task. These children did not participate in the eye-tracking study. This task proceeded identically to the eye-tracking procedure except that children were asked to point to pictures corresponding to relationships within the story (e.g. Which one did the monkey eat?/Which one did the dog ride in?). Performance on this task was high among all age groups (ranging from 88% to 98% accuracy), and did not vary according to age group, F(3, 35) = 1.41, p = .25.

Sentence comprehension stimuli

After each story, participants completed a four-alternative forced-choice online sentence comprehension task. Eight sets of image/sentence quartets were constructed based on the relationships conveyed in the stories. Sentences corresponded to the agent-action-theme relationship conveyed in the story and conformed to a standard structure of Article – Agent – Action – Article – Theme. The example illustrated in Figures 1 and 2 consisted of four sentences:

The monkey rides the bus.

The monkey eats the candy.

The dog rides in the car.

The dog eats the apple.

Figure 2.

Illustration of stimuli and conditions in the sentence comprehension task. In each version of the study, participants heard eight out of 16 possible sentences. Each quartet of four objects was seen twice, with two out of the four possible sentences for each set presented. Across all versions, the position of each object was presented with equal frequency in each quadrant, and in each version, the target image appeared in each quadrant an equal number of times.

Images corresponded to the themes conveyed in the story and the sentences (e.g., BUS, CAR, APPLE and CANDY). For each sentence, the images would correspond to one of the following four image conditions: Target, Agent-Related, Action-Related, or Unrelated. For example, in the sentence, The monkey rides the bus, BUS would be the target image, CANDY would be the agent-related image, CAR would be the Action-Related image, and APPLE would be the Unrelated distractor. This arrangement yielded a completely balanced design for each quartet whereby each image would appear in each condition a single time (see Figure 2). This allowed each word and image to serve as its own control across versions and served to precisely balance out potential differences in saliency, preference or familiarity of the words and images across versions.
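The condition assignment described above can be sketched in a few lines of Python. This is only an illustration, not the authors' stimulus code: the `STORY` dictionary and the `image_conditions` function are hypothetical names, and the pairings encode the version in which the monkey rides the bus and eats the candy.

```python
# Hypothetical encoding of one story version: agent -> {action: theme}.
STORY = {
    "monkey": {"rides": "bus", "eats": "candy"},
    "dog": {"rides": "car", "eats": "apple"},
}


def image_conditions(story, agent, action):
    """Label each of the four theme images for one test sentence."""
    conditions = {}
    for story_agent, events in story.items():
        for story_action, theme in events.items():
            if story_agent == agent and story_action == action:
                conditions[theme] = "Target"          # same agent and action
            elif story_agent == agent:
                conditions[theme] = "Agent-Related"   # same agent, other action
            elif story_action == action:
                conditions[theme] = "Action-Related"  # other agent, same action
            else:
                conditions[theme] = "Unrelated"       # neither matches
    return conditions


# Conditions for "The monkey rides the bus."
print(image_conditions(STORY, "monkey", "rides"))
```

Running the function over all four sentences of a quartet places each image in each condition exactly once, which is the balance property the design relies on.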

Visual images of the themes were copied and enlarged from their original illustration in the story and placed on a white background in a 400 × 400 pixel square. The images depicted typical cartoon illustrations of each of the target items.

The auditory stimuli were recorded by the same female native English speaker as the stories (AB) in a child-directed voice, sampled at 44,100 Hz on a mono channel. Since we were interested in the timing of fixations that occurred across the sentence, we precisely controlled the duration of each sentential word by using Praat audio editing software (Boersma & Weenink, 2012), following the same procedure outlined in Borovsky et al. (2012). The timing of the sentential word durations was as follows: Art 1, 87 ms; Agent, 933 ms; Action, 737 ms; Art 2, 94 ms; Theme, 748 ms. This ensured that there was equivalent time in each sentence to process the stimuli and predict the sentence-final theme.
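As a worked illustration of these timings, the sketch below derives word onsets from the reported durations. It assumes that the words are contiguous, with no silence between them, and that time is measured from sentence onset; the source does not state either point, and the names `WORD_DURATIONS_MS` and `word_onsets` are hypothetical.

```python
from itertools import accumulate

# Word durations reported in the text, in milliseconds.
WORD_DURATIONS_MS = [
    ("Art 1", 87),
    ("Agent", 933),
    ("Action", 737),
    ("Art 2", 94),
    ("Theme", 748),
]


def word_onsets(durations):
    """Return {word: onset_ms}, assuming back-to-back words (an assumption)."""
    labels = [label for label, _ in durations]
    lengths = [length for _, length in durations]
    # Each onset is the summed duration of all preceding words.
    onsets = [0] + list(accumulate(lengths))[:-1]
    return dict(zip(labels, onsets))


ONSETS = word_onsets(WORD_DURATIONS_MS)
SENTENCE_MS = sum(length for _, length in WORD_DURATIONS_MS)
print(ONSETS, SENTENCE_MS)
```

Under these assumptions the theme begins 1851 ms after sentence onset and the sentence lasts 2599 ms, so any anticipatory window would end at the 1851 ms mark.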

For each version, 16 sentences were created of equal duration and acoustic intensity, and participants heard 8 out of 16 possible sentences in any single version of the study. Each quartet of four objects was presented twice, immediately after its corresponding story, with two out of the four possible sentences for each set presented. Across versions, the location of each object and target image appeared with equal frequency across all quadrants.

Procedure

Experimental Task

Adult and child participants were first seated in a comfortable, stationary chair in front of a 17-inch LCD display while the eye-tracker camera was focused. A computer running SR Research Experiment Builder software displayed audio and visual stimuli to the participants. Participants were instructed that they would hear a short story followed by some sentences. They were asked to listen carefully to the story and then either use the mouse (adults) or their hands (children) to point to the picture that “goes with the sentence.” Participants were given a single practice sentence before beginning the experimental task. After the practice trial, the eye-tracker was calibrated and validated with a manual 5-point display of a standard black-and-white 20-pixel bull’s-eye image.

First, participants heard a simple story that served to establish novel relationships between agents, actions and themes (see story example in Figure 1). Each story proceeded as a series of colorful digital cartoon images presented in silence for two seconds before the narration began. After the narration was complete, the image remained on the screen until the experimenter (for children) or participant (for adults) clicked the mouse to begin the next frame. For children, the experimenter only advanced the story when the child was attentive to the screen.

Each story was then followed by a visual world comprehension task where participants listened to two sentences as they viewed the four objects presented in the previous story (see sentence comprehension task example in Figure 2). Eye-movements were recorded at this time. Before each sentence, participants were instructed to fixate on a centrally located black-and-white 20-point bull’s-eye image. This served as a drift correction dot prior to the trial. After fixating to this image, the sentence comprehension task began.

Participants viewed a set of four images for 2000 ms before the sentence was spoken. These images remained on the screen after sentence offset and until the participant selected an image from the array with the mouse or by pointing. If needed, recalibration of the eye-tracker was performed between trials, but this was rarely necessary. Participants were given a break halfway through the study, and the entire task took approximately 5–10 minutes.

Eye-movement recording

Eye-movements were sampled at 500 Hz using an EyeLink 2000 remote eye-tracker with remote arm configuration. The eye-tracking camera was attached directly below the LCD display, and was adjusted so that the display and camera were 580–620mm from each participant’s right eye. The eye-tracking system automatically detected the position of a target sticker affixed to each participant’s forehead to accommodate for movements of head and eye-position relative to the camera.

Eye-movements were recorded during the sentence comprehension task from the moment the images initially appeared on the screen until the participant selected a picture. These eye-movements were automatically classified as saccades, fixations and blinks using the eye-tracker’s default threshold setting and were binned into 10-ms intervals offline for subsequent time course analyses.

Results

We first assess behavioral target picture selection responses to the sentence interpretation task as an index of whether participants of all ages understood the sentences and the task. We then focus on real-time interpretation of the sentences by analyzing moment-by-moment changes in eye-movements to the target and competitor pictures. In all of our analyses, we first compared adults to children to determine whether and to what degree gross developmental differences exist in the task. Because we were further interested in finer developmental differences within the relatively wide age-span of our child groups, we then sub-divided the children into four age groups: 1) Preschool-aged children (three-to-four years old, N=14), and three school-aged groups: 2) Five-to-six year olds (N=18), 3) Seven-to-eight year olds (N=16), and 4) Nine-to-ten year olds (N=15). Our logic for this subdivision was to compare preschool-aged children to early and later school-aged child groups with similar numbers of participants and age-spans.

Behavioral accuracy

After each sentence was spoken, adult and child participants selected the image that corresponded to the theme (Target) of the sentence either by pointing (children) or via mouse click (adults). Performance on this task was high in both groups, with very few errors in selecting the correct target picture. Children selected the incorrect picture 10 times out of 504 total trials (98.0% accuracy), and adults made a single error out of 448 trials (99.8% accuracy). All of the results reported below exclude these incorrect trials from the analyses.

Eye-movement analyses

We carried out several analyses on the eye-movement data to address two main questions: 1) Do adults and children generate predictive fixations for recently experienced events? and 2) Does fluency in interpretation of these novel event combinations change with age?

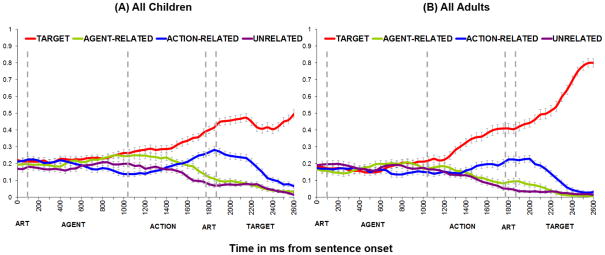

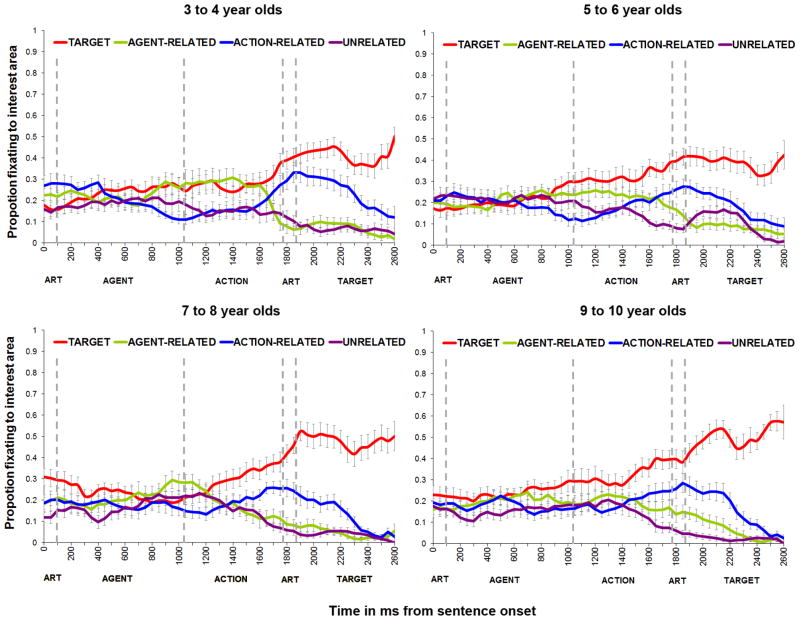

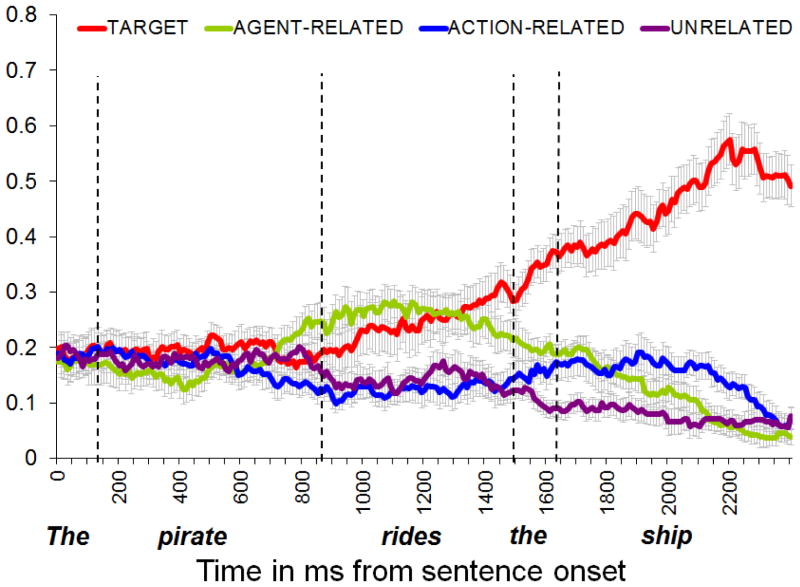

Timecourse visualization

We first illustrated the timecourse of fixations across the sentence for adult and child groups by calculating the mean proportion of time spent fixating to the Target, Agent-Related, Action-Related and Unrelated images in 50-ms time bins, averaged across all participants. Figure 3 illustrates the time-course of online sentence interpretation in adult and child groups. Figure 4 illustrates the time course of looks across the sentence in our pre-defined age subgroups (3–4 year olds, 5–6 year olds, 7–8 year olds, and 9–10 year olds).
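The binning step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis pipeline: the input format (one `(time_ms, area_of_interest)` pair per eye-tracking sample, with `None` for looks outside all four images) and the function name `fixation_proportions` are hypothetical, and counting off-image samples in the denominator is a choice the source does not specify.

```python
from collections import Counter, defaultdict

AOIS = ("Target", "Agent-Related", "Action-Related", "Unrelated")


def fixation_proportions(samples, bin_ms=50):
    """Proportion of samples on each area of interest per time bin.

    samples: iterable of (time_ms, aoi) pairs; aoi is None when gaze is
    outside all four images (assumed format, see lead-in).
    """
    counts = defaultdict(Counter)
    totals = Counter()
    for time_ms, aoi in samples:
        bin_start = (time_ms // bin_ms) * bin_ms  # left edge of the bin
        totals[bin_start] += 1
        if aoi in AOIS:
            counts[bin_start][aoi] += 1
    return {
        bin_start: {aoi: counts[bin_start][aoi] / totals[bin_start] for aoi in AOIS}
        for bin_start in sorted(totals)
    }


# Toy trial: five samples in the first bin, two in the second.
toy = [(0, "Target"), (10, "Target"), (20, "Unrelated"), (30, None),
       (40, "Target"), (50, "Agent-Related"), (60, "Agent-Related")]
print(fixation_proportions(toy))
```

Averaging these per-trial proportions across trials and then across participants would give curves of the kind plotted in the timecourse figures.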

Figure 3.

Timecourse of fixations to areas of interest in 50ms time bins for all adults and children during the sentence comprehension task in the first experiment.

Figure 4.

Timecourse of fixations to areas of interest in 50ms time bins for all children in all age groups during the sentence comprehension task in the first experiment.

Visual inspection of these time-course plots reveals several important fixation patterns. All groups show a relative increase in looks towards the Target object as the sentence unfolds. In most groups (all except for 3–4 year olds), these fixations appear to be anticipatory; that is, the fixation proportions to the Target items diverge from looks to all other objects before the Target word (the sentential theme) is spoken. Further, fixation proportions to the Agent-Related and Action-Related items increase soon after the agent and action are spoken, respectively. Across age groups, the relative pattern and timing of Target fixation divergence vary considerably. We explore these potential differences by initially comparing performance between all child and adult participants, and subsequently by comparing child performance across the predefined age subgroups in the analyses below.

Analysis of anticipatory fixations

In this task, we infer that participants have predicted the likely sentence ending if fixations towards the Target image are significantly greater than those to any other interest area (and remain so) before the onset of the Target word. In our analyses, we measure whether anticipatory fixations have occurred by measuring fixation proportions over the 50 ms time bin preceding the onset of the target word. We expect that if participants are predicting the not-yet-mentioned theme of the sentence, then their fixations towards the target items should exceed fixations towards any other single distractor at this time point. However, simple proportional measures of looks towards these interest regions violate assumptions of linear independence, because a larger mean fixation proportion towards one of the items necessarily results in fewer looks towards the other items. Instead, we carried out our analyses on a measure that defines the relative bias to view the Target object relative to other distractors, the log-gaze probability ratio (see Arai, van Gompel, & Scheepers, 2007; Knoeferle & Kreysa, 2012 for a similar approach). That is, in the 50 ms time bin preceding the onset of the sentential theme, we calculated three main log-gaze probability ratios¹: (1) Target vs. Agent-Related, log(P(Target)/P(Agent-Related)); (2) Target vs. Action-Related, log(P(Target)/P(Action-Related)); and (3) Target vs. Unrelated, log(P(Target)/P(Unrelated)). An additional advantage of log-gaze probability ratio measures is that they can range in value between positive and negative infinity, unlike simple proportion measures that are bounded between 0 and 1 (which additionally violates assumptions of homogeneity of variance). With log-gaze measures, a score of zero indicates that looks towards the Target and distractor are equivalent, while a positive score indicates that Target fixations exceed those of the distractor, and a negative score reflects the opposite.
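The ratio itself is simple to compute, as the sketch below shows. One detail is an assumption rather than something stated in this section: when either proportion is zero the logarithm is undefined, so the sketch adds a small constant `eps` to both terms. The value of `eps` and the function name `log_gaze_ratio` are hypothetical, and the natural logarithm is assumed.

```python
import math


def log_gaze_ratio(p_target, p_distractor, eps=0.01):
    """Natural log of the Target-to-distractor fixation ratio.

    eps is a hypothetical smoothing constant that keeps the ratio finite
    when a proportion is zero; the source does not specify its handling.
    """
    return math.log((p_target + eps) / (p_distractor + eps))


# Equal looking gives 0; a Target bias gives a positive score.
print(log_gaze_ratio(0.40, 0.40))
print(log_gaze_ratio(0.60, 0.10))
print(log_gaze_ratio(0.10, 0.60))
```

Swapping the two arguments flips the sign of the score, which matches the interpretation given above: positive values favor the Target and negative values favor the distractor.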

We are primarily interested in whether developmental effects exist in the task. To explore how the magnitude of anticipatory fixations towards the Target relative to distractors varied across age, we carried out two ANOVAs on log-gaze probabilities with participant Age group (Adults vs. Children in the first analysis, and Child age groups: 3–4 years, 5–6 years, 7–8 years, and 9–10 years in the second) as a between-subjects variable.

In the first analysis comparing Adults vs. Children, log-gaze proportions did not vary between Adult and Child groups in the time bin preceding the target word onset (Fs<1) for all three Target-Distractor comparisons. A second analysis compared log-gaze proportions across child age-groups. Log-gaze proportions varied by Age for the Target vs. Agent-Related proportions, F(3, 59)=3.61, p=.018, but not for the Target vs. Unrelated or the Target vs. Action-Related proportions (Fs<1). This indicated that child age groups differed in how much they viewed the Target relative to the Agent-Related distractor before the onset of the sentential theme.

We were especially interested in whether or not all age groups showed anticipatory fixation effects. To determine this, we asked whether all three log-gaze fixation ratios were significantly above zero across Age groups (both Adults vs. Children, and across the four child age groups, as above) in the time bin that preceded the onset of the target word. These analyses are outlined in Table 1 (for Adults and Children) and Table 2 (for the four child age groups). Both adults and children, as a group, showed anticipatory fixations to the Target, as indicated by significantly positive values for all three log-gaze ratios. Analyses on child age groups also indicated that school-aged children (5–10 year olds) generated anticipatory fixations towards the Target more than to all other Distractor images (i.e., the Action-Related, Agent-Related, and Unrelated items). In contrast, 3- and 4-year-olds did not show an anticipatory effect: their log-gaze probability scores indicated that fixations to the Target and Action-Related items were statistically equivalent.
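Testing whether a log-gaze ratio is "significantly above zero" can be sketched as a one-sample t-test against zero. The text does not name the exact test, so this is an assumption, and the function name `one_sample_t` and the scores below are hypothetical.

```python
import math
from statistics import mean, stdev


def one_sample_t(scores, mu=0.0):
    """Return (t, df) for a one-sample t-test of `scores` against `mu`."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    # statistics.stdev uses the n - 1 (sample) denominator.
    standard_error = stdev(scores) / math.sqrt(n)
    return (mean(scores) - mu) / standard_error, n - 1


# Hypothetical per-participant log-gaze ratios (Target vs. Unrelated).
scores = [2.1, 1.4, 3.0, 0.8, 2.6, 1.9]
t_value, df = one_sample_t(scores)
```

A positive t value indicates a mean ratio above zero, that is, more looks to the Target than to the distractor; the p-value would then be read from a t distribution with the returned degrees of freedom.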

Table 1.

Log-gaze probability comparisons preceding target onset for all adult and child participants

| Comparison | Children (n = 63) | Adults (n = 56) |
|---|---|---|
| Target vs. Agent-Related | **1.99**<sup>b</sup> (1.71) | **2.20**<sup>b</sup> (1.86) |
| Target vs. Action-Related | **0.72**<sup>b</sup> (1.38) | **0.73**<sup>a</sup> (1.47) |
| Target vs. Unrelated | **2.32**<sup>b</sup> (1.56) | **2.57**<sup>b</sup> (1.58) |

Note: Standard deviations are reported in parentheses. Cells in bold represent log-gaze probabilities that are significantly positive: <sup>a</sup> p < .001, <sup>b</sup> p < .0001.

Table 2.

T-statistics for log-gaze probability in time bin preceding target onset for child participants across four age groups

| Comparison | 3 to 4 yrs (N = 14) | 5 to 6 yrs (N = 18) | 7 to 8 yrs (N = 16) | 9 to 10 yrs (N = 15) |
|---|---|---|---|---|
| Target vs. Agent-Related | **2.46**<sup>d</sup> (1.55) | **1.16**<sup>b</sup> (1.42) | **2.81**<sup>d</sup> (1.72) | **1.67**<sup>b</sup> (1.75) |
| Target vs. Action-Related | 0.43 (1.20) | **0.82**<sup>a</sup> (1.66) | **0.73**<sup>a</sup> (1.26) | **0.88**<sup>a</sup> (1.38) |
| Target vs. Unrelated | **1.97**<sup>c</sup> (1.53) | **2.28**<sup>d</sup> (1.72) | **2.59**<sup>d</sup> (1.56) | **2.41**<sup>d</sup> (1.49) |

Note: Cells in bold represent log-gaze probabilities that are significantly positive: <sup>a</sup> p < .05, <sup>b</sup> p < .01, <sup>c</sup> p < .001, <sup>d</sup> p < .0001.

Experiment 2

One potential explanation for the presence of anticipatory effects in older but not younger age groups in Experiment 1 was that school-aged and adult participants altered their strategy after the first story-sentence block by explicitly rehearsing and recalling elements of the story during sentence comprehension. We tested this possibility in a second study in which we reduced the number of story-sentence task blocks from four to two, while keeping all other methodological details the same. In this case, a block consisted of two stories before the sentence comprehension task. If the prior results can be explained by a shift in strategy from the first to subsequent blocks, then we should see no evidence of anticipatory fixations to the Target image in the first block, but should see anticipatory effects in the second block. Therefore, we compared performance across blocks to see if participants changed their strategy and generated predictive eye-movements in the second but not the first block.

Method

Participants

Fifty-three college students (34 F, age range: 18;10 – 26;10, M: 20;7) at UCSD who had not participated in the prior study took part in this task. An additional 16 students were excluded based on the exclusionary criteria outlined in Experiment 1. An additional six were excluded either for a failure to capture eye-movement data (four participants) or for using different audio hardware (two participants).

Stimuli

The stimuli were identical to those used in Experiment 1.

Procedure

The procedure was identical to that used in Experiment 1 except for the blocking arrangement. In this experiment, participants first listened to two stories before completing the sentence comprehension task, yielding two experimental blocks, rather than four.

Results

We sought to determine if participants’ fixations varied between blocks, and if the participants generated anticipatory fixations to the target item before it was spoken across both blocks. Our analyses therefore focused on whether log-gaze fixation proportions indicated greater looking towards the Target relative to other distractors during the time bin preceding onset of the target word.

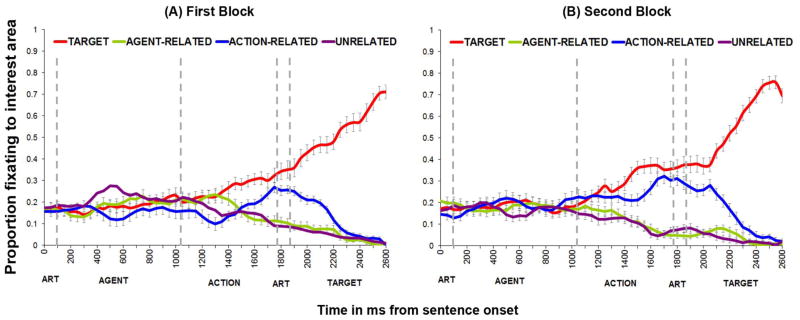

Time course of fixations across the sentence

Using identical procedures to Experiment 1, we first illustrated the time course of fixations to each region of interest as the sentence was spoken. This is illustrated in Figure 5. As in the previous study, fixations swiftly rose to the Target item after the action was spoken. This pattern was similar for trials in the first and second experimental block.

Figure 5.

Timecourse of fixations to areas of interest in 50 ms time bins for all participants across the first and second blocks of the sentence comprehension task in Experiment 2.

Analysis of anticipatory fixations

Following the procedure in Experiment 1, we then calculated log-gaze probabilities for the Target relative to each of the distractor images in the time bin preceding target onset. We first asked whether log-gaze probabilities differed between blocks with a repeated-measures ANOVA, with block as the within-subjects factor. This analysis did not find significant differences between blocks for the Target/Action-Related fixation probabilities (F<1) or the Target/Unrelated fixation probabilities, F(1,52)=1.77, p=.19. Log-gaze probability ratios did vary between blocks in viewing the Target relative to the Agent-related distractor, F(1,52)=4.37, p=.042, with participants viewing the Target vs. the Agent-related distractor relatively more in the second vs. first block. Follow-up analyses, however, indicated that participants’ fixations to the Target exceeded fixations to the Agent-related distractor in both blocks (see Table 3). However, fixations to the Target did not exceed fixations to the Action-related item, and this did not vary by block. This pattern indicates that in both blocks, participants did not show strong evidence of anticipatory fixations at the time bin immediately preceding the onset of the Target object. Our findings also do not support the possibility that participants used different sentence interpretation strategies between blocks.
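Because block has only two levels, a one-factor repeated-measures ANOVA of this kind is equivalent to a paired t-test, with F equal to the squared t statistic and degrees of freedom (1, n − 1). The sketch below illustrates that equivalence; the function name `two_level_rm_anova` and the scores are hypothetical, and this is not the authors' analysis code.

```python
import math
from statistics import mean, stdev


def two_level_rm_anova(block1, block2):
    """Return (F, df1, df2) for a one-factor, two-level repeated-measures ANOVA.

    Computed through the equivalent paired t-test: F = t ** 2.
    """
    if len(block1) != len(block2):
        raise ValueError("each participant needs a score in both blocks")
    # Per-participant difference between the second and first block.
    diffs = [b - a for a, b in zip(block1, block2)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t ** 2, 1, n - 1


# Hypothetical log-gaze ratios for four participants in each block.
first_block = [1.0, 2.0, 3.0, 4.0]
second_block = [2.0, 4.0, 3.0, 7.0]
f_stat_blocks, df1, df2 = two_level_rm_anova(first_block, second_block)
```

With 53 participants, the same arithmetic yields degrees of freedom (1, 52), matching the F(1,52) values reported above.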

Table 3.

Log-gaze probability in the time bin preceding target word onset for first and second block in Experiment 2.

| Comparison | First Block | Second Block |
|---|---|---|
| Target vs. Agent-Related | **1.55**<sup>a</sup> (2.86) | **2.48**<sup>b</sup> (2.02) |
| Target vs. Action-Related | 0.29 (2.60) | 0.31 (2.21) |
| Target vs. Unrelated | **1.73**<sup>b</sup> (2.61) | **2.27**<sup>b</sup> (2.11) |

Note: Standard errors are reported in parentheses. Cells in bold represent log-gaze probabilities that are significantly positive: <sup>a</sup> p < .001, <sup>b</sup> p < .0001.

Discussion

Our study was motivated by the observation that even very young children show significant facility in using well-established knowledge to swiftly interpret complex contingencies across multiple words in spoken sentences. However, little was known about how children and adults initially acquire and use this knowledge during online sentence comprehension. A number of potential outcomes existed, each of which could shed light on how children and adults use information from a single experience to update their representation and real-time activation of event knowledge during language comprehension. For example, participants could have used a single novel experience to selectively interpret similar events in language, while ignoring previous experience. Another possibility is that a single experience may not be sufficient to lead to rapid predictions of likely themes in similar events, or that this ability may change across childhood. Therefore, the goal of this study was to examine how adults and children use recently learned (fast-mapped) event information to interpret spoken sentences in real-time.

We first introduced novel cartoon situations between agents, actions and themes via a colorful, engaging, and common childhood learning format (story-telling) before measuring participants’ online interpretation of these fast-mapped events in simple sentences in a visual-world eye-tracking task. These sentences were simple transitive constructions that consisted of two main informative cues (the sentential agent and action) that were spoken prior to the final target stimulus (the sentential theme). Prior work had shown that adults and children can rapidly integrate these two cues to generate predictive eye-movements to the sentence-final thematic object when this information is well known (Borovsky et al., 2012; Kamide et al., 2003). In this study, we extend this literature by asking how this knowledge is deployed in online sentence comprehension after a single exposure in a story context.

Adults and school-age children (5–10 year olds) were adept at learning the information from these stories, as established by norming and our experiment. Crucially, they were able to rapidly reactivate and utilize this recently learned information in real-time to generate anticipatory looks to event-relevant targets during a visual world sentence interpretation task. When school-age children heard a recently established agent and action combination, they quickly gazed to a predicted sentence-final object, much as adults and children do when they hear similarly structured sentences containing highly familiar knowledge (Borovsky et al., 2012; Kamide et al., 2003).

Our 3- and 4-year-olds showed a different pattern from that of school-aged and adult participants, one that suggests that they fail to integrate across information present at the agent and action in time to anticipate the final word. This pattern is not entirely consistent with incremental differences in processing fluency across age. Instead, preschool-aged children appear to use simple lexical association more than their older peers do. Immediate lexical association in school-aged children was still evident, but it was clearly down-weighted in favor of a combinatorial interpretation of the previous cues<sup>2</sup>. This developmental pattern is visually evident in Figure 4, where we see that preschool-aged children initially look equivalently to the target and agent-related item at the onset of the verb, and then show equivalent fixations towards the target and action-related item as the verb unfolds. This visual pattern is reflected in our statistical analysis in Table 2, where the 3- and 4-year-old children’s fixations to the Target object did not significantly differ from fixations to the Action-related item in the period preceding the onset of the target word. In contrast, older children fixated significantly more on the target relative to the action-related item before the onset of the target word, although fixations to the action-related item exceeded those to the unrelated item, indicating that simple lexical association influences processing in these older groups as well, but to a lesser degree than in the preschool-aged group. This pattern in our preschool-aged group is strikingly different from our observations in prior work where 3- and 4-year-old children activate well-known event relations to integrate across an agent and action (e.g., in a sentence like “The pirate chases the ship”) to direct fixations to the sentence-final object (SHIP), before it is spoken (Borovsky et al., 2012; Figure 6).

Figure 6.

3- and 4-year-old participants from Borovsky et al., 2012. Timecourse of looking to sentences with well-known relationships, such as The pirate chases the ship, illustrated in 10 ms time bins.

Developmental differences

Why do the preschool-aged children favor lexical association over combinatorial prediction for novel information, when older children and adults do not? There are a number of potential explanations. Memory or attentional task demands may be overly challenging for this age group. Or there may be age differences in task-strategy or speed of activation or acquisition of event knowledge.

Regarding memory and attentional demands, there are clear differences between preschool- and school-aged children in a number of markers of memory, including working memory, meta-memory and implicit memory (Gathercole, 1998). Executive functions of attention, like cognitive control, also change significantly from preschool to adolescence (Huizinga, Dolan, & van der Molen, 2006; Kirkham, Cruess, & Diamond, 2003; Zelazo & Müller, 2010). It is likely that development of these cognitive skills could influence language processing (Cragg & Nation, 2010; Deák, 2004).

However, we deliberately constructed our task to minimize the potential effect of these variables in several ways. First, we designed our stories to be short (less than one minute in duration) and engaging (by including colorful cartoon characters and child-directed speech). Second, we minimized the time between story presentation and sentence comprehension by interleaving the two tasks. Third, the timing of the task was self-paced so that it only proceeded if the child was attentive to the stimuli on the computer screen. Finally, we failed to find age-differences in story recall during an additional norming task where we asked a group of 3–10 year olds to recall the relationships in the stories from questions like “Which one did the monkey eat?” Given these measures, we are reasonably confident that our task demands were well within the memory and attentional capacities of even our youngest participants.

Another possibility is that the rate of speech in the task may have influenced our developmental effects. While we did develop stimuli using child directed speech, it is possible that even slower speech stimuli may allow the youngest participants enough time to develop predictive expectations about the event. Future research would need to explore how speech rate may influence predictive sentence processing across development.

Another potential interpretation for the preschool-age children’s performance is that there are developmental differences in strategic engagement in the task. Because the story and sentence comprehension tasks were alternated in four blocks, it is possible that older children and adults engaged in an explicit memory or rehearsal strategy to boost sentence comprehension performance after hearing the first story. This interpretation is unlikely for two reasons. First, the time-course of fixations in the first study across older children and adults was not consistent with this strategy. Under such a strategy, we would have expected participants to rely solely and rigidly on their explicit memory to interpret the sentence, using the agent and action cue to gaze only to event-consistent items. However, our participants also generated additional fixations to event-inconsistent but locally-coherent Action-related items after the action was spoken. Second, the findings of the second experiment did not support this interpretation. In this study, we found that adults, who would have been the most likely of all participants to exploit such a strategy, showed very similar looking patterns before and after they would have had the chance to develop this strategy. Interestingly, adults did not appear to show strong evidence of anticipation in this second experiment, perhaps indicating that the additional memory demands of this manipulation may weaken the anticipatory effects that we saw in Experiment 1.

Younger children may also have been less flexible in their ability to integrate new knowledge into existing event representations than older children and adults. Although our norming indicated that all participants were able to minimally learn and recall the story situations, it is possible that there could have been developmental differences in the activation or representation of the novel events. This might have led younger children to be less efficient at reactivating these novel situations during the sentence task. For example, preschool children may be less willing than older children to generate real-time predictions based on a situation where a monkey could be a reasonable participant in public transit, because this is too far outside their prior understanding of bus-riding events and monkey behavior. This account is consistent with reports that 3- and 4-year-old children are less accurate than older children and adults in recall of non-canonical or time-varying versions of familiar situations (Hudson & Nelson, 1983). Similarly, Fivush, Kuebli & Clubb (1992) found that three-year-olds had more difficulty than five-year-olds in learning novel variations of a recently acquired event. Interestingly, this does not seem to stem from a developmental difference in ability to learn about novel situations; even infants and toddlers seem to rapidly learn about new events (Bauer & Fivush, 1992; Bauer & Mandler, 1989; Bauer & Shore, 1987; Mandler & McDonough, 1995). Instead, it may be that preschoolers, relative to school-aged children, have more difficulty learning novel permutations of familiar events than learning novel relationships in novel events.

A related idea is that the developmental differences in our study instead relate to variation in the underlying representation of familiar components that make up the novel situation. In the experimental stories, children heard novel relations between known agents, actions and objects. In this case, it may be that preschool children’s representations of these known items were less well-established than older children’s, therefore leading to difficulty when learning a novel combination of these elements. This idea is supported by findings where toddlers show difficulty learning novel actions with unfamiliar agents, compared to familiar agents (Kersten & Smith, 2003). Future research would need to delve into whether it is the knowledge underlying the event components or familiarity of the event itself that affects how young children interpret novel situations.

Another possibility is that preschool children’s ability to flexibly control their visual attention may affect how they interpret novel events in real-time. This account suggests that when our younger participants examined both ride-able items (car and bus) when hearing The monkey rides the…, they may have had greater difficulty than older children in suppressing the inappropriate ride-able item. Indeed, there is an extended developmental trajectory of the ability to selectively inhibit, control and direct visual attention (Davidson, Amso, Anderson, & Diamond, 2006; Deák, 2004). Similarly, measures of cognitive control are associated with how four- and five-year-olds re-interpret the syntactic structure of garden path sentences (Choi & Trueswell, 2010). Further research would be necessary to explore how cognitive control abilities affect interpretation of event knowledge in sentences.

These developmental effects also have relevance for recent proposals regarding mechanisms for prediction in language processing (e.g., Pickering & Garrod, 2013). Two mechanisms of prediction are proposed within this framework: (1) prediction by simulation, which involves covert production processes, and (2) prediction by association, which involves activation of long-term representations consistent with the current communicative context. In our findings, we see that the youngest participants do generate predictions based on long-term associations between the action and potential thematic objects (e.g., when hearing the verb “eat”, 3- and 4-year-olds view both edible items). But the youngest children do not seem to consider the more recently acquired association (e.g., that there is a specific edible item that would be preferred in the current event context). One interpretation is that the youngest children are relying more on longer-term associations than on recently established associations to generate predictions from the combinatorial relationship, while the older children do the opposite. This distinction suggests the need to potentially include differential developmental mechanisms for recently-established and long-term knowledge in the prediction-by-association account. This idea is consistent with Mani and Huettig’s (2013) commentary that models of prediction may require the inclusion of additional mechanisms to fully capture developmental processes. It is also consistent with Chang, Kidd and Rowland’s (2013) position that learning processes may provide important insights in models of language processing and prediction.

Future Directions

This investigation introduces a novel learning paradigm that explores how individual experience and knowledge affects real-time sentence comprehension. We show for the first time that fast-mapped relationships between agents, actions and themes can support anticipatory sentential interpretation. Impressively, children as young as five are able to successfully learn and reactivate this knowledge when understanding speech. This investigation represents a beginning step, and raises a number of additional avenues for future study.

One intriguing issue is how novel situations may be generalized to new events. The answer to this question will illuminate how learners can draw upon prior experience to interpret language accompanying completely unfamiliar situations. Although this was not the primary focus of our study, our results are indirectly relevant to this question. The fact that the youngest children (3–4 year olds) failed to predictively activate knowledge of the recently acquired three-way contingencies between agent-action-object suggests that these children may also behave conservatively in building generalizations based on novel events. Thus, it may be particularly fruitful to study the development of event-generalization abilities between ages three and six.

It will also be important to understand the role that memory and learning processes play in this task. In our task, it seems likely that additional information or exposure to the story could lead to some modulation of real-time sentence interpretation, especially in preschool-aged children. In particular, it would be interesting to determine how much exposure to a novel event is necessary for preschool-aged children to show predictive interpretation of the events during sentence processing. Future work would need to address not only how this knowledge becomes established with additional training, but also how this may interact with participants’ ability to retain and reactivate this knowledge over time.

An additional question that arises from this work is the extent to which the visual context and linguistic cues in the story contexts may individually facilitate subsequent sentence processing. Would listeners perform similarly on the sentence processing task if they had only listened to stories about novel events, without supporting images? Or could the opposite be true: would the visual images have been sufficient? In some cases, young children (younger than six years old) seem to have difficulty using referential cues in visual scenes during online sentence processing tasks (Kidd & Bavin, 2005; Snedeker & Trueswell, 2004; Trueswell, Sekerina, Hill, & Logrip, 1999), although there is recent work to suggest that visual context can influence some aspects of sentence processing in five-year-olds (Zhang & Knoeferle, 2012). While there have been some studies of how adults integrate event, linguistic and visual cues during sentence comprehension (Knoeferle & Crocker, 2006), less work has examined how these cues conspire to assist learners of all ages in real-time language interpretation of novel situations.

Conclusions

In sum, we have introduced a paradigm that has the potential to address a variety of questions about the role of prior event experiences on sentence comprehension in learners at least as young as age three. This research represents a first step in this process, and we have used this method to explore how a single experience with a situation involving novel relationships between agents, actions and objects can influence subsequent real-time interpretation of sentences describing these events. Our findings reinforce the idea that adult-like mechanisms of linguistic processing develop over an extended period in childhood, and that important differences exist between preschool and school-aged children in their ability to interpret information about novel experiences.

Highlights.

We examine acquisition and interpretation of combinatorial relationships in sentences

We developed an event-teaching paradigm to train agent-action-object relationships

We ask if children and adults predictively interpret novel event relationships

5–10 yr-olds and adults predictively interpreted novel agent-action sequences

3–4 yr-olds did not interpret multiple event components predictively

Acknowledgments

We are deeply grateful to the families and children throughout the San Diego region who have participated and continue to participate in developmental research. We also wish to thank Falk Huettig, Martin Pickering and two anonymous reviewers for their helpful suggestions that have improved this manuscript. This work was funded by grants received from the National Institutes of Health: HD053136 to JE and DC010106 to AB.

Appendix

Outline of the agent, action and thematic object sets used in the study. In each story set, each agent performed both actions, and different agents were associated with different objects (e.g. if the monkey eats the candy, then the dog eats the apple). All possible combinations of Agents, Actions and Objects occurred across lists in the study.

| | Agents | Action 1 | Object 1 | Action 2 | Object 2 |
|---|---|---|---|---|---|
| Set 1: | Monkey, Dog | Eats | Candy, Apple | Rides in | Car, Bus |
| Set 2: | Mouse, Cat | Tastes | Ice cream, Cake | Wears | Hat, Glasses |
| Set 3: | Turtle, Frog | Turns on | TV, Computer | Cuts | Paper, Bread |
| Set 4: | Lion, Duck | Flies | Kite, Airplane | Sits on | Rock, Fence |

Footnotes

1. Because log ratios are undefined for 0, we replaced every instance of a zero value in the numerator or denominator with a value of 0.01.

2. We wish to thank an anonymous reviewer for suggesting this idea.

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- Allopenna PD, Magnuson JS, Tanenhaus MK. Tracking the Time Course of Spoken Word Recognition Using Eye Movements: Evidence for Continuous Mapping Models. Journal of Memory and Language. 1998;38(4):419. [Google Scholar]

- Altmann GTM, Kamide Y. Incremental interpretation at verbs: Restricting the domain of subsequent reference. Cognition. 1999;73:247–264. doi: 10.1016/s0010-0277(99)00059-1. [DOI] [PubMed] [Google Scholar]

- Altmann GTM, Mirkovic J. Incrementality and Prediction in Human Sentence Processing. Cognitive Science. 2009;33(4):583–609. doi: 10.1111/j.1551-6709.2009.01022.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Amato MS, MacDonald MC. Sentence processing in an artificial language: Learning and using combinatorial constraints. Cognition. 2010;116(1):143–148. doi: 10.1016/j.cognition.2010.04.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arai M, van Gompel RPG, Scheepers C. Priming ditransitive structures in comprehension. Cognitive Psychology. 2007;54(3):218–250. doi: 10.1016/j.cogpsych.2006.07.001. [DOI] [PubMed] [Google Scholar]

- Bauer PJ, Fivush R. Constructing event representations: Building on a foundation of variation and enabling relations. Cognitive Development. 1992;7(3):381–401. [Google Scholar]

- Bauer PJ, Mandler JM. One thing follows another: Effects of temporal structure on 1- to 2- year-olds’ recall of events. Developmental Psychology. 1989;25(2):197–206. [Google Scholar]

- Bauer PJ, Shore CM. Making a memorable event: Effects of familiarity and organization on young children’s recall of action sequences. Cognitive Development. 1987;2(4):327–338. [Google Scholar]

- Boersma P, Weenink D. Praat: doing phonetics by computer (Version 5.3.22) 2012. [Google Scholar]

- Borovsky A, Elman J, Fernald A. Knowing a lot for one’s age: Vocabulary and not age is associated with the timecourse of incremental sentence interpretation in children and adults. Journal of Experimental Child Psychology. 2012;112:417–436. doi: 10.1016/j.jecp.2012.01.005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carey S, Bartlett E. Acquiring a single new word. Papers and Reports on Child Language Development. 1978;15:17–29. [Google Scholar]

- Chang F, Kidd E, Rowland CF. Behavioral and Brain Sciences. 2013. Prediction in processing is a by-product of language learning; pp. 22–23. [DOI] [PubMed] [Google Scholar]

- Choi Y, Trueswell JC. Children’s (in)ability to recover from garden paths in a verb-final language: Evidence for developing control in sentence processing. Journal of Experimental Child Psychology. 2010;106(1):41–61. doi: 10.1016/j.jecp.2010.01.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cragg L, Nation K. Language and the Development of Cognitive Control. Topics in Cognitive Science. 2010;2(4):631–642. doi: 10.1111/j.1756-8765.2009.01080.x. [DOI] [PubMed] [Google Scholar]

- Dahan D, Magnuson JS, Tanenhaus MK. Time Course of Frequency Effects in Spoken-Word Recognition: Evidence from Eye Movements. Cognitive Psychology. 2001;42(4):317–367. doi: 10.1006/cogp.2001.0750. [DOI] [PubMed] [Google Scholar]

- Davidson MC, Amso D, Anderson LC, Diamond A. Development of cognitive control and executive functions from 4 to 13 years: Evidence from manipulations of memory, inhibition, and task switching. Neuropsychologia. 2006;44(11):2037–2078. doi: 10.1016/j.neuropsychologia.2006.02.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Deák GO. The Development of Cognitive Flexibility and Language Abilities. Advances in Child Development and Behavior. 2004;31:271–327. doi: 10.1016/s0065-2407(03)31007-9. JAI. [DOI] [PubMed] [Google Scholar]

- Dollaghan C. Child Meets Word: “Fast Mapping” in Preschool Children. Journal of Speech and Hearing Research. 1985;28(3):449–454. [PubMed] [Google Scholar]

- Fernald A, Pinto JP, Swingley D, Weinberg A, McRoberts GW. Rapid gains in speed of verbal processing by infants in the 2nd year. Psychological Science. 1998;9(3) [Google Scholar]

- Fernald A, Thorpe K, Marchman VA. Blue car, red car: Developing efficiency in online interpretation of adjective†“noun phrases. Cognitive Psychology. 2010;60(3):190–217. doi: 10.1016/j.cogpsych.2009.12.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fernald A, Zangl R, Portillo AL, Marchman V. Looking while listening: Using eye movements to monitor spoken language comprehension by infants and young children. In: Sekerina I, Fernandez E, Clahsen H, editors. Language Processing in Children. Amsterdam: Benjamins; 2008. [Google Scholar]

- Fivush R. Learning about school: The development of kindergarteners’ school scripts. Child Development. 1984;55:1697–1709. [PubMed] [Google Scholar]

- Fivush R, Kuebli J, Clubb PA. The Structure of Events and Event Representations: A Developmental Analysis. Child Development. 1992;63(1):188–201.

- Fivush R, Slackman EA. The Acquisition and Development of Scripts. In: Nelson K, editor. Event knowledge: Structure and function in development. Hillsdale, New Jersey: Lawrence Erlbaum Associates; 1986. pp. 71–96.

- Gathercole SE. The Development of Memory. Journal of Child Psychology and Psychiatry. 1998;39(1):3–27.

- Heibeck TH, Markman EM. Word Learning in Children: An Examination of Fast Mapping. Child Development. 1987;58(4):1021–1034.

- Hudson J, Nelson K. Effects of script structure on children’s story recall. Developmental Psychology. 1983;19(4):625–635.

- Huettig F, Rommers J, Meyer AS. Using the visual world paradigm to study language processing: A review and critical evaluation. Acta Psychologica. 2011. doi: 10.1016/j.actpsy.2010.11.003.

- Huizinga M, Dolan CV, van der Molen MW. Age-related change in executive function: Developmental trends and a latent variable analysis. Neuropsychologia. 2006;44(11):2017–2036. doi: 10.1016/j.neuropsychologia.2006.01.010.

- Kamide Y, Altmann GTM, Haywood S. The time-course of prediction in incremental sentence processing: Evidence from anticipatory eye movements. Journal of Memory & Language. 2003;49:133–156.

- Kersten AW, Smith LB. Attention to novel objects during verb learning. Child Development. 2003;73(1):93–109. doi: 10.1111/1467-8624.00394.

- Kidd E, Bavin EL. Lexical and referential cues to sentence interpretation: an investigation of children’s interpretations of ambiguous sentences. Journal of Child Language. 2005;32(04):855–876. doi: 10.1017/s0305000905007051.

- Kirkham NZ, Cruess L, Diamond A. Helping children apply their knowledge to their behavior on a dimension-switching task. Developmental Science. 2003;6(5):449–467.

- Knoeferle P, Crocker MW. The Coordinated Interplay of Scene, Utterance, and World Knowledge: Evidence From Eye Tracking. Cognitive Science. 2006;30(3):481–529. doi: 10.1207/s15516709cog0000_65.

- Knoeferle P, Kreysa H. Can speaker gaze modulate syntactic structuring and thematic role assignment during spoken sentence comprehension? Frontiers in Psychology. 2012;3. doi: 10.3389/fpsyg.2012.00538.

- Mandler JM, McDonough L. Long-term recall of event sequences in infancy. Journal of Experimental Child Psychology. 1995;59(3):457–474. doi: 10.1006/jecp.1995.1021.

- Mani N, Huettig F. Prediction during language processing is a piece of cake - but only for skilled producers. Journal of Experimental Psychology: Human Perception & Performance. 2012;38(4):843–847. doi: 10.1037/a0029284.

- Mani N, Huettig F. Towards a complete multiple-mechanism account of predictive language processing. Behavioral and Brain Sciences. 2013:37–38. doi: 10.1017/S0140525X12002646.

- McMurray B, Horst JS, Samuelson LK. Word learning emerges from the interaction of online referent selection and slow associative learning. Psychological Review. 2012;119(4):831. doi: 10.1037/a0029872.

- Smith BS, Ratner HH, Hobart CJ. The role of cuing and organization in children’s memory for events. Journal of Experimental Child Psychology. 1987;44:1–24.

- Snedeker J, Trueswell J. The developing constraints on parsing decisions: The role of lexical-biases and referential scenes in child and adult sentence processing. Cognitive Psychology. 2004;49(3):238–299. doi: 10.1016/j.cogpsych.2004.03.001.

- Tanenhaus MK, Magnuson JS, Dahan D, Chambers C. Eye Movements and Lexical Access in Spoken-Language Comprehension: Evaluating a Linking Hypothesis between Fixations and Linguistic Processing. Journal of Psycholinguistic Research. 2000;29(6):557–580. doi: 10.1023/a:1026464108329.

- Tanenhaus MK, Spivey-Knowlton MJ, Eberhard KM, Sedivy JC. Integration of visual and linguistic information in spoken language comprehension. Science. 1995;268:1632–1634. doi: 10.1126/science.7777863.

- Trueswell JC, Sekerina I, Hill NM, Logrip ML. The kindergarten-path effect: studying online sentence processing in young children. Cognition. 1999;73(2):89–134. doi: 10.1016/s0010-0277(99)00032-3.

- Zelazo PD, Müller U. The Wiley-Blackwell Handbook of Childhood Cognitive Development. Wiley-Blackwell; 2010. Executive Function in Typical and Atypical Development; pp. 574–603.

- Zhang L, Knoeferle P. Visual Context Effects on Thematic Role Assignment in Children versus Adults: Evidence from Eye Tracking in German. Proceedings of the 34th Annual Conference of the Cognitive Science Society; 2012. pp. 2593–2598.