Abstract

Background

Patients with inadequate health literacy often have poorer health outcomes and increased utilization and costs, compared to those with adequate health literacy skills. The Institute of Medicine has recommended that health literacy assessment be incorporated into health care information systems, which would facilitate large-scale studies of the effects of health literacy, as well as evaluation of system interventions to improve care by addressing health literacy. As part of the Health Literacy Screening (HEALS) study, a brief health literacy screen (BHLS) was incorporated into the electronic health record (EHR) at a large academic medical center.

Methods

Changes were implemented to the nursing intake documentation across all adult hospital units, the emergency department, and three primary care practices. The change involved replacing previous education screening items with the BHLS. Implementation was based on a quality improvement framework, with a focus on acceptability, adoption, appropriateness, feasibility, fidelity and sustainability. Support was gained from nursing leadership, education and training was provided, a documentation change was rolled out, feedback was obtained, and uptake of the new health literacy screening items was monitored.

Results

Between November 2010 and April 2012, there were 55,611 adult inpatient admissions, and from November 2010 to September 2011, 23,186 adult patients made 39,595 clinic visits to the three primary care practices. The completion (uptake) rate in the hospital for November 2010 through April 2012 was 91.8%. For outpatient clinics, the completion rate between November 2010 and October 2011 was 66.6%.

Conclusions

Although challenges exist, it is feasible to incorporate health literacy screening into clinical assessment and EHR documentation. Next steps are to evaluate the association of health literacy with processes and outcomes of care across inpatient and outpatient populations.

Health literacy is the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.1 It is a necessary skill for successful navigation of the health care system, communication with providers, and management of chronic conditions. However, an estimated 90 million adults in the United States have low health literacy,2 which is associated with lower rates of preventive care, poorer disease control, and greater mortality, as well as increased health care utilization and costs.3,4 Addressing health literacy is therefore a national health priority.1,5

Health literacy is also a function of the complexity of the health care system, and many leading health care organizations have provided recommendations and toolkits to appropriately address health literacy.1,6-10 Raising awareness of health literacy and integrating health literacy strategies into quality improvement efforts and interpersonal communication are among the attributes of a health literate organization.11 Such efforts are expected to improve patient-centered care as well as patient outcomes. Since 2012, The Joint Commission has required that hospitals specifically address use of effective oral and written communication and documentation of patients’ communication needs, without dictating the manner in which this be done.1,12 The 2004 Institute of Medicine report on health literacy recommended that “health literacy assessment should be a part of health care information systems and quality data collection.”1(p. 16) This would facilitate large-scale studies of the associations and consequences of low health literacy, as well as evaluation of system interventions designed to improve patient care by addressing health literacy.1 However, we are unaware of any institutions that have done so on a large scale.

Nurses are ideally positioned to systematically screen and document patients’ health literacy skills.13 A number of health literacy assessment tools exist with demonstrated validity and reliability, but these measures are typically conducted by trained research staff and are too time-intensive to be feasible in routine practice.14 In the last several years, shorter screening tools have been developed and validated.15 However, use of such tools by clinical personnel is not well described, except for one outpatient study, in which 98% of patients found health literacy screening acceptable.16

Given the importance of health literacy in delivering care at both individual and system levels, our institution sought to establish a standardized approach to health literacy assessment and documentation. In this article we describe the implementation of a three-item measure,17,18 which we refer to as the Brief Health Literacy Screen (BHLS), in inpatient and outpatient practice at a large academic medical center. The multicomponent implementation strategy entailed selection of a tool well-suited to nursing workflow; garnering key nurse leaders' support and participation; education; electronic health record (EHR) integration; and ongoing evaluation and feedback. We measured the success of implementation using outcomes and measures adapted from Proctor et al.,19 specifically, acceptability, adoption, appropriateness, feasibility, fidelity, and sustainability.

Methods

The Health Literacy Screening (HEALS) Study

The Health Literacy Screening (HEALS) study, conducted from November 2010 to April 2012, was designed to achieve three goals: (1) to assess the feasibility of implementing health literacy screening in clinical practice; (2) to validate screening item performance when administered by clinical nursing staff; and (3) to determine if health literacy screening items predict health outcomes. We used quality improvement techniques to incorporate the BHLS into practice. Screening item performance and predictive validity are reported separately.20,21

The study was approved by the Vanderbilt University Institutional Review Board, and waiver of informed consent was granted for all nurses and staff who may administer the health literacy screening. A subgroup of 500 hospital and 300 clinic patients provided written informed consent for research staff to readminister the BHLS. Research data were collected and managed using REDCap (Research Electronic Data Capture), a secure, web-based application.22

Setting

Vanderbilt University Medical Center (VUMC), located in Nashville, Tennessee, is a principal referral center for the Southeast region, and it serves a diverse population in terms of socioeconomic status and education level. It includes the 658-bed Vanderbilt University Hospital (VUH) and outpatient facilities that receive more than 1.5 million visits each year. The settings for this implementation project consisted of all inpatient adult units, the adult emergency department, and three adult primary care practices.

Usual Practice Before the Initiative

Hospital

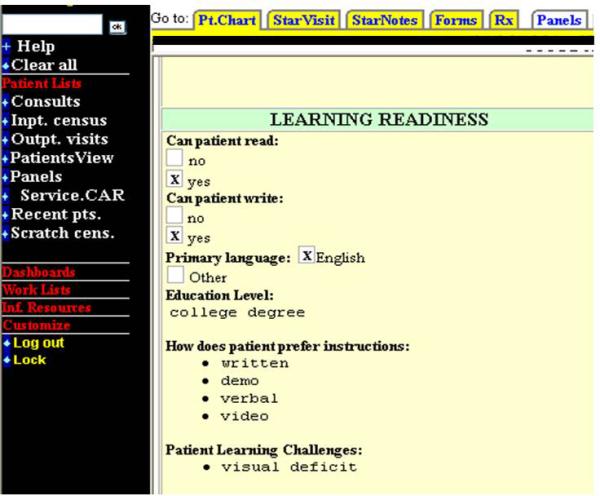

RNs complete an Adult Nursing Admission History on all patients admitted to VUH. The form is completed in the EHR, with one section devoted to patients’ communication needs, or “learning readiness.” Before this initiative, that section was composed of the following items, as shown in Figure 1a:

Educational attainment (open-ended response field)

“Can patient read” (yes/no)

“Can patient write” (yes/no)

Language (English or other)

Patient's preference for instructions (written, demonstration, verbal, video, TV, or pamphlets)

When completing their documentation, nurses usually interview the patient directly, although they may communicate through an interpreter in the case of a language barrier, or with a family member or caregiver if the patient is otherwise unable to communicate (for example, because of illness). The hospital monitors nursing unit performance monthly to assess documentation compliance and requires corrective action if the completion (uptake) rate drops below 80%. At the time of study implementation, the completion rate was 85%.

Figure 1.

Pre-implementation Learning Readiness section of Adult Nursing Admission History

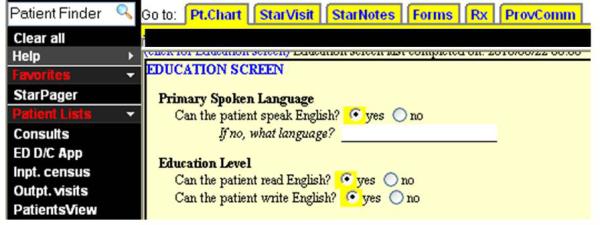

Outpatient Practices

In the outpatient practices, the Clinic Intake form is administered to all patients at the time of check-in. Before this initiative, it contained a brief educational assessment of whether the patient could speak and read English (Figure 1b), and staff were prompted to complete this annually. The Clinic Intake form was not previously monitored for quality or completion, but on the basis of data from two of the three selected clinics before project implementation, approximately 44% of patients had the educational assessment completed within one year of the current visit; 99% had the section completed within the previous five years.

There were multiple limitations associated with the previous educational assessments. First, they did not allow for gradations in ability, although literacy skills are rarely an all-or-none phenomenon. Second, asking patients directly if they can read or write may provoke shame23; it is preferable to ask about health literacy in a more sensitive manner that normalizes having difficulty.24 Third, nurses may be uncomfortable asking patients directly about their skills, particularly if not provided guidance; instead, they may assume a person's ability to read and write based on appearance or educational attainment.25

Implementation Strategies

The approximately 5,000 RNs at VUH were made aware of the initiative through staff meetings and an information letter sent via group e-mail. Clinic nurses were informed through staff meetings and during in-person training by the research staff. Table 1 presents a brief summary of the implementation strategies incorporated in the different settings, with further detail provided herein. We used four main strategies to implement the new assessment: (1) selection of an appropriate tool for incorporation into the EHR; (2) identification of key stakeholders to support implementation; (3) provision of education and training on use of the tool; and (4) monitoring of uptake and provision of feedback to key stakeholders on the data collected by nursing staff.

Table 1.

Implementation Elements for Incorporating Health Literacy Assessment in Hospital and Primary Care Settings

| Process Element | Hospital: Activity | Hospital: Timeframe | Clinics: Activity | Clinics: Timeframe |
|---|---|---|---|---|
| Administrative support | Met with nursing leaders, nursing boards | Prior to grant submission, and from July-Sept 2010 | Met with clinic leaders | Prior to grant submission, and from Aug-Sept 2010, Feb 2011 |
| Education | In-service to nursing boards, email sent to staff nurses, in-service to individual units | Aug-Oct 2010 | In-service to clinic staff | Nov 2010, Dec 2010, May 2011 |
| Incorporation into documentation process | Learning Readiness section of Adult Nursing Admission History | Oct 2010 | Education Assessment section of Clinic Intake form | Nov 2010 (2 clinics), May 2011 (1 clinic) |
| Monitoring | Mediware® Insight software, direct observations | Nov 2010-Oct 2011 | Weekly reports from EDW | May-June 2011 |
| Feedback | Email to nursing leaders | Nov 2010-Apr 2011 | Met with clinic managers | Dec 2010-Feb 2011, May 2011 |

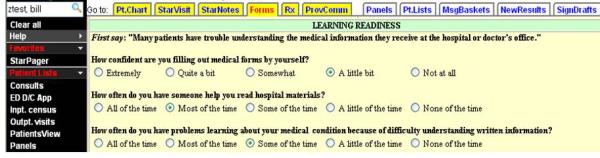

1. Selection of an Appropriate Tool for Incorporation into the Electronic Health Record

We assembled a multidisciplinary team composed of nurses, physicians, and health communication researchers, who determined that the BHLS was the most appropriate health literacy instrument to incorporate into the nursing work flow in the hospital and clinic. The BHLS consists of three questions, as follows:

Confidence with Forms: “How confident are you filling out medical forms by yourself?” (“Extremely,” “Quite a bit,” “Somewhat,” “A little bit,” “Not at all”)

Help Read: “How often do you have someone help you read hospital materials?” (“All of the time,” “Most of the time,” “Some of the time,” “A little of the time,” “None of the time”)

Problems Learning: “How often do you have problems learning about your medical condition because of difficulty understanding written information?” (“All of the time,” “Most of the time,” “Some of the time,” “A little of the time,” “None of the time”)

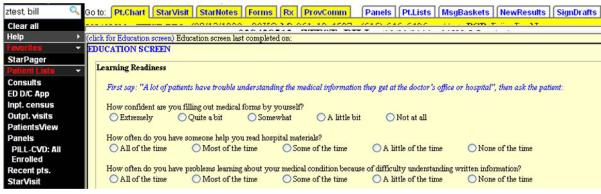

The first question is reverse coded. Items are scored from 1 to 5, and responses of 3 (“Somewhat” or “Some of the time”) or lower indicate inadequate health literacy.17 With support from nursing leadership, we replaced the previous literacy items from the inpatient and outpatient electronic forms with the BHLS (Figure 2a and Figure 2b).
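The scoring rule described above can be illustrated with a minimal sketch. It assumes that higher scores reflect less difficulty, so that "Extremely" confident and "None of the time" both score 5; the item keys and function names are hypothetical and are not taken from the EHR build.

```python
# Response options as listed in the text. The numeric mapping is an assumption
# for illustration: higher scores mean less difficulty with health information.
CONFIDENCE_OPTIONS = ["Extremely", "Quite a bit", "Somewhat", "A little bit", "Not at all"]
FREQUENCY_OPTIONS = [
    "All of the time",
    "Most of the time",
    "Some of the time",
    "A little of the time",
    "None of the time",
]

INADEQUATE_CUTOFF = 3  # responses of 3 or lower indicate inadequate health literacy


def score_item(item: str, response: str) -> int:
    """Return a 1-5 score for one BHLS item (item keys are hypothetical)."""
    if item == "confidence_with_forms":
        # Reverse coded: "Extremely" (listed first) scores 5, "Not at all" scores 1.
        return 5 - CONFIDENCE_OPTIONS.index(response)
    if item in ("help_read", "problems_learning"):
        # "All of the time" (listed first) scores 1, "None of the time" scores 5.
        return 1 + FREQUENCY_OPTIONS.index(response)
    raise ValueError(f"unknown BHLS item: {item}")


def is_inadequate(item: str, response: str) -> bool:
    """Flag a single item response at or below the cutoff."""
    return score_item(item, response) <= INADEQUATE_CUTOFF
```

Under this mapping, "Somewhat" and "Some of the time" both score 3 and are flagged, which matches the cutoff stated in the text.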

Figure 2.

Pre-implementation Educational Assessment section of Clinic Intake form

2. Identification of Key Stakeholders to Support Implementation

For effective deployment of the BHLS at the hospital, it was essential to gain the support of both nursing and information technology leadership. As a first step for the hospital assessment, we contacted the chief nursing officer, director of nursing informatics, and directors of nursing education to obtain support for project rollout and to collaborate on implementation strategies. We then met with nursing leadership groups (the nurse educator council, leadership board, and staff council) to discuss the best methods for incorporating the health literacy assessment into the existing workflow, to optimize use by frontline nursing staff.

For the clinic assessment, we followed a similar process. We met first with the primary care clinic administrators to obtain support and to select the most appropriate clinics for project implementation. We selected three primary care clinics on the basis of their proximity to the medical center and leaders’ readiness to participate. With their support, we subsequently met with the medical directors and managers in each of the selected practices. These clinic leaders agreed to discuss the project rollout with their frontline physicians and medical staff because of the conceivable impact on their work flows.

3. Provision of Education and Training on Use of the Tool

Education about the inpatient health literacy assessment took place approximately one month before rollout and was delivered to staff nurses through several methods. First, we used a train-the-trainer model built on an existing structure in which nurse educators are informed of changes and policies and are then responsible for disseminating that information to the frontline nurses on their respective units. Nurse educators were instructed to set an appropriate tone before asking the health literacy questions and to normalize admissions of difficulty using the scripted introduction, “Many patients have trouble understanding the medical information they receive at the hospital or doctor's office.” They were also coached on the management of potential problems, such as clarifying indirect patient responses and what to do if a patient is unable or unwilling to answer. They used various methods to educate staff nurses, including providing information during the change of shift report, staff meetings, and unit board meetings; posting flyers in break areas; having a unit leader discuss it during unit rounds; and observing staff administer the new screening items. Second, we delivered similar education electronically, including an established e-mail communication that is regularly sent to all RNs, and we developed web pages on the hospital's nursing website. These pages included details about the new assessment, as well as information about health literacy and clear health communication techniques. We created and posted a five-minute video that included a message from the chief nursing officer, provided a rationale for the change, and demonstrated how to administer the new assessment. Finally, we were invited to provide an in-service to individual nursing units shortly after implementation to provide further detail and address staff questions and concerns.

For training of clinic staff, the project coordinator (Cawthon) and an investigator (one clinic each: Kripalani, Mion, Roumie) provided an in-service and educational handouts in each clinic at the time of project implementation. All in-services included details about the prevalence of low health literacy and its significance in patient care. Each session also described how the new assessment was incorporated into the existing documentation, and how to correctly administer the items while putting patients at ease with a potentially sensitive topic. We also provided a link to the web pages with the video demonstration, as well as our contact information for any subsequent questions or concerns.

4. Monitoring of Uptake and Provision of Feedback to Key Stakeholders on the Data Collected by Nursing Staff

VUMC has a robust EHR that is used in both hospital and clinic settings. We modified existing EHR documents to incorporate the BHLS. Clinical and administrative data from the EHR are maintained in the Enterprise Data Warehouse (EDW), which can be queried for analysis. In the outpatient setting, consistent with existing practices, responses on health literacy and educational attainment were carried forward for 12 months following the index visit; after one year, staff are prompted to reassess. In the hospital setting, responses on the adult nursing admission history are not carried forward; they are reassessed at each subsequent hospitalization.

We electronically monitored uptake of the inpatient assessment through the hospital's established quality and safety monitoring system software, a web-based dashboard. The system enabled tracking of health literacy item completion over time and by nursing unit. We also monitored fidelity by conducting intermittent, direct observations of hospital nurses’ completion of the intake form.
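As an illustration only, tracking item completion over time and by nursing unit could be sketched as follows. The record layout, unit names, and function names are hypothetical; the 80% threshold is the hospital's stated trigger for corrective action on documentation compliance, and applying it to BHLS completion here is an assumption. This is not the dashboard's actual logic.

```python
from collections import defaultdict

# Hospital threshold for corrective action on documentation compliance (from the text).
CORRECTIVE_ACTION_THRESHOLD = 0.80


def completion_by_unit_month(records):
    """Compute completion rates from (unit, month, all_items_completed) tuples."""
    counts = defaultdict(lambda: [0, 0])  # (unit, month) -> [completed, total]
    for unit, month, completed in records:
        counts[(unit, month)][1] += 1
        if completed:
            counts[(unit, month)][0] += 1
    return {key: done / total for key, (done, total) in counts.items()}


def units_needing_action(rates, threshold=CORRECTIVE_ACTION_THRESHOLD):
    """Return the (unit, month) pairs whose completion rate is below the threshold."""
    return sorted(key for key, rate in rates.items() if rate < threshold)


# Hypothetical example data: two units in one month.
example_records = (
    [("Unit A", "2010-11", True)] * 9
    + [("Unit A", "2010-11", False)]
    + [("Unit B", "2010-11", True)] * 3
    + [("Unit B", "2010-11", False)] * 2
)
example_rates = completion_by_unit_month(example_records)
flagged = units_needing_action(example_rates)
```

In this made-up example, Unit A completes 9 of 10 forms and Unit B completes 3 of 5, so only Unit B falls below the threshold.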

We monitored outpatient implementation by collecting data weekly from the EDW. We also spoke directly with clinic staff about how patients responded to the new assessment, as well as any barriers to asking the new questions.

On the basis of data monitoring, direct observations, and conversations with staff, we provided aggregate feedback to nursing leaders and clinic managers (key stakeholders) regarding BHLS uptake and expected versus observed prevalence of low health literacy. The frequency of feedback varied by unit and was based on performance but ranged from biweekly to one time only.

Performance Measures and Data Collection

We examined the success of the implementation using the outcomes and framework suggested by Proctor.19,26 We used three methods to collect data: querying the EDW; direct observations; and focus groups, interviews and process recordings. The following key concepts in Proctor's framework were measured as follows:

Acceptability—the perception among stakeholders that a given practice is agreeable or satisfactory—was assessed qualitatively from administrators and frontline nursing personnel.

Adoption—the intention or initial action to try an innovation or practice—was evidenced by nursing, leadership, and informatics actions to implement the BHLS in the EHR.

Appropriateness—the perceived fit, relevance, or compatibility—was assessed qualitatively via nurses' concerns or push-backs.

Feasibility—the extent to which the practice was carried out—was measured using the item completion rates.

Fidelity—the nurses' reliable use of the tool—was measured as the percent agreement between the nurses' and research assistants' independent BHLS assessments of 500 hospital and 300 clinic patients.

Sustainability—the extent to which the new health literacy assessment process was maintained—was measured as monthly completion rates.

Results

Data on admissions were collected between November 2010 and April 2012, during which there were 55,611 adult admissions to VUH. Data on clinic visits were collected from November 2010 to September 2011, during which time 23,186 adult patients made 39,595 clinic visits to the three primary care practices. Patient characteristics were reflective of the population served: middle-aged on average, with a white majority (Table 2).

Table 2.

Description of Patient Population

| | Admissions N=55,611 | Clinic visits N=39,595 |
|---|---|---|
| Age (median, IQR) | 56 (42-67) | 57 (44-69) |
| Gender* N (%) | | |
| Female | 26263 (47.2) | 24144 (61.0) |
| Male | 29345 (52.8) | 15451 (39.0) |
| Race** N (%) | | |
| White | 44719 (80.4) | 29207 (73.8) |
| Black | 8102 (14.6) | 5719 (14.4) |
| Other | 631 (1.1) | 911 (2.3) |
| Years of education† N (%) | | |
| Less than high school | 7115 (15.9) | 1254 (5.3) |
| High school grad | 17754 (39.8) | 6697 (28.1) |
| Some college | 10578 (23.7) | 5354 (22.5) |
| College grad or higher | 9206 (20.6) | 10539 (44.2) |
| Number of admissions or clinic visits per patient‡ (median, IQR) | 1 (1-2) | 2 (2-4) |

Values correspond to encounters and are presented as n (%) unless noted otherwise.

*Gender for admissions has 3 missing responses.

**Other race includes Asian, Native American, and Pacific Islander. Unknown, declined and missing race account for 2159 (3.9%) hospitalizations and 3758 (9.5%) clinic visits.

†Education is reported for valid responses only. For admissions, 44653 (80.3%) had valid responses for education. For clinic visits, 23844 (60.2%) had valid responses for education.

‡Timeframe for hospital patients is November 2010 through April 2012. For clinic patients, it is November 2010 through September 2011 in 2 of the 3 clinics, and May 2011 through September 2011 in the third clinic.

Outcome Assessment

Acceptability and Adoption

Nursing and administrative leaders actively supported and helped guide the implementation of health literacy screening. In October 2010, the new learning readiness section was rolled out to VUH. The new educational assessment was rolled out to two of the primary care practices in November 2010, and to the third in May 2011. Administrators and nursing leaders provided positive feedback on having the new health literacy screening tool in the EHR.

Appropriateness

Through individual conversations and group discussions with nursing staff, frontline nurses reported that the new assessment was more useful in tailoring education to the patient's needs. Nurses also reported that patients responded well to the new questions and that they were easier to ask than the previous item (“Can you read and write?”). These nurses did, however, note that the length of the third BHLS item was difficult for many patients. Nurses reportedly often had to repeat or reword the item for patients to understand and respond appropriately, although we do not have data on specific patients who had trouble with this item. Some nurses identified a need for additional training on communication techniques for patients they screened as having low health literacy, as well as appropriate patient education resources; these have since been developed.

Feasibility

After the BHLS was implemented, we assessed the completion rate. As shown in Figure 3, uptake in the hospital was rapid, reaching 90% within two months of rollout. As shown in Table 3, in the hospital, an Adult Nursing Admission History was started for 51,063 (91.8%) of all admissions; among these, all three BHLS items were completed for 46,862 (91.8%). In the clinics, all three BHLS items were completed for 15,434 (66.6%) of the 23,186 patients.

Figure 3.

New Learning Readiness section of Adult Nursing Admission History

Table 3.

Overall Completion of BHLS* Items Among Hospital Patients with an Adult Nursing Admission History and Clinic Patients with a Clinic Intake Form

| | Hospital (N=51063 Patients) N (%) | Clinic (N=23186 Patients) N (%) |
|---|---|---|
| All 3 items completed | 46862 (91.8) | 15434 (66.6) |
| Confidence with forms completed | 47432 (92.9) | 15543 (67.0) |
| Help reading completed | 47293 (92.6) | 15536 (67.0) |
| Problems learning completed | 47199 (92.4) | 15541 (67.0) |

*BHLS = Brief Health Literacy Screen
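The completion percentages reported in the text and in Table 3 can be checked with simple arithmetic. The sketch below uses only the counts given above; the helper and variable names are illustrative.

```python
def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place, as reported in the tables."""
    return round(100 * numerator / denominator, 1)


admissions = 55_611        # adult admissions during the study period
forms_started = 51_063     # admissions with an Adult Nursing Admission History
clinic_patients = 23_186   # clinic patients with a Clinic Intake form

hospital_form_rate = pct(forms_started, admissions)   # share of admissions with a form started
hospital_all_items = pct(46_862, forms_started)       # all three BHLS items completed, hospital
clinic_all_items = pct(15_434, clinic_patients)       # all three BHLS items completed, clinics
```

Note that the hospital denominator for item completion is the number of admissions with a form started, not the total number of admissions.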

Fidelity

Interviews with staff nurses and direct observations of nurses revealed variability in practice. We encouraged nurses to read the questions and possible responses verbatim; however, a number of nurses admitted to shortening the question(s) and/or not listing the possible responses. Among samples of clinic (n = 300) and hospital (n = 500) patients, we compared item responses obtained by the nurses to responses obtained by research assistants (RAs). Percent agreement is defined as the number of responses that were the same across the nurse and RA administrations of the BHLS, out of the total number of responses. Table 4 shows hospital and clinic agreement rates for each item, both for individual responses (overall agreement) and for responses dichotomized as low versus adequate health literacy (agreement by category).

Table 4.

Agreement Between Nurse-Administered and RA-Administered BHLS Scores, Overall and by Category*

| | Overall Outpatient Agreement % (95% CI) | Outpatient Agreement by Category % (95% CI) | Overall Inpatient Agreement % (95% CI) | Inpatient Agreement by Category % (95% CI) |
|---|---|---|---|---|
| Confident with forms | 72.7 (67.4, 77.5) | 95.3 (92.2, 97.2) | 47.9 (43.5, 52.3) | 74.3 (70.3, 78.0) |
| Help reading | 78.8 (73.9, 83.1) | 89.9 (86.0, 92.9) | 47.1 (42.8, 51.5) | 68.3 (64.1, 72.3) |
| Problems learning | 73.2 (67.9, 77.9) | 86.9 (82.6, 90.3) | 47.9 (43.5, 52.3) | 69.5 (65.4, 73.4) |

Agreement by category shows the % agreement when responses are dichotomized to low health literacy vs. adequate health literacy.
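The two agreement measures can be illustrated with a short sketch. It assumes that each item is scored from 1 to 5 and that dichotomization uses the cutoff of 3 or lower described for the BHLS; the function names and example scores are hypothetical, and confidence intervals are not computed here.

```python
def percent_agreement(nurse_values, ra_values):
    """Share of paired responses that are identical, as a percentage."""
    if len(nurse_values) != len(ra_values) or len(nurse_values) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    same = sum(n == r for n, r in zip(nurse_values, ra_values))
    return 100 * same / len(nurse_values)


def agreement_by_category(nurse_scores, ra_scores, cutoff=3):
    """Agreement after dichotomizing scores into low (<= cutoff) versus adequate."""
    nurse_low = [score <= cutoff for score in nurse_scores]
    ra_low = [score <= cutoff for score in ra_scores]
    return percent_agreement(nurse_low, ra_low)


# Hypothetical paired scores for one item from five patients.
nurse = [5, 4, 3, 2, 5]
ra = [5, 5, 2, 2, 4]

overall = percent_agreement(nurse, ra)          # exact matches on 2 of 5 pairs
by_category = agreement_by_category(nurse, ra)  # same category on all 5 pairs
```

The example shows why agreement by category is higher than overall agreement: a one-point difference between raters counts as disagreement overall but often leaves the patient in the same category.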

Sustainability

Completion rates during the study period for the hospital (November 2010–April 2012) ranged from 90.1% to 94.4% per month (Figure 3). Overall completion rate for the clinics was 61.1% during the first month of implementation (November 2010) and 39.2% in the final month of data collection (September 2011).

Discussion

We applied a multicomponent implementation strategy to incorporate a structured health literacy screening tool into routine nursing assessments in both hospital and primary care settings. To our knowledge, this is the first published report of systematic health literacy assessment in clinical practice, and the first to incorporate results into health care information systems as recommended by the IOM.1

Some have argued that clinical assessment of health literacy is not yet warranted.27 However, most of these concerns relate to assessing health literacy with test-like research tools, such as the Rapid Estimate of Adult Literacy in Medicine (REALM) or the Test of Functional Health Literacy in Adults (TOFHLA), as opposed to short screening items that are more acceptable to patients. The BHLS has been given previously to thousands of patients, without significant issues reported.17,18,28-31 Other studies have specifically established the acceptability to patients of health literacy screening, particularly if done in a private area rather than in a waiting room.16,32 Moreover, in two investigations, 94% of patients felt that it would be useful for their physicians and nurses to know if they were having difficulties related to low health literacy.33,34 Thus, while we advocate for a “Universal Precautions” approach in which all patients are provided health information that is easy to understand,6 we believe that it is reasonable to proceed with health literacy screening in a sensitive manner, using appropriate instruments such as the BHLS, to identify patients who may need additional assistance. Importantly, health literacy assessment has also enabled our medical center to raise staff awareness of low health literacy, develop appropriate resources, conduct large-scale research on the consequences of low health literacy, and assess the effect of system interventions by patients’ level of health literacy. Our research team is performing other work with the BHLS, including a detailed assessment of psychometric properties and association with measures of disease control and health outcomes.20,21

Nurses in both settings (hospital and clinics) adopted the new process quickly and reported it as beneficial for patient education discussions. The hospital setting had rapid uptake of the BHLS and demonstrated sustained completion rates of greater than 90%. The clinic setting demonstrated higher agreement rates with research staff but lower completion rates and sustainability. Several factors may account for these results.

A major facilitator of the BHLS adoption and acceptability was leadership support and the integration of all phases of the project into the existing infrastructure and work flows. As clinicians within the medical center, we had an appreciation for the potential barriers and facilitators to implementation and therefore designed the process to integrate easily into existing patient intake and documentation procedures. Second, we spent several months collaborating with administrative and clinical leaders in both the planning and implementation. These key stakeholders remained actively engaged, and some of them coached their personnel with our feedback. Third, we capitalized on established nurse education processes to disseminate information about the new screening tool. Fourth, we utilized existing methods for monitoring and feedback, and those mechanisms remain available for ongoing use. The evaluation was not designed to discern which of these factors had the greatest impact on adoption. However, most implementation frameworks incorporate multiple strategies on the basis of the complexity of changing practice behavior and do not seek to test implementation strategies head to head.35,36

There were also several challenges, primarily that of educating and disseminating details on the change in practice to approximately 5,000 nurses. We used a number of strategies that capitalized on the existing nursing education infrastructure: web pages, video, staff meetings, e-mail communication, a printed handout, and a train-the-trainer model. We did not measure the fidelity of the nurse educators in delivering the education but did observe variability in the frequency and amount of the education they provided. Thus, it is likely that not all nurses knew of the documentation change or of the preferred techniques to administer the items until they appeared in the EHR. Unit variation was unaccounted for in our implementation. For example, admitting procedures may differ across surgical, medical, and ICUs because of differences in acuity of illness. Each hospital unit is allowed to customize exactly when the adult nursing admission history, and therefore the BHLS, is completed, although it is generally within the first 24 hours of hospitalization. These differences in amount of training received and daily work flows across hospital units may explain the lack of agreement at times between nurses’ and RAs’ administration of the BHLS items.

We also observed differences between hospital and clinic settings, including differences in completion rate and agreement between nurses’ and RAs’ administration. In the clinics, the smaller number of staff made it possible for us to meet with everyone during project implementation, in contrast to the hospital, where different methods of disseminating information were required. In the clinics, there was also little time between nurses’ and RAs’ administration of the BHLS, compared to the hospital (0.5–1 hour versus 24–72 hours, respectively). These factors may partially account for higher agreement rates in the clinic. However, interrater and test-retest reliability of the BHLS have not been previously reported, and it is not known what effect acute illness may have on patient responses, so other contextual factors may also be at play. A more detailed psychometric evaluation of the BHLS in these settings is reported elsewhere, demonstrating it to be a valid indicator of health literacy in comparison to a reference standard, the s-TOFHLA.21

The difference in sustainability across settings is largely explained by differences in existing requirements for completion, as well as by different monitoring and feedback processes. In the hospital, completion of the “learning readiness” section was required at every admission, and processes were already in place to take corrective action if completion rates dropped too low. In the clinics, the educational assessment is recommended only once a year for each patient, and there were no existing mechanisms for feedback.

As this initiative involved changing the existing practices of nursing staff, discussions with nurses in the hospital and clinic settings provided us with important information on the barriers to and facilitators of implementing a health literacy screening tool, which may inform future work, even in settings with a different patient population. Several of the nursing groups involved in planning and education expressed a desire for more training on how to improve their communication with patients identified as having low health literacy, and they highlighted a lack of standardized, health literacy-sensitive patient education tools. Indeed, it is important to be able to respond appropriately when a patient is identified as having low health literacy. Parallel to this project and as part of a broader effort to become a more health literate organization,11 the medical center created a Department of Patient Education, which has supported communication skills training for clinical staff and disseminated an extensive library of print and multimedia patient education tools. In addition, nurses in the clinic highlighted time pressure as a significant barrier, because intake must be completed rapidly to maintain clinic flow. Acknowledging competing pressures and other contextual factors is essential in planning and implementation.

Summary

It is feasible to implement brief health literacy screening in routine clinical practice in both hospital and clinic settings. Further studies are needed to determine the most effective strategies for dissemination, monitoring, and feedback to maximize screening completion rates and fidelity of administration. Incorporating health literacy assessment into patients’ EHRs will not only facilitate large-scale research on the effect of low health literacy on processes and outcomes of care but will also enable further development and targeted dissemination of patient education resources that meet the needs of adults with low health literacy.

Supplementary Material

Figure 4. New Educational Assessment section of Clinic Intake form

Acknowledgments

The Health Literacy Screening study was supported by the National Heart, Lung and Blood Institute of the National Institutes of Health under Award Number R21 HL096581 (Dr. Kripalani), and in part by the Vanderbilt Innovation and Discovery in Engineering And Science (IDEAS) Program (Dr. Kripalani). Dr. Willens was supported by the VA Quality Scholars Fellowship Program. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. The authors acknowledge and thank Pam Jones, Marcella Woods, and Ioana Danciu for their support and contribution to project implementation.

Footnotes

Provision of Care (PC) Standard PC.02.01.21: “The hospital effectively communicates with patients when providing care, treatment, and services.” The standard complements Rights and Responsibilities of the Individual (RI) Standard RI.01.01.01: “The hospital respects, protects, and promotes patient rights,” Element of Performance 5: “The hospital respects the patient's right to and need for effective communication.”

Contributor Information

Courtney Cawthon, Center for Health Services Research, Vanderbilt University, Nashville, TN.

Lorraine C. Mion, Vanderbilt University School of Nursing, Nashville, Tennessee, and a member of The Joint Commission Journal on Quality and Patient Safety's Editorial Advisory Board.

David E. Willens, VA Quality Scholars Research Fellow at the Veterans Affairs Tennessee Valley Healthcare System, Nashville, TN, is the Associate Program Director and Internal Medicine Residency Senior Staff Physician at Henry Ford Health System, Detroit, MI.

Christianne L. Roumie, Veterans Affairs Tennessee Valley Healthcare System, Geriatric Research Education Clinical Center (GRECC), HSR&D, Nashville, TN, and an Assistant Professor at Vanderbilt University, Nashville, TN.

Sunil Kripalani, Chief of the Section of Hospital Medicine, and Director of the Effective Health Communication Core at Vanderbilt University, Nashville, TN.

References

- 1. Institute of Medicine. Health Literacy: A Prescription to End Confusion. National Academies Press; Washington, DC: 2004.

- 2. Kutner M, Greenberg E, Jin Y, Paulsen C. The Health Literacy of America's Adults: Results from the 2003 National Assessment of Adult Literacy (NCES 2006-483). U.S. Department of Education, National Center for Education Statistics; Washington, DC: 2006.

- 3. DeWalt DA, Berkman ND, Sheridan S, Lohr KN, Pignone MP. Literacy and health outcomes: a systematic review of the literature. J Gen Intern Med. 2004;19(12):1129–1139. doi: 10.1111/j.1525-1497.2004.40153.x.

- 4. Berkman ND, Sheridan SL, Donahue KE, Halpern DJ, Crotty K. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med. 2011;155(2):97–107. doi: 10.7326/0003-4819-155-2-201107190-00005.

- 5. U.S. Department of Health and Human Services, Office of Disease Prevention and Health Promotion. National Action Plan to Improve Health Literacy. Washington, DC: 2010.

- 6. DeWalt DA, Callahan LF, Hawk VH, et al. Health Literacy Universal Precautions Toolkit. (Prepared by North Carolina Network Consortium, The Cecil G. Sheps Center for Health Services Research, The University of North Carolina at Chapel Hill, under Contract No. HHSA290200710014.) AHRQ Publication No. 10-0046-EF. Agency for Healthcare Research and Quality; Rockville, MD: 2010.

- 7. Joint Commission. Addressing Patients’ Health Literacy Needs. 2009.

- 8. Weiss BD. Health Literacy: Help Your Patients Understand. American Medical Association Foundation and American Medical Association; Chicago, IL: 2003.

- 9. Centers for Disease Control and Prevention. Health Literacy for Public Health Professionals. Available at www.cdc.gov/healthliteracy/training. Accessed Jan 2, 2013.

- 10. Joint Commission. “What Did the Doctor Say?:” Improving Health Literacy to Protect Patient Safety. 2007. Available at http://www.jointcommission.org/What_Did_the_Doctor_Say/. Accessed February 15, 2013.

- 11. Brach C, Keller D, Hernandez LM, et al. Ten Attributes of Health Literate Health Care Organizations. IOM Roundtable on Health Literacy. 2012 Jun.

- 12. Joint Commission. Provision of Care, Treatment, and Services. Hospital standards, PC-02.01.21, Effective July 1, 2012. The Joint Commission E-dition. 2012. Accessed July 27.

- 13. Speros CI. Promoting health literacy: a nursing imperative. Nurs Clin North Am. 2011;46(3):321–333. doi: 10.1016/j.cnur.2011.05.007.

- 14. Davis TC, Kennen EM, Gazmararian JA, Williams MV. Literacy testing in health care research. In: Schwartzberg JG, VanGeest JB, Wang CC, editors. Understanding Health Literacy. American Medical Association; Chicago: 2005. pp. 157–179.

- 15. Powers BJ, Trinh JV, Bosworth HB. Can this patient read and understand written health information? JAMA. 2010;304(1):76–84. doi: 10.1001/jama.2010.896.

- 16. Ryan JG, Leguen F, Weiss BD, et al. Will patients agree to have their literacy skills assessed in clinical practice? Health Educ Res. 2008;23(4):603–611. doi: 10.1093/her/cym051.

- 17. Chew LD, Bradley KA, Boyko EJ. Brief questions to identify patients with inadequate health literacy. Family Medicine. 2004;36(8):588–594.

- 18. Chew LD, Griffin JM, Partin MR, et al. Validation of screening questions for limited health literacy in a large VA outpatient population. J Gen Intern Med. 2008;23(5):561–566. doi: 10.1007/s11606-008-0520-5.

- 19. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76. doi: 10.1007/s10488-010-0319-7.

- 20. Willens D, Kripalani S, Schildcrout J, et al. Association of brief health literacy screening and blood pressure in primary care patients with hypertension. Journal of Health Communication. In press. doi: 10.1080/10810730.2013.825663.

- 21. Wallston K, Cawthon C, McNaughton CD, Rothman R, Osborn CY, Kripalani S. Psychometric properties of the brief health literacy screen in clinical practice. Manuscript under review. doi: 10.1007/s11606-013-2568-0.

- 22. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)--a metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics. 2009;42(2):377–381. doi: 10.1016/j.jbi.2008.08.010.

- 23. Parikh NS, Parker RM, Nurss JR, Baker DW, Williams MV. Shame and health literacy: the unspoken connection. Patient Educ Couns. 1996;27:33–39. doi: 10.1016/0738-3991(95)00787-3.

- 24. Kripalani S, Weiss BD. Teaching about health literacy and clear communication. J Gen Intern Med. 2006;21:888–890. doi: 10.1111/j.1525-1497.2006.00543.x.

- 25. Cornett S. Assessing and addressing health literacy. OJIN: The Online Journal of Issues in Nursing. 2009;14(3).

- 26. Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B. Implementation research in mental health services: an emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Health. 2009;36(1):24–34. doi: 10.1007/s10488-008-0197-4.

- 27. Paasche-Orlow MK, Wolf MS. Evidence does not support clinical screening of literacy. J Gen Intern Med. 2008;23(1):100–102. doi: 10.1007/s11606-007-0447-2.

- 28. Chew LD, Bradley KA, Flum DR, Cornia PB, Koepsell TD. The impact of low health literacy on surgical practice. American Journal of Surgery. 2004;188(3):250–253. doi: 10.1016/j.amjsurg.2004.04.005.

- 29. Sarkar U, Schillinger D, Lopez A, Sudore R. Validation of self-reported health literacy questions among diverse English and Spanish-speaking populations. J Gen Intern Med. 2011;26(3):265–271. doi: 10.1007/s11606-010-1552-1.

- 30. Wallace LS, Cassada DC, Rogers ES, et al. Can screening items identify surgery patients at risk of limited health literacy? Journal of Surgical Research. 2007;140(2):208–213. doi: 10.1016/j.jss.2007.01.029.

- 31. Wallace LS, Rogers ES, Roskos SE, Holiday DB, Weiss BD. Brief report: screening items to identify patients with limited health literacy skills. J Gen Intern Med. 2006;21(8):874–877. doi: 10.1111/j.1525-1497.2006.00532.x.

- 32. Farrell TW, Chandran R, Gramling R. Understanding the role of shame in the clinical assessment of health literacy. Family Medicine. 2008;40(4):235–236.

- 33. Seligman HK, Wang FF, Palacios JL, et al. Physician notification of their diabetes patients’ limited health literacy. A randomized, controlled trial. J Gen Intern Med. 2005;20(11):1001–1007. doi: 10.1111/j.1525-1497.2005.00189.x.

- 34. Wolf MS, Williams MV, Parker RM, Parikh NS, Nowlan AW, Baker DW. Patients’ shame and attitudes toward discussing the results of literacy screening. Journal of Health Communication. 2007;12(8):721–732. doi: 10.1080/10810730701672173.

- 35. Grimshaw JM, Shirran L, Thomas R, et al. Changing provider behavior: an overview of systematic reviews of interventions. Med Care. 2001;39(8 Suppl 2):II2–45.

- 36. Nelson EC, Batalden PB, Godfrey MM, editors. Quality by Design: A Clinical Microsystems Approach. Jossey-Bass; San Francisco: 2007.
