INTRODUCTION

The healthcare environment is undergoing rapid change, whether secondary to healthcare reform (1-3), natural organic changes, or accelerated technological advances. The economics of healthcare, changes in the demographics of our population, and the rapidly evolving socioeconomic environment all contribute to a world that presents the radiologist with new challenges. New models of healthcare, including Accountable Care Organizations, are emerging (4). Our profession must adapt; the traditional approach to delivering imaging services may not be viable. Despite these challenges, new opportunities are emerging in parallel. New and exciting information technologies (IT) can help improve our patients' health and position our profession to better tackle the challenges that lie ahead.

We will argue that new informatics tools and developments can help the radiology profession respond to the drive for safety, quality and efficiency. New research realms, both clinical and molecular, require sophisticated informatics tools. The health of the individual and an emerging focus on population health require IT solutions. We will start with a description of some fundamental informatics building blocks and progress to explore new and rapidly evolving applications of interest to radiologists.

A brief look backwards

Although now commonplace, Radiology Information Systems (RIS) and Picture Archiving and Communications Systems (PACS) are relatively recent developments. In 1983 the American College of Radiology (ACR) and the National Electrical Manufacturers Association (NEMA) first convened a joint committee to develop the ACR-NEMA standard (5), which was first published in 1985. In 1993 the rapid rise in the number of digital modalities and the parallel development of robust networking technology prompted the development of Digital Imaging and Communications in Medicine (DICOM) 3.0 (6).

Consider how images, including cross-sectional exams of several hundred images, were viewed before RIS and PACS. How were they displayed, archived, and moved about a department? We had film, darkrooms, light boxes, multi-changers, and film libraries requiring numerous personnel. How were copies provided for consultation? How did clinicians see the exams they ordered? Historical exams were often stored off site and not available for days. Exams were often “borrowed” and out of circulation, or lost outright. How did one manage an office or a department, schedule exams, or bill for services? These steps took place at a much slower pace than they do today.

Our new technologies have been “disruptive”. Certain jobs, such as file room clerk, have disappeared. The number of “schedulers” has usually diminished. The number of radiologists required to read a defined volume of exams has also diminished, as PACS has increased productivity.

Into the future!

We are in the midst of another paradigm shift, fostered by the rapid emergence and improvement of networking technologies. “Cloud Computing” encompasses new technologies and services that are often the basis for the developments discussed below (7-9). The term covers a wide variety of services available over a network, often the internet, including access to hardware platforms and applications. In healthcare, security and confidentiality are of particular importance. Cloud Computing has already begun to strongly influence the world of radiology. In addition, wireless technologies, including smartphones and tablets, are quickly becoming tools used daily by radiologists and clinicians. Although we will not deal extensively with portable devices, many of the applications we describe below will find their way onto such platforms.

Processing power available at a reasonable cost has also increased rapidly. This has enabled several technologies to appear on our desktops as well as on portable devices. A standard desktop computer can run voice recognition dictation systems with self-editing. Post-processing solutions can run on off-the-shelf equipment. Fifteen years ago these services required extremely expensive processing and were affordable to only a few. Many applications are being delivered as “server-side” solutions. Here the workstation (or local client computer) almost becomes a “dumb terminal”, with most of the processing performed on a more powerful central server; the end product is distributed to the local workstation. Server technology itself is rapidly changing. We are in the era of the “virtual machine”: one server hosts the equivalent of multiple standalone servers, making fuller use of the processing power of that single device.

Radiology Practice: Current State and into the Next Decade

We Order, Schedule, Interpret, Report, Archive, Bill, and Share (exchange) the data we generate. We then close the circle by performing Quality Analytics and Research on this data to improve our performance and advance our knowledge. We educate trainees and certified radiologists. Table I lists these processes and some of the informatics tools employed to perform these tasks. Below we will review each of these activities, starting with the informatics tools that enhance our abilities to address the challenges we face.

Table I.

Workflow and IT tools.

| Task | IT Tool | Description |
|---|---|---|
| Order & Schedule | | |
| | EMR: Radiology Order Entry Clinical Decision Support (CDS) | The right exam for the right reason |
| | RadLex Playbook | Standard exam dictionary |
| Interpretation | | |
| | Post-Processing | Thin client; integrated into PACS |
| | Cloud-Based Post-Processing | High-end shared services |
| | Computer Assisted Diagnosis (CAD) | |
| | Radiologist Decision Support | Online tools; point of service |
| Reporting | | |
| | Structured Reporting | Common, reproducible ways of ensuring that certain pieces of information are always present |
| | Natural Language Processing (NLP) | Data mining of free text |
| | Annotation and Image Markup (AIM) | Discrete information within the image rather than the report |
| Archive | | Storage |
| | Local | |
| | Enterprise | |
| | Cloud | Economies of scale; disaster recovery |
| | Vendor Neutral Archive | Multiple sources |
| Image/Report Exchange | | Images and reports securely anywhere, anytime |
| | Health Information Exchange (HIE) | |
| | Personal Health Record (PHR) | |
| | Smartphones/Tablets | |
| Quality | | |
| | Peer Review | |
| | Radiation Dosimetry | |
| | Regulatory Reporting/Certification | |
| Research | | |
| | Comparative Effectiveness | |
| | Data Mining | Metadata, NLP |
| Education | | |
| | Interactive | Audience participation |
| | Sharable Content Object Reference Model (SCORM) | Repurposed, tailored to the individual |
| | Real-time | During the interpretation |

I. INFORMATICS TOOLS- THE FUNDAMENTAL BUILDING BLOCKS

Here we will discuss the technologies used to build radiology IT solutions. Many of these will reappear in later sections as we discuss specific solutions and their role in a radiology department or office.

Standards

Radiology IT developments have been enabled by the existence of standards. Not only must the standards exist, but the broader community must agree to use them. PACS could not have happened without the eventual general acceptance of the DICOM 3 standard. Digital modalities such as computed tomography (CT) and magnetic resonance (MR) existed before DICOM became firmly entrenched. However, the archiving and transport of those images was manual and chaotic until vendors uniformly subscribed to this standard. The same applies to RIS. Health Level Seven (HL7) is the standard used to communicate much of the textual and numeric data, including demographics and reports. Because of these standard protocols, one vendor's system can be interfaced to another's.
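
To make the HL7 point concrete, the sketch below splits a pipe-delimited HL7 v2 message into segments, fields, and components. It is a minimal illustration, not a production parser. It ignores escape sequences, field repetitions (`~`), and the encoding characters declared in MSH-2. The sample message, facility names, and helper names (`parse_hl7`, `get_field`) are hypothetical.

```python
# Minimal HL7 v2 sketch (not a full parser): segments are separated by
# carriage returns, fields by '|', and components by '^'.

def parse_hl7(message: str) -> dict:
    """Return {segment_id: [list_of_fields, ...]} for each segment type."""
    segments: dict = {}
    for raw in message.strip().split("\r"):
        if raw:
            fields = raw.split("|")
            segments.setdefault(fields[0], []).append(fields)
    return segments


def get_field(segments: dict, seg_id: str, index: int, component: int = 0) -> str:
    """Return field `index` (1-based, HL7 numbering) of the first `seg_id` segment.

    If `component` is non-zero (1-based), return only that '^'-delimited component.
    """
    fields = segments[seg_id][0]
    # In MSH the '|' separator itself counts as MSH-1, so MSH-n sits at fields[n - 1];
    # in other segments fields[0] is the segment ID and field n sits at fields[n].
    pos = index - 1 if seg_id == "MSH" else index
    value = fields[pos] if pos < len(fields) else ""
    if not component:
        return value
    parts = value.split("^")
    return parts[component - 1] if component <= len(parts) else ""


# Hypothetical result message sent from a RIS to an EMR.
SAMPLE = "\r".join([
    "MSH|^~\\&|RIS|HOSP|EMR|HOSP|202401011200||ORU^R01|MSG0001|P|2.3",
    "PID|1||123456||DOE^JANE",
    "OBR|1||ACC789|CTHEAD^CT Head without contrast",
    "OBX|1|TX|||No acute intracranial abnormality.",
])

segs = parse_hl7(SAMPLE)
print(get_field(segs, "MSH", 9))     # message type
print(get_field(segs, "PID", 5, 1))  # patient family name
```

Because both systems agree on where each field lives, the receiving system can locate the patient name or report text without any custom mapping. This is the interoperability the standard provides.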

While HL7 and DICOM 3 are probably the best known standards in our industry, systems employ other standards to provide interoperability as well. Sometimes multiple standards are available to accomplish a given task. Engineers may be familiar with all the relevant standards, but because the standards were not uniformly adopted, they have historically needed to build custom interfaces to allow systems to exchange information. Integrating the Healthcare Enterprise (IHE) (10) is an organization with the goal of achieving transparent interoperability. IHE has multiple domains that examine common healthcare workflows and the available standards. Voluntary collaboration on the part of vendors and end users results in the development of “IHE profiles”. These profiles describe a means of applying a group of standards to a given workflow. When vendors agree to follow these profiles, the result is transparent interoperability between systems (11): true plug-and-play functionality that reduces costs for everyone.

Standardized terminology

We need a standardized vocabulary (also called terminology or lexicon) if we are to develop smart systems capable of executing transactions, interpreting reports, and performing data mining. Some examples will illustrate the need for a radiology lexicon/terminology.

How can we measure the report “turnaround time” for radiologists in a practice? Today this is difficult without a standardized terminology. Does “turnaround time” refer to the time from order entry to final signature, from exam completion to a preliminary dictation, or to some other combination of events?
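
The ambiguity is easy to see in code. In this sketch the exam timestamps and definition names are hypothetical. The same exam yields three different “turnaround times” depending on which pair of events is measured. A shared terminology is what fixes those event names.

```python
from datetime import datetime

# Hypothetical workflow timestamps for a single exam.
exam = {
    "ordered": datetime(2024, 1, 1, 8, 0),
    "completed": datetime(2024, 1, 1, 9, 30),
    "preliminary": datetime(2024, 1, 1, 10, 0),
    "final_signed": datetime(2024, 1, 1, 12, 0),
}

# Candidate definitions of "turnaround time": (start event, end event).
TAT_DEFINITIONS = {
    "order_to_final": ("ordered", "final_signed"),
    "complete_to_prelim": ("completed", "preliminary"),
    "complete_to_final": ("completed", "final_signed"),
}


def turnaround_minutes(exam: dict, definition: str) -> float:
    """Minutes elapsed between the two events named by `definition`."""
    start, end = TAT_DEFINITIONS[definition]
    return (exam[end] - exam[start]).total_seconds() / 60


for name in TAT_DEFINITIONS:
    print(name, turnaround_minutes(exam, name))
```

Two practices reporting a single “turnaround time” can only be compared if both use the same definition.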

This became a problem for the ACR in establishing its Dose Index Registry. The ACR wished to collect and compare dose data for “head CTs” from participating radiology practices. It discovered that, across 60 facilities, over 1400 names containing “head” or “brain” were applied to what is essentially the same CT examination of the brain (12, Personal Communication-Richard L. Morin, Ph.D., F.A.C.R.). A standard terminology would help resolve this problem. A terminology may designate preferred terms and, where many names exist for the same concept, identify them as synonyms (13). Healthcare has yet to fully adopt a single terminology, but several are emerging as the primary contenders. These include the Systematized Nomenclature of Medicine-Clinical Terms (SNOMED CT), a comprehensive clinical terminology originally created by the College of American Pathologists (CAP) and distributed in the United States through the National Library of Medicine. Another terminology, used to code laboratory and clinical observations, is the Logical Observation Identifiers Names and Codes (LOINC).
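
A terminology's synonym list can be applied mechanically. The sketch below normalizes free-text exam names and maps known variants to one preferred term. The synonym table and preferred term are hypothetical stand-ins, not actual RadLex, SNOMED CT, or LOINC content.

```python
import re
from typing import Optional

# Hypothetical synonym table: preferred term -> locally used variants.
SYNONYMS = {
    "CT Head without contrast": [
        "ct head wo",
        "ct brain w/o contrast",
        "head ct non contrast",
        "noncontrast ct brain",
    ],
}


def normalize(name: str) -> str:
    """Lowercase, replace punctuation (except '/') with spaces, collapse whitespace."""
    name = re.sub(r"[^a-z0-9/ ]+", " ", name.lower())
    return re.sub(r"\s+", " ", name).strip()


# Reverse index from normalized variant to preferred term.
LOOKUP = {
    normalize(variant): preferred
    for preferred, variants in SYNONYMS.items()
    for variant in variants
}


def to_preferred(raw_name: str) -> Optional[str]:
    """Return the preferred term for a raw exam name, or None if it is unknown."""
    return LOOKUP.get(normalize(raw_name))


names = ["CT BRAIN W/O CONTRAST", "Head CT (non-contrast)", "CT head wo"]
print({to_preferred(n) for n in names})  # three local names, one concept
```

Unmatched names return `None`. These are the candidates a terminology team would review and add as synonyms, much as RadLex collects missing terms.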

Organized radiology has recognized the need for a standardized terminology focused on our daily work, since some terms and relationships unique to radiology are not included in the lexicons above. Perhaps the best known radiology lexicon is the one included in the Breast Imaging Reporting and Data System (BI-RADS®), a quality assurance tool developed by the ACR. It prescribes how mammograms should be described and the terminology to be used for describing imaging features and the suspicion of malignancy. The Radiological Society of North America (RSNA) has sponsored a project to develop RadLex® (13), a radiology terminology. It is an early effort and there are some gaps in its content; terms not included in RadLex are continually collected and incorporated (14). A standardized vocabulary is a powerful enabler. Even at this early stage, we will see multiple examples below of the value a radiology terminology brings (15-18).

Image Metadata

An overarching informatics goal is to expose information in a computable format. Once information is in such a format, systems can perform tasks that are mundane or that are simply beyond human capability. Some of this information is available as “image metadata,” the “quantitative” and “semantic” information contained in an image that reflects its content. The quantitative information includes measurements of abnormalities, calculations within regions of interest (ROIs), and numerical features extracted from images by a computer. Semantic information refers to the type of image, the imaging plane, the imaging features observed by the radiologist, and the anatomic structures in which those features are located. Imaging informatics tools may be used to build applications that use image metadata as their source data. For example, an application that automatically embeds the dose information from an imaging study into the radiology report would need to access the study identifier, the name and type of study (semantic data), and the dose information (quantitative data).
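
The dose-embedding example can be sketched in a few lines. This assumes the study identifier, exam name, modality, and two common CT dose descriptors (CTDIvol and dose-length product) are already available as metadata. The class, function names, and values are hypothetical and illustrative.

```python
from dataclasses import dataclass


@dataclass
class StudyMetadata:
    accession: str        # study identifier
    exam_name: str        # semantic: name of the study
    modality: str         # semantic: type of study
    ctdi_vol_mgy: float   # quantitative: CTDIvol in mGy
    dlp_mgy_cm: float     # quantitative: dose-length product in mGy-cm


def dose_statement(meta: StudyMetadata) -> str:
    """Format the dose metadata as a report sentence."""
    return (f"RADIATION DOSE ({meta.accession}, {meta.exam_name}): "
            f"CTDIvol {meta.ctdi_vol_mgy:.1f} mGy; DLP {meta.dlp_mgy_cm:.0f} mGy-cm.")


def embed_dose(report_text: str, meta: StudyMetadata) -> str:
    """Append a dose statement to CT reports; leave other reports unchanged."""
    if meta.modality != "CT":
        return report_text
    return report_text.rstrip() + "\n\n" + dose_statement(meta)


meta = StudyMetadata("ACC789", "CT Head without contrast", "CT", 55.2, 980.0)
print(embed_dose("IMPRESSION: No acute intracranial abnormality.", meta))
```

The application needs both kinds of metadata. The semantic fields decide whether and where the statement applies, and the quantitative fields supply its content.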

In radiology, three evolving technologies, DICOM GSPS (Grayscale Softcopy Presentation State), DICOM SR (Structured Report), and AIM (Annotation and Image Markup), represent efforts to capture and expose metadata. They represent a transition from predominantly displaying graphics and measurements (DICOM GSPS) to both displaying these elements and exposing them in a form that enables analytics, data mining, and application development (AIM). AIM is arguably the most information rich of the three because it is built on a semantic model. The semantic model is the essence of AIM: it specifies the types of image metadata contained in an image, the value types of the metadata, and the relationships among those types. We will explore this further when considering the specifics of image interpretation and reporting.

II. IT INFRASTRUCTURE- THE UNDERPINNINGS OF RADIOLOGY OPERATIONS

Ordering, Scheduling, Exam Protocols, Billing

These processes are hardly new, but they continue to evolve as new technologies make them simpler and more efficient. IHE profiles, noted above, are but one approach to better orchestrating the use of IT tools in these domains. There are parallel efforts. One notable example comes from the Society for Imaging Informatics in Medicine (SIIM). Its TRIP™ (Transforming the Radiological Interpretation Process) (19) initiative and, most recently, its offshoot SWIM™ (SIIM Workflow Initiative in Medicine) (20) focus on this problem. SIIM and other professional associations and societies are all working to apply existing and new IT technologies to the daily operational issues faced in radiology practice.

There is growing recognition that imaging procedures should be standardized to some degree. For instance, a CT of the liver to exclude neoplasia is expected to include a particular mix of acquisitions. How might we automate the ordering, scheduling, and billing processes to meet this expectation? Most imaging departments and offices start with a chargemaster that includes an exam dictionary. A clinician orders from within an EMR, which could employ a radiology ordering module that includes a “standardized” exam dictionary. The RadLex Playbook is a project directed at developing an exam dictionary with an associated procedure naming grammar, all based on the RadLex terminology. This dictionary can be tied directly to a chargemaster, which should result in some harmonization of exam dictionaries and chargemasters across enterprises. The RadLex Playbook encompasses terms to describe the devices, imaging exams, and procedure steps performed in radiology.

Once we all agree on a standard exam dictionary, many efficiencies follow. Exam names would be consistent across all of healthcare. The order can be passed to a scheduling system and then to a specific modality through a DICOM service, the “Modality Worklist”. This service is commonly used to provide demographic information to a modality, eliminating the need for manual, error-prone input. In the near future the modality would recognize the exam name and, by convention, launch a preprogrammed protocol consisting of a standard set of imaging sequences. Consistent naming of exams would also make the entire billing process more straightforward by supporting relatively standard chargemasters.
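
Once exam names are standardized, the protocol-launch step described above reduces to a lookup. This sketch assumes a hypothetical worklist entry carrying the standardized exam name and a hypothetical site protocol library. It does not use a real DICOM toolkit, and the protocol contents are placeholders.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WorklistItem:
    patient_id: str
    patient_name: str
    accession: str
    procedure_name: str  # standardized exam name from the shared exam dictionary


# Hypothetical protocol library keyed by standardized exam name.
PROTOCOLS = {
    "CT Head without contrast": ["scout", "axial helical head"],
    "CT Abdomen Pelvis with IV contrast": ["scout", "portal venous phase abdomen pelvis"],
}


def select_protocol(item: WorklistItem) -> Optional[List[str]]:
    """Return the preprogrammed protocol for the exam, or None if the name is
    unknown (in which case the technologist would select a protocol manually)."""
    return PROTOCOLS.get(item.procedure_name)


item = WorklistItem("123456", "DOE^JANE", "ACC789", "CT Head without contrast")
print(select_protocol(item))
```

A non-standard name such as “Head CT plain” simply fails to match. That is why a shared exam dictionary has to come first.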

Radiology Order Entry Clinical Decision Support- CDS

Tools that assist the clinician in ordering the appropriate test have the potential to change the practice of medicine (21-25). When the clinician gets it right the patient benefits! There is a tremendous amount of information available for clinicians to absorb and integrate into their medical practices. Radiology Order Entry CDS is quickly emerging in the era of the EMR as the IT solution to bring this information forward to the clinician when needed.

Safety, quality, and cost are the drivers that have prompted introduction of this technology. The inappropriate utilization of imaging services in the US has been analyzed extensively. It imposes a significant economic burden (26-29). More importantly, it exposes the patient and the population to unnecessary and potentially harmful radiation (30-35), and an increased rate of neoplasia is a concern. Radiologists, drawing on their professional expertise, need to be the solution to this problem.

There are several causes of inappropriate utilization (26, 27, 36). Contributors include physician fear of malpractice litigation (defensive medicine), patient demand, financial incentives for inappropriate utilization, pressures to minimize the overall cost of an episode of care, and simple lack of knowledge (37). Repeat exams initiated by clinicians but not necessarily recommended by the radiologist (38), and self-referral on the part of non-radiologists (39), are further issues. Exams are also duplicated because a recent result and set of images are not available (27).

Several pilot programs have demonstrated that CDS at the time of order entry can reduce inappropriate exams (33, 40-45). A pilot study in Minnesota (33) demonstrated that imaging growth was curbed while the rate of indicated examinations simultaneously improved. An added benefit was speed: Radiology Benefit Manager (RBM) pre-certification required an average of 10 minutes of interaction, while the CDS required only 10 seconds.

Making CDS Operational

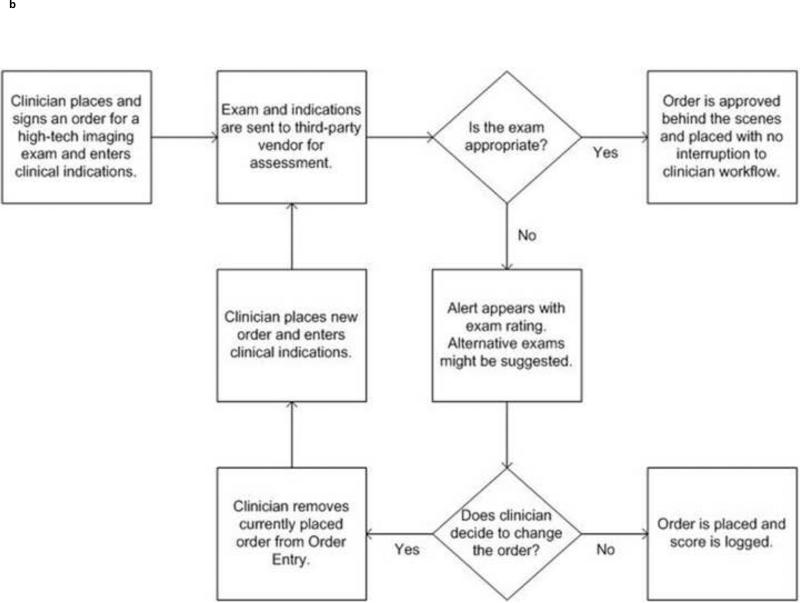

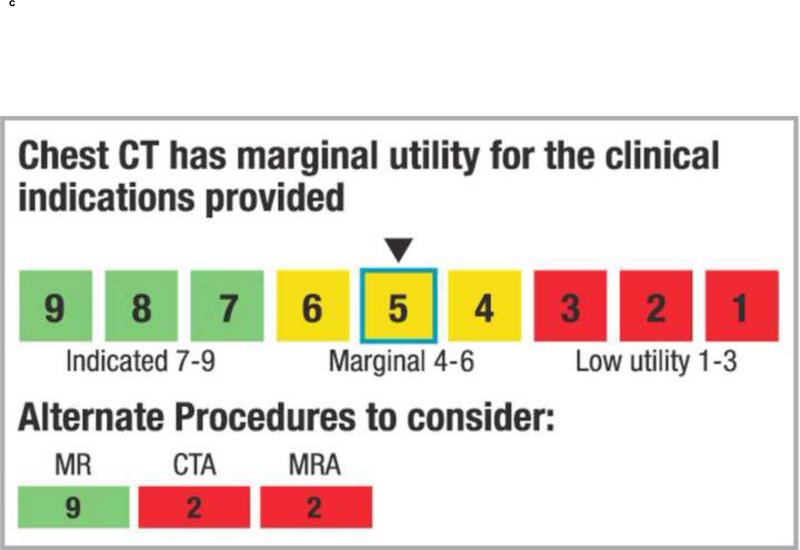

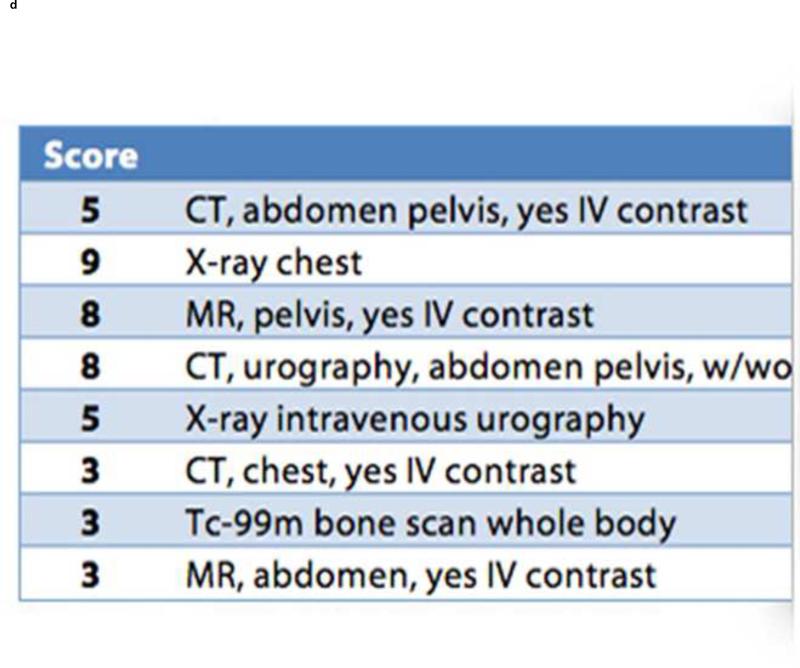

CDS requires a set of rules, and the ACR Appropriateness Criteria® (ACR-AC) (46) are one such source. When a clinician enters an order, certain pieces of evidence are collected to justify the exam (Figure 1a&b). Some information, often the “reason for exam”, is entered manually. Other information, including age, sex, and problem list, can be collected transparently from the Electronic Medical Record (EMR). This information is compared electronically to the rule set to determine whether the exam is appropriate. Some applications return a yes/no answer; others provide a utility score (Figure 1c&d). If the exam is indicated, the order is accepted and sent from the EMR into a RIS for scheduling. If the score suggests that the exam is suboptimal or inappropriate, several actions can be taken. Alternative exams may be offered with their utility scores noted. The clinician may also be given the option of proceeding with the original order, even if it has a low utility.

1.

The workflow (a&b) starts when a clinician enters an order for an imaging exam into the EMR. In the past the order would have been sent directly into a Radiology Information System and scheduled. In the new workflow the EMR first sends the order to another module or system, the Radiology CDS. Here the order is evaluated for appropriateness against a reference source such as the ACR-AC. If the evaluation results in a high score, the order is sent directly to the RIS. If the order receives an intermediate or low score, a message is returned to the EMR (c* or d*) indicating that this might not be the best choice. Alternative examinations may be suggested, and some systems provide references. Figures c & d simply show different styles of returning this information. The clinician may continue with the original order or choose one of the suggestions.

* Figures c & d courtesy of the National Decision Support Company (ACR Select)
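
The scoring-and-routing step of this workflow can be sketched as follows. The ACR-AC rate exams on a 1 to 9 scale, with 7 to 9 considered usually appropriate, and this sketch borrows that scale. However, the indications, exams, and scores in `RULES` are hypothetical and are not actual ACR-AC ratings. The names `evaluate_order` and `CdsResult` are likewise illustrative.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

# Hypothetical rules: indication -> {exam: utility score on a 1-9 scale}.
# Scores are illustrative only and are NOT actual ACR Appropriateness Criteria ratings.
RULES = {
    "sudden severe headache": {
        "CT head without contrast": 9,
        "MRI head without contrast": 5,
    },
    "chronic headache, no new features": {
        "MRI head without contrast": 3,
        "CT head without contrast": 2,
    },
}


@dataclass
class CdsResult:
    score: Optional[int]
    route_to_ris: bool
    alternatives: List[Tuple[str, int]] = field(default_factory=list)


def evaluate_order(indication: str, exam: str, threshold: int = 7) -> CdsResult:
    """Score an order; high scores go straight to the RIS, others return alternatives."""
    ratings = RULES.get(indication, {})
    score = ratings.get(exam)  # None if the rule set has no rating for this pair
    if score is not None and score >= threshold:
        return CdsResult(score, route_to_ris=True)
    # Offer higher-scoring exams for the same indication, best first.
    floor = score if score is not None else 0
    better = [(e, s) for e, s in ratings.items() if e != exam and s > floor]
    better.sort(key=lambda pair: pair[1], reverse=True)
    return CdsResult(score, route_to_ris=False, alternatives=better)


print(evaluate_order("sudden severe headache", "MRI head without contrast"))
```

A result with `route_to_ris=False` corresponds to the message returned to the EMR. In the workflow above, the clinician may still proceed with the original order, so a real system would also record that override.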

Throughout this process, information is collected in the background. A physician's performance and ordering practices can be analyzed and compared to those of peers or to established norms. This information can be used as part of an education and quality improvement process. Sometimes an outlier may be fully justified by the nature of his or her practice. At other times the ordering pattern may be truly inappropriate, and education may be offered.
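
One simple way to find outliers is to compare each physician's share of low-utility orders with the peer distribution. The sketch below uses a z-score against the peer mean. The threshold, cutoff, and data are hypothetical, and real programs may use risk adjustment or other statistics.

```python
from statistics import mean, pstdev
from typing import Dict, Iterable, List, Tuple


def low_utility_rates(orders: Iterable[Tuple[str, int]], low_threshold: int = 4) -> Dict[str, float]:
    """Fraction of each physician's orders whose CDS score fell below `low_threshold`."""
    totals: Dict[str, int] = {}
    lows: Dict[str, int] = {}
    for physician, score in orders:
        totals[physician] = totals.get(physician, 0) + 1
        if score < low_threshold:
            lows[physician] = lows.get(physician, 0) + 1
    return {p: lows.get(p, 0) / n for p, n in totals.items()}


def flag_outliers(rates: Dict[str, float], z_cutoff: float = 2.0) -> List[str]:
    """Physicians whose low-utility rate lies more than `z_cutoff` SDs above the peer mean.

    A flag prompts review and possible education; it is not a verdict, because the
    ordering pattern may be justified by the nature of the practice.
    """
    values = list(rates.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []
    return sorted(p for p, r in rates.items() if (r - mu) / sigma > z_cutoff)


# Hypothetical data: physicians A-E have 1 low-score order in 10; F has 9 in 10.
orders = [(doc, 2 if i == 0 else 8) for doc in "ABCDE" for i in range(10)]
orders += [("F", 2 if i < 9 else 8) for i in range(10)]
print(flag_outliers(low_utility_rates(orders)))
```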

This system is directed at the ordering clinician, yet the radiologist is of central importance. It is our expertise, in consultation with other specialties, that should determine the rules and evolving guidelines. This is an evolution of our traditional role as consultants to the clinician.

III. INTERPRETING THE IMAGE

Decision Support for the Radiologist

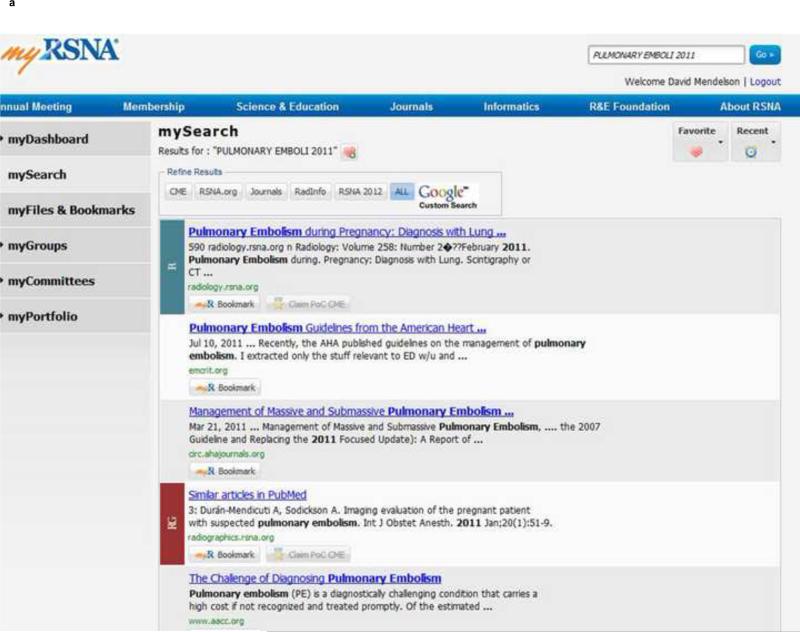

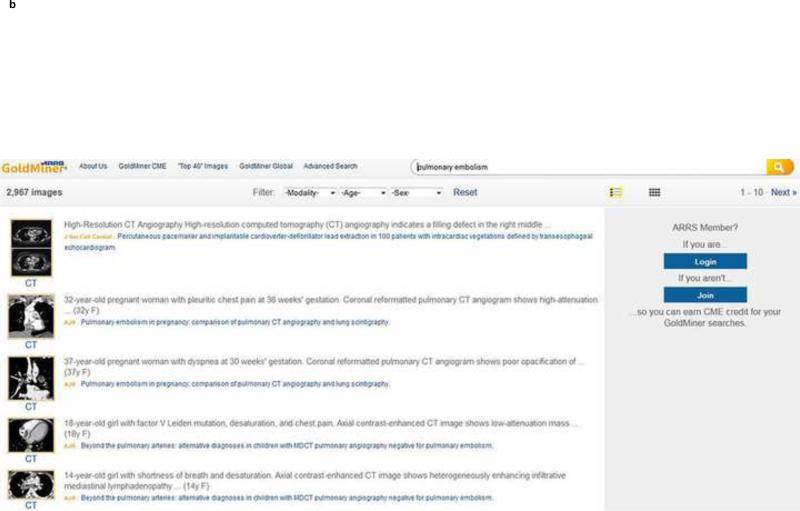

When interpreting a set of images, a radiologist occasionally turns to a reference book or journal for further information before delivering the final report. Many have added the internet as a source of information. A generic search engine, be it Google, Bing, or one of the many other services, can often provide the needed information quickly. Dedicated radiology services are also available, including myRSNA (Figure 2a), a radiologist's portal, and ARRS GoldMiner® (Figure 2b) (47). Many of these search services are evolving to provide quick, up-to-date information. They are also beginning to award the radiologist CME credit for the educational activity that occurs at the same time.

2.

There are a variety of tools that provide the radiologist with decision support. These include online search tools and point of service tools, integrated into the radiology reporting process.

a. myRSNA is a radiology portal hosted by the RSNA. It offers a variety of services including a robust search function. One can bookmark references and even read some for CME credit online.

b. ARRS GoldMiner offers a unique approach to searching. It has indexed the text of figure captions. It can search for terms in the captions and return the figures, their captions, and the articles that contain them.

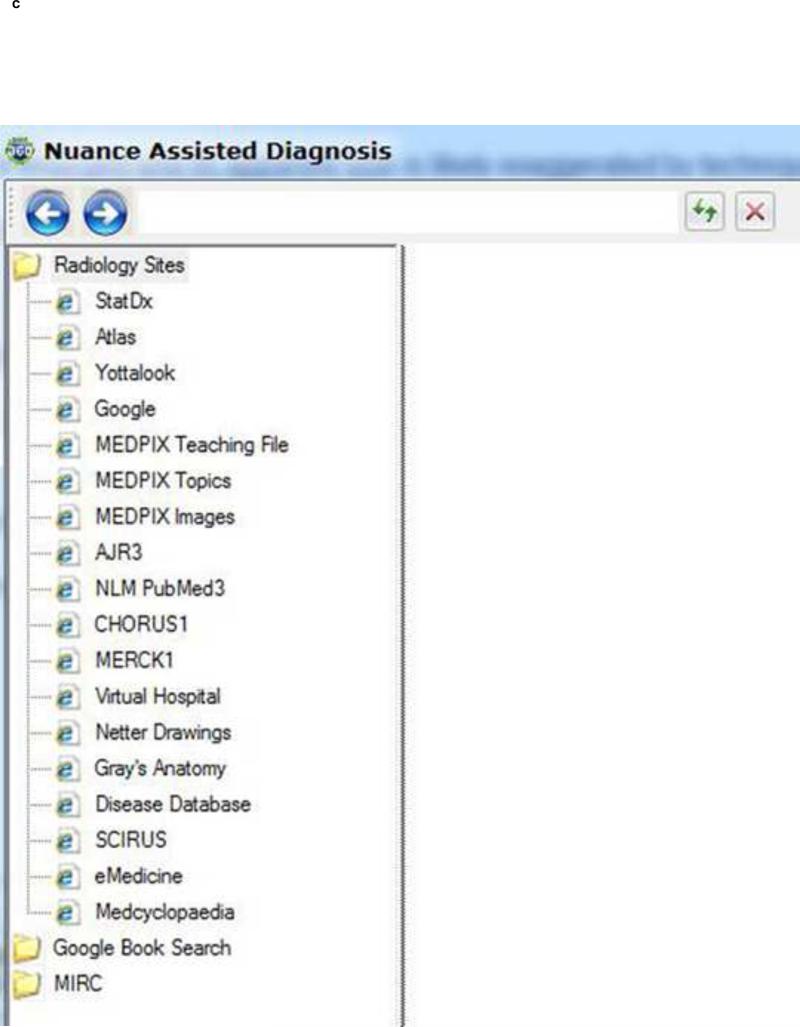

c*. This figure is taken from the interface of a voice recognition dictation product. It embeds a “wizard” to search terms on the fly. The user interface provides a list of the internet sites it has available to search. Some of these may require the user to have an additional license.

* Figure c is courtesy of Nuance, taken from their PowerScribe 360 product.

Here, we see the value of a lexicon such as RadLex in searching. Free text queries have been mapped to RadLex terms. This in effect helps to refine the user's search and focus the search results on the true subject of interest (15).

Paid knowledge services are also growing. A dictation/transcription vendor has incorporated a semi-automatic search wizard (Figure 2c) into the dictation interface. A radiologist in the midst of reporting can therefore quickly access a rich array of information services. One can expect this kind of “point of service” solution to appear in a growing number of applications that are part of the reporting cycle.

The goal is to make the correct knowledge available as easily and efficiently as possible. Tools currently in development “watch” the radiology dictation in real time. They employ natural language processing (NLP) to identify key trigger words, search internet resources in the background, and transparently bring back relevant information.
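
Trigger-word detection, the first step of such a tool, can be approximated very crudely. This sketch matches a hypothetical list of trigger terms and skips terms preceded by a simple negation cue within the same sentence. Real clinical NLP uses far more robust parsing and negation detection, and the topic strings and function name are illustrative only.

```python
import re
from typing import List

# Hypothetical trigger terms mapped to reference topics to fetch in the background.
TRIGGERS = {
    "pneumothorax": "Pneumothorax: imaging signs and size estimation",
    "adrenal nodule": "Incidental adrenal nodule management",
    "ground-glass": "Ground-glass opacity differential diagnosis",
}
# Crude negation cue; real clinical NLP uses far more robust negation detection.
NEGATION = re.compile(r"\b(no|without|negative for)\b", re.IGNORECASE)


def find_triggers(dictation: str) -> List[str]:
    """Return reference topics for trigger terms that appear un-negated in the text."""
    topics = []
    for term, topic in TRIGGERS.items():
        pattern = r"\b" + re.escape(term) + r"\b"
        for match in re.finditer(pattern, dictation, re.IGNORECASE):
            # Look only at the current sentence, up to the matched term.
            sentence_start = dictation.rfind(".", 0, match.start()) + 1
            if NEGATION.search(dictation[sentence_start:match.start()]):
                continue  # e.g. "No pneumothorax." is not a trigger
            topics.append(topic)
            break  # one hit per term is enough
    return topics


text = "There is a 2 cm adrenal nodule. No pneumothorax. Ground-glass opacity in the right upper lobe."
print(find_triggers(text))
```

In a dictation product, each returned topic would drive a background search. The results would then be surfaced alongside the report without interrupting the radiologist.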

Computer Assisted Diagnosis- CAD

Post-processing of our image data is now routine. Cross-sectional imaging has leveraged multiplanar and 3-D technology to better depict and assess pathology (48). Advances in desktop processing power, graphics processors, and algorithms have all contributed to making these tools affordable.

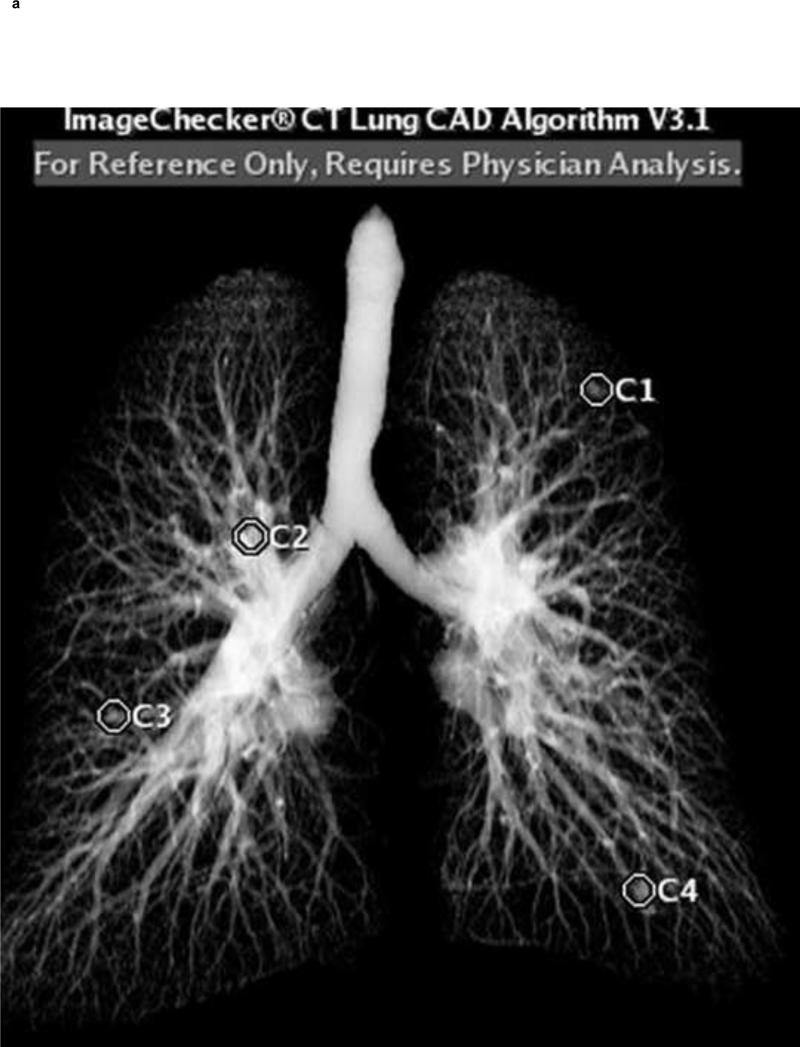

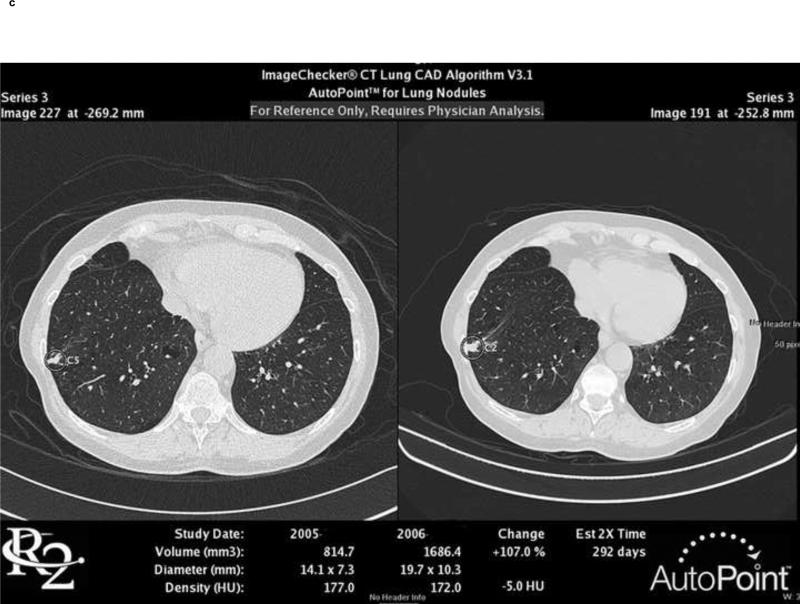

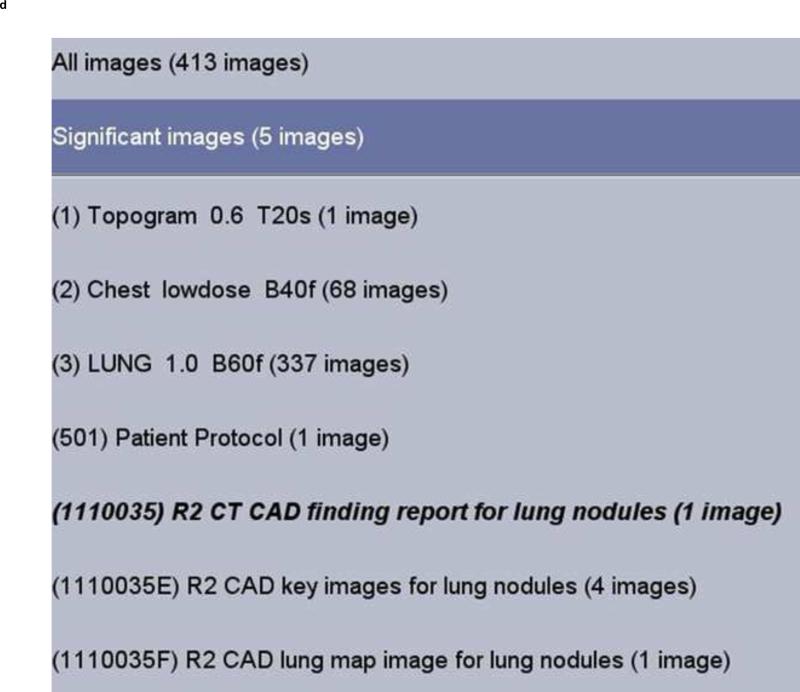

Another form of post-processing is CAD. These applications attempt to identify pathology directly. They are most widely available for breast imaging (49, 50), but applications for the analysis of lesions in a variety of organs are quickly emerging (51, 52). Figure 3 includes images from a CT lung nodule CAD. It identifies potential lung nodules and shows them in a 3D rendering of the chest (Figure 3a). It exports a series of axial images containing the identified nodules to PACS (Figure 3b) and provides detailed information on the dimensions and density of the nodules. If sequential exams are available, this system calculates temporal changes and doubling times (Figure 3c).
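
Doubling time is commonly derived from two exams under an assumption of exponential growth: DT = Δt × ln 2 / ln(V2 / V1). Here V1 and V2 are nodule volumes measured Δt apart. The sketch below implements that formula. When only a diameter is available, it offers a spherical approximation, V = πd³/6. This is not necessarily how any commercial CAD package computes it, and the measurements are hypothetical.

```python
import math
from datetime import date


def sphere_volume_mm3(diameter_mm: float) -> float:
    """Approximate nodule volume from diameter, assuming a sphere."""
    return math.pi * diameter_mm ** 3 / 6


def doubling_time_days(v1: float, v2: float, t1: date, t2: date) -> float:
    """Volume doubling time under exponential growth: dt * ln 2 / ln(v2 / v1)."""
    if v2 <= v1:
        return math.inf  # stable or shrinking: no finite doubling time
    return (t2 - t1).days * math.log(2) / math.log(v2 / v1)


# Hypothetical nodule: 8 mm at baseline, 10 mm 100 days later.
v_base = sphere_volume_mm3(8.0)
v_follow = sphere_volume_mm3(10.0)
print(round(doubling_time_days(v_base, v_follow, date(2024, 1, 1), date(2024, 4, 10)), 1))
```

Because volume scales with the cube of diameter, a modest change in diameter corresponds to a much larger relative change in volume. This is one reason volumetric CAD measurements are attractive for follow-up.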

3.

CAD- a sample set of images from a CT lung nodule CAD. A volumetric representation indicates where the potential nodules are located (a). Each individual axial section that includes a nodule is also presented (b); the candidate nodule is circled, and volumetric and density measurements are provided. If a historical exam is present, this system can perform temporal comparisons (c). Each of these images is sent as part of a series to PACS (d), and multiple axial images are included as a single series. A table (report) listing all the nodules is also sent to PACS as a series.

These solutions are not perfect. Many suffer from a high number of false positives, and adjusting the sensitivity settings alters the specificity. However, a growing literature suggests that these tools, employed as a second read, increase the accuracy of the radiologist. The radiologist remains the owner of the final report. These systems are tools that require the knowledge and judgment of the radiologist to make proper use of the information they provide.

A new level of Decision Support

Many readers will be aware of IBM's Watson, which IBM presents as a new model of CDS. IBM is working to leverage this technology in healthcare (53). Existing systems perform a keyword search: the user selects and enters keywords, and an engine then searches for those words without context. Watson may improve on this scenario. It has a sophisticated NLP engine that removes the task of selecting the keywords, speeding the overall process. Watson takes the keywords it has chosen, in the context in which they are presented, and generates hypotheses from an extensive knowledge base. It then evaluates each hypothesis by searching for further supporting evidence. It can ingest enormous amounts of free-text information about a given patient and mine exhaustive knowledge resources. This may lead to a level of decision support barely entertained just a few years ago. The time-consuming manual processes we perform today may be replaced by systems that almost instantaneously direct our thinking to a focused differential diagnosis with supporting documentation. IBM is establishing research relationships with academic healthcare sites to tailor its proposed solution to the healthcare environment.

IV. NEW PARADIGMS IN REPORTING

The New Narrative Report

The radiology report is our primary vehicle for communicating results. Expectations for the information elements that comprise a report are changing (54). The foremost mission of the report has been to provide a diagnosis to the clinician. Increasingly, however, other pieces of information within a report must be exposed for quality and financial purposes. Payers wish to know the reason for exam, as do radiologists and clinicians. Historically the provider could express this informally yet still successfully communicate the intent of the exam; payers have demanded a more regimented indication. Other information expected today includes contrast type and volume, and radiation exposure. Notification of a critical alert should be documented in the report. Clinicians are looking for particular positive and negative observations in the assessment of potential disease processes.

The idiosyncratic tomes of the past are disappearing, replaced by “structured” reports (55) with predefined, expected elements. The report may be based on a template. The template may be populated by the radiologist, but information of interest may already be available electronically and can populate the report automatically. The basic elements that should comprise a report have been identified in the American College of Radiology's Practice Guideline for Communication (54).

Structured reports appeared many years ago, but the available tool sets were limited. Today more robust applications are available, primarily voice recognition transcription systems. These offerings are now pervasive, with recognition engines that can approach 99% accuracy or better for some users. They often include macros, which are templates that can be triggered with a word or populated automatically when an exam type is recognized. Whatever the mechanism, they provide the means to “structure” a report. Structured fields may be mandatory or optional; they may be filled in verbally or automatically from other systems. Not all of this information needs to be displayed to every reader of a report. The presentation state of the report can vary with the individual reading it (54). It can expose only the information that is valuable to that end user while always retaining the ability to display the complete information set. A provider's view of the report might differ from the view provided to the patient or to a billing office.
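
Audience-dependent presentation can be modeled by tagging each structured field with the roles that see it by default. The field names, values, and role labels in this sketch are hypothetical. The `show_all` flag represents the ability to display the complete information set.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class ReportField:
    name: str
    value: str
    audiences: FrozenSet[str]  # roles that see this field by default


FIELDS: List[ReportField] = [
    ReportField("Indication", "Sudden severe headache", frozenset({"provider", "billing", "patient"})),
    ReportField("Contrast", "None", frozenset({"provider", "billing"})),
    ReportField("CTDIvol", "55.2 mGy", frozenset({"provider"})),
    ReportField("Impression", "No acute intracranial abnormality.", frozenset({"provider", "patient"})),
]


def render(fields: List[ReportField], role: str, show_all: bool = False) -> str:
    """Render the fields visible to `role`, or every field if `show_all` is set."""
    return "\n".join(f"{f.name}: {f.value}" for f in fields if show_all or role in f.audiences)


print(render(FIELDS, "billing"))
```

The underlying report is stored once, and each view is a filter over it. Nothing is lost when a reader asks for the complete report.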

Structured reports make it possible to include specified data elements consistently, and hence to discover them with IT data mining tools. By ensuring that the right data elements are always present, we enable our quality assurance and research missions while simultaneously improving patient care. Standardized ways of reporting and communicating “critical results” can be launched by including “triggers” in the report. These triggers can spawn communication applications that ensure notification has taken place and record the clinician's receipt of the message (55, 57-60).
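
The trigger-and-acknowledge loop can be sketched with a single record type. The trigger text `[CRITICAL RESULT]`, the class, and the function names are hypothetical; a real system would use whatever macro or structured field the reporting product provides. The key idea is that a notification stays open until the clinician's receipt is recorded.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional

CRITICAL_TRIGGER = "[CRITICAL RESULT]"  # hypothetical macro text inserted by the radiologist


@dataclass
class Notification:
    accession: str
    recipient: str
    sent_at: datetime
    acknowledged_at: Optional[datetime] = None

    def acknowledge(self, when: datetime) -> None:
        """Record the clinician's receipt of the message."""
        self.acknowledged_at = when

    @property
    def closed(self) -> bool:
        return self.acknowledged_at is not None


def check_report(accession: str, report_text: str, ordering_clinician: str,
                 now: datetime) -> Optional[Notification]:
    """Spawn a notification if the report contains the critical-result trigger."""
    if CRITICAL_TRIGGER in report_text:
        return Notification(accession, ordering_clinician, now)
    return None


note = check_report("ACC789", "IMPRESSION: Large pneumothorax. [CRITICAL RESULT]",
                    "Dr. Smith", datetime(2024, 1, 1, 10, 0))
print(note.closed)  # open until acknowledged
```

Open notifications can be escalated after a set interval. The timestamps also provide the documentation the report should contain.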

The RSNA has established a Radiology Reporting Initiative, a committee including domain experts to develop templates. This committee will promote best practices in reporting, including fostering structured reports when appropriate (55).

As much as structured reports can facilitate quality and research initiatives, we should not lose sight of the innovative opportunities that new technologies offer. Though it is not yet routine, we now have the capability to include significant images, with annotations, in the report. NLP applications that can derive meaning from free text, such as Watson (discussed above) and Leximer (60), are emerging (53, 54, 60). In the future, a combination of structure and free text mined by NLP tools will enable automated actions triggered by text.

REPORTING THE METADATA- AIM

When reading an exam, a radiologist commonly indicates the location of a lesion by drawing an arrow or using the measurement tool (quantitative data). The radiologist then dictates a statement in the report describing the lesion (semantic data). Because they are recorded as graphical overlays and as free text in the radiology report, these data are not easily accessible to computer applications.

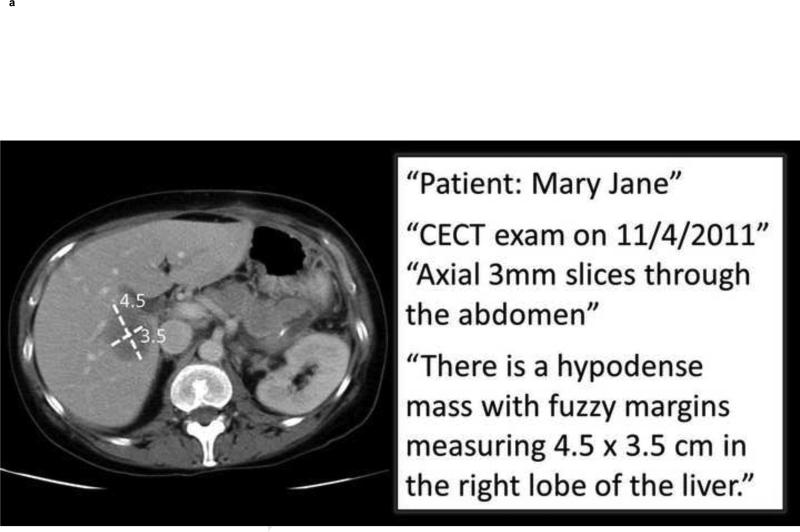

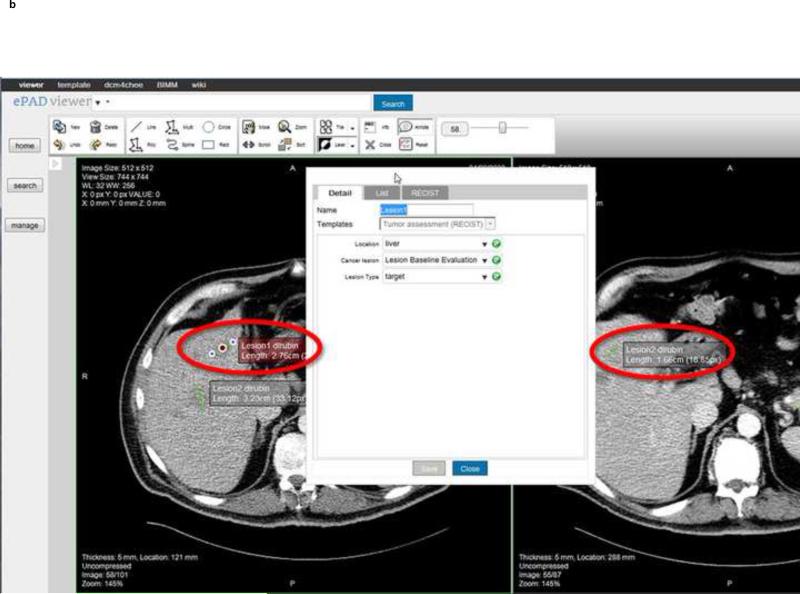

AIM was developed to address this issue (61). It provides (1) a “semantic model” of image markups and annotations, (2) a syntax for capturing, storing, and sharing image metadata, and (3) tools for serializing the image metadata to other formats such as DICOM-SR and HL7-CDA (62). The types of image metadata encoded by AIM include imaging observations, anatomy, disease, and radiologist inferences (63). AIM distinguishes between image annotation and markup (Figure 4a). Image annotations are descriptive information, generated by humans or machines, directly related to the content of a referenced image. Image markup refers to graphical symbols that are associated with an image and its annotations. Accordingly, all the key metadata content about an image is in the annotation; the markup is simply a graphical presentation of some of the annotation's image metadata.
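
The annotation/markup separation can be modeled with two simple record types. This sketch is a deliberately simplified, hypothetical data model inspired by that distinction. It is not the actual AIM schema or its XML serialization, and the UID, coordinates, and pixel spacing are made up. The markup holds only the drawn line, while the measurement it depicts is stored as a calculation in the annotation.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # (row, column) in pixel coordinates


@dataclass
class Markup:
    """Graphical symbol only: what is drawn and where."""
    shape: str
    points: List[Point]


@dataclass
class Annotation:
    """Descriptive content about the referenced image."""
    image_uid: str
    anatomic_entity: str
    imaging_observation: str
    calculations: Dict[str, float] = field(default_factory=dict)
    markups: List[Markup] = field(default_factory=list)


def add_length_measurement(ann: Annotation, p1: Point, p2: Point,
                           pixel_spacing_mm: Tuple[float, float]) -> None:
    """Store the line as markup and its computed length (mm) in the annotation."""
    ann.markups.append(Markup("line", [p1, p2]))
    d_row = (p2[0] - p1[0]) * pixel_spacing_mm[0]
    d_col = (p2[1] - p1[1]) * pixel_spacing_mm[1]
    ann.calculations["length_mm"] = math.hypot(d_row, d_col)


ann = Annotation("1.2.3.4.5", "liver", "mass")
add_length_measurement(ann, (100, 100), (130, 140), pixel_spacing_mm=(0.5, 0.5))
print(ann.calculations)
```

An application querying these records can read `length_mm`, the anatomy, and the observation directly. It never needs to interpret the drawn line or parse report text.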

Figure 4. AIM is a new tool to expose image metadata and make it accessible for a variety of applications.

4a. Image metadata. This shows an example image and excerpts from the radiology report. The graphical symbols drawn by the radiologist on the image (“markups” indicating measurements of a lesion, i.e., quantitative data) and the statements in the report about the patient, type of exam, technique, date, imaging observations, and anatomic localization (semantic data) collectively comprise the image metadata (“annotation”). These image metadata, if stored in a standardized, machine-accessible format, can enable many computer applications that help radiologists in their daily work.

4b. ePAD rich Web client. The ePAD application provides a platform-independent, thin-client implementation of an AIM-compliant image viewing workstation. Information about lesions that radiologists mark up and report is captured and stored in AIM XML (or DICOM-SR). User-definable templates capture semantic information about lesions; in this oncology reporting example, they record the type of lesion (target), the anatomic location (liver), and the type of imaging exam (baseline evaluation).

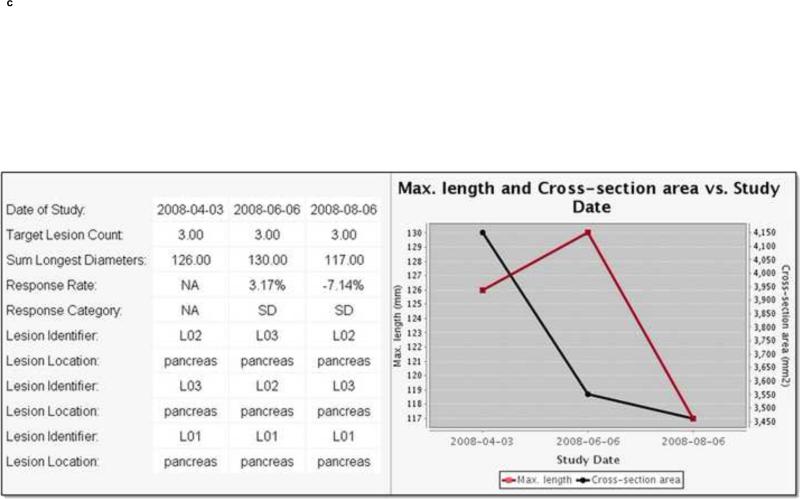

4c. Radiology image information summarization application leveraging AIM-encoded image metadata. A cancer lesion tracking application has queried AIM annotations created on different imaging studies (in this case, 3 studies on 4/3/08, 6/6/08, and 8/6/08). The application automatically calculates the sum of the target lesion measurements on each imaging study date and summarizes the results in a table (left) and graph (right). Using metadata from the AIM annotations, the application also displays alternative response measures such as the maximum length (red line) or cross-sectional area (black line) of the measured lesions.

AIM is complementary to DICOM-SR in providing a syntax for storing and exchanging image metadata. DICOM-SR, however, lacks a semantic model of the image metadata, which is the major reason AIM was developed.

AIM makes the semantic content of images explicit and accessible to machines, providing a framework that a variety of emerging applications can leverage, including (1) image search, (2) content-based image retrieval, (3) just-in-time knowledge delivery, (4) imaging information summarization, and (5) decision support. AIM enables systems to search for information that was either hidden or not directly linked to the relevant images. In clinical practice, AIM will make it possible to retrieve specific prior images for comparison directly, rather than simply prior studies. Today, reviewing prior studies, particularly to identify lesions being followed for assessing cancer response, slows the workflow (64). AIM-compliant image annotation tools that streamline the summarization and review of prior imaging studies are being developed (65). All the image-derived data that applications need to process are available in a compact, explicit, and interoperable form.

A number of image viewing workstations have adopted AIM (66-68). These tools provide an annotation palette with drop-down boxes and text fields that the user fills in to record semantic information about the images (Figure 4b), and they save the image metadata in AIM format. The AIM annotations can be transformed into DICOM-SR using the AIM toolkit, or stored in a relational database, enabling access for a variety of applications such as lesion tracking and reporting (67, 69) (Figure 4c). The process by which radiologists view images and create AIM annotations closely resembles their current practice of drawing or notating directly on images.
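The lesion tracking application in Figure 4c computes, for each study date, the sum of the target lesion measurements. Once measurements are stored as structured annotations rather than as overlays, that computation becomes a simple query. The sketch below assumes each annotation has already been reduced to a hypothetical `(study_date, lesion_id, longest_diameter_mm)` tuple; it is not ePAD code, and the measurement values are invented for illustration. It also reports percent change from the baseline sum; the response categories a particular trial applies to those numbers are outside its scope.

```python
from collections import defaultdict
from datetime import date


def summarize_target_lesions(measurements):
    """Sum longest diameters per study date and compute % change from baseline.

    measurements: iterable of (study_date, lesion_id, longest_diameter_mm) tuples,
    a hypothetical flattened form of AIM-encoded target lesion annotations.
    Returns (study_date, sum_mm, pct_change_from_baseline) tuples sorted by date.
    """
    sums = defaultdict(float)
    for study_date, _lesion_id, diameter_mm in measurements:
        sums[study_date] += diameter_mm

    dates = sorted(sums)
    if not dates:
        return []
    baseline = sums[dates[0]]  # the earliest study is treated as the baseline
    summary = []
    for d in dates:
        # Guard against a zero baseline to avoid division by zero.
        pct = 100.0 * (sums[d] - baseline) / baseline if baseline else 0.0
        summary.append((d, sums[d], round(pct, 1)))
    return summary


# Illustrative values only; the dates mirror the three studies in Figure 4c.
data = [
    (date(2008, 4, 3), "L1", 40.0), (date(2008, 4, 3), "L2", 20.0),
    (date(2008, 6, 6), "L1", 35.0), (date(2008, 6, 6), "L2", 19.0),
    (date(2008, 8, 6), "L1", 28.0), (date(2008, 8, 6), "L2", 14.0),
]
for row in summarize_target_lesions(data):
    print(row)
```

With these invented values the sums are 60, 54, and 42 mm, that is, 0%, -10%, and -30% relative to baseline.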

V. RADIOLOGY IN THE CLOUD

Image and Report Exchange

We have entered an era in which patients are extremely mobile and their longitudinal record is often composed of documentation dispersed across numerous sites. Healthcare data exchange, including imaging (70-72), is fundamental to maintaining the integrity of the patient's longitudinal medical record. When presented with an abnormal exam, the radiologist's first question is whether a historical exam is available for comparison. Unfortunately, historical exams are often difficult to obtain.

The unavailability of a historical exam contributes to inappropriate utilization through redundant imaging. Easy access to historical exams, on CD or via the Internet, can diminish this phenomenon (73,74). Although CDs represent a significant improvement over sharing on film, they remain fraught with problems (71,72), ranging from damaged discs to proprietary formats that are not universally readable. Lastly, the physical media must be on hand.

We share many things on the Internet: music, photos, and videos are among the most common. We shop there, and banking online is commonplace. Why not extend such services to healthcare, making your medical record available anytime and anywhere? We have seen the beginning of an explosion of Internet-based healthcare information exchange, including regional Health Information Exchanges (HIEs), Personal Health Records (PHRs), peer-to-peer sharing, vendor-based sharing (limited to the customers of a single vendor), and a multitude of variants. The federal government is fostering exchange through the National Health Information Network (NHIN) as well as several NIH-sponsored pilots (75-77). An early federally sponsored foray into sharing is a project known as NHIN Direct, which promotes information exchange through a secure email mechanism.

There have been successes and failures. Challenges include establishing a firm economic basis for this service, and economic models are being tested in which costs are underwritten by government, patients, providers, or payers. Most agree that such exchange should improve quality and is likely to drive down overall costs. The HITECH Meaningful Use program includes such exchange and clearly regards it as one of the most important long-term outcomes.

Imaging has been relegated to a lower priority, largely because of the bandwidth required to move images across the Internet. Image data sets are orders of magnitude larger than the text and discrete laboratory information that make up most healthcare data. The storage and transmission requirements over consumer and small-business Internet services have been gating factors. This is changing quickly as technology advances and costs diminish.
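A back-of-the-envelope calculation shows why bandwidth has been the gating factor. The sizes and link speed below are assumed round numbers for illustration, not measurements: a text report of roughly 5 KB versus a CT study of roughly 500 MB, each sent over a 10 Mbit/s connection.

```python
def transfer_seconds(size_megabytes: float, link_megabits_per_s: float) -> float:
    """Idealized transfer time: megabytes * 8 bits per byte / link rate.

    Ignores protocol overhead, compression, and congestion.
    """
    return size_megabytes * 8 / link_megabits_per_s


report_s = transfer_seconds(0.005, 10)   # assumed ~5 KB text report
ct_s = transfer_seconds(500, 10)         # assumed ~500 MB CT study
print(f"report: {report_s:.3f} s, CT study: {ct_s:.0f} s (~{ct_s / 60:.1f} min)")
```

Under these assumptions the CT study is about 100,000 times larger than the report, and its transfer takes roughly 400 seconds rather than a few milliseconds, before any overhead is counted.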

Internet-based image exchange has arrived in a spectrum of “cloud services”, including research-sponsored trials and some innovative private vendor services.

Internet image exchange began a few years ago, when enterprises extended image and report viewing beyond their own four walls. PACS viewers, often web-based, connect from clinicians' external offices to a PACS, often through a Virtual Private Network (VPN) connection. The key feature of this model is that the individual with the external connection is usually well known to the enterprise.

The next generation of connectivity has been targeted at large extended enterprises and/or a few independent enterprises with legal arrangements to share data. A growing number of businesses provide proprietary exchange solutions that use the Internet to let the linked partners share information. They provide patient identification services, medical record number (MRN) reconciliation, and record locator services, and they connect disparate systems so that data originating at one site can be seen at another.

But this is not full exchange. There are limiting boundaries present. Full transparent interoperability occurs when anyone with proper patient authorization, provider or other, no matter their location or employer, can view the data. There are several models. The first is the Health Information Exchange (HIE). Many enterprises on a regional level or beyond agree to share information that passes through a central repository. Safeguards are put into place to ensure that patients have consented for such exchange. IHE provides the Cross Enterprise Document Sharing (XDS) (78) profile, a well described technical and workflow solution to support such exchange. Documents arise at a “source” and are “consumed” at the other end of the chain. In the middle are a set of services to:

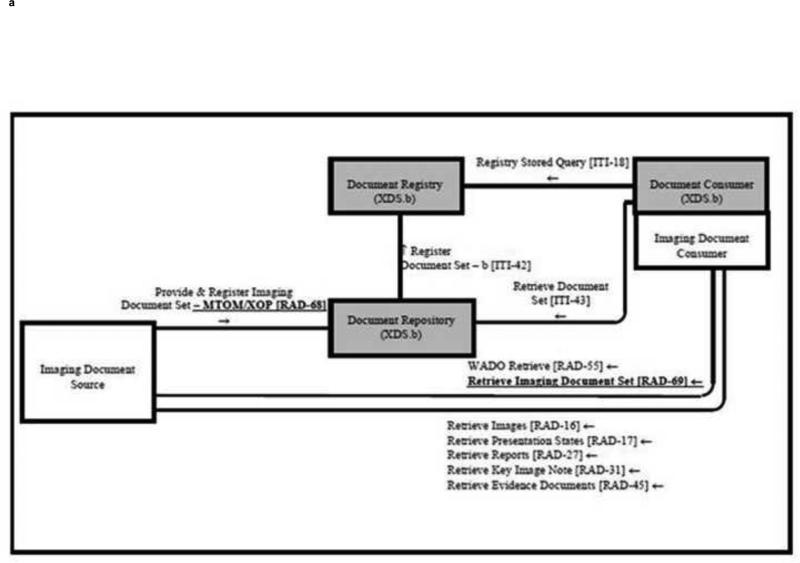

1) identify the patient through reconciliation of their demographic information as they move through the system, 2) register and store data in a common repository and provide record locator services, 3) confirm patient consent and lastly 4) send the data to a properly authenticated recipient. Audit trails are maintained. HIEs built on solutions other than IHE usually provide a similar set of services. An advantage of IHE is that these are standards based solutions, thus non-proprietary. For imaging IHE describes XDS-I(79) (Figure 5) which addresses the large bandwidth issues that accompany imaging.
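The four intermediary services listed above can be sketched as a single pipeline. This is a toy, in-memory Python illustration under stated assumptions: the class and method names (`ExchangeHub`, `register`, `retrieve`) are hypothetical, patient matching is reduced to an exact match on normalized name and birth date, and consent is a simple set lookup. Real XDS implementations use the transactions defined in the IHE Technical Framework and far more robust identity matching; none of that is reproduced here.

```python
from dataclasses import dataclass, field


def _demographic_key(name: str, birth_date: str) -> tuple:
    """Naive reconciliation key: case- and whitespace-insensitive name plus birth date."""
    return (" ".join(name.lower().split()), birth_date)


@dataclass
class ExchangeHub:
    """Toy model of the intermediary services in a document-sharing exchange (hypothetical)."""
    patient_index: dict = field(default_factory=dict)   # demographic key -> exchange-wide patient ID
    registry: dict = field(default_factory=dict)        # patient ID -> list of stored documents
    consents: set = field(default_factory=set)          # patient IDs with consent on file
    audit_log: list = field(default_factory=list)       # (event, patient ID, actor) tuples

    def register(self, source: str, name: str, birth_date: str, document: str) -> str:
        # 1) Identify the patient, assigning a new exchange-wide ID if unseen.
        key = _demographic_key(name, birth_date)
        patient_id = self.patient_index.setdefault(key, f"EPID-{len(self.patient_index) + 1}")
        # 2) Register and store the document so it can be located later.
        self.registry.setdefault(patient_id, []).append(document)
        self.audit_log.append(("register", patient_id, source))
        return patient_id

    def retrieve(self, consumer: str, authenticated: bool, name: str, birth_date: str) -> list:
        patient_id = self.patient_index.get(_demographic_key(name, birth_date))
        # 3) Confirm consent and 4) release data only to an authenticated recipient.
        if patient_id is None or not authenticated or patient_id not in self.consents:
            self.audit_log.append(("denied", patient_id, consumer))
            return []
        self.audit_log.append(("retrieve", patient_id, consumer))
        return list(self.registry.get(patient_id, []))


hub = ExchangeHub()
pid = hub.register("Hospital A", "Jane  Doe", "1970-01-01", "CT chest report")
hub.consents.add(pid)
print(hub.retrieve("Clinic B", True, "jane doe", "1970-01-01"))   # ['CT chest report']
print(hub.retrieve("Clinic C", False, "Jane Doe", "1970-01-01"))  # []
```

Every request, including a denied one, leaves an entry in the audit log, mirroring the audit trails mentioned above.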

Figure 5. IHE describes a series of profiles known as XDS, or Cross Enterprise Document Sharing, including a variant known as XDS-I that accommodates the large files that comprise images. IHE describes sets of transactions based upon common standards so that multiple parties can design systems that easily interact: true interoperability. The XDS profiles describe a “document source”, where a piece of patient data is created, and a “document consumer”, which is the destination for the data when exchanging with a remote site. A set of intermediaries handles the exchange.

Another solution puts control of sharing data, including images, into the hands of the patient through a PHR. Several early proprietary image-enabled PHRs have arisen, but their proprietary nature has limited their ability to attain a critical mass of patients. The RSNA, along with vendor partners, launched a PHR service, the RSNA Image Share (75), under NIH sponsorship; it employs the XDS-I profile. The goal is to leverage standards so that a critical mass can be attained. The same standards-based infrastructure can enable other forms of sharing. This project is live and enrolling patients.

Another solution is peer-to-peer networking, usually between providers. In this scenario, physicians take ownership of their patients' images and can share them with other physicians. All these methods represent early incarnations, constantly undergoing modification in their technology and business models in parallel with government incentives to promote sharing. The ultimate goal is to make the patient's images and reports available anywhere, anytime, when proper consent and authentication are provided.

CAD Everywhere

Above we described how post-processing is likely to advance. Post-processing workstations, often costly, have been available for many years, first introduced as standalone workstations. There has been a trend toward thin-client and/or web-based applications. In this configuration, a “lite” application or web link resides on a local workstation and connects to a central server, possibly in the “cloud”, where intensive processing takes place. The application can easily be distributed to numerous workstations, and purchasers acquire these services through concurrent-user licenses. An end user no longer needs to be at a single location to obtain a post-processing result. Location becomes almost meaningless and availability ubiquitous, drastically modifying cost and implementation models.

VI. MISCELLANEOUS FUNCTIONS IN A RADIOLOGY PRACTICE

Quality

We increasingly face a regulatory environment in which performance is measured and meeting certain thresholds is a requirement for practice. Below we cite several scenarios in which IT tools provide solutions that enhance the delivery and measurement of quality in radiology practice.

Image quality is already being measured, most often in breast imaging and CT. In addition to inspection by local municipalities, the ACR provides certification of these modalities. Currently, images are shipped on film and/or CD to demonstrate that a practice meets quality measures, a process that can be complex and time-consuming. It can be simplified by implementing Internet-based solutions that aggregate the data from a practice and export them to the regulatory authority.

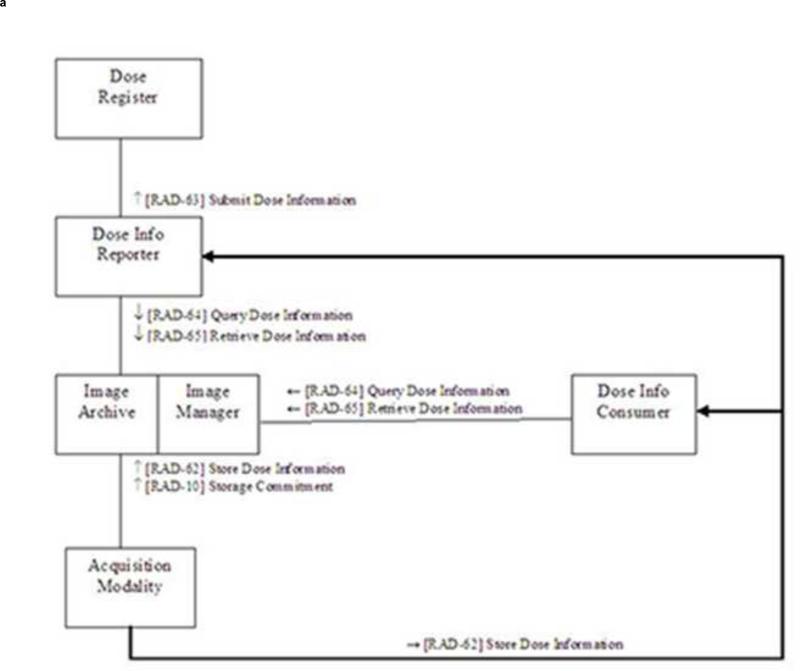

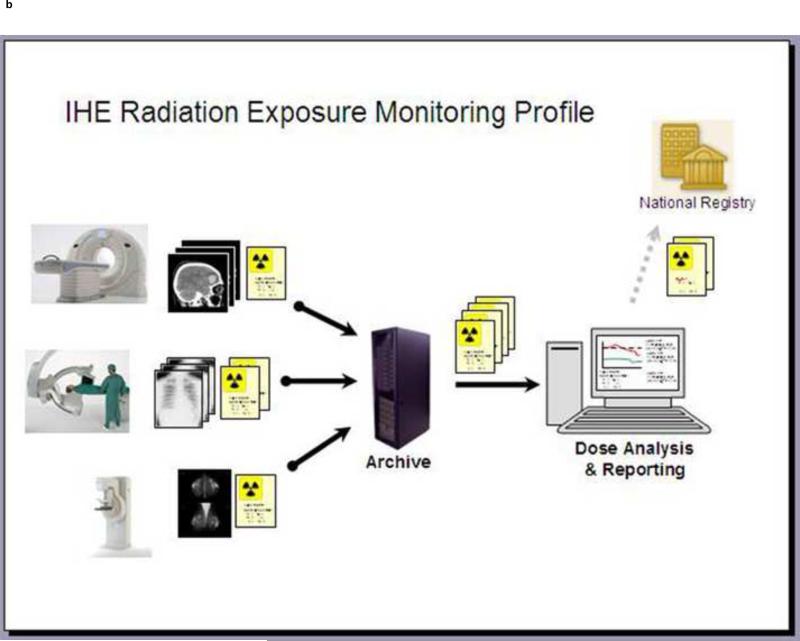

Limiting the radiation exposure of the individual patient and of the overall population has become one of the highest priorities of our profession. Best practices are being actively promulgated through efforts such as “Image Gently.” In parallel, evolving IT solutions will contribute to this effort. The ability to measure radiation exposure is central to addressing this issue. The IHE Radiation Exposure Monitoring (REM) profile (Fig 6) describes the steps and associated standards required to accomplish this task. Several vendors have introduced products that follow this profile, aggregate exposure data from a variety of modalities, and provide analytics so that a radiology department or imaging center can easily monitor its performance. Some solutions permit an extremely detailed analysis: the performance of individual devices, protocols, and the personnel operating the equipment can all be measured, as can the practices of each individual radiologist.
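Once dose data are collected in a structured form, the analytics described above largely reduce to grouping and comparison. The sketch assumes hypothetical dose records with `exam_id`, `device`, `protocol`, and `dlp_mgy_cm` fields and a hypothetical, locally chosen reference level per protocol. It does not parse actual DICOM Radiation Dose Structured Reports, and the numbers are illustrative, not recommended values.

```python
from collections import defaultdict
from statistics import median


def dose_summary(records, reference_dlp):
    """Median DLP per (device, protocol) and the exams exceeding a reference level.

    records: iterable of dicts with 'exam_id', 'device', 'protocol', 'dlp_mgy_cm' (hypothetical).
    reference_dlp: dict mapping protocol -> reference DLP in mGy*cm (hypothetical, site-chosen).
    """
    by_group = defaultdict(list)
    outliers = []
    for r in records:
        by_group[(r["device"], r["protocol"])].append(r["dlp_mgy_cm"])
        limit = reference_dlp.get(r["protocol"])
        if limit is not None and r["dlp_mgy_cm"] > limit:
            outliers.append(r["exam_id"])
    medians = {group: median(values) for group, values in by_group.items()}
    return medians, outliers


records = [
    {"exam_id": "E1", "device": "CT-1", "protocol": "head", "dlp_mgy_cm": 900},
    {"exam_id": "E2", "device": "CT-1", "protocol": "head", "dlp_mgy_cm": 1100},
    {"exam_id": "E3", "device": "CT-2", "protocol": "head", "dlp_mgy_cm": 1500},
]
medians, outliers = dose_summary(records, {"head": 1200})
print(medians)   # {('CT-1', 'head'): 1000.0, ('CT-2', 'head'): 1500}
print(outliers)  # ['E3']
```

Adding an operator or radiologist field to the grouping key would extend the same comparison to personnel, as the paragraph above describes.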

Figure 6.

6a. IHE includes a Radiation Exposure Monitoring (REM) profile. It describes how to collect dose information from a modality and store it locally, as well as a set of transactions to share it with an external registry.

6b. A graphical representation demonstrates the flow of the dosimetry information from modalities to a local archive, an analytics application, and ultimately a national registry.

The ACR Dose Index Registry (DIR) (80,81) is a project that has leveraged informatics tools since its inception to make the regulatory process easier. In its first incarnation, CT scanners provide the dosimetry information, exam by exam, to a local aggregation point (a computer). A software application collects the information, removes patient identifiers, and records which exams were performed and at which practice. The data are then exported to the ACR, which returns an analysis showing how the practice performs compared with others. A number of vendors can also provide these data to the ACR and offer even more detailed analytics for an individual site, as described above.
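The local de-identification step can be illustrated with an allow-list: keep only the fields the registry needs and drop everything else, so that any unexpected identifying field is excluded by default. The field names below are hypothetical; the actual DIR data elements and the software that prepares them are not reproduced here, and real de-identification of DICOM data involves far more than removing a few fields.

```python
# Hypothetical allow-list of fields a dose registry might need; not the actual DIR specification.
ALLOWED_FIELDS = {"facility_id", "exam_description", "study_year", "ctdivol_mgy", "dlp_mgy_cm"}


def deidentify(record: dict) -> dict:
    """Return a copy containing only allow-listed fields; all others (name, MRN, ...) are dropped."""
    return {k: v for k, v in record.items() if k in ALLOWED_FIELDS}


local = {
    "patient_name": "DOE^JANE",
    "mrn": "123456",
    "facility_id": "SITE-01",
    "exam_description": "CT HEAD WO CONTRAST",
    "study_year": 2012,
    "ctdivol_mgy": 55.0,
    "dlp_mgy_cm": 950.0,
}
print(sorted(deidentify(local)))
```

An allow-list is chosen over a block-list here because a block-list silently passes through any identifier it did not anticipate.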

The DIR is another example of how a standardized terminology can enhance an application. Earlier in this report we noted that many “Brain CTs” were identified by a wide variety of names. The ACR has developed a mapping tool that permits a site to map its exam dictionary to RadLex Playbook IDs, harmonizing exams conducted in different offices under different names.
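At its core, the mapping step is a lookup from each local exam description to a standard identifier, after normalizing trivial differences in case, punctuation, and spacing. The local names and the identifier string below are hypothetical placeholders, not actual RadLex Playbook IDs, and in practice the mapping table is curated by people rather than derived automatically.

```python
import re
from typing import Optional

# Hypothetical site dictionary: normalized local exam name -> standard exam identifier.
# "PLAYBOOK-HEAD-CT-NONCON" is a placeholder, not a real RadLex Playbook ID.
LOCAL_TO_STANDARD = {
    "ct head wo contrast": "PLAYBOOK-HEAD-CT-NONCON",
    "ct brain without contrast": "PLAYBOOK-HEAD-CT-NONCON",
    "head ct noncontrast": "PLAYBOOK-HEAD-CT-NONCON",
}


def normalize(name: str) -> str:
    """Lowercase, turn punctuation into spaces, and collapse runs of whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", name.lower()).split())


def map_exam(local_name: str) -> Optional[str]:
    """Return the standard identifier for a local exam name, or None if it is unmapped."""
    return LOCAL_TO_STANDARD.get(normalize(local_name))


for name in ["CT HEAD WO CONTRAST", "CT  Brain, without contrast", "Head CT (noncontrast)", "MR Brain"]:
    print(name, "->", map_exam(name))
```

Unmapped names return `None`, which gives a natural work list of exam descriptions still awaiting human review.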

Another example of a quality improvement program built to leverage informatics tools is RADPEER™ (82), the ACR program to encourage peer review. Peer review scores can be entered in a variety of ways, including manual data entry. Several vendor applications foster peer review during the course of daily interpretation, collecting the necessary data electronically; the application aggregates the scores and submits them electronically to the ACR.
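Collecting peer review scores electronically makes aggregation straightforward. The sketch assumes each review is recorded as a hypothetical `(radiologist_id, score)` pair on a numeric scale where 1 means the reviewer agreed with the original interpretation and higher values indicate discrepancies. It does not implement RADPEER's own scoring definitions or the ACR submission format.

```python
from collections import defaultdict


def discrepancy_rates(reviews):
    """Per radiologist: (number of reviews, fraction of reviews scored above 1).

    reviews: iterable of (radiologist_id, score) pairs; score 1 = agreement (assumed scale).
    """
    counts = defaultdict(lambda: [0, 0])  # radiologist -> [total reviews, discrepant reviews]
    for radiologist, score in reviews:
        counts[radiologist][0] += 1
        if score > 1:
            counts[radiologist][1] += 1
    return {r: (total, disc / total) for r, (total, disc) in counts.items()}


reviews = [("RAD-A", 1), ("RAD-A", 1), ("RAD-A", 2), ("RAD-A", 1), ("RAD-B", 1), ("RAD-B", 3)]
print(discrepancy_rates(reviews))  # {'RAD-A': (4, 0.25), 'RAD-B': (2, 0.5)}
```

Reporting the number of reviews alongside each rate matters: a 50% rate based on two reviews says far less than the same rate based on two hundred.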

Residents and residency programs are being measured by metrics identifying what types of exams have been seen and reported. The ACGME (Accreditation Council for Graduate Medical Education) accreditation programs require reporting these data. Many sites aggregate them by mining their RIS or reporting systems, and vendors are delivering new products to enable such data mining.
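Mining a RIS for resident case logs is, at its core, a count of finalized exams by resident and exam category. The column names (`resident`, `modality`, `status`) and the status value `FINAL` are hypothetical stand-ins for whatever a particular RIS or reporting system exports; the categories an accreditation body actually requires are not reproduced here.

```python
from collections import Counter


def case_log(rows):
    """Count finalized exams per (resident, modality) from exported RIS rows (hypothetical schema)."""
    return Counter(
        (row["resident"], row["modality"])
        for row in rows
        if row.get("resident") and row["status"] == "FINAL"
    )


rows = [
    {"resident": "R1", "modality": "CT", "status": "FINAL"},
    {"resident": "R1", "modality": "CT", "status": "FINAL"},
    {"resident": "R1", "modality": "MR", "status": "PRELIM"},
    {"resident": "R2", "modality": "US", "status": "FINAL"},
    {"resident": None, "modality": "CT", "status": "FINAL"},  # no resident involved, excluded
]
log = case_log(rows)
print(log[("R1", "CT")], log[("R1", "MR")], log[("R2", "US")])  # 2 0 1
```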

These early efforts are laying down the fundamental methodology for collecting all kinds of performance indicators from data in our radiology IT systems, permitting measurement, comparison, feedback, and remedy when problems are identified. In parallel, QA officers are exploring ways to make this process educational rather than punitive.

Research

Comparative Effectiveness Research (CER)

Our profession has an ongoing research mission. How should our modalities be employed in the management and treatment of patients, and how do we assess clinical impact? Comparative effectiveness research has emerged as the dominant approach going forward. When possible, clinical trials should compare proposed imaging solutions with other solutions, and even with managing the patient without imaging.

The American Recovery and Reinvestment Act of 2009 (ARRA) substantially extended federal support for CER and created the Federal Coordinating Council for Comparative Effectiveness Research (FCC) (83), which has issued a report laying out a process for promoting CER (83,84). The report provides this definition of CER: “Comparative effectiveness research is the conduct and synthesis of research comparing the benefits and harms of different interventions and strategies to prevent, diagnose, treat and monitor health conditions in ‘real world’ settings...” Currently, only a minority of radiology research is directly comparative in nature. The FCC report cited imaging as a domain where CER has the potential for high impact (85).

The ACR has a formal mechanism for determining the utility of imaging exams in diagnosing disease: it evaluates the existing evidence-based studies, compares the modalities evaluated, and synthesizes this information into a utility index, the ACR-AC. The initial methodology for establishing the ACR-AC uses the RAND/UCLA Appropriateness Method (86-89), based upon both evidence and consensus. The ACR criteria provide a comparative utility score for relevant modalities for varied clinical indications, with an explanation of the rationale and documentation of the relevant literature. For some ACR-AC categories, an “Evidence-table” identifies studies that were comparative, though the comparison is not always between imaging studies and sometimes reflects the comparison of a single modality with clinical or surgical assessment. Because there are few controlled studies in the literature on the clinical impact of the various modalities in many diseases, CER evidence is generally lacking. The ACR-AC is therefore a hybrid, with primary CER probably represented in only a minority of the criteria.

Combining decision support tools such as the ACR-AC with data mining tools that can extract results and outcomes from radiology reports and the EMR can create a closed cycle: directing patients to particular imaging studies and determining which of those studies alter patient outcome, for better or worse. Ideally, prospectively designed randomized controlled studies comparing imaging strategies can be implemented with the data mining tools in place to better understand outcome. Additional methodologies can be considered when prospective studies are not feasible. Using the tools discussed above, we can begin to retrospectively examine large volumes of data (90), which was not possible in the past, and compare the performance of modalities. While less ideal than carefully constructed prospective trials, the aggregation of large volumes of patients opens the door to statistical analyses that may provide reasonable comparative analysis.

Research Recruitment

The recruitment and identification of appropriate patients for clinical trials is often challenging. Data-mining tools running in the EMR or in the enterprise's data warehouse now offer a solution. Investigators can run real-time algorithms in their EMR to look for trigger events that suggest a patient might be a candidate to participate in a clinical trial. These tools usually provide notification to the provider, who can then choose to inform the patient of a trial.
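A trigger event of this kind is essentially a rule evaluated against each new result. The sketch below is hypothetical throughout: the trial criteria (an age range, a phrase in the radiology report impression, and the absence of an exclusion diagnosis) and the field names are invented for illustration, and simple phrase matching stands in for the more sophisticated NLP a production system would use. Consistent with the workflow described above, the rule only produces a notification for the provider.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class NewResult:
    patient_id: str
    ordering_provider: str
    age: int
    report_impression: str
    problem_list: frozenset


def trial_trigger(result: NewResult) -> Optional[str]:
    """Return a provider notification if the result matches hypothetical trial criteria."""
    eligible_age = 40 <= result.age <= 75
    finding = "pulmonary nodule" in result.report_impression.lower()
    excluded = "active malignancy" in result.problem_list
    if eligible_age and finding and not excluded:
        return (f"To {result.ordering_provider}: patient {result.patient_id} "
                f"may be eligible for the (hypothetical) nodule follow-up trial.")
    return None


r = NewResult("P001", "Dr. Smith", 62, "8 mm Pulmonary nodule, right upper lobe.", frozenset())
print(trial_trigger(r))
```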

As clinical trials are conducted there is a desire to recruit patients from a broader number of sites, rather than just academic campuses. The Internet provides an opportunity to efficiently collect data, de-identify it at the local site, and almost instantaneously provide it to a central site. The ACR Triad server has been repeatedly employed to accomplish this in American College of Radiology Imaging Network (ACRIN) trials. The RSNA Clinical Trial Processor (CTP) is another such solution. There are also proprietary solutions.
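Multisite trials usually need data that are de-identified yet still linkable across time points, so that the same patient receives the same trial identifier at every visit. One common generic technique is a keyed hash of the local identifier, with the key held only at the site. The sketch shows that technique with Python's standard `hmac` module; it is an assumption for illustration, not a description of how the ACR Triad server or the RSNA CTP actually assigns identifiers.

```python
import hashlib
import hmac


def pseudonym(local_mrn: str, site_key: bytes, trial_prefix: str = "TRIAL") -> str:
    """Deterministic trial ID: HMAC-SHA256 of the local MRN under a site-held secret key.

    The digest is truncated to 12 hex characters for readability.
    """
    digest = hmac.new(site_key, local_mrn.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"{trial_prefix}-{digest[:12]}"


key = b"site-secret-key"  # hypothetical; in practice generated randomly and never exported
baseline_id = pseudonym("123456", key)
followup_id = pseudonym("123456", key)
print(baseline_id == followup_id, baseline_id == pseudonym("654321", key))  # True False
```

Because the key never leaves the site, the central study center cannot reverse the identifier by hashing candidate MRNs, yet the site can always regenerate the same identifier for follow-up exams.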

Patients may enter trials that leverage technology far from home, without the cost of travel. Some advances are based upon new technologies not available in every local environment. Data sets obtained on local instrumentation can be exported to sophisticated post-processing environments in the “cloud”, with results returned to the local environment and the study center. This may be a highly effective way to use resources efficiently.

Big Data

Perhaps the most exciting frontier is that of “big data”, involving genomics and proteomics (91,92). Molecular data need to be analyzed in the context of phenotypic data, which requires high-performance computing solutions. These computing environments search for relationships between these data elements in order to understand the etiology and predictors of disease. Medicine may well switch from a reactive practice to a proactive, preventive paradigm as these investigations mature. Imaging will certainly play a major role as systems supporting the analysis of big data emerge, and the standards in terminology and image metadata described above will be major enablers of these systems.

Education

We educate technologists, physicians, nurses, and administrators, as well as the general public. Textbooks and didactic lectures have been our core educational materials. The domain of education has evolved its own informatics tools that provide innovative ways for individuals to learn. The entire field of education is undergoing a revolution driven by network-based tools, the ability to interact through commonly available devices such as smartphones, and the ability to marry learning to one's daily work. These tools include Learning Management Systems (LMS) (93), which organize E-Learning, a process that fosters learning through interactive, engaging modules free of time and place restrictions.

The informatics tools we have described above expose radiology and medical information, making it discoverable and reusable in the E-learning environment. The Shareable Content Object Reference Model (SCORM) is a standard, employed by many industries, for managing educational content in a way that enables the development of e-learning applications (93,94). The MedBiquitous consortium promotes an initiative called “SCORM for Healthcare”. Efforts such as the RSNA RadSCOPE® (Radiology Shareable Content for Online Presentation and Education) leverage SCORM to provide content for the development of educational services.

Radiology educators are exploring new ways of bringing information to the radiologist, especially in the context of one's daily work interpreting exams. Education applications nurture just-in-time learning, monitor one's use of such systems, and award educational credits. Newer technologies might monitor one's performance and bring forward educational resources when that performance falls below a certain threshold.

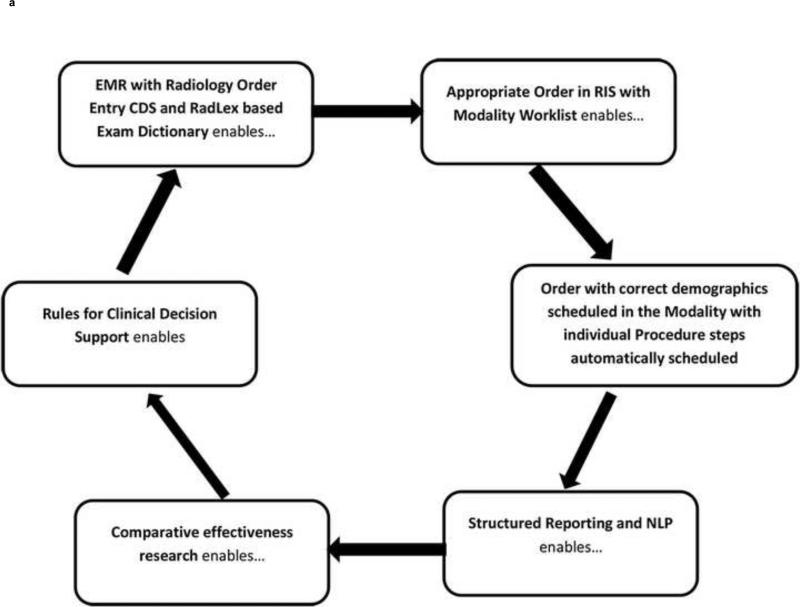

Conclusions

Radiology Informatics may be best understood as a set of tools that enables a continual cycle of enhancing exam workflow, with quality controls, reporting and research (Figure 7). Some informatics tools may seem mundane, others innovative, but together there is a synergy that permits our profession to remain fresh and exciting, providing patients with earlier and better care, often at a diminished cost.

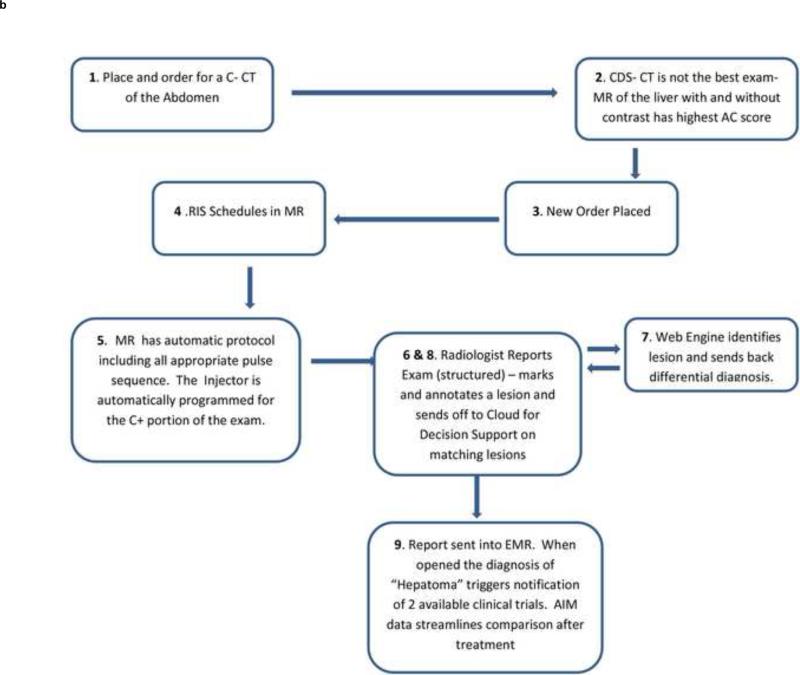

Figure 7.

7a. This cycle of imaging demonstrates how the science of radiology supports the best practices of patient care and provides new knowledge and feedback to continually advance medical science. The informatics tools described throughout this review are the enablers of this cycle.

7b. A practical example demonstrates how CDS leads the clinician to the best exam and how decision support tools assist the radiologist in making a specific diagnosis. New structured reporting and NLP tools quickly help to direct the patient to ongoing clinical trials.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Based upon a lecture delivered at the 2012 Annual Meeting of the Association of University Radiologists, titled: Imaging Informatics: Essential Tool for Regional Models and Increased Efficiencies (Clinical Environment in 2020)

References

- 1. American Recovery and Reinvestment Act of 2009. Public Law 111-5. Available at http://www.ignet.gov/pande/leg/PL1115.pdf.

- 2. Lexa FJ. Drivers of Health Reform in the United States: 2012 and Beyond. J Am Coll Radiol. 2012;9:689–693. doi: 10.1016/j.jacr.2012.06.011.

- 3. Rawson JV. Roots of Health Care Reform. J Am Coll Radiol. 2012;9:684–688. doi: 10.1016/j.jacr.2012.06.018.

- 4. Reinertsen JL. The Moreton Lecture: Choices Faced by Radiology in the Era of Accountable Health Care. J Am Coll Radiol. 2012;9:620–624. doi: 10.1016/j.jacr.2012.05.011.

- 5. Bidgood WD Jr, Horii SC. Introduction to the ACR-NEMA DICOM standard. Radiographics. 1992 Mar;12(2):345–55. doi: 10.1148/radiographics.12.2.1561424.

- 6. Pianykh OS. Chapter 4 in: Digital Imaging and Communications in Medicine (DICOM): A Practical Introduction and Survival Guide. Kindle Edition. Springer; 2008.

- 7. Webb G. Making the cloud work for healthcare: Cloud computing offers incredible opportunities to improve healthcare, reduce costs and accelerate ability to adopt new IT services. Health Manag Technol. 2012 Feb;33(2):8–9.

- 8. Shrestha RB. Imaging on the Cloud. Applied Radiology. 2011;40(5):8–12.

- 9. Langer SG. Challenges for data storage in medical imaging research. J Digit Imaging. 2011;24:203–207. doi: 10.1007/s10278-010-9311-8.

- 10. IHE. Available at www.ihe.net.

- 11. IHE Technical Framework. Available at http://wiki.ihe.net/index.php?title=Frameworks. Copyright © IHE International.

- 12. Morin RL, PhD, FACR, Brooks-Hollern Professor, Department of Radiology, Mayo Clinic Jacksonville, FL. Personal communication.

- 13. Rubin DL. Creating and curating a terminology for radiology: ontology modeling and analysis. J Digit Imaging. 2008;21(4):355–62. doi: 10.1007/s10278-007-9073-0.

- 14. Hong Y, Kahn CE Jr. Analysis of RadLex coverage and term co-occurrence in radiology reporting templates. Journal of Digital Imaging. 2012;25:56–62. doi: 10.1007/s10278-011-9423-9.

- 15. Rubin DL, Flanders A, Kim W, Siddiqui KM, Kahn CE Jr. Ontology-assisted analysis of web queries to determine the knowledge radiologists seek. Journal of Digital Imaging. 2011;24:160–164. doi: 10.1007/s10278-010-9289-2.

- 16. Langlotz CP. RadLex: A new method for indexing online educational materials. Radiographics. 2006;26:1595–7. doi: 10.1148/rg.266065168.

- 17. Marwede D, Schulz T, Kahn T. Indexing Thoracic CT Reports Using a Preliminary Version of a Standardized Radiological Lexicon (RadLex). Journal of Digital Imaging. 2008;21(4):363–370. doi: 10.1007/s10278-007-9051-6.

- 18. Kahn CE Jr, Rubin DL. Improving radiology image retrieval through automated semantic indexing of figure captions. Journal of the American Medical Informatics Association. 2009;16:380–386. doi: 10.1197/jamia.M2945.

- 19. A TRIP™ Initiative. Available at http://www.siimweb.org/index.cfm?id=766. Last accessed on 6/19/2013.

- 20. SIIM Workflow Initiative in Medicine (SWIM™). Available at http://www.siimweb.org/trip. Last accessed on 6/19/2013.

- 21.Hunt DL, Haynes RB, Hanna SE, Smith K. Effects of computer-based clinical decision support systems on physician performance and patient outcomes: a systematic review [see comments]. JAMA. 1998;280(15):1339–1346. doi: 10.1001/jama.280.15.1339. [DOI] [PubMed] [Google Scholar]

- 22.Randolph AG, Haynes RB, Wyatt JC, Cook DJ, Guyatt GH. Users’ Guides to the Medical Literature: XVIII. How to use an article evaluating the clinical impact of a computer-based clinical decision support system. Jama. 1999;282(1):67–74. doi: 10.1001/jama.282.1.67. [DOI] [PubMed] [Google Scholar]

- 23.Broverman CA. Standards for Clinical Decision Support Systems. Journal of Healthcare Information Management , Summer. 1999;13(2):23–31. [Google Scholar]

- 24.Kuperman GJ, Sittig DF, Shabot MM. Clinical Decision Support for Hospital and Critical Care. Journal of Healthcare Information Management Summer. 1999;13(2):81–96. 1999. [Google Scholar]

- 25.Bates DW, Kuperman GJ, Wang S, et al. Ten commandments for effective clinical decision support: making the practice of evidence-based medicine a reality. J Am Med Inform Assoc. 2003;10(6):523–530. doi: 10.1197/jamia.M1370. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Borgestede JP. Presentation to American Board of Radiology Foundation; Reston, Va. August 6-7, 2009; Available at http://www.abrfoundation.org/forms/borgestede_summit.pdf. [Google Scholar]

- 27.Thrall J. Presentation to American Board of Radiology Foundation; Reston, Va. August 6-7, 2009; Available at http://www.abrfoundation.org/forms/thrall_summit.pdf. [Google Scholar]

- 28.Duszak R, Jr., Berlin JW. Utilization Management in Radiology, Part 1: Rationale, History, and Current Status. Journal of the American College of Radiology. 2012 Oct;9(10):694–699. doi: 10.1016/j.jacr.2012.06.010. [DOI] [PubMed] [Google Scholar]

- 29.Duszak R, Jr., Berlin JW. Utilization Management in Radiology, Part 2: Perspectives and Future Directions. Journal of the American College of Radiology. 2012 Oct;9(10):700–703. doi: 10.1016/j.jacr.2012.06.009. [DOI] [PubMed] [Google Scholar]

- 30.Amis ES, Butler PF, Applegate EA, et al. American College of Radiology White Paper on Radiation Dose in Medicine. Journal of the American College of Radiology. 2007;4:272–284. doi: 10.1016/j.jacr.2007.03.002. [DOI] [PubMed] [Google Scholar]

- 31.Berrington de Gonzalez A, Darby S. Risk of cancer from diagnostic x-rays: estimates for the UK and 14 other countries. Lancet. 2004;363:345–351. doi: 10.1016/S0140-6736(04)15433-0. [DOI] [PubMed] [Google Scholar]

- 32.Brenner DJ, Hall EJ. Computed tomography: an increasing source of radiation exposure. N Engl J Med. 2007 Nov 29;357(22):2277–84. doi: 10.1056/NEJMra072149. [DOI] [PubMed] [Google Scholar]

- 33.Bershow B, Courneya P, Vinz C. Decision-Support for More Appropriate Ordering of High-Tech Diagnostic Imaging Scans.. Presentation at ICSIColoquium 2009 © 2009 Institute for Clinical Systems Improvement; St. Paul, Mn. May 4th-6th 2009. [Google Scholar]

- 34.Iglehart JK. The new era of medical imaging -- progress and pitfalls. N Engl J Med. 2006;354:2822–2828. doi: 10.1056/NEJMhpr061219. [DOI] [PubMed] [Google Scholar]

- 35.Iglehart JK. Health Insurers and Medical-Imaging Policy — A Work in Progress. N Engl J Med. 2009;360:1030–1037. doi: 10.1056/NEJMhpr0808703. [DOI] [PubMed] [Google Scholar]

- 36. Yong PL, Olsen L. IOM Roundtable on Evidence-Based Medicine. The Healthcare Imperative: Lowering Costs and Improving Outcomes; Brief Summary of the Workshop. Prepublication copy (uncorrected proofs). Washington, DC: National Academies Press; 2009. Available at http://www.nap.edu/catalog/12750.html.

- 37. Taragin BH, Feng L, Ruzal-Shapiro C. Online Radiology Appropriateness Survey: Results and Conclusions from an Academic Internal Medicine Residency. Acad Radiol. 2003;10(7):781–785. doi: 10.1016/s1076-6332(03)80123-x.

- 38. Lee SI, et al. Does Radiologist Recommendation for Follow-up with the Same Imaging Modality Contribute Substantially to High-Cost Imaging Volume? Radiology. 2007;242:857–864. doi: 10.1148/radiol.2423051754.

- 39. Gazelle GS, Halpern EF, Ryan HS, Tramontano AC. Utilization of Diagnostic Medical Imaging: Comparison of Radiologist Referral versus Same-Specialty Referral. Radiology. 2007;245(2):517–522. doi: 10.1148/radiol.2452070193.

- 40. Blackmore CC, Mecklenburg RS, Kaplan GS. Effectiveness of Clinical Decision Support in Controlling Inappropriate Imaging. J Am Coll Radiol. 2011 Jan;8(1):19–25. doi: 10.1016/j.jacr.2010.07.009.

- 41. Rosenthal DI, Weilburg JB, Schultz T, et al. Radiology order entry with decision support: initial clinical experience. J Am Coll Radiol. 2006;3:799–806. doi: 10.1016/j.jacr.2006.05.006.

- 42. Khorasani R. Computerized physician order entry and decision support: improving the quality of care. Radiographics. 2001;21(4):1015–8. doi: 10.1148/radiographics.21.4.g01jl371015.

- 43. Otero HJ, Ondategui-Parra S, Nathanson EM, Erturk SM, Ros PR. Utilization management in radiology: basic concepts and applications. J Am Coll Radiol. 2006;3:351–357. doi: 10.1016/j.jacr.2006.01.006.

- 44. Sistrom CL. The appropriateness of imaging: a comprehensive conceptual framework. Radiology. 2009 Jun;251(3):637–49. doi: 10.1148/radiol.2513080636.

- 45. Sistrom CL, Dang PA, Weilburg JB, Dreyer KJ, Rosenthal DI, Thrall JH. Effect of Computerized Order Entry with Integrated Decision Support on the Growth of Outpatient Procedure Volumes: Seven-year Time Series Analysis. Radiology. 2009 Apr;251(1):147–55. doi: 10.1148/radiol.2511081174. Epub 2009 Feb 12.

- 46. American College of Radiology. ACR Appropriateness Criteria. Available at http://www.acr.org/Quality-Safety/Appropriateness-Criteria.

- 47. Kahn CE Jr, Thao C. GoldMiner: a radiology image search engine. AJR Am J Roentgenol. 2007;188(6):1475–8. doi: 10.2214/AJR.06.1740. PMID: 17515364.

- 48. Johnson PT, Fishman EK. Computed tomography dataset post processing: from data to knowledge. Mt Sinai J Med. 2012 May-Jun;79(3):412–21. doi: 10.1002/msj.21316.

- 49. Dromain C, Boyer B, Ferré R, Canale S, Delaloge S, Balleyguier C. Computed-aided diagnosis (CAD) in the detection of breast cancer. Eur J Radiol. 2012 Aug 29. doi: 10.1016/j.ejrad.2012.03.005. [Epub ahead of print]

- 50. Dromain C, Boyer B, Ferré R, Canale S, Delaloge S, Balleyguier C. Computed-aided diagnosis (CAD) in the detection of breast cancer. Eur J Radiol. 2012. doi: 10.1016/j.ejrad.2012.03.005. PMID: 22939365.

- 51. Lee KH, Goo JM, Park CM, Lee HJ, Jin KN. Computer-aided detection of malignant lung nodules on chest radiographs: effect on observers’ performance. Korean J Radiol. 2012;13(5):564–71. doi: 10.3348/kjr.2012.13.5.564.

- 52. Perumpillichira JJ, Yoshida H, Sahani DV. Computer-aided detection for virtual colonoscopy. Cancer Imaging. 2005;5(1):11–6. doi: 10.1102/1470-7330.2005.0016.

- 53. Yuan MJ. Watson and healthcare: How natural language processing and semantic search could revolutionize clinical decision support. IBM developerWorks; April 12, 2011. Available at http://www.ibm.com/developerworks/industry/library/ind-watson/.

- 54. Reiner B. Radiology Report Innovation: The Antidote to Medical Imaging Commoditization. J Am Coll Radiol. 2012;9(7):455–457. doi: 10.1016/j.jacr.2011.12.013.

- 55. Kahn CE Jr, Langlotz CP, Burnside ES, Carrino JA, Channin DS, Hovsepian DM, Rubin DL. Toward best practices in radiology reporting. Radiology. 2009;252(3):852–6. doi: 10.1148/radiol.2523081992.

- 56. American College of Radiology. ACR practice guideline for communication of diagnostic imaging findings. Reston, Va: American College of Radiology; 2005.

- 57. Lakhani P, Kim W, Langlotz CP. Automated Detection of Critical Results in Radiology Reports. J Digit Imaging. 2012;25:30–36. doi: 10.1007/s10278-011-9426-6.

- 58. Lakhani P, Langlotz CP. Automated detection of radiology reports that document non-routine communication of critical or significant radiology results. J Digit Imaging. 2010 Dec;23:647–57. doi: 10.1007/s10278-009-9237-1. (Epub 2008 Oct)

- 59. Lakhani P, Langlotz CP. Documentation of nonroutine communications of critical or significant radiology results: a multiyear experience at a tertiary hospital. J Am Coll Radiol. 2010;7(10):782–790. doi: 10.1016/j.jacr.2010.05.025.

- 60. Dreyer KJ, Kalra MK, Maher MM, et al. Application of Recently Developed Computer Algorithm for Automatic Classification of Unstructured Radiology Reports: Validation Study. Radiology. 2005 Feb;234(2):323–9. doi: 10.1148/radiol.2341040049.

- 61. Rubin DL, Mongkolwat P, Kleper V, Supekar K, Channin DS. Medical Imaging on the Semantic Web: Annotation and Image Markup. 2008 AAAI Spring Symposium Series, Semantic Scientific Knowledge Integration; Stanford University; 2008. Available at http://stanford.edu/~rubin/pubs/Rubin-AAAI-AIM-2008.pdf.

- 62. caBIG In-vivo Imaging Workspace. Annotation and Image Markup (AIM). Available at https://cabig.nci.nih.gov/tools/AIM. Accessed December 26, 2008.

- 63. Channin DS, Mongkolwat P, Kleper V, Dave VV, Rubin DL. The Annotation and Image Markup (AIM) Project: Version 2.0 Update. Scientific paper, Society for Imaging Informatics in Medicine Annual Scientific Meeting; Minneapolis, MN; 2010.

- 64. Jaffe TA, Wickersham NW, Sullivan DC. Quantitative imaging in oncology patients: Part 1, radiology practice patterns at major U.S. cancer centers. AJR Am J Roentgenol. 2010;195(1):101–6. doi: 10.2214/AJR.09.2850.

- 65. Abajian AC, Levy M, Rubin DL. Informatics in Radiology: Improving Clinical Work Flow through an AIM Database: A Sample Web-based Lesion Tracking Application. Radiographics. 2012;32(5):1543–52. doi: 10.1148/rg.325115752.

- 66. Rubin DL, Rodriguez C, Shah P, Beaulieu C. iPad: Semantic Annotation and Markup of Radiological Images. AMIA Annu Symp Proc. 2008:626–30. PMID: 18999144.

- 67. Zimmerman SL, Kim W, Boonn WW. Informatics in radiology: automated structured reporting of imaging findings using the AIM standard and XML. Radiographics. 2011;31(3):881–7. doi: 10.1148/rg.313105195.

- 68. Rubin DL, Korenblum D, Yeluri V, Frederick P, Herfkens RJ. Semantic Annotation and Image Markup in a Commercial PACS Workstation. Scientific paper, Ninety-sixth annual scientific meeting of the RSNA; Chicago, IL; 2010.

- 69. Rubin DL, Snyder A. ePAD: A Cross-Platform Semantic Image Annotation Tool. Ninety-seventh annual scientific meeting of the RSNA; Chicago, IL; 2011.

- 70. Flanders AE. Medical image and data sharing: are we there yet? Radiographics. 2009 Sep-Oct;29(5):1247–51. doi: 10.1148/rg.295095151.

- 71. Mendelson DS, Bak PRG, Menschik E, Siegel E. Informatics in Radiology: Image Exchange: IHE and the Evolution of Image Sharing. Radiographics. 2008;28:1817–1833. doi: 10.1148/rg.287085174. Published online September 4, 2008.

- 72. Mendelson DS. Image Sharing: Where we've been, where we're going. Appl Radiol. 2011 Nov;40(11):6–11.

- 73. Sodickson A, Opraseuth J, Ledbetter S. Outside imaging in emergency department transfer patients: CD import reduces rates of subsequent imaging utilization. Radiology. 2011;260:408–413. doi: 10.1148/radiol.11101956. Epub 2011 Apr 19.

- 74. Flanagan PT, Relyea-Chew A, Gross JA, Gunn ML. Using the Internet for Image Transfer in a Regional Trauma Network: Effect on CT Repeat Rate, Cost, and Radiation Exposure. J Am Coll Radiol. 2012 Sep;9(9):648–56. doi: 10.1016/j.jacr.2012.04.014.

- 75. RSNA Image Share. Internet Database Network for Patient-Controlled Medical Image Sharing Data. NIBIB/NHLBI Contract HHSN26800900060C.

- 76. Central Alabama Health Image Exchange.

- 77. Personally Controlled Sharing of Medical Images in the Rural and Urban Southeast North Carolina. NIBIB/NHLBI Grant 1 RC2 EB011406-01.

- 78. Integrating the Healthcare Enterprise. IHE IT Infrastructure Technical Framework, Vol 1 (ITI TF-1): Integration Profiles. Rev 7.0. Chicago, IL: American College of Cardiology, Healthcare Information and Management Systems Society, and Radiological Society of North America; August 2007. Available at http://www.ihe.net/Technical_Framework. Accessed July 27, 2011.

- 79. IHE Radiology Technical Framework, Volume 1 (IHE RAD TF-1): Integration Profiles. Revision 11.0, Final Text. July 24, 2012. Available at http://www.ihe.net/Technical_Framework/upload/IHE_RAD_TF_Vol1_FT.pdf.

- 80. American College of Radiology. Dose Index Registry. Available at http://www.acr.org/Quality-Safety/National-Radiology-Data-Registry/Dose-Index-Registry.

- 81. American College of Radiology. DIR Mapping Tool User Guide. August 16, 2012. Available at http://www.acr.org/~/media/ACR/Documents/PDF/QualitySafety/NRDR/DIR/MappingToolUserGuideDIR.pdf.

- 82. American College of Radiology. RADPEER. Available at http://www.acr.org/Quality-Safety/RADPEER.

- 83. Federal Coordinating Council for Comparative Effectiveness Research. Report to the President and the Congress. Washington, DC: Department of Health and Human Services; June 2009. Available at http://www.hhs.gov/recovery/programs/cer/cerannualrpt.pdf.

- 84. Conway PH, Clancy C. Comparative-Effectiveness Research -- Implications of the Federal Coordinating Council's Report. N Engl J Med. 2009;361:328–330. doi: 10.1056/NEJMp0905631.

- 85. Report to the President and the Congress on Comparative Effectiveness Research. Available at http://www.hhs.gov/recovery/programs/cer/execsummary.html.

- 86. Brown BB. Delphi Process: A Methodology Used for the Elicitation of Opinions of Experts. Santa Monica, Calif: RAND Corporation; 1968.

- 87. Dalkey NC. The Delphi method: An experimental study of group opinion. Document no. RM-5888-PR. Santa Monica, Calif: RAND Corporation; 1969.

- 88. Brook RH. The RAND/UCLA Appropriateness Method. In: McCormick KA, Moore SR, Siegel RA, editors. Methodology Perspectives. AHCPR Pub. No. 95-0009. Rockville, MD: Public Health Service, U.S. Department of Health and Human Services; pp. 59–70.

- 89. Sistrom CL. The appropriateness of imaging: a comprehensive conceptual framework. Radiology. 2009 Jun;251(3):637–49. doi: 10.1148/radiol.2513080636.

- 90. Begley S. The Best Medicine: A quiet revolution in comparative effectiveness research just might save us from soaring medical costs. Sci Am. 2011 Jul;305(1):50–55. doi: 10.1038/scientificamerican0711-50.

- 91. Schadt EE, Linderman MD, Sorenson J, Lee L, Nolan GP. Cloud and heterogeneous computing solutions exist today for the emerging big data problems in biology. Nat Rev Genet. 2011 Mar;12(3):224. doi: 10.1038/nrg2857-c2. Epub 2011 Feb 8.

- 92. Schadt EE, Linderman MD, Sorenson J, Lee L, Nolan GP. Computational solutions to large-scale data management and analysis. Nat Rev Genet. 2010 Sep;11(9):647–57. doi: 10.1038/nrg2857.

- 93. Njuguna N, Flanders AE, Kahn CE Jr. Informatics in Radiology: Envisioning the Future of E-Learning in Radiology: An Introduction to SCORM. Radiographics. 2011 Jul-Aug;31(4):1173–9; discussion 1179-80. doi: 10.1148/rg.314105191. Epub 2011 May 5.

- 94. Chang P. Invited commentary. Radiographics. 2011 Jul;31(4):1179–1180.