Abstract

This study investigated the role of the eye region of emotional facial expressions in modulating gaze orienting effects. Eye widening is characteristic of fearful and surprised expressions and may significantly increase the salience of perceived gaze direction. This perceptual bias, rather than the emotional valence of certain expressions, may drive enhanced gaze orienting effects. In a series of three experiments involving low anxiety participants, different emotional expressions were tested using a gaze-cueing paradigm. Fearful and surprised expressions enhanced the gaze orienting effect compared with happy or angry expressions. Presenting only the eye regions as cueing stimuli eliminated this effect, whereas inversion globally reduced it. Both inversion and the use of eyes only attenuated the emotional valence of stimuli without affecting the perceptual salience of the eyes. The findings thus suggest that low-level stimulus features alone are not sufficient to drive gaze orienting modulations by emotion. Rather, they interact with the emotional valence of the expression, which appears critical. The study supports the view that rapid processing of fearful and surprised emotional expressions can potentiate orienting to another person’s averted gaze in non-anxious people.

Keywords: Emotional face processing, Eye gaze processing, Social attention

Processing facial signals is fundamental to successfully navigating our social environment, interpreting social signals and cues, and adapting behaviours appropriately. One important social cue is the direction of another person’s eye gaze, which can confer information about their direction of attention (Langton, Watt, & Bruce, 2000; see Itier & Batty, 2009, for a review). Interpreting eye gaze is also an important aspect of our social cognition, as it allows us to make inferences regarding the intentions and states of mind of other people (e.g., Baron-Cohen, 1995). Humans are sensitive to the direction of another’s eye gaze from infancy (Farroni, Csibra, Simion, & Johnson, 2002; Hood, Willen, & Driver, 1998) and a large body of research has demonstrated that shifting attention to the direction of another person’s gaze is a highly efficient, possibly automatic process (e.g., Driver et al., 1999; Friesen & Kingstone, 1998; Langton & Bruce, 1999), supporting an important adaptive role of gaze processing for survival. Experimental studies have used an adaptation of Posner’s attentional cueing task (Posner, 1980), in which the directional cue consists of a centrally presented schematic or photographic face whose eye gaze is averted to the left or right. Laterally presented targets whose position is congruent with the direction of gaze are detected faster than targets presented at the nongazed-at location (Driver et al., 1999; Langton & Bruce, 1999; see Frischen, Bayliss, & Tipper, 2007, for a review). This effect is evident even when participants are aware that the direction of gaze is counterpredictive of target location, demonstrating the strong and involuntary shift of the observers’ attention in the direction of gaze (Driver et al., 1999; Friesen, Ristic, & Kingstone, 2004).

Attention is modulated not only by the direction of eye gaze but also by facial emotion. Attentional vigilance to threatening faces (e.g., Bradley, Mogg, & Millar, 2000; Mogg & Bradley, 1999) and fearful faces (Fox, 2002) has been demonstrated for individuals with high levels of trait anxiety using dot-probe paradigms. In these studies dot probes were detected faster when preceded by threatening stimuli than neutral or positive stimuli. Similarly the “threat-superiority effect” in visual search tasks explains why participants detect angry faces in a search array of neutral or happy faces faster than they detect neutral or happy faces in an array of threatening, angry faces (e.g., Eastwood, Smilek, & Merikle, 2001; Fox et al., 2000; Öhman, Lundqvist, & Esteves, 2001). A recent body of research suggests discrete facial expressions may differently affect the magnitude of gaze orienting effects (GOE), as both expression and gaze impact attentional vigilance. Mathews, Fox, Yiend, and Calder (2003) first reported greater GOE for fearful compared to neutral faces for participants high in trait anxiety, a finding that has since been replicated (Fox, Mathews, Calder, & Yiend, 2007; Holmes, Richards, & Green, 2006; Tipples, 2006). High anxious individuals may have a lower threshold for threat processing, and triggering attentional orienting, thus resulting in greater cueing effects to fearful faces (Mathews et al., 2003). This explanation seems logical given the source of the observed person’s fear may also be a personal threat, thus attending to the gazed-at location can be beneficial for personal safety. Anger is another threatening facial expression, but in contrast to fear, different attentional cueing mechanisms would be expected as another person’s anger is most threatening when directed at one’s self rather than elsewhere. Thus, angry faces with averted gaze would not be expected to result in an enhanced GOE comparable to that demonstrated for fearful faces. 
Fox et al. (2007) showed an enhanced GOE to fearful compared to angry faces in anxious individuals. In contrast, in a straight-gaze condition, responses were significantly slower for anger than any other emotion, presumably because the direct gaze combined with the angry expression strongly engaged attention, in turn slowing attention orienting (and thus responses) to peripheral targets.

The evidence for enhanced GOE to fearful faces amongst nonanxious individuals is less straightforward. Using six experiments, Hietanen and Leppänen (2003) were unable to demonstrate such an effect in low anxious individuals. In contrast, Tipples (2006) and Putman, Hermans, and van Honk (2006) demonstrated enhanced GOE for fearful compared with neutral and happy expressions, respectively. However, in both studies participants’ anxiety scores ranged from low to high and correlated positively with the size of the GOE to fearful faces, in accordance with the studies in high anxious individuals reported above. Neither study reported results separately for low and high anxious subgroups. Other studies, such as Graham, Friesen, Fichtenholz, and LaBar (2010), who reported in one of their experiments an enhanced GOE for fearful compared to happy and neutral faces, did not report trait anxiety scores, making it difficult to know whether their effects were linked to participants’ anxiety status. The evidence for enhanced GOE to fearful faces is thus mixed and incongruent findings are, at least in part, due to differences in participants’ anxiety level. A clear demonstration of GOE enhancement for fear in nonanxious participants is lacking. Here, we report GOE modulations with facial emotions, including fear, in nonanxious participants.

Differences in other aspects of experimental design have led to some inconsistent findings and further evidence is needed to shed light on possible mechanisms underlying the GOE to fearful faces. The present set of experiments addresses some of these issues to further our understanding of gaze orienting in the context of different facial emotions. Two important experimental variables are the type of stimuli (static or dynamic) and the task used. Putman et al. (2006) presented dynamic face videos in which gaze and expression changed simultaneously, and used a less demanding target localization (as opposed to discrimination) task. The authors argue that their use of dynamic displays of emotional expressions is more ecologically valid, and may increase the threat value of fearful faces. This idea is supported by an fMRI study showing greater activation of the amygdala and temporal cortical structures in response to dynamic than static fearful and happy expressions (Sato, Yoshikawa, Kochiyama, & Matsumura, 2004). Moreover, Sato and Yoshikawa (2004) reported that dynamic facial expressions were rated as more naturalistic than static expressions. Thus, in addition to possible effects of participants’ anxiety levels, the enhanced GOE reported for fear in Putman et al.’s study may be due to the greater emotional valence and ecological validity of the dynamic stimuli used, or the enhanced discriminability of eye gaze in the fearful face condition (see later).

Tipples (2006) used static face images in which expression changed before gaze shifted in a target discrimination task. Graham et al. (2010) argue that the effects of enhanced GOE for fear compared to neutral demonstrated in Tipples’s study may be driven by the expression change preceding the gaze shift, which would provide more salient cue stimuli (widened eyes) in the fear condition. Most studies to date have emphasized the affective valence of emotional expressions as the mechanism underlying the modulations of the GOE. An alternative explanation focuses on the perceptual characteristics of the stimuli used in addition to, or in place of, their affective valence. As mentioned in Tipples (2005, 2006), it is possible that the greater gaze-cueing effect found for fearful faces was due to the eyes which are characteristically more open in the expression of fear than in happy, neutral, or angry expressions. The perception of eye gaze direction is based to a great extent on the contrast between the sclera and the iris, as shown by experiments using contrast reversed (photonegative) faces in which gaze is perceived as being in the opposite direction compared to normal, positive-contrast pictures (Ando, 2002; Ricciardelli, Baylis, & Driver, 2000; Sinha, 2000). Thus, the characteristic eye widening in fearful facial expressions may be strongly contributing to the enhanced GOE.

Another emotional expression with characteristic eye widening is surprise. In surprised faces the eyebrows are typically raised accompanied by widened eyes. However, unlike fear, surprise is not specifically categorized as a negative emotion or one signalling threat (Tomkins & McCarter, 1964) and to date surprise has not been included as a comparison expression in any gaze-cueing experiments. Moreover the contribution of the perceptual characteristics of the eyes to the enhanced GOE to fearful faces, particularly in low-anxious individuals, has not been evaluated.

Three experiments were conducted to investigate the contribution of emotional valence of different facial expressions and the role of the eye region in modulating the GOE. In all experiments dynamic emotional expressions were presented to individuals selected for low trait and state anxiety. Experiment 1 examined the effect of surprised expression in comparison to angry, happy, neutral, and fearful expressions. If the GOE difference for fearful faces compared to other emotions is related to basic perceptual differences in the eye region such as the amount of exposed sclera, we expected that fearful and surprised expressions, which both present widened eyes, would elicit an enhanced GOE compared to angry and happy expressions for which the eyes are squinted. However, emotional valence may also explain the predicted modulation of GOE by surprise as attentional vigilance of the observer may be increased in order to locate the unexpected (positive or negative) event responsible for the expression. In order to disentangle the contribution of the emotional valence from that of the basic perceptual characteristics of the eye region, we conducted a second experiment in which all stimuli were presented upright and inverted (upside-down). Processing emotional valence of a face relies, at least in part, on holistic and/or configural processing and has been shown to be disrupted by face inversion in static (Bartlett & Searcy, 1993; McKelvie, 1995; Valentine, 1988) and dynamic (Ambadar, Schooler, & Cohn, 2005) displays of expression. The emotional valence of inverted faces is lessened by inversion, but the perceptual characteristics of the eye region remain unchanged. Thus, if the cueing effect relies mostly on the amount of exposed sclera, we expected it would not be significantly affected by face inversion and very similar enhanced GOE should be seen for fearful and surprised expressions in both upright and inverted stimuli. 
In contrast, if presenting faces upside-down reduces the GOE modulations by expressions, we would conclude that these modulations are, at least in part, driven by the emotional content of the face. Finally, to directly test whether the eye region alone is sufficient to drive a modulation of gaze cueing by expression, we presented isolated eye regions upright and inverted (Experiment 3). If the eyes, which in isolation are sufficient to produce a gaze cueing effect (Kingstone, Friesen, & Gazzaniga, 2000), can elicit GOE modulations by emotion without the context of a full face, we would expect comparable modulations by expressions in Experiment 3 as seen with full faces in Experiments 1 and 2. Accordingly, the eye stimuli taken from fearful and surprised expressions in which the eyes are maximally open should result in the greatest gaze-cueing effect compared to eye stimuli taken from happy and angry emotional expressions in which the eyes do not widen.

EXPERIMENT 1

In Experiment 1 we used the same design as Putman et al. (2006) involving dynamic emotional face stimuli presented upright. However, they compared only fearful and happy expressions; we also included neutral, angry, and surprised expressions in our design. To our knowledge, surprised expressions have never been tested, alone or in comparison to other expressions, in a static or dynamic design. Dynamic neutral and angry expressions have only been tested in Graham et al. (2010). In addition, we restricted our group to low anxious participants. We sought to replicate Putman et al.’s finding of enhanced GOE to fearful compared to happy expressions. We also predicted that the GOE to fearful expressions would be larger than that to neutral and angry expressions. Finally, given the perceptual similarity between surprised and fearful faces in terms of eye opening (both present larger sclera size than other emotions), we predicted a similar enhancement of the GOE for surprised and fearful expressions.

Method

Participants

Twenty participants (10 female) were recruited from the Toronto area and paid for their participation. Ages ranged from 20 to 32 years (M=26.25, SD=3.18). Participants had normal or corrected-to-normal vision and all were right-handed. Both state (M=30.25, SD=5.81) and trait (M=36.00, SD=7.56) anxiety scores were within 1 standard deviation of the mean normative scores for state (M=36.36, SD=10.59) and trait (M=35.85, SD=9.65) anxiety (STAI; Spielberger, Gorsuch, Lushene, Vagg, & Jacobs, 1983), indicating no elevated anxiety amongst our participants. The study was approved by the Hospital for Sick Children Research Ethics Board and all participants gave informed written consent.

Stimuli

Ten photographs of faces (five men, five women) with happy, fearful, surprised, angry, and neutral expressions were selected from the MacBrain Face Stimulus Set (see Tottenham et al., 2009 for a full description and validation of the stimuli). Eye gaze was manipulated using GIMP digital imaging software. For each image, the iris was cut and pasted to the corners of the eyes to produce a directional (left/right) gaze or a nondirectional eye movement (cross-eyed) as a control condition (see Figure 1). The cross-eyed (no-gaze) condition was used rather than a straight-gaze condition to control for motion of the eyes, as straight gaze can capture attention thereby slowing RTs (Senju & Hasegawa, 2005). The images were cropped to remove hair, ears, and shoulders. Dynamic stimuli were created using WinMorph software. A face of neutral expression with direct gaze was used as a starting point, and the same face bearing an emotional expression with directional eye gaze was used as an endpoint. The eyebrows, outline of eyelids, irises, nose, and lips were defined as anchor points for the morphing procedure. Resulting video files consisted of six frames, recorded at 50 frames per second to create a 120 ms movie of a dynamic face. The video stimulus was presented for 120 ms, and the final frame was held for an additional 80 ms, producing a 200 ms stimulus. The face videos were presented against a grey background, and subtended a visual angle of approximately 6°×4°. The stimuli were placed such that the eyes were at the level of the fixation cross, at the centre of the screen. The target consisted of a black asterisk of 1°×1° visual angle, which appeared to the left or right of fixation, at an eccentricity of 9° of visual angle, immediately after offset of the face stimulus.
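The timing and geometry described above can be checked with a few lines of arithmetic. The following is a minimal sketch, not the authors' stimulus code: the function and constant names are hypothetical, and the 60 cm viewing distance is taken from the Design and procedure section.

```python
import math

def morph_duration_ms(n_frames: int, frames_per_second: int) -> float:
    """Duration of a movie made of n_frames shown at a fixed frame rate."""
    return n_frames * 1000 / frames_per_second

def eccentricity_cm(angle_deg: float, viewing_distance_cm: float) -> float:
    """On-screen distance from fixation for a given eccentricity in degrees."""
    return viewing_distance_cm * math.tan(math.radians(angle_deg))

# Values reported for Experiment 1 (names are illustrative only).
EXP1_FRAMES = 6
EXP1_FPS = 50
EXP1_HOLD_MS = 80            # final frame held after the morph
VIEWING_DISTANCE_CM = 60
TARGET_ECCENTRICITY_DEG = 9

exp1_morph_ms = morph_duration_ms(EXP1_FRAMES, EXP1_FPS)   # morph sequence
exp1_total_ms = exp1_morph_ms + EXP1_HOLD_MS               # full face cue
target_offset_cm = eccentricity_cm(TARGET_ECCENTRICITY_DEG, VIEWING_DISTANCE_CM)
```

Under these assumptions the morph lasts 120 ms, the full cue 200 ms, and the target sits roughly 9.5 cm from fixation on the screen.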

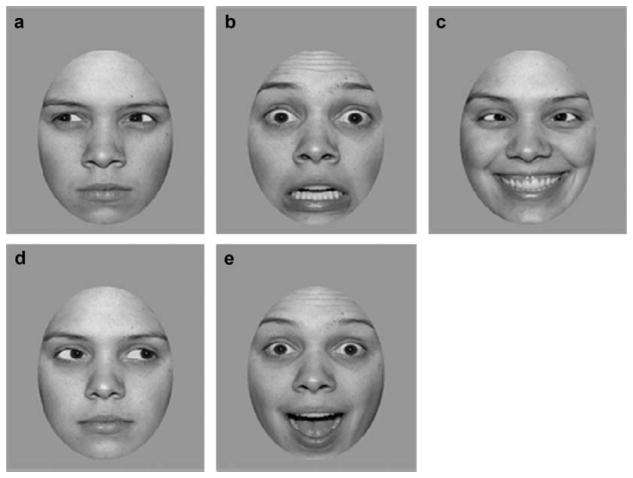

Figure 1.

Example of emotional expressions and gaze directions. (a) Anger, left averted gaze; (b) fearful, straight gaze; (c) happy, cross-eyed; (d) neutral, right averted gaze; (e) surprised, straight gaze. The cross-eyed gaze stimuli were used only in Experiment 1.

Design and procedure

Participants performed the experiment in a quiet, well-lit room, seated 60 cm from the computer screen. The task was programmed using Presentation Software (Neurobehavioural Systems) and consisted of 900 trials. Across the whole experiment there were 60 trials per condition (collapsed across left and right targets). The trial order was fully randomized with 10 face models appearing equally often within each condition. Neither face identity nor emotion repeated within fewer than four successive trials. Congruency did not repeat more than twice on successive trials. A trial consisted of a fixation cross at the centre of the screen followed by the dynamic face stimulus (200 ms), which was replaced immediately by a fixation cross with the target to the left or right of fixation. The target was presented until a response was made, but for no longer than 1000 ms. The fixation cross was presented during the intertrial interval, which was jittered between 1000 and 1500 ms (M=1250 ms). Participants were instructed to maintain fixation on the cross at the centre of the screen, and asked to respond to the target as quickly and accurately as possible, using a left-hand button-press for targets appearing on the left, and a right-hand button-press for targets appearing on the right. Participants were told that the direction of eye gaze would not predict the side of appearance of the target. The task was divided into five blocks of 180 trials, and short rests were offered between blocks.
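The trial counts reported above are mutually consistent, which a short bookkeeping sketch makes explicit. It assumes that the 15 conditions implied by 900 trials at 60 trials per condition are the five emotions crossed with three gaze conditions (congruent, incongruent, cross-eyed); that factorization is an inference from the reported numbers, and all names are hypothetical.

```python
from itertools import product

EMOTIONS = ["anger", "fear", "happiness", "surprise", "neutral"]
# Assumption: three gaze conditions, inferred from 900 / 60 / 5 = 3.
GAZE_CONDITIONS = ["congruent", "incongruent", "cross-eyed"]

TRIALS_PER_CONDITION = 60   # collapsed across left and right targets
N_MODELS = 10               # face identities, appearing equally often
N_BLOCKS = 5

# Every emotion paired with every gaze condition.
conditions = list(product(EMOTIONS, GAZE_CONDITIONS))

total_trials = len(conditions) * TRIALS_PER_CONDITION
trials_per_block = total_trials // N_BLOCKS
trials_per_model_per_condition = TRIALS_PER_CONDITION // N_MODELS
```

With these values each face model contributes six trials to every condition, and the five blocks contain 180 trials each.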

Data analysis

Responses were recorded as correct if the response key matched the side of the target appearance and if reaction times (RTs) were within 100–1000 ms. The remaining responses were marked as incorrect. The percentage of excluded trials (errors, anticipations, and time-outs) ranged from 0.08% to 0.25% for congruent trials, and from 2.42% to 6.67% for incongruent trials. Mean response latencies for correct answers were calculated according to facial emotions (anger, fear, happiness, surprise, neutral) and congruency (congruent, incongruent), with left and right target conditions averaged together. For each subject, only RTs within 2.5 standard deviations from the mean of each condition were kept in the mean RT calculation (van Selst & Jolicoeur, 1994). Averted gaze trials were analysed using a 5 (emotion)×2 (congruency) repeated measures ANOVA. When the Emotion×Congruency interaction was significant, further analyses were conducted separately for congruent and incongruent trials using an ANOVA with the factor emotion. Cross-eyed trials were analysed separately using an ANOVA with the factor emotion and used as a control baseline condition. For each emotion, the gaze orienting effect (GOE) was calculated as the mean RT difference between incongruent and congruent trials. It was analysed using repeated measures ANOVAs with the factor emotion. The significance level for all statistical tests was set at α = .05 and Greenhouse-Geisser correction for sphericity was applied where necessary. Adjustment for multiple comparisons was carried out using Bonferroni corrections.
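The RT filtering and GOE computation described above can be sketched as follows for one participant and one emotion. This is an illustrative reconstruction, not the authors' analysis script: the trial tuple layout and function names are hypothetical, and the use of the sample standard deviation with a single trimming pass is an assumption.

```python
from statistics import mean, stdev

def trimmed_mean(rts, n_sd=2.5):
    """Mean of the RTs lying within n_sd standard deviations of the raw mean."""
    if len(rts) < 2:
        return mean(rts)
    m, s = mean(rts), stdev(rts)   # assumption: sample SD, one trimming pass
    kept = [rt for rt in rts if abs(rt - m) <= n_sd * s]
    return mean(kept)

def gaze_orienting_effect(trials, rt_min=100, rt_max=1000, n_sd=2.5):
    """GOE = trimmed mean RT (incongruent) - trimmed mean RT (congruent).

    trials: iterable of (congruency, rt_ms, correct) tuples for one
    participant and one emotion (hypothetical layout).
    """
    by_congruency = {"congruent": [], "incongruent": []}
    for congruency, rt_ms, correct in trials:
        # Keep only correct responses inside the 100-1000 ms window.
        if congruency in by_congruency and correct and rt_min <= rt_ms <= rt_max:
            by_congruency[congruency].append(rt_ms)
    return (trimmed_mean(by_congruency["incongruent"], n_sd)
            - trimmed_mean(by_congruency["congruent"], n_sd))

# Made-up trials for illustration only.
example_trials = [
    ("congruent", 300, True), ("congruent", 310, True), ("congruent", 320, True),
    ("incongruent", 340, True), ("incongruent", 350, True), ("incongruent", 360, True),
    ("congruent", 50, True),       # anticipation, excluded by the RT window
    ("incongruent", 400, False),   # wrong key, excluded as an error
]
example_goe = gaze_orienting_effect(example_trials)
```

A positive value indicates faster responses to gazed-at than to nongazed-at targets; the per-emotion GOE values obtained this way are what would enter the ANOVA with the factor emotion.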

Results

Averted gaze trials

As expected there was a main effect of congruency, F(1, 19) = 157.2, p < .0001, such that RTs for congruent trials were shorter than for incongruent trials (Figure 2a). The main effect of emotion, F(4, 76) = 22.3, p < .0001, was due to slower RTs for neutral and fear compared to other emotions (p < .05 for each paired comparison except fear–surprise, with p = .061). Neutral faces also elicited slower RTs than fearful faces (p = .017). Most importantly, the Emotion × Congruency interaction was significant, F(4, 76) = 17.4, p < .0001. Further analyses were thus conducted separately for congruent and incongruent trials (see Figure 2a). A main effect of emotion was found for congruent trials, F(4, 76) = 3.7, p < .05, due to overall shorter RTs for surprise and fear, but no paired comparisons were significant (surprise–happiness comparison approached significance, p = .067). For the incongruent condition, the effect of emotion, F(4, 76) = 36.3, p < .0001, was due to shorter RTs for anger and happiness compared to all other emotions (all comparisons significant at p < .05 or less), and to longer RTs for neutral (p < .0001 for all comparisons except for neutral–fear comparison, with p = .09).

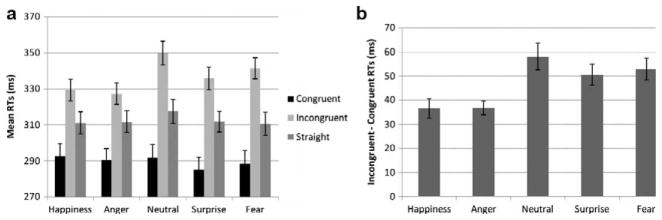

Figure 2.

Experiment 1 (N=20), error bars represent SE. (a) Mean RTs for each gaze condition and emotion; (b) mean gaze-orienting effects (calculated by subtracting the RT to congruent trials from the RT to incongruent trials) for each emotion.

The analysis of the GOE (RT incongruent–RT congruent trials) revealed a main effect of emotion, F(4, 76) = 17.4, p < .0001, due to larger GOE for neutral, surprise and fear compared to happiness and anger (p < .001 for each pairwise comparison) (see Figure 2b). Neutral, surprise, and fear did not differ significantly.

Cross-eyed (no gaze) trials

There was a main effect of emotion for cross-eyed trials, F(4, 76) = 4.4, p < .005, due to overall longer RTs for neutral than for all other emotions (only the neutral–happy comparison was significant, at p < .05). When neutral conditions were taken out of the analysis, the main effect of emotion was no longer significant (p > .8).

Discussion

The results of Experiment 1 provide clear evidence of an enhanced cueing effect to fearful facial emotions compared to both happy and angry emotions. This finding replicates the Putman et al. (2006) fearful–happy comparison using a different set of dynamic face stimuli (but see Graham et al., 2010, Exps. 1–4, to be discussed in the General Discussion). The results are also in accordance with Fox et al. (2007), who reported greater congruency effects to fearful compared to happy and angry emotions using static stimuli. However, Fox et al. reported differences only in a group of high anxious individuals, whereas the present results were obtained from a group of low anxious participants. This is important, as it suggests that in the context of dynamic stimuli, facial emotions can modulate the GOE even in participants with low anxiety. The novelty of our design was the inclusion of surprised facial emotion in addition to the other three emotions. Surprise and fear share the perceptual feature of eye widening, but convey a rather different affect, as surprise does not necessarily imply threat. As predicted, the congruency effects to surprise and fear were remarkably similar and were both greater than happiness and anger.

A less straightforward finding concerns the congruency effect for neutral expressions, which was not different from fear and surprise conditions. Although neutral expressions provide a useful control condition for emotional expressions in the case of static pictures, the comparison is less valid in the context of dynamic stimuli. Indeed, there was no facial movement other than the iris shift in neutral expressions. In all other conditions, the lips, eyebrows, and outline of the eyes, to name just a few, all moved significantly to convey the change from neutral to emotional expression. It is thus possible that neutral trials presented a lower processing load and therefore greater congruency effects than the emotional expressions tested. In other words the motion of the iris (i.e., the averted gaze) could be more salient in neutral than emotional faces as it is the only dynamic change. However, the enhanced GOE due to emotion for fear and surprise would compensate so that neutral, fear, and surprise would not differ in the end. This issue is addressed in more detail in the General Discussion.

The cross-eyed (no-gaze) condition was included as a control for the left and right averted gaze trials. In order to control for motion of the eyes we chose to use a gaze shift that resulted in cross-eyed gaze rather than straight-gaze trials which has been argued to capture attention (Senju & Hasegawa, 2005). A small effect of emotion was initially found, due to longer RTs to neutral than other expressions. This emphasizes again the fact that neutral expression is not directly comparable to the other emotions due to the different amount of facial motion. When neutral trials were removed from the analysis, the effect of emotion was no longer significant, suggesting a similar baseline comparison across emotions. This result suggests that the GOE modulations by emotions in the averted gaze trials are not due to a differential baseline amount of motion in the various expressions. In summary, using dynamic stimuli in a sample of participants selected for low anxiety, we (1) replicated the finding of larger GOE for fearful than happy faces (Putman et al., 2006; Tipples, 2006), (2) demonstrated a larger GOE for fearful than angry faces, which had been reported only for high anxious participants (Fox et al., 2007), and (3) extended the findings to surprise expression, previously unstudied, which showed similar enhancements of the GOE as fear, compared to both happiness and anger. The comparable effect of fear and surprise on the GOE may be due to the shared perceptual characteristics of the two expressions, in particular the eye widening, which increases the salience of the iris/sclera and enhances the perception of averted gaze (Tipples, 2005, 2006). Experiment 2 aimed at replicating the results of Experiment 1 to confirm the novel findings for surprise emotion. In addition, an inverted face condition was included. Face inversion disrupts the processing of emotional expression. 
Therefore, finding the same emotional modulations of the GOE in inverted faces would strengthen the argument that these modulations rely significantly on basic perceptual properties such as the extent of exposed sclera in the face stimuli, rather than on the emotional content per se.

EXPERIMENT 2

Experiment 2 was designed to replicate the results obtained in Experiment 1 for upright faces, and to extend them to inverted face stimuli. Face inversion disrupts holistic and configural processing and is thought to hamper the perception of emotional facial expressions (e.g., Ambadar et al., 2005). Face inversion has previously been used as a control condition in visual search tasks to demonstrate the reduced threat valence of inverted faces (e.g., Fox & Damjanovic, 2006). If the GOE relies mostly on eye widening, we expect it would not be significantly affected by face inversion and a very similar enhanced GOE should be seen for fear and surprise compared to anger and happiness in both upright and inverted stimuli. In contrast, if presenting faces upside-down changes or reduces the GOE modulations by emotions, we would conclude that these modulations are driven, at least in part, by holistic/configural processing and the emotional content of the face.

Method

Participants

Forty-three participants (26 females) were recruited and tested at the University of Waterloo. They were either paid $10/hour or received course credit for their participation. Ages ranged from 19 to 23 years (M=20.61, SD=1.29). Participants had normal or corrected-to-normal vision and two were left-handed. Participants recruited for credit were first pre-screened based on their scores on the STAI anxiety test administered during the University mass testing at the beginning of the term. Only participants with scores below 35 were recruited. However, once in the lab, all participants were (re)tested for anxiety using the STICSA anxiety test, which has been shown to be a more reliable measure of anxiety than the STAI (Gros, Antony, Simms, & McCabe, 2007). Both state (M=24.48, SD=3.73) and trait (M=28.26, SD=4.21) STICSA anxiety scores were under the low level score of 35 for each participant. The study was approved by the University of Waterloo Research Ethics Board and all participants gave informed written consent.

Stimuli

The same stimuli as in Experiment 1 were used with the following differences. Eight greyscale face photographs (four men, four women) from the MacBrain Face Stimulus Set were used, instead of 10. The cross-eyed condition was replaced by a straight-gaze condition, as used in Fox et al. (2007). This change was implemented as the cross-eyed stimuli used in Experiment 1 were perceived to be too unrealistic. Instead, more naturalistic stimuli were chosen, which also facilitated comparison of our data with previous studies. In addition, the morphing sequence consisted of six frames, each presented for 36 ms. In contrast to Experiment 1, all frames were of equal length for a total of 216 ms video presentation. All stimuli were presented in both upright and inverted orientations. For each face stimulus and each eye, the pixel size of the entire eye and that of the iris were measured using EyeLink Data Viewer version 1.9.1. The pixel size of the white sclera was then calculated by subtracting the iris size from the entire eye size. This measure was used for correlation analyses (see later).

Design and procedure

Experiment 2 replicated the design of Experiment 1 with the addition of inverted faces. The same procedure was used, with the following differences. Participants filled out the STICSA questionnaires (instead of the STAI) at the beginning of the study and then performed the gaze cueing task on the computer. The task consisted of eight blocks of 240 trials, with short rests in between. In each block an equal number of trial combinations, using the eight individuals (four per gender), five emotions (neutral, fear, surprise, happiness, and anger), two target positions (left, right), three gaze directions (left, right, straight), and two orientations (upright and inverted), was used. Congruent, incongruent, and straight conditions thus appeared equally often within each block and for each condition. All stimulus combinations were presented once across two blocks (480 trials), and this two-block sequence was presented four times in total. Block order and stimulus order within blocks were randomized across participants. A total of 1920 trials was thus presented. At the end of the session, participants filled in a questionnaire in which each picture used in the study was printed and had to be labelled according to the appropriate emotion. A score was created for each emotion using the number of correct responses (maximum eight). The entire session lasted less than two hours.
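The factorial structure of Experiment 2 can be enumerated to confirm the reported totals. The sketch below is only a consistency check under stated assumptions: the names are hypothetical, and the figure of four presentations of the full set is inferred from 1920 total trials divided by the 480 unique combinations.

```python
from collections import Counter
from itertools import product

MODELS = range(8)   # eight face identities
EMOTIONS = ["neutral", "fear", "surprise", "happiness", "anger"]
TARGET_SIDES = ["left", "right"]
GAZE_DIRECTIONS = ["left", "right", "straight"]
ORIENTATIONS = ["upright", "inverted"]
N_PRESENTATIONS = 4   # assumption: 1920 trials / 480 combinations
N_BLOCKS = 8

def congruency(gaze: str, target: str) -> str:
    """Label a trial from its gaze direction and target side."""
    if gaze == "straight":
        return "straight"
    return "congruent" if gaze == target else "incongruent"

combinations = list(product(MODELS, EMOTIONS, TARGET_SIDES,
                            GAZE_DIRECTIONS, ORIENTATIONS))

# How often each congruency label occurs in one pass through all combinations.
congruency_counts = Counter(congruency(gaze, target)
                            for _, _, target, gaze, _ in combinations)

# Trials per emotion x orientation x congruency cell in one pass.
cell_counts = Counter((emotion, orientation, congruency(gaze, target))
                      for _, emotion, target, gaze, orientation in combinations)

total_trials = len(combinations) * N_PRESENTATIONS
trials_per_block = total_trials // N_BLOCKS
trials_per_cell = cell_counts[("fear", "upright", "congruent")] * N_PRESENTATIONS
```

Under these assumptions, congruent, incongruent, and straight-gaze trials each make up one third of the design, and each emotion by orientation by congruency cell contains 64 trials before exclusions.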

Data analysis

As per Experiment 1, reaction times were filtered to remove incorrect trials and outlier latencies above and below 2.5 standard deviations from each condition’s mean for each subject. The percentage of excluded trials (errors, anticipations, and time-outs) ranged from 4.06 to 5.26 for congruent trials, and from 9.11 to 13.78 for incongruent trials. Mean RTs were then calculated and submitted to a 2 Orientation (upright, inverted)×2 Congruency (congruent, incongruent)×5 Emotion (anger, fear, happiness, neutral, surprise) repeated measures ANOVA for averted-gaze trials. A one-way ANOVA with the factor emotion was used for straight-gaze trials. The GOE was calculated per emotion using the RT difference between incongruent and congruent trials and analysed using an ANOVA with the factor emotion. Greenhouse-Geisser adjusted degrees of freedom and Bonferroni corrections for multiple comparisons were used whenever necessary. Spearman’s correlations between the pixel size of the white sclera and the GOE were carried out across subjects and emotions. Finally, the questionnaire scores were analysed using an ANOVA with the factor emotion, to assess subjects’ emotion recognition of the stimuli.
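The sclera measure and its rank correlation with the GOE can be illustrated with a small standard-library sketch. It is not the authors' procedure: the function names are hypothetical, the input values are made up, and pairing one sclera value with one GOE value per observation is an assumption about how the correlation "across subjects and emotions" was organized.

```python
from math import sqrt
from statistics import mean

def sclera_size_px(eye_px: float, iris_px: float) -> float:
    """White sclera size: entire eye size minus iris size, in pixels."""
    return eye_px - iris_px

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks

def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return cov / sqrt(var_x * var_y)

# Made-up (entire eye, iris) pixel sizes and GOE values, for illustration only.
example_sclera = [sclera_size_px(eye, iris)
                  for eye, iris in [(900, 400), (700, 390), (650, 380), (820, 395)]]
example_goe_ms = [35.0, 22.0, 18.0, 30.0]
example_rho = spearman_rho(example_sclera, example_goe_ms)
```

Because Spearman's rho works on ranks, it captures any monotonic relation between exposed sclera and cueing magnitude without assuming linearity.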

Results

Averted-gaze trials

A main effect of emotion, F(4, 168) = 8.9, p < .0001, was found as neutral faces elicited on average slower RTs compared to all emotions (p < .05 for each paired comparison). The main effect of congruency, F(1, 42) = 190.8, p < .0001, was also significant, with faster RTs for congruent than incongruent trials. The emotion by congruency interaction was also significant, F(4, 168) = 6.9, p < .001. Although no main effect of orientation (p = .89) was found, the orientation by congruency interaction, F(1, 42) = 29, p < .0001, was significant. Therefore, the data were reanalysed separately for upright and inverted conditions. This also allowed a direct comparison of the upright condition to the results of Experiment 1.

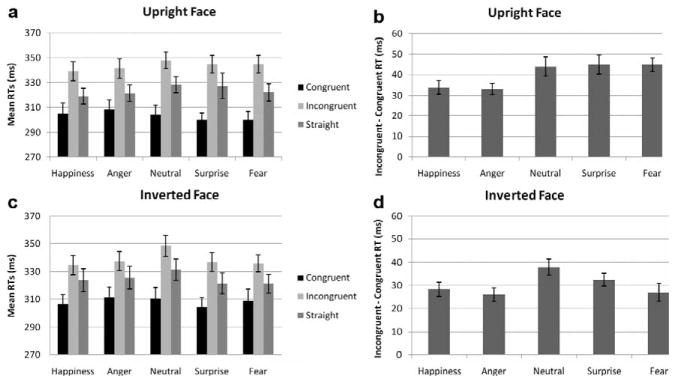

In the upright condition (Figure 3a), the classic main effect of congruency was found, F(1, 42) = 218.7, p < .0001; the effect of emotion was not significant but the emotion by congruency interaction was significant, F(4, 168) = 4.2, p < .05. When the data were further separated across congruency, a main effect of emotion was found only for incongruent trials, F(4, 158) = 4.3, p < .01, reflecting overall shorter RTs for happiness and longer RTs for neutral than other emotions but paired comparisons were not significant except for the happy–neutral pair (p < .05). Similarly, the GOE analysis revealed an effect of emotion, F(4, 168) = 4.2, p = .012, with larger GOE for fear compared to anger and happiness (p < .005 each) (see Figure 3b), reproducing the results of Experiment 1. As seen in Figure 3b, surprise also yielded a larger GOE than anger and happiness although the difference was significant only for the surprise–anger comparison (p < .05). Overall the same pattern of results was found for Experiment 1 and 2 (compare Figure 2b and Figure 3b).

Figure 3.

Experiment 2 (N=43), error bars represent SE. (a) Mean RTs for upright faces for each gaze condition and emotion; (b) mean gaze-orienting effects for each emotion for upright faces; (c) mean RTs for inverted faces for each gaze condition and emotion; (d) mean gaze-orienting effects for each emotion for inverted faces.

In the inverted condition, the main effect of congruency, F(1, 42) = 126.4, p < .0001, was also significant. The main effect of emotion, F(4, 168) = 13.2, p < .0001, was due to slower responses to neutral compared to all other emotions (p < .005 for all comparisons). The Emotion×Congruency interaction, F(4, 168) = 5.7, p < .001, was also significant, so congruent and incongruent trials were reanalysed separately. For inverted congruent trials the main effect of emotion, F(4, 168) = 3.4, p < .05, was due to faster RTs for surprise compared to the other emotions (surprise–neutral at p=.061 and surprise–anger at p=.009) (see Figure 3c). For inverted incongruent trials, the effect of emotion, F(4, 168) = 17.7, p < .0001, was due to slower RTs for neutral condition (p < .0001 for each paired comparison).

The GOE was also analysed using a 5 (emotion)×2 (orientation) repeated measures ANOVA. The main effect of orientation was significant, F(1, 42) = 29, p < .0001, with overall smaller GOE for inverted than upright trials (Figure 3b and 3d). There was a significant main effect of emotion, F(4, 168) = 6.9, p < .001, and paired comparisons revealed a larger GOE for neutral, surprise, and fear compared to anger (p < .01 for each comparison) and happiness (p < .005 for neutral–happiness, trend for surprise–happiness, p=.09). Importantly, the emotion by orientation interaction was not significant (p > .1) and paired-sample t-tests comparing directly the effect of orientation on each emotion confirmed a smaller GOE for inverted than upright condition for anger, t=2.9, p<.01, fear, t=4.3, p<.0001, and surprise, t=2.52, p=.016, with a trend for happiness, t=1.98, p=.054. No difference was found for neutral (p=.096).

Straight-gaze trials

A main effect of emotion was found, F(4, 168) = 7.3, p < .005, due to slower RTs for neutral compared to all other emotions. No effect of orientation (p > .2) or emotion by orientation interaction was found. When the neutral condition was taken out of the analysis, the effect of emotion was no longer significant (p > .3), as was seen for Experiment 1, suggesting a comparable motion baseline for all emotions.

Correlation analyses

Sclera size varied across emotions (averaged across both eyes, M=492.9 pixels for happy, M=582.2 for anger, M=780.5 for neutral, M=870.8 for surprise, M=1065.4 for fear). For upright faces, the correlation between mean sclera size averaged across both eyes and the GOE across emotions and subjects was significant (rho=.21, p < .005). Thus, the larger the sclera size, the larger the GOE. Importantly, the correlation was no longer significant for inverted faces (p > .2).
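For readers unfamiliar with rank correlations, the following sketch shows how Spearman's rho can be computed as a Pearson correlation on ranks. The sclera sizes are those reported above; the GOE values are hypothetical and chosen for illustration only. Note also that the sketch uses one value per emotion, whereas the analysis reported here was carried out across subjects and emotions:

```python
from statistics import mean


def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with the value at position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5


# Mean sclera size in pixels per emotion, as reported in the text.
sclera = {"happy": 492.9, "anger": 582.2, "neutral": 780.5,
          "surprise": 870.8, "fear": 1065.4}
# Hypothetical GOE values (ms), for illustration only; not data from the study.
goe = {"happy": 18.0, "anger": 15.0, "neutral": 27.0,
       "surprise": 26.0, "fear": 30.0}

emotions = list(sclera)
rho = spearman_rho([sclera[e] for e in emotions], [goe[e] for e in emotions])
print(round(rho, 2))
```

A rank-based coefficient is a reasonable choice here because it only assumes a monotonic relation between sclera size and the GOE, not a linear one.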

Questionnaire

The analysis of the questionnaire scores revealed that overall the recognition of all emotions was accurate. A main effect of emotion was found, F(4, 168) = 12.4, p < .0001. Fear (M=6.2, SE=0.2) and anger (M=6.6, SE=0.2) were less well categorized than happiness (M=7.3, SE=0.1), surprise (M=7.1, SE=0.1), and neutral (M=7.5, SE=0.1) emotions, which did not differ significantly (comparisons significant for fear–happy, fear–surprise, and fear–neutral at p < .005; anger–neutral at p < .005; anger–happy at p=.056).

Discussion

Experiment 2 replicated the design of Experiment 1 with the inclusion of an inverted face condition. For upright faces, the expected pattern of results was observed with greater GOEs for fear compared to happiness and anger, thus replicating the findings of Experiment 1, Fox et al. (2007), Graham et al. (2010, Exps. 5 and 6), Putman et al. (2006), and Tipples (2006). In contrast to the latter studies, participants in our experiment were specifically selected for low levels of anxiety. The finding of enhanced GOE for surprise was also significant compared to anger and in the predicted direction compared to happiness, thus replicating Experiment 1’s findings. The questionnaire scores showed that fear and surprise were reliably discriminated. Therefore, the similar modulation of the GOE by these two emotions is not due to the confusion of their emotional valence. The present study thus demonstrated an increased GOE for fearful and surprised compared to happy and angry emotions in low anxious participants.

The effect of emotion on straight-gaze trials was only due to longer RTs in the neutral condition: When neutral trials were removed the effect disappeared, suggesting a similar motion baseline comparison across emotions, as seen in Experiment 1 with the cross-eyed condition. Thus, the emotional differences found for averted-gaze conditions cannot be due to different levels of salience between emotions such as different levels of motion. In contrast, neutral faces do present a different baseline compared to the other emotions, which we attribute to a lower level of movement. Unlike Fox et al. (2007), we did not observe slower RTs to angry faces compared with other emotions for these straight-gaze faces. This could be due to differences in participant samples. Whereas Fox et al. tested participants selected for high levels of trait anxiety, participants in the current study were selected for low anxiety levels. Attentional engagement effects on angry faces with straight gaze are likely to be greatest for individuals with elevated anxiety levels (Fox et al., 2007).

Processing facial emotions relies on holistic and/or configural processing and is disrupted by face inversion in static (Bartlett & Searcy, 1993; McKelvie, 1995; Valentine, 1988) as well as dynamic (Ambadar et al., 2005) displays. In the present study we used the inversion effect to investigate the importance of component information (i.e., enlarged sclera size) on the GOE enhancements for fear and surprise. We reasoned that inversion would hamper the emotional modulations of the GOE if these were driven by the configural, emotional content of faces. That is, an interaction between orientation and emotion should be seen. In contrast, inversion would have no or a minor impact on the GOE if it was driven predominantly by the characteristics of the eye region such as the sclera size, which remained unchanged between upright and inverted faces.

Inverted faces reliably produced a congruency effect, with longer RTs to incongruent than congruent faces, but this effect was overall attenuated for inverted compared to upright faces. This was seen as a Congruency × Orientation interaction in the omnibus ANOVA, and more strikingly as a main effect of orientation for the GOE. This suggests that inversion slightly disrupts gaze orienting, which is consistent with previous studies reporting reduced congruency effects in rotated and inverted faces (Jenkins & Langton, 2003; Kingstone et al., 2000; Langton & Bruce, 1999).

Importantly, however, no three-way interaction between congruency, orientation, and emotion was seen, and the emotion by orientation interaction for the GOE was not significant. Thus, disrupting configural processing of emotional faces overall reduced the GOE but did not impact it differently depending on the emotion. Direct upright-inverted comparisons confirmed an effect of orientation for each emotion separately although not significantly for neutral. This lack of interaction between orientation and emotion suggests that the modulations of the GOE by emotions are strongly driven by facial componential information.

Previous research has suggested that the enhanced GOE to fearful faces may at least in part be carried by the perceptually more salient eye region inherent in fearful faces (e.g., Tipples, 2005, 2006), and the pattern of present results supports this interpretation. However, correlation analyses in the present study demonstrated a significant positive association, albeit modest, between sclera size and GOE, for upright but not inverted faces. That is, the normal upright context of the face was necessary for the sclera size to impact the GOE. This result suggests that more than just the eyes is driving the pattern of results. If the inversion effects were solely driven by the sclera size, which remains identical between upright and inverted faces, then the correlations should be significant regardless of orientation. The fact that correlations were significant only for upright faces suggests that the effect of local feature processing of the eyes was interacting with the emotional valence of the expression.

In summary, the findings of Experiment 2 support a mechanism that involves an interaction between local feature processing of the eye region and configural/holistic processing of facial emotion content, both of which are disrupted by inversion. To further investigate the role of the eye region in driving the modulations of GOE, Experiment 3 was designed to replicate Experiment 2 using only the eye region taken from the emotional face stimuli. Based on the results of Experiment 2, we predicted that the GOE would not show the enhancement for fearful and surprised expressions seen in upright faces (Experiments 1–2) due to the absence of face context providing configural/holistic emotional processing cues.

EXPERIMENT 3

Experiment 3 investigated whether differences in the magnitude of the GOE are observed when only the eye regions of faces with different emotional expressions are presented. Eyes alone produce a gaze cueing effect (Schwaninger, Lobmaier, & Fischer, 2005) and can communicate emotional cues (Baron-Cohen, Wheelwright, Hill, Raste, & Plumb, 2001). If the perceptual properties of the eyes are the main driving force behind the GOE, we would expect to see greater GOE to stimuli with large sclera size, i.e., with eye regions taken from fearful and surprised emotional faces compared with eyes taken from other emotional expressions. However, presenting the eyes in the absence of the entire face provides less emotional information. Based on the results of Experiment 2, which suggest that emotional content and sclera size interact, we expected that the GOE to upright eyes would not show the enhancement for fearful and surprised expressions seen in upright faces, due to the lack of face context. To further control for low-level features an inverted condition was also included. Jenkins and Langton (2003) demonstrated that inversion of the eye region disrupts gaze sensitivity regardless of the orientation of face context (see also Senju & Hasegawa, 2006; Vecera & Johnson, 1995). We thus expected an overall reduction of the GOE with inverted eyes. The correlation between the size of the sclera and the GOE for each condition was calculated, with strong positive correlations indicating a central role of basic component information in driving enhanced GOE.

Method

Participants

Thirty-two participants with normal or corrected-to-normal vision (22 females, 19–25 years, M=20.5, SD=1.5, 1 left-handed) were recruited and tested at the University of Waterloo. They were paid $10/hour or received course credit for their participation. Both state (M=26, SD=5.1) and trait (M=27.2, SD=4) anxiety scores were under 35 for each participant (STICSA; Gros et al., 2007). The study was approved by the University of Waterloo Research Ethics Board and all participants gave informed written consent.

Stimuli

Stimuli consisted of a rectangular area around the eye region taken from the faces used in Experiment 2. Emotional expressions thus consisted of a dynamic transition from neutral to emotion which involved changes in the outline of the eyes and the eyebrows (e.g., eye widening and eyebrows rising for fearful and surprised expression). Dynamic gaze shifts were presented in exactly the same fashion as in Experiment 2.

Design, procedure, and data analysis

These exactly replicated Experiment 2, except for the questionnaire, which presented the eye stimuli rather than the faces, to be labelled according to their emotion. The percentage of excluded trials (errors, anticipations, and time-outs) ranged from 4.22 to 5.33 for congruent trials, and from 10.53 to 12.61 for incongruent trials.

Results

Averted-gaze trials

Reaction times for congruent trials were shorter than for incongruent trials, as reflected in the main effect of congruency, F(1, 31) = 136.1, p < .0001. The main effect of orientation was not significant (p > .7; Figure 4a and 4c) and there were no significant interactions involving orientation. Although there was no significant main effect of emotion (p > .9), there was an emotion by congruency interaction, F(4, 124) = 6.3, p < .0001. Thus, congruent and incongruent conditions were reanalysed separately. The main effect of emotion was significant for congruent trials, F(4, 124) = 3.4, p < .05, but no paired comparisons were significant. The main effect of emotion for incongruent trials was also significant, F(4, 124) = 2.92, p < .05, with slightly shorter RTs for anger (anger–neutral significant, p < .05).

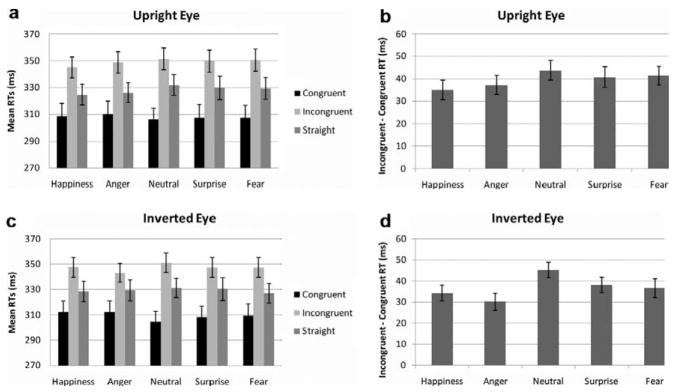

Figure 4.

Experiment 3 (N=32), error bars represent SE. (a) Mean RTs for upright eyes for each gaze condition and emotion; (b) mean gaze-orienting effects for each emotion for upright eyes; (c) mean RTs for inverted eyes for each gaze condition and emotion; (d) mean gaze-orienting effects for each emotion for inverted eyes.

The GOE was analysed separately using a 5 (Emotion)×2 (Orientation) repeated measures ANOVA. A main effect of emotion, F(4, 124) = 6.3, p < .001, was found due to overall larger GOE for neutral than the other emotions (neutral–anger comparison at p < .005 and neutral–happiness p < .0001) (Figure 4b and 4d). When neutral expressions were taken out of the analysis, the main effect of emotion was still significant (p < .05), but paired comparisons were not. No other effects were found.

Straight-gaze trials

No main effects or interactions were found.

Correlation analyses

In contrast to Experiment 2, no significant correlations were found between mean pixel size of the white sclera and the mean GOE for either upright or inverted conditions (p > .2).

Questionnaire

The analysis of the questionnaire scores revealed a main effect of emotion, F(4, 124) = 21.2, p < .0001. Overall, categorization of all emotional eyes was not accurate, with low scores obtained for happiness (M=2.6, SE=0.3), fear (M=2.8, SE=0.3), and surprise (M=3.2, SE=0.3), average scores for anger (M=4.3, SE=0.3), and the best scores obtained for neutral (M=6.1, SE=0.3). Thus, neutral eyes were the best categorized of all emotions (all paired comparisons at p < .001). Scores for anger were better than both fear and happiness (p < .005 for each comparison). Fear, happiness, and surprise did not differ significantly.

Discussion

Experiment 3 investigated the effects of presenting isolated eye regions from different expressions on the GOE. As expected a robust GOE was observed to eyes-only stimuli (Schwaninger et al., 2005) in both upright and inverted conditions, confirming that eyes alone are sufficient to orient attention. However, the pattern of enhanced GOE to fear and surprise compared to happiness and anger found in Experiments 1–2 was no longer observed. The small emotional modulation found was due to neutral eyes and vanished when neutral was taken out of the analysis. The findings suggest that despite the eye region conveying emotional cues (Baron-Cohen et al., 2001), an isolated eye region taken from emotional facial expressions is not sufficient to elicit modulation of gaze cueing by emotion. The results of the questionnaire showed that participants did not categorize the emotions well based on isolated eye regions, and this may be one reason for the present results. The current findings do not support the idea that the GOE modulations by emotions are driven predominantly by basic low-level characteristics of the eye regions. This is further supported by an absence of correlation between the sclera size and the GOE. In contrast, the present findings, along with the questionnaire results, confirm the hypothesis of a more complex mechanism driving the emotional modulation of the GOE as suggested by Experiment 2’s results. The results also indicate that face context, in addition to eyes and eyebrows, plays a significant part in communicating emotional valence and thus in modulating gaze cueing effects. Finally, the lack of inversion effect on the GOE was surprising given that inversion disrupts the GOE in faces (Jenkins & Langton, 2003; Kingstone et al., 2000; Langton & Bruce, 1999) and also disrupts the perception of gaze regardless of face orientation (Jenkins & Langton, 2003; Senju & Hasegawa, 2006; Vecera & Johnson, 1995). However, inversion disrupts configural/holistic information, which may not have been salient enough from the sole eye regions.

Overall, in line with the conclusions of Experiment 2, the findings of Experiment 3 do not support the view that basic component information is significant in driving the enhanced GOE demonstrated for fearful and surprised whole faces. These findings and their implications are discussed further next.

GENERAL DISCUSSION

A critical component of social cognition is the ability to interpret the intentions and feelings of other people with whom we interact. Social cues such as facial emotional expressions, body posture, head direction, and especially eye gaze direction (e.g., see Itier & Batty, 2009) contribute significantly to our ability to interact socially. Of specific recent interest is the interaction between eye gaze and facial expression, and research has produced somewhat inconclusive findings. We conducted three experiments to determine the extent to which processing of eye-gaze cues (e.g., Driver et al., 1999) may interact with rapid and automatic processing of threat-related information (e.g., Öhman & Mineka, 2001) and unexpected events. We used dynamic facial expressions combined with dynamic gaze-shifts in a modified Posner attentional cueing paradigm. The experiments compared the effects of different facial expressions on the gaze-congruency effect, in the context of upright and inverted faces, and when only eye regions were used as a cueing stimulus. We predicted that interfering with the processing of emotional content (by inversion, or presenting only eyes) would attenuate enhanced GOE to certain emotions. If the enhanced GOE in the context of certain expressions is carried predominantly by the extent of exposed sclera, a low-level stimulus feature, then stimulus manipulations such as inversion and presentation of eye regions alone should have no effect on the GOE for those emotional expressions.

Across all three studies we demonstrated the classic gaze-cueing effect (Driver et al., 1999; Friesen & Kingstone, 1998) such that response times to gazed-at targets were shorter than response times to targets at the location opposite to the direction of eye gaze. In other words, consistent with previous research a GOE was present across all experimental manipulations including different emotional facial expressions (e.g., Fox et al., 2007), inversion of faces, and presentation of only the eye region (e.g., Schwaninger et al., 2005). Furthermore, compared to neutral faces, emotional facial expressions modulated RTs to congruent and incongruent targets across all three experiments. Of specific interest in the present study was to what extent different facial expressions modulate the GOE and what role low-level features of the eye region may have in driving this phenomenon.

Various experiments have shown enhanced GOE to fearful faces compared with faces expressing happiness, anger, or neutral expressions (e.g., Fox et al., 2007; Putman et al., 2006). The same effect was observed in both Experiments 1 and 2. There are two complementary explanations for the mechanism underlying this effect. One draws upon the threat value of fearful expressions potentiating spatial attention and thus facilitating responses at the gazed-at location while slowing responses to the opposite location (e.g., Fox et al., 2007; Putman et al., 2006). The alternative but complementary mechanism relates to the characteristic eye widening inherent to fearful expressions, which increases the salience of averted eye gaze (see, e.g., Tipples, 2006, 2007). In practice a combination of both mechanisms may be at work as processing of emotional expressions relies on specific relational information in faces such as eye widening, raised eyebrows, and shape of the mouth. We introduced three experimental manipulations to examine the importance of basic low-level features in driving the GOE modulation to different emotions: We added surprise emotion to our design, we used the inversion manipulation, and we presented eyes alone. Surprised facial expressions share characteristic eye widening with fearful expressions, but are not inherently threat related. Nevertheless surprised expressions have not been included as a comparison expression in previous studies. Increased GOE to surprised compared with happy and angry expressions was observed in both Experiments 1 and 2, and could indicate that eye widening rather than emotional valence is contributing to the GOE. However, this simple conclusion is ruled out based on the effects of inverting face stimuli (Experiment 2) and presenting only the eye region from different emotional expressions (Experiment 3).

Inverting face stimuli is known to disrupt holistic or configural processing of faces, and to interfere with emotional processing. It was thus argued that the enhanced GOE to fearful and surprised faces should be retained in inverted stimuli if the GOE relies mostly on basic low-level information of the eyes rather than the emotional expression communicated by the face. Although inversion of face stimuli resulted in a general attenuation of the GOE rather than modulating differently certain emotions, eye size correlated with GOE only for upright faces. This suggests enhanced GOE to upright fearful and surprised faces is not solely driven by low-level information derived from the extent of exposed sclera but is also conveyed by the emotional content provided from the rest of the (upright) face. Most convincingly, the presentation of eye regions alone did not result in a replication of the enhanced GOE for fear and surprise in Experiment 3, despite the eye region alone communicating at least some emotional expression (e.g., Baron-Cohen et al., 2001), and retaining the salience of low-level features such as eye widening. Based on the findings of the three experiments we thus conclude that the enhanced GOE to fearful and surprised faces is driven by a mechanism which relies on the combination of holistic/configural processing of emotional information, and local feature processing of the eye region. This view is consistent with evolutionary theorizing (Öhman & Mineka, 2001), which suggests that there is an efficient and automatic process for responding to threat, and in line with evidence from previous studies of high anxious participants, which suggest that emotional valence of fearful faces drives the enhanced GOE (Fox et al., 2007; Holmes et al., 2006; Putman et al., 2006; Tipples, 2006). 
A novel finding of the present study is that the enhanced GOE to fearful faces is not specific to individuals with elevated trait anxiety, as participants in all experiments were selected for low levels of trait anxiety. Another novel finding is the increased GOE to surprised faces in low anxious participants. It is possible that the neural system responds similarly to threat (fear) and heightened vigilance/unexpectedness (surprise).

Previous studies have suggested that facial emotion processing and gaze direction analysis are tightly integrated. For example, the identification of approach-related emotions (such as happiness or anger) is faster when these faces are directing their gaze at the observer, rather than averting their gaze, and similarly identification of avoidance-related emotions (such as fear) is faster in the context of averted compared with direct gaze (Adams & Kleck, 2003), but see Bindemann, Burton, and Langton (2008) for an alternative view. Despite suggestions that emotion and gaze direction processing are handled by independent modules (see, e.g., Hietanen & Leppänen, 2003), the accumulating evidence is now in favour of an integration based on gaze× emotion interactions. However, evidence for the temporal sequencing of this information is somewhat uncertain. Recently, Graham et al. (2010) demonstrated an enhanced GOE to fearful faces only when the SOA used was longer than 300 ms. At shorter SOAs they do not report any interactions between gaze cue and emotional expression. In line with their findings there is electrophysiological evidence suggesting that gaze and expression information are not fully integrated neurally until after 300 ms poststimulus onset (e.g., Fichtenholz, Hopfinger, Graham, Detwiler, & LaBar, 2007). Fichtenholz et al. (2007) used a gaze-cueing paradigm to characterize the ERP indices of gaze-directed orienting in the context of fearful and happy facial expressions. Although an interaction between facial expression and gaze cueing was evident only for the P3 component (indicating an interaction only after 300 ms), it is important to note that this study used emotionally salient target objects (either negative or positive) and the ERPs were time-locked to target presentation. 
Several studies have reported an interaction between fearful faces and gaze direction with SOAs of 300 ms or more and using neutral targets such as letters or an asterisk (e.g., Fox et al., 2007; Graham et al., 2010; Holmes et al., 2006; Mathews et al., 2003; Tipples, 2006), and some have reported this effect even at shorter SOAs (e.g., Putman et al., 2006), as in the present study. This would suggest that some level of integration is taking place early, especially as the effect seems to be robust for fearful expressions. Importantly, however, studies using SOAs of less than 300 ms have used simpler target detection tasks, whereas those with longer SOAs have used more demanding target discrimination tasks. In target detection tasks the target location is often confounded with the hand used to make a response (i.e., left-hand button-press to left-lateralized targets). It is thus important to note that observed gaze direction does induce spatial “Simon” effects (Simon, 1969; Zorzi, Mapelli, Rusconi, & Umilta, 2003); in other words, responses are facilitated for the hand corresponding to the cued location. It is thus possible that the interaction between facial expression and gaze direction observed at short SOAs (less than 300 ms) represents a potentiation of the “Simon” effect rather than a full integration of gaze direction and emotion processing involving spatial attention. Emotion-specific responses to fearful faces have been recorded as early as 100 ms (e.g., Batty & Taylor, 2003; Pourtois, Grandjean, Sander, & Vuilleumier, 2004), and gaze-specific responses have not been recorded before about 180 ms (e.g., Itier, Alain, Kovacevic, & McIntosh, 2007; Taylor, George, & Ducorps, 2001). Thus, the alerting effect of the fearful expression may become active much sooner than the processing of gaze direction such that a potentiation of response is initiated in advance of the spatial effects elicited by averted-gaze cues. 
Regardless of potential “Simon” effects in the present study, our findings emphasize the valence of fearful and surprised emotional expressions as driving the enhanced GOE, although low-level stimulus features do seem to play a role but only in the context of the (upright) facial expressions. Ideally future studies should employ a design that involves either a target discrimination task, or a target detection task in which the target side is not confounded with the response hand.

One strength of the present study is the use of dynamic emotional face stimuli. Dynamic changes in facial emotions are considered more realistic stimuli and elicit greater emotional valence than static stimuli (Sato & Yoshikawa, 2004; Sato et al., 2004). One factor that might explain the mixed findings of previous studies of gaze–emotion interactions is the use of static or semi-dynamic face stimuli. In the current study both the emotional expression and eye gaze changed simultaneously. In some previous studies (Hietanen & Leppänen, 2003; Mathews et al., 2003), gaze was shifted dynamically in the context of static facial expression, which arguably introduces a processing advantage for the gaze shift over the expression. In other studies both gaze and expression were presented statically (e.g., Holmes et al., 2006). This variation in stimulus presentation may account at least in part for the equivocal findings regarding interactions between emotional expression and eye gaze. As outlined in Graham et al. (2010), using dynamic gaze and emotion transitions avoids the bias towards a dynamic or implied gaze shift. However, the simultaneous onset of gaze and emotion shift does confound the salience of the eyes (pupil/sclera contrast) with the emotional expression. It is thus possible that in the current design and that of Putman et al. (2006) the salience of the gaze cues was increased in the context of fearful and surprised faces as the eyes begin to widen before the gaze shift is complete. Ideally, therefore, the stimuli should be designed such that gaze shift is completed before the onset of emotional expression change (Graham et al., 2010). However, our finding that the enhanced GOE for fearful and surprised faces is abolished when only the eye region is presented strengthens the suggestion that the effect is not carried solely by low-level features such as the extent of exposed sclera. 
Thus, our conclusion that both the expression of the face and component information from the eyes are important in determining the enhanced GOE to fearful and surprised faces is still valid.

A significant drawback of using dynamic changes of facial expression is the unequal amount of biological motion presented in emotional face stimuli compared with neutral face stimuli. In dynamic emotional stimuli several parts of the face change due to motion. In neutral stimuli, however, none of the facial features changed position or extent, introducing a processing advantage for gaze in neutral compared with emotional stimuli. This confound is potentially problematic for all three experiments in the present study. Even in Experiment 3, where only the eye region was presented as the cueing stimulus, dynamic emotional eye stimuli displayed significantly more movement (by the eyebrows and the outline of the eyes, for instance) than neutral dynamic eye stimuli. It can thus be argued that biological motion per se (rather than emotional expression) is the cause of generally shorter RTs in the context of emotional face/eye stimuli compared with neutral face/eye stimuli. Although a neutral stimulus involving, for instance, opening and closing of the mouth might improve the comparability of the emotional and neutral full face stimuli, a comparable solution for manipulating the neutral stimuli consisting of only the eye region is not feasible. Nevertheless, consistent with Graham et al.’s (2010) Experiment 4, the inversion of face stimuli significantly affected the impact of emotional expression on RTs. If biological motion were solely responsible for the differences between neutral and emotional stimuli, then inversion, which leaves the amount of motion unchanged, would not affect RTs. Thus, although our neutral face stimuli are not ideal control comparisons for emotional face stimuli, we believe that our conclusions regarding the relative impact of low-level stimulus features and emotional valence of expressive faces on the GOE are valid.

In summary, our study demonstrated that, although fearful and surprised facial expressions share characteristic low-level stimulus features such as eye widening, which may increase the salience of gaze direction, the mechanism driving enhanced GOEs to these expressions relative to other emotions such as happiness or anger relies on a combination of processes involving emotional expression and component features of the eye region such as sclera size. Importantly, manipulations that attenuate the processing of the emotional valence of faces, such as inversion and the presentation of isolated eye regions, abolished the enhanced GOE to fearful and surprised upright faces. The findings contribute to the growing body of research addressing the mechanisms of face and gaze processing involved in humans’ refined social cognition. Specifically, the study provides evidence in favour of the theory that rapid and evolutionarily beneficial processing of fearful and surprised faces, rather than basic low-level stimulus features, potentiates orienting to another person’s averted eye gaze.

Acknowledgments

This study was supported by funding from CIHR (MOP-87393, 89822) and the CRC programme to RJI and CIHR (MOP-81161) to MJT. We would also like to thank two anonymous reviewers for their helpful comments on the previous version of this manuscript.

Footnotes

Development of the MacBrain Face Stimulus Set was overseen by Nim Tottenham and supported by the John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development. Please contact Nim Tottenham at tott0006@tc.umn.edu for more information concerning the stimulus set.

References

- Adams RB, Jr, Kleck RE. Perceived gaze direction and the processing of facial displays of emotion. Psychological Science. 2003;14(6):644–647. doi: 10.1046/j.0956-7976.2003.psci_1479.x.

- Ambadar Z, Schooler JW, Cohn JF. Deciphering the enigmatic face: The importance of facial dynamics in interpreting subtle facial expressions. Psychological Science. 2005;16(5):403–410. doi: 10.1111/j.0956-7976.2005.01548.x.

- Ando S. Luminance-induced shift in the apparent direction of gaze. Perception. 2002;31:657–674. doi: 10.1068/p3332.

- Baron-Cohen S. Mindblindness: An essay on autism and theory-of-mind. Cambridge, MA: MIT Press; 1995.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the mind in the eyes” test revised version: A study with normal adults, and adults with Asperger syndrome or high-functioning autism. Journal of Child Psychology and Psychiatry. 2001;42(2):241–251.

- Bartlett JC, Searcy J. Inversion and configuration of faces. Cognitive Psychology. 1993;25(3):281–316. doi: 10.1006/cogp.1993.1007.

- Batty M, Taylor MJ. Early processing of the six basic facial emotional expressions. Cognitive Brain Research. 2003;17:613–620. doi: 10.1016/s0926-6410(03)00174-5.

- Bindemann M, Burton AM, Langton SRH. How do eye gaze and facial expression interact? Visual Cognition. 2008;16(6):708–733.

- Bradley BP, Mogg K, Millar NH. Covert and overt orienting of attention to emotional faces in anxiety. Cognition and Emotion. 2000;14(6):789–808.

- Driver J, Davis G, Ricciardelli P, Kidd P, Maxwell E, Baron-Cohen S. Gaze perception triggers reflexive visuospatial orienting. Visual Cognition. 1999;6(5):509–540.

- Eastwood JD, Smilek D, Merikle PM. Differential attentional guidance by unattended faces expressing positive and negative emotions. Perception and Psychophysics. 2001;63:1004–1013. doi: 10.3758/bf03194519.

- Farroni T, Csibra G, Simion F, Johnson MH. Eye contact detection in humans from birth. Proceedings of the National Academy of Sciences USA. 2002;99(14):9602–9605. doi: 10.1073/pnas.152159999.

- Fichtenholz HM, Hopfinger JB, Graham R, Detwiler JM, LaBar KS. Happy and fearful emotion in cues and targets modulate event-related potential indices of gaze-directed attentional orienting. Social Cognitive and Affective Neuroscience. 2007;2(4):323–333. doi: 10.1093/scan/nsm026.

- Fox E. Processing emotional facial expressions: The role of anxiety and awareness. Cognitive Affective and Behavioural Neuroscience. 2002;2:52–63. doi: 10.3758/cabn.2.1.52.

- Fox E, Damjanovic L. The eyes are sufficient to produce a threat superiority effect. Emotion. 2006;6(3):534–539. doi: 10.1037/1528-3542.6.3.534.

- Fox E, Lester V, Russo R, Bowles RJ, Pichler A, Dutton K. Facial expressions of emotion: Are angry faces detected more efficiently? Cognition and Emotion. 2000;14(1):61–92. doi: 10.1080/026999300378996.

- Fox E, Mathews A, Calder AJ, Yiend J. Anxiety and sensitivity to gaze direction in emotionally expressive faces. Emotion. 2007;7(3):478–486. doi: 10.1037/1528-3542.7.3.478.

- Friesen CK, Kingstone A. The eyes have it! reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin and Review. 1998;5:490–495.

- Friesen CK, Ristic J, Kingstone A. Attentional effects of counterpredictive gaze and arrow cues. Journal of Experimental Psychology: Human Perception and Performance. 2004;30(2):319–329. doi: 10.1037/0096-1523.30.2.319.

- Frischen A, Bayliss AP, Tipper SP. Gaze cueing of attention: Visual attention, social cognition, and individual differences. Psychological Bulletin. 2007;133(4):694–724. doi: 10.1037/0033-2909.133.4.694.

- Graham R, Friesen CK, Fichtenholz HM, LaBar KS. Modulation of reflexive orienting to gaze direction by facial expressions. Visual Cognition. 2010;18(3):331–368.

- Gros DF, Antony MM, Simms LJ, McCabe RE. Psychometric properties of the State-Trait Inventory for Cognitive and Somatic Anxiety (STICSA): Comparison to the State-Trait Anxiety Inventory (STAI). Psychological Assessment. 2007;19(4):369–381. doi: 10.1037/1040-3590.19.4.369.

- Hietanen JK, Leppänen JM. Does facial expression affect attention orienting by gaze direction cues? Journal of Experimental Psychology: Human Perception and Performance. 2003;29(6):1228–1243. doi: 10.1037/0096-1523.29.6.1228.

- Holmes A, Richards A, Green S. Anxiety and sensitivity to eye gaze in emotional faces. Brain and Cognition. 2006;60(3):282–294. doi: 10.1016/j.bandc.2005.05.002.

- Hood BM, Willen JD, Driver J. Adult’s eyes trigger shifts of visual attention in human infants. Psychological Science. 1998;9(2):131–134.

- Itier RJ, Alain C, Kovacevic N, McIntosh AR. Explicit versus implicit gaze processing assessed by ERPs. Brain Research. 2007;1177:79–89. doi: 10.1016/j.brainres.2007.07.094.

- Itier RJ, Batty M. Neural bases of eye and gaze processing: The core of social cognition. Neuroscience and Biobehavioral Reviews. 2009;33(6):843–863. doi: 10.1016/j.neubiorev.2009.02.004.

- Jenkins J, Langton SRH. Configural processing in the perception of eye-gaze direction. Perception. 2003;32:1181–1188. doi: 10.1068/p3398.

- Kingstone A, Friesen CK, Gazzaniga MS. Reflexive joint attention depends on lateralized cortical connections. Psychological Science. 2000;11:159–166. doi: 10.1111/1467-9280.00232.

- Langton SRH, Bruce V. Reflexive visual orienting in response to the social attention of others. Visual Cognition. 1999;6:541–567.

- Langton SR, Watt RJ, Bruce V. Do the eyes have it? Cues to the direction of social attention. Trends in Cognitive Sciences. 2000;4(2):50–59. doi: 10.1016/s1364-6613(99)01436-9.

- Mathews A, Fox E, Yiend J, Calder AJ. The face of fear: Effects of eye gaze and emotion on visual attention. Visual Cognition. 2003;10(7):823–835. doi: 10.1080/13506280344000095.

- McKelvie SJ. Emotional expression in upside-down faces: Evidence for configurational and componential processing. British Journal of Social Psychology. 1995;34(3):325–334. doi: 10.1111/j.2044-8309.1995.tb01067.x.

- Mogg K, Bradley BP. Orienting of attention to threatening facial expressions presented under conditions of restricted awareness. Cognition and Emotion. 1999;13:713–740.

- Öhman A, Lundqvist D, Esteves F. The face in the crowd revisited: A threat advantage with schematic stimuli. Journal of Personality and Social Psychology. 2001;80(3):381–396. doi: 10.1037/0022-3514.80.3.381.

- Öhman A, Mineka S. Fears, phobias and preparedness: Toward an evolved module of fear and fear learning. Psychological Review. 2001;108:483–522. doi: 10.1037/0033-295x.108.3.483.

- Posner MI. Orienting of attention. Quarterly Journal of Experimental Psychology. 1980;32:3–25. doi: 10.1080/00335558008248231.

- Pourtois G, Grandjean D, Sander D, Vuilleumier P. Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex. 2004;14:619–633. doi: 10.1093/cercor/bhh023.

- Putman P, Hermans E, van Honk J. Anxiety meets fear in perception of dynamic expressive gaze. Emotion. 2006;6(1):94–102. doi: 10.1037/1528-3542.6.1.94.

- Ricciardelli P, Baylis G, Driver J. The positive and negative of human expertise in gaze perception. Cognition. 2000;77(1):B1–B14. doi: 10.1016/s0010-0277(00)00092-5.

- Sato W, Yoshikawa S. The dynamic aspects of emotional facial expressions. Cognition and Emotion. 2004;18(5):701–710.

- Sato W, Yoshikawa S, Kochiyama T, Matsumura M. The amygdala processes the emotional significance of facial expressions: An fMRI investigation using the interaction between expression and face direction. Neuroimage. 2004;22(2):1006–1013. doi: 10.1016/j.neuroimage.2004.02.030.

- Schwaninger A, Lobmaier JS, Fischer MH. The inversion effect on gaze perception reflects processing of component information. Experimental Brain Research. 2005;167(1):49–55. doi: 10.1007/s00221-005-2367-x.

- Senju A, Hasegawa T. Direct gaze captures visuospatial attention. Visual Cognition. 2005;12:127–144.

- Senju A, Hasegawa T. Do the upright eyes have it? Psychonomic Bulletin and Review. 2006;13:223–228. doi: 10.3758/bf03193834.

- Simon JR. Reactions towards the source of stimulation. Journal of Experimental Psychology. 1969;81:174–176. doi: 10.1037/h0027448.

- Sinha P. Last but not least: Here’s looking at you, kid. Perception. 2000;29(8):1005–1008. doi: 10.1068/p2908no.

- Spielberger CD, Gorsuch RL, Lushene R, Vagg PR, Jacobs GA. Manual for the state-trait anxiety inventory (Form Y). Palo Alto, CA: Consulting Psychologists Press; 1983.

- Taylor MJ, George N, Ducorps A. Magnetoencephalographic evidence of early processing of direction of gaze in humans. Neuroscience Letters. 2001;316(3):173–177. doi: 10.1016/s0304-3940(01)02378-3.

- Tipples J. Orienting to eye gaze and face processing. Journal of Experimental Psychology: Human Perception and Performance. 2005;31(5):843–856. doi: 10.1037/0096-1523.31.5.843.

- Tipples J. Fear and fearfulness potentiate automatic orienting to eye gaze. Cognition and Emotion. 2006;20(2):309–320.

- Tomkins S, McCarter R. What and where are the primary affects? Some evidence for a theory. Perceptual and Motor Skills. 1964;18:119–158. doi: 10.2466/pms.1964.18.1.119.

- Tottenham N, Tanaka J, Leon AC, McCarry T, Nurse M, Hare TA, et al. The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.