Abstract

Objective To investigate the effectiveness of open peer review as a mechanism to improve the reporting of randomised trials published in biomedical journals.

Design Retrospective before and after study.

Setting BioMed Central series medical journals.

Sample 93 primary reports of randomised trials published in BMC-series medical journals in 2012.

Main outcome measures Changes to the reporting of methodological aspects of randomised trials in manuscripts after peer review, based on the CONSORT checklist, corresponding peer reviewer reports, the type of changes requested, and the extent to which authors adhered to these requests.

Results Of the 93 trial reports, 38% (n=35) did not describe the method of random sequence generation, 54% (n=50) concealment of allocation sequence, 50% (n=46) whether the study was blinded, 34% (n=32) the sample size calculation, 35% (n=33) specification of primary and secondary outcomes, 55% (n=51) results for the primary outcome, and 90% (n=84) details of the trial protocol. The number of changes between manuscript versions was relatively small; most involved adding new information or altering existing information. Most changes requested by peer reviewers had a positive impact on the reporting of the final manuscript—for example, adding or clarifying randomisation and blinding (n=27), sample size (n=15), primary and secondary outcomes (n=16), results for primary or secondary outcomes (n=14), and toning down conclusions to reflect the results (n=27). Some changes requested by peer reviewers, however, had a negative impact, such as adding additional unplanned analyses (n=15).

Conclusion Peer reviewers fail to detect important deficiencies in reporting of the methods and results of randomised trials. The number of changes requested by peer reviewers was relatively small. Although most had a positive impact, some were inappropriate and could have a negative impact on reporting in the final publication.

Introduction

The International Committee of Medical Journal Editors defines peer review as the “critical assessment of manuscripts submitted to journals by experts who are not part of the editorial staff.”1 The practice of peer reviewing manuscripts has been around for more than 200 years, and today editorial peer review is used almost universally by scientific journals as a tool to assess and improve the quality of submissions.2 One of the main aims of peer review is to improve the quality and transparency of a publication by checking that the reported research has been carried out correctly and that the results presented have been interpreted appropriately.3 Clinical decisions are then made on the basis of this research evidence, so if it is misleading because it is incomplete, inaccurate, or poorly reported, this has a direct impact on patient care.

Traditionally, the process of peer review has been carried out “blinded” so that the identity of the peer reviewers is concealed from the authors and the comments made by the peer reviewers are never available in the public domain. Some journals have since switched to a process of open peer review,4 whereby the identity of the peer reviewers is disclosed and, in some instances, the peer reviewer’s comments are included alongside the published article. However, despite several studies assessing the effectiveness of the peer review process, and its wide acceptance by the scientific community, little is known about its impact on the quality of reporting of the published research.5 Given the wealth of evidence on the poor and inadequate reporting in published research activities, particularly the reporting of randomised trials,6 peer reviewers may be failing to detect important deficiencies in the scientific literature.

We investigated the effectiveness of open peer review as a mechanism to improve the reporting of randomised trials published in biomedical journals. In particular, we examined the extent of changes made to the reporting of methodological aspects of manuscripts of randomised trials after peer review, the type of changes requested by peer reviewers, and the extent to which authors adhere to these requests.

Methods

Sample selection

We searched the US National Library of Medicine’s PubMed database to identify all primary reports of randomised trials published in the BioMed Central series medical journals (see supplementary appendix 1 for search strategy) in 2012 and indexed with the publication type “Randomized Controlled Trial” (search as of 28 May 2013). One reviewer (SH) screened the titles and abstracts of all retrieved reports to exclude any obvious reports of non-trials. A copy of the full article was then obtained for all non-excluded reports, and two reviewers assessed each article for eligibility. We chose to use the BMC-series medical journals as they operate a system of open peer review whereby all submitted versions of a manuscript are published along with the corresponding peer reviewers’ comments and authors’ responses.7 Manuscripts submitted to BMC-series medical journals are typically handled by external academic editors, in house editors, or a combination of both at different stages in the manuscript submission process. Comments made by the external academic editors are not usually published alongside the submitted manuscript. Journals included in the BMC-series medical journals also endorse the CONSORT (Consolidated Standards of Reporting Trials) statement.8

Eligibility criteria

We included all primary reports (that is, those reporting the main study outcome) of randomised trials, defined as a prospective study assessing healthcare interventions in human participants who were randomly allocated to study groups. We included all studies of parallel group, crossover, cluster, factorial, and split body design. We excluded protocols of randomised trials, secondary analyses, systematic reviews, methodological studies, pilot studies, and early phase trials (for example, phase 1).

Data extraction

Eight reviewers working in pairs extracted data. Each pair independently extracted data from eligible reports; any differences between reviewers were resolved by discussion, with the involvement of an arbitrator if necessary. To ensure consistency between reviewers we first piloted the data extraction form. We discussed any disparities in the interpretation and modified the data extraction form accordingly. By accessing the prepublication history from each report (using the URL cited at the end of each published article) we recorded the number of submitted versions of the manuscript and the date of the original submission, final submission, and published article. For each eligible report we used the “compare documents” function in PDF Converter (Enterprise 7) to determine differences in reporting between the original submitted version of the manuscript and the final submitted version.

For each trial report we first examined whether specific items related to the methodological aspects of randomised trials were reported, based on items in the CONSORT checklist.8 On average this step took between 15 and 20 minutes per trial report. We then examined any changes in reporting of these items between the original and final version of the manuscript. We classified changes as being items added (item new to final version, not in original version of manuscript), subtracted (item removed from final version, was in original version of manuscript), or altered (item in both original and final version of manuscript, but wording changed). By accessing the prepublication history from each report we then assessed each of the peer reviewers’ comments about reporting of methodological items and how authors responded to these requests for each round of the peer review process. In particular we examined comments relating to the reporting of trial design (randomisation and blinding), sample size, details of primary and secondary outcomes, requests for additional analyses, and the trial conclusion. We classified the nature of the comments as having either a positive impact on reporting (comment by peer reviewer judged to have beneficial effect on reporting) or negative impact (comment by peer reviewer judged to have harmful effect on reporting). We were unable to assess any additional comments made by the academic editors as these reports were typically not published alongside the peer reviewers’ comments.
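The classification of changes described above can be illustrated with a minimal Python sketch. It is not the authors' tooling (the comparison was done by paired reviewers reading the manuscripts); the function name `classify_item_change` and its boolean inputs are hypothetical, and the category labels follow the column headings of table 3.

```python
def classify_item_change(in_original: bool, in_final: bool,
                         wording_changed: bool = False) -> str:
    """Classify one checklist item across two manuscript versions.

    Hypothetical helper: the inputs are a reviewer's judgments about whether
    the item is reported in the original and final submitted versions, and
    whether its wording changed between them.
    """
    if in_original and in_final:
        # Item present in both versions: either unchanged or altered.
        return "altered" if wording_changed else "reported (no change)"
    if in_final:
        # Item new to the final version.
        return "added"
    if in_original:
        # Item removed from the final version.
        return "subtracted"
    # Item absent from both versions.
    return "not reported (no change)"


# Example: a sample size calculation missing at submission, present at acceptance.
print(classify_item_change(in_original=False, in_final=True))
```

Keeping the decision as a pure function of reviewer judgments makes it easy to apply the same rule to every item and to check agreement between paired reviewers.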

Data analysis

We summarised the characteristics of the studies and details of peer review. The primary analysis focused on the nature and extent of changes made to manuscripts after peer review, in relation to the reporting of methodological aspects of randomised trials, measured as the number and type of items added, subtracted, or altered in the final submitted version of the manuscript. We also carried out a descriptive analysis of the type of methodological changes requested in the peer reviewers’ comments and the extent to which authors responded to these requests in their manuscript revision.
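As an illustration of the descriptive summary, the sketch below tallies per-item classifications into counts and rounded percentages of the 93 manuscripts. The function name `summarise_item` is hypothetical; the example input reproduces the counts reported for sequence generation in table 3, and rounding to whole percentages is an assumption about how the published figures were derived.

```python
from collections import Counter


def summarise_item(classifications, total):
    """Return {category: (count, rounded % of total)} for one checklist item."""
    counts = Counter(classifications)
    return {cat: (k, round(100 * k / total)) for cat, k in counts.items()}


# Counts for "sequence generation" as reported in table 3 (n=93).
sequence_generation = (
    ["reported (no change)"] * 44
    + ["not reported (no change)"] * 35
    + ["added"] * 11
    + ["altered"] * 3
)

summary = summarise_item(sequence_generation, total=93)
for category, (count, pct) in summary.items():
    print(f"{category}: {count} ({pct})")
```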

Results

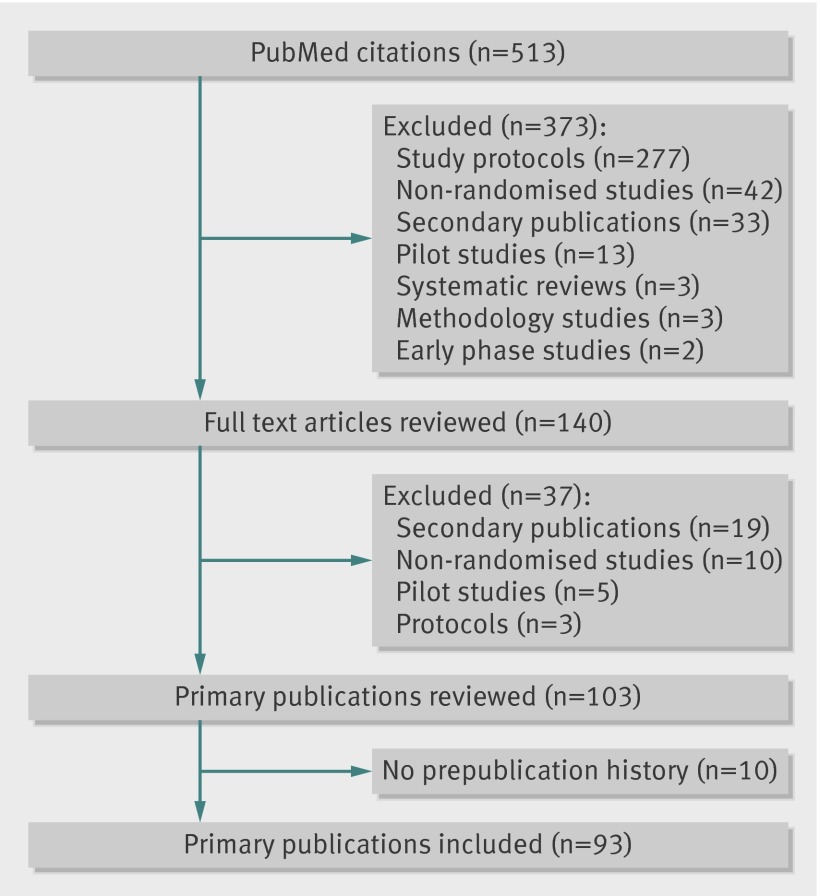

The PubMed publication type search term “Randomized Controlled Trial” identified 513 possible reports of such trials published in the BMC-series medical journals in the specified period. After screening the titles and abstracts of all retrieved citations, we reviewed 140 full text articles, resulting in 103 primary reports of randomised trials (figure); we excluded 10 as no prepublication history was available electronically (for technical reasons) at the time of performing this study. This resulted in 93 primary reports of randomised trials (see supplementary appendix 2 for list of included BMC-series journals, and appendix 3 for list of included trials) where all submitted versions of the manuscript and the corresponding peer review comments and author responses were available online from the respective BMC-series medical journal websites.

Identification of randomised trials published in BMC-series medical journals from PubMed citations indexed from January to December 2012

Characteristics of primary reports of randomised trials

Table 1 provides information on the general characteristics of the primary reports of randomised trials as described in the published article. The majority of reports were single centre (n=47; 51%), parallel group trials (n=75; 81%), with two study groups (n=75; 81%) and a median sample size of 132 participants in each trial (10th to 90th centile, 30 to 527). Around a third (n=35; 38%) of reports assessed counselling or lifestyle interventions, 27% (n=25) drug interventions, and 14% (n=13) surgical interventions. The remaining studies (n=20, 21%) assessed other types of interventions, such as educational strategies or use of equipment.

Table 1.

General characteristics of randomised trials published in BMC-series medical journals in 2012

| Characteristics | No (%) of manuscripts (n=93) |
|---|---|
| Trial design: | |
| Parallel | 75 (81) |
| Crossover | 7 (7.5) |
| Cluster | 9 (9.5) |
| Other | 2 (2) |
| Intervention: | |
| Drug | 25 (27) |
| Surgery or procedure | 13 (14) |
| Counselling or lifestyle | 35 (38) |
| Other* | 20 (21) |
| Study centres: | |
| Single | 47 (51) |
| Multiple | 30 (32) |
| Unclear | 16 (17) |
| No of study groups: | |
| 2 | 75 (81) |
| 3 | 9 (9.5) |
| ≥4 | 9 (9.5) |
| Median No of participants per trial (10th to 90th centile) | 132 (30 to 527) |

*For example, education, equipment.

Peer reviewers and numerical changes to manuscripts

By accessing the prepublication history for each published article we were able to record information on the life cycle of each manuscript (table 2). The median time interval between the original and final submitted version of a manuscript was 148 days (range 29-240) with 36 days (range 4-142) from final submission to online publication. The number of submitted versions of a manuscript varied (median 3, range 2-8), with a median of two peer reviewers (range 1-5) and two rounds of peer review for each manuscript (range 1-4). Overall, the median proportion of words deleted from the original manuscript was 11% (range 1-60%) and for words added was 20% (range 2-88%).
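The proportions of words added and deleted were obtained with the “compare documents” function in PDF Converter. As a rough illustration of what such a comparison measures, the sketch below swaps in Python's standard `difflib` module and computes both percentages from a word-level diff. The function name is hypothetical, and expressing both percentages relative to the word count of the original version is an assumption, since the denominator is not stated in the text.

```python
from difflib import SequenceMatcher


def word_change_percentages(original: str, final: str):
    """Return (% words deleted, % words added), relative to the original length.

    Illustrative stand-in for a document comparison tool, not the software
    used in the study.
    """
    orig_words = original.split()
    final_words = final.split()
    if not orig_words:
        raise ValueError("original text contains no words")

    matcher = SequenceMatcher(a=orig_words, b=final_words, autojunk=False)
    deleted = added = 0
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag in ("delete", "replace"):
            deleted += i2 - i1  # words present only in the original
        if tag in ("insert", "replace"):
            added += j2 - j1    # words present only in the final version

    n = len(orig_words)
    return 100 * deleted / n, 100 * added / n


pct_deleted, pct_added = word_change_percentages(
    "Patients were allocated to two groups",
    "Patients were randomly allocated to two parallel groups",
)
print(f"deleted {pct_deleted:.0f}%, added {pct_added:.0f}%")
```

A word-level diff counts a reworded phrase as both a deletion and an addition, which is one reason the two percentages need not sum to the net change in manuscript length.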

Table 2.

Details of peer review and numerical changes to manuscripts (n=93). Values are medians (ranges), interquartile ranges unless stated otherwise

| Details of peer review | Results |
|---|---|
| Factors per manuscript: | |
| Median No (range) of peer reviewers | 2 (1-5) |
| Median No (range) of peer review rounds | 2 (1-4) |
| Median No (range) of author responses | 2 (1-7) |
| Median No (range) of submitted versions | 3 (2-8) |
| Words added (range) to final submitted version (%) | 20 (2-88), 12-36 |
| Words deleted (range) from final submitted version (%) | 11 (1-60), 5-21 |
| Time between original and final submitted version (days) | 148 (29-240), 105-204 |
| Time between final submitted and published version (days) | 36 (4-142), 23-54 |

Comparison between the original and final submitted manuscript

Table 3 shows the extent of changes in the reporting of methodological details made to manuscripts after peer review. Half or more of the articles did not adequately report the results for the primary or secondary outcomes (for example, the estimated effect size and confidence interval) (n=51; 55%), whether the study was blinded (n=46; 50%), concealment of the random allocation sequence (n=50; 54%), important harms (n=60; 65%), or details of the trial protocol (n=84; 90%), in either the original or the final version of the manuscript. More than one third of articles also failed to describe the method of random sequence generation (n=35; 38%), report a sample size calculation (n=32; 34%), or clearly specify the primary and secondary outcomes (n=33; 35%).

Table 3.

Extent of changes between original and final versions of manuscript (n=93). Values are No (%)

| Items | Reported (no change)* | Not reported (no change)† | Added‡ | Subtracted§ | Altered¶ |
|---|---|---|---|---|---|
| “Randomised” in title | 71 (76) | 15 (16) | 4 (4) | 3 (3) | 0 |
| Author names | 87 (94) | 0 | 3 (3) | 3 (3) | 0 |
| Abstract: | | | | | |
| Method of randomisation | 8 (9) | 84 (90) | 1 (1) | 0 | 0 |
| Primary outcome | 22 (24) | 67 (72) | 1 (1) | 0 | 3 (3) |
| No randomised | 28 (30) | 64 (69) | 1 (1) | 0 | 0 |
| No analysed | 14 (15) | 79 (85) | 0 | 0 | 0 |
| Conclusion | 78 (84) | 0 | 0 | 0 | 15 (16) |
| Methods: | | | | | |
| Sequence generation | 44 (47) | 35 (38) | 11 (12) | 0 | 3 (3) |
| Allocation sequence | 32 (34) | 50 (54) | 9 (10) | 0 | 2 (2) |
| Blinding | 31 (33) | 46 (50) | 11 (12) | 0 | 5 (5) |
| Primary outcome | 47 (51) | 33 (35) | 0 | 0 | 13 (14) |
| Secondary outcomes | 47 (51) | 33 (35) | 2 (2) | 0 | 11 (12) |
| Sample size | 42 (45) | 32 (34) | 9 (10) | 2 (2) | 8 (9) |
| Results: | | | | | |
| Flow diagram | 52 (56.5) | 25 (27) | 9 (10) | 0 | 6 (6.5) |
| No randomised | 77 (83) | 8 (9) | 6 (6) | 0 | 2 (2) |
| No analysed | 73 (78) | 14 (15) | 5 (5) | 0 | 1 (1) |
| Primary outcome | 33 (35) | 51 (55) | 0 | 0 | 9 (10) |
| Secondary outcomes | 31 (33) | 51 (55) | 3 (3) | 0 | 8 (9) |
| Additional analyses | 9 (10) | 65 (70) | 19 (20) | 0 | |
| Tables (important changes) | 56 (60) | 3 (3) | 17 (18) | 3 (3) | 14 (15) |
| Figures (important changes) | 56 (60) | 15 (16) | 14 (15) | 4 (4) | 4 (4) |
| Harms | 28 (30) | 60 (65) | 2 (2) | 0 | 3 (3) |
| Conclusions | 63 (68) | 0 | 0 | 0 | 30 (32) |
| Other information: | | | | | |
| Funding | 67 (72) | 15 (16) | 5 (5) | 0 | 4 (4) |
| Trial protocol | 8 (9) | 84 (90) | 1 (1) | 0 | 0 |
| Trial registration | 47 (51) | 21 (23) | 22 (24) | 1 (1) | 2 (2) |

*Item reported in both original and final submitted version of manuscript (no change in wording).

†Item not reported in either original or final submitted version of manuscript.

‡Item new to final submitted version, was not in original version of manuscript.

§Item removed from final submitted version, was in original version of manuscript.

¶Item in both original and final submitted version of manuscript but wording changed (usually involved giving more detail for a specific item).

Where there were changes between manuscript versions these usually involved “adding” new information that had been missing from the original submission, or “altering” existing information. Changes included adding information about the random sequence generation (n=11; 12%) and concealment of allocation sequence (n=9; 10%), blinding (n=11; 12%), sample size (n=9; 10%), a CONSORT flow diagram (n=9; 10%), changes to tables (n=17; 18%) or figures (n=14; 15%), details of trial registration (n=22; 24%), or adding additional analyses (n=19; 20%) recommended or requested by the peer reviewer. Other changes included altering information about the trial primary (n=13; 14%) or secondary (n=11; 12%) outcomes in the methods section, altering the presentation of the results for the primary (n=9; 10%) or secondary (n=8; 9%) outcomes, or altering the trial conclusions (n=30; 32%). In addition, several items were omitted from the final manuscript; these included “randomised” in the manuscript title (n=3), one or more study authors (n=3), sample size calculations (n=2), tables (n=3), and figures (n=4). Few changes were made to the abstract; those that were made concerned altering of the abstract conclusion (n=15; 16%).

Nature of changes requested by peer reviewers and impact on reporting

In the final part of this study we looked at the nature of changes requested by peer reviewers and the extent to which authors responded to these requests (table 4). In around a third of manuscripts peer reviewers commented on the trial design (for example, the method of randomisation, blinding) (n=29; 31%), sample size (n=30; 32%), and trial conclusions (n=30; 32%); 24% (n=22) commented on the primary or secondary outcomes and 16% (n=15) on the outcome results. In 22% (n=20) of the manuscripts, peer reviewers recommended or requested additional analyses, 22% (n=20) mentioned the CONSORT statement, 5% (n=5) mentioned the trial protocol, and 12% (n=11) mentioned trial registration.

Table 4.

Nature of changes requested by peer reviewers (per manuscript) and impact on reporting. Values are No (%)

| Nature of changes | Manuscripts (n=93) | Positive impact* | No impact on reporting† | No impact on reporting§ | Negative impact¶ |
|---|---|---|---|---|---|
| Abstract conclusion | 15 (16) | 14 (93) | 0 | 0 | 1 (7) |
| Trial design (randomisation and blinding) | 29 (31) | 27 (93) | 2 (7) | 0 | 0 |
| Sample size | 30 (32) | 15 (50) | 11 (37) | 0 | 4 (13) |
| Primary and secondary outcomes: methods | 22 (24) | 16 (73) | 3 (13) | 1 (5) | 2 (9) |
| Primary and secondary outcomes: results | 15 (16) | 14 (93) | 0 | 0 | 1 (7) |
| Additional analyses | 20 (22) | 4 (20) | 0 | 1 (5) | 15 (75) |
| Conclusion | 30 (32) | 27 (90) | 0 | 0 | 3 (10) |

*Peer reviewers’ comments judged to have beneficial effect on reporting, and author made change.

†Peer reviewers’ comments judged to have beneficial effect on reporting, and author did not make change.

§Peer reviewers’ comments judged to have harmful effect on reporting, and author did not make change.

¶Peer reviewers’ comments judged to have harmful effect on reporting, and author made change.
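The four impact categories defined in the footnotes above combine two judgments: whether the reviewer's comment was judged beneficial or harmful to reporting, and whether the author made the change. A minimal sketch of that mapping follows; the function name `classify_impact` and the label strings are hypothetical, and the tally uses the counts reported for additional analyses in table 4.

```python
from collections import Counter


def classify_impact(judged_beneficial: bool, author_made_change: bool) -> str:
    """Map the two judgments onto the impact categories used in table 4."""
    if author_made_change:
        return "positive impact" if judged_beneficial else "negative impact"
    if judged_beneficial:
        return "no impact (beneficial request, no change made)"
    return "no impact (harmful request, no change made)"


# Requests for additional analyses as reported in table 4 (20 manuscripts):
# 4 beneficial and acted on, 1 harmful and declined, 15 harmful and acted on.
requests = [(True, True)] * 4 + [(False, False)] * 1 + [(False, True)] * 15
tally = Counter(classify_impact(beneficial, changed) for beneficial, changed in requests)
for category, count in tally.items():
    print(f"{category}: {count} ({round(100 * count / len(requests))}%)")
```

Separating the judgment about the request from the author's action makes explicit that a "negative impact" needs both a harmful request and an author who complied with it.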

The number of changes requested by peer reviewers was relatively small and most were classified as having a positive impact on the reporting of the final manuscript (table 5). For example, where peer reviewers commented on the method of randomisation or blinding, the authors either added the item (n=19) or gave more details on how it was done (n=8). Other positive changes included the adding (n=7) or justification (n=8) of the sample size, clarification of the primary and secondary outcomes (n=5) and how they were measured (n=11), clarification (n=12) or the addition (n=2) of the outcomes results, and toning down the conclusion to reflect the trial results (n=27). In some instances peer reviewers’ comments had no impact on reporting, in that the authors ignored the comments or responded in the “response to reviewers” without making a change in the manuscript (n=16). For example, the peer reviewer commented on the lack of a sample size calculation or on a small sample size (n=11), asked for clarification of how the primary outcome was measured (n=3), or asked for details on randomisation (n=2). In these cases the authors either did not respond to the peer reviewer’s request or responded that they had not done what was asked, and this was not reflected in the final manuscript.

Table 5.

Description of peer reviewer (PR) comments (per manuscript), author responses (AU), and impact of reporting

| Item | Positive impact* | No impact† | No impact‡ | Negative impact§ |
|---|---|---|---|---|
| Abstract conclusion | PR: conclusions do not reflect results. AU: toned down conclusion (n=14) | | | PR: revise conclusions. AU: inflated conclusion not reflecting results (n=1) |
| Trial design | PR: no details on randomisation/blinding. AU: added item (n=19). PR: clarify details on randomisation/blinding. AU: gave more detail (n=8) | PR: explain randomisation. AU: added text but did not understand concept (n=2) | | |
| Sample size | PR: no sample size. AU: added item (n=7). PR: justify sample size. AU: gave more detail (n=8) | PR: no sample size. AU: did not respond or said did not do one (n=6). PR: small sample size. AU: justified sample size in response but not in manuscript (n=4). PR: small sample size. AU: added to limitations section (n=1) | | PR: justify how sample done. AU: deleted sample size (n=2). PR: justify sample size. AU: added post hoc sample size, but was not reported as such in manuscript (n=2) |
| Primary and secondary outcomes measured | PR: clarify which primary and secondary outcomes. AU: gave more detail (n=5). PR: how measured. AU: gave more detail (n=11) | PR: clarify how primary outcome measured. AU: did not respond (n=3) | PR: change primary outcome. AU: said no as not primary outcome for the study (n=1) | PR: add new secondary outcome. AU: added new outcome in methods (n=2) |
| Primary and secondary outcomes results | PR: clarify results. AU: gave more detail (n=12). PR: no results. AU: added results for secondary outcome (n=2) | | | PR: add new secondary outcome. AU: added new outcome in results (n=1) |
| Additional analyses | PR: clarify analysis. AU: added results for comparison across groups (n=4) | | PR: add new additional analyses. AU: said no was not purpose of the study (n=1) | PR: add new additional analyses. AU: added subgroup/sensitivity analysis (n=15) |
| Conclusion | PR: conclusions do not reflect results. AU: toned down conclusion (n=27) | | | PR: revise conclusions. AU: inflated conclusion but did not reflect results (n=3) |

*Peer reviewers’ comments judged to have beneficial effect on reporting, and author made change.

†Peer reviewers’ comments judged to have beneficial effect on reporting, and author did not make change.

‡Peer reviewers’ comments judged to have harmful effect on reporting, and author did not make change.

§Peer reviewers’ comments judged to have harmful effect on reporting, and author made change.

However, some of the changes requested by peer reviewers were classified as having a negative impact on reporting. This was particularly apparent in the analysis where authors added new subgroup or sensitivity analyses (n=15) requested by the peer reviewer (that is, were not part of the original study and were not reported as such in the final version of the manuscript). Other negative changes included deleting (n=2) or adding retrospective (n=2) sample size calculations, adding a new secondary outcome (n=2), and over-inflation of the conclusion that did not reflect the trial results (n=3). In addition there were two instances where the peer reviewers had requested that the authors change the primary outcome (n=1) or add additional analyses (n=1), and the authors responded to say that was not the purpose of their study. Supplementary appendix 4 provides examples of the type of changes requested by peer reviewers and the authors’ response to these requests.

Discussion

This sample of BMC-series medical journals offered a unique opportunity to investigate the effects of peer review on the reporting of published manuscripts, something which for most journals remains hidden within the editorial decision making process.9 In our study, the number of changes between the original and final submitted version of a manuscript was relatively small. Most involved adding new information (where this had been missing from the original submission) or altering existing information to give more detail about a specific item. The majority of changes recommended or requested by peer reviewers were classified as having a positive impact on reporting, such as adding information about randomisation, blinding, and sample size, altering the trial outcomes (for example, by specifying how the outcome was measured or at what time point), the presentation of the trial results, and the toning down of the conclusion to reflect the trial results.10 Some of the changes, however, were classified as having a negative impact on reporting, such as requests by peer reviewers for unplanned additional analyses that were not prespecified in the original trial protocol and that were not reported as such in the final manuscript. There is a concern that these additional analyses could be driven by an existing knowledge of the data, or the interests of the reader, rather than the primary focus of the study.11 Importantly, despite some improvements in reporting after peer review, it is clear that peer reviewers often fail to detect several important deficiencies in the reporting of the methods and results of randomised trials. The extent to which these findings are generalisable to other journals with different editorial and peer review processes is unclear. However, the problem of poor reporting is something that is seen consistently across the medical literature,12 suggesting that the findings seen in this sample of journals might be representative of other journals.

Comparison with other studies

We are not aware of other studies specifically dealing with the type and nature of changes made to manuscripts after peer review in the reporting of methodological aspects of randomised trials. Other studies on the impact of peer review have predominantly looked at the use of checklists to improve the quality of peer review, the effects of blinding authors and peer reviewers,13 14 and the implementation of training strategies for peer reviewers,15 16 with little empirical evidence to support the use of editorial peer review as a mechanism to ensure quality of biomedical research.5 More recently, two randomised controlled trials have shown that adding a statistical reviewer as part of the peer review process has a positive effect on the quality of the final manuscript,17 18 and that additional review based on reporting guidelines such as STROBE (strengthening the reporting of observational studies in epidemiology) and CONSORT can also improve the quality of submitted manuscripts.19

Limitations of this study

Our study has several limitations. Firstly, our sample was limited to BMC-series medical journals where peer reviews are published and available in the public domain. The extent to which open peer review hinders reviewers from being overly critical or demanding is unclear, as is whether journals with a closed system might see more changes. Secondly, it is possible that manuscripts published in journals with different editorial and peer review processes, such as journals with a higher impact, greater editorial control, and more resources, may show a greater number of changes after peer review.9 Thirdly, we do not know the level of methodological expertise of the peer reviewers and whether they were predominantly from a clinical, trial methodology, or statistical background. Finally, we only assessed the effects of peer review on the reporting of methodological aspects of randomised trials. We did not look at the clinical aspects of peer review as this would have required content expertise in each of the specific disease areas under investigation. We are therefore unable to comment on the effect of peer review on improving the reporting of clinical aspects of randomised trials.

Implications for peer review

This sample of BMC-series medical journals, whereby all peer reviewers’ comments, authors’ responses, and submitted versions of the manuscript are included alongside the published article, provides a unique insight into the editorial process. This information is essential to enable readers to have a clear and transparent account of the peer review process. We would strongly recommend that this model be followed by other leading journals.

In our study it is clear that not all changes requested by peer reviewers are appropriate, as authors sometimes acceded to requests for inappropriate revisions to their manuscript, presumably in the hope that making the changes would increase the likelihood of the manuscript being accepted for publication. Getting good peer reviewers for manuscripts is a common problem, particularly for smaller “lower impact” journals. Peer reviewers who do not engage with the manuscript, and who review merely to say they review for a particular journal, are as harmful as an author who fails to provide full details of how the study was carried out. Journal editors need to have better mechanisms to ensure that comments by peer reviewers are appropriate20 and that authors respond appropriately. Editors should also consider peer reviewers who are dedicated to reviewing different elements of the manuscript.18 The use of checklists and reporting guidelines (such as the CONSORT statement, which aims to improve the completeness and transparent reporting of randomised trials) has been shown to help peer reviewers to identify problems in the reporting of manuscripts more effectively.19 In our study it took on average between 15 and 20 minutes to review each manuscript against the CONSORT checklist items, a task that could be done internally by a trained editorial assistant (internal peer review) before sending a manuscript out for external peer review. It is extremely unlikely that the “average” peer reviewer has the relevant clinical and methodological expertise to perform each task effectively.21

Conclusion

Based on this sample of BMC-series medical journals, we found that peer reviewers failed to detect important deficiencies in the reporting of the methods and results in randomised trials. The number of changes requested by peer reviewers was relatively small; however, most authors did comply with recommendations or requests by peer reviewers in their revised manuscript. The majority of requests could be seen to have a positive impact on reporting, although there were instances of suggestions that were inappropriate and had a negative impact on the reporting of the final manuscript. Better use of, and adherence to, reporting checklists (www.equator-network.org) by journal editors, peer reviewers, and authors could be one important step towards improving the reporting of published articles.

What is already known on this topic

Despite the widespread use of peer review little is known about its impact on the quality of reporting of published research articles

Inadequacies in the methodology and reporting of research are widely recognised

Substantial uncertainty exists about the peer review process as a mechanism to improve reporting of the scientific literature

What this study adds

Peer reviewers often fail to detect important deficiencies in the reporting of the methods and results of randomised trials

Peer reviewers requested relatively few changes for reporting of trial methods and results

Most requests had a positive impact on reporting but in some instances the requested changes could have a negative impact

Contributors: SH and DA were involved in the design, implementation, and analysis of the study, and in writing the final manuscript. GC, LY, JC, MS, RW, IB, and LS were involved in the implementation of the study and in commenting on drafts of the final manuscript. SH is responsible for the overall content as guarantor.

Funding: This study received no external funding.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: no support from any organisation for the submitted work; no financial relationships with any organisations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

Data sharing: No additional data available.

Transparency: The lead author (the manuscript’s guarantor) affirms that the manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies from the study as planned (and, if relevant, registered) have been explained.

Cite this as: BMJ 2014;348:g4145

Web Extra. Extra material supplied by the author

Supplementary information

References

- 1. International Committee of Medical Journal Editors. Uniform requirements for manuscripts submitted to biomedical journals. 2008. www.icmje.org.

- 2. Hames I. Peer review and manuscript management in scientific journals: guidelines for good practice. Blackwell, 2007.

- 3. Rennie D. Suspended judgment. Editorial peer review: let us put it on trial. Control Clin Trials 1992;13:443-5.

- 4. Smith R. Opening up BMJ peer review. BMJ 1999;318:4-5.

- 5. Jefferson T, Rudin M, Brodney FS, Davidoff F. Editorial peer review for improving the quality of reports of biomedical studies. Cochrane Database Syst Rev 2007;2:MR000016.

- 6. Turner L, Shamseer L, Altman DG, Schulz KF, Moher D. Does use of the CONSORT statement impact the completeness of reporting of randomised controlled trials published in medical journals? A Cochrane review. Syst Rev 2012;1:60.

- 7. BMC Series Journals: peer review process. 2014. www.biomedcentral.com/authors/bmcseries.

- 8. Moher D, Hopewell S, Schulz KF, Montori V, Gotzsche PC, Devereaux PJ, et al. CONSORT 2010 explanation and elaboration: updated guidelines for reporting parallel group randomised trials. BMJ 2010;340:c869.

- 9. Dickersin K, Ssemanda E, Mansell C, Rennie D. What do the JAMA editors say when they discuss manuscripts that they are considering for publication? Developing a schema for classifying the content of editorial discussion. BMC Med Res Methodol 2007;7:44.

- 10. Boutron I, Dutton S, Ravaud P, Altman DG. Reporting and interpretation of randomized controlled trials with statistically nonsignificant results for primary outcomes. JAMA 2010;303:2058-64.

- 11. Sun X, Briel M, Busse JW, You JJ, Akl EA, Mejza F, et al. Credibility of claims of subgroup effects in randomised controlled trials: systematic review. BMJ 2012;344:e1553.

- 12. Hopewell S, Dutton S, Yu LM, Chan AW, Altman DG. The quality of reports of randomised trials in 2000 and 2006: comparative study of articles indexed in PubMed. BMJ 2010;340:c723.

- 13. Alam M, Kim NA, Havey J, Rademaker A, Ratner D, Tregre B, et al. Blinded vs. unblinded peer review of manuscripts submitted to a dermatology journal: a randomized multi-rater study. Br J Dermatol 2011;165:563-7.

- 14. Cho MK, Justice AC, Winker MA, Berlin JA, Waeckerle JF, Callaham ML, et al. Masking author identity in peer review: what factors influence masking success? PEER Investigators. JAMA 1998;280:243-5.

- 15. Schroter S, Black N, Evans S, Godlee F, Osorio L, Smith R. What errors do peer reviewers detect, and does training improve their ability to detect them? J R Soc Med 2008;101:507-14.

- 16. Schroter S, Black N, Evans S, Carpenter J, Godlee F, Smith R. Effects of training on quality of peer review: randomised controlled trial. BMJ 2004;328:673.

- 17. Arnau C, Cobo E, Ribera JM, Cardellach F, Selva A, Urrutia A. [Effect of statistical review on manuscript quality in Medicina Clinica (Barcelona): a randomized study]. Med Clin (Barc) 2003;121:690-4.

- 18. Cobo E, Selva-O’Callaghan A, Ribera JM, Cardellach F, Dominguez R, Vilardell M. Statistical reviewers improve reporting in biomedical articles: a randomized trial. PLoS One 2007;2:e332.

- 19. Cobo E, Cortes J, Ribera JM, Cardellach F, Selva-O’Callaghan A, Kostov B, et al. Effect of using reporting guidelines during peer review on quality of final manuscripts submitted to a biomedical journal: masked randomised trial. BMJ 2011;343:d6783.

- 20. Kravitz RL, Franks P, Feldman MD, Gerrity M, Byrne C, Tierney WM. Editorial peer reviewers’ recommendations at a general medical journal: are they reliable and do editors care? PLoS One 2010;5:e10072.

- 21. Black N, van Rooyen S, Godlee F, Smith R, Evans S. What makes a good reviewer and a good review for a general medical journal? JAMA 1998;280:231-3.
