Abstract

Objective

To develop and psychometrically evaluate an audio digitised tool for assessment of comprehension of informed consent among low-literacy Gambian research participants.

Setting

We conducted this study in the Gambia where a high illiteracy rate and absence of standardised writing formats of local languages pose major challenges for research participants to comprehend consent information. We developed a 34-item questionnaire to assess participants’ comprehension of key elements of informed consent. The questionnaire was face validated and content validated by experienced researchers. To bypass the challenge of a lack of standardised writing formats, we audiorecorded the questionnaire in three major Gambian languages: Mandinka, Wolof and Fula. The questionnaire was further developed into an audio computer-assisted interview format.

Participants

The digitised questionnaire was administered to 250 participants enrolled in two clinical trials in the urban and rural areas of the Gambia. One week after first administration, the questionnaire was readministered to half of the participants who were randomly selected. Participants were eligible if enrolled in the parent trials and could speak any of the three major Gambian languages.

Outcome measure

The primary outcome measures were the reliability and validity of the questionnaire.

Results

Item reduction by factor analysis showed that 21 of the question items had strong factor loadings. These were retained along with five other items which were fundamental components of informed consent. The 26-item questionnaire has high internal consistency with a Cronbach's α of 0.73–0.79 and an intraclass correlation coefficient of 0.94 (95% CI 0.923 to 0.954). Hypothesis testing also showed that the questionnaire correlates positively with a similar questionnaire and discriminates between participants with and without education.

Conclusions

We have developed a reliable and valid measure of comprehension of informed consent information for the Gambian context, which might be easily adapted to similar settings. This is a major step towards engendering comprehension of informed consent information among low-literacy participants.

Keywords: Ethics (see Medical Ethics), Medical Ethics

Strengths and limitations of this study

Our study demonstrates that a locally appropriate informed consent tool can be developed and scientifically tested to ensure an objective assessment of comprehension of informed consent information among low and non-literate research participants.

Such a tool can minimise participants’ vulnerability and ultimately engender genuine informed consent.

Our findings are based on data collected from a specific research context in a small country with three major local languages. Further research is needed to validate this tool in other settings.

Introduction

Conduct of clinical trials in developing countries faces considerable ethical challenges.1 2 One of these challenges is ensuring that informed consent is provided in a comprehensible manner that allows potential participants to freely decide whether or not they are willing to enrol in the study. According to the Helsinki Declaration3 and other internationally agreed guidelines,4 5 special attention should be given to the specific information needs of potential participants and to the methods used to deliver the information. This implies, among other things, that the information must be provided in the participant's native language. If the informed consent documents have been originally written in one of the major international languages, they must be translated into the local languages of potential study participants.6 7 The translated documents are subsequently back-translated by another independent group into the initial language to confirm that the original meaning of the contents of the document is retained.

In sub-Saharan Africa, this process may become extremely challenging because many research concepts such as randomisation and placebo do not have direct interpretations in the local languages.8 Furthermore, in some African countries, local languages exist only in oral forms and they do not have standardised writing formats, which makes written translation and back-translation of informed consent documents not only impractical, but also less precise.9 Further adding to these difficulties are the high rates of illiteracy and functional illiteracy in such contexts, which may contribute to the socioeconomic vulnerability of these research populations.10

Nevertheless, it remains crucial to ensure that vulnerable participants understand study information because the voluntary nature of informed consent could be easily jeopardised by cultural diversity, an incorrect understanding of the concept of diseases, a mix of communal and individual decision-making, huge social implications of some infectious diseases and inadequate access to care.11 Use of an experiential model at the pre-enrolment, enrolment and postenrolment stages of clinical research11 as well as tailoring of cultural and linguistic requirements to the informed consent process has been reported to improve comprehension of basic research concepts.12

Furthermore, international guidelines3–5 emphasise that informed consent must be based on a full understanding of the information conveyed during the consent interview. In contexts characterised by high linguistic variability and illiteracy rates, the use of tools to ascertain comprehension of study information conveyed during the informed consent process may be recommended. These tools could vary from a study quiz to complex questionnaires.13 14 Tools that have been used extensively to assess informed consent comprehension include Brief Informed Consent Evaluation Protocol (BICEP),15 Deaconess Informed Consent Comprehension Test (DICCT)16 and the Quality of Informed Consent test (QuIC).17 These tools were limited in usability across other studies because they were developed for specific trials. In addition, because they were designed for the developed world, they are not easily adaptable to African research settings. To the best of our knowledge, there is no published article to support the availability of an appropriate measure of informed consent comprehension in African research settings. To comply with the ethical principle of respect for persons,4 a systematically developed tool could contribute to achieving an adequate measurement of comprehension of study information among the vulnerable research population in Africa. This is consistent with a framework incorporating aspects that reflect the realities of participants’ social and cultural contexts.11

This study was designed to develop and psychometrically evaluate an informed consent comprehension questionnaire for a low-literacy research population in the Gambia, for whom English is not the native language. This is the first step towards contextualising strategies of delivering study information to research participants, objectively measuring their comprehension of the information using a validated tool and, based on this, improving the way information is delivered during the informed consent process.

Disease profile in the Gambia and research activities of the Medical Research Council Unit, the Gambia

The Gambia is one of the smallest West African countries with an estimated population of 1.79 million people.18 According to the 2012 World Bank report, the Gambia's total adult literacy rate was 45.3% while the adult literacy rate of the female population, which constitutes a large majority of clinical trial participants, was 34.3%.19

Three major ethnolinguistically distinct groups, Mandinka, Fula and Wolof, populate the country. The languages do not have standardised writing formats and they are not formally taught in schools. The ethnic groups have similar sociocultural institutions such as the extended family system and patrilineal inheritance. Health-seeking behaviour is governed by traditions rather than modern healthcare norms. Because the people live in a closely knit, extended family system, important decisions such as research participation are taken within the kinship structure.20

Like other low-income countries characterised by social and medical disadvantages,21 infectious diseases such as malaria, pneumonia and diarrhoea constitute major reasons for hospital presentations in the Gambia.22 In addition to the high disease burden, low literacy, a high poverty rate and inadequate access to healthcare tend to make the people vulnerable to research exploitation.21

The Medical Research Council (MRC) Unit in the Gambia was established to conduct biomedical and translational research into tropical infectious diseases. The institute has key northern and southern linkages and a track record of achievements spanning over 67 years. The research portfolio of MRC covers basic scientific research, large epidemiological studies and vaccine trials. Important recent and current vaccine trials include those on malaria, tuberculosis, HIV, Haemophilus influenzae type B, measles and pneumococcal disease, as well as the Gambia Hepatitis Intervention study. Preventive research interventions include a trial of intermittent preventive treatment with sulfadoxine-pyrimethamine versus intermittent screening and treatment of malaria in pregnancy, and a cluster randomised controlled trial on indoor residual spraying plus long-lasting insecticide impregnated nets. Ethical conduct of these studies takes place through sustained community involvement and engagement of participants as research partners.20

Methods

Study design

Questionnaire development

The items on the questionnaire were generated from the basic elements of informed consent obtained from an extensive literature search on guidelines for contextual development of informed consent tools,23–32 international ethical guidelines3–5 and operational guidelines from the Gambia Government/Medical Research Council Joint Ethics Committee.33

We identified and generated a set of question items on 15 independent domains of informed consent. These domains are voluntary participation, rights of withdrawal, study knowledge, study procedures, study purpose, blinding, confidentiality, compensation, randomisation, autonomy, meaning of giving consent, benefits, risks/adverse effects, therapeutic misconception and placebo.

Because evidence has shown the deficiencies of using one question format in assessing comprehension of informed consent information,30 we developed a total of 34 question items under three different response formats. These response formats are a combination of Yes/No/I don't know, multiple choice and open ended with free text response options. The ‘I don't know’ option was included to avoid restricting participants to only ‘yes’ or ‘no’, a restriction that can induce socially desirable responses, and to help reduce guesswork.

The questionnaire was made up of five sections: the first section contains 10 closed ended and 7 follow-up question items; the second section has 6 single choice response items; the third section has 4 multiple choice response items; the fourth section has 7 free-text open-ended question items. The last section has 9 questions on sociodemographic information of participants and these were not included in the psychometric analysis of the questionnaire.

The follow-up question items were included in the first section to ensure that the responses given by participants truly reflected their understanding as asked in the closed-ended questions, for example, “Have you been told how long the study will last?” was followed by “If yes, how many months will you be in this study?”. No response options were given and the participants were expected to give the study duration based on their understanding of information given during the informed consent process. The order of responses to the questions was reversed for some items to avoid participants defaulting to the same answer for each question.

The use of multiple choice and open-ended response items was meant to explore participants’ ‘actual’ understanding of study information, because this could not be adequately measured using the closed-ended response options.

To enable non-literate participants to understand how to answer questions under multiple and open-ended response options, we included locally appropriate sample question items before the main questions. For example, ‘Domoda’ soup is made from: a. Bread, b. Groundnut, c. Yam, d. Orange. Groundnut is the correct response and participants were directed to choose only one correct response in the question items that followed the sample question. For items with multiple response options, we included: “Which of these are Gambian names for a male child: a. Fatou, b. Lamin, c. Ebrima, d. Isatou.” The correct responses are Lamin and Ebrima; participants were directed to choose more than one correct response that applies to the question items.

As the questionnaire was intended to be used across different clinical trials, we developed question items that aimed to be applicable to most clinical trials and yet specific to individual trials. This was achieved with the inclusion of open-ended question items in which participants could give trial-specific responses. An example of this was: “What are the possible unwanted effects of taking part in this study?” which allowed participants to explain in their own words the adverse events peculiar to the clinical trial in which they were participating.

Face and content validity

Face validity was assessed to examine the appearance of the questionnaire regarding its readability, clarity of words used, consistency of style and likelihood of target participants being able to answer the questions. Content validity was assessed to establish whether the content of the questionnaire was appropriate and relevant to the context for which it was developed.34 After generating the question items, we asked five researchers (two from the London School of Hygiene and Tropical Medicine (LSHTM), UK, and three from MRC, the Gambia) who are experienced in clinical trials methodology, bioethics and social science methods to review the English version of the questionnaire for face and content validity. All of them agreed that essential elements of informed consent information were addressed in the questionnaire, and that the items adequately covered the essential domains of informed consent, with special attention to those whose understanding may be especially challenged in African research settings. They also supported the use of multiple response options as being capable of eliciting appropriate responses that might reflect a true ‘understanding’ of participants. One of the reviewers recommended presenting each item in the form of a question instead of a statement, for example, “I have been told that I can freely decide to take part in this study” was changed to “Have you been told you can freely decide to take part in this study?”. The response option was also changed from True/False to Yes/No.

We further gave the revised English version of the questionnaire to three experienced field assistants at MRC and three randomly chosen laypersons to assess the clarity and appropriateness of the revised question items and their response options. The laypersons were selected randomly from a list of impartial witnesses by choosing one person from each of the three ethnolinguistic groups in the Gambia. They independently agreed that the questions were clear, except for three items addressing confidentiality, compensation and the right to withdraw. On the basis of this feedback, we reworded the question items to improve clarity. The question on confidentiality was reframed from “Will non-MRC workers have access to your health information?” to “Will anyone not working with MRC know about your research information?”. Similarly, “Will you be rewarded for taking part in this study?” was changed to “Will you receive money for taking part in this study?”.

Audiorecording in three local languages and development into a digitised format

Owing to the lack of acceptable systems of writing Gambian local languages, the question items were audiorecorded in three major Gambian languages, Mandinka, Wolof and Fula, by experienced linguistic professionals who are native speakers of the local languages and are also familiar with clinical research concepts. Audio back-translations were made for each language by three independent native speakers and corrections were made in areas where translated versions were not consistent with the English version. A final audio proof was conducted by three clinical researchers (native speakers) who independently confirmed that the translated versions retained the original meaning of the English version.

The revised questionnaire was developed into an audio computer-assisted interview format at the School of Medicine, Tufts University, Boston, USA. In conjunction with the MRC community relations officer, we identified and selected locally acceptable symbols and signs, for example, star, moon, house, fish, bicycle, to represent the response options. The question items were serially developed into the digitised format and draft copies were sent to the first author, MOA, for review at each stage. After ensuring that the wordings of the paper questionnaire were consistent with the digitised version, translated audios in Mandinka, Wolof and Fula were subsequently recorded as voice-overs on the digitised questionnaire, which will be subsequently referred to as the Digitised Informed Consent Comprehension Questionnaire (DICCQ) in this manuscript.

Piloting

On completion of the initial development, DICCQ was piloted among 18 mothers of infants participating in an ongoing malaria vectored vaccine trial at the MRC Sukuta field site (ClinicalTrials.gov NCT01373879). The field site is located about 5 km from the MRC field site targeted for field testing of the questionnaire. DICCQ was administered by an interviewer (MOA) on a laptop computer in a private consultation room within the Sukuta field site. After entering the participant's assigned identification number and interviewer's initials into DICCQ, the participant's local language of choice was selected on the computer screen. Operated by MOA, the question items were serially read aloud to the participants in the local language with the click of a button on the lower toolbar of the computer screen, and a ‘forward arrow’ button was used to move to the next question item. Participants answered either by vocalising their responses or by pointing to the symbols on the computer screen that corresponded to their choice of responses. The participants generally reported the questionnaire to be clear and easy to follow. The audio translations were also accepted as conforming with the dialects spoken by the majority of Gambians. The average administration time was 29.4 min. Suggestions were made to include ‘backward’, ‘repeat’ and ‘skip’ function buttons in the computer toolbar. These amendments were incorporated into the final version of the digitised questionnaire.

Field testing

The final version of DICCQ (see online supplementary appendix 1) was tested sequentially among participants in two clinical trials. The two sites were selected for field testing because similar clinical trials were taking place simultaneously in two distinct research communities within the Gambia.

The first field test took place from 4 to 20 February 2013 among mothers of children enrolled in an ongoing randomised controlled, observer blind trial that aimed to evaluate the impact of two different formulations of a combined protein-polysaccharide vaccine on the nasopharyngeal carriage of Streptococcus pneumoniae in Gambian infants at the Fajikunda field site of MRC (ClinicalTrials.gov NCT01262872). The site is located within an urban health centre, about 25 km south of the capital, Banjul. A total of 1200 infants were enrolled in the trial and mothers brought their children for a total of six study visits over a period of one year.

The second field test took place from 22 February to 15 March 2013 in villages around Walikunda, about 280 km east of Banjul, among participants in an ongoing randomised controlled, observer blind trial (http://www.who.int/whopes/en/). The study was designed to compare the efficacy of two different doses of a newly developed insecticide with the conventional one, used for indoor residual spraying for malaria vector control in the Gambia. Over 900 households in 18 villages around the Walikunda field station of MRC were randomly selected to receive any of the three doses of insecticides. Household participants gave informed consent before indoor spraying of the insecticides. Entomologists visited the households every month for 6 months to collect mosquitoes and interviewed the participants for perception of efficacy and adverse effects of the insecticides.

In the two studies, written informed consent was obtained based on the English version of the respective study information sheets. These were explained in the local languages by trained field staff, in the presence of an impartial witness in case of illiteracy. Similarly, prior to administering DICCQ at each trial site, written informed consent was obtained from participants or their parents. One week after first administration, DICCQ was readministered to a randomly selected half of the participants.

After obtaining a written informed consent, trained interviewers administered DICCQ on a laptop computer to each participant in his/her preferred local language in noise-free consulting rooms at the MRC facility located within the Fajikunda Health Centre and at designated areas within the households in Walikunda villages. In addition, at the end of the first questionnaire administration, each participant in the two sites was administered an Informed Consent Questionnaire (ICQ),35 which has been validated in a different context. Briefly, ICQ consists of two subscales: the ‘understanding’ subscale, which has four question items, and the ‘satisfaction’ subscale, which has three question items on satisfaction with study participation (see online supplementary appendix 2). The questionnaire was validated in English among participants in a randomised clinical trial of Gulf War veterans’ illnesses. ICQ exhibited good psychometric properties following standard item-reduction techniques.35 Similar to DICCQ, the ‘understanding’ subscale of ICQ covers the domain on the meaning of consenting, benefits and risks of trial participation. However, unlike ICQ, the ‘study expectation’ domain was not covered by DICCQ. ICQ was orally translated to Mandinka, Fula and Wolof by three independent native speakers who confirmed consistency with the original English version. To establish construct validity, the participants’ scores on ICQ were compared with their scores on DICCQ.

Sample size estimation

Sample size for validation studies is usually determined with the aim of minimising the SE of the correlation coefficient for the reliability test. Also, 4–10 participants per question item are recommended to obtain a sufficient sample size in order to ensure stability of the variance–covariance matrix in factor analysis.36 37 Based on these recommendations, we chose seven participants per question item. DICCQ has 34 question items (excluding the first 9 questions on sociodemographic data and information on previous clinical trial participation), giving 34×7=238 participants. Allowing for a 5% non-response rate, the sample size was approximated to 250. Half of these participants (n=125) were invited 1 week after first administration of the questionnaire for a retest. Written informed consent was obtained from each consenting participant. Participation was voluntary and confidential.
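The arithmetic behind this estimate can be reproduced in a few lines. The sketch below is illustrative only: the function name is hypothetical, and the rounding rule (inflating by the non-response rate and rounding up) is an assumption, since the text states only that the figure was approximated to 250.

```python
import math

def validation_sample_size(n_items, participants_per_item, non_response_rate):
    """Items x participants-per-item, inflated for expected non-response.

    Assumption: the inflated figure is rounded up to the next whole participant.
    """
    base = n_items * participants_per_item
    return math.ceil(base * (1 + non_response_rate))

base_n = 34 * 7                                   # 34 items, 7 participants per item
target_n = validation_sample_size(34, 7, 0.05)    # allow for 5% non-response
retest_n = target_n // 2                          # half are invited for the retest

print(base_n, target_n, retest_n)
```

Under these assumptions the sketch returns 238, 250 and 125, matching the figures reported above.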

Scoring system for the questionnaire

The scoring algorithm consistent with the level of increasing difficulty of the question items is summarised in table 1. In designing the scoring algorithm, we considered the possibility that certain question items should attract greater weight than others in determining the summated scores. For example, closed-ended question items were scored 0–3, question items with multiple response options were scored 0–4 and open-ended question items with no response option were scored 0–5. The first author, MOA, scored all participants to avoid inter-rater variations. Participant scores on closed-ended question items were summed up as the ‘recall’ scores while participant scores on open-ended question items were summed as the ‘understanding’ scores. The total sum of ‘recall’ and ‘understanding’ scores for each participant constitutes the ‘comprehension’ scores38 (not shown in this manuscript).

Table 1.

Scoring of question items

| Question item type | Scoring |
|---|---|
| Closed-ended question items in the first section | Each correct answer was scored 3; wrong answer was scored 0 and responses with ‘I don't know’ were scored 1 |
| Open-ended question items which are follow-up questions to the closed-ended question items in the first section | Each correct answer was scored 5, partially correct answer was scored 3, incorrect answer was scored 0, while ‘I don't know’ responses were scored 1 |
| In the second section, participants chose one correct answer out of four response options | Each correct answer was scored 3, incorrect answer was scored 0 and ‘I don't know’ responses were scored 1 |
| In the third section, participants chose more than one correct answer from four response options | Fully correct answers were scored 4, partially correct answers were scored 2, wrong answers were scored 0 and ‘I don't know’ answers were scored 1 |
| In the fourth section, participants responded using their own words to open-ended question items | Fully correct answers were scored 5, partially correct answers were scored 3, wrong answers were scored 0 and ‘I don't know’ responses were scored 1 |
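As an illustration of how the scoring rules in table 1 might be applied, the following minimal sketch encodes the point values as a lookup table and sums them into ‘recall’, ‘understanding’ and ‘comprehension’ scores. The item-type labels and function name are hypothetical. The assignment of the single-choice and multiple-choice sections to ‘recall’ is an assumption, because the text specifies only that closed-ended items contribute to ‘recall’ and open-ended items to ‘understanding’.

```python
# Points per response category, transcribed from table 1.
SCORES = {
    "closed":        {"correct": 3, "incorrect": 0, "dont_know": 1},
    "follow_up":     {"correct": 5, "partial": 3, "incorrect": 0, "dont_know": 1},
    "single_choice": {"correct": 3, "incorrect": 0, "dont_know": 1},
    "multi_choice":  {"correct": 4, "partial": 2, "incorrect": 0, "dont_know": 1},
    "open_ended":    {"correct": 5, "partial": 3, "incorrect": 0, "dont_know": 1},
}

# Assumption: fixed-option formats count towards 'recall';
# free-text formats (follow-up and open-ended) count towards 'understanding'.
RECALL_TYPES = {"closed", "single_choice", "multi_choice"}

def score_participant(responses):
    """responses: list of (item_type, response_category) pairs for one participant."""
    recall = 0
    understanding = 0
    for item_type, category in responses:
        points = SCORES[item_type][category]
        if item_type in RECALL_TYPES:
            recall += points
        else:
            understanding += points
    return {
        "recall": recall,
        "understanding": understanding,
        "comprehension": recall + understanding,
    }

# Hypothetical responses to five items, one from each item type.
example = [
    ("closed", "correct"),
    ("follow_up", "partial"),
    ("single_choice", "dont_know"),
    ("multi_choice", "partial"),
    ("open_ended", "incorrect"),
]
result = score_participant(example)
print(result)
```

Keeping the point values in a single table makes it straightforward to change a weight without touching the summation logic.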

For ICQ,35 responses were scored as follows: 3 for ‘Yes, completely’, 2 for ‘Yes, partially’, 1 for ‘I don't know’ and 0 for ‘No’. The first author, MOA, assigned the scores based on the responses ticked by trained assistants who administered the questionnaire to the participants.

Data analysis

Data were retrieved from the in-built database of DICCQ and converted to the Microsoft Excel format. Analysis was performed with Stata V.12.1 (College Station, USA) and the Statistical Package for Social Sciences software V.20.0 (Chicago, Illinois, USA). The significance of group differences was tested by Mann-Whitney U tests for demographic variables with p<0.05 (two-tailed) considered as significant. Psychometric properties of DICCQ were evaluated in terms of reliability and validity using the following steps:

Steps in validation analysis

Construct validity: Construct validity refers to the degree to which items on the questionnaire relate to the relevant theoretical construct. It represents the extent to which the desired independent variable (construct) relates to the proxy independent variable (indicator).39 40 For example, in DICCQ, ‘recall’ and ‘understanding’ were used as indicators of comprehension. This is based on an earlier study41 which defined ‘recall’ as success in selecting the correct answers in the question items and ‘understanding’ as correctness of interpretation of statements presented in the question items. When an indicator consists of multiple question items like in DICCQ, factor analysis is used to determine construct validity.39 42

To verify construct validity, the design of DICCQ was analysed in a stepwise procedure. First, we tested whether the sample size of 250 was sufficient to perform factor analysis of the 34-item DICCQ according to the Kaiser-Meyer-Olkin (KMO) coefficient (acceptable value should be >0.5). In a second step, we conducted a principal component analysis (PCA) to derive an initial solution. Third, we determined the number of factors to be extracted according to three different criteria: (1) eigenvalue >1, (2) Cattell's scree plot and (3) the number of factors identical with the proposed number of subscales (ie, the ‘recall’ and ‘understanding’ subscales).34 43 In the last step, we compared the unrotated and the rotated factor solutions. The rationale of rotating factors is to obtain a simple factor structure that is more easily interpreted and compared. We chose varimax, the most commonly used orthogonal rotation, which rotates the factors so that each variable loads as highly as possible on one factor and as little as possible on the other factors.34 43 44
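The stepwise procedure can be sketched with NumPy as follows. This is not the Stata or SPSS analysis used in the study; it is a minimal illustration on simulated data, with hypothetical function names and a made-up two-factor loading pattern. It computes the overall KMO coefficient, extracts principal components with eigenvalue >1 and applies a standard varimax rotation. Inspection of the scree plot is visual and is not shown.

```python
import numpy as np

def kmo(corr):
    """Overall Kaiser-Meyer-Olkin measure from a correlation matrix."""
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale                       # partial correlations
    off = ~np.eye(corr.shape[0], dtype=bool)     # off-diagonal mask
    r2 = np.sum(corr[off] ** 2)
    p2 = np.sum(partial[off] ** 2)
    return r2 / (r2 + p2)

def pca_loadings(corr, n_factors=None):
    """Eigen-decompose corr; keep components with eigenvalue > 1 unless n_factors is given."""
    eigvals, eigvecs = np.linalg.eigh(corr)
    order = np.argsort(eigvals)[::-1]            # largest eigenvalue first
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    if n_factors is None:
        n_factors = int(np.sum(eigvals > 1.0))
    loadings = eigvecs[:, :n_factors] * np.sqrt(eigvals[:n_factors])
    return eigvals, loadings

def varimax(loadings, max_iter=100, tol=1e-8):
    """Orthogonal varimax rotation of a loading matrix (items x factors)."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt
        new_criterion = np.sum(s)
        if new_criterion - criterion < tol:      # no further improvement
            break
        criterion = new_criterion
    return loadings @ rotation

# Simulated data (assumption): 247 respondents, 6 items driven by 2 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(247, 2))
pattern = np.array([[0.8, 0.0], [0.7, 0.0], [0.75, 0.0],
                    [0.0, 0.8], [0.0, 0.7], [0.0, 0.75]])
items = latent @ pattern.T + 0.5 * rng.normal(size=(247, 6))

corr = np.corrcoef(items, rowvar=False)
kmo_value = kmo(corr)                            # sampling adequacy, compare with 0.5
eigvals, unrotated = pca_loadings(corr)          # eigenvalue > 1 criterion
rotated = varimax(unrotated)                     # simple structure for interpretation
print(round(kmo_value, 2), unrotated.shape)
```

Because varimax is an orthogonal rotation, each item's communality (the row sum of squared loadings) is the same before and after rotation; only the distribution of loadings across factors changes.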

Furthermore, owing to a lack of a specific ‘gold standard’ tool to measure informed consent comprehension, we could not examine concurrent (criterion) validity in which participants’ scores on DICCQ could be compared with the participants’ scores on the ‘gold standard’ obtained at approximately the same point in time (concurrently). Nevertheless, construct validity provided evidence of the degree to which participants’ scores on the questionnaire were consistent with hypotheses formulated about the relationship of DICCQ with the participants’ scores on other instruments measuring similar or dissimilar constructs, or differences in the instrument scores between subgroups of study participants.40 Two forms of construct validity based on hypothesis testing were examined:

1. Convergent validity, which examines the extent to which a questionnaire correlates with other instruments measuring the same construct. A good example of an instrument measuring the same construct as DICCQ is ICQ, which contains four question items on the ‘understanding’ subscale and three items on the ‘satisfaction’ subscale. We hypothesised a priori that participants’ scores on DICCQ would correlate positively with their scores on the ‘understanding’ subscale of ICQ because both constructs relate to informed consent comprehension in clinical trial contexts. However, the correlation was not expected to be high, because DICCQ covers more domains of informed consent comprehension than the ICQ subscale.

2. Discriminant validity, which examines the extent to which a questionnaire correlates with questionnaires measuring constructs that are different from the construct the questionnaire is intended to assess. To determine this, it was hypothesised that participants’ scores on DICCQ would correlate negatively with the ‘satisfaction’ subscale of ICQ because DICCQ does not include the ‘satisfaction’ domain about study participation. Spearman’s correlation coefficients were used because the data of the questionnaires (DICCQ and ICQ) were not normally distributed.

3. To establish further evidence of construct validity, we examined the discriminative validity in which participants’ scores on DICCQ were compared between subgroups of participants who differed on the construct being measured. Using the Mann-Whitney U test, the differences of participants’ scores on DICCQ were compared based on their demographic variables (ie, gender, place of domicile: urban vs rural and education status).
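The two non-parametric statistics used in these hypothesis tests can be illustrated with a short, dependency-free sketch. The scores below are invented for illustration and are not study data, and the function names are hypothetical. The sketch computes Spearman's rank correlation (with tied values given average ranks) and the Mann-Whitney U statistic; p values are omitted and would normally be obtained from a statistical package.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1              # positions i..j share one rank
        for pos in range(i, j + 1):
            ranks[order[pos]] = mean_rank
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return cov / (sd_x * sd_y)

def spearman_rho(x, y):
    """Spearman's rho: Pearson correlation computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def mann_whitney_u(group_a, group_b):
    """U statistic for group_a: each pair with a > b counts 1, each tie counts 0.5."""
    u = 0.0
    for a in group_a:
        for b in group_b:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u

# Hypothetical scores for five participants (not study data).
diccq = [60, 72, 85, 90, 55]
icq_understanding = [5, 8, 9, 12, 6]
rho = spearman_rho(diccq, icq_understanding)

# Hypothetical DICCQ scores by education status (not study data).
educated = [85, 90, 72]
not_educated = [60, 55, 72]
u_educated = mann_whitney_u(educated, not_educated)

print(round(rho, 2), u_educated)
```

A positive rho would be read as support for the convergent validity hypothesis, and a U statistic far from half the number of pairs would indicate that the two subgroups differ in their score distributions.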

Reliability

After completing item reduction in the validity analysis, the item-reduced DICCQ was investigated for reliability. Reliability describes the ability of a questionnaire to consistently measure an attribute and how well the question items conceptually agree together.34 45 Two commonly used indicators of reliability, internal consistency and test–retest reliability, were employed to examine the reliability of DICCQ. Cronbach's α was computed to examine the internal consistency of the questionnaire. Because the questionnaire contains the ‘recall’ and ‘understanding’ subscales, Cronbach's α was computed for each subscale as well as for the entire scale. An acceptable value for Cronbach's α was ≥0.7.37 39
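For reference, Cronbach's α for a scale of k items is k/(k−1) multiplied by one minus the ratio of the summed item variances to the variance of the total score. A minimal sketch of this calculation is given below; the function name and the small data set are hypothetical and serve only to show the computation.

```python
from statistics import variance

def cronbach_alpha(item_scores):
    """item_scores: one list per respondent, holding one score per item."""
    k = len(item_scores[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([row[j] for row in item_scores]) for j in range(k)]
    # Sample variance of each respondent's total score.
    total_var = variance([sum(row) for row in item_scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)

# Hypothetical scores of five respondents on three items (not study data).
scores = [
    [3, 3, 5],
    [0, 1, 3],
    [3, 3, 3],
    [1, 0, 0],
    [3, 1, 5],
]
alpha = cronbach_alpha(scores)
print(round(alpha, 2))
```

The same function can be applied separately to the items of each subscale and to the full scale, mirroring the analysis described above.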

Test–retest reliability was examined by administering the same questionnaire on two occasions, one week apart, to a randomly selected half of the study participants. This is based on the assumption that there would be no substantial change in the comprehension scores of participants between the two time points.34 46 A high correlation between the scores at the two time points indicates that the instrument is stable over time.34 46 Analysis of participants’ scores between the test and retest was conducted by estimating the intraclass correlation coefficients and 95% CI.
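The form of the intraclass correlation coefficient used is not specified here. As one possible illustration, the sketch below computes the two-way random-effects, absolute-agreement, single-measurement coefficient, often written ICC(2,1), from the mean squares of a two-way analysis of variance. The choice of this form, the function name and the example scores are all assumptions, and the 95% CI is not computed.

```python
def icc_absolute_agreement(test, retest):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement."""
    n, k = len(test), 2                          # n participants, k = 2 occasions
    rows = list(zip(test, retest))
    grand = (sum(test) + sum(retest)) / (n * k)
    row_means = [sum(r) / k for r in rows]
    col_means = [sum(test) / n, sum(retest) / n]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between occasions
    ss_total = sum((x - grand) ** 2 for r in rows for x in r)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )

# Hypothetical test and retest scores for five participants (not study data).
first = [60, 72, 85, 90, 55]
second_same = [60, 72, 85, 90, 55]               # identical scores at retest
second_shifted = [x + 5 for x in first]          # everyone scores 5 points higher

icc_same = icc_absolute_agreement(first, second_same)
icc_shifted = icc_absolute_agreement(first, second_shifted)
print(round(icc_same, 3), round(icc_shifted, 3))
```

The absolute-agreement form penalises a systematic shift between occasions, so the second example yields a coefficient below 1 even though the ranking of participants is unchanged. A consistency form would not apply this penalty.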

Results

Two hundred and fifty participants, consisting of 130 participants from the clinical trial in the urban setting and 120 clinical trial participants in the rural setting, were interviewed. To address the missing data, participants (n=3) who did not respond to three or more items (5%) in DICCQ were excluded from further analysis.44 For participants with one or two missing items (n=6), each missing value was replaced with the mean of the participants’ scores for that question item.44 Thus, data from 247 participants were included in the final analysis. The mean age was 37.06±15 years; there were 129 participants (52.2%) in the urban group and 118 participants (47.8%) in the rural group. The overall mean time of administration of the questionnaire was 22.4±7.4 min while the overall mean time for retest of the questionnaire was 18.5±5.4 min. The shorter administration time at retest might be because a majority of the participants had become familiar with the question items, given the short interval of one week between the first and second administration.
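The missing-data rule described above (exclude participants with three or more unanswered items, then replace remaining gaps with the item mean) can be sketched as follows. This is an illustrative sketch only: the function name `impute_item_means` is hypothetical, missing answers are represented as `None`, and item means are assumed to be computed from the observed answers of retained participants.

```python
def impute_item_means(rows, max_missing=2):
    """Drop rows with more than max_missing gaps; fill the rest with item means."""
    kept = [r for r in rows if sum(v is None for v in r) <= max_missing]
    n_items = len(kept[0])

    # Mean of observed answers for each item among retained participants
    item_means = []
    for j in range(n_items):
        observed = [r[j] for r in kept if r[j] is not None]
        item_means.append(sum(observed) / len(observed))

    return [
        [item_means[j] if v is None else v for j, v in enumerate(r)]
        for r in kept
    ]


# Hypothetical item scores for four participants on four items
toy_rows = [
    [1, None, 1, 0],        # one gap: imputed
    [None, None, None, 1],  # three gaps: excluded
    [1, 1, 0, 0],
    [0, 0, 1, 1],
]
cleaned = impute_item_means(toy_rows)
print(len(cleaned), cleaned[0])
```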

The sociodemographic characteristics of the study participants are summarised in table 2.

Table 2.

Sociodemographic characteristics of study participants

| Characteristics | Frequency (%; N=247) |
|---|---|
| Age group (years) | |
| 18–25 | 67 (26.8) |
| 26–33 | 65 (26.0) |
| 34–41 | 40 (16.0) |
| 42–49 | 23 (9.2) |
| >49 | 55 (22.0) |
| Gender | |
| Female | 156 (63.2) |
| Male | 91 (36.8) |
| Domicile | |
| Urban | 129 (52.2) |
| Rural | 118 (47.8) |
| Occupation | |
| Farming | 80 (32.3) |
| Trading | 39 (15.8) |
| Artisans | 7 (2.8) |
| Civil servant | 18 (7.3) |
| Housewife | 94 (38.2) |
| Schooling | 4 (1.6) |
| Unemployed | 5 (2.0) |
| Education group | |
| Had western education | 62 (25.1) |
| Had no western education | 185 (74.9) |
| Religious affiliation | |
| Islam | 239 (96.8) |
| Christianity | 8 (3.2) |
| Previous clinical trial participation | |
| Only one | 200 (81.0) |
| More than one | 47 (19.0) |

Table 2 shows that the largest age group (about 27% of the participants) was 18–25 years; about 63% were women and about 40% had no formal education. The index trial was the first clinical trial exposure for 81% of the participants, while the rest had participated in at least one other trial.

Factor analysis

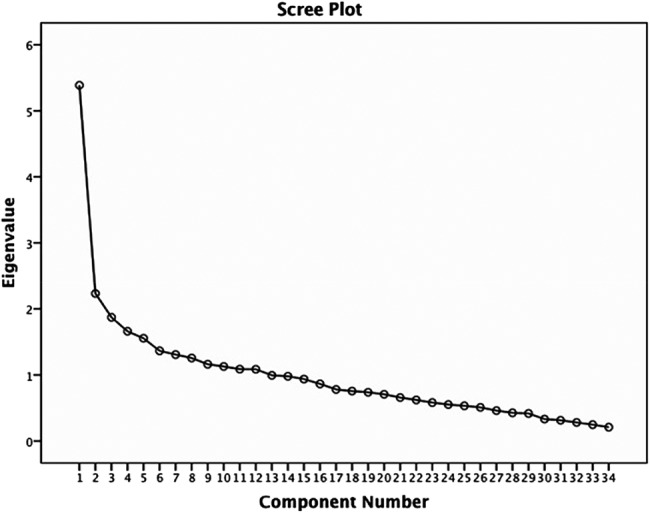

The KMO coefficient for DICCQ was 0.62 (acceptable value >0.5), confirming a sufficient degree of common variance and the factorability of the intercorrelation matrix of the 34 items. The first PCA explained 69.02% of the total variance, which implied that at least 50% of the variance could be explained by common factors; this is considered acceptable. This initial solution revealed 13 components with eigenvalues >1. However, the scree plot began to level off after two components, consistent with the number of subscales (figure 1). As the scree plot is considered more accurate in determining the number of factors to retain, especially when the sample size is ≥250 or the questionnaire has more than 30 items,42 a two-factor solution with varimax rotation was considered conceptually relevant and statistically appropriate for DICCQ. To interpret the factors correctly, the factor loadings were checked against Stevens' guideline of an acceptable value of 0.29–0.38 for a sample size of 200–300 participants.42 As the sample size in this study was 250, eight items with factor loadings of <0.3 were deleted: two items on study duration, four items on the funder/sponsor of the study and two items on the number of study participants. Five items (voluntary participation, rights of withdrawal, placebo, blinding and study purpose) were retained despite low factor loadings because they were theoretically important components of informed consent. The final PCA of the two-factor solution with 26 items (corresponding to the ‘recall’ and ‘understanding’ themes) accounted for 60.25% of the total variance. The factor loadings of the final PCA and their factorial weights are shown in table 3.
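The item retention rule described above (drop items loading below 0.3 unless they are theoretically essential) can be expressed as a short filter. The sketch below is illustrative only: the function name `retain_items`, the item keys and the loadings are hypothetical and are not the study's values.

```python
def retain_items(loadings, threshold=0.3, must_keep=frozenset()):
    """Return the names of items kept after applying the loading cut-off."""
    return [
        name
        for name, loading in loadings.items()
        if abs(loading) >= threshold or name in must_keep
    ]


# Hypothetical loadings on the retained component for five items
toy_loadings = {
    "withdraw_anytime": 0.31,
    "study_duration": 0.21,
    "sponsor_name": 0.18,
    "study_purpose": 0.25,   # below the cut-off but conceptually essential
    "study_activities": 0.62,
}

kept = retain_items(toy_loadings, must_keep={"study_purpose"})
print(kept)
```

Keeping the override list explicit makes it easy to report which items were retained on theoretical rather than statistical grounds.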

Figure 1.

Cattell's scree plot for the item-level factor analysis.

Table 3.

Principal component analysis (PCA) with varimax rotation: final two-component solution and Cronbach's α of each component

| Item | PCA factor loading |
|---|---|
| Recall items (n=17): closed-ended and multiple choice response formats (Cronbach's α=0.79) | |
| Told I can freely take part | 0.719 |
| Told I can withdraw anytime | 0.314 |
| Will know the study drug/vaccine | 0.552 |
| Unauthorised person will not know about my participation | 0.372 |
| Told the contact person | 0.540 |
| My participation can be stopped without my consent | 0.420 |
| Will I be paid for taking part | 0.395 |
| How were participants divided into groups | 0.403 |
| At what point can I leave the study | 0.371 |
| Meaning of signing/thumb-printing consent form | 0.390 |
| How I decided to take part | 0.429 |
| What will I receive as compensation | 0.520 |
| What will happen if I decide to withdraw | 0.464 |
| Reason for doing the parent study | 0.393 |
| Which are the study procedures | 0.489 |
| Which are the study activities | 0.617 |
| Which are the main benefits of taking part | 0.390 |
| Understanding items: open-ended response format (n=9; Cronbach's α=0.73) | |
| Describe the function of the study drug/vaccine | 0.647 |
| Mention the name of the contact person | 0.451 |
| Tell what researchers want to find in this study | 0.312 |
| Number of study visits | 0.492 |
| Tell what were done during the study visits | 0.498 |
| Describe how participants were divided | 0.689 |
| Tell the difference between taking part in a study and going to hospital | 0.464 |
| What are the possible unwanted effects of a study drug/vaccine | 0.388 |
| Why were participants given different drugs/vaccines | 0.437 |

Table 3 shows that the factorial weights of each item of the two components are greater than 0.3 and that Cronbach's α coefficient of each component is greater than 0.7, suggesting high internal consistency.

Internal consistency reliability

Cronbach's α computed for the item-reduced DICCQ was 0.79 for the ‘recall’ domain and 0.73 for the ‘understanding’ domain. This indicates a high correlation between the items and that the questionnaire is reliable.

Test–retest reliability

One hundred and twenty-six (51%) of 247 participants completed the second questionnaire at a mean of 7.5 days after the first administration. The mean age of respondents who had a retest was 36.9±15.1 years; 77 (60.6%) were women and 50 (39.4%) were men; 60 (47.2%) were from a rural setting while 67 (52.8%) lived in the city. The average time of administration was 18.5±5.4 min (range 9–39 min). An intraclass correlation coefficient of 0.94 (95% CI 0.923 to 0.954) was obtained, showing that the questionnaire was consistently reliable over the two periods of administration.

Validity

Convergent validity

To test the expected relationships between DICCQ and ICQ, we correlated total DICCQ scores with ICQ scores in the sample population (n=247). As expected, DICCQ was significantly positively correlated with the ‘understanding’ subscale of ICQ (r=0.306, p<0.001). These findings provide some evidence of convergent validity.

Discriminant validity

Also as predicted, DICCQ was significantly negatively correlated with the ‘satisfaction’ subscale of ICQ (r=−0.105, p=0.049), providing evidence of discriminant validity.
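For readers who wish to reproduce this type of analysis, Spearman's coefficient is the Pearson correlation of the rank-transformed scores, with tied values given their average rank. The following is a minimal sketch under that definition; the function names and the example scores are hypothetical and do not reproduce the study data.

```python
from math import sqrt


def average_ranks(values):
    """1-based ranks, with tied values sharing the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1   # mean of positions i+1 .. j+1
        for idx in order[i:j + 1]:
            ranks[idx] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def spearman_rho(x, y):
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical comprehension and satisfaction scores for six participants
toy_diccq = [52, 61, 47, 70, 58, 66]
toy_satisfaction = [4, 3, 5, 2, 4, 3]
print(f"rho = {spearman_rho(toy_diccq, toy_satisfaction):.3f}")
```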

Discriminative validity

As expected, there were statistically significant differences in DICCQ comprehension scores between female and male participants (z=8.8, p<0.001), between rural and urban participants (z=−11.1, p<0.001) and between educated and non-educated participants (z=4.27, p<0.001). This provides further evidence of construct validity (table 4).

Table 4.

Discriminative validity showing differences of comprehension scores by participants’ demographic variables

| Variable | Rank sum | Expected | Significance |
|---|---|---|---|
| Gender | | | |
| Male (n=91) | 5765.5 | 11 284 | z=8.80, p<0.001 |
| Female (n=156) | 24 862.5 | 19 344 | |
| Domicile | | | |
| Urban (n=129) | 7640.5 | 14 632 | z=−11.1, p<0.001 |
| Rural (n=118) | 22 987.5 | 15 996 | |
| Education status | | | |
| Educated (n=62) | 9765 | 7688 | z=4.27, p<0.0001 |
| No western education (n=185) | 20 863 | 22 940 | |

Table 4 shows that there were significant differences in the comprehension scores of participants based on gender, place of domicile and education status.
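The ‘Expected’ column in table 4 is the rank sum a group would have under the null hypothesis, n₁(N+1)/2, where n₁ is the group size and N the total sample size. The sketch below computes this value and the normal-approximation z statistic from the tabulated summary figures for education status. It assumes no correction for tied ranks, so statistical software that applies a tie correction may give slightly different values; the function names are hypothetical.

```python
from math import sqrt


def expected_rank_sum(n_group, n_other):
    """Rank sum expected for a group of size n_group under the null hypothesis."""
    return n_group * (n_group + n_other + 1) / 2


def rank_sum_z(rank_sum, n_group, n_other):
    """Normal-approximation z for the Mann-Whitney/Wilcoxon rank-sum test."""
    n_total = n_group + n_other
    # Standard deviation of the rank sum, assuming no tied ranks
    sd = sqrt(n_group * n_other * (n_total + 1) / 12)
    return (rank_sum - expected_rank_sum(n_group, n_other)) / sd


# Summary figures for education status as reported in table 4
z_education = rank_sum_z(rank_sum=9765, n_group=62, n_other=185)
print(expected_rank_sum(62, 185), round(z_education, 2))
```

With 62 educated and 185 non-educated participants, the expected rank sum for the educated group is 7688 and the resulting z is about 4.27, in line with the education row of the table.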

Discussion

This study reports the psychometric properties of a digitised audio informed consent comprehension questionnaire when tested in a sample of clinical trial participants in urban and rural settings in the Gambia. This is the first validation process of the questionnaire and the results suggest that it has good psychometric properties. The digitised audio questionnaire in local languages could be useful as a measure of comprehension of informed consent. DICCQ demonstrated good internal consistency and convergent, discriminant and discriminative validity. This study adds to knowledge by demonstrating that the digitised questionnaire can be developed and psychometrically evaluated in three different oral languages.

As expected, DICCQ scores were significantly positively correlated with the ‘understanding’ subscale of ICQ, and significantly negatively correlated with the ‘satisfaction’ subscale of the questionnaire. These significant correlations are evidence of convergent and discriminant validity of DICCQ, because DICCQ scores correlated with scores on ICQ in the theoretically expected directions. Furthermore, there were statistically significant differences in the participants’ scores on DICCQ based on their gender, domicile and education status (p<0.0001), providing evidence of the discriminative validity of the questionnaire. Taken together, these findings support the construct validity of DICCQ.

This innovative approach of developing and delivering questions has enabled a rapid measurement of informed consent comprehension in rural, remote and urban research settings. It overcomes the obstacles of multiple written translations, which are quite challenging in some African countries due to the lack of standardised written languages and low literacy. The use of orally recorded interpretations of the questionnaire and delivery through a digitised format ensured that the questions were consistently presented to all participants. Given that the communication skills of an interviewer could influence comprehension of the information, it was important that we used experienced native speakers to interpret the English version of the questionnaire into Gambian local languages that were understandable to the participants in rural and urban settings.

Nevertheless, we fully recognise that it could be counterproductive to depend solely on the technology of a tool to meet the comprehension assessment of participants during informed consent process; hence, we involved trained interviewers to administer the audio computerised questionnaire to the participants. In our study, we ensured that the research team had sufficient time to discuss participants’ concerns about the research, in addition to the use of the comprehension tool. Thus, we believe that the overall acceptance and success of the tool will ultimately depend on a well-balanced combination of the technology and human elements.38

The questionnaire software also has an in-built database which minimises errors in data entry and reduces data entry time. This improves the accuracy and quality of the data and ultimately the psychometric properties of the questionnaire.

Another important strength of this study is the reasonable sample sizes used in the rural and urban populations. Almost 99% of the participants for the first and retest questionnaires completed the study. The representative sample and high response rates could be due to the fact that the participants were recruited from ongoing clinical trials with regimented study visits. Also, the strategy used in administering the questionnaire in the local languages of choice of the participants encouraged greater participation and high retention rates.

A major limitation could be that this experience is very specific to the Gambia, a relatively small country with three major local languages. It may be challenging to translate this experience to other contexts. Also, although the scoring of all participant assessments by a single researcher eliminated inter-rater variability, it could have introduced rater-specific error, leading to underestimation or overestimation of participants’ comprehension scores. Nevertheless, this effort represents an important development towards improving informed consent comprehension. Until now, a lot of literature has explained the challenges of informed consent comprehension in resource-poor contexts, but few concrete recommendations have improved it. If DICCQ can help to identify elements of informed consent which are less understood in a specific context, then further work could be carried out with a multidisciplinary team and the community to develop better approaches, wordings and examples for describing those aspects which are more difficult to understand in that context. This will, in addition to improving participants’ comprehension, protect their freedom to decide, and also potentially improve the quality of data and outcome of the research.

Another limitation of this study is that known group validity and sensitivity to change could not be determined. This is because known group validity requires a strong a priori hypothesis that groups differ on the construct. There is insufficient previous research on informed consent comprehension to develop strong a priori expectations about differences in comprehension levels between different subgroups of participants. There is an expectation of higher comprehension levels when the tool is used following an intervention, which will make a preintervention and postintervention comparison test a test of sensitivity to change and of known group validity. This will be explored in a future study where the ability of the questionnaire to detect changes in the participants’ level of comprehension will be calculated by determining the effect size and the standardised response means.

Conclusions

DICCQ was developed using a combination of international and local guidelines. The present study suggests that the questionnaire has two factors, consistent with the definition proposed by Minnies et al,41 in which comprehension comprises recall and understanding components.

We conclude that DICCQ not only has good psychometric properties, but also has potential as a useful measure of comprehension of informed consent among clinical trial participants in low-literacy communities. As with all psychometric instruments, the evidence for the psychometric properties and usefulness of DICCQ for evaluating informed consent comprehension will be strengthened by further research. In particular, it will be important to (1) test the psychometric properties of the questionnaire in other African populations, (2) conduct long-term follow-up studies and (3) explore the properties of DICCQ in different phases of clinical trials, in particular preventive and therapeutic trials. This will enable predictive testing including further tests of known group validity; overall, it will also provide us with more reliable information to improve the process of informed consent in African contexts, in a relationship of mutual partnership between study participants and researchers.

Acknowledgments

The authors would like to thank Dr Jenny Mueller and Sister Vivat Thomas-Njie of the Clinical Trials Support Office of the Medical Research Council (MRC) Unit, The Gambia. They appreciate the support of Drs Alice Tang and Sugrue Scott of the School of Medicine, Tufts University, Boston, USA for developing the questionnaire software. They also acknowledge the support and cooperation of Drs Odile Leroy and Nicola Viebig of the European Vaccine Initiative, Germany as well as the clinical trial teams and participants at the Fajikunda and Walikunda field sites of MRC.

Footnotes

Contributors: MOA conceived, designed and conducted the study and also drafted the manuscript. KB, UD’A, MOCO, EBI, RR, HJL, NM and DC made substantial inputs to the analysis and interpretation of data, revision of the article and approved the final version of the manuscript.

Funding: This study is supported by a capacity building grant (IP.2008.31100.001) from the European and Developing Countries Clinical Trials Partnership.

Competing interests: NM was supported by a Wellcome Trust Fellowship (grant # WT083495MA).

Patient consent: Obtained.

Ethics approval: The ethics committee of the London School of Hygiene and Tropical Medicine, UK and the Gambia Government/Medical Research Council Joint Ethics Committee approved the study.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: No additional data are available.

References

- 1. Angell M. The ethics of clinical research in the Third World. N Engl J Med 1997;337:847–9

- 2. Annas GJ. Globalized clinical trials and informed consent. N Engl J Med 2009;360:2050–3

- 3. WMA. World Medical Association Declaration of Helsinki: ethical principles for medical research involving human subjects. Adopted by the 18th World Medical Association General Assembly, Helsinki, Finland, June 1964, and last amended by the 64th World Medical Association General Assembly in Fortaleza, Brazil, October 2013. http://jama.jamanetwork.com/article.aspx?articleid=1760318 (accessed 18 Nov 2013)

- 4. U.S. Department of Health & Human Services. The Belmont report: ethical principles and guidelines for the protection of human subjects of research. Washington, DC: U.S. Department of Health & Human Services, 1979. http://www.hhs.gov/ohrp/humansubjects/guidance/belmont.html (accessed 18 Nov 2013)

- 5. CIOMS. International ethical guidelines for biomedical research involving human subjects, prepared by the Council for International Organizations of Medical Sciences. 3rd edn Geneva, Switzerland, 2002. http://www.cioms.ch/publications/guidelines/guidelines_nov_2002_blurb.htm (accessed 2 Jun 2013)

- 6. Onvomaha Tindana P, Kass N, Akweongo P. The informed consent process in a rural African setting: a case study of the Kassena-Nankana district of Northern Ghana. IRB 2006;28:1–6

- 7. Ravinetto R, Tinto H, Talisuna A, et al. Health research: the challenges related to ethical review and informed consent in developing countries. G Ital Med Trop 2010;15:15–20

- 8. Fitzgerald DW, Marotte C, Verdier RI, et al. Comprehension during informed consent in a less-developed country. Lancet 2002;360:1301–2

- 9. Saidu Y, Odutola A, Jafali J, et al. Contextualizing the informed consent process in vaccine trials in developing countries. J Clin Res Bioeth 2013;141 doi:10.4172/2155-9627.1000141

- 10. Ravinetto RM, Grietens KP, Tinto H, et al. Medical research in socio-economically disadvantaged communities: a delicate balance. BMJ 2013;346:f2837

- 11. Woodsong C, Karim QA. A model designed to enhance informed consent: experiences from the HIV prevention trials network. Am J Public Health 2005;95:412–19

- 12. Penn C, Evans M. Assessing the impact of a modified informed consent process in a South African HIV/AIDS research trial. Patient Educ Couns 2010;80:191–9

- 13. Lindegger G, Milford C, Slack C, et al. Beyond the checklist: assessing understanding for HIV vaccine trial participation in South Africa. J Acquir Immune Defic Syndr 2006;43:560–6

- 14. Flory J, Emanuel E. Interventions to improve research participants’ understanding in informed consent for research: a systematic review. J Am Med Assoc 2004;292:1593–601

- 15. Sugarman J, Lavori PW, Boeger M, et al. Evaluating the quality of informed consent. Clin Trials 2005;2:34–41

- 16. Miller CK, O'Donnell DC, Searight HR, et al. The Deaconess Informed Consent Comprehension Test: an assessment tool for clinical research subjects. Pharmacotherapy 1996;16:872–8

- 17. Joffe S, Cook EF, Cleary PD, et al. Quality of informed consent: a new measure of understanding among research subjects. J Natl Cancer Inst 2001;93:139–47

- 18. Department of Central Statistics, The Gambia. Provisional figures for 2013 Population and Housing Census. http://www.gambia.gm/Statistics/Statistics.htm (accessed 5 Mar 2014)

- 19. World Bank Indicators 2012 – Gambia – Outcomes. http://www.tradingeconomics.com/gambia/literacy-rate-adult-total-percent-of-people-ages-15-and-above-wb-data.html (accessed 2 Feb 2013)

- 20. Afolabi MO, Adetifa UJ, Imoukhuede EB, et al. Early phase clinical trials with HIV-1 and malaria vectored vaccines in the Gambia: frontline challenges in study design and implementation. Am J Trop Med Hyg 2014;90:908–14

- 21. Tangwa GB. Research with vulnerable human beings. Acta tropica 2009;112(Suppl 1):S16–20

- 22. The Gambia Demographic Profile 2013. http://www.indexmundi.com/the_gambia/demographics_profile.html (accessed 3 May 2014)

- 23. Buccini L, Iverson D, Caputi P, et al. A new measure of informed consent comprehension: part I: instrument development. New South Wales, Australia: Faculty of Education, University of Wollongong, 2011

- 24. Buccini LD, Iverson D, Caputi P, et al. Assessing clinical trial informed consent comprehension in non-cognitively-impaired adults: a systematic review of instruments. Res Ethics Rev 2009;5:3–8

- 25. Mandava A, Pace C, Campbell B, et al. The quality of informed consent: mapping the landscape. A review of empirical data from developing and developed countries. J Med Ethics 2012;38:356–65

- 26. Marsh V, Kamuya D, Mlamba A, et al. Experiences with community engagement and informed consent in a genetic cohort study of severe childhood diseases in Kenya. BMC Med Ethics 2010;11:13

- 27. Marshall PA. Ethical challenges in study design and informed consent for health research in resource-poor settings. Special Topics in Social, Economics, Behavioural (SEB) Research report series; No. 5 WHO on behalf of Special Programme for Research and Training in Tropical Diseases 2007

- 28. Molyneux CS, Peshu N, Marsh K. Understanding of informed consent in a low-income setting: three case studies from the Kenyan Coast. Soc Sci Med 2004;59:2547–59

- 29. Mystakidou K, Panagiotou I, Katsaragakis S, et al. Ethical and practical challenges in implementing informed consent in HIV/AIDS clinical trials in developing or resource-limited countries. SAHARA J 2009;6:46–57

- 30. Nishimura A, Carey J, Erwin P, et al. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC Med Ethics 2013;14:28

- 31. Préziosi M-P, Yam A, Ndiaye M, et al. Practical experiences in obtaining informed consent for a vaccine trial in rural Africa. N Engl J Med 1997;336:370–3

- 32. Sand K, Kassa S, Loge JH. The understanding of informed consent information: definitions and measurements in empirical studies. AJOB Prim Res 2010;1:4–24

- 33. Guidelines for Scientists: Gambia Government/Medical Research Council Joint Ethics Committee. Banjul, 2000

- 34. DeVon HA, Block ME, Moyle-Wright P, et al. A psychometric toolbox for testing validity and reliability. J Nurs Scholarsh 2007;39:155–64

- 35. Guarino P, Lamping DL, Elbourne D, et al. A brief measure of perceived understanding of informed consent in a clinical trial was validated. J Clin Epidemiol 2006;59:608–14

- 36. Kline P. Handbook of psychological testing. London: Routledge, 2000

- 37. Nunnally JC, Bernstein IH. Psychometric theory. New York, NY: McGraw-Hill, 1994

- 38. Afolabi MO, Bojang K, D'Alessandro U, et al. Multimedia informed consent tool for a low literacy African research population: development and pilot-testing. J Clin Res Bioeth 2014;5:178

- 39. Kane M. Current concerns in validity theory. J Educ Meas 2001;38:319–42

- 40. Mokkink LB, Terwee CB, Patrick DL, et al. International consensus on taxonomy, terminology, and definitions of measurement properties for health related patient-reported outcomes: results of the COSMIN study. J Clin Epidemiol 2010;63:737–45

- 41. Minnies D, Hawkridge T, Hanekom W, et al. Evaluation of the quality of informed consent in a vaccine field trial in a developing country setting. BMC Med Ethics 2008;9:15

- 42. Stevens J, ed. Applied multivariate statistics for the social sciences. Lawrence Erlbaum Associates, 2002

- 43. Bryman A, Cramer D. Quantitative data analysis with SPSS 12 and 13: a guide for social scientists. Routledge, London and New York: Taylor and Francis Group, 2005:327–5

- 44. Tabachnick BG, Fidell LS. Using multivariate statistics. New York: Harper Collins College Publishers, 2008

- 45. Terwee CB, Bot SD, de Boer MR, et al. Quality criteria were proposed for measurement properties of health status questionnaires. J Clin Epidemiol 2007;60:34–42

- 46. DeVellis RF. Scale development: theory and application (applied social sciences methods series). Newbury Park: Sage, 1991
