Summary

Objective

Several studies have documented physicians’ preference for attending to the impression and plan section of a clinical document. However, it is not clear how much attention other sections of a document receive. The goal of this study was to identify how physicians distribute their visual attention while reading electronic notes.

Methods

We used an eye-tracking device to assess the visual attention patterns of ten hospitalists as they read three electronic notes. The assessment included the time spent reading specific sections of each note as well as reading rates. This visual analysis was compared with the content of simulated verbal handoffs for each note and with debriefing interviews.

Results

Study participants spent the most time in the “Impression and Plan” section of electronic notes and read this section very slowly. Sections such as the “Medication Profile”, “Vital Signs”, and “Laboratory Results” received less attention and were read very quickly, even when they contained more content than the impression and plan. Only 9% of the content of physicians’ verbal handoffs could be found exclusively outside the “Impression and Plan.”

Conclusion

Physicians in this study directed very little attention to medication lists, vital signs, or laboratory results compared with the impression and plan section of electronic notes. Optimizing the design of electronic notes may include rethinking the amount and format of imported patient data, as these data appear to be largely ignored.

Keywords: Electronic health records, documentation, attention, information seeking behavior, humans

1. Background

Electronic health records (EHRs) have the potential to improve clinical documentation and patient care by improving the legibility and accessibility of patient notes [1, 2, 3]. Components of electronic clinical documentation include narrative text, structured data entry, and/or directly imported patient data such as vital signs, laboratory results, or medication lists. Each data format has potential benefits and limitations for the entry and retrieval of information in electronic documentation. For example, narrative text enables unrestricted entry of patient information and is praised for its ability to capture and communicate cohesive patient histories [4]. However, narratives are not easily searched and do not typically support features such as clinical decision support. By contrast, structured data entry can assist electronic documentation through the use of pre-defined data fields, although physicians complain that it can be cumbersome [5, 6]. Electronic documents can also import large volumes of patient data directly into the note, although there are concerns that this feature has clogged electronic documents with too much data, making it more difficult to glean meaningful information from them [7, 8, 9].

Understanding how clinicians obtain information from electronic clinical documents is becoming more important as hospitalized patients are increasingly cared for by teams of physicians who may have limited time to familiarize themselves with a patient’s history. While physicians have many information sources available when caring for a hospitalized patient, the electronic progress note is frequently used to gather an up-to-date assessment of the patient’s condition [10]. A recent sequential pattern analysis of EHR navigation in an ambulatory clinic showed that physicians seem to jump to specific areas of a patient chart (e.g., assessment and plan) over others (e.g., medication lists) without regard to the sequential design of the EHR [11]. This has led some to propose redesigning clinical notes to place the assessment and plan at the beginning of the note (e.g., APSO notes) or to limit the amount of imported data to lessen the impact of “note-bloat” on a document’s usability [12, 13, 14]. However, it is not clear how physicians attend to different areas of a clinical note, or whether sections other than the impression and plan receive attention in proportion to the amount of information they contain.

2. Objectives

The purpose of this study is to use an eye-tracking device to assess how physicians allocate their visual attention when reading electronic notes and to determine whether their attention is influenced by the volume of information within a particular section of a note. If physicians attend to areas of a note based on the volume of information, we would anticipate that they would take more time to read notes that contain more information. Similarly, within a given note, we would expect physicians to spend relatively more time reading sections that contain proportionally more information. If we can objectively identify how physicians direct their visual attention to different sections of a note, we may be able to better inform design changes for electronic notes.

3. Methods

3.1 Setting and Sample

This study was conducted at an academic medical center that has used an enterprise-wide EHR (Cerner Millennium, Kansas City, MO) since 2005. Forty-three hospitalists were invited to take part in this study through a combination of email announcements and in-person presentations at faculty meetings. Enrollment was stopped after we reached a convenience sample of ten physicians.

3.2 Study Procedure

Each subject read three de-identified electronic progress notes on a computer screen (progress notes are included in the appendix). To limit the impact that a patient’s length of stay in a hospital might have on the content and volume of the notes, the three progress notes were all taken from the second hospital day of an adult medicine service. We chose the first progress note of the hospital stay to limit the amount of material copied and pasted from other progress notes. The notes were produced using the EHR’s proprietary note-writing software (PowerNote). This tool allows notes to be produced through a combination of narrative text, data importation and the selection of structured text (point-and-click). The volume and content of imported data can vary at the discretion of each note’s author.

All of the notes described patients with a primary diagnosis of congestive heart failure, although other diagnoses, such as pneumonia, chest pain, dyspnea, atrial fibrillation, and hypertension, were present to varying degrees. Notes 1 and 2 each included six diagnoses, while Note 3 included seven. To limit the chance that a hospitalist might be personally familiar with a particular note, we chose all of the samples from hospital visits that occurred eighteen months before the study was conducted. The three notes were written by different authors.

To preserve patient confidentiality, images of the notes were taken using screen capture software (TechSmith, Okemos, MI) and de-identified by redacting data such as the patient’s name, date of birth, and medical record number. These de-identified images were transferred into an electronic document (Microsoft, Redmond, WA) with no page breaks, allowing subjects to scroll through the notes on a computer screen in a manner similar to the way electronic notes appear in the EHR. All of the notes were organized with the same subject headings (e.g., Overnight Events, Review of Systems, Physical Exam) but varied in the amount of narrative text, structured data entry, and imported data within each heading.

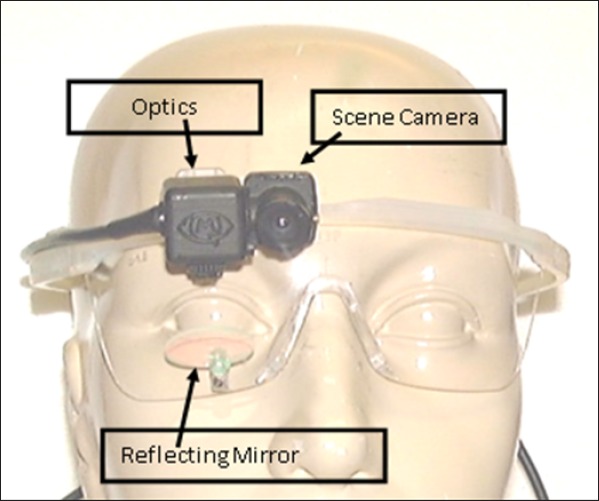

Subjects viewed the notes on a computer screen as if they were in the EHR and were instructed to read each note at their own pace, with no time limit. To record their visual attention patterns, subjects wore a portable eye-tracking device while reading. The ASL Mobile Eye (Applied Science Laboratories, Bedford, MA) is a lightweight (76 grams), tetherless eye-tracking device that includes a scene camera, eye optics, an infrared light source, and an infrared-reflecting monocle, all mounted on safety glasses (Figure 1). The Mobile Eye software overlays cross hairs on the scene video, indicating where subjects fixated as they read the notes and conducted the handoffs. This type of visual scanning data is commonly used as a proxy for visual attention [15, 16].

Fig. 1.

Eye tracker image

After the eye-tracking device was calibrated for each participant, subjects were asked to read each note one at a time and dictate a verbal handoff summarizing the case as if they were transitioning care to another hospitalist. We added the verbal handoff task to establish a specific context for the participants and to provide a way to analyze the link between where participants directed their visual attention and what they thought was important to convey in their summary. This approach reduces the probability of drawing erroneous conclusions based solely on observations of visual scanning patterns. After reading and conducting handoffs for the three notes, we asked each subject the following debriefing questions:

Were the notes believable?

What strategies do you typically use to read notes?

How does context influence how you approach reading a note?

The dictations and interviews were recorded, transcribed and analyzed for content.

3.3 Note Analysis

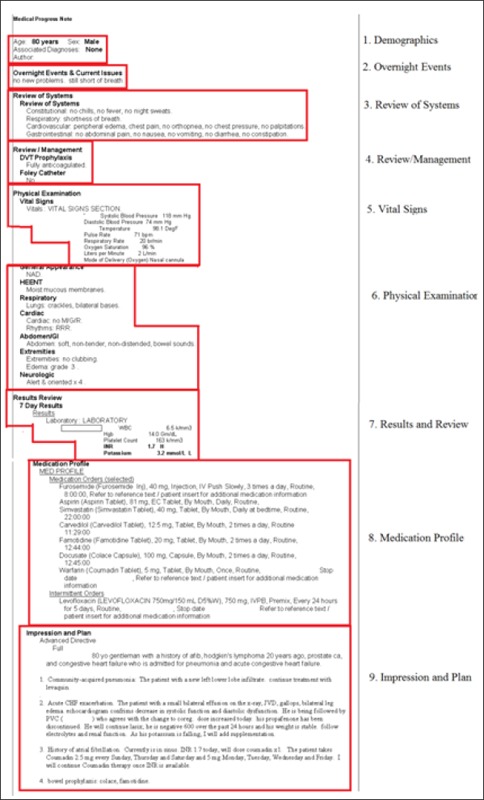

Because all of the notes used the same heading structure (e.g., “Overnight Events” always preceded “Review of Systems”, which was followed by “Physical Examination”), it was possible to map each note into nine “zones”, shown in Figure 2. Because different zones contained different types of data (e.g., numbers in the vital signs and laboratory results, and words in other zones), we used character count rather than word count to standardize the volume of data in each zone. We then compared the character count of each zone across the three notes.
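The zone-level volume measure can be sketched in a few lines of Python. This is a minimal illustration, assuming each zone's text has already been extracted as a string keyed by zone name; the function name, zone names, and sample strings are hypothetical, and whether whitespace counted toward volume is not stated in the study, so it is left as a parameter.

```python
def zone_character_profile(
    zones: dict[str, str], count_whitespace: bool = True
) -> dict[str, tuple[int, float]]:
    """Return {zone: (character_count, percent_of_note)} for one note.

    Whether whitespace counted toward a zone's volume is not stated in the
    study, so it is exposed as a parameter (an assumption).
    """
    counts: dict[str, int] = {}
    for name, text in zones.items():
        if count_whitespace:
            counts[name] = len(text)
        else:
            counts[name] = sum(1 for ch in text if not ch.isspace())
    total = sum(counts.values())
    # Guard against an empty note to avoid dividing by zero.
    return {
        name: (n, 100.0 * n / total if total else 0.0)
        for name, n in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical zone texts; these are not excerpts from the study notes.
    note = {
        "Vital Signs": "T 37.1 HR 88 BP 132/78",
        "Impression and Plan": "CHF exacerbation; continue IV diuresis.",
    }
    for zone, (chars, pct) in zone_character_profile(note).items():
        print(f"{zone}: {chars} characters ({pct:.1f}% of note)")
```

Counting characters rather than words treats a laboratory value such as “132/78” and a word of similar length as comparable volumes, which is the motivation given above for the choice.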

Fig. 2.

Note with zones

3.4 Visual Attention Analysis

Visual attention was measured from the eye-tracking data in terms of “fixations” and “glances”. A fixation is defined as visual attention directed at the same location for 0.1 seconds or more, while a glance is defined as a series of consecutive fixations within an area of interest [17]. The eye tracker is accurate to within ±0.5 degrees of visual angle. To account for this level of accuracy, fixations were counted if the subject looked within a 1 cm border around one of the nine pre-defined zones (±0.5 degrees ≈ 1.1 cm in our study). We used ASL Result Pro software (Bedford, MA) to determine the locations and durations of subjects’ fixations. This software allows the investigator to draw boxes marking where each zone begins and ends. Two analysts reviewed the video frame by frame to verify that the boxes were in the correct locations. We then analyzed the total time each subject spent reading each note and the time they spent glancing at the text within each zone.
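A minimal sketch of how fixations might be assigned to padded zones and grouped into glances is shown below. It assumes fixations have already been detected and exported with an onset time, a duration, and a screen position in centimeters; the `Fixation` and `Zone` types, the rule that the first matching zone wins when padded boxes overlap, and the way glance duration is measured are illustrative assumptions, not the behavior of the study's analysis software.

```python
from dataclasses import dataclass
from typing import Optional

MIN_FIXATION_S = 0.1  # fixation threshold from the study's definition
BORDER_CM = 1.0       # tolerance border added around each zone


@dataclass
class Fixation:
    start_s: float     # onset time in seconds
    duration_s: float  # how long gaze stayed at this location
    x_cm: float        # horizontal screen position (hypothetical coordinates)
    y_cm: float        # vertical screen position


@dataclass
class Zone:
    name: str
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float, border: float = BORDER_CM) -> bool:
        # Point-in-rectangle test with the box grown by `border` on every side.
        return (self.left - border <= x <= self.right + border
                and self.top - border <= y <= self.bottom + border)


def assign_zone(fix: Fixation, zones: list[Zone]) -> Optional[str]:
    """Return the first zone whose padded box contains the fixation, else None.

    If padded boxes overlap, the earlier zone in the list wins (an assumption).
    """
    for zone in zones:
        if zone.contains(fix.x_cm, fix.y_cm):
            return zone.name
    return None


def glances(fixations: list[Fixation], zones: list[Zone]) -> list[tuple[str, float]]:
    """Group consecutive fixations in the same zone into (zone, duration_s) glances.

    Duration runs from the first fixation's onset to the last fixation's end.
    Sub-threshold fixations are skipped and do not break a glance; a fixation
    outside every zone does end the current glance. Both are assumptions.
    """
    result: list[tuple[str, float]] = []
    current: Optional[str] = None
    start = end = 0.0
    for fix in sorted(fixations, key=lambda f: f.start_s):
        if fix.duration_s < MIN_FIXATION_S:
            continue
        zone = assign_zone(fix, zones)
        if zone is not None and zone == current:
            end = fix.start_s + fix.duration_s  # extend the ongoing glance
            continue
        if current is not None:
            result.append((current, end - start))  # close the previous glance
        current = zone
        start, end = fix.start_s, fix.start_s + fix.duration_s
    if current is not None:
        result.append((current, end - start))
    return result


def glance_time_by_zone(glance_list: list[tuple[str, float]]) -> dict[str, float]:
    """Total glance time per zone, the quantity compared across zones."""
    totals: dict[str, float] = {}
    for zone, seconds in glance_list:
        totals[zone] = totals.get(zone, 0.0) + seconds
    return totals
```

Padding each box by the tracker's error margin trades a small risk of crediting a neighboring zone for fewer missed fixations near zone edges; the overlap rule above is one arbitrary way to settle such ties.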

Principal outcomes included raw reading time in seconds and reading rate in seconds per character (reading time in seconds divided by the number of characters in the zone). When necessary, dependent variables were transformed to meet assumptions of normality and examined using repeated-measures analysis of variance or linear mixed models with “individual” specified as a random effect. Fixed effects included categorical variables for note (3 levels) and zone (9 levels); these were tested in a backwards fashion using the Wald test (alpha = 0.05). Once the final models were specified, marginal means were estimated with 95% confidence intervals derived using the delta method. Pairwise comparisons were performed using linear combinations of coefficients. Statistical analyses were performed in Microsoft Excel or Stata/MP 12.1 for Windows (Copyright 1985–2011 StataCorp LP).
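The reading-rate outcome is simple arithmetic, and ratios of this kind are often right-skewed, which is one common reason to transform them before fitting a linear model. The sketch below assumes a log transformation (the study states only that variables were transformed when necessary) and summarizes rates per zone as geometric means; it does not reproduce the mixed model with subject as a random effect. Function names are hypothetical.

```python
import math
from collections import defaultdict


def reading_rate(glance_seconds: float, characters: int) -> float:
    """Seconds per character for one subject reading one zone."""
    if characters <= 0:
        raise ValueError("a zone must contain at least one character")
    return glance_seconds / characters


def geometric_mean_rate_by_zone(observations):
    """Summarize reading rates per zone on the log scale.

    observations: iterable of (zone, glance_seconds, characters) tuples.
    Averaging log rates and exponentiating gives a geometric mean per zone.
    The log transform is an assumed choice; zero reading times cannot be
    logged, so they are rejected rather than silently offset.
    """
    log_rates = defaultdict(list)
    for zone, seconds, chars in observations:
        rate = reading_rate(seconds, chars)
        if rate <= 0:
            raise ValueError("log transform needs a positive reading time")
        log_rates[zone].append(math.log(rate))
    return {zone: math.exp(sum(v) / len(v)) for zone, v in log_rates.items()}
```

A higher value means slower reading: more seconds spent per character of text in that zone.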

3.5 Verbal Handoff Analysis

The audiotaped handoffs were transcribed and organized into discrete statements for the purpose of coding. A second author reviewed the discrete statements, and disagreements about how the text was organized were resolved through discussion. Discrete statements were then coded by two investigators using the “SIGNOUT” categories previously developed for describing elements of a handoff [18]. The investigators coded each of the 30 transcripts independently. The first note (10 transcripts) was used to develop consensus in coding, and the remaining two notes (20 transcripts) were used to assess inter-rater reliability [19]. The SIGNOUT categories include 8 pre-defined elements of a handoff:

Sick or DNR (highlights unstable patients and DNR/DNI status)

Identifying Data (name, age, gender, diagnosis)

General hospital course (hospital course up to the current hospital day)

New events of day (specific events on the current hospital day)

Overall health status/Clinical condition

Upcoming possibilities with plan, rationale (theoretical concerns)

Tasks to complete overnight with plan, rationale (specific instructions)

Any questions? (opportunity for the recipient of sign-out to ask questions).

A “0” code was used for statements that were not pertinent to these elements. Because subjects in this study were providing an audiotaped handoff, there was no opportunity for questions. Therefore, we excluded the last element of SIGNOUT and replaced it with an “other” category, to which we mapped statements that both coders felt did not fit any of the other seven categories.
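The inter-rater reliability step described above can be illustrated with a short sketch. The study reports reliability as a single percentage without naming the statistic, so percent agreement is shown here as an assumption, alongside Cohen's kappa as a commonly used chance-corrected alternative. The code lists in the usage example are hypothetical SIGNOUT category numbers, not study data.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of statements (in %) to which both coders assigned the same code."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equal-length, non-empty code lists")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders."""
    n = len(coder_a)
    if n != len(coder_b) or n == 0:
        raise ValueError("need two equal-length, non-empty code lists")
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    # Expected agreement if each coder assigned codes independently at their own rates.
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical category throughout (convention)
    return (observed - expected) / (1 - expected)


if __name__ == "__main__":
    # Hypothetical SIGNOUT codes for ten statements from two coders.
    a = [2, 3, 3, 6, 7, 3, 3, 2, 8, 3]
    b = [2, 3, 3, 6, 6, 3, 3, 2, 8, 3]
    print(percent_agreement(a, b), round(cohens_kappa(a, b), 3))
```

Kappa is lower than raw agreement whenever one category (here, General hospital course) dominates, because coders would often agree on it by chance alone.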

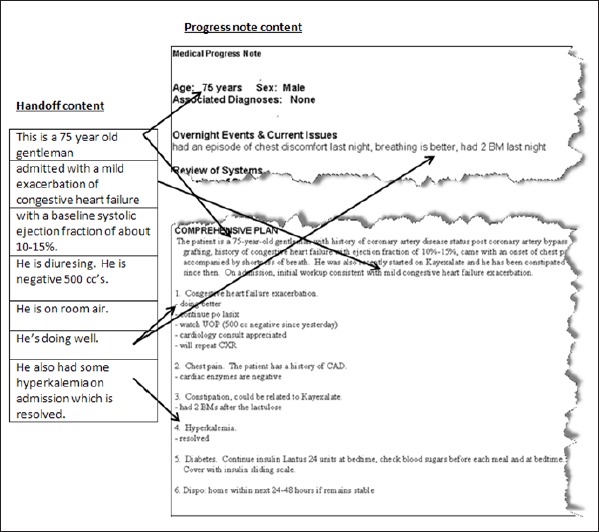

To map the handoff information within each SIGNOUT category to specific zones of the corresponding note, each statement was matched to every zone where that information could possibly be found. If a statement could be found in more than one zone, all such zones were included as possibilities. For example, a statement such as “This is a 75 year old gentleman” could be found both in the Demographic zone of the note (where age and gender are included) and in the Impression and Plan zone. An example of this method is illustrated in Figure 3. After assigning zones to each statement, we calculated the percentage of the total statements in each zone for that note and compared percentages across zones.
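The mapping rule above, together with the four groupings reported in the Results (Section 4.5 and Table 2), can be expressed as a small classification function. This is a sketch under the assumption that each statement's candidate zones have already been identified by hand; the zone names and example statements are hypothetical.

```python
from collections import Counter

IMPRESSION_AND_PLAN = "Impression and Plan"


def source_category(candidate_zones):
    """Classify one handoff statement by the zones where its content appears."""
    zones = set(candidate_zones)
    if not zones:
        return "No identified zone"
    if zones == {IMPRESSION_AND_PLAN}:
        return "Impression and Plan only"
    if IMPRESSION_AND_PLAN in zones:
        return "Impression and Plan & other zones"
    return "Zones excluding Impression and Plan"


def tabulate(statements):
    """statements: one collection of candidate zones per statement.

    Returns {category: (count, percent of all statements)}.
    """
    counts = Counter(source_category(z) for z in statements)
    total = len(statements)
    return {cat: (n, 100.0 * n / total) for cat, n in counts.items()}


if __name__ == "__main__":
    # Hypothetical statements and their candidate zones.
    statements = [
        ["Demographics", IMPRESSION_AND_PLAN],  # e.g., "This is a 75 year old gentleman"
        [IMPRESSION_AND_PLAN],                  # e.g., a plan item stated only there
        ["Vital Signs"],                        # e.g., a vital sign not restated in the plan
        [],                                     # e.g., "That's it"
    ]
    for category, (n, pct) in tabulate(statements).items():
        print(f"{category}: {n} ({pct:.0f}%)")
```

Because a statement with any Impression and Plan candidate is never counted in the last two groups, the “Zones excluding Impression and Plan” share is a conservative estimate of content that readers could only have obtained elsewhere in the note.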

Fig. 3.

Handoff mapping

4. Results

4.1 Subjects

Our sample (n = 10) included five men and five women with an average age of 37 years (range from 34 to 42 years). The average time since the completion of residency for the group was 6 years (range from 3 to 8 years).

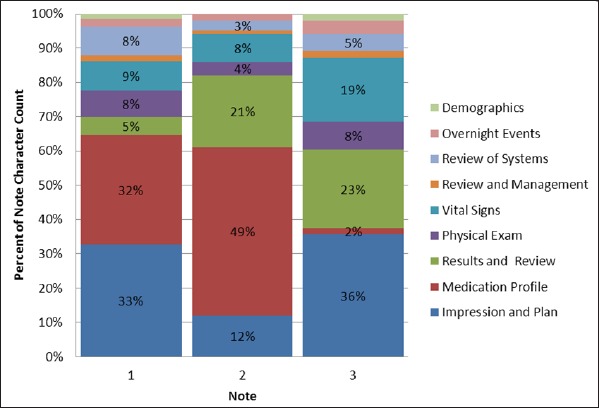

4.2 Note Analysis

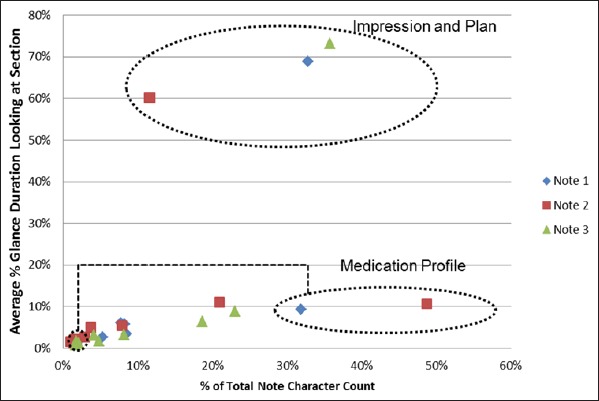

Figure 4 displays the percentage of characters located in each zone of the notes. On average, the “Impression and Plan” and “Medication Profile” zones showed the highest relative volumes of text, although there was considerable variation in the volume of text between notes. For example, approximately one third of the volume of Note 1 was contained in the “Impression and Plan” zone, with another third in the “Medication Profile”. Note 3 also had about a third of its volume in the “Impression and Plan” but only 2% of its volume in the “Medication Profile”. By contrast, Note 2 had only 12% of its volume in the “Impression and Plan” zone but almost 50% of the note volume in the “Medication Profile”.

Fig. 4.

Percent character count by zone.

4.3 Visual Attention Analysis

The average reading duration across all notes was 112 seconds. Average reading times ranged from 103 to 121 seconds across the three notes, with additional variation among the ten subjects’ average reading times (range 62 to 193 seconds). In unadjusted analyses, a two-way ANOVA showed significant differences between subjects (F-test p < 0.001) and between notes (F-test p = 0.04).

When comparing reading duration with character count, we found that subjects on average took more time to read notes with a greater volume of text, even after adjusting for zone and note (adjusted estimate +0.005 seconds per additional character, 95% CI 0.002 to 0.009). For example, Note 2 had the highest character count (5371) and on average took the most time to read (121 seconds), while Note 3 had the lowest character count (2096) and on average took the least time to read (103 seconds). A significant linear association was found between time spent reading and character count (univariable R² = 0.24, p < 0.001) when assessing the entire note.
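The univariable association between reading time and character count is an ordinary least-squares fit. The standard-library sketch below returns the intercept, slope, and R²; it does not adjust for note or zone as the study's adjusted estimate does, and the data points in the usage example are hypothetical.

```python
def simple_linear_fit(x, y):
    """Ordinary least squares for y = intercept + slope * x.

    Returns (intercept, slope, r_squared).
    """
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x must vary")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    if ss_tot == 0:
        raise ValueError("y must vary")
    # R^2 = 1 - residual sum of squares / total sum of squares
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return intercept, slope, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical (characters, seconds) pairs, one per subject-note reading.
    chars = [2100, 2100, 3800, 3800, 5400, 5400]
    seconds = [90, 115, 100, 125, 110, 140]
    b0, b1, r2 = simple_linear_fit(chars, seconds)
    print(f"slope = {b1:.4f} s/char, R^2 = {r2:.2f}")
```

For scale, the adjusted estimate of +0.005 seconds per character corresponds to roughly 5 additional seconds of reading time for every additional 1,000 characters.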

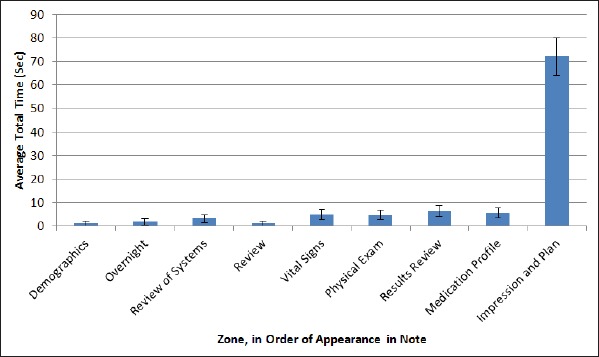

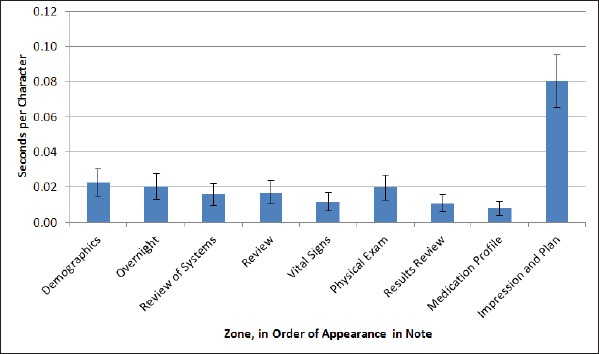

Within notes, physicians spent most of their time reading the “Impression and Plan” rather than other zones. As shown in Figure 5 and Figure 6, subjects demonstrated the longest glance duration and slowest reading rate in this zone for each note (p < 0.001, all pairwise comparisons). By contrast, subjects spent very little time in other zones such as the “Medication Profile”. Figure 7 highlights the stability of this association across character count and note: irrespective of the percent character count (horizontal axis), the Impression and Plan zone consistently receives the highest percent glance duration in all three notes. Conversely, the Medication Profile zone consistently receives the lowest percent glance duration, regardless of the amount of data contained within that zone.
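Figure 7 compares each zone's share of glance time with its share of the note's characters. The sketch below computes both shares for one note, plus a time-to-text ratio (greater than 1 when a zone draws more attention than its volume alone would predict). The ratio, the function name, and the input numbers are illustrative assumptions, not a metric or data from the study.

```python
import math


def attention_vs_volume(glance_seconds, characters):
    """Compare each zone's share of reading time with its share of text.

    glance_seconds, characters: dicts keyed by zone name for one note.
    Returns {zone: (pct_time, pct_chars, ratio)} for zones in `characters`;
    ratio is NaN for a zone with no characters.
    """
    total_time = sum(glance_seconds.values())
    total_chars = sum(characters.values())
    if total_time <= 0 or total_chars <= 0:
        raise ValueError("need positive total reading time and character count")
    result = {}
    for zone, chars in characters.items():
        pct_time = 100.0 * glance_seconds.get(zone, 0.0) / total_time
        pct_chars = 100.0 * chars / total_chars
        ratio = pct_time / pct_chars if pct_chars else math.nan
        result[zone] = (pct_time, pct_chars, ratio)
    return result


if __name__ == "__main__":
    # Hypothetical numbers for a single note.
    seconds = {"Impression and Plan": 60, "Medication Profile": 10, "Laboratory Results": 30}
    chars = {"Impression and Plan": 300, "Medication Profile": 600, "Laboratory Results": 100}
    for zone, (t, c, r) in attention_vs_volume(seconds, chars).items():
        print(f"{zone}: {t:.0f}% of time, {c:.0f}% of text, ratio {r:.2f}")
```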

Fig. 5.

Average glance duration (in seconds)

Fig. 6.

Average reading rate (seconds per character).

Fig. 7.

Glance duration & character count.

4.4 Verbal Handoff Content Analysis

The verbal handoffs contained between 68 and 76 discrete statements per note. Inter-rater reliability for category assignment was 90%. The most common codes assigned were #3 General Hospital Course and #2 Identifying Data (Table 1).

Table 1.

Statement count by note and SIGNOUT category

| Category | Note 1 | Note 2 | Note 3 |
|---|---|---|---|
| 1. Sick or DNR | 0 | 0 | 0 |
| 2. Identifying data | 10 | 12 | 11 |
| 3. General hospital course | 36 | 37 | 44 |
| 4. New events of the day | 2 | 6 | 0 |
| 5. Overall health status | 5 | 4 | 5 |
| 6. Upcoming possibilities | 6 | 8 | 8 |
| 7. Tasks to complete | 6 | 8 | 3 |
| 8. Other | 3 | 1 | 3 |
| Total | 68 | 76 | 74 |

4.5 Verbal Handoff Mapping

The coded statements mapped to one of four categories: “Impression and Plan exclusively”, “Impression and Plan & other zones”, “Zones other than Impression and Plan exclusively”, and “No identified zone”. As Table 2 shows, most coded statements could be mapped to the “Impression and Plan” zone of the note, either exclusively (36%) or in combination with other zones (45%). Together, these two groups accounted for 81% of the statements in the verbal handoffs; only 9% of statements could be found solely in zones other than the “Impression and Plan”, and 10% were mapped to “No identified zone”. Examples of statements with no identified zone include comments such as “That’s it” and “Done”.

4.6 Debriefing Interviews

When we asked subjects whether the notes were believable, all agreed that the images we provided were reasonable representations of the way progress notes appeared in the electronic health record. We then asked subjects to describe a general strategy they use when reading a progress note. In most cases, subjects described skimming or ignoring zones of the note other than the “Impression and Plan”:

When I look to give sign-out I tend to go straight to the summary section of the ‘Assessment and Plan’ because that tells you what’s been going on with the patient since they’ve been in the hospital. I tend to gloss over the “Physical Exam” and I’ll gloss over a lot of the “Labs” that get imported in.

We also asked subjects to comment on other contexts in which they look at a progress note and how the way they approach a note is influenced by context. Subjects pointed out that they mostly use the progress note if they are picking up a patient for the first time or are being called about a patient as a covering provider overnight. In the first context, the progress note is typically used in addition to other sources of information (such as the admission note) while for overnight coverage of patients the progress note is used to obtain a quick summary of the patient’s clinical story. In both cases, the “Impression and Plan” is perceived as a valuable summary of patient information, while other zones are not.

5. Discussion

The purpose of this study was to assess how physicians direct their attention while reading electronic progress notes. We used an eye-tracking device to observe physicians’ visual attention patterns while they read the sample progress notes, and compared these patterns with the information they included in a simulated verbal handoff. Physicians spent a great deal of time reading the “Impression and Plan” zone of the notes (67% of total reading time), which dwarfed the time spent on the next most-viewed zones: “Laboratory Results” (8%) and the “Medication Profile” (7%). Our results also showed that the time spent reading a particular zone, and the rate of reading, had very little relation to the volume of data in that zone. Indeed, zones that sometimes contained the most text, such as the medication list, were often read the most quickly, suggesting that including large volumes of patient data may not add proportional value to a note. When we assessed where the information in the transcribed verbal handoffs was located in the note, we found that only 9% could be found exclusively in zones other than the “Impression and Plan”.

Physicians’ preference for the “Impression and Plan” is consistent with prior studies that highlight the role of narrative sources of patient information [20, 21]. This preference may arise because the “Impression and Plan” section contains summary information and thus allows more efficient access to a patient’s story. It is also possible that this section is preferred because it contains more naturalistic language than other sections of the note. Narrative text has been reported to be more reliable and understandable to providers [21], and it is possible that a note written entirely in prose might show a different visual scanning pattern.

Beyond demonstrating physicians’ preference for the “Impression and Plan”, the use of eye-tracking data in this study offered a chance to study physicians’ attention patterns more quantitatively. Specifically, we were able to show not only that physicians spend more time on the “Impression and Plan”, but also that they slow down when reading this zone while apparently racing through zones such as the “Medication Profile”. This methodology provides evidence to support physicians’ qualitative assessment of their approach to clinical documents.

5.1 Limitations

There are several limitations that may affect the study’s generalizability. These include a relatively small sample size (both the number of physician volunteers and the number of notes) and the use of notes from one proprietary EHR at a single institution. With respect to sample size, our study was conducted in a convenience sample of hospitalists who may or may not be representative of other clinicians. For example, our volunteers may be more comfortable using new technologies, and they may have more experience reading and interpreting clinical notes than their peers. Other clinicians, such as nurses, pharmacists, respiratory therapists, or consultants, may rely on different parts of the note (e.g., medications) than the hospitalists did. While the sample size for this study was small, the consistency of results across subjects suggests that the tendency to preferentially skim over zones other than the “Impression and Plan” reflects nonrandom behavior. In addition, this study examined only inpatient progress notes from an adult medicine service. It is not clear whether the pattern observed in this study would hold across different medical services (e.g., Surgery, Obstetrics, Emergency Medicine). Further, this study focused only on the value of progress notes for conveying clinical content to physicians for sign-out. While this may be one goal for patient notes, clinical notes are also used to support billing and to provide evidence of care for medico-legal purposes. We did not assess how visual attention might vary depending on these contexts.

5.2 Directions for Future Research

Future studies are needed to explore whether these findings can be replicated in different service lines (e.g., surgery, pediatrics, obstetrics), for different types of notes, in different EHRs, or for purposes other than physician sign-out. Further research should also help determine whether and how the context and purpose of information needs influence how physicians navigate the medical record. If the pattern found in this study is seen in other settings, it would be worth studying the impact of different types of data display on the efficiency and ease of using notes. It might also be helpful to study how progress notes written on subsequent hospital days, where copy-and-paste may be used, affect how and where physicians direct their attention. This work would build on existing efforts to establish reliable means of assessing the overall quality of clinical notes [22, 23] and could help establish guidelines to improve the usability of patient information in electronic notes. Research is also needed to determine whether physicians’ attention to the impression and plan zone causes them to miss important elements of a patient’s history recorded elsewhere in the note.

6. Conclusion

In this study, we used eye-tracking technology to evaluate physicians’ visual attention patterns as they read three progress notes, using verbal handoffs as a context to determine what information in the notes subjects thought was most important. Despite variation in the volume of data in different zones of the notes, subjects overwhelmingly skimmed over zones containing imported patient data, while fixating largely on the “Impression and Plan” zone of the notes. Consistent with this finding, the verbal handoffs contained very little information found exclusively outside the “Impression and Plan”. Subjects’ responses to the debriefing questions suggest that they are aware of the differences in how they attend to various sections of a note.

Another contribution of this research is the application of eye tracking as a means of correlating attention (where physicians directed their gaze in the EHR) with intention (what they included in a simulated handoff). Eye tracking adds objective data to the picture of how physicians navigate through an electronic note. The results demonstrate a consistent mapping between what the subjects considered important in their handoff and where they directed their visual attention, presumably to gather this information. These findings suggest that eye-tracking technology can be a useful tool in future studies assessing efforts to optimize the design and content of electronic documentation.

Table 2.

Potential sources of handoff information across notes

| Potential sources of information | Note 1, n (%) | Note 2, n (%) | Note 3, n (%) | All, n (%) |
|---|---|---|---|---|
| Impression and Plan only | 12 (18%) | 29 (38%) | 37 (50%) | 78 (36%) |
| Impression and Plan & other zones | 40 (59%) | 36 (47%) | 23 (31%) | 99 (45%) |
| Zones excluding Impression and Plan | 10 (15%) | 5 (7%) | 4 (5%) | 19 (9%) |
| No identified zone | 6 (9%) | 6 (8%) | 10 (14%) | 22 (10%) |

Footnotes

Clinical Relevance Statement

The use of eye-tracking technology can aid understanding of how physicians navigate through an EHR by quantifying not only what physicians attend to but also what they ignore. Comparing the rate and duration of reading in different zones of a note with the volume of data in each zone shows that physicians tend to ignore medication lists or laboratory results even when these zones contain more data than zones such as the impression and plan. Pairing the objective analysis of visual attention with subjective assessments of clinical relevance suggests that the current practice of importing large amounts of patient data may not add clinical value to electronic notes.

Conflicts of Interest

The authors declare that they have no conflicts of interest in the research.

Protection of Human Subjects

The study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects, and was reviewed by Baystate Health’s Institutional Review Board.

References

- 1. Roukema J, Los RK, Bleeker SE, van Ginneken AM, van der Lei J, Moll HA. Paper versus computer: Feasibility of an electronic medical record in general pediatrics. Pediatrics 2006; 117(1): 15–21.

- 2. Walsh SH. The clinician’s perspective on electronic health records and how they can affect patient care. BMJ 2004; 328(7449): 1184–1187.

- 3. Amarasingham R, Plantinga L, Diener-West M, Gaskin DJ, Powe NR. Clinical information technologies and inpatient outcomes: a multiple hospital study. Arch Intern Med 2009; 169(2): 108–114.

- 4. Kumagai AK. A conceptual framework for the use of illness narratives in medical education. Acad Med 2008; 83(7): 653–658.

- 5. Johnson SB, Bakken S, Dine D, Hyun S, Mendonça E, Morrison F, Bright T, Van Vleck T, Wrenn J, Stetson P. An electronic health record based on structured narrative. J Am Med Inform Assoc 2008; 15(1): 54–64.

- 6. Johnson KB, Cowan J. Clictate: a computer-based documentation tool for guideline-based care. J Med Syst 2002; 26(1): 47–60.

- 7. Hartzband P, Groopman J. Off the record — avoiding the pitfalls of going electronic. N Engl J Med 2008; 358(16): 1656–1658.

- 8. Payne TH, Patel R, Beahan S, Zehner J. The physical attractiveness of electronic physician notes. AMIA Annu Symp Proc 2010; 2010: 622–626.

- 9. Weir CR, Nebeker JR. Critical issues in an electronic documentation system. AMIA Annu Symp Proc 2007: 786–790.

- 10. Reichert D, Kaufman D, Bloxham B, Chase H, Elhadad N. Cognitive analysis of the summarization of longitudinal patient records. AMIA Annu Symp Proc 2010; 2010: 667–671.

- 11. Zheng K, Padman R, Johnson MP, Diamond HS. An interface-driven analysis of user interactions with an electronic health records system. J Am Med Inform Assoc 2009; 16(2): 228–237.

- 12. Shoolin J, Ozeran L, Hamann C, Bria W. Association of Medical Directors of Information Systems consensus on inpatient electronic health record documentation. Appl Clin Inform 2013; 4(2): 293–303.

- 13. Lin CT, McKenzie M, Pell J, Caplan L. Health care provider satisfaction with a new electronic progress note format: SOAP vs APSO format. JAMA Intern Med 2013; 173(2): 160–162.

- 14. Hahn JS, Bernstein JA, McKenzie RB, King BJ, Longhurst CA. Rapid implementation of inpatient electronic physician documentation at an academic hospital. Appl Clin Inform 2012; 3(2): 175–185.

- 15. Duchowski AT. Eye tracking methodology: Theory and practice. 2nd ed. London: Springer-Verlag; 2007.

- 16. Rayner K. Eye movements in reading and information processing: 20 years of research. Psychol Bull 1998; 124(3): 372–422.

- 17. Jacob RJ, Karn KS. Eye tracking in human-computer interaction and usability research: Ready to deliver the promises. In: Hyona J, Radach R, Deubel H, eds. The mind’s eye: Cognitive and applied aspects of eye movement research. Oxford: Elsevier; 2003. p. 573–605.

- 18. Horwitz LI, Moin T, Green ML. Development and implementation of an oral sign-out skills curriculum. J Gen Intern Med 2007; 22(10): 1470–1474.

- 19. Crabtree BF, Miller WL. Doing Qualitative Research. 2nd ed. London: Sage; 1999.

- 20. Coiera E. When conversation is better than computation. J Am Med Inform Assoc 2000; 7(3): 277–286.

- 21. Rosenbloom ST, Denny JC, Xu H, Lorenzi N, Stead WW, Johnson KB. Data from clinical notes: A perspective on the tension between structure and flexible documentation. J Am Med Inform Assoc 2011; 18(2): 181–186.

- 22. Stetson PD, Morrison FP, Bakken S, Johnson SB; eNote Research Team. Preliminary development of the physician documentation quality instrument. J Am Med Inform Assoc 2008; 15(4): 534–541.

- 23. Stetson PD, Bakken S, Wrenn JO, Siegler EL. Assessing electronic note quality using the physician documentation quality instrument (PDQI-9). Appl Clin Inform 2012; 3(2): 164–174.