Summary

Objective

The Instrument for Evaluating Human-Factor Principles in Medication-Related Decision Support Alerts (I-MeDeSA) was recently developed in the US to improve the consideration of human-factor principles in the design of alerts for clinical decision support (CDS) systems. In cooperation with the tool’s authors, this study evaluated its cross-cultural generalizability by applying it to a Korean system. We also examined opportunities to promote user acceptance of the system.

Methods

We developed a Korean version of the I-MeDeSA (K-I-MeDeSA) and used it to evaluate drug-drug interaction (DDI) alerts in a large academic tertiary hospital in Seoul. Four reviewers (A, B, C, and D) took part: two (A and B) performed the initial independent scoring, and the other two (C and D) performed a final review and assessed the initial reviewers’ feedback. The resulting scores were compared with those of 13 previously reported CDS systems, and the feedback was summarized qualitatively.

Results

The translated I-MeDeSA showed excellent interrater agreement on face validity (scale-level content validity index = 0.95). The system scored 10 out of 26 on the K-I-MeDeSA, indicating a lack of human-factor considerations, with good agreement between reviewers (κ = 0.77). The reviewers readily identified two of the nine principles as needing primary improvement: prioritization and text-based information. They also reported difficulty judging four principles: alarm philosophy, visibility, color, and learnability and confusability.

Conclusion

The K-I-MeDeSA was semantically and operationally equivalent to the original tool. Only minor cultural problems were identified, leading the reviewers to suggest the need for clarification of certain words plus a more detailed description of the tool’s rationale and exemplars. Further evaluation is needed to empirically assess whether the implementation of changes in an electronic health record system could improve the adoption of CDS alerts.

Keywords: Clinical decision support, interfaces and usability, human-computer interaction, computerized order entry, alert fatigue

1. Introduction

The use of clinical decision support (CDS) in electronic health records (EHRs) has been known for decades to have a significant impact on patient safety [1–6]. Systematic reviews have demonstrated that prescribing with CDS systems can reduce medication errors and adverse drug events [2, 6–9]. However, clinicians often override the recommendations provided in CDS alerts, even clinically significant ones [6, 9–14]. In one inpatient study, Payne et al. [15] found override rates of 88% and 69% for drug-interaction and drug-allergy alerts, respectively. Similarly, Weingart et al. [12] found that ambulatory physicians overrode 91% of drug-allergy alerts and 89% of high-severity drug-drug interaction (DDI) alerts. In Korea, a recent study at a tertiary teaching hospital found override rates of 96% to 98.1%, much higher than the 79% to 89% found in general and community hospitals [16]. These high override rates and their variation have raised serious concerns that clinicians are overriding or ignoring clinically important warnings, or that the alerts are causing unnecessary interruptions, which could disrupt consistent work processes or waste time and resources [17]. The latter may indicate that the alert content is incorrect or outdated, while the former relates to a lack of consideration of usability in the system design.

Researchers, policy makers, and consumers have recognized shortcomings in the usability of current EHR systems that can affect patient outcomes [18]. System usability has been researched extensively, and several studies [19–21] have highlighted usability problems in current EHR systems and suggested improvements to their user interfaces. Despite these advances, the adoption of usability principles in the design of clinical information systems remains limited: although several studies in other domains indicate that applying human-factor principles improves system adoption, healthcare has lagged behind on this front. This indicates the need for practical tools that are easy to use and that provide specific directions and information in the context of medication-related CDS. To this end, the Instrument for Evaluating Human-Factor Principles in Medication-Related Decision Support Alerts (I-MeDeSA) was recently developed in the US by Phansalkar et al. [22] and Zachariah et al. [23] to evaluate DDI alert systems. However, the cross-cultural generalizability of the I-MeDeSA as an evaluation instrument has not been explored. This matters because, in a sociotechnical model of health information technology (HIT), external rules, regulations, and cultural and internal policy pressures can influence the design, development, implementation, use, and evaluation of HIT [24].

Korea differs from the US in its health insurance program, drug utilization review (DUR) regulations, safety culture, and language. Applying the tool to a Korean system therefore requires a Korean-language version. Translating a tool into another language requires achieving several types of equivalence between the original and translated versions of the scale: conceptual, item, semantic, operational, and measurement equivalence [25]. After confirming conceptual equivalence and ensuring that potentially problematic items could be changed, we began the translation process to assess semantic and operational equivalence. For operational equivalence, we focused on the suitability of the tool for Korean informaticians, who are its most likely users.

In cooperation with the authors of the tool (S.P. and D.B.), we evaluated the cross-cultural generalizability of the I-MeDeSA by applying it to a Korean system, with the following two aims: (1) to determine the extent to which Korean informaticians can understand and use the Korean version of the I-MeDeSA (K-I-MeDeSA) for evaluating a Korean EHR, and (2) to identify any opportunities to improve system design specifically on the human factors principles measured by the instrument in order to promote users’ acceptance of the system. The knowledge generated by this study will contribute to the cross-cultural validation of this instrument and therefore its generalizability for international evaluations of EHR systems.

2. Materials and Methods

2.1 Study Site

This study examined CDS within a Korean EHR system, AMIS (ASAN Medical Center Information System). This EHR system is used in an acute care, teaching, tertiary hospital with a high volume of admissions across its 63 specialty services, covering general medical, surgical, and specialty care, including oncology.

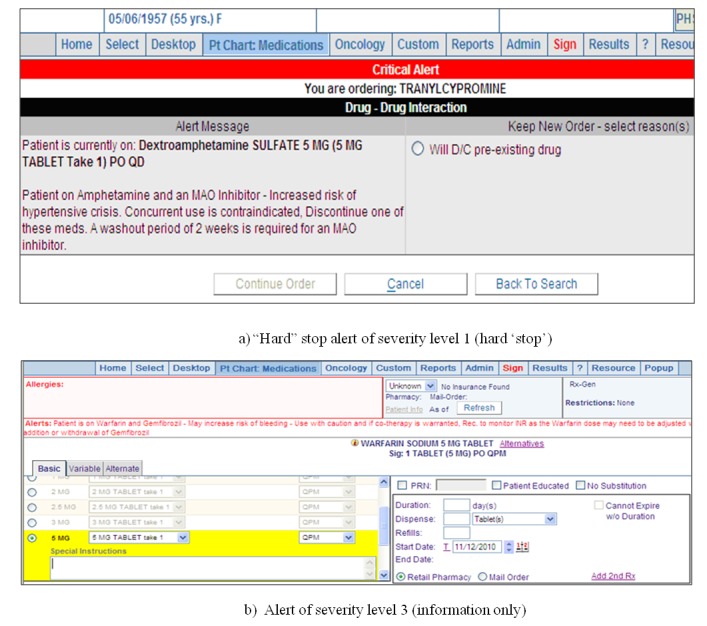

The hospital implemented a computerized physician order entry (CPOE) system and a complete, in-house-designed electronic medical record (EMR) system between 1990 and 2005. The systems were used hospital-wide by all physicians in both inpatient and outpatient settings. The hospital instituted DDI checking as a decision-support component of the CPOE system, with four different types of alerts from the national DUR program, implemented on the basis of the drug list released by the DUR governor. The alerts were initially displayed separately by type on the CPOE screen when orders were stored in the database; however, because physicians complained of alert fatigue, the hospital redesigned the alert screens as a single summary window showing all triggered alerts simultaneously (►Figure 1). Physicians must take different actions depending on the type of alert that appears in the summary window. For DDI alerts, physicians can cancel the order they are writing or discontinue a preexisting drug order, or they can continue the order by choosing a reason from several coded options.

Fig. 1.

Example screenshot of the redesigned medication-related alert, including the DDIs in the EHR. (a) Several different types of alerts stratified by group. (b) A DDI alert.

2.2 The I-MeDeSA and the Korean version

The I-MeDeSA was developed on the basis of a previously published literature review of human-factor principles considered important in the design of safety systems in other domains [22]. The authors then performed content validation of quantifiable human-factor principles in 2010 [23]. The initial version comprised 8 principles and 34 items. During development, the instrument was assessed for content validity and interrater reliability by highly qualified reviewers, and a preliminary evaluation of construct validity was performed. When the instrument was tested on DDI alerts from 3 unique EHRs, 1 principle was added and 8 items across multiple principles were eliminated, resulting in 9 principles and a total of 26 items (►Table 1). Each item asks whether a specific human factor is considered, and responses are binary: a score of 0 corresponds to “no” (the characteristic is absent) and 1 to “yes” (it is present). If the system under evaluation includes alerts of various severities and the design of each stratified alert is unique, each design must be evaluated and the scores for each item averaged across designs. The summary score therefore ranges from 0 to 26, with a higher score indicating greater consideration of human factors.
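The averaging rule can be illustrated with a short Python sketch. It is a minimal example, not the developers’ scoring software: the function names and the three hypothetical alert designs are our own assumptions, and only the four placement items are scored. It shows how averaging binary item scores across uniquely designed alert levels produces fractional subtotals such as those reported for the highest-scoring system in Table 3.

```python
from statistics import mean


def averaged_item_scores(designs: list[dict[str, int]]) -> dict[str, float]:
    """Average each item's binary score (0 = "no", 1 = "yes") across alert designs.

    Each design maps an item ID (e.g. "2i") to its 0/1 score; all designs
    are assumed to be scored on the same set of items.
    """
    items = designs[0].keys()
    return {item: mean(design[item] for design in designs) for item in items}


def summary_score(designs: list[dict[str, int]]) -> float:
    """Sum the averaged item scores (the full instrument ranges from 0 to 26)."""
    return sum(averaged_item_scores(designs).values())


# Hypothetical system with three uniquely designed severity levels,
# scored only on the four placement items (2i-2iv) for illustration.
designs = [
    {"2i": 1, "2ii": 1, "2iii": 0, "2iv": 1},  # hypothetical high-severity design
    {"2i": 1, "2ii": 1, "2iii": 0, "2iv": 1},  # hypothetical moderate-severity design
    {"2i": 0, "2ii": 1, "2iii": 0, "2iv": 1},  # hypothetical low-severity design
]
print(round(summary_score(designs), 2))  # 2.67
```

Here item 2i is satisfied by two of three designs and contributes 2/3, so the placement subtotal is 2.67 rather than a whole number.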

Table 1.

The I-MeDeSA (summarized from Zachariah et al. [23]).

| Principle | Items |
|---|---|
| Alarm Philosophy: The logic that underpins the determination of an event as unsafe and results in the system issuing an alert. | Does the system provide a general catalog of unsafe events correlating the alert level with the severity of the consequences? |
| Placement: Appropriate screen alert placement improves the likelihood that an alert will be seen by the user. When the screen is divided into four quadrants, users tend to focus most of their attention on the top left and bottom right corners, while devoting less attention to the top right and bottom left corners. | Are different types of alerts meaningfully grouped? |
| | Is the response to the alert provided along with the alert, as opposed to being located in a different window or in a different area on the screen? |
| | Is the alert appropriately timed with the medication order? |
| | Does the layout of critical information contained within the alert facilitate the user’s quick uptake? |
| Visibility: A visual alert must be legible and visible, meaning that there is enough contrast in relation to the remaining screen, thereby enabling the user to easily read the alert content. | Is the area where the alert is located distinguishable from the rest of the screen? |
| | Is the background contrast sufficient to allow the user to easily read the alert message? |
| | Does the font used to display the textual message enable the user to easily read the alert? |
| Prioritization: Visual alerts should be prioritized, and prioritization goes hand in hand with “hazard matching.” | Is the prioritization of alerts indicated appropriately by color? |
| | Does the alert’s prioritization use colors other than green and red, to take into consideration users who may be colorblind? |
| | Are signal words appropriately assigned to each existing alert level? |
| | Does the alert utilize shapes or icons in order to indicate the alert priority? |
| | In the case of multiple alerts, are they placed on the screen in the order of their importance? |
| Color: Color should be used to code visual alerts and make them more distinguishable. | Does the alert utilize color coding to indicate the type of unsafe event? |
| | Is color minimally used to focus the user’s attention? |
| Learnability and Confusability: Potential confusability between visual alerts can be minimized by reducing the number of visual features that are shared between alerts. | Are the different severities of alerts easily distinguishable from one another? For example, do major alerts possess visual characteristics that are distinctly different from minor alerts? |
| Text-based information: Visual alerts can contain text-based information to provide specific details about the unsafe event. The following items evaluate whether key pieces of information are incorporated in the textual message contained within the alert. | A signal word to indicate the priority of the alert (i.e., “note,” “warning,” or “danger”). |
| | A statement of the nature of the hazard describing why the alert is shown. This may be a generic statement in which the interacting classes are listed, or an explicit explanation in which the specific drug-drug interactions are clearly indicated. |
| | If yes, are the specific interacting drugs explicitly indicated? |
| | An instruction statement (telling the user how to avoid the danger or the desired action). |
| | If yes, does the order of recommended tasks reflect the order of required actions? |
| | A consequence statement telling the user what might happen. |
| Proximity of task components being displayed: A well-designed alert is capable of integrating information from different sources and presenting it to the user to allow for successful task completion. | Are the informational components needed for decision making on the alert present either within, or in close spatial and temporal proximity to, the alert? |
| Corrective Actions: A corrective action is a response to an alert that enables the user to efficiently communicate intended actions to the system. The response to an alert should be more than a mere acknowledgment of having seen the alert. | Does the system possess corrective actions that serve as an acknowledgment of having seen the alert? |
| | If yes, does the alert utilize intelligent corrective actions that allow the user to complete a task? |
| | Is the system able to monitor and alert the user to follow through with corrective actions? |

The Korean I-MeDeSA (K-I-MeDeSA)

One of the authors (I.C.), who had been involved in an I-MeDeSA validation study with the developers, carried out the translation. The preliminary K-I-MeDeSA was then back-translated into English by a clinical expert fluent in both Korean and English to assess the linguistic and clinical validity of the terms. We compared the two language versions and revised the K-I-MeDeSA iteratively to attain semantic equivalence of terms. Face validity was assessed by giving both the final version and the original instrument to three Korean doctoral students in Boston, who rated their agreement with the translation of each principle and item on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree). A rating of 4 or higher was counted as agreement, and the item-level content validity index (I-CVI) was calculated using modified kappa (κ) statistics, which adjust each I-CVI for chance agreement on relevance [26].
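The chance adjustment can be illustrated with a short Python sketch. It assumes the standard modified-kappa formulation, in which the probability that exactly A of N raters agree by chance is p_c = C(N, A) × 0.5^N and κ* = (I-CVI − p_c) / (1 − p_c); we assume this is the adjustment described in [26]. The function name and example ratings are illustrative only.

```python
from math import comb


def modified_kappa(ratings: list[int], threshold: int = 4) -> float:
    """Chance-adjusted item-level content validity index (kappa*).

    ratings: one Likert score per rater (1 = strongly disagree ... 5 = strongly agree)
    threshold: minimum rating counted as agreement (4 in this study)
    """
    n = len(ratings)
    agree = sum(r >= threshold for r in ratings)
    i_cvi = agree / n
    # Probability that exactly `agree` of `n` raters agree by chance (p = 0.5 each)
    p_chance = comb(n, agree) * 0.5 ** n
    return (i_cvi - p_chance) / (1 - p_chance)


print(round(modified_kappa([5, 4, 4]), 2))  # 1.0  (all three raters agree)
print(round(modified_kappa([5, 4, 3]), 2))  # 0.47 (two of three raters agree)
```

With three raters, unanimous agreement gives κ* = 1.0, while agreement by two of three gives an I-CVI of 0.67 but a chance-adjusted value of about 0.47.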

2.3 Evaluation Procedures

The target system was assessed by two medical informatics reviewers, a physician and a clinical pharmacist (designated Reviewers A and B, respectively), who independently evaluated the DDI alerts. They were given the K-I-MeDeSA along with explanations of the instrument. To explore the system, the reviewers were provided with the same DDI exemplars used in a previous study [27]: dextroamphetamine and a monoamine oxidase inhibitor as a hard-stop alert, dexfenfluramine and a tricyclic antidepressant as an interruptive alert, and omeprazole and an H2 receptor blocker as an informational (non-interruptive) alert. However, these pairs did not trigger the expected alerts, because the system had only interruptive alerts and dextroamphetamine, dexfenfluramine, and omeprazole were not included in its DDI rules. Methotrexate and aspirin were therefore used as an alternative DDI pair.

The two reviewers were asked to interact with the actual EHR system and to return the score sheet with data supporting their judgment on each item, along with any relevant opinions. Interrater reliability was calculated using Cohen’s κ. Reviewer C (I.C.), who is Korean and has knowledge of and experience with the validation of the I-MeDeSA, assessed the two score sheets and the opinions of Reviewers A and B, assigned a final score to each item, and resolved discrepancies between the two reviewers. She also worked with Reviewer D (S.P.) to assess jointly whether the reviewers’ feedback arose from the original tool or from cultural or other factors, or whether it reflected subtle variations in the tool used. The hospital’s Institutional Review Board approved our data collection and interview methods. For comparison with other EHR systems, we used the evaluation scores of eight in-house-designed systems and five vendor products described elsewhere [26]. The user interface screens and the subtotal scores of the highest scorer were shown to Reviewers A and B to stimulate their feedback (►Figure 2).

Fig. 2.

Sample screenshots of DDI alerts from the highest-scoring EHR system.

3. Results

3.1 Semantic validation of the K-I-MeDeSA

Concerning agreement on the translation among the three reviewers, 32 of the 35 rated elements (9 principles and 26 items) showed perfect agreement (κ = 1.0), and the remaining 3 had an agreement level of 0.47. The scale-level CVI, calculated using the averaging method, was 0.95, reflecting excellent interrater agreement.
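The scale-level figure can be checked with a few lines of Python, assuming that the averaging method takes the mean of the chance-adjusted values over all 35 rated elements. Using the reported values (32 at 1.0 and 3 at 0.47) reproduces 0.95. For comparison, averaging the unadjusted I-CVIs (32 at 1.0 and 3 at 2/3) would give about 0.97, so the reported value appears to be the mean of the κ values.

```python
from statistics import mean

# Reported chance-adjusted agreement for the 35 rated elements
# (9 principles + 26 items): 32 unanimous, 3 with two of three raters agreeing.
kappa_star = [1.0] * 32 + [0.47] * 3
# Unadjusted proportions of raters agreeing, for comparison only
i_cvi = [1.0] * 32 + [2 / 3] * 3

print(round(mean(kappa_star), 2))  # 0.95
print(round(mean(i_cvi), 2))  # 0.97
```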

3.2 Operational evaluation of a Korean CDS system using the K-I-MeDeSA

The two reviewers disagreed on three of the instrument’s 26 items, concerning the principles of visibility, color, and learnability and confusability (►Table 2). For the visibility principle, Reviewer A judged that the alert window had sufficient background contrast for the user to read the alert message easily, but Reviewer B disagreed, and Reviewer C considered that the similar appearance of the alert and CPOE windows made it difficult to distinguish the alerts from the CPOE user interface. Regarding the color principle, Reviewer B responded that the alert used color coding to indicate the type of unsafe event; however, the DDI alerts were not stratified into levels, and no color coding was used.

Table 2.

Results of an evaluation of the DDI alert system of the target hospital.

| Principle (number of items) | Item number | Reviewer A score | Reviewer B score | Final score |
|---|---|---|---|---|
| Alarm philosophy (1) | 1i | 0 | 0 | 0 |
| Placement (4) | 2i | 0 | 0 | 0 |
| | 2ii | 1 | 1 | 1 |
| | 2iii | 0 | 0 | 0 |
| | 2iv | 1 | 1 | 1 |
| Visibility (3) | 3i | 1 | 1 | 1 |
| | 3ii | 1 | 0 | 0 |
| | 3iii | 1 | 1 | 1 |
| Prioritization (5) | 4i | 0 | 0 | 0 |
| | 4ii | 0 | 0 | 0 |
| | 4iii | 0 | 0 | 0 |
| | 4iv | 0 | 0 | 0 |
| | 4v | 0 | 0 | 0 |
| Color (2) | 5i | 0 | 1 | 0 |
| | 5ii | 1 | 0 | 1 |
| Learnability and confusability (1) | 6i | 0 | 1 | 0 |
| Text-based information (6) | 7i | 0 | 0 | 0 |
| | 7ii | 1 | 1 | 1 |
| | 7iia | 1 | 1 | 1 |
| | 7iii | 1 | 1 | 1 |
| | 7iiia | 0 | 0 | 0 |
| | 7iv | 0 | 0 | 0 |
| Proximity of task components being displayed (1) | 8i | 0 | 0 | 0 |
| Corrective actions (3) | 9i | 1 | 1 | 1 |
| | 9ia | 1 | 1 | 1 |
| | 9ii | 0 | 0 | 0 |
| Total (26) | | 11 | 12 | 10 |

As for learnability and confusability, Reviewer B judged that the DDI alerts were easily distinguishable from one another; however, this raised a question about the difference between alert severity and alert type. The DDI alerts had no severity levels, and all types of alerts appeared on one screen. Reviewer C cross-checked the alerts and confirmed that they all had the same visual characteristics, and Reviewer B subsequently agreed with this assessment. The overall observed and expected agreements were 0.88 and 0.50, respectively, giving a Cohen’s κ of 0.77, which indicates good agreement.
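Cohen’s κ for two reviewers’ binary item scores can be sketched in Python as follows. The 2×2 counts are an assumption inferred from the reported totals (11 for Reviewer A and 12 for Reviewer B in Table 2) and the three disagreements among the 26 items stated above: 10 items scored 1 by both reviewers, 1 by A only, 2 by B only, and 13 by neither. They are not read item by item from Table 2. Under these counts the sketch gives an observed agreement of 0.88, an expected agreement of about 0.51 (close to the reported 0.50), and κ ≈ 0.77.

```python
def cohens_kappa(both_yes: int, a_only: int, b_only: int, both_no: int):
    """Cohen's kappa for two raters giving binary (0/1) scores.

    both_yes: items scored 1 by both raters
    a_only: items scored 1 by rater A only
    b_only: items scored 1 by rater B only
    both_no: items scored 0 by both raters
    Returns (observed agreement, expected agreement, kappa).
    """
    n = both_yes + a_only + b_only + both_no
    p_observed = (both_yes + both_no) / n
    # Marginal probability that each rater scores an item 1
    p_a_yes = (both_yes + a_only) / n
    p_b_yes = (both_yes + b_only) / n
    # Agreement expected by chance from the raters' marginal rates
    p_expected = p_a_yes * p_b_yes + (1 - p_a_yes) * (1 - p_b_yes)
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, p_expected, kappa


# Assumed counts inferred from totals of 11 (A) and 12 (B) and three disagreements
p_o, p_e, kappa = cohens_kappa(both_yes=10, a_only=1, b_only=2, both_no=13)
print(f"observed={p_o:.2f} expected={p_e:.2f} kappa={kappa:.2f}")
# observed=0.88 expected=0.51 kappa=0.77
```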

The final score was 10 out of 26: four principles were entirely absent from the system (alarm philosophy, prioritization, learnability and confusability, and proximity of task components; ►Table 2), and five were partially satisfied (placement, visibility, color, text-based information, and corrective actions).
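The principle subtotals behind this classification can be recomputed from the final scores in Table 2. The Python sketch below is only an arithmetic check; the dictionary layout and the labels “absent,” “partially satisfied,” and “fully satisfied” are our own.

```python
# Final item scores from Table 2, grouped by principle (items in table order)
FINAL_SCORES = {
    "Alarm philosophy": [0],
    "Placement": [0, 1, 0, 1],
    "Visibility": [1, 0, 1],
    "Prioritization": [0, 0, 0, 0, 0],
    "Color": [0, 1],
    "Learnability and confusability": [0],
    "Text-based information": [0, 1, 1, 1, 0, 0],
    "Proximity of task components being displayed": [0],
    "Corrective actions": [1, 1, 0],
}


def classify(scores: list[int]) -> str:
    """Label a principle by how many of its items were satisfied."""
    subtotal = sum(scores)
    if subtotal == 0:
        return "absent"
    if subtotal == len(scores):
        return "fully satisfied"
    return "partially satisfied"


total = sum(sum(scores) for scores in FINAL_SCORES.values())
item_count = sum(len(scores) for scores in FINAL_SCORES.values())
print(f"Total: {total} / {item_count}")  # Total: 10 / 26
for principle, scores in FINAL_SCORES.items():
    print(f"{principle}: {sum(scores)}/{len(scores)} ({classify(scores)})")
```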

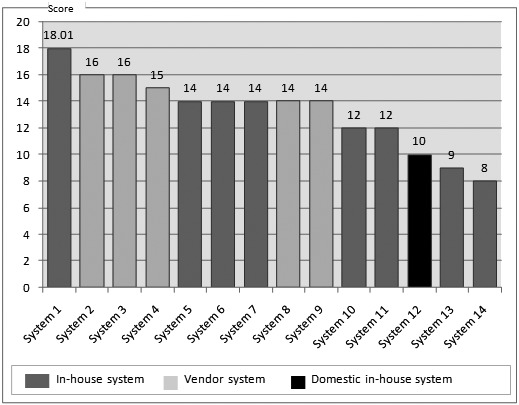

The subject system’s total I-MeDeSA score (10) ranked 12th among the 14 systems compared (range: 8–18; ►Figure 3). Most of the vendor systems scored better than the target system. Compared with the highest scorer, the subject system was weaker in six principles and stronger in corrective actions (►Table 3).

Fig. 3.

Comparison of the evaluation results with other in-house and commercial alert systems (the systems are ordered according to score and not in the order they are listed in Table 2)

Table 3.

Comparison of principle scores with the highest scorer.

| Principle | Maximum score | Highest scorer | Subject system |
|---|---|---|---|
| Alarm philosophy | 1 | 0 | 0 |
| Placement | 4 | 2.67 | 2 |
| Visibility | 3 | 2.33 | 2 |
| Prioritization | 5 | 2.67 | 0 |
| Color | 2 | 1 | 1 |
| Learnability and confusability | 1 | 1 | 0 |
| Text-based information | 6 | 5.67 | 3 |
| Proximity of task components being displayed | 1 | 1 | 0 |
| Corrective actions | 3 | 1.67 | 2 |
| Total | 26 | 18.01 | 10 |

3.3 Reviewer feedback on the K-I-MeDeSA

Reviewers A and B identified several explicit shortcomings through the evaluation of the subject system. They noted that the gaps were largest for the principles of prioritization and text-based information. Regarding prioritization, the subject system does not consider alert priority, so several hundred DDI alerts look alike. For text-based information, which carries a maximum score of 6, the target system achieved only a moderate score (3) because of its limited use of text (►Figure 1); in particular, it does not deliver explicit text about hazards and expected consequences. However, despite low scores for most principles, the system scored comparatively well for corrective actions (2 of 3, above the highest-scoring system). The alert screen has explicit ‘Delete’ buttons for canceling each drug order that triggers a DDI alert, and the cancellation is reflected automatically on the active order screen through a screen refresh. If the user persists with ordering two interacting drugs, he/she must enter free text or select one of five coded override reasons: no reasonable alternatives, alternatives did not work, consideration of the time interval between taking the two medications, PRN order, and educate patient/caregiver to take the oral medicine home. The reviewers reported difficulty judging the following principles because of a lack of objective criteria, a lack of examples, and item-level redundancy: alarm philosophy, visibility, color, and learnability and confusability.

4. Discussion

The K-I-MeDeSA showed excellent semantic equivalence with the original tool. It was also operationally feasible for Korean informaticians to use in assessing human-factor considerations in medication-related CDS. Through this evaluation, the reviewers identified strengths of the subject system as well as weaknesses that need to be improved. They also suggested that the I-MeDeSA should provide more concrete definitions, including rationales and explicit examples.

4.1 K-I-MeDeSA

The translation and validation of the I-MeDeSA were straightforward. One item asked whether the font used to display the textual message, which included a mixture of upper- and lower-case lettering, was optimal for readability by Korean users. The Korean alphabet has no upper- or lower-case letters, but the subject system uses both Korean and English on screen and usually lists drug or ingredient names only in English; this is not the case for every Korean system, however. Apart from this lettering difference, the evaluation revealed no language problems of interpretation. We attribute this to the fact that CPOE systems and EHRs in Korea and the US are driven by similar medication-prescribing workflows, which allowed the I-MeDeSA items to remain valid for this evaluation.

4.2 Prioritization of alerts

For the subject system, the reviewers found two areas of the K-I-MeDeSA particularly problematic. The first was prioritization. The subject system contains an excessive number of DDI drug pairs (about 476), all of which are treated as contraindications. These alerts are designed to force users to take action every time they are triggered. The contraindicated pairs are supplied by the Korean national DUR system without any prioritization, and the DUR system requires physicians to submit a reason whenever they prescribe such a pair [28, 29]. Physicians are therefore frequently interrupted by similar-looking alerts when prescribing, which almost certainly contributes to what has been termed “alert fatigue” and “habitual override.” Delivering too many alerts may cause important ones to be missed and may even lead physicians to refuse to use the application altogether because of workflow disruption [27, 30, 31]. An appropriate balance therefore needs to be struck between useful and excessive alerting.

It has also been noted that simple DDI criteria, which merely check the drug list without considering patient characteristics and comorbidities, are insufficient to put an interaction alert in context for acutely ill patients [32, 33]. Because the DUR program lacks standardized criteria for evaluating the severity and clinical significance of DDIs, more active user involvement is required at the hospital level. Hospitals differ in their characteristics, patient severity, and drug-use patterns, so expert users could customize the DDI rules accordingly.

One study [33] convened a panel of medical experts to identify high-severity, clinically significant DDIs that warrant interruptive alerting. The panel agreed on 15 DDIs involving drugs that should never be coprescribed, and acknowledged that some drug pairs in the knowledge base have interactions not yet sufficiently corroborated by evidence; these pairs were therefore safely omitted from the alert-generating system. Another study used a small display set and assigned interactions to levels according to their severity [17]. Hard stops were used only for Level 1; for Level 2, providers were asked either to cancel the order or to consider additional monitoring; and Level 3 alerts were designed to convey clinical information without interrupting the user. This approach raised the overall acceptance rate to 67%, corresponding to an override rate of only 33%. All of the most severe alerts were accepted, and moderately severe alerts were also more likely to be accepted. For Korean DDIs, such high override rates seem very difficult to address at present because of the national DUR requirement, an external regulatory pressure and one of the sociotechnical factors that hinder the application of human-factor principles to medication-related CDS [2].
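The tiered design described in [17] can be sketched as a mapping from severity level to alert behavior. This is a hypothetical illustration under our own naming (AlertMode, TIER_POLICY, alert_mode, interrupts_user); it encodes only the three levels described above, assumes that Level 2 alerts interrupt the user because providers were asked to respond to them, and is not taken from that study’s implementation. By contrast, the subject system gives every listed pair the same interruptive behavior.

```python
from enum import Enum


class AlertMode(Enum):
    """Hypothetical alert behaviors for a severity-tiered DDI design."""
    HARD_STOP = "order cannot proceed"
    INTERRUPTIVE = "cancel the order or consider additional monitoring"
    INFORMATIONAL = "show clinical information without interrupting"


# Level 1 is the most severe; Levels 2 and 3 are progressively less severe
TIER_POLICY = {
    1: AlertMode.HARD_STOP,
    2: AlertMode.INTERRUPTIVE,
    3: AlertMode.INFORMATIONAL,
}


def alert_mode(level: int) -> AlertMode:
    """Return the alert behavior for a severity level (1-3)."""
    if level not in TIER_POLICY:
        raise ValueError(f"unknown severity level: {level}")
    return TIER_POLICY[level]


def interrupts_user(level: int) -> bool:
    """Assumed: Levels 1 and 2 interrupt the prescriber; Level 3 does not."""
    return alert_mode(level) is not AlertMode.INFORMATIONAL


print(alert_mode(2).value)  # cancel the order or consider additional monitoring
print(interrupts_user(3))  # False
```

Keeping the level-to-behavior mapping in one table makes it straightforward for a hospital to reassign individual drug pairs to a different tier without changing the alerting code.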

4.3 Text-based information

The second problematic area was text-based information, which is also relevant to prioritization. Visual alerts can contain text-based information to provide specific details about unsafe events, such as an explicit explanation of the interaction and/or specific instructions on what to do and why. The target system evaluated in the present study provides limited text-based information about a specific DDI: the alert displays text indicating the presence of a DDI, with the order code, drug name, and the form in which the drug is prescribed. Hazard information indicating expected or possible consequences for patients is not provided, possibly because of the limited physical space in the alert window or concerns about information overload for physicians.

The national DUR governor does not provide information on alert prioritization, hazard nature, instructions, or expected consequences. However, providing insufficient information could leave users feeling that there are no alternative options or that they cannot balance the risks and benefits of the proposed therapy. Safety research has emphasized that although instructions and hazard statements are necessary, space constraints sometimes make it impossible to include all of the required information. Providing a list of alternative medications may be an effective strategy for directing users toward more desirable actions.

4.4 Suggestions for I-MeDeSA improvement

The reviewers reported several difficulties and questions regarding the I-MeDeSA. The first was the ambiguity of several words used in items and explanations. For example, under the alarm philosophy principle, the reviewers found no general catalog of unsafe events in the system but were unsure what such a catalog should be: they wondered whether it should be an introductory outline or a summary of the DDI rules, and when and where it should be available. The hospital provides users with introductory information on the CDS system through annual training sessions, although this information does not appear explicitly on the screen. Because the reviewers were hospital staff members involved in the system’s development, they may have held a designer’s model rather than a user’s model of the system, which might have complicated their judgments. Another possible explanation is that the reviewers’ questions arose from uncertainty over whether the CDS system was designed primarily as an internal patient-safety initiative or as a mandatory requirement of the national DUR governor. Such regulation might lead hospitals to take a more passive approach to patient safety, and the emphasis placed on alarm philosophy depends on each institution’s safety culture.

Another problem was the term “learnability and confusability,” which covers a single item asking about the distinction between alerts. One reviewer pointed out that learnability encompasses more than alert clarity. Furthermore, the reviewers questioned the relationship between two placement items: whether different types of alerts are meaningfully grouped, and whether the alert is appropriately timed with the medication order. The explanation given was that a DDI alert should appear as soon as the drug is chosen, rather than waiting for the user to complete the order before warning of a possible interaction. To satisfy these two items, a drug that triggers multiple DDIs with different levels, or both a DDI and another type of alert, should be tested. However, this is difficult in a system that has no severity levels. In such cases, a branch question at the beginning of the tool would be helpful.

Another concern was who the appropriate users of the I-MeDeSA are. The tool was targeted at system developers, to help improve alert design and to inform organizations’ purchases of usable EHR systems [23]. The two reviewers in the present study were user representatives with over 10 years of experience in system development and informatics research. They requested concrete definitions with rationales, explicit examples, and better-defined comments that would allow a clearer distinction between conceptually similar items, such as the item asking whether alert prioritization is indicated by color and the item asking whether color coding is used.

4.5. Limitations of the study

The findings of the present study are subject to several limitations. First, the alerting system of a single hospital was examined by only four reviewers, so the results may reflect features specific to this study site. However, the present study is the first to apply the I-MeDeSA across cultures and to qualitatively assess its efficacy for a foreign-language CDS system, that is, a system whose language differs from that of the original instrument. The present findings should contribute to international use of the I-MeDeSA, thereby increasing its generalizability, and should support comparisons of CDS systems in terms of human-factor principles.

Second, the results may be limited to the features of the DDI functions and therefore may not generalize to other types of medication-related alerts. Further assessment of the K-I-MeDeSA with other alert types is needed before its usefulness can be extrapolated to other situations. Third, measurement equivalence could not be assessed because our bilingual sample was limited and no statistics were available from a large sample using the original-language instrument.

Despite these limitations, the K-I-MeDeSA was found to be a useful and sustainable tool that enables informaticians to obtain a practical sense of human-factor considerations for a specific type of CDS system. Such practical tools should be made available to clinicians and informaticians so that they can address usability issues and drive improvements in real systems. In addition, such tools should be continuously refined on the basis of feedback from potential and actual users in practice.

5. Conclusion

There are many ways in which CDS systems can be improved, one of which is the consideration of human-factor principles in the design of system-generated alerts. The K-I-MeDeSA was used successfully to screen for issues related to human-factor considerations in medication alerts. Although the reviewers criticized the terms used and the lack of exemplars in the I-MeDeSA, the present study has shown that the instrument is useful and generalizable for evaluating CDS systems across cultures.

Acknowledgments

This work was supported by a Korea Research Foundation Grant funded by the Korean Government (MOEHRD; No. KRF-2013R1A1A2006387). This project was also supported by the Centers for Education and Research on Therapeutics (CERT Grant # U19HS021094), Agency for Healthcare Research and Quality, Rockville, MD, USA.

Footnotes

Clinical Relevance Statement

The I-MeDeSA will enable clinical system developers and informaticians to obtain a practical sense of cross-cultural human-factor considerations for a specific type of CDS system. In Korea, the K-I-MeDeSA can be used to evaluate CDS systems in 195 general hospitals as well as vendor systems. The I-MeDeSA is generalizable and can be used to improve the design of CDS systems in an international context.

Conflicts of Interest

None of the authors has any conflicts of interest.

Protection of Human and Animal Subjects

This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and was reviewed and approved by the Asan Medical Center Institutional Review Board (reference number: S2013–0111–0001).

References

- 1. Linder JA, Ma J, Bates DW, Middleton B, Stafford RS. Electronic health record use and the quality of ambulatory care in the United States. Arch Intern Med 2007; 167(13): 1400–1405

- 2. Bates DW, Gawande AA. Improving safety with information technology. N Engl J Med 2003; 348(25): 2526–2534

- 3. Fischer MA, Solomon DH, Teich JM, Avorn J. Conversion from intravenous to oral medications: assessment of a computerized intervention for hospitalized patients. Arch Intern Med 2003; 163(21): 2585–2589

- 4. Wang SJ, Middleton B, Prosser LA, Bardon CG, Spurr CD, Carchidi PJ, Kittler AF, Goldszer RC, Fairchild DG, Sussman AJ, Kuperman GJ, Bates DW. A cost-benefit analysis of electronic medical records in primary care. Am J Med 2003; 114(5): 397–403

- 5. Tierney WM, Overhage JM, Murray MD, Harris LE, Zhou XH, Eckert GJ, Smith FE, Nienaber N, McDonald CJ, Wolinsky FD. Effects of computerized guidelines for managing heart disease in primary care. J Gen Intern Med 2003; 18(12): 967–976

- 6. Khajouei R, Jaspers MWM. The impact of CPOE medication systems’ design aspects on usability, workflow and medication orders: a systematic review. Methods Inf Med 2010; 49(1): 3–19

- 7. Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, Morton SC, Shekelle PG. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006; 144(10): 742–752

- 8. Johnston D, Pan E, Walker J. The value of CPOE in ambulatory settings. J Healthc Inf Manag 2004; 18(1): 5–8

- 9. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc 2006; 13(2): 138–147

- 10. Nightingale PG, Adu D, Richards NT, Peters M. Implementation of rules based computerised bedside prescribing and administration: intervention study. BMJ 2000; 320(7237): 750–753

- 11. Abookire SA, Teich JM, Sandige H, Paterno MD, Martin MT, Kuperman GJ, Bates DW. Improving allergy alerting in a computerized physician order entry system. Proc AMIA Symp 2000: 2–6

- 12. Weingart SN, Toth M, Sands DZ, Aronson MD, Davis RB, Phillips RS. Physicians’ decisions to override computerized drug alerts in primary care. Arch Intern Med 2003; 163(21): 2625–2631

- 13. Ash JS, Sittig DF, Dykstra RH, Guappone K, Carpenter JD, Seshadri V. Categorizing the unintended sociotechnical consequences of computerized provider order entry. Int J Med Inform 2007; 76(Suppl 1): S21–S27

- 14. Jung M, Hoerbst A, Hackl WO, Kirrane F, Borbolla D, Jaspers MW, Oertle M, Koutkias V, Ferret L, Massari P, Lawton K, Riedmann D, Darmoni S, Maglaveras N, Lovis C, Ammenwerth E. Attitude of physicians towards automatic alerting in computerized physician order entry systems. A comparative international survey. Methods Inf Med 2013; 52(2): 99–108

- 15. Payne TH, Nichol WP, Hoey P. Characteristics and override rates of order checks in a practitioner order entry system. Proc AMIA Symp 2002: 602–606

- 16. Cho I, Kim JA, Kho YT, Song SH, Park RW. Clinical decision support system. Seoul: Elsevier Korea; 2010

- 17. Paterno MD, Maviglia SM, Gorman PN, Seger DL, Yoshida E, Seger AC, Bates DW, Gandhi TK. Tiering drug-drug interaction alerts by severity increases compliance rates. J Am Med Inform Assoc 2009; 16(1): 40–46

- 18. President’s Council of Advisors on Science and Technology. Report to the President. Realizing the full potential of health information technology to improve healthcare for Americans: the path forward. Washington, DC; 2010. pp. 1–108

- 19. Zhang J, Walji MF. TURF: toward a unified framework of EHR usability. J Biomed Inform 2011; 44(6): 1056–1067

- 20. Bertman J, Skolnik N, Frieden J. Poor usability keeps EHR adoption rates low. Family Practice News 2010; 40(8): 54

- 21. Saitwal H, Feng X, Walji M, Patel V, Zhang J. Assessing performance of an electronic health record (EHR) using cognitive task analysis. Int J Med Inform 2010; 79(7): 501–506

- 22. Phansalkar S, Edworthy J, Hellier E, Seger DL, Schedlbauer A, Avery AJ, Bates DW. A review of human factors principles for the design and implementation of medication safety alerts in clinical information systems. J Am Med Inform Assoc 2010; 17(5): 493–501

- 23. Zachariah M, Phansalkar S, Seidling HM, Neri PM, Cresswell KM, Duke J, Bloomrosen M, Volk LA, Bates DW. Development and preliminary evidence for the validity of an instrument assessing implementation of human-factors principles in medication-related decision-support systems: I-MeDeSA. J Am Med Inform Assoc 2011; 18(Suppl 1): i62–i72

- 24. Sittig DF, Singh H. A new sociotechnical model for studying health information technology in complex adaptive healthcare systems. Qual Saf Health Care 2010; 19(Suppl 3): i68–i74

- 25. Herdman M, Fox-Rushby J, Badia X. A model of equivalence in the cultural adaptation of HRQoL instruments: the universalist approach. Qual Life Res 1998; 7(4): 323–335

- 26. Polit DF, Beck CT, Owen SV. Is the CVI an acceptable indicator of content validity? Appraisal and recommendations. Res Nurs Health 2007; 30(4): 459–467

- 27. Zachariah M, Phansalkar S, Seidling HM, Volk LA, Bloomrosen M, Bates DW. Evaluation of medication alerts for compliance with human factors principles: a multi-center study. Proc AMIA Symp 2011: 2021

- 28. Lee Y, Lee J, Lee S. Analysis of drug interaction information. Kor J Clin Pharm 2009; 19(1): 1–17

- 29. Park J-Y, Park K-W. The contraindication of comedication drugs and drug utilization review. J Korean Med Assoc 2012; 55(5): 484–490

- 30. Shah NR, Seger AC, Seger DL, Fiskio JM, Kuperman GJ, Blumenfeld B, Recklet EG, Bates DW, Gandhi TK. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc 2006; 13(1): 5–11

- 31. Ash JS, Berg M, Coiera E. Some unintended consequences of information technology in health care: the nature of patient care information system-related errors. J Am Med Inform Assoc 2004; 11(2): 104–112

- 32. Choi N-K, Park B-J. Strategy for establishing an effective Korean drug utilization review system. J Korean Med Assoc 2010; 53(12): 1130–1138

- 33. Phansalkar S, Desai AA, Bell D, Yoshida E, Doole J, Czochanski M, Middleton B, Bates DW. High-priority drug-drug interactions for use in electronic health records. J Am Med Inform Assoc 2012; 19(5): 735–743