Abstract

Over the last 30 years hemispheric asymmetries in speech perception have been construed within a domain general framework, where preferential processing of speech is due to left lateralized, non-linguistic acoustic sensitivities. A prominent version of this argument holds that the left temporal lobe selectively processes rapid/temporal information in sound. Acoustically, this is a poor characterization of speech and there has been little empirical support for a left-hemisphere selectivity for these cues. In sharp contrast, the right temporal lobe is demonstrably sensitive to specific acoustic properties. We suggest that acoustic accounts of speech sensitivities need to be informed by the nature of the speech signal, and that a simple domain general/specific dichotomy may be incorrect.

Introduction – explaining the imbalance

It is generally agreed that, for most people, both speech production and speech perception, as it serves language, are functions of the left hemisphere [1, 2]. The vast majority of patients with aphasia have a left hemisphere lesion, and a left dominance for speech and language has also been reported in intact brains using dichotic listening, TMS and functional neuroimaging. One influential body of research over the last two decades has concentrated on the hypothesis that this left dominance might emerge from a sensitivity to non-linguistic acoustic information which happens to be critical for speech. In approaches strongly influenced by hypotheses about the role of temporal information in speech (Box 1), emergent theories proposed that the left hemisphere would show a preference for processing of rapid temporal information in sound. For example, Robert Zatorre and colleagues set out a model in which left auditory fields were sensitive to temporal structure and the right to spectral structure, by direct analogy with the construction of wide band and narrow band spectrograms [3]. Around the same time, David Poeppel advanced a theory informed by the patterns of oscillations studied in electrophysiological approaches (see Box 2). The Asymmetric Sampling in Time hypothesis [4] proposed that the left temporal lobe preferentially samples information over short time windows comparable to the duration of phonemes, and the right over longer time windows comparable to the length of syllables and intonation profiles. These theories have been widely used as explanatory frameworks over the last decade.

Box 1. A Journey in Time and Space.

Nearly 50 years ago, Robert Efron [5] suggested that the processing of temporal information and speech may share some resources, showing that patients with aphasia were impaired at overt temporal order judgments, needing longer intervals between two stimuli before they could determine which came first. Efron’s finding inspired a more general interest in how speech perception might be supported by non-linguistic processes, and raised the possibility that we could account for hemispheric specializations in speech perception in a domain-general manner. It was striking that the temporal ordering problems exhibited by the patients were notable from quite long inter-stimulus intervals (around 400ms), which in terms of speech perception is on the order of syllables, rather than phonemes.

Tallal and Piercy [6] investigated how children with aphasia (who today would likely be diagnosed with specific language impairment) performed on auditory temporal order judgments. These children, like the adult patients with aphasia, needed longer ISIs than controls to be able to discern which sound came first. As in Efron’s study, the children with ‘aphasia’ began to show problems at relatively long ISIs (around 300ms). These findings have been widely interpreted as demonstrations of problems with rapid temporal sequencing. However, the time intervals at which the impairments emerged in both studies (around 300-400ms) are at the supra-segmental level in the phonological hierarchy – that is, at the level of syllables, not phonemes.

A parallel approach used dichotic listening paradigms to reveal hemispheric asymmetries in the processing of speech and non-speech stimuli. Studdert-Kennedy and Shankweiler [60, 61] demonstrated that consonant-vowel combinations are processed with a right ear advantage (REA; suggesting a left hemisphere dominance). Cutting [62] used sine wave analogues of consonant-vowel (CV; e.g. ‘ba’ or ‘di’) stimuli in a dichotic listening task, and argued that there was an REA for the processing of formant transitions, whether or not these were in speech. It was striking, however, that there was no right ear advantage for the sine wave formant “syllables” alone. In 1980, Schwartz and Tallal [63] showed that, while both “normal” CV stimuli (with a 40ms formant transition) and extended CV stimuli (modified to have an 80ms transition) showed an REA, this was less strong for the stimuli with the modified, longer profiles. Though no non-speech stimuli were tested, the authors concluded this was strong evidence for a non-linguistic preference for shorter, faster acoustic transitions in the left hemisphere.

Box 2. Neural oscillations and temporal primitives.

The Asymmetric Sampling in Time hypothesis [4] predicts that ongoing oscillatory activities in different frequency bands form computational primitives in the brain. Theta range (4-8Hz) activity should be maximally sensitive to information at the rate of syllables in speech, while neuronal populations operating in the low gamma range (~40Hz) should be sensitive to the rapid temporal changes assumed to be central to phonemic information. The theory proposes a predominance of gamma populations in the left auditory cortex, and of theta on the right [64]. The correlation between ongoing EEG and BOLD activity at rest supports this view, for the right hemisphere [64].
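
As a rough guide to how these frequency bands relate to the time windows discussed in the main text, the duration of a single oscillatory cycle is the reciprocal of its frequency. The arithmetic below is added for illustration only and is not taken from [4]:

```latex
T = \frac{1}{f}, \qquad
T_{\gamma} \approx \frac{1}{40\,\mathrm{Hz}} = 25\,\mathrm{ms}, \qquad
T_{\theta} = \frac{1}{8\,\mathrm{Hz}} \text{ to } \frac{1}{4\,\mathrm{Hz}} = 125 \text{ to } 250\,\mathrm{ms}.
```

On this reading, one low-gamma cycle spans tens of milliseconds, whereas one theta cycle spans hundreds of milliseconds.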

Are these different oscillations integral to the processing of speech? Phase-locked oscillatory theta activity can discriminate between three heard sentences, but not when stimulus intelligibility is degraded [65]. Theta rhythms track incoming information in a stimulus-specific manner, and this tracking reflects more than slavish responsivity to the sentence acoustics [66]. Phase-locked delta-theta (2-7Hz) responses support the coordination of incoming audio and visual streams [67]. When listeners were attending to the speech of one of two simultaneous talkers, significant sentence discrimination was possible using phase-locked activity in the 4-8Hz (theta) range, which was modulated by selective attention [7]. Moreover, in partial support of the AST model, these theta effects were right-dominant. The complementary gamma activity proposed by the AST model is relatively lacking in these studies (though see [8,9]). Notably, speech perception is not restricted to gamma and theta frequencies. Selective attention to speech is associated with lateralized alpha (8-13Hz) activity [68], correlated with enhanced theta activity in auditory regions. Neural responses to degraded words show an alpha suppression, some 0.5 seconds after word onset, sensitive to both spectral detail and comprehension ratings [69].

The inter-relationship of measures available from electrophysiological recordings is complex. Thus, the differences in functional significance between induced changes in power, evoked responses, phase-resetting and coherence are not yet fully understood [70]. Moreover, higher-frequency modulations can become ‘nested’ in lower frequency ranges [71, 72].

Combined EEG and fMRI has shown that during learning a significant correlation between a beta power decrease and the BOLD response in left inferior frontal gyrus is specific to the encoding of later-remembered items [73]. A similar method could be used to identify the spatial location of task-critical oscillatory activity in response to heard speech, and asymmetries might be seen in the network dynamics connecting speech-sensitive nodes in the two hemispheres.

The nature of speech

It is important to consider whether these neural models make reasonable assumptions about the nature of the information in speech (Figure 1). The approaches of Zatorre and Poeppel both clearly associate left auditory fields with a sensitivity to temporal or rapid information. However, this may be an inaccurate characterization of speech.

Not all the information in speech exists over short time scales. Plosives, which are frequently the only consonants studied (possibly because they can be neatly fitted into a matrix of place/manner/presence or absence of voicing cues, unlike many other speech sounds), do include very rapidly evolving acoustic structure. However, fricatives, affricates, nasals, liquids and vowels are often considerably longer in duration than the (40-ms) window specified by Poeppel.

As any sound, by its very nature, can only exist over time, ‘temporal’ is not a very well specified term. There is no non-temporal information available in sound. In turn, ‘temporal’ has been used in a variety of senses; Efron [5] used the concept of time to point out that phonemes need to be heard in the right order to result in meaningful speech, while Tallal and Piercy [6] expressly considered the processing of temporal order judgments to reflect similar processes to those necessary for the identification of phonemes in the incoming speech stream. More recently, ‘temporal’ has been used to refer to the amount of smoothing applied to the amplitude envelope of speech [7].

Phonetic information in speech is not expressed solely over short time scales; it is also expressed at a supra-segmental level. Consecutive articulatory manoeuvres are not independent, and this co-articulation is reflected in the acoustics. This process can have effects over adjacent speech sounds as the articulation of one sound anticipates the next (e.g. the ‘s’ at the start of ‘sue’ is very different from the ‘s’ at the start of ‘see’ [8]). These anticipatory effects can also take place over the whole syllable: the ‘l’ sound at the start of ‘led’ is different from that at the start of ‘let’ because of the voicing of the syllable-final consonant. Listeners are sensitive to these cues [9].

No single acoustic cue underlies the way that information is expressed in speech, and any one ‘phonetic’ contrast is underpinned by a variety of acoustic features. In British English, the phonetic difference between /apa/ and /aba/ concerns only the voicing of the medial /p/ or /b/ phones: however, there are more than 16 separable acoustic features which differ between the two plosives, including the length of the pre-stop vowel being longer in /aba/, and the aspiration on the release of /p/ being more pronounced than for /b/ [10].

All sounds can be characterized in terms of their spectral and amplitude modulation profiles – in speech, for example, most of the energy is carried in low spectral and amplitude modulations. Speech intelligibility can be preserved when the spectral and amplitude modulations are quite coarse (e.g. listeners can understand speech vocoded to 6 broad spectral channels, and with considerable smoothing of the amplitude envelope); however, neither kind of modulation in isolation can yield an intelligible percept. Given that speech perception requires spectral modulations [11], it is unclear why spectral detail should be processed preferentially in the right hemisphere (e.g. [3, 7]). In some cases, ‘spectral’ is used to mean ‘pitch’ [3, 12], although these two terms are in fact not synonymous.

Finally, a serious issue for both the approaches of Poeppel and Zatorre is the identification of the phoneme as the fundamental unit of perceptual information in speech. That is, the assumption that access to phoneme representations is the cardinal aspect of speech perception, and hence that theories need to account for a left-dominant sensitivity to phenomena which might underlie phoneme perception. There is considerable evidence that this assumption is incorrect [13-18]. Indeed, the growing evidence for the importance of suprasegmental structures like onset-rime structure, or syllables, would surely call for a temporal account that applies a wider time window on the speech signal, on the order of hundreds, rather than tens, of milliseconds.

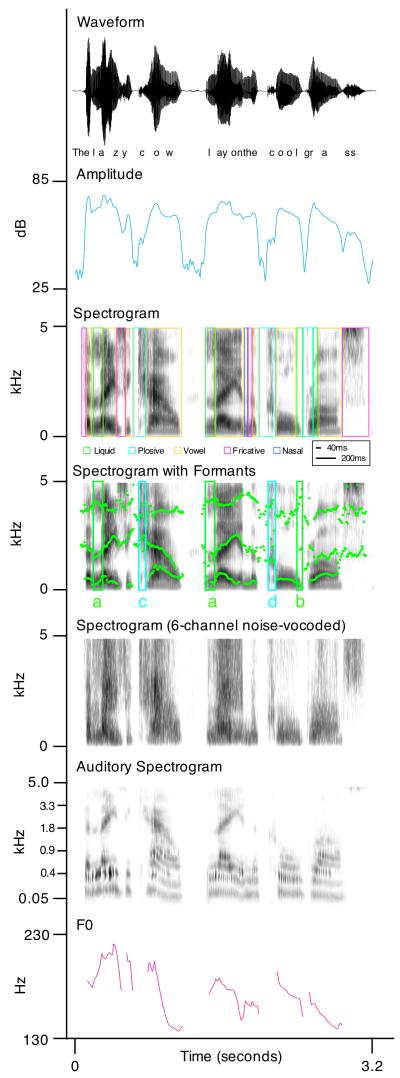

Figure 1. What’s in a sound?

Speech is an immensely complex signal. The upper panel (A) shows the waveform of a spoken sentence, plotted as an amplitude/time profile (an oscillogram). Panel B shows the amplitude envelope of the sentence, in which the oscillogram is rectified and smoothed. Panel C shows a spectrogram of the sentence, in which the frequency components of the sound are represented on the ordinate, time on the abscissa, and intensity is expressed by darker colours. This enables the complex spectral structure (formants) in speech to be visualized, reflecting the changes in spectral prominences associated with different movements and positions of the articulators. Different kinds of phonemes are indicated in coloured boxes: note that most of these are longer than a 40-ms time window. Panel D shows the same spectrogram, now with the formants specifically being tracked over time. Note that the different /l/ sounds have very different spectral structures (‘clear’ at word onsets (a) and ‘dark’ at word offsets (b)), and that the two /k/ sounds (at the start of ‘cow’ (c) and ‘cool’ (d)) are also different. These are due to phonotactic constraints and co-articulation, respectively. Panel E shows a spectrogram of the same sentence noise vocoded to 6 channels: this speech can still be understood although it only contains coarse spectral and amplitude structure. Panel F shows an auditory spectrogram, which more accurately reflects the way that frequency information is represented in the human auditory system: note that this greatly magnifies the detail at lower spectral frequencies. Panel G shows the fundamental frequency (F0) of the sentence (i.e. the shape of the intonation profile of the sentence).
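
The rectify-and-smooth operation described for Panel B can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used to generate the figure: the moving-average smoother, the 20 ms window, the 8 kHz sample rate and the synthetic amplitude-modulated tone (standing in for a recorded sentence) are all assumptions made for the example.

```python
import math

def amplitude_envelope(samples, window):
    """Full-wave rectify a waveform, then smooth it with a centred moving average."""
    rectified = [abs(s) for s in samples]
    half = window // 2
    envelope = []
    for i in range(len(rectified)):
        lo = max(0, i - half)
        hi = min(len(rectified), i + half + 1)
        envelope.append(sum(rectified[lo:hi]) / (hi - lo))
    return envelope

# Hypothetical test signal: a 200 Hz carrier whose amplitude is modulated at 4 Hz,
# a rate in the range the text associates with syllables. One second of audio.
SAMPLE_RATE = 8000
signal = [
    0.5 * (1 - math.cos(2 * math.pi * 4 * n / SAMPLE_RATE))
    * math.sin(2 * math.pi * 200 * n / SAMPLE_RATE)
    for n in range(SAMPLE_RATE)
]

# 160 samples is 20 ms at 8 kHz (an arbitrary choice for this sketch).
envelope = amplitude_envelope(signal, window=160)
```

The resulting envelope follows the slow 4 Hz modulation and discards the 200 Hz fine structure; a longer window would smooth the envelope further.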

Hemispheric asymmetries in sound processing

Thus, there are a priori reasons to suggest that a simple left-right dichotomy of acoustic processing may not account for the asymmetric processing of speech. In line with these reservations, it has proved difficult to show unambiguous evidence for a left lateralized dominance for ‘temporal’ processing of sounds, regardless of the size of the sampling window. Left lateralized neural responses to fast versus slow formant transitions were reported by Johnsrude and colleagues [19], but these lay in the left inferior frontal cortex (see also [20-22]) rather than the early auditory fields in temporal cortex associated with hearing and speech perception (Figure 2). Using elegant speech-like stimuli that contained either fast or slow formant transitions without being intelligible (English) speech, Belin and colleagues [23] showed that the left superior temporal gyrus (STG), extending into primary auditory cortex (PAC), was sensitive to both fast and slow transitions, and that the right STG (extending into PAC) was responsive to slow transitions only. This study is widely interpreted as showing a left dominance for rapid transitions, but was actually the first study to show that it is perhaps the right temporal lobe that responds selectively to acoustic manipulations in sounds. A caveat, however, is that the duration of the stimuli co-varied with the rapid/slow transitions, and so it may be that the right temporal lobe simply prefers longer sounds.

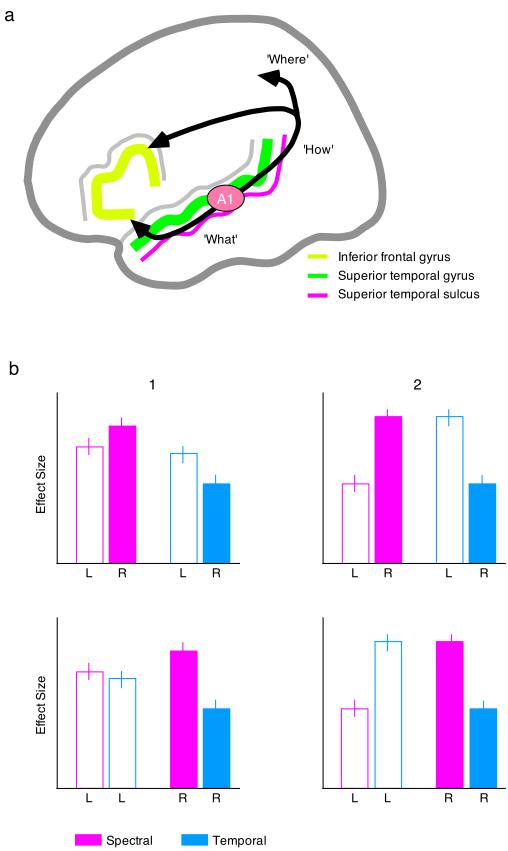

Figure 2. Locations and configurations of hemispheric asymmetries.

There are several distinct auditory, heteromodal and prefrontal regions which are seen in speech and sound processing. Generally, the field is open minded about where (PAC, STG, STS, IFG) hemispheric asymmetries might be identified, although different interpretations might be made of the consequences of asymmetries in these different cortical areas. Thus asymmetries in primary auditory cortex might lead to an assumption that speech (and sounds that share its hypothesized acoustic features) is selectively processed from its earliest encoded entry into the cortex. Asymmetries in the superior temporal gyrus might point to a somewhat later acoustically selective process, while asymmetric responses in the STS and IFG could arguably be related to non-acoustic sensitivities. Furthermore, the rostral/caudal orientation of anatomically and functionally distinct streams of auditory processing may be important in interpreting the results of such functional imaging studies.

The bar charts in the lower section use hypothetical data to illustrate how different ways of plotting effect sizes can lead to varying interpretations of the data. The plots on the right of the figure (1) reflect potentially valid hemispheric asymmetries according to the hypothesis of Zatorre and colleagues (i.e. the left responds selectively to temporal information, and the right prefers spectral properties), while the plots on the left (2) show effects driven by an acoustic selectivity in the right hemisphere response only. The responses of the left and the right hemisphere are illustrated using clear and filled bars, respectively, with the only difference between the upper and lower panels being the order in which the bars are arranged. Note that the similarities between the two upper panels disappear when the results are plotted by hemisphere, rather than by condition.

This pattern of right hemisphere preference for longer sounds, and sounds with pitch variation, has since been seen in a wide variety of studies [3, 12, 23, 24]. A dominant trend has been to interpret such data as showing a left hemisphere preference for ‘temporal’ or ‘rapid’ temporal processing [25], although none of these studies actually shows a selective sensitivity (i.e. greater activation) in the left hemisphere for rapid or short temporal cues. A claim for a selective response to ‘temporal’ cues on the left has even been made when the neural response in the left superior temporal sulcus is greater to increasing ‘spectral’ detail than to increasing ‘temporal’ detail [7]. While some studies have found differences in the correlation of the BOLD response in the left and right auditory fields with spectral and temporal cues [24], the lack of a direct comparison of the effect sizes of the two parameters makes these results difficult to interpret (Figure 2).
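
The difference between comparing hemispheres within a condition and comparing conditions within a hemisphere can be made concrete with a small numerical sketch. The response values below are invented purely for illustration (they are not data from any study cited here), and the scenario and function names are hypothetical.

```python
# Hypothetical response magnitudes in arbitrary units, invented for illustration.
SCENARIOS = {
    # Each hemisphere prefers a different acoustic property.
    "complementary": {
        "left": {"temporal": 3.0, "spectral": 1.0},
        "right": {"temporal": 1.0, "spectral": 3.0},
    },
    # Only the right hemisphere is acoustically selective.
    "right_only": {
        "left": {"temporal": 2.0, "spectral": 2.0},
        "right": {"temporal": 1.0, "spectral": 3.0},
    },
}

def between_hemisphere_difference(data, condition):
    """Left minus right response within one condition."""
    return data["left"][condition] - data["right"][condition]

def within_hemisphere_selectivity(data, hemisphere):
    """Temporal minus spectral response within one hemisphere."""
    return data[hemisphere]["temporal"] - data[hemisphere]["spectral"]

for name, data in SCENARIOS.items():
    print(
        name,
        "| L-R temporal:", between_hemisphere_difference(data, "temporal"),
        "| L-R spectral:", between_hemisphere_difference(data, "spectral"),
        "| left selectivity:", within_hemisphere_selectivity(data, "left"),
        "| right selectivity:", within_hemisphere_selectivity(data, "right"),
    )
```

In both scenarios the left response exceeds the right for the ‘temporal’ condition and the right exceeds the left for the ‘spectral’ condition, so a between-hemisphere comparison alone cannot separate them. Only the within-hemisphere comparison shows that, in the second scenario, the left hemisphere has no acoustic preference at all.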

Some studies have reported a left-lateralized or left-dominant response to temporal characteristics of sounds. Zatorre and Belin [3] and Jamieson and colleagues [26] showed a dominant left PAC response that correlated with decreasing interstimulus intervals and stimulus durations across pure tones of two different pitches (separated by one octave). Zaehle and colleagues [27] compared the neural responses to noise stimuli containing gaps (where the gaps were either 8 or 32ms in duration, after a leading element of 5 or 7ms of noise) with responses to tones, in the context of a delayed match to sample task. This contrast revealed a unilateral response in left medial primary auditory cortex (encroaching on planum temporale and insular cortex) to the noise stimuli with gaps. While all three studies converge on similar conclusions with regard to a left-hemisphere specialization for temporal processing, it is also the case that there are other acoustic factors that need to be considered. All three studies conflate ‘temporal’ manipulations with changes in the number of onsets of acoustic stimulation (including unwanted onset phenomena such as spectral splatter). For example, when the rate of change between two tones is low [3, 26], there are fewer perceived onset events associated with the change from one pitch to another. When the rate of change is high, there are many more onsets. Furthermore, these studies [3, 26] included tone repetition times and durations so brief (around 21ms) that the sequences could be perceived as two separate auditory streams (Beauvois, 1998). Consequently, it is not easy to determine whether the left-lateralized responses arise due to the ‘temporal’ nature of the manipulations, or the fact that the manipulations led to the formation of multiple auditory onsets.

The concept of a left auditory preference for (rapid) temporal processing is certainly appealing, especially if one favours a domain-general rather than a domain-specific argument about the systems underlying the left-lateralized nature of speech [25]. However, approaching a theory of hemispheric asymmetries by positing complementary hemispheric networks might in fact impose a symmetry or balance to the brain that simply isn’t there. In other words, offering an account that affords a particular acoustic preference to each hemisphere, might lead to overlooking a true asymmetry, in which the right hemisphere shows genuine preferences for certain properties of the acoustic signal, while the left does not.

Hemispheric asymmetries in speech processing

Although it is generally assumed that speech perception is left-dominant in the human brain, methodologically it has not been simple to identify a satisfactory means of hemispheric comparisons using the standard univariate analysis of the signal obtained in PET or fMRI (Box 3). In contrast to the studies on spectral/temporal information or time windows, straightforward comparisons of conditions (e.g. speech > acoustically-matched control stimuli) have shown clearer evidence of left-dominant responses to speech. This preference has been shown for sentences [28-32], words [33], syllables [34], isolated phonemes [35] and phonologically relevant acoustic cues [36] as well as syntactic [37-39] and semantic information [40, 41]. This dominance is typically seen both in the extent of activation and in the size of the effect (although note the limitations of interpretation for these parameters, discussed in Box 3), and is most easily interpreted when right sided activation is not found. Arguments that the domain-general processing of sound might operate in a relatively subtle way are at odds with the strong left dominant responses to different kinds of linguistic information in speech, and with the commonly seen preferential response to sounds with pitch variation on the right.

Box 3. You say lateral, I say (bi)lateral.

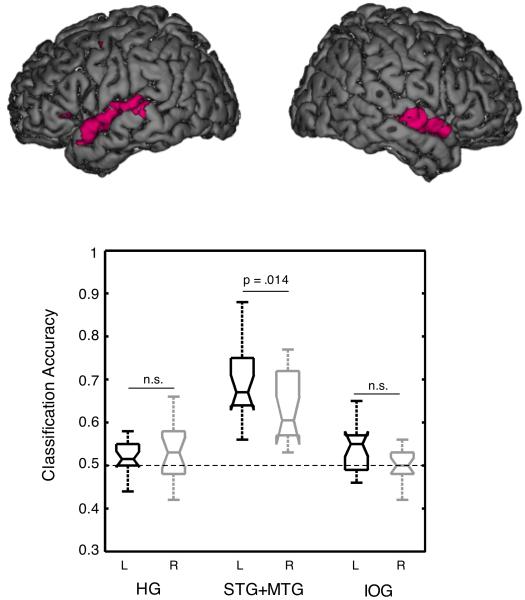

A problem in the investigation of functional hemispheric asymmetries is how to offer sufficient proof that an effect is present, or not. Laterality indices have been calculated using the difference in the peak statistical height and spatial extent of activations in the two hemispheres [7, 26]. However, counting voxels makes unwarranted assumptions about the way that thresholding the data affects the activation extents. In other cases, authors have ‘flipped’ images to facilitate subtractive comparisons of the left and right hemispheres (e.g. [27, 74]), but this does not take account of the anatomical asymmetries present between the hemispheres. Further difficulties arise in the selection of regions-of-interest (ROI) for targeted extraction of local data in the left and right temporal lobes. Selection of ROIs based on functional data, or using the coordinates of peak activations from the same or previous studies, potentially introduces problems of non-independence and arbitrariness to the analyses. Moreover, the sensitivity of the mean BOLD signal to subtle differences between conditions is compromised in large ROIs [75], which is an important consideration when the effects at hand are hypothesized to be subtle [4]. A recent study [31] introduced multivariate pattern analysis of unsmoothed fMRI data in anatomically-defined ROIs as a means of directly comparing acoustic and linguistic processing in the left and right temporal lobes. A linear support vector machine (SVM) was used to train and validate models in the classification of pairs of speech-derived conditions that varied in their acoustic properties and intelligibility (see main text and Figure). This method presents several advantages over previous approaches. First, the data were not subject to thresholding and its effects on apparent activation extent. Second, the ROIs were anatomically defined for each subject through parcellation of the cortical surface, allowing for the cross-hemispheric comparison of processing within task-relevant (STG, Heschl’s Gyrus) and control (inferior occipital gyrus) structures. Moreover, as SVMs are relatively robust to large numbers of voxels [76], this approach avoided the arbitrariness of small ROI selection without compromising the ability to detect effects.
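
The dependence of voxel-counting laterality indices on the statistical threshold can be illustrated with a short sketch. The index below uses one commonly used form, (L − R) / (L + R), which is an assumption here and not necessarily the formula used in the cited studies; the voxel statistics and thresholds are invented for illustration.

```python
def laterality_index(left, right):
    """(L - R) / (L + R): +1 is fully left, -1 fully right, 0.0 if both are zero."""
    total = left + right
    return 0.0 if total == 0 else (left - right) / total

def suprathreshold_count(stats, threshold):
    """Number of voxels whose statistic exceeds the threshold."""
    return sum(1 for s in stats if s > threshold)

# Hypothetical voxel-wise statistics (invented values):
# a small left cluster with high values, a larger right cluster with moderate values.
LEFT_STATS = [4.0 + i / 10 for i in range(10)]   # 4.0 up to 4.9
RIGHT_STATS = [2.5 + i / 20 for i in range(30)]  # 2.5 up to 3.95

def extent_based_li(threshold):
    """Laterality index computed from suprathreshold voxel counts."""
    return laterality_index(
        suprathreshold_count(LEFT_STATS, threshold),
        suprathreshold_count(RIGHT_STATS, threshold),
    )

for threshold in (2.3, 3.12, 4.02):  # arbitrary illustrative thresholds
    print(f"threshold {threshold}: LI = {extent_based_li(threshold):+.2f}")
```

With these invented values the same data appear right-lateralized at the most lenient threshold and completely left-lateralized at the strictest one, which is the kind of threshold dependence that motivates analyses that avoid thresholding altogether.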

Indexical cues in speech – the right for a voice?

Speech contains multiple cues in addition to the linguistic signal, and so cortical responses to undistorted speech may also include some of the speaker indexical information that has been shown to be associated with the right temporal lobe [42-49]. It is possible that aspects of this right-wards preference for speaker-related information may indeed be associated with an acoustic preference, and the right temporal lobe responds more than the left to sounds that are longer, have pitch variation, or have musical structure [23, 49-51]. One candidate explanation, in line with work on the ‘temporal voice areas’ in the right dorsolateral temporal lobe [42], is that the right hemisphere is sensitive to speaker-related acoustic information in speech, above and beyond the linguistic signal (see also [52] for a discussion of how this asymmetry might bear on the processing of vocal emotion). It is certainly striking that the non-linguistic specialization which can be demonstrated in auditory cortex is associated with non-linguistic processing of sounds in the right hemisphere, rather than with speech or the left hemisphere.

Information and modulations in speech

If rapidity/temporal information is not key to speech perception (and, as discussed above, this is at best a weak characterization of the nature of speech acoustics), then are there other acoustic cues to which the left temporal lobe might be specially sensitive? Two recent studies addressed this question, using PET [31, 53] and fMRI [31] to measure the neural response to amplitude and spectral modulations derived from actual speech signals. The experimental stimuli were constructed such that these different types of modulation could be investigated separately, and in combination. A further manipulation allowed for the comparison of conditions where the combination of amplitude and spectral detail led to an intelligible speech percept, or to an unintelligible sequence (when the amplitude and spectral modulations came from different sentences). The results of these studies showed strongly bilateral responses to amplitude modulations, spectral modulations and both in combination (when unintelligible). In the PET study, there was a numerical indication that the right STG responded additively to the combined (unintelligible) modulations, while the left hemisphere did not. In both studies, the contrast of the intelligible and unintelligible conditions was associated with left-dominant effects, and in the fMRI study this was confirmed statistically with direct comparison of left and right superior temporal regions using multivariate pattern classification (see Box 3). The unintelligible stimuli, which had comparable amplitude and spectral modulations to the intelligible condition, did not lead to left-lateralized effects, suggesting that the presence of the amplitude and spectral modulations is not sufficient to yield a speech-selective response profile in the brain.

Beyond “temporal” – the instruments of speech

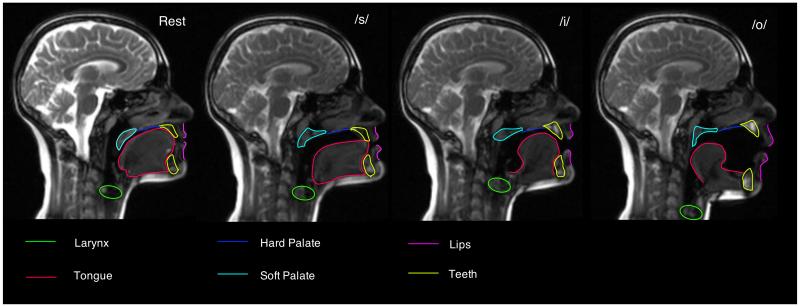

Does this mean that there can be no acoustic factors that might underlie the left-dominant responses to speech? Almost certainly not. However, those acoustic factors will not be simple, and they will be dictated by the nature of speech and the way it is produced [54]. For example, the studies described above [31, 53] explored the neural responses to spectral and amplitude modulations that are sufficient for speech comprehension, revealing a left-dominant effect only when those combinations could be understood. However, for the unintelligible combinations in those studies, it is possible that the manipulations altered the structure of the syllables, for example the onset/rime characteristics of the sequences (which are associated with amplitude onsets in mid-band spectral energy). Recent developments in speech science have demonstrated that multiple correlated cues are used by listeners when processing speech, and these cues are tethered as concurrent consequences of the mechanics and dynamics of articulation [55]. It is possible that brain activations that are selective for speech may reflect experience with these patterns as they occur within the sound structures of a language. Figure 3 shows examples of the configurations of human articulators during different kinds of speech sound production. While the tongue is positioned with great flexibility and range to make speech sounds, the movements of the articulators are constrained by the anatomy such that certain configural patterns are difficult or impossible – the speech sound ‘s’ is not possible with the jaw configuration of ‘o’. Furthermore, speech requires the controlled flow of air (voiced or not) and the movements of the articulators effect changes in the emphasis of the air flow by constriction (which is what is generally meant by place of articulation). This means that many possible configurations of the vocal tract are never used in speech: there are no nasalized /s/ sounds, and no glottal sonorant sounds.

Figure 3. Configurations of the vocal tract.

This figure shows images from a real-time MRI sequence during which an adult speaker of British English produced continuous speech. The leftmost panel shows the articulators at rest. The next panels show the configurations associated with the speech sounds, ‘s’, ‘eee’ and ‘aw’, respectively.

A domain general approach to speech perception will also require a more complex, dynamic model of the perceptual processes involved. The perceptual processing of sounds, even after only a brief exposure, is strongly affected by learnt expectations [56, 57]. It is hard to reconcile models which rely on basic acoustic factors (and which are implicitly feed-forward in their approach) with the ways that context and expectations influence perception (which require a more overt incorporation of bi-directional processes, e.g. predictive coding [58, 59]).

Conclusions: What’s right, and what’s left?

Need we abandon the pleasing simplicity of dimensions of auditory sensitivities, which lead to different patterns of processing in the left and right temporal lobes? No, in that the right temporal lobe is strongly selective for certain aspects of the signal; yes, in that the left temporal lobe is not. The complex pattern emerging suggests domain general mechanisms at play in the right temporal lobe, and more domain specific mechanisms in the left (see Box 4). Furthermore, if there are speech-selective acoustic sensitivities in the left hemisphere, these acoustic properties are highly unlikely to be dissociable from the articulatory systems and mechanisms that produce the sounds of speech.

Box 4. Outstanding questions.

To what extent is speech perception left lateralized purely due to the left lateralized nature of language, and what factors underlie this?

Do these left hemisphere preferences for speech and language interact with left/right attentional systems, and if so, how?

What is the functional relationship between the dominant hemisphere and handedness?

Box Figure. Determining asymmetries with multivariate pattern analysis.

The rendered cortical surface images show activation resulting from a contrast of intelligible speech with acoustically-matched control sounds. The boxplot illustrates multivariate classification of responses to intelligible and unintelligible items in left and right Heschl’s gyrus (HG), superior temporal cortex (STG+MTG) and inferior occipital gyrus (IOG). MTG = middle temporal gyrus.

References

- 1. Markus HS, Boland M. Cognitive activity monitored by noninvasive measurement of cerebral blood-flow velocity and its application to the investigation of cerebral-dominance. Cortex. 1992;28:575–581. doi: 10.1016/s0010-9452(13)80228-6.

- 2. Knecht S, et al. Handedness and hemispheric language dominance in healthy humans. Brain. 2000;123:2512–2518. doi: 10.1093/brain/123.12.2512.

- 3. Zatorre RJ, Belin P. Spectral and temporal processing in human auditory cortex. Cerebral Cortex. 2001;11:946–953. doi: 10.1093/cercor/11.10.946.

- 4. Poeppel D. The analysis of speech in different temporal integration windows: cerebral lateralization as ‘asymmetric sampling in time’. Speech Communication. 2003;41:245–255.

- 5. Efron R. Temporal perception, aphasia and deja vu. Brain. 1963;86:403. doi: 10.1093/brain/86.3.403.

- 6. Tallal P, Piercy M. Defects of nonverbal auditory-perception in children with developmental aphasia. Nature. 1973;241:468–469. doi: 10.1038/241468a0.

- 7. Obleser J, et al. Bilateral speech comprehension reflects differential sensitivity to spectral and temporal features. Journal of Neuroscience. 2008;28:8116–8123. doi: 10.1523/JNEUROSCI.1290-08.2008.

- 8. Bailey PJ, Summerfield Q. Information in speech: observations on the perception of [s]-stop clusters. Journal of Experimental Psychology: Human Perception and Performance. 1980;6:536–563. doi: 10.1037//0096-1523.6.3.536.

- 9. Hawkins S. Roles and representations of systematic fine phonetic detail in speech understanding. Journal of Phonetics. 2003;31:373–405.

- 10. Lisker L. Rapid versus rabid – catalog of acoustic features that may cue distinction. Journal of the Acoustical Society of America. 1977;62:S77–S78.

- 11. Stilp CE, et al. Cochlea-scaled spectral entropy predicts rate-invariant intelligibility of temporally distorted sentences. Journal of the Acoustical Society of America. 2010;128:2112–2126. doi: 10.1121/1.3483719.

- 12. Boemio A, et al. Hierarchical and asymmetric temporal sensitivity in human auditory cortices. Nature Neuroscience. 2005;8:389–395. doi: 10.1038/nn1409.

- 13. McGettigan C, et al. Neural Correlates of Sublexical Processing in Phonological Working Memory. Journal of Cognitive Neuroscience. 2011;23:961–977. doi: 10.1162/jocn.2010.21491.

- 14. Vaden KI, Jr., et al. Sublexical Properties of Spoken Words Modulate Activity in Broca’s Area but Not Superior Temporal Cortex: Implications for Models of Speech Recognition. Journal of Cognitive Neuroscience. 2011;23:2665–2674. doi: 10.1162/jocn.2011.21620.

- 15. Boucher VJ. Alphabet-related biases in psycholinguistic inquiries – considerations for direct theories of speech production and perception. Journal of Phonetics. 1994;22:1–18.

- 16. Port R. How are words stored in memory? Beyond phones and phonemes. New Ideas in Psychology. 2007;25:143–170.

- 17. Port RF. Rich memory and distributed phonology. Language Sciences. 2010;32:43–55.

- 18. Port RF. Language as a Social Institution: Why Phonemes and Words Do Not Live in the Brain. Ecological Psychology. 2010;22:304–326.

- 19. Johnsrude IS, et al. Left-hemisphere specialization for the processing of acoustic transients. Neuroreport. 1997;8:1761–1765. doi: 10.1097/00001756-199705060-00038.

- 20. Fiez JA, et al. PET studies of auditory and phonological processing – effects of stimulus characteristics and task demands. Journal of Cognitive Neuroscience. 1995;7:357–375. doi: 10.1162/jocn.1995.7.3.357.

- 21. Temple E, et al. Disruption of the neural response to rapid acoustic stimuli in dyslexia: Evidence from functional MRI. Proceedings of the National Academy of Sciences of the United States of America. 2000;97:13907–13912. doi: 10.1073/pnas.240461697.

- 22. Poldrack RA, et al. Relations between the neural bases of dynamic auditory processing and phonological processing: Evidence from fMRI. Journal of Cognitive Neuroscience. 2001;13:687–697. doi: 10.1162/089892901750363235.

- 23. Belin P, et al. Lateralization of speech and auditory temporal processing. Journal of Cognitive Neuroscience. 1998;10:536–540. doi: 10.1162/089892998562834.

- 24. Schonwiesner M, et al. Hemispheric asymmetry for spectral and temporal processing in the human antero-lateral auditory belt cortex. European Journal of Neuroscience. 2005;22:1521–1528. doi: 10.1111/j.1460-9568.2005.04315.x.

- 25. Zatorre RJ, Gandour JT. Neural specializations for speech and pitch: moving beyond the dichotomies. Philosophical Transactions of the Royal Society B-Biological Sciences. 2008;363:1087–1104. doi: 10.1098/rstb.2007.2161.

- 26. Jamison HL, et al. Hemispheric specialization for processing auditory nonspeech stimuli. Cerebral Cortex. 2006;16:1266–1275. doi: 10.1093/cercor/bhj068.

- 27. Zaehle T, et al. Evidence for rapid auditory perception as the foundation of speech processing: a sparse temporal sampling fMRI study. European Journal of Neuroscience. 2004;20:2447–2456. doi: 10.1111/j.1460-9568.2004.03687.x.

- 28. Scott SK, et al. Identification of a pathway for intelligible speech in the left temporal lobe. Brain. 2000;123:2400–2406. doi: 10.1093/brain/123.12.2400.

- 29. Eisner F, et al. Inferior frontal gyrus activation predicts individual differences in perceptual learning of cochlear-implant simulations. Journal of Neuroscience. 2010;30:7179–7186. doi: 10.1523/JNEUROSCI.4040-09.2010.

- 30. Narain C, et al. Defining a left-lateralized response specific to intelligible speech using fMRI. Cerebral Cortex. 2003;13:1362–1368. doi: 10.1093/cercor/bhg083.

- 31. McGettigan C, et al. An application of univariate and multivariate approaches in fMRI to quantifying the hemispheric lateralization of acoustic and linguistic processes. Journal of Cognitive Neuroscience. 2012;24:636–652. doi: 10.1162/jocn_a_00161.

- 32. McGettigan C, et al. Speech comprehension aided by multiple modalities: Behavioural and neural interactions. Neuropsychologia. 2012;50:762–776. doi: 10.1016/j.neuropsychologia.2012.01.010.

- 33. Mummery CJ, et al. Functional neuroimaging of speech perception in six normal and two aphasic subjects. Journal of the Acoustical Society of America. 1999;106:449–457. doi: 10.1121/1.427068.

- 34. Liebenthal E, et al. Neural substrates of phonemic perception. Cerebral Cortex. 2005;15:1621–1631. doi: 10.1093/cercor/bhi040.

- 35. Agnew ZK, et al. Discriminating between Auditory and Motor Cortical Responses to Speech and Nonspeech Mouth Sounds. Journal of Cognitive Neuroscience. 2011;23:4038–4047. doi: 10.1162/jocn_a_00106.

- 36. Jacquemot C, et al. Phonological grammar shapes the auditory cortex: A functional magnetic resonance imaging study. Journal of Neuroscience. 2003;23:9541–9546. doi: 10.1523/JNEUROSCI.23-29-09541.2003.

- 37. Friederici AD. Towards a neural basis of auditory sentence processing. Trends in Cognitive Sciences. 2002;6:78–84. doi: 10.1016/s1364-6613(00)01839-8.

- 38. Friederici AD, et al. Disentangling syntax and intelligibility in auditory language comprehension. Human Brain Mapping. 2010;31:448–457. doi: 10.1002/hbm.20878.

- 39. Herrmann B, et al. Dissociable neural imprints of perception and grammar in auditory functional imaging. Human Brain Mapping. 2012;33:584–595. doi: 10.1002/hbm.21235.

- 40. Obleser J, et al. Functional integration across brain regions improves speech perception under adverse listening conditions. Journal of Neuroscience. 2007;27:2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007.

- 41. Obleser J, Kotz SA. Expectancy Constraints in Degraded Speech Modulate the Language Comprehension Network. Cerebral Cortex. 2010;20:633–640. doi: 10.1093/cercor/bhp128.

- 42. Belin P, et al. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- 43. Belin P, Zatorre RJ. Adaptation to speaker’s voice in right anterior temporal lobe. Neuroreport. 2003;14:2105–2109. doi: 10.1097/00001756-200311140-00019.

- 44. Belin P, et al. Human temporal-lobe response to vocal sounds. Cognitive Brain Research. 2002;13:17–26. doi: 10.1016/s0926-6410(01)00084-2.

- 45. von Kriegstein K, et al. Modulation of neural responses to speech by directing attention to voices or verbal content. Cognitive Brain Research. 2003;17:48–55. doi: 10.1016/s0926-6410(03)00079-x.

- 46. Kriegstein KV, Giraud AL. Distinct functional substrates along the right superior temporal sulcus for the processing of voices. Neuroimage. 2004;22:948–955. doi: 10.1016/j.neuroimage.2004.02.020.

- 47. von Kriegstein K, et al. Interaction of face and voice areas during speaker recognition. Journal of Cognitive Neuroscience. 2005;17:367–376. doi: 10.1162/0898929053279577.

- 48. Petkov CI, et al. A voice region in the monkey brain. Nature Neuroscience. 2008;11:367–374. doi: 10.1038/nn2043.

- 49. Johnsrude IS, et al. Functional specificity in the right human auditory cortex for perceiving pitch direction. Brain. 2000;123:155–163. doi: 10.1093/brain/123.1.155.

- 50. Meyer M, et al. fMRI reveals brain regions mediating slow prosodic modulations in spoken sentences. Human Brain Mapping. 2002;17:73–88. doi: 10.1002/hbm.10042.

- 51. Meyer M, et al. Brain activity varies with modulation of dynamic pitch variance in sentence melody. Brain and Language. 2004;89:277–289. doi: 10.1016/S0093-934X(03)00350-X.

- 52. Kotz SA, Schwartze M. Cortical speech processing unplugged: a timely subcortico-cortical framework. Trends in Cognitive Sciences. 2010;14:392–399. doi: 10.1016/j.tics.2010.06.005.

- 53. Rosen S, et al. Hemispheric Asymmetries in Speech Perception: Sense, Nonsense and Modulations. PLoS ONE. 2011;6. doi: 10.1371/journal.pone.0024672.

- 54. Diehl RL. Acoustic and auditory phonetics: the adaptive design of speech sound systems. Philosophical Transactions of the Royal Society B-Biological Sciences. 2008;363:965–978. doi: 10.1098/rstb.2007.2153.

- 55. Kluender KR, Alexander JM. Perception of speech sounds. In: Basbaum AI, Kaneko A, Shepard GM, Westheimer G, editors. The Senses: a Comprehensive Reference. Elsevier; 2010. pp. 829–860.

- 56. Stilp CE, et al. Auditory color constancy: Calibration to reliable spectral properties across nonspeech context and targets. Attention Perception & Psychophysics. 2010;72:470–480. doi: 10.3758/APP.72.2.470.

- 57. Ulanovsky N, et al. Processing of low-probability sounds by cortical neurons. Nature Neuroscience. 2003;6:391–398. doi: 10.1038/nn1032.

- 58. Gagnepain P, et al. Temporal predictive codes for spoken words in auditory cortex. Current Biology. 2012. doi: 10.1016/j.cub.2012.02.015.

- 59. Schwartze M, et al. Temporal aspects of prediction in audition: Cortical and subcortical neural mechanisms. International Journal of Psychophysiology. 2012;83:200–207. doi: 10.1016/j.ijpsycho.2011.11.003.

- 60. Shankweiler D, Studdert-Kennedy M. Identification of consonants and vowels presented to left and right ears. Quarterly Journal of Experimental Psychology. 1967;19:59–63. doi: 10.1080/14640746708400069.

- 61. Studdert-Kennedy M, Shankweiler D. Hemispheric specialization for speech perception. Journal of the Acoustical Society of America. 1970;48:579–594. doi: 10.1121/1.1912174.

- 62. Cutting JE. Two Left-Hemisphere Mechanisms in Speech-Perception. Perception & Psychophysics. 1974;16:601–612.

- 63. Schwartz J, Tallal P. Rate of Acoustic Change May Underlie Hemispheric-Specialization for Speech-Perception. Science. 1980;207:1380–1381. doi: 10.1126/science.7355297.

- 64. Giraud A-L, et al. Endogenous cortical rhythms determine cerebral specialization for speech perception and production. Neuron. 2007;56:1127–1134. doi: 10.1016/j.neuron.2007.09.038.

- 65. Luo H, Poeppel D. Phase patterns of neuronal responses reliably discriminate speech in human auditory cortex. Neuron. 2007;54:1001–1010. doi: 10.1016/j.neuron.2007.06.004.

- 66. Ahissar E, et al. Speech comprehension is correlated with temporal response patterns recorded from auditory cortex. Proceedings of the National Academy of Sciences of the United States of America. 2001;98:13367–13372. doi: 10.1073/pnas.201400998.

- 67. Luo H, et al. Auditory Cortex Tracks Both Auditory and Visual Stimulus Dynamics Using Low-Frequency Neuronal Phase Modulation. PLoS Biology. 2010;8. doi: 10.1371/journal.pbio.1000445.

- 68. Kerlin JR, et al. Attentional Gain Control of Ongoing Cortical Speech Representations in a "Cocktail Party". Journal of Neuroscience. 2010;30:620–628. doi: 10.1523/JNEUROSCI.3631-09.2010.

- 69. Obleser J, Weisz N. Suppressed Alpha Oscillations Predict Intelligibility of Speech and its Acoustic Details. Cerebral Cortex. 2011.

- 70. Palva S, Palva JM. New vistas for alpha-frequency band oscillations. Trends in Neurosciences. 2007;30:150–158. doi: 10.1016/j.tins.2007.02.001.

- 71. Jensen O, Colgin LL. Cross-frequency coupling between neuronal oscillations. Trends in Cognitive Sciences. 2007;11:267–269. doi: 10.1016/j.tics.2007.05.003.

- 72. Palva JM, et al. Phase synchrony among neuronal oscillations in the human cortex. Journal of Neuroscience. 2005;25:3962–3972. doi: 10.1523/JNEUROSCI.4250-04.2005.

- 73. Hanslmayr S, et al. The relationship between brain oscillations and BOLD signal during memory formation: a combined EEG-fMRI study. Journal of Neuroscience. 2011;31:15674–15680. doi: 10.1523/JNEUROSCI.3140-11.2011.

- 74. Josse G, et al. Predicting Language Lateralization from Gray Matter. Journal of Neuroscience. 2009;29:13516–13523. doi: 10.1523/JNEUROSCI.1680-09.2009.

- 75. Poldrack RA. Region of interest analysis for fMRI. Social Cognitive and Affective Neuroscience. 2007;2:67–70. doi: 10.1093/scan/nsm006.

- 76. Misaki M, et al. Comparison of multivariate classifiers and response normalizations for pattern-information fMRI. Neuroimage. 2010;53:103–118. doi: 10.1016/j.neuroimage.2010.05.051.