Summary

Functional additive models (FAMs) provide a flexible yet simple framework for regressions involving functional predictors. The utilization of a data-driven basis in an additive rather than linear structure naturally extends the classical functional linear model. However, the critical issue of selecting nonlinear additive components has been less studied. In this work, we propose a new regularization framework for structure estimation in the context of reproducing kernel Hilbert spaces. The proposed approach takes advantage of the functional principal components, which greatly facilitates the implementation and the theoretical analysis. The selection and estimation are achieved by penalized least squares using a penalty that encourages a sparse structure of the additive components. Theoretical properties such as the rate of convergence are investigated. The empirical performance is demonstrated through simulation studies and a real data application.

Keywords: Component selection, Additive models, Functional data analysis, Smoothing spline, Principal components, Reproducing kernel Hilbert space

1. Introduction

Large complex data collected in modern science and technology impose tremendous challenges on traditional statistical methods due to their high-dimensionality, massive volume and complicated structures. Emerging as a promising field, functional data analysis (FDA) employs random functions as model units and is designed to model data distributed over continua such as time, space and wavelength; see Ramsay and Silverman (2005) for a comprehensive introduction. Such data may be viewed as realizations of latent or observed stochastic processes and are commonly encountered in many fields, e.g. longitudinal studies, microarray experiments, brain images.

Regression models involving functional objects play a major role in the FDA literature. The most widely used is the functional linear model, in which a scalar response Y is regressed on a functional predictor X through a linear operator

$$E(Y \mid X) = b_0 + \int_{\mathcal{T}} X(t)\,\beta(t)\,dt, \tag{1}$$

where X(t) is often assumed to be a smooth and square-integrable random function defined on a compact domain $\mathcal{T}$, and β(t) is the regression parameter function, which is also assumed to be smooth and square-integrable. A commonly adopted approach for fitting model (1) is basis expansion, i.e. representing the functional predictor as a linear combination of a set of basis functions {ϕk}, $X(t) = \mu(t) + \sum_{k=1}^{\infty} \xi_k \phi_k(t)$, where μ(t) = EX(t). Model (1) is then transformed to a linear form in the coefficients, $E(Y \mid X) = \beta_0 + \sum_{k=1}^{\infty} \xi_k \beta_k$, where $\beta_k = \int_{\mathcal{T}} \beta(t)\phi_k(t)\,dt$ and $\beta_0 = b_0 + \int_{\mathcal{T}} \beta(t)\mu(t)\,dt$. More references on functional linear regression can be found in Cardot et al. (1999, 2003), Fan and Zhang (2000), etc. Extensions to generalized functional linear models were proposed by James (2002), Müller and Stadtmüller (2005) and Li et al. (2010). The basis set {ϕk} can be either predetermined (e.g. Fourier basis, wavelets, B-splines) or data-driven. One convenient choice for the latter is the eigenbasis of the auto-covariance operator of X, in which case the random coefficients {ξk} are called functional principal component (FPC) scores. The FPC scores have mean zero and variances equal to the corresponding eigenvalues {λk, k = 1, 2, …}. This isomorphic representation of X is referred to as the Karhunen-Loève expansion, and the related methods are often called functional principal component analysis (FPCA) (Rice and Silverman, 1991; Yao et al., 2005; Hall et al., 2006; Hall and Hosseini-Nasab, 2006; Yao, 2007). Due to the rapid decay of the eigenvalues, the orthogonal eigenbasis provides a more parsimonious and efficient representation than other bases. Furthermore, FPC scores are mutually uncorrelated, which can considerably simplify the model fitting and theoretical analysis. We focus on the FPC representation of the functional regression throughout this paper; nevertheless, the proposal is also applicable to other prespecified bases.
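To make the notion of an FPC score concrete, the following minimal sketch approximates a score as the projection of a centered curve onto one eigenfunction by numerical integration. It assumes a densely observed, noise-free curve and a known eigenfunction, which is simpler than the FPCA procedures cited above; the helper names `trapezoid` and `fpc_score`, the toy mean curve and the toy eigenfunction are illustrative choices, not part of the paper.

```python
import math

def trapezoid(values, grid):
    """Trapezoidal rule for samples `values` taken on an increasing `grid`."""
    return sum(
        0.5 * (values[j] + values[j + 1]) * (grid[j + 1] - grid[j])
        for j in range(len(grid) - 1)
    )

def fpc_score(x, mu, phi, grid):
    """Approximate the integral of (x(t) - mu(t)) * phi(t) over the grid."""
    integrand = [(xj - mj) * pj for xj, mj, pj in zip(x, mu, phi)]
    return trapezoid(integrand, grid)

# Toy check on [0, 10]: a curve built as mu + 2 * phi_1 should give a score near 2.
grid = [10 * j / 1000 for j in range(1001)]
mu = [t + math.sin(t) for t in grid]  # hypothetical mean curve
phi1 = [math.sqrt(2 / 10) * math.sin(2 * math.pi * t / 10) for t in grid]  # unit L2 norm
x = [m + 2.0 * p for m, p in zip(mu, phi1)]
score = fpc_score(x, mu, phi1, grid)
print(score)
```

With sparse or noisy observations this direct integration is no longer adequate, which is why dedicated FPCA estimators are used in practice.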

Although widely used, the linear relationship can be restrictive for general applications. This linear assumption was relaxed by Müller and Yao (2008), who proposed the functional additive model (FAM). The FAM provides a flexible yet practical framework that accommodates nonlinear associations and at the same time avoids the curse of dimensionality encountered in high-dimensional nonparametric regression problems (Hastie and Tibshirani, 1990). In the case of a scalar response, the linear structure is replaced by a sum of nonlinear functional components, i.e.

$$E(Y \mid X) = b_0 + \sum_{k=1}^{\infty} f_k(\xi_k), \tag{2}$$

where {fk(·)} are unknown smooth functions. In Müller and Yao (2008), the FAM was fitted by estimating {ξk} using FPCA (Yao et al., 2005) and estimating {fk} using local polynomial smoothing.

Clearly, regularizing (2) is necessary. In Müller and Yao (2008) the regularization was achieved by truncating the eigen-sequence to the first K leading components, where K was chosen to explain the majority of the total variation in the predictor X. Despite its simplicity, this naive truncation procedure can be inadequate in many complex problems. First, the impact of FPCs on the response does not necessarily coincide with their magnitudes specified by the auto-covariance operator of the predictor process alone. For instance, some higher-order FPCs may contribute to the regression significantly more than the leading FPCs. This phenomenon was discussed by Hadi and Ling (1998) in the principal component regression context, and was later observed in real examples of high-dimensional data (Bair et al., 2006) and functional data (Zhu et al., 2007). Second, although a small number of leading FPCs might be able to capture the major variability in X due to the rapidly decaying eigenvalues, one often needs to include more components for better regression performance, especially for prediction, as observed in Yao and Müller (2010). On the other hand, retaining more FPCs than needed brings the risk of over-fitting, which is caused by including components that contribute little to the regression but introduce noise. Therefore a desirable strategy is to automatically identify “important” components out of a sufficiently large number of candidates, while shrinking the “unimportant” ones to zero.

With the above consideration, we seek an entirely new regularization and estimation framework for identifying the sparse structure of the FAM regression. Model selection that encourages sparse structure has received substantial attention in the last decade, mostly due to the rapidly emerging high-dimensional data. In the context of linear regression, the seminal works include Lasso (Tibshirani, 1996), adaptive Lasso (Zou, 2006), SCAD (Fan and Li, 2001) and the references therein. Traditional additive models are considered by Lin and Zhang (2006), Meier et al. (2009) and Ravikumar et al. (2009), and the extensions to generalized additive models (GAM) are studied by Wood (2006) and Marra and Wood (2011). In comparison with these works, sparse estimation in functional regression is much less explored. To our knowledge, most existing works are for functional linear models with a sparse penalty (James et al., 2009; Zhu et al., 2010) or an L2 type penalty (Goldsmith et al., 2011). Relevant research for additive structures is scant in the literature. In this paper, we consider the selection and estimation of the additive components in FAMs that encourage a sparse structure, in the framework of reproducing kernel Hilbert space (RKHS). Unlike in standard additive models, the FPC scores are not directly observed in FAMs. They need to be first estimated from the functional covariates and then plugged into the additive model. The estimated scores are random variables, which creates a major challenge for the theoretical exploration. It is necessary to properly take into account the influence of the unobservable FPC scores on the resulting estimator. Furthermore, the functional curve X is not fully observed either. We typically collect repeated and irregularly spaced sample points, which are subject to measurement errors. The presence of measurement error in the data adds extra difficulty for model implementation and inference. All of these issues are tackled in this paper.
We propose a two-step estimation procedure to achieve the desired sparse structure estimation in FAM. For the regularization, we adopt the COSSO (Lin and Zhang, 2006) penalty due to its direct shrinkage effect on functions in the reproducing kernel Hilbert space. On the practical side, the proposed method is easy to implement, by taking advantage of existing algorithms of FPCA.

The rest of the article is organized as follows. In Section 2, we present the proposed approach and algorithm, as well as the theoretical properties of the resulting estimator. Simulation results with comparison to existing methods are included in Section 3. We apply the proposed method to the Tecator data in Section 4, studying the regression of the protein content on the absorbance spectrum. The concluding remarks are provided in Section 5, while the details of the estimation procedure and technical proofs are deferred to the appendices.

2. Structured functional additive model regression

Let Y be a scalar response associated with a functional predictor X(·) defined on a compact domain $\mathcal{T}$, and let {yi, xi(·)}, i = 1, …, n, be i.i.d. realizations of the pair {Y, X(·)}. The trajectories are often observed intermittently on possibly irregular grids ti = (ti1, …, tiNi)T. Denote the discretized xi(t) in vector form as xi = (xi1, …, xiNi)T. To be realistic, we also assume that the trajectories are subject to i.i.d. measurement error, i.e. xij = xi(tij) + eij with Eeij = 0 and Var(eij) = ν². Following the FPCA of Yao et al. (2005) and Yao (2007), denote ξi,∞ = (ξi1, ξi2, …)T as the sequence of FPC scores of xi, which is associated with eigenvalues {λ1, λ2, …} such that λ1 ≥ λ2 ≥ ⋯ ≥ 0.

2.1. Proposed methodology

As discussed in Section 1, the theory of FPCA enables an isomorphic transformation of random functions to their FPC scores, which brings tremendous convenience to model fitting and theoretical development in functional linear regression. To establish a framework for nonlinear and nonparametric regression, we consider regressing the scalar responses {yi} directly on the sequences of FPC scores {ξi,∞} of {xi}. For the convenience of model regularization, we would like to restrict the predictor variables (i.e. FPC scores) to take values in a closed and bounded subset of the real line, e.g. [0, 1] without loss of generality. This is easy to achieve by transforming the FPC scores through a monotonic function Ψ(·): ℝ → [0, 1], for all {ξik}. In fact the choice of Ψ is rather flexible. A wide range of CDFs can be used (see (a.2) in Section 2.2 for the regularity condition). Additionally, one may choose Ψ so that the transformed variables have similar or identical variation. This can be achieved by allowing Ψ(·) to depend on the eigenvalues {λk}, where {λk} serve as scaling variables. For simplicity, in the sequel we use a suitable CDF (e.g. normal), denoted by Ψ(·, λk), from a location-scale family with mean zero and variance λk. It is obvious that, if the ξik are normally distributed, the normal CDF leads to transformed variables that are uniformly distributed on [0, 1].
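As a small illustration of the transformation described above, the sketch below maps a score to [0, 1] with the CDF of a normal distribution with mean zero and variance λ. It uses only the Python standard library; the function name `psi` is a hypothetical choice made here for illustration.

```python
from statistics import NormalDist

def psi(xi: float, lam: float) -> float:
    """Map an FPC score to [0, 1] using the CDF of N(0, lam)."""
    if lam <= 0:
        raise ValueError("the eigenvalue must be positive")
    return NormalDist(mu=0.0, sigma=lam ** 0.5).cdf(xi)

# A score one standard deviation above zero maps to the same value whatever
# the eigenvalue, which is how the eigenvalue acts as a scaling variable.
print(psi(2.0, 4.0), psi(0.5, 0.25))
```

Because the eigenvalue enters only through the standard deviation, components with very different variances end up on a common scale before the additive fit.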

Denoting the transformed variable of ξik by ζik, i.e. ζik = Ψ(ξik, λk), and denoting ζi,∞ = (ζi1, ζi2, …)T, we propose an additive model as follows:

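$$y_i = b_0 + \sum_{k=1}^{\infty} f_{0k}(\zeta_{ik}) + \epsilon_i, \tag{3}$$

where {∊i} are independent errors with zero mean and variance σ², and $f_0(\zeta_{i,\infty}) = b_0 + \sum_{k=1}^{\infty} f_{0k}(\zeta_{ik})$ is a smooth function. For each k, let Hk be the lth order Sobolev Hilbert space on [0, 1], defined by $H_k([0, 1]) = \{g \mid g^{(\nu)}$ is absolutely continuous for $\nu = 0, 1, \ldots, l - 1$; $g^{(l)} \in L^2\}$. One can show that Hk is a reproducing kernel Hilbert space (RKHS) equipped with the norm

$$\|g\|^2 = \sum_{\nu=0}^{l-1} \left\{ \int_0^1 g^{(\nu)}(t)\,dt \right\}^2 + \int_0^1 \left\{ g^{(l)}(t) \right\}^2 dt.$$

See Wahba (1990) and Lin and Zhang (2006) for more details. Note that Hk has the orthogonal decomposition $H_k = \{1\} \oplus \bar{H}_k$. Then the additive function f0 corresponds to $\mathcal{F}$, which is a direct sum of subspaces, i.e. $\mathcal{F} = \{1\} \oplus \sum_{k=1}^{\infty} \bar{H}_k$ with $f_{0k} \in \bar{H}_k$ for all k. It is easy to check that, for any $f = b + \sum_{k} f_k \in \mathcal{F}$ with $f_k \in \bar{H}_k$, we have $\|f\|^2 = b^2 + \sum_{k=1}^{\infty} \|f_k\|^2$. In this paper, we take l = 2, but the results can be extended to other cases straightforwardly. To distinguish the Sobolev norm from the L2 norm, we write ∥ · ∥ for the former and ∥ · ∥L2 for the latter.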

As motivated in Section 1, it is desirable to impose some type of regularization on model (3) to select “important” components. An important assumption commonly made in high-dimensional linear regression is the sparse structure of the underlying true model. This assumption is also critical in the context of functional data analysis, as it enables us to develop a more systematic strategy than the heuristic truncation that retains the leading FPCs. Although widely adopted, retaining the leading FPCs is a strategy guided solely by the covariance operator of the predictor X, and therefore it fails to take into account the response Y. To be more flexible, we assume that the number of important functional additive components that contribute to the response is finite, but not necessarily restricted to the leading terms. In particular, we denote by $\mathcal{I}$ the index set of the important components and assume that $|\mathcal{I}| < \infty$, where ∣ · ∣ denotes the cardinality of a set. In other words, there exists a sufficiently large s such that $\mathcal{I} \subseteq \{1, \ldots, s\}$, which implies that fk ≡ 0 as long as k > s. The FAM is thus equivalent to

$$y_i = b_0 + \sum_{k=1}^{s} f_{0k}(\zeta_{ik}) + \epsilon_i. \tag{4}$$

Note that the initial truncation s merely controls the total number of additive components to be considered, which is different from the heuristic truncation suggested by Yao et al. (2005) and Müller and Yao (2008) based on model selection criteria such as cross validation, AIC, or the fraction of variance explained. In practice we suggest choosing s large enough that nearly 100% of the total variation is explained. This often leads to more than 10 FPCs in most empirical cases.
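The initial truncation can be read off the estimated eigenvalues. The sketch below returns the smallest s whose leading eigenvalues reach a given fraction of the total variance; the function name `choose_truncation` and the default threshold 0.999 are illustrative assumptions, since the text only asks for "nearly 100%".

```python
def choose_truncation(eigenvalues, threshold=0.999):
    """Smallest s such that the first s eigenvalues explain at least
    `threshold` of the total variance (eigenvalues sorted in decreasing order)."""
    total = sum(eigenvalues)
    running = 0.0
    for s, lam in enumerate(eigenvalues, start=1):
        running += lam
        if running / total >= threshold:
            return s
    return len(eigenvalues)

# Toy eigenvalues: the cumulative fractions are 0.4, 0.7, 0.9, 1.0.
print(choose_truncation([4.0, 3.0, 2.0, 1.0], threshold=0.85))
```

A threshold close to one deliberately keeps many candidates; the component selection is then left to the penalty rather than to the truncation.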

With the above assumption, the regression function $f_0(\zeta) = b_0 + \sum_{k=1}^{s} f_{0k}(\zeta_k)$ lies in the truncated subspace $\mathcal{F}^s = \{1\} \oplus \sum_{k=1}^{s} \bar{H}_k$ of $\mathcal{F}$, where ζ is the truncated version of ζ∞, i.e. ζ = (ζ1, …, ζs)T with the dependence on s suppressed if no confusion arises. To nonparametrically regularize the unknown smooth functions {f0k}, we employ the COSSO regularization defined for function estimation in RKHS and estimate f0 by finding $f \in \mathcal{F}^s$ that minimizes

$$\frac{1}{n}\sum_{i=1}^{n} \{y_i - f(\zeta_i)\}^2 + \tau_n^2 J(f), \qquad J(f) = \sum_{k=1}^{s} \|P^k f\|, \tag{5}$$

where $P^k f$ is the orthogonal projection of f onto $\bar{H}_k$. Here τn is the only smoothing parameter that requires tuning, whereas the common smoothing spline approach involves multiple smoothing parameters. The penalty J(f) is a convex functional and is a pseudonorm in $\mathcal{F}^s$. One interesting connection between COSSO and LASSO is that, when f0k(ζk) = ζkβ0k, the penalty in (5) reduces to $\sum_{k=1}^{s} |\beta_{0k}|$, which becomes the adaptive Lasso penalty (Zou, 2006).
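The reduction to a Lasso-type penalty in the linear case can be checked in a few lines. The sketch below assumes l = 2 and the Sobolev norm of Lin and Zhang (2006), $\|g\|^2 = \{\int_0^1 g\}^2 + \{\int_0^1 g'\}^2 + \int_0^1 (g'')^2$, and writes the non-constant part of a linear component as $g_k(\zeta) = \beta_{0k}(\zeta - 1/2)$; the centering at 1/2 is an assumption made here so that $g_k$ integrates to zero and is therefore orthogonal to the constants.

$$
\begin{aligned}
\|g_k\|^2 &= \left\{\int_0^1 \beta_{0k}\left(\zeta - \tfrac{1}{2}\right) d\zeta\right\}^2 + \left\{\int_0^1 \beta_{0k}\, d\zeta\right\}^2 + \int_0^1 0^2\, d\zeta \\
&= 0 + \beta_{0k}^2 + 0, \\
\|g_k\| &= |\beta_{0k}|, \qquad \sum_{k=1}^{s} \|g_k\| = \sum_{k=1}^{s} |\beta_{0k}|.
\end{aligned}
$$

The constant absorbed by the centering moves into the intercept, which is not penalized.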

Different from the standard additive regression models, the transformed FPC scores {ζi} serving as predictor variables in (5) cannot be observed. Therefore we need to estimate the FPC scores first before the estimation and structure selection of f. A simple two-step algorithm is given as follows.

Algorithm

Step 1

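Implement FPCA to estimate the FPC scores $\{\hat\xi_{i1}, \ldots, \hat\xi_{is}\}$ of xi, and then the transformed variables $\hat\zeta_{ik} = \Psi(\hat\xi_{ik}, \hat\lambda_k)$, where $\hat\lambda_k$ is the estimated eigenvalue, and s is chosen to explain nearly 100% of the total variation.

Step 2

Implement the COSSO algorithm of Lin and Zhang (2006) to solve

$$\min_{f \in \mathcal{F}^s} \; \frac{1}{n}\sum_{i=1}^{n} \{y_i - f(\hat\zeta_i)\}^2 + \tau_n^2 J(f). \tag{6}$$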

We refer to Appendix A for details in the cases of densely and sparsely observed predictor trajectories. We call the proposed method component selection and estimation for functional additive models, abbreviated as CSE-FAM.

2.2. Theoretical properties

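In this subsection we focus on the consistency of the CSE-FAM estimator for the case when {xi(t)} are densely observed, where the rate of convergence is assessed using the empirical norm. In particular, we introduce the empirical norm and the entropy of the truncated subspace $\mathcal{F}^s$ as follows. Let $g \in \mathcal{F}^s$; the empirical norm of g is defined as $\|g\|_n = \{ n^{-1}\sum_{i=1}^{n} g^2(\zeta_i) \}^{1/2}$. The empirical inner product of the error term ∊ and g is defined as $(\epsilon, g)_n = n^{-1}\sum_{i=1}^{n} \epsilon_i\, g(\zeta_i)$. Similarly, the empirical inner product of f and g in $\mathcal{F}^s$ is $(f, g)_n = n^{-1}\sum_{i=1}^{n} f(\zeta_i)\, g(\zeta_i)$.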
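For readers who prefer code to notation, the sketch below evaluates an empirical norm and an empirical inner product under the usual sample-average definitions, which are assumed here. Each design point is a tuple of transformed scores, and `empirical_norm` and `empirical_inner` are hypothetical helper names.

```python
import math

def empirical_norm(g, zetas):
    """Square root of the sample average of g(zeta_i)^2."""
    return math.sqrt(sum(g(z) ** 2 for z in zetas) / len(zetas))

def empirical_inner(f, g, zetas):
    """Sample average of f(zeta_i) * g(zeta_i)."""
    return sum(f(z) * g(z) for z in zetas) / len(zetas)

# Two design points with a single transformed score each.
zetas = [(0.0,), (1.0,)]
g = lambda z: 2.0 * z[0]
print(empirical_norm(g, zetas), empirical_inner(g, g, zetas))
```

Both quantities depend on the design points only, so they measure accuracy at the observed (or estimated) scores rather than over the whole unit cube.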

The assumptions on the regression function f and the transformation Ψ(·,·) are listed below in (a.1)–(a.2), while the commonly adopted regularity conditions on the functional predictors {xi(t)}, the dense design, and the smoothing procedures are deferred to (b.1)–(b.3) in Appendix B.

(a.1) For any , there exist independent with , such that with probability 1,

(a.2) The transformation function Ψ(ξ, λ) is differentiable in ξ and λ, and satisfies $|\partial \Psi(\xi, \lambda)/\partial \xi| \le C\lambda^{\gamma}$ and $|\partial \Psi(\xi, \lambda)/\partial \lambda| \le C\lambda^{\gamma}|\xi|$ for some constants C and γ (γ < 0).

The assumption (a.1) is a regularity condition that controls the amount of fluctuation in f relative to its L2 norm. For (a.2), one can easily verify that, if Ψ(·,·) is chosen to be the normal CDF with zero mean and variance λ, then C = 1 and γ = −1/2 (when λ > 1) or γ = −3/2 (when 0 < λ < 1). One can also choose the CDF of Student's t distributions with variance λ.
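The constants quoted for the normal CDF can be verified directly. The derivation below is a sketch that assumes $\Psi(\xi, \lambda) = \Phi(\xi/\sqrt{\lambda})$, where $\Phi$ and $\varphi$ denote the standard normal CDF and density (notation introduced here for illustration), and uses $\varphi \le (2\pi)^{-1/2} < 1$.

$$
\begin{aligned}
\left| \frac{\partial \Psi}{\partial \xi} \right| &= \lambda^{-1/2}\, \varphi\!\left(\xi/\sqrt{\lambda}\right) \le \lambda^{-1/2}, \\
\left| \frac{\partial \Psi}{\partial \lambda} \right| &= \tfrac{1}{2}\, |\xi|\, \lambda^{-3/2}\, \varphi\!\left(\xi/\sqrt{\lambda}\right) \le \lambda^{-3/2} |\xi|.
\end{aligned}
$$

When λ > 1 we have $\lambda^{-3/2} \le \lambda^{-1/2}$, so both bounds hold with C = 1 and γ = −1/2; when 0 < λ < 1 we have $\lambda^{-1/2} \le \lambda^{-3/2}$, so both hold with C = 1 and γ = −3/2.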

For brevity of the presentation, the technical lemmas and proofs are deferred to Appendix B. It is noticed that the existence of the minimizer for the criterion (5) is guaranteed in analogy to Theorem 1 of Lin and Zhang (2006), by considering a design conditional on the input {yi, ζi1, … , ζis}, i = 1, … , n, where s is the initial truncation parameter.

Theorem 1

Consider the regression model (4) with ζik = Ψ(ξik, λk), where {ξik} are the FPC scores of xi(t) based on densely observed trajectories, and {λk} are the corresponding eigenvalues. Let $\hat f$ be the minimizer of the target function (6) over $\mathcal{F}^s$, and let τn be the tuning parameter in (6). Assume that the assumptions (a.1)–(a.2) and (b.1)–(b.3) hold. If J(f0) > 0 and

| (7) |

then and . If J(f0) = 0 and

| (8) |

then and .

It is worth mentioning that the technical difficulty arises from the unobserved variables ζi, and major effort has been devoted to tackling the influence of the estimated quantities on the resulting estimator, using analytical tools from the spectral decomposition of the auto-covariance operator of X. Theorem 1 suggests that, if the repeated measures observed for all individuals are sufficiently dense and J(f0) is bounded, the resulting estimator obtained from (6) has the rate of convergence $n^{-2/5}$, which is the same as the rate when {ζi} are directly observed.

3. Simulation studies

To demonstrate the performance of the proposed CSE-FAM approach, we conduct simulation studies under different settings. In Sections 3.1 and 3.2, we study the performance of CSE-FAM for dense and sparse functional data, respectively, assuming that the underlying true model contains both “important” and “unimportant” additive components. We compare the CSE-FAM approach with the FAM-type methods and the multivariate adaptive regression splines (MARS). The FAM-type methods are implemented in three different ways, two of which are “oracle” methods: FAMO1 and FAMO2, both assuming full knowledge of the underlying model structure. In particular, FAMO1 serves as the gold standard, in which both the true values of {ζik} and the true non-vanishing additive components are used. FAMO2 is another type of oracle, in which the values of {ζik} are estimated through FPCA, but the true non-vanishing additive components are used. In Section 3.3, we study the performance of CSE-FAM when the underlying true model is actually non-sparse, and compare the results with the saturated and truncated FAM models. For each setting, we perform 100 Monte Carlo simulations and present the model selection and prediction results for the methods under comparison.

3.1. Dense functional data

We generate 1,000 i.i.d. trajectories using 20 eigenfunctions, among which n = 200 are randomly allocated to the training set and the remaining 800 form the test set. The functional predictors xi(t), t ∈ [0, 10], are measured over a grid of 100 equally spaced points, with independent measurement errors eij ~ N(0, ν²), ν² = 0.2. The eigenvalues of xi(t) are generated by $\lambda_k = ab^{k-1}$ with a = 45.25, b = 0.64. The true FPC scores {ξik} are generated from N(0, λk), and the eigenbasis {ϕk(·)} is taken to be the first twenty Fourier basis functions on [0, 10]. The mean curve is set to be μx(t) = t + sin(t). We use the normal CDF to obtain the transformed variables: ζk = Ψ(ξk; 0, λk), k = 1, …, 20. The values of yi are then generated by yi = f0(ζi) + ∊i, where ∊i ~ N(0, σ²) and σ² = 1. We assume that f0 depends on only three nonzero additive components, the first, the second and the fourth, i.e. f0(ζi) = b0 + f01(ζi1) + f02(ζi2) + f04(ζi4). Here we take b0 = 1.4, f01(ζ1) = 3ζ1 − 3/2, f02(ζ2) = sin(2π(ζ2 − 1/2)), f04(ζ4) = 8(ζ4 − 1/3)² − 8/9 and f0k(ζk) ≡ 0 for k ∉ {1, 2, 4}. This gives a signal-to-noise ratio (SNR) of 2.2, where the SNR is defined as SNR = Var(f0(ζ))/Var(∊), with Var(f0(ζ)) evaluated given that ζk ~ U[0, 1].
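The reported SNR can be reproduced numerically from the three nonzero components. The sketch below assumes that the transformed scores are independent U[0, 1] variables (which holds when the FPC scores are independent normals), so that the variances of the components add; the helper `variance_uniform` is a hypothetical name and uses a simple midpoint rule.

```python
import math

def f01(z):
    return 3 * z - 1.5

def f02(z):
    return math.sin(2 * math.pi * (z - 0.5))

def f04(z):
    return 8 * (z - 1 / 3) ** 2 - 8 / 9

def variance_uniform(f, n=20000):
    """Variance of f(Z) for Z ~ U[0, 1], approximated by the midpoint rule."""
    pts = [(j + 0.5) / n for j in range(n)]
    mean = sum(f(z) for z in pts) / n
    return sum((f(z) - mean) ** 2 for z in pts) / n

sigma2 = 1.0  # error variance used in the simulation
signal_var = sum(variance_uniform(f) for f in (f01, f02, f04))
snr = signal_var / sigma2
print(round(snr, 3))
```

The exact component variances are 3/4, 1/2 and 128/135, which sum to about 2.198 and agree with the value of 2.2 quoted in the text.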

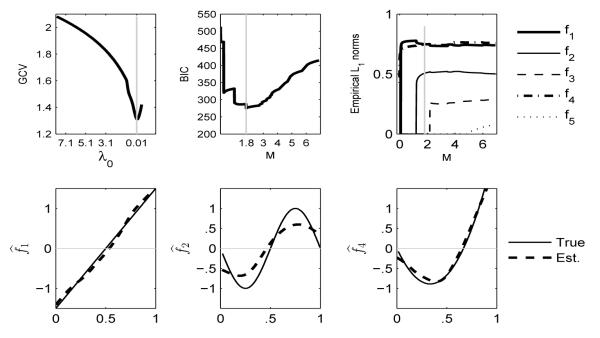

We apply the proposed CSE-FAM algorithm to the training data, following the FPCA and COSSO steps described in Section 2.1 and Appendix A. For illustration, we pick one Monte Carlo simulation and display the component selection and estimation results in Figure 1. In FPCA, the initial truncation is s = 18, accounting for nearly 100% of the total variation, and is passed to the COSSO step. The component selection is then achieved by tuning the regularization parameters λ0 in (9) with generalized cross validation (GCV) and M in (10) with the Bayesian information criterion (BIC), illustrated in the top left and top middle panels of Figure 1, while the empirical L1 norms of the estimated components at different values of M are shown in the top right panel. In the bottom panels of Figure 1, the resulting estimates of fk, k = 1, 2, 4, are displayed, and the remaining components are shrunk to 0 as desired.

Fig. 1.

Component selection and estimation results from one simulation. Top left: GCV vs. λ0. Top middle: BIC vs. M. Top right: empirical L1 norms at different M values. The gray vertical bars in the top panels indicate the tuning parameters chosen. The three bottom panels show the estimated fk (dashed line) vs. the true fk (solid line), for k = 1, 2, 4.

The model selection and prediction results are presented in the top panel of Table 1. We implement the FAM procedure in a different manner from that in Müller and Yao (2008). Instead of using local polynomial smoothing to estimate each fk separately, we perform a more general additive fit, the generalized additive model (GAM), on the transformed FPC scores, which allows back-fitting and also provides a p-value for each additive component. The only reason for doing so is that the GAM algorithm shows more numerical stability, especially when the number of additive components is large. Due to the use of the true model structure, both oracle methods FAMO1 and FAMO2 are expected to outperform the rest. Because of the estimation error induced in the FPCA step, FAMO2 is expected to sacrifice some estimation accuracy and prediction power compared to FAMO1. FAMS is the saturated model based on the estimated FPC scores and the leading s terms used in CSE-FAM. No model selection is performed in FAMS. The s values vary from 17 to 19, which accounts for nearly 100% of the total variation of {xi(t)}. The MARS method is based on Hastie et al. (2001).

Table 1.

Summary of the model selection and prediction in 100 Monte Carlo simulations under the dense and sparse design.

| Data | Model | Model Size | | | | | | | | Selection Frequency | | | | | | | | PE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | f1 | f2 | f3 | f4 | f5 | f6 | f7 | f8 | |
| Dense design | CSE-FAM | 0 | 5 | 61 | 29 | 5 | 0 | 0 | 0 | 100 | 94 | 22 | 100 | 7 | 3 | 0 | 1 | 1.30 (.13) |
| Dense design | FAMS | 0 | 0 | 10 | 32 | 21 | 21 | 8 | 4 | 100 | 98 | 51 | 100 | 32 | 14 | 12 | 8 | 1.50 (.17) |
| Dense design | MARS | - | - | - | - | - | - | - | - | 100 | 99 | 60 | 100 | 41 | 23 | 25 | 18 | 1.46 (.16) |
| Dense design | FAMO2 | 0 | 1 | 99 | - | - | - | - | - | 100 | 99 | - | 100 | - | - | - | - | 1.28 (.12) |
| Dense design | FAMO1 | 0 | 0 | 100 | - | - | - | - | - | 100 | 100 | - | 100 | - | - | - | - | 1.07 (.06) |
| Sparse design | CSE-FAM | 0 | 22 | 61 | 13 | 4 | 0 | 0 | 0 | 100 | 78 | 10 | 82 | 12 | 9 | 7 | 1 | 2.07 (.16) |
| Sparse design | FAMS | 0 | 0 | 14 | 30 | 25 | 20 | 9 | 2 | 100 | 98 | 41 | 96 | 35 | 17 | 9 | 12 | 2.17 (.16) |
| Sparse design | MARS | - | - | - | - | - | - | - | - | 100 | 98 | 58 | 98 | 56 | 30 | 20 | 23 | 2.11 (.14) |
| Sparse design | FAMO2 | 0 | 4 | 96 | - | - | - | - | - | 100 | 98 | - | 98 | - | - | - | - | 2.01 (.14) |
| Sparse design | FAMO1 | 0 | 0 | 100 | - | - | - | - | - | 100 | 100 | - | 100 | - | - | - | - | 1.05 (.05) |

We note that the subjective truncation based on the explained variation in X is suboptimal for regression purposes (results not reported for conciseness). Therefore, in Table 1, we report (under the “model size” column) the counts of the selected number of nonvanishing additive components in CSE-FAM, and the counts of the number of significantly nonzero additive components in FAMS, FAMO1 and FAMO2. For display convenience, only the counts for model sizes up to 8 are reported. The “selection frequency” column of Table 1 records the number of times that each additive component is estimated to be nonzero. For MARS, if the jth covariate is selected in one or more basis functions, we count it as 1, and as 0 otherwise. Regarding the prediction error (PE), we use the population estimates from the training set (e.g. mean, covariance and eigenbasis) to get the FPC scores for both the training and test sets, then apply the $\hat f$ estimated from the training set to get predictions for {yi} in the test set. The prediction errors are calculated by $\mathrm{PE} = n_{\mathrm{test}}^{-1} \sum_{i \in \mathrm{test}} \{y_i - \hat f(\hat\zeta_i)\}^2$. From the top panel of Table 1, we see that under the dense design, CSE-FAM chooses the correct model size (three components) 61% of the time, whereas FAMS tends to overselect (α = 0.05 is used to retain significant additive components). The PE of CSE-FAM is the smallest among the three non-oracle models. Compared with the oracle methods, CSE-FAM has less prediction power than FAMO2 (slightly) and FAMO1, which can be regarded as the price paid for both estimating ζ and selecting the additive components.
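A prediction error of this kind is straightforward to compute once test-set predictions are available. The sketch below assumes that PE is the mean squared prediction error over the test set, which is consistent with the oracle values in Table 1 being close to the error variance of 1; `prediction_error` is a hypothetical helper name.

```python
def prediction_error(y_true, y_pred):
    """Mean squared prediction error over a test set."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true)

# Toy example: only the last prediction is off, by 2 units.
print(prediction_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```

In the simulations the predictions would come from the fitted additive function evaluated at the test-set scores, which are themselves computed with the training-set mean, covariance and eigenbasis.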

To assess the estimation accuracy, the averaged integrated squared errors (AISE) for the first eight additive components are presented in the top panel of Table 2, where the ISE is defined by $\mathrm{ISE}(\hat f_k) = \int_0^1 \{\hat f_k(t) - f_{0k}(t)\}^2\,dt$. From Table 2, we see that CSE-FAM provides smaller ISE for the truly zero components (fj, j = 3, 5, 6, 7, 8) than FAMS. For the nonzero components, CSE-FAM, FAMS and FAMO2 have similar AISE values.
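The integrated squared error of a single component can be approximated on a fine grid. The sketch below assumes the ISE is the integral over [0, 1] of the squared difference between the estimated and true component functions and uses a midpoint rule; `integrated_squared_error` is a hypothetical helper name.

```python
def integrated_squared_error(f_hat, f_true, n=10000):
    """Midpoint-rule approximation of the integral over [0, 1]
    of (f_hat(t) - f_true(t))^2."""
    h = 1.0 / n
    return h * sum(
        (f_hat((j + 0.5) * h) - f_true((j + 0.5) * h)) ** 2 for j in range(n)
    )

# If a component f(t) = t were wrongly shrunk to zero, the ISE would be 1/3.
print(integrated_squared_error(lambda t: 0.0, lambda t: t))
```

Averaging this quantity over the Monte Carlo replicates gives an AISE of the kind reported in Table 2.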

Table 2.

Averaged ISE for 100 Monte Carlo simulations under the dense and sparse design.

| Data | Model | f1 | f2 | f3 | f4 | f5 | f6 | f7 | f8 | f |
|---|---|---|---|---|---|---|---|---|---|---|
| Dense design | CSE-FAM | .038 | .117 | .022 | .038 | .005 | .001 | .000 | .001 | .226 |
| Dense design | FAMS | .030 | .095 | .050 | .047 | .031 | .018 | .016 | .015 | .476 |
| Dense design | FAMO2 | .027 | .090 | - | .042 | - | - | - | - | .158 |
| Dense design | FAMO1 | .007 | .028 | - | .019 | - | - | - | - | .054 |
| Sparse design | CSE-FAM | .033 | .22 | .036 | .298 | .055 | .040 | .045 | .001 | .720 |
| Sparse design | FAMS | .016 | .118 | .032 | .159 | .102 | .121 | .399 | 2.64 | > 10³ |
| Sparse design | FAMO2 | .026 | .129 | - | .220 | - | - | - | - | .376 |
| Sparse design | FAMO1 | .007 | .016 | - | .013 | - | - | - | - | .036 |

3.2. Sparse functional data

To compare with the dense case, we also conduct a simulation to examine the performance of CSE-FAM for sparse functional data. We generate 1,200 i.i.d. trajectories, with 300 in the training set and 900 in the test set. Each trajectory has between 5 and 10 repeated observations located uniformly on [0, 10], with the number of points chosen from 5 to 10 with equal probabilities. The other settings are the same as in the dense design. The model selection, prediction and estimation results are summarized in the bottom panels of Table 1 and Table 2. We observe a similar pattern to the dense design case. Moreover, Table 2 suggests that, for the sparse design, the FAMS estimate of fk becomes quite unstable for higher-order components (e.g. k ≥ 7). The AISE increases rapidly due to the influence of outlying estimates. This is not a surprise, because under the sparse design the high-order eigenfunctions and FPC scores are difficult to estimate accurately due to the sparseness of the data and the moderate sample size, which leads to inaccurate fk estimates when the saturated model FAMS is used. In this situation, the proposed CSE-FAM remains quite stable, since the COSSO penalty has the effect of automatically down-weighting the “unimportant” components. This provides further support for the proposed CSE-FAM approach.
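The sparse sampling scheme described above can be sketched as follows. The code only generates the observation times (between 5 and 10 per trajectory, uniform on [0, 10]) and leaves curve generation aside; the function name `sparse_design` and the seed argument are illustrative assumptions.

```python
import random

def sparse_design(n_curves, low=5, high=10, t_min=0.0, t_max=10.0, seed=0):
    """For each curve, draw the number of observations uniformly from
    {low, ..., high} and place the time points uniformly on [t_min, t_max]."""
    rng = random.Random(seed)
    designs = []
    for _ in range(n_curves):
        n_obs = rng.randint(low, high)  # both end points are included
        designs.append(sorted(rng.uniform(t_min, t_max) for _ in range(n_obs)))
    return designs

designs = sparse_design(1200)
print(len(designs), min(len(d) for d in designs), max(len(d) for d in designs))
```

Noisy observations would then be obtained by evaluating each latent curve at its own time points and adding independent measurement error.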

3.3. Non-sparse underlying additive components

To show the model performance when the true additive components are actually non-sparse, we conduct an additional simulation with two settings (Study I and Study II) for the dense design, and compare CSE-FAM with two versions of the FAM model: the saturated model FAMS as defined in Section 3.1, and the truncated model FAMT with a truncation chosen to retain 99% of the total variation. In Study I, the true model contains three “larger” additive components {f01, f02, f04}, taking the same form as in Section 3.1 except being rescaled by a constant 1/2. The rest are “smaller” additive components, each randomly selected from {f01, f02, f04} with equal probability and rescaled by a smaller constant uniformly chosen from [1/17, 1/14]. The data generated have a lower (more challenging) signal-to-noise ratio (SNR) of around 0.60, of which 8.7% is from the “smaller” components. The results are listed in the top panel of Table 3, which shows that CSE-FAM tends to favor a smaller model size than FAMS. We also observe that the model size of FAMT tends to be smaller than that of CSE-FAM, since it truncates more aggressively with the 99% threshold. It is important to note that CSE-FAM in fact yields PE and AISE substantially smaller than FAMS, and the results of CSE-FAM are comparable to those of FAMT. In Study II, we replace the three “larger” components by “smaller” ones, so that all additive components have roughly equal, small contributions. We select the scaling constant uniformly from [1/8, 1/6] so that the total SNR is 0.30 on average. The results listed in the bottom panel of Table 3 suggest that CSE-FAM now tends to select more components (i.e. produce non-sparse fits) and again yields smaller PE and AISE than both FAMS and FAMT. Overall, this simulation suggests that the proposed CSE-FAM is still a reasonable option even if the underlying true model is non-sparse. It is also worth mentioning that the gain of CSE-FAM is more apparent in the challenging settings with low SNR.

Table 3.

An additional simulation for cases with non-sparse additive components. I: the true model contains both “larger” and “smaller” additive components; II: the true model only contains “small” additive components.

| Study | Model | Model Size | | | | | | | | Selection Frequency | | | | | | | | PE | AISE of f |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | f1 | f2 | f3 | f4 | f5 | f6 | f7 | f8 | | |
| I | CSE-FAM | 0 | 3 | 20 | 34 | 26 | 9 | 6 | 2 | 100 | 88 | 12 | 100 | 17 | 12 | 10 | 10 | 1.19 (.08) | .17 |
| I | FAMS | 0 | 4 | 16 | 18 | 29 | 17 | 7 | 8 | 100 | 92 | 16 | 99 | 20 | 17 | 19 | 16 | 1.33 (.12) | .33 |
| I | FAMT | 0 | 4 | 39 | 35 | 15 | 6 | 0 | 1 | 100 | 91 | 15 | 100 | 19 | 15 | 9 | 9 | 1.22 (.08) | .18 |
| II | CSE-FAM | 1 | 2 | 4 | 12 | 13 | 20 | 26 | 13 | 46 | 42 | 33 | 42 | 36 | 42 | 38 | 44 | 1.25 (.07) | .12 |
| II | FAMS | 1 | 6 | 8 | 25 | 14 | 13 | 13 | 10 | 42 | 45 | 29 | 37 | 29 | 38 | 36 | 36 | 1.38 (.11) | .42 |
| II | FAMT | 13 | 30 | 22 | 20 | 6 | 6 | 2 | 0 | 34 | 35 | 20 | 31 | 25 | 38 | 34 | 30 | 1.32 (.08) | .20 |

4. Real data application

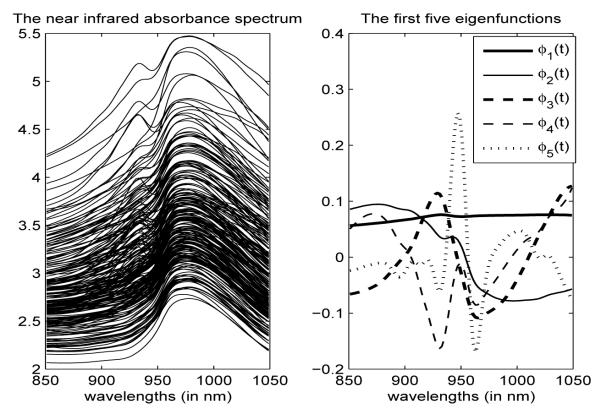

We demonstrate the performance of the proposed method through the regression of protein content on the near infrared absorbance spectra measured on 240 meat samples. The dataset was collected by the Tecator company and is publicly available on the StatLib website (http://lib.stat.cmu.edu). The measurements were made with a spectrometer named Tecator Infratec Food and Feed Analyzer. The spectral curves were recorded at wavelengths ranging from 850 nm to 1050 nm. For each meat sample the data consist of a 100-channel spectrum of absorbances (100 grid points) as well as the contents of moisture (water), fat and protein. The absorbance is −log10 of the transmittance measured by the spectrometer. The three contents, measured in percent, are determined by analytic chemistry. Of primary interest is predicting the protein content using the spectral trajectories. The 240 meat samples were randomly split into a training set (with 185 samples) and a test set (with 55 samples). We aim to predict the protein content in the test set based on the training data. Figure 2 illustrates the spectral curves and the first five eigenfunctions estimated using FPCA.

Fig. 2.

The near-infrared absorbance spectral curves (left) and the first five estimated eigenfunctions (right).

We initially retain the first 20 FPCs, which account for nearly 100% of the total variation. The proposed CSE-FAM is then applied for component selection and estimation. The tuning parameters in the COSSO step are chosen by the GCV criterion for λ0, which gives λ0 = 0.0013, and by 10-fold cross-validation for M, which gives M = 10.0. The estimated additive components are plotted in Figure 3, from which we see that the CSE-FAM selects 12 of the 20 components, and the other components are estimated to be zero. To assess the performance of the proposed method, we report the prediction error (PE) on the test set in Table 4, where the PE is calculated in the same way as in Section 3. We also report the quasi-R² for the test set, which is defined as $1 - \sum_i (y_i - \hat{y}_i)^2 / \sum_i (y_i - \bar{y})^2$. To show the influence of the initial truncation, we also run CSE-FAM with a smaller value, s = 10, which gives suboptimal results. This suggests that one should start with a sufficiently large s. The FAM is fitted with the leading 5, 10 and 20 FPCs, respectively. Interestingly, although the high-order FPCs (beyond the 10th) explain very little of the variation of the functional predictor (less than 1%), their contribution to the prediction is surprisingly substantial. Such phenomena are also observed for MARS and partial least squares (PLS is a popular approach in chemometrics; see Xu et al. (2007) and the references therein). One more comparison is with the classical functional linear model (FLM) with the estimated leading FPCs serving as predictors, where a heuristic AIC is used to choose the first 7 components. From Table 4, we see that, when the initial truncation is set at 10, the proposed CSE-FAM is not obviously advantageous compared with FAM. As the number of FPCs increases to 20, the proposed method attains the smallest PE and the highest quasi-R² among all methods.
A sensible explanation is that, for these data, most of the first 10 FPCs (except the 9th) have nonzero contributions to the response (shown in Figure 3), so penalizing these components does not help to improve the prediction. However, as the number of FPC scores increases, more redundant terms come into play, and the penalized method (CSE-FAM) gains more prediction power. We have repeated this analysis for different random splits of the training and test sets, and the conclusions stay virtually the same.
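The two summary measures reported in Table 4 can be computed along the lines of the following minimal Python sketch. It assumes that PE is the mean squared prediction error over the test set and that the quasi-R² is one minus the ratio of the residual to the total sum of squares on the test set; the function names `prediction_error` and `quasi_r2` are illustrative only.

```python
def prediction_error(y, y_hat):
    """Mean squared prediction error (assumed form of PE)."""
    return sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y)


def quasi_r2(y, y_hat):
    """1 - SSE / SST, with the mean taken over the same responses."""
    y_bar = sum(y) / len(y)
    sse = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    sst = sum((a - y_bar) ** 2 for a in y)
    return 1.0 - sse / sst


# Toy illustration with made-up numbers (not the Tecator data).
y_test = [1.0, 2.0, 3.0]
y_pred = [1.0, 2.0, 4.0]
pe = prediction_error(y_test, y_pred)
r2 = quasi_r2(y_test, y_pred)
```

Under these assumptions the two measures are linked by quasi-R² = 1 − PE / (test-set variance of y), so on a fixed test set they rank the competing methods identically.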

Fig. 3.

Plots of the estimated additive components {fk(·), k = 1, … , 20}.

Table 4.

Prediction results on the test set as compared with several other methods. Note: PC10 indicates that 10 FPC scores are used. PLD20 indicates the number of PLS directions used is 20. AIC7: 7 FPC scores are used based on the regression AIC criterion.

| | CSE-FAM (s = 10) | CSE-FAM (s = 20) | FAM (PC5) | FAM (PC10) | FAM (PC20) | MARS (PC20) | PLS (PLD20) | FLM (AIC7) |
|---|---|---|---|---|---|---|---|---|
| PE | 2.22 | 0.72 | 3.98 | 2.13 | 0.84 | 0.77 | 1.02 | 1.50 |
| Quasi-R² | 0.82 | 0.94 | 0.68 | 0.83 | 0.93 | 0.93 | 0.92 | 0.88 |

5. Discussion

We proposed a structure estimation method for functional data regression in which a scalar response is regressed on a functional predictor. The model is constructed in the framework of FAM, where the additive components are functions of the scaled FPC scores. The selection and estimation of the additive components are performed through penalized least squares using the COSSO penalty in the context of RKHS. The proposed method allows for more general nonparametric relationships between the response and the predictors, and therefore serves as an important extension of functional linear regression. Through the adoption of the additive structure, it avoids the curse of dimensionality caused by the infinite-dimensional predictor process. The proposed method provides a way to select the important features of the predictor process and simultaneously shrink the unimportant ones to zero. This selection takes into account not only the explained variation of the predictor process, but also its contribution to the response. The theoretical result shows that, under the dense design, the nonparametric rate from component selection and estimation dominates the discrepancy due to the unobservable FPC scores.

One concern is whether sparsity is necessary in the FAM framework. In general, the sparseness assumption helps balance the trade-off between variance and bias, which may lead to improved model performance. This can be particularly useful when part of the predictor contributes negligibly to the regression. Even if the underlying model is in fact non-sparse and one only cares about estimation and prediction, the proposed CSE-FAM is still a reasonable option, as illustrated by the simulation in Section 3.3. We also point out that, when all non-zero additive components are linear, the COSSO penalty reduces to the adaptive Lasso penalty. An additional simulation (not reported for conciseness) has shown that the proposed method produces estimation and prediction results comparable with those of the adaptive Lasso. Moreover, the COSSO penalty requires that s < n, which does not conflict with the requirement that the initial truncation s be chosen sufficiently large to include all important features. In practice the number of FPCs accounting for nearly 100% of the predictor variation is often far smaller than the sample size n, owing to the fast decay of the eigenvalues. Finally, both simulated and real examples indicate that the model performance is not sensitive to s as long as it is chosen large enough.

On the computational side, our algorithm takes advantage of both FPCA and COSSO. On a desktop with an Intel(R) Core(TM) i5-2400 CPU (3.10 GHz) and 8 GB of RAM, each Monte Carlo sample in Section 3.1 takes 30 seconds, and the real data analysis takes about 10 seconds. As far as dimensionality is concerned, the capacity and speed depend on the particular FPCA algorithm used. We have used the PACE algorithm, which can deal with fairly large data (http://anson.ucdavis.edu/~ntyang/PACE/). For dense functional data with 5000 or more dimensions, pre-binning is suggested to accelerate the computation. FPCA algorithms geared towards extremely large dimensions (with an identical time grid for all subjects) are also available; for instance, Zipunnikov et al. (2011) considered fMRI data with dimension of the order $O(10^7)$ by partitioning the original data matrix into blocks and performing the SVD through blockwise operations.
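Pre-binning can be carried out in several ways; the following Python sketch shows one simple variant, in which neighbouring grid points are averaged into contiguous bins of nearly equal size. The function name `prebin` and the equal-count binning rule are assumptions made for illustration, not a description of the PACE implementation.

```python
import numpy as np


def prebin(X, n_bins):
    """Average neighbouring grid points of X (n x p) into n_bins contiguous bins."""
    groups = np.array_split(np.arange(X.shape[1]), n_bins)
    return np.column_stack([X[:, g].mean(axis=1) for g in groups])


# Two toy curves on a 6-point grid, reduced to 3 bins.
X = np.arange(12, dtype=float).reshape(2, 6)
X_binned = prebin(X, n_bins=3)
```

The time grid would be binned in the same way (for example by averaging the grid points within each bin) so that the reduced curves remain aligned with their arguments.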

Although we have focused on the FPC-based analysis in this work, the CSE-FAM framework is generally applicable to other basis structures, e.g. splines and wavelets, where the additive components are functions of the corresponding basis coefficients of the predictor process. It may also work with nonparametric penalties other than COSSO, such as the sparsity-smoothness penalty proposed in Meier et al. (2009). The proposed method may be further extended to accommodate categorical responses, where an appropriate link function can be chosen to associate the mean response with the additive structure. Another possible extension is regression with multiple functional predictors, where component selection can be used to select significant functional predictors. In this case the additive components associated with each functional predictor need to be selected in a group manner.

Acknowledgements

This work was conducted through the Analysis of Object Data program at Statistical and Applied Mathematical Sciences Institute, U.S.A. Fang Yao’s research was partially supported by an individual discovery grant and DAS from NSERC, Canada. Hao Helen Zhang was supported by US National Institutes of Health grant R01 CA-085848 and National Science Foundation DMS-0645293.

Appendix A. The estimation procedure

To estimate ζi, we assume that the functional predictors are observed with measurement error on a grid of . We adopt two different procedures for functional data that are either densely or sparsely observed.

Obtain the estimate of ζi in the dense design. If {xi(t)} are observed on a sufficiently dense grid for each subject, we apply local linear smoothing to the data {(tij, xij) : j = 1, … , Ni} individually, which gives the smooth approximation . The mean and covariance functions are obtained by , and , respectively. The eigenvalues and eigenfunctions are estimated by solving the equation for λk and ϕk(·), subject to and ∫τ ϕm(t)ϕk(t)dt = 0 for m ≠ k, k, m = 1, … , s. The FPC scores are obtained by . Finally, the CDF transformation yields .
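The dense-design steps can be sketched in Python as follows. This is a minimal illustration under several assumptions that are not taken from the text: the curves are already smoothed and stored on a common, equally spaced grid; integrals are replaced by Riemann sums; the scores are scaled by the square root of the corresponding eigenvalue; and the CDF is the standard normal one. The names `fpca_dense` and `cdf_transform` are hypothetical.

```python
import math
import numpy as np


def fpca_dense(X, t, s):
    """FPCA for curves X (n x p) on a common, equally spaced grid t."""
    dt = t[1] - t[0]
    mu = X.mean(axis=0)                     # pointwise mean function
    Xc = X - mu
    G = Xc.T @ Xc / X.shape[0]              # sample covariance surface on the grid
    evals, evecs = np.linalg.eigh(G * dt)   # discretized covariance operator
    top = np.argsort(evals)[::-1][:s]       # indices of the s largest eigenvalues
    lam = evals[top]
    phi = evecs[:, top] / np.sqrt(dt)       # rescale so that sum(phi_k^2) * dt = 1
    xi = Xc @ phi * dt                      # Riemann sum for the score integral
    return mu, lam, phi, xi


def cdf_transform(xi, lam):
    """Map scaled scores xi_k / sqrt(lam_k) into (0, 1) with the standard
    normal CDF (the choice of CDF is an assumption of this sketch)."""
    normal_cdf = np.vectorize(lambda u: 0.5 * (1.0 + math.erf(u / math.sqrt(2.0))))
    return normal_cdf(xi / np.sqrt(lam))


# Synthetic curves built from three basis functions (illustrative only).
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 101)
basis = np.sqrt(2.0) * np.vstack(
    [np.sin(2 * np.pi * t), np.cos(2 * np.pi * t), np.sin(4 * np.pi * t)]
)
X = (rng.normal(size=(200, 3)) * np.array([2.0, 1.0, 0.5])) @ basis
mu, lam, phi, xi = fpca_dense(X, t, s=3)
zeta = cdf_transform(xi, lam)
```

With this discretization the empirical second moment of each score column equals the corresponding estimated eigenvalue, which gives a convenient sanity check on the output.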

Obtain the estimate of ζi in the sparse design. We adopt principal component analysis through conditional expectation (PACE), proposed by Yao et al. (2005), where the mean estimate is obtained using local linear smoothers based on the pooled data of all individuals. In particular, with K(·) a kernel function and b a bandwidth. For the covariance estimation, denote and let be a bivariate kernel function with a bandwidth h; one minimizes . One may estimate the noise variance ν² by taking the difference between the diagonal of the surface estimate and the local polynomial estimate obtained from the raw variances {(tij, Gijj) : j = 1, … , Ni; i = 1, … , n}. The eigenvalues and eigenfunctions are obtained as in the dense case. To estimate the FPC scores, denote xi = (xi1, … , xiNi)T; the PACE estimate is given by , which leads to . Here ϕik = (ϕk(ti1), … , ϕk(tiNi))T, μi = (μ(ti1), … , μ(tiNi))T, and the (j, l)th element of Σxi is (Σxi)j,l = G(tij, til) + ν²δjl, with δjl = 1 if j = l and 0 otherwise, and “^” is the generic notation for their estimates.
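For a single subject, the conditional-expectation step of Yao et al. (2005) amounts to computing λk ϕikT Σxi⁻¹ (xi − μi) with all quantities replaced by their estimates. The Python sketch below illustrates this computation; the function name `pace_scores` and its argument layout are assumptions for illustration, and the inputs are taken as already estimated.

```python
import numpy as np


def pace_scores(x_i, mu_i, Phi_i, lam, G_i, nu2):
    """Conditional-expectation estimate of the FPC scores of one subject.

    x_i   : (N_i,)      observations at the subject's time points
    mu_i  : (N_i,)      estimated mean function at those points
    Phi_i : (N_i, s)    estimated eigenfunctions at those points
    lam   : (s,)        estimated eigenvalues
    G_i   : (N_i, N_i)  estimated covariance G(t_ij, t_il)
    nu2   : float       estimated noise variance
    """
    Sigma_i = G_i + nu2 * np.eye(len(x_i))          # covariance of the noisy observations
    weights = np.linalg.solve(Sigma_i, x_i - mu_i)  # Sigma_i^{-1} (x_i - mu_i)
    return lam * (Phi_i.T @ weights)                # lam_k * phi_ik^T Sigma_i^{-1} (x_i - mu_i)


# One observation and one component: 2 * 1 * (3 - 0) / (2 + 1) = 2.
score = pace_scores(
    np.array([3.0]), np.array([0.0]), np.array([[1.0]]),
    np.array([2.0]), np.array([[2.0]]), 1.0,
)
```

Solving the linear system rather than inverting the matrix explicitly is a numerical convenience of this sketch; the transformed variables would then be obtained from the scores as in the dense case.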

We next estimate f0 by minimizing (6), following the COSSO procedure conditional on the estimated values of ζi. It is important to note that the target function (6) is equivalent to , subject to θk ≥ 0 (Lin and Zhang, 2006), which enables a two-step iterative algorithm. Specifically, one first finds c and b by minimizing

| (9) |

with fixed θ = (θ1, … , θs)T, where y = (y1, … , yn)T, λ0 is the smoothing parameter, 1n is the n × 1 vector of 1’s, , and Rk is the reproducing kernel of , i.e. . This optimization is exactly a smoothing spline problem. We then fix c and b, and find θ by minimizing

| (10) |

where z = y – (1/2)nλ0c – b1n and Q is an n × s matrix with the kth column being Rkc. This step is the same as calculating the non-negative garrote estimate using M as the tuning parameter. Upon convergence, the final estimation of f is then given by .
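The alternation between the two steps can be sketched in Python as follows. Several ingredients are assumptions rather than the authors' implementation: the smoothing spline step is taken to minimize ||y − Rθc − b1n||² + nλ0 cT Rθ c with Rθ = Σk θk Rk; the θ-step is taken to minimize ||z − Qθ||² subject to θk ≥ 0 and Σk θk ≤ M, as in Lin and Zhang (2006); the kernel is the standard second-order Sobolev kernel of Wahba (1990); the constrained problem is solved by projected gradient instead of a quadratic programming routine; and a fixed number of outer iterations replaces a convergence check. All function names are hypothetical.

```python
import numpy as np


def sobolev_kernel(u, v):
    """Second-order Sobolev reproducing kernel on [0, 1] (constants removed),
    in the standard form given by Wahba (1990)."""
    h1 = lambda x: x - 0.5
    h2 = lambda x: (h1(x) ** 2 - 1.0 / 12.0) / 2.0
    h4 = lambda x: (h1(x) ** 4 - h1(x) ** 2 / 2.0 + 7.0 / 240.0) / 24.0
    return h1(u) * h1(v) + h2(u) * h2(v) - h4(np.abs(u - v))


def spline_step(y, R_theta, lam0):
    """Fixed theta: solve (R + n*lam0*I) c + b*1 = y together with 1'c = 0."""
    n = len(y)
    A = np.zeros((n + 1, n + 1))
    A[:n, :n] = R_theta + n * lam0 * np.eye(n)
    A[:n, n] = 1.0
    A[n, :n] = 1.0
    sol = np.linalg.solve(A, np.append(y, 0.0))
    return sol[:n], sol[n]


def project(v, M):
    """Euclidean projection onto {theta >= 0, sum(theta) <= M}."""
    w = np.maximum(v, 0.0)
    if w.sum() <= M:
        return w
    u = np.sort(v)[::-1]
    shifted = np.cumsum(u) - M
    j = np.arange(1, len(v) + 1)
    rho = j[u - shifted / j > 0][-1]
    return np.maximum(v - shifted[rho - 1] / rho, 0.0)


def garrote_step(z, Q, M, n_iter=500):
    """Fixed (c, b): approximately minimize ||z - Q theta||^2 over the
    constraint set by projected gradient descent."""
    step = 1.0 / (2.0 * np.linalg.norm(Q, 2) ** 2 + 1e-12)
    theta = project(np.ones(Q.shape[1]), M)
    for _ in range(n_iter):
        theta = project(theta - step * 2.0 * Q.T @ (Q @ theta - z), M)
    return theta


def cosso_two_step(y, zeta, lam0, M, n_outer=5):
    n, s = zeta.shape
    grams = [sobolev_kernel(zeta[:, [k]], zeta[:, [k]].T) for k in range(s)]
    theta = np.ones(s)
    for _ in range(n_outer):
        c, b = spline_step(y, sum(th * Rk for th, Rk in zip(theta, grams)), lam0)
        z = y - 0.5 * n * lam0 * c - b                  # working response for the theta-step
        Q = np.column_stack([Rk @ c for Rk in grams])   # k-th column is R_k c
        theta = garrote_step(z, Q, M)
    R_theta = sum(th * Rk for th, Rk in zip(theta, grams))
    c, b = spline_step(y, R_theta, lam0)                # refit with the final theta
    return theta, c, b, R_theta @ c + b


# Synthetic example: only the first of four components carries signal.
rng = np.random.default_rng(1)
zeta = rng.uniform(size=(60, 4))
y = 2.0 * np.sin(2 * np.pi * zeta[:, 0]) + 0.1 * rng.normal(size=60)
theta, c, b, fitted = cosso_two_step(y, zeta, lam0=1e-3, M=2.0)
```

Components with θk shrunk to zero drop out of the fitted function, which is how the selection of additive components arises in this formulation.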

Regarding the choice of tuning parameters, besides the sufficiently large initial truncation s, the most relevant are λ0 and M in the COSSO step, while the bandwidths in the smoothing step of FPCA are chosen by traditional cross-validation or its generalized approximation. For more details, see Fan and Gijbels (1996) for the dense case and Yao et al. (2005) for the sparse case. We suggest selecting λ0 by generalized cross-validation (GCV), i.e. with . For choosing M, we adopt the BIC criterion, i.e. where df is the degrees of freedom in (10); an alternative is cross-validation, which requires more computation.
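For a linear smoother with fitted values A(λ0)y, the usual GCV score is n⁻¹||y − A(λ0)y||² / {1 − n⁻¹ tr A(λ0)}². The Python sketch below evaluates this score over a small grid; it assumes this standard form, and it uses a simple kernel smoother A = K(K + nλI)⁻¹ with a Gaussian kernel purely as a stand-in for the smoothing spline step. The function name `gcv_score`, the kernel and the grid values are illustrative.

```python
import numpy as np


def gcv_score(y, A):
    """GCV score of a linear smoother with hat matrix A (fitted values A @ y)."""
    n = len(y)
    resid = y - A @ y
    return (resid @ resid / n) / (1.0 - np.trace(A) / n) ** 2


# Stand-in smoother: A(lam) = K (K + n*lam*I)^{-1} with a Gaussian kernel.
rng = np.random.default_rng(0)
x = np.sort(rng.uniform(size=40))
y = np.sin(2 * np.pi * x) + 0.2 * rng.normal(size=40)
K = np.exp(-((x[:, None] - x[None, :]) ** 2) / 0.05)
n = len(y)
grid = [1e-4, 1e-3, 1e-2, 1e-1]
scores = [gcv_score(y, K @ np.linalg.inv(K + n * lam * np.eye(n))) for lam in grid]
lam_gcv = grid[int(np.argmin(scores))]  # grid value with the smallest GCV score
```

In practice the grid would be finer and the hat matrix would come from the smoothing spline step itself.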

Appendix B. Technical assumptions and proofs

We first lay out the commonly adopted regularity conditions on the functional predictor process X for the dense design. Recall that {tij, j = 1, … , Ni; i = 1, … , n} is the grid on the support over which the functional predictor xi(t) is observed. Without loss of generality, let . Denote ti0 = 0, tiNi = a and for some d > 0. Denote the bandwidth used for individually smoothing the ith trajectory as bi.

(b.1) Assume that the second derivative $X^{(2)}(t)$ is continuous on with probability 1 (w.p.1.), and $\int E[\{X^{(k)}(t)\}^4]\,dt < \infty$ w.p.1. for k = 0, 2. Also assume , where eij is the i.i.d. measurement error of the observed trajectory xi.

(b.2) Assume that there exists m ≡ m(n) → ∞, such that $\min_i N_i \ge m$ as n → ∞. Denoting $\Delta_i = \max\{t_{ij} - t_{i,j-1} : j = 1, \ldots, N_i + 1\}$, assume that $\max_i \Delta_i = O(m^{-1})$.

(b.3) Assume that there exists a sequence b = b(n), such that $cb \le \min_i b_i \le \max_i b_i \le Cb$ for some C ≥ c > 0. Furthermore, b → 0 and m → ∞ as n → ∞ at rates such that $(mb)^{-1} + b^4 + m^{-2} = O(n^{-1})$, e.g. $b = O(n^{-1/2})$, $m = O(n^{3/2})$. Also assume that the kernel function K(·) is compactly supported and Lipschitz continuous.
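As a quick check of the example rates in (b.3), taking b and m to be exactly of the stated orders and substituting them into the three terms gives

$$
(mb)^{-1} = \left(n^{3/2} \cdot n^{-1/2}\right)^{-1} = n^{-1}, \qquad b^{4} = n^{-2}, \qquad m^{-2} = n^{-3},
$$

so the sum is $O(n^{-1})$, with the first term determining the order.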

Denote the operator associated with the covariance function G(s, t) by G, and define . Denote the smoothed trajectory of Xi(t) using local linear smoothing with bandwidth bi by and the estimated eigenvalue/function and FPC score in the dense design by , respectively. Since the decay of eigenvalues plays an important role, define $\delta_1 = \lambda_1 - \lambda_2$ and $\delta_k = \min_{j \le k}(\lambda_{j-1} - \lambda_j, \lambda_j - \lambda_{j+1})$ for k ≥ 2.

Lemma 1

Under the assumptions (b.1)–(b.3), we have

| (11) |

| (12) |

| (13) |

where O(·) and Op(·) are uniform over 1 ≤ i ≤ n. Note that the measurement error eij is independent of the process Xi, which makes it possible to factor the probability space as Ω = ΩX × Ωe and to characterize the individual smoothing and the cross-sectional averaging separately. Then (11) can be shown using standard techniques for local polynomial smoothing (not elaborated for conciseness); see Hall et al. (2006) for more details on this type of argument. Consequently (12) and (13) follow immediately from the classical perturbation result provided in Lemma 4.3 of Bosq (2000). We see from Lemma 1 that, when the measurements are sufficiently dense for each subject so that (b.3) is satisfied, the impact of individual smoothing on the estimated population quantities (e.g. mean, covariance, eigenvalues/functions) is negligible.

The following lemma characterizes the discrepancy between the underlying and estimated transformed variables ζik, as well as the boundedness of the derivative of the resulting estimate .

Lemma 2

Under the assumptions (a.2) and (b.1)–(b.3), we have

| (14) |

| (15) |

Additionally, if the assumption (a.1) holds, let be the estimate of f0 obtained by minimizing (6). Then there exists a constant ρ > 0, such that

| (16) |

uniformly over 1 ≤ k ≤ s and 1 ≤ i ≤ n.

Proof of Lemma 2

From Lemma 1 and (a.2), one has in probability,

Abbreviate to ∑k and Op(·) to ~. Since , it is easy to see that To show (15) for any fixed s, note . Then

Denoting the additive terms in the above formula by E1 through E6, we have O(1). For E4, applying the Cauchy-Schwarz inequality,

Similarly, one has E5 = Op(n−1), using the facts that and . This proves (15).

We now turn to (16). For any , one has

where R(·, ·) is the reproducing kernel of space and is the corresponding inner product. Therefore,

Since J(f) is a convex functional and a pseudonorm, we have

| (17) |

We first claim that ∥f∥ ≤ J(f), due to . If b = 0, the inequality in (17) implies that ∥f∥ ≤ J(f). If b ≠ 0, one can write . For minimizing (5), it is equivalent to substitute J(f) with , and (17) implies . Therefore we have ∥f∥ ≤ J(f) in general. Secondly, due to the orthogonality of , we can write R(u, v) = R1(u1, v1) + R2(u2, v2) + … + Rs(us, vs) by Theorem 5 in Berlinet and Thomas-Agnan (2004), where Rk(·, ·) is the reproducing kernel of the subspace . For being a second-order Sobolev Hilbert space, we have Rk(s, t) = h1(s)h1(t) + h2(s)h2(t) – h4(∣s – t∣), with h1(t) = t – 1/2, h2(t) = {h1(t)² – 1/12}/2 and h4(t) = {h1(t)⁴ – h1(t)²/2 + 7/240}/24. Therefore Rk(s, t) is continuous and differentiable over [0, 1]² and we can find constants ak and bk such that

for k = 1, …, s. One can find a uniform bound c with . On the other hand, minimizing (6) is equivalent to minimizing under the constraint that for some . Therefore, letting $\rho = c^{1/2}$ · , we have

Before stating Lemma 3, we define the entropy of with respect to the ∥·∥n metric. For each ω > 0, one can find a collection of functions {g1, g2,…gN} in such that for each , there is a j = j(g) ∈ {1, 2, … N} satisfying ∥g – gj∥n ≤ ω. Let be the smallest value of N for which such a cover of balls with radius ω and centers g1, g2,…, gN exists. Then is called the ω-entropy of .

Lemma 3

Assume that , where is second order Sobolev space. Denote the ω-entropy of Then

| (18) |

for all ω > 0, n ≥ 1, and for some constants A > 0. Furthermore, for independent with finite variance and J(f0) > 0,

| (19) |

The inequality (18) is implied by Lemma A.1. of Lin and Zhang (2006). As the {∊i} satisfy the sub-Gaussian error assumption, the same argument as in Van de Geer (2000) (pg. 168) leads to (19). We are now ready to present the proof of the main theorem.

Proof of Theorem 1

We first center the functions as in the proof of Theorem 2 in Lin and Zhang (2006) so that (18) and (19) hold. Write , such that , and write such that and . Since the target function can be written as

one must have that minimizes {(c0 – c)2 + 2n−1 (c0 – c) Σi∊i} and the additive parts of minimizes the rest. Therefore we have , implying . Denote

| (20) |

One can substitute with in (20). In the rest of the proof, we suppress the tilde notation of and for convenience. Since , one has , which implies

Simplification of the above inequality gives

| (21) |

Let . Since both and are in , . Taylor expansion of g(·) gives , for all ζ ∈ (0, 1)s, where

and plug it into the right hand side (r.h.s.) of (21), leading to the following upper bound,

| (22) |

Applying Lemma 3, one can bound the first term in (22) as follows,

For the left hand side (l.h.s.) of (21), applying the Taylor expansion, , to the first term

where . Substituting the terms on both sides of (21), we obtain

Dropping the positive term on the l.h.s. and rearranging the terms,

| (23) |

where and

For T1, by the Cauchy-Schwarz inequality and Lemma 2, we have , where

i.e. . From (a.1) and (16) of Lemma 2, there exist independent r.v.'s {Bi} with such that maxk. Also note that ∥g∥n→∥g∥L2 a.s. by the strong law of large numbers. Therefore we have, for some constant c,

For the remaining terms, , and

We can now simplify (23) as follows:

If , we have

| (24) |

otherwise,

| (25) |

The proof will be completed by solving them separately. For the case of (24), there are two possibilities.

Note that the results (26) and (27) are equivalent under the condition (7).

For the case of (25), if , we have , otherwise . The first inequality implies that

| (28) |

For the second inequality, if , we have , implying

| (29) |

If and , then

| (30) |

When J(f0) > 0, given the condition (7), the rates of and from (29), (26) and (27) are the same, and dominate those of (28) and (30). Therefore we have and . When J(f0) = 0, then (24) implies (26), while (25) implies (28) and (30). The possibility (ii) of (24) does not exist, nor does the result in (29). Under condition (8), the result of (26) is the same as those of (28) and (30). Therefore and

Contributor Information

Hongxiao Zhu, Virginia Tech, Blacksburg, USA.

Fang Yao, University of Toronto, Toronto, Canada.

Hao Helen Zhang, University of Arizona, Tucson, USA.

References

- Bair E, Hastie T, Paul D, Tibshirani R. Prediction by supervised principal components. J. Am. Statist. Ass. 2006;101(473):119–137.

- Berlinet A, Thomas-Agnan C. Reproducing Kernel Hilbert Space in Probability and Statistics. Kluwer Academic Publishers; Norwell, Massachusetts USA: 2004.

- Bosq D. Linear Processes in Function Spaces: Theory and Applications. Volume 149. Springer-Verlag Inc.; New York: 2000.

- Cardot H, Ferraty F, Mas A, Sarda P. Testing hypotheses in the functional linear model. Scand. J. Stat. 2003;30:241–255.

- Cardot H, Ferraty F, Sarda P. Functional linear model. Stat. Probabil. Lett. 1999;45:11–22.

- Fan J, Gijbels I. Local Polynomial Modelling and Its Applications. Chapman and Hall; London: 1996.

- Fan J, Li R. Variable selection via nonconcave penalized likelihood and its oracle properties. J. Am. Statist. Ass. 2001;96:1348–1360.

- Fan J, Zhang J. Two-step estimation of functional linear models with applications to longitudinal data. J. R. Statist. Soc. B. 2000;62:303–322.

- Goldsmith J, Bobb J, Crainiceanu CM, Caffo B, Reich D. Penalized functional regression. J. Comput. Graph. Stat. 2011;20(4):830–851. doi: 10.1198/jcgs.2010.10007.

- Hadi AS, Ling RF. Some cautionary notes on the use of principal components regression. Am. Stat. 1998;52(1):15–19.

- Hall P, Hosseini-Nasab M. On properties of functional principal components analysis. J. R. Statist. Soc. B. 2006;68:109–126.

- Hall P, Müller H, Wang J. Properties of principal component methods for functional and longitudinal data analysis. Ann. Statist. 2006;34:1493–1517.

- Hastie T, Tibshirani R, Friedman J. The Elements of Statistical Learning. Springer; New York: 2001.

- Hastie T, Tibshirani RJ. Generalized Additive Models. Chapman & Hall/CRC; London: 1990.

- James GM. Generalized linear models with functional predictors. J. R. Statist. Soc. B. 2002;64(3):411–432.

- James GM, Wang J, Zhu J. Functional linear regression that’s interpretable. Ann. Statist. 2009;37:2083–2108.

- Li Y, Wang N, Carroll R. Generalized functional linear models with semi-parametric single-index interactions. J. Am. Statist. Ass. 2010;105:621–633. doi: 10.1198/jasa.2010.tm09313.

- Lin Y, Zhang H. Component selection and smoothing in multivariate non-parametric regression. Ann. Statist. 2006;34:2272–2297.

- Marra G, Wood SN. Practical variable selection for generalized additive models. Comput. Stat. Data Anal. 2011;55(7):2372–2387.

- Meier L, Van de Geer S, Bühlmann P. High-dimensional additive modeling. Ann. Statist. 2009;37:3779–3821.

- Müller H, Stadtmüller U. Generalized functional linear models. Ann. Statist. 2005;33(2):774–805.

- Müller H, Yao F. Functional additive models. J. Am. Statist. Ass. 2008;103(484):1534–1544.

- Ramsay JO, Silverman BW. Functional Data Analysis. Second Edition. Springer; New York: 2005.

- Ravikumar P, Lafferty J, Liu H, Wasserman L. Sparse additive models. J. R. Statist. Soc. B. 2009;71:1009–1030.

- Rice JA, Silverman BW. Estimating the mean and covariance structure nonparametrically when the data are curves. J. R. Statist. Soc. B. 1991;53:233–243.

- Tibshirani R. Regression shrinkage and selection via the lasso. J. R. Statist. Soc. B. 1996;58:267–288.

- Van de Geer S. Empirical Processes in M-estimation. Cambridge University Press; 2000.

- Wahba G. Spline Models for Observational Data. SIAM; Philadelphia: 1990.

- Wood SN. Generalized Additive Models: An Introduction with R. Chapman and Hall; New York: 2006.

- Xu L, Jiang J, Wu H, Shen G, Yu R. Variable-weighted PLS. Chemometr. Intell. Lab. 2007;85:140–143.

- Yao F. Asymptotic distributions of nonparametric regression estimators for longitudinal or functional data. J. Multivariate Anal. 2007;98:40–56.

- Yao F, Müller HG. Functional quadratic regression. Biometrika. 2010;97:49–64.

- Yao F, Müller HG, Wang JL. Functional data analysis for sparse longitudinal data. J. Am. Statist. Ass. 2005;100:577–590.

- Zhu H, Vannucci M, Cox DD. Functional data classification in cervical pre-cancer diagnosis - a Bayesian variable selection model. Proceedings of the 2007 Joint Statistical Meetings. 2007.

- Zhu H, Vannucci M, Cox DD. A Bayesian hierarchical model for classification with selection of functional predictors. Biometrics. 2010;66:463–473. doi: 10.1111/j.1541-0420.2009.01283.x.

- Zipunnikov V, Caffo B, Yousem DM, Davatzikos C, Schwartz BS, Crainiceanu C. Functional principal component model for high-dimensional brain imaging. NeuroImage. 2011;58(3):772–784. doi: 10.1016/j.neuroimage.2011.05.085.

- Zou H. The adaptive lasso and its oracle properties. J. Am. Statist. Ass. 2006;101:1418–1429.