Abstract

Hearing aids are used to improve sound audibility for people with hearing loss, but the ability to make use of the amplified signal, especially in the presence of competing noise, can vary across people. Here we review how neuroscientists, clinicians, and engineers are using various types of physiological information to improve the design and use of hearing aids.

1. Introduction

Despite advances in hearing aid signal processing over the last few decades and careful verification using recommended clinical practices, successful use of amplification continues to vary widely. This is particularly true in background noise, where only approximately 60% of hearing aid users are satisfied with their performance [1]. Dissatisfaction can lead to undesirable consequences, such as discontinued hearing aid use, cognitive decline, and poor quality of life [2, 3].

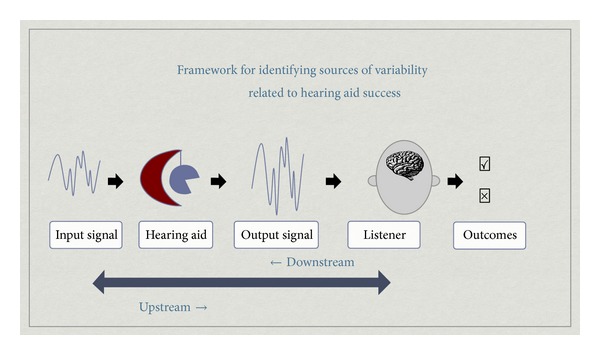

Many factors can contribute to aided speech understanding in noisy environments, including device-centered variables (e.g., directional microphones, signal processing, and gain settings) and patient-centered variables (e.g., age, attention, motivation, and biology). Although many contributors to hearing aid outcomes are known (e.g., audibility, age, and duration of hearing loss), a large portion of the variance in outcomes remains unexplained. Even less is known about the influence that interacting variables can have on performance. To help advance the field and spawn new scientific perspectives, Souza and Tremblay [4] put forth a simple framework for thinking about the possible sources of hearing aid performance variability. Their review included descriptions of emerging technology that could be used to quantify the acoustic content of the amplified signal and its relation to perception. For example, new technological advances (e.g., probe microphone recordings using real speech) were making it possible to explore the relationship between amplified speech signals, at the level of an individual's ear, and the perception of those same signals. Electrophysiological recordings of amplified signals were also being introduced as a potential tool for assessing the neural detection of amplified sound. The emphasis of the framework was on signal audibility and the ear-to-brain upstream processes associated with speech understanding. Since that time, many new directions of research have emerged, as has an appreciation of the cognitive resources involved when listening to amplified sounds. We therefore revisit this framework, highlighting some of the advances that have taken place since the original Souza and Tremblay [4] article (e.g., SNR, listening effort, and the importance of outcome measures), and emphasize the growing contribution of neuroscience (Figure 1).

Figure 1.

Framework for identifying sources of variability related to hearing aid success.

2. Upstream, Downstream, and Integrated Stages

A typical example highlighting the interaction between upstream and downstream contributions to performance outcomes is the cocktail party. The cocktail party effect is the phenomenon of a listener being able to attend to a particular stimulus while filtering out a variety of competing stimuli, similar to a partygoer focusing on a single conversation in a noisy room [5, 6]. The ability of a particular individual to “tune into” a single voice and “tune out” everything else coming out of their hearing aid is also an example of how variables specific to the individual can contribute to performance outcomes.

When described as a series of upstream events that could take place in someone's everyday life, the input signal refers to the acoustic properties of the incoming signal and/or the context in which the signal is presented. It could consist of a single or multiple talkers; it could be an auditory announcement projected overhead from a loudspeaker at the airport, or it could be a teacher giving homework instructions to children in a classroom. It has long been known that the ability to understand speech can vary in different types of listening environments because the signal-to-noise ratio (SNR) can vary from −2 dB, when in the presence of background noise outside the home, to +9 dB SNR, a level found inside urban homes [7]. Support for the idea that environmental SNR may influence a person's ability to make good use of their hearing aids comes from research showing that listeners are more dissatisfied and receive less benefit with their aids in noise than in quiet environments (e.g., [1, 8, 9]). In a large-scale survey, two of the top three reasons for nonadoption of aids were that aids did not perform well in noise (48%) and/or that they picked up background sounds (45%; [10]). Of the people who did try aids, nearly half returned them due to lack of perceived benefit in noise or amplification of background noise. It is therefore not surprising that traditional hearing aid research has focused on hearing aid engineering in an attempt to improve signal processing in challenging listening situations, so that optimal and audible signals can promote effective real-world hearing.

The next stage emphasizes the contribution of the hearing aid and how it modifies the acoustic signal (e.g., compression, gain, and advanced signal processing algorithms). Examples include the study of real-world effectiveness of directional microphone and digital noise reduction features in hearing aids (e.g., [11, 12]). Amplification of background noise is one of the most significant consumer-based complaints associated with hearing aids, and directional hearing aids can improve the SNR of speech occurring in a noisy background (e.g., [13, 14]). However, these findings in the laboratory may not translate to perceived benefit in the real world. When participants were given a four-week take-home trial, omnidirectional microphones were preferred over directional microphones [15]. Over the past several decades, few advances in hearing aid technology have been shown to result in improved outcomes (e.g., [9, 16]). Thus, attempts at enhancing the quality of the signal do not guarantee improved perception. This suggests that something, in addition to signal audibility and clarity, contributes to performance variability.

What is received by the individual's auditory system is not the signal entering the hearing aid but rather a modified signal leaving the hearing aid and entering the ear canal. Therefore, quantification of the signal at the output of the hearing aid is an important and necessary step to understanding the biological processing of amplified sound. Although simple measures of the hearing aid output (e.g., gain for a given input level) in a coupler (i.e., simulated ear canal) have been captured for decades, current best practice guidelines highlight the importance of measuring hearing aid function in the listener's own ear canal. Individual differences in ear canal volume and resonance and how the hearing aid is coupled to an individual's ear can lead to significant differences in ear canal output levels [17]. Furthermore, as hearing aid analysis systems become more sophisticated, we are able to document the hearing aid response to more complex input signals such as speech or even speech and noise [18], which provides greater ecological validity than simple pure tone sweeps. In addition, hearing aid features can alter other acoustic properties of a speech signal. For example, several researchers have evaluated the effects of compression parameters on temporal envelope or the slow fluctuations in a speech signal [19–23], spectral contrast or consonant vowel ratio [20, 24–26], bandwidth [24], effective compression ratio [23, 24, 27], dynamic range [27], and audibility [24, 27, 28]. For example, as the number of compression channels increases, spectral differences between vowel formants decrease [26], the level of consonants compared to the level of vowels increases [29], and dynamic range decreases [27]. Similarly, as compression time constants get shorter, the temporal envelope will reduce/smear [20, 21, 23] and the effective compression ratio will increase [27]. A stronger compression ratio has been linked to greater temporal envelope changes [21, 23]. 
Linear amplification may also create acoustic changes, such as changes in spectral contrast if the high frequencies have much more gain than the low frequencies (e.g., [24]). The acoustic changes caused by compression processing have been linked to perceptual changes in many cases [19–22, 24, 26, 30]. In general, altering compression settings (e.g., time constants or compression ratio) modifies the acoustics of the signal and the perceptual effects can be detrimental. For this reason, an emerging area of interest is to examine how frequency compression hearing aid technology affects the neural representation and perception of sound [31].
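To make the link between compression ratio and dynamic range concrete, the following is a minimal sketch of a static, single-channel input/output rule. It is an illustration only and not the processing of any particular hearing aid: the function names, the 50 dB SPL kneepoint, and the 20 dB gain are hypothetical values chosen for the example, and time constants (attack and release), which determine the effective compression ratio for running speech, are deliberately ignored.

```python
def compressed_output_level(input_db, gain_db=20.0, kneepoint_db=50.0, ratio=3.0):
    """Static input/output rule of a simple compressor (levels in dB SPL).

    All parameter values are hypothetical and chosen only for illustration.
    """
    if input_db <= kneepoint_db:
        # Linear region: every input level receives the same gain.
        return input_db + gain_db
    # Compression region: output grows by 1/ratio dB per 1 dB of input.
    return kneepoint_db + gain_db + (input_db - kneepoint_db) / ratio


def output_dynamic_range(low_db, high_db, **settings):
    """Difference between the output levels for the loudest and softest inputs."""
    return (compressed_output_level(high_db, **settings)
            - compressed_output_level(low_db, **settings))


# A 30 dB range of input levels (50 to 80 dB SPL) under two settings.
linear_range = output_dynamic_range(50.0, 80.0, ratio=1.0)
compressed_range = output_dynamic_range(50.0, 80.0, ratio=3.0)
print(linear_range, compressed_range)
```

Under these assumed settings, a 3:1 ratio maps a 30 dB input range onto a 10 dB output range, whereas a 1:1 (linear) setting preserves all 30 dB. Real devices apply such rules per channel and over time, which is why the acoustic consequences described above depend on the number of channels and the time constants as well as the nominal ratio.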

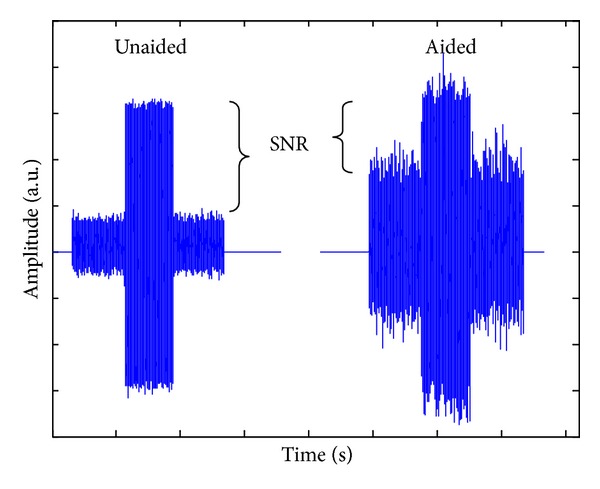

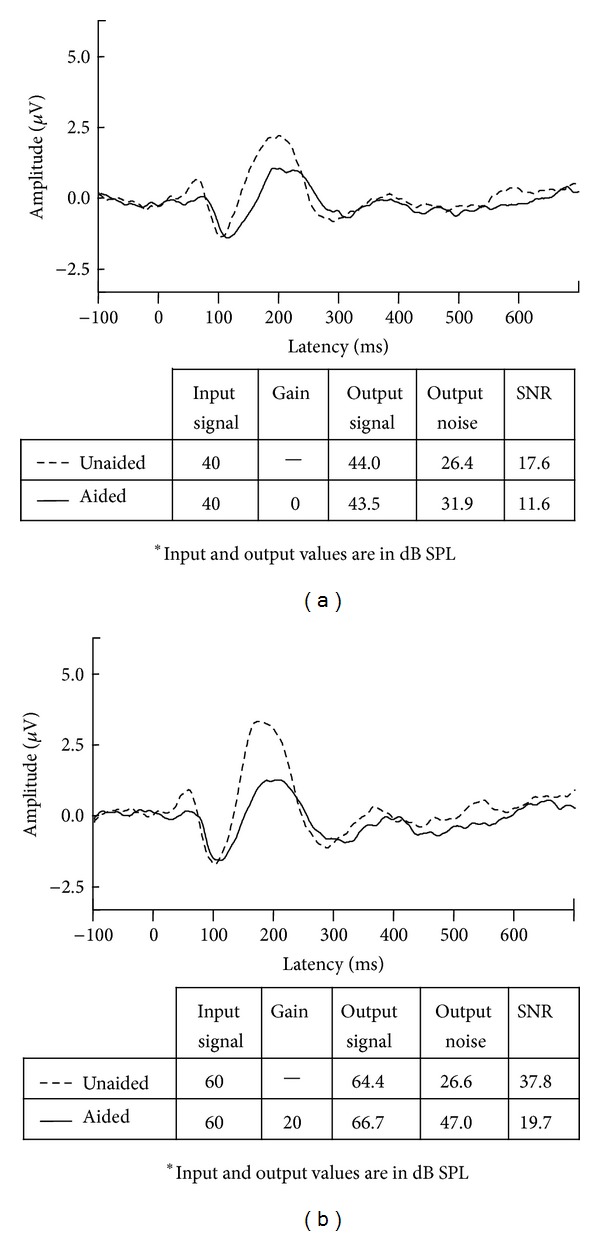

Characteristics of the listener (e.g., biology) can also contribute to a person's listening experience. Starting with bottom-up processing, one approach in neuroscience has been to model the auditory-nerve discharge patterns in normal and damaged ears in response to speech sounds so that this information can be translated into new hearing aid signal processing [32, 33]. The impact of cochlear dead regions on the fitting of hearing aids is another example of how biological information can influence hearing aid fitting [34]. Further upstream, Willott [35] established how aging and peripheral hearing loss affect sound transmission, including temporal processing, at higher levels in the brain. For this reason, brainstem and cortical evoked potentials are currently being used to quantify the neural representation of sound onset, offset, and even speech envelope, in children and adults wearing hearing aids, to assist clinicians with hearing aid fitting [36–39]. When evoked by different speech sounds at suprathreshold levels, patterns of cortical activity (e.g., P1-N1-P2, also called acoustic change responses (ACC)) are highly repeatable in individuals and can be used to distinguish some sounds that are different from one another [4, 37]. Despite this ability, we and others have since shown that P1-N1-P2 evoked responses do not reliably reflect hearing aid gain, even when different types of hearing aids (analog and digital) and their parameters (e.g., gain and frequency response) are manipulated [40–45]. What is more, the signal levels of phonemes when repeatedly presented in isolation to evoke cortical evoked potentials are not the same as hearing aid output levels when phonemes are presented in running speech context [46]. These examples are provided because they reinforce the importance of examining the output of the hearing aid.
Neural activity is modulated by both endogenous and exogenous factors and, in this example, the P1-N1-P2 complex was driven by the signal-to-noise ratio (SNR) of the amplified signal. Figure 2 shows the significant effect hearing aid amplification had on SNR when Billings et al. [43] presented a 1000 Hz tone through a hearing aid. Hearing aids are not designed to process steady-state tones, but results were similar even when naturally produced speech syllables were used [37]. Acoustic waveforms, recorded in-the-canal, are shown (unaided = left; aided = right). The signal level, as measured at the 1000 Hz centered 1/3 octave band, was approximately equivalent at 73 and 74 dB SPL for unaided and aided conditions. Noise levels in that same 1/3 octave band, however, approximated 26 dB SPL in the unaided condition and 54 dB SPL in the aided condition. Thus SNRs in the unaided and aided conditions, measured in the ear canal, were very different, and time-locked evoked brain activity shown in Figure 3 was influenced more by SNR than absolute signal level. Most of these SNR studies have been conducted in normal hearing listeners and thus the noise was audible, something unlikely to occur at some frequencies if a person has a hearing loss. Nevertheless, noise is always present in an amplified signal and contributors may range from amplified ambient noise to circuit noise generated by the hearing aid. It is therefore important to consider the effects of noise, among the many other modifications introduced by hearing aid processing (e.g., compression), on evoked brain activity. This is especially important because commercially available evoked potential systems are being used to estimate aided hearing sensitivity in young children [47].
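The size of the SNR difference follows directly from the band levels reported above, because an SNR in dB is the signal level minus the noise level when both are expressed in dB SPL. The short sketch below works through that arithmetic; the function and variable names are illustrative and are not taken from the original study.

```python
def snr_db(signal_db_spl, noise_db_spl):
    """SNR in dB from signal and noise levels given in dB SPL."""
    return signal_db_spl - noise_db_spl


# Levels in the 1000 Hz centered 1/3 octave band, as reported in the text.
unaided_snr = snr_db(73, 26)
aided_snr = snr_db(74, 54)

# Change in signal level versus change in SNR between conditions.
signal_change = 74 - 73
snr_change = aided_snr - unaided_snr
print(unaided_snr, aided_snr, signal_change, snr_change)
```

With these values the signal level differs by only 1 dB between conditions, while the SNR falls from 47 dB unaided to 20 dB aided, a 27 dB reduction.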

Figure 2.

Time waveforms of in-the-canal acoustic recordings for one individual. The unaided (left) and aided (right) conditions are shown together. Signal output as measured at the 1000 Hz centered 1/3 octave band was approximately equivalent at 73 and 74 dB SPL for the unaided and aided conditions. However, noise levels in the same 1/3 octave band were approximately 26 and 54 dB SPL, demonstrating the significant change in SNR.

Figure 3.

Two examples showing grand mean CAEPs recorded with similar mean output signal levels. Panels: (a) 40 dB input signals and (b) 60 dB input signals show unaided and aided grand mean waveforms evoked with corresponding in-the-canal acoustic measures. Despite similar input and output signal levels, unaided and aided brain responses are quite different. Aided responses are smaller than unaided responses, perhaps because the SNRs are poorer in the aided condition.

What remains unclear is how neural networks process different SNRs, facilitate the suppression of unwanted competing signals (e.g., noise), and process simultaneous streams of information when people with hearing loss wear hearing aids. Individual listening abilities have been attributed to variability involving motivation, selective attention, stream segregation, and multimodal interactions, as well as many other cognitive contributions [48]. It can be mediated by the biological consequences of aging and duration of hearing loss, as well as the peripheral and central effects of peripheral pathology (for reviews see [44, 49]). Despite the obvious importance of this stage and the plethora of papers published each year on the topics of selective attention, auditory streaming, object formation, and spatial hearing, the inclusion of people with hearing loss and who wear hearing aids remains relatively slim.

Over a decade ago, a working group that included scientists from academia and industry gathered and discussed the need to include central factors when considering hearing aid use [50] and since then there has been increased awareness about including measures of cognition, listening effort, and other top-down functions when discussing rehabilitation involving hearing aid fitting [51]. However, finding universally agreed upon definitions and methods to quantify cognitive function remains a challenge. Several self-report questionnaires and other subjective measures have evolved to measure listening effort, for example, but there are also concerns that self-report measures do not always correlate with objective measures [52, 53]. For this reason, new explorations involving objective measures are underway.

There have been tremendous advances in technology that permit noninvasive objective assessments of sensory and cognitive function. With this information it might become possible to harness cognitive resources in ways that have been previously unexplored. For example, it might become possible to use brain measures to guide manufacturer designs. Knowing how the auditory system responds to gain, noise reduction, and/or compression circuitry could influence future generations of biologically motivated changes in hearing aid design. The influence of brain responses is especially important with current advances in hearing aid design featuring binaural processing, which involves algorithms making decisions based on cues received from both hearing aids. Returning to the example of listening effort, pupillometry [54], an objective measure of pupil dilation, and even skin conductance (EMG activity; [55]) are being explored as objective methods for quantifying listening effort and cognitive load. Other approaches include the use of EEG and other neuropsychological correlates of auditive processing for the purpose of setting a hearing device by detecting listening effort [56]. In fact, a number of patents for this purpose are already held by hearing aid manufacturers such as Siemens, Widex, and Oticon, to name a few. These new advances in neuroscience make it clear that multidisciplinary efforts that combine neuroscience and engineering and are verified using clinical trials are innovative directions in hearing aid science. Taking this point one step further, biological codes have been used to innervate motion of artificial limbs/prostheses, and it might someday be possible to design a hearing prosthesis that includes neuromachine interface systems driven by a person's listening effort or attention [57–59].
Over the last decade, engineers and neuroscientists have worked together to translate brain-computer-interface systems from the laboratory for widespread clinical use, including hearing loss [60]. Most recently, eye gaze is being used as a means of steering directional amplification. The visually guided hearing aid (VGHA) combines an eye tracker and an acoustic beam-forming microphone array that work together to tune in the sounds your eyes are directed to while minimizing others [61]. The VGHA is a lab-based prototype whose components connect via computers and other equipment, but a goal is to turn it into a wearable device. But, once again, the successful application of future BCI/VGHA devices will likely require interdisciplinary efforts, described within our framework, given that successful use of amplification involves more than signal processing and engineering.
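The general idea of steering a microphone array toward a gaze direction can be sketched with a generic delay-and-sum scheme. The example below is an assumption-laden illustration and is not the published VGHA algorithm: the function names, the four-microphone uniform linear array, the 2 cm spacing, and the angle convention are all hypothetical choices made for the sketch.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate value for air at room temperature


def arrival_offsets(gaze_angle_deg, n_mics=4, spacing_m=0.02):
    """Relative arrival times (s) of a plane wave at each microphone.

    Assumed convention: 0 degrees is straight ahead (broadside), and
    positive angles point toward the side of microphone 0, so that
    microphone 0 receives the wavefront first for positive angles.
    """
    theta = math.radians(gaze_angle_deg)
    raw = [m * spacing_m * math.sin(theta) / SPEED_OF_SOUND_M_S
           for m in range(n_mics)]
    earliest = min(raw)
    return [t - earliest for t in raw]  # earliest microphone has offset 0


def compensating_delays(gaze_angle_deg, n_mics=4, spacing_m=0.02):
    """Delays (s) to apply per microphone so the gazed-at source lines up.

    After these delays are applied, signals from the gaze direction add
    coherently when the channels are summed; other directions do not.
    """
    offsets = arrival_offsets(gaze_angle_deg, n_mics, spacing_m)
    latest = max(offsets)
    return [latest - t for t in offsets]


# Hypothetical eye-tracker reading: the listener looks 30 degrees off center.
offsets_30 = arrival_offsets(30.0)
delays_30 = compensating_delays(30.0)
aligned = [o + d for o, d in zip(offsets_30, delays_30)]
print(delays_30, aligned)
```

In this sketch the eye tracker contributes only the angle; every microphone's offset plus its compensating delay comes out to the same total, which is what allows sound from the gazed-at direction to sum constructively. A wearable system would additionally need to handle head movement, reverberation, and processing latency, none of which are modeled here.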

If a goal of hearing aid research is to enhance and empower a person's listening experience while using hearing aids, then a critical metric within this framework is the outcome measure. Quantifying a person's listening experience using a hearing aid as being positive [✓] or negative [✗] might seem straightforward, but decades of research on the topic of outcome measures show this is not the case. Research aimed at modeling and predicting hearing aid outcome [9, 62] shows that there are multiple variables that influence various hearing aid outcomes. A person's age, their expectations, and the point in time at which they are queried can all influence the outcome measure. The type of outcome measure, self-report or otherwise, can also affect results. It is for this reason that a combination of measures (e.g., objective measures of speech-understanding performance; self-report measures of hearing aid usage; and self-report measures of hearing aid benefit and satisfaction) is used to characterize communication-related hearing aid outcome. Expanding our knowledge about the biological influences on speech understanding in noise can inspire the development of new outcome measures that are more sensitive to a listener's perception and to clinical interventions. For example, measuring participation in communication may assess a listener's use of their auditory reception on a deeper level than current outcomes asking how well speech is understood in various environments [63], which could be a promising new development in aided self-report outcomes.

3. Putting It All Together

Many factors can contribute to aided speech understanding in noisy environments, including device centered (e.g., directional microphones, signal processing, and gain settings) and patient centered variables (e.g., age, attention, motivation, and biology). The framework (Figure 1) proposed by Souza and Tremblay [4] provides a context for discussing the multiple stages involved in the perception of amplified sounds. What is more, it illustrates how research aimed at exploring one variable in isolation (e.g., neural mechanisms underlying auditory streaming) falls short of understanding the many interactive stages that are involved in auditory streaming in a person who wears a hearing aid. It can be argued that it is necessary to first understand how normal hearing ear-brain systems stream, but it can also be argued that interventions based on normal hearing studies are limited in their generalizability to hearing aid users.

A person's self-report or aided performance on an outcome measure can be attributed to many different variables illustrated in Figure 1. Each variable (e.g., input signal) could vary in different ways. One listener might describe themselves as performing well [✓] when the input signal is a single speaker in moderate noise conditions, provided they are paying attention to the speaker while using a hearing aid that makes use of a directional microphone. This same listener might struggle [✗] if this single speaker is a lecturer in the front of a large classroom who paces back and forth across the stage and intermittently speaks into a microphone. In this example, changes in the quality and direction of a single source of input may be enough to negatively affect a person's use of sound upstream because of a reduced neural capacity to follow sounds when they change in location and in space. This framework and these examples are overly simplistic, but they are used to emphasize the complexity and multiple interactions that contribute to overall performance variability. We also argue that it is overly simplistic for clinicians and scientists to assume that explanations of performance variability rest solely on one stage/variable. For this reason, interdisciplinary research that considers the contribution of neuroscience as an important stage along the continuum is encouraged.

The experiments highlighted here serve as examples to show how far hearing aid research has come and how multidisciplinary it has become. Since the original publication of Souza and Tremblay [4], advances have been made on the clinical front, as shown through the many studies aimed at using neural detection measures to assist with hearing aid fitting. And it is through neuroengineering that the next generation of hearing prostheses will likely come.

Acknowledgments

The authors wish to acknowledge funding from NIDCD R01 DC012769-02 as well as the Virginia Merrill Bloedel Hearing Research Center Traveling Scholar Program.

Conflict of Interests

The authors declare that there is no conflict of interests regarding the publication of this paper.

References

- 1. Kochkin S. Customer satisfaction with hearing instruments in the digital age. Hearing Journal. 2005;58(9):30–39.

- 2. Chia EM, Wang JJ, Rochtchina E, Cumming RR, Newall P, Mitchell P. Hearing impairment and health-related quality of life: the Blue Mountains Hearing Study. Ear and Hearing. 2007;28(2):187–195. doi: 10.1097/AUD.0b013e31803126b6.

- 3. Chisolm TH, Johnson CE, Danhauer JL, et al. A systematic review of health-related quality of life and hearing aids: final report of the American Academy of Audiology Task Force on the Health-Related Quality of Life Benefits of Amplification in Adults. Journal of the American Academy of Audiology. 2007;18(2):151–183. doi: 10.3766/jaaa.18.2.7.

- 4. Souza PE, Tremblay KL. New perspectives on assessing amplification effects. Trends in Amplification. 2006;10(3):119–143. doi: 10.1177/1084713806292648.

- 5. Cherry EC. Some experiments on the recognition of speech, with one and with two ears. The Journal of the Acoustical Society of America. 1953;25(5):975–979.

- 6. Wood N, Cowan N. The cocktail party phenomenon revisited: how frequent are attention shifts to one's name in an irrelevant auditory channel? Journal of Experimental Psychology: Learning, Memory, and Cognition. 1995;21(1):255–260. doi: 10.1037//0278-7393.21.1.255.

- 7. Pearsons KS, Bennett RL, Fidell S. EPA Report. 1977;(600/1-77-025)

- 8. Cox RM, Alexander GC. Maturation of hearing aid benefit: objective and subjective measurements. Ear and Hearing. 1992;13(3):131–141. doi: 10.1097/00003446-199206000-00001.

- 9. Humes LE, Ahlstrom JB, Bratt GW, Peek BF. Studies of hearing-aid outcome measures in older adults: a comparison of technologies and an examination of individual differences. Seminars in Hearing. 2009;30(2):112–128.

- 10. Kochkin S. MarkeTrak VII: obstacles to adult non-user adoption of hearing aids. Hearing Journal. 2007;60(4):24–51.

- 11. Bentler RA. Effectiveness of directional microphones and noise reduction schemes in hearing aids: a systematic review of the evidence. Journal of the American Academy of Audiology. 2005;16(7):473–484. doi: 10.3766/jaaa.16.7.7.

- 12. Bentler R, Wu YH, Kettel J, Hurtig R. Digital noise reduction: outcomes from laboratory and field studies. International Journal of Audiology. 2008;47(8):447–460. doi: 10.1080/14992020802033091.

- 13. Palmer CV, Bentler R, Mueller HG. Amplification with digital noise reduction and the perception of annoying and aversive sounds. Trends in Amplification. 2006;10(2):95–104. doi: 10.1177/1084713806289554.

- 14. Wu YH, Bentler RA. Impact of visual cues on directional benefit and preference: Part I-laboratory tests. Ear and Hearing. 2010;31(1):22–34. doi: 10.1097/AUD.0b013e3181bc767e.

- 15. Wu YH, Bentler RA. Impact of visual cues on directional benefit and preference: Part II-field tests. Ear and Hearing. 2010;31(1):35–46. doi: 10.1097/AUD.0b013e3181bc769b.

- 16. Bentler RA, Duve MR. Comparison of hearing aids over the 20th century. Ear and Hearing. 2000;21(6):625–639. doi: 10.1097/00003446-200012000-00009.

- 17. Fikret-Pasa S, Revit LJ. Individualized correction factors in the preselection of hearing aids. Journal of Speech and Hearing Research. 1992;35(2):384–400. doi: 10.1044/jshr.3502.384.

- 18. Audioscan. Verifit Users Guide Version 3.10. Ontario, Canada: Etymonic Design Inc; 2012.

- 19. Hickson L, Thyer N. Acoustic analysis of speech through a hearing aid: perceptual effects of changes with two-channel compression. Journal of the American Academy of Audiology. 2003;14(8):414–426.

- 20. Jenstad LM, Souza PE. Quantifying the effect of compression hearing aid release time on speech acoustics and intelligibility. Journal of Speech, Language, and Hearing Research. 2005;48(3):651–667. doi: 10.1044/1092-4388(2005/045).

- 21. Jenstad LM, Souza PE. Temporal envelope changes of compression and speech rate: combined effects on recognition for older adults. Journal of Speech, Language, and Hearing Research. 2007;50(5):1123–1138. doi: 10.1044/1092-4388(2007/078).

- 22. Stone MA, Moore BCJ. Quantifying the effects of fast-acting compression on the envelope of speech. Journal of the Acoustical Society of America. 2007;121(3):1654–1664. doi: 10.1121/1.2434754.

- 23. Souza PE, Jenstad LM, Boike KT. Measuring the acoustic effects of compression amplification on speech in noise. Journal of the Acoustical Society of America. 2006;119(1):41–44. doi: 10.1121/1.2108861.

- 24. Stelmachowicz PG, Kopun J, Mace A, Lewis DE, Nittrouer S. The perception of amplified speech by listeners with hearing loss: acoustic correlates. Journal of the Acoustical Society of America. 1995;98(3):1388–1399. doi: 10.1121/1.413474.

- 25. Hickson L, Thyer N, Bates D. Acoustic analysis of speech through a hearing aid: consonant-vowel ratio effects with two-channel compression amplification. Journal of the American Academy of Audiology. 1999;10(10):549–556.

- 26. Bor S, Souza P, Wright R. Multichannel compression: effects of reduced spectral contrast on vowel identification. Journal of Speech, Language, and Hearing Research. 2008;51(5):1315–1327. doi: 10.1044/1092-4388(2008/07-0009).

- 27. Henning RLW, Bentler RA. The effects of hearing aid compression parameters on the short-term dynamic range of continuous speech. Journal of Speech, Language, and Hearing Research. 2008;51(2):471–484. doi: 10.1044/1092-4388(2008/034).

- 28. Souza PE, Turner CW. Quantifying the contribution of audibility to recognition of compression-amplified speech. Ear and Hearing. 1999;20(1):12–20. doi: 10.1097/00003446-199902000-00002.

- 29. Hickson L, Dodd B, Byrne D. Consonant perception with linear and compression amplification. Scandinavian Audiology. 1995;24(3):175–184. doi: 10.3109/01050399509047532.

- 30. Schwartz AH, Shinn-Cunningham BG. Effects of dynamic range compression on spatial selective auditory attention in normal-hearing listeners. Journal of the Acoustical Society of America. 2013;133(4):2329–2339. doi: 10.1121/1.4794386.

- 31. Glista D, Easwar V, Purcell DW, Scollie S. A pilot study on cortical auditory evoked potentials in children: aided CAEPs reflect improved high-frequency audibility with frequency compression hearing aid technology. International Journal of Otolaryngology. 2012;2012:12 pages. doi: 10.1155/2012/982894.

- 32.Carney LH. A model for the responses of low-frequency auditory-nerve fibers in cat. Journal of the Acoustical Society of America. 1993;93(1):401–417. doi: 10.1121/1.405620. [DOI] [PubMed] [Google Scholar]

- 33.Sachs MB, Bruce IC, Miller RL, Young ED. Biological basis of hearing-aid design. Annals of Biomedical Engineering. 2002;30(2):157–168. doi: 10.1114/1.1458592. [DOI] [PubMed] [Google Scholar]

- 34.Cox RM, Johnson JA, Alexander GC. Implications of high-frequency cochlear dead regions for fitting hearing aids to adults with mild to moderately severe hearing loss. Ear and Hearing. 2012;33(5):573–587. doi: 10.1097/AUD.0b013e31824d8ef3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Willott JF. Anatomic and physiologic aging: a behavioral neuroscience perspective. Journal of the American Academy of Audiology. 1996;7(3):141–151. [PubMed] [Google Scholar]

- 36.Tremblay KL, Kalstein L, Billings CJ, Souza PE. The neural representation of consonant-vowel transitions in adults who wear hearing aids. Trends in Amplification. 2006;10(3):155–162. doi: 10.1177/1084713806292655. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Tremblay KL, Billings CJ, Friesen LM, Souza PE. Neural representation of amplified speech sounds. Ear and Hearing. 2006;27(2):93–103. doi: 10.1097/01.aud.0000202288.21315.bd. [DOI] [PubMed] [Google Scholar]

- 38.Small SA, Werker JF. Does the ACC have potential as an index of early speech-discrimination ability? A preliminary study in 4-month-old infants with normal hearing. Ear and Hearing. 2012;33(6):e59–e69. doi: 10.1097/AUD.0b013e31825f29be. [DOI] [PubMed] [Google Scholar]

- 39.Anderson S, Kraus N. The potential role of the cABR in assessment and management of hearing impairment. International Journal of Otolaryngology. 2013;2013:10 pages. doi: 10.1155/2013/604729.604729 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Jenstad LM, Marynewich S, Stapells DR. Slow cortical potentials and amplification. Part II: acoustic measures. International Journal of Otolaryngology. 2012;2012:14 pages. doi: 10.1155/2012/386542.386542 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Marynewich S, Jenstad LM, Stapells DR. Slow cortical potentials and amplification. Part I: N1-P2 measures. International Journal of Otolaryngology. 2012;2012:921513, 11 pages. doi: 10.1155/2012/921513.

- 42. Billings CJ, Tremblay KL, Souza PE, Binns MA. Effects of hearing aid amplification and stimulus intensity on cortical auditory evoked potentials. Audiology and Neurotology. 2007;12(4):234–246. doi: 10.1159/000101331.

- 43. Billings CJ, Tremblay KL, Miller CW. Aided cortical auditory evoked potentials in response to changes in hearing aid gain. International Journal of Audiology. 2011;50(7):459–467. doi: 10.3109/14992027.2011.568011.

- 44. Billings CJ, Tremblay KL, Willott JF. The aging auditory system. In: Tremblay K, Burkard R, editors. Translational Perspectives in Auditory Neuroscience: Hearing Across the Lifespan. Assessment and Disorders. San Diego, Calif, USA: Plural Publishing, Inc; 2012.

- 45. Billings CJ. Uses and limitations of electrophysiology with hearing aids. Seminars in Hearing. 2013;34(4):257–269. doi: 10.1055/s-0033-1356638.

- 46. Easwar V, Purcell DW, Scollie SD. Electroacoustic comparison of hearing aid output of phonemes in running speech versus isolation: implications for aided cortical auditory evoked potentials testing. International Journal of Otolaryngology. 2012;2012:518202, 11 pages. doi: 10.1155/2012/518202.

- 47. Munro KJ, Purdy SC, Ahmed S, Begum R, Dillon H. Obligatory cortical auditory evoked potential waveform detection and differentiation using a commercially available clinical system: HEARLab. Ear and Hearing. 2011;32(6):782–786. doi: 10.1097/AUD.0b013e318220377e.

- 48. Rudner M, Lunner T. Cognitive spare capacity as a window on hearing aid benefit. Seminars in Hearing. 2013;34(4):298–307.

- 49. Willott JF. Physiological plasticity in the auditory system and its possible relevance to hearing aid use, deprivation effects, and acclimatization. Ear and Hearing. 1996;17:66S–77S. doi: 10.1097/00003446-199617031-00007.

- 50. Kiessling J, Pichora-Fuller MK, Gatehouse S, et al. Candidature for and delivery of audiological services: special needs of older people. International Journal of Audiology. 2003;42(2):S92–S101.

- 51. Gosselin PA, Gagné J-P. Use of a dual-task paradigm to measure listening effort. Canadian Journal of Speech-Language Pathology and Audiology. 2010;34(1):43–51.

- 52. Saunders GH, Forsline A. The Performance-Perceptual Test (PPT) and its relationship to aided reported handicap and hearing aid satisfaction. Ear and Hearing. 2006;27(3):229–242. doi: 10.1097/01.aud.0000215976.64444.e6.

- 53. Shulman LM, Pretzer-Aboff I, Anderson KE, et al. Subjective report versus objective measurement of activities of daily living in Parkinson’s disease. Movement Disorders. 2006;21(6):794–799. doi: 10.1002/mds.20803.

- 54. Koelewijn T, Zekveld AA, Festen JM, Rönnberg J, Kramer SE. Processing load induced by informational masking is related to linguistic abilities. International Journal of Otolaryngology. 2012;2012:865731, 11 pages. doi: 10.1155/2012/865731.

- 55. Mackersie CL, Cones H. Subjective and psychophysiological indexes of listening effort in a competing-talker task. Journal of the American Academy of Audiology. 2011;22(2):113–122. doi: 10.3766/jaaa.22.2.6.

- 56. Strauss DJ, Corona-Strauss FI, Trenado C, et al. Electrophysiological correlates of listening effort: neurodynamical modeling and measurement. Cognitive Neurodynamics. 2010;4(2):119–131. doi: 10.1007/s11571-010-9111-3.

- 57. Debener S, Minow F, Emkes R, Gandras K, de Vos M. How about taking a low-cost, small, and wireless EEG for a walk? Psychophysiology. 2012;49(11):1617–1621. doi: 10.1111/j.1469-8986.2012.01471.x.

- 58. Hill NJ, Schölkopf B. An online brain-computer interface based on shifting attention to concurrent streams of auditory stimuli. Journal of Neural Engineering. 2012;9(2):026011. doi: 10.1088/1741-2560/9/2/026011.

- 59. Wronkiewicz M, Larson E, Lee AK. Towards a next-generation hearing aid through brain state classification and modeling. Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2013;2013:2808–2811. doi: 10.1109/EMBC.2013.6610124.

- 60. Wolpaw JR, Birbaumer N, McFarland DJ, Pfurtscheller G, Vaughan TM. Brain-computer interfaces for communication and control. Clinical Neurophysiology. 2002;113(6):767–791. doi: 10.1016/s1388-2457(02)00057-3.

- 61. Kidd G Jr, Favrot S, Desloge JG, Streeter TM, Mason CR. Design and preliminary testing of a visually guided hearing aid. Journal of the Acoustical Society of America. 2013;133(3):EL202–EL207. doi: 10.1121/1.4791710.

- 62. Humes LE, Ricketts TA. Modelling and predicting hearing aid outcome. Trends in Amplification. 2003;7(2):41–75. doi: 10.1177/108471380300700202.

- 63. Baylor C, Burns M, Eadie T, Britton D, Yorkston K. A qualitative study of interference with communicative participation across communication disorders in adults. The American Journal of Speech-Language Pathology. 2011;20(4):269–287. doi: 10.1044/1058-0360(2011/10-0084).