Abstract

Learning how to obtain rewards requires learning about their contexts and likely causes. How do long-term memory mechanisms balance the need to represent potential determinants of reward outcomes with the computational burden of an over-inclusive memory? One solution would be to enhance memory for salient events that occur during reward anticipation, because all such events are potential determinants of reward. We tested whether reward motivation enhances encoding of salient events like expectancy violations. During functional magnetic resonance imaging, participants performed a reaction-time task in which goal-irrelevant expectancy violations were encountered during states of high- or low-reward motivation. Motivation amplified hippocampal activation to and declarative memory for expectancy violations. Connectivity of the ventral tegmental area (VTA) with medial prefrontal, ventrolateral prefrontal, and visual cortices preceded and predicted this increase in hippocampal sensitivity. These findings elucidate a novel mechanism whereby reward motivation can enhance hippocampus-dependent memory: anticipatory VTA-cortical–hippocampal interactions. Further, the findings integrate literatures on dopaminergic neuromodulation of prefrontal function and hippocampus-dependent memory. We conclude that during reward motivation, VTA modulation induces distributed neural changes that amplify hippocampal signals and records of expectancy violations to improve predictions—a potentially unique contribution of the hippocampus to reward learning.

Keywords: expectancy violation, hippocampus, motivation, reward, ventral tegmental area

Introduction

Relationships between events are often clear only in retrospect. For example, when something good happens, we want to know why it happened and how to make it happen again. However, many events may precede a reward; how do we know which of them caused it? One way to solve this “credit assignment problem” is by recording in memory all potential determinants of behaviorally relevant events like rewards (Fu and Anderson 2008). From an over-inclusive mnemonic record, the relationships between behaviorally relevant events can be disambiguated as experience accumulates, improving mnemonic models for predicting future rewards and knowing how to get them.

How long-term memory supports the resolution of credit assignment for rewards and similar computational problems must be reconciled with combinatorial explosion in representing potential relationships. These two opposing constraints could both be satisfied if the contents of memory were biased as follows: Because it indicates an expectation that reward is possible, reward motivation should result in enhanced memory encoding for salient events, even if those events are not explicitly associated with reward. Conversely, over-inclusive memory encoding should occur only during reward motivation, to limit computational burden. Because any salient event encountered during reward motivation is potentially predictive of reward outcomes, such a memory bias would offer a computationally feasible way to facilitate obtaining future rewards.

Expectancy violations are salient events because they are surprising; they signal environmental volatility and act as a cue to update mnemonic representations. Thus, expectancy violations encountered during pursuit of a goal should be more salient and better remembered than those encountered when no goal is active. For example, an individual might memorize the location of a new, soon-to-be opened restaurant better if she notices it when hungry than when full. This adaptive memory bias would ensure that when behaviorally relevant events do occur, mnemonic models of the environment include an exuberant set of possible predictive relationships to be refined by additional experience.

No research has yet investigated whether reward motivation affects the encoding of behaviorally salient events encountered during goal pursuit, but the hippocampal memory system is well positioned for such a function. First, the hippocampus is specialized to create inclusive records of the surrounding environment: the hippocampus generates detailed representations of multiple interconnected events (Davachi 2006; Ranganath 2010), unfolding in time (Devito and Eichenbaum 2011; Tubridy and Davachi 2011), with preferential representation of surprises (i.e., expectancy violations) (Ranganath and Rainer 2003). Second, the hippocampus is modulated by activation of the dopaminergic midbrain, a region strongly implicated in reward-motivated behaviors (Berridge and Robinson 1998; Wise 2004). Accumulating evidence suggests that engagement of the midbrain (including the ventral tegmental area [VTA]) biases hippocampal memory to support future adaptive behaviors (Shohamy and Adcock 2010; Lisman et al. 2011). However, although VTA activation and motivation have both been shown to influence memory encoding (Shohamy and Adcock 2010; Lisman et al. 2011), the mechanisms of these effects in behaving organisms remain to be elucidated.

Prior research suggests that reward motivation could influence hippocampal memory either directly or indirectly. Plausible direct mechanisms include dopaminergic modulation of dynamic hippocampal physiology (Lisman and Otmakhova 2001; Hammad and Wagner 2006; Swant et al. 2008) and stabilization of long-term potentiation (Wang and Morris 2010; Lisman et al. 2011). Alternatively, given the widespread influence of dopamine on distributed brain systems beyond the hippocampus, changes in these broader networks could alter hippocampal physiology indirectly. Indirect hippocampal modulation could result from changes in a variety of processes that contribute to memory encoding (Nieoullon and Coquerel 2003; Mehta and Riedel 2006; Watanabe 2007). For example, the VTA modulates prefrontal neurophysiology during working memory, and attentional or executive processes (Williams and Goldman-Rakic 1995; Durstewitz et al. 2000; Goldman-Rakic et al. 2000; Seamans and Yang 2004), and recent evidence from the rodent literature has demonstrated synchronized activity across the VTA, hippocampus, and prefrontal cortex (PFC) during a working memory paradigm (Fujisawa and Buzsaki 2011). Because working memory and executive processes support episodic memory encoding (Blumenfeld and Ranganath 2007; Jeneson and Squire 2012), these processes could be recruited during reward-motivated memory encoding to indirectly modulate the hippocampus. This possibility is supported by recent electrophysiological evidence linking frontal theta to reward-motivated memory (Gruber et al. 2013) but a link to hippocampal physiology has yet to be demonstrated.

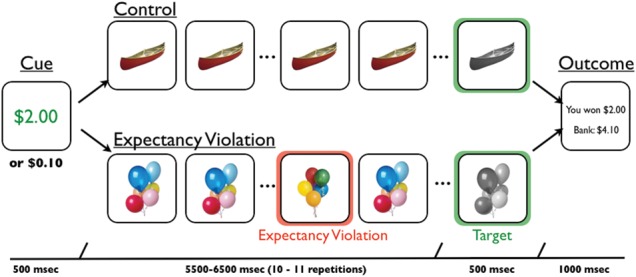

The first goal of the current study was to test for the existence of a mnemonic bias for encoding expectancy violations during reward motivation. The second goal was to adjudicate between direct and indirect mechanistic accounts of this adaptive memory bias. To address these questions, we collected fMRI data while participants performed a rewarded reaction-time task with goal-irrelevant expectancy violations embedded in a series of goal-relevant stimuli (Fig. 1). Specifically, participants viewed serial repetitions of a trial-unique object, responding via button press when it changed from color to grayscale, under high- or low-reward motivation. On half of the trials, the series was interrupted by a temporally unpredictable, novel but highly similar object: a goal-irrelevant expectancy violation. Following scanning, participants' memory was tested for the novel objects that served as expectancy violations. This design allowed us to independently measure activations to reward cues and expectancy violation events, and thus, to identify a novel candidate mechanism of midbrain dopamine effects on hippocampal physiology and memory.

Figure 1.

Experimental task. In each trial, participants first viewed a reward cue that indicated whether they had the opportunity to earn a high ($2.00) or low reward ($0.10) for a speeded button press to a target image (a grayscale image). In control trials, the reward cue was followed by serial repetitions of a trial-unique, color object image. After 10 to 11 repetitions that image turned grayscale, to which participants were to make a speeded button press. In expectancy violation trials, serial repetitions of the trial-unique, color object image were interrupted by a novel, yet highly similar, object image at a temporally unpredictable time. Participants were instructed that expectancy violations had no bearing on earning rewards, and earnings were solely based on their button press to target images. Following the button press to the target, participants were presented with an outcome screen that indicated how much money they earned on that trial and how much they had accumulated over the course of the experiment.

Materials and Methods

Participants

Twenty-seven healthy, right-handed participants were paid $40 to participate, plus any additional earned monetary bonuses (mean = $69.78; standard deviation = $19.06). All participants gave written informed consent for a protocol approved and monitored by the Duke University Institutional Review Board. One participant was excluded due to a computer malfunction. The current analysis included 26 participants (18 females, age range: 18–36 years; median age = 24.5 years).

Experimental Task

To test for the effects of reward motivation on expectancy violation processing, participants performed a speeded reaction-time task, modified from the monetary incentive delay task (Knutson et al. 2000). This task was designed to manipulate 2 factors: participants' motivational state and the presence of expectancy violations. To manipulate motivational state, every trial of the task began with a 500-ms cue that indicated whether participants could earn either a high ($2.00) or low ($0.10) reward for a speeded button press to a target image (Figure 1). Following a variable delay (between 5.5 and 6.5 s), the target appeared on the screen. Targets were trial-unique, grayscale object images. If participants were sufficiently fast at responding to targets, they earned the monetary bonus indicated by the cue. The target reaction time for earning money was determined by an adaptive algorithm, which estimated the response time threshold at which the subject would be successful on ∼65% of trials. Thresholds were calculated independently for each condition to ensure that reinforcement rates would be equated across all 4 conditions. Following the presentation of the target image, participants viewed an outcome screen that indicated their success on the current trial as well as their accumulated monetary bonuses. The intertrial interval between reward feedback and the following reward cue was 1–15.8 s (mean = 4.84 s).
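The text does not specify how the adaptive algorithm estimated the threshold. As a minimal sketch, assuming a percentile rule applied to a sliding window of recent reaction times (the window length, the function `rt_threshold`, and the class `AdaptiveThresholds` are hypothetical and not taken from the study), per-condition thresholds targeting ∼65% success could be tracked as follows:

```python
from collections import defaultdict

TARGET_SUCCESS_RATE = 0.65  # from the text: success on ~65% of trials


def rt_threshold(recent_rts, target_rate=TARGET_SUCCESS_RATE):
    """Return the RT cutoff (ms) that about target_rate of recent_rts meet."""
    if not recent_rts:
        raise ValueError("need at least one reaction time")
    ordered = sorted(recent_rts)
    # Number of responses that should count as fast enough.
    k = max(1, round(target_rate * len(ordered)))
    return ordered[k - 1]


class AdaptiveThresholds:
    """Keep one sliding window of RTs per condition (hypothetical helper)."""

    def __init__(self, window=20):
        self.window = window  # assumed window length, not from the text
        self.history = defaultdict(list)

    def record(self, condition, rt_ms):
        """Store a new RT and drop anything older than the window."""
        rts = self.history[condition]
        rts.append(rt_ms)
        del rts[:-self.window]

    def threshold(self, condition):
        """Current cutoff for this condition; a response succeeds if rt <= cutoff."""
        return rt_threshold(self.history[condition])
```

Keeping a separate window for each of the 4 conditions is what lets faster conditions receive stricter cutoffs, so that the proportion of rewarded trials stays comparable across conditions.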

To manipulate expectancy violation, following the cue but prior to the target presentation, participants viewed 10–11 serial presentations of color object images for 409 ms with an interstimulus interval (ISI) of 136 ms. During control trials (CO), participants viewed repeated presentations of a color version of the upcoming target stimulus. During expectancy violation trials (EV), participants viewed repeated presentations of a color version of the upcoming target stimulus interrupted by a highly similar, but novel, image. This expectancy violation stimulus appeared at a randomly chosen position between the fourth and eighth object presentations.

Participants performed 2 runs of this task. During each task run, participants completed 10 High-Reward CO, 10 Low-Reward CO, 10 High-Reward EV, and 10 Low-Reward EV trials. Trial order was pseudo-randomized across each run, with each run lasting 7 min and 56 s. Trial onsets, cue-scene intervals, and trial order were optimized using Opt-seq software (Dale 1999).
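The composition of one run can be sketched in a few lines. This is an illustration under stated assumptions: the function `build_run` and the dictionary keys are hypothetical, and a plain shuffle stands in for the Opt-seq optimization of trial order, which is not reproduced here.

```python
import random

# The 4 cells of the 2 x 2 design: reward level x trial type.
CONDITIONS = [("high", "EV"), ("high", "CO"), ("low", "EV"), ("low", "CO")]


def build_run(trials_per_condition=10, seed=None):
    """Return a shuffled list of trial dicts for one task run."""
    rng = random.Random(seed)
    trials = []
    for reward, trial_type in CONDITIONS:
        for _ in range(trials_per_condition):
            trials.append({
                "reward": reward,
                "type": trial_type,
                # 10 to 11 serial presentations precede the grayscale target.
                "n_presentations": rng.choice([10, 11]),
                # EV trials: the novel object appears between the fourth
                # and eighth presentation (1-indexed, inclusive).
                "ev_position": rng.randint(4, 8) if trial_type == "EV" else None,
            })
    rng.shuffle(trials)  # stand-in for the Opt-seq trial ordering
    return trials


run = build_run(seed=0)
```

Each call yields 40 trials, 10 per condition, matching the run structure described above.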

Immediately prior to scanning, participants were shown a visual schematic of the task and given verbal instructions. Participants were instructed about the incentives for detecting the grayscale object. They were also instructed that on some trials a different object would interrupt the stream of repeating objects, and that these interruptions were irrelevant to earning money. Participants were informed that they would receive all money earned during the performance of this task. Participants performed an unpaid, practice version of the task that consisted of 10 High-Reward CO and 10 Low-Reward CO to familiarize themselves with the paradigm and to calibrate reaction-time thresholding.

Following scanning (∼30 min after the encoding session), participants performed a 2-alternative forced-choice recognition memory task for objects that constituted expectancy violations. During this test, participants saw pairs of object images, one of which was an object that constituted an expectancy violation and the other a similar, yet novel, object that participants had never seen. For each object pair, participants had to identify which object they saw during the encoding session by pressing either the “1” or “2” button to indicate the object on the left or right, respectively. Following each memory decision, participants had to indicate their confidence in their response (i.e., 1 = very sure, 2 = pretty sure, 3 = just guessing). Participants received 40 recognition memory trials (20 high reward and 20 low reward).

MRI Data Acquisition and Preprocessing

fMRI data were acquired on a 3.0-T GE Signa MRI scanner using a standard echo-planar imaging (EPI) sequence (TE = 27 ms, flip angle = 77 degrees, TR = 2 s, 34 contiguous slices, voxel size = 3.75 mm × 3.75 mm × 3.80 mm) with coverage across the whole brain. Each of the 2 functional runs consisted of 238 volumes. Prior to the functional runs, we collected a whole-brain, inversion recovery, spoiled gradient high-resolution anatomical image (voxel size = 1 mm, isotropic) for use in spatial normalization.

fMRI preprocessing was performed using the FMRI Expert Analysis Tool (FEAT) Version 5.92 as implemented in FSL 4.1.5 (www.fmrib.ox.ac.uk/fsl). The first 6 scans were discarded to allow for signal saturation. BOLD images were then skull stripped using the Brain Extraction Tool (Smith 2002). Images were then realigned within-run, intensity normalized by a single multiplicative factor, spatially smoothed with a 4.0-mm full width half maximum (FWHM) kernel, and subjected to a high-pass filter (100 s). Spatial normalization was performed using a 2-step procedure with FMRIB's Linear Image Registration Tool (Jenkinson et al. 2002). First, mean EPIs from each run were co-registered to the high-resolution anatomical image. Then, the high-resolution anatomical image was normalized to the high-resolution standard space image in Montreal Neurological Institute (MNI) space using a nonlinear transformation with a 10-mm warp resolution, as implemented by FMRIB's Nonlinear Image Registration Tool. All coordinates are reported in MNI space.
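As a quick consistency check on the acquisition numbers, the arithmetic below relates the reported TR and volume count to the reported run length, and gives the number of volumes left after the initial discard. The variable names are illustrative only.

```python
TR_S = 2               # repetition time in seconds (from the text)
VOLUMES_PER_RUN = 238  # volumes acquired per functional run (from the text)
DISCARDED = 6          # initial scans removed during preprocessing (from the text)

# Run length implied by the acquisition parameters.
run_seconds = TR_S * VOLUMES_PER_RUN
minutes, seconds = divmod(run_seconds, 60)

# Volumes that remain in each run for the GLM analyses.
retained = VOLUMES_PER_RUN - DISCARDED

print(f"run length: {minutes} min {seconds} s; retained volumes: {retained}")
```

The result, 7 min 56 s, agrees with the run duration given in the task description.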

Behavioral Analysis

Reaction times and hit rates to target images were submitted to separate repeated measures ANOVAs with motivation (high vs. low trials) and expectancy violation (EV vs. CO) as within-subjects factors. Of note, the adaptive nature of our reaction-time algorithm was explicitly programmed to keep reinforcement rates equivalent across conditions. For both of these ANOVAs, we tested for main effects of motivation and expectancy violation as well as their interaction at a significance level of P < 0.05. Recognition memory for objects that constituted expectancy violations was tested by submitting the number of hits to Student’s t-test with a significance level of P < 0.05 with motivation (high vs. low) as a within-subject factor. All memory responses were included in this analysis, irrespective of participants' reported confidence.
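For the recognition memory comparison, a within-subject contrast of high versus low motivation amounts to a paired-samples t-test on per-participant hit counts. The sketch below computes only the t statistic and degrees of freedom with the standard library; the function name `paired_t` is hypothetical, the example values are toy numbers rather than study data, and obtaining a P value would additionally require a t distribution (for example from a statistics package).

```python
import math
from statistics import mean, stdev


def paired_t(high, low):
    """Return (t, df) for a paired-samples t-test on two equal-length lists."""
    if len(high) != len(low) or len(high) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Work on within-participant differences (high minus low).
    diffs = [h - l for h, l in zip(high, low)]
    standard_error = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / standard_error, len(diffs) - 1


# Toy hit counts (out of 20) for 4 hypothetical participants; not study data.
toy_high = [13, 12, 14, 11]
toy_low = [10, 10, 11, 9]
t_value, df = paired_t(toy_high, toy_low)
```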

fMRI Data Analysis

fMRI data were analyzed using FEAT Version 5.92 as implemented in FSL 4.1.5. Time-series statistical analyses used FILM with local autocorrelation correction (Woolrich et al. 2001).

General Linear Model: Task-Related Activations

To investigate task-related activations, first-level (i.e., within-run) general linear models (GLMs) included 8 regressors that modeled high-reward cues, low-reward cues, high-reward target images, low-reward target images, high-reward EV events, high-reward CO events, low-reward EV events, and low-reward CO events. The latency to CO events was determined by randomly sampling from the latency of EV events without replacement. All trial events were modeled with an event duration of 0 s and a standard amplitude of 1. These events were then convolved with a double-gamma hemodynamic response function. EV and CO events were orthogonalized with respect to cue and target events. Using this GLM, individual maps of parameter estimates were generated for 4 contrasts of interest: high-reward cue > low-reward cue, [high-reward EV + low-reward EV] > [high-reward CO + low-reward CO], high-reward EV > high-reward CO, and low-reward EV > low-reward CO. Second-level analyses for each of these contrasts (i.e., across runs, but within-subject) were modeled using a fixed effects analysis.
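The construction of a single event regressor can be illustrated as follows. This is a sketch rather than the FEAT implementation: the double-gamma shape parameters (6 and 16, with an undershoot ratio of 1/6) are commonly used values assumed here, not parameters reported in the text, and the function names and the 0.1-s sampling grid are hypothetical.

```python
import math
import random


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=1 / 6):
    """Double-gamma HRF; the default parameters are assumed, not from the text."""
    return gamma_pdf(t, peak_shape) - ratio * gamma_pdf(t, undershoot_shape)


def sample_co_latencies(ev_latencies, n_co, rng):
    """Draw CO event latencies from the EV latencies without replacement."""
    return rng.sample(ev_latencies, n_co)


def build_regressor(onsets_s, n_volumes, tr_s=2.0, amplitude=1.0, dt=0.1):
    """Convolve 0-s events with the HRF and sample once per volume."""
    n_fine = int(round(n_volumes * tr_s / dt))
    sticks = [0.0] * n_fine
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += amplitude  # impulse of fixed amplitude at event onset
    kernel = [double_gamma_hrf(i * dt) for i in range(int(round(32.0 / dt)))]
    step = int(round(tr_s / dt))
    regressor = []
    for volume in range(n_volumes):
        i = volume * step
        total = 0.0
        for k, h in enumerate(kernel):
            if i - k < 0:
                break
            total += sticks[i - k] * h
        regressor.append(total)
    return regressor


# One event at 10 s, sampled over 20 volumes at TR = 2 s.
example = build_regressor([10.0], n_volumes=20)
```

With these assumed parameters the modeled response rises a few seconds after the event, peaks roughly 5 to 6 s later, and then dips below baseline.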

General Linear Model: Cue-Expectancy Violation Interactions

To investigate interactions between cue-related VTA activation and EV signaling, first-level parametric GLMs were constructed which investigated the parametric modulation of EV and CO events by cue-evoked VTA activation. First, a single-trial, beta-series analysis was performed to extract single-trial parameter estimates for cue-evoked VTA activation (for a similar approach, see Rissman et al. (2004)). GLMs were constructed for each participant that separately modeled cue-evoked activations for each individual trial (40 high-cue and 40 low-cue regressors). EV/CO events and target images were modeled as described above. Then, a weighted average of cue-evoked β-values was extracted for each individual trial from a probabilistic VTA region-of-interest (ROI). This probabilistic ROI was generated by 1) independently hand-drawing the structure on 50 high-resolution anatomical images from an independent sample of 50 participants, 2) normalizing those images to standard space, and 3) averaging across those images (Shermohammed et al. 2012). Details for the anatomical landmarks used to define the VTA in individual participants can be found in Ballard et al. (2011).
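The weighted average used to summarize each trial can be written compactly. In this sketch each voxel's cue-evoked β-value is weighted by that voxel's value in the probabilistic ROI; the function name `weighted_roi_mean` and the flat-list representation of voxels are assumptions made for illustration.

```python
def weighted_roi_mean(betas, probabilities):
    """Probability-weighted mean of voxel betas within an ROI."""
    if len(betas) != len(probabilities):
        raise ValueError("betas and probabilities must have the same length")
    total_weight = sum(probabilities)
    if total_weight <= 0:
        raise ValueError("ROI weights must sum to a positive value")
    # Voxels with higher ROI probability contribute more to the estimate.
    weighted_sum = sum(b * p for b, p in zip(betas, probabilities))
    return weighted_sum / total_weight


# Two hypothetical voxels: the second is more likely to lie inside the VTA.
single_trial_estimate = weighted_roi_mean([1.0, 3.0], [0.25, 0.75])
```

Applying this once per trial yields one cue-evoked VTA estimate per trial, which is the quantity carried forward into the parametric models.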

We chose to focus on the VTA over the SN in this paradigm because although there may be more dopaminergic neurons in the SN, the VTA has been demonstrated to be more critical in guiding reward-motivated behavior. In rodents, dopaminergic activity in the VTA has been demonstrated to track reward-motivated behaviors and learning (Aragona et al. 2008; Stuber et al. 2008), and selective stimulation of the VTA has been demonstrated to elicit reward-motivated behavior (Tsai et al. 2009; Adamantidis et al. 2011). In humans, high-resolution functional imaging has localized reward-motivated learning to the VTA and not the SN (D'Ardenne et al. 2008). Selective lesions to the human SN, as a result of Parkinson's disease, leave many reward-motivated behaviors intact (Dagher and Robbins 2009). Together these findings suggest that the VTA may be the more relevant dopaminergic nucleus for reward-motivated behaviors. Additionally, work from rodents, primates, and humans suggests that both anatomic and functional connectivity between the VTA and hippocampus contributes to declarative memory processes (Haber 2003; Adcock et al. 2006; Shohamy and Wagner 2008; Shohamy and Adcock 2010; Wang and Morris 2010; McGinty et al. 2011; Kahn and Shohamy 2012; Wolosin et al. 2012).

Separate parametric GLMs were constructed which included 8 standard task-related regressors (described above) and 2 additional parametric regressors modeling VTA modulations of EV and CO events. For the parametric regressors, each EV/CO event amplitude was weighted by the preceding cue-evoked VTA activation from the same trial. Thus, these parametric regressors reflected how cue-related VTA activations affected subsequent processing of EV/CO events on a trial-by-trial basis. Using this GLM, individual maps of parameter estimates were generated for 2 contrasts of interest: VTA-modulation of EV events > baseline, VTA-modulation of CO events > baseline. Second-level analyses for each of these contrasts (i.e., across runs, but within-subject) were modeled using a fixed effects analysis.
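The trial-by-trial weighting can be sketched as a list of (onset, duration, weight) rows, one per EV or CO event. Mean-centering the weights is a common practice for parametric modulators and is an assumption here; the text does not state whether it was applied. The function name `parametric_events` is hypothetical.

```python
def parametric_events(onsets_s, vta_betas, demean=True):
    """Return (onset, duration, weight) rows for a parametric regressor."""
    if len(onsets_s) != len(vta_betas):
        raise ValueError("need one VTA beta per event")
    if not onsets_s:
        return []
    # Assumed step: center the weights so the modulator is separable
    # from the unmodulated EV/CO regressor.
    offset = sum(vta_betas) / len(vta_betas) if demean else 0.0
    # Duration 0 s matches the event duration used in the task GLM.
    return [(onset, 0.0, beta - offset)
            for onset, beta in zip(onsets_s, vta_betas)]


# Three hypothetical EV events paired with the cue-evoked VTA beta of
# the same trial.
rows = parametric_events([12.0, 40.0, 75.0], [0.5, 1.5, 1.0])
```

Events that follow a stronger cue-evoked VTA response receive larger weights, so the fitted parameter reflects how EV or CO responses scale with preceding VTA activation.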

Psychophysiological Interaction (PPI): Cue-Related Functional Connectivity

To investigate cue-evoked VTA connectivity, we identified PPI of cues upon functional connectivity with the VTA. For this analysis, first-level GLMs were constructed which included 8 standard task-related regressors (described above), 1 physiological regressor, and 2 PPI regressors. The physiological regressor was the weighted mean time-series extracted from an in-house probabilistic VTA ROI (described above). The PPI regressors multiplied this VTA physiological regressor separately with 1) the task-related regressor for high-reward cues and 2) the task-related regressor for low-reward cues. Thus, these PPI regressors modeled VTA coupling in the brain as a function of response to reward cues. Using this GLM, individual parameter estimate maps were generated for the contrast of interest: [high-reward VTA PPI + low-reward VTA PPI] > baseline. Second-level analyses for this contrast (i.e., across runs, but within-subject) were modeled using a fixed effects analysis.
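At its core, each PPI regressor is an elementwise product of the seed time series and a task regressor. The sketch below assumes the VTA time series is mean-centered before multiplication, which is a common convention but is not stated in the text; the function name `ppi_regressor` and the toy values are hypothetical.

```python
def ppi_regressor(vta_timeseries, task_regressor):
    """Elementwise product of a demeaned seed time series and a task regressor."""
    if len(vta_timeseries) != len(task_regressor):
        raise ValueError("time series and task regressor must match in length")
    if not vta_timeseries:
        return []
    # Assumed step: center the physiological signal before forming the product.
    seed_mean = sum(vta_timeseries) / len(vta_timeseries)
    return [(v - seed_mean) * t
            for v, t in zip(vta_timeseries, task_regressor)]


# Toy example: 4 volumes, with the cue regressor active on volumes 2 and 3.
ppi = ppi_regressor([1.0, 2.0, 3.0, 2.0], [0.0, 1.0, 1.0, 0.0])
```

The product is nonzero only where the cue regressor is nonzero, so the fitted PPI parameter captures VTA coupling specifically during cue periods, over and above the task and physiological regressors included in the same model.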

Group-Level Analysis

Third-level analyses (i.e., across participants) were modeled using FSL's mixed-effects analyses (FLAME 1), which accounts for within-session/subject variance calculated at the first and second levels, on the parameter estimates for contrasts of interest derived from the second-level analysis. Statistical tests for fMRI analyses were set to an overall α = 0.05 family-wise error rate as calculated within the AlphaSim tool in AFNI (http://afni.nimh.nih.gov/afni/doc/manual/AlphaSim), which uses actual data structure to determine the number of independent statistical tests and thus balance Type 1 and 2 errors. With 1000 Monte Carlo simulations, a voxelwise significance of P < 0.001, and a smoothing kernel of 4-mm FWHM, an overall α of 0.05 corresponded to a cluster extent minimum of 33 voxels for the whole brain and 15 voxels for the MTL ROI analyses (MTL ROIs were limited to bilateral hippocampus and parahippocampal gyrus volumes as defined by the WFU PICKATLAS [Maldjian et al. 2003]).

Results

Behavior: Speeded Reaction-Time Task Behavior

To manipulate their motivational states, participants viewed cues that indicated whether a speeded button press to a target image would result in a high ($2.00) or low ($0.10) monetary reward (Figure 1). Both reward motivation [high- vs. low-reward cues: F(1,25) = 12.43, P = 0.002] and the presence of expectancy violations [EV vs. CO: F(1,25) = 9.65, P = 0.005] decreased participants' reaction times to target images, without any significant interaction between these factors [F(1,25) = 1.27, P = 0.27] (Table 1). These findings suggest that reward cues were successful in manipulating participants' motivational state, and that expectancy violations were sufficiently salient to influence later behavior. Despite significant differences in reaction times, target hit rate/positive feedback did not differ across conditions because of the adaptive algorithm we implemented (Table 1) [reward motivation: F(1,25) = 2.95, P = 0.10; expectancy violation: F(1,25) = 0.38, P = 0.55; interaction: F(1,25) = 0.31, P = 0.58]. Participants on average earned $69.78 (SD = 19.06).

Table 1.

Behavioral performance on the rewarded speeded reaction-time task

| Condition | Reaction time (ms) ± SE | Accuracy (%) ± SE |
|---|---|---|
| High reward | | |
| Expectancy violation | 205.01 ± 4.63 | 73.3 ± 1.4 |
| Control | 219.02 ± 5.41 | 71.7 ± 1.5 |
| Low reward | | |
| Expectancy violation | 214.62 ± 4.68 | 70.2 ± 1.8 |
| Control | 223.78 ± 3.79 | 70.2 ± 1.6 |

fMRI: Main Effect of Reward Motivation (High-Reward Cue > Low-Reward Cue)

To identify brain regions modulated by reward motivation independent of the presence of expectancy violations, we compared brain activations in response to high- versus low-reward cues. Reward motivation (high > low) resulted in greater activation in a broad network of regions including key regions in the mesolimbic and mesocortical dopamine networks: Significant activations were observed in bilateral dorsolateral PFC, ventrolateral PFC, premotor cortex, motor cortex, medial frontal cortex, dorsal anterior cingulate cortex, superior parietal cortex, inferior parietal cortex, ventral visual stream, hippocampus, parahippocampal cortex, thalamus, dorsal striatum, ventral striatum, cerebellum, and the midbrain (encompassing the VTA/SN) as well as the right anterior temporal lobe (P < 0.05, whole-brain corrected; Supplementary Table 1). Activations significant at the whole-brain level included robust activation within our a priori ROI in the VTA (VTA: left: t(25) = 3.30, P = 0.003, right: t(25) = 4.66, P < 0.001).

fMRI: Main Effect of Expectancy Violation (Expectancy Violation > Control)

To identify brain regions modulated by the presence of expectancy violations independent of reward motivation, we compared brain activations in response to expectancy violation (EV) versus control (CO) events. The presence of expectancy violations resulted in greater activation throughout a fronto-parietal network as well as regions in the medial temporal lobe. Specifically, significant activations were seen in dorsolateral PFC, premotor cortex, superior parietal cortex, inferior parietal cortex, ventral visual stream, ventral striatum, and cerebellum, as well as right anterior PFC and hippocampus (P < 0.05, whole-brain corrected; Supplementary Table 1).

fMRI: Motivation's Influence on Expectancy Violations (High Reward [EV − CO] − Low Reward [EV − CO])

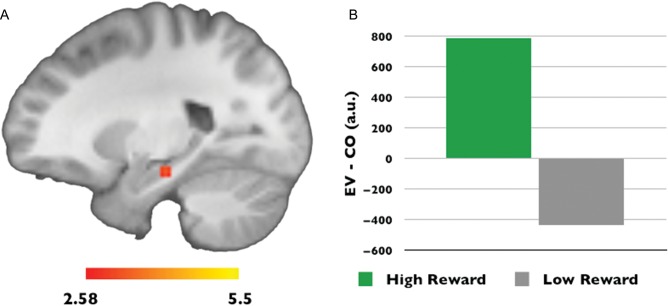

To characterize the influence of reward motivation on expectancy violation processing, we compared the processing of expectancy violation events (EV > CO) encountered in the context of high- versus low-reward motivation. This analysis yielded only one significant cluster, in the left hippocampus, indicating that this region was more sensitive to expectancy violations in the context of high- compared with low-reward motivation (x = −22, y = −22, z = −6; cluster size = 16 voxels; P < 0.05, corrected for comparisons within the entire bilateral MTL, 5278 voxels, 42 224 mm³) (Fig. 2).

Figure 2.

Reward motivation influences hippocampus expectancy violation processing. (A) A cluster in the left hippocampus showed significantly greater sensitivity to expectancy violations under states of high- versus low-reward motivation (P < 0.05, corrected for comparisons within the entire bilateral medial temporal lobe, 5278 voxels, 42 224 mm³). (B) Mean parameter estimates from the medial temporal lobe cluster are plotted to illustrate the direction of the interaction between reward motivation and expectancy violation processing.

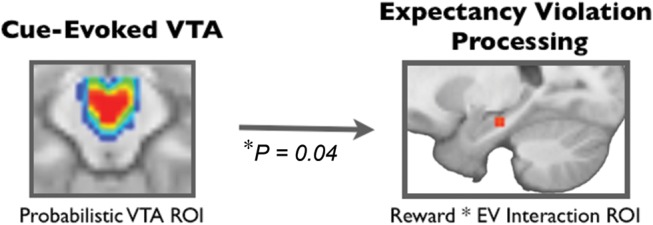

VTA Influence on Hippocampus Expectancy Violation Processing

To determine whether there was a relationship between cue-related VTA activation and hippocampal sensitivity to expectancy violations, we used trial-by-trial variability in cue-evoked VTA activation to predict EV activations in the hippocampus. We found that, on a trial-by-trial basis, VTA activation during the cue significantly predicted hippocampal activation during EV events relative to CO events (P = 0.04; hippocampal cluster identified from the motivation-by-expectancy-violation interaction) (Fig. 3).

Figure 3.

Cue-evoked ventral tegmental area activations predict hippocampus expectancy violation processing. On a trial-by-trial basis, VTA activations in response to reward cues predicted left hippocampus activations in response to expectancy violation events (EV > CO events); ROI, region of interest.

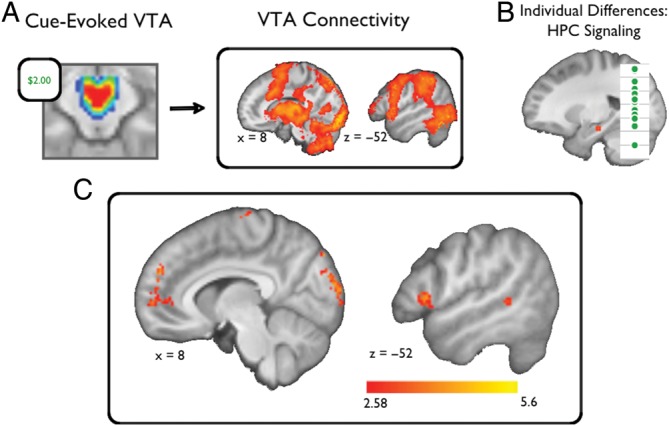

To elucidate potential mechanisms of the relationship between cue-related VTA activation and reward effects on hippocampal EV processing, we performed a two-step procedure. First, we characterized within-subject, cue-evoked functional coupling with the VTA across the entire brain (Supplementary Figure). Then, we identified the subset of regions whose cue-evoked functional coupling with the VTA predicted the mean motivational influence on hippocampal EV activations for the session (High Reward [EV − CO] − Low Reward [EV − CO]). Compared with the broad network of regions that showed cue-evoked functional coupling with the VTA, only an isolated set of regions predicted reward-motivated, hippocampus EV sensitivity (Table 2, Fig. 4). Hippocampus EV sensitivity was predicted by VTA coupling with bilateral visual cortex, medial PFC, medial frontal cortex, subgenual cingulate cortex, as well as right premotor cortex, left ventrolateral PFC, left ventromedial PFC, and right temporal gyrus.

Table 2.

Regions in which functional coupling with the VTA predicts reward-motivated, hippocampus expectancy violation signaling

| Region | Cluster size | Z-stat | x | y | z | Brodmann's area |
|---|---|---|---|---|---|---|
| Visual cortex | 249 | 4.42 | 8 | −86 | 42 | 19 |
| | 193 | 5.63 | 12 | −96 | 18 | 19 |
| Medial prefrontal cortex | 162 | 4.35 | 16 | 64 | 2 | 10 |
| | 101 | 4.15 | −12 | 52 | 28 | 9 |
| | 45 | 4.11 | 8 | 52 | 28 | 9 |
| | 63 | 4.17 | −26 | 34 | 28 | 9 |
| Premotor/motor cortex (BA 4/6) | 43 | 4.24 | 54 | −12 | 54 | 4/6 |
| Ventrolateral PFC (BA 45/44) | 57 | 4.18 | −50 | 20 | 2 | 45/44 |
| Middle temporal gyrus (BA 22) | 50 | 4.14 | −60 | −42 | 0 | 22 |
| Medial frontal cortex (BA 6) | 58 | 3.92 | 2 | −20 | 78 | 6 |
| Subgenual cingulate cortex (BA 24) | 84 | 3.75 | −2 | 6 | −12 | 24 |
| Ventral PFC (BA 47) | 159 | 3.51 | 22 | 16 | −16 | 47 |

Figure 4.

VTA-Cortical coupling predicts reward-motivated enhancements in expectancy violation processing. To address relationships between cue-evoked VTA activity and expectancy violation processing in the left hippocampus, we performed the following analysis. (A) First, we characterized functional coupling of the VTA across the whole brain. (B) Then, using linear regression, we identified regions whose functional coupling with the VTA predicted reward-motivated enhancements in hippocampus expectancy violation processing. (C) This analysis identified a network of cortical regions, including the medial PFC, ventrolateral PFC, and visual cortices (P < 0.05, whole-brain corrected; see also Table 2).

Behavior: Declarative Memory for Expectancy Violations

Following scanning, participants performed a recognition memory task for the goal-irrelevant objects that constituted expectancy violations. For objects seen in the low-motivation condition, memory was no better than chance. In contrast, for expectancy violation objects seen during high-reward motivation, memory was significantly greater than chance and significantly greater than for objects that followed low-reward cues (low: mean ± SE = 50.2 ± 1.9%; high: mean ± SE = 61.2 ± 1.6%; t(25) = 4.89, P < 0.001).

Discussion

The current study demonstrates that motivational drive to obtain reward modulates a distributed cortical network to amplify hippocampal signals and records of unexpected events. Neuroimaging results indicated that reward motivation increased hippocampal activations following expectancy violations. VTA activation following reward cues dynamically predicted this increased hippocampal sensitivity, and a network of cortical regions linked VTA activation following cues to hippocampal activation following expectancy violations. Specifically, we found that functional coupling of the VTA with medial prefrontal, ventrolateral prefrontal, and visual cortices predicted reward-motivated enhancements in hippocampal sensitivity to unexpected events. Finally, we found that unexpected events were remembered if they were encountered during high- but not low-reward motivation. Together these findings suggest that during pursuit of reward, the VTA engages a network of cortical intermediaries that facilitates hippocampus-dependent encoding of salient events.

Consistent with an adaptive bias for enriched memory encoding during reward motivation, we found that an individual's motivational state was a significant determinant of memory for goal-irrelevant, unexpected events: a surprise memory test revealed declarative memory for objects that violated expectancy only if they were seen during high-reward motivation. Previous studies have demonstrated that reward motivation enhances memory for events that are explicitly goal relevant, such as incentivized information (Adcock et al. 2006; Murayama and Kuhbandner 2011; Wolosin et al. 2012) and reward-predicting cues (Wittmann et al. 2005; Bialleck et al. 2011; Wittmann et al. 2011). Related research has also demonstrated that prior novel events enhance striatal responses to reward cues (Guitart-Masip et al. 2010; Bunzeck et al. 2011), consistent with striatal convergence of novelty and reward signals (Lisman and Grace 2005). Here, from the vantage point of a mechanistically distinct hypothesis, we offer a novel demonstration of a complementary relationship: an effect of reward anticipation on processing of salience by the hippocampus. Notably, we demonstrate that reward motivation can enhance memory for surprising information even when that information is explicitly not associated with reward: in the experiment, participants were instructed that the salient events were irrelevant to earning rewards. Nevertheless, here, as in real life, the true relationship of salient events to rewards is ambiguous. The enriched encoding of salient events seen in the current study during reward motivation, but not during unmotivated behavior, supports our broad hypothesis that encoding of the environment is over-inclusive selectively during reward motivation to permit the disambiguation of the causes of reward outcomes (Fu and Anderson 2008).
However, given that here no relationship existed between expectancy violation processing and future reward outcomes, this broader hypothesis could not be explicitly tested. Further studies will be needed to establish that memory enhancements for expectancy violations can contribute to disambiguating the causal determinants of rewards to solve the “credit assignment” problem.

fMRI analyses revealed that reward motivation increased responses to expectancy violations selectively in the hippocampus; indeed, the hippocampus was the only region to show this pattern. Lesion studies in rodents, nonhuman primates, and humans have demonstrated that the hippocampus supports declarative memory for expectancy violation events (Ranganath and Rainer 2003; Kishiyama et al. 2004; Axmacher et al. 2010). The striking selectivity of this result argues that the memory enhancements we observed were not due to general increases in arousal or attention during reward motivation. Interestingly, reward motivation has been demonstrated to enhance hippocampal activation prior to and during the encoding of incentivized information (Adcock et al. 2006; Wolosin et al. 2012) as well as during presentation of cues that predict reward (Wittmann et al. 2005; Bunzeck et al. 2011). The current finding that reward motivation enhances hippocampal responsivity not only to incentivized information but also to goal-irrelevant, unexpected events further implies an adaptive memory bias for enriched representation of contexts where reward is expected or pursued. In the current study, we were not able to demonstrate a direct relationship between motivation's influence on hippocampal sensitivity and declarative memory (i.e., a subsequent memory analysis). Specifically, this type of analysis could not be performed because 1) when trials were sorted by later memory performance, some conditions contained relatively few trials (i.e., <10), and 2) there was very low variability in memory performance across participants. Thus, it will be important for future studies to investigate the direct relationship between hippocampal expectancy violation processing and later declarative memory during reward motivation.

We also identified a candidate mechanism of this enhancement: VTA activation in response to reward cues dynamically predicted hippocampal activation in response to expectancy violations. A relationship between VTA activation and hippocampus-dependent encoding has previously been described (Adcock et al. 2006; Wolosin et al. 2012); however, these findings were consistent with at least 2 underlying mechanisms. First, the VTA could directly modulate the hippocampus, consistent with the prominent theory that dopamine release in the hippocampus stabilizes long-term potentiation during consolidation (reviewed by Shohamy and Adcock 2010; Lisman et al. 2011). Alternatively, because dopamine has widespread actions in the brain, the VTA could also indirectly modulate hippocampal neurophysiology via the coordinated engagement of distributed neural intermediaries. To delineate the contributions of these 2 mechanisms, we conducted an analysis that first identified neural regions that showed cue-evoked functional coupling with the VTA, and then determined whether this coupling predicted expectancy violation processing that occurred later in the trial. This analysis demonstrated that functional coupling of the VTA with medial prefrontal, ventrolateral prefrontal, and visual cortices (but not the hippocampus itself) predicted reward-motivated enhancements in hippocampal expectancy violation processing. Hence, our findings support the interpretation that the VTA engages a network of cortical intermediaries to influence hippocampal encoding during reward motivation. A similar mechanism of VTA facilitation of prefrontal-hippocampus connectivity has recently been demonstrated during a spatial working memory paradigm in rodents (Fujisawa and Buzsaki 2011).
By relating hippocampal physiological changes to VTA-prefrontal interactions, our findings offer a mechanistic integration of the literatures describing dopaminergic effects on prefrontal physiology (Williams and Goldman-Rakic 1995; Durstewitz et al. 2000; Goldman-Rakic et al. 2000; Seamans and Yang 2004) and on long-term memory encoding supported by the hippocampus (Shohamy and Adcock 2010; Wang and Morris 2010; Lisman et al. 2011).
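The two-step logic of this analysis (estimate cue-evoked VTA coupling for each participant, then ask whether coupling predicts the hippocampal effect across participants) can be sketched as follows. This is an illustrative outline under stated assumptions, not the pipeline used in the study: coupling is taken here to be a Fisher z-transformed Pearson correlation between trial-wise VTA and cortical response estimates, the second step is an ordinary least-squares regression across participants, and every name and number below is hypothetical.

```python
import math
from statistics import mean

def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fisher_z(r):
    """Fisher z-transform, so correlations can be compared across participants."""
    return math.atanh(r)

def ols_slope_intercept(x, y):
    """Simple linear regression of y on x; returns (slope, intercept)."""
    mx, my = mean(x), mean(y)
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    return slope, my - slope * mx

# Step 1: per-participant coupling between VTA and one cortical region.
# Hypothetical trial-wise cue responses: (VTA, cortical region) per participant.
participants = [
    ([0.1, 0.4, 0.3, 0.8], [0.2, 0.5, 0.2, 0.9]),
    ([0.2, 0.3, 0.5, 0.4], [0.6, 0.1, 0.4, 0.5]),
    ([0.5, 0.1, 0.7, 0.6], [0.4, 0.2, 0.9, 0.5]),
]
coupling = [fisher_z(pearson_r(vta, ctx)) for vta, ctx in participants]

# Step 2: does coupling predict the hippocampal expectancy violation effect?
# Hypothetical (high - low motivation) hippocampal response per participant.
hippocampal_enhancement = [0.30, 0.05, 0.25]
slope, intercept = ols_slope_intercept(coupling, hippocampal_enhancement)

print(f"coupling (z): {[round(z, 2) for z in coupling]}")
print(f"slope = {slope:.3f}, intercept = {intercept:.3f}")
```

In a whole-brain version, the second step would be repeated for every voxel or region and corrected for multiple comparisons; a positive slope in a region would mark it as a candidate intermediary in the sense described above.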

Only a subset of the regions that correlated with VTA activation during motivation also predicted hippocampal sensitivity. These candidate intermediaries between VTA signatures of motivational state and hippocampal responses to expectancy violation have each been implicated by prior literature in reward motivation and hippocampus-dependent memory encoding. The medial PFC is strongly implicated in reward valuation processes (Rangel and Hare 2010) and the generation of affective meaning (Roy et al. 2012). This region has also been associated with memory encoding for self-relevant memoranda (Leshikar and Duarte 2012), and coordinated activity between the medial PFC and hippocampus has been associated with better reward-related learning in rodents (Benchenane et al. 2010). Given these literatures, the medial PFC is a likely source of signals that behaviorally relevant information is expected, signals that would in turn promote encoding of that information. The ventrolateral PFC (VLPFC) is thought to be critical for selecting and prioritizing goal-relevant information for storage in long-term memory (Blumenfeld and Ranganath 2007). Thus, engagement of this region prior to memory encoding would be expected to enhance memory for events potentially relevant to future goal pursuit. Finally, visual cortex activation and visual stimulus processing are increased during states of reward motivation (Shuler and Bear 2006; Serences 2008; Seitz et al. 2009; Pessoa and Engelmann 2010; Baldassi and Simoncini 2011). During episodic memory encoding, visual cortex activations have been demonstrated to reliably predict successful memory encoding (Spaniol et al. 2009; Kim 2011), and priming of the visual cortices for increased detection of salient visual information would be expected to contribute to hippocampal sensitivity.
The current findings could also be conceptualized as reflecting transient shifts in attention that heighten sensitivity to expectancy violations; in our view, attentional mechanisms are not mutually exclusive with the memory mechanisms proposed above. Interestingly, the regions described in this network interaction analysis (i.e., medial PFC, lateral PFC, and visual cortex) have all been implicated in enhancements of goal-relevant attentional processes (Corbetta and Shulman 2002; Baluch and Itti 2011). In sum, the current neuroimaging findings suggest that a select network of cortical regions is engaged by the VTA and then contributes to enhanced sensitivity and better hippocampus-dependent encoding.

Our analysis did not provide evidence that direct modulation of the hippocampus by the VTA predicted expectancy violation encoding. Although there was significant functional coupling between the VTA and the hippocampus in response to reward cues, this relationship did not itself predict reward-motivated enhancements in hippocampal expectancy violation processing. This pattern stands in contrast to previous studies that have demonstrated functional connectivity between the VTA and the hippocampus, prior to encoding, that predicts reward-motivated declarative memory (Adcock et al. 2006) and individual differences in reward-motivated memory enhancements (Wolosin et al. 2012). Interestingly, both prior studies investigated the encoding of goal-relevant material (i.e., participants were explicitly rewarded for the successful encoding of memoranda), whereas we investigated the encoding of information not explicitly associated with reward but encountered during reward motivation. Together, this evidence suggests multiple possible routes whereby the VTA can influence hippocampal neurophysiology. We speculate that these different mechanisms arise because the targets of VTA neuromodulation are flexible and reflect an individual's specific behavioral goals. Thus, during states of reward motivation, there may be an integrated modulation of distributed regions involved in valuation, representation, and sensory processing; whereas when specific cognitive processes are incentivized (e.g., memory encoding), these actions may be adjusted so that specific target regions are also directly modulated (e.g., the hippocampus) (Adcock et al. 2006). Future studies will need to confirm this dissociation, and further investigate how these different mechanisms of VTA neuromodulation affect the content and structure of declarative memory.

In summary, our findings characterize a novel mechanism whereby reward motivation can enhance hippocampal sensitivity to—and declarative memory for—salient events. First, we found that motivation to obtain reward enhanced memory to include expectancy violations, even though they were goal irrelevant. This finding suggests a broader role for motivation in shaping memory encoding than previously appreciated, consistent with a bias for enriched encoding of contexts and potential determinants of reward experienced during reward motivation. Second, we identified a novel modulation of hippocampal neurophysiology, such that VTA-cortical interactions were predictive of hippocampal responses to expectancy violations; this finding suggests a modification of the prevailing view that dopamine shapes memory mainly by stabilizing lasting plasticity in the hippocampus after encoding (Lisman et al. 2011). This novel mechanism embodies a unified view of dopaminergic influence on memory formation, by illustrating how the previously described effects of dopamine in the PFC and hippocampus may integrate to enhance memory encoding. We propose that during reward motivation the VTA organizes distributed brain systems to create an over-inclusive, enriched mnemonic record—an adaptive memory bias—so that if and when reward outcomes occur, their causes can be discerned and consolidated in memory.

Supplementary Material

Supplementary material can be found at: http://www.cercor.oxfordjournals.org/

Notes

We thank I. Ballard and K. Macduffie for assistance with data collection and analysis. We also thank I. Ballard and S. Stanton for helpful discussions and comments on the manuscript. Conflict of Interest: None declared.

Funding

This work was supported by the National Institutes of Health (grant numbers DA027802, MH094743) and the Alfred P. Sloan Foundation.

References

- Adamantidis AR, Tsai HC, Boutrel B, Zhang F, Stuber GD, Budygin EA, de Lecea L. Optogenetic interrogation of dopaminergic modulation of the multiple phases of reward-seeking behavior. J Neurosci. 2011;31:10829–10835. doi: 10.1523/JNEUROSCI.2246-11.2011.

- Adcock RA, Thangavel A, Whitfield-Gabrieli S, Knutson B, Gabrieli JD. Reward-motivated learning: mesolimbic activation precedes memory formation. Neuron. 2006;50:507–517. doi: 10.1016/j.neuron.2006.03.036.

- Aragona BJ, Cleaveland NA, Stuber GD, Day JJ, Carelli RM, Wightman RM. Preferential enhancement of dopamine transmission within the nucleus accumbens shell by cocaine is attributable to a direct increase in phasic dopamine release events. J Neurosci. 2008;28:8821–8831. doi: 10.1523/JNEUROSCI.2225-08.2008.

- Axmacher N, Cohen MX, Fell J, Haupt S, Dumpelmann M, Elger CE, Schlaepfer TE, Lenartz D, Sturm V, Ranganath C. Intracranial EEG correlates of expectancy and memory formation in the human hippocampus and nucleus accumbens. Neuron. 2010;65:541–549. doi: 10.1016/j.neuron.2010.02.006.

- Baldassi S, Simoncini C. Reward sharpens orientation coding independently of attention. Front Neurosci. 2011;5:13. doi: 10.3389/fnins.2011.00013.

- Ballard IC, Murty VP, Carter RM, MacInnes JJ, Huettel SA, Adcock RA. Dorsolateral prefrontal cortex drives mesolimbic dopaminergic regions to initiate motivated behavior. J Neurosci. 2011;31:10340–10346. doi: 10.1523/JNEUROSCI.0895-11.2011.

- Baluch F, Itti L. Mechanisms of top-down attention. Trends Neurosci. 2011;34:210–224. doi: 10.1016/j.tins.2011.02.003.

- Benchenane K, Peyrache A, Khamassi M, Tierney PL, Gioanni Y, Battaglia FP, Wiener SI. Coherent theta oscillations and reorganization of spike timing in the hippocampal-prefrontal network upon learning. Neuron. 2010;66:921–936. doi: 10.1016/j.neuron.2010.05.013.

- Berridge KC, Robinson TE. What is the role of dopamine in reward: hedonic impact, reward learning, or incentive salience? Brain Res Brain Res Rev. 1998;28:309–369. doi: 10.1016/s0165-0173(98)00019-8.

- Bialleck KA, Schaal HP, Kranz TA, Fell J, Elger CE, Axmacher N. Ventromedial prefrontal cortex activation is associated with memory formation for predictable rewards. PLoS One. 2011;6:e16695. doi: 10.1371/journal.pone.0016695.

- Blumenfeld RS, Ranganath C. Prefrontal cortex and long-term memory encoding: an integrative review of findings from neuropsychology and neuroimaging. Neuroscientist. 2007;13:280–291. doi: 10.1177/1073858407299290.

- Bunzeck N, Doeller CF, Dolan RJ, Duzel E. Contextual interaction between novelty and reward processing within the mesolimbic system. Hum Brain Mapp. 2011;33:1309–1324. doi: 10.1002/hbm.21288.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:215–229. doi: 10.1038/nrn755.

- Dagher A, Robbins TW. Personality, addiction, dopamine: insights from Parkinson's disease. Neuron. 2009;61:502–510. doi: 10.1016/j.neuron.2009.01.031.

- Dale AM. Optimal experimental design for event-related fMRI. Hum Brain Mapp. 1999;8:109–114. doi: 10.1002/(SICI)1097-0193(1999)8:2/3<109::AID-HBM7>3.0.CO;2-W.

- D'Ardenne K, McClure SM, Nystrom LE, Cohen JD. BOLD responses reflecting dopaminergic signals in the human ventral tegmental area. Science. 2008;319:1264–1267. doi: 10.1126/science.1150605.

- Davachi L. Item, context and relational episodic encoding in humans. Curr Opin Neurobiol. 2006;16:693–700. doi: 10.1016/j.conb.2006.10.012.

- Devito LM, Eichenbaum H. Memory for the order of events in specific sequences: contributions of the hippocampus and medial prefrontal cortex. J Neurosci. 2011;31:3169–3175. doi: 10.1523/JNEUROSCI.4202-10.2011.

- Durstewitz D, Seamans JK, Sejnowski TJ. Neurocomputational models of working memory. Nat Neurosci. 2000;3(Suppl):1184–1191. doi: 10.1038/81460.

- Fu WT, Anderson JR. Solving the credit assignment problem: explicit and implicit learning of action sequences with probabilistic outcomes. Psychol Res. 2008;72:321–330. doi: 10.1007/s00426-007-0113-7.

- Fujisawa S, Buzsaki G. A 4 Hz oscillation adaptively synchronizes prefrontal, VTA, and hippocampal activities. Neuron. 2011;72:153–165. doi: 10.1016/j.neuron.2011.08.018.

- Goldman-Rakic PS, Muly EC 3rd, Williams GV. D(1) receptors in prefrontal cells and circuits. Brain Res Brain Res Rev. 2000;31:295–301. doi: 10.1016/s0165-0173(99)00045-4.

- Gruber MJ, Watrous AJ, Ekstrom AD, Ranganath C, Otten LJ. Expected reward modulates encoding-related theta activity before an event. Neuroimage. 2013;64:68–74. doi: 10.1016/j.neuroimage.2012.07.064.

- Guitart-Masip M, Bunzeck N, Stephan KE, Dolan RJ, Düzel E. Contextual novelty changes reward representations in the striatum. J Neurosci. 2010;30:1721–1726. doi: 10.1523/JNEUROSCI.5331-09.2010.

- Haber SN. The primate basal ganglia: parallel and integrative networks. J Chem Neuroanat. 2003;26:317–330. doi: 10.1016/j.jchemneu.2003.10.003.

- Hammad H, Wagner JJ. Dopamine-mediated disinhibition in the CA1 region of rat hippocampus via D3 receptor activation. J Pharmacol Exp Ther. 2006;316:113–120. doi: 10.1124/jpet.105.091579.

- Jeneson A, Squire LR. Working memory, long-term memory, and medial temporal lobe function. Learn Mem. 2012;19:15–25. doi: 10.1101/lm.024018.111.

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8.

- Kahn I, Shohamy D. Intrinsic connectivity between the hippocampus, nucleus accumbens, and ventral tegmental area in humans. Hippocampus. 2012. doi: 10.1002/hipo.22077.

- Kim H. Differential neural activity in the recognition of old versus new events: an activation likelihood estimation meta-analysis. Hum Brain Mapp. 2011. doi: 10.1002/hbm.21474. [Epub ahead of print]

- Kishiyama MM, Yonelinas AP, Lazzara MM. The von Restorff effect in amnesia: the contribution of the hippocampal system to novelty-related memory enhancements. J Cogn Neurosci. 2004;16:15–23. doi: 10.1162/089892904322755511.

- Knutson B, Westdorp A, Kaiser E, Hommer D. fMRI visualization of brain activity during a monetary incentive delay task. Neuroimage. 2000;12:20–27. doi: 10.1006/nimg.2000.0593.

- Leshikar ED, Duarte A. Medial prefrontal cortex supports source memory accuracy for self-referenced items. Soc Neurosci. 2012;7:126–145. doi: 10.1080/17470919.2011.585242.

- Lisman J, Grace AA. The hippocampal-VTA loop: controlling the entry of information into long-term memory. Neuron. 2005;46:703–713. doi: 10.1016/j.neuron.2005.05.002.

- Lisman J, Grace AA, Duzel E. A neoHebbian framework for episodic memory; role of dopamine-dependent late LTP. Trends Neurosci. 2011;34:536–547. doi: 10.1016/j.tins.2011.07.006.

- Lisman JE, Otmakhova NA. Storage, recall, and novelty detection of sequences by the hippocampus: elaborating on the SOCRATIC model to account for normal and aberrant effects of dopamine. Hippocampus. 2001;11:551–568. doi: 10.1002/hipo.1071.

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. Neuroimage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1.

- McGinty VB, Hayden BY, Heilbronner SR, Dumont EC, Graves SM, Mirrione MM, Haber S. Emerging, reemerging, and forgotten brain areas of the reward circuit: notes from the 2010 Motivational Neural Networks conference. Behav Brain Res. 2011;225:348–357. doi: 10.1016/j.bbr.2011.07.036.

- Mehta MA, Riedel WJ. Dopaminergic enhancement of cognitive function. Curr Pharm Des. 2006;12:2487–2500. doi: 10.2174/138161206777698891.

- Murayama K, Kuhbandner C. Money enhances memory consolidation – but only for boring material. Cognition. 2011;119:120–124. doi: 10.1016/j.cognition.2011.01.001.

- Nieoullon A, Coquerel A. Dopamine: a key regulator to adapt action, emotion, motivation and cognition. Curr Opin Neurol. 2003;16(Suppl 2):S3–S9.

- Pessoa L, Engelmann JB. Embedding reward signals into perception and cognition. Front Neurosci. 2010;4. doi: 10.3389/fnins.2010.00017.

- Ranganath C. A unified framework for the functional organization of the medial temporal lobes and the phenomenology of episodic memory. Hippocampus. 2010;20:1263–1290. doi: 10.1002/hipo.20852.

- Ranganath C, Rainer G. Neural mechanisms for detecting and remembering novel events. Nat Rev Neurosci. 2003;4:193–202. doi: 10.1038/nrn1052.

- Rangel A, Hare T. Neural computations associated with goal-directed choice. Curr Opin Neurobiol. 2010;20:262–270. doi: 10.1016/j.conb.2010.03.001.

- Rissman J, Gazzaley A, D'Esposito M. Measuring functional connectivity during distinct stages of a cognitive task. Neuroimage. 2004;23:752–763. doi: 10.1016/j.neuroimage.2004.06.035.

- Roy M, Shohamy D, Wager TD. Ventromedial prefrontal-subcortical systems and the generation of affective meaning. Trends Cogn Sci. 2012;16:147–156. doi: 10.1016/j.tics.2012.01.005.

- Seamans JK, Yang CR. The principal features and mechanisms of dopamine modulation in the prefrontal cortex. Prog Neurobiol. 2004;74:1–58. doi: 10.1016/j.pneurobio.2004.05.006.

- Seitz AR, Kim D, Watanabe T. Rewards evoke learning of unconsciously processed visual stimuli in adult humans. Neuron. 2009;61:700–707. doi: 10.1016/j.neuron.2009.01.016.

- Serences JT. Value-based modulations in human visual cortex. Neuron. 2008;60:1169–1181. doi: 10.1016/j.neuron.2008.10.051.

- Shermohammed M, Murty VP, Smith DV, Carter RM, Huettel S, Adcock RA. Resting-state analysis of the ventral tegmental area and substantia nigra reveals differential connectivity with the prefrontal cortex. Chicago (IL): Society for the Cognitive Neuroscience Society; 2012.

- Shohamy D, Adcock RA. Dopamine and adaptive memory. Trends Cogn Sci. 2010;14:464–472. doi: 10.1016/j.tics.2010.08.002.

- Shohamy D, Wagner AD. Integrating memories in the human brain: hippocampal-midbrain encoding of overlapping events. Neuron. 2008;60:378–389. doi: 10.1016/j.neuron.2008.09.023.

- Shuler MG, Bear MF. Reward timing in the primary visual cortex. Science. 2006;311:1606–1609. doi: 10.1126/science.1123513.

- Smith SM. Fast robust automated brain extraction. Hum Brain Mapp. 2002;17:143–155. doi: 10.1002/hbm.10062.

- Spaniol J, Davidson PS, Kim AS, Han H, Moscovitch M, Grady CL. Event-related fMRI studies of episodic encoding and retrieval: meta-analyses using activation likelihood estimation. Neuropsychologia. 2009;47:1765–1779. doi: 10.1016/j.neuropsychologia.2009.02.028.

- Stuber GD, Klanker M, de Ridder B, Bowers MS, Joosten RN, Feenstra MG, Bonci A. Reward-predictive cues enhance excitatory synaptic strength onto midbrain dopamine neurons. Science. 2008;321:1690. doi: 10.1126/science.1160873.

- Swant J, Stramiello M, Wagner JJ. Postsynaptic dopamine D3 receptor modulation of evoked IPSCs via GABA(A) receptor endocytosis in rat hippocampus. Hippocampus. 2008;18:492–502. doi: 10.1002/hipo.20408.

- Tsai HC, Zhang F, Adamantidis A, Stuber GD, Bonci A, de Lecea L, Deisseroth K. Phasic firing in dopaminergic neurons is sufficient for behavioral conditioning. Science. 2009;324:1080–1084. doi: 10.1126/science.1168878.

- Tubridy S, Davachi L. Medial temporal lobe contributions to episodic sequence encoding. Cereb Cortex. 2011;21:272–280. doi: 10.1093/cercor/bhq092.

- Wang SH, Morris RG. Hippocampal-neocortical interactions in memory formation, consolidation, and reconsolidation. Annu Rev Psychol. 2010;61:49–79, C1–4. doi: 10.1146/annurev.psych.093008.100523.

- Watanabe M. Role of anticipated reward in cognitive behavioral control. Curr Opin Neurobiol. 2007;17:213–219. doi: 10.1016/j.conb.2007.02.007.

- Williams GV, Goldman-Rakic PS. Modulation of memory fields by dopamine D1 receptors in prefrontal cortex. Nature. 1995;376:572–575. doi: 10.1038/376572a0.

- Wise RA. Dopamine, learning and motivation. Nat Rev Neurosci. 2004;5:483–494. doi: 10.1038/nrn1406.

- Wittmann BC, Dolan RJ, Duzel E. Behavioral specifications of reward-associated long-term memory enhancement in humans. Learn Mem. 2011;18:296–300. doi: 10.1101/lm.1996811.

- Wittmann BC, Schott BH, Guderian S, Frey JU, Heinze HJ, Duzel E. Reward-related fMRI activation of dopaminergic midbrain is associated with enhanced hippocampus-dependent long-term memory formation. Neuron. 2005;45:459–467. doi: 10.1016/j.neuron.2005.01.010.

- Wolosin SM, Zeithamova D, Preston AR. Reward modulation of hippocampal subfield activation during successful associative encoding and retrieval. J Cogn Neurosci. 2012;24:1532–1547. doi: 10.1162/jocn_a_00237.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of fMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.
