Abstract

Women typically remember more female than male faces, whereas men do not show a reliable own-gender bias. However, little is known about the neural correlates of this own-gender bias in face recognition memory. Using functional magnetic resonance imaging (fMRI), we investigated whether face gender modulated brain activity in fusiform and inferior occipital gyri during incidental encoding of faces. Fifteen women and 14 men underwent fMRI while passively viewing female and male faces, followed by a surprise face recognition task. Women recognized more female than male faces and showed higher activity to female than male faces in individually defined regions of fusiform and inferior occipital gyri. In contrast, men’s recognition memory and blood-oxygen-level-dependent response were not modulated by face gender. Importantly, higher activity in the left fusiform gyrus (FFG) to one gender was related to better memory performance for that gender. These findings suggest that the FFG is involved in the gender bias in memory for faces, which may be linked to differential experience with female and male faces.

Keywords: face recognition, own-gender bias, sex differences, fMRI, fusiform gyrus

INTRODUCTION

Behavioral findings have consistently shown that own-group faces are better recognized than other-group faces. A well-replicated instance of this phenomenon is the own-race bias, showing that memory is typically better for own-race than for other-race faces (e.g. Meissner and Brigham, 2001). Similarly, there is evidence of an own-gender bias in memory for faces, which has been replicated in several studies for women, but not for men (McKelvie, 1987; Wright and Sladden, 2003; Lovén et al., 2011). Hence, women’s advantage over men in memory for faces is particularly marked for female faces and is typically smaller for male faces (Rehnman and Herlitz, 2007).

Little is known about the neural processes underlying the own-gender bias in face recognition memory. To investigate its neural correlates, we used functional magnetic resonance imaging (fMRI) to assess women’s and men’s blood-oxygen-level-dependent (BOLD) response to female and male faces during incidental encoding of faces. Lesion and BOLD fMRI studies have shown that face perception relies on a bilateral neural system in the ventral occipito-temporal visual cortex (Kanwisher et al., 1997; Barton et al., 2002). Two important regions in this ‘face network’ (Haxby et al., 2000) for the visual analysis of faces are the inferior occipital gyrus (IOG), which is involved in the early visual analysis of facial features, and the fusiform gyrus (FFG), which seems to be engaged in processing facial features and their configurations (Haxby et al., 2000; Liu et al., 2010). Interestingly, increased expertise with non-face material is also related to a higher BOLD response in FFG (Gauthier et al., 2000; Bilalić et al., 2011).

To date, few fMRI studies have assessed the influence of face gender on BOLD response in core regions of the face network. Ino et al. (2010) used an exploratory whole-brain group analysis to assess men’s and women’s responses to female and male faces and found that women showed a higher BOLD response in right FFG to female than to male faces during encoding, whereas men’s BOLD response was increased for male faces. Evidence is more extensive for the own-race bias: several studies have shown that, for unfamiliar faces, BOLD response in FFG was greater for own-race than for other-race faces (Golby et al., 2001; Lieberman et al., 2005; Kim et al., 2006; Feng et al., 2011). Furthermore, Feng et al. (2011) observed that BOLD response in IOG was magnified for own-race compared with other-race faces, indicating that race of face influences the early visual analysis of faces. Finally, Golby et al. (2001) found that higher activity in left FFG for one race compared with the other was related to better memory performance for faces of that race (cf. Feng et al., 2011). This suggests that differences in FFG BOLD response to own- and other-race faces may, at least partly, explain differences in the magnitude of the own-race bias in face recognition memory.

Thus, it is currently unclear whether women have a higher BOLD response to female than to male faces in FFG and IOG. It also remains to be investigated whether a differential BOLD response in FFG to female and male faces contributes to the own-gender bias in face recognition memory. To address these questions, we used an event-related fMRI design and a region of interest (ROI)-based approach. The first aim of this study was to determine whether face gender modulated BOLD response to faces in FFG and IOG. We hypothesized that women would show a higher BOLD response in FFG and IOG to female than to male faces during incidental encoding. Because previous behavioral findings indicate that men do not show an own-gender bias (Lovén et al., 2011), no differences were expected for men. The second aim was to assess whether differences in left FFG BOLD response to female and male faces were related to differences in recognition memory for female and male faces, as studies on the own-race bias have found an association between differences in performance and in BOLD response to own- and other-race faces in the left FFG, but not in the right FFG or IOG (Golby et al., 2001; cf. Feng et al., 2011). We therefore expected a positive association between the female-minus-male differences in left FFG BOLD response and in memory. The third, more exploratory aim was to determine whether there were sex differences in BOLD response to female and male faces. Some findings indicate that men show a higher BOLD response than women to neutral and emotional faces in FFG, particularly to male faces (Ino et al., 2010; Mather et al., 2010), although other studies have not found evidence of sex-differential activation in FFG during encoding of neutral and emotional faces (Fischer et al., 2004a, 2004b, 2007).

METHODS

Participants

Participants were 31 right-handed adults (16 women) recruited through advertisements in local newspapers. No participant reported any previous or current neuropsychiatric disease. Two participants (one woman, one man) were excluded because of response pad problems during the face recognition test, leaving a final sample of 29 participants (15 women). Independent samples t-tests showed no sex differences in age (Mwomen = 24.20, s.d.women = 3.49; Mmen = 25.93, s.d.men = 3.38) or years of education (Mwomen = 14.27, s.d.women = 2.34; Mmen = 15.39, s.d.men = 1.86), ts > −1.43, Ps > 0.16. In accordance with the Declaration of Helsinki, written informed consent was obtained from all participants, and the regional ethical review board approved the study. Participants received 1000 SEK for participating. The study was part of a larger data collection, and participants completed a cognitive test battery in a separate session ∼1 week before the fMRI session.
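The independent-samples comparisons can be checked against the reported summary statistics. The sketch below is a minimal illustration that assumes Student's equal-variance t-test (the text does not state whether a Welch correction was applied); it computes t only, since a P-value would additionally require the t distribution from a statistics library.

```python
import math
from typing import Tuple


def pooled_t(m1: float, sd1: float, n1: int,
             m2: float, sd2: float, n2: int) -> Tuple[float, int]:
    """Student's independent-samples t from group means, SDs and sizes."""
    df = n1 + n2 - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom.
    sp2 = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    se = math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return (m1 - m2) / se, df


# Summary statistics from the Participants section (women n = 15, men n = 14).
t_age, df = pooled_t(24.20, 3.49, 15, 25.93, 3.38, 14)
t_edu, _ = pooled_t(14.27, 2.34, 15, 15.39, 1.86, 14)
print(f"age: t({df}) = {t_age:.2f}; education: t({df}) = {t_edu:.2f}")
# → age: t(27) = -1.35; education: t(27) = -1.42
```

Both values fall just above the reported bound (ts > −1.43). Applied to the vocabulary scores reported in the Results (22.60 vs 22.50), the same function gives t(27) ≈ 0.07.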

Materials and procedure

Color photographs of 24 younger women, 24 younger men, 24 older women and 24 older men with neutral facial expressions were selected from the FACES database (Ebner et al., 2010). Two face sets were created, each including 12 faces of each face category (younger female, younger male, older female and older male). For half of the participants, the first set served as targets and the second as lures; for the other half, this assignment was reversed. Given evidence of an own-age bias in face recognition memory (Rhodes and Anastasi, 2012), the behavioral and fMRI analyses reported here included only the younger female and male faces.

During the incidental encoding session, faces were presented pseudo-randomly intermixed with one-third low-level baseline trials. In these baseline trials, three Xs were presented centrally on the screen. Faces and baseline trials were each presented for 3500 ms, followed by a variable jitter (3000, 3250, 3500, 3750 or 4000 ms) during which a fixation cross was presented to mark the beginning of each new trial. Participants were instructed to view faces and baseline trials as if they were at home watching TV. The encoding session lasted ∼9 min. Next, a T1-weighted image was acquired, followed by a short practice run for the recognition task. Thus, after a retention interval of ∼10 min, participants completed the face recognition test in the scanner, indicating via button press whether or not (yes or no) they had seen each face before.
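The trial structure and counterbalancing described above can be sketched as a schedule generator. This is a hypothetical illustration, not the study's presentation script: it reads "one-third low-level baseline trials" as baseline trials making up one-third of all trials, uses 12 studied faces per category, approximates the unreported pseudo-randomization with a plain shuffle, and assigns face sets by participant-ID parity (the labels "A" and "B" are placeholders).

```python
import random
from typing import List, Optional, Tuple

STIM_MS = 3500                                 # face or baseline ("XXX") duration
JITTERS_MS = [3000, 3250, 3500, 3750, 4000]    # fixation-cross durations
CATEGORIES = ["younger_female", "younger_male", "older_female", "older_male"]
FACES_PER_CATEGORY = 12                        # one face set: 12 faces per category


def build_encoding_run(participant_id: int, seed: Optional[int] = None
                       ) -> Tuple[List[Tuple[int, str, Optional[str]]], int]:
    """Return ([(onset_ms, trial_type, face_set), ...], run_length_ms).

    Hypothetical sketch: baseline trials are one-third of all trials and
    'pseudo-random' is approximated by a plain shuffle.
    """
    rng = random.Random(seed)
    # Counterbalancing: even IDs study set "A" (set "B" = lures), odd IDs the reverse.
    target_set = "A" if participant_id % 2 == 0 else "B"
    trials = [(cat, target_set) for cat in CATEGORIES
              for _ in range(FACES_PER_CATEGORY)]
    # If baselines are 1/3 of all trials, there are half as many baselines as faces.
    trials += [("baseline", None)] * (len(trials) // 2)
    rng.shuffle(trials)

    schedule, t = [], 0
    for trial_type, face_set in trials:
        schedule.append((t, trial_type, face_set))
        t += STIM_MS + rng.choice(JITTERS_MS)  # stimulus, then jittered fixation
    return schedule, t


schedule, run_ms = build_encoding_run(participant_id=7, seed=1)
print(len(schedule), "trials,", round(run_ms / 60000, 1), "min")
```

Under these assumptions the 72 trials take roughly 8 to 9 min, which fits the ∼9 min encoding session and the 206 volumes × 2.5 s = 515 s acquired during encoding.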

To verify that the groups of men and women performed at a similar cognitive level, their performance on a semantic memory task was assessed ∼1 week before the fMRI session. Men and women typically perform at a similar level on this vocabulary task (e.g. Herlitz et al., 1997), in which participants choose the correct synonym for a target word among five alternatives (Dureman, 1960). The task comprised 30 items, with a time limit of 5 min.

fMRI data acquisition

fMRI was performed on a 3T Siemens Magnetom Trio Tim scanner. Following localizer scans and four dummy volumes, one run of 206 functional images was acquired during face encoding with a T2*-weighted echo planar imaging sequence (TR = 2500 ms, TE = 40 ms, flip angle = 90°, FOV = 230 mm, voxel size = 3 × 3 × 3 mm). Thirty-nine oblique axial slices were positioned parallel to the AC–PC line and acquired in interleaved ascending order. In addition, a T1-weighted image with 1 × 1 × 1 mm resolution was acquired (MP-RAGE; TR = 1900 ms, TE = 2.52 ms, FOV = 256 mm).
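Slice-timing correction needs the slice acquisition order and the TR. The sketch below derives when each of the 39 interleaved ascending slices is acquired within a 2500 ms TR. It assumes that, with an odd slice count, acquisition starts at slice 1 (our understanding of the Siemens convention, which should be verified against the protocol) and that slices are spread evenly over the TR.

```python
from typing import Dict, List

TR_MS = 2500
N_SLICES = 39
N_VOLUMES = 206


def interleaved_ascending(n_slices: int) -> List[int]:
    """1-based acquisition order for an interleaved ascending acquisition.

    Assumption: with an odd number of slices the sequence starts at slice 1
    (1, 3, 5, ..., then 2, 4, 6, ...); verify against the scanner protocol.
    """
    return list(range(1, n_slices + 1, 2)) + list(range(2, n_slices + 1, 2))


def slice_onsets_ms(order: List[int], tr_ms: float) -> Dict[int, float]:
    """Onset of each slice within a TR, assuming slices are spread evenly over the TR."""
    step = tr_ms / len(order)
    return {slice_no: position * step for position, slice_no in enumerate(order)}


order = interleaved_ascending(N_SLICES)
onsets = slice_onsets_ms(order, TR_MS)
print(order[:4], "...", order[-3:])        # [1, 3, 5, 7] ... [34, 36, 38]
print(f"slice 2 is acquired {onsets[2]:.1f} ms into each TR")
print(f"run length: {N_VOLUMES * TR_MS / 1000:.0f} s")
```

Adjacent slices are thus acquired about half a TR apart, which is why interleaved acquisitions benefit from slice-timing correction before event-related modeling.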

Behavioral data analyses

As a measure of recognition memory, participants’ hit and false alarm rates for female and male faces, respectively, were converted into d′ (Snodgrass and Corwin, 1988). Paired samples t-tests were computed to confirm that women showed an own-gender bias and men did not, and independent samples t-tests were computed to assess potential sex differences in face recognition memory for female and male faces. The standardized average difference between two independent groups was estimated as d = (M1−M2)/s.d.Pooled. The standardized difference between two dependent measures was estimated as d = (M1−M2)/s.d.Within, where s.d.Within = s.d.Difference/√(2 × [1−r]). S.d.Difference is the standard deviation of the difference scores and r is the correlation between the pairs of observations (Borenstein, 2009).
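The recognition and effect-size measures above can be written out as small functions. This is a minimal sketch: the text does not say which correction was applied when converting rates to d′, so the log-linear correction (+0.5 to each count, +1 to each total) often attributed to Snodgrass and Corwin (1988) is assumed here, and the example counts are hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev
from typing import Sequence

_z = NormalDist().inv_cdf  # inverse of the standard normal CDF


def d_prime(hits: int, n_old: int, false_alarms: int, n_new: int) -> float:
    """d' = z(H) - z(FA) with a log-linear correction (+0.5 per count, +1 per total).

    Assumption: this correction (which also keeps rates of 0 or 1 finite)
    is the one intended by the Snodgrass and Corwin (1988) citation.
    """
    hit_rate = (hits + 0.5) / (n_old + 1)
    fa_rate = (false_alarms + 0.5) / (n_new + 1)
    return _z(hit_rate) - _z(fa_rate)


def d_between(m1: float, m2: float, sd1: float, sd2: float, n1: int, n2: int) -> float:
    """Independent groups: d = (M1 - M2) / SD_pooled."""
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    return (m1 - m2) / sd_pooled


def d_from_independent_t(t: float, n1: int, n2: int) -> float:
    """Same d recovered from a pooled-variance t statistic: d = t * sqrt(1/n1 + 1/n2)."""
    return t * math.sqrt(1 / n1 + 1 / n2)


def _pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def d_within(x: Sequence[float], y: Sequence[float]) -> float:
    """Paired measures: d = (M1 - M2) / SD_within, SD_within = SD_diff / sqrt(2(1 - r))."""
    sd_diff = stdev([a - b for a, b in zip(x, y)])
    r = _pearson(x, y)
    return (mean(x) - mean(y)) / (sd_diff / math.sqrt(2 * (1 - r)))


# Hypothetical participant: 12 studied and 12 new younger female faces.
print(round(d_prime(hits=10, n_old=12, false_alarms=3, n_new=12), 2))
# Reported sex difference for female faces, t(27) = 1.56 with n = 15 and 14:
print(round(d_from_independent_t(1.56, 15, 14), 2))  # → 0.58
```

The last helper reproduces the reported d = 0.58 from t(27) = 1.56; the within-subject d values (e.g. d = 0.65) cannot be checked this way without the raw scores, because they also depend on r.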

fMRI analyses

The 206 volumes acquired during incidental encoding of faces were analyzed with SPM8 (http://www.fil.ion.ucl.ac.uk/spm). The EPI volumes were corrected for slice acquisition time and realigned to the first volume. Next, images were normalized to MNI space using the SPM8 EPI template and resampled to 2 mm voxels. Finally, the images were smoothed with an 8 mm FWHM Gaussian kernel.
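The smoothing kernel is specified by its full width at half maximum (FWHM). A short worked conversion, based on a standard Gaussian identity rather than anything SPM-specific, gives the equivalent standard deviation in millimeters and in the resampled 2 mm voxels.

```python
import math

FWHM_MM = 8.0   # smoothing kernel width reported above
VOXEL_MM = 2.0  # voxel size after resampling to MNI space

# Gaussian identity: FWHM = 2 * sqrt(2 * ln 2) * sigma ≈ 2.3548 * sigma
sigma_mm = FWHM_MM / (2 * math.sqrt(2 * math.log(2)))
sigma_vox = sigma_mm / VOXEL_MM
# At a distance of FWHM / 2 from the center, the kernel falls to half its peak.
half = math.exp(-(FWHM_MM / 2) ** 2 / (2 * sigma_mm ** 2))
print(f"sigma = {sigma_mm:.2f} mm = {sigma_vox:.2f} voxels; value at FWHM/2 = {half:.2f}")
# → sigma = 3.40 mm = 1.70 voxels; value at FWHM/2 = 0.50
```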

Participants’ responses to faces and baseline trials were modeled within the general linear model framework, with a fixed-effects model specified for each participant. To form regressors, trial onsets (modeled as delta functions) for each face category (younger female, younger male, older female and older male) and for the low-level baseline trials (three Xs) were convolved with the canonical hemodynamic response function provided by SPM8. The three regressors of interest were younger female faces, younger male faces and the low-level baseline trials. The jittered fixation periods were not explicitly modeled. To account for motion-related variance, each participant’s six movement parameters from the spatial realignment procedure were included as covariates of no interest. Finally, a high-pass filter with a 128 s cutoff was applied, and an autoregressive model (AR[1]) was used for parameter estimation.
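The first-level model can be illustrated with a small NumPy sketch: delta functions at trial onsets are convolved with a double-gamma hemodynamic response function, sampled once per scan, and combined with six motion covariates and a constant. This is not SPM8's code. The gamma shapes (6 and 16, ratio 1/6) mirror SPM's default canonical HRF as we understand it, the kernel is scaled to unit peak for readability (scaling conventions differ across packages), the onsets and motion values are synthetic placeholders, the older-face conditions are omitted for brevity, and the 128 s high-pass filter and AR(1) estimation are left out in favor of ordinary least squares.

```python
import math
from typing import Dict

import numpy as np

TR = 2.5          # s
N_SCANS = 206
DT = TR / 16      # microtime resolution: 16 bins per scan


def canonical_hrf(dt: float, length_s: float = 32.0) -> np.ndarray:
    """Double-gamma HRF: gamma(shape 6) minus gamma(shape 16) / 6, unit time scale."""
    t = np.arange(0.0, length_s, dt)

    def gamma_pdf(x: np.ndarray, shape: float) -> np.ndarray:
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6) - gamma_pdf(t, 16) / 6
    return h / h.max()  # unit peak (a readability choice made here)


def regressor(onsets_s: np.ndarray) -> np.ndarray:
    """Delta function at each onset, convolved with the HRF, sampled once per scan."""
    n_micro = N_SCANS * 16
    stick = np.zeros(n_micro)
    for onset in onsets_s:
        stick[int(round(onset / DT))] = 1.0
    conv = np.convolve(stick, canonical_hrf(DT))[:n_micro]
    return conv[::16]  # value at the start of each TR (a simplification)


def design_matrix(onsets: Dict[str, np.ndarray], motion: np.ndarray) -> np.ndarray:
    """Condition regressors + six realignment parameters + constant."""
    cols = [regressor(o) for o in onsets.values()]
    cols += [motion[:, k] for k in range(motion.shape[1])]
    cols.append(np.ones(N_SCANS))
    return np.column_stack(cols)


rng = np.random.default_rng(0)
onsets = {  # hypothetical onsets (s); the older-face conditions are omitted for brevity
    "younger_female": np.arange(10.0, 500.0, 42.0),
    "younger_male": np.arange(24.0, 500.0, 42.0),
    "baseline": np.arange(38.0, 500.0, 42.0),
}
motion = rng.normal(scale=0.1, size=(N_SCANS, 6))   # stand-in for realignment output
X = design_matrix(onsets, motion)
true_beta = np.r_[2.0, 1.0, 0.5, np.zeros(6), 100.0]
y = X @ true_beta + rng.normal(scale=0.05, size=N_SCANS)
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)   # ordinary least squares
print(np.round(beta_hat[:3], 2))
```

Because the jittered fixation periods are not modeled, they form the implicit baseline of this sketch as well; the explicit baseline regressor (three Xs) is what the contrasts compare faces against.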

Next, contrasts of interest were created; as mentioned earlier, only younger adult female and male faces were included. The first contrast, faces > baseline, subtracted the effect of the baseline trials from the combined effect of female and male faces. Two further contrasts captured the effect of female faces (> baseline) and the effect of male faces (> baseline).
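The three contrasts can be written as weight vectors over the condition regressors. In this minimal sketch the column order and the beta values are hypothetical, and the 0.5 weights for faces > baseline are one common scaling choice, not necessarily the one used in the study.

```python
import numpy as np

# Hypothetical column order of the condition regressors in one first-level model.
COLUMNS = ["younger_female", "younger_male", "older_female", "older_male", "baseline"]

CONTRASTS = {
    # Average of younger female and male faces minus baseline. Scaling the
    # weights (e.g. [1, 1, 0, 0, -2]) changes the contrast value but not its t.
    "faces>baseline": np.array([0.5, 0.5, 0.0, 0.0, -1.0]),
    "female>baseline": np.array([1.0, 0.0, 0.0, 0.0, -1.0]),
    "male>baseline": np.array([0.0, 1.0, 0.0, 0.0, -1.0]),
}


def contrast_value(betas: np.ndarray, weights: np.ndarray) -> float:
    """c' * beta for one voxel (or one ROI-averaged set of parameter estimates)."""
    if abs(weights.sum()) > 1e-12:
        raise ValueError("difference contrasts should have weights summing to zero")
    return float(weights @ betas)


betas = np.array([2.0, 1.4, 1.8, 1.2, 0.4])  # made-up parameter estimates
for name, c in CONTRASTS.items():
    print(name, round(contrast_value(betas, c), 2))
```

With these weights, the faces > baseline value is simply the mean of the female > baseline and male > baseline values, so neither face gender dominates the contrast later used for ROI definition.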

ROIs in bilateral FFG and IOG were defined individually from each participant’s faces vs baseline contrast. These functional ROIs were defined as clusters of activation (≥8 contiguous voxels; Minnebusch et al., 2009) within the anatomical FFG and IOG ROIs from the WFU Pickatlas (Maldjian et al., 2003, 2004), at a significance threshold of P < 0.0001, uncorrected (Kanwisher et al., 1997). At this threshold, no activated IOG clusters were found in four participants (three women), so more liberal thresholds were used to define these participants’ functional ROIs (n = 3, P < 0.001, uncorrected; n = 1, P < 0.05, uncorrected). Each participant’s peak-voxel coordinates within the ROIs are listed in Supplementary Tables S1 and S2.
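The ROI definition, including the fallback to more liberal thresholds, can be sketched on a voxel-wise P-value map. This is a hypothetical illustration, not the SPM/WFU Pickatlas pipeline used in the study: it takes a P-value map as input (the conversion from SPM t-maps is omitted), assumes 18-connectivity for "contiguous" voxels (a parameter; 6 and 26 are also supported), and clusters only the supra-threshold voxels that fall inside the anatomical mask, which is one reading of "clusters within the anatomical ROIs".

```python
import itertools
from collections import deque
from typing import Iterator, List, Optional, Tuple

import numpy as np

Voxel = Tuple[int, int, int]


def neighbour_offsets(connectivity: int = 18) -> List[Voxel]:
    """3D neighbour offsets for 6-, 18- or 26-connectivity."""
    if connectivity not in (6, 18, 26):
        raise ValueError("connectivity must be 6, 18 or 26")
    max_nonzero = {6: 1, 18: 2, 26: 3}[connectivity]
    return [d for d in itertools.product((-1, 0, 1), repeat=3)
            if 0 < sum(v != 0 for v in d) <= max_nonzero]


def clusters(mask: np.ndarray, connectivity: int = 18) -> Iterator[List[Voxel]]:
    """Yield the voxels of each connected component of a boolean 3D mask (BFS)."""
    seen = np.zeros(mask.shape, dtype=bool)
    offsets = neighbour_offsets(connectivity)
    for start in zip(*np.nonzero(mask)):
        start = tuple(int(i) for i in start)
        if seen[start]:
            continue
        seen[start] = True
        queue, component = deque([start]), []
        while queue:
            v = queue.popleft()
            component.append(v)
            for d in offsets:
                n = (v[0] + d[0], v[1] + d[1], v[2] + d[2])
                inside = all(0 <= n[i] < mask.shape[i] for i in range(3))
                if inside and mask[n] and not seen[n]:
                    seen[n] = True
                    queue.append(n)
        yield component


def define_roi(p_map: np.ndarray, anat_mask: np.ndarray,
               thresholds=(1e-4, 1e-3, 0.05), min_voxels: int = 8,
               connectivity: int = 18) -> Tuple[Optional[np.ndarray], Optional[float]]:
    """Supra-threshold clusters of >= min_voxels inside an anatomical mask.

    Thresholds are tried from strictest to most liberal, mirroring the fallback above.
    """
    for p_thr in thresholds:
        supra = (p_map < p_thr) & anat_mask
        roi = np.zeros(anat_mask.shape, dtype=bool)
        for component in clusters(supra, connectivity):
            if len(component) >= min_voxels:
                roi[tuple(np.array(component).T)] = True
        if roi.any():
            return roi, p_thr
    return None, None


p = np.ones((10, 10, 10))
anat = np.zeros_like(p, dtype=bool)
anat[2:8, 2:8, 2:8] = True                 # hypothetical anatomical ROI
p[3:5, 3:5, 3:6] = 5e-4                    # 12-voxel cluster, only passes P < 0.001
p[6, 6, 6] = 1e-5                          # isolated voxel, too small at any threshold
roi, used = define_roi(p, anat)
print(used, int(roi.sum()))                # → 0.001 12
```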

MarsBaR (Brett et al., 2002) was used to extract each individual’s average parameter estimates (β-values) for female faces (> baseline) and male faces (> baseline) from the individually defined ROIs. To assess men’s and women’s BOLD responses to female and male faces in left and right FFG and IOG, separate mixed 2 (face gender: female, male) × 2 (sex of participant: woman, man) ANOVAs were computed for each ROI. Planned comparisons were conducted with independent and dependent samples t-tests.
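The text does not state which software ran the ANOVAs. For a 2 × 2 mixed design with a two-level within-subject factor, the F tests can be computed from per-participant difference and average scores; the sketch below does this assuming Type III (unweighted-means) sums of squares, which matters because the groups differ in size. It also includes the paired t used for the planned comparisons. P-values would additionally require the F or t distribution and are not computed; the β-values in the example are made up.

```python
from typing import Dict, Sequence

import numpy as np


def mixed_2x2_anova(female: Dict[str, Sequence[float]],
                    male: Dict[str, Sequence[float]]) -> Dict[str, tuple]:
    """2 (face gender, within) x 2 (sex, between) mixed ANOVA on ROI beta-values.

    `female` and `male` map each of two group labels to per-participant values in
    the same participant order. With a two-level within factor, the F tests reduce
    to tests on per-participant scores (Type III / unweighted means):
      face gender -> unweighted mean of female-minus-male differences vs 0
      interaction -> difference between the groups' mean differences
      sex         -> difference between the groups' mean of the two conditions
    Each F has df = (1, n1 + n2 - 2). Returns {effect: (F, partial eta squared)}.
    """
    g1, g2 = list(female)
    diff = {g: np.asarray(female[g], float) - np.asarray(male[g], float) for g in (g1, g2)}
    avg = {g: (np.asarray(female[g], float) + np.asarray(male[g], float)) / 2 for g in (g1, g2)}
    n1, n2 = len(diff[g1]), len(diff[g2])
    df_err = n1 + n2 - 2
    k = 1 / n1 + 1 / n2

    def pooled_var(scores: Dict[str, np.ndarray]) -> float:
        return ((n1 - 1) * scores[g1].var(ddof=1) + (n2 - 1) * scores[g2].var(ddof=1)) / df_err

    mse_diff, mse_avg = pooled_var(diff), pooled_var(avg)
    mean_diff = (diff[g1].mean() + diff[g2].mean()) / 2
    f = {
        "face_gender": mean_diff ** 2 / (mse_diff * k / 4),
        "sex": (avg[g1].mean() - avg[g2].mean()) ** 2 / (mse_avg * k),
        "interaction": (diff[g1].mean() - diff[g2].mean()) ** 2 / (mse_diff * k),
    }
    return {effect: (value, value / (value + df_err)) for effect, value in f.items()}


def paired_t(female: Sequence[float], male: Sequence[float]) -> float:
    """Planned within-group comparison: t = mean(d) / (SD(d) / sqrt(n)), df = n - 1."""
    d = np.asarray(female, float) - np.asarray(male, float)
    return float(d.mean() / (d.std(ddof=1) / np.sqrt(len(d))))


# Made-up beta-values for three women and three men.
female = {"women": [3.0, 4.0, 5.0], "men": [2.0, 3.0, 4.0]}
male = {"women": [2.0, 2.0, 2.0], "men": [1.0, 1.0, 1.0]}
for effect, (F, eta) in mixed_2x2_anova(female, male).items():
    print(f"{effect}: F(1, 4) = {F:.2f}, partial eta squared = {eta:.2f}")
```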

For descriptive purposes, a group parametric map was computed to illustrate participants’ general BOLD response to faces vs baseline across the whole brain. Participants’ contrast images for this effect were entered into a second-level model (one-sample t-test), thresholded at P < 0.05 (FWE-corrected) with an extent threshold of 10 or more contiguous voxels.

Associations between differences in BOLD response and memory

Pearson product-moment correlations were computed to examine the relation between the difference in BOLD response and the difference in recognition memory for female and male faces. For the behavioral difference score, recognition memory (d′) for male faces was subtracted from recognition memory (d′) for female faces. Thus, a positive value indicates that female faces were better recognized than male faces, and a negative value indicates that male faces were better recognized than female faces.

For the difference scores based on FFG BOLD responses within each hemisphere, each individual’s parameter estimate of BOLD response to male faces was subtracted from the parameter estimate of BOLD response to female faces (Feng et al., 2011). Difference scores for IOG were computed in the same manner. As there were outliers in two of the difference score variables (left FFG, n = 2; left IOG, n = 1), an arctangent function was applied to transform these variables.
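The difference scores, the arctangent transformation and the correlation can be sketched as follows. Two assumptions are flagged: the transform is applied to the raw difference scores (the text does not say whether they were rescaled first, and the amount of compression depends on their scale), and the helper t_from_r is the standard test of a zero population correlation, added here for illustration. The example values are made up.

```python
import math
from typing import List, Sequence


def difference_scores(female: Sequence[float], male: Sequence[float]) -> List[float]:
    """Per-participant female-minus-male score (positive = female-face advantage)."""
    return [f - m for f, m in zip(female, male)]


def arctan_transform(values: Sequence[float]) -> List[float]:
    """Sign- and order-preserving compression that pulls in extreme values.

    Assumption: applied to the raw scores; how strongly it compresses depends on
    their scale, and the text does not say whether scores were rescaled first.
    """
    return [math.atan(v) for v in values]


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r: float, df: int) -> float:
    """t statistic for H0: rho = 0, with df = n - 2."""
    return r * math.sqrt(df) / math.sqrt(1 - r ** 2)


# Made-up values for five participants (beta-values and d').
beta_diff = arctan_transform(difference_scores([1.2, 0.9, 2.5, 0.4, 1.1],
                                               [0.8, 1.0, 0.6, 0.5, 0.9]))
memory_diff = difference_scores([1.9, 1.2, 2.4, 1.0, 1.6], [1.5, 1.4, 1.6, 1.1, 1.3])
print(round(pearson_r(beta_diff, memory_diff), 2))
print(round(t_from_r(0.41, 24), 2))  # reported left FFG correlation, r(24) = 0.41
```

For the left FFG correlation reported in the Results, r(24) = 0.41 corresponds to t(24) ≈ 2.20, in line with the reported two-tailed P = 0.037. Because the arctangent preserves sign, the direction of each participant's difference is unchanged by the transformation.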

RESULTS

Behavioral findings

There was no sex difference in performance on the vocabulary task, t(27) = 0.07, P = 0.94 (Mwomen = 22.60, s.d.women = 4.07; Mmen = 22.50, s.d.men = 3.25), confirming that the two groups were comparable with respect to semantic memory (Herlitz et al., 1997).

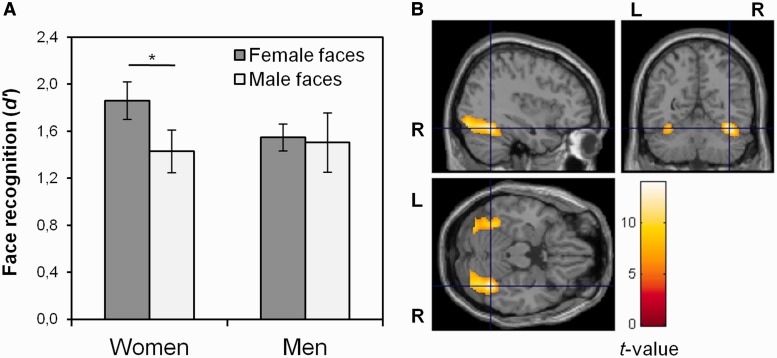

As expected, women recognized more female than male faces, t(14) = 2.25, P = 0.04, d = 0.65 (Figure 1A). Men recognized female and male faces with equal facility, t(13) = 0.19, P = 0.86. Women’s advantage over men in memory for female faces was not statistically significant, t(27) = 1.56, P = 0.13, d = 0.58, and there was no sex difference in memory for male faces, t(27) = −0.25, P = 0.81.

Fig. 1.

Results from behavioral and exploratory whole-brain analyses. (A) Women’s and men’s face recognition memory (d′) for female and male faces. Error bars represent standard error of the mean. *P < 0.05. (B) BOLD response to faces across all participants (women and men), displayed at the peak voxel activation in right FFG (MNI coordinates: x = 38, y = −56, z = −20), P < 0.05 (FWE-corrected), cluster extent ≥10 contiguous voxels.

fMRI findings

Whole-brain analysis

At the group level, incidental encoding of faces was associated with bilateral BOLD response in FFG, cuneus, lingual gyrus, middle occipital gyrus and IOG (Figure 1B and Supplementary Table S3).

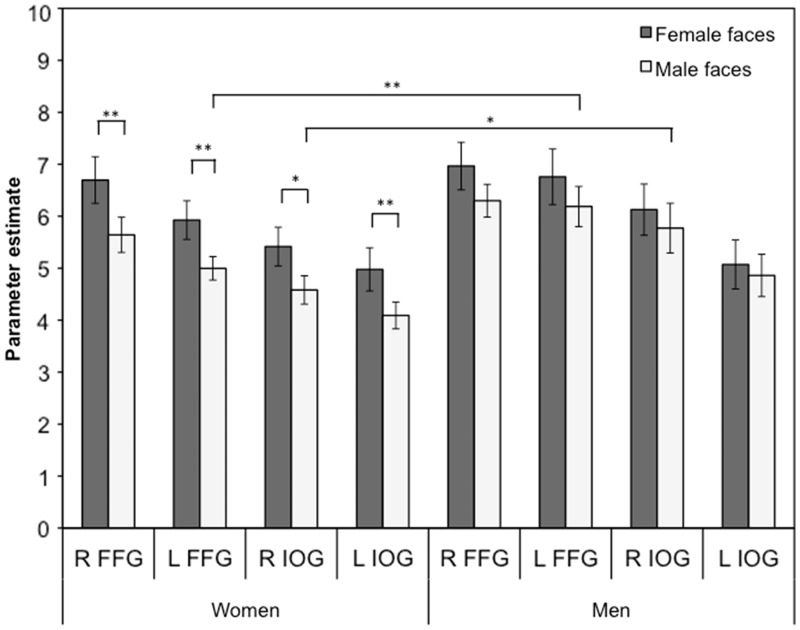

Fusiform gyrus

First, BOLD response in right FFG was assessed. A main effect of face gender showed that BOLD response in right FFG was greater for female than for male faces, F(1, 25) = 9.66, P = 0.005, ηp² = 0.28. There was no main effect of sex, F(1, 25) = 0.91, P = 0.35, and no interaction between face gender and sex of participant, F(1, 25) = 0.49, P = 0.49. However, in line with our hypothesis, paired samples t-tests showed that women’s BOLD response in right FFG was greater for female than for male faces, t(13) = 3.81, P = 0.002, d = 0.66 (Figure 2). In contrast, men’s BOLD response to female and male faces did not differ significantly, t(12) = 1.36, P = 0.20. Independent samples t-tests showed no sex differences in right FFG BOLD response to female faces, t(25) = −0.42, P = 0.68, or to male faces, t(25) = −1.41, P = 0.17.

Fig. 2.

Women’s and men’s average BOLD response (β-value) in left and right FFG and IOG to female and male faces during incidental encoding. Error bars represent standard error of the mean. Note: **P < 0.01, *P < 0.05. R, right; L, left; FFG, fusiform gyrus; IOG, inferior occipital gyrus.

Next, the analysis of left FFG showed a main effect of face gender, indicating that BOLD response was greater for female than for male faces, F(1, 24) = 5.66, P = 0.03, ηp² = 0.19. A main effect of sex showed that men had a higher BOLD response to faces than women, F(1, 24) = 4.79, P = 0.04, ηp² = 0.17. The interaction between face gender and sex of participant was not significant, F(1, 24) = 0.32, P = 0.58, but the planned comparison showed that women’s BOLD response in left FFG was greater for female than for male faces, t(12) = 3.75, P = 0.003, d = 0.72 (Figure 2). Men’s BOLD response to female and male faces did not differ significantly, t(12) = 0.99, P = 0.34. There was no sex difference in left FFG BOLD response to female faces, t(24) = −1.28, P = 0.21, although men had a greater BOLD response to male faces than women, t(24) = −2.66, P = 0.01, d = −1.04.

Inferior occipital gyrus

A main effect of face gender showed that BOLD response in right IOG was greater for female than for male faces, F(1, 25) = 4.30, P = 0.049, ηp² = 0.15. Neither the main effect of sex of participant, F(1, 25) = 3.60, P = 0.069, nor the interaction between sex of participant and face gender, F(1, 25) = 0.69, P = 0.41, was statistically significant. Planned comparisons showed that women’s BOLD response in right IOG was greater for female than for male faces, t(13) = 3.04, P = 0.01, d = 0.65 (Figure 2). In contrast, men’s BOLD response to female and male faces did not differ significantly, t(12) = 0.69, P = 0.50. There was no sex difference in right IOG response to female faces, t(25) = −1.16, P = 0.26, but men had a greater BOLD response to male faces in right IOG than women, t(25) = −2.20, P = 0.04, d = −0.85.

For the left IOG, there was a main effect of face gender, showing that BOLD response was greater for female than for male faces, F(1, 25) = 5.23, P = 0.03, ηp² = 0.17. No other effects were significant, Fs < 1.99, Ps > 0.17. Planned comparisons showed that women’s BOLD response in left IOG was greater for female than for male faces, t(13) = 3.09, P = 0.009, d = 0.61 (Figure 2), whereas face gender did not modulate men’s BOLD response, t(12) = 0.54, P = 0.60. There were no sex differences in left IOG response to either female or male faces, ts > −1.63, Ps > 0.11.
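Partial eta squared can be recovered from an F statistic and its degrees of freedom with the standard identity ηp² = F·df_effect / (F·df_effect + df_error). The short check below applies it to the main effects reported for FFG and IOG.

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


# Main effects reported above: (F, df_effect, df_error, reported effect size)
reported = {
    "right FFG, face gender": (9.66, 1, 25, 0.28),
    "left FFG, face gender": (5.66, 1, 24, 0.19),
    "left FFG, sex": (4.79, 1, 24, 0.17),
    "right IOG, face gender": (4.30, 1, 25, 0.15),
    "left IOG, face gender": (5.23, 1, 25, 0.17),
}
for label, (f, df1, df2, eta_reported) in reported.items():
    print(f"{label}: {partial_eta_squared(f, df1, df2):.2f} (reported {eta_reported})")
```

All five computed values agree with the reported effect sizes, consistent with those values being partial eta squared.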

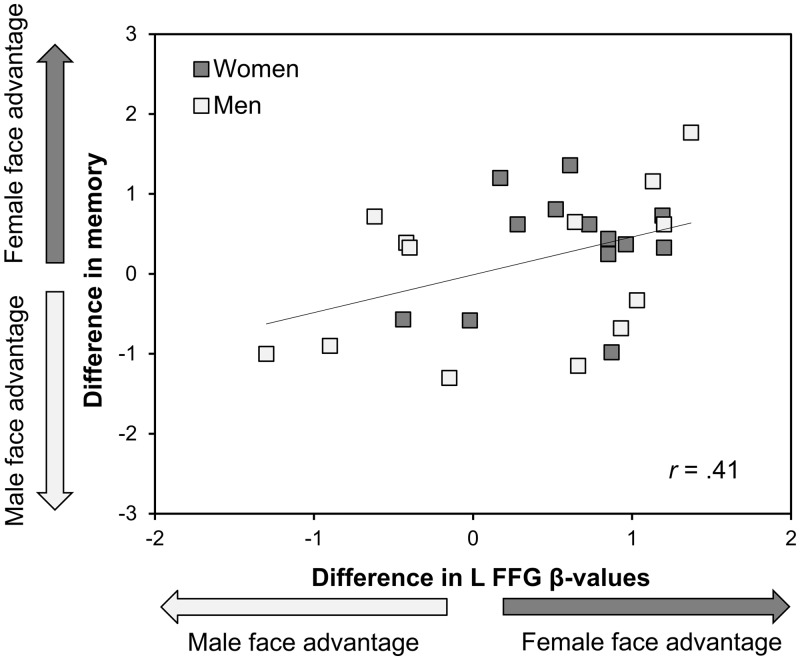

Associations between differences in BOLD response and memory

As can be seen in Figure 3, there was a positive correlation between the difference in face recognition memory for female vs male faces and the difference in left FFG response to female and male faces, r(24) = 0.41, P = 0.037. Individuals with a greater BOLD response to one gender compared with the other tended to recognize faces of this gender better. For the other regions, there were no significant correlations between the difference in face recognition memory for female and male faces and difference scores in BOLD response (left IOG, r[25] = 0.36, P = 0.07; right IOG, r[25] = 0.07, P = 0.73; right FFG, r[25] = 0.26, P = 0.19).

Fig. 3.

The relation between differences in left FFG BOLD response (β-value for female faces minus β-value for male faces) and face recognition memory (d′ for female faces minus d′ for male faces). A positive value indicates a female-face advantage (higher BOLD response or better memory for female than for male faces); a negative value indicates a male-face advantage. Note that the BOLD difference score was arctangent-transformed; its sign reflects the direction of the original, untransformed difference.

DISCUSSION

The aims of this study were to investigate whether face gender modulated BOLD response in FFG and IOG, and whether greater BOLD response in left FFG to one gender was related to better face recognition memory for this gender. As expected, women showed an own-gender bias, whereas men recognized female and male faces with equal facility (Lovén et al., 2011). Also in line with previous findings, incidental encoding of faces was associated with activity mainly in the occipito-temporal cortex (Haxby et al., 2000).

In line with our predictions, women showed a greater BOLD response in FFG to female than to male faces, whereas men’s BOLD response was not reliably modulated by face gender. The present results extend previous findings of differential BOLD responses in FFG to own- and other-race faces (e.g. Golby et al., 2001) by showing that face gender influences women’s BOLD response in FFG. Moreover, women showed an increased BOLD response in IOG to female compared with male faces. This suggests that women’s increased brain activity to female faces emerges early in the face-processing stream, as face-specific areas in the IOG seem to be involved in the initial visual analysis of face parts (Liu et al., 2010), whereas face-specific areas in FFG seem to be involved in processing both face parts and their configuration (Yovel and Kanwisher, 2005; Liu et al., 2010). An increased FFG response to stimuli with which one has more experience, such as own-race faces, may represent visual expertise (Golby et al., 2001; Feng et al., 2011). Alternatively, greater interest in, attention to or motivation to process own-group faces may modulate FFG response to faces (Van Bavel et al., 2011). Whether greater FFG activity to own-group than to other-group faces reflects perceptual expertise, top-down processes (e.g. motivation) or both remains to be determined in future research.

Importantly, the difference in memory for female and male faces was associated with the female-minus-male difference in left FFG BOLD response: individuals who had a greater left FFG response to one gender also tended to recognize faces of that gender better. This correlation suggests that differences in left FFG activity may contribute to better memory for one gender than for the other, paralleling the association reported for the race bias in face recognition memory (Golby et al., 2001; cf. Feng et al., 2011). It remains unclear why primarily the left FFG response is related to the magnitude of the own-group bias, although it has been suggested that the left FFG has additional properties at encoding that enhance later recognition (Prince et al., 2009), such as the encoding of facial features (Rossion et al., 2000).

Men showed a greater BOLD response than women to male faces in left FFG and right IOG, although there was no sex difference in recognition of male faces. Similarly, men have been found to show greater BOLD responses than women to neutral and faintly smiling faces in left and right FFG and to male faces in right FFG (Ino et al., 2010), as well as greater right FFG responses to neutral and angry faces in the absence of a sex difference in performance (Mather et al., 2010). In contrast, other studies have not found sex differences in BOLD response to either emotional or neutral faces in these regions (Fischer et al., 2004a, 2004b, 2007). At present, it is unclear why men may display a greater FFG response than women to (male) faces without a corresponding difference in face recognition performance. One suggestion is that women recruit regions involved in face processing more efficiently than men do (Ino et al., 2010).

At this point, we can only speculate about why women but not men show an own-gender bias in face recognition memory. Several studies have shown that the female own-gender bias is present in childhood (Feinman and Entwisle, 1976; Rehnman and Herlitz, 2006). In addition, there is evidence of an early sex difference in attention to faces: neonatal girls, in contrast to boys, preferentially attend to a face rather than to a moving mobile (Connellan et al., 2000). Girls aged 3–4 months engage in eye-to-eye contact with an unfamiliar individual to a greater extent than boys do, and this sex difference is particularly pronounced when the individual is female (Leeb and Rejskind, 2004). This gender-specific bias, found very early in development, may be strengthened in girls throughout childhood and adolescence by close interaction with other females, and could in turn be a precursor of women’s own-gender bias in face recognition memory.

This study has some limitations. First, to define each individual’s ROIs in FFG and IOG, faces were contrasted with low-level baseline trials. The comparison stimulus that we used in this study is less complex than the materials commonly used to define face-specific functional ROIs in the occipito-temporal cortex, such as houses (Kanwisher et al., 1997). However, the type of comparison stimulus does not seem to greatly affect the localization of functional ROIs in FFG (Berman et al., 2010). For instance, very similar ROI definitions in FFG were found when faces were contrasted with a low-level baseline and common objects (chairs), respectively (Lehmann et al., 2004). Second, the functional ROIs were not defined with an independent localizer. However, both female and male faces were included in the contrast that we used to define each individual’s face-specific functional ROIs (for a similar approach, see Golby et al., 2001). This ensured that the findings were not biased toward either female or male faces, as parameter estimates of responses to female and male faces were extracted from the same regions within each individual. Third, the sex difference in recognition of female faces did not reach the conventional level of statistical significance (P < 0.05), although the magnitude of the effect (d = 0.58) was in good agreement with previous findings (e.g., d = 0.64; Rehnman and Herlitz, 2007). Finally, given mixed evidence of sex differences in BOLD response to faces (Fischer et al., 2004a, 2004b, 2007; Ino et al., 2010; Mather et al., 2010), the present findings of sex differences in BOLD response need to be replicated and further explored in future studies.

To our knowledge, this study is the first to investigate the relation between BOLD response in regions of the face network and recognition of female and male faces. It provides novel evidence that a differential BOLD response in left FFG to female and male faces is related to the magnitude of the gender bias in face recognition memory. Importantly, face gender influenced women’s but not men’s BOLD response in FFG and IOG during incidental face encoding, as women’s BOLD response was greater for female than for male faces in these core regions of the face network. This is in line with behavioral evidence of an own-gender bias in women’s face recognition memory but not in men’s (Lovén et al., 2011). Given the particular involvement of the FFG in successful face encoding (Prince et al., 2009), the present results support previous behavioral findings suggesting that women have better face-processing skills for female than for male faces (Lovén et al., 2011; Megreya et al., 2011).

SUPPLEMENTARY DATA

Supplementary data are available at SCAN online.

Conflict of Interest

None declared.


Acknowledgments

The authors wish to thank Sebastian Gluth for assistance in programming the task and Dr Anna Rieckmann for collecting the data. This research was conducted at Karolinska Institutet MR-Center, Huddinge Hospital, Stockholm, Sweden, and was supported by grants from Konung Gustaf V:s och Drottning Victorias Frimurarstiftelse (awarded to H.F.) and the Swedish Research Council (2008–2356; 2009–2255).

References

- Barton JJS, Press DZ, Keenan JP, O’Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58:71–8. doi: 10.1212/wnl.58.1.71.

- Berman MG, Park J, Gonzalez R, et al. Evaluating functional localizers: the case of the FFA. NeuroImage. 2010;50:56–71. doi: 10.1016/j.neuroimage.2009.12.024.

- Bilalić M, Langner R, Ulrich R, Grodd W. Many faces of expertise: fusiform face area in chess experts and novices. The Journal of Neuroscience. 2011;31:10206–14. doi: 10.1523/JNEUROSCI.5727-10.2011.

- Borenstein M. Effect sizes for continuous data. In: Cooper H, Hedges LV, Valentine JC, editors. The Handbook of Research Synthesis and Meta-Analysis. 2nd edn. New York: Russell Sage Foundation; 2009. pp. 221–35.

- Brett M, Anton JL, Valabregue R, Poline JB. Regions of interest analysis using an SPM toolbox. NeuroImage. 2002;16:497.

- Connellan J, Baron-Cohen S, Wheelwright S, Batki A, Ahluwalia J. Sex differences in human neonatal social perception. Infant Behavior & Development. 2000;23:113–8.

- Dureman I. SRB:1. Stockholm: Psykologiförlaget; 1960.

- Ebner N, Riediger M, Lindenberger U. FACES—a database of facial expressions in young, middle-aged, and older women and men: development and validation. Behavior Research Methods. 2010;42:351–61. doi: 10.3758/BRM.42.1.351.

- Feinman S, Entwisle DR. Children’s ability to recognize other children’s faces. Child Development. 1976;47:506–10.

- Feng L, Liu J, Wang Z, et al. The other face of the other-race face categorization advantage. Neuropsychologia. 2011;49:3739–49. doi: 10.1016/j.neuropsychologia.2011.09.031.

- Fischer H, Fransson P, Wright CI, Bäckman L. Enhanced occipital and anterior cingulate activation in men but not in women during exposure to angry and fearful male faces. Cognitive, Affective, & Behavioral Neuroscience. 2004a;4:326–34. doi: 10.3758/cabn.4.3.326.

- Fischer H, Sandblom J, Herlitz A, Fransson P, Wright CI, Bäckman L. Sex-differential brain activation during exposure to female and male faces. NeuroReport. 2004b;15:235–8. doi: 10.1097/00001756-200402090-00004.

- Fischer H, Sandblom J, Nyberg L, Herlitz A, Bäckman L. Brain activation while forming memories of fearful and neutral faces in women and men. Emotion. 2007;7:767–73. doi: 10.1037/1528-3542.7.4.767.

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nature Neuroscience. 2000;3:191–7. doi: 10.1038/72140.

- Golby AJ, Gabrieli JDE, Chiao JY, Eberhardt JL. Differential responses in the fusiform region to same-race and other-race faces. Nature Neuroscience. 2001;4:845–50. doi: 10.1038/90565.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends in Cognitive Sciences. 2000;4:223–33. doi: 10.1016/s1364-6613(00)01482-0.

- Herlitz A, Nilsson L-G, Bäckman L. Gender differences in episodic memory. Memory & Cognition. 1997;25:801–11. doi: 10.3758/bf03211324.

- Ino T, Nakai R, Azuma T, Kimura T, Fukuyama H. Gender differences in brain activation during encoding and recognition of male and female faces. Brain Imaging and Behavior. 2010;4:55–67. doi: 10.1007/s11682-009-9085-0.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. The Journal of Neuroscience. 1997;17:4302–11. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kim J, Yoon H, Kim B, Jeun S, Jung S, Choe B. Racial distinction of the unknown facial identity recognition mechanism by event-related fMRI. Neuroscience Letters. 2006;397:279–84. doi: 10.1016/j.neulet.2005.12.061.

- Leeb RT, Rejskind FG. Here’s looking at you, kid! A longitudinal study of perceived gender differences in mutual gaze behavior in young infants. Sex Roles. 2004;50:1–14.

- Lehmann C, Mueller T, Federspiel A, et al. Dissociation between overt and unconscious face processing in fusiform face area. NeuroImage. 2004;21:75–83. doi: 10.1016/j.neuroimage.2003.08.038.

- Lieberman MD, Hariri A, Jarcho JM, Eisenberger NI, Bookheimer SY. An fMRI investigation of race-related amygdala activity in African-American and Caucasian-American individuals. Nature Neuroscience. 2005;8:720–2. doi: 10.1038/nn1465.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: an fMRI study. Journal of Cognitive Neuroscience. 2010;22:203–11. doi: 10.1162/jocn.2009.21203.

- Lovén J, Herlitz A, Rehnman J. Women’s own-gender bias in face recognition memory: the role of attention at encoding. Experimental Psychology. 2011;58:333–40. doi: 10.1027/1618-3169/a000100.

- Maldjian JA, Laurienti PJ, Burdette JH, Kraft RA. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage. 2003;19:1233–9. doi: 10.1016/s1053-8119(03)00169-1.

- Maldjian JA, Laurienti PJ, Burdette JH. Precentral gyrus discrepancy in electronic versions of the Talairach atlas. NeuroImage. 2004;21:450–5. doi: 10.1016/j.neuroimage.2003.09.032.

- Mather M, Lighthall NR, Nga L, Gorlick MA. Sex differences in how stress affects brain activity during face viewing. NeuroReport. 2010;21:933–7. doi: 10.1097/WNR.0b013e32833ddd92.

- McKelvie SJ. Sex differences, lateral reversal, and pose as factors in recognition memory for photographs of faces. Journal of General Psychology. 1987;114:13–37.

- Megreya AM, Bindemann M, Havard C. Sex differences in unfamiliar face identification: evidence from matching tasks. Acta Psychologica. 2011;137:83–9. doi: 10.1016/j.actpsy.2011.03.003.

- Meissner CA, Brigham JC. Thirty years of investigating the own-race bias in memory for faces: a meta-analytic review. Psychology, Public Policy, and Law. 2001;7:3–35.

- Minnebusch DA, Suchan B, Köster O, Daum I. A bilateral occipitotemporal network mediates face perception. Behavioural Brain Research. 2009;198:179–85. doi: 10.1016/j.bbr.2008.10.041.

- Prince SE, Dennis NA, Cabeza R. Encoding and retrieving faces and places: distinguishing process- and stimulus-specific differences in brain activity. Neuropsychologia. 2009;47:2282–9. doi: 10.1016/j.neuropsychologia.2009.01.021.

- Rehnman J, Herlitz A. Higher face recognition ability in girls: magnified by own-sex and own-ethnicity bias. Memory. 2006;14:289–96. doi: 10.1080/09658210500233581.

- Rehnman J, Herlitz A. Women remember more faces than men do. Acta Psychologica. 2007;124:344–55. doi: 10.1016/j.actpsy.2006.04.004.

- Rhodes MG, Anastasi JS. The own-age bias in face recognition: a meta-analytic and theoretical review. Psychological Bulletin. 2012;138:146–74. doi: 10.1037/a0025750.

- Rossion B, Dricot L, Devolder A, et al. Hemispheric asymmetries for whole-based and part-based face processing in the human fusiform gyrus. Journal of Cognitive Neuroscience. 2000;12:793–802. doi: 10.1162/089892900562606.

- Snodgrass JG, Corwin J. Pragmatics of measuring recognition memory: applications to dementia and amnesia. Journal of Experimental Psychology: General. 1988;117:34–50. doi: 10.1037//0096-3445.117.1.34.

- Van Bavel JJ, Packer DJ, Cunningham WA. Modulation of the fusiform face area following minimal exposure to motivationally relevant faces: evidence of in-group enhancement (not out-group disregard). Journal of Cognitive Neuroscience. 2011;23:3343–54. doi: 10.1162/jocn_a_00016.

- Wright DB, Sladden B. An own gender bias and the importance of hair in face recognition. Acta Psychologica. 2003;114:101–14. doi: 10.1016/s0001-6918(03)00052-0.

- Yovel G, Kanwisher N. The neural basis of the face inversion effect. Current Biology. 2005;15:2256–62. doi: 10.1016/j.cub.2005.10.072.