A growing literature shows a profound impact of alphabetization at several levels of the visual system, including the primary visual cortex (Szwed et al., 2014) and higher-order ventral and dorsal visual areas (Carreiras et al., 2009; Dehaene et al., 2010). Importantly, in typical alphabetization courses, learning to read is not isolated but is combined with learning to write and learning to segment spoken language, and all these representations are related to each other. Indeed, learning to write and to pronounce the elementary sounds of language promotes additional mapping between the visual and motor systems by linking visual representations of letters with motor plans for handwriting and speech production. Yet, apart from the already recognized influence of the phonological system, the potential influence of other neural systems on the functioning of the visual system has been relatively neglected. In this opinion paper we highlight the importance of multi-systems interplay during literacy acquisition, focusing on the question of how literacy breaks mirror invariance in the visual system. Specifically, we argue for a large contribution of top-down inputs from phonological, handwriting and articulatory representations toward the ventral visual cortex during the development of the visual word form system, which then plays a pivotal role in mirror discrimination of letters in literate individuals.

How phonology affects visual representations for reading

A key aspect of alphabetization is to set in place the audio-visual mapping known as “phoneme-grapheme correspondence,” whereby elementary sounds of language (i.e., phonemes) are linked to their visual representations (i.e., graphemes) (Frith, 1986). This correspondence is progressively acquired and typically becomes automatized after 3–4 years of training (Nicolson et al., 2001; Van Atteveldt et al., 2004; Lachmann and van Leeuwen, 2008; Dehaene et al., 2010; Lachmann et al., in this special issue). Illiterates, who do not learn this audio-visual correspondence, are unable to show “phonological awareness” (i.e., the ability to consciously manipulate language sounds) at the phonemic level (Morais et al., 1979; Morais and Kolinsky, 1994), and they present different visual analytical characteristics (Lachmann et al., 2012; Fernandes et al., 2014). Accordingly, activations in phonological areas increase in proportion to the literacy level of participants, e.g., planum temporale responses to auditory sentences and left superior temporal sulcus responses to visual presentations of written sentences (Dehaene et al., 2010). These results therefore suggest an important link between the visual and auditory systems created by literacy training. Indeed, the reciprocal inter-regional coupling between visual and auditory cortical areas may constitute a crucial component of fluent reading, since dyslexic children, who read slowly, show reduced activations to speech sounds in the perisylvian language areas and in the ventral visual cortex, including the Visual Word Form Area (VWFA) (Monzalvo et al., 2012).

How writing affects visual representations for reading

In parallel, children (and adults) undergoing alphabetization also learn to draw the letters of the alphabet. Indeed, writing requires fine motor coordination of hand gestures, a process guided by online feedback from somatosensory and visual systems (Margolin, 1984). In particular, gestures of handwriting are thought to be represented in the dorsal part of the premotor cortex, rostral to the primary motor cortex responsible for hand movements, i.e., a region first coarsely described by Exner as the “graphic motor image center” (see Roux et al., 2010 for a review). Exner's area is known to be activated when participants write letters but not when they copy pseudoletters (Longcamp et al., 2003). Moreover, direct brain stimulation of the same region produces a specific inability to write (Roux et al., 2009). Importantly, this region is activated simply by visual presentations of handwritten stimuli (Longcamp et al., 2003, 2008), even when they are presented unconsciously (Nakamura et al., 2012). Additionally, these activations take place in the premotor cortex contralateral to the dominant hand for writing (Longcamp et al., 2005). These results suggest that literacy training establishes a tight functional link between the visual and motor systems for reading and writing. In fact, it has been proposed that reading and writing rely on distributed and overlapping brain regions, each showing slightly different levels of activation depending on the nature of the orthography (Nakamura et al., 2012). As for the reciprocal link between the visual and motor components of this reading network, data from brain-damaged patients and fMRI data from normal subjects consistently suggest that top-down activation of the posterior inferior temporal region constitutes a key component of both handwriting (Nakamura et al., 2002; Rapcsak and Beeson, 2004) and reading (Bitan et al., 2005; Nakamura et al., 2007).

How speech production affects visual representations for reading

While the impact of auditory phonological inputs on literacy acquisition has been well demonstrated (e.g., in phonological awareness studies), the connection between the speech production system and other systems during alphabetization has been relatively less explored. Indeed, although all alphabetizing children already speak fluently, an unusual segmentation and refinement of motor plans for speech production must be learned in order to pronounce isolated phonemes, allowing a multisensory association (explicit or implicit) of these new fine-grained phonatory representations with visual and auditory representations. One study has shown activation in a cortical region involved in speech production (Broca's area) in relation to handwriting learning and letter identification (Longcamp et al., 2008). In fluent readers, the inferior frontal area involved in speech production on the one hand and the VWFA on the other show fast and strong inter-regional coupling (Bitan et al., 2005), which operates even for unconsciously perceived words (Nakamura et al., 2007). This distant link between visual and articulatory areas, which mediates print-to-sound mapping, is probably established during the earliest phase of reading acquisition and serves as a crucial foundation for the development of a dedicated reading network (Brem et al., 2010).

Literacy acquisition as a multi-system learning process: the example of mirror discrimination learning

Taken together, these studies converge on the idea that, far from being a unimodal training in visual recognition, literacy acquisition is an irreducibly multi-system learning process. This leads us to predict that as one becomes literate, the expertise acquired through a given modality is not restricted to it, but can have an impact on other neural systems.

Perhaps the most spectacular case in point, and the one we choose to focus on in this article, is the spontaneous link between the motor and visual systems during literacy acquisition. This link is revealed at the beginning of the alphabetization process by the classic emergence of spontaneous mirror writing, i.e., writing letters in both orientations indistinctly (Cornell, 1985). Indeed, our primate visual system presents a mirror-invariant representation of visual stimuli, which enables us to immediately recognize an image independently of left or right viewpoints (Rollenhagen and Olson, 2000; Vuilleumier et al., 2005; Biederman and Cooper, 2009). This creates a special difficulty in distinguishing the left-right orientation of letters (e.g., b vs. d) (Orton, 1937; Corballis and Beale, 1976; Lachmann, 2002; Lachmann et al., in this special issue). One account of the emergence of mirror writing is that writing gestures can be “incorrectly” guided by mirror-invariant visual representations of letters, a framework referred to as “perceptual confusion” (see Schott, 2007 for a review on this topic).

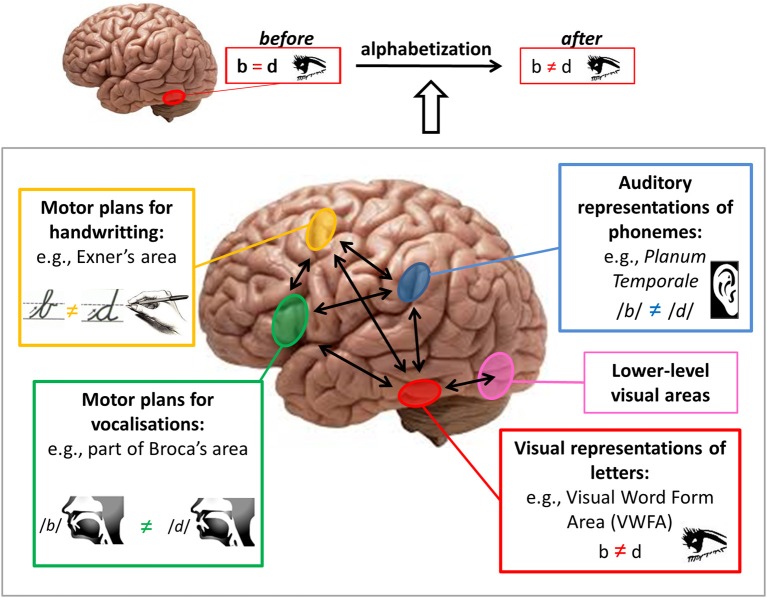

In addition, recent studies demonstrate that after literacy acquisition, mirror invariance is lost for letter strings (Kolinsky et al., 2011; Pegado et al., 2011, 2014) and that the VWFA shows mirror discrimination for letters (Pegado et al., 2011); see Figure 1, upper part. Interestingly, in this special issue, Nakamura and colleagues use transcranial magnetic stimulation to provide evidence for the causal role of the left occipito-temporal cortex (encompassing the VWFA) in mirror discrimination. However, it is still an open question whether this region becomes able to discriminate the correct orientation of letters completely on its own or whether it still depends on inputs from phonological, gestural, and/or vocal representations.

A multi-system model of mirror discrimination learning

How is mirror discrimination acquired during the process of literacy acquisition? Here we sketch a model that takes into account not only the multisensory nature of alphabetization but also the multi-systems interplay, i.e., how representations in one system could influence the functioning of another system (e.g., mirror invariance in the visual system). In Figure 1, we present the hypothetical “multi-system input model” for mirror-letter discrimination learning during literacy acquisition. In order to correctly and rapidly identify letters for fluent reading, the VWFA (in red) should visually distinguish between mirror representations of letters (see Figure 1, upper part). Top-down inputs from phonological, handwriting and speech production representations can provide discriminative information to the VWFA, helping this area, which presents intrinsic mirror invariance, to accomplish its task of letter identification. This process probably requires focused attention (not represented in the figure) during learning and is likely to become progressively automatized. These top-down inputs toward the VWFA possibly influence this region to select relevant bottom-up inputs from lower-level visual areas (represented in pink in the figure) carrying information about the orientation of stimuli. For simplicity, inter-hemispheric interactions are not represented here, but it should be acknowledged that during this learning process, local computations in the VWFA can include inhibition of mirror-inverted inputs from the other hemisphere.

Figure 1.

Brain pathways for mirror discrimination learning during literacy acquisition. Upper: The Visual Word Form Area [VWFA] (in red) presents mirror invariance before alphabetization and mirror discrimination for letters after alphabetization. Lower: During alphabetization, the VWFA can receive top-down inputs with discriminative information from phonological, gestural (handwriting) and speech production areas and bottom-up inputs from lower-level visual areas. All these inputs can help the VWFA to discriminate between mirror representations, thus correctly identifying letters to enable fluent reading.

Note that although we illustrate it by using mirror letters (b-d or p-q), our model can eventually be extended to non-mirror letters, such as “e” or “r” for instance, given that each letter has a specific representation in the phonological, gestural (handwriting) and phonatory systems. It cannot be excluded, however, that for these non-mirror letters, simple extensive visual exposure to their fixed orientation could, in principle, be sufficient to induce visual orientation learning. In contrast, this simple passive learning mechanism is unlikely to explain orientation learning for mirror letters, given that both mirror representations are regularly present (e.g., b and d). Thus, at least for mirror letters, the discrimination mechanism is more likely to involve cross-modal inputs, as represented in our figure. Accordingly, it is known that learning a new set of letters by handwriting produces better discrimination of their mirror images than learning by typewriting (Longcamp et al., 2006, 2008). Moreover, despite low performance on purely perceptual visual mirror discrimination tasks, illiterates are as sensitive as literates to mirror discrimination in vision-for-action tasks (Fernandes and Kolinsky, 2013). Thus, inputs from gestural representations of letters that influence perception in the VWFA could have a special weight in the process of learning mirror discrimination.

It can also be expected that the existence of mirror letters forces the visual system to discriminate them, because this is necessary to correctly read words comprising mirror letters, such as “bad” (vs. “dad”) for instance. Moreover, evidence suggests that such mirror discrimination sensitivity in literates can be partially generalized to other visual stimuli such as false fonts (Pegado et al., 2014) and geometric figures (Kolinsky et al., 2011). Thus, it is plausible that during literacy acquisition mirror letters could “drive” the learning process of letter orientation discrimination, eventually extending it to non-mirror letters. Accordingly, in writing systems that do not have mirror letters (e.g., Tamil script), literates still present difficulties in mirror discrimination even after learning to read and write (Danziger and Pederson, 1998). In addition, a larger mirror priming effect for inverted non-mirror letters (e.g., “r”) relative to mirror letters (e.g., “b”) has been reported (Perea et al., 2011), suggesting a more intensive automatic discrimination for mirror letters than for non-mirror letters.

Although it is not known how mirror discrimination of letters and words could be achieved in the complete absence of feedback from phonological, gestural or speech representations, recent empirical and computational modeling work on baboons, which can be trained to acquire orthographic representations in a purely visual manner (Grainger et al., 2012; Hannagan et al., 2014), paves the way to answering this question.

Acknowledging this multi-system interplay during literacy acquisition can have implications for educational methods. Interestingly, experiments have suggested that multisensory reinforcement can present an advantage for literacy acquisition: learning arbitrary print-sound correspondences could be facilitated by adding a haptic component (tactile recognition of letters) during the learning process (Fredembach et al., 2009; Bara and Gentaz, 2011). Large-scale studies are now needed to test whether promoting multi-system learning provides a clear advantage in real-life alphabetization.

Conflict of interest statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Bara F., Gentaz E. (2011). Haptics in teaching handwriting: the role of perceptual and visuo-motor skills. Hum. Mov. Sci. 30, 745–759 10.1016/j.humov.2010.05.015

- Biederman I., Cooper E. E. (2009). Translational and reflectional priming invariance: a retrospective. Perception 38, 809–817 10.1068/pmkbie

- Bitan T., Booth J. R., Choy J., Burman D. D., Gitelman D. R., Mesulam M.-M. (2005). Shifts of effective connectivity within a language network during rhyming and spelling. J. Neurosci. Off. J. Soc. Neurosci. 25, 5397–5403 10.1523/JNEUROSCI.0864-05.2005

- Brem S., Bach S., Kucian K., Guttorm T. K., Martin E., Lyytinen H., et al. (2010). Brain sensitivity to print emerges when children learn letter–speech sound correspondences. Proc. Natl. Acad. Sci. U.S.A. 107, 7939–7944 10.1073/pnas.0904402107

- Carreiras M., Seghier M. L., Baquero S., Estévez A., Lozano A., Devlin J. T., et al. (2009). An anatomical signature for literacy. Nature 461, 983–986 10.1038/nature08461

- Corballis M. C., Beale I. L. (1976). The Psychology of Left and Right. New York, NY: Erlbaum

- Cornell J. M. (1985). Spontaneous mirror-writing in children. Can. J. Exp. Psychol. 39, 174–179 10.1037/h0080122

- Danziger E., Pederson E. (1998). Through the looking glass: literacy, writing systems and mirror-image discrimination. Writ. Lang. Lit. 1, 153–169 10.1075/wll.1.2.02dan

- Dehaene S., Pegado F., Braga L. W., Ventura P., Nunes Filho G., Jobert A., et al. (2010). How learning to read changes the cortical networks for vision and language. Science 330, 1359–1364 10.1126/science.1194140

- Fernandes T., Kolinsky R. (2013). From hand to eye: the role of literacy, familiarity, graspability, and vision-for-action on enantiomorphy. Acta Psychol. (Amst.) 142, 51–61 10.1016/j.actpsy.2012.11.008

- Fernandes T., Vale A. P., Martins B., Morais J., Kolinsky R. (2014). The deficit of letter processing in developmental dyslexia: combining evidence from dyslexics, typical readers and illiterate adults. Dev. Sci. 17, 125–141 10.1111/desc.12102

- Fredembach B., de Boisferon A. H., Gentaz E. (2009). Learning of arbitrary association between visual and auditory novel stimuli in adults: the “bond effect” of haptic exploration. PLoS ONE 4:e4844 10.1371/journal.pone.0004844

- Frith U. (1986). A developmental framework for developmental dyslexia. Ann. Dyslexia 36, 67–81 10.1007/BF02648022

- Grainger J., Dufau S., Montant M., Ziegler J. C., Fagot J. (2012). Orthographic processing in baboons (Papio papio). Science 336, 245–248 10.1126/science.1218152

- Hannagan T., Ziegler J. C., Dufau S., Fagot J., Grainger J. (2014). Deep learning of orthographic representations in baboons. PLoS ONE 9:e84843 10.1371/journal.pone.0084843

- Kolinsky R., Verhaeghe A., Fernandes T., Mengarda E. J., Grimm-Cabral L., Morais J. (2011). Enantiomorphy through the looking glass: literacy effects on mirror-image discrimination. J. Exp. Psychol. Gen. 140, 210–238 10.1037/a0022168

- Lachmann T. (2002). Reading Disability as a Deficit in Functional Coordination and Information Integration. Neuropsychology and Cognition. Vol. 20. Springer US. 10.1007/978-1-4615-1011-6_11

- Lachmann T., Khera G., Srinivasan N., van Leeuwen C. (2012). Learning to read aligns visual analytical skills with grapheme-phoneme mapping: evidence from illiterates. Front. Evol. Neurosci. 4:8 10.3389/fnevo.2012.00008

- Lachmann T., van Leeuwen C. (2008). Differentiation of holistic processing in the time course of letter recognition. Acta Psychol. (Amst.) 129, 121–129 10.1016/j.actpsy.2008.05.003

- Longcamp M., Anton J.-L., Roth M., Velay J.-L. (2003). Visual presentation of single letters activates a premotor area involved in writing. Neuroimage 19, 1492–1500 10.1016/S1053-8119(03)00088-0

- Longcamp M., Anton J.-L., Roth M., Velay J.-L. (2005). Premotor activations in response to visually presented single letters depend on the hand used to write: a study on left-handers. Neuropsychologia 43, 1801–1809 10.1016/j.neuropsychologia.2005.01.020

- Longcamp M., Boucard C., Gilhodes J. C., Anton J. L., Roth M., Nazarian B., et al. (2008). Learning through hand-or typewriting influences visual recognition of new graphic shapes: behavioral and functional imaging evidence. J. Cogn. Neurosci. 20, 802–815 10.1162/jocn.2008.20504

- Longcamp M., Boucard C., Gilhodes J.-C., Velay J.-L. (2006). Remembering the orientation of newly learned characters depends on the associated writing knowledge: a comparison between handwriting and typing. Hum. Mov. Sci. 25, 646–656 10.1016/j.humov.2006.07.007

- Margolin D. I. (1984). The neuropsychology of writing and spelling: semantic, phonological, motor, and perceptual processes. Q. J. Exp. Psychol. A 36, 459–489 10.1080/14640748408402172

- Monzalvo K., Fluss J., Billard C., Dehaene S., Dehaene-Lambertz G. (2012). Cortical networks for vision and language in dyslexic and normal children of variable socio-economic status. Neuroimage 61, 258–274 10.1016/j.neuroimage.2012.02.035

- Morais J., Cary L., Alegria J., Bertelson P. (1979). Does awareness of speech as a sequence of phones arise spontaneously? Cognition 7, 323–331

- Morais J., Kolinsky R. (1994). Perception and awareness in phonological processing: the case of the phoneme. Cognition 50, 287–297 10.1016/0010-0277(94)90032-9

- Nakamura K., Dehaene S., Jobert A., Le Bihan D., Kouider S. (2007). Task-specific change of unconscious neural priming in the cerebral language network. Proc. Natl. Acad. Sci. U.S.A. 104, 19643–19648 10.1073/pnas.0704487104

- Nakamura K., Honda M., Hirano S., Oga T., Sawamoto N., Hanakawa T., et al. (2002). Modulation of the visual word retrieval system in writing: a functional MRI study on the Japanese orthographies. J. Cogn. Neurosci. 14, 104–115 10.1162/089892902317205366

- Nakamura K., Kuo W.-J., Pegado F., Cohen L., Tzeng O. J. L., Dehaene S. (2012). Universal brain systems for recognizing word shapes and handwriting gestures during reading. Proc. Natl. Acad. Sci. U.S.A. 109, 20762–20767 10.1073/pnas.1217749109

- Nicolson R. I., Fawcett A. J., Dean P. (2001). Developmental dyslexia: the cerebellar deficit hypothesis. Trends Neurosci. 24, 508–511 10.1016/S0166-2236(00)01896-8

- Orton S. T. (1937). Reading, Writing and Speech Problems in Children. New York, NY: W.W. Norton and Co. Ltd

- Pegado F., Nakamura K., Braga L. W., Ventura P., Filho G. N., Pallier C., et al. (2014). Literacy breaks mirror invariance for visual stimuli: a behavioral study with adult illiterates. J. Exp. Psychol. Gen. 143, 887–894 10.1037/a0033198

- Pegado F., Nakamura K., Cohen L., Dehaene S. (2011). Breaking the symmetry: mirror discrimination for single letters but not for pictures in the visual word form area. Neuroimage 55, 742–749 10.1016/j.neuroimage.2010.11.043

- Perea M., Moret-Tatay C., Panadero V. (2011). Suppression of mirror generalization for reversible letters: evidence from masked priming. J. Mem. Lang. 65, 237–246 10.1016/j.jml.2011.04.005

- Rapcsak S. Z., Beeson P. M. (2004). The role of left posterior inferior temporal cortex in spelling. Neurology 62, 2221–2229 10.1212/01.WNL.0000130169.60752.C5

- Rollenhagen J. E., Olson C. R. (2000). Mirror-image confusion in single neurons of the Macaque inferotemporal cortex. Science 287, 1506–1508 10.1126/science.287.5457.1506

- Roux F.-E., Draper L., Köpke B., Démonet J.-F. (2010). Who actually read Exner? Returning to the source of the frontal “writing centre” hypothesis. Cortex 46, 1204–1210 10.1016/j.cortex.2010.03.001

- Roux F.-E., Dufor O., Giussani C., Wamain Y., Draper L., Longcamp M., et al. (2009). The graphemic/motor frontal area Exner's area revisited. Ann. Neurol. 66, 537–545 10.1002/ana.21804

- Schott G. D. (2007). Mirror writing: neurological reflections on an unusual phenomenon. J. Neurol. Neurosurg. Psychiatry 78, 5–13 10.1136/jnnp.2006.094870

- Szwed M., Qiao E., Jobert A., Dehaene S., Cohen L. (2014). Effects of literacy in early visual and occipitotemporal areas of Chinese and French readers. J. Cogn. Neurosci. 26, 459–475 10.1162/jocn_a_00499

- Van Atteveldt N., Formisano E., Goebel R., Blomert L. (2004). Integration of letters and speech sounds in the human brain. Neuron 43, 271–282 10.1016/j.neuron.2004.06.025

- Vuilleumier P., Schwartz S., Duhoux S., Dolan R. J., Driver J. (2005). Selective attention modulates neural substrates of repetition priming and “Implicit” visual memory: suppressions and enhancements revealed by fMRI. J. Cogn. Neurosci. 17, 1245–1260 10.1162/0898929055002409