Abstract

We report an algorithm for fast wavefront sensing that, for the first time in practice, incorporates sparse representation. The partial derivatives of optical wavefronts were sampled sparsely with a Shack-Hartmann wavefront sensor (SHWFS) by randomly subsampling the original SHWFS data to as little as 5%. Reconstruction was performed by a sparse representation algorithm that uses the Zernike basis. We name this method SPARZER. Experiments on real and simulated data attest to the accuracy of the proposed technique compared with traditional sampling and reconstruction methods. We have made the corresponding data set and software freely available online. Compressed wavefront sensing offers the potential to increase the speed of wavefront acquisition and to reduce the cost of SHWFS devices.

Random variations in the wavefront of optical signals result from propagation through turbid media such as the atmosphere or the eye. If not corrected, such wavefront aberrations limit the maximum range of optical communication systems [1] or the quality of retinal images [2]. If the point spread function of the optical system is known, then deformable mirrors [3, 4] or inverse deblurring [5] techniques can be applied to correct the wavefront. Shack-Hartmann wavefront sensors (SHWFSs) are a popular tool for deriving the point spread function of an optical system [5, 6], but the speed of an SHWFS is limited by both the noise in the system and the camera hardware [7–9].

Sparse representation is a promising method [10, 11] for reconstructing undersampled signals by representing them as a linear combination of a small number of basis elements. Our target sparse representation algorithms draw upon the framework of compressive sensing [12, 13], but unlike compressive sensing [14], our point-wise samples do not each carry information about the complete image [15, 16]. Moreover, unlike in active learning [17], the location of each point-wise sample is selected randomly and independently of previous measurements. Previous research in compressed sampling has led to developments in imaging applications such as holography [18, 19] and single-pixel cameras [20, 21]. The wavefront sensing described in this letter is a sister field of holographic imaging, and aberrometry measurements are of particular interest for ophthalmic [3, 22, 23] and atmospheric imaging [5, 24, 25] applications.

The marriage of sparse reconstruction algorithms with wavefront sensing has the capacity to improve the speed of SHWFSs by changing only the way that data are acquired and processed. The work of [26] first suggested the use of sparse representation for compressed wavefront measurement. However, all validations in [26] were based on pure simulation, which, as we show, may have resulted in a suboptimal design compared with our proposed method. Cross-derivative constrained [27] sparse representation (cdcSR) modifies Shack-Hartmann devices by reducing the number of lenslets required to reconstruct an optical wavefront [26]. Using fewer lenslets decreases the complexity of the apparatus and allows fewer pixels on the SHWFS camera to be read and recorded. Reading fewer pixels increases the frame rate at which the sensor can operate [9] without altering the physical hardware of current SHWFS devices.

In a traditional SHWFS system, an optical wavefront is measured and stored as two high lenslet density (HLD) phase slopes, fx and fy, which represent the partial derivatives in the x and y directions, respectively. These HLD slopes are used to reconstruct the phase map and the point spread function of the optical system. Our goal is to accurately reconstruct the phase map from low-density, point-wise measurements of the phase slopes (bx and by).

In this work, we follow the cdcSR framework to obtain an HLD representation of the phase slopes from limited samples. However, unlike [26], which uses a wavelet basis, we represent the phase slopes in the orthonormal Zernike basis [28]:

$$
f_x = Z\,c_x, \qquad f_y = Z\,c_y, \tag{1}
$$

where Z is a matrix that maps the Zernike-domain coefficients of the phase slopes (cx and cy) to the HLD partial-derivative space. The rationale behind our approach, which we name sparse Zernike representation (SPARZER), is that for many practical applications, including ophthalmic imaging, most of the Zernike coefficients of the target wavefront are near zero [29].
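To make eq. (1) concrete, the following Python/NumPy sketch builds a matrix Z by sampling orthonormal Zernike polynomials at the lenslet centres of an [n, m] array, then forms an HLD x-slope map from a coefficient vector. The OSA/ANSI term ordering, the inscribed circular pupil, the helper names (`osa_to_nm`, `zernike`, `zernike_matrix`), and the example coefficient values are all assumptions for illustration, not details of the released SPARZER code.

```python
import math

import numpy as np


def osa_to_nm(j):
    """Map an OSA/ANSI single index j (0-based, assumed ordering) to (n, m)."""
    n = math.ceil((-3 + math.sqrt(9 + 8 * j)) / 2)
    m = 2 * j - n * (n + 2)
    return n, m


def zernike(n, m, rho, theta):
    """Orthonormal Zernike polynomial Z_n^m evaluated on the unit disk."""
    mu = abs(m)
    radial = np.zeros_like(rho)
    for k in range((n - mu) // 2 + 1):
        coef = ((-1) ** k * math.factorial(n - k)
                / (math.factorial(k)
                   * math.factorial((n + mu) // 2 - k)
                   * math.factorial((n - mu) // 2 - k)))
        radial += coef * rho ** (n - 2 * k)
    norm = math.sqrt(n + 1) if m == 0 else math.sqrt(2 * (n + 1))
    if m > 0:
        return norm * radial * np.cos(mu * theta)
    if m < 0:
        return norm * radial * np.sin(mu * theta)
    return norm * radial


def zernike_matrix(rows, cols, num_terms):
    """Return Z (pupil points x terms) and the boolean pupil mask."""
    yy, xx = np.mgrid[0:rows, 0:cols].astype(float)
    radius = (min(rows, cols) - 1) / 2  # assumption: inscribed circular pupil
    x = (xx - (cols - 1) / 2) / radius
    y = (yy - (rows - 1) / 2) / radius
    rho, theta = np.hypot(x, y), np.arctan2(y, x)
    mask = rho <= 1.0
    Z = np.column_stack([zernike(*osa_to_nm(j), rho[mask], theta[mask])
                         for j in range(num_terms)])
    return Z, mask


# Hypothetical example: an x-slope map built from two nonzero Zernike terms.
Z, mask = zernike_matrix(32, 40, 37)
c_x = np.zeros(37)
c_x[[7, 12]] = [0.5, -0.2]   # hypothetical sparse coefficients
f_x = np.zeros(mask.shape)   # HLD slope map, zero outside the pupil
f_x[mask] = Z @ c_x          # eq. (1): f_x = Z c_x
```

Because only a handful of entries of `c_x` are nonzero, the full slope map is described by very few numbers, which is the sparsity SPARZER relies on.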

The SPARZER framework is formulated as

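$$
\hat{c} = \arg\min_{c} \left\{ \left\lVert \psi Z c - b \right\rVert_2^2 + \lambda \left\lVert c \right\rVert_1 \right\}, \tag{2}
$$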

where ψ is a random sparse sampling operator, λ is a regularization coefficient, c = [cx, cy]^T, and b = [bx, by]^T. In this equation, the first term ties the solution to the measurements and the second term enforces sparsity. The SPARZER formulation is simpler and less computationally complex than cdcSR because the Zernike basis inherently imposes the condition that the phase map be continuously differentiable in the x and y directions. Thus, SPARZER does not require the additional cross-derivative term used in cdcSR [26].
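The cost in eq. (2) can be sketched by treating ψ as a 0/1 matrix that keeps a random subset of lenslet positions. In this minimal sketch we assume that the same lenslets supply both bx and by, so ψ and Z are each applied block-diagonally to c = [cx, cy]^T. The function names and the random stand-in for Z are hypothetical.

```python
import numpy as np


def sampling_operator(num_points, ratio, rng):
    """psi as a 0/1 row-selection matrix keeping round(ratio * num_points) lenslets."""
    k = int(round(ratio * num_points))
    idx = np.sort(rng.choice(num_points, size=k, replace=False))
    psi = np.zeros((k, num_points))
    psi[np.arange(k), idx] = 1.0
    return psi, idx


def sparzer_objective(c, psi, Z, b, lam):
    """Cost of eq. (2): ||psi Z c - b||_2^2 + lam * ||c||_1, with c = [c_x, c_y]."""
    eye2 = np.eye(2)
    Z2 = np.kron(eye2, Z)      # block-diagonal: same Zernike basis for x and y
    psi2 = np.kron(eye2, psi)  # assumption: each kept lenslet yields both slopes
    residual = psi2 @ Z2 @ c - b
    return float(residual @ residual + lam * np.sum(np.abs(c)))


# Hypothetical usage with a random stand-in for the Zernike matrix.
rng = np.random.default_rng(0)
Z = rng.standard_normal((200, 10))
psi, idx = sampling_operator(200, 0.10, rng)
c = np.zeros(20)
c[[2, 13]] = [1.0, -0.5]
# Noise-free samples b = [b_x, b_y] taken at the kept lenslets.
b = np.concatenate([(Z @ c[:10])[idx], (Z @ c[10:])[idx]])
cost = sparzer_objective(c, psi, Z, b, lam=0.1)
```

With noise-free samples the data term vanishes, so the cost reduces to λ‖c‖₁ (here 0.1 × 1.5).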

Following [26], we solve the above minimization problem using the fast iterative shrinkage-thresholding algorithm [30]. Using this algorithm, most of our solutions converged in fewer than 30 iterations. Once cx and cy are obtained, we use (1) to reconstruct the HLD phase slopes.
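A minimal FISTA solver for the l1-regularized least-squares problem in eq. (2) might look like the sketch below. It is written against a generic system matrix `A` (standing in for ψZ) and measurement vector `b`, and it follows the standard iteration of [30] with a constant step 1/L. The 30-iteration default mirrors the iteration count mentioned in the text. None of the names come from the released SPARZER code.

```python
import numpy as np


def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1 (element-wise shrinkage)."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def fista(A, b, lam, num_iters=30):
    """Minimise ||A c - b||_2^2 + lam * ||c||_1 with FISTA (Beck & Teboulle)."""
    L = 2.0 * np.linalg.norm(A, 2) ** 2  # Lipschitz constant of the data-term gradient
    c = np.zeros(A.shape[1])
    y, t = c.copy(), 1.0
    for _ in range(num_iters):
        grad = 2.0 * A.T @ (A @ y - b)                  # gradient at the extrapolated point
        c_next = soft_threshold(y - grad / L, lam / L)  # proximal gradient step
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0
        y = c_next + ((t - 1.0) / t_next) * (c_next - c)  # momentum extrapolation
        c, t = c_next, t_next
    return c


# Hypothetical usage: recover a 3-sparse vector from noise-free measurements.
rng = np.random.default_rng(1)
A = rng.standard_normal((60, 20))
c_true = np.zeros(20)
c_true[[3, 11, 17]] = [1.0, -0.7, 0.4]
c_hat = fista(A, A @ c_true, lam=1e-3, num_iters=500)
```

In the SPARZER setting, `A` would be the sampled, block-diagonal Zernike matrix. The recovered vector then splits into cx and cy for use in eq. (1).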

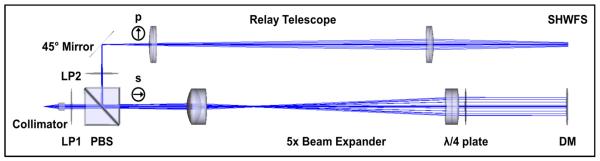

The optical setup used to measure compressed wavefront slopes is depicted in Fig. 1. A fiber-coupled 635 nm diode laser was collimated to a beam diameter of ~3 mm. The paraxial light was relayed through a polarizing beam splitter, 5x beam expander, and quarter-wave plate to the surface of a deformable mirror (Imagine Eyes, France). The deformable mirror both introduced aberrations and reflected the light back through the quarter-wave plate and 5x beam expander. The light then reflected from the polarizing beam splitter, which rejected spurious reflections from the optical elements. Finally, the light was relayed by a second telescope to the plane of a [32, 40] lenslet array of an SHWFS (Imagine Eyes, France). All wavefront data reconstructed by the various algorithms were acquired with this setup.

Fig. 1.

Schematic of optical setup used to acquire wavefront data. Red laser light is collimated and passed through linear polarizers (LP), a polarizing beam-splitter (PBS), a 5x beam expander, a quarter-wave plate, a deformable mirror (DM), and a relay telescope before being measured by a Shack-Hartmann wavefront sensor (SHWFS). The symbols represent the polarization of the light while traveling from left to right in the system.

The goal of our first experiment, which examined the effect of adding simulated noise to raw wavefront data, was to visually demonstrate the ability of our algorithm to recover very sparsely sampled phase slopes. As depicted in Fig. 2, a wavefront modeled after the high-order aberrations (HOAs) of the human eye [29] was generated by the deformable mirror. By definition, HOAs do not include the first four Zernike terms: piston, tip, tilt, and defocus. We created a denoised phase map by averaging the Zernike coefficients of ten independent SHWFS measurements (Fig. 2a) recorded with the optical setup of Fig. 1. To simulate phase slopes acquired with different signal-to-noise ratios, we added white Gaussian noise with variance ranging from 1% to 10% of the maximum slope value (Fig. 2b). The noisy HLD images were then randomly downsampled at a given compression ratio, creating a sparsely sampled compressed wavefront slope (Fig. 2c). For an [n, m] array of lenslets and a compression ratio of 0.05, n×m/20 lenslet measurements were drawn at random from the HLD data. Note that this definition of compression ratio differs from that of [26], which subsampled only the rows. We then reconstructed the downsampled data back to the full [n, m] size using linear interpolation (Fig. 2d) and SPARZER (Fig. 2e). Fig. 2 illustrates the qualitative accuracy of the SPARZER method.
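The noise and sampling steps of this experiment can be sketched as follows. We read "variance between 1–10% of the maximum slope value" literally as variance = fraction × max|slope|, which is an interpretation. The helper names and the synthetic slope map are hypothetical. For a [32, 40] lenslet array, a compression ratio of 0.05 keeps 32 × 40 / 20 = 64 lenslets.

```python
import numpy as np


def add_slope_noise(slopes, fraction, rng):
    """Add white Gaussian noise whose variance is `fraction` of max |slope| (assumed reading)."""
    variance = fraction * np.max(np.abs(slopes))
    return slopes + rng.normal(0.0, np.sqrt(variance), size=slopes.shape)


def random_subsample(shape, ratio, rng):
    """Boolean mask keeping round(ratio * n * m) lenslets chosen uniformly at random."""
    n, m = shape
    k = int(round(ratio * n * m))
    keep = rng.choice(n * m, size=k, replace=False)
    mask = np.zeros(n * m, dtype=bool)
    mask[keep] = True
    return mask.reshape(n, m)


# Hypothetical usage on a synthetic HLD x-slope map.
rng = np.random.default_rng(0)
f_x = np.linspace(-1.0, 1.0, 32 * 40).reshape(32, 40)
noisy = add_slope_noise(f_x, 0.05, rng)           # 5% noise level
sample_mask = random_subsample(f_x.shape, 0.05, rng)  # compression ratio 0.05
b_x = noisy[sample_mask]                          # sparse samples of the x slope
```

Sampling whole lenslets across the full array, rather than only rows as in [26], is what the mask construction reflects.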

Fig. 2.

Flow diagram of the experiments, showing the denoised HLD (a), simulated noisy HLD (b), sparsely sampled compressed (c), linearly interpolated (d), and SPARZER-reconstructed (e) wavefront slopes in the x-direction with simulated noise. The mean squared error of the wavefront slope is 0.1451 for (d) and 0.0254 for (e).

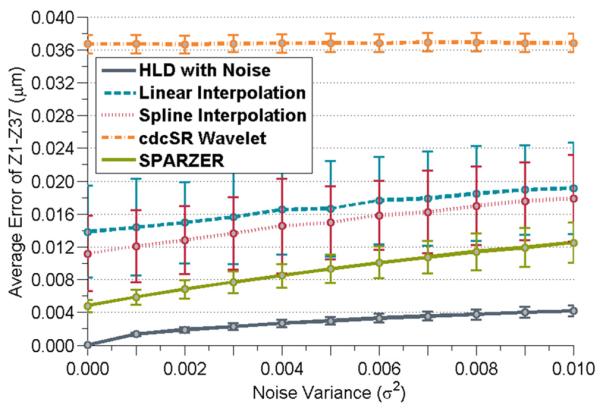

For quantitative comparison, the above process was repeated 1,000 times for each noise level using a fixed compression ratio of 0.10. The reconstructed full-scale data were subsequently projected into Zernike space, and the errors relative to the denoised phase map were averaged over the first 37 Zernike terms. The median of this error over the 1,000 repetitions was used to prevent major outliers from biasing the results. For comparison, the downsampled data were also reconstructed using the wavelet-based algorithm of [26] (named cdcSR Wavelet) and spline interpolation (Fig. 3).
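The error metric can be sketched as a least-squares projection of a reconstructed slope vector onto a Zernike basis, followed by averaging the coefficient errors over the terms and taking the median over repetitions. The text does not state whether the per-term error is an absolute or squared difference, so the mean absolute difference below is an assumption, as is the random stand-in basis.

```python
import numpy as np


def zernike_error(recon_slopes, ref_coeffs, Z):
    """Mean absolute Zernike-coefficient error of a reconstructed slope vector.

    The reconstruction is projected onto the basis Z by least squares, and the
    coefficient differences from the reference are averaged over all terms
    (mean absolute difference is an assumed choice).
    """
    coeffs, *_ = np.linalg.lstsq(Z, recon_slopes, rcond=None)
    return float(np.mean(np.abs(coeffs - ref_coeffs)))


def summarize(errors):
    """Median over repetitions, which a few outlying trials cannot drag upward."""
    return float(np.median(errors))


# Hypothetical usage: 100 noisy "reconstructions" of a known 37-term wavefront.
rng = np.random.default_rng(2)
Z = rng.standard_normal((500, 37))  # stand-in for a 37-term Zernike matrix
c_ref = rng.standard_normal(37)
errors = [zernike_error(Z @ c_ref + 0.01 * rng.standard_normal(500), c_ref, Z)
          for _ in range(100)]
median_err = summarize(errors)
```

The median of [1, 2, 100] is 2, whereas the mean is about 34, which illustrates why the median resists outlying realizations.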

Fig. 3.

Reconstruction accuracy of the sparsely sampled HOAs of a typical human eye for various amounts of simulated noise at a compression ratio of 0.10. Error bars correspond to the 1,000 realizations of additive noise (10 for cdcSR Wavelet).

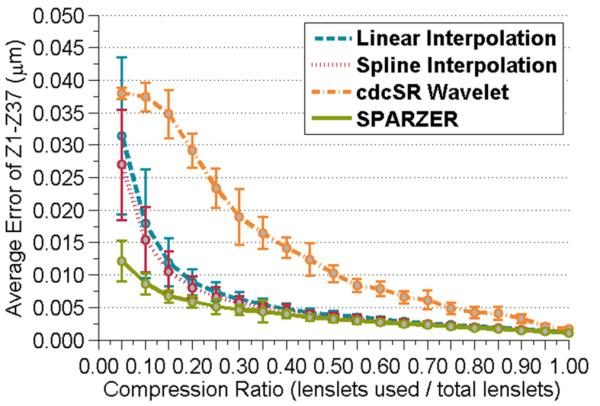

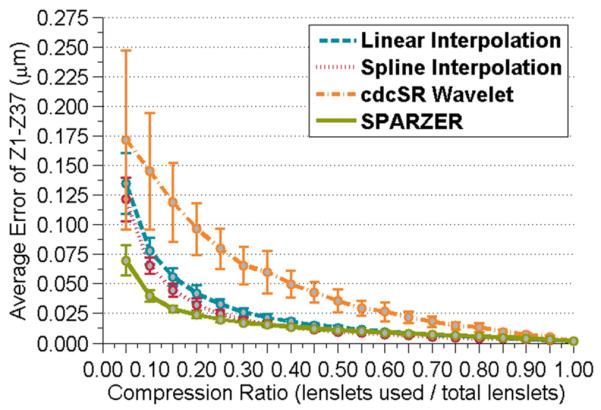

In the next experiment, in which no simulated noise was added to the measured wavefronts, raw phase slopes modeled after ocular HOAs were acquired with the optical setup of Fig. 1 for a variety of compression ratios. At each compression ratio, 100 random sampling patterns were used to generate different subsampled realizations of the eye's HOAs. The average error in the first 37 Zernike coefficients of the data reconstructed by each method is plotted in Fig. 4. Only 10 realizations of the wavelet-based algorithm were performed because of its slower convergence.

Fig. 4.

Error in reconstructing the HOAs of a typical human eye sparsely sampled with various compression ratios. Error bars correspond to the 100 different sampling patterns at each compression ratio (10 for cdcSR Wavelet).

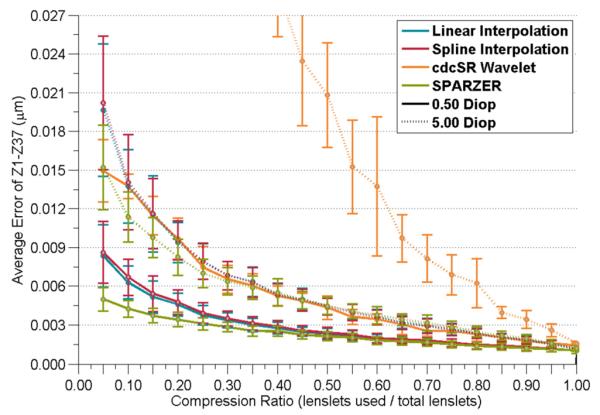

Our third experiment mirrored the previous one but used a wavefront composed primarily of defocus aberration, which in real eyes tends to exceed the HOAs by an order of magnitude [29, 31]. Because the phase slopes of defocus aberration have linear profiles, this experiment can be considered the worst-case scenario for SPARZER relative to linear interpolation. We sparsely sampled the unmodified wavefront data at various compression ratios for defocus powers ranging from 0.50 to 5.00 diopters in 0.50-diopter increments. The mean Zernike term errors for the minimum (0.50 diopters) and maximum (5.00 diopters) defocus scenarios are plotted in Fig. 5.
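As a quick check on why defocus slopes are linear, consider the defocus term in the orthonormal Zernike convention, with normalized pupil coordinates (x, y) and ρ² = x² + y². Here c_4 is a hypothetical name for the defocus coefficient (index 4 in OSA/ANSI ordering). The normalization constant √3 is part of that assumed convention, and a different scaling would change only the constant:

$$
W_{\mathrm{defocus}}(x, y) = c_4 \sqrt{3}\,\left(2\rho^2 - 1\right) = c_4 \sqrt{3}\,\left(2x^2 + 2y^2 - 1\right)
\quad\Longrightarrow\quad
\frac{\partial W}{\partial x} = 4\sqrt{3}\,c_4\,x, \qquad \frac{\partial W}{\partial y} = 4\sqrt{3}\,c_4\,y .
$$

Both slopes are planes in (x, y), and piecewise-linear interpolation over a triangulation of the sample positions reproduces a plane exactly inside the convex hull of those positions. Noise-free defocus slopes are therefore the case most favorable to linear interpolation.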

Fig. 5.

Error in reconstructing wavefronts dominated by defocus aberration for various compression ratios (considered the worst-case scenario for our SPARZER method). Results for half a diopter (solid lines) and five diopters (dotted lines) of defocus are presented. Error bars correspond to the 100 different sampling patterns at each compression ratio (10 for cdcSR Wavelet).

In the final experiment, we extended SPARZER beyond ophthalmic applications and assessed its performance in reconstructing random HOAs. The HOA coefficient values were determined by a random number generator and input to the deformable mirror. Because of the limitations of the mirror, Zernike terms beyond the first 20 could not be set explicitly. Five such random wavefronts were recorded, and the average Zernike term error as a function of compression ratio was determined (Fig. 6).

Fig. 6.

Error in reconstructing randomly generated high-order wavefronts sparsely sampled with various compression ratios. Error bars correspond to the five random HOA patterns at each compression ratio.

Fig. 3 shows that SPARZER performed better than the other techniques at all tested noise levels. Fig. 4 shows that SPARZER accurately reconstructed the HOA wavefront of a typical human eye at a variety of compression ratios. The wavefront in this experiment was defocus-compensated, as is common in most adaptive optics and ophthalmic imaging systems.

Most practical ophthalmic imaging instruments include external defocus compensation, but for the sake of completeness, Fig. 5 considers optical systems that may be dominated by defocus aberration. Defocus contributes a linear term to the x and y phase slopes, so we expect linear interpolation to reconstruct such wavefronts well. Fig. 5 shows that SPARZER rivals linear interpolation at all compression ratios for phase slopes composed mainly of linear Zernike terms. Additionally, SPARZER performed well throughout the defocus range tested in our experiments (additional defocus wavefronts not shown). We believe that SPARZER is able to outperform linear interpolation in this scenario because of its denoising capabilities.

Fig. 6 evaluates SPARZER's performance when reconstructing wavefronts whose first 20 Zernike terms were randomly generated and applied by the deformable mirror. Because we cannot emulate all realistic optical wavefronts, we considered randomly generated wavefronts the fairest way to test the algorithm's ability to reconstruct wavefronts with minimal a priori knowledge. We tested five random wavefronts to ensure that we did not select an outlying case that happened to be particularly well suited to our algorithm. The data in Fig. 6 are compiled from the average errors of the five random wavefronts. Even with very little prior knowledge about the wavefront, SPARZER accurately reconstructs the HLD phase slopes.

To our knowledge, this is the first successful practical demonstration of compressed wavefront sensing. A variety of wavefront shapes common to ophthalmic applications were investigated (Figs. 2–5), along with randomly generated HOA wavefronts (Fig. 6), which were studied to broaden the scope of this work. For all of the wavefront types examined, SPARZER outperformed the other methods, by an increasing margin at higher degrees of compression. These results suggest that SPARZER is the preferred reconstruction method, especially when a minimum number of lenslets and a maximum increase in wavefront detection speed are desired. For a fair comparison, we did our best to optimize the parameters of the wavelet-based cdcSR method [26]. However, because the code for the cdcSR method is not publicly available, our implementation may differ in some details from what the authors originally intended. To enable other researchers to use our method in their future research, we have made our code and dataset available online at http://people.duke.edu/~sf59/Polans_OL_2014.htm. We note that other sparsity-based techniques, such as those applying dictionary learning [32], might improve the reconstruction accuracy, but at the cost of greater computational complexity. The compressed wavefront sampling presented in this work offers a potential solution for applications where high-speed, cost-effective wavefront sensing is desirable.

Acknowledgments

This work was supported by the NSF GRFP through a graduate research fellowship to James Polans. The authors acknowledge financial support from the NIH (EY023039) and North Carolina Biotechnology Center (IDG 2012-1015). We thank Mohammad Rostami of the University of Waterloo for his helpful comments.

References

- 1. Mackey R, Dainty C. Adaptive optics compensation over a 3 km near horizontal path. 2008. 71080I.

- 2. Hofer H, Artal P, Singer B, Aragon JL, Williams DR. Dynamics of the eye's wave aberration. J Opt Soc Am A. 2001;18:497–506. doi: 10.1364/josaa.18.000497.

- 3. Liang JZ, Williams DR, Miller DT. Supernormal vision and high-resolution retinal imaging through adaptive optics. J Opt Soc Am A. 1997;14:2884–2892. doi: 10.1364/josaa.14.002884.

- 4. Dubra A, Sulai Y, Norris JL, Cooper RF, Dubis AM, Williams DR, Carroll J. Noninvasive imaging of the human rod photoreceptor mosaic using a confocal adaptive optics scanning ophthalmoscope. Biomed Opt Express. 2011;2:1864–1876. doi: 10.1364/BOE.2.001864.

- 5. Primot J, Rousset G, Fontanella JC. Deconvolution from Wave-Front Sensing - a New Technique for Compensating Turbulence-Degraded Images. J Opt Soc Am A. 1990;7:1598–1608.

- 6. Liang JZ, Grimm B, Goelz S, Bille JF. Objective Measurement of Wave Aberrations of the Human Eye with the Use of a Hartmann-Shack Wave-Front Sensor. J Opt Soc Am A. 1994;11:1949–1957. doi: 10.1364/josaa.11.001949.

- 7. Nirmaier T, Pudasaini G, Bille J. Very fast wave-front measurements at the human eye with a custom CMOS-based Hartmann-Shack sensor. Opt Express. 2003;11:2704–2716. doi: 10.1364/oe.11.002704.

- 8. Monteiro DWD, Vdovin G, Sarro PM. High-speed wavefront sensor compatible with standard CMOS technology. Sensor Actuat A-Phys. 2004;109:220–230.

- 9. Pathak B, Das A, Boruah BR. High-speed zonal wavefront sensing. Optical Design and Testing V. 2012:8557.

- 10. Elad M, Aharon M. Image denoising via sparse and redundant representations over learned dictionaries. IEEE T Image Process. 2006;15:3736–3745. doi: 10.1109/tip.2006.881969.

- 11. Fang LY, Li ST, Nie Q, Izatt JA, Toth CA, Farsiu S. Sparsity based denoising of spectral domain optical coherence tomography images. Biomed Opt Express. 2012;3:927–942. doi: 10.1364/BOE.3.000927.

- 12. Donoho DL. Compressed sensing. IEEE T Inform Theory. 2006;52:1289–1306.

- 13. Candes EJ, Romberg J, Tao T. Robust uncertainty principles: Exact signal reconstruction from highly incomplete frequency information. IEEE T Inform Theory. 2006;52:489–509.

- 14. Xu D, Huang Y, Kang JU. Compressive sensing with dispersion compensation on non-linear wavenumber sampled spectral domain optical coherence tomography. Biomed Opt Express. 2013;4:1519–1532. doi: 10.1364/BOE.4.001519.

- 15. Baron D, Sarvotham S, Baraniuk RG. Bayesian Compressive Sensing Via Belief Propagation. IEEE T Signal Proces. 2010;58:269–280.

- 16. Candes EJ, Tao T. Near-optimal signal recovery from random projections: Universal encoding strategies? IEEE T Inform Theory. 2006;52:5406–5425.

- 17. Farsiu S, Christofferson J, Eriksson B, Milanfar P, Friedlander B, Shakouri A, Nowak R. Statistical detection and imaging of objects hidden in turbid media using ballistic photons. Appl Opt. 2007;46:5805–5822. doi: 10.1364/ao.46.005805.

- 18. Brady DJ, Choi K, Marks DL, Horisaki R, Lim S. Compressive Holography. Opt Express. 2009;17:13040–13049. doi: 10.1364/oe.17.013040.

- 19. Rivenson Y, Stern A, Javidi B. Overview of compressive sensing techniques applied in holography. Appl Opt. 2013;52:A423–A432. doi: 10.1364/AO.52.00A423.

- 20. Duarte MF, Davenport MA, Takhar D, Laska JN, Sun T, Kelly KF, Baraniuk RG. Single-pixel imaging via compressive sampling. IEEE Signal Proc Mag. 2008;25:83–91.

- 21. Clemente P, Duran V, Tajahuerce E, Andres P, Climent V, Lancis J. Compressive holography with a single-pixel detector. Opt Lett. 2013;38:2524–2527. doi: 10.1364/OL.38.002524.

- 22. Hermann B, Fernandez EJ, Unterhuber A, Sattmann H, Fercher AF, Drexler W, Prieto PM, Artal P. Adaptive-optics ultrahigh-resolution optical coherence tomography. Opt Lett. 2004;29:2142–2144. doi: 10.1364/ol.29.002142.

- 23. Zawadzki RJ, Jones SM, Olivier SS, Zhao MT, Bower BA, Izatt JA, Choi S, Laut S, Werner JS. Adaptive-optics optical coherence tomography for high-resolution and high-speed 3D retinal in vivo imaging. Opt Express. 2005;13:8532–8546. doi: 10.1364/opex.13.008532.

- 24. Beckers JM. Adaptive Optics for Astronomy - Principles, Performance, and Applications. Annu Rev Astron Astr. 1993;31:13–62.

- 25. Guyon O. Limits of adaptive optics for high-contrast imaging. Astrophys J. 2005;629:592–614.

- 26. Rostami M, Michailovich O, Wang Z. Image Deblurring Using Derivative Compressed Sensing for Optical Imaging Application. IEEE T Image Process. 2012;21:3139–3149. doi: 10.1109/TIP.2012.2190610.

- 27. Hosseini MS, Michailovich O. Derivative Compressive Sampling with Application to Phase Unwrapping. 17th European Signal Processing Conference; 2009. pp. 115–119.

- 28. Porter J, Queener H, Lin J, Thorn K, Awwal AA. Adaptive optics for vision science: Principles, practices, design and applications. Vol. 171. John Wiley & Sons; 2006.

- 29. Porter J, Guirao A, Cox IG, Williams DR. Monochromatic aberrations of the human eye in a large population. J Opt Soc Am A. 2001;18:1793–1803. doi: 10.1364/josaa.18.001793.

- 30. Beck A, Teboulle M. A Fast Iterative Shrinkage-Thresholding Algorithm for Linear Inverse Problems. SIAM J Imaging Sci. 2009;2:183–202.

- 31. Jaeken B, Artal P. Optical Quality of Emmetropic and Myopic Eyes in the Periphery Measured with High-Angular Resolution. Invest Ophth Vis Sci. 2012;53:3405–3413. doi: 10.1167/iovs.11-8993.

- 32. Fang LY, Li ST, McNabb RP, Nie Q, Kuo AN, Toth CA, Izatt JA, Farsiu S. Fast Acquisition and Reconstruction of Optical Coherence Tomography Images via Sparse Representation. IEEE T Med Imaging. 2013;32:2034–2049. doi: 10.1109/TMI.2013.2271904.