Abstract

PURPOSE

The Canadian Primary Care Sentinel Surveillance Network (CPCSSN) is Canada’s first national chronic disease surveillance system based on electronic health record (EHR) data. The purpose of this study was to develop and validate case definitions and case-finding algorithms used to identify 8 common chronic conditions in primary care: chronic obstructive pulmonary disease (COPD), dementia, depression, diabetes, hypertension, osteoarthritis, parkinsonism, and epilepsy.

METHODS

Using a cross-sectional data validation study design, regional and local CPCSSN networks from British Columbia, Alberta (2), Ontario, Nova Scotia, and Newfoundland participated in validating EHR case-finding algorithms. A random sample of EHR charts was reviewed, with oversampling of patients older than 60 years and of those with epilepsy or parkinsonism. Charts were reviewed by trained research assistants and residents who were blinded to the algorithmic diagnosis. Sensitivity, specificity, and positive and negative predictive values (PPVs, NPVs) were calculated.

RESULTS

We obtained data from 1,920 charts from 4 different EHR systems (Wolf, Med Access, Nightingale, and PS Suite). For the total sample, sensitivity ranged from 78% (osteoarthritis) to more than 95% (diabetes, dementia, epilepsy, and parkinsonism); specificity was greater than 93% for all diseases; PPV ranged from 72% (COPD) to 93% (hypertension); and NPV ranged from 86% (hypertension) to greater than 99% (diabetes, dementia, epilepsy, and parkinsonism).

CONCLUSIONS

The CPCSSN diagnostic algorithms showed excellent sensitivity and specificity for hypertension, diabetes, epilepsy, and parkinsonism and acceptable values for the other conditions. CPCSSN data are appropriate for use in public health surveillance, primary care, and health services research, as well as to inform policy for these diseases.

Keywords: primary health care, chronic disease, validation studies, electronic health records, sensitivity and specificity

INTRODUCTION

The continuing worldwide use of electronic health records (EHRs) in primary care practices provides a potential source of clinical data. Through disease surveillance, primary care–focused health services research, practice evaluation, and quality improvement, these data can improve our understanding of the epidemiology of disease and of the effectiveness of disease prevention and management.1–4 EHRs provide clinical data that are not typically available from health administrative data sources or population surveys.5

The Canadian Primary Care Sentinel Surveillance Network (CPCSSN) has assembled Canada’s first national EHR data repository for primary care research and surveillance.1 Given the nature of EHR data, disease case definitions used in its analysis must accurately reflect diagnoses within the EHR before being used for either surveillance or research. The purpose of this study was to develop and validate EHR-based case definitions and case-finding algorithms used to identify 8 common chronic conditions found in primary care (chronic obstructive pulmonary disease [COPD], dementia, depression, diabetes, hypertension, osteoarthritis, parkinsonism, and epilepsy) against EHR-based diagnosis of these conditions by chart review.

METHODS

Study Sample

CPCSSN consists of 10 primary care research networks (PCRNs) across Canada, with 475 participating primary care sentinel clinicians (family physicians and nurse practitioners) contributing quarterly data on more than 600,000 patients. All 10 PCRNs have received approval from their host institution research ethics boards, as well as Health Canada ethics approval, to collect this information. Our study included patient data extracted on June 30, 2012, from 6 of the 10 PCRNs. The other 4 networks were excluded for a variety of reasons, including having served as the pilot test site for this study and data collection problems. Each participating network planned to review 400 patient charts. The exception was British Columbia, which had fewer participating sentinel clinicians and accordingly planned to review 200. In each network, 350 charts (175 in British Columbia) were selected randomly using an age-stratified method, with 90% of these charts drawn from patients older than 60 years. Because epilepsy and parkinsonism have low prevalence, a further 25 charts each (13 and 12, respectively, in British Columbia) were chosen nonrandomly from patients identified by the algorithm as case positive for these conditions. The planned total sample of 2,200 charts from all sites ensured a margin of error of less than 10% for all outcomes of interest except epilepsy and parkinsonism.
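
The per-network selection described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the study's sampling code. The `Patient` record and its algorithm-flag fields are hypothetical. The sketch draws the enrichment charts before the random charts so that no chart is selected twice; the study does not state the order in which the draws were made. It also assumes that the 90% share applies to the randomly selected charts only.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    patient_id: int
    age: int
    epilepsy_flag: bool        # hypothetical: algorithm flags the patient as an epilepsy case
    parkinsonism_flag: bool    # hypothetical: algorithm flags the patient as a parkinsonism case


def select_network_sample(patients, n_random=350, n_epilepsy=25, n_parkinsonism=25,
                          older_share=0.9, age_cutoff=60, seed=None):
    """Return (random_part, epilepsy_part, parkinsonism_part) for one network."""
    rng = random.Random(seed)

    # Enrichment strata: algorithm-flagged patients, drawn without replacement.
    # If fewer flagged patients exist than requested, all of them are taken
    # (the Results describe such a shortfall for parkinsonism).
    epi_pool = [p for p in patients if p.epilepsy_flag]
    epilepsy_part = rng.sample(epi_pool, min(n_epilepsy, len(epi_pool)))
    taken = {p.patient_id for p in epilepsy_part}

    park_pool = [p for p in patients
                 if p.parkinsonism_flag and p.patient_id not in taken]
    parkinsonism_part = rng.sample(park_pool, min(n_parkinsonism, len(park_pool)))
    taken |= {p.patient_id for p in parkinsonism_part}

    # Age-stratified random part from patients not already selected:
    # older_share of these charts come from patients older than age_cutoff.
    remaining = [p for p in patients if p.patient_id not in taken]
    older = [p for p in remaining if p.age > age_cutoff]
    younger = [p for p in remaining if p.age <= age_cutoff]
    n_older = round(n_random * older_share)
    n_younger = n_random - n_older
    random_part = (rng.sample(older, min(n_older, len(older)))
                   + rng.sample(younger, min(n_younger, len(younger))))
    return random_part, epilepsy_part, parkinsonism_part
```

For British Columbia, the same hypothetical function would be called as `select_network_sample(patients, n_random=175, n_epilepsy=13, n_parkinsonism=12)`.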

Chart Review Procedures

Research assistants who were blinded to the algorithmic diagnosis of cases reviewed the charts. All reviewers were trained using a standard manual. A standardized electronic data abstraction tool was developed to extract anonymized information from patients’ charts and to record the reviewers’ assessments consistently. The manual, training procedures, and abstraction tool were based on those developed for a previous study on data validation in primary care practices.6 A pilot study conducted at one PCRN verified the feasibility of the method, refined the data collection tool, and identified issues with case definitions or audit procedures.4 Reviewers examined the entire electronic chart for evidence of the presence or absence of each of the 8 conditions under study. When a reviewer was uncertain about a diagnosis, the study epidemiologist and a physician from the study team reviewed the chart. All chart review data were entered into an electronic database built using FileMaker Pro 11 (FileMaker, Inc).

Case Definitions

The CPCSSN case definitions were specifically developed for use in primary care contexts. The definitions use a combination of International Classification of Diseases, Ninth Revision (ICD-9) codes (used by primary care physicians for service billing purposes in Canada) and numeric and textual data (including spelling variants, etc) drawn from a number of sections within the EHR, including the problem and encounter diagnoses, billing, laboratory test results, and prescribed medications (Supplemental Appendix). The case definitions were constructed with guidance from published evidence and both general and specialist physicians, and required several revisions before validation and implementation using computerized case finding algorithms. Table 1 provides a description of the case definitions. Each is unique to the respective chronic condition and includes varying EHR data elements. The detailed case definitions are available from CPCSSN at http://www.cpcssn.ca/research-resources/case-definitions.
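
A minimal Python sketch can illustrate how coded and free-text data might be combined for one condition, hypertension, including the exclusion terms. It is not the CPCSSN algorithm, and it rests on several assumptions. It uses ICD-9 categories 401 to 405, which correspond to the hypertension inclusion categories listed in Table 1. The misspelling "hypertention" and the abbreviation "htn" are hypothetical examples of free-text variants. Exclusion phrases are taken from Table 1. The published definitions draw on more data elements and rules than this sketch does.

```python
import re

# Hypothetical term lists for illustration only. ICD-9 categories 401-405
# correspond to the hypertension inclusions in Table 1; the misspelling and
# abbreviation are made-up examples of free-text variants.
INCLUDE_CODES = {"401", "402", "403", "404", "405"}
INCLUDE_TERMS = [r"\bhypertension\b", r"\bhypertention\b", r"\bhtn\b"]
EXCLUDE_PHRASES = [
    r"\bportal hypertension\b",
    r"\bwhite[- ]coat hypertension\b",
    r"\bgestational hypertension\b",
    r"\bpregnancy[- ]induced hypertension\b",
    r"\bborderline hypertension\b",
]


def hypertension_flag(problem_text: str, billing_codes: list[str]) -> bool:
    """Return True if coded or free-text data suggest hypertension."""
    # Coded data: compare the 3-digit ICD-9 category (e.g., "401.9" -> "401").
    if any(code.strip().split(".")[0] in INCLUDE_CODES for code in billing_codes):
        return True
    # Free text: blank out excluded phrases first, so that "portal hypertension"
    # does not trigger the generic "hypertension" inclusion term.
    text = problem_text.lower()
    for phrase in EXCLUDE_PHRASES:
        text = re.sub(phrase, " ", text)
    return any(re.search(term, text) for term in INCLUDE_TERMS)
```

The key design choice is the order of operations. Most of the excluded conditions contain the word "hypertension", so the sketch removes exclusion phrases before it searches for inclusion terms. The sketch leaves out rules such as keeping gestational hypertension when there is a preexisting diagnosis.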

Table 1.

Summary of CPCSSN Case Definitions for 8 Index Conditions

| Condition | Inclusions | Exclusions |
|---|---|---|
| Diabetes | Diabetes mellitus type 1 and type 2, controlled or uncontrolled | Gestational diabetes, chemically induced (secondary) diabetes, neonatal diabetes, polycystic ovarian syndrome, hyperglycemia, prediabetes, or similar states or conditions (such as impaired fasting glucose or glucose intolerance) |
| Hypertension | Essential hypertension, hypertensive heart disease, hypertensive chronic kidney disease, hypertensive heart and kidney disease, secondary hypertensionᵃ | Myocardial infarction, congestive heart failure, angina, portal hypertension, white-coat hypertension, gestational or pregnancy-induced hypertension (unless there was a preexisting diagnosis of hypertension), borderline hypertension, or similar nonspecific comments |
| COPD | Obstructive chronic bronchitis, emphysema, chronic airway obstruction (ever)ᵇ | All types of asthma, including chronic obstructive asthma |
| Depression | Episodic mood disorders, depressive disorder not elsewhere classified, bipolar disorder, manic affective disorder, manic episodes, mild depression (not simply clinical depression)ᶜ | Anxiety disorders, alcohol or drug-induced mental disorders, schizophrenic disorders, delusional disorders, other nonorganic psychoses, pervasive developmental disorders, or other intellectual disabilities |
| Osteoarthritis | Osteoarthritis and allied disorders, spondylosis and allied disorders such as kissing spine, ankylosing vertebral hyperostosis | Intervertebral disc disorders, spinal stenosis, ankylosing spondylitis, and other inflammatory spondylopathies |
| Epilepsy | Epilepsy, recurrent seizures or general convulsions, petit mal (absence) seizures, grand mal (tonic-clonic) seizures, myoclonic epilepsy, childhood-onset epilepsy, childhood-related epilepsy | Provoked seizure, alcohol withdrawal seizure, hypoglycemic seizure, or possible or suspected variants of the inclusionsᵈ |
| Parkinsonism | Parkinson disease, paralysis agitans, parkinsonism | Tremor, Wolff-Parkinson-White syndrome, and “suspected” or “possible” variants of the inclusions |
| Dementia | Alzheimer disease, frontotemporal dementia, Pick disease, senile degeneration of the brain, corticobasal degeneration, cerebral degeneration, dementia with Lewy bodies, mild cognitive impairment, senile dementia, presenile dementia, vascular dementia, senility without mention of psychosis | |

COPD = chronic obstructive pulmonary disease; CPCSSN = Canadian Primary Care Sentinel Surveillance Network.

a Requires an explicit diagnosis of hypertension (eg, elevated blood pressure values alone are insufficient).

b Only persons aged 35 years and older are eligible for inclusion. Classification of COPD requires more than a single x-ray or spirometry finding and must include either a specific or similar diagnosis of COPD.

c This definition identifies lifetime prevalence.

d Individuals with a diagnosis of petit mal, grand mal, or convulsions who are not receiving antiepileptic drugs are excluded, as are individuals receiving antiepileptic drugs who have no diagnosis in the chart.
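
The COPD and epilepsy notes beneath Table 1 can be read as simple decision rules. The Python sketch below is one hedged reading of those notes. The `ChartSummary` fields are hypothetical and assume that upstream code has already turned the raw EHR entries into booleans. It is not the published CPCSSN logic.

```python
from dataclasses import dataclass


@dataclass
class ChartSummary:
    """Hypothetical per-patient summary of EHR elements (field names are illustrative)."""
    age: int
    copd_diagnosis: bool = False       # specific or similar COPD diagnosis recorded
    epilepsy_diagnosis: bool = False   # explicit epilepsy diagnosis recorded
    seizure_term: bool = False         # petit mal, grand mal, or convulsions recorded
    antiepileptic_drug: bool = False   # antiepileptic drug prescribed
    suspected_only: bool = False       # epilepsy/seizure entries are only "possible"/"suspected"


def is_copd_case(c: ChartSummary) -> bool:
    # Age 35+ and an actual COPD diagnosis; an x-ray or spirometry finding
    # on its own is not represented here because it is never sufficient.
    return c.age >= 35 and c.copd_diagnosis


def is_epilepsy_case(c: ChartSummary) -> bool:
    if c.suspected_only:
        return False                   # possible/suspected variants are excluded
    if c.epilepsy_diagnosis:
        return True
    # Petit mal, grand mal, or convulsions count only with an antiepileptic drug;
    # a drug with no recorded diagnosis falls through to False.
    return c.seizure_term and c.antiepileptic_drug
```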

Statistical Analysis

Sensitivity, specificity, and positive and negative predictive values (PPVs and NPVs) for each case definition were calculated. The data were summarized using 2 × 2 tables comparing the CPCSSN case definition diagnosis (either a case or noncase) with the chart review diagnosis (either a case or noncase) for each condition. Generalized estimating equations quantified the effect of clustering at the physician, site, or network level. The estimated intracluster correlation was then used to more accurately estimate the appropriate 95% confidence intervals for sensitivity, specificity, PPV, and NPV. For all metrics, the lower limit of the 95% confidence interval was considered the lower limit of the plausible range and was compared with standard cutoffs. Because acceptable limits for individual metrics need to be suited to the question of interest, we considered all measures above 70% acceptable, with any falling into the 70% to 80% range meriting additional investigation. All data were analyzed using the SAS 9.3 statistical platform (SAS Institute).
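
The calculations described above can be sketched in Python. This is an illustration under stated assumptions, not the study's SAS analysis. The study estimated clustering with generalized estimating equations. The sketch instead takes an intracluster correlation (ICC) as given and widens a Wald interval by the common design-effect approximation 1 + (m - 1) x ICC, where m is the average cluster size. The 2 × 2 counts and the ICC value are hypothetical. The cluster size of 15 roughly matches the 1,920 charts reviewed across 126 clinicians.

```python
import math
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """CPCSSN algorithm result against chart-review result."""
    tp: int  # algorithm case, chart-review case
    fp: int  # algorithm case, chart-review noncase
    fn: int  # algorithm noncase, chart-review case
    tn: int  # algorithm noncase, chart-review noncase


def proportion_ci(k: int, n: int, icc: float = 0.0, mean_cluster_size: float = 1.0,
                  z: float = 1.96) -> tuple[float, float, float]:
    """Wald interval widened by the design effect 1 + (m - 1) * icc, truncated to [0, 1]."""
    p = k / n
    deff = 1.0 + (mean_cluster_size - 1.0) * icc
    half_width = z * math.sqrt(deff * p * (1.0 - p) / n)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


def validation_metrics(t: TwoByTwo, icc: float = 0.0, mean_cluster_size: float = 1.0):
    """(estimate, lower, upper) for sensitivity, specificity, PPV, and NPV."""
    cells = {
        "sensitivity": (t.tp, t.tp + t.fn),
        "specificity": (t.tn, t.tn + t.fp),
        "PPV": (t.tp, t.tp + t.fp),
        "NPV": (t.tn, t.tn + t.fn),
    }
    return {name: proportion_ci(k, n, icc, mean_cluster_size) for name, (k, n) in cells.items()}


def classify(lower_limit: float) -> str:
    """Apply the 70%/80% cutoffs to the lower 95% confidence limit."""
    if lower_limit >= 0.80:
        return "acceptable"
    if lower_limit >= 0.70:
        return "acceptable, investigate further"
    return "below 70%"


if __name__ == "__main__":
    table = TwoByTwo(tp=90, fp=10, fn=15, tn=885)  # hypothetical counts
    for name, (est, lo, hi) in validation_metrics(table, icc=0.05, mean_cluster_size=15).items():
        print(f"{name}: {est:.1%} ({lo:.1%}-{hi:.1%}) -> {classify(lo)}")
```

The interval is truncated at 0 and 1, which gives upper limits like the 100.0 values shown in Table 3. Note that a Wald interval behaves poorly near 0 or 1, and a score-based interval would be a reasonable alternative there.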

RESULTS

Overall Study Sample

In total, 1,920 patient charts were reviewed from regional PCRNs in British Columbia, Alberta (2 PCRNs), Ontario, Nova Scotia, and Newfoundland. Collectively, these PCRNs included 126 sentinel clinicians from 33 practice sites using 4 different EHR systems (Nightingale On Demand [Nightingale Informatix Corporation], PS Suite EMR [TELUS Health], Wolf EHR [TELUS Health], and Med Access [TELUS Health]). Table 2 describes the patients selected for the study. The sample was age stratified, with oversampling of older age-groups, because we expected higher prevalence rates of the 8 chronic conditions in older patients.

Table 2.

Summary of Patient Characteristics (n=1,920)

| Patient Characteristics | Percent |
|---|---|
| Male | 44.5 |
| Age ≥60 y | 85.4 |
| Disease prevalence | |
| Diabetes | 16.8 |
| Hypertension | 50.1 |
| Depression | 20.2 |
| COPD | 7.9 |
| Osteoarthritis | 31.6 |
| Dementia | 4.9 |
| Epilepsy | 7.3 |
| Parkinsonism | 4.3 |
| Number of chronic conditions (of 8 CPCSSN conditions) | |
| 0 | 22.7 |
| 1 | 34.6 |
| 2 | 26.1 |
| 3 or more | 16.6 |

COPD = chronic obstructive pulmonary disease; CPCSSN = Canadian Primary Care Sentinel Surveillance Network.

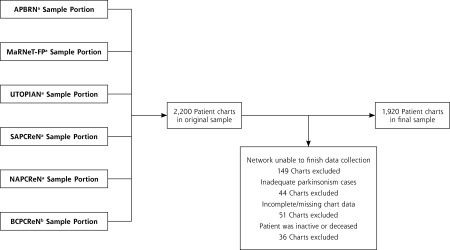

There was a shortfall of 280 charts from the planned sample of 2,200. One hundred forty-nine charts were excluded because of EHR access challenges. Forty-four charts were excluded because there were too few patients with parkinsonism to provide the additional 25 per network. Eighty-seven charts were excluded because the EHR record was incomplete (n = 51), the patient had left the practice (n = 12), or the patient was deceased (n = 24) (Figure 1).

Figure 1.

Sample inclusion and exclusion flow chart.

APBRN = Atlantic Practice Based Research Network; BCPCReN = British Columbia Primary Care Research Network; MaRNet-FP = Maritime Family Practice Research Network; NAPCPReN = Northern Alberta Primary Care Research Network; SAPCReN = Southern Alberta Primary Care Research Network; UTOPIAN = University of Toronto Practice Based Research Network.

a 350 patients chosen at random, 25 patients case positive for epilepsy, and 25 patients case positive for parkinsonism.

b 175 patients chosen at random, 13 patients case positive for epilepsy, and 12 patients case positive for parkinsonism.
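
The chart accounting in the Results can be checked with a few lines of arithmetic. The Python sketch below uses only figures stated in the text. The planned sample is 5 networks × 400 charts plus 200 charts from British Columbia.

```python
# Figures taken from the Methods and Results text.
planned = 5 * 400 + 200  # 5 networks x 400 charts, plus British Columbia x 200
access_and_parkinsonism = {"EHR access challenges": 149, "too few parkinsonism cases": 44}
incomplete_or_unavailable = {"incomplete record": 51, "left practice": 12, "deceased": 24}

excluded = sum(access_and_parkinsonism.values()) + sum(incomplete_or_unavailable.values())
reviewed = planned - excluded
print(planned, sum(incomplete_or_unavailable.values()), excluded, reviewed)  # 2200 87 280 1920
```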

Validation

Table 3 summarizes the validation metrics of sensitivity, specificity, PPV, and NPV for each of the 8 case definitions. Overall, sensitivity ranged from 77.8% (osteoarthritis) to 98.8% (parkinsonism). Specificity was high for all 8 conditions, with the lowest being observed for hypertension (93.5%). COPD had the lowest PPV (72.1%) and hypertension the highest (92.9%). Hypertension had the lowest NPV (86.0%) with values greater than 90% for all other conditions and many above 99%.

Table 3.

Summary of Validation Results

| Condition | Sensitivity, % (95% CI) | Specificity, % (95% CI) | PPV, % (95% CI) | NPV, % (95% CI) |
|---|---|---|---|---|
| Hypertension | 84.9 (82.6–87.1) | 93.5 (92.0–95.1) | 92.9 (91.2–94.6) | 86.0 (83.9–88.2) |
| Diabetes | 95.6 (93.4–97.9) | 97.1 (96.3–97.9) | 87.0 (83.5–90.5) | 99.1 (98.6–99.6) |
| Depression | 81.1 (77.2–85.0) | 94.8 (93.7–95.9) | 79.6 (75.7–83.6) | 95.2 (94.1–96.3) |
| COPD | 82.1 (76.0–88.2) | 97.3 (96.5–98.0) | 72.1 (65.4–78.8) | 98.4 (97.9–99.0) |
| Osteoarthritis | 77.8 (74.5–81.1) | 94.9 (93.8–96.1) | 87.7 (84.9–90.5) | 90.2 (88.7–91.8) |
| Dementia | 96.8 (93.3–100.0) | 98.1 (97.5–98.7) | 72.8 (65.0–80.6) | 99.8 (99.6–100.0) |
| Epilepsy | 98.6 (96.6–100.0) | 98.7 (98.2–99.2) | 85.6 (80.2–91.1) | 99.9 (99.7–100.0) |
| Parkinsonism | 98.8 (96.4–100.0) | 99.0 (98.6–99.5) | 82.0 (74.5–89.5) | 99.9 (99.8–100.0) |

COPD = chronic obstructive pulmonary disease; NPV = negative predictive value; PPV = positive predictive value.
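
Predictive values depend on prevalence as well as on sensitivity and specificity. The Python sketch below applies Bayes' theorem to the point estimates in Tables 2 and 3. It assumes that the Table 2 prevalences are the chart-review prevalences in the pooled sample, and it ignores clustering, so it is a consistency check rather than a re-analysis. Under that assumption, it reproduces the reported PPVs and NPVs to within half a percentage point. It also helps explain why COPD and dementia have the lowest PPVs despite specificities above 97%: at prevalences of 7.9% and 4.9%, even a 2% to 3% false-positive rate yields many false positives relative to true cases.

```python
# Point estimates (as proportions): sensitivity, specificity, PPV, and NPV
# from Table 3; prevalence in the study sample from Table 2.
DATA = {
    #                  sens   spec   prev   PPV    NPV
    "Hypertension":   (0.849, 0.935, 0.501, 0.929, 0.860),
    "Diabetes":       (0.956, 0.971, 0.168, 0.870, 0.991),
    "Depression":     (0.811, 0.948, 0.202, 0.796, 0.952),
    "COPD":           (0.821, 0.973, 0.079, 0.721, 0.984),
    "Osteoarthritis": (0.778, 0.949, 0.316, 0.877, 0.902),
    "Dementia":       (0.968, 0.981, 0.049, 0.728, 0.998),
    "Epilepsy":       (0.986, 0.987, 0.073, 0.856, 0.999),
    "Parkinsonism":   (0.988, 0.990, 0.043, 0.820, 0.999),
}


def predictive_values(sens: float, spec: float, prev: float) -> tuple[float, float]:
    """PPV and NPV implied by Bayes' theorem at a given prevalence."""
    true_pos = sens * prev
    false_pos = (1.0 - spec) * (1.0 - prev)
    false_neg = (1.0 - sens) * prev
    true_neg = spec * (1.0 - prev)
    return true_pos / (true_pos + false_pos), true_neg / (true_neg + false_neg)


for name, (sens, spec, prev, ppv_rep, npv_rep) in DATA.items():
    ppv, npv = predictive_values(sens, spec, prev)
    print(f"{name:<15} PPV {ppv:.1%} (reported {ppv_rep:.1%})  "
          f"NPV {npv:.1%} (reported {npv_rep:.1%})")
```

Epilepsy and parkinsonism were oversampled, so their sample prevalences are inflated. At a hypothetical 1% prevalence, `predictive_values(0.986, 0.987, 0.01)` gives an epilepsy PPV of about 43%. This illustrates why PPVs would be expected to be lower when the definitions are applied to an unselected practice population.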

DISCUSSION

CPCSSN provides the first pan-Canadian primary care EHR data repository. This work shows that CPCSSN has developed valid primary care EHR case definitions for identifying patients with hypertension, diabetes, depression, COPD, osteoarthritis, dementia, epilepsy, and parkinsonism. These case definitions could be used for a variety of data-driven activities in primary care, including surveillance, routine practice evaluation, feedback and quality improvement, and research.

Comparison With Other Studies

The validation metrics for the CPCSSN case definitions are comparable to, or better than, those reported for similar international databases. CPCSSN’s sensitivity for diabetes (95.6%) is higher than that reported for the United Kingdom’s Clinical Practice Research Datalink (formerly the General Practice Research Database) (90.6%)7 and for a sample of EHR data from 17 physicians in 1 Canadian province (83.1%).8 It is slightly lower than that reported for a general practice in Yorkshire, United Kingdom (98.3%).9 For hypertension, the CPCSSN algorithm was both reasonably accurate (PPV of 92.9%) and comprehensive (sensitivity of 84.9%). These findings are comparable to those observed in an EHR-based surveillance system in Sweden10 and better than those of algorithms based exclusively on Canadian administrative data.11 The case definition for depression has validity properties comparable to those of EHR-based algorithms in the United States12 and better than those of algorithms based exclusively on billing and pharmacy data.13 A diagnostic algorithm for COPD used in a UK Clinical Practice Research Datalink study was less sensitive and specific,14 and algorithms derived from administrative data had lower sensitivity and PPV than those observed in our study.15–17 Little is known about diagnostic algorithms for osteoarthritis. However, validation of a predictive algorithm used in a computerized diagnostic database in the United States yielded results similar to those of this study.18

The CPCSSN case definition for dementia performed better than definitions constructed from Quebec billing data (sensitivity of 12.9%–39.7%)19 and from Canadian administrative hospital discharge data (sensitivity of 32.3%–66.9%, specificity of 100.0%).20 The epilepsy results were similar to those of definitions using administrative databases from various Canadian provinces (PPV of 75.5%–98.9%, NPV of 94.0%–97.4%).21 They were also similar to those of definitions that combined diagnoses and medications to identify epilepsy cases in a US managed care organization data system (PPV of 79.2%–84.1%).22 Lastly, in a US Veterans Health Administration database, parkinsonism cases identified using ICD-9 codes alone had a high PPV (81%) but poor sensitivity (18.7%). Combining ICD-9 codes with medications improved sensitivity (42.5%) but lowered PPV (53.3%).23

Strengths and Weaknesses

Previous validation studies using EHR data have varied widely in methodology. Their diagnostic algorithms and reference standard sources have differed, which makes it difficult to establish acceptable and comparable values for the conventional epidemiological measures of validity (sensitivity, specificity, PPV, NPV).24 In our study, random selection of charts enabled estimation of sensitivity and specificity as well as positive and negative predictive values. Other studies have selected only patients who were case positive, which permits assessment of the PPV of the definition alone.25 Further, our study is unique in that the case definitions were validated across multiple regional networks and multiple EHR systems. Finally, this study has a relatively large total sample size compared with similar studies involving primary care chart abstraction.25

There were some limitations to our study. Because chart abstraction was the reference standard, the study is limited to information recorded in the EHR. Missing diagnoses and incomplete documentation will therefore limit the accuracy of the algorithms. Further, each case definition is currently limited to lifetime prevalence, and the depression definition does not distinguish between chronic and episodic depression. The relatively low prevalence of epilepsy and parkinsonism in the general practice population necessitated oversampling of patients flagged as having the condition by the algorithm. Had the entire sample been selected this way, it would be inappropriate to report the sensitivity and specificity of these 2 case definitions. However, the chart abstractors were completely blinded to the CPCSSN algorithmic diagnosis, and all charts were reviewed in full. We therefore believe the calculated sensitivity and specificity are reasonable estimates.
Another limitation is that interrater reliability was not measured; nevertheless, the standardized training manual and rigorous oversight during the review process promoted consistency. Although some exclusions from the study sample were nonrandom, they are unlikely to change the study’s conclusions. The largest exclusion resulted from 1 network being unable to finish data collection because of technical problems. Other exclusions are likely to have a negligible impact on the overall results. These included the insufficient number of parkinsonism cases and charts that were incomplete or belonged to deceased patients. Including different EHRs is both a strength and a limitation. It reflects real-world practice, but the study was not powered to detect differences between EHRs.

In conclusion, the CPCSSN case definitions show excellent sensitivity and specificity for hypertension, diabetes, epilepsy, dementia, and parkinsonism, and their overall validity was very good for all 8 conditions. CPCSSN has set a precedent for systematically validating the case definitions used within our primary care database through an explicit, consistent, and robust methodology. The use of validated EHR-based clinical data from community-based primary care settings is essential to understand, inform, and evaluate disease epidemiology. Such data are also essential to improve primary care clinical practice, organizational development, and health system policy and planning.

Acknowledgments

Nathalie Jetté, Ron Postuma, and Frank Molnar contributed to the development of the neurological case definitions. Karim Keshavjee, Marshall Godwin, Wayne Putnam, and Michelle Greiver contributed to the development of the other 5 case definitions. Nathan Coleman assisted in the development of chart review criteria. The Neurological Health Charities Canada, as well as the research assistants and residents, contributed to the success of this initiative.

Footnotes

Conflicts of interest: authors report none.

Funding support: Funding for CPCSSN is provided by the Public Health Agency of Canada through a contribution agreement with the College of Family Physicians of Canada. This study was also funded in part by the National Population Health Study of Neurological Conditions.

Disclaimer: The opinions expressed in this publication are those of the authors/researchers, and do not necessarily reflect the official views of the Public Health Agency of Canada. The author retained full academic control of this work including the right to publish.

Previous presentations: Portions of this work have been presented at the following conferences: Canadian Association of Health Services and Policy Research Conference, May 28–30, 2013, Vancouver, British Columbia; Canadian Society of Epidemiology and Biostatistics Biennial Conference, June 24–27, 2013, St. John’s, Newfoundland; Trillium Primary Health Care Research Day, June 19, 2013, Toronto, Ontario; North American Primary Care Research Group Annual Meeting, November 9–13, 2013, Ottawa, Ontario.

Supplementary materials: Available at http://www.AnnFamMed.org/content/12/4/367/suppl/DC1/

References

1. Birtwhistle R, Keshavjee K, Lambert-Lanning A, et al. Building a pan-Canadian primary care sentinel surveillance network: initial development and moving forward. J Am Board Fam Med. 2009;22(4):412–422.

2. Holbrook A, Thabane L, Keshavjee K, et al.; COMPETE II Investigators. Individualized electronic decision support and reminders to improve diabetes care in the community: COMPETE II randomized trial. CMAJ. 2009;181(1–2):37–44.

3. Cebul RD, Love TE, Jain AK, Hebert CJ. Electronic health records and quality of diabetes care. N Engl J Med. 2011;365(9):825–833.

4. Kadhim-Saleh A, Green M, Williamson T, Hunter D, Birtwhistle R. Validation of the diagnostic algorithms for 5 chronic conditions in the Canadian Primary Care Sentinel Surveillance Network (CPCSSN): a Kingston practice-based research network (PBRN) report. J Am Board Fam Med. 2013;26(2):159–167.

5. Virnig BA, McBean M. Administrative data for public health surveillance and planning. Annu Rev Public Health. 2001;22(1):213–230.

6. Green ME, Hogg W, Savage C, et al. Assessing methods for measurement of clinical outcomes and quality of care in primary care practices. BMC Health Serv Res. 2012;12:214.

7. Van Staa TP, Abenheim L. The quality of information recorded on a UK database of primary care records: a study of hospitalizations due to hypoglycemia and other conditions. Pharmacoepidemiol Drug Saf. 1994;3(1):15–21.

8. Tu K, Manuel D, Lam K, Kavanagh D, Mitiku TF, Guo H. Diabetics can be identified in an electronic medical record using laboratory tests and prescriptions. J Clin Epidemiol. 2011;64(4):431–435.

9. Hassey A, Gerrett D, Wilson A. A survey of validity and utility of electronic patient records in a general practice. BMJ. 2001;322(7299):1401–1405.

10. Hjerpe P, Merlo J, Ohlsson H, Bengtsson Boström K, Lindblad U. Validity of registration of ICD codes and prescriptions in a research database in Swedish primary care: a cross-sectional study in Skaraborg primary care database. BMC Med Inform Decis Mak. 2010;10:23.

11. Quan H, Khan N, Hemmelgarn BR, et al.; Hypertension Outcome and Surveillance Team of the Canadian Hypertension Education Programs. Validation of a case definition to define hypertension using administrative data. Hypertension. 2009;54(6):1423–1428.

12. Trinh NHT, Youn SJ, Sousa J, et al. Using electronic medical records to determine the diagnosis of clinical depression. Int J Med Inform. 2011;80(7):533–540.

13. Spettell CM, Wall TC, Allison J, et al. Identifying physician-recognized depression from administrative data: consequences for quality measurement. Health Serv Res. 2003;38(4):1081–1102.

14. Soriano JB, Maier WC, Visick G, Pride NB. Validation of general practitioner-diagnosed COPD in the UK General Practice Research Database. Eur J Epidemiol. 2001;17(12):1075–1080.

15. Lacasse Y, Daigle JM, Martin S, Maltais F. Validity of chronic obstructive pulmonary disease diagnoses in a large administrative database. Can Respir J. 2012;19(2):e5–e9.

16. Smidth M, Sokolowski I, Kærsvang L, Vedsted P. Developing an algorithm to identify people with chronic obstructive pulmonary disease (COPD) using administrative data. BMC Med Inform Decis Mak. 2012;12:38.

17. Mapel DW, Frost FJ, Hurley JS, et al. An algorithm for the identification of undiagnosed COPD cases using administrative claims data. J Manag Care Pharm. 2006;12(6):457–465.

18. Gabriel SE, Crowson CS, O’Fallon WM. A mathematical model that improves the validity of osteoarthritis diagnoses obtained from a computerized diagnostic database. J Clin Epidemiol. 1996;49(9):1025–1029.

19. Wilchesky M, Tamblyn RM, Huang A. Validation of diagnostic codes within medical services claims. J Clin Epidemiol. 2004;57(2):131–141.

20. Quan H, Li B, Saunders LD, et al.; IMECCHI Investigators. Assessing validity of ICD-9-CM and ICD-10 administrative data in recording clinical conditions in a unique dually coded database. Health Serv Res. 2008;43(4):1424–1441.

21. Jetté N, Reid AY, Quan H, Hill MD, Wiebe S. How accurate is ICD coding for epilepsy? Epilepsia. 2010;51(1):62–69.

22. Holden EW, Grossman E, Nguyen HT, et al. Developing a computer algorithm to identify epilepsy cases in managed care organizations. Dis Manag. 2005;8(1):1–14.

23. Swarztrauber K, Anau J, Peters D. Identifying and distinguishing cases of parkinsonism and Parkinson’s disease using ICD-9 CM codes and pharmacy data. Mov Disord. 2005;20(8):964–970.

24. Khan NF, Harrison SE, Rose PW. Validity of diagnostic coding within the General Practice Research Database: a systematic review. Br J Gen Pract. 2010;60(572):e128–e136.

25. Herrett E, Thomas SL, Schoonen WM, Smeeth L, Hall AJ. Validation and validity of diagnoses in the General Practice Research Database: a systematic review. Br J Clin Pharmacol. 2010;69(1):4–14.