ABSTRACT

BACKGROUND

We designed a continuing medical education (CME) program to teach primary care physicians (PCPs) how to engage in cancer risk communication and shared decision making with patients who have limited health literacy (HL).

OBJECTIVE

We evaluated whether training PCPs, in addition to audit-feedback, improves their communication behaviors and increases cancer screening among patients with limited HL to a greater extent than only providing clinical performance feedback.

DESIGN

Four-year cluster randomized controlled trial.

PARTICIPANTS

Eighteen PCPs and 168 patients with limited HL who were overdue for colorectal/breast/cervical cancer screening.

INTERVENTIONS

Communication intervention PCPs received skills training that included standardized patient (SP) feedback on counseling behaviors. All PCPs underwent chart audits of patients’ screening status semiannually up to 24 months and received two annual performance feedback reports.

MAIN MEASURES

PCPs experienced three unannounced SP encounters during which SPs rated PCP communication behaviors. We examined between-group differences in changes in SP ratings and patient knowledge of cancer screening guidelines over 12 months; and changes in patient cancer screening rates over 24 months.

KEY RESULTS

There were no group differences in SP ratings of physician communication at baseline. At follow-up, communication intervention PCPs were rated higher in general communication about cancer risks and shared decision making related to colorectal cancer screening compared to PCPs who only received performance feedback. Screening rates increased among patients of PCPs in both groups; however, there were no between-group differences in screening rates except for mammography. The communication intervention did not improve patient cancer screening knowledge.

CONCLUSION

Compared to audit and feedback alone, including PCP communication training increases PCP patient-centered counseling behaviors, but not cancer screening among patients with limited HL. Larger studies must be conducted to determine whether the lack of change in cancer screening was due to the clinic/patient sample size or to ineffectiveness of communication training in changing outcomes.

KEY WORDS: communication, shared decision making, health literacy, cancer screening, standardized patients

INTRODUCTION

Health literacy (HL) is defined as “the degree to which individuals have the capacity to obtain, process, and understand basic health information and services needed to make appropriate health decisions.”1 Limited HL is associated with poor patient adherence to cancer screening.2,3 Patients with limited HL tend to have inadequate knowledge about cancer control concepts; display more misunderstandings about cancer susceptibility and benefits of early cancer detection; and lack numeracy skills to understand risk reduction.3 They often want health information clarified, but ask physicians fewer questions.4,5 Research demonstrates that provider recommendation is strongly associated with patient adherence to cancer screening.6–8 Physician communication style when discussing screening may be as important as the mere act of recommending it.9 Cancer risk communication includes exploring perceptions of susceptibility to cancer, barriers and facilitators to screening, as well as motivation and self-efficacy to adhere to screening. Patients often want to engage in discussions with their primary care physician about cancer risk.10 However, physicians may alter health education about cancer screening based on patient sociodemographics.11

We designed a multi-component continuing medical education (CME) program using unannounced standardized patients (SPs) to teach primary care physicians (PCPs) how to engage in cancer risk communication and shared decision making (SDM) with patients who have limited HL.12 We conducted a randomized controlled trial to compare this CME program to a single-intervention program of provider audit-and-feedback. The primary objective was to determine whether the CME program improves physician communication behaviors and increases patient receipt of breast/cervical/colorectal cancer screening to a greater extent than audit-feedback alone.

MATERIALS AND METHODS

Study Setting and Participants

This 4-year study (2008 to 2012) targeted seven clinics in New Orleans that serve patients at risk for low HL (minorities, middle-aged or older adults, and the publicly insured or uninsured).2 Five clinics agreed to participate (one federally qualified health center [FQHC], two academic clinics, and two clinics with faith-based affiliations). Two clinics declined participation: a multi-specialty practice engaged in other initiatives and one FQHC that did not respond to the invitation. Family physicians and general internists who practiced at participating clinics at least one-half day weekly were eligible. Physicians planning to leave before 1 year were excluded.

During the planning of this study, we could not find similar studies with estimates of intraclass correlations (ICC) for cancer screening. We initially aimed to recruit 30 physicians and 10–15 patients per physician based on prior interventions to improve physician–patient communication. Eligible patients included men (age 50–75 years) and women (age 40–75 years) who were enrolled in clinics for ≥ 6 months or had seen their PCP at least 3 times; spoke English; were identified as having limited HL via the Rapid Estimate of Adult Literacy in Medicine [REALM ≤60 equivalent to ≤8th grade]13; and were due for breast/cervical/colorectal cancer screening based on American Cancer Society’s (ACS) 2009 guidelines.14 Patients were excluded if they planned to change PCPs within 1 year. Tulane University’s Institutional Review Board approved this study.

Recruitment Strategy

The study's principal investigator (EPH), a general internist, collaboratively recruited physicians with clinic medical directors. Among 19 physicians, one physician never responded to invitations to participate. During recruitment, study objectives, study design, and unannounced SP visits were explained. All physicians received $100 per visit for three unannounced visits to compensate for encounters with actors that resulted in lost revenue (Cost: $16,200). Physicians earned up to 20 credits from Tulane’s CME Office (Cost: $3,940).

The research team worked with clinics’ management and nursing staff to determine recruitment strategies deemed least disruptive to workflow. Nursing staff referred patients to on-site recruiters for interviews in private areas. Eligible patients were consented for enrollment, completed brief surveys, and received a $10 gift card (Cost: $1,680). Recruiters were blinded to physicians’ study group assignment.

Two physicians in the communication intervention group left their practices within 2 years; however, they completed all SP encounters. Patients who were recruited prior to their physicians’ departure continued in the study as long as they continued to receive care at the same clinic.

Study Design and Intervention

Clinics were randomized using a computer random number generator. Each clinic was assigned to one of two groups (communication training and chart audit vs. audit-only). Communication intervention clinics included one FQHC, one faith-based and one academic community site. We randomized at the practice level to minimize chances of patients crossing over to different study groups by seeing different physicians within the same clinic. This design allowed use of the same SPs at communication intervention and audit-only sites without revealing their identities. SPs were assigned to physicians and practice sites only once.

We recruited 19 actors from Tulane’s SP program (nine males/ten females; 14 Caucasian/four African-American/one Hispanic), and developed case scenarios based on SPs’ personal experience with cancer screening and family histories of cancer. SPs underwent 18 h of training to standardize case portrayal and ratings of communication (Cost: $5,900). SPs were not allowed to schedule their first clinic visits until they demonstrated accuracy of case portrayals (gave correct history, did not withhold/volunteer information, displayed appropriate affect), accuracy of checklist ratings (followed specific criteria for rating behavior as “poor”/“good”/“excellent”), and quality of feedback (comments relevant to behavior checklist, focused on changeable behavior, offered specific examples, used non-threatening tone).

SPs called clinics to schedule new patient evaluations as uninsured patients. Four of five clinics required $20–$25 co-pays. At these sites, neither clinic managers nor staff knew when SPs visited. At the hospital clinic, the study team worked with the billing office and clinic manager to obtain pre-approved discount co-pays. SP compensation included reimbursement for co-pays ($858) and $275 per-visit-fee ($14,850), regardless of the physicians’ group assignment.

Physicians in both groups underwent three unannounced SP visits (baseline; 6 months; 12 months) to measure communication behaviors regarding cancer screening. The study team employed unannounced visits to minimize the chance of physicians consciously altering their behaviors. SPs portrayed new patients who presented for physicals, had family histories of colon and breast cancer, and were overdue for cancer screening. SP medical history prompted physicians to discuss cancer screening. Given the nature of the communication intervention, SPs were not blinded to physicians’ group assignment. Communication intervention physicians were un-blinded after their first SP encounter.

The communication intervention is described in a previous publication.12 Intervention physicians received training in cancer risk communication and SDM. At the end of each visit with intervention physicians, SPs revealed themselves as actors and gave structured verbal feedback. One week after baseline SP visits, intervention physicians underwent one-on-one 30-min academic detailing with a study investigator (EPH) to review the most recent ACS guidelines, clinical red flags for identifying patients with limited HL, and strategies for effective counseling. Physicians were taught to present information in small “chunks”; use simple language, pictures, and “teach back” to discuss complex concepts15; and discuss and check understanding of cancer risks, discuss potential benefits/risks of screening, explore preferences, and negotiate plans. Communication intervention physicians were directed to WebSP (web-based service for SP event management) to review SP ratings of their communication and changes in ratings over time. They received written reports of SP ratings, which included narrative summaries of SP perceptions of the clinic, staff and physicians. Physicians in the audit-only group did not receive SP feedback or communication training. Both groups’ patient medical records were audited. All study physicians received two annual cancer screening status reports and aggregate baseline patient ratings of their communication measured using the Perceived Involvement in Care Scale (PICS; 13-item questionnaire measuring doctor facilitation of patient involvement, level of information exchange, and patient participation in decision making).16

Data Collection

At recruitment, physicians completed questionnaires to assess demographics, self-rated communication skills and cancer screening knowledge.12 Patients were administered surveys to assess socio-demographics, health and well-being [SF12v2],17,18 cancer screening knowledge, and the PICS.

Details of SP rating scales to assess communication behaviors were described previously.12 SPs completed behavior checklists that incorporate principles of the Health Belief Model,19 as well as Charles’20 and Braddock’s21 SDM models. SPs assessed general communication about cancer risks (six items; alpha 0.93-0.96) and SDM on colon cancer screening (six items; alpha 0.91-0.92) after each visit. Female SPs completed behavior checklists for SDM on cervical (four items; alpha 0.87-0.88) and breast cancer screening (four items; alpha 0.95-0.98).

All participating clinics use electronic medical records (EMR). Trained chart auditors reviewed patients’ cancer screening status at baseline and in 6-month intervals for 24 months. We assessed whether clinics implemented strategies to promote cancer screening.

Study Variables

The main independent variable is physician/patient study group assignment. Outcome variables are SP ratings of physician communication and patients’ colorectal/breast/cervical cancer screening status at follow-up. Covariates of interest for SP ratings include physician characteristics and SP–physician gender or race concordance. Covariates of interest for cancer screening status at follow-up include patient demographic variables for which there were statistically significant intervention group differences (age, insurance status), length of PCP relationship, family history of cancer, patients’ cancer screening knowledge, and clinic use of strategies to promote screening.

Patients’ cancer screening knowledge was measured during recruitment and 12-month follow-up telephone interviews. Based on 2009 ACS guidelines,14 patients’ knowledge was coded as correct if they responded as follows: Age to start colorectal cancer (CRC) screening, 50; Tests to screen for CRC—stool test/cards, colonoscopy or “full colon/bowel scope”, sigmoidoscopy or “partial colon/bowel scope”, barium enema; Age to start breast cancer screening, 40–50; frequency of mammograms, every 1–2 years; Age to start cervical cancer screening—sexually active or age 21; Frequency of pap smears, every 1–3 years.

Data Analysis

We compared baseline characteristics of study groups using Student’s t-test for continuous variables and chi square analysis for categorical variables. We used Student’s t-test to compare SP ratings of communication intervention versus audit-only physicians at baseline, 6 months and 12 months. Since SPs were not randomly assigned to clinics or physicians, we used multiple linear regression analysis to examine whether observed associations between SP ratings of communication behaviors and physician group assignment were modified by SP–physician racial or gender concordance. We used chi square analysis to compare the proportion of patients in each study group who were up-to-date on cancer screening at baseline and follow-up. To assess changes in patient cancer screening knowledge, we used chi square analysis to compare the proportion of patients who responded correctly to questions about screening guidelines during enrollment and 12-month interviews. Two patients crossed over between study groups when they switched primary care clinics; however, we used an intent-to-treat approach and analyzed outcomes according to patients’ original group assignment. The data analysis was performed using Stata 10 (StataCorp LP; College Station, TX).

To inform future research with similar design, endpoints, methods of measurement, and target population, we calculated ICC using a mixed model approach (SAS PROC MIXED)22 where patients were nested within providers that were nested within clinics:

|

The variance inflation factor (VIF), or design effect, was calculated as VIF = 1 + ICC × (m − 1), where m is the average number of patients per provider per cancer screening type. We then estimated recommended sample sizes for this type of study.
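As a minimal sketch of the design-effect arithmetic described above, the following Python snippet computes VIF = 1 + ICC × (m − 1). The function names are hypothetical, and the step that multiplies an observed sample size by the VIF is an assumption about how a sample size might be inflated for clustering; the text does not spell out how the recommended sample sizes were derived.

```python
def design_effect(icc: float, m: float) -> float:
    """Variance inflation factor (design effect): VIF = 1 + ICC * (m - 1)."""
    if m < 1:
        raise ValueError("average cluster size m must be at least 1")
    return 1.0 + icc * (m - 1.0)


def inflated_sample_size(n: int, vif: float) -> int:
    """Assumed adjustment (not stated in the text): scale n by the design effect."""
    return round(n * vif)


# Illustrative inputs taken from the baseline colorectal row of Table 5.
vif_crc = design_effect(icc=0.07568, m=8.39)
n_crc = inflated_sample_size(151, vif_crc)
print(f"VIF = {vif_crc:.3f}, inflated n = {n_crc}")
```

With an ICC of zero the design effect is exactly 1, so clustering would add nothing to the required sample size; as the ICC or the number of patients per provider grows, the inflation grows linearly.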

RESULTS

Participant Recruitment and Follow-Up

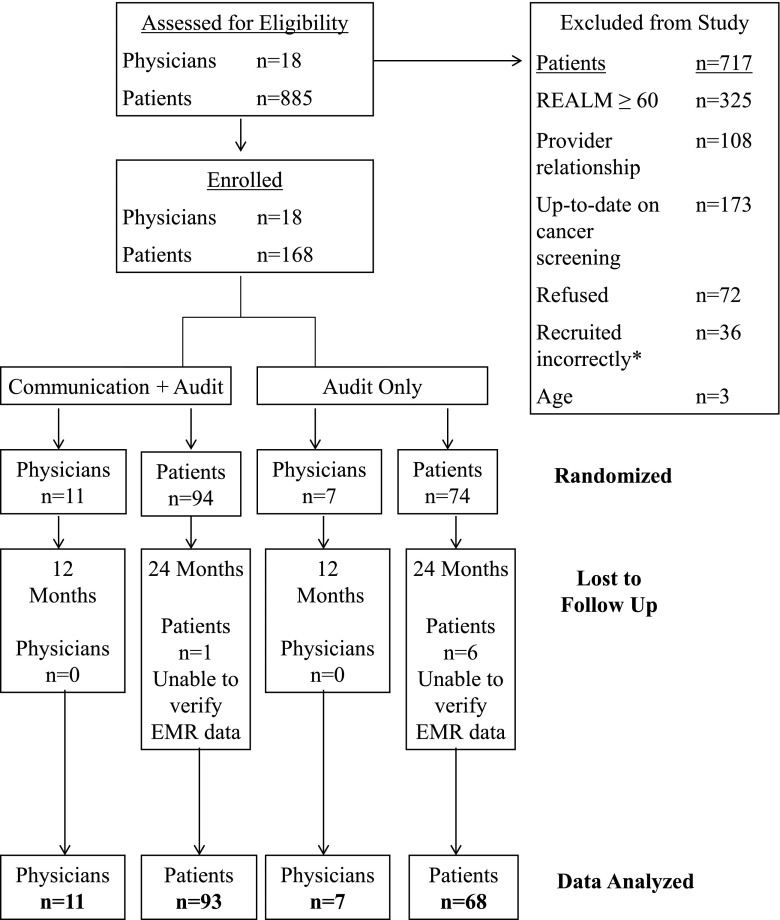

We enrolled 18 PCPs, screened 885 patients for study eligibility and enrolled 168 (Fig. 1). The most common reasons for patient exclusion were REALM >60, being new to physicians, or being up-to-date on cancer screening. Rates of patient loss to follow-up in the communication and audit-only groups were 3 % and 8 %, respectively.

Figure 1.

Study participant recruitment, group allocation, and follow-up. *Patients who did not meet study eligibility and were incorrectly enrolled by field recruiters were withdrawn from the study by investigators.

Participant Characteristics

Physician demographics were similar across study groups, except a higher proportion of physicians in the audit-only group had academic appointments (Table 1). Patient characteristics were similar for most variables. Patients of communication intervention physicians were younger than patients of audit-only physicians. A lower proportion of communication intervention physicians’ patients had insurance and relationships with their PCPs ≥1 year. Most patients had a family history of cancer. Patients rated doctor facilitation and information exchange as good/excellent, but rated poorly their own participation in decision-making.

Table 1.

Characteristics of Participants by Intervention Groups *

| | Communication Skills + Audit and Feedback | Audit and Feedback Only | P value |
|---|---|---|---|
| Physicians | N = 11 (%) | N = 7 (%) | |
| Age (mean, SD) | 45.4 (13.8) | 42.2 (8.5) | |
| Gender – Female | 6 (54.5) | 4 (57.1) | |
| Race | | | |
| White, not Hispanic | 6 (45.5) | 3 (42.9) | |
| Black, not Hispanic | 5 (54.5) | 3 (42.9) | |
| Medical training in US | 7 (63.6) | 7 (100) | |
| Academic faculty appointment | 6 (54.5) | 7 (100) | P < 0.05 |
| Total years in practice ≥5 | 6 (54.5) | 5 (71.4) | |
| Patients | N = 94 (%) | N = 74 (%) | |
| Gender – Female | 70 (74.5) | 61 (82.4) | |
| Race/Ethnicity – Black, not Hispanic | 82 (87.2) | 70 (94.6) | |
| Age (mean, SD) | 55.8 (7.1) | 60.9 (7.6) | P < 0.001 |
| REALM reading level 7th–8th grade † | 59 (62.8) | 55 (63.5) | |
| Level of Education: High school or higher | 61 (66.3) | 48 (65.8) | |
| Insurance (Medicaid/Medicare/Private) | 40 (44.0) | 57 (82.6) | P < 0.001 |
| Reports having same PCP ≥ 1 year | 55 (58.5) | 63 (87.5) | P < 0.001 |
| Global rating of health care (satisfied/very satisfied) | 74 (78.7) | 56 (76.7) | |
| Health and Well-being (mean, [SD]) | | | |
| Physical health | 40.2 (11.6) | 39.4 (11.0) | |
| Mental health | 47.0 (12.4) | 46.5 (12.3) | |
| Patient family history of cancer | | | |
| Any type of cancer | 50 (53.2) | 45 (60.8) | |
| Colon cancer | 7 (7.5) | 5 (6.8) | |
| Breast cancer | 14 (14.9) | 11 (14.9) | |
| Gynecologic cancer | 2 (2.1) | 5 (6.8) | |
| Perceived involvement in care (mean, SD) | | | |
| Doctor facilitation of patient involvement ‡ | 4.4 (1.0) | 4.3 (1.1) | |
| Level of information exchange § | 3.45 (1.0) | 3.42 (1.0) | |
| Patient participation in decision making ║ | 1.49 (1.4) | 1.58 (1.4) | |

* Chi-square analysis for group differences in proportions; Student’s t-test for mean difference between groups

† Rapid Estimate of Adult Literacy in Medicine (REALM) score & grade equivalent: 61–66, High school; 45–60, 7th–8th; 19–44, 4th–6th; 0–18, 3rd and below

Perceived Involvement in Care Scale (1 = agree; 0 = disagree): ‡ 5-item scale (alpha 0.74); § 4-item scale (alpha 0.74); ║ 4-item scale (alpha 0.70)

Standardized Patient Ratings

We conducted 54 unannounced SP encounters. One physician did not have any female SP encounters, nine physicians had only one female encounter, seven physicians had two female encounters and one had three female encounters.

At baseline, there were no significant differences in SP ratings of PCPs’ general communication about cancer risks or SDM about CRC screening (Table 2). However, at 6 months, communication intervention physicians were rated significantly higher on general communication and SDM about CRC screening than audit-only physicians. Between-group differences in SP ratings of communication behaviors remained at 12 months. SP ratings of communication behaviors were not associated with physician characteristics or SP–physician gender/racial concordance. Due to inadequate numbers of female SPs, we could not reliably analyze data on SDM about cervical/breast cancer screening.

Table 2.

Standardized Patient Ratings of Physician Communication Behaviors by Intervention Group

| Standardized patient ratings (mean, SD) (1 = poor; 5 = excellent) | Baseline SP visit: CS + audit and feedback | Baseline SP visit: Audit and feedback only | 6-Month SP visit*: CS + audit and feedback | 6-Month SP visit*: Audit and feedback only | 12-Month SP visit*: CS + audit and feedback | 12-Month SP visit*: Audit and feedback only |
|---|---|---|---|---|---|---|
| General communication about risks for cancer † | 3.3 (1.1) | 2.7 (1.2) | 4.1 (1.1) | 3.1 (1.3) | 4.1 (1.1) | 2.3 (0.8) |
| Shared decision making about colon cancer screening ‡ | 3.0 (1.1) | 2.5 (1.2) | 3.9 (1.0) | 2.7 (1.1) | 3.9 (0.8) | 2.1 (0.7) |

CS Communication Skills

* p < 0.05 for between-group difference in SP ratings for both behavior subscales at 6-month and 12-month follow-up. These differences between study groups were not associated with SP–physician gender concordance or SP–physician race concordance. There was an insufficient number of female SP encounters to measure shared decision making about pap smears or mammography

Standardized patient ratings: †6-item scale (alpha 0.93–0.96); ‡ 6-item scale (alpha 0.91–0.92)

Patient Cancer Screening Status

At recruitment, 104 patients were due for CRC screening, 84 were due for mammography, and only 47 were due for pap smears given the high rate of hysterectomies (Table 3). There were no group differences in screening at baseline. Although a higher proportion of patients completed screening at follow-up, significant between-group differences were seen only for mammography, not for cervical or CRC screening. Mammography was associated with insurance status (insured vs. uninsured; OR [95 % CI]: 2.9 [1.3–6.4]). None of the follow-up cancer screening rates were associated with length of PCP relationship, family history of cancer, patients’ cancer screening knowledge, or clinic use of strategies to promote screening.

Table 3.

Between-Group Comparison of Patients’ Cancer Screening Status at Baseline and Follow-Up

| | Baseline: CS + audit and feedback | Baseline: Audit and feedback only | Follow-up *: CS + audit and feedback | Follow-up *: Audit and feedback only |
|---|---|---|---|---|
| Mammography (N, %) † | N = 94 | N = 74 | N = 90 | N = 68 |
| Screened | 21 (22.3) | 26 (35.1) | 39 (43.3) | 43 (63.2) |
| Not screened | 49 (52.1) | 35 (47.3) | 27 (30.0) | 12 (17.6) |
| Not applicable (males) | 24 (25.5) | 13 (17.6) | 24 (26.7) | 13 (19.1) |
| Pap smear (N, %) | N = 94 | N = 74 | N = 90 | N = 70 |
| Screened | 19 (20.2) | 8 (10.8) | 31 (34.4) | 14 (20.0) |
| Not screened | 25 (26.6) | 22 (29.7) | 9 (10.0) | 12 (17.1) |
| Not applicable (males; females with hysterectomy) | 50 (53.2) | 44 (59.5) | 50 (55.5) | 44 (62.9) |
| Colon cancer screening (N, %) | N = 94 | N = 74 | N = 91 | N = 67 |
| Screened | 22 (23.4) | 25 (33.7) | 40 (44.0) | 38 (56.7) |
| Not screened | 59 (62.8) | 45 (60.8) | 38 (41.7) | 25 (37.3) |
| Not applicable (under age 50) | 13 (13.8) | 4 (5.4) | 13 (14.3) | 4 (6.0) |

* The total sample size for each category differs between baseline and follow-up due to missing data for patients for whom we were unable to verify cancer screening status at follow-up

† p < 0.05 by chi square analysis for group differences in cancer screening status for receipt of mammography
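To illustrate the chi square comparison reported for mammography, the sketch below applies Pearson's chi-square test to the follow-up counts in Table 3, leaving out the not-applicable row. It assumes no continuity correction and one degree of freedom, and the function name is hypothetical. The study's own analysis was run in Stata, so this is only a check of the arithmetic under those assumptions, not the authors' code.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the test statistic and the p-value for 1 degree of freedom.
    """
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        raise ValueError("every row and column must have a nonzero total")
    stat = n * (a * d - b * c) ** 2 / denom
    # For 1 degree of freedom, the chi-square survival function reduces to
    # erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2.0))
    return stat, p_value


# Follow-up mammography counts from Table 3, one row per study group:
# (screened, not screened) for CS + audit and feedback, then audit only.
stat, p = chi_square_2x2(39, 27, 43, 12)
print(f"chi-square = {stat:.2f}, p = {p:.3f}")
```

Under these assumptions the statistic comes out at roughly 5.0 with a p-value below 0.05, which agrees with the significance level reported in the footnote.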

Patient Cancer Screening Knowledge

Fewer than 30 % of patients knew the age at which to start CRC screening and fewer than 50 % knew CRC screening test options (Table 4). Only one-third of patients knew the age at which to start breast cancer screening; however, over 70 % knew that mammography is used for screening. Fewer than 10 % knew the age at which to start cervical cancer screening; however, more than 60 % knew the frequency with which women should undergo pap smears. Among patients who completed 12-month interviews, screening knowledge did not change. There were no significant between-group differences in knowledge.

Table 4.

Study Group Comparison of the Number of Patients Who Correctly Answered Cancer Screening Knowledge Questions at Baseline and During a 12-month Follow-Up Telephone Interview *

| Cancer screening knowledge | Baseline: CS + audit and feedback | Baseline: Audit and feedback only | Follow-up: CS + audit and feedback | Follow-up: Audit and feedback only |
|---|---|---|---|---|
| | N = 94 | N = 74 | N = 72 | N = 61 |
| Colon cancer – age to start screening | 24 (25.8) | 10 (13.5) | 29 (40.3) | 14 (23.0) |
| Colon cancer – screening test options | 38 (41.8) | 33 (44.6) | 34 (47.2) | 29 (47.5) |
| Breast cancer – age to start screening | 29 (30.8) | 19 (25.7) | 27 (37.5) | 19 (31.2) |
| Breast cancer – frequency of testing | 67 (72.0) | 59 (79.7) | 55 (76.4) | 54 (88.5) |
| Cervical cancer – age to start screening | 4 (4.3) | 3 (4.1) | 7 (9.7) | 2 (3.3) |
| Cervical cancer – frequency of testing | 62 (66.7) | 54 (74.0) | 54 (75.0) | 51 (83.6) |

* Patient responses to questions were coded as correct or incorrect based on the 2009 American Cancer Society screening guidelines as detailed in the study methods. The baseline and follow-up number of participants differ because the study investigators were not able to reach some participants by telephone at follow-up

Intraclass Correlations for Cancer Screening Outcomes

Table 5 displays ICC estimates and recommended sample sizes for future studies. The ICCs and the VIF (design effect) differ by cancer screening type and are largest for CRC screening and mammography.

Table 5.

Intraclass Correlation of Patient Cancer Screening Within Providers Within Clinics*

| Cancer screening variable | n | ICC | m | VIF | Recommended sample size |
|---|---|---|---|---|---|
| Colorectal cancer screening at baseline | 151 | 0.07568 | 8.39 | 1.559 | 235 |
| Colorectal cancer screening at follow-up | 142 | 0.11919 | 7.89 | 1.821 | 256 |
| Mammography at baseline | 130 | 0.12970 | 7.22 | 1.807 | 235 |
| Mammography at follow-up | 121 | 0.15580 | 6.72 | 1.892 | 229 |
| Pap smear at baseline | 73 | 0.00000 | 4.06 | 1.000 | 73 |
| Pap smear at follow-up | 66 | 0.00459 | 3.67 | 1.012 | 67 |

*Intraclass correlations are presented for patients within providers within clinics using a mixed model approach

Number of Clinics = 5, Number of Providers = 18, n = Number of Patients, ICC = intraclass correlation, m = average number of patients per provider, VIF = variance inflation factor

DISCUSSION

To our knowledge, this is the first randomized controlled trial that assesses the impact of physician communication training (in addition to audit-feedback) on cancer screening behaviors among patients with limited HL. Similar to previous studies,23 our study shows that physicians can be trained to achieve and maintain patient-centered communication skills for at least 12 months. However, to date, the impact of training on specific cancer screening behaviors of patients with limited HL has not been described. Our study did not show an association between physician training and completion of colorectal/breast/cervical cancer screening.

Prior studies substantiate the need to train PCPs in general communication and informed SDM about cancer screening.24–26 Physician training in patient-centered communication skills may be particularly important for addressing disparities in CRC screening. Patients have multiple test options; however, prior research demonstrates that providers often do not counsel patients on or elicit patient preferences for different screening options.27 Comprehensive discussion of alternative options may increase the likelihood of patients completing any test,28 and can ultimately reduce health disparities. Our CME program’s focus on cancer screening among patients with limited HL is salient, given research showing that physicians tend to overestimate literacy,29 which may lead to their use of ineffective counseling strategies.

Multiple factors influence patients’ cancer screening behaviors, including socio-demographics, family history of cancer, physician recommendation, usual source of care, patient knowledge, preventive health care utilization patterns and perceived barriers.6–8,30,31 The Community Preventive Services Task Force recommends several interventions to increase colorectal, breast and cervical cancer screening: patient education, client-directed reminders, reduction of structural barriers, reduction of out-of-pocket costs, and provider-directed audit and feedback.32 Although the strength of evidence for these interventions varies, provider-directed audit and feedback increases screening for all three cancers. All study physicians underwent audit and feedback. Although a higher proportion of patients completed screening, changes in cancer screening rates were nonsignificant.

This study has several limitations. Practice sites that chose not to participate may differ from study participants in communication behaviors and in knowledge of and adherence to clinical guidelines. Moreover, because we did not meet our recruitment goals, our sample size limited our ability to detect the impact of physician training on patients’ cancer screening behaviors. Our ICC estimates indicate that the design effect differs for each cancer screening outcome, and that a larger sample size was needed to detect differences in CRC screening and mammography. We share our study findings to add to recent literature examining ICC estimates for cancer prevention cluster randomized trials.33,34 This study was designed to assess whether cancer screening would increase within 24 months of the intervention. Longer follow-up is indicated, given current recommended intervals for CRC and cervical cancer screening. The lack of change in knowledge may reflect physician and patient uncertainty in the face of differences in guidelines across professional societies and expert groups, frequent changes in these guidelines, and the resulting conflicting messages regarding cancer screening. This problem merits further investigation and is a target for future research.

SP ratings may be biased, since they were not blind to physician intervention assignment. Blinding of SPs was not possible, since they both delivered the intervention (if assigned to do so) and measured physician performance. To minimize subjectivity, we anchored each SP checklist item with pre-defined performance criteria to standardize ratings. We did not use audio-recordings to assess role fidelity and validate SP measures. Instead, we used experienced SPs who helped study investigators develop case scenarios to maximize standardization. Unannounced SPs have been used extensively to assess variations between clinicians in quality of care and adherence to clinical guidelines, and to evaluate educational interventions.35 Prior studies have shown that properly trained SPs compare well with independent assessment of recordings of unannounced SP encounters, and may justify their use in comparing quality of care across practice sites and clinicians.36 SPs are regarded by some as the reference standard for assessing performance37 and have been used to reliably measure provider skills.36,38

We did not measure patients’ perceptions of physicians’ communication about cancer screening or audiotape visits with patients. Therefore, we cannot validate whether skills measured in SP encounters were used consistently with patients or whether patients perceived physician use of these skills. We opted not to use clinic patient ratings of physician skills, since these ratings may vary by several factors, including whether or not patients choose or are assigned to their physicians, and by the length of the relationship.39 Finally, we did not activate patients to seek screening, and we did not set up visits to address cancer screening. Instead, we deferred to clinics’ usual practices to minimize observation bias.

For future research, we recommend several methodological considerations. Larger studies must be conducted to determine whether the lack of change in cancer screening was due to our sample size of clinics/patients or to ineffectiveness of communication training in changing outcomes. Our study provides ICC estimates that can be used to guide future research. If measures of SDM on colorectal/breast/cervical cancer screening are included in the same study, consideration should be given to employing female SPs only. Finally, cost effectiveness analyses would help to inform implementation and dissemination of both audit/feedback and communication strategies to improve cancer screening among primary care patients with limited health literacy.

Acknowledgements

Contributors

The authors would like to thank Dr. Roy Weiner for his critical review of this manuscript and Dr. John LeFante for his assistance with examining intraclass correlations for cancer screening outcomes.

Funders

This study was funded by the Robert Wood Johnson Foundation Harold Amos Faculty Development Program (Grant # 63523). Dr. Cooper is supported by grants from the National Heart, Lung, and Blood Institute (K24 HL83113 and P50 HL0105187).

Prior Presentations

This work was presented at the American Association for Cancer Research 3rd and 4th Annual Cancer Health Disparities Conferences in October 2010 and September 2011.

Conflict of Interest

The authors declare that they do not have a conflict of interest.

Footnotes

ClinicalTrials.gov identifier: NCT01361035

REFERENCES

- 1. Healthy People 2010: Understanding and Improving Health. 2nd ed. Washington, DC: U.S. Government Printing Office; 2000.

- 2. Mika VS, Kelly PJ, Price MA, Franquiz M, Villarreal R. The ABCs of health literacy. Fam Community Health. 2005;28:351–7. doi: 10.1097/00003727-200510000-00007.

- 3. Davis TC, Williams MV, Marin E, Parker RM, Glass J. Health literacy and cancer communication. CA Cancer J Clin. 2002;52:134–49. doi: 10.3322/canjclin.52.3.134.

- 4. Katz MG, Jacobson TA, Veledar E, Kripalani S. Patient literacy and question-asking behavior during the medical encounter: a mixed-methods analysis. J Gen Intern Med. 2007;22:782–6. doi: 10.1007/s11606-007-0184-6.

- 5. Aboumatar HJ, Carson KA, Beach MC, Roter DL, Cooper LA. The Impact of Health Literacy on Desire for Participation in Healthcare, Medical Visit Communication, and Patient Reported Outcomes among Patients with Hypertension. J Gen Intern Med. 2013 May 21. [Epub ahead of print].

- 6. Beydoun HA, Beydoun MA. Predictors of colorectal cancer screening behaviors among average-risk older adults in the United States. Cancer Causes Control. 2008;19:339–59. doi: 10.1007/s10552-007-9100-y.

- 7. Griffith KA, McGuire DB, Royak-Schaler R, Plowden KO, Steinberger EK. Influence of family history and preventive health behaviors on colorectal cancer screening in African Americans. Cancer. 2008;113:276–85. doi: 10.1002/cncr.23550.

- 8. Schueler KM, Chu PW, Smith-Bindman R. Factors associated with mammography utilization: a systematic quantitative review of the literature. J Womens Health. 2008;17:1477–98. doi: 10.1089/jwh.2007.0603.

- 9. Fox SA, Heritage J, Stockdale SE, Asch SM, Duan N, Reise SP. Cancer screening adherence: does physician–patient communication matter? Patient Educ Couns. 2009;75:178–84. doi: 10.1016/j.pec.2008.09.010.

- 10. Buchanan AH, Skinner CS, Rawl SM, Moser BK, Champion VL, Scott LL, Strigo TS, Bastian L. Patients’ interest in discussing cancer risk and risk management with primary care physicians. Patient Educ Couns. 2005;57:77–87. doi: 10.1016/j.pec.2004.04.003.

- 11. Bao Y, Fox SA, Escarce JJ. Socioeconomic and racial/ethnic differences in the discussion of cancer screening: “between-” versus “within-” physician differences. Health Serv Res. 2007;42:950–70. doi: 10.1111/j.1475-6773.2006.00638.x.

- 12. Price-Haywood EG, Roth KG, Shelby K, Cooper LA. Cancer risk communication with low health literacy patients: a continuing medical education program. J Gen Intern Med. 2010;25(Suppl 2):S126–9. doi: 10.1007/s11606-009-1211-6.

- 13. Davis TC, Crouch MA, Long SW, et al. Rapid assessment of literacy levels of adult primary care patients. Fam Med. 1991;23:433–5.

- 14. Smith RA, Cokkinides V, Brawley OW. Cancer screening in the United States, 2009: a review of current American Cancer Society guidelines and issues in cancer screening. CA Cancer J Clin. 2009;59:27–41. doi: 10.3322/caac.20008.

- 15. Sudore RL, Schillinger D. Interventions to improve care for patients with limited health literacy. J Clin Outcomes Manag. 2009;16:20–29.

- 16. Lerman CE, Brody DS, Caputo GC, Smith DG, Lazaro CG, Wolfson HG. Patients’ perceived involvement in care scale: relationship to attitudes about illness and medical care. J Gen Intern Med. 1990;5:29–33. doi: 10.1007/BF02602306.

- 17. Ware J, Jr, Kosinski M, Keller SD. A 12-Item Short-Form Health Survey: construction of scales and preliminary tests of reliability and validity. Med Care. 1996;34:220–33. doi: 10.1097/00005650-199603000-00003.

- 18. Busija L, Pausenberger E, Haines TP, Haymes S, Buchbinder R, Osborne RH. Adult measures of general health and health-related quality of life: Medical Outcomes Study Short Form 36-Item (SF-36) and Short Form 12-Item (SF-12) Health Surveys, Nottingham Health Profile (NHP), Sickness Impact Profile (SIP), Medical Outcomes Study Short Form 6D (SF-6D), Health Utilities Index Mark 3 (HUI3), Quality of Well-Being Scale (QWB), and Assessment of Quality of Life (AQoL). Arthritis Care Res (Hoboken). 2011;63:S383–S412. doi: 10.1002/acr.20541.

- 19. Strecher VJ, Rosenstock IM. The Health Belief Model. Health Behavior and Health Education. San Francisco: Jossey-Bass; 1997. p. 41–59.

- 20. Charles C, Gafni A, Whelan T. Decision-making in the physician–patient encounter: revisiting the shared treatment decision-making model. Soc Sci Med. 1999;49:651–61. doi: 10.1016/S0277-9536(99)00145-8.

- 21. Braddock CH, III, Edwards KA, Hasenberg NM, Laidley TL, Levinson W. Informed decision making in outpatient practice: time to get back to basics. JAMA. 1999;282:2313–20. doi: 10.1001/jama.282.24.2313.

- 22. Singer JD. Using SAS PROC MIXED to fit multilevel models, hierarchical models, and individual growth models. J Educ Behav Stat. 1998;24:323–355. doi: 10.2307/1165280.

- 23. Dwamena F, Holmes-Rovner M, Gaulden CM, et al. Interventions for providers to promote a patient-centred approach in clinical consultations. Cochrane Database Syst Rev. 2012;12. doi: 10.1002/14651858.CD003267.pub2.

- 24. Culver JO, Bowen DJ, Reynolds SE, Pinsky LE, Press N, Burke W. Breast cancer risk communication: assessment of primary care physicians by standardized patients. Genet Med. 2009;11:735–41. doi: 10.1097/GIM.0b013e3181b2e5eb.

- 25. Ling BS, Trauth JM, Fine MJ, et al. Informed decision-making and colorectal cancer screening: is it occurring in primary care? Med Care. 2008;46:S23–9. doi: 10.1097/MLR.0b013e31817dc496.

- 26. Katz ML, Broder-Oldach B, Fisher JL, et al. Patient-provider discussions about colorectal cancer screening: who initiates elements of informed decision making? J Gen Intern Med. 2012;27:1135–41. doi: 10.1007/s11606-012-2045-1.

- 27. Zapka JM, Klabunde CN, Arora NK, Yuan G, Smith JL, Kobrin SC. Physicians’ colorectal cancer screening discussion and recommendation patterns. Cancer Epidemiol Biomarkers Prev. 2011;20:509–21. doi: 10.1158/1055-9965.EPI-10-0749.

- 28. Mosen DM, Feldstein AC, Perrin NA, et al. More comprehensive discussion of CRC screening associated with higher screening. Am J Manag Care. 2013;19:265–71.

- 29. Kelly PA, Haidet P. Physician overestimation of patient literacy: a potential source of health care disparities. Patient Educ Couns. 2007;66:119–22. doi: 10.1016/j.pec.2006.10.007.

- 30. Peterson NB, Dwyer KA, Mulvaney SA, Dietrich MS, Rothman RL. The influence of health literacy on colorectal cancer screening knowledge, beliefs and behavior. J Natl Med Assoc. 2007;99:1105–12.

- 31. Miller DP, Jr, Brownlee CD, McCoy TP, Pignone MP. The effect of health literacy on knowledge and receipt of colorectal cancer screening: a survey study. BMC Fam Pract. 2007;8:16. doi: 10.1186/1471-2296-8-16.

- 32. Sabatino SA, Lawrence B, Elder R, et al. Community Preventive Services Task Force. Effectiveness of interventions to increase screening for breast, cervical, and colorectal cancers: nine updated systematic reviews for the guide to community preventive services. Am J Prev Med. 2012;43:97–118. doi: 10.1016/j.amepre.2012.04.009.

- 33. Wu S, Crespi CM, Wong WK. Comparison of methods for estimating the intraclass correlation coefficient for binary responses in cancer prevention cluster randomized trials. Contemp Clin Trials. 2012;33:869–80. doi: 10.1016/j.cct.2012.05.004.

- 34. Hade EM, Murray DM, Pennell ML, et al. Intraclass correlation estimates for cancer screening outcomes: estimates and applications in the design of group-randomized cancer screening studies. J Natl Cancer Inst Monogr. 2010;40:97–103. doi: 10.1093/jncimonographs/lgq011.

- 35. Rethans JJ, Gorter S, Bokken L, Morrison L. Unannounced standardised patients in real practice: a systematic literature review. Med Educ. 2007;41:537–49. doi: 10.1111/j.1365-2929.2006.02689.x.

- 36. Luck J, Peabody JW. Using standardised patients to measure physicians’ practice: validation study using audio recordings. BMJ. 2002;325:679. doi: 10.1136/bmj.325.7366.679.

- 37. Peabody JW, Luck J, Glassman P, Dresselhaus TR, Lee M. Comparison of vignettes, standardized patients, and chart abstraction: a prospective validation study of 3 methods for measuring quality. JAMA. 2000;283:1715–1722. doi: 10.1001/jama.283.13.1715.

- 38. Srinivasan M, Franks P, Meredith LS, Fiscella K, Epstein RM, Kravitz RL. Connoisseurs of care? Unannounced standardized patients’ ratings of physicians. Med Care. 2006;44:1092–8. doi: 10.1097/01.mlr.0000237197.92152.5e.

- 39. Franks P, Fiscella K, Shields CG, et al. Are patient ratings of their physicians related to health outcomes? Ann Fam Med. 2005;3:229–234. doi: 10.1370/afm.267.