Abstract

Functioning program infrastructure is necessary for achieving public health outcomes. It is what supports program capacity, implementation, and sustainability. The public health program infrastructure model presented in this article is grounded in data from a broader evaluation of 18 state tobacco control programs and previous work. The newly developed Component Model of Infrastructure (CMI) addresses the limitations of a previous model and contains 5 core components (multilevel leadership, managed resources, engaged data, responsive plans and planning, networked partnerships) and 3 supportive components (strategic understanding, operations, contextual influences). The CMI is a practical, implementation-focused model applicable across public health programs, enabling linkages to capacity, sustainability, and outcome measurement.

For decades, infrastructure has been promoted as the key to public health achievements.1–3 General reports, models, and frameworks, including those of Baker et al. and Turnock, have sought to clarify infrastructure.4–8 Historically, when public health infrastructure has been discussed in the literature, it has been in reference to the larger, societal system level.1,2,4–8 This level of infrastructure provides the capacity to respond to threats to the nation’s health.2 In this article, we focus on program infrastructure, which is distinct from, but an essential building block of, the larger system level of public health infrastructure. However, program infrastructure is still broadly described with abstract terms such as “platform” or “organizational capacity” and rarely operationalized in logic models or measured in the public health, intervention, or evaluation literatures.9 There remains a lack of definition and few clear depictions of program-level infrastructure, which makes it difficult for public health programs to design evaluations and build an evidence base for the role of infrastructure in achieving health outcomes. Program infrastructure is the foundation that supports program capacity, implementation, and sustainability.9,10 Components of a functioning program infrastructure lead to capacity, which enables action (implementation) and is linked to outcomes and sustainability. Therefore, components of program infrastructure are best defined in a practical manner that lends itself to straightforward implementation and evaluation.11

In previous work, we reviewed and discussed 1 model of oral health program infrastructure, the Ecological Model of Infrastructure (EMI), and assessed its applicability across a broader context of public health programs.9 Although this model was a first step toward defining program infrastructure, additional work was necessary to fully construct a measurable model of public health program infrastructure. In particular, the EMI was lacking concrete examples and 2 vital elements: outcomes and sustainability. Moreover, the EMI’s narrow focus on state plans overlooked the planning process’s importance to program infrastructure, as well as the significance of other plans (e.g., evaluation, communication, sustainability plans), and did not consider the model as a complex system with connections across its core elements.

Our new model of public health program infrastructure addresses the EMI’s limitations and defines infrastructure in a practical, actionable, and evaluable manner. It demonstrates how grant planners, evaluators, and program implementers can ultimately link infrastructure to capacity, measure success, and increase the likelihood of sustainable health achievements. The model contains core and supportive components that link to capacity, outcomes, and sustainability.

LESSONS LEARNED FROM TOBACCO CONTROL PROGRAMS

Key public health organizations, including the Institute of Medicine and the US Department of Health and Human Services, have recommended infrastructure development for the past decade.1,2,12 Over the past several decades, state and local tobacco control programs (TCPs) have successfully reduced smoking initiation, eliminated exposure to secondhand smoke, and promoted smoking cessation.12–15 Given their history of success in improving the public’s health, they often serve as an example for other public health initiatives.16–19 TCPs have long recognized the importance of developing program infrastructure to reach goals and outcomes.12,20,21 Even the tobacco industry has acknowledged the importance of TCP infrastructure in preventing tobacco use and promoting tobacco cessation.22 In an internal document reviewing the American Stop Smoking Intervention Study (ASSIST), the Tobacco Institute considered infrastructure at the national and local levels a threat to its goals:

In California our biggest challenge has not been the anti-smoking advertising created with cigarette excise tax dollars. Rather, it has been the creation of an anti-smoking infrastructure, right down to the local level. It is an infrastructure that for the first time has the resources to tap into the anti-smoking network at the national level.22(p1)

The tobacco industry feared the implications of an integrated tobacco control infrastructure able to work on multiple levels more than it did the evidence-based practice of tobacco control media campaigns.14,22 Neglecting program infrastructure disregards the “organizational platform that creates the capacity to deliver”10(p8) and often results in an unsteady foundation and overstretched staff and partners.10,23

The CMI presented in this article may be pertinent to a wide range of public health programs because it draws on data from tobacco prevention and control initiatives,16–19 as well as from multiple types of public health programs. It is grounded in data from a broader evaluation of state-level TCPs conducted by the Office on Smoking and Health at the Centers for Disease Control and Prevention (CDC). Over 3 phases, we explored program infrastructure, capacity, progress on outcomes, and sustainability in 18 state-level TCPs. Table 1 provides an overview of selection criteria, data collection, and evaluation team composition for all 3 phases. In addition, we incorporated what we had learned from previous work, including published literature related to TCPs; a literature review of diverse public health program infrastructure articles (e.g., asthma, diabetes, oral health, physical activity, violence and injury, HIV/AIDS, substance abuse); and theories from other disciplines such as sociology, organizational development, anthropology, and economics.9,10,23

TABLE 1—

Overview of Data Collection During the 3 Phases of Infrastructure Evaluation of 18 State-Level TCPs

| Purpose, Selection Criteria, Data Collection, and Evaluation Team | Phase 1, State TCP Evaluation Case Study | Phase 2, State TCP Infrastructure Evaluation Call Study | Phase 3, State TCP Evaluation Site Visits |
| --- | --- | --- | --- |
| Original purpose of data collection | Case study to explore themes related to tobacco control and prevention activities after the passage of a smoke-free law, infrastructure, and ARRA | Call study to explore themes related to program infrastructure and progress on ARRA | Regularly scheduled technical assistance site visits to discuss infrastructure, progress, and technical assistance needs |
| Selection criteria for states participating | ARRA components (is state successfully making progress on ARRA tobacco control initiatives? Achieve ≥ 90% of state work plan tasks by selection date)23,24; ARRA component 2 (does state have competitive funding for ARRA?); Smoke-free law (does the state have a comprehensive smoke-free law? All 4 states in this case study did); Geographic diversity (are states located in areas throughout the United States?); Technical assistance (evaluator attended technical assistance site visit) | ARRA components (is state successfully making progress on ARRA tobacco control initiatives? Achieve ≥ 90% of state work plan tasks by selection date)23,24; ARRA component 2 (does state have competitive funding for ARRA?); Geographic diversity (are states located in areas throughout the United States?) | Geographic diversity (are states located in areas throughout the United States?); Technical assistance (evaluator attended technical assistance site visit) |
| States participating | CO, MA, MI, WA | KS, KY, MN, MS, NM, OK, OR, TX, UT | ID, NH, NC, ND, OH |
| Data collection methods | 60- to 90-min in-person group discussions | 60- to 90-min telephone group discussions | 30-min to 8-h in-person discussions |
| Data | Recorded and transcribed verbatim conversations and field notes | Recorded and transcribed verbatim conversations and field notes | Field notes |
| No. of interviews conducted | 43 (including 1 combined group discussion with all 4 sites at an ARRA technical assistance meeting in Atlanta, GA) | 9 phone calls with each state plus 4 group calls with 2–3 of the same 9 states participating in each call | 5 site visits (ranging from 2 to 4 d) |
| Dates of data collection | March 2011–May 2011 | April 2011–June 2011 | May 2011–September 2011 |
| Evaluation team composition (lead PI is the same for all 3 phases) | Lead PI; 3 senior researchers–evaluators; 4 supervised fellows plus evaluation contractors | Lead PI; 2 senior researchers–evaluators; 2 supervised fellows plus evaluation contractors | Lead PI; 1 senior researcher–evaluator plus 1 evaluation contractor |

Note. ARRA = American Recovery and Reinvestment Act; PI = principal investigator; TCP = tobacco control program.

SAMPLE

We used purposive and criteria-based sampling methods to select states representing diverse geography and progress in program achievements in tobacco control and prevention. We defined progress as achieving at least 90% of stated work plan tasks or having a statewide smoke-free air law. First, we generated a list of TCPs meeting the progress criteria. From that list, we chose programs that met geographic diversity criteria (geographic spread, with 7 of the 10 US Department of Health and Human Services regions represented). A committee of CDC program consultants then met with the evaluation team to select programs for each phase on the basis of which programs they perceived to have information-rich informants.24,25 Although participation was voluntary, all programs selected chose to participate in each phase, which allowed for a deeper understanding of the variation in infrastructure contexts and of the factors critical to achieving program goals. Table 1 provides an overview of selection criteria for each phase.
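The first 2 screening steps described above can be sketched in code. The Python example below is illustrative only: the program labels, field names, and values are hypothetical and are not study data, and the final committee selection of information-rich programs was a judgment step that the sketch does not attempt to model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Program:
    name: str               # hypothetical label, not a study state
    hhs_region: int         # US DHHS region number (1-10)
    tasks_completed: float  # share of stated work plan tasks achieved (0.0-1.0)
    smoke_free_law: bool    # statewide smoke-free air law in place


def meets_progress(p: Program, threshold: float = 0.90) -> bool:
    """Progress criterion: at least 90% of work plan tasks OR a smoke-free law."""
    return p.tasks_completed >= threshold or p.smoke_free_law


def regions_covered(programs) -> set:
    """DHHS regions represented, used to judge geographic diversity."""
    return {p.hhs_region for p in programs}


# Hypothetical candidates for illustration only.
candidates = [
    Program("Program A", 1, 0.95, False),
    Program("Program B", 4, 0.70, True),
    Program("Program C", 4, 0.60, False),
    Program("Program D", 8, 0.90, False),
]

eligible = [p for p in candidates if meets_progress(p)]
print([p.name for p in eligible])         # programs passing the progress screen
print(sorted(regions_covered(eligible)))  # regions represented among them
```

In this toy list, Program C is screened out because it meets neither part of the progress criterion; the remaining programs would then go forward to the committee review.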

OVERALL DATA COLLECTION AND ANALYSIS

Over 3 phases, we used a combination of in-person and telephone group discussions to collect data (Tables 1 and 2). We entered all recorded and transcribed data and field notes into ATLAS.ti (ATLAS.ti Scientific Software Development GmbH, Berlin, Germany). In preparation for each visit or call, the project evaluation team undertook an extensive review of background documents, including, as appropriate for each site, documents covering the period in which ASSIST was implemented (1991–1999).

TABLE 2—

Data Collection via Participant Type Across State-Level TCPs by State and Number of Groups: March 2011–May 2011

| Discussion Group Participants | Colorado | Massachusetts | Michigan | Washington |
| --- | --- | --- | --- | --- |
| Program staff (internal to program) | 1 | 1 | 1 | 1 |
| Chronic disease programs collaborating with TCPs (external to program) | 1 | 1 | 1 | 1 |
| Coalitions and partners (external to program) | 2 | 4 | 3 | 4 |
| Quitline staff (both internal and external to program) | 1 | 1 | 1 | 1 |
| Voluntary organizations (e.g., heart, lung, and cancer associations; external to program) | 1 | 1 | 1 | 1 |
| ARRA partners (external to program) | 1 | 1 | 2 | 1 |
| Program director (internal to program) | 2 | 2 | 2 | 2 |
| Single joint state discussion (internal to program) | ✓ | ✓ | ✓ | ✓ |

Note. ARRA = American Recovery and Reinvestment Act; TCP = tobacco control program.

We used multiple systematic qualitative analytic techniques, including theme analysis, memos, network diagrams, member checking, and triangulation of data.26 To verify and test the applicability of findings and cross-verify emergent interpretations, we used member checking and data triangulation techniques.25,27 The purpose was to generate theoretical concepts, not to apply theory. The model framework grew out of the constant interaction between the participants’ lived experiences, our previous knowledge, and constructs that emerged through member checking and feedback loops.

PHASE 1, CASE STUDY EVALUATION

We conducted 43 group discussions (see Table 2) across 4 state-level TCPs. Participants included program directors and staff, staff from other chronic disease programs within the health department, state- and local-level coalition members, partners and stakeholders external to the program, quitline vendor staff, and staff from voluntary organizations (e.g., American Lung Association). We used an unstructured guide with 6 key domains: program infrastructure, program implementation, capacity, progress on outcomes, sustainability, and elimination of disparities experienced. Participants were not prompted on specific categories of infrastructure components but described the themes in an organic, open-ended way as they discussed their program progress and growth. A typical starter question was “Describe for us what it looked like on the ground—if we could see it in action—how you started and implemented your program.” Probes included asking what aspects participants thought were most important to success, what the barriers were, and how the infrastructure they described related to progress.
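The total of 43 can be checked against Table 2. The short Python sketch below tallies the per-state group counts reported in Table 2 and adds the single joint discussion with all 4 sites noted in Table 1; the variable names are ours and are used only for this arithmetic check.

```python
# Group counts by participant type, copied from Table 2.
# Column order: Colorado, Massachusetts, Michigan, Washington.
states = ["Colorado", "Massachusetts", "Michigan", "Washington"]
table2 = {
    "Program staff": [1, 1, 1, 1],
    "Chronic disease programs": [1, 1, 1, 1],
    "Coalitions and partners": [2, 4, 3, 4],
    "Quitline staff": [1, 1, 1, 1],
    "Voluntary organizations": [1, 1, 1, 1],
    "ARRA partners": [1, 1, 2, 1],
    "Program director": [2, 2, 2, 2],
}

# Sum each state's column across all participant types.
per_state = {
    state: sum(row[i] for row in table2.values())
    for i, state in enumerate(states)
}

# The single joint discussion involved all 4 states, so it is counted once.
joint_discussions = 1
total = sum(per_state.values()) + joint_discussions

print(per_state)  # {'Colorado': 9, 'Massachusetts': 11, 'Michigan': 11, 'Washington': 11}
print(total)      # 43
```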

We used a grounded theory approach to data analysis during this phase because the flexible analytic techniques allowed us to focus our data collection and build our model through successive levels of data analysis and conceptual development.28,29 We first used line-by-line coding and then focused on conceptual coding, and we used an inductive approach to progressively collect, code, and analyze data between site visits.29 Participants interpreted their experiences related to infrastructure, capacity, strategies, progress, and outcomes at each site visit. We in turn made successive analytic sense of their meanings and actions, integrating memos and codes and diagramming actions. Regular meetings were held with the entire evaluation team to interpret and discuss findings and to construct thematic areas and theoretical concepts. This progressive analysis allowed us to seek specific details and fill in data gaps at each successive visit. The relationships between experiences and events were revised and confirmed as the model itself and its components emerged.29,30

We used structural codes based on our original work and the EMI9 to improve code wording and fit after our initial line-by-line coding for each site visit (essential elements, state plans, partnerships, leadership, managed resources, engaged data, strategic understanding, tactical action, and contextual influences). The initial line-by-line coding was conducted by at least 2 raters, and discrepancies were resolved through group discussion. On completion of phase 1, the code book reflected the CMI components as they stood at that point, and it was used in phases 2 and 3 to code data (e.g., continued support, strategic understanding, operations, networked partnerships, responsive plans and planning, managed resources, engaged data, multilevel leadership, capacity, outcomes, sustainability, contextual influences, and subcodes related to component characteristics). Examples of new codes not previously found in the EMI included “continued support,” “capacity,” “outcomes,” and “sustainability.” In addition, we refined some codes, such as “responsive plans/planning” instead of “state plans” and “multilevel leadership” instead of “leadership.”
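As an illustration of the double-coding step, the Python sketch below flags text segments on which 2 raters applied different codes, so that those segments can be taken to group discussion. It is a minimal sketch under stated assumptions: the segment identifiers and code assignments are hypothetical, the code labels are borrowed from the code book list above, and the function is not a feature of ATLAS.ti or a record of how the evaluation team tracked discrepancies.

```python
# Hypothetical code assignments by 2 raters; not study data.
rater_a = {
    "seg-01": {"multilevel leadership"},
    "seg-02": {"managed resources", "networked partnerships"},
    "seg-03": {"engaged data"},
}
rater_b = {
    "seg-01": {"multilevel leadership"},
    "seg-02": {"managed resources"},
    "seg-03": {"responsive plans and planning"},
}


def find_discrepancies(a, b):
    """Map each disputed segment to (codes only rater A used, codes only rater B used)."""
    disputed = {}
    for segment in sorted(set(a) | set(b)):
        codes_a = a.get(segment, set())
        codes_b = b.get(segment, set())
        if codes_a != codes_b:
            disputed[segment] = (codes_a - codes_b, codes_b - codes_a)
    return disputed


to_discuss = find_discrepancies(rater_a, rater_b)
for segment, (only_a, only_b) in to_discuss.items():
    print(segment, "rater A only:", sorted(only_a), "rater B only:", sorted(only_b))
```

Segments with identical code sets (here, seg-01) drop out, leaving only the segments that need resolution by the group.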

Grounded theory was particularly well suited to this phase of analysis because it provides tools for analyzing processes and identifying participants’ meanings and actions and how their worlds are constructed.29 The resulting model shows patterns and connections drawn from the participants’ collective experience.

PHASE 2, CALL PROJECT EVALUATION

For thematic confirmation, senior evaluators and the principal investigator conducted 13 phone discussions with state-level TCP program managers and internal staff using a semistructured guide based on the evolving CMI from phase 1 (Table 1). Recorded interviews and discussions were transcribed. Participants were presented with the draft CMI graphic and asked to add or delete components, as well as probed for additional stories related to infrastructure and progress on outcomes. Participants were also probed for explicit details relating to linkages among components, capacity, and outcomes. In this phase of the project, participants were asked to describe specific infrastructure components with prompts such as “Describe what role leadership played in implementing your program.”

We used an applied thematic analysis technique to confirm and enhance our understanding of the infrastructure model that emerged from the data in phase 1.30 We used a priori or structural codes related to the infrastructure components developed during phase 1 to code the transcripts from phase 2.31 However, we remained open to emergent codes based on close reading of the text by at least 2 team members. We began to identify confirmation and saturation points during analysis of the first TCP transcript but continued the iterative process through all 9 transcripts.28,29,32,33

PHASE 3, SITE VISIT REVIEW EVALUATION

Even though we experienced saturation29,33,34 early in phase 2, we implemented phase 3 with 5 state TCPs to further validate model components identified in phases 1 and 2 (Table 1). Site visit discussions were not recorded in this phase; instead, an experienced evaluator who participated in phases 2 and 3 took detailed field notes. A state TCP program manager, along with other internal staff and external partners, was present at each site visit. Stories collected during site visits regarding program implementation and progress on outcomes were compared with data collected during phases 1 and 2. Participants were prompted to provide responses related to specific components of infrastructure, using questions such as “What role did partners have in the success of this project?”

We used applied thematic analysis techniques to validate model components and structures,33 using the coding scheme developed in phases 1 and 2. We compared phase 1 and 2 data with phase 3 data to examine the differential expression of themes related to program infrastructure across groups.30

To develop a model in which we could see program infrastructure from different vantage points and create new meaning, we used our studied experiences and took them apart to make comparisons, follow leads, and build on ideas. To strengthen this process, we presented the model to different practitioner groups for further interpretation and feedback. We wanted to know whether our theoretical rendering followed what public health practitioners from many disciplines experienced in their day-to-day activities—how they interpreted the actions and processes of public health program infrastructure. We reviewed initial findings and a draft of the model with experts on tobacco control and prevention, sociology, community psychology, organizational psychology, education, evaluation, and other CDC staff. We presented detailed information on methods and preliminary model components at a CDC evaluation forum on public health program infrastructure (August 2011), at 2 Coordinated Chronic Disease Prevention and Health Promotion Program regional grantee meetings (February and March 2012) and at the evaluation ancillary meeting for the National Conference on Tobacco or Health (August 2012). Extensive field notes were taken at every meeting. After each presentation, participants worked in small groups with a facilitator to discuss model components. The facilitator took notes at each table so that this information could be added to our analysis. In addition, participants at these meetings provided written feedback on model components, the model graphic, and component naming structures on their evaluation forms. In total, we engaged in member-checking activities with more than 400 public health practitioners.27 We integrated these informed responses into our analysis to refine and validate model components.

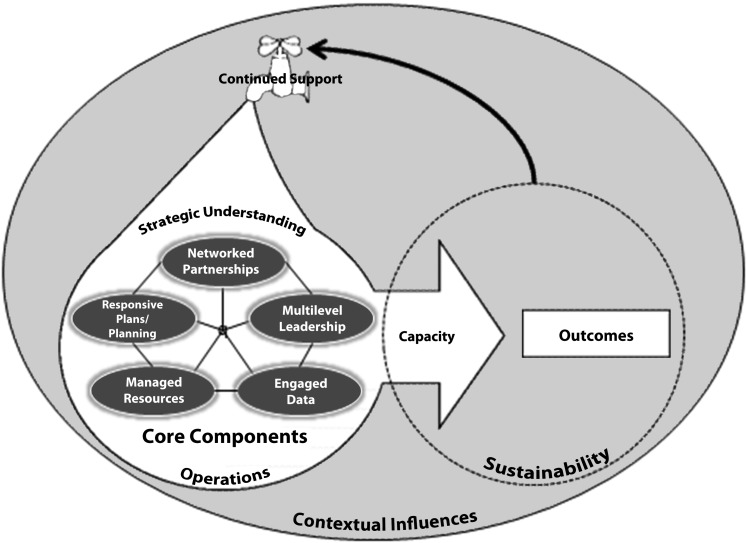

The CMI was developed to portray a deeper, expanded understanding of program infrastructure than the EMI9 and to describe infrastructure in a manner that is practical and broadly applicable as well as conducive to measurement and implementation. The CMI is more comprehensive and nuanced than the EMI in that it portrays the link among infrastructure, outcomes, and sustainability, as well as the critical nature of continued support to functioning program infrastructure. Figure 1 illustrates the interaction of the CMI’s core components and the connection to capacity, outcomes, and sustainability that was missing from the EMI.

FIGURE 1—

Component Model of Infrastructure.

CORE COMPONENTS

At the heart of the CMI are 5 interrelated core components: multilevel leadership, managed resources, engaged data, responsive plans and planning, and networked partnerships. These components are crucial to the program’s existence and essential for progress regardless of funding because they are the more tangible building blocks that funders and others can apply to guidance documents, technical assistance, and best practices on infrastructure implementation. Similar unelaborated elements were also found to be core to the EMI and supported by the literature review across public health programs.9 An abundance of literature has been published in the organizational and public health arena about the individual components. However, there has been little discussion about the interaction of these components, nor have they been depicted within a schema of public health program infrastructure leading to capacity and sustainability.

Multilevel Leadership

“Multilevel leadership” is defined as the people and processes that make up leadership at all levels that interact with and have an impact on the program. It includes leadership in the state health department or other organizational unit in which the program is located, as well as leadership from other decision-makers, leadership within the program beyond the program manager and across programs that have related goals, and leadership at the local level. Several TCP staff made statements such as the following:

I think we also had a lot of support from our leadership, our Secretary of Health, bringing the heads of our sister agencies together and making sure that—that everyone was on the same page.

We’ve been extremely lucky that we’ve had leaders from many different sectors, not just the major nonprofits, but we’ve also had champion legislators. And, we’ve also had local partners that have joined us to help with the sustainability.

Our [local health department] is on board, and they support what we do. And that’s good. . . . And it’s crucial to have their support.

Most program staff interviewed agreed that without upper-level support and leadership, they would not have been able to make progress on health achievements, or at times even maintain the progress they had already achieved. All staff stated that engaging lateral leadership and leadership at the local level extended the program’s reach, facilitated leveraging resources, and promoted sustainability. In addition, promoting leadership within the program developed a sense of program ownership and contributed to staff succession planning. Shared ownership contributes to members taking responsibility and initiating innovations that may result in further progress on health achievements.

As a core component, leadership has to be sought out and cultivated at all levels. It does not appear because staff or partners are in place or a program director is hired. Leadership is a team function that is more concerned with interactions with others than with individual knowledge.35 Strong leadership at all levels is crucial to promote the development of relationships, communication, funding, and direction, and it can enhance the interactive link among program components.9,36 For example, in the partnership component, leadership has been shown to be key in all phases of successful partnership development.37

Managed Resources

“Managed resources” refers to funding and social capital or relationships that produce social benefits.38 Participants went beyond the obvious definition of funding to describe other resources that are essential to programs, including staff and partners who continue to grow through training, financial acumen, and technical assistance:

managed resources, to include, . . . human resources—not HR, but the staff capacity, the relationships that we have with our partners, the ability to share resources with our partners, whether they’re in terms of staffing, finance, technical assistance and training, all of those things.

Program staff explained that just having staff was not sufficient. They benefited from staff who could be experts in a number of areas, which often requires continuous cross-training. Additionally, quality of staff was more important than quantity of staff. As Goodman et al.38 noted, quality staff can be the most valued managed resource. As one participant stated,

Managing resources, when I think of that, the first thing that came to my mind is hiring good staff. We talk here in [state] about getting the right people on the bus. And, when you’ve got the right people on the bus, you’ll know where to drive it.

Furthermore, managed resources include actively leveraging and diversifying funds to ensure that the loss of no single source of funding could close the program doors: “So—and I say all our staff is [sic] always on the lookout for other grant opportunities outside of CDC. We just got a small grant for a women’s college initiative on cessation.”

Moreover, resources are managed so that the program is not seeking all potential sources of funding but instead focusing on funding with a purpose. This characteristic links directly to the responsive plans and planning and multilevel leadership components of the CMI. Orton et al.35 characterized chasing grants as poor management of resources, likening it to a business that lets the profit motive eclipse the good of the community.

Engaged Data

“Engaged data” refers to identifying and working with data in a way that promotes action and ensures that data are used to promote public health goals. As one participant explained, “And then, the information that’s collected, we stress not only collecting it, but sharing it, sharing it profusely within the coalition and in the community.” Facilitating the development of data sources and systems for local communities, as well as encouraging partner buy-in and support for state systems, is important to fully using data (i.e., engaging data) and creating functioning infrastructure.

As far as the data [go] . . . we see as a backbone, and really a core value, that we’ve really tried to infuse in all the work we do. And I think we’ve done a good job of creating a culture where data [aren’t] something that threaten people, but [are] something that we embrace.

Moreover, program staff described engaged data as essential to the evolution of their initiatives and overall sustainability. Data should not merely be collected and displayed on a Web site but should be followed up with action and technical assistance to ensure use.23,27 This process is one of the critical ways in which leaders (multilevel leadership) will engage partners (networked partnerships) in understanding the shared risks and rewards of taking part in the implementation of the strategic plan (responsive plans and planning).

Responsive Plans and Planning

“Responsive planning” (as part of the state strategic plan) is defined as a dynamic process that evolves and responds to contextual influences such as changes in the science, health department priorities, funding levels, and external support from the public and leadership. It also promotes action and the achievement of public health goals. This component is an example of another difference between the EMI and the CMI.9 With the additional data and analyses, it was clear that this component consisted of not just the plan itself but also the planning process.

The Institute of Medicine3 report Living Well recommended that the US Department of Health and Human Services support states in developing strategic plans with specific goals, objectives, actions, time frames, and resources. It further recommended planning that includes multiple stakeholders’ viewpoints, uses clear and consistent criteria for priority selection, develops clear and consistent objectives, and includes values of stakeholders.3 As one respondent said, “If we didn’t have strategic planning, we wouldn’t have a clue where we should go next.”

Responsive plans are collaboratively developed. They embody a shared ownership and responsibility for goals and objectives among the state program, partners, and local programs. Local coalition program plans often connect with the state plan: “We did not want to do a strategic plan and just give it to our partners and say, ‘Here, this is what you need to implement.’ We wanted the buy-in from those partners.”

Responsive plans evolve over time:

We are constantly revising it, based on new directions, new learning, shifts in funding, there’s all kinds of ways that the strategic plan is used and modified. So, it’s a living document. It’s not something that we create and then put in a drawer.

Orton et al.35 described plans as theoretical documents that are acceptable until they come up against barriers or changes in the environment (contextual influences) that affect how the program and partners (networked partnerships) can move forward. Therefore, an effective plan must be a living, dynamic document created to respond to changes, and good leaders (multilevel leadership) must plan to continually plan. An effective business plan can drive public health ideas toward implementation and sustainability.

Participants indicated that, increasingly, the strategic plan is not the only plan that is central to program infrastructure. More and more, programs and funding opportunity announcements discuss the importance of communications plans, evaluation plans, and program integration plans. These subplans often have goals and objectives that are drawn from the strategic plan and include many similar characteristics, such as being collaboratively developed, dynamic, flexible, and including evaluation feedback, illustrating another distinction between the EMI and the CMI—the inclusion of multiple plans.

Networked Partnerships

“Networked partnerships” is defined as strategic partnerships at all levels (national, state, and local), with multiple types of organizations (government, nonprofit), content areas (diabetes, mental health), and groups (champions, networks, research institutions) that are interconnected in such a way as to promote achievement of public health goals. In the data analysis, the partnership theme that emerged is that networked partnerships are diverse and not exclusively formed with one type of organization: “We built partnerships with these different disciplines in order to better understand each other’s way of working, and what would be an example of success.”

Much like social capital in managed resources, the networked partnerships component includes the concept of the right partnerships. It is more important to have fewer but strategic partnerships than a plethora of acquaintances. Partnerships are most effective when they are connected with one another as well as with the program. In addition, state program staff constantly mentioned that it was “all about the relationship” and that building relationships takes time: “You develop relationships over time. And you can—you can call on those relationships as needed. . . . It’s still always about relationships.”

Although many partners are working toward a common mission, they may fill different roles. In this way, networked partnerships can work to ensure all activities necessary to achieve public health outcomes are accomplished. Networked partnerships can be a platform from which programs are able to take advantage of opportunities and defend against threats to public health achievements: “The partnerships and coalitions have been essential to us in achieving the successes that we have.”

Networked partnerships can perform many roles for the public health program. They bring diversity to the forefront of the discussion, have the potential to expand financial and knowledge resources (managed resources), and add to the multilevel leadership base.39 To achieve sustainability and impact, these partners must do more than just share resources; they must share perspectives on the nature of the challenges and possible solutions.40 It is important that networked partners engage with the data and share in the planning and implementation of plans (responsive plans and planning).

SUPPORTIVE COMPONENTS OF INFRASTRUCTURE

The core components are the foundation of the CMI, but supportive components are also warranted to create a fully functioning infrastructure. Although the supportive components may not be as tangible as the core components, they are equally important and critical for functioning program infrastructure. These components are strategic understanding, operations, and contextual influences. In the CMI, these supportive components interact with and envelop the core components.

Strategic Understanding

Strategic understanding encompasses the ideas, guidelines, and thinking that initiate, nurture, and sustain infrastructure. The 3 defining characteristics of strategic understanding are perception of the problem as a public health issue, timed visibility, and planning for sustainability.

Health issues are often not perceived as public health issues. Even after the issue has been reframed so that it is perceived as a public health problem, additional effort is still warranted, including identifying new champions and novel discussion points and developing diverse partnerships. Timed visibility is the understanding by program staff and partners that a deeper awareness of the sociocultural and institutional environment is warranted before announcing or proceeding with one’s plans. For example, it may sometimes be beneficial to assess the contextual influences before proceeding. Sustainability planning should start at the beginning of the program, not at the conclusion of a funding cycle. As one respondent stated, “And so, I think that is a huge contributor to the future of this program . . . looking at sustainability [now].” Sustainability can be found in the CMI in responsive plans and planning, strategic understanding, enveloping capacity and outcomes, and the feedback loop back to functioning infrastructure. Its foundation is the strategic understanding that sustainability starts at the beginning of the funding cycle, not at the end.

Operations

Operations consist of the day-to-day work structures, communications, and procedures used to implement the program. Operations include staff roles, communications within the program and between the program and its partners, the program’s and health department’s structure and organization, and the program’s work coordinating with other public health areas and partners.

Formalized operations can facilitate better communication with networked partners, greater ability to manage resources, and more effective implementation of the strategic plan and planning. Without transparency, clearly established roles, and communication facilitating collaboration among staff, programs, and partners, the interconnections of infrastructure begin to fail. It is in operations that we see the foundation of coordination within and across program areas. All components, core and supportive, are necessary for the interactive nature of functioning program infrastructure to work.

Contextual Influences

Contextual influences envelop all of the other components, including outcomes, sustainability, and the feedback loop to continuing support and functioning infrastructure.

These broad influences, which consist of cultural values and shifting political, economic, and social priorities, are not always predictable, amenable to measurement, or under a program’s control. However, they are important to monitor because they can have both inhibiting and facilitating effects on functioning infrastructure.41

INTERACTION AMONG THE MODEL’S COMPONENTS

We believe that the CMI’s power lies in the interaction of its core components and their connection to a program’s outcomes. The CMI outlines complex, dynamic interactions within this schema. No single component of the CMI was found to be more important than the others, indicating the absence of a hierarchy. The core components interact with each other and with the supporting components to create a synergistic effect. We assessed this effect by considering the connection between the core and supporting components and their link to capacity and outcomes. Ideally, this synergism would produce the capacity for the implementation of evidence-based strategies to achieve positive public health outcomes and eventually sustainability (Figure 1). Multiple core components interact to accomplish public health outcomes.

When you have partnerships, you have people in the right places, and they have the right data that they need to know what they need to do, and you have good leadership leading those groups, who are managing resources wisely, and it’s all captured in a strategic plan, the ultimate fair and feasible outcome, really, is that [smoking] rates will decline.

CAPACITY AND SUSTAINABILITY

Capacity is the ability to implement interventions. One significant characteristic of the CMI is that once infrastructure is built and properly supported, it facilitates the program’s capacity to take advantage of opportunities, create opportunities, and defend against threats to achievement of its goals. The CMI is a framework for preparing a program to act swiftly in alignment with goals and partners no matter what challenges (or opportunities) it confronts. The American Recovery and Reinvestment Act (ARRA)42 is a good example of how this process works during unanticipated opportunities:

By having strong infrastructure in place . . . strategically you know the directions you’d like to go if resources were available. . . . So you are ready to move if resources do become available.

[Program name] had built a strong foundation for conducting this work before receiving the ARRA grant funds. . . . This 2-year project would not have been as successful if the program had not been well-positioned to “hit the ground running,” once funded . . . such funding [ARRA] would not be useful if a program lacks necessary infrastructure.

All states agreed that functioning infrastructure was essential to achieving and maintaining health outcomes. Anecdotal evidence from each state provided numerous examples of how infrastructure and outcomes were successfully linked, for example,

And almost instantly, when the program was shut down, we started to see declines in consumption slow, and possibly even increase. And we were able to show that this happened in those 4 years when our funding was redirected and our infrastructure shattered. CDC funding maintained our surveillance infrastructure while the program was reeling. That was really important to have those data to point to what happens when you dismantle a program. We were able to show with data and evidence-based practices that it is not a good use of our funds when we didn’t have other essential elements [infrastructure components] in place for a comprehensive program.

Previous work has defined sustainability as the ability to effectively implement evidence-based practices over time.43 Our data support this definition and indicate that the core components are necessary for creating the sustainability needed to achieve health outcomes: “One of the other outcomes will be that our programs will be sustainable. And that’s what I foresee as fair and feasible outcomes [of infrastructure] because we have these elements in place.”

Further illustrating the linkages among components, capacity, and outcomes is an example from a state with functioning infrastructure that enabled it to capitalize on an unanticipated funding opportunity. The state applied for ARRA funding to reduce the high prevalence of tobacco use in its population with mental illness by lessening exposure to secondhand smoke in treatment facilities and offering evidence-based cessation services. This state had all CMI components in place before the funding was awarded. The funding enhanced the state’s capacity to implement a new initiative focusing on the population with mental illness, which was in alignment with its strategic plan to reduce smoking in disparate populations. Specifically, its infrastructure and funding enabled it to

• Manage its current resources to train quitline staff and adapt protocols to meet the new responsibilities of staff in these institutions to address cessation with patients and provide them with treatment options. This included setting up systems to accept nicotine replacement therapy from the quitlines, monitor medication use, facilitate access to counseling calls from the quitlines, and adapt quitline intake questions to identify mental health issues;

• Build champions among the institutions, clients, and partners who would promote the program and ensure continuation past the funding period; and

• Create new data sources at the quitlines and institutions to track the program’s progress in reaching health outcomes.

One marker of the program’s success was engaging leaders with data, resulting in several new program champions and changes in approaches concerning the use of smoking as a behavioral reward. By the end of the project, many of these leaders supported access to smoke-free public facilities. This, along with the work of partners, led to the outcome of having a smoke-free policy and cessation protocol in place in all public mental health facilities in the state. Sustainability was addressed by having policies and leadership to support enforcement in place, nurturing a sense of ownership among leaders and partners, obtaining new quitline data about this priority population, training and educating staff and partners, and increasing relationships to support this effort.

We have, now, champions within those departments who will carry the tobacco message forward, where they haven’t been in the past. And we’re building capacity, I think, in a lot of different partners and in a lot of different levels. So, I think those may be some different ways of looking at infrastructure, but I think those are some really important measures that will carry forward, long beyond this project, that I think can be classified as infrastructure.

NEW CHALLENGES

Because the CMI is an evidence-based model that is just being introduced, its broad applicability and predictability have yet to be evaluated. One way to do this is to construct an instrument that operationalizes the CMI components and to assess its validity with other programs; this work is already under way. Another way to validate the model is to consider its theoretical similarities to other established frameworks. For example, one relevant issue is whether the CMI is a systems model and, if so, what the implications of that classification are. We raise this issue because systems thinking has been part of the public health dialogue for the past decade.44 In addition, work advocating for the development and application of systems science45 and integrative systems models46 to explain and assess complex public health interventions has increased. The CMI can be defined as a systems approach to program infrastructure because it is a dynamic conceptual model for understanding public health outcomes, and it exhibits many of the characteristics of complex systems identified by other researchers.44–46 However, developing a fully realized systems model of program infrastructure remains a task for the future; our focus here was on presenting the descriptive data and examples elaborating the CMI’s core components.

Finally, in the future, it would also be beneficial to compare the similarities and differences between the CMI and other significant public health frameworks. One example would be the Public Health Accreditation Board47 framework of voluntary standards, which was designed to evaluate how well state and local health programs carry out the core functions of public health. It has 12 domains that apply to all health departments, and within those domains are standards that pertain to a broad group of public health services. Although some overlap exists between the standards and the CMI components, a comparison is beyond the scope of this article. It is our hope that the CMI will spur many applications and research that will advance public health goals.

At this point, the limits of the model are not completely known. We should note that data were collected from state-level programs only. Although some partners represented local-level programs, the CMI at this point is intended to apply to state-level program infrastructure.

FUTURE APPLICATIONS

The CMI is a practical and actionable model of infrastructure. As represented in the CMI, infrastructure is built on 5 clearly defined core components. With these components, the CMI provides a framework for public health programs, not just TCPs, to develop and maintain their infrastructure. The CMI can also serve as a framework for developing guidance documents and best practices for infrastructure implementation. For example, funders across various public health programs can use the CMI components to write funding announcements that support program infrastructure. Because the 5 core components provide a tangible foundation, funders may use the model and characteristics in providing technical assistance.

The model can also be used as the basis for surveillance and evaluation of infrastructure. Public health programs can use the CMI’s components and their defining characteristics to assess progress on and maintenance of infrastructure. Such measurement may indicate movement toward public health goals when long-term outcomes are not yet available. In addition, surveillance data could be used to identify areas in which sustainability may not occur.

Developing a measurement tool for the CMI is a logical next step. Previous attempts to measure infrastructure were initiated without the guiding framework of a clear, evidence-based model of infrastructure that was applicable across public health programs.9 Therefore, a need exists to develop and pilot test an infrastructure measurement instrument for surveillance and evaluation. The CMI, by defining the characteristics of its core components (Table 3), provides a first step for surveillance methods to measure infrastructure. Currently, CDC’s Office on Smoking and Health is reviewing the combined project data to further define the characteristics that can facilitate the development of such an instrument.

TABLE 3—Examples of Core Component Characteristics of Component Model of Infrastructure

| Core Components | Examples of Characteristics |
| --- | --- |
| Multilevel leadership | Connected to a vision, plan, or direction |
| | Occurs at multiple levels (above, below, within, and laterally) |
| | Identification of, development of, and nurturing of champions |
| | Concept of ownership of programs at multiple levels |
| | Succession planning |
| | Formal and informal leadership, people and their expertise, and a dynamic process |
| | Can be teams across programs or levels |
| Managed resources | Diversified funding streams, leveraging, integration, coordination |
| | Staff expertise nurtured and sustained |
| | Technical assistance and extended training |
| | Sustainability planning, succession planning |
| | Staff and partners who continue to grow through training, financial acumen, and technical assistance |
| | Relationships |
| | Directed by strategic plan, vision, and mission instead of by funding source |
| Engaged data | Use of data to: |
| Surveillance | Increase program visibility |
| Evaluation | Attract partners |
| Monitoring | Secure and manage scarce resources |
| Needs assessment | Assist ready communication |
| | Understand community achievements and public health burden |
| | Drive program direction and planning |
| | Ignite passion |
| | Facilitate evolution of initiatives and overall sustainability |
| Responsive plans and planning | Dynamic, evolving, responsive, flexible—adjustments to state plan that result from learning from experience, science, and contextual influences |
| | Shared ownership—partners, coalitions, advisory groups, and consultants take an active role in designing and implementing objectives in the state plan |
| | Direction or roadmap—coordination point for program and partners; the plan is used, it does not just sit on a shelf |
| | Education and recruitment tool |
| | Progress yardstick—milestones for health achievements (objectives and evaluation included) |
| | Dynamic process and living documents that evolve and respond to the contextual influences while maintaining evidence-based strategy integrity and data-driven directives |
| | Specific goals, objectives, actions, time frames, and resources |
| | Planning that includes viewpoints from multiple stakeholders and uses clear and consistent criteria for priority selection |
| | Responsibility for goals and objectives are shared and local coalition and program plans connect with or grow out of the state plan |
| | Communication tool for partners and external constituents |
| | Evidence-based and context appropriate |
| Networked partnerships | Diversity beyond specific focus (integration and coordination) |
| Partners | Facilitate progress on health achievements and implementation of strategies |
| Coalitions | Extend program’s reach |
| Advisory groups | Fit state needs, structure, and political context |
| Networks | Contribute to leadership, diversified funding, sustainability, integration, coordination, and program growth |
| | Nurtured beyond fundee relationship |
| | Networked partnerships at all levels (national, state, and local), with multiple types of organizations (government, nonprofit), content areas (diabetes, mental health), and groups (champions, networks, research institutions) |
| | Multiply the work that the program can accomplish |
| | Fills different roles so diversity among partners is needed |

The CMI provides a framework for programs seeking to further elaborate the inputs section in the initial stages of their logic models. By building the infrastructure components contained in the CMI, public health programs can create a strong programmatic foundation and thereby position themselves to move to the latter stages of the logic model, including the achievement of public health outcomes. Improving public health depends on understanding complex adaptive models such as program infrastructure. Because the CMI is a practical, evidence-based model for planning, implementing, and sustaining public health programs, it provides a solid basis for facilitating a common understanding of program infrastructure.

Acknowledgments

K. Snyder and P. Rieker were supported under contract by funding through the CDC. Funding for the case study discussed in this work was provided by the CDC. State Tobacco Control and Prevention Program funding was provided through the CDC.

We thank the 18 state tobacco control and prevention programs that participated in the associated study: Colorado, Idaho, Kansas, Kentucky, New Hampshire, North Carolina, North Dakota, Massachusetts, Michigan, Minnesota, Mississippi, New Mexico, Ohio, Oklahoma, Oregon, Texas, Utah, and Washington. We also thank Deloitte Consulting (and subcontractors) for their participation in conceptualization, data collection, and initial analysis of the case study data as well as all of the public health practitioners and subject matter experts who participated in the interpretation meetings.

Note. The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the CDC.

Human Participant Protection

Because this study constituted public health practice, the CDC institutional review board determined that it did not require institutional review board approval.

References

- 1. Board on Health Promotion and Disease Prevention, Institute of Medicine. The Future of the Public’s Health in the 21st Century. Washington, DC: Institute of Medicine; 2002.

- 2. US Department of Health and Human Services. Healthy People 2020—public health infrastructure. Available at: http://www.healthypeople.gov/2020/topicsobjectives2020/objectiveslist.aspx?topicId=35. Accessed May 4, 2012.

- 3. Institute of Medicine. Living Well With Chronic Illness: A Call for Public Health Action. Washington, DC: Institute of Medicine; 2012.

- 4. Baker EL, Potter MA, Jones DL et al. The public health infrastructure and our nation’s health. Annu Rev Public Health. 2005;26:303–318. doi: 10.1146/annurev.publhealth.26.021304.144647.

- 5. Handler A, Issel M, Turnock B. A conceptual framework to measure performance of the public health system. Am J Public Health. 2001;91(8):1235–1239. doi: 10.2105/ajph.91.8.1235.

- 6. Powles JCF. Public health infrastructure and knowledge. Available at: http://www.who.int/trade/distance_learning/gpgh/gpgh6/en/index.html. Accessed December 17, 2012.

- 7. Turnock BJ. Public Health: What It Is and How It Works. Sudbury, MA: Jones & Bartlett; 2007.

- 8. US Department of Health and Human Services. Public Health’s Infrastructure: Every Health Department Fully Prepared; Every Community Better Protected; A Status Report. Atlanta, GA: Centers for Disease Control and Prevention; 1999.

- 9. Lavinghouze R, Snyder K, Rieker P, Ottoson J. Consideration of an applied model of public health program infrastructure. J Public Health Manag Pract. 2013;19(6):E28–E37. doi: 10.1097/PHH.0b013e31828554c8.

- 10. Chapman RC. Organization development in public health: the root cause of dysfunction. 2012. Available at: http://www.leadingpublichealth.com/LPH_White%20Paper_Root%20Causes.pdf. Accessed May 13, 2014.

- 11. Green LW. Public health asks of systems science: to advance our evidence-based practice, can you help us get more practice-based evidence? Am J Public Health. 2006;96(3):406–409. doi: 10.2105/AJPH.2005.066035.

- 12. National Cancer Institute. Greater Than the Sum: Systems Thinking in Tobacco Control. Bethesda, MD: National Cancer Institute; 2007. Tobacco Control Monograph 18.

- 13. Farrelly MC, Pechacek TF, Thomas KY, Nelson D. The impact of tobacco control programs on adult smoking. Am J Public Health. 2008;98(2):304–309. doi: 10.2105/AJPH.2006.106377.

- 14. National Center for Chronic Disease Prevention and Health Promotion, Office on Smoking and Health. Best Practices for Comprehensive Tobacco Control Programs—2007. Atlanta, GA: Centers for Disease Control and Prevention; 2007.

- 15. Cowling DW, Yang J. Smoking-attributable cancer mortality in California, 1979–2005. Tob Control. 2010;19(suppl 1):i62–i67. doi: 10.1136/tc.2009.030791.

- 16. Jay SJ, Torabi MR, Spitznagle MH. A decade of sustaining best practices for tobacco control: Indiana’s story. Prev Chronic Dis. 2012;9:E37. doi: 10.5888/pcd9.110144.

- 17. Lien G, DeLand K. Translating the WHO Framework Convention on Tobacco Control (FCTC): can we use tobacco control as a model for other non-communicable disease control? Public Health. 2011;125(12):847–853. doi: 10.1016/j.puhe.2011.09.022.

- 18. West R. What lessons can be learned from tobacco control for combating the growing prevalence of obesity? Obes Rev. 2007;8(suppl 1):145–150. doi: 10.1111/j.1467-789X.2007.00334.x.

- 19. Wipfli HL, Samet JM. Moving beyond global tobacco control to global disease control. Tob Control. 2012;21(2):269–272. doi: 10.1136/tobaccocontrol-2011-050322.

- 20. National Cancer Institute. ASSIST: Shaping the Future of Tobacco Prevention and Control. Bethesda, MD: National Cancer Institute; 2005. Tobacco Control Monograph 16.

- 21. National Cancer Institute. Evaluating ASSIST: A Blueprint for Understanding State-Level Tobacco Control. Bethesda, MD: National Cancer Institute; 2006. Tobacco Control Monograph 17.

- 22. Tobacco Institute. Overview of state ASSIST programs. Bates no. TI25390805. Available at: http://legacy.library.ucsf.edu/tid/qlr45b00. Accessed May 4, 2012.

- 23. Senge PM. The Fifth Discipline: The Art & Practice of the Learning Organization. New York, NY: Doubleday; 2006.

- 24. Brinkerhoff RO. The Success Case Method: Find Out Quickly What’s Working and What’s Not. San Francisco, CA: Berrett-Koehler; 2003.

- 25. Brinkerhoff RO. The success case method: a strategic evaluation approach to increasing the value and effect of training. Adv Developing Hum Resources. 2005;7(1):86–101.

- 26. Friese S. Qualitative Data Analysis with ATLAS.ti. Thousand Oaks, CA: Sage; 2012.

- 27. Patton MQ. Qualitative Research and Evaluation Methods. 3rd ed. Thousand Oaks, CA: Sage; 2002.

- 28. Corbin J, Strauss A. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. 3rd ed. Thousand Oaks, CA: Sage; 2007.

- 29. Charmaz K. Constructing Grounded Theory: A Practical Guide Through Qualitative Analysis. Thousand Oaks, CA: Sage; 2006.

- 30. Guest G, MacQueen KM, Namey EE. Applied Thematic Analysis. Thousand Oaks, CA: Sage; 2011.

- 31. MacQueen KM, McLellan E, Kay K, Milstein B. Codebook development for team-based qualitative analysis. Cultural Anthropol Methods. 1998;10(2):31–36.

- 32. Glaser BG, Strauss A. The Discovery of Grounded Theory: Strategies for Qualitative Research. Chicago, IL: Aldine; 1967.

- 33. Guest G, Bunce A, Johnson L. How many interviews are enough? An experiment with data saturation and variability. Field Methods. 2006;18(1):59–82.

- 34. Strauss A, Corbin J. Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. London, UK: Sage; 1998.

- 35. Orton SN, Menkens AJ, Santos P. Public Health Business Planning: A Practical Guide. Sudbury, MA: Jones & Bartlett Learning; 2009.

- 36. Ricketts KG, Ladewig H. A path analysis of community leadership within viable rural communities in Florida. Leadership. 2008;4(2):137–157.

- 37. Baker EL, Porter J. Practicing management and leadership: creating the information network for public health officials. J Public Health Manag Pract. 2005;11(5):469–473. doi: 10.1097/00124784-200509000-00018.

- 38. Goodman RM, Speers MA, McLeroy K et al. Identifying and defining the dimensions of community capacity to provide a basis for measurement. Health Education Behav. 1998;25(3):258–278. doi: 10.1177/109019819802500303.

- 39. Agranoff R. Inside collaborative networks: ten lessons for public managers. Public Adm Rev. 2006;66(suppl s1):56–65.

- 40. Porter J, Baker EL. Partnering essentials. J Public Health Manag Pract. 2005;11(2):174–177. doi: 10.1097/00124784-200503000-00013.

- 41. Lavinghouze SR, Price AQ, Parsons B. The environmental assessment instrument: harnessing the environment for programmatic success. Health Promot Pract. 2009;10(2):176–185. doi: 10.1177/1524839908330811.

- 42. American Recovery and Reinvestment Act of 2009, Pub L No. 111–5, 123 Stat. 115.

- 43. Center for Tobacco Policy Research, Washington University in Saint Louis. Program sustainability assessment tool project. Available at: http://cphss.wustl.edu/Projects/Pages/Sustainability-Project.aspx. Accessed May 11, 2012.

- 44. Trochim WM, Cabrera DA, Milstein B, Gallagher RS, Leischow SJ. Practical challenges of systems thinking and modeling in public health. Am J Public Health. 2006;96(3):538–546. doi: 10.2105/AJPH.2005.066001.

- 45. Luke DA, Stamatakis KA. Systems science methods in public health: dynamics, networks, and agents. Annu Rev Public Health. 2012;33:357–376. doi: 10.1146/annurev-publhealth-031210-101222.

- 46. Diez Roux AV. Conceptual approaches to the study of health disparities. Annu Rev Public Health. 2012;33:41–58. doi: 10.1146/annurev-publhealth-031811-124534.

- 47. Public Health Accreditation Board. Standards and measures. Available at: http://www.phaboard.org/accreditation-process/public-health-department-standards-and-measures. Accessed December 31, 2013.