Abstract

We examined the effects of acoustic bandwidth on bimodal benefit for speech recognition in adults with a cochlear implant (CI) in one ear and low-frequency acoustic hearing in the contralateral ear. The primary aims were to (1) replicate previous findings using a steeper filter roll-off to examine the low-pass bandwidth required to obtain bimodal benefit for speech recognition, and to extend those findings to different signal-to-noise ratios (SNRs) and talker genders; (2) determine whether the bimodal benefit increased with acoustic low-pass bandwidth; and (3) determine whether an equivalent bimodal benefit was obtained with acoustic signals of similar low-pass and pass-band bandwidth but different center frequencies. Speech recognition was assessed using words presented in quiet and sentences in noise (+10, +5 and 0 dB SNRs). Acoustic stimuli presented to the nonimplanted ear were filtered into the following bands: <125, 125–250, <250, 250–500, <500, 250–750 and <750 Hz, plus wide-band (full, nonfiltered bandwidth). The primary findings were: (1) the minimum acoustic low-pass bandwidth that produced a significant bimodal benefit was <250 Hz for a male talker in quiet and for female talkers in multitalker babble, but <125 Hz for male talkers in multitalker babble, and the bimodal benefit did not vary significantly with SNR; (2) the bimodal benefit increased systematically with acoustic low-pass bandwidth up to <750 Hz for a male talker in quiet and for female talkers in noise, and up to <500 Hz for male talkers in noise; and (3) a similar bimodal benefit was obtained with low-pass- and band-pass-filtered stimuli with different center frequencies (e.g. <250 vs. 250–500 Hz), indicating that multiple frequency regions contain useful cues for bimodal benefit. The clinical implications are that (1) all aidable frequencies should be amplified in individuals with bimodal hearing, and (2) verification of audibility at 125 Hz is unnecessary unless it is the only aidable frequency.

Keywords: Bimodal hearing, Bimodal benefit, Cochlear implants, Acoustic bandwidth

Introduction

According to Dorman and Gifford [2010], approximately 60% of modern-day adult implant recipients have potentially usable (i.e. aidable) residual hearing in the nonimplanted ear, defined as audiometric thresholds ≤ 80–85 dB HL at 250 Hz. Combining electric stimulation with a cochlear implant (CI) and acoustic stimulation is now commonly referred to as electric and acoustic stimulation (EAS). Combining electric hearing with acoustic hearing in the nonimplanted ear is also commonly termed ‘bimodal hearing’. The presence of acoustic hearing in the nonimplanted ear has prompted recent research to evaluate bimodal benefit.

Studies examining bimodal hearing have found bimodal benefit in quiet as well as in noise, both in CI listeners and in simulations with normal-hearing listeners [Turner et al., 2004; Dorman et al., 2005; Dunn et al., 2005; Kong et al., 2005; Chang et al., 2006; Ching et al., 2006; Mok et al., 2006; Gifford et al., 2007; Kong and Carlyon, 2007; Dorman et al., 2008; Brown and Bacon, 2009b; Zhang et al., 2010]. Because this benefit has been repeatedly established, research has turned to evaluating which speech cues contained in the low-frequency acoustic stimulus provide it. Determining the speech cues or frequency regions required to obtain bimodal benefit can improve clinical recommendations for hearing aid and CI use.

One speech cue that has been found to provide consistent bimodal benefit in multitalker babble is the fundamental frequency (F0) [Kong and Carlyon, 2007; Brown and Bacon, 2009b; Carroll et al., 2011; Sheffield and Zeng, 2012; Visram et al., 2012]. Visram et al. [2012] noted, however, that previous studies had presented noise in the CI stimulus but not in the acoustic stimulus, an unrealistic listening environment. Visram et al. [2012] found bimodal benefit when repeating this condition but no significant benefit from F0 cues when multitalker babble was included in the acoustic stimulus. Additionally, none of the studies found bimodal benefit in quiet with only F0. These findings suggest that the F0 contour and envelope amplitude modulation alone are not sufficient to provide bimodal benefit in quiet or when noise is included in the acoustic signal. However, Zhang et al. [2010] suggested that F0 provides most of the benefit, especially in quiet. Additionally, multiple studies have found that low-pass-filtered speech containing more speech cues (e.g. F1/F2) provides more bimodal benefit than F0 alone [Brown and Bacon, 2009a, b; Sheffield and Zeng, 2012].

Because low-pass-filtered speech provides more bimodal benefit than F0 alone, cues available in higher-frequency regions (e.g. 250–500 Hz) might provide similar or greater benefit than F0 or the <125 Hz frequency region. This possibility can be examined using band-pass-filtered acoustic stimuli, which have not been used to examine bimodal benefit. Band-pass filters can help determine which cues or frequency regions are required for bimodal benefit; for example, is energy <125 Hz necessary for bimodal benefit? This question is clinically important for two reasons. First, hearing aid output is rarely verified at 125 Hz. Second, current hearing aid receiver frequency responses roll off around 100–125 Hz. If speech energy <125 Hz were necessary for bimodal benefit, then hearing aid fitting prescriptions, verification and receiver output should be adjusted to ensure audibility in this frequency range. Thus, band-pass filters without energy <125 or <250 Hz were used to determine whether bimodal benefit can be obtained without energy at these frequencies. We hypothesized that a similar bimodal benefit would be obtained with filter bands of equivalent low-pass and pass-band bandwidths but different center frequencies because multiple speech cues contribute to bimodal benefit [Li and Loizou, 2007, 2008; Sheffield and Zeng, 2012].

A few studies have used low-pass-filtered speech to determine the low-pass bandwidth necessary for bimodal benefit, both in unilateral CI listeners and in simulations with normal-hearing listeners [Chang et al., 2006; Qin and Oxenham, 2006; Cullington and Zeng, 2010; Zhang et al., 2010]. Although specific speech cues were not examined, filtering helps to identify the frequency ranges necessary for bimodal benefit, from which possible speech cues can be inferred. Zhang et al. [2010] evaluated the low-pass bandwidth needed for maximum bimodal benefit in individuals with CIs rather than in simulations with normal-hearing listeners. They used a wider range of low-pass filter cutoffs for the contralateral acoustic stimulus than previous studies: 125, 250, 500 and 750 Hz, plus a wide-band (unprocessed) condition. All of the bimodal benefit in quiet was obtained with the 125 Hz low-pass filter; however, for speech recognition in multitalker babble, significantly more benefit was seen with the 750 Hz low-pass and wide-band stimuli than with the 125 Hz stimulus. The filters used by Zhang et al. [2010] had a shallow roll-off, possibly allowing audibility above the filter cutoff frequencies and influencing their conclusion that F0 provides the majority of the benefit (see figs. 2 and 3 in Zhang et al. [2010]). The current study sought to replicate the finding of bimodal benefit with <125 Hz using a steeper filter roll-off to isolate the individual frequency regions more precisely.

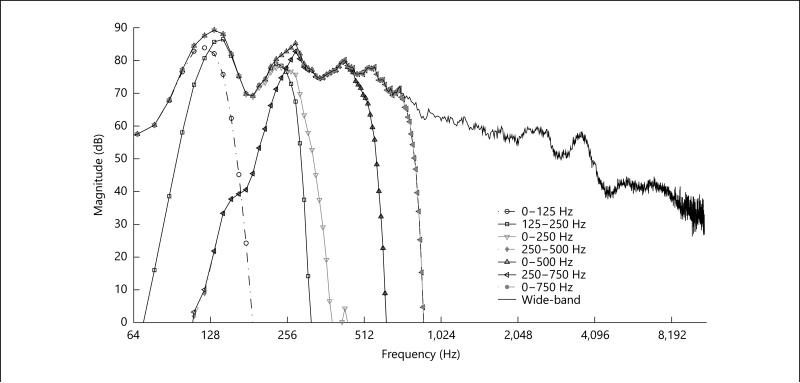

Fig. 2.

Mean acoustic spectrum envelope of 50 CNC words for each filtered condition.

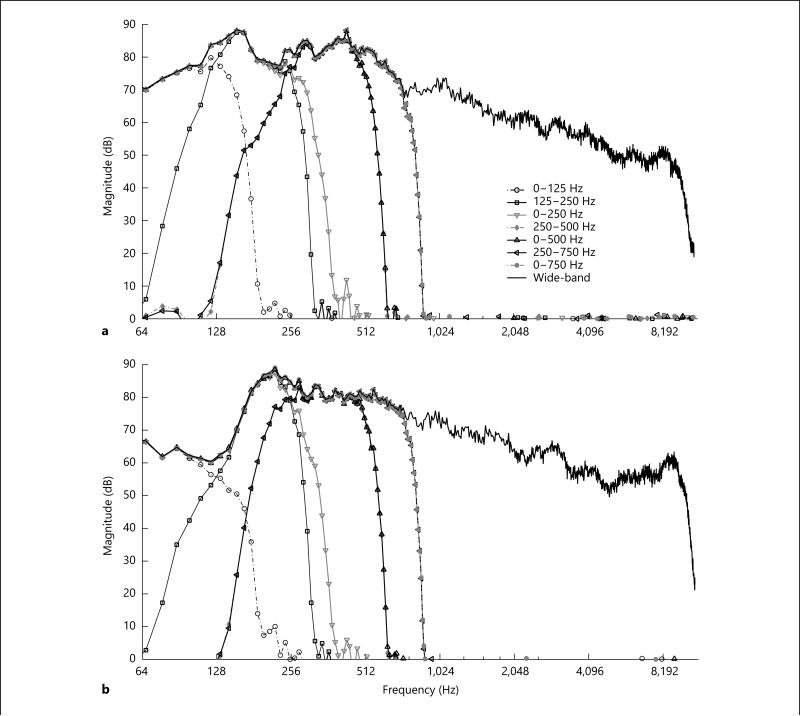

Fig. 3.

Mean acoustic spectrum envelope of 20 AzBio sentences for the male (a) and female (b) talkers for each filtered condition.

Some research has indicated that bimodal benefit increases with decreasing signal-to-noise ratio (SNR) [Gifford et al., 2007; Dorman et al., 2008]. Thus, the effect of acoustic low-pass or pass-band bandwidth on bimodal benefit may also vary across SNRs; to date, this has not been tested. Zhang et al. [2010] also noted that the effects of talker gender on bimodal benefit have not been fully examined. Any differences in bimodal benefit by talker gender would probably be due to differences in formant frequencies and harmonics between male and female talkers. Talker gender is also particularly relevant to examining the importance of F0 in this study, because the F0 of male talkers lies in a lower frequency region than that of female talkers. Therefore, the current study also examined the effects of both SNR and talker gender.

The current investigation examined bimodal benefit relevant to three study aims. The first was to replicate the results of Zhang et al. [2010] with a steeper filter roll-off to examine the minimum low-pass bandwidth required to obtain bimodal benefit for speech recognition in quiet and in multitalker babble. This first aim was also expanded to include different SNRs and talker genders. The second aim was to determine whether bimodal benefit increased with acoustic low-pass bandwidth, because Zhang et al. [2010] reported no increase in benefit with wider low-pass bandwidth for speech in quiet, contrary to previous research [Chang et al., 2006; Qin and Oxenham, 2006]. The third aim was to determine whether an equivalent bimodal benefit was obtained with acoustic signals of similar low-pass and pass-band bandwidth but different center frequencies. This aim was included to determine whether bimodal benefit can be obtained without energy <125 or <250 Hz. Based on previous research, the hypotheses for the study aims were: (1) a low-pass bandwidth of <125 Hz would provide significant bimodal benefit both in quiet and in noise for both male and female talkers [Gifford et al., 2007; Zhang et al., 2010]; (2) the bimodal benefit would increase with acoustic low-pass bandwidth [Chang et al., 2006; Zhang et al., 2010]; and (3) an equivalent benefit would be obtained with similar low-pass and pass-band bandwidths regardless of low-frequency range or center frequency because multiple cues contribute to bimodal benefit [Sheffield and Zeng, 2012].

Materials and Methods

Participants

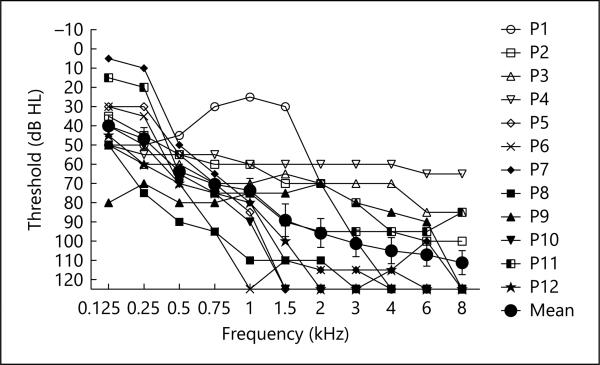

Twelve adult, postlingually deafened, bimodal listeners were recruited and consented to participate in accordance with Vanderbilt University Institutional Review Board approval. Each of the participants had at least 8 months (mean = 54.9 months) of listening experience with their CI and contralateral hearing aid. Each listener also had residual low-frequency acoustic hearing in the nonimplanted ear with pure-tone thresholds ≤ 80 dB HL at or below 500 Hz. These criteria were chosen to ensure that each listener had aidable hearing below 500 Hz based on the half-gain rule. One participant (P8) had a mixed hearing loss due to otosclerosis that was discovered following testing. The data from this participant were included in the analysis because NAL-NL1 [Byrne et al., 2001] targets for output were matched within 5 dB and the pattern of bimodal benefit by filter band was similar to those of the other participants. The individual and mean audiometric thresholds are displayed in figure 1. Demographic information for each CI listener is presented in table 1.

Fig. 1.

Individual participants (P1–P12) and mean nonimplanted ear audiometric thresholds.

Table 1.

Participant data

| Participant | Age, years | Gender | Processor | Implant ear | CI use, months |
|---|---|---|---|---|---|
| 1 | 49 | Female | CP810 | Right | 9 |
| 2 | 82 | Male | CP810 | Right | 138 |
| 3 | 62 | Male | Opus 2 | Left | 87 |
| 4 | 61 | Male | CP810 | Left | 77 |
| 5 | 52 | Female | CP810 | Right | 8 |
| 6 | 47 | Female | CP810 | Right | 8 |
| 7 | 46 | Female | Opus 2 | Left | 33 |
| 8 | 42 | Female | Harmony | Left | 60 |
| 9 | 64 | Female | Opus 2 | Left | 31 |
| 10 | 60 | Female | CP810 | Right | 96 |
| 11 | 53 | Female | CP810 | Left | 40 |
| 12 | 57 | Female | CP810 | Right | 73 |
| Mean | 56.3 | | | | 54.9 |
| SD | 10.7 | | | | 40.7 |

Speech Stimuli and Test Conditions

Speech recognition in quiet was assessed using consonant-nucleus-consonant (CNC) monosyllabic words [Peterson and Lehiste, 1962] in the following listening conditions: cochlear implant alone (CI-alone), acoustic alone (A-alone), and combined cochlear implant and acoustic hearing (bimodal). The CNC stimuli consist of 10 phonemically balanced lists of 50 monosyllabic words recorded by a single male talker. Zhang et al. [2010] reported that the mean F0 of the CNC words was 123 Hz with a standard deviation (SD) of 17 Hz.

Speech recognition in noise was assessed using the AzBio sentences [Spahr et al., 2012] at 0, +5 and +10 dB SNRs in a competing 20-talker babble. As in quiet, testing at +10 dB SNR was completed in three listening conditions: CI-alone, A-alone and bimodal. Because most bimodal listeners' A-alone scores would be expected to approach 0% at +5 dB SNR [Gifford et al., 2007], the +5 and 0 dB SNRs were tested only in the CI-alone and bimodal conditions. The AzBio sentences are organized into 33 lists of 20 equally intelligible sentences, each containing 6–10 words, spoken by 2 male and 2 female talkers. Zhang et al. [2010] reported that the mean F0 of the male talkers is 131 Hz (SD = 35 Hz) and that of the female talkers is 205 Hz (SD = 45 Hz). The 20-talker babble was presented in both the electric and the acoustic signals, unlike previous studies that included noise only in the electric (CI) stimulus [Brown and Bacon, 2009a, b]. The specific SNRs were chosen to replicate the study of Zhang et al. [2010] at +10 dB and to ensure that performance in the CI-alone condition was low enough for each participant to show the maximum bimodal benefit. Performance was scored as the percentage of total words correct in each condition. Only one list was used per listening condition because of the number of test conditions; however, Spahr et al. [2012] reported high list equivalency and low across-list variability for the AzBio sentences in individual listeners with CIs. The signal delivered to the CI was unprocessed. For both the A-alone and the bimodal conditions, the acoustic signal was presented unprocessed (wide-band) as well as filtered into the following bands: <125, <250, <500, <750, 125–250, 250–500 and 250–750 Hz. The first 4 bands were chosen to replicate the study of Zhang et al. [2010], and the last 3 to evaluate the effects of band-pass-filtered stimuli. Thus, the benefits for bands with similar low-pass and pass-band bandwidths but different low-frequency regions can be compared. The 250–500 and 250–750 Hz bands were also included to determine whether bimodal benefit is present when the F0 frequency range is not presented in the acoustic signal (at least for the male talkers). The order of presentation of the filtered stimuli (including the wide-band stimulus) was counterbalanced across listeners using a Latin square. Each listener was tested in a total of 17 conditions in quiet and 35 conditions in noise.
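The counterbalancing step can be sketched as follows. The text states only that a Latin square was used; this sketch assumes one common variant, a Williams (balanced) Latin square, in which each acoustic condition appears once in every serial position and, for an even number of conditions, immediately follows every other condition exactly once. The condition labels and the helper names `balanced_latin_square` and `assign_orders` are illustrative, not taken from the study. With 8 acoustic conditions and 12 listeners, the rows must be cycled, so the design is only approximately balanced.

```python
# Hypothetical sketch: a Williams (balanced) Latin square for ordering the
# 8 acoustic conditions (7 filter bands + wide-band). Not the authors' code.
FILTERS = ["<125", "125-250", "<250", "250-500",
           "<500", "250-750", "<750", "wide-band"]


def balanced_latin_square(n):
    """Return n orderings of range(n); for even n, each ordered adjacent pair occurs once."""
    # First row alternates low and high indices: 0, 1, n-1, 2, n-2, ...
    first, lo, hi, take_lo = [0], 1, n - 1, True
    while len(first) < n:
        if take_lo:
            first.append(lo)
            lo += 1
        else:
            first.append(hi)
            hi -= 1
        take_lo = not take_lo
    # Each subsequent row is the first row shifted by r (mod n).
    return [[(c + r) % n for c in first] for r in range(n)]


def assign_orders(n_listeners, conditions=FILTERS):
    """Cycle through the square's rows; with 12 listeners and 8 rows, rows 0-3 are used twice."""
    square = balanced_latin_square(len(conditions))
    return [[conditions[i] for i in square[k % len(square)]]
            for k in range(n_listeners)]


for k, order in enumerate(assign_orders(12), start=1):
    print(f"P{k}: {', '.join(order)}")
```

A cyclic (unbalanced) Latin square would also place each condition once per serial position; the balanced variant additionally spreads first-order carryover effects evenly across conditions.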

The CNC word and AzBio sentence lists used for each participant were randomized across listening and acoustic filter conditions. Because there are only 33 AzBio sentence lists, two lists on which the listener scored near 0% were reused for one other condition. Similarly, because there are only 10 CNC word lists but 17 test conditions in quiet, 7 of the lists were used twice. For each participant, the lists with the lowest scores were reused in random order. Reusing lists in this way is not uncommon in speech recognition research with CI recipients [Balkany et al., 2007; Gantz et al., 2009], particularly given that learning effects are minimal for monosyllabic words [Wilson et al., 2003].
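The condition counts and the amount of list reuse follow from simple arithmetic, sketched below. The selection rule (reuse the lowest-scoring lists, in random order) is taken from the text; the function name `lists_to_reuse`, the fixed random seed and any scores passed to it are hypothetical.

```python
import random

N_ACOUSTIC = 8  # 7 filtered bands + wide-band
CNC_LISTS, AZBIO_LISTS = 10, 33


def n_conditions(with_a_alone):
    # CI-alone once, bimodal for every acoustic condition, optionally A-alone too.
    return 1 + N_ACOUSTIC + (N_ACOUSTIC if with_a_alone else 0)


quiet = n_conditions(True)                            # CNC words in quiet
noise = n_conditions(True) + 2 * n_conditions(False)  # +10 dB, then +5 and 0 dB SNR
reused_cnc = quiet - CNC_LISTS
reused_azbio = noise - AZBIO_LISTS


def lists_to_reuse(scores, k, seed=0):
    """Hypothetical helper: pick the k lowest-scoring lists and shuffle their reuse order."""
    lowest = sorted(scores, key=scores.get)[:k]
    random.Random(seed).shuffle(lowest)
    return lowest


print(quiet, noise, reused_cnc, reused_azbio)  # 17 35 7 2
```

For example, `lists_to_reuse({"L1": 0.0, "L2": 4.5, "L3": 1.2}, 2)` returns lists L1 and L3 in a seed-dependent order.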

Signal Processing

Filtering was implemented in MATLAB version 11.0 using finite impulse response (FIR) filters whose order (256, 512 or 1,024) was chosen for each band to achieve a 90-dB/octave roll-off. The AzBio sentences and multitalker babble were mixed at the appropriate SNR prior to filtering. Both the CNC words and the AzBio sentences were processed through each of the filter conditions. To demonstrate the effect of the filters, a frequency analysis of each filtered stimulus was conducted using a fast Fourier transform (FFT). The average long-term spectra of the 50 filtered and unprocessed CNC words are shown in figure 2. The average long-term spectra of 20 sentences from the male and female talkers in the AzBio sentences are shown in figure 3a and b, respectively.
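The two processing steps above, mixing at a target SNR and then FIR filtering, can be illustrated with the standard-library sketch below. It is not the authors' MATLAB code: the text specifies only the filter orders and the 90-dB/octave target, so the Hamming-windowed-sinc design, the 16-kHz sampling rate used in the checks and all function names are assumptions. A windowed-sinc design at these orders does not necessarily reproduce the stated 90-dB/octave roll-off; the sketch only shows how a band such as 250–500 Hz can be built as the difference of two low-pass filters and how its response can be checked at single frequencies, analogous to the FFT check described above.

```python
import cmath
import math


def rms(x):
    return math.sqrt(sum(v * v for v in x) / len(x))


def mix_at_snr(speech, babble, snr_db):
    """Scale babble so 20*log10(rms(speech)/rms(scaled babble)) == snr_db, then add.
    Assumes equal-length signals (zip truncates to the shorter one)."""
    gain = rms(speech) / (rms(babble) * 10 ** (snr_db / 20))
    return [s + gain * b for s, b in zip(speech, babble)]


def lowpass_taps(cutoff_hz, fs_hz, order):
    """Assumed design: Hamming-windowed sinc with order+1 taps, unity gain at DC."""
    fc = cutoff_hz / fs_hz  # normalized cutoff, cycles/sample
    taps = []
    for n in range(order + 1):
        x = n - order / 2
        ideal = 2 * fc if x == 0 else math.sin(2 * math.pi * fc * x) / (math.pi * x)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / order)
        taps.append(ideal * window)
    total = sum(taps)
    return [t / total for t in taps]


def bandpass_taps(lo_hz, hi_hz, fs_hz, order):
    """Band-pass as the difference of two linear-phase low-pass filters of equal order."""
    return [a - b for a, b in zip(lowpass_taps(hi_hz, fs_hz, order),
                                  lowpass_taps(lo_hz, fs_hz, order))]


def magnitude(taps, freq_hz, fs_hz):
    """|H(f)| evaluated directly from the taps (a single-bin DFT of the impulse response)."""
    w = 2 * math.pi * freq_hz / fs_hz
    return abs(sum(t * cmath.exp(-1j * w * n) for n, t in enumerate(taps)))


bp = bandpass_taps(250, 500, 16000, 1024)
for f in (50, 125, 375, 750, 1000):
    print(f, round(20 * math.log10(magnitude(bp, f, 16000) + 1e-12), 1), "dB")
```

Mixing before filtering, as the text describes, means the babble is band-limited by exactly the same filter as the speech, so the nominal SNR is defined on the wide-band signals.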

Presentation of Speech Stimuli

Electric stimulation was delivered through direct audio input using the processor-specific personal audio cable in the CI-alone and bimodal conditions. Testing was completed in each listener's 'everyday' listening program. The microphone input was disabled during testing for recipients of both Advanced Bionics and MED-EL CIs. For recipients of Cochlear Corporation CIs, for which complete disabling of the external microphone is not possible, microphone sensitivity was reduced to its minimum and the accessory-mixing ratio was set to 6:1. The CI volume was adjusted to a comfortable listening level for each listener.

The acoustic stimuli were presented to the nonimplanted ear via an ER-1 insert earphone. To account for the hearing loss in that ear, linear gain was applied to the acoustic stimuli according to the NAL-NL1 frequency-gain prescription for a 60-dB SPL input [Byrne et al., 2001]. To confirm audibility, the output for each filter band was verified to match NAL-NL1 targets for a 60-dB SPL input using probe-microphone measurements on KEMAR with the ER-1 insert earphones. The maximum peak levels of all speech stimuli were below the maximum output of the ER-1 earphones, i.e. 100–120 dB SPL for 125–8,000 Hz in a Zwislocki coupler. Two participants (P6 and P8) had peak levels for the AzBio sentences near, but still below, the maximum output of the ER-1 earphones at 125–750 Hz (100–105 dB SPL). All other participants had peak speech levels well below the maximum output of the earphones at all frequencies.

The perceived loudness of the electric and acoustic signals was matched by adjusting the acoustic signal. Each participant was asked to indicate whether the acoustic stimulus was perceived as softer than, equal to or louder than the electric stimulus. If the participant indicated that the acoustic stimulus was equal to or louder than the CI stimulus, no adjustment was made. The gain was increased by 5 dB for 3 participants (25%; P1, P2 and P9) and was not adjusted for the remaining 9 listeners. Loudness matching was completed using the wide-band ('unprocessed') acoustic stimulus, and the overall gain used to match loudness for the wide-band stimulus was applied to all of the filtered acoustic stimuli. We acknowledge that, with this method, the narrower-band acoustic stimuli may have been perceived as softer despite the matched overall gain. However, it was not practical to adjust each filtered band to match loudness, for two reasons. First, the gain required would exceed the limits of the equipment for the narrowest bands. Second, the output at individual frequencies would then vary across acoustic stimuli, impeding the comparison of benefit between them.

Analysis

A sample size of 12 was determined using a power analysis for an ANOVA with three groups (A-alone, CI-alone, bimodal), an α of 0.05 and a power of 80%. The difference in means and the SD used in the sample size estimate were obtained from the data of Zhang et al. [2010] because of the similarity in methods. Analyses were completed using repeated-measures ANOVAs and paired comparisons based on a priori hypotheses for bimodal benefit. For all analyses, bimodal benefit was defined as the difference between the bimodal score for a given acoustic filter band and the CI-alone score (percentage points for words in quiet; rationalized arcsine units for sentences in noise). The rationalized arcsine transform [Studebaker, 1985] was applied to sentence recognition scores in noise to control for the effects of scores near the ceiling and the floor. The significance level was set at α = 0.05.
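The rationalized arcsine transform cited above can be written as a short function. The formula is the published form from Studebaker [1985], with θ in radians; the function names are illustrative, and `n_items` stands for the number of scored words in a list, which varies across AzBio lists. RAUs roughly track percent correct in the middle of the range but stretch the scale near 0% and 100%, which is why the transform helps with floor and ceiling effects.

```python
import math


def rau(n_correct, n_items):
    """Rationalized arcsine units (Studebaker, 1985) for n_correct out of n_items."""
    theta = (math.asin(math.sqrt(n_correct / (n_items + 1)))
             + math.asin(math.sqrt((n_correct + 1) / (n_items + 1))))
    return (146 / math.pi) * theta - 23


def bimodal_benefit_rau(bimodal_correct, bimodal_items, ci_correct, ci_items):
    """Bimodal benefit in RAUs: bimodal score minus CI-alone score (lists may differ in length)."""
    return rau(bimodal_correct, bimodal_items) - rau(ci_correct, ci_items)


# Hypothetical word counts, not data from the study.
print(round(bimodal_benefit_rau(120, 150, 90, 148), 1))
```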

Three participants were not tested at 0 dB SNR due to time constraints (P2) or performance being near 0% (P1 and P11). Therefore, analyses for this SNR were made on the basis of data obtained from 9 participants. The overall ANOVA for the sentence recognition in noise testing including a factor of SNR included only the 9 participants who completed testing at all 3 SNRs.

Mean CI-alone performance was better than mean A-alone performance both in quiet and at +10 dB SNR. However, some participants performed better in the A-alone wide-band condition than in the CI-alone condition (P1, P3, P4 and P9 in quiet; P3, P9 and P11 in noise). In this study, bimodal benefit was defined exclusively as the bimodal minus the CI-alone score in order to examine the effect of the acoustic filter band. Bimodal minus A-alone would instead quantify the benefit of adding the CI to the acoustic signal, not the benefit provided by the acoustic signal. As a result, the bimodal benefit for these participants was larger than it would have been had it been calculated as bimodal minus A-alone.
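A worked example with hypothetical scores (not from the study) makes the difference between the two definitions concrete for a participant whose A-alone score exceeds the CI-alone score. The function name is illustrative.

```python
def benefit_definitions(ci_alone, a_alone, bimodal):
    """Return (benefit of adding acoustic hearing, benefit of adding the CI), in percentage points."""
    return bimodal - ci_alone, bimodal - a_alone


# Hypothetical participant who scores better with the acoustic ear alone than with the CI alone.
acoustic_benefit, ci_benefit = benefit_definitions(ci_alone=40.0, a_alone=55.0, bimodal=70.0)
print(acoustic_benefit, ci_benefit)  # 30.0 15.0
```

Only the first value, bimodal minus CI-alone, isolates what the acoustic signal adds, which is why it is the definition used throughout.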

Results

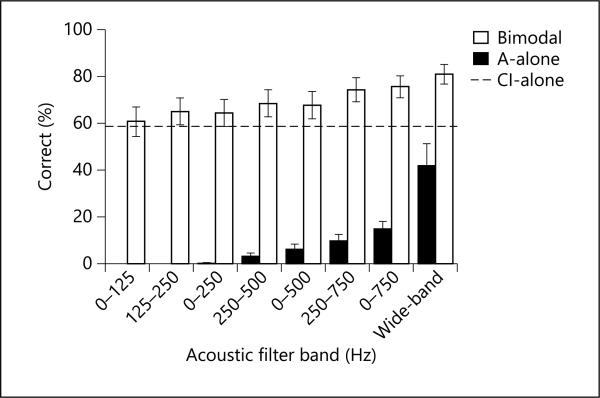

Figure 4 shows CNC word recognition in quiet (percent words correct) as a function of the listening condition (bimodal and A-alone) and acoustic filter band. Mean CI-alone performance is plotted as a black dotted line at 59.2%. All error bars represent 1 standard error of the mean. Bimodal benefit can be visualized as the increase in performance from the CI-alone line to the bimodal acoustic filter bands. Bimodal benefit increased with wider low-pass and pass-band bandwidth. A single-factor (filter band) repeated-measures ANOVA confirmed an effect of acoustic filter band on bimodal benefit (F(7, 77) = 12.47, p < 0.000001).

Fig. 4.

Mean percent CNC words correct by acoustic filter condition for the A-alone and bimodal hearing conditions. The CI-alone mean score is represented with a black dashed line.

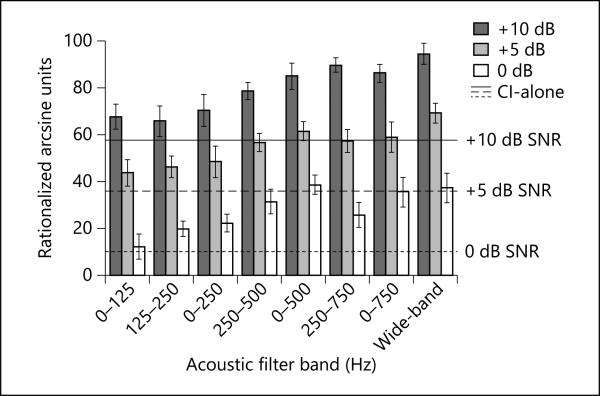

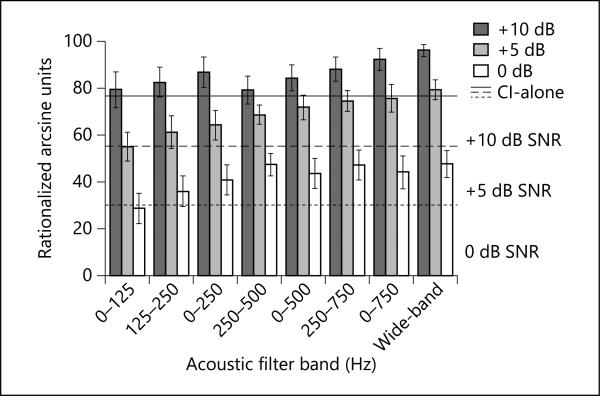

Figures 5 and 6 show performance for AzBio sentences in noise (rationalized arcsine units, RAUs) as a function of acoustic filter band and SNR for male and female talkers, respectively. All error bars represent 1 standard error of the mean. The mean CI-alone performance is plotted for each SNR and talker gender as solid gray, solid black and dashed black lines for +10, +5 and 0 dB SNRs, respectively. Bimodal benefit can be visualized as the increase in performance from the CI-alone line to the bimodal acoustic filter bands for each SNR separately. The mean CI-alone performance for female talkers was better than for male talkers by an average of 19.6% across the three SNRs. A clear bimodal benefit was again present in the wide-band condition, and the benefit appeared to increase with wider low-pass and pass-band bandwidths. A 3-factor (filter band, SNR, talker gender) repeated-measures ANOVA on the bimodal benefit scores (in RAUs) of the 9 participants who completed testing at all three SNRs revealed significant main effects of filter band (F(7, 368) = 2.85, p < 0.007) and talker gender (F(1, 368) = 5.60, p < 0.02) but no significant main effect of SNR (F(1, 368) = 3.26, p < 0.072). The significant main effect of talker gender was driven by a greater bimodal benefit for male than for female talkers: 32 RAUs for male and 22 RAUs for female talkers when the wide-band bimodal benefit was averaged across the three SNRs (t(8) = 4.51, p < 0.002). Participants obtained a greater bimodal benefit for male than for female talkers consistently across all filter bands and SNRs. No significant interactions were found between filter band and talker gender (F(7, 368) = 0.28, p < 0.97), filter band and SNR (F(7, 368) = 0.69, p < 0.694), talker gender and SNR (F(1, 368) = 0.12, p < 0.735) or filter band, talker gender and SNR (F(7, 368) = 0.21, p < 0.983).
A priori planned paired comparisons were made for the main effect of filter band for word recognition in quiet and for male- and female-talker sentence recognition in multitalker babble. Comparisons for word recognition in quiet were corrected for multiple comparisons using the Holm-Sidak method. Comparisons for sentence recognition in multitalker babble used Fisher's least significant difference (p < 0.05), given the large number of comparisons and to maximize power to detect talker gender effects. Because there was no main effect or interaction of SNR for sentence recognition, bimodal benefit values were averaged across the three SNRs for all paired comparisons to simplify the results. For the 3 participants who did not complete testing at 0 dB SNR, values were averaged across the +10 and +5 dB SNRs. Analyses with and without these 3 participants yielded the same results; thus, their data were included.
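The Holm-Sidak step-down correction used for the comparisons in quiet can be sketched as below. Raw p-values are sorted in ascending order, and the p-value at each step is adjusted as 1 − (1 − p)^k, where k is the number of hypotheses still being tested at that step; a running maximum keeps the adjusted values monotone, so rejection stops at the first non-significant step. The function name and example p-values are hypothetical, and the text does not say which software performed the correction.

```python
def holm_sidak(pvals, alpha=0.05):
    """Holm-Sidak step-down adjusted p-values and reject decisions, in the input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])  # smallest p first
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        k = m - rank  # hypotheses remaining at this step
        adj = 1 - (1 - pvals[idx]) ** k
        running_max = max(running_max, adj)  # enforce monotone step-down
        adjusted[idx] = min(running_max, 1.0)
    return adjusted, [a <= alpha for a in adjusted]


# Hypothetical raw p-values for three planned comparisons.
adj, reject = holm_sidak([0.01, 0.04, 0.03])
print([round(a, 4) for a in adj], reject)
```

Rejecting when the adjusted value is at most α is equivalent to comparing each sorted raw p-value with 1 − (1 − α)^(1/k) and stopping at the first failure.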

Fig. 5.

Mean RAUs for AzBio sentences spoken by male talkers for bimodal hearing by acoustic filter condition. CI-alone performances at each +10, +5 and 0 dB SNR are represented by black solid, black dashed, and black dotted lines, respectively.

Fig. 6.

Mean RAUs for AzBio sentences spoken by female talkers for bimodal hearing by acoustic filter condition. CI-alone performances at each +10, +5 and 0 dB SNR are represented by black solid, black dashed, and black dotted lines, respectively.

To examine the low-pass bandwidth required to obtain bimodal benefit (aim 1), the significance of bimodal benefit was tested for 2 acoustic filters, <125 and <250 Hz, in quiet and in noise. These 2 bands were chosen because previous research suggests that bimodal benefit should be obtained with <250 Hz, and to determine whether a benefit can be obtained with narrower bands [Chang et al., 2006; Zhang et al., 2010]. A significant benefit was obtained with the <250 Hz band for word recognition in quiet (t(11) = 3.18, p < 0.018) but not with the <125 Hz band (t(11) = 0.68, p < 0.512). In multitalker babble, the low-pass bandwidth required to obtain bimodal benefit was evaluated for male and female talkers separately. A significant benefit was obtained with both the <125 and <250 Hz bands for sentences spoken by male talkers (t(11) = 3.03, p < 0.012; t(11) = 2.90, p < 0.015). For female talkers, a significant bimodal benefit was obtained with the <250 Hz band (t(11) = 2.80, p < 0.017) but not with the <125 Hz band (t(11) = 0.05, p < 0.958). These results indicate that participants obtained bimodal benefit with a narrower acoustic low-pass bandwidth for sentences in multitalker babble than for words in quiet, and for sentences spoken by male rather than female talkers.
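Each planned comparison is a paired t-test on per-participant scores, with n − 1 = 11 degrees of freedom for 12 participants. The standard-library sketch below computes t and a two-sided p-value by integrating the Student t density with Simpson's rule; in practice a statistics package would be used. Function names are illustrative, and the scores in the usage example are hypothetical, not the study's data.

```python
import math
import statistics


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for matched samples x and y."""
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    t = statistics.mean(d) / (statistics.stdev(d) / math.sqrt(n))
    return t, n - 1


def t_pdf(x, df):
    """Student t probability density."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t, df, steps=2000):
    """p = 1 - 2 * integral of the t density from 0 to |t| (Simpson's rule, even steps)."""
    h = abs(t) / steps
    s = t_pdf(0.0, df) + t_pdf(abs(t), df)
    s += sum((4 if i % 2 else 2) * t_pdf(i * h, df) for i in range(1, steps))
    return max(0.0, 1 - 2 * s * h / 3)


# Hypothetical percent-correct scores for 12 participants (bimodal vs. CI-alone).
bimodal = [64, 70, 58, 75, 62, 68, 55, 72, 66, 60, 71, 63]
ci_alone = [60, 61, 57, 66, 59, 62, 54, 65, 60, 58, 63, 61]
t, df = paired_t(bimodal, ci_alone)
print(f"t({df}) = {t:.2f}, p = {two_sided_p(t, df):.4f}")
```

Testing bimodal against CI-alone scores in this way is equivalent to a one-sample t-test of the per-participant bimodal benefit against zero.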

To determine whether the bimodal benefit increased with acoustic low-pass bandwidth (aim 2), paired comparisons were made between the bimodal benefit obtained with the wide-band acoustic stimulus and that obtained with each low-pass filter band, in both quiet and noise. For words in quiet, the bimodal benefit obtained with the wide-band acoustic stimulus was significantly greater than that obtained with the <125, <250 and <500 Hz filter bands (t(11) = 4.84, p < 0.003; t(11) = 4.03, p < 0.006; t(11) = 3.57, p < 0.010, respectively), whereas there was no significant difference between the bimodal benefit obtained with the <750 Hz band and the wide-band acoustic signal (t(11) = 1.79, p < 0.102). For sentences spoken by male talkers in multitalker babble, bimodal benefit with the wide-band acoustic stimulus was significantly greater than with the <125 and <250 Hz filter bands (t(11) = 6.34, p < 0.001; t(11) = 4.59, p < 0.001) but did not differ significantly from that obtained with the <500 and <750 Hz filter bands (t(11) = 1.62, p < 0.134; t(11) = 1.71, p < 0.116). For sentences spoken by female talkers in multitalker babble, bimodal benefit with the wide-band acoustic stimulus was significantly greater than with the <125, <250 and <500 Hz filter bands (t(11) = 4.97, p < 0.001; t(11) = 2.70, p < 0.021; t(11) = 2.68, p < 0.022) but did not differ significantly from that obtained with the <750 Hz filter band (t(11) = 1.17, p < 0.266). These results indicate that bimodal benefit increased significantly with low-pass bandwidth up to <750 Hz for words in quiet (male talker) and for female-talker sentences in multitalker babble, but only up to <500 Hz for male-talker sentences in multitalker babble.

To determine whether an equivalent bimodal benefit was obtained with acoustic signals of similar low-pass and pass-band bandwidth but different center frequencies (aim 3), the following paired comparisons of bimodal benefit were made in quiet and in noise: <125 vs. 125–250 Hz, <250 vs. 250–500 Hz, and <500 vs. 250–750 Hz. For word recognition in quiet, there was no significant difference in bimodal benefit between the <125 and 125–250 Hz bands or between the <250 and 250–500 Hz bands (t(11) = 1.55, p < 0.15; t(11) = 2.25, p < 0.10); however, a greater bimodal benefit was obtained with the 250–750 Hz band than with the <500 Hz band (t(11) = 3.15, p < 0.03). For sentences spoken by male talkers in multitalker babble, no significant differences in bimodal benefit were found for any of the three comparisons (t(11) = 0.59, p < 0.568; t(11) = 1.69, p < 0.119; t(11) = 0.58, p < 0.571). Likewise, for sentences spoken by female talkers in multitalker babble, no significant differences were found for any of the three comparisons (t(11) = 1.04, p < 0.319; t(11) = 0.65, p < 0.527; t(11) = 1.23, p < 0.244). These results suggest that, for word recognition in quiet and for both male- and female-talker sentence recognition in multitalker babble, an equivalent bimodal benefit can be obtained with similar acoustic low-pass and pass-band bandwidths below 750 Hz regardless of center frequency. The one exception was word recognition in quiet, for which the 250–750 Hz band provided greater benefit than the <500 Hz band.

Discussion

Aim 1

The first aim was to replicate the results of Zhang et al. [2010] with a steeper filter roll-off to examine the low-pass bandwidth required to obtain bimodal benefit for speech recognition in quiet and multitalker babble. This first aim was also expanded to include different SNRs and talker genders.

Contrary to the hypothesis that <125 Hz would provide benefit in all conditions, participants only obtained a significant bimodal benefit with the <125 Hz filter band for male-talker sentence recognition in multitalker babble. The difference in acoustic low-pass bandwidth required for bimodal benefit for male voices in quiet (<250 Hz) and in multitalker babble (<125 Hz) could be due to the presence or lack of noise or the difference in contextual cues for word versus sentence stimuli. No effect of SNR was found on the low-pass bandwidth required for bimodal benefit. Effects of SNR will be discussed further in the General Discussion section below.

Zhang et al. [2010] reported a significant bimodal benefit with a <125-Hz acoustic band for CNC words in quiet and for combined male- and female-talker sentences in multitalker babble. It is difficult to compare the sentence-recognition-in-noise results of the current study directly with theirs because they averaged across all sentences spoken by both male and female talkers. Talker gender was analyzed separately in the current study because bimodal benefit was larger for male talkers than for female talkers. Differences in word recognition in quiet between the studies are most likely due to differences in the filtering of the acoustic stimuli: the prior study used finite impulse response filters of order 256, which have a shallow roll-off, whereas the current study used filters with a steeper roll-off. Therefore, the 0–125 Hz band of Zhang et al. [2010] contained energy at higher frequencies, even including 250 Hz. Other possible reasons for the differences in results are that participants in the current study had higher (i.e. poorer) and more variable audiometric thresholds, especially at 500 Hz and below. An additional difference is that Zhang et al. [2010] used NAL-RP targets, which prescribe more gain than NAL-NL1; therefore, their participants may have had greater low-frequency audibility than those in the current study. We used NAL-NL1 targets because (1) the participants had mild to moderately severe hearing losses at the low frequencies where amplification was provided and (2) NAL-NL1 is the prescription most commonly used in the clinic, given that it is integrated into hearing aid analyzer equipment such as that marketed by Frye Electronics and Audioscan. With a 90-dB/octave roll-off, only F0 appears to be present in the spectra for the male talkers in the <125 Hz band (fig. 2, 3a) and for the female talkers in the <250 Hz band (fig. 3b). In addition to the differences in filtering and participants described above, CI-alone performance for AzBio sentence recognition at +10 dB SNR was better in the current study than in that of Zhang et al. [2010] (71 vs. 40%). It should be noted, however, that mean performance for CNC words in the CI-alone and acoustic wide-band conditions differed between the two studies. The higher CI-alone performance for sentence recognition in noise leaves less room for bimodal benefit in the current study and might have contributed to a larger low-pass bandwidth being required in quiet and to less bimodal benefit with the low-pass-filtered bands.
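To make the filtering argument concrete, the sketch below estimates how strongly a component above a low-pass cutoff is attenuated under an idealized straight-line roll-off. It assumes a flat passband and a constant slope in dB/octave beginning exactly at the cutoff, which real FIR filters only approximate; the function name `rolloff_attenuation_db` is hypothetical, and the 90 dB/octave slope is the only filter parameter taken from this study.

```python
import math


def rolloff_attenuation_db(freq_hz, cutoff_hz, slope_db_per_octave):
    """Attenuation in dB of a component at freq_hz for an idealized low-pass filter.

    Assumes a flat passband up to cutoff_hz and a constant slope above it.
    Real filters have a transition region, so this is only an approximation.
    """
    if freq_hz <= cutoff_hz:
        return 0.0  # inside the idealized passband
    octaves_above_cutoff = math.log2(freq_hz / cutoff_hz)
    return slope_db_per_octave * octaves_above_cutoff


# 125 Hz cutoff with the 90 dB/octave slope used in this study:
# 250 Hz (one octave up) is attenuated by 90 dB, ~177 Hz (half an octave up) by about 45 dB.
for f in (100, 177, 250, 500):
    print(f, "Hz:", round(rolloff_attenuation_db(f, 125, 90), 1), "dB")
```

Substituting a shallower slope shows how much more energy near 250 Hz would survive in a nominally <125 Hz band; the exact slope of the earlier order-256 FIR filters is not given here, so no value is assumed for it.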

The current results for the low-pass bandwidth required to obtain a significant bimodal benefit are similar to those of previous studies showing bimodal benefit with F0 cues in multitalker babble, but not in quiet [Kong and Carlyon, 2007; Brown and Bacon, 2009a, b; Carroll et al., 2011; Visram et al., 2012]. However, less benefit was obtained with these narrow bands than with similar low-pass bandwidths or F0 simulations in other studies using similar dependent variables [Brown and Bacon, 2009a, b; Zhang et al., 2010]. The smaller benefit might be due to multiple factors. Unlike the studies of Brown and Bacon [2009a, b], the current study included multitalker babble in both the electric and the acoustic stimulus, which would be expected to reduce bimodal benefit. The current study demonstrates that a significant bimodal benefit can be obtained even when multitalker babble is presented to the nonimplanted ear, a situation more similar to a realistic listening environment. These results contrast with those of Visram et al. [2012], who found no significant bimodal benefit with noise in both stimuli. This difference could be due to a number of factors, including the use of a different multitalker babble, the larger sample size in the current study, or the presence of more cues in the <125 Hz band of the current study than in the F0 stimuli of Visram et al. [2012]. Nevertheless, these results support previous evidence that bimodal benefit in multitalker babble can be obtained with only F0 information [Brown and Bacon, 2009a, b; Zhang et al., 2010; Sheffield and Zeng, 2012].

As previously mentioned, no other study has systematically examined the effects of talker gender on bimodal benefit. The current study found that bimodal benefit was significantly larger for male than for female talkers and that bimodal benefit was obtained with a narrower low-pass bandwidth for male talkers. There are two possible reasons for these differences. First, as mentioned in the Results section, CI-alone performance was significantly higher for female talkers than for male talkers at all SNRs, leaving less room for benefit. Second, male speech features are generally lower in frequency than the corresponding female features, so a given low-pass band may contain more speech-relevant features for male talkers than for female talkers. There was no significant interaction between talker gender and SNR. However, the main effect of SNR approached significance, indicating that there might have been a small effect of SNR. Follow-up analyses indicated that this trend was largely driven by female-talker sentences at +10 dB SNR, where ceiling effects might have affected results. It is therefore possible that talker gender effects differ with SNR. Additionally, only a male talker was used for testing word recognition in quiet. The low-pass bandwidth required to obtain bimodal benefit for male-talker words in quiet was larger (<250 vs. <125 Hz) than for male-talker sentences in multitalker babble. Therefore, a cutoff frequency of 250 Hz or higher might be required to obtain bimodal benefit for a female talker speaking CNC words in quiet.
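Because ceiling effects recur in this discussion (e.g. female-talker sentences at +10 dB SNR), it may help to show how percent-correct scores can be placed on a scale that is less compressed near 0% and 100%. The sketch below implements the rationalized arcsine transform of Studebaker [1985], which appears in the reference list, and expresses bimodal benefit as a bimodal-minus-CI-alone difference in rationalized arcsine units (RAU). This section does not state that the analyses were computed exactly this way; the function names and the 50-item example scores are hypothetical.

```python
import math


def rau(correct, n_items):
    """Rationalized arcsine units (Studebaker, 1985) for `correct` of `n_items`."""
    theta = (math.asin(math.sqrt(correct / (n_items + 1)))
             + math.asin(math.sqrt((correct + 1) / (n_items + 1))))  # radians
    return (146 / math.pi) * theta - 23


def bimodal_benefit_rau(bimodal_correct, ci_alone_correct, n_items):
    """Bimodal benefit as the RAU difference between bimodal and CI-alone scores."""
    return rau(bimodal_correct, n_items) - rau(ci_alone_correct, n_items)


# Hypothetical 50-item list: the same 5-item gain is worth more RAU near the
# ceiling (44 -> 49 correct) than in the mid-range (20 -> 25 correct).
print(round(bimodal_benefit_rau(25, 20, 50), 1))
print(round(bimodal_benefit_rau(49, 44, 50), 1))
```

The transform is roughly linear in the middle of the range and stretches scores near the extremes, which is why a fixed raw gain close to ceiling counts for more after transformation.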

Aim 2

The second aim was to determine whether bimodal benefit increased with acoustic low-pass bandwidth. Bimodal benefit increased significantly with increasing acoustic low-pass bandwidth up to <750 Hz for word recognition in quiet and for female-talker sentences in multitalker babble, but only up to <500 Hz for male-talker sentences in multitalker babble. Contrary to the hypothesis, however, the participants did not obtain significantly more bimodal benefit from the additional energy above 750 and 500 Hz, respectively, in those conditions. Recent studies using simulations and probabilistic models have found less efficient integration of electric and acoustic speech cues in bimodal CI recipients than in normal-hearing listeners [Kong and Braida, 2011; Yang and Zeng, 2013]. This integration deficit, together with the severity of hearing loss above 500 Hz, could account for the minimal additional bimodal benefit obtained from energy above those frequencies.
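The idea of integration efficiency can be illustrated with the simplest benchmark for combining two cue sources: if acoustic-alone and CI-alone recognition were statistically independent, the predicted bimodal proportion correct would be 1 - (1 - pA)(1 - pE). This is a swapped-in textbook independence model, not the probabilistic models of Kong and Braida [2011] or Yang and Zeng [2013]; the "efficiency" ratio below is likewise a hypothetical summary measure, and the example scores are invented.

```python
def predicted_independent(p_acoustic, p_electric):
    """Proportion correct predicted if acoustic and electric cues act independently."""
    return 1 - (1 - p_acoustic) * (1 - p_electric)


def efficiency(observed_bimodal, p_acoustic, p_electric):
    """Hypothetical measure: observed gain over CI alone relative to the predicted gain."""
    predicted_gain = predicted_independent(p_acoustic, p_electric) - p_electric
    if predicted_gain <= 0:
        raise ValueError("no gain predicted (acoustic-alone score is 0 or CI-alone score is 1)")
    observed_gain = observed_bimodal - p_electric
    return observed_gain / predicted_gain


# Invented example: 30% acoustic alone, 60% CI alone, 70% bimodal.
print(round(predicted_independent(0.30, 0.60), 2))  # 0.72
print(round(efficiency(0.70, 0.30, 0.60), 2))
```

Under this benchmark, values below 1 indicate that the combined score falls short of what independent use of both cue sources would predict, which is one way of expressing an integration deficit.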

Zhang et al. [2010] found no significant increase in bimodal benefit with increasing low-pass bandwidth above 125 Hz for word recognition in quiet, a surprising finding for participants with aidable hearing above 125 Hz. The difference between the current results and those of Zhang et al. [2010] may again be due to differences in filtering, amplification, and participants. The current study extends previous results by showing that bimodal benefit increases with acoustic low-pass bandwidth at multiple SNRs (+10, +5 and 0 dB) [Chang et al., 2006; Qin and Oxenham, 2006; Zhang et al., 2010].

It is noteworthy that, in the current participants, maximum bimodal benefit was reached with a narrower low-pass bandwidth for male-talker sentence recognition in noise (<500 Hz) than for male-talker word recognition in quiet (<750 Hz). Two possible reasons for this difference are noted here. First, different low-pass bandwidths may be necessary for maximum bimodal benefit in noisy versus quiet listening conditions. Previous research has shown a greater bimodal benefit at poorer SNRs [Gifford et al., 2007], although the current investigation found no such effect. Second, sentences provide more context than monosyllabic words, and this added context may reduce the low-pass bandwidth needed to obtain the maximum bimodal benefit. Unfortunately, the current design does not allow these two factors to be differentiated. Moreover, although there was no statistically significant difference between the wide-band condition and some narrower low-pass bandwidths in quiet and in noise, performance continued to increase slightly with low-pass bandwidth up to the wide-band condition. Therefore, the lack of a statistical difference might have been due to the number of participants or to sample characteristics.

Aim 3

The third aim was to determine whether an equivalent bimodal benefit was obtained with acoustic signals of similar low-pass and pass band bandwidth, but different center frequencies.

This was the first study to use band-pass filtering of the acoustic signal in examining bimodal benefit in CI listeners. It is therefore the first study in which filtered acoustic stimuli with similar low-pass and pass band bandwidths, but different frequency ranges, can be contrasted for providing bimodal benefit. Consistent with the hypothesis, no difference was found between bimodal benefit in the <125 and 125–250 Hz bands or in the <250 and 250–500 Hz bands, in quiet or in noise, for male or female talkers. Additionally, no difference was found between bimodal benefit in the 250–750 and <500 Hz bands for male and female talkers in noise. For word recognition in quiet, however, more bimodal benefit was obtained with the 250–750 Hz band than with the <500 Hz band. These results indicate that a significant bimodal benefit can be obtained without energy below 250 Hz. Furthermore, higher-frequency bands (250–750 Hz) provided more bimodal benefit than the <250 and <500 Hz bands. This is not surprising, as F0 information can be obtained by resolving harmonics, and other features available in higher-frequency regions (e.g. F1, F2) may be as important as F0, if not more important, in providing bimodal benefit. These results are consistent with previous research showing that a synthesized tone modulated in amplitude and frequency to follow F0 provided a significant benefit, but not as much benefit as low-pass-filtered speech [Brown and Bacon, 2009a, b; Sheffield and Zeng, 2012].
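The point that F0 can be recovered from resolved harmonics, so that periodicity information is present even in bands that exclude F0 itself, can be checked with simple arithmetic. The sketch lists the harmonics of a given F0 that fall inside each filter band and recovers F0 from consecutive harmonics. The F0 values (100 Hz for a male voice, 200 Hz for a female voice) are round illustrative assumptions, frequencies are integer Hz, and ideal band edges with no transition region are assumed; the function names are hypothetical.

```python
from functools import reduce
from math import gcd


def harmonics_in_band(f0_hz, low_hz, high_hz):
    """Integer harmonic frequencies k*f0 (k >= 1) with low_hz <= k*f0 < high_hz."""
    return [k * f0_hz for k in range(1, high_hz // f0_hz + 1)
            if low_hz <= k * f0_hz < high_hz]


def f0_from_harmonics(harmonics):
    """Recover F0 from two or more consecutive harmonics (their greatest common divisor)."""
    if len(harmonics) < 2:
        raise ValueError("need at least two harmonics to infer F0 from their spacing")
    return reduce(gcd, harmonics)


# Assumed F0 values; band edges follow the filter bands used in this study.
bands = {"<125": (0, 125), "125-250": (125, 250), "<250": (0, 250),
         "250-500": (250, 500), "250-750": (250, 750)}
for talker, f0 in (("male, F0 = 100 Hz", 100), ("female, F0 = 200 Hz", 200)):
    for name, (low, high) in bands.items():
        print(talker, name, harmonics_in_band(f0, low, high))

print(f0_from_harmonics(harmonics_in_band(100, 250, 500)))  # 100
```

Under these assumptions the <125 Hz band contains only the male F0 and the <250 Hz band only the female F0, in line with the spectral observation earlier in this section, while the 250–750 Hz band contains several harmonics from which F0 could be inferred.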

General Discussion

No effect of SNR on bimodal benefit was found in the current study. Previous research has indicated that bimodal benefit can vary across SNRs [Gifford et al., 2007; Kong and Carlyon, 2007; Dorman et al., 2008]. The limited range of SNRs used in this study, as well as the sample size, may have limited the ability to detect SNR effects.
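Since SNR is the manipulated variable here, a sketch of how a masker is commonly rescaled to reach a target SNR may be useful. It assumes SNR is defined from the broadband RMS levels of the whole target and masker waveforms; the calibration details of the current study (presentation levels, babble recording, hardware) are not reproduced, the helper names are hypothetical, and the sine waves merely stand in for real speech and babble.

```python
import math


def rms(signal):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in signal) / len(signal))


def snr_db(target, masker):
    """Broadband SNR in dB computed from RMS levels."""
    return 20 * math.log10(rms(target) / rms(masker))


def scale_masker_for_snr(target, masker, target_snr_db):
    """Return a copy of masker rescaled so that snr_db(target, result) equals target_snr_db."""
    gain = rms(target) / (rms(masker) * 10 ** (target_snr_db / 20))
    return [gain * x for x in masker]


# Toy waveforms standing in for a sentence and a babble segment (not real stimuli).
target = [math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
babble = [0.3 * math.sin(2 * math.pi * 13 * n / 100 + 1.0) for n in range(100)]

for snr in (10, 5, 0):  # the SNRs tested in this study
    scaled = scale_masker_for_snr(target, babble, snr)
    print(snr, "dB requested ->", round(snr_db(target, scaled), 6), "dB achieved")
```

Holding the target level fixed and scaling only the masker keeps the speech level constant across SNR conditions; scaling the target instead would be an equally valid design choice.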

The current data provide evidence that different bands of low-frequency energy, such as <250 and 250–500 Hz, each provide a significant bimodal benefit. This result might be consistent with the suggestion of Li and Loizou [2007, 2008] that multiple speech cues (e.g. F0 and F1) provide glimpses of the speech signal over multitalker babble during temporal troughs in the noise and peaks in the speech. We used 20-talker babble to replicate Zhang et al. [2010]. With 20-talker babble, spectrally dependent fluctuations in SNR are much smaller than with fewer talkers, limiting opportunities to glimpse the signal. However, glimpsing has been demonstrated with 20-talker babble [Li and Loizou, 2007]. An equal bimodal benefit with different frequency bands would indicate that no single feature, whether compared across ears or extracted from the acoustic signal, is necessary for bimodal benefit, unless that feature is present in both spectral bands (e.g. periodicity). Additionally, bimodal benefit increased with increasing low-pass bandwidth, and band-pass-filtered stimuli including higher frequencies provided more bimodal benefit than low-pass-filtered stimuli. These results indicate that features not present in the <250 Hz range provide additional benefit beyond that provided by features in the <250 Hz range.
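The glimpsing account can be made concrete by counting time-frequency units in which the target locally dominates the masker. The sketch assumes that target and masker energies have already been computed per time-frequency unit (for example, from a filterbank) and treats the local-SNR criterion as a free parameter; the 3 dB default is illustrative and is not taken from Li and Loizou [2007], and the tiny energy grids are invented.

```python
def glimpse_proportion(target_energy, masker_energy, criterion_db=3.0):
    """Fraction of time-frequency units whose local SNR exceeds criterion_db.

    target_energy and masker_energy are equally shaped 2-D lists
    (frequency channels x time frames) of non-negative energies.
    """
    threshold = 10 ** (criterion_db / 10)  # energy ratio equivalent to criterion_db
    glimpsed = total = 0
    for t_row, m_row in zip(target_energy, masker_energy):
        for t, m in zip(t_row, m_row):
            total += 1
            if t > m * threshold:  # linear comparison also handles zero energies
                glimpsed += 1
    return glimpsed / total


# Invented 2-channel x 3-frame grids: speech peaks against a fluctuating vs. a denser masker.
speech = [[4, 1, 4], [1, 1, 1]]
sparse_masker = [[1, 1, 1], [1, 1, 1]]
dense_masker = [[3, 3, 3], [3, 3, 3]]
print(glimpse_proportion(speech, sparse_masker))
print(glimpse_proportion(speech, dense_masker))
```

The contrast between the two maskers mirrors the argument above: a denser, steadier masker such as many-talker babble leaves fewer units in which the target dominates.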

The magnitude of the bimodal benefit obtained in the acoustic wide-band condition of the current study is similar to that reported in previous studies [Dorman and Gifford, 2010; Zhang et al., 2010]. However, as reported previously [Dorman and Gifford, 2010], not all participants obtained bimodal benefit in all conditions. Two participants (P6 and P8) showed limited bimodal benefit in many filter band and SNR conditions. This might be because (1) these participants had the best CI-alone scores in quiet and in multitalker babble, leaving less room for improvement, (2) their A-alone wide-band recognition scores in quiet and in noise were the poorest of all 12 participants, and (3) the acoustic stimuli may have had limited audibility for these participants, even though audibility was verified on an average adult ear (KEMAR). Because statistical analyses performed with and without P6 and P8 yielded the same significant results, P6 and P8 were included in the analyses.

Clinical Implications

Based on the minimum low-pass acoustic bandwidth necessary for bimodal benefit in this study (<250 Hz for CNC words and female talkers in noise and <125 Hz for male talkers in noise), any unilateral CI listener with aidable residual hearing in the nonimplanted ear at or above 125 or 250 Hz may benefit from amplification in that ear in at least some listening situations. It is important to note that some hearing aids do not produce sufficient output at 125 Hz. Additionally, too much gain in the low frequencies can cause occlusion effects preventing some listeners from using acoustic amplification in the nonimplanted ear. It is also important to remember that the current results indicate that bimodal benefit can be obtained without the <125 Hz frequency range present in the acoustic stimulus. Therefore, it is not critical that audibility at 125 Hz be verified in order to obtain bimodal benefit, unless 125 Hz is the only aidable threshold.

In addition to amplification at 125 and 250 Hz, if unilateral CI users have aidable hearing at higher frequencies, the current results indicate that bimodal benefit will increase when amplification is provided at these frequencies. Generally, amplification should be provided at all aidable frequencies in the nonimplanted ear.

Limitations

A limitation of the current study is that different materials were used for testing in quiet and in multitalker babble. It is therefore impossible to determine whether differences in bimodal benefit patterns between words in quiet and sentences in multitalker babble are due to the multitalker babble or to differences in contextual cues in the test stimuli. Different stimuli were used in the different conditions to avoid ceiling and floor effects in bimodal CI listeners. Additionally, several SNRs were used to examine differences in bimodal benefit patterns across SNR, which reduces the need for comparisons with the quiet listening condition.

Other limitations of the current study are the relatively small sample size, which may limit generalization of the data to the broader population of CI users, and the lack of a female target talker in quiet. The sample size, however, was determined via an a priori power analysis based on the means and standard deviations in the data set of Zhang et al. [2010]. One important limitation, already mentioned, is that the specific speech features necessary for bimodal benefit were not examined. However, the steep roll-off of the filters (90 dB/octave) used in this study may increase the accuracy of inferences about these features. Lastly, the current study included participants with residual acoustic hearing in the nonimplanted ear. The comparison and integration of speech features from the CI and acoustic signals within one ear, as in ipsilateral EAS with hearing preservation (CI and acoustic signals in the same ear), might well differ from that observed across ears. Therefore, the mechanism of benefit from acoustic hearing might differ between the two populations, and the current results cannot necessarily be extrapolated to the hearing preservation population. However, Kong and Carlyon [2007] compared bimodal benefit with EAS benefit in a CI simulation with normal-hearing listeners and found similar results, indicating that the benefit derived from residual acoustic hearing might not depend greatly on the ear. On the other hand, CI and acoustic stimuli in the same ear can mask or interfere with detection of each other, influencing the benefit from the acoustic signal [Lin et al., 2011]. Therefore, further study is needed in populations with preserved hearing in the implanted ear, rather than in simulations with normal-hearing listeners, for comparison. Another limitation is that all testing was completed without the visual cues that are often present in everyday communication. It is possible that the effect of acoustic bandwidth on bimodal benefit would be different for audio-visual stimuli.
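The a priori power analysis mentioned above can be approximated, for a within-subject (paired) comparison, with the normal-approximation formula n = ((z(1 - α/2) + z(1 - β)) × σd / δ)², where δ is the expected mean bimodal benefit and σd the standard deviation of the paired differences. This sketch is not the authors' calculation: the test family, α, power, and input values are assumptions, and an exact paired t-test calculation yields a slightly larger n than this approximation.

```python
import math
from statistics import NormalDist


def paired_sample_size(mean_diff, sd_diff, alpha=0.05, power=0.80):
    """Participants needed for a two-sided paired comparison (normal approximation)."""
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) * sd_diff / mean_diff) ** 2)


# Hypothetical inputs: expected benefit of 10 percentage points, SD of differences 10 points.
print(paired_sample_size(10, 10))  # 8
```

Halving the expected benefit while keeping the same variability roughly quadruples the required sample, which is why small expected effects in narrow filter bands are the most demanding to detect.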

A final limitation is that the participants were not given extensive practice or training with the filtered acoustic stimuli prior to data collection. Therefore, the increase in bimodal benefit with increasing acoustic low-pass bandwidth could reflect either the greater speech information in the wider low-pass bandwidth conditions or the participants' unfamiliarity with the filtered acoustic stimuli. Zhang et al. [2010] likewise provided no training or practice, which strengthens the comparison between the two studies. Nevertheless, further research is needed to determine the effects of training with these stimuli.

Conclusion

Bimodal benefit was obtained with low-pass-filtered speech presented to the nonimplanted ear with a cutoff as low as 250 Hz for male talkers in quiet and female talkers in multitalker babble, and as low as 125 Hz for male talkers in multitalker babble. In both quiet and multitalker babble, bimodal benefit increased systematically with increasing acoustic low-pass bandwidth in the nonimplanted ear. A similar bimodal benefit was obtained with different frequency ranges of equal low-pass and pass band bandwidths (e.g. <250 and 250–500 Hz). These results indicate that multiple frequency regions contain cues providing bimodal benefit. No effect of SNR on bimodal benefit was present. Clinical implications are that (1) all aidable frequencies should be amplified in individuals with bimodal hearing, and (2) verification of audibility at 125 Hz is unnecessary unless it is the only aidable frequency.

Acknowledgments

The research reported here was supported by grant R01 DC009404 from the NIDCD to the senior author. We thank Dr. Ting Zhang for providing us with the raw data from which a power analysis was completed for the current study. We also sincerely thank Dr. Tony Spahr and Dr. Ting Zhang for their counsel regarding software and implementation for the current experiments and Dr. Daniel Ashmead for consultation on statistical analyses. We would also like to extend thanks to Dr. Todd Ricketts and Dr. Ralph Ohde for their helpful comments on earlier versions of this paper. Portions of these data were presented at the 2012 conference of the American Auditory Society in Scottsdale, Ariz., USA.

References

- Balkany T, Hodges A, Menapace C, Hazard L, Driscoll C, Gantz B, Kelsall D, Luxford W, McMenomy S, Neely JG. Nucleus freedom North American clinical trial. Otolaryngol Head Neck Surg. 2007;136:757–762. doi: 10.1016/j.otohns.2007.01.006.

- Brown CA, Bacon SP. Achieving electric-acoustic benefit with a modulated tone. Ear Hear. 2009a;30:489–493. doi: 10.1097/AUD.0b013e3181ab2b87.

- Brown CA, Bacon SP. Low-frequency speech cues and simulated electric-acoustic hearing. J Acoust Soc Am. 2009b;125:1658–1665. doi: 10.1121/1.3068441.

- Byrne D, Dillon H, Katsch R, Keidser G. The NAL-NL1 procedure for fitting non-linear hearing aids: characteristics and comparisons with other procedures. J Am Acad Audiol. 2001;12:37–51.

- Carroll J, Tiaden S, Zeng FG. Fundamental frequency is critical to speech perception in noise in combined acoustic and electric hearing. J Acoust Soc Am. 2011;130:2054–2062. doi: 10.1121/1.3631563.

- Chang JE, Bai JY, Zeng F-G. Unintelligible low-frequency sound enhances simulated cochlear-implant speech recognition in noise. IEEE Trans Biomed Eng. 2006;53:2598–2601. doi: 10.1109/TBME.2006.883793.

- Ching TY, Incerti P, Hill M, van Wanrooy E. An overview of binaural advantages for children and adults who use binaural/bimodal hearing devices. Audiol Neurotol. 2006;11(suppl 1):6–11. doi: 10.1159/000095607.

- Cullington HE, Zeng FG. Bimodal hearing benefit for speech recognition with competing voice in cochlear implant subject with normal hearing in contralateral ear. Ear Hear. 2010;31:70–73. doi: 10.1097/AUD.0b013e3181bc7722.

- Dorman M, Spahr AJ, Loizou PC, Dana CJ, Schmidt JS. Acoustic simulations of combined electric and acoustic hearing (EAS). Ear Hear. 2005;26:371–380. doi: 10.1097/00003446-200508000-00001.

- Dorman MF, Gifford RH. Combining acoustic and electric stimulation in the service of speech recognition. Int J Audiol. 2010;49:912–919. doi: 10.3109/14992027.2010.509113.

- Dorman MF, Gifford RH, Spahr AJ, McKarns SA. The benefits of combining acoustic and electric stimulation for the recognition of speech, voice and melodies. Audiol Neurotol. 2008;13:105–112. doi: 10.1159/000111782.

- Dunn CC, Tyler R, Witt S. Benefit of wearing a hearing aid on the unimplanted ear in adult users of a cochlear implant. J Speech Lang Hear Res. 2005;48:668–680. doi: 10.1044/1092-4388(2005/046).

- Gantz BJ, Hansen MR, Turner CW, Oleson JJ, Reiss LA, Parkinson AJ. Hybrid 10 clinical trial. Audiol Neurotol. 2009;14:32–38. doi: 10.1159/000206493.

- Gifford R, Dorman M, McKarns S, Spahr AJ. Combined electric and contralateral acoustic hearing: word and sentence recognition with bimodal hearing. J Speech Lang Hear Res. 2007;50:835–843. doi: 10.1044/1092-4388(2007/058).

- Kong Y-Y, Stickney GS, Zeng F-G. Speech and melody recognition in binaurally combined acoustic and electric hearing. J Acoust Soc Am. 2005;117:1351. doi: 10.1121/1.1857526.

- Kong YY, Braida LD. Cross-frequency integration for consonant and vowel identification in bimodal hearing. J Speech Lang Hear Res. 2011;54:959–980. doi: 10.1044/1092-4388(2010/10-0197).

- Kong YY, Carlyon RP. Improved speech recognition in noise in simulated binaurally combined acoustic and electric stimulation. J Acoust Soc Am. 2007;121:3717–3727. doi: 10.1121/1.2717408.

- Li N, Loizou PC. Factors influencing glimpsing of speech in noise. J Acoust Soc Am. 2007;122:1165–1172. doi: 10.1121/1.2749454.

- Li N, Loizou PC. A glimpsing account for the benefit of simulated combined acoustic and electric hearing. J Acoust Soc Am. 2008;123:2287–2294. doi: 10.1121/1.2839013.

- Lin P, Turner CW, Gantz BJ, Djalilian HR, Zeng FG. Ipsilateral masking between acoustic and electric stimulations. J Acoust Soc Am. 2011;130:858–865. doi: 10.1121/1.3605294.

- Mok M, Grayden D, Dowell RC, Lawrence D. Speech perception for adults who use hearing aids in conjunction with cochlear implants in opposite ears. J Speech Lang Hear Res. 2006;49:338–351. doi: 10.1044/1092-4388(2006/027).

- Peterson GE, Lehiste I. Revised CNC lists for auditory tests. J Speech Hear Disord. 1962;27:62–70. doi: 10.1044/jshd.2701.62.

- Qin MK, Oxenham AJ. Effects of introducing unprocessed low-frequency information on the reception of envelope-vocoder processed speech. J Acoust Soc Am. 2006;119:2417. doi: 10.1121/1.2178719.

- Sheffield BM, Zeng FG. The relative phonetic contributions of a cochlear implant and residual acoustic hearing to bimodal speech perception. J Acoust Soc Am. 2012;131:518–530. doi: 10.1121/1.3662074.

- Spahr AJ, Dorman M, Litvak LM, Wie SVW, Gifford R, Loizou PC, Loiselle L, Oakes T, Cook S. Development and validation of the AzBio sentence lists. Ear Hear. 2012;33:112–117. doi: 10.1097/AUD.0b013e31822c2549.

- Studebaker GA. A ‘rationalized’ arcsine transform. J Speech Hear Res. 1985;28:455–462. doi: 10.1044/jshr.2803.455.

- Turner CW, Gantz BJ, Vidal C, Behrens A, Henry BA. Speech recognition in noise for cochlear implant listeners: benefits of residual acoustic hearing. J Acoust Soc Am. 2004;115:1729. doi: 10.1121/1.1687425.

- Visram AS, Azadpour M, Kluk K, McKay CM. Beneficial acoustic speech cues for cochlear implant users with residual acoustic hearing. J Acoust Soc Am. 2012;131:4042–4050. doi: 10.1121/1.3699191.

- Wilson RH, Bell TS, Koslowski JA. Learning effects associated with repeated word-recognition measures using sentence materials. J Rehabil Res Dev. 2003;40:329–336. doi: 10.1682/jrrd.2003.07.0329.

- Yang HI, Zeng FG. Reduced acoustic and electric integration in concurrent-vowel recognition. Sci Rep. 2013;3:1419. doi: 10.1038/srep01419.

- Zhang T, Dorman M, Spahr AJ. Information from the voice fundamental frequency (f0) region accounts for the majority of the benefit when acoustic stimulation is added to electric stimulation. Ear Hear. 2010;31:63–69. doi: 10.1097/aud.0b013e3181b7190c.