Abstract

Our brains readily decode human movements, as shown by neural responses to face and body motion. N170 event-related potentials (ERPs) are earlier and larger to mouth opening movements relative to closing in both line-drawn and natural faces, and to gaze aversions relative to direct gaze in natural faces (Puce and Perrett, 2003; Puce et al., 2000). Here we extended this work by recording both ERP and oscillatory EEG activity (event-related spectral perturbations, ERSPs) to line-drawn faces depicting eye and mouth movements (Eyes: Direct vs Away; Mouth: Closed vs Open) and to non-face motion controls. Neural activity was measured in two occipitotemporal clusters of 9 electrodes, one in each hemisphere. Mouth opening generated larger N170s than mouth closing, replicating earlier work. Eye motion elicited robust N170s that did not differ between gaze conditions. Differences between the control conditions were also seen, and the controls generated the largest N170s. ERSP difference plots across conditions in the occipitotemporal electrode clusters (Eyes: Direct vs Away; Mouth: Closed vs Open) showed statistically significant differences in the beta and gamma bands for gaze direction changes and mouth opening, at similar post-stimulus times and frequencies. In contrast, control stimuli showed activity in the gamma band with a completely different time profile and hemispheric distribution from that of the facial stimuli.

ERSP plots were also generated for two 9-electrode clusters centered on the central sites C3 and C4. In the left cluster, broadband beta suppression persisted from about 250 ms post-motion onset for all stimulus conditions. In the right cluster, beta suppression was seen for the control conditions only. Statistically significant differences between conditions were confined to 4–15 Hz, unlike at occipitotemporal sites, where differences occurred at much higher frequencies (high beta/gamma).

Our data indicate that N170 amplitude is sensitive to the amount of movement in the visual field, independent of stimulus type. In contrast, occipitotemporal beta and gamma activity differentiate between facial and non-facial motion. Comparisons of the current data with previously reported studies suggest that context and stimulus configuration likely play a role in shaping neural responses. Broadband suppression of central beta activity and significant low-frequency differences were likely stimulus driven and not contingent on behavioral responses.

Keywords: N170, EEG, facial motion, gaze changes, mouth movements, biological motion, gamma band, beta band

1. INTRODUCTION

Visually equipped organisms must interpret the movements of conspecifics and other organisms in their surroundings so as to adjust their behavior to the current situation. Motion perception is an evolutionarily old source of information that species across the evolutionary ladder use as a tool for survival (Frost, 2010). Since many species must deal with complex and coordinated social lives, facial and body motion and vocalizations have become important sources of information (Blake and Shiffrar, 2007).

Biological motion is motion that originates from animate beings or living organisms. Since the pioneering work of Gunnar Johansson, a 'biological motion' stimulus has become synonymous with a schematic depiction of this articulated motion in point-light displays (Johansson, 1973). It is well known that the perception of animacy can be induced by simulating the motion of many different human actions in these point-light displays (Dittrich, 1993). Human infants show distinct preferences for biological motion stimuli relative to other forms of visual motion (Bertenthal et al., 1984; Simion et al., 2011). Remarkably, cats decode biological motion displays depicting the locomotion of other cats (Blake, 1993), and monkeys recognize human walking as depicted with either point-light or line-drawn displays (Oram and Perrett, 1994, 1996). This type of visual perception indicates that the visual system is sensitive to invariant higher-order stimulus information embedded in the motion pattern (Blake and Shiffrar, 2007). The invariance in biological motion perception allows relevant information to be extracted regarding an individual's identity (Cutting and Kozlowski, 1977) and gender (Barclay et al., 1978; Troje, 2002). Emotional expression can be gleaned from point-light displays of the whole body (Clarke et al., 2005) or from isolated body parts such as the arms (Pollick et al., 2002) or face (Bassili, 1978).

Human neuroimaging studies and non-human primate neurophysiological studies indicate that dynamic stimulus attributes are mainly processed by brain regions considered to be part of the dorsal visual system, and that progressively more complex motion information is processed within this system (Giese and Poggio, 2003; Jastorff and Orban, 2009; Thompson and Parasuraman, 2012). In a region of the macaque superior temporal sulcus known as STPa, single neurons are sensitive to the direction of biological motion, as depicted by human figures walking in profile, and also respond to (static) heads and bodies (Oram and Perrett, 1994). STPa has been proposed to integrate form and motion information (Oram and Perrett, 1996).

In the human brain, motion-sensitive loci such as hMT+, which reside in highly folded cortex on the lateral aspect of the occipito-temporal junction, respond vigorously to coherent motion of linear and non-linear forms (e.g. optic flow; Grossman et al., 2000). A nearby region in the posterior superior temporal sulcus is selectively sensitive to biological motion as depicted by point-light displays (Bonda et al., 1996; Grossman et al., 2000) or by natural images that depict motion of the face, hands or body (Puce et al., 1998; Wheaton et al., 2004). This region is also thought to integrate form and motion information (Beauchamp, 2005; Kourtzi et al., 2008; Puce and Perrett, 2003) and is active to static stimuli that depict different forms of implied human motion (Kourtzi and Kanwisher, 2000; Kourtzi et al., 2008). Neuropsychological investigations of rare cases with lesions to the superior temporal region have also reported difficulties with processing biological motion relative to other forms of motion (Vaina and Gross, 2004). The putative mirror neuron system, which includes the cortex of the anterior intraparietal sulcus as well as premotor cortex, is also known to activate when viewing the articulated motion of others (see Van Overwalle and Baetens, 2009 for a meta-analysis).

Neurophysiological investigations in humans using motion stimuli have typically used dynamic grating or checkerboard stimuli covering fairly large areas of the visual field, e.g. 20 degrees (Kuba and Kubova, 1992). The elicited event-related potentials (ERPs) typically occur over the posterior scalp and consist of a triphasic positive-negative-positive complex, in which the most prominent and robust component is the negativity at around 150–160 ms post-motion onset.

To date, very few neurophysiological investigations of biological motion, or of motion of the face, hands and body in natural images, have been performed in humans. Robust motion-sensitive ERPs have been elicited over the bilateral occipitotemporal scalp to viewing dynamic images of the face, hand and body, and over the centroparietal scalp for the hand and body (Wheaton et al., 2001). A prominent negativity at around 170–220 ms (N170) post-motion onset is seen over the posterior scalp to apparent motion of a natural face (Puce et al., 2000) or a line-drawn face (Puce et al., 2003). A corresponding magnetoencephalographic response, the M170, has also been described to the apparent motion of natural faces (Watanabe et al., 2001) or facial avatars (Ulloa et al., 2012). Larger and earlier N170s occurred to gaze aversions relative to direct gaze movements in both natural faces and isolated eyes, and to mouth opening relative to mouth closure (Puce et al., 2000). Similar results have been demonstrated using images of line-drawn faces making mouth movements (Puce et al., 2003). These findings likely reflect the potential salience of a diverted gaze or an opening mouth: a diverted gaze signals a change in social attention away from the viewer, and an opening mouth may signal an impending vocalization (Puce and Perrett, 2003). A point-light walker also elicits larger N170 activity when upright relative to inverted walkers or scrambled motion, followed by a positivity that is greatest to the point-light walker in either orientation relative to the scrambled control (Jokisch et al., 2005). Taken together, the small ERP literature describes a neural differentiation in which N170s: (i) are significantly larger to biological motion relative to a scrambled control; and (ii) can differ significantly across biological motion conditions.

Traditionally, human neurophysiological investigations have focused on task and condition effects on ERPs. ERPs are phase-locked (evoked) neural responses identified by averaging across multiple trials of the same condition, so that any activity that is not phase-locked to the stimulus is diminished in the average. However, evoked activity constitutes only part of the total neural response to a delivered stimulus: stimulus-induced but non-phase-locked (induced) activity can also be extracted as a function of frequency over the duration of the experimental trial (Galambos, 1992; Herrmann et al., 2005; Makeig, 1993; Tallon-Baudry et al., 1996). Total and induced activity are most typically displayed as time-frequency analyses in the form of event-related spectral perturbation (ERSP) plots (Delorme and Makeig, 2004; Herrmann et al., 2005; Tallon-Baudry et al., 1996). Changes in the alpha, beta, and gamma EEG frequency bands have been described in a number of tasks and conditions, and changes in a given frequency band may arise from more than one process or underlying mechanism. Simplistically speaking, decreases in alpha band activity have been related to attentive processing of stimuli, increases in the beta band to maintenance of the current brain state, and increases in the gamma band to facilitation of cortical processing (Engel and Fries, 2010; Foxe and Snyder, 2011; Herrmann et al., 2010; Palva and Palva, 2007). In reality, a more complex picture is emerging in which interactions within and between frequency bands might represent multiplexing mechanisms for information processing and communication (Akam and Kullmann, 2010; Canolty and Knight, 2010; Schyns et al., 2011; Varela et al., 2001).

A number of studies have compared EEG changes to viewing point-light and real human motion stimuli, and have typically focused their analyses on EEG power in the 8–13 Hz range (alpha, and one part of the mu rhythm) at central electrodes overlying the sensorimotor scalp. The mu rhythm is a complex rhythm, typically seen over the sensorimotor scalp, with components spanning both the alpha and beta EEG bands (Hari, 2006). Central 8–13 Hz power is typically suppressed relative to the pre-stimulus baseline more when biological motion is viewed than when non-biological motion is viewed (e.g. Oberman et al., 2005; Ulloa and Pineda, 2007). Additionally, this suppression is augmented for social versus non-social tasks (Oberman et al., 2007; Perry et al., 2010a), and when oxytocin rather than placebo is given to participants (Perry et al., 2010b). Interestingly, 8–13 Hz suppression appears to be greater over the central scalp for viewing conditions depicting (hand) action, and greater over the occipital scalp for conditions presenting non-action-related visual material (Perry et al., 2011). Suppression of beta band power has also been reported for viewing hand motion relative to moving scenery (Darvas et al., 2013). In contrast, gamma band activity has been reported to be augmented in occipital cortices within 100 ms of motion onset when viewing biological relative to non-biological motion (Pavlova et al., 2004, 2006). Attentional task demands produce later gamma band increases when viewing biological motion (Pavlova et al., 2006). Importantly, oscillatory EEG changes can occur across a number of frequency bands when viewing hand motion stimuli (Perry et al., 2011), as well as when executing hand movements (Waldert et al., 2008).

Other studies using static faces have shown that oscillatory activity in the beta band is increased to viewing a familiar face relative to an unfamiliar one (Ozgoren et al., 2005), and differential frontocentral activity to some emotions, as displayed by static faces, has been observed in alpha, beta (Guntekin and Basar, 2007) and gamma (Balconi and Lucchiari, 2008) bands. Interestingly, occipitotemporal ERP activity (N170/M170) and oscillatory EEG/MEG (gamma) activity can be dissociated by different stimulus manipulations involving static faces and face parts (Zion-Golumbic et al., 2007, 2008; Gao et al., 2013). The relationship between the N170 and gamma activity appears to be a complex one and both phenomena can exist at the same recording locations, as evidenced by direct invasive recordings from human occipitotemporal cortex (Engell and McCarthy, 2011; Caruana et al., 2013).

The current study had two purposes. First, using line-drawn face stimuli, we investigated whether eye gaze changes viewed in their most basic and schematic form would produce ERP changes similar to those seen previously with natural images of faces (Puce et al., 2000), potentially paralleling results observed with mouth movements in real and line-drawn faces (Puce et al., 2003). Here we used mouth movements and a non-face motion control as comparison conditions. Second, we investigated ERSP data to these visual motion stimuli, with the explicit purpose of characterizing the total neural activity during changes in the apparent motion stimuli. We analyzed the spectral components in the alpha (8–12 Hz), beta (12–30 Hz), and gamma (30–50 Hz) EEG frequency bands as they evolved over the post-movement epoch. To allow a direct comparison between ERP and ERSP data, we analyzed data from the same occipitotemporal electrodes in each hemisphere. An additional ERSP analysis was also performed for data from central sites overlying the sensorimotor strip.

We hypothesized that the N170 elicited to line-drawn face movements: (i) would be larger and earlier to mouth opening relative to closing, replicating our previous study (Puce et al., 2003); (ii) would be larger and earlier to averted compared to direct gaze, as previously observed with images of natural faces (Puce et al., 2000); and (iii) would differ between biologically related motion (eye and mouth movements) and non-biologically related motion (two scrambled motion conditions), as observed previously (Puce et al., 2000; Puce et al., 2003). Based on the very small existing literature, we also hypothesized that oscillatory activity in the alpha, beta and gamma bands over the occipitotemporal scalp would be more prominent for the biological motion stimuli than for the controls.

2. MATERIALS AND METHODS

2.1 Participants

High-density EEG and behavioral data were collected from 25 healthy, right-handed participants. All participants had normal or corrected-to-normal vision. The study protocol was approved by the Institutional Review Board at Indiana University (Bloomington), and all participants provided their written informed consent for the study.

Data from 3 participants were excluded from further analysis due to extensive EEG contamination from facial and neck muscle activity. Hence, data from 22 participants (11 male, 11 female) with an average age of 25.7 years (range 19–37 years) were submitted for analysis. All 22 participants were right-handed, as assessed by the Edinburgh Handedness Inventory (mean: R63.5, SD: 21) (Oldfield, 1971).

2.2 Stimuli and procedure

Stimuli

The face stimuli were originally created from a multimarker recording of facial expressions made with specialized biological motion software [Elite Motion Analysis System (BTS, Milan, Italy)], with lines generated between some of the point lights. The control stimulus was originally created by extracting line segments from the line-drawn face and spatially rearranging them in an earlier version of Photoshop Creative Suite (Adobe Systems, Inc.), so that the face was no longer recognizable (Puce et al., 2003).

The existing line-drawn face and control stimuli were modified for the current study in Photoshop CS5 (Adobe Systems, Inc.) by adding a schematic iris to the face, which, when spatially displaced, could signal a change in gaze. A direct gaze consisted of a diamond-shaped schematic pupil added to the center of each schematic eye. An averted gaze consisted of an arrow-shaped schematic pupil moved to the extremity of the schematic eye (Fig. 1). Thus, by toggling between the two schematic eye conditions, observers saw a convincing 'direct' vs 'away' (averted) gaze transition in the line-drawn face. An impression of smooth movement was generated, and no incongruous transitions were possible (e.g. eyes looking to the right followed by eyes looking to the left). Similarly, mouth movements were clearly seen when toggling between the two mouth conditions, as in a previous study (Puce and Perrett, 2003). The motion control condition was similar to that used previously, but the features making up the schematic eye stimuli were added to selected parts of the scrambled face image using Photoshop CS5. The control stimulus had movement comparable in size and type to facial (mouth) motion. Examples of images depicting a face stimulus with direct and averted gaze, a closed and open mouth, and the two forms of control stimuli are presented in Figure 1.

Figure 1. Example of stimulus conditions.

Eye conditions are depicted in the two leftmost images [Eyes Direct and Eyes Away], where a direct gaze and a gaze deviated to the left are displayed. Gaze could also be deviated to the right (not shown). Mouth conditions are depicted in the two middle images [Mouth Closed and Mouth Open]. The two control conditions [Control 1 and Control 2] are depicted in the two rightmost images. Controls were spatially scrambled versions of the face stimuli. Note that in the continuous and dynamic stimulation used in the experiment, the physical stimulus configurations for Eyes Direct and Mouth Closed are identical.

Note that the face stimulus depicting direct gaze with a closed mouth provided a type of 'baseline', that is, a physically identical stimulus from which the respective gaze changes and mouth opening movements were generated. Red and white line-drawn versions of the face and control stimuli were constructed on a black background in Photoshop CS5.

Procedure

Face or control stimuli were presented as apparent motion; additionally, the stimuli could change color from white to red and vice versa in a random manner, with one particular color persisting on average over a number of trials. The participant's task was to respond with a mouse button press to each apparent motion stimulus transition, indicating whether the current stimulus was white or red on that trial. Participants pressed the left mouse button with the left thumb for white stimuli, and the right mouse button with the right thumb for red stimuli. Over the entire experiment, equal numbers of red and white stimulus transitions were presented, and these were also equated across the different stimulus types (eyes, mouth, control).

The experiment consisted of 3 conditions (i.e. eyes, mouth, and control), which were presented in separate experimental runs. In the 'Eyes' runs, the line-drawn face pseudo-randomly changed its gaze to look at the participant directly [Eyes Direct (ED)] or away from the participant to the left or right [Eyes Away (EA)]. In the 'Mouth' runs, the mouth of the line-drawn face opened and closed [Mouth Closed (MC) and Mouth Open (MO)]. The 'Control' runs consisted of two scrambled face stimuli toggled so as to produce an apparent motion stimulus [Control 1 (C1) – Control 2 (C2)]. The motion deflection in C2 was larger than that in C1, similar to those in the mouth opening and closing conditions. Stimulus onset asynchrony was randomly varied between 1000 and 1500 ms on each trial. Each condition [Eyes, Mouth, Control] was presented in 2 separate runs to each participant, yielding a total of six experimental runs. The order of run presentation was randomized for all participants. Relatively short runs (approximately 5 min) were used to help participants remain still for the EEG recording and maintain their level of alertness. Each run consisted of 250 trials of one apparent motion condition (Eyes, Mouth, Control), presented continuously for approximately 5 minutes, after which participants had a self-paced break (4 breaks in total).

The experiment was run using Presentation Version 14 (NeuroBehavioral Systems, 2010). Participant reaction time and accuracy were logged, and time stamps for different stimulus types as well as accuracy for each trial were sent to the EEG system.

Participants viewed the stimuli on a 64-inch plasma screen (Samsung SyncMaster P63FP, refresh rate of 60 Hz), with the stimuli subtending an overall visual angle of 5.4 × 3.5 (vertical × horizontal) degrees. Participants were asked to maintain fixation on the bridge of the nose of the face, and on the equivalent spatial region in the control condition. Participants were instructed to press a button indicating the color perceived on each apparent motion trial (i.e. right/left mouse button for red/white, counterbalanced). The displayed color was randomly assigned to each stimulus trial (i.e. a red stimulus could be followed by several red stimuli). The purpose of the task was to keep participants attentive without focusing their attention on particular aspects of the face and control stimuli.

2.3 Behavioral data

Participants indicated the color of the stimulus on each apparent motion change with a two-alternative mouse button press, using the index fingers of both hands. A 2 × 6 repeated measures ANOVA with factors of stimulus color [red, white] and stimulus type [different motion conditions] was performed to assess differences in response times and accuracies across conditions.

Only trials with correct responses were included in subsequent ERP and EEG analyses.

2.4 EEG data acquisition and preprocessing

EEG data acquisition

A Net Amps 300 high-impedance EEG amplifier and NetStation software (V4.4) were used to record EEG from a 256-electrode HydroCel Geodesic Sensor Net (Electrical Geodesics Inc.) while the participant sat quietly in a comfortable chair and performed the task in a dimly lit, humidified room. Continuous 256-channel EEG data were recorded with respect to a vertex reference using a sampling rate of 500 Hz and a filter bandpass of 0.1 to 200 Hz. Impedances were maintained below 70 kΩ as per the manufacturer's recommended guidelines. Impedances were tested at the beginning of the experimental session and again at the halfway point of the experiment (after run 3 of 6), allowing any high-impedance electrode contacts to be corrected if necessary.

EEG data preprocessing

EEG data were first exported from EGI NetStation software as simple binary files so that all EEG preprocessing could be performed using functions from the EEGLAB toolbox (Delorme and Makeig, 2004), together with in-house routines written to run in EEGLAB, under MATLAB R2010b (The MathWorks, Natick, MA, USA). EEG data were then segmented into 1700 ms epochs, with a 600 ms pre-stimulus baseline and 1100 ms after apparent motion onset. [The long epoch length was chosen only to provide padding for the time-frequency analyses.]

Only EEG data from correct behavioral trials were included in the analyses. Time zero indicated the onset of apparent motion (e.g. eyes looking right). The ERP analysis focused on a period from 200 ms before to 600 ms after the apparent motion transition, and individual epochs, identified by trial type from the event markers, were normalized relative to the 200 ms pre-stimulus baseline. Stimulus types for each condition (i.e. eyes, mouth, and control) were averaged across all 6 runs for all participants.

EEG epochs were visually inspected to identify bad channels and sources of artifacts (e.g. channel drifts). We used independent component analysis (ICA) to eliminate artifacts such as eye movements, eye blinks, carotid pulse artifact, and line noise (Bell and Sejnowski, 1995; Delorme and Makeig, 2004). A total of 32 ICA components were generated for each participant's EEG dataset.

After preprocessing, data were re-referenced to a common average reference. Because our previous work (Puce et al., 2000; Puce and Perrett, 2003) was reported using a nose reference, the current data were also referenced to the nasion so that ERP data could be compared across studies. N170 and vertex positive potential (VPP) amplitudes have previously been shown to be very sensitive to reference location (Joyce and Rossion, 2005), and based on these data the average reference has been suggested as the most advantageous choice, as it captures finer hemispheric differences and shows the most symmetry between positive and negative peaks (Joyce and Rossion, 2005).
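Both re-referencing schemes described above are simple linear operations on the recorded channels. The following minimal sketch illustrates them under stated assumptions: each epoch is held as a list of per-channel sample lists (an illustrative layout, not EEGLAB's data structure), and the function names and the index of the nasion channel are hypothetical. The average reference subtracts the across-channel mean at every sample; a single-site (e.g. nasion) reference subtracts that one channel's signal from every channel.

```python
def average_reference(epoch):
    """Re-reference one epoch to the common average.

    epoch: list of channels, each a list of samples (microvolts).
    At every sample, the mean across channels is subtracted from each channel.
    """
    n_channels = len(epoch)
    n_samples = len(epoch[0])
    means = [sum(ch[t] for ch in epoch) / n_channels for t in range(n_samples)]
    return [[ch[t] - means[t] for t in range(n_samples)] for ch in epoch]


def single_site_reference(epoch, ref_index):
    """Re-reference to one electrode, e.g. a nasion channel (index is hypothetical)."""
    ref = epoch[ref_index]
    return [[x - r for x, r in zip(ch, ref)] for ch in epoch]


# Toy epoch: 3 channels x 2 samples.
epoch = [[1.0, 2.0], [3.0, 4.0], [5.0, 9.0]]
print(average_reference(epoch))         # [[-2.0, -3.0], [0.0, -1.0], [2.0, 4.0]]
print(single_site_reference(epoch, 0))  # [[0.0, 0.0], [2.0, 2.0], [4.0, 7.0]]
```

After average referencing, the channels sum to zero at every sample, so no single electrode is privileged; with a single-site reference, the reference channel itself becomes flat.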

2.5 Analysis of event-related potentials (ERPs)

ERP averaging

For ERP analysis, a digital 40 Hz infinite impulse response low-pass filter was applied to the artifact-free EEG data. Averages of artifact-free correct trials were generated for each condition and for each participant. The ERPs from all participants were averaged to generate a grand-average ERP waveform for each condition. ERP waveform morphology and voltage topography were characterized based on group-averaged data. Based on the changes in the N170 observed in our previous work (Puce et al., 2003), we explicitly focused on the N170 ERP, whose amplitude topography was maximal over the occipito-temporal scalp for both reference locations (i.e. nose and average references).
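The averaging step can be illustrated with a short sketch. It assumes each trial is a list of voltages sampled at the times in `times_ms`, uses hypothetical helper names, subtracts the mean of the 200 ms pre-stimulus window from each trial (the baseline correction described earlier), and omits the 40 Hz low-pass filter for brevity. The toy example shows why averaging preserves phase-locked activity while cancelling activity whose sign varies across trials.

```python
def baseline_correct(trial, times_ms, start_ms=-200.0, end_ms=0.0):
    """Subtract the mean voltage in the pre-stimulus window [start_ms, end_ms)."""
    base = [v for v, t in zip(trial, times_ms) if start_ms <= t < end_ms]
    offset = sum(base) / len(base)
    return [v - offset for v in trial]


def erp_average(trials, times_ms):
    """Baseline-correct every trial, then average across trials sample by sample.

    The 40 Hz low-pass filter applied in the study is omitted here.
    """
    corrected = [baseline_correct(tr, times_ms) for tr in trials]
    n = len(corrected)
    return [sum(samples) / n for samples in zip(*corrected)]


times_ms = [-200, -100, 0, 100, 200]
trial_a = [1.0, 1.0, 1.0, -4.0, 3.0]   # offset 1, deflection -5 at 100 ms, +2 at 200 ms
trial_b = [3.0, 3.0, 3.0, -2.0, 1.0]   # offset 3, deflection -5 at 100 ms, -2 at 200 ms
print(erp_average([trial_a, trial_b], times_ms))  # [0.0, 0.0, 0.0, -5.0, 0.0]
```

Both toy trials share a −5 µV deflection at 100 ms, which survives averaging, whereas the ±2 µV fluctuation at 200 ms has opposite signs across trials and cancels.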

Two clusters of 9 electrodes, one in each hemisphere, were selected, centered on the equivalent 10–10 system sites P7 and P8 (see Fig. 2) and similar to locations that showed maximal amplitudes to facial motion in previous studies (Puce et al., 2000; Puce et al., 2003). The aggregated data from each hemispheric cluster were used in all subsequent [ERP and ERSP] analyses.

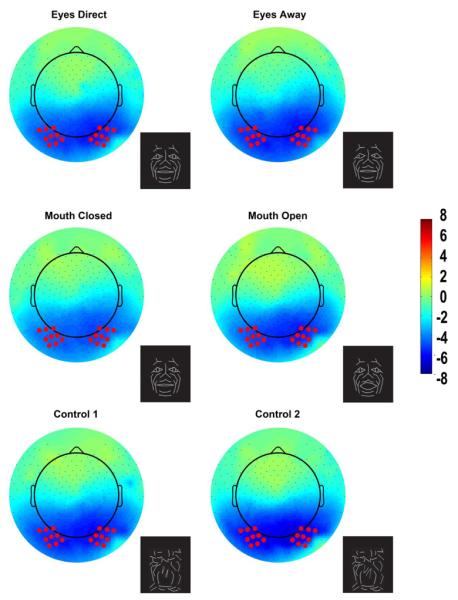

Figure 2. Group data, nose reference: Topographic voltage maps of peak N170 activity.

The N170 is distributed across the bilateral occipitotemporal scalp and appears in all conditions including the control conditions. The topographic maps are displayed in a top-down view with nose at top and left hemisphere on left. Color scale calibration bars depict amplitude in microvolts. Red circles on the maps depict the 9 electrode clusters in each hemisphere that provided input for N170 statistical analyses. Small black dots depict additional sensor locations.

Using an automated peak-picking procedure with a search time window of 150 – 250 ms after apparent motion onset, N170 amplitudes and latencies were extracted as a function of condition in the ERP data of individual participants. The mean N170 amplitude and latency for each 9-electrode cluster was calculated for each condition in each subject and provided the input for the statistical analysis.
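A minimal sketch of peak picking follows, assuming the N170 is taken as the most negative sample in the 150–250 ms window for each electrode, and that amplitude and latency are then averaged over the electrodes of a cluster. The text does not state whether peaks were picked per electrode or from the cluster-averaged waveform, so the per-electrode choice is an assumption, as are the function names. Practical peak pickers often add further checks (for example, requiring a local minimum), which are omitted here.

```python
def pick_negative_peak(waveform, times_ms, win_start=150, win_end=250):
    """Most negative sample in [win_start, win_end] ms, returned as (amplitude, latency).

    Ties in amplitude resolve to the earliest latency, because tuples compare element-wise.
    """
    candidates = [(v, t) for v, t in zip(waveform, times_ms) if win_start <= t <= win_end]
    if not candidates:
        raise ValueError("no samples fall inside the search window")
    return min(candidates)


def cluster_n170(cluster_waveforms, times_ms):
    """Mean N170 amplitude and latency across the electrodes of one cluster."""
    peaks = [pick_negative_peak(w, times_ms) for w in cluster_waveforms]
    n = len(peaks)
    return sum(a for a, _ in peaks) / n, sum(t for _, t in peaks) / n


times_ms = [100, 150, 170, 200, 250, 300]
electrode_1 = [-9.0, -1.0, -4.0, -2.0, -1.0, -8.0]  # -9 and -8 lie outside the window
electrode_2 = [0.0, -1.0, -2.0, -6.0, -3.0, -10.0]
print(cluster_n170([electrode_1, electrode_2], times_ms))  # (-5.0, 185.0)
```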

Statistical analysis of ERP data

Differences in occipito-temporal N170 peak amplitude and latency were tested using a three-way mixed-design ANOVA, Subject Gender (Male, Female) × Hemisphere (Left, Right) × Condition (ED, EA, MC, MO, C1, C2), using SPSS for Mac 18.0 (SPSS Inc.). Effects were considered significant at P < 0.05 (after Greenhouse-Geisser correction). Post-hoc contrasts were evaluated using the Bonferroni criterion to correct for multiple comparisons.

2.6 Analysis of event-related spectral perturbations (ERSP)

ERSP analysis

We examined how the spectral content of the EEG varied as a function of viewing condition for EEG data re-referenced to an average reference. Artifact-free, behaviorally correct EEG epochs were convolved with a Morlet wavelet transform in which the number of cycles increased linearly with frequency: the wavelet length increased from 1 to 12 cycles across the frequency range of 5 to 50 Hz. Linearly increasing the number of wavelet cycles is common practice when calculating spectral components in neurophysiological data, so that temporal resolution can be comparable for lower and higher EEG frequencies (Le Van Quyen et al., 2001; for a detailed account of methods see Herrmann et al., 2005). Due to computational limitations, we restricted our analyses to this 5 to 50 Hz frequency window.
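The wavelet family described above can be sketched as follows. This is an illustrative pure-Python implementation, not the EEGLAB routine used in the study; the Gaussian width convention (standard deviation in time equal to n_cycles/(2πf)), the truncation at ±3.5 standard deviations, the amplitude scaling and the function names are all assumptions. Samples for which the wavelet would extend beyond the epoch are returned as `None`, which illustrates why long, padded epochs are useful for time-frequency analysis.

```python
import cmath
import math


def n_cycles_for(freq, f_min=5.0, f_max=50.0, c_min=1.0, c_max=12.0):
    """Number of wavelet cycles, rising linearly from c_min at f_min to c_max at f_max."""
    return c_min + (c_max - c_min) * (freq - f_min) / (f_max - f_min)


def morlet(freq, srate, n_cycles):
    """Complex Morlet wavelet: a complex sinusoid under a Gaussian envelope.

    Assumed conventions: envelope SD in time = n_cycles / (2*pi*freq), truncation at
    +/- 3.5 SD, and scaling so that a unit-amplitude cosine yields amplitude ~1.
    """
    sigma_t = n_cycles / (2.0 * math.pi * freq)
    half = int(math.ceil(3.5 * sigma_t * srate))
    taus = [k / srate for k in range(-half, half + 1)]
    env = [math.exp(-tau * tau / (2.0 * sigma_t ** 2)) for tau in taus]
    scale = 2.0 / sum(env)
    return [scale * e * cmath.exp(2j * math.pi * freq * tau) for e, tau in zip(env, taus)]


def wavelet_amplitude(signal, freq, srate):
    """Amplitude of the signal convolved with the wavelet at one frequency.

    Samples where the wavelet would run past either edge of the epoch are None.
    """
    w = morlet(freq, srate, n_cycles_for(freq))
    half = len(w) // 2
    out = []
    for i in range(len(signal)):
        if i < half or i + half >= len(signal):
            out.append(None)
            continue
        acc = sum(signal[i + half - k] * wk for k, wk in enumerate(w))
        out.append(abs(acc))
    return out


srate = 500  # Hz, as in the recordings
signal = [math.cos(2 * math.pi * 40 * n / srate) for n in range(500)]
amp = wavelet_amplitude(signal, 40.0, srate)
print(round(amp[250], 2))  # close to 1.0
```

With only one or two cycles at the lowest frequencies, frequency resolution is coarse and this simple amplitude scaling becomes less accurate; the linear increase in cycles trades this off against temporal resolution at higher frequencies.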

Induced activity is defined as EEG activity that is elicited by the stimulus but may not be precisely time- or phase-locked to the stimulus transition (in this case apparent motion onset). However, each individual EEG epoch also contains evoked activity; hence 'total power', or 'total activity' (i.e. the sum of evoked and induced activity), was calculated in each frequency band for each EEG epoch (Tallon-Baudry et al., 1996). To do this, the signal in each trial was convolved with a mother wavelet, and the absolute values of the resulting coefficients were then averaged across trials. Hence all ERSP data presented here depict total activity.
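The distinction between total and evoked activity can be made concrete with a short sketch. It assumes a single-frequency complex Morlet wavelet with a fixed 7 cycles (a simplification of the linearly varying cycles used here), and the function names are hypothetical. Total activity averages the wavelet amplitude of each trial, as described above; evoked activity, shown only for contrast, transforms the trial average (the ERP), so oscillations that are not phase-locked cancel before the transform.

```python
import cmath
import math


def morlet_coeffs(signal, freq, srate, n_cycles=7.0):
    """Complex Morlet coefficients at one frequency; edge samples are returned as None."""
    sigma_t = n_cycles / (2.0 * math.pi * freq)
    half = int(math.ceil(3.5 * sigma_t * srate))
    taus = [k / srate for k in range(-half, half + 1)]
    env = [math.exp(-tau * tau / (2.0 * sigma_t ** 2)) for tau in taus]
    scale = 2.0 / sum(env)
    w = [scale * e * cmath.exp(2j * math.pi * freq * tau) for e, tau in zip(env, taus)]
    out = []
    for i in range(len(signal)):
        if i < half or i + half >= len(signal):
            out.append(None)
        else:
            out.append(sum(signal[i + half - k] * wk for k, wk in enumerate(w)))
    return out


def total_amplitude(trials, freq, srate):
    """Total activity: transform each trial, take |coefficient|, then average over trials."""
    per_trial = [morlet_coeffs(tr, freq, srate) for tr in trials]
    return [None if coeffs[0] is None else sum(abs(z) for z in coeffs) / len(coeffs)
            for coeffs in zip(*per_trial)]


def evoked_amplitude(trials, freq, srate):
    """Evoked activity: average the trials first (the ERP), then transform."""
    erp = [sum(samples) / len(samples) for samples in zip(*trials)]
    return [None if z is None else abs(z) for z in morlet_coeffs(erp, freq, srate)]


srate = 500
osc = [math.cos(2 * math.pi * 20 * n / srate) for n in range(400)]
trials = [osc, [-x for x in osc]]   # same 20 Hz oscillation, opposite phase
total = total_amplitude(trials, 20.0, srate)
evoked = evoked_amplitude(trials, 20.0, srate)
print(round(total[200], 2), evoked[200])  # total near 1.0, evoked 0.0
```

Because the two toy trials oscillate in opposite phase, their ERP is flat and the evoked amplitude is zero, while the total amplitude still captures the 20 Hz activity present in every trial.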

For each frequency step of the wavelet transform, a mean amplitude-based baseline correction was applied using the −200 to 0 ms pre-stimulus range. All analyses were performed using custom in-house routines written using the EEGLAB toolbox (Delorme and Makeig, 2004) running under MATLAB. ERSP plots were generated for each of the 6 stimulus conditions. Additional ERSP plots were generated for the differences between pairs of conditions within each motion type (Eyes = Eyes Direct − Eyes Away, Mouth = Mouth Closed − Mouth Open, Control = Control 1 − Control 2), in a comparison similar to that performed with the ERP data. Finally, a comparison was made between biological and non-biological motion, consisting of (Eyes Direct, Eyes Away, Mouth Closed, Mouth Open) vs (Control 1, Control 2).
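Baseline correction and condition differences can be sketched as below. We assume a subtractive correction, in which the mean amplitude in the pre-stimulus window is subtracted separately for each frequency; divisive (ratio or decibel) normalization is a common alternative that the text does not indicate was used. The time-frequency map layout (rows are frequencies, columns are time points) and the function names are illustrative assumptions.

```python
def baseline_correct_tf(tf, times_ms, start_ms=-200.0, end_ms=0.0):
    """Subtract, for each frequency row, the mean amplitude in [start_ms, end_ms).

    tf: list of frequency rows, each a list of amplitudes over time (assumed layout).
    """
    corrected = []
    for row in tf:
        base = [v for v, t in zip(row, times_ms) if start_ms <= t < end_ms]
        mean_base = sum(base) / len(base)
        corrected.append([v - mean_base for v in row])
    return corrected


def difference_map(tf_a, tf_b):
    """Point-by-point difference between two condition maps (e.g. Eyes Direct - Eyes Away)."""
    return [[a - b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(tf_a, tf_b)]


times_ms = [-200, -100, 0, 100]
cond_a = [[2.0, 4.0, 3.0, 10.0], [1.0, 1.0, 1.0, 5.0]]   # rows: two frequencies
cond_b = [[1.0, 1.0, 2.0, 4.0], [2.0, 2.0, 2.0, 2.0]]
diff = difference_map(baseline_correct_tf(cond_a, times_ms),
                      baseline_correct_tf(cond_b, times_ms))
print(diff)  # [[-1.0, 1.0, -1.0, 4.0], [0.0, 0.0, 0.0, 4.0]]
```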

Statistical analysis in the time-frequency domain

We used a bootstrap approach on a cluster mass statistic to identify regions of significant difference in our time-frequency data (Pernet et al., 2011). The bootstrap provided an estimate of the distribution under the null hypothesis of no difference between conditions, and the cluster mass statistic identified temporal regions of significant difference while guarding against false positives arising from multiple comparisons across time points.

First, for each comparison between two conditions at a given frequency, the cluster mass statistic was computed for the observed data. At each time point, a t-statistic was calculated for the observed difference in means between conditions. Time points passed the threshold if their t-statistics corresponded to a p-value less than 0.05 according to Student's t-distribution. Temporally contiguous thresholded time points were grouped into temporal clusters, and the cluster mass was computed as the sum of the t-statistics of the time points in each cluster.
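The cluster-forming step at one frequency can be sketched as follows. Several details are assumptions rather than statements from the text: the t-statistic is computed as a paired t across participants from per-participant condition means, clusters are runs of contiguous time points that exceed the threshold with the same sign, and the critical value is passed in directly (for 22 participants, df = 21, the two-tailed p < 0.05 critical value is about 2.08). Function names are hypothetical.

```python
import math


def paired_t(a, b):
    """Paired t statistic for two equal-length lists of per-participant values."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    var = sum((x - mean) ** 2 for x in d) / (n - 1)
    if var == 0.0:
        return 0.0 if mean == 0.0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)


def t_series(cond_a, cond_b):
    """cond_x[s][t] is participant s's mean at time t; returns one t value per time point."""
    n_times = len(cond_a[0])
    return [paired_t([s[t] for s in cond_a], [s[t] for s in cond_b]) for t in range(n_times)]


def cluster_masses(tvals, t_crit):
    """Contiguous runs of same-sign |t| > t_crit; returns (first, last, mass) per run."""
    clusters, current, sign = [], [], 0
    for i, t in enumerate(tvals):
        s = 1 if t > t_crit else (-1 if t < -t_crit else 0)
        if s != 0 and s == sign:
            current.append(i)              # extend the running cluster
        else:
            if current:
                clusters.append(current)   # close the previous cluster
            current = [i] if s != 0 else []
            sign = s
    if current:
        clusters.append(current)
    return [(c[0], c[-1], sum(tvals[i] for i in c)) for c in clusters]


tvals = [0.5, 2.5, 3.0, 1.0, -2.2, -2.5, 2.1]
for first, last, mass in cluster_masses(tvals, t_crit=2.08):
    print(first, last, round(mass, 2))
# 1 2 5.5
# 4 5 -4.7
# 6 6 2.1
```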

To test the significance of the observed clusters' masses, bootstrap testing was performed. Bootstrap replicates of each condition were created by sampling with replacement from each participant's trials irrespective of condition. In each replicate, cluster masses were computed using the procedure described above, and the maximum cluster mass was recorded. Clusters in the observed data were deemed significant if their mass exceeded the maximum cluster mass in 95% of all bootstrap replicates (corresponding to a significance level of 0.05). We initially ran bootstraps with 100, 500, and 1000 replicates. Because the significant differences identified with 500 and 1000 replicates did not differ, 1000 replicates were used for all bootstrap analyses.
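The bootstrap step can be sketched in the same spirit. Following the description above, each replicate resamples each participant's trials with replacement from the pooled trials of both conditions, recomputes the per-participant means and t-statistics, and records the maximum absolute cluster mass; the threshold is the 95th percentile of these maxima. The paired-t formulation, the data layout (one pair of trial lists per participant), the simulated data and the function names are assumptions, and small helpers for the t-statistic and cluster mass are included so that the block runs on its own.

```python
import math
import random


def paired_t(a, b):
    """Paired t statistic across participants (guards against zero variance)."""
    d = [x - y for x, y in zip(a, b)]
    n = len(d)
    mean = sum(d) / n
    var = sum((x - mean) ** 2 for x in d) / (n - 1)
    if var == 0.0:
        return 0.0 if mean == 0.0 else math.copysign(math.inf, mean)
    return mean / math.sqrt(var / n)


def max_abs_cluster_mass(tvals, t_crit):
    """Largest |sum of t| over runs of contiguous, same-sign, supra-threshold points."""
    best, run, sign = 0.0, 0.0, 0
    for t in tvals:
        s = 1 if t > t_crit else (-1 if t < -t_crit else 0)
        if s != 0 and s == sign:
            run += t
        else:
            run, sign = (t if s != 0 else 0.0), s
        best = max(best, abs(run))
    return best


def mean_course(trials):
    """Average a list of trials (each a list of samples) into one time course."""
    return [sum(samples) / len(trials) for samples in zip(*trials)]


def t_course(data):
    """data[s] = (trials_a, trials_b) for participant s; paired t at each time point."""
    a = [mean_course(ta) for ta, _ in data]
    b = [mean_course(tb) for _, tb in data]
    return [paired_t([x[t] for x in a], [y[t] for y in b]) for t in range(len(a[0]))]


def bootstrap_threshold(data, t_crit, n_boot=1000, alpha=0.05, seed=0):
    """(1 - alpha) quantile of the maximum cluster mass under the null of no difference."""
    rng = random.Random(seed)
    maxima = []
    for _ in range(n_boot):
        replicate = []
        for ta, tb in data:
            pool = ta + tb  # this participant's trials, pooled irrespective of condition
            replicate.append(([rng.choice(pool) for _ in ta], [rng.choice(pool) for _ in tb]))
        maxima.append(max_abs_cluster_mass(t_course(replicate), t_crit))
    maxima.sort()
    return maxima[math.ceil((1 - alpha) * n_boot) - 1]


# Simulated data: 5 participants, 12 trials per condition, 15 time points,
# with a true condition difference at time points 5-9.
gen = random.Random(1)
def simulated_trial(effect):
    return [gen.gauss(0.0, 1.0) + (effect if 5 <= t < 10 else 0.0) for t in range(15)]

data = [([simulated_trial(0.0) for _ in range(12)], [simulated_trial(3.0) for _ in range(12)])
        for _ in range(5)]
threshold = bootstrap_threshold(data, t_crit=2.78, n_boot=200)  # df = 4 here
observed = max_abs_cluster_mass(t_course(data), t_crit=2.78)
print(observed > threshold)
```

An observed cluster whose absolute mass exceeds the returned threshold would be declared significant; in the study itself, 1000 replicates were used.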

All ERSP analyses and statistical tests were performed on two nine-electrode clusters, one over each occipitotemporal scalp, centered on the equivalents of 10–10 electrode sites P7 and P8, and were compared with ERP data from the same sites. An additional ERSP analysis was performed on two nine-electrode clusters over the left and right sensorimotor scalp, centered on the equivalents of electrode sites C3 and C4. All ERSP data were expressed relative to an average reference.

3. RESULTS

3.1 Behavioral data

Participants reported the color of the stimulus by button press at each apparent motion change, with 98% accuracy. Mean reaction times for color detection were 595.0 ± 133.0 ms (s.d.) for red and 593.8 ± 141.0 ms (s.d.) for white. A repeated-measures ANOVA showed no significant differences in reaction time as a function of stimulus color or condition (motion type).

3.2 N170 ERPs

N170 amplitudes and latencies were extracted for each electrode cluster, participant and condition for subsequent statistical testing, using both an average reference and a nose reference. All stimulus conditions produced a robust N170 (Fig. 4), which was maximal over the occipitotemporal scalp for both nose-referenced (Fig. 2) and average-referenced (Fig. 3) data. The topographic maps were plotted at the time point at which N170 amplitude was maximal. Results obtained with the average and nose references are reported separately below.
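The two reference schemes and the peak extraction can be sketched in Python/NumPy as follows. This is an illustration under assumptions: data are (channels × samples) arrays with time in seconds relative to motion onset, the nose electrode is one of the recorded channels, and the cluster index list and the 140–280 ms search window are hypothetical values chosen for the example, not parameters reported in the study. The N170 is taken as the most negative point of the cluster-mean waveform.

```python
import numpy as np


def average_reference(data):
    """data: (n_channels, n_times); subtract the mean across channels at each sample."""
    return data - data.mean(axis=0, keepdims=True)


def channel_reference(data, ref_index):
    """Re-express every channel relative to one recorded channel (e.g. a nose electrode)."""
    return data - data[ref_index]


def n170_peak(erp, times, cluster, window=(0.14, 0.28)):
    """Amplitude and latency of the most negative point of the cluster-mean ERP.

    cluster: channel indices of the electrode cluster (hypothetical);
    window: search window in seconds (hypothetical).
    """
    wave = erp[cluster].mean(axis=0)
    mask = (times >= window[0]) & (times <= window[1])
    idx = np.flatnonzero(mask)[np.argmin(wave[mask])]
    return wave[idx], times[idx]
```

Applying `n170_peak` to the same ERP after `average_reference` and after `channel_reference` gives the two sets of amplitude and latency values that are compared in Tables 1 and 2.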

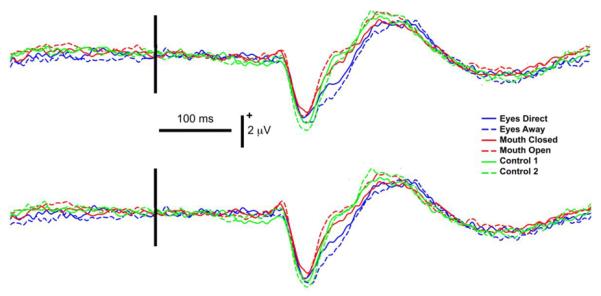

Figure 4. Group data, average reference: ERPs from left (L) and right (R) occipitotemporal electrode clusters as a function of stimulus type.

Legend: Line colors indicate corresponding stimulus conditions with respective eye conditions shown in blue, mouth conditions in red and controls in green. Vertical black bar superimposed on the ERP waveforms denotes motion onset. Vertical and horizontal calibration bars denote amplitude in microvolts and time in milliseconds, respectively.

Figure 3. Group data, average reference: Topographic voltage maps of peak N170 activity.

The N170 is distributed across the bilateral occipitotemporal scalp and appears in all conditions, including the control conditions. The topographic maps are displayed in a top-down view with the nose at top and the left hemisphere on the left. Color calibration bars depict amplitude in microvolts. Red circles on the maps depict the 9-electrode cluster in each hemisphere that provided input for the N170 statistical analyses. Small black dots depict additional sensor locations.

Nose Reference

The mean N170 amplitudes and latencies for each condition and hemisphere are shown in Table 1, and the corresponding topographic maps appear in Figure 2. A three-way repeated-measures ANOVA on N170 peak amplitude showed no significant main effects or interactions. The repeated-measures ANOVA on N170 latency revealed a significant main effect of condition [F(3.3, 66.1)=3.60, P=0.015]; there were no other significant main effects (hemisphere, gender) or interactions. Contrasts showed that the main effect of condition was driven by shorter latencies to Mouth Open than to Eyes Away (mean difference: 14.3 ± 4.0 ms). No other differences between conditions were observed.

Table 1.

Group data, nose reference: Means and standard errors (Std Error) of N170 peak amplitude (μV) and latency (ms) as a function of stimulus type for left and right hemispheres.

| Hemisphere | Condition | Peak Ampl (μV) | Std Error | Peak Lat (ms) | Std Error |
|---|---|---|---|---|---|
| Left | Control 1 | −6.36 | 0.51 | 212.81 | 3.40 |
| | Control 2 | −7.35 | 0.45 | 215.13 | 3.96 |
| | Mouth Closed | −5.92 | 0.61 | 218.68 | 5.11 |
| | Mouth Open | −6.24 | 0.59 | 208.00 | 3.97 |
| | Eyes Away | −6.69 | 0.54 | 222.00 | 4.72 |
| | Eyes Direct | −6.35 | 0.55 | 218.72 | 4.16 |
| Right | Control 1 | −6.34 | 0.48 | 212.36 | 3.60 |
| | Control 2 | −7.20 | 0.49 | 213.09 | 2.92 |
| | Mouth Closed | −6.09 | 0.62 | 217.31 | 4.77 |
| | Mouth Open | −6.39 | 0.55 | 214.63 | 4.25 |
| | Eyes Away | −7.20 | 0.50 | 229.27 | 5.00 |
| | Eyes Direct | −6.50 | 0.58 | 214.45 | 3.52 |

Data from male and female participants have been combined. Legend: Ampl = amplitude; Lat = latency.

Average Reference

N170 latency and amplitude data for each condition and hemisphere are shown in Table 2. Statistical analysis of N170 peak amplitude revealed a significant main effect of condition [F(3.310, 66.195)=6.135, P<0.001]. The main effects of hemisphere and gender were not significant, and there were no interactions among condition, hemisphere and gender.

Table 2.

Group data, average reference: Means and standard errors (Std Error) of N170 peak amplitude (μV) and latency (ms) as a function of stimulus type and hemisphere.

| Hemisphere | Condition | Peak Ampl (μV) | Std Error | Peak Lat (ms) | Std Error |
|---|---|---|---|---|---|
| Left | Control 1 | −3.02 | 0.30 | 218.73 | 3.56 |
| | Control 2 | −3.57 | 0.30 | 216.00 | 3.94 |
| | Mouth Closed | −2.63 | 0.30 | 222.91 | 5.94 |
| | Mouth Open | −3.37 | 0.38 | 219.55 | 4.88 |
| | Eyes Away | −2.86 | 0.24 | 239.41 | 6.25 |
| | Eyes Direct | −2.86 | 0.28 | 233.68 | 5.73 |
| Right | Control 1 | −3.19 | 0.27 | 223.45 | 4.86 |
| | Control 2 | −3.79 | 0.33 | 224.09 | 5.51 |
| | Mouth Closed | −3.06 | 0.32 | 225.32 | 5.96 |
| | Mouth Open | −3.65 | 0.34 | 213.41 | 3.46 |
| | Eyes Away | −3.41 | 0.29 | 233.91 | 5.90 |
| | Eyes Direct | −3.30 | 0.32 | 227.36 | 5.70 |

Data from male and female participants have been combined. Legend: Ampl = amplitude; Lat = latency.

For the significant main effect of condition, contrasts showed that N170 amplitude was larger for Mouth Open than for Mouth Closed (mean difference: 0.66 ± 0.26 μV), replicating a previous study (Puce et al., 2003). The two control conditions also differed: Control 2 elicited a larger N170 than Control 1 (mean difference: 0.57 ± 0.13 μV). Control 2 N170s were also larger than those to Mouth Closed (mean difference: 0.83 ± 0.17 μV) and Eyes Away (mean difference: 0.54 ± 0.16 μV).

The repeated-measures ANOVA on N170 latency revealed a significant main effect of condition [F(3.333, 66.667)=5.424, P<0.001]; there were no other significant main effects (hemisphere, gender) or interactions. Contrasts showed that the main effect of condition was driven by shorter latencies to Mouth Open than to Eyes Away (mean difference: 20.2 ± 4.9 ms). Both of these conditions follow the 'baseline' (physically identical) stimulus, which consists of direct gaze and a closed mouth. No other differences between conditions were observed.
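As an arithmetic check, the mean differences quoted for the contrasts can be approximately recovered from the hemisphere-specific means in Tables 1 and 2 by averaging over the two hemispheres. This assumes that each contrast compares condition means collapsed over hemisphere; the ANOVA's estimated marginal means may weight the gender groups differently, so agreement is expected only to within rounding. The standard errors cannot be recovered this way.

```python
# Hemisphere-specific means (left, right) copied from Table 2 (average reference)
AVG_AMP = {  # N170 peak amplitude, microvolts
    "Control 1": (-3.02, -3.19),
    "Control 2": (-3.57, -3.79),
    "Mouth Closed": (-2.63, -3.06),
    "Mouth Open": (-3.37, -3.65),
    "Eyes Away": (-2.86, -3.41),
    "Eyes Direct": (-2.86, -3.30),
}
AVG_LAT = {"Mouth Open": (219.55, 213.41), "Eyes Away": (239.41, 233.91)}  # ms
# ... and from Table 1 (nose reference)
NOSE_LAT = {"Mouth Open": (208.00, 214.63), "Eyes Away": (222.00, 229.27)}  # ms


def hemi_mean_diff(table, a, b):
    """Return the difference a - b averaged over the left and right hemispheres."""
    return sum(x - y for x, y in zip(table[a], table[b])) / 2


# The N170 is negative, so "X larger than Y" corresponds to Y - X > 0 in amplitude.
mouth = hemi_mean_diff(AVG_AMP, "Mouth Closed", "Mouth Open")    # text: 0.66 uV
controls = hemi_mean_diff(AVG_AMP, "Control 1", "Control 2")     # text: 0.57 uV
c2_vs_mc = hemi_mean_diff(AVG_AMP, "Mouth Closed", "Control 2")  # text: 0.83 uV
c2_vs_ea = hemi_mean_diff(AVG_AMP, "Eyes Away", "Control 2")     # text: 0.54 uV
lat_avg = hemi_mean_diff(AVG_LAT, "Eyes Away", "Mouth Open")     # text: 20.2 ms
lat_nose = hemi_mean_diff(NOSE_LAT, "Eyes Away", "Mouth Open")   # text: 14.3 ms

for name, value in [("mouth", mouth), ("controls", controls), ("c2_vs_mc", c2_vs_mc),
                    ("c2_vs_ea", c2_vs_ea), ("lat_avg", lat_avg), ("lat_nose", lat_nose)]:
    print(f"{name}: {value:.3f}")
```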

3.3 Event-related spectral perturbations (ERSPs)

Total activity

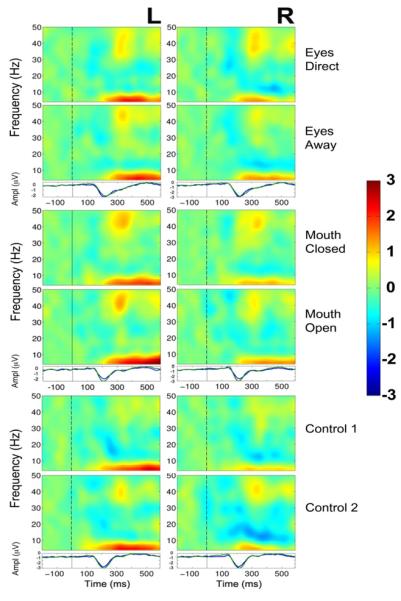

All ERSP analyses were performed on EEG data that had been digitally re-referenced to an average reference. ERSP plots derived from wavelet decomposition of single EEG trials showed clear activity in selected EEG bands in all conditions (Fig. 5). The activity profile was quite similar across conditions and consisted of a prolonged burst of activity in the theta (4 – 8 Hz) and alpha (8 – 12 Hz) bands in both electrode clusters. A 30 – 50 Hz gamma burst peaking 300 ms after apparent motion onset was also seen, predominantly for the eyes and mouth conditions and Control 2. In contrast, prolonged apparent decrements of activity in the beta range (12 – 30 Hz) were seen in most conditions, extending from approximately 150 ms after movement onset until the end of the trial.

Figure 5. Group data, average reference: ERSP plots and averaged ERPs as a function of condition pair and hemisphere.

Left (L) and right (R) hemisphere data are presented in the left and right columns, respectively. In each three-part display panel, total ERSP activity is shown for each stimulus condition in the pair (top two plots), together with the ERP waveforms for the same two conditions (bottom plot). The top panel depicts activity for the two eye conditions (Eyes Direct and Eyes Away), the middle panel for the two mouth conditions (Mouth Closed and Mouth Open), and the bottom panel for the two control conditions (Control 1 and Control 2). In ERSP plots the y-axis displays frequency (Hz) and the x-axis time (ms); ERSP power in decibels (the default unit in analysis packages such as EEGLAB; Delorme and Makeig, 2004) is depicted by the color calibration bar at the right of the figure. In ERP plots the y-axis depicts amplitude (microvolts) as a function of time. In both ERSP and ERP plots, the vertical black broken line marks motion onset.

To better identify differences in total activity across conditions, differential ERSP plots were created for each pair of conditions (Eyes, Mouth and Control). Statistically significant differences between conditions were seen in the beta (12 – 30 Hz) and gamma (30 – 50 Hz) bands. We discuss these differences for each stimulus type below; the overall findings are summarized in Table 3.

TABLE 3.

Summary of ERSP findings by hemisphere and condition differences.

| Conditions | R hemisphere, < 250 ms | R hemisphere, > 250 ms | L hemisphere, < 250 ms | L hemisphere, > 250 ms |
|---|---|---|---|---|
| Eyes: Direct – Away (ED − EA) | - | β ↑ ED: 390–490 | β ↑ EA: 100 | β ↑ EA: 350–550; γ ↑ ED: 550 |
| Mouth: Closed – Open (MC − MO) | - | β ↑ MC: 450; γ ↑ MO: 550 | - | γ ↑ MO: 550 |
| Control: C1 – C2 | - | - | γ ↑ C1: 100 | γ ↑ C1: 450–500 |

Relative increases in ERSP power for one condition over the other occurred only in the beta (β) and gamma (γ) bands, as discrete bursts of activity; approximate timing is given in milliseconds (ms) after motion onset. LEGEND: R = right, L = left, ↑ = ERSP power increase, - = no significant difference, ED = Eyes Direct, EA = Eyes Away, MC = Mouth Closed, MO = Mouth Open, C1 = Control 1, C2 = Control 2.

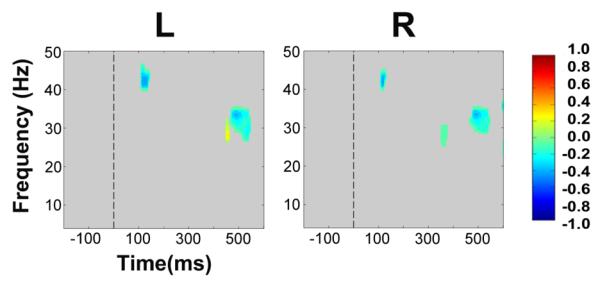

Eyes

The differential ERSPs for the Eyes conditions showed the most extensive differences in total activity. The earliest statistically significant difference between the two eye conditions occurred approximately 100 ms after the gaze change, in the 20 – 25 Hz beta band in the left hemisphere (Fig. 6, upper left panel): beta power was greater for Eyes Away than for Eyes Direct (cool colors in Fig. 6). Later, a second beta band difference, peaking between 20 – 30 Hz, occurred in both hemispheres. In the left hemisphere, as for the earlier beta activity, power was greater for Eyes Away than for Eyes Direct at 20 – 30 Hz between 350 and 550 ms after the gaze change. In the right hemisphere, by contrast, a shorter and more focal burst of beta power peaked at around 25 Hz between approximately 390 and 490 ms, with greater power for Eyes Direct than for Eyes Away. The only other statistically significant difference was a late gamma band component at around 550 ms in the left hemisphere, peaking between 30 – 40 Hz and strongest for Eyes Direct.

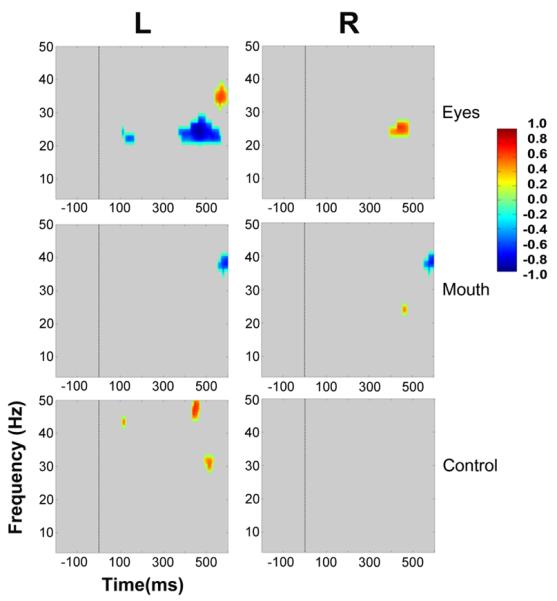

Figure 6. Group data, average reference: Statistically significant ERSP plot differences between stimulus conditions as a function of hemisphere.

Eyes, Mouth and Control conditions appear in top, middle and bottom panels, respectively. For Eyes, the ERSP plot from Eyes-Away has been subtracted from Eyes-Direct. For Mouth, Mouth-Open was subtracted from Mouth-Closed, and for the Control, Control 2 was subtracted from Control 1. Frequency (Hz) is displayed as a function of time (ms). Differential power in decibels for ERSP activity is depicted by the color calibration bar at the right of the figure. Warm colors depict relative EEG signal power increases, and cool colors indicate relative EEG signal power decreases. Grey areas in the plot indicate regions where the differences between conditions were not significant.

Mouth

In contrast to the Eyes conditions, no statistically significant differences in oscillatory power were observed for the Mouth conditions (Mouth Closed versus Mouth Open) before approximately 400 ms. In the right hemisphere, statistically significant beta activity peaked at 25 Hz, with a much more restricted time and frequency distribution than its Eyes counterpart; this difference occurred at 450 ms and was stronger for Mouth Closed. Finally, stronger gamma band power for Mouth Open was observed bilaterally at around 550 ms after the mouth movement, peaking between 35 and 40 Hz.

Control

Interestingly, no significant differences between the control conditions were seen in the right hemisphere cluster. In the left hemisphere, the only statistically significant differences between control conditions occurred in the gamma band. An early difference, peaking at 100 ms, showed greater gamma power at 45 Hz for Control 1. Later differences were seen at 450 ms after the display change, extending between 45 – 50 Hz, and at 500 ms, peaking at 30 Hz. All of these differences reflected stronger activity for Control 1.

Biological motion vs non-biological motion

To identify activity selective to viewing biological (facial) motion, we pooled ERSP data from all biological (face) motion conditions separately from the non-biological motion conditions and generated difference ERSP plots (Fig. 7). Overall, bilateral occipitotemporal activity consisted mainly of an augmentation of activity when viewing non-biological motion: (1) a brief gamma (40 – 45 Hz) burst at, or after, 100 ms; and (2) gamma activity at a lower set of frequencies (25 – 35 Hz) relatively late in the epoch, at around 450 – 550 ms in the left occipitotemporal cluster and at around 325 ms and 450 – 550 ms in the right cluster. Given the relative nature of this comparison, these data could alternatively reflect a suppression of activity when viewing biological motion, in line with the existing literature (see Discussion).

Figure 7. Group data, average reference: Statistically significant ERSP plot differences between biological (face) motion versus non-biological motion conditions as a function of hemisphere.

Left (L) and right (R) hemisphere data are presented in left and right columns, respectively. Frequency (Hz) is displayed as a function of time (ms). Differential power in decibels for ERSP activity is depicted by the color calibration bar at the right of the figure. Warm colors depict relative EEG signal power increases for biological motion, and cool colors indicate relative EEG signal power decreases for biological motion. Grey areas in the plot indicate regions in the ERSP plot where differences between conditions were not significant.

ERSP activity at central sites overlying the sensorimotor strip

To place our data in the context of the existing literature on oscillatory EEG/MEG responses to biological motion, we also analyzed two 9-electrode clusters centered on international 10–20 sites C3 and C4 [left hemisphere electrodes 51, 52, 58, 59 (C3), 60, 64, 65, 66, 72; right hemisphere electrodes 155, 164, 173, 182, 183 (C4), 184, 195, 196, 197]. ERSP plots were generated for each condition. Difference plots between the respective eye, mouth and control conditions were generated, and statistically significant data points were identified (1000 bootstrap replicates).

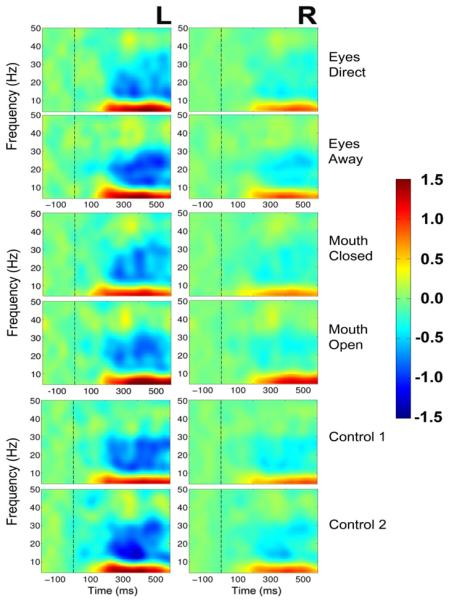

Figure 8 depicts ERSP activity at central sites for all stimulus conditions. Bilateral theta and low alpha band enhancement began around 180 ms after motion onset and persisted for the entire epoch in all stimulus conditions, similar to that seen in both occipitotemporal clusters (compare Figs. 5 and 8). At central sites there was a striking broadband suppression of beta activity for all stimulus conditions in the left central cluster, beginning about 250 ms after motion onset and persisting for the entire epoch. In the right central cluster, similar broadband beta suppression was observed for the control conditions only; the eye and mouth conditions showed a much smaller degree of broadband beta suppression in this cluster.

Figure 8. Central sites, group data, average reference: ERSP plots as a function of condition pair and hemisphere.

Left (L) and right (R) hemisphere data are presented in left and right columns, respectively. The top panel depicts activity for the two eye conditions: Eyes Direct and Eyes Away. The middle panel displays activity for the 2 mouth conditions: Mouth Closed and Mouth Open, and the bottom panel displays data for the two control conditions, Control 1 and Control 2. For ERSP plots the y-axis displays frequency (Hz) and the x-axis displays time (ms). Power (decibels) of ERSP activity is depicted by the color calibration bar at the right of the figure. The vertical black broken line displays time of motion onset.

Statistically significant differences between conditions at central sites were confined to 4 – 15 Hz (theta, alpha and low beta) (Fig. 9), in contrast to occipitotemporal sites, where condition differences occurred at much higher frequencies (high beta/gamma) (Fig. 6). For the eye conditions, isolated bursts of significantly different activity occurred at around 150 – 200 ms in the left central cluster, spanning the theta, alpha and low beta bands [reflecting larger activity to Eyes Away or, alternatively, smaller activity to Eyes Direct]. In the right central cluster, a similar relative power change occurred in the same time range but was confined to a narrower frequency band (10 – 15 Hz). For the Mouth conditions, significantly different activity was seen only in the left central cluster [larger for Mouth Closed than Mouth Open], in the alpha/low beta range (8 – 15 Hz) at around 150 ms. Significant differences in 4 – 12 Hz (theta/alpha) activity between the Control conditions occurred later in the epoch than for the Eye and Mouth conditions, at 220 – 300 ms in both left and right central clusters. An additional difference occurred at around 325 – 350 ms in the left central cluster in the low beta band. All differences between the control conditions reflected greater activity for Control 2 than for Control 1.

Figure 9. Central sites, Group data, average reference: Statistically significant ERSP plot differences between stimulus conditions as a function of hemisphere.

Eyes, Mouth and Control conditions appear in top, middle and bottom panels, respectively. For Eyes, the ERSP plot from Eyes-Away has been subtracted from Eyes-Direct. For Mouth, Mouth-Open was subtracted from Mouth-Closed, and for the Control, Control 2 was subtracted from Control 1. Frequency (Hz) is displayed as a function of time (ms). Differential power in decibels for ERSP activity is depicted by the color calibration bar at the right of the figure. Warm colors depict relative EEG signal power increases, and cool colors indicate relative EEG signal power decreases. Grey areas in the plot indicate regions where the differences between conditions were not significant.

Evoked and induced activity

We also assessed differences in time-frequency analyses of evoked and induced activity. Evoked activity was calculated from the averaged ERP data, and induced activity was calculated by subtracting the evoked activity from the total activity. Difference ERSP plots across conditions were tested statistically in the same manner as described above for total activity. No significant differences were observed in evoked activity ERSPs, and differences in induced activity paralleled those described above for total activity. Because of constraints in computing power, we report total rather than induced activity here.
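The decomposition described above can be illustrated with a short Python/NumPy sketch for a single frequency: total power is the trial average of single-trial wavelet power, evoked power is the wavelet power of the averaged ERP, and induced power is their difference. The wavelet parameters and function names are assumptions for illustration only. A common alternative subtracts the ERP from each trial before the transform; that variant is not what the text describes and is not shown here.

```python
import numpy as np


def wavelet_coeffs(x, sfreq, freq, n_cycles=3.0):
    """Complex Morlet coefficients along the last axis of x (illustrative parameters)."""
    sigma_t = n_cycles / (2 * np.pi * freq)
    t = np.arange(-3 * sigma_t, 3 * sigma_t, 1 / sfreq)
    w = np.exp(2j * np.pi * freq * t - t**2 / (2 * sigma_t**2))
    w /= np.sqrt(np.sum(np.abs(w) ** 2))
    rows = np.atleast_2d(x)
    return np.apply_along_axis(lambda row: np.convolve(row, w, mode="same"), -1, rows)


def total_evoked_induced(trials, sfreq, freq):
    """trials: (n_trials, n_times). Returns (total, evoked, induced) power time courses."""
    total = np.mean(np.abs(wavelet_coeffs(trials, sfreq, freq)) ** 2, axis=0)
    evoked = np.abs(wavelet_coeffs(trials.mean(axis=0), sfreq, freq)[0]) ** 2  # power of the ERP
    return total, evoked, total - evoked
```

Activity that is phase-locked to motion onset ends up mostly in the evoked term, whereas activity whose phase varies from trial to trial averages out of the ERP and ends up mostly in the induced term.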

4. DISCUSSION

4.1 ERP effects

We aimed to extend our previous ERP work and to examine differences in oscillatory activity (ERSPs). Real dynamic faces with mouth opening and gaze aversion elicit larger and earlier N170s relative to mouth closing and eyes gazing at the observer (Puce et al. 2000, 2003), and Johansson-like faces show similar N170 effects with mouth movements (Puce et al., 2003). The same types of N170 effects were also seen with dynamic isolated eye stimuli derived from real face images (Puce et al., 2000). Here we studied ERPs to apparent eye motion, expecting to find the same differential effects of gaze aversion as seen with real faces: namely, larger N170 amplitudes and shorter N170 latencies to averted gaze relative to direct gaze. However, N170 amplitude and latency did not differ significantly across gaze changes in this study; robust N170s were seen in both conditions. Thus, unlike images of real faces, the Johansson-like face stimuli showing gaze changes did not elicit differential ERP activity. In contrast, N170s to mouth opening movements were significantly larger than those to mouth closing movements, replicating findings with both real and Johansson-like images of faces (Puce et al., 2003). However, in that previous study, N170s to faces (real or line-drawn) were significantly larger than N170s to motion controls, a finding not observed in the current study. Indeed, one of the control stimuli (C2), whose motion profile was comparable to that of mouth motion, elicited the largest N170 of all stimulus categories. The current data therefore raise some interesting issues regarding the use of impoverished dynamic face stimuli in social cognition, which we address below.

4.1.1 Articulated versus non-articulated biological motion

Dynamic displays of facial emotion have previously been created by placing markers on the regions of the facial musculature that move most during the emotional display (Bassili, 1978; Delorme et al., 2004). Behavioral data indicate that Johansson face displays of emotion must be dynamic to be recognized optimally, and that participants are overall more accurate in judging emotions in real faces than in Johansson faces (Bassili, 1979). In the current study, the facial stimulus changed only in isolated regions, involving either the mouth or the eyes, unlike Johansson face displays, in which the configuration of the entire face can change. One possible explanation for the lack of ERP differentiation in the current study, relative to studies using real images of faces, is that these changes in the visual display were too subtle to produce detectable differences in neural activity across conditions.

Biological motion stimuli depicting hand or body motion are typically constructed with markers on the joints, allowing the essential elements of articulated human motion to be captured unambiguously (Bonda et al., 1996; Grossman et al., 2000; Johansson, 1973; Perry et al., 2010). The face lacks this type of structure, apart from the pivot point of the jaw during mouth opening and closing movements. Interestingly, the articulated mouth motion in the current study did produce differences between viewing conditions, paralleling differential ERP findings with real images of faces showing articulated mouth movements (Puce et al., 2000), as well as with movements of the hands and legs (Wheaton et al., 2001). Lateral or vertical gaze movements and blinks do not involve articulated biological motion, and in the current study, using line-drawn faces, no differences were found between eye movement conditions. If the neural response to a biological motion stimulus is driven more strongly by the motion of articulated body parts (Beauchamp et al., 2002; Peuskens et al., 2005), this could explain why the mouth, but not the eye, conditions produced differential N170s here.

4.1.2 The nature of the face stimulus itself

The impoverished nature of the line-drawn dynamic face stimulus may produce a number of contrast and brightness effects in the visual system. In a natural human face, the iris and sclera typically have high contrast relative to the rest of the face as well as to each other, unlike in non-human primates (Emery, 2000; Rosati and Hare, 2009). This ensures that gaze changes in human social interactions can be monitored even when the individual is well beyond our personal space. It has previously been suggested that the human brain is sensitive to changes in eye white area, with activation in the amygdala and other regions varying along this stimulus dimension (Hardee et al., 2008; Whalen et al., 2004). From a neurophysiological perspective, high-contrast stimuli such as checkerboards have long been known to elicit stronger responses in sensory systems than lower-contrast stimuli (e.g. see Chiappa and Yiannikas, 1983; Reagan, 1972). Human eyes signaling gaze changes should therefore produce a vigorous response in the visual system from luminance and contrast changes alone, as the high-contrast white sclera and colored iris move. Behavioral studies indicate that apparent shifts in gaze direction are perceived when lateral parts of the sclera are selectively darkened (Ando, 2002, 2004). Thus, the differences in neurophysiological signals previously triggered by eye movements may depend on the relation between the white sclera and the dark iris, which was not present in our face stimuli (Fig. 1).

One additional possibility is that the brain does not treat the line-drawn face stimulus as a face. In our eye stimuli, direct gaze was signaled by a diamond shape, and averted gaze by the diamond changing into an arrow-like stimulus (> or <) within the outline of the eye. [Note, however, that at debriefing after the experiment, all participants reported that the eye and mouth movements of the face were convincing.] Some studies of spatial attention cueing have used both arrows and schematic eyes as cues. Most of these studies indicate that both types of cue can induce reflexive shifts of spatial attention, suggesting that a common brain mechanism underlies this spatial cueing effect (Ando, 2004; Friesen and Kingstone, 1998; Frith and Frith, 2008). Interestingly, spatial cueing from eye gaze was disrupted in a patient with a lesion of the right superior temporal sulcus, whereas spatial cueing from arrows remained intact (Akiyama et al., 2007). A similar dissociation was shown in a group of 5 patients with unilateral amygdala lesions (3 left, 2 right) (Akiyama et al., 2007), suggesting that multiple brain loci respond to this important stimulus category. Given this literature on spatial cueing, we speculate that our arrow-like lateral gaze cues may not have engaged the brain systems known to respond to eye gaze cues. Moreover, there would have been little advantage in attending to these arrows in a paradigm in which spatial cueing was not the experimental manipulation. Our ERPs to the dynamic eye changes may therefore reflect a response to the arrow-like nature of the stimulus, leaving no effective difference between the 'face' and non-face control.

4.1.3 Neural responses to motion in general, or generalized visual processing

From fMRI studies it is known that the human STS is sensitive to biological motion, whereas hMT+ is highly active to many types of motion stimuli, including biological motion (Bentin and Golland, 2002; Peuskens et al., 2005; Puce et al., 1998). These anatomical regions lie in relatively close proximity in the human brain, and averaged ERPs cannot readily discriminate the activity of spatially proximal generators whose activity overlaps in time, such as hMT+ and STS. If neural activity to the dynamic line-drawn faces and their motion controls was generated by both STS and hMT+, differences between the face and control conditions may not be detectable with ERP measures, unlike with fMRI (see Peuskens et al., 2005).

The amount of motion in the visual field and its relationship to the neural response also need to be considered. In the current experiment, motion in the control stimuli was directly comparable to mouth motion: stimulus C2 and Mouth Opening had identical motion excursions (as did C1 and Mouth Closing). Notably, the N170s elicited by C2 and Mouth Opening did not differ significantly from one another, but were significantly larger than those to other stimulus categories (including C1, Mouth Closing and Eyes Away). These observations are consistent with neural responses being driven by the size of the motion transition itself, again suggesting that the line-drawn face stimulus might not be treated as a face early in the processing stream, at least as reflected in our evoked neural activity. If neural activity in this experiment was driven mainly by hMT+, the amount of motion in the stimulus, rather than the difference between the face stimulus and a non-face control, would be predicted to drive the response. Viewing eye changes in a two-dimensional image of a dynamic real face, or viewing a face in a live interaction, may set up a mechanism of automatic processing driven mainly by brain structures such as the STS and amygdala, among others (e.g. Hardee et al., 2008). As already noted, our eye stimuli may have driven neural activity in hMT+ and perhaps the STS; however, only an fMRI study could resolve this issue.

It is also possible that the observed visual ERPs (N170) do not reflect responses to motion per se, but instead reflect activity in non-motion-sensitive visual cortex. Alternating checkerboard stimuli can elicit very robust visual ERPs over the occipitotemporal scalp (Chiappa and Yiannikas, 1983), and observers often report the sensation of viewing a set of moving checks. The full-field checkerboard response can be recorded at electrodes spanning T5, O1, Oz, O2 and T6, and clinical recording montages for hemifield stimulation include these lateral temporal sites (Misulis and Fakhoury, 2001).

4.1.4 Effects of stimulus context

The presence of other stimulus types within an experimental design may influence how neural activity manifests during the experiment. Stimulus-induced context effects have been reported in the literature (Bentin and Golland, 2002; Latinus and Taylor, 2006). For example, such a context effect was observed in an ERP study using (static) schematic faces and spatially rearranged versions of the same stimuli (Bentin and Golland, 2002). The spatially jumbled stimuli were presented in experimental blocks 1 and 3, the schematic faces in block 2, and line drawings of objects in block 4. N170s were elicited to all stimulus categories and, as expected, were largest to the intact schematic faces. Strikingly, however, N170s to jumbled schematic faces in block 3 were larger than those in block 1, having been modulated or primed by exposure to the intact schematic faces in block 2 (Bentin and Golland, 2002). In our previous study (Puce et al., 2003), the line-drawn facial stimuli and associated controls were presented with all stimulus conditions occurring within each experimental block, together with images of real faces and their associated controls. It is therefore possible that the N170 amplitude modulation to the line-drawn mouth movements in that study was primed by the presence of their real-face counterparts making equivalent facial movements.

4.1.5 Task requirements

Notably, in all of our previous studies, we obtained neural responses to implicitly processed stimuli: most of our stimulus paradigms involved passive viewing (Puce et al., 2000; 2003), with the exception of one task in which participants detected a non-face target stimulus superimposed on a train of dynamic real face stimuli (Brefczynski-Lewis et al., 2011). In that study, eye aversion modulated the N170 in a manner similar to our passive viewing studies. Here, we asked participants to respond to each motion transition with a button press in a color judgment task. Task requirements are thought to modulate how social cognitive neural responses evolve, making it important to study neural responses to the same stimuli under implicit and explicit viewing conditions (Frith and Frith, 2008). N170s to static face manipulations are known to be larger and more delayed when the participant's task does not specifically involve face processing (Latinus and Taylor, 2006). Hence, because we used an incidental experimental manipulation, we predicted that we would observe significant N170 differences between our dynamic face conditions, as well as differences between facial motion and a motion control. It is possible that our color judgment task interfered with the processing of the facial stimuli. We believe this is unlikely, as the N170 does not appear to be modulated by chromatic versus greyscale manipulations, at least for static real faces (Allison et al., 1999). It should be noted, however, that negatives of real face stimuli elicit larger and slower N170s, similar to those observed with inverted real faces (Itier and Taylor, 2002).

4.1.6 Effects of reference electrode

One potential variable that may influence how N170 characteristics differ between conditions is the reference electrode (e.g. Joyce and Rossion, 2005; Rellecke et al., 2013; Murray et al., 2008). In a study using static emotional faces, N170 differences between conditions were less pronounced with a mastoid reference than with the average reference (Rellecke et al., 2013). The N170 and VPP have also been shown to vary considerably in amplitude depending on whether a discrete reference electrode (e.g. nose, mastoid) or an average reference is used. Differences in reference electrode configuration likely influence how activity from multiple generators manifests at the scalp (George et al., 1996; Murray et al., 2008). The average reference has been advocated as the reference of choice for high-density EEG datasets on which source modeling and functional connectivity analyses are performed (Murray et al., 2008). With this in mind, we analyzed our data using both a nose reference and an average reference.

To allow comparison with future studies, we expressed and analyzed our data using the average reference. To compare the current data more directly with our previous studies, we also expressed the data using a nose reference (e.g. Puce et al., 2000; Puce et al., 2003). Interestingly, and similar to what has been reported for static faces, the nose-referenced ERP data showed no significant differences in N170 amplitudes or latencies across any of our dynamic stimulus conditions. The average-referenced data did show differences between the mouth conditions, similar to those seen to real faces analyzed with a nose reference. This suggests that the average reference may reveal more subtle differences between stimulus conditions than discrete reference electrode configurations.
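The conversion from a nose reference to an average reference can be illustrated with a minimal sketch. It assumes nose-referenced data stored as a plain list of channels (each a list of voltage samples) and, at each time point, subtracts the mean across the recorded channels. The function name and data layout are hypothetical, this is not the processing pipeline used in this study, and leaving the nose itself out of the average is an assumed convention (toolboxes differ on this point).

```python
def rereference_to_average(data):
    """Convert nose-referenced EEG to an average reference (illustrative sketch).

    data: list of channels, each a list of voltages (e.g. microvolts)
    recorded against a nose reference. The nose itself is NOT included
    in the average (an assumed convention).
    Returns a new list of channels with the same shape.
    """
    n_channels = len(data)
    n_samples = len(data[0])
    rereferenced = [[0.0] * n_samples for _ in range(n_channels)]
    for t in range(n_samples):
        # Mean voltage across all recorded channels at this time point.
        mean_t = sum(channel[t] for channel in data) / n_channels
        for i, channel in enumerate(data):
            # The same value is subtracted from every channel at time t.
            rereferenced[i][t] = channel[t] - mean_t
    return rereferenced


# Toy example: 3 channels, 2 time points (values are illustrative only).
nose_ref = [[1.0, 2.0], [3.0, 4.0], [5.0, 9.0]]
avg_ref = rereference_to_average(nose_ref)
print(avg_ref)  # [[-2.0, -3.0], [0.0, -1.0], [2.0, 4.0]]
```

Because one common value is subtracted from every channel at each time point, the voltage difference between any two channels is unchanged; what shifts is the value at each individual electrode. This is why the choice of reference can alter condition effects measured at a given electrode cluster, as discussed above.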

4.2 ERSP effects

Overall, the N170 data suggest that the line-drawn (impoverished) face stimuli might not have been treated as faces or eyes in early stages of the processing stream (< 250 ms). We statistically compared ERSPs between stimulus conditions at the same electrodes used for the ERP analysis. Statistically significant differences between conditions were observed in the time-frequency domain when total (evoked + induced) activity was examined. Because a separate analysis of evoked activity revealed no significant differences in oscillatory behavior, the significant differences in total activity appear to have been driven by induced activity. Although the significant effects were complex (summarized in Table 3), they were confined to the beta and gamma EEG bands.
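The distinction drawn above between total, evoked, and induced activity can be made concrete with a minimal sketch. It uses a naive single-frequency discrete Fourier transform rather than the wavelet or short-time decomposition typically used to compute ERSPs, and it defines induced power as total power minus evoked power, which is one common convention. The function names and toy signals are hypothetical. Total power is computed on each trial and then averaged, so it keeps activity whether or not it is phase-locked to motion onset; evoked power is the power of the trial average (the ERP), so activity whose phase varies across trials cancels out of it.

```python
import cmath
import math


def fourier_coefficient(signal, freq_hz, srate_hz):
    """Complex Fourier coefficient of one trial at a single frequency (naive DFT)."""
    n = len(signal)
    return sum(
        x * cmath.exp(-2j * math.pi * freq_hz * t / srate_hz)
        for t, x in enumerate(signal)
    ) / n


def total_evoked_induced(trials, freq_hz, srate_hz):
    """Return (total, evoked, induced) power at freq_hz across trials."""
    coeffs = [fourier_coefficient(trial, freq_hz, srate_hz) for trial in trials]
    # Total power: power of each single trial, averaged over trials.
    total = sum(abs(c) ** 2 for c in coeffs) / len(coeffs)
    # Evoked power: power of the trial average. The DFT is linear, so the
    # coefficient of the averaged signal equals the mean coefficient.
    evoked = abs(sum(coeffs) / len(coeffs)) ** 2
    # Induced power: the non-phase-locked remainder (assumed convention).
    induced = total - evoked
    return total, evoked, induced


# Toy data: 8 trials of a 25 Hz (beta) cosine, 1 s sampled at 250 Hz.
SRATE, FREQ, N = 250, 25, 250
phase_locked = [
    [math.cos(2 * math.pi * FREQ * t / SRATE) for t in range(N)] for _ in range(8)
]
phase_jittered = [
    [math.cos(2 * math.pi * FREQ * t / SRATE + 2 * math.pi * k / 8) for t in range(N)]
    for k in range(8)
]
print(total_evoked_induced(phase_locked, FREQ, SRATE))    # approx. (0.25, 0.25, 0.0)
print(total_evoked_induced(phase_jittered, FREQ, SRATE))  # approx. (0.25, 0.0, 0.25)
```

In the toy example, a 25 Hz oscillation with the same phase on every trial shows up as evoked power, whereas the same oscillation with its phase spread across trials contributes the same total power but no evoked power, so it is classed as induced. This is the logic by which significant differences in total power, without corresponding differences in evoked power, point to induced activity.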

Strikingly, no significant changes were observed in the right occipitotemporal region earlier than 250 ms post-motion onset, the interval in which the largest ERPs were observed. Because the ERP data suggest that the stimuli might not have been treated as faces, the early beta burst (100 to ~180 ms) in the left hemisphere may not be a response to facial motion, particularly as the literature indicates a predominantly right-hemisphere bias for face processing (Corballis, 1997; Davidson, 1988; Perrett et al., 1988; Rossion et al., 2000; Rossion et al., 2003; Simon-Thomas et al., 2005). Given its early onset, this activity may instead index the processing of motion per se rather than of facial motion, as also suggested by the gamma burst in the difference between the control conditions, for the reasons outlined in the preceding section on ERPs.

When considering the N170 data, we have argued that neural responses to the line-drawn face stimuli (and their controls) are likely to be driven by motion per se. However, there were some intriguing differences between conditions in the ERSP data. Most of the significant differences in ERSP power across conditions occurred at latencies greater than 250 ms and were observed for the Eye and Mouth conditions in both hemispheres. Notably, the only late gamma differences for the Control conditions were again confined to the left hemisphere. In contrast, late effects for the Eye and Mouth conditions were observed in both the beta and gamma bands in the right hemisphere: beta activity was augmented first for the Mouth Closed condition, followed by increased gamma activity for the Mouth Open condition. A late gamma burst coincident with this right-hemisphere burst was also seen in the left hemisphere. Significant oscillatory differences within the eye and mouth condition pairs, at time points where no such differences occurred for the control conditions, might indicate that the eye and mouth stimuli are processed as faces to some degree, despite the large overall motion-related responses indicated by the controls.

If the changes in oscillatory activity do reflect face processing, then the increased power in the right occipitotemporal beta band (peaking at ~25 Hz, ~380 to 450 ms), with similar properties for both the Mouth Closed and Eyes Direct conditions (Fig. 5), could be interpreted in light of previous literature linking beta-band activity to face processing and recognition, with factors such as facial expression and familiarity modulating activity in this band (Guntekin and Basar, 2007; Ozgoren et al., 2005). We thus suggest that this later beta component (~400 to ~500 ms), elicited only for face-like stimuli and significantly augmented for Mouth Closed and Eyes Direct in the right hemisphere cluster, might reflect the processing of the motion of faces or face-like stimuli. Importantly, this distinction emerges late, consistent with the absence of face/non-face differences in the N170.

Because the Mouth Closed and Eyes Direct stimuli were physically identical and differed only in the preceding stimulus, it is not surprising that they share some properties in total neural activity. Indeed, a statistical comparison of these two conditions (not shown) revealed no significant differences in oscillatory activity in any frequency band. We therefore believe that the differences in oscillatory activity within the mouth and eye conditions are driven by the context provided by the preceding apparent motion stimulus (i.e. averted gaze or mouth opening).

Overall, the differences in oscillatory activity between the eye conditions were the most extensive of all our statistical comparisons (see Fig. 6, top row). This is interesting, given that the motion changes in the eye stimuli were smaller than those in the mouth stimuli and the controls, arguing against the idea that this neural activity merely reflects the extent of motion in the visual field. Instead, the activity might reflect processing of eye gaze changes in the face, a socially important stimulus (Conty et al., 2007; Puce et al., 2000). Beta oscillations peaked at ~25 Hz in two time windows, first between 100 and ~180 ms and then between ~380 and ~550 ms, in the left hemisphere cluster only for Eyes Away. The first beta burst might reflect the salience of the Eyes Away movement relative to Eyes Direct, eliciting significant activity within the first 200 ms after movement onset. The second left-hemisphere beta burst to Eyes Away, which shared similar time/frequency properties with a right-hemisphere beta burst to Eyes Direct, further supports the idea that changes in gaze direction are important in terms of facial biological motion (Puce et al., 2000). The functional significance of these hemispheric differences in oscillatory activity to gaze changes is not known, and further experiments will be required to determine what drives them.

Interestingly, a late gamma burst in the left electrode cluster, elicited preferentially to Eyes Direct, shared similar time/frequency properties with a bilateral gamma burst that was significantly stronger for Mouth Open. In real life, a direct gaze signals potential social contact, and an opening mouth signals an impending vocalization. An intriguing possibility is that both stimuli generate approach-related neural responses. Approach and withdrawal are generally acknowledged as two important dimensions of social behavior (Elliot, 2006; Young, 2002). Schutter et al. (2001) suggested that hemispherically asymmetrical high-frequency activity might be linked to approach and withdrawal behaviors, as observed to faces depicting anger and happiness. Specifically, increases in beta oscillations over the right parietal scalp were suggested to index avoidance (Schutter et al., 2001). Additionally, induced gamma-band oscillations have been linked to enhanced processing of positive affect in static face stimuli (Heerebout et al., 2013). It is difficult to relate our results to these studies, as our stimuli had no affective component and our task did not require a social judgment. Moreover, our study used dynamic line-drawn faces, whereas those studies presented static images of real faces. If we consider the hemispheric approach/avoidance distinction of Schutter (2013), our data do not conform neatly to this schema. The gamma burst in our data was stronger bilaterally for Mouth Open (an approach-type stimulus), but stronger for Eyes Direct only in the left hemisphere. Eyes Away (a potential avoidance-type stimulus) elicited a beta burst in the left hemisphere, completely at odds with this schema.
Notably, the control stimuli, which cannot be characterized along the approach/withdrawal dimension, did not induce activity at these particular times and frequencies. Thus, these late high-frequency oscillations might be related to processing some aspect of the social relevance of our stimuli. Further experiments using tasks in which participants make explicit social judgments will be needed to understand the functional significance of these differences in oscillatory activity.