Abstract

Background

The Carotid Revascularization Endarterectomy versus Stenting Trial (CREST) received five years’ funding ($21,112,866) from the National Institutes of Health to compare carotid stenting to surgery for stroke prevention in 2,500 randomized participants at 40 sites.

Aims

Herein we evaluate the change in the CREST budget from a fixed-cost to a variable-cost model and recommend strategies for the financial management of large-scale clinical trials.

Methods

Projections of the original grant’s fixed-cost model were compared to the actual costs of the revised variable-cost model. The original grant’s fixed-cost budget included salaries, fringe benefits, and other direct and indirect costs. For the variable-cost model, the costs were actual payments to the clinical sites and core centers based upon actual trial enrollment. We compared annual direct and indirect costs and per-patient cost for both the fixed and variable models. Differences between clinical site and core center expenditures were also calculated.

Results

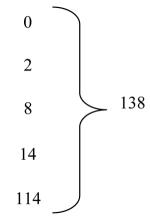

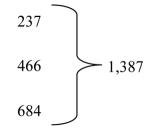

Using a variable-cost budget for clinical sites, funding was extended by a no-cost extension from five to eight years. The number of randomizing sites tripled, from 34 to 109. Of the targeted sample size of 2,500, 138 (5.5%) participants were randomized during the first five years and 1,387 (55.5%) during the no-cost extension. The actual per-patient cost of the variable model ($13,845) was 9% of the projected per-patient cost of the fixed model ($152,992).

Conclusions

Performance-based budgets conserve funding, promote compliance, and allow for additional sites at modest additional cost. Costs of large-scale clinical trials can thus be reduced through effective management without compromising scientific integrity.

Keywords: Clinical trial, Cost factors, Economics, Carotid endarterectomy, Carotid stenting, Stroke

Introduction

Large multi-site randomized clinical trials are threatened by increasing costs [1,2] and often exhaust their funding before reaching target enrollment [3]. Creative strategies can stretch the available funds further and broaden the sources of financial support for these mega-trials. Analyzing the effect of switching from one budgetary model to another shows how efficiently trial funds are used and provides a knowledge base for future trial budgets [4].

Aims

This paper reports a fee-for-performance approach to the management of a large randomized clinical trial. We describe mechanisms used to conserve funding and provide proactive financial planning to inform management of future multicenter trials.

Methods

The Carotid Revascularization Endarterectomy versus Stenting Trial (CREST) [5,6] is a consortium of 1) the core centers (university-based administrative, statistical and data management, recruitment, and central reading laboratories), 2) the clinical sites, and 3) the trial device manufacturer (the Investigational Device Exemption [IDE] sponsor). To evaluate the change in the CREST budget from a fixed-cost to a variable-cost model, financial projections of the original fixed-cost model were compared with the actual costs of the revised variable-cost model, which was based on a per-patient fee-for-performance structure. Both models were evaluated in the context of a five-year grant award of $21,112,866 from the United States National Institutes of Health (NIH), which approved 40 clinical sites to enroll 2,500 patients.

Operational costs were calculated from the start date of the award until funding would have been exhausted. For the fixed-cost model, costs were based upon the simulated scope of work and the projected costs contained in the grant. Site payments were calculated from the date of contract execution with each of the first 40 sites. The core centers’ direct and indirect costs in the five-year award were added. Enrollment was based upon the number of patients randomized from trial initiation until grant funding would have been expended.

For the variable-cost model, the costs were payments to the clinical sites and core centers based upon actual trial enrollment and follow-up visits. The line items for physicians', coordinators', and technicians' salaries, mailings, and indirect costs were replaced by payments for trial visits completed and case report forms submitted. Site subcontract budgets scaled payments to the importance of each visit: $1,500 for enrollment, $450 for long clinic visits, $225 for short clinic visits, $100 for telephone contacts, and $500 for the final clinic visit. In March 2005, when recruitment lagged, reimbursement for enrollment visits was increased from $1,500 to $2,500 per patient. Budgets for several core centers were converted to fee-for-service payments for consultants or per-test reading payments, and the number of core centers was reduced. Nonessential study visits were eliminated.
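The visit-based payment rule can be sketched in Python. This is a minimal illustration, not CREST's actual accounting system: the function name `site_payment`, the visit-type keys, the `late_enrollments` parameter, and the example site's visit counts are all hypothetical; only the dollar amounts come from the text. It shows the central property of the fee-for-performance budget: payments scale with completed, documented visits, so a site with no activity in a period is owed nothing.

```python
# Per-visit site payments from the CREST Methods, in US dollars.
VISIT_FEES = {
    "enrollment": 1_500,
    "long_clinic": 450,
    "short_clinic": 225,
    "telephone": 100,
    "final_clinic": 500,
}
# Enrollment fee after the March 2005 increase.
RAISED_ENROLLMENT_FEE = 2_500


def site_payment(visit_counts, late_enrollments=0):
    """Return the amount owed to a site for visits with submitted CRFs.

    visit_counts: maps a visit type (a key of VISIT_FEES) to a count.
    late_enrollments: hypothetical input giving how many enrollment visits
    were completed after March 2005 and so earn the raised fee.
    """
    enrollments = visit_counts.get("enrollment", 0)
    if not 0 <= late_enrollments <= enrollments:
        raise ValueError("late_enrollments must be between 0 and the enrollment count")
    # Base pay: every completed visit earns its scheduled fee.
    total = sum(VISIT_FEES[kind] * n for kind, n in visit_counts.items())
    # Top up the enrollments paid at the raised post-March-2005 rate.
    total += (RAISED_ENROLLMENT_FEE - VISIT_FEES["enrollment"]) * late_enrollments
    return total


# Hypothetical site: 10 enrollments (4 after March 2005) plus follow-up contacts.
example = {"enrollment": 10, "long_clinic": 12, "short_clinic": 20,
           "telephone": 30, "final_clinic": 2}
print(site_payment(example, late_enrollments=4))  # 32900
# A site that completes no visits in a period is owed nothing.
print(site_payment({}))  # 0
```

Under a fixed-cost budget, by contrast, salary line items would be paid whether or not any of these visits took place.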

We compared annual direct and indirect costs and a per-patient cost for both the fixed and variable models. For awarded dollars, we compared the maximum years of operation, the number of sites activated annually, the number of patients randomized, and the maximum months of patient follow-up that would be supported by each of the models. Differences between clinical site and core center expenditures were also calculated.

We determined the costs supported by industry for monitoring, regulatory personnel, regulatory travel, and regulatory consultants. The costs funded by the primary academic grant holder (University of Medicine and Dentistry of New Jersey [UMDNJ]-New Jersey Medical School) for previously unbudgeted contractual and legal consultant costs were assessed as well.

Results

A comparison of the fixed- and variable-cost models is shown in Table 1. Under the fixed-cost model, the NIH National Institute of Neurological Disorders and Stroke (NINDS) award of $21,112,866 would have been spent in 5.2 years. The 40 clinical sites would have randomized 138 participants, with a mean follow-up of 9.5 months, at a per-patient cost of $152,992. The variable-cost model used in CREST supported 8.0 rather than 5.2 years of trial activity, with 109 clinical sites (nearly three times as many) activated to randomize 1,525 participants (1,387 more), with a mean follow-up of 16.9 months (7.4 months longer), at a per-patient cost of $13,845 (9% of the fixed-model cost). The split of expenditures between the centralized core centers and the clinical sites was similar in both models: 72.8% versus 27.2% for the fixed model and 71.6% versus 28.4% for the variable model.
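The per-patient and follow-up figures can be reproduced from the Table 1 totals. The sketch below assumes, as the reported means imply, that the follow-up column of Table 1 holds total months of follow-up accrued across all participants; dividing by the number randomized then recovers the 9.5- and 16.9-month means. The names `TABLE1_TOTALS` and `summary` are illustrative only.

```python
AWARD = 21_112_866  # NINDS award in US dollars, identical for both models

# Table 1 totals: (participants randomized, total follow-up months)
TABLE1_TOTALS = {
    "fixed": (138, 1_314),
    "variable": (1_525, 25_806),
}

summary = {}
for model, (randomized, followup_months) in TABLE1_TOTALS.items():
    summary[model] = {
        # The whole award is spread over everyone randomized.
        "cost_per_patient": round(AWARD / randomized),
        # Total follow-up months divided by participants gives the mean.
        "mean_followup": round(followup_months / randomized, 1),
    }

ratio = summary["variable"]["cost_per_patient"] / summary["fixed"]["cost_per_patient"]
print(summary)
print(f"variable/fixed per-patient cost: {ratio:.0%}")  # 9%
```

Because both models spend the same award, the per-patient cost falls in direct proportion to the number of participants the budget can support.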

Table 1.

Comparison of the fixed and variable models.

| Years of Operation | Sites Activated, Fixed Model | Sites Activated, Variable (Actual) | Randomizations, Fixed Model | Randomizations, Variable (Actual) | Mean (Maximum) Follow-up Months, Fixed Model | Mean (Maximum) Follow-up Months, Variable (Actual) | Operational Costs (Direct and Indirect), Fixed Model ($) | Operational Costs (Direct and Indirect), Variable (Actual) ($) |
|---|---|---|---|---|---|---|---|---|
| Year 1 | 0 | 0 | 0 | 0 | 0 | 0 | 2,605,781 | 559,135 |
| Year 2 | 3 | 1 | 2 | 2 | 0 | 0 | 2,872,113 | 637,477 |
| Year 3 | 21 | 1 | 8 | 8 | 310 | 660 | 4,122,613 | 2,081,926 |
| Year 4 | 15 | 6 | 14 | 14 | 266 | 756 | 5,419,289 | 2,128,128 |
| Year 5 | 1 | 26 | 104 | 114 | 728 | 4,788 | 5,383,104 | 2,654,320 |
| Year 6* | 0 | 17 | 10* | 237 | 10* | 7,110 | 709,966* | 3,244,169 |
| Year 7 | 0 | 38 | 0 | 466 | 0 | 8,388 | 0 | 4,511,547 |
| Year 8 | 0 | 20 | 0 | 684 | 0 | 4,104 | 0 | 5,296,164 |
| TOTAL | 40 | 109 | 138 | 1,525 | 1,314 | 25,806 | 21,112,866 | 21,112,866 |

*Fixed model ended in the first quarter of year 6.

Because spending was matched to trial activity in the variable-cost budget, the NINDS funding period could be lengthened by a no-cost extension from five to eight years (Table 2). During this three-year extension, the number of activated study sites increased from 52 to 98, the number of randomizing sites tripled (34 to 109), and 9,528 follow-up visits occurred. Of the 2,500 targeted participants, 138 (5.5%) were randomized during the first five years and 1,387 (55.5%) during the no-cost extension, contributing to completion of enrollment in 2008 [7] and publication of results in 2010 [6].
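The randomizing-site and enrollment figures in this paragraph follow directly from the variable-model columns of Table 1, with years 1 to 5 forming the original award and years 6 to 8 the no-cost extension. A short sketch of that arithmetic (variable names are illustrative):

```python
TARGET = 2_500  # planned sample size

# Variable-cost (actual) model, years 1-8, from Table 1
sites_by_year = [0, 1, 1, 6, 26, 17, 38, 20]
randomized_by_year = [0, 2, 8, 14, 114, 237, 466, 684]

# Years 1-5 are the original award; years 6-8 are the no-cost extension.
award_years = slice(0, 5)
extension_years = slice(5, 8)

sites_at_year5 = sum(sites_by_year[award_years])
sites_at_year8 = sum(sites_by_year)
first_five = sum(randomized_by_year[award_years])
extension = sum(randomized_by_year[extension_years])

print(sites_at_year5, sites_at_year8)            # 34 109
print(first_five, f"{first_five / TARGET:.1%}")  # 138 5.5%
print(extension, f"{extension / TARGET:.1%}")    # 1387 55.5%
```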

Table 2.

Effect of financial management on site activation and recruitment in CREST.

| Year | Five-Year Award, 1999–2003 ($) | Actual Expenditures ($) | Sites Activated for Randomization | Participants Randomized |
|---|---|---|---|---|
| Year 1 (1999) | 636,000 | 559,135 | 0 | |
| Year 2 (2000) | 5,117,671 | 637,477 | 1 | |
| Year 3 (2001) | 5,047,412 | 2,081,926 | 1 | |
| Year 4 (2002) | 5,077,718 | 2,128,128 | 6 | |
| Year 5 (2003) | 5,234,065 | 2,654,320 | 26 | |
| Year 6 (2004) | 0 | 3,244,169 | 17 | |
| Year 7 (2005) | 0 | 4,511,547 | 38 | |
| Year 8 (2006) | 0 | 5,296,164 | 20 | |
| TOTAL | 21,112,866 | 21,112,866 | 109 | 1,525 |
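Table 2 also shows why a no-cost extension was possible. As a sketch (our own arithmetic on the table, not a figure reported in the text), subtracting cumulative expenditures from the cumulative award gives the unspent balance at the end of each year; by the end of 2003, $13,051,880, or about 62% of the award, remained available to fund 2004 through 2006.

```python
from itertools import accumulate

# Table 2, US dollars, years 1999-2006
award = [636_000, 5_117_671, 5_047_412, 5_077_718, 5_234_065, 0, 0, 0]
actual = [559_135, 637_477, 2_081_926, 2_128_128, 2_654_320,
          3_244_169, 4_511_547, 5_296_164]

# Unspent balance at year end = cumulative award - cumulative spending.
balance = [a - s for a, s in zip(accumulate(award), accumulate(actual))]

for year, left in zip(range(1999, 2007), balance):
    print(year, f"{left:,}")
```

The balance peaks at the end of the original award period and reaches zero exactly at the end of 2006, when the award was fully spent.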

The device manufacturer (Abbott Vascular) provided $5,469,097 in additional funding from 2003 through 2006 to support the regulatory requirements of the IDE, which were not part of the grant or its scope of work: $2,780,920 for site monitoring, $1,189,010 for clinical research personnel, $335,641 for FDA regulatory consultant services, $345,025 for site initiation and physician training, $518,068 for research coordinator training meetings, $40,000 for other costs, and $260,433 for indirect costs. The academic grant holder (UMDNJ) provided $313,200 from 2003 to 2006 ($78,300 annually) for a full-time legal professional and reduced its indirect cost rate from 25% to 5% for disbursements provided by the device manufacturer.
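The industry and institutional contributions can be checked with a few lines of arithmetic. The line items sum to the reported $5,469,097, and the $260,433 indirect line equals 5% of the other items, consistent with the reduced rate. The 25% comparison below is a hypothetical, assuming the standard rate would have applied to the same direct base.

```python
# Abbott Vascular support for IDE requirements, 2003-2006 (US dollars)
abbott_direct = {
    "site monitoring": 2_780_920,
    "clinical research personnel": 1_189_010,
    "FDA regulatory consultant services": 335_641,
    "site initiation and physician training": 345_025,
    "research coordinator training meetings": 518_068,
    "other costs": 40_000,
}
indirect = 260_433

direct = sum(abbott_direct.values())
total = direct + indirect
print(f"{total:,}")  # 5,469,097

# Indirect at the reduced 5% rate versus a hypothetical 25% rate on the same base.
print(direct * 5 // 100, direct * 25 // 100)  # 260433 1302166

# UMDNJ legal support: $78,300 per year for 2003-2006 inclusive.
umdnj_legal = 78_300 * len(range(2003, 2007))
print(f"{umdnj_legal:,}")  # 313,200
```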

Discussion

In CREST, changing from a fixed-cost to a variable-cost model allowed the project period to be extended by three years, tripled the number of clinical sites, and markedly accelerated patient enrollment. The fee-for-performance principle was implemented by reimbursing sites for completed case report forms (CRFs) resulting from actual patient visits and evaluations. CRF reimbursement rewarded centers during periods of activity and conserved funds during periods of inactivity (e.g., when recruitment was temporarily stopped for regulatory review of a device modification [8]).

Per-case payments, tied to the number of cases needed to reach target enrollment, allowed a substantially larger number of clinical sites to be activated without increasing spending from the award. This expansion of sites accelerated enrollment at a substantially lower per-patient cost, and the lower per-patient cost provided three additional productive years of training, site activation, enrollment, and patient follow-up (Table 1). By the end of 2006, enrollment and site activation were sufficient to convince NINDS peer reviewers to approve continued funding from 2007 through 2011.

Financial management and budgeting alone cannot explain the success or failure of a large, complex multicenter clinical trial. For CREST, the costs of the regulatory training and oversight required by the IDE were not covered by the grant. The device manufacturer (Abbott Vascular) contributed $5,469,097; direct costs of site monitoring alone were $2,780,920, or $1,115 per patient. The academic grant holder provided $313,200 and reduced indirect charges from 25% to 5% for payments from the device manufacturer.

Conclusions

Applying business methods and practices to clinical trial management can improve efficiency. Performance-based budgets that reimburse sites for data submitted conserve funding during slow recruitment, promote visit and data compliance, and allow for site expansion at modest cost. Additional support from industry for unbudgeted resources enhances productivity. Costs of large-scale clinical trials can thus be reduced through effective management without compromising scientific integrity.

Acknowledgments

The study was funded by the National Institute of Neurological Disorders and Stroke of the National Institutes of Health (NS038384) with supplemental funding provided by Abbott Vascular (formerly Guidant).

Footnotes

ClinicalTrials.gov Identifier: NCT00004732

Conflicts of Interest: None declared.

References

1. Hilbrich L, Sleight P. Progress and problems for randomized clinical trials: from streptomycin to the era of megatrials. Eur Heart J. 2006;27:2158–64. doi: 10.1093/eurheartj/ehl152.

2. Marler JR. NINDS clinical trials in stroke: lessons learned and future directions. Stroke. 2007;38:3302–7. doi: 10.1161/STROKEAHA.107.485144.

3. McDonald AM, Treweek S, Shakur H, et al. Using a business model approach and marketing techniques for recruitment to clinical trials. Trials. 2011;12:74. doi: 10.1186/1745-6215-12-74.

4. Kovacevic M, Odeleye OE, Sietsema WK, Schwarz KM, Torchio CR. Financial concepts to conducting and managing clinical trials within budget. Drug Information Journal. 2001;35:1031–8.

5. Sheffet AJ, Roubin G, Howard G, et al. Design of the Carotid Revascularization Endarterectomy vs. Stenting Trial (CREST). Int J Stroke. 2010;5:40–6. doi: 10.1111/j.1747-4949.2009.00405.x.

6. Brott TG, Hobson RW 2nd, Howard G, et al. Stenting versus endarterectomy for treatment of carotid-artery stenosis. N Engl J Med. 2010;363:11–23. doi: 10.1056/NEJMoa0912321.

7. Longbottom ME, Roberts JN, Tom M, et al. Interventions to increase enrollment in a large multicenter phase 3 trial of carotid stenting vs. endarterectomy. Int J Stroke. 2012;7:447–53. doi: 10.1111/j.1747-4949.2012.00833.x.

8. Hobson RW 2nd, Howard VJ, Brott TG, et al. Organizing the Carotid Revascularization Endarterectomy versus Stenting Trial (CREST): National Institutes of Health, Health Care Financing Administration, and industry funding. Curr Control Trials Cardiovasc Med. 2001;2:160–4. doi: 10.1186/cvm-2-4-160.