Abstract

The catalytic potential of community-based organizations (CBOs) to promote health, prevent disease, and address racial, ethnic, and socioeconomic disparities in local communities is well recognized. However, many CBOs, particularly small- to medium-size organizations, lack the capacity to plan and implement programs and to evaluate their success. Moreover, little assistance has been provided to enhance their capacity, and evidence on the effectiveness of technical assistance for capacity building is likewise limited. We describe a unique private–academic partnership that simultaneously conducted program evaluation and addressed the capacity needs of 24 CBOs funded by the Pfizer Foundation Southern HIV/AIDS Prevention Initiative. Assessments of key program staff members at 12 and 18 months after the initial cross-site program assessment survey indicated significant improvement in the CBOs’ knowledge, skills, and abilities and a substantial reduction in their technical assistance needs for HIV/AIDS prevention. Full participation of CBOs in technical assistance and a concurrent empowerment evaluation framework were necessary to enhance prevention capacity.

Keywords: Capacity building, Technical assistance, Prevention, HIV/AIDS, Community-based organizations, Planning, Evaluation

1. Introduction

Perhaps more than any other public health threat, the HIV/AIDS pandemic brought to the fore the essential participatory role of community-based organizations (CBOs) in understanding the social and behavioral determinants of health and in guiding local disease prevention activities. The recruitment of CBOs into prevention efforts by academia and health agencies is also a professional acknowledgement of the limited understanding of local communities’ sociocultural and contextual factors and an honest recognition of the CBOs’ expertise in this regard. Today, CBOs are considered a basic component of the public health infrastructure and a cornerstone of strategies to eliminate racial, ethnic, and socioeconomic health disparities.

Significant funding has been provided directly to CBOs or to CBOs and their academic or health and human services agency partners to enhance health education, promote health, improve health status and healthcare quality, and prevent disease in communities that are at greater risk for HIV/AIDS, obesity, chronic conditions, infant mortality, under-immunization, and other conditions. The Centers for Disease Control and Prevention (CDC) has been instrumental in funding and supporting community-based interventions to (1) assess the primary health issues in local communities, (2) develop measurable objectives to assess progress in addressing these health issues, (3) select effective interventions to help achieve these objectives, (4) implement the selected interventions, and (5) evaluate the selected interventions (CDC community tool box). Furthermore, the CDC HIV/AIDS Prevention Initiatives position CBOs as a core component of this national strategy and focus on strengthening their capacities to monitor the HIV/AIDS epidemic and plan, implement, and evaluate their programs (Centers for Disease Control and Prevention, 2001b).

While the catalytic potential of CBOs to promote and sustain health, prevent disease, and address health disparities in local communities is well recognized, the measurable effectiveness of CBOs has yet to be realized. Many have not yet moved beyond a foundational, albeit very important, infrastructure of coalition building, general planning, and limited action plan development, from which reductions in disease risk and disparities are expected in the future (Compton, Glover-Kudon, Smith, & Avery, 2002; Love, 1991; Stockdill, Baizerman, & Compton, 2002). Many CBOs, particularly small- to medium-size organizations, lack the capacity to plan, implement, and evaluate their successes (Richter et al., 2000). Thus, in an era of increasing fiscal accountability and evidence-based practice, CBOs may be perceived as having limited effectiveness or an effectiveness that cannot yet be determined (Centers for Disease Control and Prevention, 2001a,b; Richter et al., 2000; Rugg et al., 1999). Moreover, little assistance beyond the CDC’s initiatives has been provided to CBOs to enhance their capacity to implement effective and sustainable HIV/AIDS prevention programs (Cheadle et al., 2002; Gibbs, Napp, Jolly, Westover, & Uhl, 2002; Napp, Gibbs, Jolly, Westover, & Uhl, 2002; Richter et al., 2000). Furthermore, information on the impact of technical assistance to enhance the capacity of CBOs is likewise limited (Richter et al., 2007; Chinman et al., 2005; Sobeck & Agius, 2007).

While CBOs’ capacity may be defined here as the ability to plan and deliver HIV/AIDS education and prevention programs (Hawe, Noort, King, & Jordens, 1997; Meissner, Bergner, & Marconi, 1992; Roper, Baker, Dyal, & Nicola, 1992; Schwartz et al., 1993), it also involves the knowledge, skills, and willingness of individuals, the funding agency, and, in this case, the academic evaluation team to collaborate in establishing common goals, articulating technical assistance needs, and developing agreed-upon, appropriate responses to maximize program success (Biegel, 1984; Eng & Parker, 1994; Raczynski et al., 2001; Thomas, Israel, & Steuart, 1984). In this article, we describe the impact of a unique private–academic partnership that simultaneously conducted program evaluation and addressed the capacity needs of CBOs to plan and implement HIV/AIDS prevention interventions. From 2004 to 2006, the Pfizer Foundation funded 24 previously established small to mid-size CBOs to provide HIV/AIDS education and prevention programs to multicultural, rural, and urban communities throughout 9 states of the southern region of the United States (Alabama, Florida, Georgia, Louisiana, Mississippi, North Carolina, South Carolina, Tennessee, and Texas) (Mayberry et al., 2008). The Pfizer Foundation contracted with the Morehouse School of Medicine Prevention Research Center (MSM PRC) in March 2004 to provide overall evaluation of the “Pfizer Foundation Southern HIV/AIDS Prevention Initiative” and to provide technical assistance to enhance the CBOs’ capacity to successfully impact the HIV/AIDS epidemic in local communities and across the entire high-prevalence southern region.

As part of its overall evaluation, MSM PRC developed and conducted an initial cross-site program assessment survey (C-PAS) of the CBOs to determine their knowledge, skills, and abilities for planning and conducting community interventions and the technical assistance they needed to succeed in these efforts (Mayberry et al., 2008). The C-PAS was administered at two subsequent time points to determine changes in knowledge, abilities, and technical assistance needs resulting from our capacity-building efforts with the funded CBOs. Although there is no prevailing theory of technical assistance for capacity building (Sobeck & Agius, 2007), we actively engaged CBOs’ executives and program staff members in formal didactic presentations, in-person training workshops, teleconferences, and web conferences (all with feedback and discussion sessions) designed to enhance CBOs’ abilities to plan, implement, and evaluate their community-level interventions to prevent HIV/AIDS. Here we report the enhanced capacity for HIV/AIDS interventions of the CBOs participating in this 3-year Southern HIV/AIDS Initiative, as captured by the C-PAS.

2. Methods

2.1. Description of the initiative CBOs

We have previously described the Pfizer Foundation Southern HIV/AIDS Initiative, its participating CBOs, the methods and procedures of C-PAS, and the results of the initial C-PAS (Mayberry et al., 2008). In brief, the initiative originally funded 24 CBOs in 9 southern states to provide effective HIV education and prevention programs in multicultural, rural, and urban communities for the calendar years 2004 through 2006. Twenty-three of the organizations re-applied and obtained continuation funding from Pfizer for 2005, and 22 received continuation funding for 2006.

The CBOs were small to mid-size organizations, reporting as few as two full-time paid program staff members and as many as 40 paid and volunteer staff members. All CBOs had annual budgets under $1 million. Based on the initial C-PAS, the vast majority of the CBOs had strong community bases of volunteers and established collaborations and partnerships with other CBOs (Mayberry et al., 2008). The CBOs also had established relationships with local clinics and health facilities, colleges and universities, and local churches. Most of the CBOs focused their interventions on a specific racial/ethnic minority group, although services were also provided to other ethnic groups in the community. Through the initiative, CBOs served peer educators, youth community organizations, high schools and middle schools, substance abusers, and incarcerated individuals. The most common types of interventions or services provided were health communication and public information, group-level interventions, outreach, testing, and counseling and referrals (Mayberry et al., 2008).

2.2. Data collection: C-PAS

The first C-PAS was conducted among the grantees in February–March 2005, prior to MSM PRC evaluation capacity-building activities, as a baseline assessment of the CBOs’ capacity for planning, implementing, and evaluating community-based HIV/AIDS education and prevention interventions. The second C-PAS was conducted in February–March 2006, prior to Year 3 evaluation capacity-building activities. The final C-PAS was conducted in September–October 2006 at the conclusion of Year 3 activities. The second and third C-PAS administrations were conducted to determine whether knowledge, skills, and abilities improved over time.

The self-administered C-PAS questionnaire was mailed through the U.S. Postal Service and sent electronically to the executive director and a primary program staff member of each of the CBOs. The executive director was involved in the conceptualization of the program and had ultimate responsibility for its success. The program staff member was responsible for day-to-day program activities. The questionnaire was sent again approximately 2 weeks later. Two final follow-up phone calls were made and e-mails sent to each key staff member as a reminder to complete and return the questionnaire. The survey response rate was 84.8% for the first C-PAS, 78.3% for the second C-PAS, and 93.2% for the third C-PAS.
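The reported response rates can be reconciled with the respondent counts in Tables 2 and 3 under an assumption the text does not state: that two staff members (the executive director and the primary program staff member) were surveyed per CBO, from 23 CBOs at the first two administrations and 22 at the third. A minimal arithmetic check under that assumption:

```python
# ASSUMED denominators (not stated in the text): two surveyed staff members per
# CBO, with 23, 23, and 22 CBOs surveyed at the three C-PAS administrations.
STAFF_PER_CBO = 2
ADMINISTRATIONS = {
    # administration: (respondents reported in Tables 2 and 3, assumed CBOs surveyed)
    "1st C-PAS": (39, 23),
    "2nd C-PAS": (36, 23),
    "3rd C-PAS": (41, 22),
}


def response_rate(respondents: int, cbos: int, staff_per_cbo: int = STAFF_PER_CBO) -> float:
    """Percentage of surveyed staff members who returned the questionnaire."""
    return 100.0 * respondents / (cbos * staff_per_cbo)


for name, (respondents, cbos) in ADMINISTRATIONS.items():
    print(f"{name}: {response_rate(respondents, cbos):.1f}%")
```

Under this assumption the computed rates match the reported 84.8%, 78.3%, and 93.2%; if the actual denominators differed, only the input counts would change.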

The C-PAS questionnaire was designed to be completed in 15–20 min. It captured key personnel’s self-perceived knowledge and skills relating to the key steps in developing, implementing, and evaluating a community-based intervention, as well as the organization’s ability to perform essential functions such as developing community relationships, educating and training program staff and participants, collecting appropriate data, analyzing collected data, and conducting specific program activities (e.g., HIV/AIDS counseling, testing, and referrals). The questionnaire was adapted from an instrument used successfully in substance abuse intervention training, pretested among community coalition board members, and reviewed and modified after the initial C-PAS.

Here, knowledge refers to an individual’s understanding of the queried activities, and skills refer to an individual’s proficiency in performing them. Knowledge and skills were measured on a five-point Likert scale for each of six key constructs of community program planning and implementation: problem identification, needs assessment, developing goals and objectives, gathering program input and feedback, prevention implementation planning and implementation, and evaluation of community intervention. The C-PAS ability items (also on a five-point Likert scale) asked staff members to assess their organization’s ability to perform each of 20 activities of program planning and implementation (Mayberry et al., 2008). Technical assistance needs were assessed as yes/no variables for logic model development, data collection tool development, data management, protocol development, qualitative and quantitative methods, and evaluation.
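Table 3 reports knowledge and skill summary scores on the same 0–4 range as the individual items, which suggests, though the text does not confirm it, that each summary score is the mean of the six construct items. The sketch below assumes that scoring rule; the item keys are hypothetical labels for the six constructs, and the second function gives the proportion used for the yes/no technical assistance items.

```python
from statistics import mean

# Hypothetical item keys for the six constructs named in the text; each item is
# scored 0 (none) to 4 (extensive).
CONSTRUCTS = (
    "problem_identification",
    "needs_assessment",
    "goals_and_objectives",
    "program_input_and_feedback",
    "prevention_implementation",
    "evaluation",
)


def composite_score(responses: dict[str, int]) -> float:
    """Summary score for one respondent, ASSUMED to be the mean of the six items."""
    missing = [c for c in CONSTRUCTS if c not in responses]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for c in CONSTRUCTS:
        if not 0 <= responses[c] <= 4:
            raise ValueError(f"{c} must be between 0 and 4")
    return float(mean(responses[c] for c in CONSTRUCTS))


def ta_need_proportion(answers: list[bool]) -> float:
    """Share of respondents answering 'yes' to one technical assistance need."""
    if not answers:
        raise ValueError("no responses")
    return sum(answers) / len(answers)


# Hypothetical respondent: "a lot" (3) on five constructs, "some" (2) on one.
example = dict(zip(CONSTRUCTS, [3, 2, 3, 3, 3, 3]))
print(round(composite_score(example), 2))
```

A mean keeps the composite on the item scale, so a hypothetical respondent answering “a lot” on five items and “some” on one scores 17/6, or about 2.83.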

2.3. Technical assistance for capacity building

A thorough review of the grantees’ first-year proposals, initial conference calls, and the initial C-PAS provided a basis for planning and implementing strategies to meet the technical assistance needs of the CBOs. Although technical assistance encompassed more than evaluation capacity, our ultimate goal was to enhance the CBOs’ abilities to articulate the successes and accomplishments of their proposed interventions. We therefore labeled all technical assistance as evaluation capacity building, encompassing the knowledge, skills, and abilities measures of program intervention development, implementation, and evaluation captured by the C-PAS questionnaire. Technical assistance was provided by the MSM PRC team (3.3 full-time equivalents), which included a team manager (with academic training in evaluation), a lead evaluator (with academic training in epidemiology and evaluation), a psychologist, a mid-level epidemiologist, a graduate research assistant, and an administrative assistant. Technical assistance used a participatory evaluation framework (Cheadle et al., 2002; Miller, Kobayashi, & Noble, 2006), which included formal didactic presentations, in-person training workshops, teleconferences, site visits, and web conferences designed to enhance CBOs’ abilities to plan and implement their community-level interventions to prevent HIV/AIDS and to participate fully as key partners in the evaluation. All sessions were planned to be interactive, with scheduled feedback and discussion to maximize input from CBO participants and to ensure that they had opportunities to ask questions, provide comments and anecdotes of experiences, and help set priorities for upcoming technical assistance sessions. Clarifying measurable objectives and developing logic models became core to the initial technical assistance provided. Table 1 contains the schedule and content description of the technical assistance provided.

Table 1.

Schedule and content description of technical assistance provided.

| Date | Activity and description |
|---|---|
| June 2, 2004 | Pfizer Foundation Southern HIV/AIDS Prevention Initiative Orientation to Evaluation: an introduction to evaluation philosophy and to the methods and procedures of community intervention planning, implementation, and evaluation. |
| Fall 2004 | Cross-site Evaluation Teleconferences and Site Visits: a teleconference with each grantee to gain better insight into the background of its program, the current status of intervention development, and future plans. Subsequent site visits to each organization by a member of the evaluation team and a member from Pfizer provided additional insight into the organizations’ environments and their HIV/AIDS and other programs. |
| February–March 2005 | 1st cross-site program assessment survey (C-PAS) conducted. |
| May 11–12, 2005 | Training Workshop: conducted in response to evaluation challenges and capacity-building needs identified during project year 2004, including data collection methods, tools, and analysis; database development; and logic model review. |
| April–August 2005 | Training Teleconferences: a total of 12 teleconferences designed and conducted to facilitate evaluation capacity building through (a) reinforcement of intervention skills attained, (b) provision of an outlet for sharing evaluation resources, and (c) discussion of real-time evaluation case studies that could be applied to individual program activities. |
| February–March 2006 | 2nd C-PAS conducted. |
| June 14–16, 2006 | Training Conference: in-depth training in areas identified through C-PAS findings, capacity-building activities, and feedback from grantees, including developing survey questions and focus group guides; qualitative and quantitative data entry, management, and analysis; and use of collected data. |
| March and August 2006 | Training Web Conferences: two capacity-building web conferences developed and facilitated in Year 3 to prepare grantees for sustained programmatic and evaluation activities beyond the last year of the initiative. Each web conference was offered twice, on separate days, to allow small-group interaction among 9–11 grantees and to accommodate scheduling needs. |
| August 9–10, 2006 | Program Assessment Teleconferences: one-on-one, semi-structured teleconferences to gain better insight into grantees’ technical assistance needs for completing the 2006 Program Assessment and into evaluation challenges remaining at the conclusion of the initiative. |
| September–October 2006 | 3rd C-PAS conducted. |

2.4. Data analyses

Descriptive analyses of the first C-PAS, conducted during February and March of 2005, have been previously reported (Mayberry et al., 2008). Here we assess changes in CBOs’ capacities for planning, implementing, and evaluating community-based HIV/AIDS education and prevention interventions at the second C-PAS, conducted a year later during February and March of 2006, and the third and final C-PAS, conducted during September and October of 2006, i.e., at the conclusion of Year 3 activities. Initial analyses indicated no statistically significant differences between executive directors and primary program staff members in knowledge and skill scores or technical assistance needs. This pattern held in both the second and third C-PAS administrations, so the two groups were combined in all subsequent analyses.

For each C-PAS, descriptive analyses were used to evaluate each survey response variable (Pagano & Gauvreau, 2000; Zar, 1999). Ordinal variables were expressed as means and standard deviations, or as medians and interquartile ranges (IQRs) when C-PAS data were not normally distributed or did not have the same distributions across the time periods being compared. Categorical variables were expressed as proportions. Analysis of variance (ANOVA) was used to determine whether mean values differed across the 3 administrations of C-PAS (Zar, 1999). When scores were not normally distributed, we used the median test or the Kruskal–Wallis rank test to assess whether knowledge, skill, and ability scores differed significantly over the 3 C-PAS administrations (Pagano & Gauvreau, 2000). Post hoc analyses of specific item score comparisons used non-parametric tests as appropriate. The chi-square test for homogeneity of three proportions was conducted on each dichotomous technical assistance need variable (Zar, 1999).
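With three administrations, the Kruskal–Wallis statistic H has a convenient property: its large-sample chi-square reference distribution has 2 degrees of freedom, whose upper-tail probability is exactly exp(−H/2). The sketch below implements the tie-corrected statistic in plain Python under that approximation. The function names are illustrative and the scores in the usage line are made up, not C-PAS data; in practice `scipy.stats.kruskal` computes the same tie-corrected statistic for any number of groups.

```python
import math
from itertools import chain


def average_ranks(values):
    """1-based ranks; tied values share the mean of the positions they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks


def kruskal_wallis_3(g1, g2, g3):
    """Tie-corrected Kruskal-Wallis H and its chi-square (2 df) p-value.

    Restricted to exactly three groups because the 2-df chi-square upper tail
    has the closed form exp(-H / 2).
    """
    groups = [list(g1), list(g2), list(g3)]
    pooled = list(chain.from_iterable(groups))
    n = len(pooled)
    ranks = average_ranks(pooled)
    # Sum of (rank sum)^2 / group size, walking the pooled ranks group by group.
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * weighted - 3 * (n + 1)
    # Correct for ties: divide by 1 - sum(t^3 - t) / (n^3 - n).
    tie_sizes = {}
    for v in pooled:
        tie_sizes[v] = tie_sizes.get(v, 0) + 1
    ties = sum(t ** 3 - t for t in tie_sizes.values())
    h /= 1 - ties / (n ** 3 - n)
    return h, math.exp(-h / 2)


# Illustrative, made-up composite scores for three administrations.
h, p = kruskal_wallis_3([2.5, 2.8, 3.0, 2.2], [2.8, 3.0, 3.2, 2.7], [3.2, 3.3, 3.0, 3.5])
print(f"H = {h:.2f}, p = {p:.3f}")
```

The tie correction matters for Likert-derived scores, where many respondents share the same value; the chi-square approximation is also only reasonable when each administration has a moderate number of respondents, as here.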

3. Results

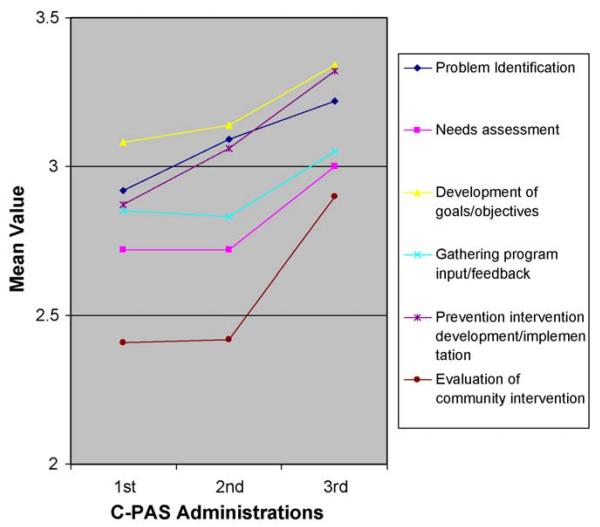

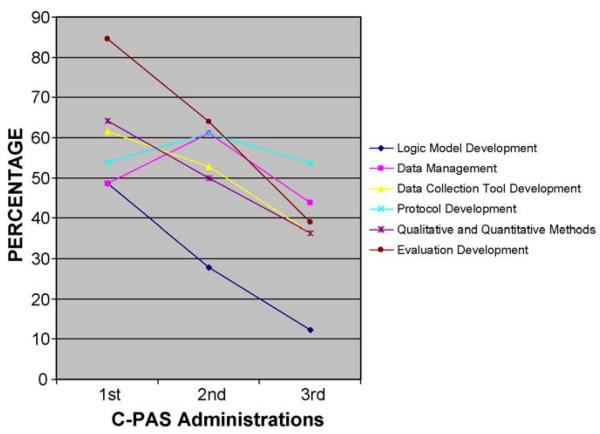

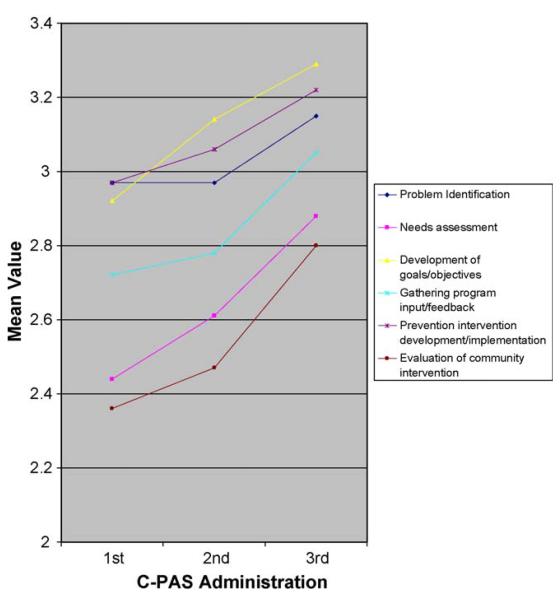

The upward trends in key program staff members’ self-assessed knowledge and skills to plan, implement, and evaluate community-based programs, as captured by the three administrations of C-PAS, are depicted in Figs. 1 and 2. Fig. 1 indicates a modest but steady increase over time in knowledge scores for problem identification, development of goals and objectives, and development of prevention interventions, that is, areas in which staff indicated knowing “a lot” on a five-point scale ranging from 0 (none) to 4 (extensive). Indications of knowledge gain were more dramatic between the second and third C-PAS for gathering program input and feedback from community participants, needs assessment, and evaluation of community interventions, the areas with initially lower knowledge scores (Mayberry et al., 2008). A gradual upward trend in the program staff’s self-assessed skills to perform each of the six steps in community intervention planning and implementation was also observed (Fig. 2). Regarding organizational abilities, program staff indicated a consistent, although mostly marginal, increasing trend in their CBOs’ abilities to plan and implement community interventions in 12 of 20 specific activities (Table 2). These activities included recruiting community volunteers; developing intervention-specific logic models; assessing the knowledge, behavior, and attitudes of program participants; entering collected data into the computer; analyzing collected data; training peer mentors; and developing educational brochures and pamphlets. Statistically significant trends in ability scores, however, were observed only for developing data collection tools (p = 0.002), analyzing collected data (p = 0.011), and training public speakers (p = 0.017).

Fig. 1.

Cross-site program assessment survey (C-PAS) knowledge level* for steps in community intervention planning and implementation. *Item mean score on a five-point Likert scale of 0 (none), 1 (a little), 2 (some), 3 (a lot), 4 (extensive). †Significant trend in knowledge score for problem identification, p = 0.047.

Fig. 2.

Cross-site program assessment survey (C-PAS) skills level* for steps in community intervention planning and implementation. *Item mean score on a five-point Likert scale of 0 (none), 1 (a little), 2 (some), 3 (a lot), 4 (extensive). †Significant trend in skill score for developing goals and objectives, p = 0.045.

Fig. 3.

Technical assistance needs identified by initiative’s community-based organizations. *Significant trend in technical assistance needs in logic model development, p = 0.002. †Significant trend in technical assistance needs in qualitative and quantitative methods, p = 0.048. ‡Significant trend in technical assistance needs in evaluation development, p = 0.004.

Table 2.

Cross-site program assessment survey (C-PAS) responsesᵃ of program staff’s perception of their community-based organization’s ability to plan and implement community interventions.

| Activity | 1st administration (n = 39), mean (S.D.)ᵇ | 2nd administration (n = 36), mean (S.D.) | 3rd administration (n = 41), mean (S.D.) |
|---|---|---|---|
| A. Develop community relationships and coalition | 4.46 (0.68) | 4.56 (0.56) | 4.39 (0.70) |
| B. Identify appropriate resources (financial, volunteer, etc.) to plan and carry out community intervention programs | 4.08 (0.96) | 4.06 (0.63) | 4.07 (0.69) |
| C. Recruit community volunteers to plan and participate in interventions | 3.69 (0.80) | 3.83 (0.81) | 3.95 (0.74) |
| D. Develop intervention-specific logic models | 3.49 (1.07) | 3.94 (0.82) | 4.17 (0.70) |
| E. Assess the knowledge, behavior, and attitudes of program participants | 3.92 (0.93) | 4.08 (0.87) | 4.29 (0.76) |
| F. Develop data collection tools | 3.51 (0.91) | 3.64 (0.96) | **4.12 (0.75)**ᶜ |
| G. Conduct interviews | 4.08 (0.74) | 4.19 (0.89) | 4.24 (0.83) |
| H. Conduct focus groups | 3.97 (0.93) | 4.08 (0.97) | 4.10 (0.89) |
| I. Collect data | 3.87 (0.86) | 4.28 (0.70) | 4.24 (0.73) |
| J. Enter collected data into the computer | 3.85 (0.93) | 3.97 (0.91) | 4.98 (4.54) |
| K. Analyze collected data | 3.36 (1.04) | 3.72 (0.97) | **3.93 (0.88)**ᶜ |
| L. Train peer educators | 4.26 (0.97) | 4.56 (0.65) | 4.49 (0.64) |
| M. Train peer mentors | 3.74 (1.25) | 4.28 (0.78) | 4.29 (0.68) |
| N. Train public speakers | 3.49 (1.07) | 3.94 (0.89) | **4.05 (0.95)**ᶜ |
| O. Develop educational brochures, pamphlets | 3.64 (1.09) | 4.08 (0.77) | 4.32 (0.72) |
| P. Provide HIV/AIDS counseling | 4.36 (1.01) | 4.31 (1.19) | 4.27 (1.32) |
| Q. Provide HIV/AIDS testing | 3.77 (1.77) | 3.50 (1.92) | 3.85 (1.74) |
| R. Provide HIV referrals | 4.67 (0.90) | 4.72 (0.57) | 4.71 (0.87) |
| S. Develop public service announcements (PSAs) | 3.51 (1.34) | 3.78 (1.25) | 3.83 (1.02) |
| T. Develop newsletters | 3.87 (1.06) | 4.00 (1.15) | 3.98 (1.17) |

Bold indicates statistical significance.

ᵃ On a Likert scale of 1 (low) to 5 (high).

ᵇ S.D. = standard deviation.

ᶜ Statistically significant trend in higher ability scores for developing data collection tools (p = 0.002), analyzing collected data (p = 0.011), and training public speakers (p = 0.017). Post hoc tests for each variable indicated that the score at the third C-PAS was significantly higher than the score at the first C-PAS (p < 0.05).

Furthermore, key program staff members were specifically asked to identify their technical assistance needs at the initial C-PAS and at the two subsequent C-PAS administrations. Fig. 3 indicates that the CBOs’ technical assistance needs trended downward, particularly for logic model development, qualitative and quantitative methods, and evaluation development. According to key program staff responses, technical assistance needs for logic model development decreased substantially, from 48.7% at the initial C-PAS to 27.8% at the second and 12.2% at the third C-PAS (p = 0.002). Technical assistance needs for qualitative and quantitative methods also decreased, from 64.1% at the first C-PAS to 50.0% and 36.3% at the second and third C-PAS, respectively (p = 0.048). The need for technical assistance in evaluation development decreased from 84.6% at the initial C-PAS to 63.9% at the second and 39.0% at the third C-PAS (p < 0.001).
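The chi-square test for homogeneity of three proportions can be checked against the logic model result. The counts below are reconstructed by rounding each reported percentage times the administration size (48.7% of 39 ≈ 19, 27.8% of 36 ≈ 10, 12.2% of 41 ≈ 5), so they approximate the underlying data rather than reproduce it. With three administrations and a yes/no response the test has 2 degrees of freedom, so the p-value is exactly exp(−χ²/2); the function name is illustrative.

```python
import math


def chi_square_homogeneity_3x2(yes_counts, totals):
    """Pearson chi-square test that a 'yes' proportion is equal in three groups.

    A 3 x 2 table has (3 - 1) * (2 - 1) = 2 degrees of freedom, and the
    chi-square survival function with 2 df is exactly exp(-x / 2).
    """
    if len(yes_counts) != 3 or len(totals) != 3:
        raise ValueError("expected exactly three groups")
    n = sum(totals)
    total_yes = sum(yes_counts)
    total_no = n - total_yes
    stat = 0.0
    for yes, size in zip(yes_counts, totals):
        no = size - yes
        # Expected counts under a common 'yes' proportion across groups.
        exp_yes = size * total_yes / n
        exp_no = size * total_no / n
        stat += (yes - exp_yes) ** 2 / exp_yes + (no - exp_no) ** 2 / exp_no
    return stat, math.exp(-stat / 2)


# Logic model development: counts reconstructed (rounded) from the reported
# percentages and the sample sizes of the three administrations.
stat, p = chi_square_homogeneity_3x2([19, 10, 5], [39, 36, 41])
print(f"chi-square = {stat:.2f}, p = {p:.4f}")
```

With these reconstructed counts the statistic is about 12.9 with p ≈ 0.0016, consistent with the reported p = 0.002.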

We further assessed these results using composite scores for knowledge, skills, and abilities across each administration of C-PAS, and we assessed the statistical significance of trends in the individual items that made up each composite score. The composite scores for knowledge and skills, i.e., the respective summary scores for all items, or steps, in community program development, are shown in Table 3. The C-PAS data were not normally distributed but had the same distributions for each C-PAS administration, so we used the Kruskal–Wallis rank test to determine whether the C-PAS median scores differed significantly. The test indicated a significant increase in median knowledge scores at the second and final C-PAS compared with the initial C-PAS (p = 0.022). Although the results suggested an increase in community program development skills, the Kruskal–Wallis rank test indicated no statistically significant difference in composite median skill scores across the 3 C-PAS administrations (p = 0.057; Table 3).

Table 3.

Cross-site program assessment survey (C-PAS) summary scores of organizational skills, knowledge, and abilities.

| C-PAS administration | Skillsᵃ, median (IQR) | Knowledgeᵃ, median (IQR) | Abilitiesᵇ, mean (S.D.) |
|---|---|---|---|
| 1st (n = 39) | 2.83 (0.67) | 2.83 (1.33) | 3.88 (0.42) |
| 2nd (n = 36) | 3.00 (0.75) | 3.00 (0.66) | 4.07 (0.45) |
| 3rd (n = 41) | 3.16 (0.83)ᶜ | 3.17 (0.34)ᵈ | 4.21 (0.46)ᵉ |

IQR = interquartile range. S.D. = standard deviation.

ᵃ Skills and knowledge based on a five-point Likert scale of 0 (none) to 4 (extensive); summary score of six items.

ᵇ Abilities based on a five-point Likert scale of 1 (low) to 5 (high); summary score of 20 items.

ᶜ Overall trend in higher summary skill scores is not statistically significant, p = 0.057.

ᵈ Overall trend in higher summary knowledge scores is statistically significant, p = 0.022.

ᵉ Overall trend in higher summary ability scores is statistically significant, p = 0.004.

In assessing each individual item in the knowledge composite score, a statistically significant increase in knowledge was observed for problem identification at the second and third C-PAS compared with the initial C-PAS (p = 0.047). More than a third of the respondents (39.0%) scored higher than the median score at the third C-PAS, versus 15.4% at the initial C-PAS (p = 0.03); median scores did not differ significantly between the first and second C-PAS or between the second and third C-PAS. Median skill scores also increased significantly for developing goals and objectives at the second and third C-PAS (p = 0.045). Further analyses indicated that 48.8% of the respondents scored higher than the median score at the third C-PAS, versus 21.1% at the first C-PAS (p = 0.017).

In assessing the composite score for CBOs’ organizational abilities, we first evaluated the data for normality and homoscedasticity; neither assumption was violated. We therefore used ANOVA to determine whether composite mean scores differed significantly among the three administrations of C-PAS. The mean scores for the first, second, and third C-PAS differed significantly, indicating that the CBOs’ overall abilities to conduct community interventions increased over the project period (p = 0.004). Post hoc pairwise comparisons with Bonferroni adjustment indicated that the mean composite score at the third C-PAS was significantly higher than at the initial C-PAS (4.21 versus 3.88, p = 0.003). Neither the difference between the first and second C-PAS nor the difference between the second and third C-PAS was statistically significant.
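Because Table 3 reports the abilities composite as means and standard deviations, the omnibus F test can be approximately recomputed from those summaries. The sketch below pools the within-group variance from the reported S.D.s and uses the exact tail probability of an F distribution with 2 numerator degrees of freedom. The pairwise step substitutes a normal approximation for the t distribution (reasonable with 113 within-group degrees of freedom) and a Bonferroni multiplier of 3; it illustrates the calculation and is not the authors’ software. Because the table values are rounded, the results should land near, not exactly on, the reported p = 0.004 and p = 0.003.

```python
import math


def one_way_anova_from_summary(means, sds, ns):
    """One-way ANOVA from group means, standard deviations, and sizes.

    Returns (F, p, MS_within, df_within). The p-value uses the exact tail of an
    F(2, d2) distribution, P(F > f) = (1 + 2f / d2) ** (-d2 / 2), so the
    function is restricted to exactly three groups.
    """
    if not len(means) == len(sds) == len(ns) == 3:
        raise ValueError("expected exactly three groups")
    n_total = sum(ns)
    grand_mean = sum(m * n for m, n in zip(means, ns)) / n_total
    ss_between = sum(n * (m - grand_mean) ** 2 for m, n in zip(means, ns))
    # Pooled within-group sum of squares recovered from each group's S.D.
    ss_within = sum((n - 1) * s ** 2 for s, n in zip(sds, ns))
    df_between, df_within = 2, n_total - 3
    ms_within = ss_within / df_within
    f = (ss_between / df_between) / ms_within
    p = (1 + 2 * f / df_within) ** (-df_within / 2)
    return f, p, ms_within, df_within


def bonferroni_pairwise(mean_a, n_a, mean_b, n_b, ms_within, comparisons=3):
    """Pairwise comparison using the pooled ANOVA variance.

    Approximates the t reference distribution with a standard normal, then
    applies a Bonferroni multiplier (capped at 1).
    """
    se = math.sqrt(ms_within * (1 / n_a + 1 / n_b))
    z = (mean_a - mean_b) / se
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, min(1.0, comparisons * p_two_sided)


# Abilities composite summaries from Table 3 (rounded to two decimals there).
f, p, ms_within, df_within = one_way_anova_from_summary(
    [3.88, 4.07, 4.21], [0.42, 0.45, 0.46], [39, 36, 41]
)
z, p_adj = bonferroni_pairwise(4.21, 41, 3.88, 39, ms_within)
print(f"F(2, {df_within}) = {f:.2f}, p = {p:.4f}; 3rd vs 1st: Bonferroni p = {p_adj:.4f}")
```

The closed-form F tail avoids any dependency on a statistics library; with more than three groups, a general F distribution function (for example from SciPy) would be needed instead.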

Our post hoc analyses also indicated that the most significant gains over time in the CBOs’ abilities to conduct community interventions were for 3 specific activities: developing data collection tools (p = 0.002), analyzing collected data (p = 0.011), and training public speakers (p = 0.017). The median test across the 3 time periods indicated that the significant improvement was between the third and the initial C-PAS: 34.1% of the respondents at the third C-PAS, versus 12.8% at the initial C-PAS, scored higher than the median score of 4.0 for developing data collection tools (p = 0.025). Similar results were obtained for CBOs’ abilities to analyze data and train public speakers.
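The median-test comparison for developing data collection tools reduces to a 2 × 2 chi-square test (respondents above versus not above the median of 4.0, at the first versus the third C-PAS). The counts below are reconstructed from the reported percentages (34.1% of 41 ≈ 14; 12.8% of 39 ≈ 5). The text does not say whether a continuity correction was applied, so the sketch exposes it as an assumed flag; with 1 degree of freedom the p-value is erfc(√(χ²/2)). The function name is illustrative.

```python
import math


def median_test_2x2(above_a, n_a, above_b, n_b, yates=False):
    """Chi-square test on a 2 x 2 table of counts above vs. not above a median.

    With 1 df, the chi-square survival function is erfc(sqrt(x / 2)).
    Set yates=True to apply the continuity correction.
    """
    a, b = above_a, n_a - above_a  # group A: above, not above
    c, d = above_b, n_b - above_b  # group B: above, not above
    n = n_a + n_b
    diff = abs(a * d - b * c)
    if yates:
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    return stat, math.erfc(math.sqrt(stat / 2))


# Developing data collection tools, 3rd vs. 1st C-PAS (reconstructed counts).
stat, p = median_test_2x2(14, 41, 5, 39)
stat_yates, p_yates = median_test_2x2(14, 41, 5, 39, yates=True)
print(f"uncorrected: chi-square = {stat:.2f}, p = {p:.3f}")
print(f"Yates-corrected: chi-square = {stat_yates:.2f}, p = {p_yates:.3f}")
```

Without the correction, the reconstructed counts give χ² ≈ 5.02 and p ≈ 0.025, matching the reported value; with Yates’ correction the p-value rises to about 0.048.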

4. Discussion

Significant gains were observed in the knowledge and skills of key program staff members and in their CBOs’ abilities to plan, conduct, and evaluate community-level interventions. Technical assistance needs identified by key program staff members also decreased substantially over the 2-year period of assessment. These gains in knowledge, skills, and abilities and decreases in technical assistance needs were captured by the self-assessment surveys in the last 2 years of the 3-year Pfizer Foundation Southern HIV/AIDS Prevention Initiative. They resulted from the CBOs’ active participation and engagement with the MSM PRC evaluation team in formal presentations and interactive workshops, teleconferences, and web-based conferences specifically provided, concurrently with routine assessment, to address the technical assistance needs of the CBOs.

The self-reported capacity of CBOs for community interventions improved gradually and consistently over time and was substantially improved at the end of this 3-year initiative. Improvements in the overall knowledge scores of key staff members in community intervention planning and implementation were observed at 1 year and at approximately 18 months after the initial assessment. The results were also suggestive of an overall enhancement of key staff members’ skills to plan and implement community interventions (comparable to the improvements in knowledge levels) over the same period, although these results were not statistically significant. Overall improvement in the CBOs’ abilities to plan and conduct community intervention activities was likewise observed at 1 year, with further improvement 6 months later.

In addition to overall improvement in the summary measures of knowledge, skills, and abilities, we also observed upward trends in many of the specific areas at the second and third C-PAS, including statistically significant gains in knowledge of problem identification, skills in developing goals and objectives, and abilities to develop data collection tools, analyze data, and train public speakers. It is also interesting to note that significant reductions in technical assistance needs were observed in areas of initially low competence that were subsequently enhanced (i.e., logic model development and developing data collection tools). The reduction in technical assistance needs for qualitative and quantitative methods is also consistent with the significant increase in the CBOs’ ability to analyze data. Evaluation of community interventions had the lowest knowledge and skill levels in the initial C-PAS, when key staff members reported only modest (i.e., “some”) knowledge of and skills to conduct evaluation (Mayberry et al., 2008). Evaluation development knowledge and skills did not increase in the subsequent administrations of C-PAS.

Regarding evaluation capacity, we wanted an evaluation framework that would allow program leadership to tell their own story of success and share lessons among themselves during this process of concurrent technical assistance and cross-site evaluation. Furthermore, we wanted the evaluation framework and procedures to be applicable to other CBOs developing and conducting similar HIV/AIDS interventions or interested in conducting similar community-level prevention interventions. We believe the empowerment evaluation framework was necessary to maximize the likelihood of success in this specific initiative and to enhance long-term sustainability and effectiveness. We recognize, however, that the CBOs were not likely to significantly enhance and/or sustain an in-house evaluation capacity comparable to that provided by this outside cross-site evaluation team (Miller et al., 2006). Nevertheless, although we did not observe improvements in evaluation knowledge, skills, or abilities, an appreciation of evaluation capacity was evident: at our initial assessment, 53.8% of the CBOs had a project evaluator, whereas at the final assessment, 80.5% of the CBOs reported having a project evaluator to enhance their programs’ evaluation capacity (Final Report to Pfizer).

This report demonstrates that providing technical assistance concurrently with the primary role of cross-site evaluation can markedly enhance CBOs’ capacity to plan, implement, and evaluate HIV/AIDS education and prevention interventions. Our approach was unique among previous reports on the impact of technical assistance to CBOs in three respects: it was more comprehensive than evaluation capacity building alone, it was based on the assessed needs of the participating CBOs, and, as mentioned earlier, it was fully integrated with evaluation as a means of empowering CBOs to be more effective and sustainable.

Our findings are similar to those of previous CDC-sponsored work, which also demonstrated the effectiveness of a capacity-building educational program for CBO “program managers” of HIV prevention activities (Richter et al., 2007). Both efforts were longitudinal, with a significant enhancement in prevention capacity observed over a 15-month assessment period by Richter et al. (2007) versus an 18-month assessment period in our study. However, the domains of knowledge and skills assessed in that national cohort of managers were quite different. Furthermore, our efforts were tailored to the needs of the CBOs participating in this initiative and were conducted in real time during their intervention planning and implementation. Richter et al. (2007) did show, however, that capacity gains (i.e., frequency of and confidence in performing prevention activities) continued after the training sessions, as assessed 6 months later. We did not assess the sustainability of prevention capacity gains beyond the end of the initiative.

An underlying assumption of capacity building is that it is a determinant of the sustainability of CBOs’ efforts (Altman, 1995; Goodman et al., 1998) and that organizational contexts and cultures may represent varying degrees of readiness for change (Green & Plsek, 2002). While capacity building may take different approaches, a CBO’s successes and sustainability arguably depend on its key capacity to rigorously plan, conduct, and evaluate activities; document effectiveness; and articulate its success stories to the community and funding agencies alike. Our results demonstrate that many tenets and assumptions of capacity building have been met or are being realized and that the CBOs in this initiative have an enhanced capacity for programmatic success in HIV/AIDS prevention.

A few notes of caution are necessary in interpreting these results of technical assistance and capacity building. Firstly, the MSM PRC evaluation team was contracted to provide cross-site evaluation after the CBOs had developed their initial proposals and first-year program activities had begun. This precluded evaluation and technical assistance planning in the early stages of program planning, as would have been most desirable. Likewise, our initial assessment of the CBOs’ HIV/AIDS prevention capacity was not conducted until the beginning of the second year of this 3-year initiative, precluding the assessment of capacity and capacity building prior to initiating intervention activities.

Secondly, we assessed capacity through self-reported measures using a self-administered survey questionnaire. Knowledge, skills, and abilities were self-reported by key program staff members; there was no formal testing of staff members. Thus, this assessment may not reflect the CBOs’ actual ability to plan, implement, and evaluate their interventions. However, the consistent direction of our findings across all domains and within the variable constructs of knowledge, skills, and abilities, together with the concomitant consistent reduction in self-perceived technical assistance needs, gives us considerable confidence in the reliability of these results.

Thirdly, while some turnover in program staff was noted during the initiative period, we did not account for staff turnover in this assessment. The problem of staff turnover in longitudinal capacity building is well recognized (Miller et al., 2006), and we do not know whether staff turnover resulted in an underestimation or overestimation of the prevention capacity enhancement observed among the initiative’s CBOs. Staff turnover, however, was limited to primary program staff members and was not observed among executive directors; this may have minimized the overall impact of staff turnover on core prevention capacity. Regarding non-response bias, the good-to-excellent response rate in each C-PAS may have minimized any non-response bias in assessing prevention capacity. The consistent directions of findings in this assessment also support the validity of these data in this regard.

Fourthly, while we emphasized summary scores of knowledge, skills, and abilities, the relatively small number of respondents in each survey limited the power to detect statistically significant trends in some of the specific items that comprised the summary scores. Lastly, while we did not report here on the relationship between prevention capacity and measures of program success, we have previously reported that higher knowledge and skills are indicative of completing planned activities and meeting program objectives (Mayberry et al., 2008). Others have also reported that technical assistance has helped to improve program quality and outcomes (Centers for Disease Control and Prevention, 1999). Taken together, these results indicate that at two points in time beyond the initial assessment, prevention capacity among the initiative’s CBOs was enhanced and that the CBOs were more likely to achieve desired intervention outcomes.

5. Lessons learned

We have the benefit of hindsight in providing technical assistance in the context of our primary role of cross-site evaluation. Realistically, technical assistance and evaluation were indistinguishable given the relatively formative stage of HIV/AIDS prevention capacity for the CBOs participating in this initiative. Our desire was to empower the CBOs to tell their own stories of successes and share lessons among themselves during this process. To the extent that the purpose of evaluation was to assist CBOs in generating and presenting evidence of their success, the technical assistance provided was intended to improve their abilities to set intervention-specific and measurable goals and objectives, develop logic models, and collect and analyze appropriate data for the purposes of program evaluation. Throughout the process, we recognized and addressed many issues and complex challenges of community-level intervention planning and implementation.

Below are five recommendations from our shared experience in collaborating with the initiative’s CBOs:

Conduct a systematic needs assessment (if possible) before funding support begins and before prevention interventions are initiated, and continue it throughout the course of funding support.

Adopt practical, hands-on learning opportunities for adult learners.

Employ quantitative and qualitative methods to measure the successes of program delivery and outcomes.

Offer or facilitate ongoing technical support.

Provide opportunities for sharing evidence-based practices.

The Pfizer Foundation Southern HIV/AIDS Prevention Initiative engaged the CBOs with the MSM PRC evaluation team early in the project period. The MSM PRC evaluation team immediately integrated evaluation and technical assistance, drew on the input of the CBOs throughout the initiative, and used appropriate technical assistance tools, including C-PAS, teleconferences, web conferences, and face-to-face, hands-on workshops. The MSM PRC evaluation team, however, was not recruited until after the initiative had begun. This delay put the team at a disadvantage because the goals, objectives, resources, and other important aspects of intervention planning had already been established. In this regard, the potential impact of capacity building among the initiative’s CBOs was not fully realized.

In its initial telephone conferences with the individual grantees, the evaluation team spent time revising and helping to develop logic models and identifying other technical assistance needs of the individual grantees to aid in implementing their programs. Because the CBOs were at different levels of development, it was essential to assess each grantee’s technical assistance needs regarding intervention objectives and design; data collection, entry, and analysis; qualitative and quantitative methods; and so on. Open communication between the CBOs and the evaluation team allowed the grantees to receive individual technical assistance throughout the project period, facilitating targeted learning opportunities. Having the input of the grantees on an ongoing basis was essential in meeting the technical assistance needs of the individual grantees and of the initiative as a whole.

We also believe that in our regular interactions with the CBOs, we achieved the important goal of fully integrating evaluation into the planning, implementation, and monitoring of program success, so that evaluation was not perceived as an outside activity. Most capacity building with CBOs focuses on evaluation capacity (Sobeck & Agius, 2007). While evaluation is important and key to evidence-based success, CBOs have other technical assistance needs that should be fully integrated into capacity building to provide seamless assistance. As observed here, CBOs do value evaluation as a means of articulating their success.

Capacity building is a continuous process synonymous with continuous quality improvement. Here we have indicated gains in specific capacity areas, described technical assistance needed and provided, and demonstrated ways in which prevention capacity can be enhanced. We have also described the challenges faced by evaluators and providers of technical assistance, as well as the challenges faced by the CBOs in prevention interventions. In doing so, we have also shown the specific potential of CBOs to impact HIV/AIDS prevention.

Finally, CBO-academic partnerships represent an important model for addressing disparities and social determinants of health. A unique, foundation-funded private–academic partnership, as described here, provides the resources for technical assistance, evaluation capacity and, as a consequence, the enhancement of CBOs’ HIV/AIDS prevention capacity.

Acknowledgement

This work was supported by a grant from the Pfizer Foundation.

Biographies

Robert M. Mayberry, M.S., M.P.H., Ph.D., has more than 25 years of experience in research, teaching, program evaluation, and policy analysis. He received his Ph.D. degree (epidemiology) from the University of California at Berkeley and is Adjunct Professor, Department of Community Health and Preventive Medicine, Morehouse School of Medicine.

Pamela Daniels, M.P.H., M.B.A., has 11 years of experience in public health research, program evaluation, and health disparities research with expertise in maternal and child health issues, environmental health and access to and quality of care. She received her M.P.H. degree from the University of Miami (Miami, FL).

Elleen M. Yancey, M.A., Ph.D., has 40 years of experience in research, teaching, clinical behavioral science, and program analysis. She is Assistant Clinical Professor, Department of Community Health and Preventive Medicine, and Director, Prevention Research Center, Morehouse School of Medicine. Dr. Yancey received her M.A. degree from Columbia University (Developmental Psychology) and her Ph.D. degree from Clark Atlanta University (Counseling Psychology).

Tabia Henry Akintobi, Ph.D., M.P.H., has evaluated health initiatives funded by federal, private, and state agencies centered on the reduction of health disparities, with expertise in the areas of formative research, community-based participatory research, and social marketing. She received her Ph.D. degree (Public Health) from the University of South Florida’s College of Public Health.

Jamillah Berry, M.S.W., Ph.D., has professional experience in community-based organizational management, health/mental health practice and research, and program evaluation. She earned a Master of Social Work degree from Wayne State University and a Ph.D. degree in social work planning and administration with a cognate in public health from Clark Atlanta University. She has served as a Doctoral Fellow with the Grady Center for Health Equality funded by the National Center on Minority Health and Health Disparities, and currently works as a Research Assistant with the Morehouse School of Medicine Prevention Research Center.

Nicole Clark received her Bachelor of Arts degree in psychology from Spelman College. She is a Graduate Research Assistant with Morehouse School of Medicine Prevention Research Center and has research experience in HIV, reproductive health education and policy, and young women of color.

Ahmad Dawaghreh, Ph.D., is a senior biostatistician in the Clinical Research Center and an Assistant Professor of Biostatistics in the Department of Community Health and Preventive Medicine at Morehouse School of Medicine. He has over 16 years of experience as a faculty member and data analyst. His expertise includes statistical methods, large sample theory and prediction analysis.

References

- Altman DG. Sustaining interventions in community systems: On the relationship between researchers and communities. Health Psychology. 1995;14(6):526–536. doi: 10.1037//0278-6133.14.6.526.

- Biegel DE. Help seeking and receiving in urban ethnic neighborhoods: Strategies for empowerment. In: Studies in Empowerment: Steps Toward Understanding and Action. Haworth Press; New York: 1984.

- Centers for Disease Control and Prevention. Final report for the evaluation of the CDC-supported technical assistance network for community planning. 1999.

- Centers for Disease Control and Prevention. Evaluating CDC-Funded Health Department HIV Prevention Programs. Volume 1: Guidance. 2001a. Retrieved May 7, 2007, from http://www.cdc.gov/hiv/aboutdhap/perb/hdg.htm#vol1.

- Centers for Disease Control and Prevention. HIV Prevention Strategic Plan Through 2005. 2001b. Retrieved May 7, 2007, from http://www.cdc.gov/hiv/resources/reports/psp/pdf/prev-strat-plan.pdf.

- Cheadle A, Sullivan M, Krieger J, Ciske S, Shaw M, Schier JK, et al. Using a participatory approach to provide assistance to community-based organizations: the Seattle Partners Community Research Center. Health Education and Behavior. 2002;29(3):383–394. doi: 10.1177/109019810202900308.

- Chinman M, Hannah G, Wandersman A, Ebener P, Hunter SB, Imm P, et al. Developing a community science research agenda for building community capacity for effective preventive interventions. American Journal of Community Psychology. 2005;35(3-4):143–157. doi: 10.1007/s10464-005-3390-6.

- Compton D, Glover-Kudon R, Smith I, Avery M. Ongoing capacity building in the American Cancer Society (ACS) 1995-2001. New Directions for Evaluation. 2002;93:47–62.

- Eng E, Parker E. Measuring community competence in the Mississippi Delta: The interface between program evaluation and empowerment. Health Education Quarterly. 1994;21:199–200. doi: 10.1177/109019819402100206.

- Gibbs D, Napp D, Jolly D, Westover B, Uhl G. Increasing evaluation capacity within community-based HIV prevention programs. Evaluation and Program Planning. 2002;25(3):261–269.

- Goodman RM, Speers MA, McLeroy K, Fawcett S, Kegler M, Parker E, et al. Identifying and defining the dimensions of community capacity to provide a basis for measurement. Health Education and Behavior. 1998;25(3):258–278. doi: 10.1177/109019819802500303.

- Green PL, Plsek PE. Coaching and leadership for the diffusion of innovation in health care: A different type of multi-organization improvement collaborative. Joint Commission Journal on Quality Improvement. 2002;28(2):55–71. doi: 10.1016/s1070-3241(02)28006-2.

- Hawe P, Noort M, King L, Jordens C. Multiplying health gains: The critical role of capacity-building within health promotion programs. Health Policy. 1997;39:29–42. doi: 10.1016/s0168-8510(96)00847-0.

- Love AJ. Internal Evaluation: Building Organizations from Within. Sage Publications Inc.; Beverly Hills, CA: 1991.

- Mayberry RM, Daniels P, Akintobi TH, Yancey EM, Berry J, Clark N. Community-based organizations’ capacity to plan, implement, and evaluate success. Journal of Community Health. 2008;33(5):285–292. doi: 10.1007/s10900-008-9102-z.

- Meissner H, Bergner L, Marconi K. Developing cancer control capacity in state and local public health agencies. Public Health Reports. 1992;107:15–23.

- Miller T, Kobayashi M, Noble P. Insourcing, not capacity building, a better model for sustained program evaluation. American Journal of Evaluation. 2006;27(1):83–94.

- Napp D, Gibbs D, Jolly D, Westover B, Uhl G. Evaluation barriers and facilitators among community-based HIV prevention programs. AIDS Education and Prevention. 2002;14(3 Suppl A):38–48. doi: 10.1521/aeap.14.4.38.23884.

- Pagano M, Gauvreau K. Principles of Biostatistics. 2nd ed. Duxbury; 2000.

- Raczynski JM, Cornell CE, Stalker V, Phillips M, Dignan M, Pulley L, et al. A multi-project systems approach to developing community trust and building capacity. Journal of Public Health Management and Practice. 2001;7(2):10–20. doi: 10.1097/00124784-200107020-00004.

- Richter DL, Dauner KN, Lindley LL, Reininger BM, Oglesby WH, Prince MS, et al. Evaluation results of the CDC/ASPH Institute for HIV Prevention Leadership: a capacity-building educational program for HIV prevention program managers. Journal of Public Health Management and Practice, Supplement. 2007:S64–71. doi: 10.1097/00124784-200701001-00011.

- Richter D, Prince M, Potts L, Reininger B, Thompson M, Fraser J, et al. Assessing the HIV prevention capacity building needs of community-based organizations. Journal of Public Health Management and Practice. 2000;6(4):86–97. doi: 10.1097/00124784-200006040-00015.

- Roper W, Baker E, Dyal W, Nicola R. Strengthening the public health system. Public Health Reports. 1992;107(6):609–615.

- Rugg D, Buehler J, Renaud M, Gilliam A, Heitgerd J, Westover B, et al. Evaluating HIV prevention: A framework for national, state, and local levels. American Journal of Evaluation. 1999;20:35–56.

- Schwartz R, Smith C, Speers MA, Dusenbury LJ, Bright F, Hedlund S, et al. Capacity building and resource needs of state health agencies to implement community-based cardiovascular disease programs. Journal of Public Health Policy. 1993;14(4):480–494.

- Sobeck J, Agius E. Organizational capacity building: Addressing a research and practice gap. Evaluation and Program Planning. 2007;30(3):237–246. doi: 10.1016/j.evalprogplan.2007.04.003.

- Stockdill S, Baizerman M, Compton D. Toward a definition of the ECB process: A conversation with the ECB literature. New Directions for Evaluation. 2002;93:1–25.

- Thomas R, Israel B, Steuart G. Cooperative problem solving: The neighborhood self help project. In: Cleary H, Kichen J, Grinso P, editors. Case Studies in the Practice of Health Education. Mayfield; Palo Alto, CA: 1984.

- Zar JH. Biostatistical Analysis. 4th ed. Prentice Hall; Englewood Cliffs: 1999.