Abstract

Various types of data sources have been used to recognize user intent for volitional control of powered artificial legs. However, it is still debated which data sources are necessary for accurately and responsively recognizing the user's intended tasks. Motivated by this question, in this study we aimed to (1) investigate the usefulness of different data sources commonly suggested for user intent recognition and (2) determine an informative set of data sources for volitional control of prosthetic legs. The studied data sources included eight surface electromyography (EMG) signals from the residual thigh muscles of transfemoral (TF) amputees, ground reaction forces/moments from a prosthetic pylon, and kinematic measurements from the residual thigh and prosthetic knee. Using three source selection algorithms, we then ranked the included data sources by their usefulness for user intent recognition and selected a reduced set of data sources that still ensured accurate recognition of the user's intended task. The results showed that EMG signals and ground reaction forces/moments were more informative than prosthesis kinematics. Nine to eleven of the 26 initial data sources were sufficient to maintain 95% accuracy in recognizing the seven studied tasks in real time without additional missed task transitions. The selected data sources produced consistent system performance across two experimental days for the four recruited TF amputee subjects, indicating the potential robustness of the selected data sources. Finally, based on the study results, we suggest a protocol for determining the informative data sources and sensor configurations for future development of volitional control of powered artificial legs.

Keywords: Artificial legs, data source selection, real time, user intent recognition, volitional control

I. Introduction

Limb loss is a physically and emotionally devastating event that renders people less mobile and at risk of losing independence. An estimated 664,000 persons were living with major limb loss in the U.S. in 2005 [1], with lower limb amputations occurring much more frequently than upper limb amputations. With the increasing incidence of dysvascular amputations, the number of lower limb amputations in the U.S. is expected to increase to 58,000 per year by 2030 [2, 3]. Therefore, there is a pressing need to restore as much function as possible to this large and growing population of lower limb amputees.

Recent advances in powered artificial legs [4-6] have allowed lower limb amputees to efficiently perform activities that are difficult or impossible with passive devices (e.g. climbing stairs). However, switching smoothly between tasks (e.g. from level-ground walking to stair ascent) remains difficult for patients wearing powered prostheses because of the lack of a user interface that can identify the user's intent. Because the control parameters of current powered artificial legs are adjusted according to the task the user is performing [4], an intent-recognition interface is essential for natural and easy use.

Several approaches to intent recognition for powered artificial legs have been explored. These include manual approaches, such as a remote key fob [7] or extra body motions [8], and more automated approaches, such as echo control [9], intent recognition based on intrinsic mechanical feedback [10], and intent recognition based on EMG signals recorded from the residual limb [5, 11-13]. To date, manual approaches have proven cumbersome and sometimes unreliable. Among the more automated approaches, echo control [9, 14] has been adopted to allow smooth gait initiation and termination, but it requires the user to don and doff an instrumented orthosis on the unimpaired leg. A preliminary intent-recognition system based on mechanical feedback from a powered prosthesis [10] has been shown to identify gait initiation, gait termination, and transitions between sitting and standing for one TF amputee. The study reported 100% accuracy in recognizing task transitions with a 500 ms delay and 3 false identifications in a 570 s trial period. Two recent studies [11, 12] demonstrated accurate decoding of the intended motion of the missing knee and ankle from EMG signals recorded from the residual muscles of TF amputees in a seated position; however, the performance of these designs during locomotion has not been reported. Au et al. [5] used EMG signals from residual shank muscles to identify the locomotion modes of one transtibial amputee, but their approach can separate only two locomotion modes. Huang et al. developed a phase-dependent EMG pattern recognition strategy [13] that identified seven locomotion modes with approximately 90% accuracy in two TF amputees. Building on these approaches, our group further improved the interface design by fusing EMG and mechanical information [15]. This approach, which we have termed neuromuscular-mechanical fusion, outperformed approaches based on EMG signals or mechanical measurements alone.

Various types of data sources, including EMG signals recorded from residual limbs and ground reaction forces/moments measured from the prosthetic pylon, have been fused to recognize user intent for volitional control of powered artificial legs. However, it is still debated which data sources are necessary for accurately and responsively recognizing the user's intended tasks. Although the fusion of multiple data sources has demonstrated improved performance over approaches using a single data source, a large number of data inputs complicates the design of the instrumented prosthesis, the intent-recognition algorithm, and the hardware required for real-time operation on powered artificial legs. Therefore, further investigation is needed to identify the informative data sources, reduce the number of system inputs, and create a more efficient real-time intent-recognition interface for artificial legs.

The goals of this study were to (1) investigate the usefulness of different data sources commonly suggested for user intent recognition, and (2) determine an informative set of data sources for volitional control of prosthetic legs. We first implemented our previously designed neuromuscular-mechanical-fusion interface in real time. Using three source selection algorithms, we then ranked the included data sources by their usefulness for user intent recognition and selected a reduced set of data sources that still ensured accurate recognition of the user's intended task. The online performance of the intent-recognition algorithm was tested on four patients with TF amputations and compared with and without source selection. Finally, based on the results, we suggest a protocol for determining the informative data sources and sensor configurations for future development of volitional control of powered artificial legs.

II. METHODS

A. Participants and Experimental Measurements

This study was conducted with Institutional Review Board (IRB) approval at the University of Rhode Island and with the written, informed consent of all recruited subjects. Two male and two female patients with unilateral TF amputations (TF01–TF04) were recruited (see Table I). All subjects were regular prosthesis users.

TABLE I.

Demographic information of four subjects with transfemoral amputations (TF01-TF04).

| Subject | Age | Weight (kg) | Height (cm) | Gender | Years post-amputation | Residual limb length ratio* | Prosthesis for daily use |
|---|---|---|---|---|---|---|---|
| TF01 | 40 | 65.7 | 162.6 | F | 31 | 68% | RHEO Knee |
| TF02 | 49 | 71.2 | 170.1 | M | 12 | 93% | C-Leg |
| TF03 | 54 | 64.0 | 164.0 | F | 33 | 84% | RHEO Knee |
| TF04 | 59 | 75.8 | 175.3 | M | 23 | 51% | RHEO Knee |

Note

Residual limb length ratio: the ratio of the length of the residual limb (measured from the ischial tuberosity to the distal end of the residual limb) to the length of the non-impaired side (measured from the ischial tuberosity to the femoral epicondyle). “RHEO” refers to the RHEO Knee, a microprocessor-controlled prosthetic knee designed by Össur.

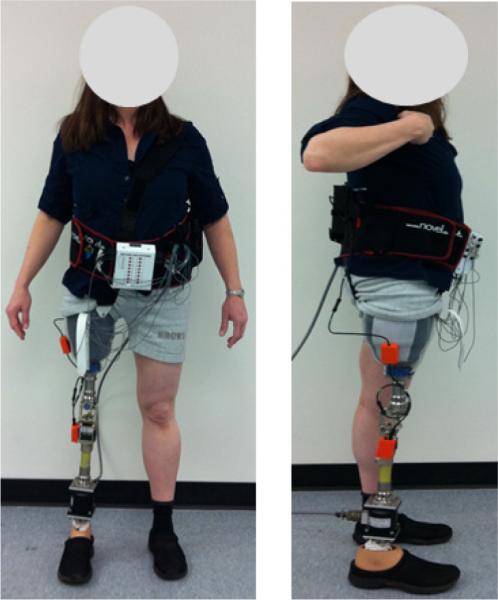

All available data sources were used for initial testing of the intent-recognition interface. These measurements included EMG signals recorded from the residual thigh of TF amputees, mechanical loads on the prosthetic pylon, and the kinematics of the prosthesis. Eight active bipolar surface EMG electrodes were placed on the residual thigh. The targeted muscles included the rectus femoris (RF), vastus lateralis (VL), vastus medialis (VM), tensor fasciae latae (TFL), biceps femoris long head (BFL), semitendinosus (SEM), biceps femoris short head (BFS), and adductor magnus (ADM). The exact electrode locations for each subject were determined by EMG recordings and muscle palpation. A ground electrode was placed on the bony area near the anterior iliac spine. Note that the activity of all targeted muscles cannot always be measured because of the amputation and of scar or fatty tissue on the residual limb. If fewer than eight muscles were identified, additional EMG sites were selected where strong signals could be recorded when the subject performed hip flexion/extension, hip adduction/abduction, or knee flexion/extension. For knee flexion/extension, the subjects were asked to attempt to flex/extend their amputated knee joints. Each electrode contained a preamplifier that band-pass filtered the EMG signals between 10 and 2000 Hz with a pass-band gain of 20. The electrodes were embedded in customized gel liners (Ohio Willow Wood, US) for user comfort and reliable electrode-skin contact. A 16-channel EMG system (MA 300, Motion Lab System, US) was used to collect EMG signals, with a 450 Hz anti-aliasing cut-off frequency for the EMG channels.
Ground reaction forces (Fx, Fy, Fz) and moments (Mx, My, Mz) were measured by a six-degree-of-freedom (DOF) load cell (PY6, Bertec Corporation, OH, US) mounted on the prosthetic pylon, with the x, y, and z axes aligned with the mediolateral, superoinferior, and anteroposterior axes of the subject, respectively. Both the analog EMG signals and the mechanical loads were sampled at 1000 Hz by a data acquisition board (DATAQ DI-720, DATAQ Instruments, Inc., Ohio, US). In addition, two inertial measurement units (IMUs) (Xsens Technologies B.V., Enschede, Netherlands) were used to measure the kinematics of the prosthesis. The IMUs were tightly affixed to the lateral side of the prosthetic socket and the pylon, and their coordinate systems were aligned with that of the load cell in the standing position. A total of 12 kinematic measurements were derived from the IMU data: three-DOF linear accelerations of the thigh segment (TAcc_x, TAcc_y, TAcc_z), three-DOF angular velocities (TAV_x, TAV_y, TAV_z) and angular accelerations (TAA_x, TAA_y, TAA_z) of the thigh segment, knee angle (KA), knee angular velocity (KAV), and knee angular acceleration (KAA). The kinematics of the residual thigh segment and prosthetic knee were monitored because (1) motion of the residual thigh is still controlled by the transfemoral amputee and therefore reflects the user's voluntary control, (2) the selected kinematic parameters of the prosthetic socket and knee have been used to characterize the movement state of the prosthesis [16] and to classify user intent [10], and (3) all of these data sources can be measured by sensors mounted on the prosthesis. The kinematic measurements were sampled at 100 Hz and synchronized with the EMG and mechanical load measurements. All sampled data were streamed to a desktop computer (Dell OptiPlex 380 with a 2.93 GHz Core 2 Duo E7500 CPU and 2 GB RAM) for real-time intent recognition.

B. Real-time User Intent Recognition

The architecture of our neuromuscular-mechanical-fusion interface has been reported previously [15]. The multichannel data are preprocessed and segmented into analysis windows. Features that characterize the signal patterns are extracted from each window and fused into one feature vector. The feature vector is then fed to a phase-dependent pattern classifier for mode recognition, composed of a gait-phase detector and multiple sub-classifiers, each corresponding to one defined gait phase. The classification decisions are further post-processed to improve system accuracy. Real-world application of the intent-recognition interface involves two procedures: (1) offline training and (2) real-time testing. In this study, both procedures were implemented and tested in MATLAB (The MathWorks, Massachusetts, US).
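The data flow from fused feature vector to task decision can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: the gait phase is passed in as a label (the study derived it from the vertical GRF with the detector in [17]), each pairwise classifier is a nearest-class-mean rule standing in for the nonlinear C-SVC, and all class and function names are hypothetical. The pairwise classifiers are combined by one-against-one majority voting, the scheme on which the study's sub-classifiers were built.

```python
from collections import Counter
from itertools import combinations


def nearest_mean_binary(X, y, a, b):
    """Stand-in binary classifier for classes a and b (the study used a
    nonlinear C-SVC here): predicts whichever class mean is closer."""
    def mean(rows):
        return [sum(col) / len(rows) for col in zip(*rows)]

    mu = {c: mean([x for x, lab in zip(X, y) if lab == c]) for c in (a, b)}

    def predict(x):
        d = {c: sum((xi - mi) ** 2 for xi, mi in zip(x, mu[c])) for c in (a, b)}
        return a if d[a] <= d[b] else b

    return predict


class OneAgainstOne:
    """K(K-1)/2 pairwise classifiers combined by majority voting."""

    def __init__(self, X, y):
        self.classes = sorted(set(y))
        self.pairs = [nearest_mean_binary(X, y, a, b)
                      for a, b in combinations(self.classes, 2)]

    def predict(self, x):
        if not self.pairs:                 # only one task seen in this phase
            return self.classes[0]
        votes = Counter(clf(x) for clf in self.pairs)
        return votes.most_common(1)[0][0]


class PhaseDependentClassifier:
    """One sub-classifier per gait phase; the detected phase picks which runs."""

    def __init__(self, X, y, phases):
        self.sub = {}
        for p in set(phases):
            idx = [i for i, q in enumerate(phases) if q == p]
            self.sub[p] = OneAgainstOne([X[i] for i in idx], [y[i] for i in idx])

    def predict(self, x, phase):
        return self.sub[phase].predict(x)
```

Training each sub-classifier only on windows from its own phase is what makes the classifier phase-dependent: the feature patterns of a given task differ between, for example, stance and swing, so a separate decision boundary per phase can be simpler.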

1) Interface Training Strategy and Offline Training Algorithm

Training data were collected while the amputee subjects performed the assigned locomotion modes. In this study, the considered modes included level-ground walking, stair ascent, stair descent, ramp ascent, ramp descent, sitting, and standing. The latter two modes were included because they are important for gait initiation and termination and are difficult for leg amputees. For level-ground walking, we instructed subjects to start from standing, walk along a straight walkway, and then stop and stand. For the sitting and standing tasks, subjects were asked to transition between sitting and standing. While standing, subjects were allowed to take small steps and shift their weight; while sitting, subjects were allowed to move the prosthetic limb. During collection of training data for stair ascent/descent or ramp ascent/descent, subjects switched from level-ground walking to stair ascent/descent or ramp ascent/descent and then back to level-ground walking. Stair ascent/descent and ramp ascent/descent were performed on a 5-step staircase and a 10-foot ramp with a 10° incline, respectively. At least 30 seconds of data were collected for each mode, and five repetitions of each task transition were captured.

Training data were preprocessed and segmented into overlapping analysis windows with a window length of 150 ms and a window increment of 50 ms. EMG signals were filtered by a sixth-order Butterworth digital band-pass filter (20–450 Hz). The mechanical forces/moments were low-pass filtered with a 45 Hz cut-off frequency. The linear accelerations and angular velocities of the thigh segment and the knee angle were low-pass filtered with a 20 Hz cut-off frequency before the other kinematic data were derived. Each window was labeled with a gait phase index and a task index. The gait phase index was determined automatically by a gait phase detection algorithm based on the vertical GRF measured by the six-DOF load cell [17]. Task labeling required an experimenter to manually indicate mode transitions. Four commonly used EMG time-domain (TD) features [18] (mean absolute value, number of slope sign changes, waveform length, and number of zero crossings) were extracted from each EMG signal in each analysis window. The mean, maximum, and minimum values were extracted as features from each mechanical load and motion parameter. The features extracted from the EMG signals (4 features × 8 channels), mechanical loads (3 features × 6 DOF), and kinematic measurements (3 features × 12 sources) were then concatenated into one feature vector (86 × 1). The fused feature vectors with the same gait phase index were used to train the sub-classifier for that phase. Each sub-classifier was a nonlinear support vector machine (SVM) based on the “one-against-one” (OAO) scheme [19] and C-support vector classification (C-SVC) [20]. Finally, the parameters of each sub-classifier were calculated and stored for real-time testing.
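The windowing and feature fusion described above can be sketched in plain Python as follows. It is a sketch, assuming that each 150 ms kinematic window holds 15 samples at 100 Hz, that the zero-crossing and slope-sign-change thresholds are zero, and that filtering has already been applied; the function names are hypothetical. With 8 EMG, 6 load, and 12 kinematic channels it reproduces the 86-element feature vector.

```python
import math

FS = 1000                  # EMG and load-cell sampling rate (Hz)
WIN_MS, INC_MS = 150, 50   # analysis window length and increment (ms)


def windows(signal, fs=FS, win_ms=WIN_MS, inc_ms=INC_MS):
    """Yield overlapping analysis windows from one channel."""
    win = fs * win_ms // 1000
    inc = fs * inc_ms // 1000
    for start in range(0, len(signal) - win + 1, inc):
        yield signal[start:start + win]


def emg_td_features(x, threshold=0.0):
    """Four time-domain EMG features: MAV, SSC, WL, ZC."""
    n = len(x)
    mav = sum(abs(v) for v in x) / n
    ssc = sum(1 for i in range(1, n - 1)
              if (x[i] - x[i - 1]) * (x[i] - x[i + 1]) > threshold)
    wl = sum(abs(x[i] - x[i - 1]) for i in range(1, n))
    zc = sum(1 for i in range(1, n)
             if x[i - 1] * x[i] < 0 and abs(x[i] - x[i - 1]) >= threshold)
    return [mav, ssc, wl, zc]


def stat_features(x):
    """Mean, maximum, and minimum of a mechanical or kinematic channel."""
    return [sum(x) / len(x), max(x), min(x)]


def feature_vector(emg_win, mech_win, kin_win):
    """Concatenate per-channel features into one fused feature vector."""
    fv = []
    for ch in emg_win:                 # 8 EMG channels x 4 features
        fv.extend(emg_td_features(ch))
    for ch in mech_win + kin_win:      # (6 + 12) channels x 3 features
        fv.extend(stat_features(ch))
    return fv
```

For example, one second of 1000 Hz data yields 18 overlapping windows, and `windows(kin_channel, fs=100)` returns 15-sample windows for the kinematic channels.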

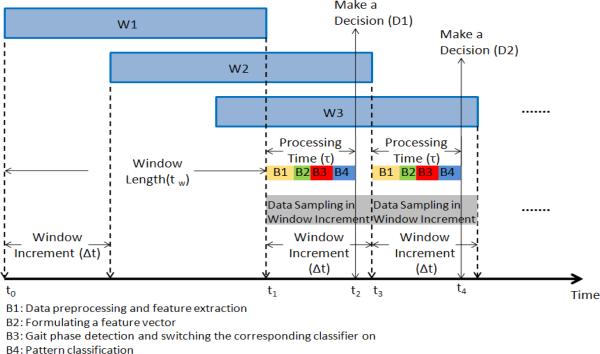

2) Real-time Implementation of User Intent Recognition

In real-time testing, the classifier parameters calculated in the training session were loaded into the computer's memory. The time sequence of the real-time algorithm is shown in Fig. 2. Each analysis window (e.g. W1, W2, and W3 in Fig. 2) had the same window length (tw = 150 ms). The window increment (Δt = 50 ms) determined the time delay for each decision. The processing time τ consisted of the time required to preprocess the data and extract the features from each analysis window (B1), formulate and normalize the feature vector (B2), detect the gait phase and activate the corresponding sub-classifier (B3), and classify the user intent (B4). To make use of all of the streamed data for continuous decision-making, the window increment (Δt) had to be greater than or equal to the processing time (τ) [21]. At time t0, the EMG, mechanical, and kinematic signals were simultaneously streamed into the computer and stored in a first-in first-out (FIFO) buffer. At time t1, when the data for the first analysis window, W1, were available, the data were transferred from the FIFO buffer to system memory and execution of the real-time algorithm began, while new incoming data continued to be stored in the buffer. At time t2, the computation for W1 was completed and the first decision, D1, was made to recognize the user intent for window W1. At t3, the data for the second window, W2, were available for processing, and new incoming data again continued to be stored in the FIFO buffer. At time t4, the decision D2 for window W2 was made. Finally, a five-point majority vote was applied to the decision stream to eliminate erroneous decisions.

Fig. 2.

Continuous windowing scheme and time sequence for real-time implementation of user-intent-recognition interface.
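The streaming loop, the Δt ≥ τ constraint, and the five-point majority vote can be sketched as follows. This is a simplified, hypothetical illustration: a single stream of samples stands in for the synchronized multichannel data, a fixed-length `deque` acts as a sliding buffer in place of the FIFO-to-memory transfer, `classify` is a placeholder for steps B1–B4, and voting over fewer than five decisions at start-up is an assumption.

```python
from collections import Counter, deque

FS = 1000               # samples per second
WIN = 150 * FS // 1000  # t_w = 150 ms -> 150 samples
INC = 50 * FS // 1000   # Δt = 50 ms -> 50 samples


def meets_timing(tau_ms, inc_ms=50.0):
    """Continuous decision-making requires Δt >= τ."""
    return inc_ms >= tau_ms


def stream_decisions(samples, classify, n_vote=5):
    """Return one majority-voted decision per window increment.

    `classify` maps a window (list of samples) to a task label; it stands in
    for steps B1-B4 of Fig. 2.
    """
    buf = deque(maxlen=WIN)        # sliding buffer holding the latest t_w of data
    recent = deque(maxlen=n_vote)  # most recent raw decisions
    voted = []
    for i, s in enumerate(samples, start=1):
        buf.append(s)
        # first window is complete after WIN samples, then every INC samples
        if i >= WIN and (i - WIN) % INC == 0:
            recent.append(classify(list(buf)))
            # ties go to the label seen first (oldest) in the vote buffer
            voted.append(Counter(recent).most_common(1)[0][0])
    return voted
```

In this sketch, the five-point vote delays the reported mode switch by two window increments (100 ms) after the raw classifier output changes, trading some responsiveness for robustness to isolated errors.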

C. Evaluation of the Intent-recognition Interface

1) Experimental Protocol

Each TF subject participated in two experimental days, and each day's experiment took around three hours. On the first experimental day, all of the previously described measurements (EMG, kinematics, and pylon forces/moments) were used for real-time intent recognition. All subjects wore a hydraulic passive knee (Total Knee, ÖSSUR, Germany) and performed the instructed tasks in multiple trials. During the real-time testing session, the subjects were asked to transition among the seven task modes in a fixed sequence: sitting, standing, level-ground walking, stair ascent, level-ground walking, ramp descent, level-ground walking, ramp ascent, level-ground walking, stair descent, level-ground walking, standing, and sitting. Each trial lasted approximately 1 minute, and a total of 15 real-time testing trials were conducted. For safety, subjects were allowed to use a handrail on the staircase or ramp if necessary. Rest periods were allowed between trials to avoid fatigue. All data and real-time decisions collected during the experiment were saved for system evaluation and source selection analysis. In addition, a pressure-measuring mat was attached to the subject's gluteal region to indicate sitting and standing states, and the experiment was videotaped for evaluation purposes.

The second experiment was conducted after the informative set of data sources (those carrying most of the information needed for accurate intent recognition) had been determined by offline analysis of the data collected on the first experimental day. The experimental protocol was the same as on the first day, except that only a subset of data sources was used for interface training and real-time testing. The interval between the two experimental days was 3 weeks, 2 weeks, 1 month, and 3 weeks for TF01–TF04, respectively.

2) Evaluation Parameters

Three parameters were used to evaluate the real-time performance of the intent-recognition interface: (1) recognition accuracy in static states (RA), (2) the number of missed task transitions, and (3) transition prediction time. Static states were defined as periods during which subjects continuously performed the same task. Detailed definitions of these evaluation parameters can be found in our previous study [15].

D. Source Selection Analysis

1) Overview of Source Selection Methods

Data source or feature selection is commonly used in machine learning to reduce the dimensionality of inputs and create an efficient classification model. If the unit of input selection is an individual feature, the process is called feature selection; if the unit is a data source (consisting of multiple features), it is called source selection. An exhaustive search, which enumerates all possible subsets, finds the globally optimal subset. However, because the number of candidate subsets grows exponentially with the number of inputs, this approach is not practical for real applications. Instead, in this study we considered more efficient and commonly used search methods to find a sub-optimal subset of data sources. Source/feature selection methods can be divided into two broad classes: wrapper methods and filter methods [22]. Wrapper methods require a predetermined classification algorithm and use the classifier as a black box to select the subset of sources based on discriminatory power [22]. The most commonly used wrapper methods are sequential forward selection (SFS) and sequential backward selection (SBS). Filter methods select sources based on discriminating criteria that are relatively independent of the classifier. Examples of such criteria are correlation coefficients [23], mutual information [24], and statistical tests (t-test and F-test) [25]. Recent studies [24, 26] used a minimum-redundancy-maximum-relevance (mRMR) criterion. This method considers relevance and redundancy simultaneously when selecting sources/features, which expands the representative power of the selected sources/features and improves the generalization of the selection algorithm. In this study, two wrapper methods (SFS and SBS) and one filter method (mRMR) were applied to the data collected on the first experimental day to select the informative data sources for intent recognition.
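A quick count illustrates the cost argument for the 26 sources used in this study. The sketch assumes that each wrapper iteration trains and tests one classifier per candidate subset and that the search continues until every source has been ranked.

```python
from math import comb

n = 26  # data sources: 8 EMG + 6 load components + 12 kinematic measurements

exhaustive = 2 ** n - 1                   # every non-empty subset
exactly_ten = comb(n, 10)                 # subsets with exactly 10 sources
sfs_evals = sum(range(1, n + 1))          # 26 + 25 + ... + 1 candidate subsets
sbs_evals = sum(range(2, n + 1))          # 26 + 25 + ... + 2 candidate subsets

print(exhaustive, exactly_ten, sfs_evals, sbs_evals)
# 67108863 5311735 351 350
```

Even restricting an exhaustive search to subsets of exactly ten sources would require millions of train/test runs, whereas a full sequential ranking needs only a few hundred.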

2) Sequential Forward and Backward Selection Algorithms

The SFS algorithm was applied as follows. The algorithm started with two data source sets: the selected set, A, was initially empty; and the remaining set, B, included all 26 data sources. In the first search iteration, the data from each individual source were used to train and test the intent-recognition system. The source that yielded the highest average classification accuracy across all modes in the static state was selected as the most important source for the system and was added to set A. In the following iterations, each of the sources in set B was paired with the selected sources in set A to train and test the classifier. The source from set B that produced the maximum recognition accuracy when combined with the selected sources from set A was added to set A. Only one source was selected in each search step. The sequence in which sources were selected produced a rank of the sources in terms of their importance for accurate mode recognition.
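The SFS procedure can be sketched as below. `evaluate` is a hypothetical callback that would train and test the phase-dependent classifier on a candidate subset and return its static-state accuracy; in the toy usage it is replaced by an additive score purely for illustration.

```python
def sequential_forward_selection(sources, evaluate):
    """Greedy forward selection that returns sources ranked by importance.

    evaluate(subset) -> static-state accuracy of a classifier trained and
    tested on `subset` (a hypothetical callback wrapping the real pipeline).
    """
    selected = []                 # set A, initially empty
    remaining = list(sources)     # set B, initially all sources
    history = []                  # accuracy after each addition
    while remaining:
        scores = {s: evaluate(selected + [s]) for s in remaining}
        best = max(remaining, key=scores.get)   # ties: earliest in `remaining`
        selected.append(best)
        remaining.remove(best)
        history.append(scores[best])
    return selected, history


# Toy example: "accuracy" grows additively with each source's weight.
weights = {"Fz": 0.5, "VL": 0.3, "RF": 0.15, "KA": 0.01}
ranked, acc = sequential_forward_selection(
    ["KA", "RF", "VL", "Fz"], lambda sub: sum(weights[s] for s in sub))
print(ranked)  # ['Fz', 'VL', 'RF', 'KA']
```

The `history` list traces the same accuracy-versus-number-of-sources curve that is used to choose how many sources to keep.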

The SBS method began with set B empty and set A containing all 26 sources. In each search iteration, the source in set A whose removal produced the smallest decrease in recognition accuracy was moved to set B. Only one source was removed from set A in each iteration. The first source removed was considered to contain the least information, and the last source remaining in set A was considered the most informative.
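The SBS procedure can be sketched in the same way, again with a hypothetical `evaluate` callback and a toy additive score standing in for classifier accuracy. The ranking is read from the last surviving source backward through the removal order.

```python
def sequential_backward_selection(sources, evaluate):
    """Greedy backward elimination that returns sources ranked by importance.

    evaluate(subset) -> static-state accuracy on `subset` (hypothetical callback).
    """
    kept = list(sources)   # set A, initially all sources
    removed = []           # set B, filled least-informative first
    while len(kept) > 1:
        # accuracy of each subset obtained by dropping one source
        scores = {s: evaluate([k for k in kept if k != s]) for s in kept}
        least = max(kept, key=scores.get)   # smallest accuracy loss when removed
        kept.remove(least)
        removed.append(least)
    return kept + removed[::-1]   # last survivor is the most informative


weights = {"Fz": 0.5, "VL": 0.3, "RF": 0.15, "KA": 0.01}
ranking = sequential_backward_selection(
    ["KA", "RF", "VL", "Fz"], lambda sub: sum(weights[s] for s in sub))
print(ranking)  # ['Fz', 'VL', 'RF', 'KA']
```

Each SBS evaluation trains on nearly the full input set, which is consistent with SBS being the slowest of the three methods even though it evaluates a similar number of subsets to SFS.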

3) mRMR Source Selection Algorithm

At each step, the mRMR algorithm selects the feature fi that satisfies the criterion in (1) [24, 26]. This approach simultaneously maximizes the relevance between the feature and the classes (intended tasks) and minimizes its redundancy with the features already selected.

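$$\max_{f_i \in \Omega_S} \left[ F(f_i, K) - \frac{1}{|S|} \sum_{f_j \in S} \left| c(f_i, f_j) \right| \right] \tag{1}$$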

In (1), fi and fj are different features. S and ΩS represent the selected important feature set and the remaining unselected feature set, respectively. |S| is the number of selected features in S. F(fi, K) denotes the F-statistic test value of feature fi in the K studied classes (K=7 in this study), which is used to evaluate the relevance between the feature and classes [24, 26]. The F-statistic value was calculated by

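$$F(f_i, K) = \frac{\sum_{k=1}^{K} n_k \left( \bar{f}_k - \bar{f} \right)^2 / (K - 1)}{\sigma^2}, \qquad \sigma^2 = \frac{\sum_{k=1}^{K} (n_k - 1)\, \sigma_k^2}{N - K} \tag{2}$$

In (2), f̄ is the mean value of feature fi across all observations; f̄k is the mean value of fi within the kth class; nk is the number of observations in the kth class; σk² is the variance of the feature in the kth class, so that σ² is the pooled within-class variance; and N denotes the total number of observations. The Pearson correlation coefficient c(fi, fj) in (1) measures the redundancy among the features [24] and is defined as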

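$$c(f_i, f_j) = \frac{\sum_{m=1}^{N} \left( f_{i,m} - \bar{f}_i \right) \left( f_{j,m} - \bar{f}_j \right)}{(N - 1)\, S_{f_i} S_{f_j}} \tag{3}$$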

where fi,m denotes the mth observation of fi; f̄i and f̄j denote the mean value of fi and fj, respectively; Sfi and Sfj are standard deviations of fi and fj.
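Criteria (1)–(3) can be sketched in plain Python as follows. This is a minimal illustration, assuming that criterion (1) takes the difference form (F-statistic relevance minus the mean absolute correlation with already selected features) and that no feature has zero variance; the function names and toy data are hypothetical.

```python
import math


def f_statistic(values, labels):
    """One-way ANOVA F value of one feature across classes, as in Eq. (2)."""
    classes = sorted(set(labels))
    N, K = len(values), len(classes)
    grand = sum(values) / N
    between = within = 0.0
    for c in classes:
        v = [x for x, lab in zip(values, labels) if lab == c]
        m = sum(v) / len(v)
        between += len(v) * (m - grand) ** 2
        within += sum((x - m) ** 2 for x in v)   # equals (n_k - 1) * sigma_k^2
    return (between / (K - 1)) / (within / (N - K))


def pearson(a, b):
    """Pearson correlation coefficient, as in Eq. (3)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) *
                           sum((y - mb) ** 2 for y in b))


def mrmr_rank(features, labels):
    """Greedy mRMR ranking: F-test relevance minus mean |correlation|."""
    relevance = {name: f_statistic(col, labels) for name, col in features.items()}
    selected, remaining = [], list(features)

    def score(f):
        if not selected:                 # first pick: relevance only
            return relevance[f]
        redundancy = sum(abs(pearson(features[f], features[s])) for s in selected)
        return relevance[f] - redundancy / len(selected)

    while remaining:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


labels = [0, 0, 0, 1, 1, 1]
features = {
    "a": [1.0, 1.2, 0.9, 3.0, 3.1, 2.8],   # separates the classes well
    "b": [2.0, 2.4, 1.8, 6.0, 6.2, 5.6],   # 2 * a: equally relevant, fully redundant
    "c": [0.5, 1.5, 1.0, 1.6, 0.4, 1.1],   # nearly uninformative
}
print(mrmr_rank(features, labels))
```

In the toy data, the redundant copy `b` is still ranked second, because F values are unbounded while |c| ≤ 1, so relevance dominates the difference; a quotient form of the criterion has also been proposed.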

Note that the mRMR algorithm is usually applied at the feature level. Therefore, to search for a sub-optimal subset of data sources, two steps were involved in this study: (1) all features from all sources were ranked by their importance for accurate intent recognition based on the mRMR criterion in (1); and (2) each data source was ranked by the highest rank among its associated features. For all source selection methods, the number of selected data sources was determined by two criteria: (1) the recognition accuracy in static states had to be greater than 95%; and (2) the reduction in data sources could not induce additional missed mode transitions. The 95% threshold used for the first criterion can be modified to meet specific system performance requirements.
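The two-step source ranking and the stopping rule can be sketched as follows. The helper names, the `<source>_<feature>` naming convention, and the interpretation of "no additional missed transitions" as "no more misses than with all sources" are assumptions made for illustration.

```python
def rank_sources(feature_ranking, source_of):
    """Rank each data source by the best (highest) rank among its features."""
    ranked = []
    for feat in feature_ranking:          # best feature first
        src = source_of[feat]
        if src not in ranked:             # first appearance = best feature rank
            ranked.append(src)
    return ranked


def choose_num_sources(accuracies, missed, baseline_missed, threshold=0.95):
    """Smallest k meeting both criteria: static-state accuracy > threshold and
    no more missed transitions than with all sources.

    accuracies[k-1], missed[k-1]: results using the top-k ranked sources.
    Falls back to keeping every ranked source if no k qualifies.
    """
    for k, (acc, miss) in enumerate(zip(accuracies, missed), start=1):
        if acc > threshold and miss <= baseline_missed:
            return k
    return len(accuracies)


feature_ranking = ["Fz_mean", "VL_MAV", "Fz_max", "RF_WL", "VL_ZC"]
source_of = {f: f.split("_")[0] for f in feature_ranking}
print(rank_sources(feature_ranking, source_of))  # ['Fz', 'VL', 'RF']
```

Both criteria must hold at the same k; a subset that passes the accuracy threshold but misses an extra transition is rejected.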

III. RESULTS

A. Performance of Source Selection Algorithms

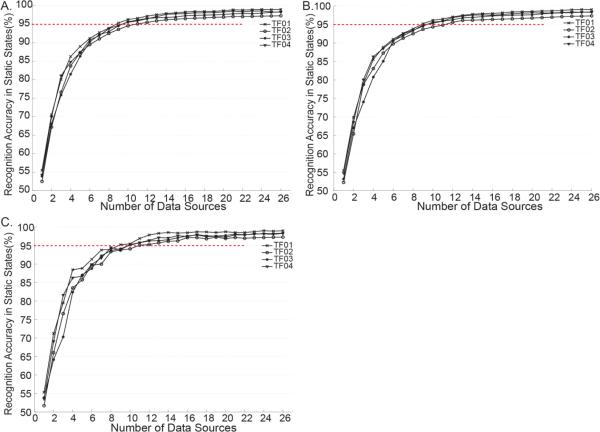

The data collected in the first experiment were used for offline source selection. The effect of increasing the number of selected data sources on intent recognition accuracy in static states was similar for the three tested algorithms (SFS, SBS, and mRMR) (Fig. 3): as the number of applied data sources increased, the classification accuracy first rose sharply and then gradually plateaued. When 95% accuracy (red dashed line in Fig. 3) was used as the criterion to select the informative set of data sources for all studied selection methods, the number of selected sources was 9, 11, 10, and 10 for TF01–TF04, respectively.

Fig. 3.

Accuracy of intent recognition in static states when the number of selected data sources increased. The applied source selection methods were (A) sequential forward selection (SFS), (B) sequential backward selection (SBS), and (C) minimum-redundancy–maximum-relevance (mRMR).

Table II lists the informative sets of data sources selected by SFS, SBS, and mRMR for TF01–TF04. The sources are ranked in descending order of importance: by the order in which they were selected (SFS) or the reverse of the order in which they were removed (SBS), or by the calculated relevance-redundancy value (mRMR). The number of selected sources and their ranks varied among subjects. However, for each subject, most of the top-ranked data sources selected by SFS, SBS, and mRMR overlapped. Pooled across all subjects and selection algorithms, EMG signals accounted for more than half of the selected sources. Of all the data sources, only the vertical ground reaction force was consistently selected across all subjects and selection methods. Apart from the thigh segment linear acceleration, no kinematic measurements were selected as important sources for intent recognition.

TABLE II.

The sources selected for four recruited TF subjects (TF01-TF04).

| Rank | TF01 SFS | TF01 SBS | TF01 mRMR | TF02 SFS | TF02 SBS | TF02 mRMR | TF03 SFS | TF03 SBS | TF03 mRMR | TF04 SFS | TF04 SBS | TF04 mRMR |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Fz | VM | TFL | My | BFL | TFL | VL | VM | VL | E2* | Mx | SEM |
| 2 | BFL | BFS | RF | Mx | Mx | RF | Mx | TFL | RF | E1* | TFL | ADM |
| 3 | My | ADM | My | VL | VL | TAcc_y | SEM | Fy | VM | Mx | E2* | E2* |
| 4 | RF | My | VM | Fz | TFL | SEM | BFS | Mx | TFL | TAcc_y | E1* | Fz |
| 5 | VL | RF | BFL | BFL | BFS | ADM | Fz | BFL | Fy | VL | Fz | TFL |
| 6 | TFL | BFL | Fz | ADM | Fy | VM | ADM | SEM | SEM | Fz | VL | E1* |
| 7 | Mz | Fz | TAcc_y | BFS | RF | BFS | TFL | VL | ADM | ADM | Mz | TAcc_y |
| 8 | VM | TAcc_y | Mx | TFL | ADM | VL | Fy | ADM | Mx | TFL | Fy | VL |
| 9 | TAcc_y | TFL | Fy | TAcc_y | Fz | Fz | VM | Fz | Fz | SEM | SEM | VM |
| 10 | | | | SEM | TAcc_y | Mx | RF | TAcc_z | BFS | Mz | ADM | Mx |
| 11 | | | | TAcc_z | SEM | BFL | | | | | | |

Note

E1*, E2*: extra muscles that were not originally targeted (E1: a distal quadriceps muscle; E2: a distal hamstring muscle). Underlined data sources: the sources that were selected by all three selection algorithms.

We also compared the execution times of the three source selection algorithms. mRMR required the shortest execution time (84 s on average over the four subjects), whereas SBS was the most time-consuming (1978 s on average); SFS took approximately 383 s. Because mRMR was the most computationally efficient algorithm and yielded performance similar to the other two search algorithms (Table II and Fig. 3), the sources selected by mRMR were used in the second experiment for real-time mode recognition to evaluate the robustness of the selected data sources across days.

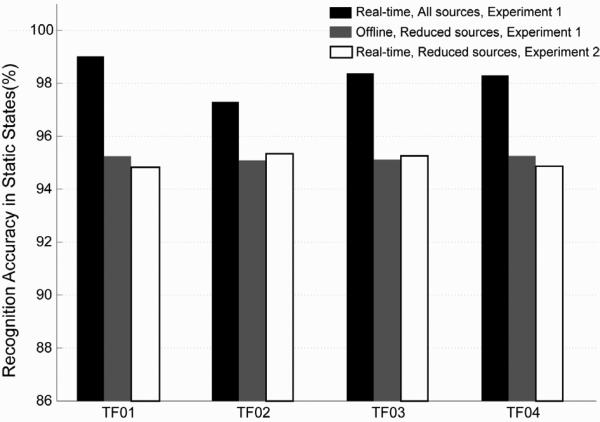

B. Real-time Performance with and without Data Sources Selection

The performance of the intent-recognition interface in static states was compared with and without data source reduction (Fig. 4). Accuracy decreased to approximately 95% when the data sources selected by mRMR were used (gray and white bars in Fig. 4), compared with around 98% when all data sources were used (black bars in Fig. 4). This was expected, because 95% accuracy was the threshold in one of the selection criteria. Importantly, the system produced stable static-state performance across days with the same mRMR-selected data sources, which suggests that the selected data sources robustly captured the information important for accurate intent recognition.

Fig. 4.

Intent recognition accuracy in static states. The black bars were derived from the real-time decisions in the first experiment when all the data sources were used. The gray bars were computed offline based on the data in the first experiment, but with a reduced number of data sources selected by mRMR. The white bars denote the real-time results derived from the second experiment when the same data sources as used for gray bars were applied.

When all data sources were used for online testing (on the first experimental day), the average processing time for one decision (i.e. the duration of B1–B4 in Fig. 2) was 45.2 ± 2.7 ms. When the number of data sources was reduced by the mRMR method (in the second experiment), the online processing time ranged from 21.3 ms to 28.8 ms across the four subjects. Because the window increment must be at least as long as the processing time, this reduction would allow a shorter window increment and thus more frequent decision-making.
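The reported processing times can be checked against the real-time constraint Δt ≥ τ with a few lines of arithmetic. The sketch uses only the numbers given above; per-subject values are not listed, so only the range of per-decision speedups is computed.

```python
tau_all = 45.2               # ms per decision with all 26 sources (experiment 1)
tau_selected = (21.3, 28.8)  # ms per decision range with mRMR-selected sources
delta_t = 50.0               # ms, window increment

# Real-time constraint from the Methods: the increment must cover processing.
ok_all = delta_t >= tau_all
ok_selected = all(delta_t >= t for t in tau_selected)

speedups = [tau_all / t for t in tau_selected]
print(ok_all, ok_selected, [round(s, 2) for s in speedups])
# True True [2.12, 1.57]
```

With all sources the 50 ms increment leaves under 5 ms of slack, whereas the reduced source set leaves more than 20 ms.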

Real-time performance during transitions with the informative data sources in the second experiment was similar to that with all data sources in the first experiment. On each day, 3 of the 720 transitions (12 transitions × 15 trials × 4 TF subjects) were missed. The missed transitions included transitions from level-ground walking to ramp ascent and from ramp descent to level-ground walking. Excluding missed transitions, the prediction time for each of the 12 transitions was averaged across all testing trials and subjects. Prediction times were similar when using all data sources and when using the selected sources (Table III).

TABLE III.

Average task transition prediction time across subjects in the first and second real-time experiments.

| Data sources (prediction time, ms) | W→SA | SA→W | W→SD | SD→W | W→RA | RA→W | W→RD | RD→W | W→ST | ST→W | S→ST | ST→S |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All sources (real-time, Experiment 1) | 120.8 | 114.5 | 127.1 | 115.4 | 107.7 | 104.4 | 105.9 | 116.5 | 86.2 | 118.4 | 88.0 | 324.4 |
| Selected sources (real-time, Experiment 2) | 119.3 | 112.4 | 115.9 | 111.9 | 118.3 | 113.4 | 102.4 | 134.7 | 96.4 | 124.7 | 94.2 | 305.8 |

Note: W: level-ground walking; SA: stair ascent; SD: stair descent; RA: ramp ascent; RD: ramp descent; ST: standing; S: sitting
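To compare the two rows of Table III at a glance, the short script below computes each condition's mean prediction time and the per-transition difference (selected minus all sources). It uses only the values in Table III; the unweighted mean across transition types is an illustrative summary, not a statistic reported in the study.

```python
TRANSITIONS = ["W→SA", "SA→W", "W→SD", "SD→W", "W→RA", "RA→W",
               "W→RD", "RD→W", "W→ST", "ST→W", "S→ST", "ST→S"]
# Prediction times (ms) taken from Table III.
ALL_SOURCES = [120.8, 114.5, 127.1, 115.4, 107.7, 104.4,
               105.9, 116.5, 86.2, 118.4, 88.0, 324.4]
SELECTED = [119.3, 112.4, 115.9, 111.9, 118.3, 113.4,
            102.4, 134.7, 96.4, 124.7, 94.2, 305.8]

# Unweighted mean over the 12 transition types for each condition.
mean_all = sum(ALL_SOURCES) / len(ALL_SOURCES)
mean_selected = sum(SELECTED) / len(SELECTED)

# Per-transition difference, selected minus all sources, rounded to table precision.
diffs = {t: round(s - a, 1) for t, a, s in zip(TRANSITIONS, ALL_SOURCES, SELECTED)}
largest = max(diffs, key=lambda t: abs(diffs[t]))

print(f"mean, all sources:      {mean_all:.1f} ms")
print(f"mean, selected sources: {mean_selected:.1f} ms")
print(f"largest change: {largest} ({diffs[largest]:+.1f} ms)")
```

The two means differ by less than 2 ms, while individual transitions differ by up to 18.6 ms (ST→S), so the per-transition comparison is the more informative view.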

IV. Discussion

In this study, we aimed to investigate the usefulness of each data source for user intent recognition and to determine an informative set of data sources for efficient real-time intent recognition in the control of artificial legs. The offline source-selection analysis showed that when the number of data sources was reduced from 26 to approximately 10, the interface could still accurately recognize the subject's intent (above 95% accuracy in static states) without missing additional mode transitions. These results indicate that not all of the studied data sources carried important information for intent recognition and that the initial design of the fusion-based interface contained input redundancy. Reducing this redundancy is therefore necessary to improve the efficiency of the design for its eventual clinical use in artificial legs. At the hardware level, removing sources that capture less important or redundant information can simplify the instrumented prosthetic leg, I/O circuits, wiring, and embedded system. At the software level, reducing the number of data sources decreases the computational complexity of the intent-recognition algorithm. For example, in this study, intent recognition with the reduced set of data sources was almost 1.9 times faster than with all data sources.
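The reduction from 26 sources to about ten can be pictured as a greedy search that stops once the accuracy criterion is met. The sketch below shows sequential forward selection (SFS) with a 95% stopping threshold; the `evaluate` callback (for example, cross-validated accuracy of the intent classifier) and the toy per-source gains in the usage example are hypothetical, and the study's exact stopping rule may differ.

```python
from typing import Callable, FrozenSet, List, Sequence, Tuple


def sfs_until_threshold(
    sources: Sequence[str],
    evaluate: Callable[[FrozenSet[str]], float],
    threshold: float = 0.95,
) -> Tuple[List[str], float]:
    """Greedily add the source that most improves accuracy until `threshold` is reached."""
    selected: List[str] = []
    remaining = list(sources)
    accuracy = 0.0
    while remaining:
        # Score every candidate subset formed by adding one remaining source.
        accuracy, best = max((evaluate(frozenset(selected + [s])), s) for s in remaining)
        selected.append(best)
        remaining.remove(best)
        if accuracy >= threshold:
            break
    return selected, accuracy


# Hypothetical per-source contributions; a real `evaluate` would train and test a classifier.
TOY_GAINS = {"emg_1": 0.50, "grf_z": 0.30, "thigh_acc": 0.10, "emg_2": 0.08, "knee_angle": 0.01}


def toy_evaluate(subset: FrozenSet[str]) -> float:
    """Hypothetical accuracy model: additive gains capped at 100%."""
    return min(1.0, sum(TOY_GAINS[s] for s in subset))


chosen, acc = sfs_until_threshold(list(TOY_GAINS), toy_evaluate)
print(chosen, round(acc, 2))
```

Because every step re-evaluates the classifier once per remaining source, wrapper searches such as SFS tend to cost much more than a classifier-free filter criterion.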

By ranking the data sources according to their usefulness for user intent recognition, we used three source selection algorithms to select, for each individual subject, a reduced set of data sources that ensured accurate recognition of the user's intended task. Although the types and ranks of the selected data sources varied across selection methods and subjects, some consistency was observed. First, more than half of the selected sources for all subjects were EMG signals, which implies that neuromuscular information measured from the residual limb may be an essential data source for accurate and responsive intent recognition. However, it was difficult to identify a common set of muscles necessary for intent recognition across subjects, which may be due in part to variations in the level of amputation, cause of amputation, surgical approach, and locomotion pattern among the TF amputees. Therefore, the selection of EMG inputs should be customized for each individual in future clinical applications of artificial legs. Second, with the exception of thigh acceleration, no kinematic information was selected by the algorithms, implying that prosthetic knee kinematics are not important for differentiating user intent and predicting the studied task transitions. Third, the vertical ground reaction force was selected as an important source by all search methods for all tested subjects. This suggests that GRF patterns on the prosthesis differ between tasks even before the user switches locomotion modes. Since the GRF was also used as the input for gait phase detection in the current system and is usually available for intrinsic control of lower limb prostheses, it should be included as one of the informative data sources.

At the sensor level, based on the results of this study, we suggest that the sub-optimal sensor configuration for future intent-recognition interfaces for powered artificial legs should include at least four surface EMG sensors (two targeting the quadriceps and two targeting the hamstring muscles), a 6-DOF load cell mounted on the prosthetic pylon, and one accelerometer mounted on the prosthetic socket.

The results of this study showed that all three methods selected similar data sources when at least 95% accuracy in recognizing user intent was required. However, the mRMR method is recommended for future clinical application because (1) it was much more computationally efficient than the other two search methods, and (2) it can be used regardless of the type and structure of the classifier, whereas SFS and SBS directly maximize the classification accuracy of one particular classifier. Furthermore, the data sources selected by mRMR were robust over time: similar performance was observed across experimental days when the mRMR-selected sources were used to classify task modes.
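For readers unfamiliar with mRMR, the following sketch shows greedy minimum-redundancy-maximum-relevance ranking in the mutual-information-difference form described by Peng et al. [26]: at each step it picks the source with the largest relevance to the task label minus its mean mutual information with the sources already chosen. It assumes each data source has already been discretized into one label per analysis window; the discretization, the feature names, and the toy data are hypothetical, and this is not necessarily the exact variant used in the study. No classifier appears anywhere in the computation, which is the property that point (2) relies on.

```python
import math
from collections import Counter
from typing import Dict, Hashable, List, Sequence


def mutual_information(x: Sequence[Hashable], y: Sequence[Hashable]) -> float:
    """Mutual information I(X;Y) in nats between two equal-length discrete sequences."""
    n = len(x)
    count_x, count_y = Counter(x), Counter(y)
    count_xy = Counter(zip(x, y))
    # Sum over observed pairs of p(a,b) * log(p(a,b) / (p(a) p(b))), written with counts.
    return sum((c / n) * math.log(c * n / (count_x[a] * count_y[b]))
               for (a, b), c in count_xy.items())


def mrmr(features: Dict[str, Sequence[Hashable]], labels: Sequence[Hashable], k: int) -> List[str]:
    """Greedy mRMR (difference criterion): relevance minus mean redundancy."""
    relevance = {name: mutual_information(v, labels) for name, v in features.items()}
    selected: List[str] = []
    remaining = sorted(features)  # sorted so that ties are broken alphabetically

    def score(name: str) -> float:
        if not selected:
            return relevance[name]
        redundancy = sum(mutual_information(features[name], features[s]) for s in selected)
        return relevance[name] - redundancy / len(selected)

    while remaining and len(selected) < k:
        best = max(remaining, key=score)
        selected.append(best)
        remaining.remove(best)
    return selected


# Hypothetical toy data: four task classes observed over eight analysis windows.
labels = [0, 0, 1, 1, 2, 2, 3, 3]
features = {
    "emg_1": [c // 2 for c in labels],       # separates classes {0,1} from {2,3}
    "emg_1_copy": [c // 2 for c in labels],  # redundant duplicate of emg_1
    "grf_z": [c % 2 for c in labels],        # separates classes {0,2} from {1,3}
}
print(mrmr(features, labels, k=2))
```

All three toy sources are equally relevant, but after `emg_1` is chosen its duplicate adds no new information, so the complementary `grf_z` is selected second.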

Another important contribution of this study was the real-time PC implementation of our previously designed intent-recognition interface. Unlike our previous design, which was evaluated with offline cross-validation [15], this study included a system-training protocol for quick calibration and a real-time algorithm for online system testing. The real-time interface made a decision every 50 ms and produced high accuracy for intent recognition and task transition prediction, similar to the results of the offline analysis [15]. These results support the soundness of our training protocol and real-time intent-recognition algorithm, which can be used in future embedded implementations of intent-recognition interfaces for artificial legs.
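Making a decision every 50 ms implies that the signals are segmented into overlapping analysis windows that advance by 50 ms, and that each decision must be computed within that interval. The sketch below illustrates this timing logic; the 1000 Hz sampling rate and 150 ms window length in the example are assumptions chosen for illustration, not values stated in this section, while the 50 ms step and the processing times come from the text.

```python
from typing import Iterator, Tuple


def decision_windows(n_samples: int, fs_hz: float, window_ms: float,
                     step_ms: float) -> Iterator[Tuple[int, int]]:
    """Yield (start, end) sample indices of analysis windows that advance by step_ms."""
    win = int(round(window_ms * fs_hz / 1000.0))
    step = int(round(step_ms * fs_hz / 1000.0))
    if win <= 0 or step <= 0:
        raise ValueError("window and step must each span at least one sample")
    # One decision is made at the end of each window.
    for end in range(win, n_samples + 1, step):
        yield end - win, end


def fits_budget(processing_ms: float, step_ms: float) -> bool:
    """True if one decision can be computed before the next window is complete."""
    return processing_ms < step_ms


# Assumed 1000 Hz sampling and 150 ms windows; the 50 ms step is from the text.
print(list(decision_windows(300, 1000.0, 150.0, 50.0)))
# Reported mean time with all sources, and the slowest subject with reduced sources.
print(fits_budget(45.2, 50.0), fits_budget(28.8, 50.0))
```

Both reported processing times fit within the 50 ms step, although the all-source configuration leaves less than 5 ms of margin.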

In summary, this study suggests a protocol for determining the informative data sources and sensor configurations for future development of volitional control of powered artificial legs. Software based on the mRMR algorithm could be developed to allow prosthetists to customize the sub-optimal sensor placement for each TF amputee: the prosthetist would simply choose the optimization criteria and run the program for quick sensor placement guidance. In addition, our real-time mode-recognition algorithm can be directly implemented in the embedded control units of prosthetic knees. Nevertheless, this study has several limitations. First, the system was evaluated only in the laboratory because of the limitations of our experimental setup; it will be important to test it further in more realistic environments. Second, the reported execution speed for locomotion mode recognition does not reflect the true online processing speed of a dedicated CPU implementation, because background programs were not disabled on the PC and MATLAB does not allow multi-threaded programs. The execution speed should be faster when a powerful digital signal processor is used. Third, this study fixed the optimization criterion at 95% classification accuracy; further studies are needed to determine whether this criterion is sufficient for safe prosthesis control. Finally, it is noteworthy that the suggested sub-optimal sensor configuration for volitional control of powered artificial legs was based only on the results from the recruited subjects. Customization of the sensor configuration for each individual user is desirable in future clinical applications.

V. Conclusion

In this study, we analyzed the usefulness of different data sources for user intent recognition and identified an informative set of data sources for volitional control of prosthetic legs. First, our previously designed interface based on neuromuscular-mechanical fusion was implemented in real time. We then ranked the data sources according to their usefulness for user intent recognition and used three source selection algorithms to select a reduced set of data sources that ensured accurate recognition of the user's intended task. The real-time performance of the intent-recognition algorithm with and without source selection was evaluated and compared in four patients with TF amputations on two experimental days. Based on the study results, we suggested a protocol for determining the informative data sources and sensor configurations for future development of volitional control of powered artificial legs.

Fig. 1.

Experimental setup on one transfemoral amputee subject (TF01).

Acknowledgment

The authors thank Michael J. Nunnery and Becky Blaine at Nunnery Orthotic and Prosthetic Technology, LLC, for their suggestion and assistance in this study and Aimee Schultz for editing the manuscript. This work was partially supported by the National Science Foundation (NSF)/CPS #0931820 and #1149385, National Institutes of Health (NIH)/NICHD #RHD064968A, and NIDRR #H133G120165.

Biography

Fan Zhang (S'11) received the B.S. degree in biomedical engineering from Tianjin Medical University, Tianjin, China, in 2006, and the M.S. degree in biomedical engineering from Tianjin University, Tianjin, China, in 2008. He is currently working toward the Ph.D. degree in biomedical engineering at the University of Rhode Island, Kingston, RI.

His research interests include signal processing, pattern recognition, the design of neural-machine interface for the powered artificial limb control, and analysis and modeling of normal and neurologically disordered human motion.

He Huang (S'03–M'06–SM'12) received the B.S. degree from the School of Electronics and Information Engineering, Xi'an Jiao-Tong University, Xi'an, China and the M.S. and Ph.D. degrees from the Harrington Department of Bioengineering, Arizona State University, Tempe.

She was a Research Associate in the Neural Engineering Center for Artificial Limbs at the Rehabilitation Institute of Chicago, Chicago, IL. Currently, she is an Assistant Professor in the Department of Electrical, Computer, and Biomedical Engineering, University of Rhode Island, Kingston, RI. Her primary research interests include neural–machine interface, modeling and analysis of neuromuscular control of movement in normal and neurologically disordered humans, virtual reality in neuromotor rehabilitation, and design and control of therapeutic robots, orthoses, and prostheses.

References

- 1. Ziegler-Graham K, MacKenzie EJ, Ephraim PL, Travison TG, Brookmeyer R. Estimating the prevalence of limb loss in the United States: 2005 to 2050. Arch Phys Med Rehabil. 2008 Mar;89(3):422–9. doi: 10.1016/j.apmr.2007.11.005.

- 2. Cutson TM, Bongiorni DR. Rehabilitation of the older lower limb amputee: a brief review. J Am Geriatr Soc. 1996 Nov;44(11):1388–93. doi: 10.1111/j.1532-5415.1996.tb01415.x.

- 3. Fletcher DD, Andrews KL, Hallett JW, Jr., Butters MA, Rowland CM, Jacobsen SJ. Trends in rehabilitation after amputation for geriatric patients with vascular disease: implications for future health resource allocation. Arch Phys Med Rehabil. 2002 Oct;83(10):1389–93. doi: 10.1053/apmr.2002.34605.

- 4. Sup F, Bohara A, Goldfarb M. Design and Control of a Powered Transfemoral Prosthesis. Int J Rob Res. 2008 Feb 1;27(2):263–273. doi: 10.1177/0278364907084588.

- 5. Au S, Berniker M, Herr H. Powered ankle-foot prosthesis to assist level-ground and stair-descent gaits. Neural Netw. 2008 May;21(4):654–66. doi: 10.1016/j.neunet.2008.03.006.

- 6. Martinez-Villalpando EC, Herr H. Agonist-antagonist active knee prosthesis: a preliminary study in level-ground walking. J Rehabil Res Dev. 2009;46(3):361–73.

- 7. Otto Bock Orthopedic Industry, Inc. Manual for the 3C100 Otto Bock C-LEG. Duderstadt, Germany: 1998.

- 8. OSSUR. The POWER KNEE. http://bionics.ossur.com/Products/POWER-KNEE/SENSE.

- 9. Bedard S, Roy P. Actuated leg prosthesis for above-knee amputees. 2003.

- 10. Varol HA, Sup F, Goldfarb M. Multiclass real-time intent recognition of a powered lower limb prosthesis. IEEE Trans Biomed Eng. 2010 Mar;57(3):542–51. doi: 10.1109/TBME.2009.2034734.

- 11. Hargrove LJ, Simon AM, Lipschutz RD, Finucane SB, Kuiken TA. Real-time myoelectric control of knee and ankle motions for transfemoral amputees. JAMA. 2011 Apr 20;305(15):1542–4. doi: 10.1001/jama.2011.465.

- 12. Ha KH, Varol HA, Goldfarb M. Volitional control of a prosthetic knee using surface electromyography. IEEE Trans Biomed Eng. 2011 Jan;58(1):144–51. doi: 10.1109/TBME.2010.2070840.

- 13. Huang H, Kuiken TA, Lipschutz RD. A strategy for identifying locomotion modes using surface electromyography. IEEE Trans Biomed Eng. 2009 Jan;56(1):65–73. doi: 10.1109/TBME.2008.2003293.

- 14. Flowers WC, Mann RW. Electrohydraulic knee-torque controller for a prosthesis simulator. ASME J. Biomech. Eng. 1977;99(4):3–8. doi: 10.1115/1.3426266.

- 15. Huang H, Zhang F, Hargrove L, Dou Z, Rogers D, Englehart K. Continuous Locomotion Mode Identification for Prosthetic Legs based on Neuromuscular-Mechanical Fusion. IEEE Trans Biomed Eng. 2011 Jul 14; doi: 10.1109/TBME.2011.2161671.

- 16. Hale SA. Analysis of the swing phase dynamics and muscular effort of the above-knee amputee for varying prosthetic shank loads. Prosthet Orthot Int. 1990 Dec;14(3):125–35. doi: 10.3109/03093649009080338.

- 17. Zhang F, Dou Z, Nunnery M, Huang H. Real-time implementation of an intent recognition system for artificial legs. Conf Proc IEEE Eng Med Biol Soc. 2011;2011:2997–3000. doi: 10.1109/IEMBS.2011.6090822.

- 18. Hudgins B, Parker P, Scott RN. A new strategy for multifunction myoelectric control. IEEE Trans Biomed Eng. 1993 Jan;40(1):82–94. doi: 10.1109/10.204774.

- 19. Anguita D, Boni A, Ridella S. A digital architecture for support vector machines: Theory, algorithm, and FPGA implementation. IEEE Trans Neural Netw. 2003 Sep;14(5):993–1009. doi: 10.1109/TNN.2003.816033.

- 20. Frey BJ. Graphical models for machine learning and digital communication. The MIT Press; Cambridge, Mass: 1998.

- 21. Englehart K, Hudgins B. A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans Biomed Eng. 2003 Jul;50(7):848–54. doi: 10.1109/TBME.2003.813539.

- 22. Liu J, Ranka S, Kahveci T. Classification and feature selection algorithms for multi-class CGH data. Bioinformatics. 2008 Jul 1;24(13):i86–95. doi: 10.1093/bioinformatics/btn145.

- 23. Golub TR, Slonim DK, Tamayo P, Huard C, Gaasenbeek M, Mesirov JP, Coller H, Loh ML, Downing JR, Caligiuri MA, Bloomfield CD, Lander ES. Molecular classification of cancer: class discovery and class prediction by gene expression monitoring. Science. 1999 Oct 15;286(5439):531–7. doi: 10.1126/science.286.5439.531.

- 24. Ding C, Peng H. Minimum redundancy feature selection from microarray gene expression data. J Bioinform Comput Biol. 2005 Apr;3(2):185–205. doi: 10.1142/s0219720005001004.

- 25. Model F, Adorjan P, Olek A, Piepenbrock C. Feature selection for DNA methylation based cancer classification. Bioinformatics. 2001;17(Suppl 1):S157–64. doi: 10.1093/bioinformatics/17.suppl_1.s157.

- 26. Peng H, Long F, Ding C. Feature selection based on mutual information: criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans Pattern Anal Mach Intell. 2005 Aug;27(8):1226–38. doi: 10.1109/TPAMI.2005.159.