Abstract

Nearly 300 million people worldwide have moderate to profound hearing loss. Hearing impairment, if not adequately managed, has strong socioeconomic and affective impact on individuals. Cochlear implants have become the most effective vehicle for helping profoundly deaf children and adults to understand spoken language, to be sensitive to environmental sounds, and, to some extent, to listen to music. The auditory information delivered by the cochlear implant remains non-optimal for speech perception because it delivers a spectrally degraded signal and lacks some of the fine temporal acoustic structure. In this article, we discuss research revealing the multimodal nature of speech perception in normally-hearing individuals, with important inter-subject variability in the weighting of auditory or visual information. We also discuss how audio-visual training, via Cued Speech, can improve speech perception in cochlear implantees, particularly in noisy contexts. Cued Speech is a system that makes use of visual information from speechreading combined with hand shapes positioned in different places around the face in order to deliver completely unambiguous information about the syllables and the phonemes of spoken language. We support our view that exposure to Cued Speech before or after the implantation could be important in the aural rehabilitation process of cochlear implantees. We describe five lines of research that are converging to support the view that Cued Speech can enhance speech perception in individuals with cochlear implants.

Keywords: cued speech, cochlear implants, brain plasticity, phonological processing, audiovisual integration

Cochlear implants are devices installed surgically in the skull of individuals with sensorineural deafness or lesions of the inner ear. Cochlear implants are arguably the most effective neural prostheses ever developed for enabling people who are deaf to recognize speech. Under direct electrical stimulation of the auditory nerve, these devices provide the brain's central auditory system with peripheral input. Despite that input being highly unnatural and impoverished relative to the normally functioning cochlea, most adults who had hearing ability prior to their hearing loss can understand speech well enough to converse easily by telephone (Moore & Shannon, 2009). This suggests that their brain can learn to remap the distorted and impoverished auditory input from the cochlear implant once it has already memorized phonological representations during normal language development.

The very fact that humans who are born deaf can learn to interpret an entirely new set of peripheral inputs delivered through cochlear implants is even more extraordinary and demonstrates the functional plasticity of the auditory system. The outcome of cochlear implantation is recognized as being highly variable. A large part of this variation is related to two well-identified factors: age at implantation and mode of communication. For example, O'Donoghue, Nikolopoulos, and Archbold (2000) reported that 55% of the variance in cochlear implant outcomes is accounted for by these two variables together, whereas socioeconomic status of the family and number of inserted electrodes have no significant impact. Data collected on both animals and humans suggest that chronic electrical stimulation may protect the developing auditory system from degeneration and may modify the functional organization of the auditory system (Klinke, Hartmann, Heid, Tillein, & Kral, 2001). Many congenitally deaf children have never had clear, patterned auditory input; thus, they must use degraded input from the cochlear implant to fuel the development of central phonological processing. For individuals receiving cochlear implants as children, there is strong evidence that earlier implantation produces better outcomes than later implantation in language acquisition and related processes such as short-term memory and reading acquisition (Archbold et al., 2008; Dillon, Burkholder, Cleary, & Pisoni, 2004; Svirsky, Teoh, & Neuburger, 2004).

Besides age at implantation, the method of communication to which children are exposed postimplantation appears to be an important factor in determining speech perception outcomes of children with a cochlear implant. Children in oral educational programs have been found to exhibit better speech perception performance 48 months after implantation than children in total communication (i.e., sign-supported speech) programs. This finding supports the view that oral stimulation facilitates the auditory system learning to interpret the new auditory stimuli from the cochlear implant (O'Donoghue et al., 2000).

This article is organized into three sections. In the first section, we discuss the limitations of cochlear implants for speech perception, both at the level of the device itself and at the level of brain plasticity, and we argue that it is possible to overcome those limitations by “awakening the deafened brain” (Moore & Shannon, 2009). Our argument is complementary to that of Moore and Shannon, who argue that auditory training can enhance performance of a cochlear implant. We maintain that training children to use audiovisual information, and even visual information delivered by Cued Speech, can further enhance the benefits they can get from the implant. We support our view that combining cochlear implants and Cued Speech could overcome some of the limitations related to the device itself as well as limitations related to the time course of brain plasticity. In the second section, we argue that speech perception is a multimodal experience in hearing people, in deaf people without a cochlear implant, and in deaf people with a cochlear implant. In the third section, we describe the Cued Speech system, explain how it allows for the development of precise phonological representations, and describe findings from five lines of research that are converging to support our view that combining visual information from Cued Speech with the auditory information from the cochlear implant can “awaken the deafened brain” and enhance speech perception of individuals who are deaf.

Limitations of Cochlear Implants for Speech Perception

In the normal auditory system, a spectral analysis of complex sounds reveals an array of overlapping “auditory filters” with center frequencies spanning from 50 to 15,000 Hz (corresponding to frequency channels on the basilar membrane). The output of each filter is like a bandpass filtered version of the sound, which contains time-varying fluctuations in the envelope (i.e., relatively slow variations in amplitude over time) superimposed on more rapid fluctuations of the carrier, the temporal fine structure (TFS). The normal auditory system can use both envelope and TFS cues to achieve perfect identification of speech in quiet but requires TFS cues to optimally segregate speech from background noise (Hopkins, Moore, & Stone, 2008; Lorenzi, Gilbert, Carn, Garnier, & Moore, 2006; Shannon, Zeng, Kamath, Wygonski, & Ekelid, 1995). Damage to the peripheral auditory system (e.g., cochlear lesions) degrades the ability to use TFS cues but preserves the ability to use envelope cues: that is, listeners with sensorineural hearing impairment typically exhibit a speech perception deficit in noise (Hopkins et al., 2008; Lorenzi et al., 2006).
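The distinction between envelope and TFS can be made concrete with a small numerical sketch. The example below is only an illustration under stated assumptions, not the procedure used in the studies cited above: it treats one band-limited channel in isolation, uses the Hilbert (analytic-signal) decomposition as one common way to separate the two components, and computes it with a naive discrete Fourier transform so that no external library is needed. The function names `analytic_signal` and `envelope_and_tfs`, and the test signal (a 16-cycle carrier modulated by a 2-cycle envelope), are hypothetical choices made for this illustration.

```python
import cmath
import math


def analytic_signal(x):
    """Return the analytic signal of a real sequence using a naive DFT (O(n^2))."""
    n = len(x)
    spectrum = [
        sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    for k in range(n):
        if k == 0 or (n % 2 == 0 and k == n // 2):
            gain = 1.0  # DC and Nyquist bins are kept unchanged
        elif k < n / 2:
            gain = 2.0  # positive-frequency bins are doubled
        else:
            gain = 0.0  # negative-frequency bins are removed
        spectrum[k] *= gain
    return [
        sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def envelope_and_tfs(band_signal):
    """Split one band-limited signal into a slow envelope and a unit-amplitude TFS."""
    z = analytic_signal(band_signal)
    envelope = [abs(v) for v in z]                                # slow amplitude variation
    tfs = [v.real / abs(v) if abs(v) > 0 else 0.0 for v in z]     # cosine of the phase
    return envelope, tfs


# Illustrative band output: a fast carrier with a slow amplitude modulation.
N = 128
CARRIER_CYCLES = 16    # assumed carrier (fine structure) frequency, in cycles per window
MODULATOR_CYCLES = 2   # assumed envelope frequency, in cycles per window
band = [
    (1 + 0.5 * math.cos(2 * math.pi * MODULATOR_CYCLES * t / N))
    * math.cos(2 * math.pi * CARRIER_CYCLES * t / N)
    for t in range(N)
]
envelope, tfs = envelope_and_tfs(band)
```

In this sketch, multiplying `envelope` by `tfs` sample by sample recovers the original band signal. A device that conveys mainly the envelope, as described in the next paragraph for current cochlear implants, would in these terms transmit `envelope` while discarding most of `tfs`.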

Current cochlear implants provide relatively good information about the slow variations in amplitude of the envelope. However, they are poor at transmitting frequency information and information about TFS, which may limit specific aspects of speech perception (Glasberg & Moore, 1986; Grosgeorges, 2005; Lorenzi et al., 2006, but see Shannon, 2009 for a discussion of this point).

Regarding perception of phonetic features, voicing and manner are transmitted better by a cochlear implant than place of articulation (note that place of articulation is also more poorly perceived than voicing and manner by hearing individuals listening in noise; see Miller & Nicely, 1955). This reflects the fact that temporal cues, which play an important role in the transmission of manner and voicing, are better transmitted through the cochlear implant than spectral cues, which supply information about place of articulation (Donaldson & Kreft, 2006). Consequently, in an auditory-only situation, individuals with a cochlear implant often confound minimal word pairs (e.g., buck/duck) that differ only by place of articulation (Giraud, Price, Graham, Truy, & Frackowiak, 2001). Regarding perception in noise, hearing-impaired adults and children with a cochlear implant perform more poorly than normally hearing peers in a background of steady sound; however, they perform even more poorly when listening in a background of fluctuating sound as compared with their normally hearing peers (Fu & Nogaki, 2004; Leybaert, 2008; Lorenzi et al., 2006). Hearing-impaired participants do not show the “masking release effect” exhibited by normally hearing people, who get an important benefit from temporal dips in background sounds (Füllgrabe, Berthommier, & Lorenzi, 2006).

A further “limitation” of cochlear implants relates to the diminished plasticity of the auditory cortex as a function of increased age at implantation. Late implantation (i.e., after the age of 2 years) has been found to result in diminished abilities to develop normal language or cortical evoked responses (Sharma, Gilley, Dorman, & Baldwin, 2007). Poor speech perception of prelingually deafened, but later implanted, children is likely the result of diminished plasticity for auditory pattern recognition (Gilley, Sharma, & Dorman, 2008). After years of deafness, the auditory cortex of deaf persons might be reorganized by cross-modal plasticity, and it can no longer respond to signals from a cochlear implant (Champoux, Lepore, Gagné, & Théoret, 2009; Doucet, Bergeron, Lassonde, Ferron, & Lepore, 2006). Children and adults implanted at later ages are at a relative disadvantage compared with children implanted early because the auditory cortex has already been appropriated by the visual modality. As Shannon (2007, p. 6883) notes, the auditory system of children implanted at early ages “competes for cortical real estate whereas late implantation may be unable to dislodge existing cortical ‘squatters’.”

Although it is generally well accepted among audiologists that benefits of cochlear implants are likely to augment with improved cochlear implant technology and lowering of the age of implantation, it is less well known by audiologists that cueing a language via Cued Speech can also enhance the benefits of cochlear implants by training the brain to make better use of the signal from the cochlear implant. From this perspective, the concept of “awakening the brain” (Moore & Shannon, 2009) seems very useful. These authors emphasize that speech perception of implant users can be improved by more formalized auditory training consisting of repetitive exercises focused on the speech perception skill to be learned, such as hearing through the telephone. Positive results have been found with only a small amount of training and transfer of learning to untrained speech tests (Fu & Galvin, 2008; Moore, Ferguson, Halliday, & Riley, 2008). These studies suggest that, despite the fact that the implant signal may limit the speech perception performance level, most individuals can benefit from training, indicating that they have not extracted all the information available from the signal before the training.

We argue here that the concept of “awakening the deafened brain” should be extended to audiovisual training. More precisely, exposure to Cued Speech, before and/or after implantation can provide children with a cochlear implant with a substantial benefit to process the acoustic signal delivered by the cochlear implant, and achieve more in oral language development. Before entering into this discussion, we will review the literature showing that cochlear implantees benefit from audiovisual information.

Speech Perception Is a Multimodal Experience

Contrary to the view that speech perception is a purely auditory phenomenon, research during the past 50 years has shown that normally hearing people routinely incorporate information from audition with visual information from speechreading. The ability to extract speech information from the visually observed action of jaws, lips, tongue, and teeth, is a natural skill in hearing people. The visual cues from a speaker's mouth play a role in speech perception in everyday life. Adding the visual cues considerably enhances speech perception not only when auditory speech is degraded (Sumby & Pollack, 1954) but also when auditory speech is perfectly clear (Reisberg, McLean, & Goldfield, 1987). The brain tends to combine cross-modal inputs also when they are incongruent, as reflected in the McGurk effect (McGurk & MacDonald, 1976). For instance, if we see a speaker saying ga and hear a different syllable (ba), we may perceive a syllable distinct from either the auditory or the visual ones (like da). In this case, the percept is an integrated product of information from the two sensory modalities.

Behavioral Studies

Behavioral studies have shown that hearing infants are sensitive to audiovisual synchronization (Dodd, 1979) and to the fit of the seen and heard speech characteristics, including the vowel that is uttered, the identity of the speaker, and the type of utterance produced (Kuhl & Meltzoff, 1982). Susceptibility to the McGurk effect has also been demonstrated in infants (Rosenblum, Schmuckler, & Johnson, 1997).

How can the interaction between the two modalities be explained? First, audition and vision are complementary: Visual speech is advantageous for conveying information about place of articulation (e.g., at the lips or inside the mouth) whereas auditory speech is robust for conveying both manner of articulation and voicing. Second, speechreading also enhances sensitivity to acoustic information, thereby diminishing the auditory threshold of speech embedded in noise (Grant & Seitz, 2000). Noise-masked stimuli were better perceived by hearing participants when presented in an audiovisual condition than when presented in an audio-alone condition, because visual information precedes the arrival of the sound information and helps the extraction of the acoustic cues (Schwartz, Berthommier, & Savariaux, 2004). Only models of speech perception that go beyond auditory processing and implicate “supramodal” or “amodal” procedures can accommodate such findings (Green, 1998; Summerfield, 1987). In sum, speechreading is intrinsic to an understanding of speech processing in normally hearing people, and the phonological analysis of speech is multimodal, making use of mouth movements and auditory information together.

A number of behavioral studies have examined the effect of speechreading on speech perception of individuals with a cochlear implant. The combination of visual information from speechreading with information from the cochlear implant would seem to be a “happy marriage” because what the latter is lacking (i.e., transmitting the place of articulation information) is the specialty of the former (i.e., speechreading). Indeed, there are numerous demonstrations showing that children with cochlear implants get better speech identification performance from an audiovisual input than from an auditory-alone input, and the difference is larger for deaf children than for normally hearing children. Deaf individuals with a cochlear implant seem to be “better integrators” of auditory and visual information (Rouger et al., 2007; Strelnikov, Rouger, Barone, & Deguine, 2009). In one of our studies (Huyse, Berthommier, & Leybaert, 2010), we compared the performance of comparably aged hearing and prelingually deaf children and adolescents on a syllable identification (/apa/, /ata/, /asa/, etc.) task in an auditory-alone condition (hearing participants were given 16 sub-band spectrally reduced speech). The two groups were also matched on speechreading of the same syllables. In audiovisual recognition of the same syllables, the performance of the children with a cochlear implant significantly surpassed that of the normally hearing participants, suggesting that the children with a cochlear implant made better use of the combination of audio and visual information.

Neuroimaging Studies Revealing the Auditory Brain's Plasticity

Findings from neuroimaging studies also support the multimodal nature of speech perception. Specifically, studies of the neural mechanisms underlying speechreading in normally hearing people have revealed that “visible speech” has the potential to activate parts of the “auditory” speech-processing system, which were once thought to be modality specific. For example, a functional magnetic resonance imaging (fMRI) study by Calvert et al. (1997) demonstrated that silent speechreading of digits from 1 to 10 activates the temporal lobes, including some activation in primary auditory areas (BA 41/42) on the lateral surface of the planum temporale, area MT/V5, and temporal lobe language areas outside the primary auditory cortex. Paulesu et al. (2003) found that lexical access through speechreading was associated with bilateral activation of the auditory association cortex around Wernicke's area, of the left dorsal premotor cortex, the opercular–premotor division of the left inferior frontal gyrus (Broca's area), and the supplementary motor area. All of these areas are implicated in phonological processing, speech and mouth motor planning, and speech execution. Interestingly, activation in portions of the superior temporal cortex appears to be modulated by speechreading skill in hearing participants (Hall, Fussell, & Summerfield, 2005).

fMRI studies of natural audiovisual speech (MacSweeney et al., 2002; Calvert et al., 1997) also show consistent and extensive activation of the superior temporal gyrus in hearing people. Sekiyama, Kanno, Miura, and Sugita (2003) explored how brain activations in audiovisual speech perception are modulated by the conditions of intelligibility of the auditory signal. In the low intelligibility condition, in which the auditory component of the speech was harder to hear, the visual influence was much stronger, and increased activations in the posterior part of the left superior temporal sulcus (STS) were observed. Because the increased activations of the STS were confined to the left language hemisphere, they could be interpreted as reflecting a cross-modal binding occurring as a linguistic event.

Deaf people generally outperform hearing people in getting meaning from speechreading (Bernstein, Demorest, & Tucker, 2000; Mohammed, Campbell, MacSweeney, Barry, & Coleman, 2006; Capek et al., 2008). Earlier reports suggested that superior temporal activation for speechreading was less reliably observed in deaf than in hearing people (MacSweeney et al., 2001). More recent work (Capek et al., 2008) showed opposite results: greater activation was found for deaf than for hearing participants in left middle and posterior portions of superior temporal cortex, including regions within the lateral sulcus and the superior and middle temporal gyri. Of interest is the fact that the activation pattern survived when speechreading skill, which was better in deaf than hearing participants, was taken as a covariate. These findings may have practical significance for the rehabilitation of children with a cochlear implant, in addition to their general theoretical significance. According to Capek et al. (2008),

Current practice in relation to speech training for prelingually deaf children preparing for cochlear implantation emphasizes acoustic processing. In auditory–verbal training, the speaking model is required to hide her or his lips with the aim of training the child's acoustic skills […] Thus a neurological hypothesis is being advanced which suggests that the deaf child should not watch spoken (or signed) language since this may adversely affect the sensitivity of auditory brain regions to acoustic activation following cochlear implantation. Such advice may not be warranted if speechreading activates auditory regions in both deaf and hearing individuals. (p. 1240)

We will extend this point of view by arguing that the current evidence suggests that visual information provided by Cued Speech could interact positively with information provided by the cochlear implant to enhance speech perception.

Cued Speech and Its Role in Developing English and Other Traditionally Spoken Languages

The Cued Speech System

Cued Speech, a system of manual gestures conceived by Orin Cornett, accompanies speech production in real time (Cornett, 1967). Cued Speech has been adapted to 63 languages and dialects (http://www.cuedspeech.org/sub/cued/language.asp). The system is designed to provide deaf children a complete, unambiguous, phonological message based exclusively on visual information. Cornett's system of delivering accurate information regarding phonological contrasts via a purely visual input was designed to produce equivalent abstract (phonemic) speech representations in the perceiver's mind. Cornett hypothesized that if phonemic representations could be elaborated on the basis of a visual well-specified input, then linguistic development, as well as all of the cognitive abilities that depend on such linguistic abilities, would be equivalent in deaf and hearing children.

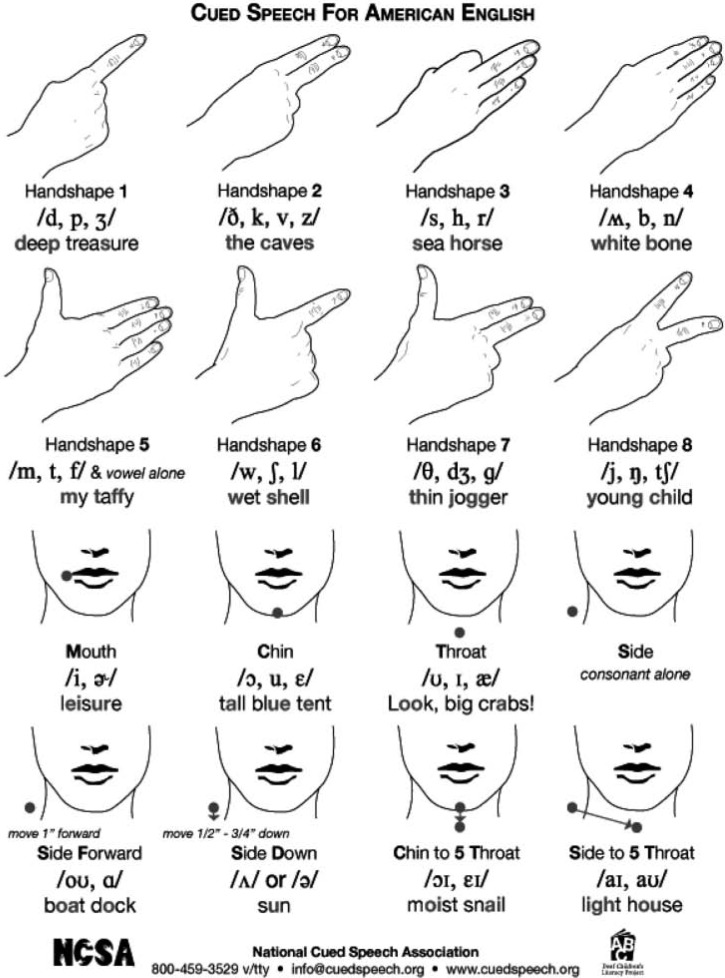

A cue in Cued Speech is composed of two visual articulatory components: a visual manual component (i.e., a handshape produced at a specific hand placement near the mouth) and a visual nonmanual articulatory component (i.e., a mouthshape¹). When combined with a mouthshape, the manual handshape provides fully specified, unambiguous information about consonants, whereas hand placements do the same for vowel information. In Cornett's system, a single handshape codes for two to three phonemes that are easily distinguished by their mouthshapes. For example, the consonants /p/, /d/, and /j/, all coded with Handshape 1, are fully specified, or distinguished, by the appropriate mouthshape. Conversely, phonemes that have similar mouthshapes are coded with different handshapes; for example, the bilabials /p/, /b/, and /m/ have identical mouthshapes and cannot be distinguished via speechreading alone; however, they are fully specified by their handshapes (i.e., Handshapes 1, 4, and 5, respectively). Consequently, a bilabial lip movement accompanied by Handshape 1 represents the phoneme /p/ without ambiguity, in the absence of either speech or hearing. The same rule governs the coding of vocalic phonemes by using hand locations instead of handshapes.

Information provided by the manual component of Cued Speech (i.e., combined handshape/hand placement) and nonmanual component (i.e., mouthshape) is thus complementary. Each time a speaker pronounces a consonant–vowel (CV) syllable, a specific handshape at a specific location is produced simultaneously (see Figure 1). Syllabic structures other than CV (e.g., VC, CCV, CVC) are produced with additional specific manual cues. It is important to emphasize that handshapes and placements are not interpretable as linguistically relevant features by themselves. They must be integrated with the visual information provided by mouthshapes in speechreading. It is the integrated signal of speechreading and manual cue that points to a single, unambiguous, phonological percept that deaf children could not have achieved from either source alone. Deaf cuers (i.e., users of Cued Speech) are afforded a reliable visual language in which the gestures (i.e., the combination of speechreading and manual cues) are entirely specified, both at the syllabic and at the phonemic levels. Each syllable (and each phoneme) corresponds to one (and only one) combination of labial and manual information, and vice versa, a characteristic that makes Cued Speech, or cueing, entirely functional for speech perception (see Metzger & Fleetwood, 2010, and Shull & Crain, 2010).
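The complementarity of handshape and mouthshape described above can be sketched as a simple lookup. The example below is a toy illustration only: it is restricted to the five consonants and three handshapes named in the text, and it is not a rendering of the full cue chart. The mouthshape labels (`"bilabial"`, `"mouthshape_d"`, `"mouthshape_j"`), the table `CUE_TABLE`, and the function `candidates` are hypothetical names introduced for this sketch.

```python
# Toy cue table limited to the consonants named in the text.
# Keys are (handshape, mouthshape); the mouthshape labels are hypothetical
# placeholders chosen for this sketch, not terms from the Cued Speech system.
CUE_TABLE = {
    ("handshape_1", "bilabial"): "p",
    ("handshape_4", "bilabial"): "b",
    ("handshape_5", "bilabial"): "m",
    ("handshape_1", "mouthshape_d"): "d",
    ("handshape_1", "mouthshape_j"): "j",
}


def candidates(handshape=None, mouthshape=None):
    """Return the set of phonemes compatible with the visual information given.

    Passing None for a component means that component was not observed.
    """
    return {
        phoneme
        for (h, m), phoneme in CUE_TABLE.items()
        if (handshape is None or h == handshape)
        and (mouthshape is None or m == mouthshape)
    }


# Speechreading alone: a bilabial mouthshape leaves three candidates.
speechreading_only = candidates(mouthshape="bilabial")

# Manual cue alone: Handshape 1 also leaves three candidates.
handshape_only = candidates(handshape="handshape_1")

# Integrated signal: the two sources together single out one phoneme.
integrated = candidates(handshape="handshape_1", mouthshape="bilabial")
```

Within this restricted table, each source alone leaves three candidate phonemes, whereas their combination leaves exactly one, which mirrors the claim that neither component is interpretable alone.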

Figure 1.

American English cue chart with international phonetic alphabet (IPA) symbols

It has been convincingly demonstrated that early and intensive exposure to cued languages (e.g., cued American English, cued French, cued Spanish) via Cued Speech enhances the perception of English and other traditionally spoken languages by deaf persons. Specifically, more than 90% of cued information is correctly perceived in the absence of sound via cueing, whereas only 30% is identifiable through speechreading alone (Alegria, Charlier, & Mattys, 1999; Duchnowski et al., 2000; Nicholls & Ling, 1982; Uchanski et al., 1994). Moreover, an important series of studies (see Leybaert & Alegria, 2003; Leybaert, Colin, & LaSasso, 2010 for reviews) has shown that early and intensive exposure to Cued Speech enables prelingually, profoundly deaf children to develop precise phonological representations so that they can compete easily with hearing children in rhyming, reading and spelling acquisition, and phonological short-term memory. The main source of improvement in these cognitive skills is the advantage provided by Cued Speech over other available communication methods (i.e., oral–aural methods, fingerspelling, and manually coded English) for speech perception, which leads to the natural acquisition of English and other traditionally spoken languages in preparation for formal reading instruction (see LaSasso & Crain, 2010, and LaSasso & Metzger, 1998).

Findings From Five Lines of Research Converging to Support That Cued Speech Can “Awaken the Deafened Brain” of Cochlear Implantees

Most children born profoundly deaf are fitted with a cochlear implant during the early language learning years (Loundon, Busquet, & Garabedian, 2009; Spencer & Marschark, 2003). Therefore, the need for using Cued Speech might appear to be less obvious. Improvement in children's hearing via cochlear implants affects strategies of perception of oral language (Geers, 2006). That is, with auditory training, many children with cochlear implants may seem to understand speech sufficiently without having to look at the speaker. However, both theoretical considerations and reports from parents and adult deaf cuers (Crain & LaSasso, 2010) suggest that cueing a traditionally spoken language, such as English, via Cued Speech, can benefit the child's linguistic, social, and emotional development.

In this section, we consider how deaf children with a cochlear implant who are exposed to a cued language (e.g., cued English, cued French, or cued Spanish) in a supportive, socially familiar setting are in an optimal learning situation, and how this learning “awakens their brain” (to borrow Moore and Shannon's [2009] concept) to process linguistic (phonological, lexical, morphosyntactical) information. We describe findings from five lines of research that are converging to support our view that combining visual information from Cued Speech with the auditory information from the cochlear implant can enhance speech perception of individuals who are deaf.

1. Evidence that Cued Speech promotes a visual language learning experience that can facilitate the learning of the phonological contrasts of the native language. Young children learn their mother tongue rapidly and effortlessly, initiating babbling at 6 months of age, understanding about 150 words and successfully producing 50 words at 18 months of age, and uttering full sentences by the age of 2 to 3 years. Although the precise reasons for this rapid language acquisition are still unknown, it is generally acknowledged that infants are born with an innate set of perceptual, cognitive, and social abilities that are necessary for language acquisition. They then rapidly learn from exposure to ambient language, in ways that are unique to humans, combining pattern detection, statistical learning, and special social skills (Kuhl, 2004; Tomasello, 2006).

Three aspects of language acquisition are of particular relevance in the context of the present discussion. First, natural language learning requires social interaction (Kuhl, Tsao, & Liu, 2003; Tomasello, 2003), which implies contingency, and reciprocity in adult–infant language (Liu, Kuhl, & Tsao, 2003). Second, our innate ability to acquire language(s) changes over time. That is, the absence of early access to the phonological, lexical, and grammatical patterns inherent to natural (signed or spoken) languages produces life-long changes in the ability to learn and use language. For example, children born deaf to hearing parents, whose first experience with sign language occurs after the age of 6 years, show long-term effects of attenuation in their ability to process sign language (Mayberry & Lock, 2003). Third, speech perception, from the very beginning, is not a purely auditory phenomenon, but is rather a multimodal process (Bristow et al., 2009).

Early exposure to a native traditionally spoken language, social interactions with the speakers, and audiovisual processing have all been shown to have an impact on infants sorting out the 40 or so distinct elements (i.e., consonants and vowels), called phonemes, which are language specific. Phonemes, traditionally defined as “speech sounds,”² are the minimal units that contrast the meaning of two words. For example, the English phonemes /b/, /d/, /t/, and /k/ distinguish pairs of words (e.g., buck/duck; told/cold). These phonemes are groups of nonidentical “sounds” that are functionally equivalent in the language. The task for infants during their first year of life is to make progress in distinguishing the 40 or so phonemic categories of their own language (Eimas, Siqueland, Jusczyk, & Vigorito, 1971; Werker & Tees, 1984).

For children with a cochlear implant, the ability to learn to perceptually group different “speech sounds” into a phonemic category, normalizing across talker, across rate, and across phonetic context, is weaker and more heterogeneous than in normally hearing children. Using an auditory protocol based on consonant–vowel (CV) syllables assessing four consonant features (i.e., manner, place, voicing, and nasality) in /a, u, i/ contexts, Medina and Serniclaes (2006, 2009) found that 6- to 11-year-old children with a minimum of 2 years' experience with a cochlear implant achieved a lower average score (75%) than normally hearing peers (98%). The perception of the place of articulation feature is very difficult for children with a cochlear implant, and does not progress as a function of age (Medina & Serniclaes, 2006). In contrast, voicing seems well categorized in children with a cochlear implant, who perform similarly to normally hearing children (at least in Medina and Serniclaes' data, but see confusions between voiced and voiceless phonemes in spontaneous language by a deaf child reported by Marthouret [in press], see below). As a result, individuals with a cochlear implant often confound minimal word pairs (e.g., buck/duck; told/cold) that differ by place of articulation in an auditory-only situation.

Cueing English or other traditionally spoken languages, via manual Cued Speech, prepares the brain to process visually the language-dependent phonological contrasts that normally hearing children learn to discriminate by ear. The voicing and nasality contrasts (e.g., /b/, /p/, /m/), which cannot be perceived by speechreading alone, can be accurately perceived when cued via Cued Speech, because these three phonemes are coded by different manual cues (see Figure 1). The perception of place of articulation contrasts (between /p/, /t/, /k/ or between /b/, /d/, /g/), which can be seen on the mouth, can be reinforced by adding the manual cues, because the phonemes in each set are coded by different manual cues (see Figure 1).

Children with early, clear, consistent exposure to Cued Speech prior to receiving a cochlear implant have been shown to have constituted, or formed, clear representations of the phonological contrasts (e.g., the contrast between /p/, /b/, and /m/, the contrast between /z/ and /s/, or the contrast between /t/ and /k/) primarily from visual input. It would appear that when cochlear implant users extract the relevant pieces of auditory information from the continuous auditory phoneme stream, these early Cued Speech users map the new auditory representations to the previous, largely visual, representations of phonemes acquired via Cued Speech. In other words, the phonological system previously formed primarily from visual input (i.e., speechreading and manual cues) could act as a supramodal system, ready to incorporate information provided by another (i.e., auditory) modality.

Children who have stored (visual, cued) phonological representations prior to cochlear implantation will rapidly be able to take advantage of the stimulation delivered by the cochlear implant. Such cases have been documented by Descourtieux, Groh, Rusterholtz, Simoulin, and Busquet (1999). Vincent was a deaf child who received a cochlear implant at 2 years, 9 months. Prior to implantation, Vincent had accurate phonemic discrimination and a rich receptive cued vocabulary (i.e., delivered via Cued Speech) and used cues to express himself. Clinical observations showed that Vincent functioned quite well with the cochlear implant. Specifically, after 6 months of implant use, he understood, via audition alone, the words of his already extensive cued vocabulary. After 2 years' use of his cochlear implant, he was able to follow a conversation without speechreading and to speak intelligibly (Descourtieux et al., 1999, p. 206). Such cases led Descourtieux et al. to conclude that children who comprehend vocabulary communicated visually prior to implantation are able, after 6 months of implant use, to understand these words via the auditory channel alone. The phonological contrasts “acquired by visual channel appear to be transferable to the auditory one” (Descourtieux et al., 1999, p. 206).

2. Evidence that Cued Speech has a training effect on speechreading. Because Cued Speech was invented as a complement to speechreading, the manual cues were envisioned by Cornett as removing the ambiguities conveyed by mouthshapes. Indeed, many syllables, which are fully distinguishable via audition, are indistinguishable via vision alone. For example, although spoken words beer, peer, and mere, can be fully distinguished via audition alone, they are indistinguishable to speechreaders who cannot hear the phonemes. However, when the word beer is accompanied by the manual cue for /b, n, □/ which distinguishes it from all other phonemes, the phoneme /b/ is fully specified by the combination of speechreading (i.e., lip closure) and the manual cue.
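The disambiguation described above can be pictured as a set intersection: the lips narrow the candidates to one group of phonemes, the manual cue narrows them to another, and only the phonemes in both groups remain. The following is a minimal sketch under stated assumptions, not a model of actual perception. The names `LIP_CLOSURE`, `CUE_FOR_B`, and `remaining_candidates` are hypothetical, and the handshape set lists only the two members that are legible in the text.

```python
# Illustrative sketch only; phoneme groupings are taken from the examples in the text.

# Phonemes that look alike on the lips (beer / peer / mere).
LIP_CLOSURE = frozenset({"b", "p", "m"})

# Phonemes sharing the manual cue used for /b/. The third member of this
# group is not legible in the source, so it is deliberately left out here.
CUE_FOR_B = frozenset({"b", "n"})


def remaining_candidates(lip_group, cue_group):
    """Return the phonemes compatible with both the lip shape and the manual cue."""
    return lip_group & cue_group


if __name__ == "__main__":
    print(sorted(remaining_candidates(LIP_CLOSURE, CUE_FOR_B)))
```

With these two groups, the only phoneme compatible with both sources of information is /b/, which is the sense in which the cue plus the lip closure fully specifies the initial consonant of beer.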

It is speculated that the Cued Speech receiver, or cuer, starts from “reading” the manual cues, which are very salient in Cued Speech. Imagine a receiver looking at a talker who is pronouncing the word /but/, accompanying the initial phoneme with the manual cue for /b, n/□. This manual cue reduces the possibilities for the initial phoneme to only two, and by reading the lips, the receiver can get the correct word. This argument is even more salient for nonlabial consonants, which are difficult to discriminate. The use of Handshape 3 (for /s, h, r/, see Figure 1) reduces the language receiver's uncertainty to three phonemes, whereas a lip movement corresponding to /r/ may be compatible with six different phonemes (i.e., /g/, /k/, /t/, /s/, /r/, /l/). The uncertainty between the three possibilities compatible with Handshape 3 could easily be solved by speechreading.

Attina and colleagues (Attina, Beautemps, Cathiard, & Odisio, 2004; Attina, Cathiard, & Beautemps, 2006; Attina, Cathiard, & Beautemps, 2007; Cathiard, Attina, Abry, & Beautemps, 2004; Cathiard, Attina, & Troille, in press) measured the time course of the production of manual cues and lip movements. They found that hand movements preceded mouth movements, conjointly with the well-known anticipation of the lips over the sound (Schwartz et al., 2004).

Children with a cochlear implant are no longer totally dependent on speechreading (as were profoundly deaf children with hearing aids), and they tend not to pay as much attention to mouth movements as profoundly deaf children without a cochlear implant (Marthouret, in press). Theoretically, when the manual cues of Cued Speech are delivered together with the sound, children could be pushed to rely maximally on audiovisual information about speech rather than on auditory information alone. More precisely, phonemes that differ only by place of articulation (like /p, t, k/ or /b, d, g/) are produced with different manual cues in Cued Speech (see Figure 1). For instance, if a voiceless plosive syllable is produced with Handshape 1, and given that Handshape 1 is produced well before the sound, the syllable could easily be perceived by the cochlear implantee as beginning with a /p/ (and not a /t/ or a /k/).

In a recent study, we obtained empirical data supporting the view that cueing has a training effect on speechreading abilities. Young deaf and hearing adults participated in an fMRI experiment and also in a speechreading test outside the scanner. On each trial, a sentence was presented by a videotaped female speaker who articulated the words silently. Subjects had to choose, from among four pictures, the one representing the sentence just speechread. The test consisted of 30 sentences. Three groups of young adults were recruited: young deaf adults who had been exposed to the French version of Cued Speech (Langue française Parlée Complétée) before the age of 3 years (N = 12; mean age = 25 years), young deaf adults who had been exposed to sign language before the age of 3 years (N = 12; mean age = 31.6 years), and young hearing adults without any experience with Cued Speech or sign language (N = 12; mean age = 25.2 years). The mean percentages of correct responses were 80.8% for the deaf cueing group, 61.9% for the deaf signing group, and 51.9% for the hearing group. The deaf cueing group differed significantly from the deaf signing group and from the hearing group, which did not differ from one another. The advantage exhibited by the cueing group could be related to better exploitation of phonemic information read on the mouth, combined with better knowledge of French vocabulary (Aparicio, Peigneux, Charlier, Neyrat, & Leybaert, 2010).

In another study (Colin et al., 2008), children with cochlear implants were presented with syllables (/bi/ or /gi/) in speechreading (without context), which they had to repeat. This task tests the exploitation of phonemic information read on the mouth, independently of vocabulary knowledge. Children exposed early and intensively to the French version of Cued Speech reached significantly higher identification scores in this visual phonemic discrimination task than children exposed later and less intensively to Cued Speech. This was true both for children fitted with a cochlear implant before the age of 3 years and for children fitted after the age of 3 years.

3. Evidence that cueing a language via Cued Speech facilitates natural language development in children with a cochlear implant. It is incontrovertible that the auditory signal restored by the cochlear implant is not as precise as the auditory signal provided by natural audition. Some children with a cochlear implant have been observed to be overly confident in their auditory perception abilities; that is, they are not aware that what they are receiving auditorily from their implant is not precise enough to allow them to accurately perceive all that is spoken. Some parents and siblings of deaf children with a cochlear implant have also been observed to be overly confident in their ability to communicate clearly and completely with the child via the auditory channel. It would appear that some children with cochlear implants use mental compensation to understand the meaning of words they do not perceive accurately. Marthouret (in press) reported examples of errors in spontaneous language production by a French-speaking deaf child with a cochlear implant who tends to rely mainly on audition. These production errors reveal confusions between (a) voiced and voiceless phonemes (e.g., Karine/Catherine, poney/bonnet, gateau/cadeau) and (b) words with similar mouth movements (e.g., gateau/carton, manger/bonjour, menton/manteau/moto). On a picture-naming task, the child said roué for route, pouchette for fourchette, tioir for tiroir, frégo for frigo, piti for petit, and pope for poupée. All targets were very familiar words, which the child had experienced many times. In our interpretation, the words produced incorrectly reflect an inaccurate mental representation due to poor perception of these words.

If the auditory perceptions of children with a cochlear implant are not accurate enough, children will develop their language on the basis of ambiguities, which will entail errors, despite the help provided by the facial expressions and body language of the speakers, and by context. Unlike profoundly deaf children, who must rely on speechreading to receive the oral message, children with cochlear implants do not need to rely on the mouth as extensively or permanently. Parents, speech therapists, audiologists, and other related service providers should be aware of the limitations of auditory perception through the cochlear implant, and they should be encouraged to learn about the supplementary benefits provided by the visual information available through Cued Speech, especially at specific moments of linguistic communication, such as during the acquisition of novel words or novel syntactic structures, when the child is tired, during long discourses with rapid and complex information, and in situations with poor speech reception, such as in noise or when the receiver is too far away from the speaker.

It is our view that the addition of the manual cues of Cued Speech to speechreading can improve the reception of lexical and sublexical information (i.e., syllables, phonemes) and can lead to a better development of morphosyntactic structures by children who are users of a cochlear implant (Hage & Leybaert, 2006). It should be noted that, in a contrasting view (Burkholder & Pisoni, 2006, p. 352), the authors predicted that the processing of the manual cues of Cued Speech would “create competition for limited resources in auditory-visual modalities used for both hearing and seeing important speech cues during auditory memory span tasks. That is, when a child with a cochlear implant using Cued Speech or a manually coded English (MCE) sign system, is confronted with manual signals (e.g., signs or cues), his or her attention will be drawn to the hand(s) of the speaker in addition to the lips on the speaker's face.” The data summarized below on word and pseudoword identification in deaf cuers do not support the concern expressed by Burkholder and Pisoni (2006).

Word and pseudoword identification. We designed an experiment to test how well deaf cochlear implant users could repeat aloud words and phonically regular pseudowords (e.g., word enfant; pseudoword eufou). It was deemed important to investigate both words and pseudowords because words that are perceived inaccurately might be recognized and correctly repeated on the basis of lexical knowledge. Words and pseudowords were presented in five different modalities: Visual (V), Auditory (A), V + Cues (V + C), Audiovisual (AV), and AV + Cues (AV + C; see Leybaert et al., 2007; Leybaert, Colin, & Hage, 2010 for a detailed presentation of individual data). The participants (N = 19; mean age = 8.8 years, from 4 years 10 months to 12 years) were fitted with a cochlear implant at a mean age of 3 years 10 months (range = 1 year, 11 months to 7.0 years) and had a mean experience with the cochlear implant of 4 years and 7 months (from 2.0 years to 8 years, 1 month). All had been exposed to Cued Speech in their family and in speech therapy. The proportions of correct repetition for words and pseudowords in the V modality (words 49%; pseudowords 19%) and the V + C modality (words 89%; pseudowords 52%) suggest that reception was enhanced when cues were present in addition to speechreading. For words, the mean correct repetition in the A modality was 66% (and >75% of correct responses for half of the participants). The addition of speechreading (AV) and of manual cues (AV + C) enhanced the mean repetition scores (AV, 87%; AV + C, 99%). In the AV + C condition, performance was equal to or higher than 95% for each participant. For pseudowords, performance was systematically lower than for words in the A (53%), AV (67%), and AV + C (86%) modalities. Adding speechreading to A, and adding manual cues to AV, each increased correct repetition: in the AV + C condition, the mean correct performance was 86.4%, 4 participants achieved 100% correct responses, and all participants showed improvement.
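The size of the cue benefit in these group means can be made explicit with simple subtraction. The sketch below only restates the mean percentages reported in the preceding paragraph (pseudoword AV + C is entered as the rounded 86%); the dictionary layout and the function name `cue_gain` are illustrative assumptions, and the calculation says nothing about statistical significance or individual participants.

```python
# Mean percent-correct repetition scores as reported in the text above.
SCORES = {
    "words": {"V": 49, "V+C": 89, "A": 66, "AV": 87, "AV+C": 99},
    "pseudowords": {"V": 19, "V+C": 52, "A": 53, "AV": 67, "AV+C": 86},
}


def cue_gain(item_scores, base):
    """Percentage-point gain from adding manual cues to a base modality ("V" or "AV")."""
    return item_scores[base + "+C"] - item_scores[base]


if __name__ == "__main__":
    for item_type, item_scores in SCORES.items():
        for base in ("V", "AV"):
            print(f"{item_type}: {base} -> {base}+C = +{cue_gain(item_scores, base)} points")
```

On these means, adding cues to the audiovisual signal raises word repetition by 12 points and pseudoword repetition by 19 points; adding cues to speechreading alone raises them by 40 and 33 points, respectively.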

These data suggest that although AV information might be enough to recognize familiar words, it might not contain enough information to repeat pseudowords, which could be considered as potentially novel words. The processing of manual cues from Cued Speech improves word repetition, but it plays an even greater role in the reception of pseudowords. These results suggest that some deaf children could be at risk for delayed vocabulary development if they are limited to relying solely on auditory and lipread information in order to acquire new words. A more precise sensory input, such as that provided by Cued Speech, could enhance children's acquisition of lexical representations for new words. In conclusion, contrary to the concern expressed by Burkholder and Pisoni (2006), we have found that the processing of the manual cues combined with audiovisual information enhances the receptive language abilities of children with a cochlear implant.

The development of morphosyntax. Audition through the cochlear implant may not be sufficient to ensure optimum development of morphosyntactical abilities (Le Normand, 2003, 2004; Spencer, 2004; Svirsky, Stallings, Lento, Ying, & Leonard, 2002; Szagun, 2001, 2004). In a longitudinal study with German children, Szagun (2001) compared the increase of mean length of utterance (MLU) over a period of 18 months in two groups: deaf children with a cochlear implant and normally hearing peers initially matched for MLU = 1. She found that the cochlear implantees were delayed in the development of MLU, compared with the normally hearing children. Szagun (2004) analyzed a subgroup of children with a cochlear implant whose lexicon was rich. She found that whereas their acquisition of noun plurals and verb inflectional morphology was good, they acquired substantially fewer forms of the definite and indefinite articles, particularly case-inflected forms, and of the copular and modal verbs. Szagun (2004) also found that children with a cochlear implant made morphosyntactic errors related to gender and omitted articles. She argued that, due to their hearing impairment, deaf children frequently miss unstressed articles in incoming speech, which would lead to a reduced frequency of processed article input and to difficulties in constructing a case and gender system.

Theoretically, the Cued Speech system could affect the development of morphosyntactical abilities because Cued Speech increases the salience of the morphemes. Consider the case of a young deaf child, aged 24 months, fitted with a cochlear implant at 18 months, who converses with parents in a noisy context (e.g., a family dinner). “Are you sick?” asks the mother, speaking to her child. The auditory input received by this child in this noisy situation might be too incomplete to allow the child to perceive the words and, thus, the meaning of the question. In contrast, if the mother were cueing, the manual cues would likely attract the child's visual attention to the communicative intention of the mother. If the child knows the word “sick” and is able to decode the manual cues combined with the mouthshapes corresponding to sick, and the interrogative expression on the mother's face, he or she might be able to associate the auditory stimulus /sIk/ with a meaning previously accessed via Cued Speech. In our experience with children from both French- and English-speaking homes, Cued Speech helps parents and their deaf child to build up episodes of joint attention and linguistic interaction in which the first lexical and morphosyntactic representations are developed to serve as a foundation for socio-cognitive development.

Data supporting the view that Cued Speech enhances speech perception have been reported by Le Normand (2003), who assessed 50 French-speaking children at 6, 12, 18, 24, and 36 months after cochlear implantation. The children had received a cochlear implant between 21 and 78 months of age. In addition to the socioeconomic status of the families and gender, the mode of communication used with the children was found to be predictive of language production. Specifically, children who used Cued Speech produced a higher number of content words and function words than did those educated with the other modes of communication, including oral, French Sign Language, and signed French (i.e., signs borrowed from French Sign Language but signed with French grammatical word order). In a recent follow-up of the same cohort, Le Normand, Medina, Diaz, and Sanchez (in press) compared children whose initial language acquisition was with Cued French and those whose initial language acquisition was with French Sign Language. Controlling for age at implantation and socio-economic level of the families, the Cued Speech group showed longer MLUs and better development of determiners, prepositions and personal pronouns than the non–Cued Speech group.

To test the hypothesis that the use of Cued Speech can help overcome the “perceptual deficit” in children with a cochlear implant because Cued Speech transmits complete information about these unstressed elements of language, we compared the linguistic development of five children of approximately the same age and same school level, who had been fitted early with a cochlear implant and had equivalent duration of stimulation, but who differed in the mode of communication to which they were exposed. Two of the children (C and L) had been orally educated by their parents; one (D) had been exposed to Cued Speech but relies more on audition and does not consistently pay attention to speechreading and manual cues; the other two (R and O), who had been exposed intensively to Cued Speech by their parents, consistently rely on audition, speechreading, and manual cues.

The children R and O, who had been exposed intensively to Cued Speech, achieved scores that were within the normal distribution of scores for their age level on a standardized test of French morphosyntax. In tasks measuring spontaneous language production, they used closed-class words (e.g., prepositions, articles) at age-appropriate levels. Child D, who relies more on her auditory capacities and does not consistently benefit from the cues, had difficulties with complex sentences on the standardized morphosyntax test, but she used closed-class words in her spontaneous talking. Children C and L, who were educated orally, displayed greater delays on a standardized test of morphosyntax as well as in their spontaneous use of closed-class words (see Leybaert, Colin, & Hage, 2010). These five children were also given a gender generation test (inspired from Hage, 1994), consisting of 24 trials. In each trial, two pictures of the same imaginary animal were presented to the child while the experimenter said “Here are two bicrons.” One of the two characters was then hidden, and the child was asked “what remains?” The child was expected to use the gender-marked determiner “le (the) bicron” or “un (one) bicron.” The appeal of this test is that some French phonological endings, such as “on,” are statistically associated with the masculine gender, and others (like “ette”) with the feminine gender. This test thus examines whether children have established cooccurrences between the suffixes and the grammatical gender, which, in French, is expressed by the determiners (“le”, “un” versus “la”, “une”). A control group of 32 hearing children, mean age 5 years 6 months, was also given the Bicron test, and they made between one and six gender determiner errors (saying “le” when “la” was expected, and vice versa). Among the five deaf children with a cochlear implant, the distribution of errors was as follows: R, 4 errors; C, 13 errors; D, 3 errors; L, 10 errors; and O, 2 errors.
To sum up, the children R, D, and O were within the distribution of errors of hearing children, whereas C and L made a number of gender errors, which cannot be found in the distribution of normally hearing children. These findings suggest that exposure to Cued Speech has pushed the limits previously observed in the morphophonological processing of deaf children from non–Cued Speech backgrounds. Children in our study who benefitted from the combination of Cued Speech and a cochlear implant appear to have undergone normal development of morphosyntax. In contrast, the children in our study who rely solely on auditory information do not seem to have extracted the regularities between phonology and morphology; nor have they developed a good command of the syntactic structures or of the production of closed-class words. Thus, exposure to Cued Speech seems to help deaf children with a cochlear implant achieve a better development of delicate aspects of morphosyntax (see also Moreno-Torres & Torres-Monreal, 2008, 2010 for a case study of the development of morphosyntax in a deaf child with a cochlear implant and exposed to the Spanish version of Cued Speech).
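The comparison with the hearing control group amounts to checking each child's error count against the range of one to six errors observed in the controls. The following is a minimal sketch of that check using only the counts reported above; the names `HEARING_ERROR_RANGE`, `BICRON_ERRORS`, and `within_hearing_range` are hypothetical, and a range check is an assumption about how "within the distribution" is read here, not the authors' statistical procedure.

```python
# Error range observed in the 32 hearing controls (reported as one to six errors).
HEARING_ERROR_RANGE = (1, 6)

# Gender determiner errors on the 24-trial Bicron test, per child, as reported.
BICRON_ERRORS = {"R": 4, "C": 13, "D": 3, "L": 10, "O": 2}


def within_hearing_range(errors, error_range=HEARING_ERROR_RANGE):
    """True if the error count falls inside the range seen in hearing controls."""
    low, high = error_range
    return low <= errors <= high


if __name__ == "__main__":
    inside = sorted(c for c, e in BICRON_ERRORS.items() if within_hearing_range(e))
    outside = sorted(c for c, e in BICRON_ERRORS.items() if not within_hearing_range(e))
    print("within hearing range:", inside)
    print("outside hearing range:", outside)
```

Read this way, D, O, and R fall inside the control range, and C and L fall outside it.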

4. Evidence that late-implantees who are previous users of Cued Speech benefit more from cochlear implants than deaf persons previously exposed to sign language. Although late implantation is becoming increasingly rare, it provides one of the most impressive arguments for using Cued Speech in association with cochlear implants. That is, children who are former users of Cued Speech appear to break the rule that late implantation leads to poorer outcomes. This phenomenon is documented in a recent study by Kos, Deriaz, Guyot, and Pelizzone (2008). These authors examined the language outcomes of children implanted between the ages of 8 and 22 years. They found that virtually all subjects, regardless of their previous mode of communication, had better hearing thresholds after implantation and, therefore, rapid access to the world of sounds. However, 4.5 years after implantation, the improvement in vowel and consonant identification without visual and cognitive cues was greater in children who were former users of Cued Speech than in those who were sign language users. The same results were obtained for the Categories of Auditory Performance scale (Archbold, Lutman, & Nikolopoulos, 1998). It should be noted that Cued Speech users and sign language users had similar hearing thresholds before and after cochlear implantation. It thus seems that the former Cued Speech users get better access to oral language through the cochlear implant than the former sign language users. In other words, Cued Speech users make better use of the information delivered by the implant than non–Cued Speech users.

5. Evidence that Cued Speech enhances speech intelligibility in cochlear implant users in noisy conditions. It is well established that speech perception in noise is difficult for cochlear implantees because of the cochlear implant's limitation in conveying temporal fine structure (TFS; Deltenre, Markessis, Renglet, Mansbach, & Colin, 2007; Fu & Nogaki, 2004; Lorenzi et al., 2006). TFS information may be important when listening in background noise, especially when the background is temporally modulated, as is often the case in “real life,” for example, when more than one person is speaking. Normally hearing subjects show better speech intelligibility when listening in a fluctuating background than when listening in a steady background (Fullgrabe et al., 2006), an effect called “masking release.” Hearing impaired subjects show a much smaller masking release effect, suggesting that they are poorer than normal-hearing subjects at extracting phonemic information from a fluctuating masker (Lorenzi et al., 2006; Hopkins et al., 2008).
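Masking release, as defined above, is the intelligibility advantage obtained in a fluctuating background relative to a steady one. A minimal sketch of that difference score follows. The function name `masking_release` and the example scores are hypothetical and are not data from any study cited in this article; they serve only to show how the quantity is read.

```python
def masking_release(score_fluctuating, score_steady):
    """Percentage-point advantage of a fluctuating masker over a steady masker.

    A clearly positive value means the listener benefits from the dips in
    the fluctuating noise; a value near zero means no masking release.
    """
    return score_fluctuating - score_steady


if __name__ == "__main__":
    # Hypothetical percent-correct scores, invented for illustration only.
    example_with_release = masking_release(score_fluctuating=80.0, score_steady=60.0)
    example_without_release = masking_release(score_fluctuating=55.0, score_steady=55.0)
    print(example_with_release, example_without_release)
```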

Although it is evident that an audiovisual context favors speech perception in noise, for hearing (Sumby & Pollack, 1964) as well as for deaf persons (Erber, 1975), we wondered whether an audiovisual context might be more favorable to reveal the masking release effect in cochlear implantees. We speculated that information from cues might help deaf persons separate the phonemic syllable information from the background fluctuating noise, and allow deaf implantees to extract phonemic information from the fluctuating masker to a greater extent than in the auditory-only context. To test this hypothesis, we compared the intelligibility of six voiceless syllables (/apa/, /ata/, /afa/, /asa/, /acha/, /aka/) in four conditions: auditory with steady background noise (AO ST), auditory with fluctuating noise (AO MR), audiovisual with steady background noise (AV ST), and audiovisual with fluctuating noise (AV MR). We tested 29 deaf subjects who had been fitted with a cochlear implant for at least 2 years and had been exposed at various ages between 1 and 6 years to the French version of Cued Speech, and we compared them with a sample of hearing sixth graders, matched with the deaf subjects for mean age as well as for identification performance in speechreading (Leybaert, 2008). The results are very clear: (1) adding the visual information to the auditory information masked by steady background noise improves intelligibility both for hearing participants and for deaf persons with cochlear implants; (2) hearing participants show a masking release effect, both in the auditory modality and in the audiovisual modality; and (3) participants with a cochlear implant did not show any masking release effect, either in the auditory modality or in the audiovisual modality.
These preliminary data reveal that adding speechreading cues to the auditory signal is not sufficient to give deaf children with a cochlear implant access to sufficiently clear phonemic information in fluctuating noise. In the audiovisual condition with fluctuating background noise, cochlear implantees still confused /ata/ with /aka/ and /asa/ with /aka/, indicating a partially inaccurate phonemic perception of syllables. Listening in temporally modulated background noise, as is often the case when listening in “real life,” is thus difficult for cochlear implantees, even with audiovisual information. There is room for improvement of speech intelligibility in noise. Cued Speech may provide the input for this improvement, particularly for the perception of place of articulation in noisy conditions. Further testing is needed to confirm this hypothesis.

Concluding Remarks

Cochlear implants have limitations both in the peripheral signal and in the time course of plasticity. Despite these limitations, the fact that most children can develop language with the cochlear implant suggests that the implant signal does not impose absolute limitations on the development of speech and language. A considerable degree of brain plasticity exists. The remarkable capacity of the brain to use the sensory input at its disposal, including multisensory input, can be enhanced further to expand the benefits of cochlear implants for individuals who are deaf. In this process, Cued Speech still plays an important role.

Data collected over the past 30 years have demonstrated that the use of Cued Speech can be a powerful tool for language development and subsequent formal reading achievement by profoundly deaf children without a cochlear implant. Cued Speech enhances speech perception through the visual modality, the acquisition of vocabulary and morphosyntax, and metalinguistic development, as well as the acquisition of reading and spelling (see Leybaert & Alegria, 2003; Leybaert, Colin, & LaSasso, 2010).

In profoundly deaf early cuers, the processing of cued information activates some of the same brain areas as those activated by the processing of spoken language. In an fMRI experiment on adults who were early cuers (Aparicio, Charlier, Peigneux, & Leybaert, in press), preliminary findings indicate that the identification of words presented in Cued Speech activates the bilateral superior temporal gyrus and the left inferior frontal gyrus (these areas are also activated in normally hearing participants by words delivered auditorily or audiovisually; Hickok & Poeppel, 2000). If confirmed by data with a larger number of cueing participants, these findings would indicate that the superior temporal gyrus constitutes an area for processing oral language, regardless of the modality (visual vs. auditory) in which the language is delivered. Further research is needed to establish whether this area also constitutes a site of convergence of auditory, visual (speechreading), and visuomotor (manual cues) information for deaf cuers who are fitted with a cochlear implant.

Exposure to Cued Speech prior to cochlear implantation also seems to prevent the loss of cerebral plasticity due to late implantation. The fact that children fitted with a cochlear implant, even after 8 years of age, are able to develop oral language abilities (both receptive and expressive) post-implantation has potential implications regarding the age limit and content of the “critical period” for language development. The data for the late-implantees in the Kos et al. (2008) study suggest that there is no absolute age limit regarding auditory stimulation: even if auditory stimulation occurs “late,” good results can be obtained provided that the auditory cortices have already been “prepared” to process the information about the phonological contrasts of oral languages. What seems important is not whether the deaf children in the Kos et al. study could hear during their first years of life, but that they could get complete, effortless, unambiguous access from an early age to a set of clear, complete phonological contrasts used in their particular language, regardless of the modality (visual vs. auditory) through which these contrasts are delivered. In our view, exposure to a visual language (for example, cued English or another cued language) instigates a process for which infants' brains are neurally prepared, during which the brain's networks commit themselves to the basic detection and recognition of phonological patterns in the native language. It is important that this brain activity related to the processing of visual or auditory communicative signals occurs early in life. Experiencing a cued language early in development will have long-lasting effects on children's ability to learn that language auditorily later, when they receive the cochlear implant.

Early and intensive use of Cued Speech prior to implantation is likely to become increasingly rare because most children are now fitted with a cochlear implant around the age of 1 year. Audiologists and other related service providers for deaf pediatric populations need to remember that during the first months or years of cochlear implant use, the speech perception of an implanted child remains imperfect. Oral comprehension does not develop exclusively through the auditory channel but necessitates audiovisual integration. A strong case can be made for the addition of Cued Speech to the signal delivered by the cochlear implant in order to help deaf children overcome the present limitations of cochlear implants. It is clear that perception of place of articulation and speech perception in noisy environments can be enhanced by adding the manual cues to the audiovisual message and that, as a consequence, children with a cochlear implant can benefit from Cued Speech experience for the development of precise phonological representations through audition (Descourtieux et al., 1999). These phonological representations can then serve as a platform to launch the subsequent development of morphosyntax (Le Normand, 2003; Le Normand et al., in press; Moreno-Torres & Torres-Monreal, 2008, 2010), phonological awareness, phonological short-term memory (Willems & Leybaert, 2009), and reading and spelling (see Bouton, Bertoncini, Leuwers, Serniclaes, & Colé, in press; Leybaert, Bravard, Sudre, & Cochard, 2009, for a description of the effect of the combination of Cued Speech and cochlear implants on reading acquisition).

We acknowledge that the use of Cued Speech by children with a cochlear implant is not a panacea for the natural language development of deaf children. Some children may not consistently look at a speaker's mouth and hands, and they may tend to rely on auditory information alone (Marthouret, in press). Furthermore, some parents may lose their motivation to cue, feel discouraged, or simply abandon coding with the hands. Accordingly, it would be important for audiologists, speech therapists, educators, and related service providers to regularly assess whether cueing remains necessary after implantation, and under what circumstances (e.g., during the acquisition of novel syntactic structures or new words, during periods when the child is tired, or for speech perception in noisy situations). There is currently a lack of knowledge about the precise conditions under which this assessment should be carried out. It is likely that after some period of auditory rehabilitation, some children fitted with a cochlear implant would be capable of learning new words through audition and reading alone. Continued attention, nonetheless, should be devoted to the development of delicate, but vital, aspects of language, such as morphosyntax. As data from studies conducted by Szagun (2004) and Moreno-Torres and Torres-Monreal (2008, 2010) reveal, this domain of language acquisition is particularly important and particularly sensitive to the lack of precision in the input provided by the cochlear implant. The capacity to develop morphosyntax easily in response to a well-specified input also tends to diminish with age, although the limits of a precise “sensitive period” cannot be fixed at the present time (Szagun, 2001).

Declaration of Conflicting Interests

The author(s) declared no conflicts of interest with respect to the authorship and/or publication of this article.

Funding

Research related to audio-visual integration, speechreading of CS-users, and the absence of masking release reported in this article was supported by a grant from the Fonds de la Recherche Fondamentale Collective (FRFC, 2.4621.07).

Notes

Although we refer to handshape, hand placement, and mouthshape, we acknowledge that none of these is static in fluid communication.

So called although the example of Cued Speech indicates that phonemes exist in the absence of either speech or hearing.

MCE sign systems employ signs from American Sign Language with English word order and invented signs for morphological elements such as prefixes and suffixes. See LaSasso and Metzger (1998) and LaSasso and Crain (2010) for a comparison of the theoretical supportability of Cued Speech over MCE sign systems for clearly and completely conveying English in the absence of speech or hearing.

References

- Alegria J., Charlier B. L., Mattys S. (1999). Phonological processing of lipread and Cued Speech information in the processing of phonological information in French-educated deaf children. European Journal of Cognitive Psychology, 11, 451–472

- Aparicio, et al. (in press). Etude neuro-anatomo-fonctionnelle de la perception de la parole en LPC (Neuro-anatomo-functional study of speech perception in Cued Speech). In Leybaert J. (Ed.), La Langue française parlée Complétée: Fondements et Avenir (Cued Speech: Foundations and future). Marseille, France: Editions SOLAL

- Aparicio M., Peigneux P., Charlier B., Neyrat C., Leybaert J. (2010). Lecture Labiale, Surdité et Langage parlé Complété (Speechreading, deafness, and Cued Speech). Actes des Journées d'Etude sur la Parole de l'Université de Mons (Proceedings of the Study Days on speech perception). University of Mons.

- Archbold S., Harris M., O'Donoghue G., Nikolopoulos T., White A., Hazel L. R. (2008). Reading abilities after cochlear implantation: The effect of age at implantation on outcomes at 5 and 7 years after implantation. International Journal of Pediatric Otorhinolaryngology, 72, 1471–1478

- Archbold S., Lutman M. E., Nikolopoulos T. (1998). Categories of auditory performance: Inter-user reliability. British Journal of Audiology, 32, 7–12

- Attina V., Beautemps D., Cathiard M.-A., Odisio M. (2004). A pilot study of temporal organization in Cued Speech production of French syllables: Rules for a Cued Speech synthesizer. Speech Communication, 44, 197–214

- Attina V., Cathiard M.-A., Beautemps D. (2006). Temporal measures of hand and speech coordination during French Cued Speech production. Lecture Notes in Artificial Intelligence, 3881, 13–24

- Attina V., Cathiard M.-A., Beautemps D. (2007). French Cued Speech: From production to perception and vice versa. AMSE-Advances in Modeling, 67, 188–198

- Bernstein L., Demorest M., Tucker P. (2000). Speech perception without hearing. Perception & Psychophysics, 62, 233–252

- Bouton S., Bertoncini J., Leuwers C., Serniclaes W., Colé P. (in press). Apprentissage de la lecture et développement des habiletés associées à la réussite en lecture chez les enfants munis d'un implant cochléaire (Reading acquisition and development of abilities related to reading achievement in children fitted with a cochlear implant). In Leybaert J. (Ed.), La Langue française parlée Complétée: Fondements.

- Bristow D., Dehaene-Lambertz G., Mattout J., Soares C., Gliga T., Baillet S., Mangin J.-F. (2009). Hearing faces: How the infant brain matches the face it sees with the speech it hears. Journal of Cognitive Neuroscience, 21, 905–921

- Burkholder R., Pisoni D. (2006). Working memory capacity, verbal rehearsal speed, and scanning in deaf children with cochlear implants. In Spencer P. E., Marschark M. (Eds.), Advances in the spoken language development of deaf and hard-of-hearing children (pp. 328–359). New York, NY: Oxford University Press

- Calvert G. A., Bullmore E. T., Brammer M. J., Campbell R., Williams S. C. R., McGuire P. K., David A. S. (1997). Activation of auditory cortex during silent lipreading. Science, 276, 593–596

- Capek C., MacSweeney M., Woll B., Waters D., McGuire P., David A. S., Campbell R. (2008). Cortical circuits for silent speechreading in deaf and hearing people. Neuropsychologia, 46, 1233–1241

- Cathiard M.-A., Attina V., Abry C., Beautemps D. (2004). La Langue française parlée Complétée (LPC): Sa co-production avec la parole et l'organisation temporelle de sa perception (Cued Speech: Co-production with speech and temporal organization).

- Cathiard M.-A., Attina V., Troille E. (in press). La Langue française Parlée Complétée: une phonologie multimodale incorporée. In Leybaert J. (Ed.), La langue Parlée Complétée: Fondements et Avenir. Marseille, France: Editions SOLAL

- Champoux F., Lepore F., Gagné J.-P., Théoret H. (2009). Visual stimuli can impair auditory processing in cochlear implant users. Neuropsychologia, 47, 17–22

- Colin C., Leybaert J., Charlier B., Mansbach A.-L., Ligny C., Mancilla V., Deltenre P. (2008). Apport de la modalité visuelle dans la perception de la parole (Benefit of visual information in speech perception). Les Cahiers de l'Audition, 21(2), 42–49

- Cornett R. O. (1967). Cued Speech. American Annals of the Deaf, 112, 3–13

- Crain K., LaSasso C. (2010). Experiences and perceptions of cueing deaf adults in the United States. In LaSasso C., Crain K., Leybaert J. (Eds.), Cued Speech and cued language for deaf and hard of hearing children (pp. 183–216). San Diego, CA: Plural

- Deltenre P., Markessis E., Renglet T., Mansbach A.-L., Colin C. (2007). La remédiation prothétique des surdités cochléaires. Apport des nouvelles technologies (Prosthetic remediation of cochlear deafness). In Krahé J. L. (Ed.), Surdité et langage: prothèse, LPC, et implant cochléaire (Deafness and language: Prosthesis, Cued Speech, and cochlear implants) (pp. 97–127). Paris: Editions Presses Universitaires de Vincennes

- Descourtieux C., Groh V., Rusterholtz A., Simoulin I., Busquet D. (1999). Cued Speech and the stimulation of communication: An advantage in cochlear implantation. International Journal of Pediatric Otorhinolaryngology, 47, 205–207

- Dillon C. M., Burkholder R. A., Cleary M., Pisoni D. B. (2004). Nonword repetition by children with cochlear implants: Accuracy ratings from normal-hearing listeners. Journal of Speech, Language, and Hearing Research, 47, 1103–1116

- Dodd B. (1979). Lipreading in infants: Attention to speech presented in- and out-of-synchrony. Cognitive Psychology, 11, 478–484

- Donaldson G., Kreft H. (2006). Effects of vowel context on the recognition of initial and medial consonants by cochlear implant users. Ear and Hearing, 27, 658–677

- Doucet M. E., Bergeron F., Lassonde M., Ferron P., Lepore F. (2006). Cross-modal reorganization and speech perception in cochlear implant users. Brain, 129, 3376–3383

- Duchnowski P., Lum D., Krause J., Sexton M., Bratakos M., Braida L. D. (2000). Development of speechreading supplements based on automatic speech recognition. IEEE Transactions on Biomedical Engineering, 47, 487–496

- Eimas P., Siqueland E., Jusczyk P., Vigorito J. (1971). Speech perception in infants. Science, 171, 303–306

- Erber N. P. (1975). Auditory-visual perception of speech. Journal of Speech and Hearing Disorders, 40, 481–492

- Fu Q.-J., Galvin J. J., III (2008). Maximizing cochlear implant patients' performance with advanced speech. Hearing Research, 242, 198–208

- Fu Q., Nogaki G. (2004). Noise susceptibility of cochlear implant users: The role of spectral resolution and smearing. Journal of the Association for Research in Otorhinolaryngology, 6, 19–27

- Fullgrabe C., Berthommier F., Lorenzi C. (2006). Masking release for consonant features in temporally fluctuating background noise. Hearing Research, 211, 74–84

- Geers A. (2006). Spoken language in children with cochlear implants. In Spencer P. E., Marschark M. (Eds.), Advances in the spoken language development of deaf and hard-of-hearing children (pp. 244–270). New York, NY: Oxford University Press

- Gilley P. M., Sharma A., Dorman M. F. (2008). Cortical reorganization in children with cochlear implants. Brain Research, 1239, 56–65

- Giraud A., Price C., Graham J., Truy E., Frackowiak R. (2001). Cross-modal plasticity underpins language recovery after cochlear implantation. Neuron, 30, 657–663

- Glasberg B., Moore B. (1986). Auditory filter shapes in subjects with unilateral and bilateral cochlear impairments. Journal of the Acoustical Society of America, 79, 1020–1033

- Grant K. W., Seitz P. F. (2000). The use of visible speech cues for improving auditory detection of spoken sentences. Journal of the Acoustical Society of America, 108, 1197–1208

- Green K. P. (1998). The use of auditory and visual information during phonetic processing: Implications for theories of speech perception. In Campbell R., Dodd B., Burnham D. (Eds.), Hearing by eye II: Advances in the psychology of speechreading and auditory-visual speech (pp. 85–108). Hove, England: Psychology Press

- Grosgeorges A. (2005). L'analyse des scènes auditives et reconnaissance de la parole (Auditory scene analysis and speech recognition). Unpublished doctoral dissertation, Institut de la communication parlée (ICP/INPG), Université Stendhal, Centre National de la Recherche Scientifique.

- Hage C. (1994). Développement de certains aspects de la morphosyntaxe chez l'enfant atteint de surdité profonde: Rôle du Langage Parlé Complété (Development of some aspects of morpho-syntax in children with profound deafness: Role of Cued Speech). Unpublished doctoral dissertation. Université libre de Bruxelles.

- Hage C., Leybaert J. (2006). The effect of Cued Speech on the development of spoken language. In Spencer P. E., Marschark M. (Eds.), Advances in the spoken language development of deaf and hard-of-hearing children (pp. 193–211). New York, NY: Oxford University Press

- Hall D. A., Fussell C., Summerfield A. Q. (2005). Reading fluent speech from talking faces: Typical brain network and individual differences. Journal of Cognitive Neuroscience, 17, 939–953

- Hickok G., Poeppel D. (2000). Towards a functional neuroanatomy of speech perception. Trends in Cognitive Sciences, 4, 131–138

- Hopkins K., Moore B., Stone M. (2008). Effects of moderate cochlear hearing loss on the ability to benefit from temporal fine structure information in speech. Journal of the Acoustical Society of America, 123, 1140–1153

- Huyse A., Berthommier F., Leybaert J. (2010). Degradation of labial information modifies audio-visual integration of speech in cochlear implanted children. Manuscript in preparation.