Abstract

The acceptance of cochlear implantation as an effective and safe treatment for deafness has increased steadily over the past quarter century. The earliest devices were the first implanted prostheses found to be successful in compensating partially for lost sensory function by direct electrical stimulation of nerves. Initially, the main intention was to provide limited auditory sensations to people with profound or total sensorineural hearing impairment in both ears. Although the first cochlear implants aimed to provide patients with little more than awareness of environmental sounds and some cues to assist visual speech-reading, the technology has advanced rapidly. Currently, most people with modern cochlear implant systems can understand speech using the device alone, at least in favorable listening conditions. In recent years, an increasing research effort has been directed towards implant users’ perception of nonspeech sounds, especially music. This paper reviews that research, discusses the published experimental results in terms of both psychophysical observations and device function, and concludes with some practical suggestions about how perception of music might be enhanced for implant recipients in the future. 
The most significant findings of past research are: (1) On average, implant users perceive rhythm about as well as listeners with normal hearing; (2) Even with technically sophisticated multiple-channel sound processors, recognition of melodies, especially without rhythmic or verbal cues, is poor, with performance at little better than chance levels for many implant users; (3) Perception of timbre, which is usually evaluated by experimental procedures that require subjects to identify musical instrument sounds, is generally unsatisfactory; (4) Implant users tend to rate the quality of musical sounds as less pleasant than listeners with normal hearing; (5) Auditory training programs that have been devised specifically to provide implant users with structured musical listening experience may improve the subjective acceptability of music that is heard through a prosthesis; (6) Pitch perception might be improved by designing innovative sound processors that use both temporal and spatial patterns of electric stimulation more effectively and precisely to overcome the inherent limitations of signal coding in existing implant systems; (7) For the growing population of implant recipients who have usable acoustic hearing, at least for low-frequency sounds, perception of music is likely to be much better with combined acoustic and electric stimulation than is typical for deaf people who rely solely on the hearing provided by their prostheses.

1. Introduction

Over two decades ago, when cochlear implants began to emerge as a practical treatment for deafness, expectations of their performance were generally modest. Suitable candidates for implantation were restricted to adults with profound or total hearing loss in both ears, who obtained minimal or no benefit from the use of the best available acoustic hearing aids but who had previously had sufficient hearing to learn and understand spoken language. Early devices were considered to be essentially aids to speech-reading (lip-reading), rather than unique hearing systems that could enable most users to understand speech in the absence of visual cues.

With the continuing development of implant technology and growing knowledge in relevant fields such as psychophysics, signal processing, and functional neural excitation, expectations of outcomes have increased steadily. Currently, people with some usable acoustic hearing are receiving cochlear implants and obtaining substantial benefit from them, and an increasing number of people have received an implant in both ears.

The population of implant users now numbers over 60,000 worldwide, and a large proportion of them can understand most speech and recognize many other types of sound, at least in favorable listening conditions. These advances have led some implant recipients, especially those for whom performing or listening to music was particularly important before their hearing deteriorated, to attempt to use their implants to regain the enjoyment of music.

Unfortunately, existing cochlear implant systems often convey too little auditory information about complex musical sounds for their users to enjoy that type of listening experience fully. A number of researchers have been investigating this problem, and several technical improvements to implant systems that may deliver better performance for listening to music are now under development.

One of the earliest published reports of an experimental cochlear implant is that of Djourno and Eyries (1957). In an operation on a man left totally deaf as a consequence of bilateral cholesteatomas, a single-electrode stimulator was placed on the auditory nerve. Stimulation with low-rate electric pulse trains elicited sensations the patient described as “the song of a cicada or cricket, or the turning of a roulette wheel.” Stimulation at higher rates (above 100 Hz) caused a “sharp tonal sound.” Although the patient could not understand more than a very few words with the device, he did appreciate the ability to hear various environmental noises. Whether he tried listening to music is not reported, but if so, it seems unlikely that he could have derived much enjoyment from it.

A multiple-electrode device was implanted by Simmons (1966) in the right auditory nerve of a man who was totally deaf in that ear and profoundly deaf in the left ear. An extensive series of psychophysical experiments was carried out. Loudness was found to be related to stimulus intensity and pitch to stimulation pulse rate, with increases in rate over the range of 100 to 400 Hz producing consistent increases in the perceived pitch. Importantly, sensations of different pitch (or timbre) were associated with the separate activation of each of the six electrodes. When one electrode was activated with pulse trains of varying rate, the patient seemed to perceive melodic pitch changes. For example, he was able to identify a few well-known tunes, such as “Jingle Bells” and “Mary Had a Little Lamb,” but not always reliably.

Over a decade later, Bilger (1977) reported the results of a large number of psychophysical tests conducted with 12 users of single-electrode cochlear implants. He found that most subjects could discriminate changes in frequency of electric stimulation at low frequencies (125 and 250 Hz), but not at higher frequencies (1000 and 2000 Hz). Subjective pitch was consistently associated with the frequency of stimulation only for the lower range of frequencies. However, perception of the duration and temporal pattern of stimulation was adequate for subjects to discriminate changes of rhythm in short sequences of stimuli. The loudness perceived was related to the stimulus intensity. Identification of melodies or perception of musical instrument sounds was not assessed.

Moore and Rosen (1979) briefly reported melody recognition by one totally deaf patient implanted with a single electrode placed on the surface of the cochlea. Although the test conditions were not tightly controlled, the subject appeared to be able to identify each of 10 well-known tunes by hearing alone with little difficulty. This finding suggested that musical pitch information could be conveyed by changes in the temporal pattern of stimuli, without corresponding changes in other parameters such as the cochlear place at which maximal excitation occurs. In normal hearing, temporal and spatial characteristics of excitation in the cochlea are closely interrelated, and both depend on the frequency of acoustic stimuli.

The ability to recognize tunes was investigated in a preliminary experiment with a multiple-electrode implant described by Eddington et al. (1978). With one subject, stimuli were presented on a single electrode with the frequency controlled to correspond to the musical notes of five commonly known tunes. Rhythm cues were eliminated. The subject spontaneously identified only three of the tunes. This seemingly poor performance was ascribed to a presumably inconsistent relationship between the stimulus frequency and the pitch perceived.

Few, if any, of these relatively early reports describe musical perception with cochlear implant systems configured in the way that they may have been used in the everyday lives of their recipients. Instead, those experiments were generally conducted under artificial conditions in which controlled stimuli were delivered directly to the auditory nerves of the subjects via the implanted electrodes. Sound processors, which were initially developed specifically to enable implant users to understand speech, were not used in the experiments. More recent publications have addressed the question of how well implant recipients perceive music when listening with the sound processors they normally use. However, to gain an adequate understanding of these studies, it is helpful first to review the design and function of modern implant systems.

2. Cochlear Implant Technology

The basic functional principle underlying cochlear implants is that useful hearing sensations can be elicited in a sensorineurally deaf ear by stimulating auditory neurons directly with controlled electric currents. Many different designs of cochlear implants have been described, including both commercial and experimental systems, but all designs have general features in common. All implant systems pick up sound signals with a microphone that is usually packaged in an enclosure worn on the user's pinna, as with conventional behind-the-ear hearing aids.

An electric signal corresponding to the variation of pressure associated with air-borne sound waves is conveyed from the microphone to an electronic signal processor. The processor is designed to convert selected features of acoustic signals into a pattern of electric nerve stimuli that will evoke appropriate hearing sensations in the implant user. Considerable flexibility is available to designers of sound processing circuits and algorithms. This has led to the development and evaluation of many distinct processing schemes; for a detailed review, see Loizou (1998). The schemes most commonly used in current practice are described briefly later.

2.1. Implanted Devices

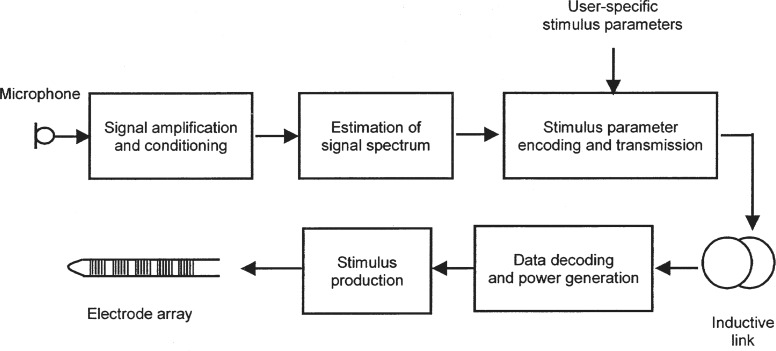

In most cases, the output of the sound processor consists of a digital code specifying the parameters of the electric stimuli to be delivered to the implanted electrode array. The code is usually conveyed to the implanted device via an inductive link (see Figure 1). The link, which is composed of two coils of wire separated by the skin overlying the implant, also serves to provide electric power to the implanted electronics.

Figure 1.

Schematic diagram showing the main functional blocks of a cochlear implant hearing prosthesis. In a typical multiple-channel system, sound signals are picked up by a microphone and passed to an amplifier, where they may undergo preprocessing such as filtering or compression. Next, the short-term spectrum of the signals is estimated. Suitable parameters of the electric stimulation to represent the spectrum are then calculated. These depend on a unique set of values that is determined for the individual implant user during device fitting and programming. The output of the sound processor comprises a digital code that is transmitted across the skin to the implant via a pair of coupled coils. An implanted receiver decodes the data transmitted by the external processor to obtain the parameters of the required pattern of stimulation. These parameters control a stimulator circuit that delivers electric currents to the array of intracochlear electrodes.

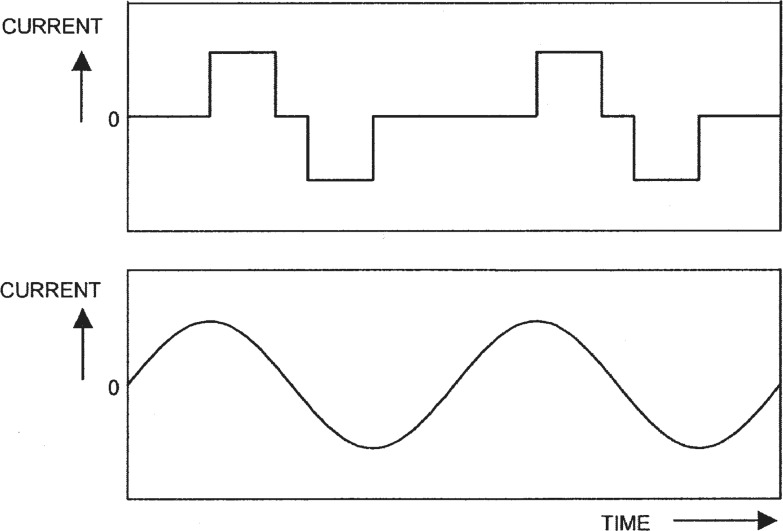

An integrated circuit in the implant demodulates the signal obtained from the subcutaneous coil and decodes the information transmitted by the sound processor. This specifies the amplitude and temporal parameters of the stimulus to be generated and the electrodes that are to conduct the stimulus current. The output of most existing stimulators is a precisely controlled current that is delivered to the active electrodes as a series of symmetric, biphasic pulses (see Figure 2).

Figure 2.

Examples of two general forms of electric stimulus that may be generated by cochlear implants. The ordinate shows current, whereas the abscissa shows time. At the top is a sequence of two biphasic pulses. Each pulse comprises two short intervals during which a constant current is delivered to the active electrodes. The current has equal magnitude in the two phases, but opposite directions. The phases may be separated by a brief time during which no current flows. The lower panel shows a so-called “analog” stimulus, in which the electrode current varies continuously in time.

Some implants are capable of generating continuously varying currents as an alternative to discrete pulses. Such “analog” stimuli can represent some details of the sound signal's waveform, although the processing techniques they require differ from those needed to represent a similar input signal with trains of rectangular pulses.

In some early implant designs, the primary objective was to deliver stimuli to the entire surviving population of auditory neurons by using a single electrode placed near the neural elements. The electric circuit was completed through a second electrode that was often located at a remote site. A single active electrode was attractive, mainly because of the relative simplicity of both the surgery and the stimulator electronics; however, it has since been established that being able to stimulate different sectors of the neural population with some degree of independence has advantages.

In the normal cochlea an orderly relationship exists between the frequency of an audible sound and the location of maximal excitation of auditory neurons (Greenwood, 1990). Relatively high frequencies produce the most activity in neurons that innervate hair cells near the base of the cochlea, whereas lower frequencies activate neurons that innervate hair cells located at more apical positions. This tonotopic organization applies not only to hair cells but also to the cell bodies and dendrites of auditory neurons. Therefore, even in cases of profound sensorineural deafness in which few or no hair cells survive, cochlear implants may still take advantage of the tonotopic organization of the residual auditory neurons by means of an array of electrodes.

Such arrays generally comprise a number of discrete electrodes mounted on a carrier that is surgically inserted into the cochlea through or near the round window. When an array is located deeply inside the cochlea, electrodes near the tip preferentially stimulate neurons that, with normal acoustic hearing, would have responded best to low-frequency sounds, whereas electrodes nearer the cochlear base stimulate neurons associated with higher-frequency sounds.
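Greenwood's (1990) frequency-position function makes the tonotopic relationship concrete. The sketch below uses the commonly cited human parameters (A = 165.4 Hz, a = 2.1, k = 0.88, with position x expressed as a proportion of cochlear length measured from the apex) and then assigns frequencies to a hypothetical, evenly spaced 22-contact array. The apical and basal contact positions are illustrative assumptions, not measurements of any real device or surgical insertion.

```python
from __future__ import annotations

# Commonly cited human parameters for Greenwood's (1990) frequency-position function.
A_HZ = 165.4   # scaling constant (Hz)
SLOPE = 2.1    # slope, with position expressed as a proportion of cochlear length
K = 0.88       # integration constant


def greenwood_frequency(x: float) -> float:
    """Characteristic frequency (Hz) at relative position x (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (SLOPE * x) - K)


def electrode_frequencies(n_electrodes: int, apical_x: float, basal_x: float) -> list[float]:
    """Characteristic frequency at each contact of an evenly spaced array.

    apical_x and basal_x are HYPOTHETICAL relative positions of the deepest and
    shallowest contacts. Index 0 is the most basal contact (highest frequency).
    """
    if n_electrodes < 2:
        raise ValueError("need at least two electrodes")
    step = (basal_x - apical_x) / (n_electrodes - 1)
    return [greenwood_frequency(basal_x - i * step) for i in range(n_electrodes)]


# Example: 22 contacts spanning (hypothetically) 35% to 95% of cochlear length.
for index, freq in enumerate(electrode_frequencies(22, 0.35, 0.95)):
    print(f"contact {index:2d}: {freq:7.0f} Hz")
```

Because the function is exponential, equal spacing of contacts along the middle and basal turns corresponds roughly to equal frequency ratios rather than equal frequency differences.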

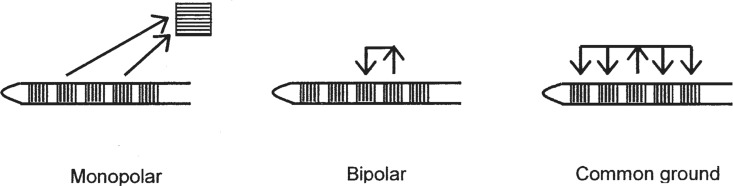

Multiple electrodes can be configured to deliver stimulating currents to the auditory neurons in different ways. The three main configurations available with existing devices are known as monopolar, bipolar, and common ground. As illustrated in Figure 3 (left), the monopolar configuration comprises an active electrode that is located in or close to the cochlea, and one or more separate electrodes that are located further away. These “indifferent” electrodes usually have a larger surface area than the active electrode and may serve as the current return path for many discrete active electrodes. Generally, single-channel implants stimulate by using the monopolar configuration. In multiple-electrode implants employing monopolar stimulation, it is important that the active electrodes be located close to the neural population so that, ideally, stimulation on each electrode excites a spatially distinct set of neurons and consequently elicits a perceptually discriminable auditory sensation.

Figure 3.

Three types of electrode configuration used in multiple-channel implants. The illustration at the left shows the monopolar mode, in which current from the active electrodes on the intracochlear array flows to a single “ground” electrode, which is located remotely. The center illustration shows the bipolar mode, in which current passes between two active electrodes located nearby on the array. The illustration at the right shows the “common ground” mode, in which current from one intracochlear electrode flows to most or all of the remaining electrodes on the array.

In principle, the spatial separation of the stimulating current paths in multiple-electrode implants can be improved by using bipolar stimulation. In this configuration, currents are passed between two electrodes, both of which are located relatively close to the auditory neurons (Figure 3, center). Several variations on the bipolar configuration may provide practical benefits in some conditions:

In one variation, the separation between the two active electrodes (the “spatial extent”) can be increased, for example by activating pairs of electrodes that are separated by one or more inactive electrodes on the array. This usually results in a reduction of the current required to produce an audible sensation (i.e., the threshold current).

Another variation involves arranging the electrodes to direct the current flow in the cochlea along a more nearly radial, rather than longitudinal, path. This is intended to increase spatial selectivity and to reduce thresholds compared with alternative configurations.

In the third type of electrode configuration, the common-ground mode, one active electrode is selected, and many or all of the remaining intracochlear electrodes are used together as the return path for the stimulating current (Figure 3, right). In several respects, the common-ground arrangement is intermediate between the bipolar and monopolar configurations. Some of the perceptual effects of stimulating with different electrode configurations are discussed briefly later.

Typically, multiple-electrode implants deliver stimulating currents to the active electrodes in a sequence of temporally nonoverlapping pulses, whereas earlier single-channel devices used a continuously varying waveform. However, in some designs it is possible for analog waveforms (or rectangular pulses) to be delivered simultaneously to several electrodes.

Simultaneous stimulation via multiple electrodes may, in theory, have beneficial perceptual effects, particularly because it should enable the normal patterns of the auditory neurons’ responses to acoustic signals to be emulated more closely. Unfortunately, in past experiments with cochlear implants, simultaneous stimulation has frequently been found to produce complicated side effects. For example, the complex summation of currents within the cochlea from multiple active electrodes can result in reduced spatial selectivity of the neural excitation and poorer control of perceived loudness.

2.2. Sound Processors

Sound-processing techniques for cochlear implants can be classified into three broad categories: feature-extracting strategies, spectrum-estimating pulsatile schemes, and analog stimulation schemes.

2.2.1. Feature-Extracting Strategies

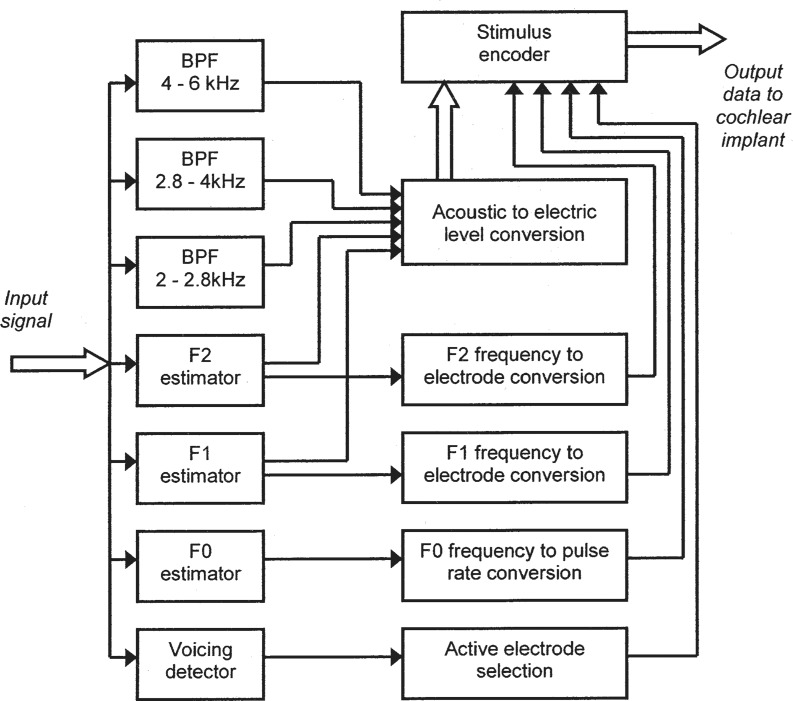

The feature-extraction approach to the design of sound processors is now obsolete, but remains of interest because it was based, in part, on principles derived from psychophysical experiments into the perception of pitch, including musical pitch (discussed later). A series of processing schemes developed mainly during the 1980s at the University of Melbourne and Cochlear Limited (formerly Nucleus Ltd) culminated in the “Multipeak” (or “MPEAK”) strategy (Patrick et al., 1990; Patrick and Clark, 1991) which was implemented in the “Mini Speech Processor” (MSP).

A block diagram of the MPEAK scheme appears in Figure 4. The input signal from the microphone was analyzed with the assumption that it usually contained speech. Three acoustic features of the signal were extracted: the fundamental frequency (F0), and the frequencies of the first two formants (F1 and F2), which convey much of the information available in the signal about the identity of vowels and other voiced speech sounds. The frequencies of F1 and F2 were converted to positions of two active electrodes selected from the 22-electrode array according to the tonotopic principle.

Figure 4.

Functional block diagram of the MPEAK speech-processing strategy. Input signals from a microphone are analyzed to extract or estimate the parameters of a small number of acoustic features that are important for conveying information about speech. These parameters include voicing, the fundamental frequency (F0), and the frequencies and amplitudes of the first two formants (F1 and F2). In addition, the levels of signals in three higher-frequency bandpass filters (BPFs) are determined. As explained in the text, a subset of these parameters is selected for stimulation in each period. The signal levels are converted into appropriate current levels of electric stimulation. The digital data transmitted to the implant specify the currents to be delivered by the active electrodes. The resulting stimulation pattern comprises groups of four sequential pulses delivered at an overall rate dependent on the estimated F0 whenever the acoustic input signal is judged to contain voiced speech.
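The conversion of F1 and F2 into electrode positions according to the tonotopic principle can be sketched as a table lookup. In this sketch, which is not the MSP's actual frequency allocation, the frequency range is divided into logarithmically spaced bands, one per electrode, and the lowest band is assigned to the most apical electrode. The band limits (200 Hz to 8 kHz), the 22-band split, and the numbering convention (electrode 22 most apical, electrode 1 most basal) are assumptions for illustration.

```python
from __future__ import annotations

import bisect


def log_spaced_edges(f_low: float, f_high: float, n_bands: int) -> list[float]:
    """Return n_bands + 1 band edges spaced evenly on a logarithmic frequency scale."""
    ratio = (f_high / f_low) ** (1.0 / n_bands)
    return [f_low * ratio ** i for i in range(n_bands + 1)]


def frequency_to_electrode(freq_hz: float, edges: list[float]) -> int | None:
    """Map a frequency to an electrode number, or None if it is outside the range.

    Band 0 (lowest frequencies) goes to the most apical electrode, numbered
    len(edges) - 1 in this sketch; higher bands move toward electrode 1 (basal).
    """
    if not edges[0] <= freq_hz < edges[-1]:
        return None
    band = bisect.bisect_right(edges, freq_hz) - 1
    return (len(edges) - 1) - band


edges = log_spaced_edges(200.0, 8000.0, 22)           # hypothetical allocation
f1_electrode = frequency_to_electrode(500.0, edges)   # e.g. an F1 of 500 Hz
f2_electrode = frequency_to_electrode(1500.0, edges)  # e.g. an F2 of 1500 Hz
print(f1_electrode, f2_electrode)
```

A higher formant frequency always maps to a lower-numbered (more basal) electrode, which is the property the scheme relies on.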

In addition, three bandpass filters and envelope detectors estimated the amplitude of the incoming signal within three higher frequency regions. These filters were assigned to specific electrodes at the basal end of the electrode array. They were included mainly to improve the processing of certain consonant sounds, such as unvoiced fricatives.

With MPEAK, sequential pulsatile stimulation was generated at a rate that depended on whether voicing was detected in the input signal. If a voiced signal was present, the estimate of F0 was used to control the stimulation frame rate. Within each frame, four pulses were generated representing F1, F2, and the lower two of the three high frequency bands. If no voicing was detected, a stimulation rate of about 250 Hz was used, and the four pulses presented in each period represented F2 and each of the three high frequency bands.
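The frame-by-frame rules just described can be written as a small decision function. This is a simplified sketch of the logic as summarized here, not the MSP implementation; the labels "band1" to "band3" are hypothetical names for the three high-frequency bands, numbered from lowest to highest.

```python
from __future__ import annotations

from dataclasses import dataclass

UNVOICED_RATE_HZ = 250.0  # approximate frame rate when no voicing is detected


@dataclass
class Frame:
    rate_hz: float        # frames per second
    features: list[str]   # feature represented by each of the four pulses


def mpeak_frame(voiced: bool, f0_hz: float | None = None) -> Frame:
    """Select the frame rate and the four features to stimulate in one frame."""
    if voiced:
        if f0_hz is None or f0_hz <= 0:
            raise ValueError("voiced frames need a positive F0 estimate")
        # Voiced: frame rate follows F0; pulses for F1, F2 and the two lower high bands.
        return Frame(f0_hz, ["F1", "F2", "band1", "band2"])
    # Unvoiced: fixed rate; pulses for F2 and all three high bands.
    return Frame(UNVOICED_RATE_HZ, ["F2", "band1", "band2", "band3"])


def pulses_per_second(frame: Frame) -> float:
    """Total pulse rate across all electrodes: frame rate times pulses per frame."""
    return frame.rate_hz * len(frame.features)


print(pulses_per_second(mpeak_frame(True, 120.0)))  # voiced speech, F0 = 120 Hz
print(pulses_per_second(mpeak_frame(False)))        # unvoiced input
```

With four pulses per frame, the total pulse rate is four times the frame rate, so it rises and falls with the talker's F0 during voiced speech.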

The amplitudes of the selected acoustic features were converted into appropriate current levels determined for each electrode in each implant user. The minimum current level (i.e., the “T-level”) corresponded approximately to the threshold of audibility, and the maximum level (i.e., the “C-level”) evoked a sensation of comfortable loudness.
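The conversion of feature amplitudes to current levels between the T-level and the C-level can be illustrated with a simple mapping. The shape of the function used in the MSP is not specified here, so this sketch assumes a mapping that is linear in decibels over a 30 dB input range; the range, the units (arbitrary current-level steps), and the example T- and C-levels are hypothetical.

```python
from __future__ import annotations

import math


def amplitude_to_current(amplitude: float, t_level: float, c_level: float,
                         max_amplitude: float = 1.0,
                         input_range_db: float = 30.0) -> float | None:
    """Map an acoustic amplitude onto the electrical range [t_level, c_level].

    Amplitudes more than input_range_db below max_amplitude give None (no pulse);
    amplitudes at or above max_amplitude are clipped to the C-level.
    In between, current rises linearly with input level in decibels.
    """
    if amplitude <= 0:
        return None
    level_db = 20.0 * math.log10(amplitude / max_amplitude)  # 0 dB at max_amplitude
    if level_db < -input_range_db:
        return None
    fraction = min(1.0, 1.0 + level_db / input_range_db)     # 0 at the floor, 1 at the top
    return t_level + fraction * (c_level - t_level)


# Hypothetical electrode with T = 100 and C = 180 current-level units.
for amp in (1.0, 0.1, 0.01):
    print(amp, amplitude_to_current(amp, 100.0, 180.0))
```

Because T- and C-levels are measured separately for each electrode, the same acoustic amplitude generally produces different currents on different electrodes.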

Generating pulsatile stimulation at an overall rate determined by the fundamental frequency of voiced speech was justified by psychophysical findings showing that implant users could perceive a pitch related to the pulse rate for rates ranging from about 100 to 300 Hz (discussed later). This range corresponds approximately to the F0 range of many (but not all) speakers, and of other complex sounds such as those produced by certain musical instruments. Similar reasoning supported the development of an early single-channel extra-cochlear prosthesis (Fourcin et al., 1979), which was intended primarily as a speech-reading aid. That device also applied stimulation to the auditory nerve at a frequency derived from an estimate of F0.

The feature-extracting schemes, such as MPEAK, could provide many implant users with enough information to recognize most speech sounds, but they had several inherent weaknesses. In particular, it was technically difficult to obtain accurate estimates of the relevant parameters of speech signals in a real-time processor that needed to function reliably in unfavorable conditions, such as high levels of background noise. Estimating F0 is especially difficult in noisy or reverberant situations, or when several sources of F0 are present simultaneously. Another shortcoming is that strategies that extract or emphasize acoustic features specific to speech signals may not provide optimal processing of nonspeech sounds, including music and environmental noises.
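To illustrate why F0 estimation is fragile, the sketch below uses a basic autocorrelation method: it picks the lag, within an assumed search range of 80 to 400 Hz, at which the signal best matches a delayed copy of itself. This generic textbook technique is not the estimator used in MPEAK. With a clean periodic input it works well, but noise, reverberation, or a second voice adds competing peaks, and the chosen lag can then jump to a wrong value, for example an octave error.

```python
from __future__ import annotations

import math


def estimate_f0(signal: list[float], sample_rate: float,
                f_min: float = 80.0, f_max: float = 400.0) -> float:
    """Return the F0 (Hz) whose period gives the largest autocorrelation.

    The raw (unnormalized) sum has fewer terms at longer lags, which biases the
    choice toward shorter lags and reduces, but does not eliminate, errors of
    one octave downward.
    """
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]                 # remove any DC offset
    min_lag = max(1, int(sample_rate / f_max))
    max_lag = min(int(sample_rate / f_min), n - 1)
    if min_lag > max_lag:
        raise ValueError("signal is too short for the requested F0 range")

    def autocorr(lag: int) -> float:
        return sum(x[i] * x[i + lag] for i in range(n - lag))

    best_lag = max(range(min_lag, max_lag + 1), key=autocorr)
    return sample_rate / best_lag


fs = 8000.0
tone = [math.sin(2 * math.pi * 200.0 * i / fs) for i in range(800)]  # 0.1 s at 200 Hz
print(estimate_f0(tone, fs))
```

Adding noise or a second periodic source to `tone` is a quick way to see the estimate become unstable, which is the weakness described above.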

2.2.2. Spectrum-Estimating Pulsatile Schemes

Considerations such as these eventually led to the abandonment of the feature-extraction approach to cochlear implant sound processing. For most current users of multiple-electrode devices, spectrum-estimating pulsatile schemes are the preferred choice. The three most widely used schemes are known as “Continuous Interleaved Sampling” (CIS), “SPEAK,” and “Advanced Combination Encoder” (ACE). Each of these schemes is designed to present information about prominent spectral features of sound signals without assuming that those features are necessarily associated with speech. More importantly, the stimulation pulse rate is independent of any parameters of the input signal: pulses are generally delivered to the active electrodes in a sequential cycle at a constant, relatively high rate.

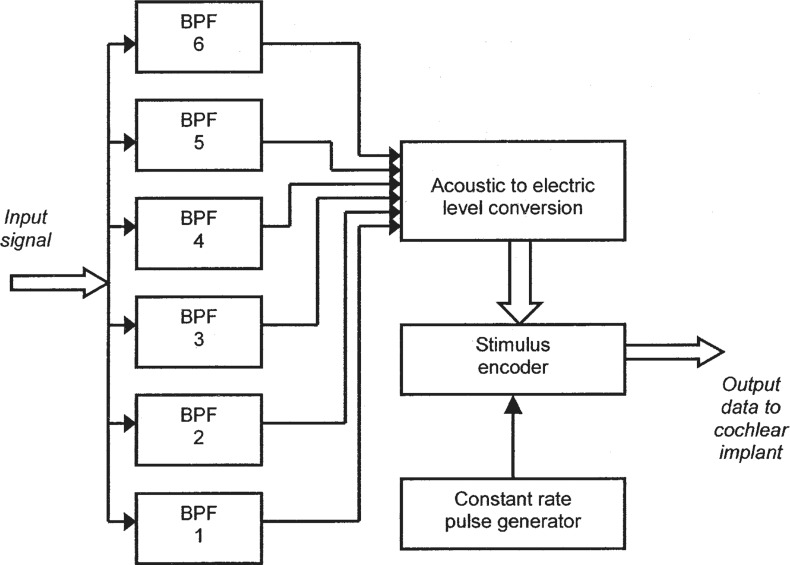

A block diagram of a typical six-channel CIS processor is shown in Figure 5 (Wilson et al., 1991). The short-term spectrum of incoming signals is estimated by means of a bank of bandpass filters. At the output of each filter, the envelope of the waveform is estimated. These envelope signals are sampled at regular times, and their amplitudes are converted to appropriate stimulation current levels.

Figure 5.

Functional block diagram of the Continuous Interleaved Sampling (CIS) sound-processing strategy. In this example, six bandpass filters (BPFs) are used to enable the short-term (or instantaneous) levels in each of six partially overlapping frequency bands to be estimated. The filters have center frequencies that are typically spaced regularly along a logarithmic scale. The levels at the outputs of the filters are converted into appropriate current levels of electric stimulation. The digital data transmitted to the implant specify the currents to be delivered by each of the electrodes. The resulting stimulation pattern comprises a series of interleaved pulses delivered at a constant rate.
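The envelope-estimation and sampling stages of one CIS channel can be sketched as follows, assuming the channel's bandpass-filtered waveform is already available. Full-wave rectification followed by a first-order low-pass filter is one common way to estimate an envelope (the Hilbert transform is another); the 200 Hz smoothing cutoff and the 1000 pulses per second in the example are assumptions, not parameters of any particular device.

```python
from __future__ import annotations

import math


def envelope(band_signal: list[float], sample_rate: float,
             cutoff_hz: float = 200.0) -> list[float]:
    """Full-wave rectify the filter output, then smooth it with a one-pole low-pass."""
    alpha = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sample_rate)
    env, state = [], 0.0
    for s in band_signal:
        state += alpha * (abs(s) - state)   # exponential smoothing of |s|
        env.append(state)
    return env


def sample_envelope(env: list[float], sample_rate: float,
                    pulse_rate: float) -> list[float]:
    """Take one envelope value at the time of each stimulation pulse on this channel."""
    step = sample_rate / pulse_rate
    n_pulses = int(len(env) / step)
    return [env[int(k * step)] for k in range(n_pulses)]


fs = 16000.0
band = [math.sin(2 * math.pi * 1000.0 * i / fs) for i in range(1600)]  # 0.1 s, 1 kHz
samples = sample_envelope(envelope(band, fs), fs, pulse_rate=1000.0)
print(len(samples), sum(samples) / len(samples))
```

Each sampled value would then be converted to a current level and delivered as one pulse on the electrode assigned to this filter.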

In the implant, brief electric pulses are delivered by electrodes corresponding to the filters at a rate equal to the sampling rate. In most existing implementations of CIS, the pulse rate is on the order of several thousand pulses per second per channel. Both commercial device manufacturers and independent researchers have implemented and evaluated numerous variations of the CIS scheme; for example, different numbers of filters and electrodes have been used, and alternative techniques have been investigated for converting the filters’ outputs into levels of electric stimulation. However, the essential functional principles of the CIS scheme have been retained. Some alternative sound-processing schemes for multiple-electrode implants, such as SPEAK and ACE, generally have a larger number of bandpass filters than CIS schemes.
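The interleaving that gives CIS its name can be illustrated by computing pulse onset times. In this sketch, channels are visited in a fixed cyclic order, one pulse at a time, so each pulse has a time slot of 1/(N × R) for N channels at R pulses per second per channel, and a biphasic pulse (two phases plus any interphase gap) must fit inside that slot. The six channels, the 1000 pulses per second per channel, and the cyclic order are illustrative assumptions; real devices may use other orders and rates.

```python
from __future__ import annotations


def interleaved_schedule(n_channels: int, rate_per_channel: float,
                         duration_s: float) -> list[tuple[float, int]]:
    """(onset time in seconds, channel index) for each pulse, channels cycled in order."""
    slot = 1.0 / (n_channels * rate_per_channel)   # time allotted to each pulse
    n_slots = int(round(duration_s / slot))
    return [(k * slot, k % n_channels) for k in range(n_slots)]


def max_phase_width(n_channels: int, rate_per_channel: float,
                    interphase_gap_s: float = 0.0) -> float:
    """Longest phase (s) for which a biphasic pulse still fits inside its slot."""
    slot = 1.0 / (n_channels * rate_per_channel)
    return (slot - interphase_gap_s) / 2.0


schedule = interleaved_schedule(6, 1000.0, 0.002)   # first 2 ms
for onset, channel in schedule[:7]:
    print(f"{onset * 1e6:7.1f} us  channel {channel}")
print(f"max phase width: {max_phase_width(6, 1000.0) * 1e6:.1f} us")
```

The trade-off is visible in `max_phase_width`: raising the per-channel rate or the number of channels shortens the slot, so pulses must become briefer.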

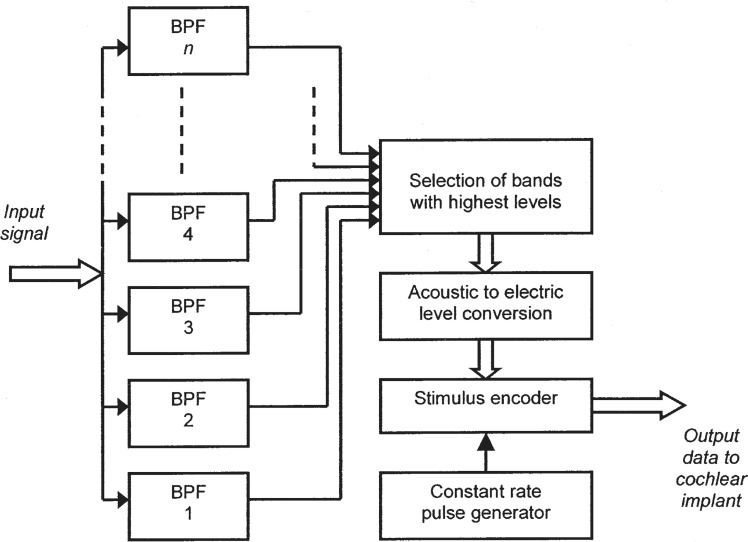

A block diagram of a typical ACE processor for a Nucleus (Cochlear, Lane Cove, NSW Australia) implant is shown in Figure 6 (Vandali et al., 2000). The estimation of the short-term spectrum of incoming signals is performed by a bank of 20 filters having partially overlapping bandpass characteristics. The envelope of the signal at the output of each filter is estimated. In each stimulation period, the amplitudes of the envelopes are compared, and the subset of filter channels with the highest short-term amplitudes is identified. The number of channels selected in this process is limited, for example to 6 or 10. The amplitudes at these channel outputs are converted to appropriate levels of stimulation as in other non-simultaneous pulsatile schemes.

Figure 6.

Functional block diagram of the Advanced Combination Encoder (ACE) sound-processing strategy. A relatively large number of bandpass filters (typically 20) are used to estimate the short-term spectrum of the input signal. The filters have partially overlapping frequency responses covering a wide bandwidth (e.g., 200 Hz–10 kHz). The levels at the outputs of the filters are compared so that only the subset comprising the highest levels is passed to the following stages of processing. The subset typically includes the 10 highest levels, and only the 10 corresponding electrodes in the cochlear implant are activated. The selected signal levels are converted into appropriate current levels of electric stimulation. The digital data transmitted to the implant specify the currents to be delivered by the active electrodes. The resulting stimulation pattern comprises a series of interleaved pulses delivered at a constant overall rate.
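The channel-selection step that distinguishes ACE (and SPEAK) from CIS is often described as "n-of-m" selection: in each stimulation cycle, only the n filter channels with the largest envelopes, out of m, are stimulated. The sketch below shows that step alone; the tie-breaking rule and returning the selected channels in ascending index order are assumptions, and real processors may order the resulting pulses differently.

```python
from __future__ import annotations


def select_maxima(envelopes: list[float], n_maxima: int) -> list[int]:
    """Indices of the n_maxima channels with the largest envelope amplitudes.

    Ties go to the lower channel index; the result is sorted by channel index.
    """
    if not 0 < n_maxima <= len(envelopes):
        raise ValueError("n_maxima must be between 1 and the number of channels")
    ranked = sorted(range(len(envelopes)), key=lambda i: (-envelopes[i], i))
    return sorted(ranked[:n_maxima])


# One hypothetical analysis frame from a 20-channel filter bank.
frame = [0.02, 0.10, 0.45, 0.60, 0.30, 0.05, 0.08, 0.52, 0.40, 0.12,
         0.03, 0.01, 0.25, 0.33, 0.07, 0.02, 0.01, 0.04, 0.06, 0.02]
print(select_maxima(frame, 6))
```

Only the electrodes for the returned channels receive pulses in this cycle; the selection is repeated for every new frame, so the active set changes as the spectrum changes.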

The overall stimulation pulse rate is approximately constant and is usually much higher for ACE than for SPEAK. The SPEAK scheme employs a pulse rate of about 250 Hz per channel, mainly because it was derived from the earlier “Spectral Maxima Sound Processor” (SMSP), in which only relatively low pulse rates were practical for technical reasons (McDermott et al., 1992). The overall pulse rate for ACE can reach 14.4 kHz or more, depending on the capabilities of the implanted stimulator; otherwise, ACE and SPEAK are functionally similar.
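Because each stimulation cycle in these schemes delivers one pulse to each selected channel, the overall rate, the number of selected channels N, and the per-channel rate are linked by a simple relation. The values of N in the worked examples are illustrative assumptions.

$$
R_{\text{total}} = N \, R_{\text{ch}}
\qquad\Longrightarrow\qquad
R_{\text{ch}} = \frac{R_{\text{total}}}{N}
$$

For example, with N = 12 and an overall rate of 14,400 pulses/s, each selected channel is stimulated at 14,400/12 = 1,200 pulses/s; with SPEAK's rate of about 250 pulses/s per channel and N = 6, the overall rate would be about 6 × 250 = 1,500 pulses/s.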

2.2.3. Analog Stimulation Schemes

As mentioned previously, analog stimulation schemes are presently used less often than pulsatile schemes in multiple-electrode prostheses. In one such scheme, “Simultaneous Analog Stimulation” (SAS) (Kessler, 1999), the short-term spectrum of incoming signals is estimated by means of a bank of bandpass filters. However, in contrast to pulsatile strategies, SAS uses the waveform at the output of each filter, rather than the signal envelopes, as the basis for stimulation. Each filtered waveform is compressed in a manner analogous to the conversion of envelope amplitudes to current levels in pulsatile stimulation strategies. This process ensures that the levels of stimulation result in comfortable loudness and adequate audibility of most sounds for each implant user.

In the implant, the compressed waveforms are delivered simultaneously as continuously varying currents to the active electrodes. The SAS scheme is closely related to the earlier Compressed Analog (CA) strategy that was used successfully with the now-obsolete Ineraid multiple-electrode cochlear implant (Eddington, 1980). How well numerous other sound-processing schemes, including those outlined above, enable implant users to understand speech has been investigated and reported extensively, and that work will not be reviewed here.

3. Music Perception with Cochlear Implants

Music is difficult to define. A purely phenomenologic “I know it when I hear it” definition is unsatisfactory, because few people would be able to agree about all types of music. Strictly objective definitions are also problematic, because it is hard to imagine any type of sound that could not form part of a piece of music, given an appropriate context—environmental noises and synthetic sounds are common elements in certain musical genres. Even a definition that would clearly separate singing (music) from speech (not music) is elusive; utterances in tonal languages, in particular, may sound musical to some listeners, especially those who are unable to comprehend the meaning.

Nevertheless, much of the published research on how cochlear implant users perceive music apparently rests on the assumption that music can be characterized as an organized sequence of sounds that have a small number of fundamental features, including rhythm, melody, and timbre. Additional attributes of sounds, such as harmony and the overall loudness, also contribute to the structure of music. Each of these properties can be described, at least approximately, in terms of physical parameters of acoustic signals. For example, the loudness of a sound is related to its intensity, and rhythm is conveyed in most musical styles by moderately rapid variations in loudness.

Beyond these objective characteristics of sounds, however, are diverse phenomena that are also important in the experience of listening to music. These include subjective quality, mood, and the situational context. For instance, a person's emotional response to music heard at a crowded dance party organized to celebrate a significant event, such as a birthday, is undoubtedly a very different experience from that of listening alone to a high-quality recording of a cerebral work by J. S. Bach. However, it is reasonable to assume that these divergent aspects of the musical experience are common to listeners regardless of the functional mode of their hearing. Because they are not specific to users of cochlear implants, they will not be discussed further here.

3.1. Perception of Rhythm

Temporal patterns in musical sounds that impart a distinctive rhythm generally occur in the frequency range of approximately 0.2 to 20 Hz; for reference, a tempo of 60 beats per minute corresponds to 1 Hz. Acoustic features that change more slowly than this are associated with overall variations in loudness (often called “dynamics” in music). Higher-frequency components of acoustic signals carry pitch information, which is discussed later.

The ability of listeners with cochlear implants to discriminate rhythms has been investigated by several researchers. Gfeller and Lansing (1991; 1992) used a standardized test known as the “Primary Measures of Music Audiation” (PMMA), which was developed mainly for use with children (Gordon, 1979). The rhythm subtest of the PMMA comprises pairs of short sequences of sounds, each recorded with unvarying pitch and timbre. The two sequences in each pair have either identical or different rhythmic patterns, the pairs are presented in random order, and the listener indicates whether the sequences in each pair are the same or different. Because there are only two possible responses, the score expected from random guessing is 50% correct.
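Whether a score on such a two-alternative test is reliably above the 50% chance level can be checked with an exact one-sided binomial test. The Python sketch below assumes independent trials and a hypothetical test length of 40 items; it is a generic statistical illustration, not the PMMA's own scoring procedure, and the function name `p_above_chance` is introduced here for illustration.

```python
from math import comb


def p_above_chance(correct, n_items, chance=0.5):
    """One-sided exact binomial p-value: probability of scoring at least
    `correct` out of `n_items` by guessing alone (independent trials)."""
    return sum(comb(n_items, k) * chance**k * (1 - chance)**(n_items - k)
               for k in range(correct, n_items + 1))


# Hypothetical 40-item same/different test
for score in (20, 26, 30):
    print(score, round(p_above_chance(score, 40), 4))
```

With shorter tests, such as those containing only a dozen items, the same percentage score provides much weaker evidence that a listener is performing better than chance.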

Performance on this test was assessed with 18 adult users of cochlear implants (Gfeller and Lansing, 1991). Ten subjects were users of a now-superseded feature-extraction speech processing strategy developed for the Nucleus 22-electrode device manufactured by Cochlear Limited. This strategy was a predecessor of MPEAK that extracted and presented information about the speech features F0, F1, and F2, but not the three higher frequency bands described earlier and outlined in Figure 4 (Dowell et al., 1987). The remaining eight subjects were users of the Ineraid prosthesis and a four-channel Compressed Analog sound processor; this system is also now obsolete. The mean score for the group was 88% and individual scores ranged from about 80% to 95%. There appeared to be little difference in performance related to the type of prosthesis each subject used.

A subsequent study also used the PMMA (in a slightly modified form) to examine any differences in rhythm perception between two sound-processing schemes used by 17 recipients of the Nucleus 22-electrode device (Gfeller et al., 1997). The two schemes were MPEAK and the earlier F0/F1/F2 strategy. The mean score for both schemes on the PMMA rhythm subtest was approximately 84% correct. This is not only close to the corresponding finding from the previously mentioned study (Gfeller and Lansing, 1991) but also very close to the average score obtained by a control group of 35 subjects with normal hearing (Gfeller et al., 1997).

Results of assessments of rhythmic pattern recognition and reproduction by eight implant users were compared with corresponding results obtained by seven normally hearing subjects in a study reported by Schulz and Kerber (1994). The recognition task required listeners to identify patterns representative of four common musical rhythms (such as those associated with a waltz or a tango), whereas the reproduction task required listeners to reproduce, by tapping, several distinctive rhythmic patterns comprising three or five beats. Average scores for each group of subjects were at least 80% correct for both types of assessment; however, no statistical analyses were reported to establish whether performance differed significantly between the two groups.

More recently, Leal et al. (2003) assessed rhythm perception by 29 recipients of the Nucleus 24-electrode device. Twenty subjects used the ACE sound-processing scheme, while the remainder used the SPEAK scheme. The test was essentially similar to the PMMA rhythm subtest but with fewer test items. However, in addition to a discrimination test (i.e., indicating whether pairs of sound sequences had the same or different rhythm), an identification test was also done in which subjects were asked to indicate where in each sequence the rhythmic change occurred. Individual results were classified as “good” or “poor” according to whether each subject’s score exceeded a criterion of 90% correct for the rhythm discrimination test or 75% correct for the rhythm identification test. On this basis, 24 of the 29 subjects obtained good performance on the rhythm discrimination test, whereas only 12 of the subjects obtained good performance on the rhythm identification test. No differences in performance related to the use of the two sound-processing schemes were reported.

In another test of rhythm perception, 3 implant users and 4 subjects with normal hearing were instructed to identify one of seven distinct rhythmic patterns (Kong et al., 2004). Two of the implanted subjects used the Nucleus 22-electrode device and the SPEAK sound-processing scheme, while the third used the Clarion I system (Advanced Bionics, Sylmar, CA) with the CIS scheme. The normally hearing subjects obtained scores near 100% correct on the test. One Nucleus implant recipient obtained similar near-perfect scores, but the remaining 2 subjects had scores that were 10 to 25 percentage points lower. Interestingly, scores for each subject were very similar for 4 subtests in which the same test materials were presented at different overall speeds (60–150 beats per minute).

In a related study, the performance in discriminating differences in tempo was assessed with five implant users, including two Ineraid recipients using the CIS scheme (Kong et al., 2004). On average, their results were very close to those obtained with the same four normally hearing subjects mentioned above. The change in tempo that was discriminable by the implant users was approximately 4 to 6 beats per minute across the range of tempi (60–120 beats per minute) presented in the tests.

A study with an unusual experimental procedure compared the temporal integration characteristics of 11 single-channel cochlear implant users with those of matched normally hearing listeners (Szelag et al., 2004). The subjects were asked to accentuate mentally a rhythmic pattern within a sequence of regular tone bursts presented at several burst rates between one and five beats per second. The implant users’ ability to integrate rhythmic patterns subjectively at beat rates below three beats per second was significantly poorer than that of the participants with normal hearing. At higher beat rates, the performance of the two subject groups was similar.

Although these findings appear somewhat inconsistent with those cited above, which generally reported similar performance on rhythmic pattern perception tasks for implant users and normally hearing listeners, the discrepancy may be due mainly to the different experimental methods used in each study to assess subjects’ perceptual abilities. In particular, it is difficult to interpret the results of the subjective experiment described by Szelag et al. (2004) directly in terms of musical rhythm perception.

3.2. Perception of Melody

3.2.1. Tune Identification

What enables people to recognize melodies? First, there is the question of which tunes are sufficiently familiar to a listener that he or she would be able to name them on hearing them. This ability depends on a range of highly variable factors, such as the individual's musical training and listening experience, the social culture within which that experience has been gained, and the person's memory of both the tunes and their titles. Recognition is also likely to be affected by the situational context in which the music is heard. For example, in the Western musical tradition, Happy Birthday is rated amongst the most familiar melodies for the general population (Gfeller et al., 2002a; Looi et al., 2003), and it is immediately recognizable by nearly everyone in the appropriate circumstances, regardless of the intonation of the notes, the accuracy of the rhythm, or the acoustic quality of the listening situation. Thus, accurate perception of fundamental features of musical sounds, such as pitch and temporal patterns, is not always a prerequisite for melody recognition.

As previously summarized, the performance of most cochlear implant users in formal tests of rhythm perception is reported to be similar to that of listeners with normal hearing. This observation leads to the expectation that implant users would be able to recognize melodies that have a distinctive rhythmic pattern more readily than melodies that are less rhythmic. This was confirmed in a study involving eight users of a single-channel cochlear implant with an analog sound-processing scheme (Schulz and Kerber, 1994).

In that study, only four different tunes (well-known children's songs) were presented, in several different musical arrangements. Not surprisingly, the normally hearing subjects obtained an average recognition score close to 100% correct across all of the melodies. The average score for the implant users was much lower (about 50% correct). When the songs were divided into two equal sets, those that were rhythmically structured and those without a distinctive rhythm, the implant users scored about 15 percentage points higher on the rhythmic tunes.

A similar pattern of results was reported for a study in which 12 well-known tunes were presented to 49 multiple-channel cochlear implant recipients and 18 normally hearing subjects (Gfeller et al., 2002a). The implant users listened to the test materials through their own sound-processing devices, which were programmed with the ACE, CIS, or SPEAK scheme. The overall average melody recognition score for the implant users was approximately 19% correct, whereas the corresponding score for the subjects with normal hearing was about 83%. For each subject group, the average score for melodies classified as rhythmic was approximately 12 percentage points higher than the score for arrhythmic melodies. No significant differences in performance were found for the implant users that could be related to the type of sound-processing scheme implemented in the device.

Kong et al. (2004) published further evidence supporting the relative importance of rhythm information for melody recognition by implant users. In their experiments, 6 multiple-channel cochlear implant users were asked to identify 12 familiar songs heard with and without rhythmic cues. Six subjects with normal hearing, who also participated in the study, obtained near-perfect identification scores when the melodies were presented in both conditions. However, the average score for the implant users was only about 63% correct when rhythmic cues were available. When the rhythmic cues were eliminated by equalizing the duration of each note and the silent intervals between notes, the implant users’ average performance was reduced to chance levels.
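The stimulus manipulation described above, in which rhythmic cues are removed by equalizing the duration of each note and of the silent intervals between notes, can be sketched in Python as follows. The `Note` representation, the 400 ms note and 100 ms gap values, and the example melody timings are illustrative assumptions, not the stimulus parameters used by Kong et al. (2004).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Note:
    midi: int            # pitch as a MIDI note number (60 = middle C)
    duration_ms: float   # sounding duration of the note
    gap_ms: float        # silent interval after the note


def remove_rhythm(melody, note_ms=400.0, gap_ms=100.0):
    """Keep the pitch sequence but give every note the same duration and
    every inter-note gap the same length (both values hypothetical)."""
    return [Note(n.midi, note_ms, gap_ms) for n in melody]


def onsets(melody):
    """Onset time (ms) of each note, computed from durations and gaps."""
    t, times = 0.0, []
    for n in melody:
        times.append(t)
        t += n.duration_ms + n.gap_ms
    return times


# Opening of "Happy Birthday" (G G A G C B); timings assume a 500 ms quarter
# note with a dotted-eighth/sixteenth pickup (illustrative values only)
happy = [Note(67, 350, 25), Note(67, 100, 25), Note(69, 475, 25),
         Note(67, 475, 25), Note(72, 475, 25), Note(71, 975, 25)]
flat = remove_rhythm(happy)
```

After the transformation the pitch sequence is unchanged, but the onsets fall on an isochronous grid, so only pitch information remains to distinguish one tune from another.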

An earlier study with 8 users of the 22-electrode Nucleus implant system, programmed with the now-superseded MPEAK strategy for 7 subjects and the SPEAK scheme for the remaining subject, investigated recognition of familiar melodies in several musical contexts (Fujita and Ito, 1999). On average, subjects obtained higher scores for closed-set identification of songs when played with words than when played with only an instrumental sound. Discrimination of melodies that lacked rhythmic or verbal cues was relatively poor, with average performance at chance levels.

The notion that songs may be identified more readily when they contain meaningful words seems reasonable given the generally satisfactory speech understanding obtained by many users of recent cochlear implant systems, even in moderately noisy conditions. Recognition of just a few of the words in a well-known song may be sufficient for many listeners to name it correctly.

Identification of a small set of familiar songs was investigated with 29 recipients of the 24-electrode Nucleus system using either the ACE or SPEAK schemes (Leal et al., 2003). The test material was presented with and without sung words, and presumably contained at least some items that also had distinctive rhythmic patterns. Either seven or eight melodies were presented to each subject, depending on their familiarity with the available material. When the melodies were played by an orchestra without verbal cues, only one subject could identify more than half of them in a closed-set procedure. However, 28 of the subjects could identify at least half of the songs when the words were sung with an orchestral accompaniment.

A more recent study (Looi et al., 2004) included results from an experiment in which 15 Nucleus implant users listened to 10 familiar melodies presented without verbal cues or accompaniment. However, the melodies were played with normal rhythmic content as well as appropriate pitch sequences. Six subjects used the SPEAK sound-processing scheme, while the remainder used ACE. They were asked to identify each tune from a closed set. Overall, the averaged results showed that the implant users correctly recognized only about half the tunes, whereas normally hearing listeners who performed the same task scored nearly 100% correct. An analysis of the individual responses of the implant users to each melody suggested that both rhythmic and pitch information probably contributed to the subjects’ recognition performance.

3.2.2. Melodic Pattern Recognition

The task of discriminating between different pitch contours is related to melody identification, but is generally more difficult because of the reduced number of auditory cues available in the test material. In a typical melodic pattern recognition experiment, listeners are asked to label two pitch sequences as the same or different. The notes forming each pair of sequences are presented with identical rhythms, and no coincident verbal cues such as sung words are presented. Thus, discrimination relies on the listener's ability to perceive a pattern of changes in pitch. However, neither the absolute nor the relative pitch of each note needs to be perceived accurately for discrimination of the two sequences to be possible. For example, detection of an overall pitch contour, such as perception of a generally rising or falling pitch across each entire sequence of notes, may be sufficient for a listener to discriminate the sequences.
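The point that an overall contour may be sufficient for discrimination can be illustrated with a small Python sketch of a hypothetical listener who perceives only the direction of each pitch change, not its size. This "contour-only listener" is a simplifying assumption for illustration, not a model proposed in the studies reviewed here; pitches are given as MIDI note numbers.

```python
def contour(pitches):
    """Reduce a pitch sequence to its contour:
    'U' for a rise, 'D' for a fall, 'S' for a repeated pitch."""
    return "".join("U" if b > a else "D" if b < a else "S"
                   for a, b in zip(pitches, pitches[1:]))


def contour_listener_says_same(seq_a, seq_b):
    """Hypothetical listener who hears only the direction of each pitch
    change and therefore judges sequences by their contours alone."""
    return contour(seq_a) == contour(seq_b)


ascending = [60, 62, 64, 65, 67]   # C major scale fragment, upward
descending = ascending[::-1]
wider = [60, 64, 67, 72, 76]       # different notes, same upward shape
```

Such a listener would correctly distinguish an ascending from a descending scale, yet would judge `ascending` and `wider` to be identical even though they share only their first note.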

Results from an experiment in which implant users were asked to determine if a musical scale was played ascending or descending were reported by Dorman et al. (1991). The subjects were 16 users of the Ineraid multiple-channel implant and the CA sound-processing scheme. Most of them were unable to discriminate these pitch sequences reliably. In contrast, eight users of a single-channel device did obtain higher-than-chance scores on a similar test (Schulz and Kerber, 1994). In that study, subjects with normal hearing tested with the same procedure obtained average scores close to 100% correct. These scores were about 15 to 30 percentage points higher than those of the implant users.

A subtest of PMMA has also been used to assess implant users’ ability to discriminate pitch patterns. The test procedure is similar to that of the PMMA rhythm subtest described earlier. For the so-called tonal subtest, the material comprises pairs of short sequences of notes that have identical rhythm. The pattern of note pitches within each pair of sequences is either the same or different, and the listener is asked to label each pair accordingly. This procedure was carried out with 8 users of the Ineraid implant with the CA sound-processing scheme, and 10 users of the Nucleus 22-electrode implant with the F0/F1/F2 feature-extraction strategy (Gfeller and Lansing, 1991). As noted previously, both of these systems are now obsolete.

The average score obtained by all subjects on the tonal subtest was 78% correct. Interestingly, this was 10 percentage points lower than the subjects’ mean score for the rhythm subtest obtained in the same study. When the PMMA has been conducted with normally hearing subjects, scores reported for the tonal subtest tend to be higher than for the rhythm subtest (Gfeller and Lansing, 1991).

A further study using a modified version of the PMMA compared performance on the tonal subtest between two sound-processing schemes formerly used with the Nucleus 22-electrode device (MPEAK and the F0/F1/F2 strategy) (Gfeller et al., 1997). The participants included 17 implant users and 35 normally hearing subjects. The mean score for the implant users was approximately 77% correct and did not differ significantly between the two sound-processing strategies. In contrast, the average scores for listeners with normal hearing were about 91% correct on the same test.

Another study that compared perception of pitch patterns between two sound-processing schemes used a set of isolated stimuli that varied in the way the fundamental frequency (F0) changed over time (McKay and McDermott, 1993). The stimuli were voiced phonemes produced with a rising, steady, or falling F0 contour. Four users of the Nucleus 22-electrode device were tested when listening with either the MPEAK strategy or an experimental prototype of the SPEAK scheme (i.e., the SMSP scheme mentioned earlier). As outlined previously, the MPEAK strategy converted an estimate of F0 directly into the stimulation pulse rate, whereas the SPEAK scheme employs a constant rate of stimulation. Despite this functional difference, the ability of the subjects to identify the pitch contours in the experiment was similar, on average, for both types of sound processor.
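The functional difference between the two processors can be sketched as pulse timing on a single electrode. In this hypothetical Python sketch, the F0 glide from 100 to 200 Hz, the fixed rate of 250 pulses per second, and the rule that each interpulse interval equals the reciprocal of the instantaneous rate are assumptions; neither strategy's full processing (channel selection, amplitude mapping) is modeled.

```python
def pulse_times(rate_hz, duration_s):
    """Pulse onset times (s) for a stimulation rate that may vary with time.
    Each interval is the reciprocal of the rate at the current pulse."""
    t, times = 0.0, []
    while t < duration_s:
        times.append(t)
        t += 1.0 / rate_hz(t)
    return times


def intervals(times):
    """Interpulse intervals (s) between successive pulses."""
    return [b - a for a, b in zip(times, times[1:])]


def rising_f0(t):
    """Hypothetical F0 glide: 100 Hz rising to 200 Hz over 0.5 s."""
    return 100.0 + 200.0 * t


def constant_rate(t):
    """Hypothetical fixed stimulation rate (pulses per second)."""
    return 250.0


f0_coded = pulse_times(rising_f0, 0.5)    # MPEAK-like: rate follows F0
fixed = pulse_times(constant_rate, 0.5)   # SPEAK-like: rate independent of F0
```

With F0 coded in the pulse rate, the intervals shrink steadily as F0 rises, providing a temporal pitch cue; with a fixed rate, information about the F0 contour would have to come from other cues, such as amplitude modulation of the stimulation.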

More recently, an assessment similar to the PMMA tonal subtest was conducted with 29 users of the Nucleus system and either the ACE or SPEAK schemes (Leal et al., 2003). The test contained 12 pairs of pitch sequences. About two-thirds of the subjects obtained discrimination scores of at least 90% correct on this test. In a related test, the same subjects were asked to state whether the pitch in each sequence became higher or lower, and to indicate where within the sequence the pitch change occurred. The mean score for all subjects on this test was about 73% correct. However, because there appear to be no reports of similar tests having been conducted with normally hearing listeners, these findings are rather difficult to interpret.

3.3. Perception of Timbre

3.3.1. Timbre Recognition

One standard definition of timbre is “that attribute of auditory sensation in terms of which a listener can judge that two sounds similarly presented and having the same loudness and pitch are dissimilar” (ASA, 1960). Less strictly, timbre can be described as the quality that characterizes differences in tone (or “tone color”) that are apparent when musical notes are played with the same pitch and loudness on several different instruments. The definition can be generalized to include the perceptual effects of a wide range of properties of acoustic signals (Pratt and Doak, 1976; Grey, 1977). The principal properties are the frequency spectrum and the amplitude envelope of sounds, including changes in those attributes over time, although other characteristics, such as the spatial configuration of sound sources, may also be relevant. However, most published studies on the perception of timbre by users of cochlear implants seem to have focused on the ability of listeners to identify or discriminate the sounds of different musical instruments.

In the study of Schulz and Kerber (1994), eight users of an analog single-channel implant system were asked to identify the instrument playing a melody from a closed set of five alternatives. Even though the set contained only five instruments, the subjects obtained an average identification score of about 35% correct. In contrast, listeners with normal hearing scored approximately 90% on the same test.

Gfeller et al. (2002b) reported results from an instrument identification test carried out with 51 implant recipients using a variety of device types and sound-processing schemes. Twenty normally hearing listeners also completed the test. The sound stimuli were recordings of eight different instruments playing the same brief sequence of notes. Subjects selected each of their responses from a set of 16 possible alternatives. The implant users obtained an average score of 46.6% correct on the test. This result was significantly lower than the mean score of 90.9% obtained by the subjects with normal hearing. Furthermore, the confusions present in the implant users’ responses displayed a diffuse pattern, whereas the errors made by the normally hearing subjects were more often confusions between instruments within the same family (i.e., brass, woodwind, percussion, or strings), rather than across instrument families.

Recordings of only three different instruments were used in an identification test reported by Leal et al. (2003). The same melody was played in a similar pitch range and in a similar style on each of the instruments (i.e., trombone, piano, and violin). Subjects were asked to name the instrument after hearing each recording. Twenty of the 29 users of the Nucleus implant system (with either the ACE or SPEAK sound-processing schemes) who participated in the study identified all three instruments correctly. All except one of the remaining subjects could identify two of the instruments.

In a recent study, 10 recipients of Nucleus implants, all users of the SPEAK sound-processing scheme, were asked to identify 16 different musical instruments in a closed-set procedure (McDermott and Looi, 2004). Recognition scores varied widely, both among subjects and across instrument types.

Figure 7 is a confusion matrix that shows the results for all subjects. It includes the instrument sounds that were tested and the subjects’ responses. The instruments were divided equally into a percussive and a nonpercussive group. Overall, the average score for identification of all instruments by the implant users was approximately 44% correct. In contrast, subjects with normal hearing obtained a mean score of 97% on the same test.

Figure 7.

Confusion matrix showing the results of an experiment in which 10 implant users identified 16 musical instruments in a closed-set procedure. The instrument sounds that were presented are shown in the left column. They were divided into two equal groups: eight were percussive (lower half), and the rest were non-percussive (upper half). Each subject heard each instrument sound a total of eight times. Their responses are shown in each cell of the matrix; the maximum possible count in any cell is 80 (10 subjects × 8 repetitions). Responses in cells that form the main diagonal of the matrix are correct identifications of the instruments; these are shown in bold type. The total number of times each instrument was named in the subjects’ responses is shown in the row at the bottom of the matrix.

As shown in Figure 7, some instruments, such as the drums or xylophone, were identified correctly much more often by the implant users than other instruments, such as the organ or flute. Not surprisingly, more confusion occurred among instruments within the same group (i.e., percussive or nonpercussive) than between groups. For example, the organ was recognized least often out of all 16 instruments, but most of the subjects’ incorrect responses named the violin; none of the incorrect responses included the tambourine or drums. This pattern of results is consistent with the relative salience, for implant listeners, of temporal envelope or rhythmic cues in musical sounds in comparison with other timbre or pitch cues.
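The scoring of a confusion matrix like that in Figure 7 can be made concrete: overall percent correct is the total on the main diagonal divided by all responses, and the off-diagonal errors can be split into within-group and between-group confusions. This Python sketch uses a small hypothetical matrix of four instruments with invented counts, not the Figure 7 data; the function name `summarize` is introduced here for illustration.

```python
def summarize(matrix, labels, group_of):
    """matrix[i][j] = number of times instrument labels[i] was named as
    labels[j]. Returns (percent correct, within-group errors,
    between-group errors)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(labels)))
    within = between = 0
    for i, row in enumerate(matrix):
        for j, count in enumerate(row):
            if i == j:
                continue                      # diagonal cells are correct responses
            if group_of[labels[i]] == group_of[labels[j]]:
                within += count
            else:
                between += count
    return 100.0 * correct / total, within, between


labels = ["drums", "xylophone", "organ", "violin"]
group_of = {"drums": "percussive", "xylophone": "percussive",
            "organ": "non-percussive", "violin": "non-percussive"}
# Hypothetical counts (NOT the Figure 7 data); each row sums to 80,
# i.e., 10 subjects x 8 presentations per instrument.
matrix = [[70, 6, 2, 2],
          [8, 60, 4, 8],
          [0, 2, 30, 48],
          [1, 3, 26, 50]]
percent, within, between = summarize(matrix, labels, group_of)
```

For these invented counts, 88 of the 110 errors (80%) fall within a group, which is the kind of pattern described above.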

3.3.2. Timbre Appraisal

In assessments of timbre appraisal, as distinct from recognition, subjects may be asked to describe the quality of musical instrument sounds by using adjectives such as “beautiful,” or “clear,” or to assign ratings, usually numbers, to the sound quality. The rating scales are typically based on one or more subjective descriptors, such as “pleasantness,” or “naturalness.”

A numerical rating scale that asked listeners to indicate how much the sound quality of 25 different instruments appealed to them was used in the study of Schulz and Kerber (1994). The participants included eight users of an analog single-channel implant system and seven subjects with normal hearing. Although the average quality rating of the implant users for all instruments was significantly lower than that of the normally hearing subjects, the pattern of ratings across the different instrument types was similar for the two subject groups. It seems likely that this pattern represented idiosyncratic variations in the listeners’ liking of each musical instrument rather than a characteristic of the mode of hearing applicable to the subjects in each group or functional details of the sound-processing scheme used by the implant recipients.

Gfeller and Lansing (1991) applied a questionnaire, the “Musical Instrument Quality Rating” form, to obtain simple descriptions of the perceived quality of nine instruments. As mentioned previously, 10 of the 18 subjects who participated in the study were users of the Nucleus F0/F1/F2 feature-extraction speech processing strategy, while the remaining 8 subjects were users of the Ineraid CA scheme. Both of these sound-processing techniques have since been superseded. Nevertheless, the proportion of Ineraid users who rated each of the instrument sounds as “beautiful” or “pleasant” was greater than the corresponding proportion of Nucleus users.

Although the number of participants in each of the two groups was small, it seems plausible that the now-obsolete feature-extraction scheme used with the Nucleus implant was less effective than alternative or newer sound processors at transmitting some characteristics of acoustic signals that contribute particularly to perceived sound quality.

Few published reports appear to have examined directly the differences between several sound processing schemes when used by the same implant recipients for listening to music. One study with 63 Nucleus implant users compared the perceptual performance of the SPEAK scheme with that of MPEAK (Skinner et al., 1994). Although the experiments described in that report were aimed mainly at investigating differences in speech recognition associated with the use of each sound processor, the study included a questionnaire that enabled the participants to rate the processors subjectively based on listening situations encountered commonly in their everyday lives. One of the situations the questionnaire addressed was listening to music. The results showed that 83.9% of the subjects preferred the SPEAK scheme to the MPEAK strategy. None preferred MPEAK over SPEAK, although 10.7% stated that the two schemes were about the same for listening to music. The responses of the remaining subjects suggested that they were unable to make a definite judgment for that condition.

In a recent comparison of timbre appraisal between implant users and listeners with normal hearing, two types of measures were obtained (Gfeller et al., 2002b). The first was a rating of overall pleasantness on a scale of 0 to 100. The second obtained separate ratings for three perceptual dimensions: dull–brilliant, compact–scattered, and full–empty, using similar numerical scales.

The sound stimuli were recordings of eight different musical instruments representing the brass, woodwind, and strings (including piano) instrument families. The results of the first experiment showed that, on average, implant users gave ratings that were about 17 points lower than the normally hearing listeners. The pattern of ratings across instrumental families was generally similar for the two subject groups, although the implant listeners gave particularly low ratings to the stringed instruments.

The results of the second experiment were consistent with this finding, showing that the implant users rated the strings as poorer in quality (i.e., more scattered, less full, and more dull) on all three of the perceptual dimensions. Furthermore, compared with the normally hearing listeners, they rated the higher-pitched instruments as sounding more scattered and less brilliant.

Ratings of liking and subjective complexity were compared between cochlear implant users and listeners with normal hearing by Gfeller et al. (2003). The test stimuli included excerpts of music representing three genres: classical, country–western, and pop. The results showed that, on average, the scores for overall appraisal (i.e., liking) given to classical music by the implant users were significantly lower than those given by the normally hearing listeners. Furthermore, the implant users rated excerpts of country–western and pop music as significantly more complex. Appraisal scores for these two genres and complexity ratings for classical music were generally similar for the implant users and the normally hearing subjects.

4. Effects of Training

Some researchers have investigated whether it might be beneficial to train implant users to improve their perception of certain essential characteristics of music. The rationale is principally that when implant recipients use their devices in their everyday lives, they are likely to gain more experience in hearing speech and in learning to understand it than in becoming familiar with music. Thus, the improvements expected in speech recognition with use of a hearing prosthesis over time may not be matched by improvements in perception of music.

This is supported by evidence that some implant users obtain less enjoyment from listening to music postimplantation than they recall from the time before their hearing had deteriorated to levels at which a cochlear implant became the most appropriate form of treatment. For example, in one study, Leal et al. (2003) reported that only 21% of experienced implant recipients agreed that they enjoyed listening to music and took opportunities to listen to it. In contrast, of the subset of those subjects who were able to describe their listening interests before losing their hearing, 41% agreed with the same statement.

In another study, Gfeller et al. (2000b) reported that about one-third of implant users stated that they tended to avoid music because of its aversive sound quality.

Gfeller et al. (1999) and Gfeller (2001) described an aural training program that they had developed specifically to provide implant recipients with structured experience in listening to musical sounds. The program was designed to be self-administered using a personal computer and consisted of 48 sessions (or “lessons”), scheduled 4 times per week. The training materials included musical stimuli containing predetermined pitch sequences and recorded sounds of different instruments. The training procedures included tasks that were designed to help implant listeners to discriminate, identify, or accurately describe the materials.

In a study that investigated the effects of applying this training program in an attempt to improve the perceptual abilities of implant users when listening to music, appraisal ratings were obtained for a set of complex songs representing various musical genres (Gfeller et al., 2000a). Appraisal was measured on two scales: liking and complexity. The scales had endpoints of 0 and 100, with larger numbers indicating better liking and greater perceived complexity.

Twenty-four recipients of the Nucleus 24-electrode cochlear implant system participated. They were divided into a control group and a training group; only the latter completed the 12-week training program. Results for all subjects showed an average liking rating of about 56, and an average complexity rating of about 41. The training program appeared to produce small but significant positive effects, with an increase in liking of approximately 6 points (on the 0–100 scale), and a reduction in perceived complexity of approximately 4 points. The authors of the study argued that a lower rating of complexity was associated with better appreciation of musical sounds.

The study also assessed recognition of melodies (Gfeller et al., 2000a). Subjects who had participated in the training program improved their average scores by approximately 11 percentage points for simple melodies and by about 33 percentage points for complex songs. By comparison, the average recognition score of the control subjects on the same tasks was only approximately 5% correct.

Although the effects of training were large and statistically significant, it was less clear how well subjects could generalize what they had learnt to new melodies. For example, after completing the training program, subjects correctly recognized only a few of the previously unfamiliar simple melody items. However, the same subjects correctly recognized a larger proportion of previously unfamiliar complex songs after training.

The same training program was also evaluated for its effect on the recognition and appraisal of musical timbre by implant recipients (Gfeller et al., 2002c). The assessments included a test in which subjects identified which of 8 instruments was being played in sound recordings, choosing their responses from a closed set of 16 possible instruments. In addition, two types of appraisal measure were obtained: a rating of overall pleasantness, and separate ratings for three specific perceptual dimensions of the sounds, as described previously (Gfeller et al., 2002b).

Completing the training program increased the average score on the instrument identification test by nearly 20 percentage points. No improvement in recognition over a similar interval was found for the control implant users, whose average score remained at about 33% correct. Overall quality ratings given by the participants in the training group also increased significantly, whereas those of the control subjects did not.

However, the specific effects of training on the appraisal ratings for the three separate perceptual dimensions were found to be generally small.

Few reports appear to have been published on the music listening experience and skills of children with cochlear implants, even though children make up a large proportion of implant recipients. Interestingly, responses to a questionnaire (Gfeller et al., 1998) suggested that many children with implants took part in formal or informal musical activities. A music training program designed specifically for children with implants was described and evaluated by Abdi et al. (2001). The program involved children in either perceptual tasks (learning to discriminate rhythms and pitches) or production tasks (learning to play a simple musical instrument). Although a detailed formal evaluation of the program's effectiveness does not seem to have been published, brief reports on the musical development of 14 participating children suggested that it might have been beneficial.

5. Psychophysical Studies Relevant to Music Perception

The research studies discussed above may be generally summarized as follows:

First, perception of musical rhythm by users of cochlear implants has been found to be similar to that of listeners with normal hearing. This is not surprising when the results of relevant psychophysical studies are considered. Perception of rhythm in music is related to the perception of the duration of sounds and the gaps between sounds. To perceive rhythm patterns in most types of music adequately, the temporal resolution required for either duration or gaps is probably on the order of tens of milliseconds (ms).

Several psychophysical studies using synthetic, nonmusical signals have shown that most implant users can resolve temporal changes in signals finely enough to perceive musical rhythms. For example, the gap detection threshold for simple signals of moderate loudness has been reported to be usually less than 10 ms (Shannon, 1989; Shannon, 1993), although it may exceed 50 ms for very soft signals.
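To make the estimate of "tens of milliseconds" concrete, the following minimal sketch converts tempo into the durations of common note values and compares them with a gap detection threshold of 10 ms. The tempi are arbitrary examples, a quarter note is assumed to last one beat, and note durations are only a rough proxy for the gaps a listener must detect.

```python
# Typical gap detection threshold for moderate-loudness signals, per the text.
GAP_THRESHOLD_MS = 10.0

def note_duration_ms(tempo_bpm: float, beats: float) -> float:
    """Duration in ms of a note lasting `beats` beats at `tempo_bpm` beats per minute."""
    return 60_000.0 / tempo_bpm * beats

# Illustrative note values; a quarter note is assumed to last one beat.
NOTE_VALUES = {"quarter": 1.0, "eighth": 0.5, "sixteenth": 0.25}

for tempo in (60, 120, 180):
    for name, beats in NOTE_VALUES.items():
        d = note_duration_ms(tempo, beats)
        print(f"{tempo:3d} bpm {name:9s} {d:6.1f} ms = {d / GAP_THRESHOLD_MS:4.1f} x threshold")
```

Even a sixteenth note at 180 beats per minute lasts about 83 ms, several times the typical threshold, which is consistent with the finding that rhythm is conveyed relatively well by implants.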

Second, users of implant systems typically have great difficulty recognizing melodies, even when the tunes are familiar and are played as a sequence of isolated notes without accompaniment or harmony. If distinctive rhythm patterns are noticeable in the tunes presented in the tests, those patterns appear to provide most of the information that implant recipients use when they identify the melodies. These findings provide evidence of one of the most serious problems that confronts implant users when they listen to music: pitch information is conveyed very poorly. Understanding why this problem is present in existing cochlear implant systems, and what might be done in practice to alleviate it, are substantial topics that are further discussed later.

Third, perception of timbre has generally been reported to be much poorer for implant users than for listeners with normal hearing. The major finding is that most implant recipients, using only auditory cues, cannot readily identify the musical instrument being played, although they can sometimes discriminate between instruments when the temporal envelopes of the sounds differ obviously, for example, when distinguishing the sound of a flute from that of a drum. This suggests that the spectral shape that characterizes the timbre of musical instruments is represented only crudely in the electric stimuli generated by existing implant systems. This topic is also discussed in more detail later.

Finally, appraisal ratings that indicate the subjective pleasantness of musical sounds (i.e., how much listeners like them) have been reported to be lower for implant users than for listeners with normal hearing. Music training programs designed specifically for implant users can improve appraisal ratings. However, subjective judgments of the quality and pleasantness of musical sounds seem likely to remain relatively poor unless better information about pitch and timbre can be made available to implant users. Some practical suggestions about how such improvements might be achieved are presented towards the conclusion of this article.

5.1. Perception of Pitch

5.1.1. Introduction

Previous studies have shown that users of multiple-electrode cochlear implants may perceive pitch in two fundamental ways:

The first mechanism relies on rapid temporal fluctuations in electric stimuli. The percept associated with such temporal patterns is often called rate pitch, although a similar pitch percept, related to modulations in the envelope of a carrier stimulus, also exists. Typically, the amplitude-modulated carrier consists of a train of pulses presented at a relatively high rate.

The second mechanism depends on the position in the cochlea at which the electric stimulus is delivered. The associated percept is usually called place pitch.

However, some researchers have questioned whether varying the place of stimulation elicits a change in the perceived pitch that is able to convey melodic information; instead, they suggest that changes in place mainly affect the perceived timbre (McDermott and McKay, 1997; Moore and Carlyon, 2005). In experimental research with cochlear implant users, it is nearly always impossible to distinguish absolutely between changes in pitch and changes in timbre. Therefore, this distinction will be set aside temporarily in the brief review of relevant psychophysical studies that follows.

First, however, it is important to clarify a few terms that are widely used in the literature on these topics. In experiments that require subjects to detect whether two sounds differ, or which one of three or more sounds differs from the others, the ability under investigation is discrimination. Successful discrimination does not show that subjects perceived a difference in some predetermined characteristic, such as pitch or timbre. In practice, subjects may use any perceptible difference between the sounds to perform the task.

If, however, subjects are asked to listen to two sounds presented in sequence and to judge which one has the higher pitch, the procedure is often called pitch ranking. Such a procedure assumes, through the instructions given and the choice of stimulus parameters, that the perceptual quality subjects use to perform the task is, in fact, pitch. Of course, subjects might also rank the stimuli successfully on the basis of some other quality that changes with the signals, such as timbre or even loudness.
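A two-interval pitch-ranking run might be scored as sketched below. The scoring rule makes the same assumption as the procedure itself: the stimulus with the higher rate is treated as the correct answer for "higher in pitch". The trial format and function names are hypothetical, and the exact one-sided binomial test against 50% chance is an illustrative choice of analysis, not one attributed to the studies reviewed here.

```python
from math import comb

def score_pitch_ranking(trials):
    """Count correct responses in a two-interval pitch-ranking run.

    Each trial is (rate_1_hz, rate_2_hz, response), where response (1 or 2) is the
    interval the subject judged higher in pitch. The higher-rate interval is treated
    as correct, i.e. the procedure's assumption that pitch follows rate.
    """
    n_correct = 0
    for rate_1, rate_2, response in trials:
        if rate_1 == rate_2:
            raise ValueError("the two intervals must differ in rate")
        expected = 1 if rate_1 > rate_2 else 2
        n_correct += int(response == expected)
    return n_correct, len(trials)

def p_at_least(n_correct: int, n_trials: int, p_chance: float = 0.5) -> float:
    """Exact one-sided binomial probability of >= n_correct correct by guessing."""
    return sum(comb(n_trials, k) * p_chance ** k * (1 - p_chance) ** (n_trials - k)
               for k in range(n_correct, n_trials + 1))

# Hypothetical run of three trials with pulse rates in Hz.
correct, total = score_pitch_ranking([(100, 150, 2), (200, 120, 1), (80, 90, 1)])
print(correct, total, p_at_least(correct, total))
```

For example, 15 correct responses out of 20 trials give a probability of about 0.021 under guessing alone. A significant score still does not prove that the subject was attending to pitch rather than to timbre or loudness.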

Pitch experiments can also involve scaling or identification, in which subjects are asked either to assign numbers in an orderly way to the perceived pitch of each of a set of sounds (scaling) or to recognize and label each one of a small number of sounds (identification). Studies that use ranking, scaling, and especially identification provide only limited information about musical pitch. In particular, no information can be obtained from these procedures about the perceived size of musical intervals (e.g., whether two signals that differ in frequency by a factor of two are perceived as spanning one octave).

The ability to perceive interval size accurately is crucial to music appreciation; for instance, melodies will sound out of tune if their intervals are heard incorrectly. Unfortunately, precise judgments of interval size require listeners to have had considerable formal musical training before implantation, and to have retained that knowledge in a form that can be applied to the unnatural signals heard through the device. Consequently, only a few published studies have investigated this most important aspect of musical pitch perception.
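Interval size can be expressed in equal-tempered semitones as 12·log2(f_high/f_low), or in cents (100 cents per semitone). The sketch below assumes 12-tone equal temperament as the reference tuning and shows how a frequency ratio maps to an interval and how far a sounded interval departs from an intended one; the function names are illustrative.

```python
import math

def interval_semitones(f_low: float, f_high: float) -> float:
    """Size of the interval from f_low to f_high in equal-tempered semitones."""
    return 12.0 * math.log2(f_high / f_low)

def mistuning_cents(f_low: float, f_high: float, intended_semitones: float) -> float:
    """How far the sounded interval departs from the intended one (100 cents = 1 semitone)."""
    return 100.0 * (interval_semitones(f_low, f_high) - intended_semitones)

print(interval_semitones(110.0, 220.0))   # frequency ratio 2:1, one octave -> 12.0
print(mistuning_cents(200.0, 300.0, 7))   # just 3:2 fifth vs equal-tempered fifth
```

A 2:1 ratio gives exactly 12 semitones, while a just 3:2 fifth is about 2 cents wider than the equal-tempered fifth of 7 semitones. Listeners with normal hearing and musical training can judge intervals on this fine scale.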

5.1.2. Temporal Pitch Mechanisms

The simplest electric stimuli that psychophysical researchers have used to investigate auditory perception with cochlear implants include sine waves and regular pulse trains (see Figure 2). Usually these signals are delivered to a single cochlear location. In single-channel implants there is, of course, no way of changing the site of stimulus delivery, but such variation is possible with multiple-electrode devices. To select a single stimulation site in these implants, either one active electrode is used in a monopolar or common-ground configuration, or two closely spaced electrodes are used in a bipolar mode (see Figure 3). Although stimuli are delivered to only one cochlear position at a time, the site is often varied systematically in experiments by selecting different active electrodes.

Numerous researchers have reported that varying the rate of a steady pulse train (or the frequency of a sinusoidal stimulus) presented at one cochlear site results in a change in the pitch perceived. Typically, the pitch increases with increasing rate over a range from about 50 to 300 Hz, although the upper limit varies across electrode positions and among implant recipients (Moore and Carlyon, 2005). At lower rates, the signal tends to be perceived as a buzz or fluttering sound that does not seem to have a salient pitch. With very high rates, the pitch of the percept is affected only slightly by changes in the rate, and may instead be dominated by the location of the active electrode.

As described previously, none of the sound-processing schemes currently most commonly used with cochlear implants (i.e., ACE, CIS, and SPEAK) is designed to vary the stimulation rate to represent any feature of the acoustic signal. Some earlier speech-processing strategies, such as MPEAK, used an estimate of the voice F0 to control the stimulation rate, but such feature-extraction schemes have since been superseded.

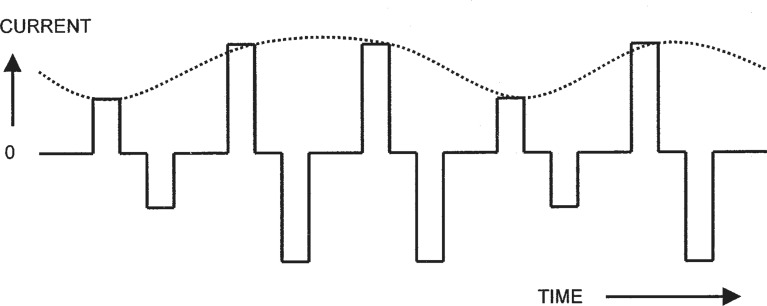

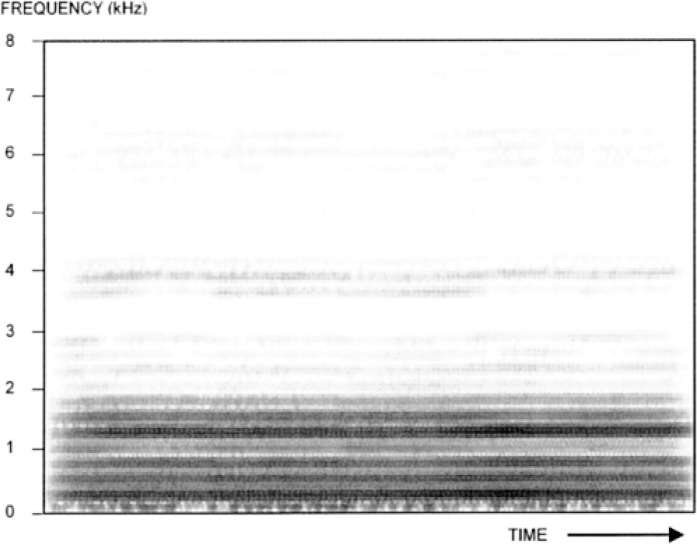

The newer constant-rate pulsatile stimulation schemes may, however, also convey some information about F0 in the temporal domain. Typically, they use a relatively high stimulation rate and modulate the amplitude of the pulse train on each active electrode according to the estimated envelope (or amplitude) of the input signal in a corresponding frequency band. The frequency analysis of the input signal is usually performed by a bank of bandpass filters or by a digital spectrum estimation technique. In either case, the envelope of the signal in each band generally contains modulations at the fundamental frequency of the input, because a band wide enough to pass two or more adjacent harmonics produces an envelope that fluctuates at their frequency spacing, which equals F0. Psychophysical studies have used idealized forms of these stimulation patterns to determine whether pitch information can be derived from amplitude-modulated, high-rate pulse trains (see Figure 8).
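Why band envelopes carry F0 can be checked numerically. The sketch below treats the signal in one analysis band as a sum of harmonics of F0 and computes its Hilbert envelope as the magnitude of the analytic signal. This is an idealization assumed for illustration: real processors estimate envelopes by other means (for example, rectification and smoothing, or FFT bin magnitudes), and the F0, harmonic numbers, and pulse rate used here are arbitrary examples.

```python
import cmath
import math

def band_envelope(t: float, f0: float, harmonics: dict[int, float]) -> float:
    """Hilbert envelope at time t (seconds) of a band containing harmonics of f0.

    `harmonics` maps harmonic number k to amplitude; all phases are assumed zero.
    For a sum of cosines, the analytic signal is sum(A_k * exp(i*2*pi*k*f0*t)),
    and its magnitude is the envelope.
    """
    z = sum(a * cmath.exp(2j * math.pi * k * f0 * t) for k, a in harmonics.items())
    return abs(z)

F0 = 125.0                        # example fundamental frequency (Hz)
BAND = {7: 1.0, 8: 1.0, 9: 1.0}   # harmonics at 875, 1000, 1125 Hz fall in one band
PULSE_RATE = 1000.0               # example constant stimulation rate (pulses/s)

# Sample the envelope at the times of the first 16 carrier pulses on this electrode.
levels = [band_envelope(n / PULSE_RATE, F0, BAND) for n in range(16)]
for n, level in enumerate(levels):
    print(f"pulse {n:2d} at {n:2d} ms: level {level:.3f}")
```

The sampled levels repeat every 8 pulses (8 ms, i.e., 1/F0), so the pulse amplitudes on this electrode are modulated at F0. If the band contained only a single harmonic, the envelope would be flat and no such temporal cue would appear.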

Figure 8.

Illustration of an amplitude-modulated current pulse train. The carrier is a sequence of biphasic pulses delivered at a constant rate, as in Figure 2 (upper panel). The level of each pulse is determined by the amplitude of a signal waveform, which is shown as the dotted line.

In general, these studies have shown that a pitch is associated with the modulation frequency (McKay et al., 1995; McKay, 2004). The range of modulation frequencies that produces a systematic variation in pitch is similar to the range found for changes in pulse rate at low rates (about 50–300 Hz). With amplitude modulation, the rate of the carrier pulse train may also affect the perceived pitch. In particular, to avoid anomalies in the relationship between pitch and modulation frequency, the carrier rate must be at least four times the modulation frequency (McKay et al., 1994).
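The stimulus shown in Figure 8 and the carrier-rate guideline can be combined in a short sketch. It generates the time and level of each pulse in an idealized, single-electrode train whose pulse levels follow a sinusoidal modulator, and it rejects parameter combinations in which the carrier rate is less than four times the modulation frequency. Levels are expressed as a fraction of an arbitrary maximum; the mapping to clinical current levels between threshold and comfortable loudness is omitted, and the function and parameter names are hypothetical.

```python
import math

def am_pulse_train(carrier_pps: float, mod_hz: float, duration_s: float,
                   depth: float = 1.0, max_level: float = 1.0):
    """Return a list of (time_s, level) pairs, one per biphasic carrier pulse.

    Each pulse level is set by a raised-cosine modulator that varies between
    max_level * (1 - depth) and max_level.
    """
    # Guideline reported by McKay et al. (1994): carrier >= 4 x modulation frequency.
    if carrier_pps < 4 * mod_hz:
        raise ValueError(
            f"carrier rate {carrier_pps} pps is below 4 x {mod_hz} Hz modulation")
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    n_pulses = round(duration_s * carrier_pps)
    train = []
    for n in range(n_pulses):
        t = n / carrier_pps
        modulator = 1.0 - depth * (1.0 - math.cos(2 * math.pi * mod_hz * t)) / 2.0
        train.append((t, max_level * modulator))
    return train

# Example: 100 Hz modulation of a 1000 pps carrier for 20 ms (10 pulses per cycle).
for t, level in am_pulse_train(1000, 100, 0.02):
    print(f"{t * 1000:5.1f} ms  {level:.3f}")
```

Requesting 100 Hz modulation on a 300 pps carrier raises an error, because only three pulses would fall within each modulation cycle.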

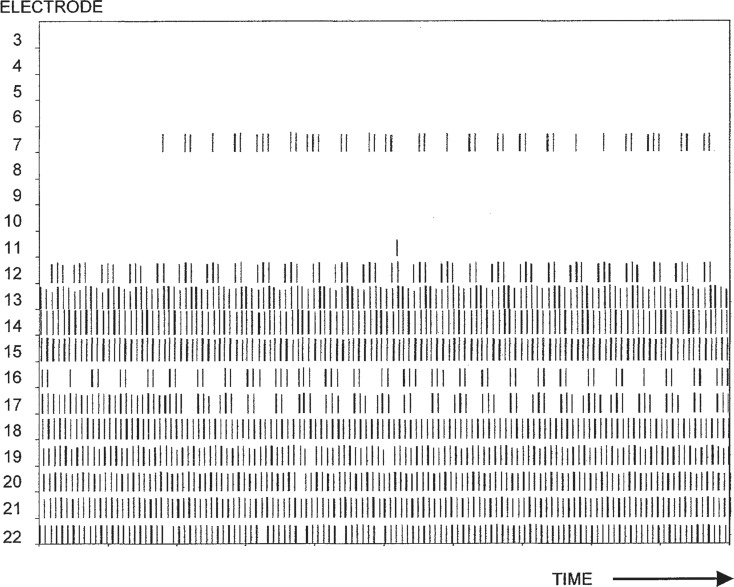

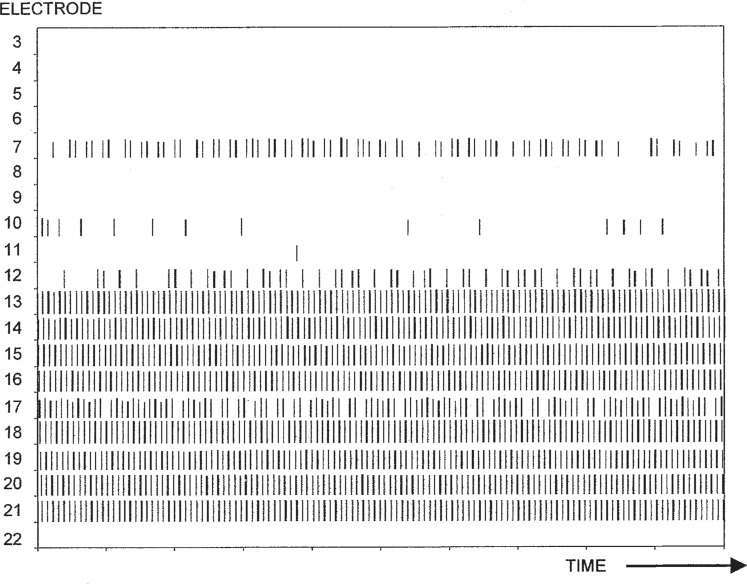

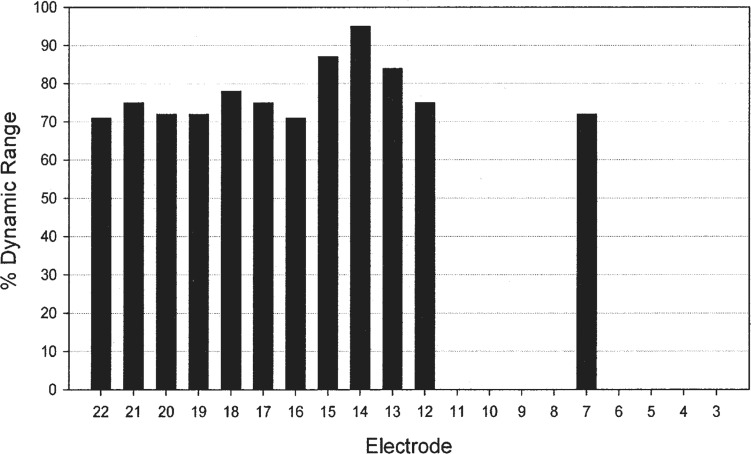

Most of the above studies have employed pitch-scaling procedures. Their results suggest that musical pitch information may be derived from temporal patterns in electric stimuli delivered to a single intracochlear site, but only over a restricted, relatively low range of rates or modulation frequencies. For most implant users who have participated in these experiments, this range encompasses only approximately the two to three octaves below middle C on the piano keyboard.
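The arithmetic behind this statement can be checked in a few lines. The sketch assumes 12-tone equal temperament with A4 = 440 Hz (so middle C, C4, is about 261.6 Hz) and uses the nominal 50–300 Hz limits quoted above; as noted earlier, the upper limit varies across electrodes and recipients.

```python
import math

# Middle C (C4) in 12-tone equal temperament, nine semitones below A4 = 440 Hz.
MIDDLE_C_HZ = 440.0 * 2 ** (-9 / 12)

def octaves_between(f_low: float, f_high: float) -> float:
    """Distance in octaves between two frequencies (positive if f_high > f_low)."""
    return math.log2(f_high / f_low)

LOW_HZ, HIGH_HZ = 50.0, 300.0   # nominal limits of rate/modulation pitch from the text

span = octaves_between(LOW_HZ, HIGH_HZ)
below_c = octaves_between(LOW_HZ, MIDDLE_C_HZ)
above_c = octaves_between(MIDDLE_C_HZ, HIGH_HZ)
print(f"usable span: {span:.2f} octaves")
print(f"lower limit: {below_c:.2f} octaves below middle C ({MIDDLE_C_HZ:.1f} Hz)")
print(f"upper limit: {above_c:.2f} octaves above middle C")
```

The nominal range spans about 2.6 octaves, starting roughly 2.4 octaves below middle C and ending slightly above it, which is broadly consistent with the approximate description given above.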