Abstract

It is widely accepted that hearing loss increases markedly with age, beginning in the fourth decade (ISO 7029, 2000). Age-related hearing loss is typified by high-frequency threshold elevation and associated reductions in speech perception because speech sounds, especially consonants, become inaudible. Nevertheless, older adults often report additional and progressive difficulties in the perception and comprehension of speech, particularly in adverse listening conditions, that exceed those reported by younger adults with a similar degree of high-frequency hearing loss (Dubno, Dirks, & Morgan), leading to communication difficulties and social isolation (Weinstein & Ventry). Some of the age-related decline in speech perception can be accounted for by peripheral sensory problems, but cognitive aging can also be a contributing factor. In this article, we review findings from the psycholinguistic literature, predominantly from the last four years, and present a pilot study illustrating how normal age-related changes in cognition and the linguistic context can influence speech-processing difficulties in older adults. We also discuss how, for significant progress to be made in understanding and improving the auditory performance of aging listeners, future research will have to be much more specific not only about which interactions between auditory and cognitive abilities are critical but also about how they are modulated in the brain.

Keywords: hearing loss, aging, speech perception

Introduction

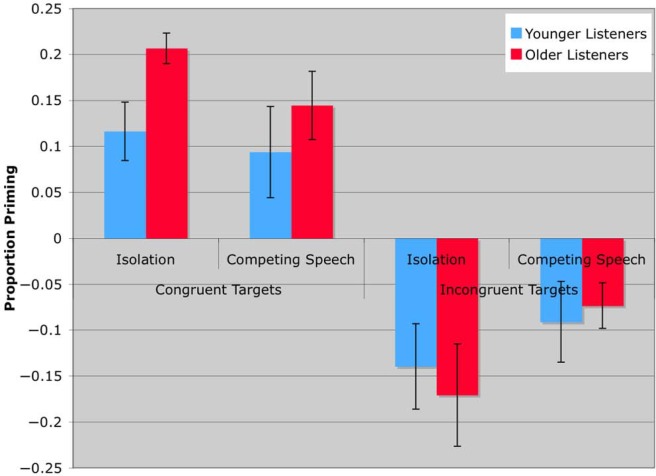

A comprehensive Trends in Amplification (TIA) review (Pichora-Fuller & Singh, 2006) examined the previous two decades of literature on the normal age-related changes in audition and cognition that affect spoken language comprehension. Such comprehension involves both sensory encoding of acoustic information and the processing of that information by higher cognitive centers. Aging is known to impact both the peripheral sense of hearing and some cognitive functions necessary to make sense of the peripherally degraded auditory input. The authors (Pichora-Fuller & Singh, 2006) concluded that understanding how these auditory and cognitive processes interact when older adults listen, comprehend, and communicate in everyday situations would be influential in future developments in audiologic rehabilitation. However, since then, many researchers working in the areas of cognitive processing and sensory perceptual processing, especially hearing, have continued to approach the special problem of spoken language comprehension in older adults from their respective perspectives, not always fully appreciating or understanding the issues and information available from those viewing the same problem from the opposite perspective. This article aims to bridge that gap by bringing to the readers of TIA an update on our (psycholinguist, neuroscientist) interpretation of recent findings (primarily over the last four years) focused on the psycholinguistic contextual influences associated with speech-processing difficulties in older adults. We frame our discussions within a “top-down” cognitive-auditory interactive model of speech processing (see Figure 1 for an illustration of such a model).

Figure 1.

A schematic of speech processing

Note: Bottom-up processing is illustrated by the white arrows. The acoustic signal proceeds serially through each of the levels of processing in a forward hierarchical manner. Top-down processing is illustrated by the blue colour scheme, in which each level of speech processing has its own function (distinct colour) but remains directly linked to, and bidirectionally modulated by, executive/cognitive processes (all shades of blue).

However, before we start our “top-down” review, we recap briefly in the section on peripheral and central auditory system some of the audiologic “bottom-up” deficits associated with normal aging relevant to spoken language comprehension. For a more comprehensive work, we direct the readers to the excellent handbook (Gordon-Salant, Frisina, Popper, & Fay, 2010). In the section on cognition and contextual influences affecting speech and nonspeech processing, we then review recent evidence for an integrative “top-down” model of speech processing and, in the Pilot Study section, present data from our lab investigating the relationship between normal aging and the contextual influences affecting speech and meaningful sound processing, a topic not systematically explored to date. In the section on age-related differences in brain function during speech processing, we review the neuroimaging data that offer biological markers for this cognitive plasticity in aging listeners. We conclude with a section on rehabilitation discussing ways that cognitive tools may be clinically useful in audiological rehabilitation and hearing aid fitting. We hope that, in doing so, new insights into the complex nature of the problem, and novel approaches to it, might lead to the improvement of spoken language comprehension in older adults.

Peripheral and Central Auditory System

Age-related peripheral hearing loss is highly prevalent, with some level of auditory impairment present for almost all people above 70 years (see Gates & Mills, 2005 for a review). Hearing loss in older people is associated with a number of pathologies, each with different consequences for the hearing system: sensory (loss of outer hair cells), metabolic (atrophy of the stria vascularis on the lateral wall of the cochlea), and neural (loss of ganglion-nerve cells). Strial degeneration is probably the dominant source of true presbycusis, affecting the operation of the cochlear amplifier and the sensitivity of the ear to a wide range of intensities. Age-related changes to the auditory nerve may lead to less synchronous activity and commensurate difficulties resolving temporal information (Gates & Mills, 2005). However, damage to the outer hair cells can be largely attributed to non-age-specific noise exposure, although the environmental consequences of noise may be more damaging in elderly populations.

Presbycusis as a label has been suggested to be of limited value because it fails to differentiate age-related auditory problems in terms of etiology, biologic damage independent of peripheral loss, or functional significance (Kiessling et al., 2003). Nevertheless, despite the heterogeneity of these hearing problems, the result is significant psychoacoustic change for the aging listener. The most pronounced age-related change is elevated auditory thresholds (i.e., reduced sensitivity), with sensitivity declining across all frequencies but most markedly at higher frequencies. However, even accounting for audibility, there are also age-related declines in processing temporal auditory information (e.g., deficits detecting rapid gaps in tone sequences) and binaural information (see Gordon-Salant, 2005; Pichora-Fuller & Souza, 2003). Changes in temporal and binaural processing in the absence of amplitude modulation (AM) differences are frequently attributed to age-related declines in more central auditory processing, for example, in the auditory brainstem (see Walton, 2010 for a review).

Audiometric changes resulting from peripheral hearing loss can account for much of the speech-perception difficulties accompanying age due to a reduction in the amount of auditory information ascending beyond the inner ear. However, audiometric thresholds are most successful at predicting speech performance in the elderly for simple sounds, for example, clear words presented in quiet or simple auditory environments. When more complex stimuli and environments are used, older participants do perform more poorly than expected, given their audiogram (Stewart & Wingfield, 2009). Everyday speech processing (>140 words a minute) requires rapid temporal resolution of the incoming auditory signal that declines with age. For example, listening to time-compressed speech is disproportionately difficult for older participants (Jenstad & Souza, 2007). In addition, older listeners are less sensitive than younger listeners to binaural cues in speech (Dubno, Ahlstrom, & Horwitz, 2008). Binaural cues enhance auditory localization of sound sources, improving speech recognition thresholds in distracting situations (Dubno, Ahlstrom, & Horwitz, 2002). Thus, noisy environments present a particular problem for older listeners, where multiple talkers mask the auditory input and binaural cues play a greater role. Nevertheless, as Humes (2007) argued, although audibility is the primary contributor to the speech-understanding difficulties of older adults in unaided listening, it is other factors, especially cognitive factors, such as working memory and attention, that become evident when the role of audibility has been minimized. In the next section, we will explore how speech perception in complex auditory environments also presents a considerable challenge for nonauditory cognitive processing that is not predictable from the audiogram.

Cognition and Contextual Influences Affecting Speech and Nonspeech Processing

Alongside declines in peripheral and central auditory processing with age, there are also declines in a broad range of nonauditory and non-language-specific cognitive systems that may be relied on to compensate for deteriorating central and peripheral auditory processing (Kiessling et al., 2003; Pichora-Fuller, 2003). Age-related deficits in a number of so-called executive functions, including working memory (the ability to maintain and use information in the service of performing a cognitive task; Bialystok, Craik, & Luk, 2008; Just & Carpenter, 1992) and inhibitory control (the ability to ignore irrelevant information during cognitive processing; Bialystok et al., 2008; Hasher & Zacks, 1988; but compare Murphy, McDowd, & Wilcox, 1999), have been reported, as well as a general reduction in information processing speed for older individuals (Salthouse, 1996). Declines in these aspects of cognitive processing may affect older listeners' capacity to compensate for adverse listening conditions in the perception of speech (compare Cohen, 1987). The first large-scale studies to investigate the contribution of auditory and cognitive factors to speech-processing difficulties found that the degree of audiometric loss accounted for most of the variance in performance, with less variance attributable to general cognitive factors such as processing speed and working memory (Humes, 1996; van Rooij & Plomp, 1992), and reported that the relative balance between auditory and cognitive contributions to speech processing did not change with age (van Rooij & Plomp, 1992). However, the behavioral measures generally used to assess speech intelligibility, such as the repetition of single words, do not necessarily reflect higher level meaning comprehension and may therefore underestimate the role of cognitive factors in the reception of speech in noise. 
This section will examine the interaction of cognitive processes and spoken language comprehension in normal aging and will consider the performance of older listeners from a psycholinguistic perspective, taking into account the relationship between the sensory encoding of speech and the activation of meaning representations in semantic memory.

One factor that has been shown to have a particular influence on speech perception is the linguistic context in which a speech stimulus occurs. The effect of a meaningful sentence context on word identification in noise for normal-hearing younger adults is well documented (Bilger, Nuetzel, Rabinowitz, & Rzeczkowski, 1984; Gordon-Salant & Fitzgibbons, 1997). Recent evidence suggests that the use of contextual cues in word recognition is spared in older adults, who can show larger context effects than younger listeners, particularly under challenging listening conditions (Pichora-Fuller, 2008; for a comprehensive review, see Pichora-Fuller & Singh, 2006). For example, older adults benefit more from a meaningful sentence context than younger listeners in the identification of speech presented in multitalker babble (Frisina & Frisina, 1997; Pichora-Fuller, Schneider, & Daneman, 1995; but compare Dubno, Ahlstrom, & Horwitz, 2000). Furthermore, although older listeners experience greater difficulty than younger listeners in recognizing low-frequency words with phonologically similar neighbors, this effect is eliminated when these words are presented in a biasing context (Sommers & Danielson, 1999). Interestingly, older adults' word identification performance also improves for test stimuli produced by familiar speakers, particularly when there is a semantic bias (Yonan & Sommers, 2000). Similarly, when identifying words in noise-vocoded sentences, older adults both benefit more from semantic bias than younger adults and show a greater priming effect when the vocoded sentence context is preceded by an acoustically intact version (Sheldon, Pichora-Fuller, & Schneider, 2008). This suggests that older listeners are able to use multiple sources of information in a “top-down” manner to aid perceptual processing. The general influence of higher level cognitive processes on speech perception in older adults is supported by a more recent study by George et al.
(2007), who report that in older individuals with normal hearing, text reception threshold—a nonauditory task requiring the comprehension of visually masked sentences, thought to reflect modality-independent cognitive skills—is a better predictor of speech reception threshold in noise than pure-tone audiometric thresholds or measures of spectral or temporal acuity. Older adults may also use similar top-down processes in the perception of nonspeech sounds. Murphy, Schneider, Speranza, and Moraglia (2006) report that the use of expectancy-based attentional control to modulate auditory gain is preserved in older listeners, such that the identification of tones based on intensity is disrupted by the introduction of a novel, higher intensity tone in both older and younger adults. Furthermore, in music perception, older listeners demonstrate better performance in tests of melodic processing for culturally known scales (Lynch & Steffens, 1994).

The influence of top-down processing in older individuals may reflect a tendency to draw on intact cognitive resources as a means of compensating for the perceptual decrements associated with normal aging. In the case of speech perception, older listeners may make use of spared conceptual and semantic knowledge to disambiguate a degraded signal (Schneider, Daneman, & Pichora-Fuller, 2002; Wingfield & Tun, 2007). Many studies have suggested that semantic processing is relatively preserved in older adults. Older individuals typically score higher on vocabulary tests than younger participants, and demonstrate semantic organization similar to that of younger adults when tested on word association measures (Burke & Peters, 1986), although the pattern of word association responses in older adults may be less heterogeneous. Further evidence comes from priming studies in which target words in a word-recognition task are preceded by a word or sentence that is either related or unrelated in meaning (Meyer & Schvaneveldt, 1971, 1976; Stanovich & West, 1983; similar results have also been obtained using event-related brain potentials—for review, see Kutas & Federmeier, 2000). Both older and younger individuals are faster to recognize words in the context of a semantically related word or sentence (Laver, 2009; Madden, Pierce, & Allen, 1993), and some studies have reported larger priming effects for older adults than younger adults (see meta-analysis by Laver & Burke, 1993). Age-related differences in the magnitude of sentence priming effects are exaggerated when visual target words are perceptually degraded (Madden, 1988), lending support to the proposal that contextual information may help older individuals to compensate for disturbances of sensory processing. 
However, Wingfield, Alexander, and Cavigelli (1994) report that older listeners only show increased contextual benefit relative to younger listeners when the context precedes the target, whereas younger individuals are influenced by contextual information presented either before or after the target. Consistent with previous studies (Gordon-Salant & Fitzgibbons, 1997), Wingfield and colleagues attribute the performance of older adults to a difficulty in maintaining sentence-level information in working memory in the service of retrospective analysis. Thus, older individuals appear to show increased benefit from a supportive context only when available attentional and memory resources are sufficient to allow top-down processing to occur.

The possibility that top-down processing incurs increased demands on cognitive resources has particular implications for listening comprehension in older listeners. On one hand, the sensory disturbances experienced by older adults necessitate an increased reliance on meaningful context to aid perceptual processing, particularly under adverse listening conditions; on the other hand, both sensory impairments and noisy auditory environments make greater demands on resources, which may interfere with the effective top-down use of contextual information (Wingfield & Tun, 2007). This interference may be more pronounced for older adults, who may have limitations on working memory capacity or inhibitory control. Thus, older adults may show an increased benefit from context when top-down processing is available to compensate for impoverished sensory input but a diminished benefit under conditions of increased attentional demand. The interaction of sensory disturbances and available cognitive resources was explored by Pichora-Fuller and colleagues (Pichora-Fuller et al., 1995), who report that verbal working memory span is reduced in both older individuals with perceptual deficits and younger adults under conditions of perceptual stress. Similarly, a study of serial position effects in verbal recall (Murphy, Craik, Li, & Schneider, 2000) found poorer performance on items in early serial positions for both older adults tested under quiet conditions and younger adults tested in noise, suggesting that increased perceptual demand interferes with the encoding of information in long-term memory. Verbal memory is also adversely affected in older listeners by the increased cognitive demand imposed by a multitalker environment. Competing speech produced by a single talker disrupts recall of attended word strings to a greater extent for older adults than for younger adults, particularly if the competing speech is a meaningful sentence (Tun, O'Kane, & Wingfield, 2002). 
This recent finding is consistent with the view that multitalker environments are especially problematic for older listeners due to the combined effects of sensory, attentional, and semantic interference (Moll, Cardillo, & Aydelott Utman, 2001; Schneider, Li, & Daneman, 2007) and reduced inhibitory control (Hasher & Zacks, 1988). Nevertheless, in the Tun study, contextual biases in the target word strings improved recall performance for older and younger listeners to the same extent irrespective of the presence of competing speech, suggesting that the cognitive processing deficits observed in older adults do not necessarily diminish the ability to use contextual information in adverse listening situations.

The influence of meaningful context on speech perception in older adults clearly reflects a complex interaction of perceptual and cognitive processes and suggests that both bottom-up and top-down information play a substantial role in higher level language comprehension. Psycholinguistic studies have indicated that context makes a dual contribution to word recognition. First, contextual information serves to activate compatible meaning representations in semantic memory. A meaningful word or sentence will activate related words in the mental dictionary, or lexicon, so that access to these words is facilitated when they are encountered subsequently. Thus, words related to the context are recognized faster and more accurately than unrelated words or words in a neutral context (Aydelott & Bates, 2004; Meyer & Schvaneveldt, 1971; Stanovich & West, 1983). In addition, a meaningful context encourages the generation of expectancies based on the degree of semantic constraint provided by the context, such that the meaning of a sentence may predict a particular word or set of words (see Kutas & Federmeier, 2000 for a review of the event-related potential [ERP] literature). These expectancies may be violated by the presentation of an unexpected word, resulting in an interference effect for unrelated items. Thus, recognition of words that are unrelated to the context is slower and less accurate than for related words or words in a neutral context (Aydelott & Bates, 2004; Fischler & Bloom, 1979, 1980; Schuberth & Eimas, 1977; Stanovich & West, 1983).
Evidence from studies of sentence-word priming under adverse listening conditions suggests that the facilitation of related words is a relatively rapid, automatic process that depends largely on bottom-up activation of memory representations based on the sensory input, whereas the interference effect for unrelated words depends on the generation of semantic expectancies, which may be relatively slow to emerge and incur increased demands on cognitive resources (Aydelott & Bates, 2004; Moll, Cardillo, et al., 2001). Both of these processes are likely to be at work in spoken language comprehension in older adults and may be vulnerable to varying degrees to the perceptual and cognitive deficits associated with normal aging.

Older listeners may also make use of active meaning representations or expectancies in the interpretation of nonspeech sounds. Recent studies investigating the role of conceptual information in auditory scene analysis in younger adults suggest that a meaningful sound environment can affect the accurate detection of target sounds based on their semantic compatibility with the auditory context (e.g., a rooster crowing in a barnyard scene versus an office scene), such that incongruent sounds show a contextual “pop-out” effect (Gygi & Shafiro, 2007; Leech, Dick, Aydelott, & Gygi, 2007; Leech, Gygi, Aydelott, & Dick, 2009). Thus, a similar interaction of perceptual and semantic processing may be at work in the analysis of both speech and meaningful sounds in older adults. This possibility is explored in our pilot study outlined in the next section.

Pilot Study: Contextual Influences and Identification of Speech and Environmental Sound Stimuli Under Adverse Listening Conditions

In this section, we outline results of our preliminary investigation into the effects of meaningful context on the identification of speech and environmental sound stimuli under adverse listening conditions in both younger and older adults. Investigations of the influence of context on listening comprehension under conditions of distraction provide a useful approach for investigating the interactions of top-down and bottom-up factors in speech and nonspeech processing.

To evaluate the effects of top-down information processing on word recognition, we used a sentence-word priming task. Sentence-word priming provides a sensitive on-line measure of the influence of meaningful context on word recognition and the ways in which this process is affected by competing speech. In this paradigm, a lexical decision is made in response to spoken words presented in sentence contexts with a strong bias in favor of a particular completion. For example, “There are seven days in a” is followed by either the congruent word “week” or an incongruent word, for example, “moon.” We examined the pattern of responses to targets that were congruent or incongruent with the contextual meaning. If the prior sentence is congruent with the target word, this facilitates the lexical decision, resulting in faster reaction times. Conversely, an incongruent sentence interferes with lexical access of the target word, resulting in slower lexical decision reaction times. Previous studies of sentence-word priming in younger adults have shown that a meaningful sentence context facilitates recognition of congruent words and interferes with recognition of incongruent words, relative to a semantically neutral baseline (Aydelott & Bates, 2004). Furthermore, competing speech presented dichotically in a separate auditory channel from the attended sentence has been shown to reduce the interference effect for incongruent targets in younger listeners, without affecting the facilitation of congruent targets (Moll, Cardillo, et al., 2001). This preserved facilitation effect has been accounted for in terms of the two-process model described above, in which the rapid activation of compatible meaning representations on the basis of context is relatively automatic and therefore impervious to attentional interference.
In contrast, the generation of semantic expectancies (i.e., the strategic use of activated meaning representations to predict likely continuations of the ongoing context), as reflected in inhibition effects, is a relatively slow process that incurs increased attentional demand, and may therefore be vulnerable to the interference introduced by competing speech, resulting in a release from inhibition for incongruent targets.
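The facilitation and interference effects described above can be expressed as simple differences from the neutral baseline. The following Python sketch is illustrative only: the function name, the data layout, and the reaction times are assumptions introduced here, not the analysis code or data from the study.

```python
from statistics import mean


def priming_effects(rts_ms):
    """Return facilitation and interference (ms) relative to the neutral baseline.

    rts_ms maps each context condition to a list of lexical decision
    reaction times in milliseconds (hypothetical layout).
    """
    neutral = mean(rts_ms["neutral"])
    # Positive facilitation: congruent targets are recognized faster than baseline.
    facilitation = neutral - mean(rts_ms["congruent"])
    # Positive interference: incongruent targets are recognized slower than baseline.
    interference = mean(rts_ms["incongruent"]) - neutral
    return {"facilitation": facilitation, "interference": interference}


# Hypothetical reaction times, invented purely for illustration.
example = {
    "congruent": [600, 620, 610],
    "neutral": [700, 690, 710],
    "incongruent": [760, 750, 770],
}
effects = priming_effects(example)
print(effects)
```

Under the two-process account, competing speech would be expected to shrink the interference value while leaving the facilitation value largely unchanged.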

This paradigm offers a means of investigating the influence of contextual factors on spoken language comprehension in normal aging. If older listeners rely more on higher level meaning representations, they should show larger contextual facilitation effects overall than younger listeners. However, if the inhibitory deficit hypothesis is correct, older adults should have difficulty suppressing irrelevant information in spoken word recognition (but compare Murphy et al., 1999, for evidence against this hypothesis). Older listeners should therefore show increased interference for targets in incongruent sentence contexts and should also be more vulnerable than younger listeners to the effects of meaningful competing speech. In addition, if the capacity for selective attention in a multitalker environment is reduced in older adults, these individuals should show greater adverse effects of competing speech on sentence-word priming than younger listeners.

In tandem with the sentence-word priming task (assessing meaningful context effects on speech perception), we also conducted an analogous study using naturalistic complex environmental sound stimuli to assess the nonspeech effects of meaningful context and age. Environmental sounds are spectro-temporally complex, meaningful, familiar stimuli that are well suited to investigating non-speech-specific contextual processing and how it changes with age. By embedding target environmental sounds (e.g., a sheep baaing) in naturalistic auditory scenes (e.g., a barnyard), we can assess the influence of background context on target identification. In a previous study (Leech et al., 2009), target/background congruence significantly altered younger adults' target sound identification, with contextually incongruent sounds (e.g., a glass breaking embedded in a barnyard) “popping out” relative to the background auditory scene. The pilot study we present here is part of our ongoing investigation into whether effects of contextual congruence are sensitive to the aging process. We hypothesized that contextual congruence would provide a measure of how well participants were able to maintain a coherent auditory scene and build up expectancies about what comes next. Degraded auditory processing with age would reduce the ability to build up expectancies and hence greatly reduce the ability to use meaningful context to help detect and identify target sounds.

Given that perceptual and cognitive deficits vary considerably with age, we anticipated that there would be meaningful variability among the older participants in their performance on both tasks. If the speech and meaningful sound tasks both draw on a shared ability to use meaningful context to help resolve complex auditory objects, there should be significant correlations across the tasks for measures that reflect the influence of contextual information on processing.

Method

Participants

Thirty-four native speakers of British English with no history of neurological illness participated in the experiment. Twenty-one participants were between 18 and 40 years of age, with no reported hearing impairment. Eleven participants were above 50 years of age (of these, two were between 60 and 70 and two were 70+) and were recruited as healthy controls for a larger study of auditory attention in patients with brain injury. These older participants had bilateral audiometric thresholds of no more than 35 dB HL for frequencies between 250 and 2,000 Hz, and no more than 45 dB HL at 4,000 Hz. Pure tone average (PTA) hearing levels for the frequencies 500 to 4,000 Hz were in the normal range (0–20 dB) for eight of the older participants and in the mild hearing loss range for the three remaining older participants, who had a maximum PTA hearing level of 26 dB HL bilaterally. Thus, mild hearing impairment may have contributed to the overall pattern of performance for a subset of the older listeners. Nine of the 11 older participants were right-handed.
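As a rough illustration of the audiometric criteria above, the following Python sketch computes a pure tone average and checks the stated threshold limits. The choice of 500, 1,000, 2,000, and 4,000 Hz as the averaged frequencies, the function names, and the example audiogram are assumptions made for illustration; the source states only the 500 to 4,000 Hz range and the dB HL limits.

```python
def pure_tone_average(thresholds_db_hl, freqs_hz=(500, 1000, 2000, 4000)):
    """Mean threshold (dB HL) across the given audiometric frequencies."""
    return sum(thresholds_db_hl[f] for f in freqs_hz) / len(freqs_hz)


def meets_screening(thresholds_db_hl):
    """Check the stated limits: <= 35 dB HL from 250 to 2,000 Hz, <= 45 dB HL at 4,000 Hz."""
    low_ok = all(t <= 35 for f, t in thresholds_db_hl.items() if 250 <= f <= 2000)
    return low_ok and thresholds_db_hl[4000] <= 45


def classify(pta_db_hl, normal_max=20.0):
    """Label a PTA as normal (0-20 dB HL, as in the text) or as above the normal range."""
    return "normal" if pta_db_hl <= normal_max else "above normal range"


# Hypothetical audiogram for one ear (frequency in Hz -> threshold in dB HL).
audiogram = {250: 10, 500: 15, 1000: 20, 2000: 25, 4000: 44}
pta = pure_tone_average(audiogram)
print(pta, classify(pta), meets_screening(audiogram))
```

This invented listener passes the screening limits yet has a PTA just above the normal range, the situation described for three of the older participants.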

Sentence-Word Priming Task

The stimuli consisted of 54 target words (all one syllable in length), a neutral sentence context (“The next item is ×”), and 36 highly constraining sentence contexts. Target words were presented in sentence-final position in one of three context conditions: Congruent (expected target word for biasing context meaning; e.g., “There are seven days in a WEEK”), Neutral (neutral context), or Incongruent (incompatible with biasing context meaning; e.g., “She hung the painting on the BEAR”). Half of the sentence contexts were presented in isolation and half were presented with competing speech (see below), for a total of six test conditions (Isolation: Congruent, Neutral, Incongruent; Competing Speech: Congruent, Neutral, Incongruent) with nine target items per condition. The presentation of test conditions was mixed. The target words did not differ significantly across test conditions in terms of number of phonemes, duration in milliseconds (ms), Kucera-Francis print frequency (Kucera & Francis, 1967), London-Lund spoken frequency (Brown, 1984), concreteness, familiarity, imageability, or (where available) age of acquisition (all ps > .10 by one-way analysis of variance), based on data from the MRC Psycholinguistic database (Coltheart, 1981). Mass nouns were avoided and all targets were consonant-initial. The biasing sentence contexts were generated by a group of native British English speakers, and cloze probability statistics (i.e., the percentage of congruent target responses) were obtained for the expected final word (M = 98%, SD = 4). The sentences did not differ significantly across test conditions in terms of target cloze probability, number of syllables, duration, number of content words, or number of words semantically related to their expected target (all ps > .30).
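The word-target design described above (three context conditions crossed with two listening conditions, nine items per cell, presented in mixed order) can be sketched as follows. This is a minimal illustration, not the presentation script used in the study: the condition labels, the item index, and the seeded shuffle are assumptions, and the assignment of specific words to conditions is not modeled.

```python
import random
from itertools import product

CONTEXTS = ("congruent", "neutral", "incongruent")
LISTENING = ("isolation", "competing_speech")
ITEMS_PER_CELL = 9


def build_trials(seed=0):
    """Build a mixed list of word-target trials: 3 contexts x 2 listening conditions x 9 items."""
    trials = [
        {"context": context, "listening": listening, "item": item}
        for context, listening in product(CONTEXTS, LISTENING)
        for item in range(ITEMS_PER_CELL)
    ]
    # Conditions are intermixed rather than blocked; the seed is only for reproducibility.
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials()
n_biasing = sum(t["context"] != "neutral" for t in trials)
print(len(trials), n_biasing)
```

The counts agree with the text: 54 word targets in total, of which 36 follow a biasing (congruent or incongruent) sentence context and 18 follow the neutral context.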

A set of 54 nonword distractors was also generated, consisting of phonologically permissible one-syllable pseudowords that did not differ significantly from word targets in terms of number of phonemes or duration in milliseconds (both ps > .30). Nonword targets were presented in a neutral context as well as biasing sentence contexts (mean cloze probability of expected targets = 85%, SD = 11) that did not differ significantly from word target contexts in terms of number of syllables, duration in milliseconds, number of content words, or number of words semantically related to their expected target (all ps > .10). Half of the nonword contexts were presented in isolation and half were presented with competing speech.

The biasing and neutral sentence contexts, word targets, and nonwords were recorded in an Industrial Acoustics Model 403-A soundproof chamber on digital audiotape using a Tascam DAT recorder with a high-quality condenser microphone at gain levels between −6 and −12 dB. A female voice was used for the sentence contexts and a male voice for the word targets and nonwords. Both talkers were native British English speakers. The recorded stimuli were digitized via digital-to-digital sampling onto a Macintosh computer with a Digidesign audio card and SoundDesigner II software at a sampling rate of 22.05 kHz with a 16-bit quantization. The waveform of each sentence, word target, and nonword was then edited and saved in its own mono audio file. The sentence and target audio files were intensity scaled to 70 dB rms amplitude using Praat software. The sentence audio files were converted into stereo versions with one empty channel in SoundEdit16.

A passage from the textbook Profit Patterns (Slywotzky, Morrison, Moser, Mundt, & Quella, 1999) was recorded and edited on the same equipment and under the same conditions by a different female talker (also a native speaker of British English). This recording was then used for the competing speech conditions. Copies of all the stereo sentence files were made and segments of competing speech of the same duration as the sentence context were inserted into the empty channel. Thus all sentences in the competing speech conditions were presented at a signal-to-noise ratio (SNR) of 0 dB. All stimuli (sentences and targets) were converted to System 7 format.
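The level-matching logic behind the 0 dB SNR can be illustrated with a minimal sketch. It assumes that the sentence and the competing-speech segment are both scaled to the same rms amplitude before being placed in separate channels. The function names (`rms`, `scale_to_rms`, `snr_db`), the toy waveforms, and the common level are hypothetical. The sketch does not reproduce the Praat or SoundEdit16 processing used for the actual stimuli.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def scale_to_rms(samples, target_rms):
    """Rescale samples so that their rms amplitude equals target_rms."""
    gain = target_rms / rms(samples)
    return [s * gain for s in samples]

def snr_db(signal, noise):
    """SNR in dB computed from the rms amplitudes of signal and noise."""
    return 20.0 * math.log10(rms(signal) / rms(noise))

# Hypothetical toy waveforms standing in for a sentence context and a
# competing-speech segment of the same duration.
sentence = [math.sin(2 * math.pi * 5 * n / 1000) for n in range(1000)]
competing = [0.3 * math.sin(2 * math.pi * 11 * n / 1000) for n in range(1000)]

# Scale both to a common (arbitrary, assumed) rms level, then pair them as
# the two channels of a stereo file: sentence in one, competing speech in the other.
common_level = 0.1
left = scale_to_rms(sentence, common_level)
right = scale_to_rms(competing, common_level)
stereo = list(zip(left, right))
```

Because the two channels have equal rms amplitude, `snr_db(left, right)` evaluates to approximately 0 dB, whatever common level is chosen.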

The sentences and targets were presented auditorily in random order with an intertrial interval of 1500 ms using Superlab software via a Macintosh G4 PowerPC. The stimuli were presented through Sennheiser HD-25 headphones in an Industrial Acoustics Model 403-A soundproof chamber. Sentence contexts were presented to the participants' left ear, competing speech to the right ear. Word and nonword targets were presented binaurally. Responses (accuracy and reaction time) were recorded via a Cedrus RB-710 seven-button response box. Participants were instructed to respond as quickly and accurately as possible after hearing the target item (male voice) and to press a green button (Button 2) if they heard a real English word or a red button (Button 6) if they heard a nonword.

Naturalistic Auditory Scene Analysis

The stimuli and design were a simplified version of those used in Leech et al. (2009). Stimuli consisted of 20 recordings of short, single environmental sounds used as targets and 10 recordings of auditory scenes used as backgrounds. Each target sound had a color image associated with it corresponding to the real-world object being represented. All stimuli (backgrounds and targets) were meaningful, naturally occurring sounds, for example, a restaurant background or a breaking glass target. The target and background sounds were a subset of the sounds used in that previous study (Leech et al., 2009). The sounds were chosen to represent a wide range of sound categories (e.g., animals, vocal sounds, machines, actions, nature). Background auditory scenes lasted 5 s and were scaled to have the same average intensity using Praat. Based on independent contextual similarity ratings from a previous pilot study, each target sound was judged to be contextually congruent with two background sounds and contextually incongruent with two other background sounds.

There were 80 trials in the study. In each trial, a stereophonic background sound was played to the left and right channels. A single, short, mono target sound (e.g., “car horn,” “door knock,” “flute”) was mixed into one channel of the background sound. This may introduce binaural masking effects, although these effects were equal across congruent and incongruent trials and so cannot explain context effects. Half the targets were contextually congruent with the background and half incongruent (an example of a congruent sound would be the “car horn” target sound mixed with the left channel of the “racing cars” background sound). Target sounds were presented at either −6 dB or +3 dB SNR relative to the background sound. To increase uncertainty, target onset varied from trial to trial, from simultaneous with the onset of the background sound to the end of the background sound. At the start of each trial, the participant heard the target sound. Subsequently, the participant was asked to press the space bar when they heard the target mixed into the background sound. A picture representing the target appeared on screen for the full length of each trial, meaning the experiment did not tax working memory systems.
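One reading consistent with the counts given above is that each of the 20 targets was paired with its two congruent and two incongruent backgrounds, giving 20 × 4 = 80 trials, half of them congruent. The sketch below enumerates such a trial list. The target-to-background mapping is a placeholder built from index arithmetic, not the rated pairings used in the study. The assignment of SNR levels and onsets is left out because it is not specified in the text.

```python
N_TARGETS = 20      # short environmental target sounds
N_BACKGROUNDS = 10  # 5-s auditory scenes

def build_trials():
    """Enumerate target/background pairings: 2 congruent + 2 incongruent per target."""
    trials = []
    for t in range(N_TARGETS):
        # Placeholder mapping (an assumption): the real pairings came from
        # independent contextual similarity ratings.
        congruent = [t % N_BACKGROUNDS, (t + 1) % N_BACKGROUNDS]
        incongruent = [(t + 5) % N_BACKGROUNDS, (t + 6) % N_BACKGROUNDS]
        for b in congruent:
            trials.append({"target": t, "background": b, "congruent": True})
        for b in incongruent:
            trials.append({"target": t, "background": b, "congruent": False})
    return trials

trials = build_trials()
n_congruent = sum(1 for trial in trials if trial["congruent"])
```

In an actual session the list would also be shuffled and each trial given an SNR and a target onset.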

Results

Sentence-Word Priming Task

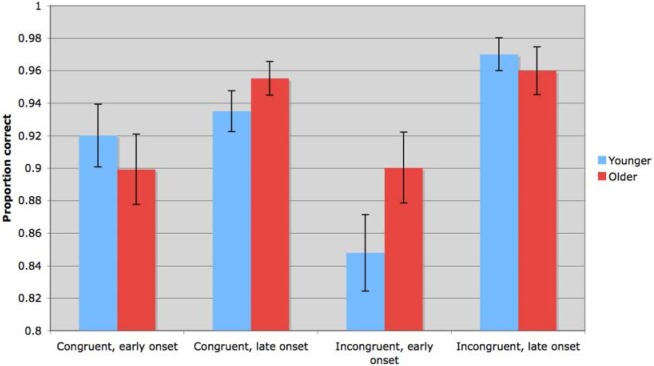

As expected, both younger and older participants showed significant semantic facilitation and interference effects, with and without concurrent competing speech (see Figure 2). Planned means comparisons revealed that competing speech significantly reduced the interference effect for incongruent targets, paired t(31) = 1.78, p < .05, one-tailed, whereas facilitation of congruent targets was unaffected, paired t(31) = −1.14, p > .10, one-tailed. This pattern emerged for both older and younger participants and is consistent with previous studies showing that competing speech presented in a separate auditory channel from the attended signal can produce a release from interference for incongruent targets (Moll, Cardillo, et al., 2001). Age modulated semantic priming, with greater facilitation of congruent targets for older participants than for younger participants for contexts presented in isolation, independent t(31) = −2.05, p < .025, one-tailed. This difference was not accounted for by hearing sensitivity in older participants: Pearson correlation analyses showed no significant relationship between contextual facilitation and audiometric threshold in the attended ear for frequencies between 250 and 4,000 Hz HL (all ps > .60, two-tailed). In contrast, in the competing speech condition, there was no significant difference between older and younger participants in the magnitude of the facilitation effect. Although older adults showed a smaller facilitation effect for contexts presented in competing speech than in isolation (in contrast to the pattern of performance for younger listeners), this difference did not reach significance, t(10) = −1.44, p = .09, one-tailed. These preliminary data suggest that older participants use semantic information more than younger participants in normal spoken language comprehension but that challenging listening conditions may disproportionately affect their ability to make use of semantic context.

Figure 2.

Priming by a sentence context in younger and older listeners

Note: Proportion priming for word targets in biasing sentence contexts relative to neutral baseline based on inverse efficiency scores combining RT (ms) and accuracy. Values greater than zero reflect facilitation (positive priming); scores less than zero reflect interference (negative priming).
A three-way repeated measures analysis of variance (ANOVA) was performed on the proportion priming data, with Group (older vs. younger) as a between-subjects factor and Bias (congruent vs. incongruent) and Competing Speech Condition (isolation vs. competing speech) as within-subjects factors. The results revealed a significant main effect of Bias, F(1, 30) = 28.56, p < .0001, and a significant Competing Speech Condition x Bias interaction, F(1, 32) = 5.87, p < .05. There were no other significant main effects or interactions.
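The priming measure in the figure note can be made concrete with a short sketch. It assumes the conventional definition of the inverse efficiency score (mean correct RT divided by proportion correct) and assumes that proportion priming is the difference from the neutral baseline expressed as a fraction of that baseline. The text does not spell out either formula, and the function names and input values are hypothetical.

```python
def inverse_efficiency(mean_rt_ms, proportion_correct):
    """Inverse efficiency score: mean RT (ms) divided by proportion correct.
    Larger values indicate less efficient performance."""
    return mean_rt_ms / proportion_correct

def proportion_priming(neutral_ies, biased_ies):
    """Priming relative to the neutral baseline (assumed definition).
    Positive = facilitation; negative = interference."""
    return (neutral_ies - biased_ies) / neutral_ies

# Hypothetical condition means for one participant (not data from the study).
neutral = inverse_efficiency(mean_rt_ms=800.0, proportion_correct=0.95)
congruent = inverse_efficiency(mean_rt_ms=700.0, proportion_correct=0.98)
incongruent = inverse_efficiency(mean_rt_ms=900.0, proportion_correct=0.90)

facilitation = proportion_priming(neutral, congruent)    # positive for these inputs
interference = proportion_priming(neutral, incongruent)  # negative for these inputs
```

Combining RT and accuracy in this way guards against speed-accuracy trade-offs, and dividing by the neutral baseline allows priming to be compared across groups whose overall response speed differs.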

Naturalistic Auditory Scene Analysis

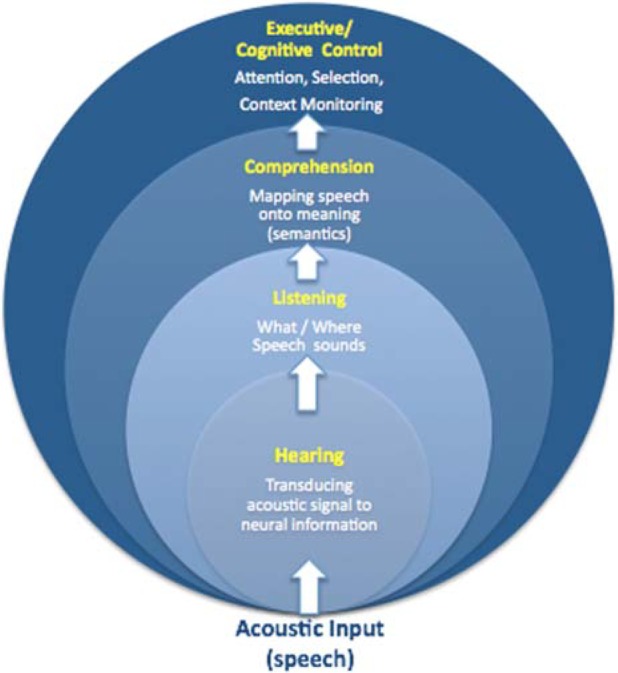

A related effect was seen for a nonlinguistic analogue of the sentence-word priming task: naturalistic auditory scene analysis. In this study, younger controls showed strong effects of contextual congruence when identifying meaningful environmental target sounds embedded in naturalistic background scenes, for example, identifying a dog barking in a farmyard scene. Sounds that are incongruent with the background “pop out” and are easier to identify, for example, hearing a trumpet in a farmyard scene. There was also a strong effect of target onset such that early sounds were significantly harder to identify than later sounds, F(1, 39) = 18.043, p < .001. Congruence aided identification of early targets relative to incongruent sounds, but this relationship reversed for late sounds, with incongruent targets easier to identify. One explanation could be that when contextual information in the background scene has built up (i.e., with late target onset), young participants have expectations of what comes next that are violated by the incongruent targets, which “pop out” of the auditory scene. In contrast, for the more confusing early onset targets, when the background auditory scene has not been fully resolved, participants make use of contextual similarities between the target and the background to aid identification of the target. This contextual congruence effect was largely eliminated in our older participants (see Figure 3). There was no overall effect of age, F(1, 39) = 0.22, p > .5, suggesting that factors unrelated to context do not explain the interaction between age and context. Similarly, there were no significant relationships between audiometric threshold at any measured frequency and behavioral performance in the incongruent trials (where younger and older subjects showed significant group differences), suggesting that group differences in audiometric thresholds alone do not explain the age effects in contextual processing. 
One interpretation of these results is that, possibly because of impaired central auditory processing, the older participants are unable to build up meaningful interpretation of the background auditory scene and so are unable to take advantage of this in detection of incongruent targets.

Figure 3.

Target identification accuracy in naturalistic auditory scenes for younger and older listeners

Target sounds were either congruent or incongruent with the background sound and occurred either with an early onset (< 1 s after the beginning of the background sound) or with a late onset (> 1 s). There was a strong effect of target onset such that early sounds were significantly harder to identify than later sounds, F(1, 39) = 18.043, p < .001. However, the congruence by target onset interaction was modulated by age, F(1, 39) = 10.749, p < .01. Specifically, effects of congruence were much more pronounced for the younger age group at both early and late onset. Older participants actually performed better than younger participants for early incongruent targets and for late onset congruent targets, suggesting that the older participants were relying less on top-down contextual information about the background auditory scene and so were less distracted by it than younger controls.

Shared Speech and Nonspeech (Environmental Sound) Top-Down Processing

If the age-related changes in contextual processing reflect shared speech and nonspeech top-down processing, then they should be expected to vary together within the older age group. To investigate this in meaningful auditory processing, we correlated our older participants' performance across both our speech and environmental sound study conditions. The only significant relationships existed between late onset incongruent sounds and both positive (ρ = 0.65, p < .05) and negative (ρ = −0.63, p < .05) measures of semantic priming. That is, older participants who demonstrated larger semantic priming also demonstrated larger pop-out effects in the naturalistic auditory scene analysis. One tentative interpretation is that both speech and nonspeech contextual processing may key into similar cognitive systems (shared semantic systems) that aid listening in naturalistic settings.
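For readers unfamiliar with the statistic, the sketch below shows how a rank correlation of this kind can be computed. It assumes that ρ denotes Spearman's rank correlation, which the symbol suggests but the text does not state. The function names and per-participant scores are hypothetical and are not the pilot data.

```python
import math

def average_ranks(values):
    """Rank values from 1..n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the rank-transformed data."""
    return pearson(average_ranks(x), average_ranks(y))

# Hypothetical scores for six older participants (illustrative only).
positive_priming = [0.05, 0.12, 0.08, 0.20, 0.15, 0.10]
late_incongruent_accuracy = [0.60, 0.75, 0.70, 0.90, 0.72, 0.80]
rho = spearman_rho(positive_priming, late_incongruent_accuracy)
```

A rank-based measure is a reasonable choice for a small sample because it depends only on the ordering of participants and is less sensitive to outlying scores than a Pearson correlation.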

Summary of Evidence Supporting the Interactive Cognitive-Auditory Processing Model Critical for Successful Speech Processing in Older Age

Spoken language comprehension in everyday listening situations relies heavily on top-down cues such as meaningful context. Contextual cues provide an additional source of information that can aid in the perception and identification of both speech and meaningful sounds. Older listeners appear to rely on these cues more than younger listeners to interpret the incoming auditory signal. However, the ability to make use of contextual information fundamentally depends on the interaction between top-down processing involving higher level cognitive systems and bottom-up perceptual processing. In perceptually challenging environments, peripheral and central auditory processing deficits associated with normal aging prevent sufficient sensory information from aiding top-down resolution of the speech stream. In addition, declines in general cognitive processing (e.g., speed of processing, working memory, selective attention, see Park et al., 1996) affect the ability of older participants to use nonauditory cues to segregate multiple speech signals in a noisy environment. Our pilot study highlights ways in which these general bottom-up and top-down processes might interact. Sentence-level contextual priming effects appear to be enhanced in older participants in quiet environments, but this increased contextual benefit disappears when distracting masking sounds are introduced. This result (albeit in a small sample) supports the view that older participants may be more reliant on sentence-level semantic information in normal spoken language comprehension than younger listeners but could be less able to make use of this information in noisy environments. The nonlinguistic auditory scene data also suggest a relationship between speech and nonspeech stimuli in terms of the influence of meaningful context on perceptual identification in older listeners. 
This finding could suggest that both speech and nonspeech processing in older individuals draw on preserved meaning representations in semantic memory and that these representations may be domain general in nature. However, further studies in larger populations are necessary to support these claims.

The interaction between sensory and cognitive processing in auditory perception in normal aging has important implications for the compensatory strategies that may be employed by individuals with hearing loss and their course of acclimatization to the novel auditory input provided by technologies such as hearing aids. However, before we address rehabilitation of speech processing deficits, it is worth considering how this auditory-cognitive processing interaction is coordinated in the aging brain.

Age-Related Differences in Brain Function During Speech Processing

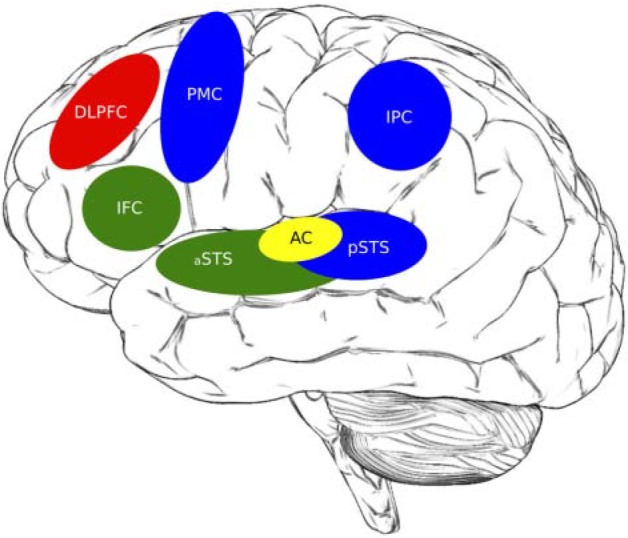

Davis and Silverman (1970) recognized long ago that how brain systems integrate auditory and cognitive information would be crucial to our understanding of speech communication. As there are no animal models for speech and language processing (only humans speak), functional neuroimaging has become a critical tool in this endeavor. Although much neuroimaging research on the aging auditory system has been conducted, the primary concern has been with sensory hearing loss. Reviews such as Hickok and Poeppel (2007) have outlined models of cortical regions involved in speech processing. See Figure 4 for an illustration of these brain regions. Here, acoustic analysis occurs in the middorsal superior temporal region, with information then proceeding either anteriorly along the superior temporal gyrus (STG) to inferior frontal cortices (IFC) in mapping speech to meaning (green route) or posteriorly along STG to the inferior parietal lobe and then premotor cortices (blue route). Although influential, these models make no explicit predictions about listening to spoken language in difficult contexts (for example, in noise) or about the aging auditory and language system. Nevertheless, consistent with the cognitive influence on speech processing outlined in the previous section, several neuroimaging studies of young adults have shown that there is more widespread brain activation, including activation of the left dorsolateral prefrontal cortical (PFC) areas that are thought to be involved in semantic processing and working memory, when context is available to assist listening to distorted sentences (Obleser, Wise, Alex Dresner, & Scott, 2007; Zekveld, Heslenfeld, Festen, & Schoonhoven, 2006).

Figure 4.

Brain regions involved in speech processing in older age

Note: This schematic highlights in the left hemisphere the cortical regions involved in speech perception. Based on the dual auditory processing scheme of the human brain (Rauschecker & Scott, 2009), antero-ventral (green) and postero-dorsal (blue) auditory streams originate from the primary auditory cortices (yellow), auditory belt/Heschl's gyrus. The postero-dorsal stream interfaces with premotor areas and pivots around inferior parietal cortex. Object information, such as mapping speech onto semantics, is decoded in the antero-ventral stream to inferior frontal cortex (Brodmann's area 44, 45). In the postero-dorsal (blue) route, attention- or intention-related changes in the IPL (Brodmann's area 40) influence the selection of context-dependent action programs in prefrontal cortical (PFC) and PMC (Brodmann's area 6) areas. Both routes can be modulated by activity in the dorsolateral prefrontal cortices (red; Brodmann's area 9, 46), although how this occurs remains to be tested. Techniques with high temporal precision (e.g., magnetoencephalography) would allow determination of the order of events in the respective neural systems. AC = auditory cortex; STS = superior temporal sulcus; IFC = inferior frontal cortex; PMC = premotor cortex; IPL = inferior parietal lobule.

PFC activation is deemed particularly important in aging (e.g., Cabeza, Anderson, Locantore, & McIntosh, 2002) as it is suggested to be a reflection of “thinking harder” or recruitment of more working memory resources (Just & Carpenter, 1992; Petrides, Alivisatos, Meyer, & Evans, 1993). Specific to spoken language comprehension, when listeners engage in more executive, context-driven processing involving greater activation of prefrontal cortex, perceptual learning of distorted speech appears to be enhanced (Davis, Johnsrude, Hervais-Adelman, Taylor, & McGettigan, 2005). Similar brain activation patterns have been found when the perception of sentences in noise is facilitated by meaningful semantic context (MacDonald, Davis, Pichora-Fuller, & Johnsrude, 2008). Thus, there is increased prefrontal brain activation when context is used to support comprehension and when the speech signal is degraded.

As Arlinger, Lunner, Lyxell, and Pichora-Fuller (2009) state in their excellent recent review of cognitive hearing science, “Interestingly, when younger and older adults perform equivalently on various perceptual and cognitive tasks, there is more widespread activation in older brains than in younger brains, with one interpretation being that this reflects compensatory processing” (Cabeza et al., 2002). Such compensatory brain activation could be consistent with the finding that older adults are better than younger adults at using context to compensate in challenging listening conditions (for a discussion, see Pichora-Fuller & Singh, 2006; Pichora-Fuller, 2008).

Taken together, this is seen as evidence supporting what has been called the decline-compensation hypothesis. This hypothesis states that cognitive aging involves a combination of decline in sensory processing (and cortical activation) that is accompanied by an increase in the recruitment of more general cognitive areas such as PFC, as a means of compensation. For example, Cabeza and colleagues (Cabeza et al., 2004) found decreased activity in the visual cortex along with an increase in PFC in older subjects across three tasks that involved working memory, visual attention, and episodic retrieval. An alternative is the common-cause hypothesis that argues for a general cerebral functional decline across both sensory and cognitive brain regions due to aging (for a review of different hypotheses, see Arlinger et al., 2009; Li & Lindenberger, 2002).

To date, we have been able to find only one functional magnetic resonance imaging (fMRI) study in the literature that investigated listening to speech in ecologically realistic noise in older subjects (Wong et al., 2009). In this study, two groups of adults (young and old) who both had normal peripheral hearing function performed an auditory word-picture matching task in quiet and noisy conditions in a sparse-sampling fMRI experiment. Consistent with the decline-compensation hypothesis, they found reduced activation in auditory regions in older compared with younger subjects, with increased activation in frontal and posterior parietal working memory and attention networks. Increased activation in these frontal and posterior parietal regions was positively correlated with behavioral performance in the older subjects, suggesting a compensatory role in aiding older subjects to achieve accurate spoken word processing in noise. Increases in hemodynamic responses have been observed coupled with increases in cognitive effort (Carpenter, Just, Keller, Eddy, & Thulborn, 1999). The activation of this superior temporal-prefrontal pathway in the older subjects may suggest an increase in subjects' effort to integrate auditory and motor information (Hickok & Poeppel, 2004), as well as the effort involved in using phonological working memory to overcome the presence of noise.

These differing neural networks that correlate with adaptive cognitive processes with age are consistent with the revised view that the brain itself has potentially a life-long capacity for neural plasticity and adaptive reorganization. For example, healthy older adults have been shown to benefit from intensive memory training, as evidenced by changes in memory skills (Mahncke et al., 2006) and differences in the patterns of brain activation before and after training (Nyberg et al., 2003). Importantly, in the study by Mahncke and colleagues (2006), the subjects' memory improvement persisted months after training and generalized to other cognitively similar tasks. This offers the possibility that despite normal cognitive and peripheral hearing decline with age, targeted cognitive “top-down” training such as improving auditory working memory relevant to listening could improve speech perception abilities in older subjects. This has significant implications for rehabilitation training that we now discuss in our final section below.

Rehabilitation

As discussed in an earlier review of hearing loss by Arlinger (2003), speech-perception deficits associated with normal aging can be reduced if hearing aids are worn, and remediation of hearing loss has been related to better emotional and social well-being and greater longevity. Indeed, some studies have suggested that corrective hearing aids may have a protective role against cognitive decline and provide better quality of life for elderly people (Cacciatore et al., 1999). Even in those elderly populations with cognitive deficits, there is some evidence that corrective hearing aids can reduce the rate of decline on cognitive screening tests (Allen et al., 2003) and slow cognitive decline in patients with Alzheimer's disease (Peters, Potter, & Scholer, 1988). As such, cognitive tests may serve as sensitive outcome measures of hearing rehabilitation. For example, Lehrl and colleagues (Lehrl, Funk, & Seifert, 2005) found that in a hearing-impaired group of 70-year-olds, use of a hearing aid for 2 to 3 months improved working memory capacity compared with controls matched on IQ, chronological age, and hearing impairment. However, more global measures of cognitive skills, not necessarily measuring working memory capacity, showed no significant improvement after 12 months of hearing aid use (van Hooren et al., 2005), although it is possible that performance may have declined if hearing aids had not been worn. Consistent with this is the finding that auditory speech processing problems were predictive of future manifestation of dementia in longitudinal research conducted over periods of up to 12 years (Gates, Beiser, Rees, D'Agostino, & Wolf, 2002; Gates, Feeney, & Mills, 2008). That hearing rehabilitative interventions could alter the time course of the onset of dementia symptoms is of significant clinical importance.

Related to this is the fundamental issue in rehabilitation planning: determining who is a candidate for amplification, other forms of audiologic rehabilitation, or both. Most hearing aid users are older than 65 years and the average new hearing aid user is 70 years old (Kricos & Holmes, 1996). Rates of hearing aid acceptance are generally low. For example, in the United States, “over the last 20 years, hearing aid adoption has remained stubbornly at about one in five adults with an admitted hearing loss” (Kochkin, 2007), whereas in the United Kingdom, of the 29% of people aged 55 to 74 years who have a hearing loss greater than 25 dB (averaged over 0.5, 1, 2, and 4 kHz in the better ear), only 6% currently have hearing aids (Davis, Smith, Ferguson, Stephens, & Gianopoulos, 2007). With hearing aid outcomes being poorest for first-time users in the United States, 26% of people end up wearing their hearing aids less than 4 hr a day, 11% less than once a year (Kochkin, 2007), and in the United Kingdom, a total of 22% of all people fitted with hearing aids discontinue using them (Davis et al., 2007). The critical questions then are (a) can we better identify which older adults with hearing impairment seeking help for the first time would benefit from hearing aids and (b) if so, would outcomes then improve? There is some evidence to suggest this may well be the case. There are significant individual differences in acclimatization to hearing aids (Turner, Humes, Bentler, & Cox, 1996). To date, few studies have considered the role of cognitive skills relevant to speech processing. However, a general link between higher cognitive skills and benefit from hearing aids has been found. For example, Gatehouse, Naylor, and Elberling (2003) and Lunner (2003) found that subjects' performance on a visual vigilance test correlated significantly with their success with complex signal processing hearing aids with fast time constants. 
One explanation of the link is that individual differences in cognition may actually be advantageous to older listeners. Those with larger working memory capacities may be better able to succeed with amplification, especially in effortful listening conditions, by recruiting executive prefrontal resources and using context to facilitate learning to remap altered auditory signals to semantics (Pichora-Fuller & Singh, 2006). It is important to note that this does not mean that those with lower cognitive abilities would not benefit from hearing aids. Rather, it suggests that these individuals may require either a different type of hearing aid and/or more active listening training to boost “top-down” processing to help learn and adjust to amplification. Indeed, Lunner and Sundewall-Thoren (2007) and Gatehouse, Naylor, and Elberling (2006a, 2006b) found that for speech intelligibility, subjects with higher cognitive scores performed better with hearing aids that had short release times (RT; “syllabic compression”). However, further studies are necessary to identify which cognitive skills are most sensitive to this effect. Although Foo, Rudner, Ronnberg, and Lunner (2007) also found a relationship between cognitive scores and best RT for intelligibility, it was not the subject's performance on a letter monitoring task (working memory) but a reading span task (sustained attention) that was found to be a strong predictor of speech recognition in noise with new compression release settings. Across studies, a variety of different cognitive tests have been used. Future studies need to identify which cognitive tests will be useful (sensitive and reliable) to guide clinicians in assessing candidacy for or outcomes of rehabilitation. Interestingly, in all these studies, the subject's preference for short or long RT in daily life was not reported. 
Thus, the question of whether these predictions from laboratory tests actually translate to efficiency in daily life also remains to be answered.

Conclusion

The effect of age on auditory and cognitive processing is not a simple or wholly negative one. Hearing does become more difficult with age, but brain plasticity and cognitive compensation continue, offering hope and inspiration for proponents of auditory training programs and older listeners. Future research needs to be specific in identifying not only which cognitive abilities relate to individual differences in speech processing over the normal course of aging but also how they relate. Understanding this, and our ability to learn, or indeed relearn, how to listen to and understand our complex auditory world will have significant implications for clinical practice. An excellent recent review of the development of cognitive hearing research (Arlinger et al., 2009) failed to find a single study that has investigated the influence of hearing loss on cognitive training and its impact on brain function or the effectiveness of cognitive training focusing on cognitive abilities relevant to listening or speech understanding. Thus, the need to integrate auditory and cognitive findings in both basic research and clinical practice is obvious and an exciting direction for the future.

Declaration of Conflicting Interests

The author(s) declared that they had no conflicts of interest with respect to their authorship or the publication of this article.

Funding

The author(s) disclosed that they received the following support for their research and/or authorship of this article: The pilot study presented in this article for the first time was collected as part of a study funded by the Nuffield Foundation Small Grants Scheme (SGS/35293) awarded to the authors. RL is funded by RCUK. JC is funded by an MRC Clinical Scientist Fellowship.

References

- Allen N. H., Burns A., Newton V., Hickson F., Ramsden R., Rogers J., Morris J. (2003). The effects of improving hearing in dementia. Age and Ageing, 32, 189–193

- Arlinger S. (2003). Negative consequences of uncorrected hearing loss – A review. International Journal of Audiology, 42(Suppl. 2), 2S17–20

- Arlinger S., Lunner T., Lyxell B., Pichora-Fuller M. K. (2009). The emergence of cognitive hearing science. Scandinavian Journal of Psychology, 50, 371–384

- Aydelott J., Bates E. (2004). Effects of acoustic distortion and semantic context on lexical access. Language and Cognitive Processes, 19(1), 29–56

- Bialystok E., Craik F., Luk G. (2008). Cognitive control and lexical access in younger and older bilinguals. Journal of Experimental Psychology: Learning, Memory, and Cognition, 34, 859–873

- Bilger R. C., Nuetzel J. M., Rabinowitz W. M., Rzeczkowski C. (1984). Standardization of a test of speech perception in noise. Journal of Speech and Hearing Research, 27(1), 32–48

- Brown G. D. A. (1984). A frequency count of 190,000 words in the London-Lund Corpus of English conversation. Behavior Research Methods, Instruments, & Computers, 16, 502–532

- Burke D. M., Peters L. (1986). Word associations in old age: Evidence for consistency in semantic encoding during adulthood. Psychology and Aging, 1, 283–292

- Cabeza R., Anderson N. D., Locantore J. K., McIntosh A. R. (2002). Aging gracefully: Compensatory brain activity in high-performing older adults. Neuroimage, 17, 1394–1402

- Cabeza R., Daselaar S. M., Dolcos F., Prince S. E., Budde M., Nyberg L. (2004). Task-independent and task-specific age effects on brain activity during working memory, visual attention and episodic retrieval. Cerebral Cortex, 14, 364–375

- Cacciatore F., Napoli C., Abete P., Marciano E., Triassi M., Rengo F. (1999). Quality of life determinants and hearing function in an elderly population: Osservatorio Geriatrico Campano Study Group. Gerontology, 45, 323–328

- Carpenter P. A., Just M. A., Keller T. A., Eddy W., Thulborn K. (1999). Graded functional activation in the visuospatial system with the amount of task demand. Journal of Cognitive Neuroscience, 11(1), 9–24

- Cohen G. (1987). Speech comprehension in the elderly: The effects of cognitive changes. British Journal of Audiology, 21, 221–226

- Coltheart M. (1981). The MRC psycholinguistic database. Quarterly Journal of Experimental Psychology, 33A, 497–505

- Davis A., Smith P., Ferguson M., Stephens D., Gianopoulos I. (2007). Acceptability, benefit and costs of early screening for hearing disability: A study of potential screening tests and models. Health Technology Assessment, 11, 1–294

- Davis H., Silverman R. (1970). Hearing and deafness (3rd ed.). New York, NY: Holt, Rinehart, Winston

- Davis M. H., Johnsrude I. S., Hervais-Adelman A., Taylor K., McGettigan C. (2005). Lexical information drives perceptual learning of distorted speech: Evidence from the comprehension of noise-vocoded sentences. Journal of Experimental Psychology: General, 134, 222–241

- Dubno J. R., Ahlstrom J. B., Horwitz A. R. (2000). Use of context by young and aged adults with normal hearing. Journal of the Acoustical Society of America, 107, 538–546

- Dubno J. R., Ahlstrom J. B., Horwitz A. R. (2002). Spectral contributions to the benefit from spatial separation of speech and noise. Journal of Speech, Language, and Hearing Research, 45, 1297–1310

- Dubno J. R., Ahlstrom J. B., Horwitz A. R. (2008). Binaural advantage for younger and older adults with normal hearing. Journal of Speech, Language, and Hearing Research, 51, 539–556

- Fischler I., Bloom P. A. (1979). Automatic and attentional processes in the effects of sentence contexts on word recognition. Journal of Verbal Learning and Verbal Behavior, 18(1), 1–20

- Fischler I., Bloom P. A. (1980). Rapid processing of the meaning of sentences. Memory & Cognition, 8, 216–225

- Foo C., Rudner M., Ronnberg J., Lunner T. (2007). Recognition of speech in noise with new hearing instrument compression release settings requires explicit cognitive storage and processing capacity. Journal of the American Academy of Audiology, 18, 618–631

- Frisina D. R., Frisina R. D. (1997). Speech recognition in noise and presbycusis: Relations to possible neural mechanisms. Hearing Research, 106(1–2), 95–104

- Gatehouse S., Naylor G., Elberling C. (2003). Benefits from hearing aids in relation to the interaction between the user and the environment. International Journal of Audiology, 42(Suppl. 1), S77–85

- Gatehouse S., Naylor G., Elberling C. (2006a). Linear and nonlinear hearing aid fittings – 1. Patterns of benefit. International Journal of Audiology, 45, 130–152

- Gatehouse S., Naylor G., Elberling C. (2006b). Linear and nonlinear hearing aid fittings – 2. Patterns of candidature. International Journal of Audiology, 45, 153–171

- Gates G. A., Beiser A., Rees T. S., D'Agostino R. B., Wolf P. A. (2002). Central auditory dysfunction may precede the onset of clinical dementia in people with probable Alzheimer's disease. Journal of the American Geriatrics Society, 50, 482–488

- Gates G. A., Feeney M. P., Mills D. (2008). Cross-sectional age-changes of hearing in the elderly. Ear and Hearing, 29, 865–874

- Gates G. A., Mills J. H. (2005). Presbycusis. Lancet, 366, 1111–1120

- George E. L., Zekveld A. A., Kramer S. E., Goverts S. T., Festen J. M., Houtgast T. (2007). Auditory and nonauditory factors affecting speech reception in noise by older listeners. Journal of the Acoustical Society of America, 121, 2362–2375

- Gordon-Salant S., Fitzgibbons P. J. (1997). Selected cognitive factors and speech recognition performance among young and elderly listeners. Journal of Speech, Language, and Hearing Research, 40, 423–431

- Gordon-Salant S. (2005). Hearing loss and aging: New research findings and clinical implications. Journal of Rehabilitation Research and Development, 42(4 Suppl. 2), 9–24

- Gordon-Salant S., Frisina R. D., Popper A., Fay D. (Eds.). (2010). The aging auditory system: Perceptual characterization and neural bases of presbycusis (Springer handbook of auditory research). Berlin, Germany: Springer

- Gygi B., Shafiro V. (2007). General functions and specific applications of environmental sound research. Frontiers in Bioscience, 12, 3152–3166

- Hasher L., Zacks R. T. (1988). Working memory, comprehension, and aging: A review and new view. In Bower G. H. (Ed.), The psychology of learning and motivation: Advances in research and theory (Vol. 22, pp. 193–225). New York, NY: Academic Press

- Hickok G., Poeppel D. (2004). Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition, 92(1–2), 67–99

- Hickok G., Poeppel D. (2007). The cortical organization of speech processing. Nature Reviews Neuroscience, 8, 393–402

- Humes L. E. (1996). Speech understanding in the elderly. Journal of the American Academy of Audiology, 7, 161–167

- Humes L. E. (2007). The contributions of audibility and cognitive factors to the benefit provided by amplified speech to older adults. Journal of the American Academy of Audiology, 18, 590–603

- Jenstad L. M., Souza P. E. (2007). Temporal envelope changes of compression and speech rate: Combined effects on recognition for older adults. Journal of Speech, Language, and Hearing Research, 50, 1123–1138

- Just M. A., Carpenter P. A. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychological Review, 99(1), 122–149

- Kiessling J., Pichora-Fuller M. K., Gatehouse S., Stephens D., Arlinger S., Chisolm T., von Wedel H. (2003). Candidature for and delivery of audiological services: Special needs of older people. International Journal of Audiology, 42(Suppl. 2), 2S92–101

- Kochkin S. (2007). Increasing hearing aid adoption through multiple environmental listening utility. Hearing Journal, 60(11), 28–31

- Kricos P. B., Holmes A. E. (1996). Efficacy of audiologic rehabilitation for older adults. Journal of the American Academy of Audiology, 7, 219–229

- Kucera H., Francis W. N. (1967). Computational analysis of present-day American English. Providence, Rhode Island: Brown University Press

- Kutas M., Federmeier K. D. (2000). Electrophysiology reveals semantic memory use in language comprehension. Trends in Cognitive Sciences, 4, 463–470

- Laver G. D. (2009). Adult aging effects on semantic and episodic priming in word recognition. Psychology and Aging, 24(1), 28–39

- Laver G. D., Burke D. M. (1993). Why do semantic priming effects increase in old age? A meta-analysis. Psychology and Aging, 8(1), 34–43

- Leech R., Dick F., Aydelott J., Gygi B. (2007). Naturalistic auditory scene analysis in children and adults. Journal of the Acoustical Society of America, 121, 3183–3184

- Leech R., Gygi B., Aydelott J., Dick F. (2009). Informational factors in identifying environmental sounds in natural auditory scenes. Journal of the Acoustical Society of America, 126, 3147–3155

- Lehrl S., Funk R., Seifert K. (2005). [The first hearing aid increases mental capacity. Open controlled clinical trial as a pilot study]. HNO, 53, 852–862 (in German)

- Li K. Z., Lindenberger U. (2002). Relations between aging sensory/sensorimotor and cognitive functions. Neuroscience & Biobehavioral Reviews, 26, 777–783

- Lunner T. (2003). Cognitive function in relation to hearing aid use. International Journal of Audiology, 42(Suppl. 1), S49–58

- Lunner T., Sundewall-Thoren E. (2007). Interactions between cognition, compression, and listening conditions: Effects on speech-in-noise performance in a two-channel hearing aid. Journal of the American Academy of Audiology, 18, 604–617

- Lynch M. P., Steffens M. L. (1994). Effects of aging on processing of novel musical structure. Journals of Gerontology, 49, P165–172

- MacDonald H., Davis M. H., Pichora-Fuller K., Johnsrude I. (2008). Contextual influences: Perception of sentences in noise is facilitated similarly in young and older listeners by meaningful semantic context; neural correlates explored via functional magnetic resonance imaging (fMRI). Journal of the Acoustical Society of America, 123, 3887

- Madden D. J. (1988). Adult age differences in the effects of sentence context and stimulus degradation during visual word recognition. Psychology and Aging, 3, 167–172

- Madden D. J., Pierce T. W., Allen P. A. (1993). Age-related slowing and the time course of semantic priming in visual word identification. Psychology and Aging, 8, 490–507

- Mahncke H. W., Connor B. B., Appelman J., Ahsanuddin O. N., Hardy J. L., Wood R. A., Merzenich M. M. (2006). Memory enhancement in healthy older adults using a brain plasticity-based training program: A randomized, controlled study. Proceedings of the National Academy of Sciences of the United States of America, 103, 12523–12528

- Meyer D. E., Schvaneveldt R. W. (1971). Facilitation in recognizing pairs of words: Evidence of a dependence between retrieval operations. Journal of Experimental Psychology, 90, 227–234

- Meyer D. E., Schvaneveldt R. W. (1976). Meaning, memory structure, and mental processes. Science, 192(4234), 27–33

- Moll K., Cardillo E., Aydelott Utman J. (2001). Effects of competing speech on sentence-word priming: Semantic, perceptual, and attentional factors. Paper presented at the Twenty-Third Annual Conference of the Cognitive Science Society, Mahwah, NJ.

- Moll S., Miot S., Sadallah S., Gudat F., Mihatsch M. J., Schifferli J. A. (2001). No complement receptor 1 stumps on podocytes in human glomerulopathies. Kidney International, 59, 160–168

- Murphy D. R., Craik F. I., Li K. Z., Schneider B. A. (2000). Comparing the effects of aging and background noise on short-term memory performance. Psychology and Aging, 15, 323–334

- Murphy D. R., McDowd J. M., Wilcox K. A. (1999). Inhibition and aging: Similarities between younger and older adults as revealed by the processing of unattended auditory information. Psychology and Aging, 14(1), 44–59

- Murphy D. R., Schneider B. A., Speranza F., Moraglia G. (2006). A comparison of higher order auditory processes in younger and older adults. Psychology and Aging, 21, 763–773

- Nyberg L., Sandblom J., Jones S., Neely A. S., Petersson K. M., Ingvar M., Bäckman L. (2003). Neural correlates of training-related memory improvement in adulthood and aging. Proceedings of the National Academy of Sciences of the United States of America, 100, 13728–13733

- Obleser J., Wise R. J., Alex Dresner M., Scott S. K. (2007). Functional integration across brain regions improves speech perception under adverse listening conditions. Journal of Neuroscience, 27, 2283–2289

- Park D. C., Smith A. D., Lautenschlager G., Earles J. L., Frieske D., Zwahr M., Gaines C. L. (1996). Mediators of long-term memory performance across the life span. Psychology and Aging, 11, 621–637

- Peters C. A., Potter J. F., Scholer S. G. (1988). Hearing impairment as a predictor of cognitive decline in dementia. Journal of the American Geriatrics Society, 36, 981–986